Abstract

The prediction level at x (PRED(x)) and the mean magnitude of relative error (MMRE) are computed from the magnitude of relative error (MRE) between real and predicted values. They are the standard metrics for evaluating the accuracy of effort estimates. However, these values might not reveal the magnitude of over-/under-estimation. This study aims to define additional information associated with the PRED(x) and MMRE to help practitioners better interpret those values. We propose formulas associated with the PRED(x) and MMRE that express the level of scatter of predicted values versus actual values on the left (sig_left), on the right (sig_right), and on the mean of the scatters (sig). We demonstrate the benefit of the formulas with three use case points datasets. The proposed formulas might enrich the value of the PRED(x) and MMRE in validating effort estimation.

MSC:

68N30; 62J20; 62P99

1. Introduction

Software effort estimation (SEE) is one of the essential aspects of developing software projects [1,2,3,4,5]. In the early stages of project development, the required resources or budget must be estimated. The estimation might help software project managers determine how much of the budget will be spent on maintenance activities or on project completion. Inaccurate estimates might lead to unrealistic resource allocation and project risk, and ultimately to project failure [6]. The accuracy of effort estimation is, therefore, crucial. Researchers routinely adopt evaluation criteria when comparing their proposed effort estimation models with those of others. The model that best meets the evaluation criteria is likely to be chosen to estimate subsequent projects [6,7].

The magnitude of relative error (MRE), prediction level at x (PRED(x)), and mean magnitude of relative error (MMRE), proposed by Conte et al. [8], are well-known evaluation criteria in SEE (see Equations (1)–(3) below). Although Myrtveit, Stensrud, and Shepperd [20] stated that the MMRE might have some disadvantages, it is still widely used to validate effort estimation. As presented in Table 1, many researchers have used these criteria to measure the accuracy of effort predictions:

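$$\mathrm{MRE}_i = \frac{\lvert y_i - \hat{y}_i \rvert}{y_i} \tag{1}$$

$$\mathrm{PRED}(x) = \frac{1}{N}\sum_{i=1}^{N} \begin{cases} 1 & \text{if } \mathrm{MRE}_i \le x \\ 0 & \text{otherwise} \end{cases} \tag{2}$$

$$\mathrm{MMRE} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{MRE}_i \tag{3}$$

where $\hat{y}_i$ is the i-th predicted value, $y_i$ is the i-th observed value, N is the number of projects, and x is the chosen threshold (commonly 0.25).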
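A minimal Python sketch of Equations (1)–(3) follows. The function names and the four sample projects are hypothetical, and x = 0.25 is used as a default only because PRED(0.25) is a common choice in the SEE literature; this article does not prescribe it.

```python
from typing import Sequence


def mre(actual: float, predicted: float) -> float:
    """Magnitude of relative error of one project, Equation (1)."""
    if actual <= 0:
        raise ValueError("MRE is undefined for a non-positive actual effort")
    return abs(actual - predicted) / actual


def _check(actual: Sequence[float], predicted: Sequence[float]) -> None:
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("expected two non-empty sequences of equal length")


def pred(actual: Sequence[float], predicted: Sequence[float], x: float = 0.25) -> float:
    """PRED(x), Equation (2): share of projects whose MRE is at most x."""
    _check(actual, predicted)
    hits = sum(1 for y, y_hat in zip(actual, predicted) if mre(y, y_hat) <= x)
    return hits / len(actual)


def mmre(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """MMRE, Equation (3): mean of the per-project MREs."""
    _check(actual, predicted)
    return sum(mre(y, y_hat) for y, y_hat in zip(actual, predicted)) / len(actual)


if __name__ == "__main__":
    # Hypothetical efforts for four projects (MREs: 0.1, 0.25, 0.05, 0.6).
    actual = [100.0, 200.0, 400.0, 50.0]
    predicted = [110.0, 150.0, 420.0, 80.0]
    print(round(mmre(actual, predicted), 4))  # 0.25
    print(pred(actual, predicted))            # 0.75
```

Note that PRED(x) counts projects at or below the threshold, so a project with an MRE of exactly 0.25 is counted as accurate.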

Table 1.

Summary of reviewed related papers in recent years, where the MMRE was adopted.

As can be seen, Equations (2) and (3) only provide evaluation criteria for estimation accuracy, and they might not reveal how the predicted and observed values are distributed around a baseline. A baseline is a straight line on which the predicted and actual values are equal, i.e., the line with the equation $\hat{y} = y$. Although such information might be obtained by analyzing the prediction residuals, additional information associated with the PRED(x) and MMRE might be more interesting. The distribution of these values might be helpful to researchers because it provides additional information about the prediction model's performance.

This article proposes additional information related to the PRED(x) and MMRE by defining three formulas, sig_left, sig_right, and sig, that determine how symmetrically the predicted values are distributed around the baseline relative to the actual values. The sig_left, sig_right, and sig values may reflect the proposed model's trend. The closer the values are to 0, the more evenly the model's predictions are distributed around the baseline. Conversely, if they approach −1 or +1, the model under- or over-estimates, respectively. Hence, their values might complement the PRED(x) and MMRE.

This article is organized as follows: Section 2 presents related work; Section 3 proposes the three formulas sig_left, sig_right, and sig; Section 4 presents the characteristics of the sig formula; Section 5 presents the research questions; Section 6 gives the results and discussion; and Section 7 presents the conclusions and future work.

2. Related Works

The MMRE and PRED(x) might be used in SEE because they are based on the absolute percentage error. Since the MMRE and PRED(x) are scale-independent, they might be used to aggregate estimation errors from software development projects of various sizes. According to Jørgensen et al. [18], however, the MRE has no upper bound for overestimation, whereas underestimating effort can never result in an MRE greater than one. In their publication, they investigated the requirements for the practical usage of the MMRE in effort estimation contexts and concluded that this criterion was still helpful in most cases.
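The asymmetry noted by Jørgensen et al. follows directly from Equation (1): for a non-negative prediction below the actual value, |y − ŷ| ≤ y, so the MRE cannot exceed one, whereas an overestimate has no upper limit. The short Python check below illustrates this with a hypothetical actual effort of 100 units.

```python
def mre(actual: float, predicted: float) -> float:
    """Magnitude of relative error, |actual - predicted| / actual (Equation (1))."""
    return abs(actual - predicted) / actual


actual = 100.0  # hypothetical actual effort

# Underestimates: non-negative predictions below the actual value.
under = [mre(actual, p) for p in (90.0, 50.0, 10.0, 0.0)]
# Overestimates: predictions above the actual value.
over = [mre(actual, p) for p in (110.0, 200.0, 500.0, 1000.0)]

print(under)  # [0.1, 0.5, 0.9, 1.0] -> never above 1.0
print(over)   # [0.1, 1.0, 4.0, 9.0] -> grows without bound
```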

According to Conte et al. [8], one of the advantages of the MMRE is that it can be used to compare all kinds of prediction models. However, Foss et al. [19] concluded that this might not be correct after running a simulation study of the model evaluation criterion MMRE. They found that the MMRE might be an untrustworthy or insufficient criterion for choosing among linear prediction models, because it is likely to select a model that underestimates. They advised combining a theoretical justification of the proposed models with additional criteria. Myrtveit et al. [20] reached a similar conclusion.

In addition, Kitchenham et al. [21] proposed the variable z (where z = predicted/actual), which is directly related to the MRE (MRE = |1 − z|). They argued that the distribution of z is required to evaluate the accuracy of a prediction model. However, they also pointed out that z has limitations regarding summary statistics: the estimation may favor prediction systems that minimize overestimates rather than underestimates. Furthermore, several researchers used other criteria to evaluate the performance of effort estimation, such as the mean inverse balanced relative error (MIBRE), mean balanced relative error (MBRE), mean absolute error (MAE), and standardized accuracy (SA). They stated that the MMRE might be unbalanced and yield an asymmetric distribution [22,23], which means that this criterion might cause problems in identifying under- or over-estimation.

Moreover, as mentioned in [24,25], the MMRE is possibly the most widely used measure of estimation error in research and industrial applications. Several advantages of the MMRE might explain its popularity: (1) it is unaffected by the sizing unit used, so it makes no difference whether effort is measured in hours or months [20]; and (2) it is not influenced by scale, i.e., the accuracy expressed by the MMRE does not change with the selected sizing unit [26].

This section presents a literature overview of recent papers that used the MMRE and PRED(x) to validate model accuracy in SEE. The search criteria were based on the most recently published, highly cited articles. In addition, we present some review studies in which the authors list research articles related to SEE and the number of studies that use the MMRE and PRED(x).
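To make the link between z and the MRE concrete: for a positive actual effort y, MRE = |y − ŷ|/y = |1 − z|. The sketch below also computes the per-project terms of the MBRE and MIBRE using the usual definitions from the SEE literature (absolute error divided by the smaller, respectively the larger, of the actual and predicted values); these definitions are an assumption, as they are not given in this article. A hypothetical project that is under- and over-estimated by the same factor of two shows why the MRE is called unbalanced.

```python
def z_ratio(actual: float, predicted: float) -> float:
    """Kitchenham et al.'s z = predicted / actual."""
    return predicted / actual


def mre(actual: float, predicted: float) -> float:
    """MRE expressed through z: |1 - z| (valid for actual > 0)."""
    return abs(1.0 - z_ratio(actual, predicted))


def bre(actual: float, predicted: float) -> float:
    """Balanced relative error: divide by the smaller of the two values."""
    return abs(actual - predicted) / min(actual, predicted)


def ibre(actual: float, predicted: float) -> float:
    """Inverse balanced relative error: divide by the larger of the two values."""
    return abs(actual - predicted) / max(actual, predicted)


actual = 100.0
for predicted in (50.0, 200.0):  # off by a factor of two in each direction
    print(predicted, mre(actual, predicted), bre(actual, predicted), ibre(actual, predicted))
# MRE is 0.5 for the underestimate but 1.0 for the overestimate,
# while BRE (1.0) and IBRE (0.5) treat both errors alike.
```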

In 2022, Mahmood et al. [3] investigated the effectiveness of machine-learning-based effort estimation using the MMRE and PRED(x). They concluded that ensemble effort estimation techniques might be better than solo techniques. Praynlin (2021) [9] also used the MMRE and other evaluation criteria to validate a meta-cognitive neuro-fuzzy method against other methods (particle swarm optimization, genetic algorithm, and backpropagation network). Furthermore, Fadhil et al. [10] proposed the DolBat model to predict effort and compared it with the constructive cost model (COCOMO) based on the MMRE and PRED(x); they concluded that their model was better than COCOMO II. The MMRE was also used by Hamid et al. [27] when they compared the IRDSS model with Delphi and Planning Poker.

Bilgaiyan et al. [11] adopted the MMRE, among other criteria including the mean squared error (MSE), to compare the performance of the feedforward back-propagation neural network (NN), cascade correlation NN, and Elman NN for effort estimation. Mustapha et al. [12] also used the MMRE, together with other criteria including the median magnitude of relative error (MdMRE), to assess the accuracy of their approach when investigating the use of random forest in software effort estimation. The MMRE was also adopted to validate effort estimation accuracy in [13,14].

The MMRE was also employed by Desai and Mohanty [17]; Effendi, Sarno, and Prasetyo [15]; and Khan et al. [16]. Effendi et al. compared an optimized COCOMO with COCOMO [15]; Khan et al. used it, together with another criterion, to compare their proposal with Delphi and Planning Poker [16]; and Desai et al. applied the MMRE and the root-mean-squared error (RMSE) to validate ANN-COA against other neural-network-based techniques [17].

Last but not least, Asad Ali and Carmine Gravino (2019) [28] studied the machine learning approaches employed in software effort prediction from 1991 to 2017. A total of 75 papers were selected after applying inclusion/exclusion criteria and a quality assessment filter, and the accuracy criteria used in those papers were examined. Of the 75 papers, 69 (92%) used the MMRE as a measure of accuracy; the next most frequent criteria were PRED(x), at 69%, and another criterion, at 47%. They noted that the MMRE and PRED(x) are frequently employed as accuracy metrics in the papers they selected. In 2018, Gautam et al. [29] examined software effort estimation studies published between 1981 and 2016. They listed 56 publications together with the datasets, validation techniques, performance metrics, statistical tests, and graphical analyses used, where the MMRE and PRED(x) account for 32 of the 56 observations (57%).

As presented above, researchers might consider several evaluation criteria for their proposals. However, the MMRE and PRED(x) are the most frequently used. Table 1 summarizes recent articles that adopted those criteria to validate SEE.

3. Sig Formula

This paper proposes the function sig_i expressed by Equation (4), where i denotes the i-th item in the studied dataset. If the predicted value of the i-th item, $\hat{y}_i$, is greater than the actual value of the i-th item, $y_i$, then sig_i is set to +1. If the predicted value is less than the actual one, sig_i is set to −1. If there is no difference between the predicted and actual values, sig_i is set to zero.

$$\mathrm{sig}_i = \begin{cases} +1 & \text{if } \hat{y}_i > y_i \\ -1 & \text{if } \hat{y}_i < y_i \\ 0 & \text{if } \hat{y}_i = y_i \end{cases} \tag{4}$$

Three new formulas (sig_left, sig_right, and sig) are introduced by Equations (5)–(7). The double sum in each of Equations (5) and (6) is built from the signs sig_j of the studied pairs, and N is the number of all pairs.
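The sign function of Equation (4) and the mean value sig can be sketched in Python as below; taking sig as the mean of the signs follows from Section 4, where sig equals +1 when all dots lie above the baseline. Equations (5) and (6) are not reproduced in this text, so sig_left and sig_right here are an assumption: we read the double sums as cumulative sums of sig_j taken from the left (prefix sums) and from the right (suffix sums), each divided by N(N+1)/2 so that the result lies in [−1, 1]. All function names are hypothetical.

```python
from typing import List, Sequence


def signs(actual: Sequence[float], predicted: Sequence[float]) -> List[int]:
    """sig_i of Equation (4): +1 if predicted > actual, -1 if below, 0 if equal."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("expected two non-empty sequences of equal length")
    return [(p > a) - (p < a) for a, p in zip(actual, predicted)]


def sig(s: Sequence[int]) -> float:
    """Mean of the signs: (dots above - dots below) / N."""
    return sum(s) / len(s)


def _cumulative_score(s: Sequence[int]) -> float:
    """Sum of the running sums of s, normalised by N(N+1)/2 (ASSUMED form)."""
    n = len(s)
    total = running = 0
    for value in s:
        running += value   # partial sum sig_1 + ... + sig_i
        total += running   # outer sum over i
    return total / (n * (n + 1) / 2)


def sig_left(s: Sequence[int]) -> float:
    """ASSUMED reading of Equation (5): prefix sums taken from the left."""
    return _cumulative_score(s)


def sig_right(s: Sequence[int]) -> float:
    """ASSUMED reading of Equation (6): suffix sums taken from the right."""
    return _cumulative_score(list(reversed(s)))


if __name__ == "__main__":
    # Hypothetical pattern: 14 dots above the baseline, then 14 below it.
    s = [1] * 14 + [-1] * 14
    print(round(sig_left(s), 4), round(sig_right(s), 4), sig(s))
    # 0.4828 -0.4828 0.0
```

Under this reading, sig equals the mean of sig_left and sig_right, and moving a dot from below to above the baseline raises both, as Lemma 2 requires. As a plausibility check only, the Model 4 values reported in Section 6 (0.527 and −0.384) would then correspond to sig ≈ 0.07, roughly 2/28 for twenty-eight projects; this does not confirm the authors' exact equations.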

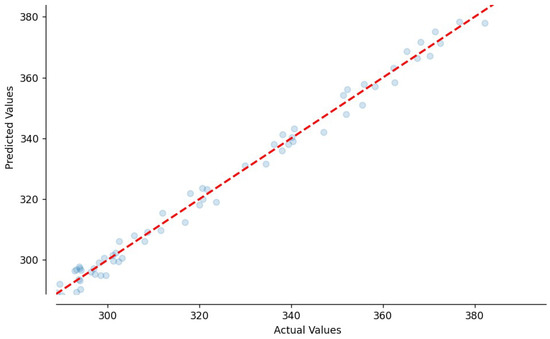

Consider a coordinate system with the actual values on the horizontal axis and the predicted values on the vertical axis. Figure 1 shows such a system with a dashed line of the equation $\hat{y} = y$ (hereafter called the “baseline”) and some dots. Each dot represents a studied pair: its first coordinate is the actual value, and its second coordinate is the predicted value.

Figure 1.

A sample of the distribution of predicted and actual values around the baseline.

Lemma 1.

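Assume that $n_a$ is the number of dots above the baseline and $n_b$ is the number of dots below the baseline; then:

$$\mathrm{sig} > 0 \iff n_a > n_b, \qquad \mathrm{sig} < 0 \iff n_a < n_b, \qquad \mathrm{sig} = 0 \iff n_a = n_b.$$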

Proof.

The sum in Equation (11) is the sum of all signs. As the sign of each dot is +1 if the dot lies above the baseline, –1 if it lies below the baseline, and 0 if the dot lies on the baseline, it is obvious that the sum in Equation (11) is greater than zero if the number of dots above the baseline is greater than the number of dots below.

Similarly, the sum is less than zero if the number of dots above the baseline is less than the number of dots below the baseline.

Finally, the sum is equal to zero either if the number of dots above the baseline is the same as the number of dots below the baseline or if all dots lie on the baseline. □
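Lemma 1 can also be checked exhaustively for small N. The Python sketch below, assuming sig is the mean of the signs sig_i (as Section 4 implies for Equation (11)), enumerates every sequence of signs up to a hypothetical length of eight and compares the sign of sig with the counts of dots above and below the baseline.

```python
from itertools import product
from typing import Sequence


def sig(s: Sequence[int]) -> float:
    """Mean of the signs sig_i (+1 above, -1 below, 0 on the baseline)."""
    return sum(s) / len(s)


def check_lemma_1(max_n: int = 8) -> bool:
    """Return True if Lemma 1 holds for every sign pattern of length 1..max_n."""
    for n in range(1, max_n + 1):
        for s in product((-1, 0, 1), repeat=n):
            n_above, n_below = s.count(1), s.count(-1)
            value = sig(s)
            # sig is exactly (n_above - n_below) / N ...
            if value != (n_above - n_below) / n:
                return False
            # ... so its sign follows the comparison of the two counts.
            if (value > 0) != (n_above > n_below):
                return False
            if (value < 0) != (n_above < n_below):
                return False
            if (value == 0) != (n_above == n_below):
                return False
    return True


print(check_lemma_1())  # True
```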

Lemma 2.

Consider a chart with N dots, at least one of which lies below the baseline. Changing the y-coordinate of any dot below the baseline (with its x-coordinate unchanged) so that the dot moves above the baseline increases the values of both sig_left and sig_right.

Proof.

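For brevity, write s_i for sig_i, and let k (1 ≤ k ≤ N) be the position of the dot whose y-coordinate is changed. The change means that s_k = −1 becomes s_k' = +1, while all other signs remain the same. Then, according to Equation (9), the new values sig_left' and sig_right' are given by the same expressions as sig_left and sig_right, with s_k replaced by s_k'.

The values of sig_left and sig_left' therefore differ only in the term containing s_k, respectively s_k'. Since this term enters Equation (9) with a positive coefficient (as 1 ≤ k ≤ N) and s_k' − s_k = 2 > 0, it follows that sig_left' > sig_left.

The values of sig_right and sig_right' likewise differ only in the term containing s_k, respectively s_k'. Again, the coefficient of this term is positive (as 1 ≤ k ≤ N), so sig_right' > sig_right.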

We conclude that whenever a dot moves from below to above the baseline, the values of both sig_left and sig_right increase.

Remark: A similar lemma can be proven: whenever a dot moves from above to below the baseline, the values of both sig_left and sig_right decrease. □
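Under the same assumed reading of Equations (5) and (6) as prefix and suffix cumulative sums normalised by N(N+1)/2 (an assumption, since the equations are not reproduced in this text), Lemma 2 and its remark can be checked by brute force. The Python sketch flips each dot below the baseline to above it, and each dot above to below it, in every sign pattern up to a small, hypothetical length.

```python
from itertools import product
from typing import Sequence, Tuple


def _cumulative_score(s: Sequence[int]) -> float:
    """Sum of running sums, normalised by N(N+1)/2 (ASSUMED form of Eqs. (5)-(6))."""
    n = len(s)
    total = running = 0
    for value in s:
        running += value
        total += running
    return total / (n * (n + 1) / 2)


def sig_left(s: Sequence[int]) -> float:
    return _cumulative_score(s)


def sig_right(s: Sequence[int]) -> float:
    return _cumulative_score(list(reversed(s)))


def _flip(s: Tuple[int, ...], k: int, new_sign: int) -> Tuple[int, ...]:
    """Return a copy of s with the k-th sign replaced."""
    return s[:k] + (new_sign,) + s[k + 1:]


def check_lemma_2(max_n: int = 7) -> bool:
    """True if moving a dot across the baseline moves both scores the same way."""
    for n in range(1, max_n + 1):
        for s in product((-1, 0, 1), repeat=n):
            left, right = sig_left(s), sig_right(s)
            for k, value in enumerate(s):
                if value == -1:    # below -> above: both must increase (Lemma 2)
                    moved = _flip(s, k, 1)
                    if not (sig_left(moved) > left and sig_right(moved) > right):
                        return False
                elif value == 1:   # above -> below: both must decrease (Remark)
                    moved = _flip(s, k, -1)
                    if not (sig_left(moved) < left and sig_right(moved) < right):
                        return False
    return True


print(check_lemma_2())  # True
```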

4. Characteristics of Sig Formula

Consider a chart where the horizontal axis presents the actual values $y_i$ and the vertical axis the predicted values $\hat{y}_i$. The baseline in such a chart is the straight line with the equation $\hat{y} = y$:

- If all predicted values are greater than the respective actual values (i.e., all dots are above the baseline), then, according to Equation (11), $\mathrm{sig} = \frac{1}{N}\sum_{i=1}^{N}(+1) = 1$, which is the maximal possible value of sig.

- If all predicted values are smaller than the respective actual values (i.e., all dots are below the baseline), then, according to Equation (11), $\mathrm{sig} = \frac{1}{N}\sum_{i=1}^{N}(-1) = -1$, which is the minimal possible value of sig.

- If there is no difference between each predicted value and the respective actual value (i.e., all dots are on the baseline), then, according to Equation (11), $\mathrm{sig} = \frac{1}{N}\sum_{i=1}^{N} 0 = 0$.

- In general, the predicted and actual values fluctuate: some predicted values might be greater and others smaller than the respective actual ones. The dots in Figure 1 then form a cloud around the baseline, and the value of sig lies between −1 and +1.

- Moreover, in the case of uniform symmetry (named UniSym), i.e., if the dots alternate around the baseline (one dot above, the next below, the next above, etc.), the value of sig approaches 0 as the number N of observations increases.
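The characteristics listed above can be reproduced numerically. The Python sketch below computes sig as the mean of the signs for ten hypothetical projects and then for a hypothetical UniSym model (alternating 10% over- and underestimates) of increasing length N; for odd N one extra dot lies above the baseline, so sig = 1/N, which approaches 0.

```python
from typing import List, Sequence


def sig(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of sig_i, where sig_i = +1, -1, or 0 (Equation (4))."""
    s = [(p > a) - (p < a) for a, p in zip(actual, predicted)]
    return sum(s) / len(s)


def unisym_predictions(actual: Sequence[float]) -> List[float]:
    """Hypothetical UniSym model: alternately 10% above and 10% below."""
    return [a * 1.1 if i % 2 == 0 else a * 0.9 for i, a in enumerate(actual)]


actual = [float(v) for v in range(10, 110, 10)]  # 10 hypothetical projects

print(sig(actual, [a * 1.2 for a in actual]))  # all above the baseline: 1.0
print(sig(actual, [a * 0.8 for a in actual]))  # all below the baseline: -1.0
print(sig(actual, list(actual)))               # all on the baseline: 0.0

for n in (3, 11, 101, 1001):
    projects = [100.0] * n
    print(n, sig(projects, unisym_predictions(projects)))  # 1/n -> approaches 0
```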

5. Research Questions

These research questions (RQs) should be answered in this study:

- RQ1: What are the differences among the sig_left, sig_right, and sig formulas?

- RQ2: How important is the additional information related to the PRED(x) and MMRE in measuring the performance of a prediction model?

6. Results and Discussion

In this section, we discuss the usefulness of the additional information related to the PRED(x) and MMRE based on twenty-eight projects (Dataset-1) collected from [30,31,32,33], with eight different prediction assumptions as given in Table 2. The column “Real_P20” represents the real efforts in terms of use case points (UCPs) [30,34], and the other columns (Model 1–Model 8) contain the corresponding estimated efforts. UCPs were originally designed by Karner [35] as a simplification of the function point method [36], and size estimation is based on the UML use case model. UCPs are used both in industry and in research, and many studies map effort estimation methods, including UCPs and UCP modifications [7,37,38,39,40]. However, UCPs have some design issues, which were discussed by Ouwerkerk and Abran [41]; these design flaws mainly concern the scale transformations applied when the values of the UCP components are calculated [42].

Table 2.

The twenty-eight projects and eight different prediction assumptions.

The presented models simulate possible prediction-model behavior based on real scenarios. The models' behavior is configured as follows:

- Model 1: The predicted efforts are random guesses whose values are mostly greater than the real values, and its PRED(x) reaches the maximum compared with Models 5, 6, 7, and 8.

- Model 2: The predicted values are random guesses, as opposed to Model 1, and its MMRE is equal to the MMRE obtained from Model 1.

- Model 3: The predicted efforts are random guesses such that the first half of the predicted values is mostly less than the real values and the remaining half is mostly greater, and its MMRE is assumed equal to the MMRE obtained from Model 1.

- Model 4: It is assumed to be similar to Model 3 in the inverse sense. Furthermore, the predicted values in this model were purposefully chosen to minimize the MMRE.

- Models 5, 6, 7, and 8: The predicted efforts follow the rule that one or more initial predicted values are greater/less than the actual values and one or more subsequent predicted values are greater/less than the actual values; the following predicted values repeat this sequence until the testing dataset is exhausted. Furthermore, we assumed that their PRED(x) values are the same, greater than 0.7 but less than that of Model 4, and that their MMRE is greater than or equal to those of Models 1, 2, 3, and 4.

As for the predictions (estimated values):

- The predicted values produced by Model 1 are mostly greater than the corresponding real values (the dots lie above the baseline), while Model 2 is the opposite.

- In Model 3, the dots in the first half mostly lie below the baseline and those in the second half mostly lie above it; Model 4 is the reverse.

- The dots in Models 5 and 6 lie around the baseline, but the number of dots above the baseline is greater than the number of dots below it (systematic overestimation). This demonstrates a positive sig value.

- The dots in Model 7 also lie around the baseline, but the number of dots above the baseline is smaller than the number of dots below it (systematic underestimation). This demonstrates a negative sig value. Moreover, Model 8 is an approximately UniSym model, so its sig value approaches zero.

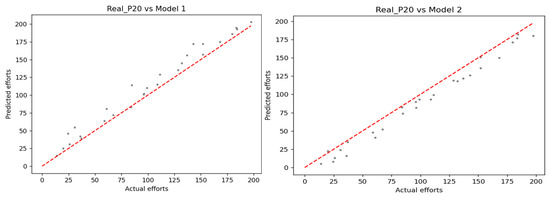

Figure 2 illustrates the scatter of the predicted efforts obtained from Models 1 and 2 vs. the actual efforts around the baseline. These scatter plots were generated with the “scatter” function of the Python “matplotlib” library. Because each dot is placed at its actual value on the horizontal axis, the dots appear ordered from the smallest to the largest actual effort rather than in project order. Figures 3–5 were produced in the same manner. As seen in these figures, most of the dots (pairs of actual and predicted values) of Model 1 lie above the baseline (where the predicted and actual values are the same, $\hat{y} = y$), resulting in sig_left, sig_right, and sig reaching +1.

Figure 2.

The scatter of predictive efforts vs. actual efforts: Model 1 vs. 2.

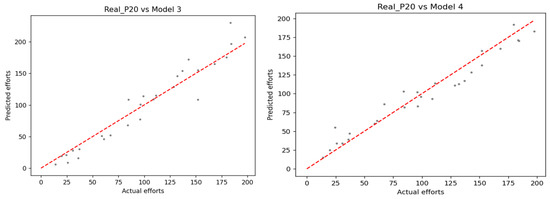

Figure 3.

The scatter of predictive efforts vs. actual efforts: Model 3 vs. 4.

Figure 4.

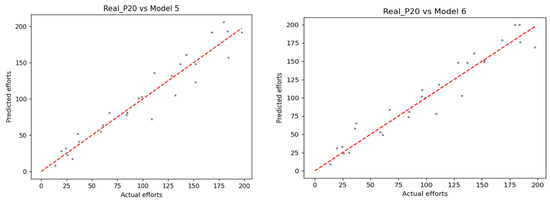

The scatter of predictive efforts vs. actual efforts: Model 5 vs. 6.

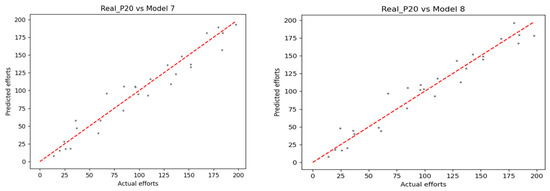

Figure 5.

The scatter of predictive efforts vs. actual efforts: Model 7 vs. 8.

Similarly, in Model 2, all of the dots are below the baseline; therefore, sig_left, sig_right, and sig reach −1 (see Table 3). In practice, these assumptions are improbable because researchers constantly look for the most efficient technique to obtain the best model. In realistic circumstances, the real and predicted efforts are more likely to scatter so that the dots lie around the baseline.

Table 3.

Summary of the values obtained for the eight models.

Another scenario is shown by Models 3 and 4 (see Figure 3). The sig values calculated for both models are close to zero. However, the scatter of the predicted and real values might not be realistic. In Model 3, the first half of the dots lies mostly below the baseline, which results in a negative sig_left, while the second half lies mostly above the baseline, which results in a positive sig_right (see Table 3). Model 4 shows the opposite outcome. Therefore, besides the sig value alone, we might consider sig_left and sig_right to gain further insight. Figure 4 presents another case (Models 5 and 6) in which sig_left and sig_right are useful together with sig, and the following discussion might answer RQ1 regarding the differences among sig_left, sig_right, and sig. As can be seen, Models 5 and 6 show fairly random patterns around the baseline (see Table 4), and these scatters are reflected in the differing absolute values of their sig measures (see Table 3). Furthermore, Model 6 is more symmetrical than Model 5: the predicted efforts of projects P10, P11, and P12 in Model 5 have errors of a different sign than in Model 6 (see Table 4), so the absolute value of sig_left obtained from Model 5 is larger than that obtained from Model 6. Moreover, sig_right obtained from Model 5 is greater than that obtained from Model 6, which might reveal that more dots lie on the same side of the baseline in the second half of Model 5 than in the second half of Model 6 (see Lemma 2). This information might be interesting because, if we use Model 5 as the prediction model, we should be cautious when predicting high effort values, as these may be overestimated.
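The point behind RQ1, that sig alone can hide a systematic pattern, can be illustrated with three hypothetical sign sequences of twenty-eight dots that all have sig = 0: one resembling Model 3 (first half below, second half above), one resembling Model 4 (the reverse), and a UniSym-like alternation. The Python sketch again assumes that sig_left and sig_right are prefix and suffix cumulative sums normalised by N(N+1)/2; the sequences are illustrative, not the article's data.

```python
from typing import Sequence, Tuple


def sig_triplet(s: Sequence[int]) -> Tuple[float, float, float]:
    """Return (sig_left, sig_right, sig) under the ASSUMED cumulative-sum reading."""
    n = len(s)
    norm = n * (n + 1) / 2

    def cumulative(seq: Sequence[int]) -> int:
        total = running = 0
        for value in seq:
            running += value
            total += running
        return total

    return cumulative(s) / norm, cumulative(list(reversed(s))) / norm, sum(s) / n


patterns = {
    "Model-3-like": [-1] * 14 + [1] * 14,
    "Model-4-like": [1] * 14 + [-1] * 14,
    "alternating": [1, -1] * 14,
}

for name, s in patterns.items():
    left, right, mean = sig_triplet(s)
    print(f"{name:13s} sig_left={left:+.3f} sig_right={right:+.3f} sig={mean:+.3f}")
# Model-3-like: sig_left=-0.483, sig_right=+0.483; alternating: +0.034 / -0.034;
# sig is 0 in all three cases.
```

Only the directional measures separate the three cases, which is why the discussion above reads sig_left and sig_right alongside sig.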

Table 4.

Differences between the real efforts and the efforts predicted by the models.

Moreover, as shown in Figure 5, the predicted efforts of Models 7 and 8 scatter randomly against the actual efforts, with Model 8 slightly more random than Model 7. The predicted efforts from P1 to P12 and from P17 to P28 follow the same trend in both models, but there are slight differences from P13 to P16 (see Table 4). In Model 7, the dots for these projects lie below the baseline, which makes the absolute values of some of the sig measures attained from Model 7 higher than those attained from Model 8, while for another measure the relation is reversed (see Table 3). In addition, in both models the number of dots above the baseline is smaller than the number of dots below it. As a result, the sig values calculated for both models are less than zero (see Lemma 1).

Table 3 summarizes the statistical metrics of the eight models, including sig_left, sig_right, sig, the MMRE, and PRED(x). As can be seen, the MMRE and PRED(x) of Model 4 indicate the best performance, since its MMRE reaches the minimum and its PRED(x) the maximum among all models. Based on these metrics, this model might be the most suitable. However, its sig_left/sig_right values (0.527/−0.384) are farther from zero than the corresponding values obtained for Models 5 to 8 (see Lemma 2). Thus, when choosing a suitable prediction model among the eight, we might prefer Model 5, 6, 7, or 8, because the additional information related to the PRED(x) and MMRE obtained from those models is closer to zero. This scenario illustrates the answer to RQ2: sig_left, sig_right, and sig might provide useful additional information related to the PRED(x) and MMRE.

Suppose, on the other hand, that we are considering two prediction models, Models 7 and 8, and want to decide which one is better. The MMRE and PRED(x) obtained from both are the same, 0.175 and 0.76, respectively. As discussed above, Model 8 scatters more randomly than Model 7, because the sig_left, sig_right, and sig values obtained from Model 8 are smaller than those obtained from Model 7. Based on these findings, we might conclude that Model 8 outperforms Model 7.

Last but not least, sig_left, sig_right, and sig have a weakness: if, out of 28 predictions, 14 are underestimated by 1% and the remaining 14 are overestimated by 100%, the proposed method will indicate that the prediction errors are symmetrical. This issue might confuse software project practitioners. However, as stated in the purpose of these formulas, they only provide additional information related to the PRED(x) and MMRE; we still rely on the PRED(x) and MMRE criteria and use these formulas as supplementary information. As discussed above, omitting sig_left, sig_right, and sig might lead to the selection of an improper model.
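The weakness described above is easy to reproduce. In the Python sketch below (hypothetical data, with every actual effort set to 100), fourteen projects are underestimated by 1% and fourteen are overestimated by 100%: sig is exactly zero, yet the MMRE is about 0.5 and only half of the projects satisfy MRE ≤ 0.25, which is why sig should be read together with the PRED(x) and MMRE rather than on its own.

```python
actual = [100.0] * 28
predicted = [99.0] * 14 + [200.0] * 14  # 14 under by 1%, 14 over by 100%

signs = [(p > a) - (p < a) for a, p in zip(actual, predicted)]
mres = [abs(a - p) / a for a, p in zip(actual, predicted)]

sig = sum(signs) / len(signs)                        # mean of sig_i
mmre = sum(mres) / len(mres)                         # Equation (3)
pred_25 = sum(m <= 0.25 for m in mres) / len(mres)   # Equation (2), x = 0.25

print(sig, round(mmre, 3), pred_25)  # 0.0 0.505 0.5
```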

Case study: To be more specific, we verified the benefit of the sig_left, sig_right, and sig values using models obtained from XGBoost regression. XGBoost is an implementation of gradient-boosted decision trees designed for highly efficient and accurate models. It is an open-source library with a Python interface that provides, for example, a “fit” function to build a model from a training dataset and a “predict” function to predict new values for a new dataset [43]. In our scenario, we adopted XGBoost with a set of parameters, including the learning rate (0.01), the booster (“gblinear”), and n_estimators, which was chosen by experimentation. We used Dataset-1 (twenty-eight projects) [30,31,32,33] and Dataset-2 (seventy-one projects) [31] as the historical datasets. Both datasets measure effort in terms of use case points (UCPs) [30,34] and are described by the unadjusted actor weight (UAW), unadjusted use case weight (UUCW), technical complexity factor (TCF), environmental complexity factor (ECF), and Real-P20. Real-P20 is the dependent variable, and UAW, UUCW, TCF, and ECF are the independent variables.

Dataset-1 and Dataset-2 are each divided into two subsets: 80% of the projects for training and the remaining projects for testing. As mentioned in Table 1, the MMRE and PRED(x) are used as the criteria to measure the accuracy of effort estimation in UCPs. In addition, the values of sig_left, sig_right, and sig are reported.
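For readers less familiar with UCPs, the four independent variables are combined in Karner's original method as UCP = (UAW + UUCW) × TCF × ECF, with TCF = 0.6 + 0.01 × TF and ECF = 1.4 − 0.03 × EF, where TF and EF are the weighted sums of the technical and environmental factors. The Python sketch below shows this combination with hypothetical inputs and function names; in the case study itself, the four variables are passed to XGBoost separately rather than combined this way.

```python
def technical_complexity_factor(tf: float) -> float:
    """Karner's TCF from the weighted sum TF of the 13 technical factors."""
    return 0.6 + 0.01 * tf


def environmental_complexity_factor(ef: float) -> float:
    """Karner's ECF from the weighted sum EF of the 8 environmental factors."""
    return 1.4 - 0.03 * ef


def use_case_points(uaw: float, uucw: float, tcf: float, ecf: float) -> float:
    """UCP = (UAW + UUCW) * TCF * ECF."""
    return (uaw + uucw) * tcf * ecf


# Hypothetical project.
tcf = technical_complexity_factor(40.0)      # about 1.0
ecf = environmental_complexity_factor(20.0)  # about 0.8
print(round(use_case_points(uaw=6.0, uucw=100.0, tcf=tcf, ecf=ecf), 2))  # 84.8
```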
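The evaluation protocol described above (an 80/20 split, then the PRED(x), MMRE, sig_left, sig_right, and sig on the test projects) can be sketched in Python as follows. The shuffling seed, the rounding of the split size, the threshold x = 0.25, and the cumulative-sum reading of sig_left and sig_right are all assumptions, the function names are hypothetical, and the stand-in predictions simply scale the actual efforts; no XGBoost model is trained here.

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_80_20(items: Sequence, seed: int = 0) -> Tuple[List, List]:
    """Shuffle reproducibly and keep about 80% of the items for training."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def _cumulative(seq: Sequence[int]) -> int:
    """Sum of the running sums of seq."""
    total = running = 0
    for value in seq:
        running += value
        total += running
    return total


def evaluate(actual: Sequence[float], predicted: Sequence[float],
             x: float = 0.25) -> Dict[str, float]:
    """PRED(x), MMRE and the (assumed) sig measures on a test set."""
    n = len(actual)
    mres = [abs(a - p) / a for a, p in zip(actual, predicted)]
    s = [(p > a) - (p < a) for a, p in zip(actual, predicted)]
    norm = n * (n + 1) / 2
    return {
        "PRED": sum(m <= x for m in mres) / n,
        "MMRE": sum(mres) / n,
        "sig_left": _cumulative(s) / norm,
        "sig_right": _cumulative(list(reversed(s))) / norm,
        "sig": sum(s) / n,
    }


if __name__ == "__main__":
    projects = list(range(28))            # indices of 28 hypothetical projects
    train, test = split_80_20(projects)
    print(len(train), len(test))          # 22 6
    efforts = [100.0 + 10.0 * i for i in test]
    guesses = [0.9 * e for e in efforts]  # stand-in model that underestimates by 10%
    print(evaluate(efforts, guesses))
```

A model that underestimates every test project, as the stand-in does, yields −1 for all three sig measures even though its PRED(0.25) is perfect.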

Table 5 shows the evaluation criteria obtained from the XGBoost models. As can be seen, the sig value obtained from the models for Dataset-1 and Dataset-2 is −0.556 and 0.286, respectively. This criterion indicates how the model's predictions scatter around the baseline: the closer it is to zero, the better the model. For Dataset-1, the XGBoost model tends to underestimate, because its sig_left, sig_right, and sig values are below zero and far from it. The scatter of the model for Dataset-2 might be smaller than that for Dataset-1, because the absolute value of sig for Dataset-1 is larger than that for Dataset-2. Although one of the sig measures has similar absolute values for both datasets, another has a smaller absolute value for Dataset-2, which again suggests that the scatter of the model for Dataset-2 is smaller. These findings reveal that sig_left, sig_right, and sig might be used as reference criteria when choosing an appropriate prediction model. Considering these values together with the MMRE and PRED(x), we might state with some confidence that the XGBoost model for Dataset-2 outperforms that for Dataset-1.

Table 5.

Evaluation criteria obtained from XGBoost.

7. Conclusions and Future Work

sig_left, sig_right, and sig refer to the sign of the prediction error, not its magnitude. These values might be considered a complement to the PRED(x) and MMRE. Using them together with the PRED(x) and MMRE as performance indicators could help validate the symmetry of the predicted values around the baseline. Moreover, since these values indicate under-/over-estimation independently of the sizing unit used, they might be useful when validating the accuracy of a prediction model along with the PRED(x) and MMRE.

Moreover, as mentioned in Lemma 2, the lower the absolute values of sig_left and sig_right, the more homogeneous the distribution of the predicted and actual values around the baseline. Using sig_left, sig_right, and sig might reveal whether a prediction model tends to lie under or over the baseline. Although this study demonstrates these signals on limited datasets, Lemmas 1 and 2 do not depend on the number of dots, so the proposed formulas can also be applied to larger datasets. Last but not least, as discussed in Section 4, based on the values of sig_left, sig_right, and sig, the prediction model might be adjusted by increasing or decreasing its intercept, which might lead to higher accuracy.

Limitations: Based on the sig_left, sig_right, and sig values, we can only state whether the model lies over or under the baseline. Quantifying the extent of this over-/under-estimation would be more helpful and is left for future work.

Author Contributions

Conceptualization, H.H.T., P.S. and R.S.; methodology, H.H.T., P.S., M.F., Z.P. and R.S.; software, H.H.T.; validation, H.H.T. and M.F.; investigation, H.H.T., P.S., M.F., Z.P. and R.S.; resources, H.H.T., P.S., M.F., Z.P. and R.S.; data curation, H.H.T. and P.S.; writing—original draft preparation, H.H.T. and M.F.; writing—review and editing, H.H.T., P.S., R.S. and Z.P.; visualization, H.H.T., P.S., M.F., Z.P. and R.S.; supervision, P.S., Z.P. and R.S.; project administration, P.S., Z.P. and R.S.; funding acquisition, P.S., Z.P. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Faculty of Applied Informatics, Tomas Bata University in Zlin, under Project No. RVO/FAI/2021/002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This investigation used datasets from Ochodek et al. [32], Subriadi and Ningrum [33], and Silhavy et al. [30].

Acknowledgments

We would like to thank Hoang Lê Minh, Hông Vân Lê, and Nguyen Tien Zung for helpful comments. We would also like to express our very great appreciation to Susely Figueroa Iglesias for her insightful suggestions and careful reading of the manuscript. We would also like to extend our thanks to the Torus Actions company (https://torus.ai (accessed on 1 November 2022)) for allowing us to spend time completing the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Azzeh, M.; Nassif, A.B. Project productivity evaluation in early software effort estimation. J. Softw. Evol. Process. 2018, 30, e21110. [Google Scholar] [CrossRef]

- Braz, M.R.; Vergilio, S.R. Software effort estimation based on use cases. In Proceedings of the 30th Annual International Computer Software and Applications Conference (COMPSAC’06), Chicago, IL, USA, 17–21 September 2006; Volume 1, pp. 221–228. [Google Scholar]

- Mahmood, Y.; Kama, N.; Azmi, A.; Khan, A.S.; Ali, M. Software effort estimation accuracy prediction of machine learning techniques: A systematic performance evaluation. Softw. Pract. Exp. 2022, 52, 39–65. [Google Scholar] [CrossRef]

- Munialo, S.W.; Muketha, G.M. A review of agile software effort estimation methods. Int. J. Comput. Appl. Technol. Res. 2016, 5, 612–618. [Google Scholar] [CrossRef]

- Silhavy, R.; Silhavy, P.; Prokopova, Z. Evaluating subset selection methods for use case points estimation. Inf. Softw. Technol. 2018, 97, 1–9. [Google Scholar] [CrossRef]

- Trendowicz, A.; Jeffery, R. Software project effort estimation. Found. Best Pract. Guidel. Success Constr. Cost Model. 2014, 12, 277–293. [Google Scholar]

- Azzeh, M.; Nassif, A.B.; Attili, I.B. Predicting software effort from use case points: Systematic review. Sci. Comput. Program. 2021, 204, 102596. [Google Scholar] [CrossRef]

- Conte, S.D.; Dunsmore, H.E.; Shen, Y.E. Software Engineering Metrics and Models; Benjamin-Cummings Publishing Co., Inc.: San Francisco, CA, USA, 1986. [Google Scholar]

- Praynlin, E. Using meta-cognitive sequential learning neuro-fuzzy inference system to estimate software development effort. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8763–8776. [Google Scholar] [CrossRef]

- Fadhil, A.A.; Alsarraj, R.G.H.; Altaie, A.M. Software cost estimation based on dolphin algorithm. IEEE Access 2020, 8, 75279–75287. [Google Scholar] [CrossRef]

- Bilgaiyan, S.; Mishra, S.; Das, M. Effort estimation in agile software development using experimental validation of neural network models. Int. J. Inf. Tecnol. 2019, 11, 569–573. [Google Scholar] [CrossRef]

- Mustapha, H.; Abdelwahed, M. Investigating the use of random forest in software effort estimation. Procedia Comput. Sci. 2019, 148, 343–352.

- Ullah, A.; Wang, B.; Sheng, J.; Long, J.; Asim, M.; Riaz, F. A Novel Technique of Software Cost Estimation Using Flower Pollination Algorithm. In Proceedings of the 2019 International Conference on Intelligent Computing, Automation and Systems (ICICAS), Chongqing, China, 6–8 December 2019; pp. 654–658.

- Sethy, P.K.; Rani, S. Improvement in cocomo modal using optimization algorithms to reduce mmre values for effort estimation. In Proceedings of the 2019 4th International Conference on Internet of Things: Smart Innovation and Usages (IoT-SIU), Ghaziabad, India, 18–19 April 2019; pp. 1–4.

- Effendi, Y.A.; Sarno, R.; Prasetyo, J. Implementation of bat algorithm for cocomo ii optimization. In Proceedings of the 2018 International Seminar on Application for Technology of Information and Communication, Semarang, Indonesia, 21–22 September 2018; pp. 441–446.

- Khan, M.S.; ul Hassan, C.A.; Shah, M.A.; Shamim, A. Software cost and effort estimation using a new optimization algorithm inspired by strawberry plant. In Proceedings of the 2018 24th International Conference on Automation and Computing (ICAC), Newcastle upon Tyne, UK, 6–7 September 2018; pp. 1–6.

- Desai, V.S.; Mohanty, R. Ann-cuckoo optimization technique to predict software cost estimation. In Proceedings of the 2018 Conference on Information and Communication Technology (CICT), Jabalpur, India, 26–28 October 2018; pp. 1–6.

- Jørgensen, M.; Halkjelsvik, T.; Liestøl, K. When should we (not) use the mean magnitude of relative error (mmre) as an error measure in software development effort estimation? Inf. Softw. Technol. 2022, 143, 106784.

- Foss, T.; Stensrud, E.; Kitchenham, B.; Myrtveit, I. A simulation study of the model evaluation criterion mmre. IEEE Trans. Softw. Eng. 2003, 29, 985–995.

- Myrtveit, I.; Stensrud, E.; Shepperd, M. Reliability and validity in comparative studies of software prediction models. IEEE Trans. Softw. Eng. 2005, 31, 380–391.

- Kitchenham, B.A.; Pickard, L.M.; MacDonell, S.G.; Shepperd, M.J. What accuracy statistics really measure [software estimation]. IEE Proc. Softw. 2001, 148, 81–85.

- Shepperd, M.; MacDonell, S. Evaluating prediction systems in software project estimation. Inf. Softw. Technol. 2012, 54, 820–827.

- Villalobos-Arias, L.; Quesada-Lopez, C.; Guevara-Coto, J.; Martínez, A.; Jenkins, M. Evaluating hyper-parameter tuning using random search in support vector machines for software effort estimation. In Proceedings of the 16th ACM International Conference on Predictive Models and Data Analytics in Software Engineering, Virtual, 8–9 November 2020; pp. 31–40.

- Idri, A.; Hosni, M.; Abran, A. Improved estimation of software development effort using classical and fuzzy analogy ensembles. Appl. Soft Comput. 2016, 49, 990–1019.

- Gneiting, T. Making and evaluating point forecasts. J. Am. Stat. Assoc. 2011, 106, 746–762.

- Strike, K.; El Emam, K.; Madhavji, N. Software cost estimation with incomplete data. IEEE Trans. Softw. Eng. 2001, 27, 890–908.

- Hamid, M.; Zeshan, F.; Ahmad, A.; Ahmad, F.; Hamza, M.A.; Khan, Z.A.; Munawar, S.; Aljuaid, H. An intelligent recommender and decision support system (irdss) for effective management of software projects. IEEE Access 2020, 8, 140752–140766.

- Ali, A.; Gravino, C. A systematic literature review of software effort prediction using machine learning methods. J. Softw. Evol. Process. 2019, 31, e2211.

- Gautam, S.S.; Singh, V. The state-of-the-art in software development effort estimation. J. Softw. Evol. Process. 2018, 30, e1983.

- Silhavy, R.; Silhavy, P.; Prokopova, Z. Algorithmic optimisation method for improving use case points estimation. PLoS ONE 2015, 10, e0141887.

- Silhavy, R.; Silhavy, P.; Prokopova, Z. Analysis and selection of a regression model for the use case points method using a stepwise approach. J. Syst. Softw. 2017, 125, 1–14.

- Ochodek, M.; Nawrocki, J.; Kwarciak, K. Simplifying effort estimation based on use case points. Inf. Softw. Technol. 2011, 53, 200–213.

- Subriadi, A.P.; Ningrum, P.A. Critical review of the effort rate value in use case point method for estimating software development effort. J. Theoretical Appl. Inf. Technol. 2014, 59, 735–744.

- Hoc, H.T.; Hai, V.V.; Nhung, H.L.T.K. Adamoptimizer for the Optimisation of Use Case Points Estimation; Springer: Berlin/Heidelberg, Germany, 2020; pp. 747–756.

- Karner, G. Metrics for Objectory; No. LiTH-IDA-Ex-9344; University of Linkoping: Linkoping, Sweden, 1993; p. 21.

- ISO/IEC 20926:2009; Software and Systems Engineering—Software Measurement—IFPUG Functional Size Measurement Method. ISO/IEC: Geneva, Switzerland, 2009.

- Azzeh, M.; Nassif, A.; Banitaan, S. Comparative analysis of soft computing techniques for predicting software effort based use case points. IET Softw. 2018, 12, 19–29.

- Santos, R.; Vieira, D.; Bravo, A.; Suzuki, L.; Qudah, F. A systematic mapping study on the employment of neural networks on software engineering projects: Where to go next? J. Softw. Evol. Process. 2022, 34, e2402.

- Carbonera, E.C.; Farias, K.; Bischoff, V. Software development effort estimation: A systematic mapping study. IET Softw. 2020, 14, 328–344.

- Idri, A.; Hosni, M.; Abran, A. Systematic literature review of ensemble effort estimation. J. Syst. Softw. 2016, 118, 151–175.

- Ouwerkerk, J.; Abran, A. An evaluation of the design of use case points (UCP). In Proceedings of the International Conference On Software Process And Product Measurement MENSURA, Cádiz, Spain, 6–8 November 2006; pp. 83–97.

- Abran, A. Software Metrics and Software Metrology; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Geron, A. Ensemble Learning and Random Forests; O’Reilly: Sebastopol, CA, USA, 2019; pp. 189–212.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).