Abstract

This study examines the perceived and actual effectiveness of an LLM-driven tutor embedded in an educational game for Chinese as a foreign language (CFL) learners. Drawing on 82 chat sessions from 31 beginner-level (HSK3) CFL learners, we analyzed learners’ satisfaction ratings, accuracy before and after interacting with the tutor, and their post-interaction cognitive behaviors. The results showed that while most sessions received positive or neutral satisfaction scores, actual learning gains were limited, with only marginally significant improvements in accuracy following the learner-tutor interaction. Behavioral analysis further revealed that content-irrelevant responses (e.g., technical guidance) were linked to more effective, higher-level cognitive behaviors, whereas content-relevant responses (e.g., explanations of vocabulary or grammar) were associated with more superficial, less effective behaviors, suggesting a possible over-reliance on the LLM-driven tutor. Regression analyses also confirmed that neither satisfaction nor content relevance significantly predicted long-term behavior patterns. Taken together, these results indicate a disconnect between learners’ positive perceptions of the LLM-driven tutor and their actual learning benefits. This study highlights the need for multi-perspective evaluations of LLM-based educational tools and careful instructional design to avoid unintended cognitive dependence.

1. Introduction

As digital technologies evolve, digital game-based learning (DGBL), defined as the integration of educational content or learning principles within game environments (), is attracting increasing interest in language education. It offers highly engaging, goal-oriented experiences that can increase learners’ motivation, sustained participation, and development of cognitive and problem-solving skills (; ). In foreign language education, previous research has shown that DGBL can have a positive impact on learners’ language development and engagement (; ). Specifically, DGBL can support not only cognitive outcomes such as vocabulary development and reading comprehension but also non-cognitive factors such as motivation, engagement, and self-confidence, especially for learners with lower proficiency or confidence in the target foreign language ().

Recent developments in artificial intelligence, especially the emergence of large language models (LLMs) like GPT-4o, have begun to influence how feedback is conceptualized and delivered in technology-enhanced learning environments (). Unlike shallow, rule-based tutoring systems that provide static, scripted responses (), tutors powered by LLMs have been argued to offer more flexible and engaging interactions. LLMs can adjust in real time to a learner’s input and communication style, potentially making the learning experience more personalized and effective (). This shift has led to the emergence of LLM-driven learning tutors: dialogue-based agents integrated into educational environments that support learning by offering targeted hints, simplifying or elaborating content, and adapting task difficulty in response to learners’ performance. For instance, () introduced AI chatbots into role-playing scenarios where students practiced ordering food or asking for directions, receiving instant feedback on pronunciation, sentence structure, and phrasing. Similarly, () showed how AI tutors built on platforms such as Dialogflow and Vertex AI could offer step-by-step math problem-solving guidance personalized to the learner’s progress. As these tools become more accessible, systems that were once complex to build are becoming scalable across different educational settings, making personalized, real-time support a more attainable goal.

While the use of AI in education is expanding rapidly, there remains debate over how effective LLM-driven tutors truly are. () used survey data to show that LLM-powered tutoring agents can increase learners’ engagement and sense of control in interactive training environments. However, feeling engaged or supported does not always translate into actual learning advancements. Prior studies have shown that learners may feel they are learning more during certain types of instruction, even when their actual learning gains do not improve (). () discovered that while students supported by AI performed better in executing procedural steps, they did not demonstrate major gains in understanding concepts. Therefore, there is a growing need for studies that not only examine learners’ subjective satisfaction with AI tutors but also explore whether such positive perceptions translate into actual learning gains.

2. Literature Review

2.1. The Role of LLM-Driven Tutors in Digital Game-Based Learning Environments

DGBL has become an influential approach in education by integrating educational content into engaging and interactive experiences. Features like story-rich environments, progress-oriented tasks, and prompt feedback are known to improve motivation and maintain learner involvement (; ). However, even with these engaging features, many traditional DGBL systems tend to rely on fixed, rule-based feedback that can feel rigid and superficial and that often fails to capture the complexities of how learners are really engaging with the content. For instance, () point out that early DGBL environments often used static hints or pop-up messages that did not adapt to students’ ongoing challenges or diverse thinking processes. As () emphasizes, such systems struggle to provide flexible support for reasoning, especially with open-ended tasks like constructing sentences or debating ideas. Because of this, the full potential of DGBL as a teaching tool can be limited by feedback systems that lack responsiveness and depth, especially in learning contexts that require customized, situational support to encourage continuous improvement.

As the use of generative artificial intelligence continues to grow, new opportunities are emerging to overcome this long-standing challenge in DGBL. Rather than relying on fixed hints, LLM-driven tutors aim to interpret learners’ intentions, handle open-ended inputs, and provide more personalized feedback that may address both meaning and discourse. For instance, () showed how AI role-play scenarios let students practice genuine conversations, with the system adapting dynamically to pronunciation errors and misunderstandings. Similarly, () found that AI tutors designed with natural language interfaces can guide learners through tasks in flexible, step-by-step ways. More recently, () fine-tuned a locally deployed Llama-based AI peer agent using principles from peer tutoring and teachable agent research to support a K-12 STEM game-based learning platform, enabling the agent to prompt explanations, ask clarification questions, and adaptively reinforce student memory through curiosity-driven interactions. However, despite these promising affordances, it is still uncertain whether such AI tutors genuinely foster deeper conceptual and transferable learning gains, rather than merely improving procedural performance, as recent evidence indicates ().

Although research on using LLM-driven tutors in DGBL is still emerging, insights from studies outside this specific context clarify their potential to support learning. In traditional educational settings, () showed that learners working with an AI tutor achieved higher learning gains in less time and reported greater engagement compared to those in active in-class conditions. () also found that learners rated LLM-generated feedback more positively than instructor feedback when the source was hidden, and still considered it supportive even after learning it came from an AI tutor. However, despite notable achievements in AI in education, its actual impact on learners and educational practice has yet to be fully demonstrated (). These concerns highlight the need to determine whether such systems truly foster deeper learning rather than only momentary engagement. This question is particularly relevant in DGBL environments, where immersive gameplay drives high levels of involvement (), yet limited empirical work has examined whether LLM-driven tutors can translate this engagement into sustained learning gains.

2.2. Effectiveness of LLM-Driven Tutors: Perception vs. Reality

2.2.1. Perceived Effectiveness

Based on () definition of perceived usefulness as the extent to which a system is believed to enhance performance, perceived effectiveness (PE) in this study refers to learners’ belief that interacting with the LLM-driven tutor enhances their learning outcomes. In educational contexts, most research relies on self-reported data like Likert scale ratings, satisfaction surveys, or open-ended reflections to capture learners’ perceptions toward digital technologies (). Empirical evidence shows that learners in language learning contexts report high levels of satisfaction with LLM-driven tutors, viewing them as valuable complementary tools that help sustain engagement and promote autonomous improvement (). That said, closer examination shows that learners’ positive perceptions of LLM-driven tutors are often driven more by practical features such as ease of use, information quality, and interactivity, rather than by the actual instructional quality of the system (). Drawing on persuasion research, surface-level functional cues, such as emotional appeal, may lead individuals to overestimate the usefulness of a system even when substantive content is limited (). While PE has been shown to influence learners’ attitudes and behavioral intentions toward educational technologies (), it may not always correspond to actual learning gains. Overall, although PE provides important insights into the learner experience, it should be interpreted carefully, as elevated perceptions may at times reflect engaging design features rather than substantive cognitive support.

2.2.2. Actual Effectiveness

Unlike PE, which reflects learners’ subjective impressions of LLM-driven tutors, actual effectiveness (AE) refers to the measurable learning outcomes directly attributable to the tutors. AE is typically assessed through test scores, behavioral observations, or performance over time. For instance, () focused on students’ learning gains before and after interacting with an LLM tutor by comparing essay quality as well as confidence improvements. Meanwhile, () developed an eight-dimension evaluation taxonomy grounded in learning-sciences principles, synthesizing prior frameworks to assess LLM tutors’ feedback based on whether it can identify learner errors, provide actionable guidance, and respond in a motivational and pedagogically coherent manner. In addition to outcome-based evaluations of actual effectiveness, frameworks such as the ICAP framework (; ) offer a process-oriented perspective by categorizing learner engagement into Passive, Active, Constructive, and Interactive modes, with Constructive and Interactive behaviors being most strongly linked to meaningful learning gains. Although applications of ICAP to LLM-driven tutoring in DGBL environments remain limited, related studies highlight its potential as an evaluative lens. For example, () discovered that feedback encouraging elaboration and revision, hallmarks of true engagement, considerably boosted metacognitive processing, whereas simple confirmatory feedback like “Correct!” had little impact. Likewise, () found that students who received detailed, personalized video comments such as “Consider how this connects to concept X” performed better than those given brief, generic praise. These findings suggest that future evaluations of LLM-driven tutors may benefit from not only assessing learning outcomes, but also examining the quality of engagement they elicit, particularly whether interactions promote constructive meaning-making or merely surface-level response behaviors.

2.3. The Present Study

Despite growing interest in both perceived and actual effectiveness of LLM-driven tutors, several knowledge gaps remain. First, only a handful of studies jointly examine PE and AE within the same design, making it difficult to determine whether learners’ subjective impressions reflect meaningful learning benefits. Second, even when AE is considered, it is typically measured using test scores alone, with little attention paid to behavioral learning processes during learner–AI interaction, particularly within DGBL environments.

Addressing these research gaps, this study aims to understand whether CFL learners’ perceptions of the effectiveness of the LLM-driven tutor align with their actual progress in the DGBL environment. In this paper, an LLM-driven tutor refers to an AI-powered virtual tutor built on an LLM, which is designed to provide learners with real-time linguistic support and adaptive feedback through text or voice interaction. This study also explores how learners’ behavioral patterns, referring to the ways they process and respond to in-game tasks (e.g., error correction vs. repeated errors), might change before and after interacting with the LLM-driven tutor. Specifically, we are interested in three key questions: (1) How does the content relevance of LLM-driven tutor responses affect learners’ perceived effectiveness of the interaction? (2) How does interaction with the LLM-driven tutor influence learners’ actual effectiveness? (3) To what extent does learners’ perceived effectiveness of the LLM-driven tutor translate into actual effectiveness? By exploring these questions, we hope to gain better insights into how LLM-driven tutors can be effectively designed and evaluated in the DGBL environment, eventually working toward improvements in both learner satisfaction and learning outcomes.

3. Method

3.1. LLM-Driven Tutor System

The learner–tutor interactions analyzed in this study were derived from an LLM-driven tutor embedded within the 3D educational game Ethan’s Life in Beijing, developed by our research team. The game was designed to help CFL learners acquire HSK3-level vocabulary and develop discourse comprehension skills. All game content, including storyline and character dialogues, was aligned with the learning objectives outlined in the HSK3 Standard Course (). The design of in-game tasks was consistent with the reading comprehension exercises featured in the HSK3 Standard Course Workbook (e.g., short passages followed by multiple-choice questions). The LLM-driven tutor is powered by iFlytek’s Spark LLM, with output generation constrained by a carefully crafted prompt engineering strategy.

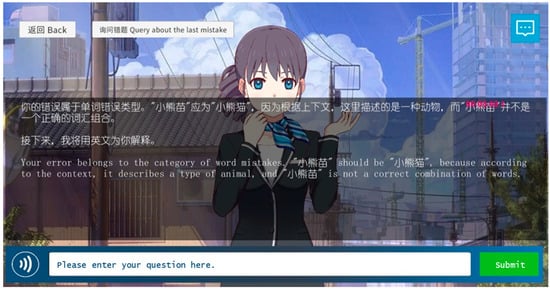

As shown in Figure 1, the LLM-driven tutor interface was designed with multiple instructional design considerations to support both engagement and comprehension. The tutor’s avatar is equipped with lip-synced animations synchronized with its spoken output, drawing on Mayer’s multimedia learning principles to facilitate audio–visual integration and pronunciation modeling (). Moreover, the tutor’s speech rate is set to 0.8×, closely matching the speed of HSK listening tests, allowing learners to adapt to authentic language input without cognitive overload.

Figure 1.

Screenshot of LLM-driven tutor interface.

The prompt design comprises three main components. First, it defines the LLM-driven tutor’s role explicitly as a professional Chinese language teacher and requires it to provide linguistic guidance from a pedagogical perspective. Second, the prompt includes the complete vocabulary and grammar points corresponding to the HSK level associated with the current game version. It instructs the LLM to incorporate explanations of any relevant Chinese language knowledge points present in the learner’s question. Third, the output format is standardized: the tutor is instructed to first offer a concise explanation in Chinese, limited to 150 characters, followed by a transition phrase—“接下来,我将用英文为你解释 (Next, I will explain in English)”—to introduce a supplementary explanation in English.

The prompt structure was deliberately crafted to align with established theories of language learning and instructional design. First, by explicitly defining the LLM-driven tutor’s role as a professional Chinese language teacher, the system adopts a pedagogical stance that promotes guided learning rather than generic information retrieval. This approach resonates with scaffolding theory () and recent evidence that role-play prompting enhances reasoning accuracy and domain-specific performance in LLMs (). Second, the integration of HSK-level vocabulary and grammar points reflects () i + 1 hypothesis, whereby the input is calibrated to be slightly more challenging than the learner’s current proficiency. Third, the standardized dual-language output format, which consists of a concise Chinese explanation (≤150 characters) followed by an English supplement, draws on Cognitive Load Theory (). This design minimizes extraneous load by presenting digestible, well-structured chunks, while the English transition aids cross-linguistic awareness (), enabling learners to reflect on both form and meaning without cognitive overload.
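To make the three prompt components concrete, the following is a minimal sketch of how such a prompt could be assembled. It is not the prompt used in the game: the function name `build_tutor_prompt`, the English wording of each component, and the sample vocabulary and grammar entries are illustrative assumptions. Only the role definition, the 150-character limit, and the transition phrase are taken from the description above.

```python
# Hypothetical sketch: the wording below is illustrative, not the authors' actual prompt.
MAX_CHINESE_CHARS = 150                     # length limit stated in the text
TRANSITION = "接下来,我将用英文为你解释"      # transition phrase quoted in the text


def build_tutor_prompt(hsk_level: int, vocabulary: list[str], grammar_points: list[str]) -> str:
    """Assemble a system prompt from the three components described above."""
    # Component 1: explicit role definition.
    role = (
        "You are a professional Chinese language teacher. "
        "Give linguistic guidance from a pedagogical perspective."
    )
    # Component 2: level-specific vocabulary and grammar points.
    knowledge = (
        f"The learner is studying HSK{hsk_level}. "
        f"Vocabulary: {', '.join(vocabulary)}. "
        f"Grammar points: {'; '.join(grammar_points)}. "
        "Explain any of these knowledge points that appear in the learner's question."
    )
    # Component 3: standardized dual-language output format.
    output_format = (
        f"First give a concise explanation in Chinese (at most {MAX_CHINESE_CHARS} characters). "
        f"Then write the transition phrase \"{TRANSITION}\" and add a supplementary explanation in English."
    )
    return "\n\n".join([role, knowledge, output_format])


# Placeholder vocabulary and grammar entries, chosen only for illustration.
prompt = build_tutor_prompt(3, ["环境", "决定"], ["把 sentences", "比 comparisons"])
print(prompt)
```

Keeping the three components as separate strings makes it straightforward to swap the vocabulary list when the game version targets a different HSK level.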

Learners can access the LLM-driven tutor by clicking the “Ask the Tutor” button in the game interface, which currently supports two main interaction modes. In the real-time inquiry mode, learners can input text or voice questions about linguistic or cultural content encountered during gameplay. The tutor then generates responses based on a pre-set prompt strategy. In the error inquiry mode, learners can click the “Ask About This Mistake” button after making an incorrect response. The system compiles relevant error information, including the question, available options, correct answer, and the learner’s choice, before analyzing the type of error (e.g., grammatical, lexical, or contextual) and providing a bilingual explanation of the underlying cause. Additionally, a conversation history function is integrated into the system. Learners can click the blue chat icon at the top-right corner of the interface to review past interactions with the LLM-driven tutor during their learning experience.

Although the tutor offers on-demand corrective feedback, its responses extend beyond mere error correction. For incorrect answers, the system explains not only the linguistic rule but also the conceptual rationale behind the correct form, aligning with best practices in form-focused instruction. As illustrated in Figure 1, when learners click the “Query about the last mistake” button, the system not only identifies the error category (e.g., word mistakes) but also provides a bilingual explanation that clarifies the linguistic rule and contextual meaning.
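The error inquiry mode described above compiles four pieces of information before asking the LLM for an explanation. A minimal sketch of that compilation step is shown below, assuming a simple text-based request. The class `ErrorContext`, the function `build_error_query`, and the sample item are hypothetical names and data introduced for illustration; the actual in-game data format is not specified in this paper.

```python
from dataclasses import dataclass

# Error categories named in the text; the tutor is asked to pick one of them.
ERROR_TYPES = ("grammatical", "lexical", "contextual")


@dataclass
class ErrorContext:
    """Information the system compiles after an incorrect response (hypothetical structure)."""
    question: str
    options: list[str]
    correct_answer: str
    learner_choice: str


def build_error_query(ctx: ErrorContext) -> str:
    """Format the compiled error information as a request for a bilingual explanation."""
    lines = [
        f"Question: {ctx.question}",
        f"Options: {' | '.join(ctx.options)}",
        f"Correct answer: {ctx.correct_answer}",
        f"Learner's choice: {ctx.learner_choice}",
        f"Classify the error as one of: {', '.join(ERROR_TYPES)}.",
        "Then explain the underlying cause in Chinese and in English.",
    ]
    return "\n".join(lines)


# Invented sample item, for illustration only.
ctx = ErrorContext(
    question="他为什么迟到了?",
    options=["A 起晚了", "B 生病了", "C 没有车"],
    correct_answer="A 起晚了",
    learner_choice="C 没有车",
)
query = build_error_query(ctx)
print(query)
```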

3.2. Participants

Thirty-one CFL learners (16 male, 15 female) participated in this study. Participants ranged in age from 18 to 35 years (M = 23.9) and, on average, had been studying Chinese for approximately 16 months. Their mean Chinese proficiency level was HSK 3. All participants were international students currently enrolled at a university in China, with academic majors spanning computer science, Teaching Chinese to Speakers of Other Languages (TCSOL), education, and other disciplines. They had diverse linguistic and cultural backgrounds, primarily from Asia and Africa. All participants possessed basic English proficiency, as English is commonly used as a supporting language for beginners learning Chinese (). None of the participants had prior experience with an LLM-driven Chinese learning tutor or a 3D Chinese educational game before taking part in the gameplay activity.

3.3. Procedure

Participants were students enrolled in a Chinese course who voluntarily took part in a classroom-based gameplay activity. The research team first made the game used in this study available for downloading on the official website, allowing learners to access and install it at their own discretion. During the gameplay session, participants independently played the game for approximately 60 min, using personal devices such as computers or tablets. Researchers did not intervene in the gameplay process, except for providing brief operational instructions at the beginning of the activity. Learners were free to control their own learning pace throughout the 60 min session and could initiate multiple chat sessions with the LLM-driven tutor during gameplay. To ensure completeness and accuracy of data collection, the game was programmed to automatically record participants’ learning process data during the gameplay session. This included their answer correctness, behavioral sequences before and after interactions with the LLM-driven tutor (e.g., whether a learner corrected a previous error, made a new error, or submitted the same incorrect option repeatedly), chat logs with the tutor, and responses to a post-interaction PE questionnaire for each dialogue. All data were uploaded in real time to a cloud-based MySQL server for subsequent analysis.

3.4. Category of Chat Topic

To capture the interaction process between learners and the LLM-driven tutor, this study analyzed the chat transcripts generated during their conversations. Considering that beginner-level CFL learners may have difficulty articulating their questions accurately, the analysis was based on the response types generated by the LLM-driven tutor. This approach served as a proxy for interpreting how the tutor understood and responded to learners’ intentions. During gameplay, learners were allowed to pose open-ended questions to the LLM-driven tutor. For example, some learners requested explanations of specific vocabulary items in a question (e.g., “What does xx mean?”), while others engaged in off-topic conversations unrelated to the game content (e.g., “Good morning, how are you doing today?”). Accordingly, the tutor’s responses were categorized into two broad types: content-relevant and content-irrelevant. Content-relevant responses referred to those directly related to the learning material, including explanations of vocabulary and grammar points that appeared in the game. In contrast, content-irrelevant responses were those unrelated to the learning content, such as technical guidance on how to operate the game.

4. Results

4.1. The Perceived Effectiveness of the LLM-Driven Tutor

After completing each chat session with the LLM-driven tutor (a chat session was defined as a complete learner–tutor exchange that began when a learner clicked the “Ask the Tutor” button and ended when the learner returned to the game interface), learners were asked to evaluate the quality of the tutor’s responses during that session. The rating ranged from 1 to 3, where 1 indicated “satisfied,” 2 indicated “neutral,” and 3 indicated “dissatisfied,” with lower scores reflecting higher perceived effectiveness. Across the 31 participants, multiple sessions occurred during the gameplay, yielding a total of 82 chat sessions (M = 2.65 per participant, range = 1–11, SD = 2.87). Each chat session contained an average of 1.68 turns (one turn = one learner question followed by a complete response from the tutor), indicating that most sessions were brief and often consisted of a single turn. The survey results showed that 47 chat sessions (57.3%) received a PE rating of 1, 31 sessions (37.8%) received a rating of 2, and only 4 sessions (4.9%) received a rating of 3. The average PE score across all sessions was 1.57, with 95.1% of sessions receiving either positive or neutral feedback. When averaging PE scores at the participant level, the mean rating was 1.47, indicating that the results were not dominated by a few highly active learners. These results suggest that the LLM-driven tutor was generally well-received by the learners. To further examine the relationship between response type and PE, this study compared PE scores between the 39 content-relevant responses (47.6%) and the 43 content-irrelevant responses (52.4%). The median satisfaction score for the content-relevant group was 1, with a mean rank of 36.90, while the content-irrelevant group had a median score of 2 and a mean rank of 45.67. Results from a Mann–Whitney U test indicated a marginally significant difference between the two groups (U = 659.000, z = −1.915, p = 0.056), with content-relevant responses being slightly more satisfactory to learners than content-irrelevant ones.
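The reported test statistics can be cross-checked from the summary values given above. The sketch below recovers U from the group size and mean rank of the content-relevant group, then applies the normal approximation with a tie correction based on the three rating counts (47, 31, and 4). It assumes no continuity correction, which is an assumption about how the original analysis was run rather than something stated in the text.

```python
import math

# Summary values taken from the text.
n_rel, n_irr = 39, 43            # content-relevant / content-irrelevant sessions
mean_rank_rel = 36.90            # mean rank of the content-relevant group
rating_counts = [47, 31, 4]      # sessions rated 1, 2, and 3 (tied groups)

n_total = n_rel + n_irr

# U for a group = its rank sum minus the smallest possible rank sum.
u_rel = n_rel * mean_rank_rel - n_rel * (n_rel + 1) / 2

# Standard deviation of U under the null hypothesis, corrected for ties.
tie_term = sum(t**3 - t for t in rating_counts) / (n_total * (n_total - 1))
sd_u = math.sqrt(n_rel * n_irr / 12 * ((n_total + 1) - tie_term))

# Normal approximation (assumed: no continuity correction).
z = (u_rel - n_rel * n_irr / 2) / sd_u
p_two_sided = math.erfc(abs(z) / math.sqrt(2))

print(f"U = {u_rel:.1f}, z = {z:.3f}, p = {p_two_sided:.3f}")
```

Under these assumptions the computed values agree with the reported U, z, and p to within rounding of the mean rank. The tie correction matters here because a three-point scale produces heavily tied ranks.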

4.2. The Actual Effectiveness of the LLM-Driven Tutor

4.2.1. Differences in Accuracy Before and After Interacting with the LLM-Driven Tutor

In addition to analyzing PE, this study also assessed AE of the LLM-driven tutor. One of the key dimensions for AE was the correctness of the learner’s response to the last in-game task completed before the learner-tutor interaction and the first in-game task attempted after the same interaction. The first post-interaction in-game task was selected as a focal measure of AE because, compared to later items, it was less affected by practice effects or fatigue effects, thereby offering a more reliable indication of the tutor’s immediate impact (). Furthermore, performance on the first in-game task served as a timely reflection of the learner’s knowledge acquisition, providing insight into the immediate influence of the tutor on in-game performance.

Descriptive analysis showed that the average accuracy of the last in-game task completed before the interaction was 0.71, whereas the accuracy of the first in-game task answered after the interaction increased to 0.88. Due to incomplete response data in some sessions, where learners either did not complete an in-game task before or after the interaction, the paired comparison analysis was limited to 41 sessions with complete records for both time points. A paired-sample t-test revealed a marginally significant difference in accuracy before and after interacting with the LLM-driven tutor (t(40) = 2.012, p = 0.051, Cohen’s d = 0.31). This result suggests that learners’ in-game task performance slightly improved following the interaction.
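For readers who wish to reproduce this kind of comparison, the sketch below computes a paired-sample t statistic and Cohen's d from binary correctness scores. The function name `paired_t_and_d` and the six-session sample are hypothetical and are not the study's data. The effect size here is the mean difference divided by the standard deviation of the differences, which is one common convention and is assumed rather than confirmed by the text.

```python
import math
from statistics import mean, stdev


def paired_t_and_d(before: list[int], after: list[int]) -> tuple[float, float, int]:
    """Return (t, Cohen's d, degrees of freedom) for paired observations."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    d = mean(diffs) / stdev(diffs)   # effect size based on the difference scores
    t = d * math.sqrt(n)             # equivalently mean / (sd / sqrt(n))
    return t, d, n - 1


# Invented correctness data (1 = correct, 0 = incorrect) for six sessions.
before = [0, 1, 1, 0, 1, 1]
after = [1, 1, 1, 1, 1, 0]
t, d, df = paired_t_and_d(before, after)
print(f"t({df}) = {t:.3f}, d = {d:.3f}")

# Consistency check on the reported values: with 41 sessions, d = t / sqrt(n).
reported_d = 2.012 / math.sqrt(41)
print(round(reported_d, 2))
```

Under this convention, the reported t(40) = 2.012 and d = 0.31 are mutually consistent.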

4.2.2. Post-Interaction Cognitive Behavior Patterns

To further evaluate AE of the LLM-driven tutor, this study examined changes in learners’ cognitive behavior patterns following the learner-tutor interaction. The aim was to examine whether the tutor could improve learners’ knowledge application, error correction, and adjustments in learning strategies, as these behaviors may signal longer-term learning gains.

Five types of cognitive behaviors that occurred after interacting with the LLM-driven tutor were identified and coded: pass, failure, error correction, repeated errors, and new errors. These were categorized into two overarching groups based on whether learners successfully completed the in-game task: effective cognitive behaviors (pass and error correction) and ineffective cognitive behaviors (failure, repeated errors, and new errors) (see Table 1). Among them, error correction was classified as a highly effective cognitive behavior as it reflected deep processing and conceptual change following cognitive conflict (). In contrast, repeated errors were classified as highly ineffective cognitive behaviors as they suggested a lack of self-explanation or conceptual revision, potentially reinforcing erroneous patterns over time (). Similarly, a pass was considered effective because it reflected correct comprehension and application of knowledge without tutor assistance, while failure and new errors were deemed ineffective since they highlighted gaps in understanding or the inability to transfer feedback into accurate responses.

Table 1.

Classification of cognitive behaviors by effectiveness level.

To analyze the relationship between response relevance and cognitive behavior, a cross-tabulation framework was constructed with two dimensions: content relevance (relevant vs. irrelevant) and cognitive behavior type (highly effective, low effective, low ineffective, highly ineffective) (see Table 2). Given the difference in the total number of behaviors observed across the two groups, behavioral proportions within a fixed time window were used as the unit of analysis instead of raw frequencies to ensure fair and valid group comparisons. Independent-sample t-tests revealed significant differences in cognitive behavior patterns between the content-relevant and content-irrelevant groups. Specifically, for highly effective cognitive behaviors, the content-irrelevant group had a significantly higher proportion than the content-relevant group (t(80) = 2.828, p < 0.05). For low effective cognitive behaviors, the content-irrelevant group also had a significantly higher proportion than the content-relevant group (t(80) = 3.115, p < 0.01). For low ineffective cognitive behaviors, the content-relevant group showed a significantly higher proportion (t(80) = −2.588, p < 0.05). Finally, for highly ineffective cognitive behaviors, the difference did not reach statistical significance (t(80) = 1.750, p = 0.084), suggesting no significant difference between the two groups in this dimension.

Table 2.

Distribution of cognitive behaviors by content relevance and effectiveness level.
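The coding scheme and the proportion-based unit of analysis can be illustrated with a short sketch. The text explicitly assigns error correction to the highly effective level and repeated errors to the highly ineffective level. Placing pass in the low effective level and failure and new errors in the low ineffective level is an inference from the four-level scheme, not a reproduction of Table 1. The identifiers and the sample sequence are hypothetical.

```python
from collections import Counter

LEVELS = ("highly_effective", "low_effective", "low_ineffective", "highly_ineffective")

# Mapping from coded behavior to effectiveness level.
# Only the first and last entries are stated explicitly in the text; the rest are inferred.
LEVEL_BY_BEHAVIOR = {
    "error_correction": "highly_effective",
    "pass": "low_effective",
    "failure": "low_ineffective",
    "new_error": "low_ineffective",
    "repeated_error": "highly_ineffective",
}


def level_proportions(behaviors: list[str]) -> dict[str, float]:
    """Convert one session's post-interaction behaviors into proportions per level."""
    counts = Counter(LEVEL_BY_BEHAVIOR[b] for b in behaviors)
    total = len(behaviors)
    return {level: (counts[level] / total if total else 0.0) for level in LEVELS}


# Invented behavior sequence observed after one chat session.
session = ["pass", "pass", "error_correction", "repeated_error"]
proportions = level_proportions(session)
print(proportions)
```

Using proportions rather than counts keeps sessions with many post-interaction behaviors from dominating the group comparison.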

4.3. Relationship Between Perceived and Actual Effectiveness of the LLM-Driven Tutor

4.3.1. Effect of Perceived Effectiveness on Immediate Cognitive Performance

To investigate the effect of PE on learners’ immediate cognitive performance, this study used the change in response correctness as an indicator of learning impact. Specifically, the change in correctness was defined as the difference between the correctness of the first question answered after interacting with the LLM-driven tutor (1 = correct, 0 = incorrect) and the correctness of the most recent question answered before the interaction. The calculation formula was as follows:

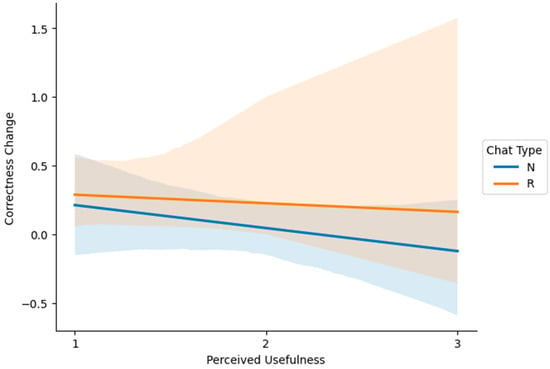
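Restating the verbal definition above in symbols (the notation is chosen here for illustration and may differ from the original equation), the change in correctness for chat session $i$ is:

$$
\Delta\text{Correctness}_i = \text{Correctness}_{i,\text{post}} - \text{Correctness}_{i,\text{pre}}, \qquad \text{Correctness}_{i,\cdot} \in \{0, 1\}
$$

The change therefore takes one of three values: $1$ (incorrect before, correct after), $0$ (no change), or $-1$ (correct before, incorrect after).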

Spearman correlation analysis revealed no significant relationship between PE and change in correctness, either in the content-relevant group (ρ = −0.045, p = 0.873) or the content-irrelevant group (ρ = −0.214, p = 0.294). Figure 2 further illustrates the relationship between PE and changes in correctness. The x-axis represents learners’ satisfaction ratings of the tutor’s response (1 = satisfied, 3 = dissatisfied), while the y-axis shows the change in correctness. The orange and blue lines represent trend lines for the content-relevant (R) and content-irrelevant (N) groups, respectively. Shaded areas indicate the 95% confidence intervals. As shown in Figure 2, both trend lines are nearly flat, suggesting no clear linear relationship between satisfaction ratings and performance change within either group. These findings indicate that learners’ PE of the LLM-driven tutor did not have a measurable impact on their immediate cognitive performance.

Figure 2.

Relationship between perceived effectiveness and the change in correctness.

4.3.2. Effect of Perceived Effectiveness of the LLM-Driven Tutor on Long-Term Learning Behavior Patterns

To examine whether PE of the LLM-driven tutor influenced learners’ long-term learning behavior, an Ordinary Least Squares (OLS) regression analysis was conducted. The dependent variables were the proportional occurrences of four types of cognitive behaviors following the learner–tutor interaction. The regression model was specified as follows:

where CR denotes Content Relatedness of the chat session. Model diagnostics revealed that the assumption of homoscedasticity was violated for the low effective and highly ineffective models. Consequently, heteroscedasticity-consistent robust standard errors (HC3) were applied to these models, while the high effective cognitive behavior and low ineffective cognitive behavior models retained standard OLS estimates.

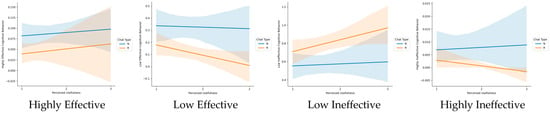
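Based on the three predictors reported in the results (PE, content relevance, and their interaction) and the model degrees of freedom, F(3, 78), the specification can be written as follows. This is a reconstruction under those assumptions, and the symbols are illustrative rather than taken from the original equation:

$$
P_{k} = \beta_0 + \beta_1\,\text{PE} + \beta_2\,\text{CR} + \beta_3\,(\text{PE} \times \text{CR}) + \varepsilon
$$

Here $P_{k}$ is the proportion of cognitive behavior type $k$ (highly effective, low effective, low ineffective, or highly ineffective) following a chat session, and one such model is fitted for each of the four behavior types.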

The regression results showed that all four models were statistically significant when applying robust standard errors where needed. Specifically, the highly effective cognitive behavior model (F(3,78) = 2.807, p < 0.05, f² = 0.107), low effective cognitive behavior model (robust F(3,78) = 7.217, p < 0.01, f² = 0.140), low ineffective cognitive behavior model (F(3,78) = 2.923, p < 0.05, f² = 0.112), and highly ineffective cognitive behavior model (robust F(3,78) = 3.032, p < 0.05, f² = 0.044) all reached statistical significance. Further analysis of the predictor variables revealed that PE, content relevance, and their interaction each failed to significantly predict any of the four types of cognitive behavior (p > 0.05 in all cases). This suggests that learners’ subjective satisfaction with the LLM-driven tutor’s responses and the relevance of the chat content to the learning task were not reliable predictors of subsequent cognitive behavior patterns. In other words, no statistically significant association was found between PE and actual learning behavior when examined at the predictor level.

Figure 3 further illustrates the trends between PE and the proportions of each type of cognitive behavior. In both the content-relevant group (orange) and the content-irrelevant group (blue), the trend lines for all four behavior types remained relatively flat, showing no clear linear patterns. Behavioral proportions changed only minimally across levels of perceived effectiveness, indicating that perceived effectiveness had little influence on the distribution of learners’ cognitive behaviors. These trends reinforce the regression results: PE has very limited predictive value for long-term learning behavior patterns.

Figure 3. Relationship between perceived effectiveness and cognitive behaviors.

5. Conclusions and Discussion

This study examined the discrepancy between PE and AE of the LLM-driven tutor in the DGBL environment. It first assessed the tutor’s AE by analyzing changes in learners’ immediate in-game task performance and shifts in cognitive learning behaviors following the interaction, and then compared AE with learners’ subjective PE. Specifically, it explored how the relevance of learner–tutor interactions to the educational content influenced both PE and AE, and then investigated the relationship between these two types of effectiveness. In terms of PE, consistent with prior research reporting learners’ generally high perceived effectiveness of AI tutors (; ), most learners in this study viewed the LLM-driven tutor favorably.

However, analysis of AE revealed only marginally significant improvements in learners’ accuracy on the subsequent in-game task after interacting with the LLM-driven tutor, as confirmed by paired-sample t-tests. This suggests that the tutor had a limited immediate impact on learners’ cognitive performance. This finding aligns with earlier studies showing that when learners act as questioners rather than responders in AI-assisted language learning, their cognitive gains may be limited, potentially because they have fewer opportunities for active thinking and language production (). Similarly, () found that learners who received direct LLM-generated answers showed only minimal performance gains compared to a control group without LLM support ().

Moreover, cognitive behavioral pattern analysis revealed significant differences between learners who received content-relevant responses from the LLM-driven tutor and those who received content-irrelevant responses. The content-irrelevant group exhibited a higher proportion of both highly effective and low effective cognitive behaviors, while the content-relevant group showed a significantly higher proportion of ineffective cognitive behaviors. This counterintuitive result is consistent with a concern raised in prior reviews (): when the AI tutor provides answers directly aligned with the learning content, it may reduce learners’ engagement in effective cognitive behaviors. According to cognitivist learning theory, meaningful learning occurs only when learners recognize and resolve misconceptions through cognitive conflict and negotiation of meaning (). The findings suggest that without proper guidance and instructional design, LLMs may be misused as substitutes for learner thinking rather than as scaffolds to support it, thereby suppressing necessary cognitive load and deep processing ().

The comparison between perceived and actual effectiveness further revealed a gap: although learners generally perceived the LLM-driven tutor as helpful, this perception did not translate into significant improvements in their actual cognitive performance. OLS regression analysis further confirmed that PE had limited predictive power for learners’ long-term behavioral patterns. These results highlight a critical issue: positive subjective evaluations of LLM-driven tutors do not necessarily reflect their effectiveness in improving learning outcomes. This discrepancy may stem not only from differences between perception and reality but also from variations in PE among different stakeholders. For example, () found that while learners participating in LLM-assisted EFL writing tasks believed that the LLM helped them identify weaknesses and iteratively refine their work, English education experts rated the effectiveness of LLM feedback significantly lower. Researchers attributed this divergence to differing evaluation criteria: learners prioritized ease of use and time-saving, while experts emphasized pedagogical logic and feedback structure (). Therefore, relying solely on learners’ PE is insufficient for evaluating LLM-based educational tools. A robust evaluation of these tools should incorporate the perspectives of multiple stakeholders, including teachers’ judgments about pedagogical alignment, parents’ perceptions of learning value, administrators’ considerations of sustainability and equity, and researchers’ empirical assessments of cognitive and behavioral outcomes (). Bridging the perception–effectiveness gap requires multi-perspective frameworks to guide the responsible integration of AI in education.

Interestingly, our findings suggest that content-irrelevant responses may foster more effective cognitive behaviors than content-relevant ones. To further investigate this unexpected pattern, we reviewed several complete chat histories involving content-irrelevant responses and observed notable interactional dynamics. For example, one learner began with a self-introduction (“I’m taking a Chinese course, and she is my classmate.”), to which the tutor responded with a friendly follow-up question (“It’s nice to meet you! What interesting things have happened in your class recently?”). This social exchange evolved into a seven-turn dialogue, during which the learner described their hometown and even asked about the availability of more English support. From the perspective of the ICAP framework (), such interactions may elevate learners from passive reception to constructive or even interactive engagement as learners actively generate new content, express personal experiences, and negotiate meaning. Furthermore, this aligns with Vygotsky’s social interaction theory, which emphasizes that authentic dialogue and social sharing can scaffold language development by situating learning within meaningful interpersonal exchanges (). Here, the learner’s willingness to elaborate on personal topics was likely stimulated by the tutor’s socially engaging prompts, thereby turning a casual conversation into an opportunity for real-world language practice.

Another example comes from a learner who asked a vague technical question about returning to the game. The tutor responded with “Could you express your question more clearly?”, which prompted the learner to reflect, reorganize their thoughts, and produce a more precise question. This reflective self-repair mirrors the constructive behaviors emphasized in ICAP, where learners deepen their understanding by clarifying and restructuring their own mental models.

These findings also highlight key design implications for future AI tutor development. While content-irrelevant responses unexpectedly encouraged constructive engagement, content-relevant responses often led to passive reliance, suggesting the need for guided but non-intrusive feedback. First, AI tutors could incorporate follow-up questions into content-relevant responses. For instance, after explaining why an answer is incorrect, the tutor could generate a similar example question and ask the learner to try again (“Can you choose the correct option for this new example?”). This would encourage learners to actively apply the knowledge they just learned, reinforcing error correction and improving retention. Second, tutors could integrate encouraging and socially engaging prompts, as observed in content-irrelevant dialogues. Acknowledging learners’ efforts (e.g., “Good try! Can you think of another example?”) or providing motivational comments may help maintain active engagement and boost confidence. Third, future AI tutors should leverage learner profiling (e.g., language proficiency level, commonly misunderstood knowledge points, and preferred tutoring methods) to deliver personalized guidance. By adapting feedback to individual needs, such as offering simpler explanations to beginners or challenging prompts to advanced learners, the tutor can better balance scaffolding with learner autonomy, thereby reducing over-reliance while fostering active knowledge construction.
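The three suggestions above could be operationalized in many ways. The following is only a rough sketch, under stated assumptions, of how they might be turned into instructions passed to an LLM-driven tutor. The `LearnerProfile` fields, the proficiency labels, the instruction wording, and the function name are all hypothetical and are not taken from the system evaluated in this study.

```python
from dataclasses import dataclass, field


@dataclass
class LearnerProfile:
    """Hypothetical learner profile used to personalize tutor guidance."""
    proficiency: str = "beginner"  # assumed labels: "beginner" or "advanced"
    weak_points: list[str] = field(default_factory=list)


def build_tutor_instructions(profile: LearnerProfile, content_relevant: bool) -> str:
    """Assemble tutor instructions that reflect the three design suggestions."""
    rules = []

    # 1. Follow-up questions: avoid handing over answers without practice.
    if content_relevant:
        rules.append(
            "After explaining why an answer is incorrect, give one similar "
            "example question and ask the learner to try again."
        )

    # 2. Encouraging, socially engaging prompts.
    rules.append("Acknowledge the learner's effort before giving feedback.")

    # 3. Personalization based on the learner profile.
    if profile.proficiency == "beginner":
        rules.append("Use short, simple explanations.")
    else:
        rules.append("Offer more challenging prompts.")
    if profile.weak_points:
        rules.append(
            "Pay particular attention to: " + ", ".join(profile.weak_points) + "."
        )

    return "\n".join(f"- {rule}" for rule in rules)


profile = LearnerProfile(proficiency="beginner", weak_points=["measure words"])
print(build_tutor_instructions(profile, content_relevant=True))
```

Keeping the rules as separate, conditionally added items makes it straightforward to test which of the three suggestions actually changes learners' post-interaction behavior.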

This study first evaluated the AE of LLM-driven tutors in the DGBL environment by analyzing learner–tutor interaction data alongside immediate task performance and subsequent cognitive behavior changes. While learners expressed high PE, the AE was limited, and, in some cases, the LLM-driven tutor may have dampened learners’ cognitive engagement. These findings not only confirm earlier concerns regarding the potentially adverse impact of generative AI on learning outcomes () but also suggest that unstructured AI feedback may foster cognitive dependence rather than support metacognitive skill development. Nonetheless, certain limitations must be acknowledged. First, although some learners initiated multiple chat sessions (up to 11) within the 60-minute gameplay session, the dataset was still based on short-term interactions and single-session behavioral data, which may not fully capture long-term language development or transfer across contexts. Second, individual differences (e.g., learning styles, anxiety) were not analyzed, although they may play a critical role in shaping learners’ responses to LLM-driven tutoring. Third, variables such as task type, interaction frequency, and the LLM-driven tutor’s feedback styles were not systematically controlled. Future research should adopt longitudinal designs, extend the study duration, and diversify data sources, integrating both quantitative and qualitative methods to examine the cumulative effects and cognitive trajectories of diverse learner profiles in AI-supported environments.

Author Contributions

Conceptualization, L.Z. and L.F.; methodology, L.Z. and L.F.; software, L.F.; validation, L.Z.; formal analysis, L.F.; investigation, L.Z.; resources, L.Z. and L.F.; data curation, L.F.; writing—original draft preparation, L.F. and G.T.; writing—review and editing, L.Z., L.F. and G.T.; visualization, L.F.; supervision, L.Z.; project administration, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2024 International Chinese Language Education Research Project “Research on Key Technologies and Application Evaluation of Intelligent Interaction and Virtual Context Integration for International Chinese Discourse Comprehension” (Project No. 24YH05C), and was supported by the 2023 Key Projects of Beijing Higher Education Society [ZD202309].

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Research Ethics Committee of Beijing University of Posts and Telecommunications (No. BUPT-E-2025007; approval date: 10 March 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Afzal, S., Dempsey, B., D’Helon, C., Mukhi, N., Pribic, M., Sickler, A., Strong, P., Vanchiswar, M., & Wilde, L. (2019). The personality of AI systems in education: Experiences with the Watson Tutor, a one-on-one virtual tutoring system. Childhood Education, 95(1), 44–52.

- Ahmed, J., & Mahin, M. M. K. (2025). AI chatbot for solving mathematical problems using large language models and retrieval-augmented generation (RAG) with custom dataset integration. Available online: http://ar.cou.ac.bd:8080/handle/123456789/92 (accessed on 11 June 2025).

- Almulla, M. A. (2024). Investigating influencing factors of learning satisfaction in AI ChatGPT for research: University students’ perspective. Heliyon, 10(11), e32220.

- Bialystok, E. (2001). Bilingualism in development: Language, literacy, and cognition. Cambridge University Press.

- Borchers, C., Carvalho, P. F., Xia, M., Liu, P., Koedinger, K. R., & Aleven, V. (2023, August). What makes problem-solving practice effective? Comparing paper and AI tutoring. In European conference on technology enhanced learning (pp. 44–59). Springer Nature Switzerland.

- Chi, M. T. H. (2000). Self-explaining expository texts: The dual processes of generating inferences and repairing mental models. In Advances in instructional psychology (Vol. 5). Routledge.

- Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C., Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon, K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian, D. L. (2018). Translating the ICAP theory of cognitive engagement into practice. Cognitive Science, 42(6), 1777–1832.

- Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243.

- Coffey, H. (2009). Digital game-based learning. Learn NC, 1–3.

- Connolly, T. M., Boyle, E. A., MacArthur, E., Hainey, T., & Boyle, J. M. (2012). A systematic literature review of empirical evidence on computer games and serious games. Computers & Education, 59(2), 661–686.

- DaCosta, B. (2025). Generative AI meets adventure: Elevating text-based games for engaging language learning experiences. Open Journal of Social Sciences, 13(4), 601–644.

- Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982–1003.

- Dillard, J. P., & Ha, Y. (2016). Interpreting perceived effectiveness: Understanding and addressing the problem of mean validity. Journal of Health Communication, 21(9), 1016–1022.

- Duenas, T., & Ruiz, D. (2024). The risks of human overreliance on large language models for critical thinking. Available online: https://www.researchgate.net/publication/385743952_The_Risks_Of_Human_Overreliance_On_Large_Language_Models_For_Critical_Thinking (accessed on 11 June 2025).

- Han, J., Yoo, H., Myung, J., Kim, M., Lim, H., Kim, Y., Lee, T. Y., Hong, H., Kim, J., Ahn, S.-Y., & Oh, A. (2024). LLM-as-a-tutor in EFL writing education: Focusing on evaluation of student-LLM interaction. arXiv, arXiv:2310.05191.

- Holmes, W., Bialik, M., & Fadel, C. (2019). Artificial intelligence in education: Promises and implications for teaching and learning. The Center for Curriculum Redesign.

- Hung, C., Yang, J., & Hwang, G. (2018). A scoping review of digital game-based language learning. Educational Technology & Society, 21(3), 72–87.

- Hung, H. T., Chang, J. L., & Yeh, H. C. (2016, July). A review of trends in digital game-based language learning research. In 2016 IEEE 16th international conference on advanced learning technologies (ICALT) (pp. 508–512). IEEE.

- Jiang, L. (2014). HSK standard course 3. Beijing Language and Culture University Press.

- Ju, Q. (2023). Experimental evidence on negative impact of generative AI on scientific learning outcomes. arXiv, arXiv:2311.05629.

- Keshtkar, F., Rastogi, N., Chalarca, S., & Bukhari, S. A. C. (2024). AI tutor: Student’s perceptions and expectations of AI-driven tutoring systems: A survey-based investigation. The International FLAIRS Conference Proceedings, 37(1).

- Kestin, G., Miller, K., Klales, A., Milbourne, T., & Ponti, G. (2025). AI tutoring outperforms in-class active learning: An RCT introducing a novel research-based design in an authentic educational setting. Scientific Reports, 15, 17458.

- Kong, W., Chen, L., & Zhang, S. (2023). Better zero-shot reasoning with role-play prompting. arXiv, arXiv:2308.07702.

- Krashen, S. D. (1985). The input hypothesis: Issues and implications. Longman.

- Kumar, H., Musabirov, I., Reza, M., Shi, J., Wang, X., Williams, J. J., Kuzminykh, A., & Liut, M. (2024). Guiding students in using LLMs in supported learning environments: Effects on interaction dynamics, learner performance, confidence, and trust. Proceedings of the ACM on Human-Computer Interaction, 8(CSCW2), 1–30.

- Lin, Y., & Yu, Z. (2023). Extending Technology Acceptance Model to higher-education students’ use of digital academic reading tools on computers. International Journal of Educational Technology in Higher Education, 20, 34.

- Liu, Y., Sahagun, J., & Sun, Y. (2021). An adaptive and interactive educational game platform for English learning enhancement using AI and chatbot techniques. Natural Language Processing, 11(23), 97–106.

- Maurya, K. K., Srivatsa, K. A., Petukhova, K., & Kochmar, E. (2024). Unifying AI tutor evaluation: An evaluation taxonomy for pedagogical ability assessment of LLM-powered AI tutors. arXiv. Available online: https://arxiv.org/abs/2412.09416 (accessed on 7 October 2025).

- Mayer, R. E. (2009). Multimedia learning (2nd ed.). Cambridge University Press.

- Metcalfe, J. (2017). Learning from errors. Annual Review of Psychology, 68, 465–489.

- Persky, A. M., Lee, E., & Schlesselman, L. S. (2020). Perception of learning versus performance as outcome measures of educational research. American Journal of Pharmaceutical Education, 84(7), 7782.

- Plass, J. L., Homer, B. D., & Kinzer, C. K. (2015). Foundations of Game-Based Learning. Educational Psychologist, 50(4), 258–283.

- Prastiwi, F. D., & Lestari, T. D. (2025). Digital game-based learning in enhancing English vocabulary: A systematic literature review. Jurnal Penelitian Ilmu Pendidikan Indonesia, 4(2), 349–358.

- Ruan, S., Jiang, L., Xu, Q., Liu, Z., Davis, G. M., Brunskill, E., & Landay, J. A. (2021, April 14–17). EnglishBot: An AI-powered conversational system for second language learning. 26th International Conference on Intelligent User Interfaces (pp. 434–444), College Station, TX, USA.

- Ruwe, T., & Mayweg-Paus, E. (2024). Embracing LLM Feedback: The role of feedback providers and provider information for feedback effectiveness. Frontiers in Education, 9, 1461362.

- Scherer, R., Siddiq, F., & Tondeur, J. (2019). The Technology Acceptance Model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Computers & Education, 128, 13–35.

- Schwid, S. R., Tyler, C. M., Scheid, E. A., Weinstein, A., Goodman, A. D., & McDermott, M. P. (2003). Cognitive fatigue during a test requiring sustained attention: A pilot study. Multiple Sclerosis Journal, 9(5), 503–508.

- Shahri, H., Emad, M., Ibrahim, N., Rais, R. N. B., & Al-Fayoumi, Y. (2024, April 24–25). Elevating education through AI tutor: Utilizing GPT-4 for personalized learning. 2024 15th Annual Undergraduate Research Conference on Applied Computing (URC) (pp. 1–5), Dubai, United Arab Emirates.

- Sommer, K. L., & Kulkarni, M. (2012). Does constructive performance feedback improve citizenship intentions and job satisfaction? The roles of perceived opportunities for advancement, respect, and mood. Human Resource Development Quarterly, 23(2), 177–201.

- Sweller, J. (1994). Cognitive load theory, learning difficulty, and instructional design. Learning and Instruction, 4(4), 295–312.

- VanLehn, K. (2011). The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems. Educational Psychologist, 46(4), 197–221.

- Vanzo, A., Chowdhury, S. P., & Sachan, M. (2024). GPT-4 as a homework tutor can improve student engagement and learning outcomes. arXiv, arXiv:2409.15981.

- Vosniadou, S. (2013). Conceptual change in learning and instruction: The framework theory approach. In International handbook of research on conceptual change (2nd ed.). Routledge.

- Vygotsky, L. S. (1978). Mind in Society: The development of higher psychological processes. Harvard University Press.

- Wang, D. (2013). The use of English as a lingua franca in teaching Chinese as a foreign language: A case study of native Chinese teachers in Beijing. In K. Murata (Ed.), WASEDA studies in ELF communication (pp. 197–211). Springer.

- Waring, M., & Evans, C. (2024). Facilitating students’ development of assessment and feedback skills through critical engagement with generative artificial intelligence. In Research handbook on innovations in assessment and feedback in higher education (pp. 330–354). Edward Elgar Publishing.

- Zeng, J., Parks, S., & Shang, J. (2020). To learn scientifically, effectively, and enjoyably: A review of educational games. Human Behavior and Emerging Technologies, 2(2), 186–195.

- Zhai, C., Wibowo, S., & Li, L. D. (2024). The effects of over-reliance on AI dialogue systems on students’ cognitive abilities: A systematic review. Smart Learning Environments, 11(1), 28.

- Zhu, C., Sam, C. H., Wu, Y., & Tang, Y. (2025). WIP: Enhancing game-based learning with AI-driven peer agents. arXiv. Available online: https://arxiv.org/abs/2508.01169 (accessed on 7 October 2025).

- Zou, D., Huang, Y., & Xie, H. (2021). Digital game-based vocabulary learning: Where are we and where are we going? Computer Assisted Language Learning, 34(5–6), 751–777.

- Zou, S., Guo, K., Wang, J., & Liu, Y. (2025). Investigating students’ uptake of teacher- and ChatGPT-generated feedback in EFL writing: A comparison study. Computer Assisted Language Learning, 1–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).