Abstract

Rapid advances in artificial intelligence (AI) are reshaping curricula and work, yet technology and engineering education lacks a coherent, critical AI literacy pathway. In this study, we (1) mapped the dominant themes and intellectual bases of AI literacy research and (2) compared AI literacy between secondary technical students and pre-service technology and engineering teachers to inform curriculum design. In Phase 1, a Web of Science bibliometric analysis (2015–2025; 1259 documents from 587 sources) showed strong growth, with themes consolidating around GenAI/LLMs and ethics; from these themes, we derived a four-pillar framework (Foundational Knowledge, Critical Appraisal, Participatory Design, and Pedagogical Integration). Phase 2 was a cross-sectional field study (n = 145; secondary n = 77, higher education n = 68) using a validated AI literacy test. ANOVA showed higher total scores for pre-service teachers than for secondary technical students (p = 0.02) and a sex effect favoring males (p = 0.01), with no group × sex interaction. MANCOVA found no multivariate group differences across the 14 competencies, but univariate analyses showed advantages for pre-service technology teachers in understanding intelligence (p = 0.002) and programmability (p = 0.045). Critical AI literacy composites did not differ by group, whereas males outperformed females in interdisciplinarity and ethics. We conclude that structured, performance-based curricula aligned with the framework, emphasizing data practices, ethics/governance, and human–AI design, are needed in both sectors, alongside measures to close gender gaps.

1. Introduction

Artificial intelligence (AI) is becoming a key technology that is fundamentally changing many areas of society, from science and industry to everyday life. Rapid advances in AI are transforming disciplines, the economy, and jobs, and are challenging established notions of human labor and the role of machines (UNESCO, 2009). Given AI’s far-reaching impact on society and the labor market, many analysts expect it to create new jobs while changing existing occupations, which will require a reorientation of workforce competencies (Shen & Zhang, 2024). The continued proliferation of AI has also put its benefits and risks in the spotlight: in most scenarios, nearly 40% of jobs worldwide will be affected in the coming years, with AI replacing some and complementing others (Pizzinelli et al., 2023), and AI in its current form is likely to increase overall inequality (Pizzinelli et al., 2023). Moreover, the rapid development of AI could extend much further into professions, as AI not only complements humans in traditional technological solutions but can also take over some cognitive tasks (Cazzaniga et al., 2024). Because AI promises higher productivity while placing greater emphasis on human decision-making, empathy, and creativity, human guidance and control are likely to remain critical (Stolpe & Hallström, 2024). AI literacy developed through education can therefore be seen as a key driver for working with and managing AI-driven solutions. It includes technical understanding, ethical awareness, and the ability to analytically evaluate the performance of AI. Educational institutions must thus provide future professionals with sound technological, pedagogical, and content knowledge as early as secondary school, while promoting an understanding of the social and ethical dimensions of AI use (Guzik et al., 2024; Rütti-Joy et al., 2023).

In recent years, several projects and studies, both in Slovenia and internationally, have examined how digital technologies and AI solutions can be introduced into education most effectively. For example, the national GEN-UI (Generative AI in Education) project aims at the comprehensive, effective, and efficient use of generative AI (GenAI) in the learning process at various levels of education (https://gen-ui.si/, accessed on 15 September 2025). The main objectives of the GEN-UI project are to (1) investigate the importance of GenAI for perceptions of, and changes in, learning and teaching from the perspective of various stakeholders, taking into account the didactic, legal, financial, and ethical aspects of GenAI use in education in Slovenia; (2) analyze the status and needs of educational institutions for the effective and efficient use of GenAI in the educational process in Slovenia; (3) develop guidelines for teachers and students on the effective and efficient use of GenAI in modern learning and teaching; (4) produce sample teaching scenarios for the use of GenAI in selected educational institutions; and (5) develop guidelines for the effective and efficient use of GenAI for and in education.

Teachers and future teachers will play a central role in the meaningful integration of AI into education, especially in technical education, which orients young people toward technical and scientific professions. In addition to pedagogical strategies, teachers will need a basic understanding of AI and skills in using and evaluating AI tools in order to teach content with these technologies meaningfully (Univerzitetna založba UM, 2022). Indeed, their AI competencies will largely shape students’ AI literacy, as teachers’ integration of AI content enables students to learn the basics of the technology and become familiar with its ethical dimensions (Univerzitetna založba UM, 2022). This is also the strategic direction for teacher education, which must address the new competencies required in the age of AI because teachers are catalysts for change in the learning environment. However, research shows that many teachers lack clear guidelines for teaching AI content, which reduces their confidence in integrating these topics (Lumanlan et al., 2025). This confirms the need to systematically develop the AI competencies of future and current teachers. It is particularly important in technical education, where students will work as future engineers and technicians in professions in which AI is already part of everyday practice.

The central motivation for our study stems from the challenges described above. We aim to investigate the current state of AI literacy in two key groups: students in secondary technical schools and students who will become teachers of technical subjects. Both groups will directly shape the future of technical and scientific professions: secondary school students as future professionals and users of AI in the field, and student teachers as the people who will pass AI knowledge on to future generations. It is therefore important to understand how familiar these students and future teachers already are with AI, including their attitudes, knowledge, and gaps in understanding. This will determine how well they integrate AI into their professional work and how AI will affect their societal role and employability. Investigating the level of AI literacy among future teachers of technical subjects and among technical school students is thus both scientifically and practically relevant, and the results can contribute to the improvement of study programs and curricula.

1.1. Critical AI Literacy and Teacher Education

Critical AI literacy can be understood as the component of AI literacy that emphasizes questioning, interpreting, and reflecting on the assumptions, processes, and implications of AI (Touretzky et al., 2022). Moreover, critical AI literacy is about recognizing how AI systems are shaped by human decisions, identifying the ethical or societal dimensions of these systems, and critically evaluating their trustworthiness and potential biases (Touretzky et al., 2022). Critical AI literacy typically includes (1) the knowledge of how AI works and where it fails; (2) skills for analysis, verification, and critique; (3) ethical/civic orientations (fairness, accountability, and transparency); and (4) agency (Velander et al., 2024).

In Long and Magerko’s often-cited definition of AI literacy, people with AI literacy should be able to “critically evaluate AI technologies, communicate and collaborate effectively with AI, and use AI as a tool” (Long & Magerko, 2020, p. 2) in everyday life. Critical AI literacy focuses on the first of these aspects, critical evaluation of AI, with an emphasis on auditing, ethics, and reflective practice (Veldhuis et al., 2025). Building on Long and Magerko (2020), Hornberger et al. (2023), for example, state that an essential component of AI literacy is understanding how the development of AI is guided by human decisions and can perpetuate biases if these decisions are not carefully scrutinized. Across both secondary and higher education, there is a growing consensus that AI literacy must be critical, combining technical understanding of AI with critical thinking about AI’s role in society (Khuder et al., 2024; Velander et al., 2024).

The rapid integration of AI across social, educational, and professional domains has rendered AI literacy a fundamental component of contemporary digital competence. Prior studies have emphasized that AI literacy extends beyond mere familiarity with AI tools and encompasses an understanding of core algorithmic principles, data practices, and socio-ethical dimensions (Ng et al., 2021; Schüller, 2022). However, research to date has been characterized by fragmentation: competency models differ considerably across disciplines and educational levels, producing inconsistent guidelines for curriculum design (Chee et al., 2024). Moreover, existing reviews reveal a dearth of coherent learning pathways that span K-12 through higher education into workforce training, leaving practitioners and policymakers without a unified framework for lifelong AI literacy development (Zhang et al., 2025).

The key thematic clusters of critical AI literacy, as suggested by the literature, include technical foundations, AI’s strengths and weaknesses, data/algorithmic reasoning, ethics and societal impact, privacy and governance, agency and design/creation, classroom application and assessment, and interdisciplinarity.

First, technical foundations and data/algorithmic reasoning establish the necessary cognitive groundwork for learners. This cluster includes understanding core AI models (e.g., supervised vs. unsupervised learning, neural networks), data pipelines (collection, cleaning, and feature engineering), and algorithmic interpretability (Chee et al., 2024; Ng et al., 2021). Proficiency here empowers students to deconstruct AI systems, evaluate data quality, and grasp the mathematical and statistical principles underpinning model training and performance metrics (Schüller, 2022).

Second, AI’s strengths and weaknesses, ethics and societal impact, and privacy/governance together form a critical–reflective dimension. Learners examine AI’s capabilities, such as pattern recognition, automation, and scalability, against its limitations, such as brittleness, bias, and “hallucinations” in large language models (Johri, 2020). Ethical inquiry covers fairness, accountability, transparency, and the social consequences of AI deployment (e.g., labor displacement, surveillance, and power asymmetries) (Atenas et al., 2023; Gartner & Krašna, 2023), while governance literacy addresses data protection regulations (e.g., GDPR), institutional policies, and public sector AI frameworks (Bing & Leong, 2025; Filgueiras, 2023).

Third, agency, design/creation, and interdisciplinarity cultivate a creative–proactive posture. Following human-centered and Participatory Design principles, learners co-construct AI artifacts through prototyping, prompt engineering, and iterative error analysis (Johri, 2020; Shiri, 2024). An interdisciplinary approach integrates perspectives from computer science, engineering, sociology, philosophy, and policy studies to foster systems-level reasoning and address “wicked” socio-technical challenges (Chen et al., 2020; Zhang et al., 2025).

Finally, classroom application and assessment prescribe Pedagogical Integration strategies and evaluation tools. Innovative teaching methods, such as constructivist, project-based learning with authentic datasets (Kim et al., 2025), peer-supported collaborative tasks (Joseph et al., 2024), and case-based discussions, should be paired with performance-based assessments, reflective portfolios, and validated AI literacy scales to measure competence across cognitive, critical, and creative dimensions (Lintner, 2024; Schleiss et al., 2023; Walter, 2024).

To consolidate these themes into a coherent, actionable conceptual framework tailored to technology and engineering education, we organize AI literacy into four interrelated pillars:

- Foundational Knowledge

  - Encompasses procedural and declarative mastery of AI algorithms, data lifecycle management, and model evaluation.

  - Key outcomes: explain backpropagation, design a simple classifier, and critique dataset biases.

- Critical Appraisal

  - Fosters reflective understanding of AI’s promises and perils, ethical principles, and governance contexts.

  - Key outcomes: identify unfair outcomes in models, debate policy scenarios, and propose mitigation strategies.

- Participatory Design

  - Empowers learners as co-designers of AI systems through prototyping, prompt engineering, and interdisciplinary collaboration.

  - Key outcomes: develop a conversational agent via iterative testing, conduct stakeholder co-design workshops, and assess socio-technical impacts.

- Pedagogical Integration

  - Guides educators in embedding AI literacy across curricula, leveraging active learning and robust assessment.

  - Key outcomes: structure a pathway from basic K-12 concepts to workforce competencies, apply formative feedback on AI projects, and adapt modules across disciplines.

These pillars are mutually reinforcing: Foundational Knowledge enables Critical Appraisal; critical insights inform responsible design; design practices generate new pedagogical approaches; and pedagogy perpetuates the cycle by nurturing deeper technical and reflective skills. By mapping specific competencies, learning activities, and assessment metrics onto each pillar, this framework offers technology and engineering programs a strategic roadmap to develop graduates who not only operate AI tools but also critically shape AI’s role in society.

Theoretically, our research builds on existing models and standards for the integration of AI in education. Among the most established is the American AI4K12 initiative, which has identified the so-called “five big ideas” (BIs) of AI as the core of the curriculum: BI1: perception; BI2: representation and reasoning; BI3: learning; BI4: natural interaction; and BI5: societal impact (Touretzky et al., 2022). These concepts serve as guidelines for what students should learn about AI at different levels of schooling. International organizations such as the Organization for Economic Co-operation and Development (OECD) and the United Nations Educational, Scientific, and Cultural Organization (UNESCO) have also begun to issue recommendations and frameworks for developing AI competencies. For example, in 2023, UNESCO developed an AI literacy competency framework for students and teachers to support countries in integrating AI into their education systems. UNESCO recommends that such frameworks be seamlessly integrated into all levels of education, along with appropriate infrastructure and ethical guidelines for the use of AI (UNESCO, 2009). These standards and guidelines emphasize that the integration of AI in the classroom should be ethical, safe, and human-centered while effectively developing the necessary knowledge and skills in learners. Our study sheds light on how much progress has been made in implementing these guidelines for the two target groups and provides a starting point for further curriculum development and teacher training in the field of AI.

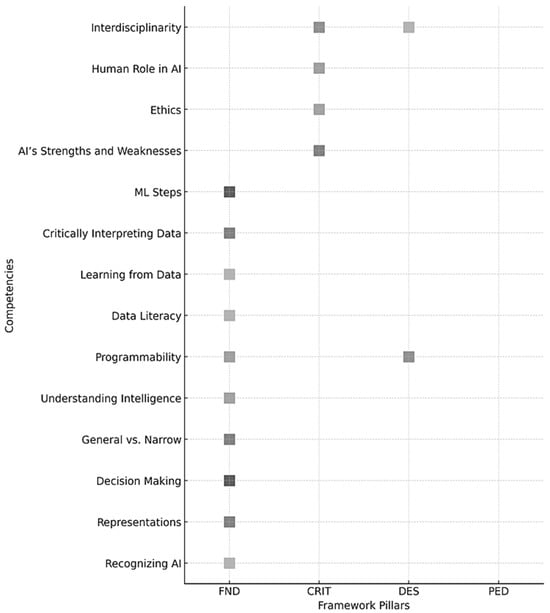

To address these gaps, this study proposes a comprehensive competency framework that delineates 14 AI literacy competencies, synthesized from empirical studies and established frameworks (Chee et al., 2024; Long & Magerko, 2020; Shiri, 2024). Figure 1 shows how the 14 competencies map onto the four conceptual pillars. The visualization supports rapid gap analysis and sequencing: educators can cluster outcomes by pillar, choose appropriate learning activities (e.g., CRIT for ethics debates and DES for prototyping), and scaffold progression from basic recognition and data literacy to pedagogical application. For curriculum designers, the pillar tags translate directly into course modules, assessment rubrics, and cross-course alignment, enabling coherent coverage without duplication. The structure also facilitates accreditation reporting and iterative program improvement by making intended learning outcomes traceable to pillar-specific evidence.

Figure 1.

Mapping of AI competencies onto the four conceptual pillars. FND, cognitive–technical foundation; CRIT, critical–reflective; DES, design and creation; PED, pedagogical. Colored boxes indicate alignment with the corresponding pillar.

It is also crucial to consider how these competencies should be configured and prioritized differently for distinct learner cohorts, ranging from K-12 students (emphasizing basic AI concepts and ethical awareness) to higher education learners (focusing on data–algorithmic reasoning and problem-solving) and workforce professionals (centering on AI tool use, error detection, and decision-making) (Chee et al., 2024). In addition, we articulate an implied developmental pathway that sequences learning objectives across educational stages, thereby addressing the longstanding absence of a structured, longitudinal approach to AI literacy (Chee et al., 2024).

1.2. Objectives and Research Questions of the Current Study

The purpose of this study was twofold: (1) to map and critically characterize the dominant themes and intellectual bases of AI literacy in technology and engineering education and trace their evolution over time; and (2) to empirically compare AI literacy between students in a technology teacher education program and secondary technical school students, examining overall levels, pinpointing which competence areas differ significantly and with what effect sizes, and assessing differences in critical AI literacy, in order to generate evidence for targeted curriculum design and pedagogical interventions in technology and engineering education. Accordingly, we formulated the following research questions (RQ1–RQ4):

- RQ1: What are the dominant themes and intellectual bases of AI literacy in education, and how have these themes evolved over time?

- RQ2: What are the differences in the level of AI literacy measured by the total score between students in a technology teacher education program and secondary technical school students?

- RQ3: In which AI literacy competencies are there statistically significant differences between students in a technology teacher education program and secondary technical school students, and how large are these effects?

- RQ4: What are the differences in critical AI literacy between students in a technology teacher education program and secondary technical school students?

Table 1 demonstrates that our measurement strategy is theoretically anchored and balanced in its coverage of the core knowledge and critical dimensions of AI literacy. By aligning each bibliometric theme with Long and Magerko’s (2020) competencies as operationalized in the Hornberger et al. (2023) AI literacy test, the table shows strong construct coverage of (1) Representation and Reasoning (AI4K12 BI2) and Learning (BI3), through items on knowledge representations, decision-making, supervised/unsupervised learning, and the machine learning (ML) pipeline; and (2) the societal impact strand (BI5), via ethics, human oversight, and legal challenges. Natural interaction (BI4) is covered, but deliberately minimally (e.g., recognizing a chatbot), while perception (BI1) is absent. This is a direct consequence of Hornberger et al.’s (2023) decision not to develop items for robotics-specific competencies (action and reaction, sensors) and to exclude “Imagine the Future of AI” from the test blueprint. These instrument design choices are documented in their test development rationale and item-to-competency allocation, and they explain the mapping gaps in Participatory Design/Creation and in the depth of privacy/governance coverage (only one legal/policy item), which we treat as curricular implications rather than measurement targets in this study. Importantly, Hornberger et al. (2023) validated a unidimensional structure and reported good reliability for the instrument (30 items plus one sorting task), which justifies the use of a total AI literacy score in our between-group comparisons, while our table clarifies its content coverage across technical, critical, and societal facets.

Table 1.

Mapping of bibliometric themes to AI competencies, conceptual framework pillars, and AI4K12 tags (Big Ideas BI2–BI5). FND, cognitive–technical foundation; CRIT, critical–reflective; DES, design and creation; PED, pedagogical.

The proposed framework offers actionable guidance for educators, curriculum designers, and policymakers to shift the pedagogical emphasis from transactional tool use toward critical, strategic, and ethical engagement with AI technologies. In practice, K-12 curricula should integrate constructivist, project-based modules in which students work with authentic datasets to explore AI mechanics and impacts (Kim et al., 2025), while higher education programs must embed interdisciplinary case studies that foster data literacy, algorithmic transparency, and governance considerations (Atenas et al., 2023; Filgueiras, 2023). For workforce training, organizations should adopt performance-based assessments and reflective portfolios that measure AI competence in real-world tasks (Joseph et al., 2024; Lintner, 2024). At the policy level, ministries of education and accreditation bodies should formalize a lifelong AI literacy continuum, spanning teacher professional development in intelligent TPACK (Velander et al., 2023), standardized assessment scales (Lintner, 2024), and cross-sector governance frameworks, to ensure equitable access and sustained skill development from early schooling through career progression (Filgueiras, 2023; Schüller, 2022). As Rupnik and Avsec (2025) noted, the beginning and end of schooling are particularly stressful for students; increasing AI literacy could therefore help reduce discomfort and strengthen the sense of successful completion, thereby reducing dropout rates and enabling high school students to make more informed decisions about further education.

2. Materials and Methods

In this study, we used a two-phase sequential quantitative design. Phase 1 was a bibliometric evidence synthesis to derive and structure the conceptual framework for (critical) AI literacy; Phase 2 was a non-experimental, cross-sectional comparative field study in authentic classroom settings to validate the framework via the Hornberger et al. (2023) AI literacy test and to compare cohorts.

2.1. Characteristics of Teacher Education and Secondary Technical School Study Programs

2.1.1. Faculty of Education

Information and communication technology (ICT) is systematically integrated into the study programs at the Faculty of Education, University of Ljubljana (n.d.). The dual-subject Mathematics–Computer Science program has ICT at its core: students deepen their knowledge of programming, algorithms, and data technologies while developing pedagogical and didactic competencies for high-quality teaching of mathematics and computer science in primary and secondary schools (Faculty of Education, University of Ljubljana, n.d.). In the Mathematics–Technology and Physics–Technology programs, ICT is an important supporting element, as students learn CAD, work with microcomputers and robotics, and use digital tools for experiments and technical drawing (Faculty of Education, University of Ljubljana, n.d.). ICT is also used in the Biology–Chemistry program for experimental work, measurement systems, and simulations, and in the Chemistry–Home Economics and Biology–Home Economics programs for planning, monitoring, and evaluating practical activities with specialized software (Faculty of Education, University of Ljubljana, n.d.).

2.1.2. Koper Technical Secondary School

The programs at Koper Technical Secondary School consistently incorporate ICT and strengthen digital skills and critical literacy regarding artificial intelligence (Secondary Technical School Koper, n.d.). The technical high school program (4-year) builds the foundations of computational thinking through computer science and mechanics courses and prepares students for further study in technical fields. The computer technician program (2-year vocational-technical, PTI) provides an in-depth understanding of computer systems, programming, networks, and IT infrastructure maintenance, and encourages systematic thinking about the operation of complex AI systems (Secondary Technical School Koper, n.d.). The computer science program (3-year) emphasizes the practical use of ICT in professional situations, from hardware and software maintenance to information management, with a clear focus on digital literacy and critical thinking (Secondary Technical School Koper, n.d.). The mechatronics technician program (2-year vocational-technical, PTI) combines mechanical engineering, electrical engineering, and computer science with the use of programmable logic controllers (PLCs), microcomputers, and sensors in automation, while the mechatronics operator program (3-year) trains students to work directly with automated systems and basic programming (Secondary Technical School Koper, n.d.). The mechanical technician program (4-year) combines classic engineering content with CAD/CAM, CNC machine programming, and simulations, and promotes digital innovation and the thoughtful introduction of AI into industrial environments (Secondary Technical School Koper, n.d.).

2.1.3. Škofja Loka School Center

Škofja Loka School Center offers a range of programs with a strong emphasis on ICT, developing the digital competencies and critical AI literacy of future technical staff (School Centre Škofja Loka, n.d.). The technical high school program (4-year) provides a broad scientific and technical foundation, including an introduction to programming and computational thinking, and encourages critical evaluation of technologies such as artificial intelligence (School Centre Škofja Loka, n.d.). The mechanical engineering program offers in-depth knowledge of computer-aided design, CNC machine operation, and automated systems using specialized software (School Centre Škofja Loka, n.d.). The metalworking program develops precise use of CNC technologies, algorithmic thinking, and problem-solving, while programs for the maintenance and assembly of mechanical systems introduce computer-aided procedures for assembly, diagnostics, and servicing (School Centre Škofja Loka, n.d.). The mechanical installation technician program uses digital tools for technical documentation and the management of modern smart energy systems (School Centre Škofja Loka, n.d.).

2.2. Participants and Field Data Collection

In a cross-sectional research design, the online test was administered in class under proctored conditions with the principal investigator present; students completed it on personal mobile devices (smartphones or tablets, e.g., iPads). Administration was device-agnostic, single-session, and standardized across classes. We collected data from secondary technical school students (n = 77, 53.1%) and university students (n = 68, 46.9%); the secondary students attended two high schools, and the university students attended one university. Data were collected in the 2024/2025 school/academic year. In total, 145 students participated, of whom 58 were female (40%) and 87 were male (60%). Secondary school students were on average 17.9 years old (SD = 1.26), and university students were on average 21.24 years old (SD = 2.07).

The Hornberger et al. (2023) AI literacy test, grounded in the work of Long and Magerko (2020), was completed in a single session lasting 35–45 min, with identical instructions provided in all classrooms. No incentives were offered.

This study was conducted in accordance with the Declaration of Helsinki and was reviewed and approved by the Ethics Commission of the Faculty of Education of the University of Ljubljana (approval code: 7/2025). The AI literacy test was delivered to students online via the 1KA portal (https://www.1ka.si/d/sl, accessed on 15 September 2025), with the main examiner present in the ICT-equipped classroom.

2.3. Research Methods

For this study, we used bibliometrics and empirical testing as the primary methods.

2.3.1. Bibliometrics

We first queried the Web of Science Core Collection (WoS CC) for English-language records published from 2015 to 2025 (inclusive). Eligible document types were articles, reviews, proceedings papers, and early access articles. The search strategy (WoS Topic [TS] field) is shown in Appendix A (Table A1).

We exported full records with cited references (CSV) on the final search date. Screening applied the following criteria: (1) inclusion: empirical or review work on AI, algorithmic, or data literacy (including critical, ethical, and governance aspects) in educational contexts relevant to technology and engineering, teacher education, and TVET/technical secondary education; (2) exclusion: non-educational AI articles; purely technical computer science work without a literacy/competence focus; editorials, notes, and news; non-English articles; retracted items; and duplicates.
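The rule-based part of this screening (publication year, language, document type, retracted items) can be automated before the manual relevance check. The sketch below is an illustration under stated assumptions, not the pipeline used in this study: records are assumed to be dictionaries keyed by WoS field tags (PY, LA, DT, TI), and the document-type labels in `ALLOWED_TYPES` and `EXCLUDED_TYPES` are assumptions that should be checked against the actual export. Topical inclusion (educational relevance, literacy focus) still requires human judgment.

```python
from typing import Dict, List, Set

# Hypothetical record layout: keys follow WoS field tags
# (PY = publication year, LA = language, DT = document type, TI = title).
Record = Dict[str, str]

# Assumed document-type labels; verify against the actual WoS export.
ALLOWED_TYPES = {"article", "review", "proceedings paper", "early access"}
EXCLUDED_TYPES = {"editorial material", "note", "news item", "retracted publication"}


def doc_types(record: Record) -> Set[str]:
    """Split a combined document type such as 'Article; Early Access'."""
    return {t.strip().lower() for t in record.get("DT", "").split(";") if t.strip()}


def passes_rule_based_screen(record: Record, first_year: int = 2015, last_year: int = 2025) -> bool:
    """Apply only the mechanical criteria; topical relevance is judged manually."""
    try:
        year = int(record.get("PY", ""))
    except ValueError:
        return False  # records without a usable year are treated as ineligible
    types = doc_types(record)
    return (
        first_year <= year <= last_year
        and record.get("LA", "").strip().lower() == "english"
        and bool(types & ALLOWED_TYPES)
        and not (types & EXCLUDED_TYPES)
    )


def screen(records: List[Record]) -> List[Record]:
    return [r for r in records if passes_rule_based_screen(r)]


# Invented example records (not study data).
records = [
    {"TI": "A", "PY": "2021", "LA": "English", "DT": "Article"},
    {"TI": "B", "PY": "2014", "LA": "English", "DT": "Article"},
    {"TI": "C", "PY": "2023", "LA": "German", "DT": "Article"},
    {"TI": "D", "PY": "2024", "LA": "English", "DT": "Editorial Material"},
    {"TI": "E", "PY": "2025", "LA": "English", "DT": "Article; Early Access"},
]
kept = screen(records)
print([r["TI"] for r in kept])  # ['A', 'E']
```

Splitting the DT field on semicolons matters because an early access article typically carries two labels at once; checking set intersections keeps such records while still rejecting any record that also carries an excluded label.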

Data curation and cleaning consisted of (1) deduplication by DOI, supplemented by exact or near-exact title matching, with WoS early access records merged with their final versions; (2) standardization: converting to lowercase, stripping punctuation, harmonizing US/UK spelling variants, and unifying synonyms (e.g., AI ↔ artificial intelligence; pre-service ↔ preservice; TVET ↔ technical and vocational education and training); (3) a stop list of overly general terms excluded from co-word analyses: artificial intelligence, AI, education, student, teacher, higher education, case study, and literature review; and (4) stemming/lemmatization of keywords where supported.
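The standardization and deduplication steps can be sketched as follows. This is a minimal illustration, assuming records keyed by WoS field tags (DI = DOI, TI = title, DE = author keywords separated by semicolons); the variant, synonym, and stop-term lists are short hypothetical excerpts built from the examples above. Title matching here is exact after normalization; near-title matching (e.g., fuzzy string similarity) and stemming/lemmatization are not shown, and keeping the first occurrence of a duplicate is a simplification of merging early access and final versions.

```python
import re
from typing import Dict, List

# Hypothetical excerpts of the curation lists described in the text.
WORD_VARIANTS = {"preservice": "pre-service", "modelling": "modeling", "behaviour": "behavior"}
PHRASE_SYNONYMS = {"ai": "artificial intelligence",
                   "tvet": "technical and vocational education and training"}
STOP_TERMS = {"artificial intelligence", "education", "student", "teacher",
              "higher education", "case study", "literature review"}


def normalize_keyword(keyword: str) -> str:
    text = keyword.lower()
    text = re.sub(r"[^\w\s-]", " ", text)     # strip punctuation, keep hyphens
    text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
    text = " ".join(WORD_VARIANTS.get(w, w) for w in text.split())  # spelling variants
    return PHRASE_SYNONYMS.get(text, text)   # whole-phrase synonyms


def clean_keywords(de_field: str) -> List[str]:
    """Normalize a semicolon-separated author keyword field and drop stop terms."""
    terms = (normalize_keyword(k) for k in de_field.split(";"))
    return [t for t in terms if t and t not in STOP_TERMS]


def normalize_title(title: str) -> str:
    return re.sub(r"\s+", " ", re.sub(r"[^\w\s]", " ", title.lower())).strip()


def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first record per DOI or normalized title (exact match only)."""
    seen_dois, seen_titles, kept = set(), set(), []
    for rec in records:
        doi = rec.get("DI", "").strip().lower()
        title = normalize_title(rec.get("TI", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue
        kept.append(rec)
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
    return kept


print(clean_keywords("AI; Preservice Teachers; Machine Learning; Education; TVET"))
# ['pre-service teachers', 'machine learning', 'technical and vocational education and training']
```

Mapping synonyms before applying the stop list means that "AI" is removed together with "artificial intelligence", so the stop list only needs the canonical form.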

For reproducibility, we used the R package bibliometrix as the primary tool, together with its Biblioshiny 5.0 web interface (Aria & Cuccurullo, 2017), to produce performance indicators, co-word networks, thematic maps, and co-occurrence/co-citation visualizations.

We later mapped emergent themes to the Hornberger et al. (2023) AI literacy test (aligned to the work of Long and Magerko (2020)) to provide evidence of content validity in our measurement section.

The network analyses used the following parameters: (1) co-word and keyword co-occurrence analysis (conceptual structure): source = author keywords (DE); minimum keyword frequency = 3; normalization = association strength; counting = full; (2) co-citation analysis (intellectual base): unit = cited references; thresholds = ≥10 citations at the reference level and ≥20 at the source/journal level; normalization = association strength; display = top 50–100 nodes by weight.
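The association strength normalization listed above can be illustrated on a toy corpus. The sketch assumes that each document is a list of already cleaned author keywords, uses full counting (each document adds 1 to every keyword pair it contains), applies the minimum keyword frequency of 3, and divides each co-occurrence count by the product of the two keywords' occurrence counts, which is the association strength measure up to a constant factor. It is not bibliometrix's own implementation, and the keywords are invented.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def cooccurrence_network(docs: List[List[str]], min_freq: int = 3) -> Dict[Pair, float]:
    # Occurrence count: number of documents in which each keyword appears.
    occurrences = Counter(kw for doc in docs for kw in set(doc))
    vocab = {kw for kw, n in occurrences.items() if n >= min_freq}
    # Full counting: every document adds 1 to each pair of retained keywords it contains.
    cooc: Counter = Counter()
    for doc in docs:
        kept = sorted(set(doc) & vocab)
        for a, b in combinations(kept, 2):
            cooc[(a, b)] += 1
    # Association strength: observed co-occurrences relative to the product of occurrences.
    return {pair: c / (occurrences[pair[0]] * occurrences[pair[1]]) for pair, c in cooc.items()}


# Invented toy corpus (not study data).
docs = [
    ["ethics", "genai", "llm"],
    ["ethics", "genai"],
    ["ethics", "genai", "llm"],
    ["llm", "data literacy"],
    ["ethics", "llm"],
]
net = cooccurrence_network(docs)
print(net[("ethics", "genai")])  # 0.25
```

Here "ethics" occurs in 4 documents, "genai" in 3, and they co-occur in 3, giving 3 / (4 × 3) = 0.25; "data literacy" occurs only once and is dropped by the frequency threshold. Dividing by the product of occurrences corrects for the fact that frequent keywords co-occur often simply by chance.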

The planned outputs were (1) a basic descriptive and performance summary (annual output; top venues, authors, and countries); (2) a thematic map and evolution plot (main text); (3) co-citation maps; and (4) a coverage check linking high-centrality themes to instrument content (Results/Discussion).
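For the annual output summary, growth is commonly reported as a compound annual growth rate between the first and last publication year. The sketch below assumes that definition; we have not verified here that it matches Biblioshiny's "Annual Growth Rate" exactly, and the counts are invented. If the final year (2025) was still incomplete at the export date, this rate would understate growth.

```python
from collections import Counter
from typing import Dict, List


def annual_production(years: List[int]) -> Dict[int, int]:
    """Documents per publication year, including years with zero output."""
    counts = Counter(years)
    return {y: counts.get(y, 0) for y in range(min(years), max(years) + 1)}


def annual_growth_rate(production: Dict[int, int]) -> float:
    """Compound annual growth rate (%) between the first and last year."""
    first, last = min(production), max(production)
    if last == first or production[first] == 0:
        raise ValueError("growth rate needs two or more years and non-zero first-year output")
    ratio = production[last] / production[first]
    return (ratio ** (1 / (last - first)) - 1) * 100


# Invented counts, for illustration only.
print(annual_production([2015, 2015, 2017]))  # {2015: 2, 2016: 0, 2017: 1}
print(annual_growth_rate({2015: 10, 2016: 20, 2017: 40}))  # 100.0
```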

2.3.2. AI Literacy Test

For the purpose of this study, we used an AI literacy test developed by Hornberger et al. (2023), which is grounded in the widely accepted AI literacy framework of Long and Magerko (2020). The test items were systematically developed and refined through cognitive interviews and expert reviews. The test was administered several times, and the items were examined using item response theory, which provides validity evidence. Statistical checks confirmed that the test primarily measures a single factor while covering 14 competencies from Long and Magerko’s (2020) framework. The test is flexible enough to be adapted for both high school and university settings. It captures core knowledge of AI (its nature, technologies, societal/ethical impacts, and data-related considerations) in a psychometrically rigorous manner, making it a justifiable choice for measuring AI literacy across these student populations (Hornberger et al., 2023). Our final instrument therefore covers the following 14 competencies: (1) recognizing AI, (2) understanding intelligence, (3) interdisciplinarity, (4) general vs. narrow, (5) AI’s strengths and weaknesses, (6) representations, (7) decision-making, (8) machine learning steps (i.e., the iterative process of training and testing AI), (9) the human role in AI, (10) data literacy, (11) learning from data, (12) critically interpreting data, (13) ethics, and (14) programmability. The final test consists of 31 items, 30 of which are multiple-choice items with one correct answer and three distractors. Responses were scored dichotomously: 1 for a correct response and 0 for an incorrect one. In one item, students were asked to sort the five process steps of supervised learning into the correct order; only a fully correct order was awarded 1 point.
The total score was calculated as the sum of all dichotomous responses, with a maximum possible score of 31. Competency scores were calculated by summing the dichotomous responses (coded 0 or 1) for all items related to each competency and dividing this sum by the number of items in that competency, yielding a proportion from 0 to 1, where higher values indicate a higher level of competency.
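The scoring rules can be expressed as a short Python sketch. Item identifiers and the item-to-competency mapping used here are hypothetical placeholders; the actual allocation follows Hornberger et al. (2023).

```python
def score_ordering_item(answer, key):
    """Ordering item: 1 point only if all supervised-learning steps are in the correct order."""
    return int(list(answer) == list(key))


def score_test(responses, item_competency):
    """responses: item id -> 0/1; item_competency: item id -> competency name.

    Returns the total score (sum of all 0/1 responses) and, per competency,
    the proportion of that competency's items answered correctly (0 to 1).
    """
    total = sum(responses.values())
    by_comp = {}
    for item, comp in item_competency.items():
        by_comp.setdefault(comp, []).append(responses[item])
    proportions = {comp: sum(v) / len(v) for comp, v in by_comp.items()}
    return total, proportions
```

Because competency scores are proportions rather than sums, competencies measured by different numbers of items remain comparable.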

The AI literacy test used in our study achieved moderate internal consistency (Cronbach’s α = 0.76), suggesting that the items consistently measure the same underlying construct, which spans basic knowledge, conceptual understanding, critical thinking about AI, and attitude/awareness, especially regarding ethics and AI’s impact on society (Tabachnick & Fidell, 2013).
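For reference, Cronbach’s α for k items is α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₓ), where σ²ᵢ is the variance of item i and σ²ₓ is the variance of the total score. The Python sketch below computes it from a respondents-by-items matrix of 0/1 scores using only the standard library; it illustrates the formula and is not the SPSS routine we used.

```python
from statistics import variance


def cronbach_alpha(scores):
    """scores: list of respondents, each a list of k item scores (0/1 here)."""
    k = len(scores[0])
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Sample variances are used for both the items and the total; since the same divisor appears in numerator and denominator, the choice does not affect α.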

AI4K12 tagging was performed post hoc by mapping each Hornberger et al. (2023) item’s Long and Magerko (2020) competency to the AI4K12 big ideas (see Table 1). Item-to-competency allocations and the removal of Item 07 follow the work of Hornberger et al. (2023). Robotics/sensing competencies were not included in item generation, which explains the absence of BI1 (perception).

2.4. Field Study Data Analysis

The data were analyzed using IBM SPSS software (v.25). Cronbach’s alpha coefficient was used to assess the reliability of the constructs. Descriptive statistics (means and standard deviations of the dependent variables) were used to summarize the main characteristics of the dataset, while factorial analysis of variance (ANOVA) and multivariate analysis of covariance (MANCOVA) were used to test for differences in AI literacy between students from different school settings. The Shapiro–Wilk test was used to check whether the data were drawn from a normally distributed population. Partial eta-squared (η²) was used as the measure of effect size.
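Partial eta-squared relates an effect’s sum of squares to that effect plus error, η²ₚ = SS_effect / (SS_effect + SS_error); equivalently, it can be recovered from an F statistic and its degrees of freedom as η²ₚ = F·df_effect / (F·df_effect + df_error). A minimal Python sketch of both forms (the function names and toy numbers are our own):

```python
def partial_eta_squared(ss_effect, ss_error):
    """Share of effect-plus-error variance attributable to the effect."""
    return ss_effect / (ss_effect + ss_error)


def partial_eta_squared_from_f(f_value, df_effect, df_error):
    """Same quantity recovered from F = (SS_effect/df_effect) / (SS_error/df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Toy example: SS_effect = 10 on 1 df, SS_error = 90 on 90 df, so F = 10
eta_from_ss = partial_eta_squared(10, 90)
eta_from_f = partial_eta_squared_from_f(10, 1, 90)
```

Both calls describe the same toy design, so they return the same value.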

3. Results

3.1. Bibliometrics Analysis of the AI Literacy Conceptual Framework

Our collection comprises 1259 documents published in 587 unique sources, a moderately sized yet diverse body of literature. The high number of distinct sources suggests that the research topic is not confined to a few specialized journals but is interdisciplinary and published widely across fields. The collection is also extremely recent, with an average document age of just 0.768 years. The inclusion of 2025 in the timespan likely reflects that our WoS download was very recent and captured “early access” or “in press” articles. This strong emphasis on very recent publications means that the analysis focuses on the cutting edge of the field, capturing its latest developments and trends.

A remarkable 4810 unique authors contributed to these 1259 documents, signifying a vast network of researchers actively engaged in this domain and a vibrant, highly populated research community. The collection contains 885 Keywords Plus terms (system-generated) and a much larger set of 3463 author keywords (author-provided), suggesting a rich and diverse thematic landscape. The higher number of author keywords usually points to a more granular and specific representation of the topics explored, allowing for a detailed thematic analysis.

The collection covers a broad yet current range of research within its defined scope, involving a significant global community of scholars. The recency of the publications suggests a focus on emerging themes and breakthroughs.

The annual growth rate of 125.47% is extraordinarily high, indicating explosive growth in publications within this field over the specified timespan. This is a strong indicator of a rapidly emerging, highly active, or newly recognized research area that has gained significant traction very recently and is experiencing a boom in scientific output.
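bibliometrix reports annual growth as a compound rate between the first and last years of the timespan. Assuming the standard compound annual growth rate formula, GR = ((N_last / N_first)^(1/(Y − 1)) − 1) × 100, where N is the number of documents in a year and Y is the number of years, the computation can be sketched as follows (the counts in the example are hypothetical):

```python
def annual_growth_rate(first_year_count, last_year_count, n_years):
    """Compound annual growth rate (%) of yearly document counts over n_years calendar years."""
    if first_year_count <= 0 or n_years < 2:
        raise ValueError("need a positive first-year count and at least two years")
    return ((last_year_count / first_year_count) ** (1 / (n_years - 1)) - 1) * 100


# Hypothetical counts: 2 documents in the first year, 32 in the fifth,
# i.e., output roughly doubles every year (about 100% growth)
rate = annual_growth_rate(2, 32, 5)
```

Because only the first and last years enter the formula, the value is sensitive to those two counts, including a final year that is only partially indexed.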

The average of 4.37 co-authors per document is high, and only 174 documents (approx. 13.8%) are single-authored, indicating that research in this field is predominantly collaborative: teamwork and shared expertise, rather than lone scholarship, are the norm. More than a quarter of the documents (26.13%) involve international co-authorship, showing that the research community collaborates not only within national boundaries but also across borders, fostering diverse perspectives and potentially leveraging global expertise and resources.
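These collaboration indicators follow from simple counts over the records. The sketch below assumes each record lists its authors and the countries of their affiliations (the field names are hypothetical) and counts a document as internationally co-authored when its affiliations span more than one country.

```python
def collaboration_indicators(docs):
    """Return (mean authors per document, % single-authored, % internationally co-authored)."""
    n = len(docs)
    mean_authors = sum(len(d["authors"]) for d in docs) / n
    single_pct = 100 * sum(len(d["authors"]) == 1 for d in docs) / n
    intl_pct = 100 * sum(len(set(d["countries"])) > 1 for d in docs) / n
    return mean_authors, single_pct, intl_pct


# Check of the single-authored share reported above: 174 of 1259 documents
single_share = round(100 * 174 / 1259, 1)  # 13.8
```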

The field is experiencing exponential growth, driven by a highly collaborative and internationally connected research community. Productivity is robust, characterized by a rapid increase in original research articles and systematic reviews.

An average of 10.46 citations per document is high, especially given the extremely young average age of the documents (0.768 years). Because publications typically accumulate citations over time, achieving more than 10 citations per document when documents are, on average, less than a year old is notable. This suggests that the research in this collection is not only current but is also being quickly read and cited by other researchers, indicating its immediate relevance and influence.

Despite the extreme recency of the collection, the publications are already demonstrating a significant and immediate impact, rapidly gaining traction, and influencing subsequent research. This suggests that the work being carried out is highly relevant and valuable to the wider scientific community. Key findings from the analysis are as follows:

- A “Booming” Field: The most striking finding is the explosive growth rate, combined with the very young average age of documents and their already significant citation impact. This strongly suggests a research area that has recently emerged, experienced a critical breakthrough, or attracted a massive surge of interest and investment.

- Highly Collaborative Environment: The high average co-authorship and significant international collaboration point to a field where networked research and shared expertise are paramount.

- Timely and Influential Research: The studies, despite their newness, are quickly becoming foundational, indicating the immediate utility and relevance of the work.

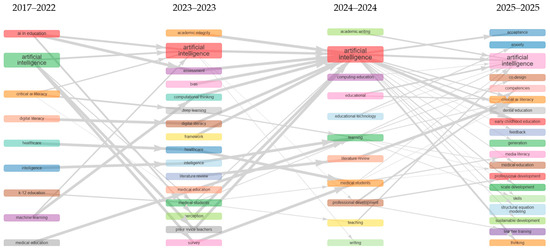

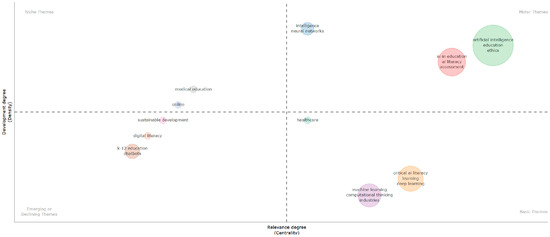

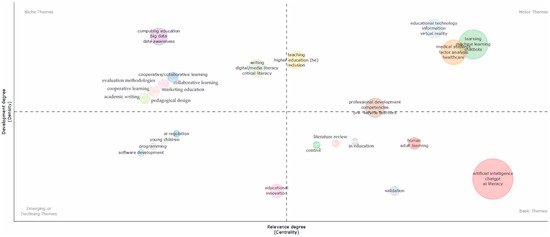

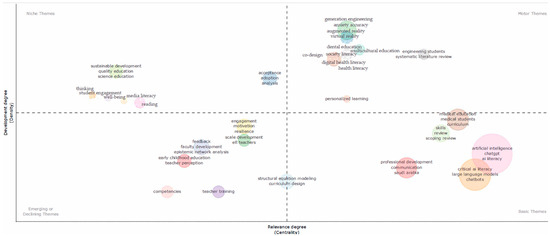

Figure 2 illustrates the rapid evolution of the research landscape concerning AI in education from 2017 to 2025. The Sankey diagram shows how topics flow and transform between periods, while the strategic maps position each theme by its centrality (relevance/interconnectedness) and density (development/cohesiveness) within each period.

Figure 2.

Thematic evolution map of authors’ keywords from 2017 to 2025.

The most striking and dominant trend across all periods is the explosive emergence and subsequent entrenchment of ChatGPT and Large Language Models (LLMs). From being virtually absent in 2017–2022, they quickly became foundational and driving forces in subsequent periods. “Artificial intelligence” itself, along with “AI literacy” and “ethics” (initially), consistently appear as central or motor themes, indicating their enduring importance as overarching concepts and critical research areas. The research trajectory clearly shifts from a general understanding of AI to its specific integration, challenges, and impact within educational settings, encompassing teaching, learning, and professional development. Over time, the field demonstrates a move toward more rigorous quantitative methodologies, with “structural equation modeling” emerging as a foundational theme. As the core themes mature, the research landscape diversifies significantly, with many specialized topics emerging in the “Niche Themes” quadrant.

Figure 2 thus offers a view of the evolving landscape of AI in education and related domains in recent years. From 2023 onward, the time slices narrow to single years (2023–2023, 2024–2024, and 2025–2025), reflecting the accelerating pace of research and publication. Clustering authors’ keywords captures how topics co-occur and evolve, and the topic evolution map provides a macroscopic view of how research themes have emerged, persisted, and shifted over time. The growing number of nodes and connections from 2017–2022 to 2025–2025 illustrates a rapidly expanding and diversifying research landscape.

This plot in Figure 2 vividly illustrates the dynamic nature of research themes:

- Foundational phase, 2017–2022: The initial period shows a broad interest in fundamental AI concepts (“artificial intelligence,” “machine learning”), their application in education (“AI in education”), and critical skills (“critical AI literacy,” “digital literacy,” and “computational thinking”). Ethical considerations (“ethics”) are already a motor theme, indicating early awareness. Sector-specific applications such as “healthcare,” “k-12 education,” and “medical education” appear as distinct, albeit less interconnected, areas.

- Rapid expansion and specificity, 2023–2023: This period shows a marked increase in thematic diversity, likely driven by recent advances in AI. “Artificial intelligence” and “AI in education” remain central hubs, spawning connections to more specific areas. New themes emerge, including “academic integrity,” “assessment,” “bias,” “pre-service teachers,” “literature review,” and “perception.” This suggests a shift toward understanding the *implications* of AI for educational practices and specific stakeholders. “Computational thinking” and “deep learning” also gain prominence.

- Deepening and diversification, 2024–2024: The network continues to expand. “Artificial intelligence” maintains its pivotal role, linking to areas such as “academic writing,” “computing education,” “educational technology,” “learning,” “professional development,” and “teaching.” “AI in education” and “digital literacy” continue to connect to these new pedagogical and technological integration themes. This phase highlights a move toward practical implementation and the development of educational strategies.

- Integration, impact, and future focus, 2025–2025: This period presents a highly fragmented yet interconnected web of topics. “Artificial intelligence” and “AI in education” (and their closely related concepts such as “critical AI literacy,” “digital literacy,” and “ethics”) act as central anchors, connecting to a wide array of themes related to (a) human impact: “anxiety,” “feedback,” “perception”; (b) educational outcomes: “competencies,” “skills,” “scale development,” “sustainable development,” and “quality education”; (c) specific contexts: “early childhood education,” “medical education,” and “teacher training”; and (d) methodology/implementation: “structural equation modeling” and “teacher training.”

The width of the flows leading from “artificial intelligence” and “AI in education” to many new and diverse topics in 2025–2025 signifies their sustained and growing influence as overarching domains that encompass a broadening spectrum of research questions.

We also analyzed the strategic maps, which allowed us to understand the positioning of topics based on their relevance (centrality) and development (density) within each period. These maps are organized into four quadrants as follows:

- Motor Themes (Top Right): High centrality, High density. Well-developed and central to the field, they drive research.

- Niche Themes (Top Left): Low centrality, High density. Specialized areas, internally well-developed but less connected to the broader field.

- Basic Themes (Bottom Right): High centrality, Low density. Foundational concepts, cross-cutting but less internally developed (often because they are broadly accepted prerequisites).

- Emerging or Declining Themes (Bottom Left): Low centrality, Low density. Peripheral topics, either new and gaining traction or fading. The strategic maps for each period are shown in Appendix B (Figure A1, Figure A2, Figure A3 and Figure A4).
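The quadrant logic can be sketched as follows, assuming Callon’s measures: centrality as the summed strength of a theme’s links to keywords outside it, and density as the summed strength of its internal links divided by its number of keywords. Splitting the quadrants at the median of each measure is an assumption made here, as are the function name and toy data; bibliometrix applies its own scaling when drawing the maps.

```python
from statistics import median

QUADRANTS = {
    (True, True): "motor",
    (False, True): "niche",
    (True, False): "basic",
    (False, False): "emerging or declining",
}


def strategic_map(clusters, links):
    """clusters: theme -> set of keywords; links: frozenset({kw1, kw2}) -> link strength.

    Returns theme -> (centrality, density, quadrant).
    """
    centrality, density = {}, {}
    for theme, kws in clusters.items():
        internal = external = 0.0
        for pair, w in links.items():
            inside = len(pair & kws)
            if inside == 2:
                internal += w  # link between two keywords of the same theme
            elif inside == 1:
                external += w  # link from this theme to a keyword outside it
        centrality[theme] = external
        density[theme] = internal / len(kws)
    c_cut, d_cut = median(centrality.values()), median(density.values())
    return {
        theme: (centrality[theme], density[theme],
                QUADRANTS[(centrality[theme] >= c_cut, density[theme] >= d_cut)])
        for theme in clusters
    }
```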

Across the period studied, the thematic cartography reveals consolidation around GenAI with LLMs, notably ChatGPT, emerging in 2023 as a durable, field-defining basic theme and remaining foundational through 2025, while the broader “chatbots” strand peaks as a motor theme in 2023–2024 before drifting toward the basic theme as discourse and practice coalesce around LLM-centric affordances and risks (Bettayeb et al., 2024; Boscardin et al., 2023; Eysenbach, 2023; Haroud & Saqri, 2025; Jensen et al., 2024). Foundational constructs—AI, AI literacy, and critical AI literacy—likewise stabilize in the basic quadrant by 2025, reflecting their anchoring role in curricula and competence frameworks and the growing effort to instrument and standardize assessment through scale development and validation (Lintner, 2024; Salhab, 2024; Schüller, 2022; Walter, 2024; Wilby & Esson, 2023). Applications show differentiated trajectories: medical students and medical education shift from motor (2023–2024) themes to basic (2025) themes as scoping and rapid reviews consolidate use cases (virtual patients, decision tutoring, and content generation), but also note limited outcomes evidence and calls for standardized competencies (Boscardin et al., 2023; J. Lee et al., 2021; Hale et al., 2024; Rincón et al., 2025; Sun et al., 2023). In parallel, K-12 AI education moves from the emerging theme to the motor theme by 2024 and sustains high centrality into 2025 as teacher readiness, AI literacy interventions, and design-based pedagogies scale up despite persistent capacity and policy gaps (I. A. Lee et al., 2021; Ng et al., 2022; Relmasira et al., 2023; Velander et al., 2023). 
Methodologically, the center of gravity moves rightward with an uptick in synthesis and rigor: scoping reviews, systematic reviews, and rapid reviews proliferate across domains, and structural equation modeling (e.g., PLS-SEM) features in studies interrogating human factors and adoption concerns, together signaling maturation of evidence practices by 2025 (Ahmad et al., 2023; Bettayeb et al., 2024; Hale et al., 2024; Hwang et al., 2022; Ji et al., 2022; J. Lee et al., 2021; Lintner, 2024; Sun et al., 2023; Tahiru, 2021). Ethical and societal strands remain salient yet stratify: digital literacy recedes from a broad basic theme anchor toward a more niche position as discourse narrows to AI-specific literacies and governance frameworks (Haroud & Saqri, 2025; Schüller, 2022), acceptance holds in the basic theme amid pragmatic integration in higher education, whereas anxiety occupies a locus nearer the motor theme, animated by concerns over bias, academic integrity, privacy, and skill atrophy documented across sectors (Ahmad et al., 2023; Bettayeb et al., 2024; Haroud & Saqri, 2025; Jensen et al., 2024; Wilby & Esson, 2023). Finally, interaction/skills themes, especially human–AI collaboration, stabilize as a niche with credible potential to move rightward, as emerging design knowledge on student–AI orchestration and teacher–AI role complementarity accumulates but still lacks large-scale empirical validation (Ji et al., 2022; Kim et al., 2025). Collectively, these movements portray a field converging on core literacies and LLM-mediated applications, deepening methodological synthesis and negotiating human-centric concerns, while application domains (medical and K-12) and collaboration paradigms supply the principal engines of near-term centrality.

A data-driven reading of theme dynamics suggests clear priorities. Researchers should concentrate on emergent frontiers—prompt engineering, AI regulation, and human–AI collaboration—while attending to demographic- and context-specific questions and ethical implications. Apparent declines warrant interpretation: many topics have not vanished but have been absorbed into broader, application-first conversations. Stable/basic themes remain essential scaffolding for cumulative inquiry; work that refines AI literacy or advances robust applications of ChatGPT/LLMs will retain high relevance. Niche clusters highlight under-connected sub-fields in which synthesis, theory-building, or bridge studies could unlock broader impact. Finally, the shift from “chatbots” to LLMs as basic themes, together with the prominence of prompt engineering and collaboration, points toward a near-term emphasis on optimizing human–AI interaction and augmenting (rather than replacing) human expertise across diverse educational settings.

We can sum up the key insights and provide guidance for data-driven interpretation as follows:

- The most striking finding is the rapid and profound impact of GenAI, epitomized by “ChatGPT” and “Large Language Models.” These terms moved almost instantaneously from non-existence (before 2023) to becoming central “basic themes” by 2023–2023 and onward. This indicates they are not merely new topics but fundamental shifts that redefine the research landscape, necessitating a re-evaluation of established practices and theories. Researchers must integrate GenAI tools and their implications into their studies. This includes exploring “prompt engineering” (implied by “engineering” in 2025–2025), addressing “academic integrity” (emerging in 2023–2023), and considering new forms of “digital AI literacy.”

- A shift in the focus to human elements is detected in recent periods. The field is steadily moving beyond purely technological discussions of AI toward a more nuanced understanding of its integration into human learning environments. Early dominance of core AI concepts (e.g., “machine learning” and “deep learning”) as distinct basic themes gives way to their integration, while “basic” and “emerging” quadrants increasingly feature terms such as “competencies,” “teacher training,” “student well-being,” “engagement,” “motivation,” and “teacher perception.” Future research should prioritize the human experience of AI, focusing on how AI impacts learners, educators, and the educational process itself. This includes developing evidence-based pedagogical designs and understanding the psychological and social implications of AI.

- The increasing presence of advanced research methodologies such as “factor analysis,” “systematic literature review” (as motor themes), and “structural equation modeling” (as a basic theme) indicates a maturing field. Researchers are moving toward more rigorous and robust quantitative and synthesis methods. Adopting and innovating with advanced research methods will be crucial for producing high-quality, impactful research. Methodological sophistication can lead to more reliable and generalizable findings.

- While “AI in education” remains a broad domain, there is growing specialization into specific educational contexts (“K-12 education,” “early childhood education,” “higher education,” “medical education,” and “nursing education”) and specialized literacies (“critical AI literacy,” “digital AI literacy,” and “communication AI literacy”). Geographical specificities (“Saudi Arabia”) also emerge, suggesting tailored research needs. Researchers should consider the unique challenges and opportunities of AI in diverse educational settings and cultural contexts. Tailored solutions and context-specific research are likely to gain prominence.

- “Ethics” was an early motor theme and remains central. “Bias” and “academic integrity” emerged quickly, and “AI regulation” is emerging. “Anxiety” and “student well-being” are also starting to be featured. This reflects a growing awareness of the potential risks and negative impacts of AI. Research on responsible AI, including ethical frameworks, fairness, transparency, and the psychological impact of AI, will be paramount. Developing guidelines and policies for AI use in education will be a key area.

In conclusion, the bibliometric analysis clearly shows a dynamic field experiencing rapid growth, driven significantly by the advent of GenAI. The research focus is evolving from general technical exploration to specific pedagogical applications, human-centric impacts, and rigorous methodological approaches. Researchers should strategically align their work with these emerging trends, paying particular attention to the practical, ethical, and human dimensions of AI integration in education.

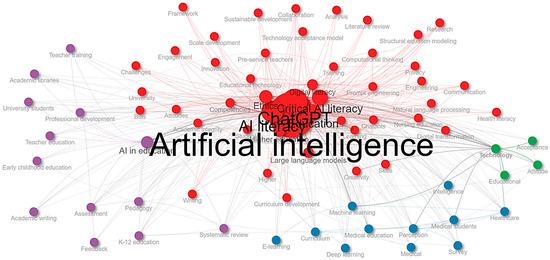

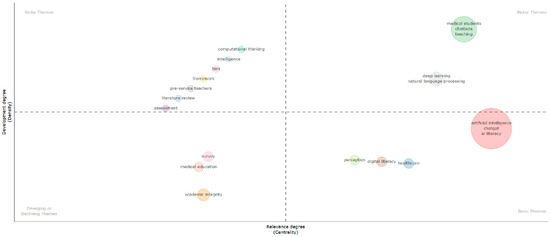

Figure 3 shows the thematic structure and key concepts of the Web of Science (WoS) dataset, based on authors’ keywords.

Figure 3.

Co-occurrence network of authors’ keywords.

The network is highly centralized and dense, particularly around the terms “artificial intelligence” and “ethics, critical AI literacy.” These nodes are the largest in size, indicating their high frequency and significant co-occurrence with other keywords. Their central position signifies that they are the foundational and most overarching themes of the research collection. The network broadly follows a hub-and-spoke model, with “artificial intelligence” acting as the primary central hub, from which numerous connections radiate outward to various related concepts and communities. “Ethics, critical AI literacy” serves as a crucial secondary hub, especially within its own community.

The use of different colors (red, purple, blue, and green) clearly delineates distinct research communities or thematic clusters. This indicates that while “artificial intelligence” is the overarching subject, the research explored through these keywords branches into several focused sub-domains.

Walktrap community detection (Donthu et al., 2021) identified four prominent communities, each representing a specific thematic focus:

- Red Community: Core AI in Education and Ethical Implications (Central Hub). Key Terms: “artificial intelligence” (largest), “ethics, critical AI literacy” (largest), “large language models,” “education technology,” “pre-service teachers,” “innovation,” “sustainable development,” “collaboration,” “analysis,” “literature review,” “research,” “structural equation modeling,” “computational thinking,” “privacy,” “communication,” “natural language processing,” “health literacy,” “transformation,” “skills,” “creativity,” “competencies,” “chatbots,” “human–computer interaction,” “educational assessment,” “higher education,” “curriculum development,” “human-centered AI,” “responsible AI,” “digital literacy,” “digital citizenship,” “digital transformation,” and “learning analytics.” This is the dominant and most comprehensive community, reflecting the multifaceted discourse around artificial intelligence, especially in the context of education. The prominence of “ethics, critical AI literacy” alongside “artificial intelligence” highlights a strong and mature focus on the societal, ethical, and responsible integration of AI. The inclusion of “large language models” and “chatbots” points to research on cutting-edge AI technologies. Terms such as “pre-service teachers” and “higher education” indicate a focus on educator training and tertiary education. This community also encompasses broader research aspects such as sustainability, collaboration, and various research methodologies.

- Purple Community: Pedagogical Applications and Educational Stages (Left). Key Terms: “AI in education” (bridge term), “teacher training,” “university students,” “teacher education,” “early childhood education,” “pedagogy,” “assessment,” “academic writing,” “K-12 education,” “feedback,” and “bias.” This community zeroes in on the practical application and implications of AI across different educational stages and settings. It emphasizes the “how-to” and “who” of AI integration, focusing on specific learner groups (university, K-12, and early childhood) and educational processes (teacher training, pedagogy, assessment, academic writing, and feedback). The presence of “bias” indicates critical scrutiny of AI’s fairness and equity in educational contexts. “AI in education” serves as a crucial bridge node, connecting this practical cluster back to the main AI theme.

- Blue Community: AI in Medical and Healthcare Education (Bottom Right). Key Terms: “machine learning,” “deep learning,” “curriculum,” “medical education,” “healthcare,” “medical students,” “survey,” “perception,” “e-learning,” and “systematic review.” This distinct cluster demonstrates a specialized research niche focusing on the application of specific AI sub-fields, such as “machine learning” and “deep learning,” within the “medical education” and “healthcare” domains. It explores how these technologies are integrated into curricula for “medical students” and the broader healthcare field. Terms such as “perception” and “survey” suggest studies on attitudes and understanding within this professional group, while “systematic review” points to a methodological approach.

- Green Community: Technology Acceptance and Attitudes (Far Right). Key Terms: “technology,” “attitude,” “acceptance,” and “educational.” This smaller, yet significant, community investigates the human dimension of technology adoption. It focuses on the “acceptance” and “attitude” toward new technologies, like AI, within “educational” settings. This suggests research exploring factors influencing the willingness of stakeholders (e.g., students, teachers) to adopt and utilize AI tools.
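To make the community-detection step more concrete, the following is a simplified, standard-library Python sketch of Walktrap: nodes are compared through the distributions of short random walks of length t, and adjacent communities are merged greedily so as to minimize the increase in within-community distance. It assumes an unweighted graph and stops at a requested number of communities k, whereas library implementations (such as igraph’s) handle weighted edges and usually cut the merge tree at the modularity maximum; the function and the toy graph are illustrative only.

```python
def walktrap(n_nodes, edges, k, t=4):
    """Simplified Walktrap on an unweighted, undirected graph with nodes 0..n_nodes-1.

    Returns the k communities (or fewer, if no adjacent pair is left) as sorted lists.
    """
    # Adjacency with a self-loop on every node, as in the original formulation
    adj = [{i} for i in range(n_nodes)]
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    deg = [len(a) for a in adj]

    def walk_profile(start):
        """Probability of being at each node after t random-walk steps from `start`."""
        prob = [0.0] * n_nodes
        prob[start] = 1.0
        for _ in range(t):
            nxt = [0.0] * n_nodes
            for u, p in enumerate(prob):
                for v in adj[u]:
                    nxt[v] += p / deg[u]
            prob = nxt
        return prob

    # Each community: (member set, size-weighted mean walk profile of its members)
    comms = {i: ({i}, walk_profile(i)) for i in range(n_nodes)}

    def merge_cost(a, b):
        """Increase in within-community distance (delta sigma) if a and b are merged."""
        (ma, pa), (mb, pb) = comms[a], comms[b]
        r2 = sum((x - y) ** 2 / deg[j] for j, (x, y) in enumerate(zip(pa, pb)))
        return len(ma) * len(mb) / (len(ma) + len(mb)) * r2 / n_nodes

    while len(comms) > k:
        best = None
        ids = list(comms)
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                # Only communities joined by at least one edge may merge
                if any(adj[u] & comms[b][0] for u in comms[a][0]):
                    cost = merge_cost(a, b)
                    if best is None or cost < best[0]:
                        best = (cost, a, b)
        if best is None:
            break
        _, a, b = best
        (ma, pa), (mb, pb) = comms.pop(a), comms.pop(b)
        size = len(ma) + len(mb)
        profile = [(len(ma) * x + len(mb) * y) / size for x, y in zip(pa, pb)]
        comms[a] = (ma | mb, profile)
    return sorted(sorted(members) for members, _ in comms.values())
```

On two triangles joined by a single edge, for example, the sketch recovers the two triangles as separate communities.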

The largest nodes are inherently the most relevant as they represent the most frequently co-occurring and central themes:

- “Artificial intelligence”: This term is the absolute core of the entire dataset. All other concepts and communities revolve around it, affirming its status as the primary subject of the collected research.

- “Ethics, critical AI literacy”: The equally large size and central position of this node within the red cluster highlight that the discourse is not merely about AI technology itself, but profoundly about its responsible development, deployment, and the necessity for users to critically understand its implications. This signifies a mature and ethically aware research field.

- “Large language models”: The prominence of LLMs shows that the most recent advancements in AI are actively being discussed and researched within this academic domain.

- “AI in education”: This node acts as a critical link, solidifying the application context of “artificial intelligence” within the “education” field, and connecting the central theoretical/ethical discussions to more practical pedagogical inquiries.

- “Machine learning”/“deep learning”: These terms indicate that research is also focused on the specific underlying AI techniques, particularly within applied domains such as medical education.

We can conclude that this “Authors’ Keywords network” reveals a dynamic and multifaceted research landscape centered on artificial intelligence. While the core interest lies in AI’s application and implications within education, there is a strong emphasis on ethical considerations and critical understanding. Furthermore, the network highlights specialized applications in fields such as medical education and an overarching concern with technology acceptance. This comprehensive map can guide researchers in identifying emerging trends, potential collaborators, and key gaps in the existing literature.

Insights from Phase 1 guided our expectations for Phase 2. The bibliometric maps show the field consolidating around two intertwined strands—foundational/cognitive knowledge (representation, learning, and related computational constructs) and critical/ethical engagement—within an LLM-centric landscape that foregrounds pre-service teacher contexts and responsible, human-centered AI (anticipating our cohort comparison).

Consistent with the Hornberger-based instrument’s content coverage (robust in BI2–BI3, substantial in BI5), we therefore expected (RQ2) higher total AI-literacy scores among pre-service technology teachers, given their greater exposure to structured coursework in core AI concepts, and (RQ3) group differences concentrated in computationally oriented competencies (e.g., understanding intelligence, programmability).

At the same time, because Phase 1 situates ethics/criticality as a central, widely diffused theme—and prior work characterizes these critical competencies as transversal and often developed through general digital/media literacies—we anticipated limited between-group divergence in critical AI literacy (RQ4).

Finally, the unidimensional structure and reliability of the instrument support the use of a total score for RQ2, while our theme-to-competency map clarifies how the specific subscale contrasts in RQ3 instantiate the conceptual emphases identified in Phase 1.

3.2. AI Literacy Level and Differences

Using the published item-to-competency allocation of the Hornberger et al. (2023) test, our instrument covers BI2 (representation and reasoning) and BI3 (learning) robustly (≥17 items combined), offers substantial coverage of BI5 (societal impact), with ≥7 items spanning ethics, legal, and human-in-the-loop content, includes minimal coverage of BI4 (natural interaction), limited to chatbots, and, by design, provides no direct coverage of BI1 (perception), because robotics/sensing competencies were not included in item development.

Table 2 presents the descriptive statistics: first the total AI literacy score, then the score for each competency in Long and Magerko’s (2020) framework. In total AI literacy, students in the technology teacher education program outperformed secondary technical school students on average (12.40 vs. 11.97). Compared with the results reported by Hornberger et al. (2023) for higher education settings, the average score of our technology teacher education students (M = 12.40) is much lower than that of engineering and natural science students (M = 20.04) but comparable to that of social science students (M = 13.65). The AI literacy of our higher education students thus appears much closer to that of their counterparts in the United Kingdom and the United States of America (Hornberger et al., 2025).

Table 2.

Students’ total AI literacy and scores for each AI literacy competency, expressed as mean (M) and standard deviation (SD) (n = 145).

In a recent international study, Hornberger et al. (2025) reported that German higher education students scored higher than UK and US students (0.38, −0.12, and −0.24 on the IRT scale, respectively), whereas the higher education students in our study, enrolled in Slovenian teacher education programs, are comparable to UK students (−0.015). For this comparison, we used item response theory (IRT) scores rather than raw sum scores because they place respondents on a common latent scale and thus provide more precise measurement.
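To illustrate what an IRT score is, the sketch below estimates a respondent’s latent ability from a 0/1 response pattern. It assumes a Rasch (one-parameter logistic) model and an expected a posteriori (EAP) estimator with a standard-normal prior; the item difficulties are hypothetical, and neither the model nor the estimator is necessarily the one used by Hornberger et al. (2025).

```python
import math

def rasch_prob(theta, b):
    """Probability of a correct answer under the Rasch model (item difficulty b)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def eap_ability(responses, difficulties, n_points=81, lo=-4.0, hi=4.0):
    """EAP estimate of ability theta with a standard-normal prior.

    responses: list of 0/1 item scores; difficulties: matching item difficulties.
    The posterior is integrated on an equally spaced grid over [lo, hi].
    """
    if len(responses) != len(difficulties):
        raise ValueError("responses and difficulties must have equal length")
    step = (hi - lo) / (n_points - 1)
    num = den = 0.0
    for i in range(n_points):
        theta = lo + i * step
        weight = math.exp(-0.5 * theta * theta)  # unnormalised N(0, 1) prior
        for x, b in zip(responses, difficulties):
            p = rasch_prob(theta, b)
            weight *= p if x == 1 else 1.0 - p  # likelihood of this response
        num += theta * weight
        den += weight
    return num / den  # posterior mean of theta

# Hypothetical five-item test, ordered from easy to hard.
difficulties = [-1.5, -0.5, 0.0, 0.5, 1.5]
print(round(eap_ability([1, 1, 1, 0, 0], difficulties), 3))
print(round(eap_ability([1, 1, 1, 1, 1], difficulties), 3))
```

Under the Rasch model the raw score is a sufficient statistic, so two patterns with the same number of correct answers receive the same ability estimate; more general models (e.g., 2PL) weight items by discrimination and would not have this property.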

Our results are consistent with some of the findings of a recent study by Licardo et al. (2025), in which almost half of the participating high school students rated their knowledge of AI as very poor or negligible, as did more than half of the participating university students. More than 80% of both high school and university students had not received any organized education or training in the use of AI (Licardo et al., 2025). Almost half of the students also felt that their school or faculty environment did not sufficiently encourage the development and use of AI, and a slightly smaller share reported needing more support (Licardo et al., 2025). As the authors conclude, the knowledge, extent of use, and impact of AI on learning remain unclear or insufficiently articulated (Licardo et al., 2025).

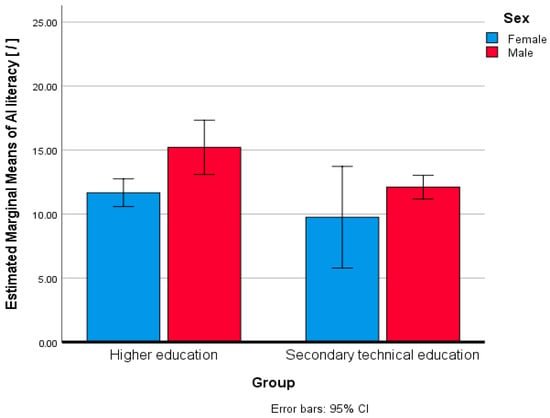

To identify differences between groups of students, we first checked the normality assumption with the Shapiro–Wilk test for total AI literacy and for each AI literacy competency. The test did not indicate significant departures from normality in any study group (p > 0.05), so parametric tests were used. We then ran a 2 × 2 between-subjects factorial analysis of variance (ANOVA) with group membership and sex as independent variables and the total AI literacy score as the dependent variable. Levene’s test confirmed that the dependent measure met the assumption of homogeneity of variance (p = 0.343). Tests of between-subjects effects revealed significant main effects of group membership (p = 0.02, partial η2 = 0.054) and sex (p = 0.01, partial η2 = 0.074), whereas the group × sex interaction was not significant (p = 0.61). As shown in Figure 4, males outperformed females in both educational settings. The effect sizes can be regarded as small to medium (Cohen et al., 2013).

Figure 4.

Estimated marginal means of the total score of AI literacy (n = 145).
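Partial η2 for each effect in the factorial ANOVA is SS_effect / (SS_effect + SS_error). The sketch below computes F and partial η2 for a 2 × 2 between-subjects design, using hypothetical scores and assuming equal cell sizes so that the sums of squares separate cleanly. The study’s cells are unequal, which calls for a regression-based decomposition (e.g., Type III sums of squares) in statistical software; p-values would additionally require the F distribution (for example, `scipy.stats.f.sf`), which is omitted here to keep the sketch dependency-free.

```python
from statistics import mean

def two_way_anova_balanced(cells):
    """Two-way between-subjects ANOVA for a balanced design.

    cells maps (level_of_A, level_of_B) -> list of scores; every cell must hold
    the same number of observations. Returns F and partial eta squared for
    both main effects and the A x B interaction.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(v) != n for v in cells.values()):
        raise ValueError("this sketch assumes equal cell sizes")

    scores = [x for v in cells.values() for x in v]
    grand = mean(scores)
    cell_m = {k: mean(v) for k, v in cells.items()}
    # With equal cell sizes, marginal means are means of the cell means.
    a_m = {a: mean([cell_m[(a, b)] for b in b_levels]) for a in a_levels}
    b_m = {b: mean([cell_m[(a, b)] for a in a_levels]) for b in b_levels}

    # Sums of squares for main effects, interaction, and within-cell error.
    ss = {
        "A": n * len(b_levels) * sum((m - grand) ** 2 for m in a_m.values()),
        "B": n * len(a_levels) * sum((m - grand) ** 2 for m in b_m.values()),
        "AxB": n * sum((cell_m[(a, b)] - a_m[a] - b_m[b] + grand) ** 2
                       for a in a_levels for b in b_levels),
    }
    ss_err = sum((x - cell_m[k]) ** 2 for k, v in cells.items() for x in v)
    df = {"A": len(a_levels) - 1, "B": len(b_levels) - 1}
    df["AxB"] = df["A"] * df["B"]
    df_err = len(scores) - len(a_levels) * len(b_levels)
    ms_err = ss_err / df_err

    return {
        effect: {
            "F": (ss[effect] / df[effect]) / ms_err,
            "partial_eta_sq": ss[effect] / (ss[effect] + ss_err),
        }
        for effect in ss
    }

# Hypothetical scores: factor A = group, factor B = sex (two per cell).
cells = {
    ("pre-service", "female"): [11, 13],
    ("pre-service", "male"): [13, 15],
    ("secondary", "female"): [10, 12],
    ("secondary", "male"): [12, 14],
}
for effect, result in two_way_anova_balanced(cells).items():
    print(effect, round(result["F"], 2), round(result["partial_eta_sq"], 3))
```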

3.3. AI Literacy Competencies and Between-Group Differences

To answer the third research question, we ran a MANCOVA with the 14 AI literacy competencies as dependent variables, group membership as the independent variable, and sex as a covariate. Box’s test of the equality of covariance matrices across the two samples was non-significant (p = 0.18), so this assumption was met. The MANCOVA showed no significant multivariate effect of group membership after controlling for sex, Wilks’ lambda = 0.86, F(14, 129) = 1.41, p = 0.15, indicating that technology teacher education students and secondary technical school students did not differ on the AI literacy competencies when these were considered jointly. At the univariate level, group differences emerged only for programmability (p = 0.045, small effect size, η2 = 0.03) and understanding intelligence (p = 0.002, medium effect size, η2 = 0.07). In addition, males outperformed females on the interdisciplinarity, data literacy, and ethics scales (p = 0.001, η2 = 0.07; p = 0.011, η2 = 0.05; p = 0.018, η2 = 0.04, respectively), with small to medium effect sizes (Cohen et al., 2013).
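Because group membership has only two levels, the multivariate hypothesis has one degree of freedom, and Wilks’ lambda then converts exactly to an F statistic with df1 = number of dependent variables and df2 = error df − df1 + 1. The sketch below assumes an error df of N − 3 (intercept, group indicator, and sex covariate); under that assumption it reproduces the degrees of freedom reported for both MANCOVAs, F(14, 129) and F(4, 139). The lambda of 0.90 in the demo is hypothetical and serves only to show the conversion.

```python
def error_df(n_obs, n_predictors):
    """Residual degrees of freedom: N minus the predictors minus the intercept."""
    return n_obs - n_predictors - 1

def wilks_single_df_f(wilks_lambda, n_dvs, df_error):
    """Convert Wilks' lambda to an exact F when the effect has one hypothesis df.

    Returns (F, df1, df2) with df1 = number of dependent variables and
    df2 = df_error - n_dvs + 1.
    """
    if not 0.0 < wilks_lambda <= 1.0:
        raise ValueError("Wilks' lambda must lie in (0, 1]")
    df1 = n_dvs
    df2 = df_error - n_dvs + 1
    f_value = ((1.0 - wilks_lambda) / wilks_lambda) * (df2 / df1)
    return f_value, df1, df2

def wilks_from_f(f_value, df1, df2):
    """Inverse of the conversion above: recover lambda from F and its df."""
    return 1.0 / (1.0 + f_value * df1 / df2)

# Assumed model: intercept + group indicator + sex covariate, N = 145.
dfe = error_df(145, 2)
for n_dvs in (14, 4):
    # Hypothetical lambda of 0.90, used only to illustrate the conversion.
    f_value, df1, df2 = wilks_single_df_f(0.90, n_dvs, dfe)
    print(f"{n_dvs} DVs: F({df1}, {df2}) = {f_value:.2f}")
```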

Our findings are also consistent with the study by Licardo et al. (2025), who attributed possible differences between students to university students’ better understanding of the operating principles behind these technologies. Both groups of students used AI mainly for task preparation, problem-solving, and searching for data and information, while university students also used AI to translate learning material and proofread their written assignments (Licardo et al., 2025). It seems that university students are more aware of the complexity of critically evaluating information generated by such technologies.

3.4. Critical AI Literacy and Between-Group Differences

Among the competencies listed by Hornberger et al. (2023), those most directly related to critical AI literacy align with Big Idea 5 (societal impact) of the Five Big Ideas framework (Touretzky et al., 2022), which holds that AI can affect society both positively and negatively. These competencies are as follows:

- AI’s Strengths and Weaknesses: This competency relates to how AI may outperform or fall short of human abilities, thereby shaping real-world outcomes (positive or negative);

- Human Role in AI: This competency emphasizes designers’ and users’ influence on AI systems, underscoring how human decisions about data, goals, and oversight affect society;

- Ethics: This competency addresses issues of fairness, bias, privacy, and accountability in AI, all of which are key considerations for societal impact;

- Interdisciplinarity: This competency concerns how AI’s societal effects are examined from multiple perspectives (e.g., legal, medical, and ethical).

To answer the fourth research question, we conducted a MANCOVA with the four critical AI literacy competencies as dependent variables, group membership as the independent variable, and sex as a covariate. Box’s test of the equality of covariance matrices across the two samples was non-significant (p = 0.31). The MANCOVA showed no significant multivariate effect of group membership after controlling for sex, Wilks’ lambda = 0.96, F(4, 139) = 1.19, p = 0.314, indicating that technology teacher education students and secondary technical school students did not differ on the critical AI literacy scales. Males, however, outperformed females on the interdisciplinarity and ethics scales (p = 0.001, η2 = 0.07; p = 0.018, η2 = 0.04, respectively), with small to medium effect sizes (Cohen et al., 2013).

Licardo et al. (2025) provide further context for our critical AI literacy findings: regarding ethical considerations, secondary school students named privacy as their main concern when using AI, whereas university students considered the spread of misinformation the most pressing ethical challenge. University students may also show greater critical awareness of the risks of excessive AI use than secondary school students, although these differences were not statistically confirmed. Based on their knowledge and experience, both groups agreed that AI technologies increase efficacy and automation, which makes them more productive in their tasks and assignments within the specific educational context (Licardo et al., 2025).

Our finding of no significant between-group differences in critical AI literacy (p > 0.05) can be attributed to several overlapping factors that Velander et al. (2024), Veldhuis et al. (2025), and Yim (2024) identify in their analyses of AI literacy frameworks and practices:

- Both groups of students may have similarly limited formal AI learning opportunities, leading to comparable basic knowledge or awareness. Veldhuis et al. (2025) and Rihtaršič (2018) note that many learners—whether in secondary or higher education—often draw on the same informal resources (e.g., social media or popular science articles) to develop a basic understanding of AI concepts.

- Critical AI literacy, as conceptualized in these studies, concerns overarching skills for questioning and critiquing AI (e.g., recognizing biases and discussing ethical trade-offs). These transversal skills can be acquired through general digital or media literacy. Accordingly, Velander et al. (2024) report that individuals with different educational backgrounds can reach a similar level of critical understanding if they share a common foundation in digital or media literacy.

- Critical AI literacy is not a static field. New AI tools and controversies (such as large language models, facial recognition, or algorithmic policy decisions) keep emerging, and both groups learn about them at the same time through widely available media. As a result, when a new AI-related topic permeates public discourse and informal learning channels, the gap between education levels may be less pronounced than one would expect (Yim, 2024).

Taken together, the literature suggests that shared informal exposure, the broad nature of critical AI competencies, and the limitations of measurement tools often result in no significant group differences when critical AI literacy is assessed (Velander et al., 2024; Veldhuis et al., 2025; Yim, 2024). Despite different formal educational pathways, learners may converge on a similar overall capacity, or high within-group variability may mask average differences between groups (Khuder et al., 2024).

Table 3 summarizes the inferential results for RQ2–RQ4, emphasizing patterns over descriptive detail. Pre-service technology teacher education students scored higher on the total AI literacy measure (small to medium effect size), with competency-level advantages concentrated in Understanding Intelligence and Programmability. Multivariate tests showed no overall group effect across all competencies and no between-group differences on the critical AI literacy composite, suggesting that criticality may function as a transversal competence. Finally, small to medium sex effects emerged on the total score and on Interdisciplinarity, Data Literacy, and Ethics, suggesting that targeted pedagogical support may be warranted to reduce subgroup disparities.

Table 3.

Condensed Results for RQ2–RQ4: Key Group and Sex Effects.

Note. Values reported in the table: group main effect and total-score means (M = 12.40 vs. 11.97; p = 0.02; η2 = 0.054) and sex effect (p = 0.01; η2 = 0.074); competency-level univariate effects for Understanding Intelligence (p = 0.002; η2 = 0.07) and Programmability (p = 0.045; η2 = 0.03); and sex differences on Interdisciplinarity, Data Literacy, and Ethics (p = 0.001/0.011/0.018; η2 = 0.07/0.05/0.04).
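The “small to medium” characterizations above rest on Cohen’s conventional benchmarks for η2 (about 0.01 small, 0.06 medium, 0.14 large). The minimal sketch below applies these cut-offs to the η2 values reported in this section. Treating each benchmark as an inclusive lower bound is an assumption, and the labels are heuristics rather than substantive judgments of practical importance.

```python
# Cohen's conventional benchmarks for (partial) eta squared, largest first.
BENCHMARKS = [(0.14, "large"), (0.06, "medium"), (0.01, "small")]

def label_eta_squared(eta_sq):
    """Return Cohen's conventional label for an eta-squared value."""
    for threshold, label in BENCHMARKS:
        if eta_sq >= threshold:
            return label
    return "negligible"

# Eta-squared values as reported in this section.
reported = {
    "group (total score)": 0.054,
    "sex (total score)": 0.074,
    "understanding intelligence": 0.07,
    "programmability": 0.03,
    "sex: interdisciplinarity": 0.07,
    "sex: data literacy": 0.05,
    "sex: ethics": 0.04,
}
for effect, eta in reported.items():
    print(f"{effect}: {eta} -> {label_eta_squared(eta)}")
```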

4. Discussion

The discussion is organized around five main themes that together frame the interpretation of the results. It begins with the prevalent themes and intellectual foundations of (critical) AI literacy in technology and engineering education, continues with analyses of students’ AI literacy and competencies in teacher education and secondary technical schools, with particular focus on critical AI literacy, and concludes with the limitations of this study and directions for future work.

4.1. Dominant Themes and Intellectual Bases of (Critical) AI Literacy in Technology and Engineering Education

Across 2017–2025, the thematic evolution map (Figure 2) and the strategic maps in Appendix B (Figure A1, Figure A2, Figure A3 and Figure A4) show a decisive shift from broad, conceptual treatments of “AI in education” toward application-rich, methodologically stronger work anchored in GenAI (LLMs/ChatGPT), AI/critical AI literacy, and human–AI interaction/governance (Tzirides et al., 2024). From 2023 onward, LLMs emerge abruptly and stabilize as a basic/foundational theme, while “chatbots” peak as a motor theme in 2023–2024 before being subsumed under an LLM-centric umbrella. By 2025, AI literacy and critical AI literacy consolidate in the basic quadrant, reflecting curricular embedding and growth in instrument development and validation; meanwhile, the broader “digital literacy” strand drifts toward a niche position as discourse narrows to AI-specific literacies and policy frameworks. These migrations coincide with a maturation of methods (growth in systematic/scoping reviews and structural equation modeling) and the rise of well-defined application arenas (notably K–12 and medical/health professions education). Together, the maps portray a field converging on core literacies and LLM-mediated practices while negotiating ethics, integrity, privacy, and bias as durable concerns.
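Strategic maps position each theme by Callon centrality (strength of its links to other themes) and density (strength of its internal links), and the quadrant determines the labels used above: motor themes score high on both, basic themes are central but less developed, niche themes are dense but peripheral, and emerging or declining themes score low on both. The minimal sketch below performs this classification, assuming median splits on both axes and hypothetical centrality and density values; bibliometric software may place the axes differently.

```python
from statistics import median

def classify_themes(themes):
    """Assign strategic-map quadrants from (centrality, density) pairs.

    themes maps theme name -> (centrality, density). Both axes are split at
    the median of the respective measure (an assumption for this sketch).
    """
    c_cut = median(c for c, _ in themes.values())
    d_cut = median(d for _, d in themes.values())
    quadrants = {}
    for name, (centrality, density) in themes.items():
        central = centrality >= c_cut
        dense = density >= d_cut
        if central and dense:
            quadrants[name] = "motor"
        elif central:
            quadrants[name] = "basic"
        elif dense:
            quadrants[name] = "niche"
        else:
            quadrants[name] = "emerging or declining"
    return quadrants

# Hypothetical (centrality, density) values, for illustration only.
themes = {
    "large language models": (0.9, 0.4),
    "chatbots": (0.8, 0.9),
    "digital literacy": (0.2, 0.7),
    "human-AI interaction": (0.1, 0.1),
}
for name, quadrant in classify_themes(themes).items():
    print(f"{name}: {quadrant}")
```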

The co-occurrence network (Figure 2) clarifies this organization into four Walktrap communities: a red hub around AI and ethics/critical AI literacy (linking to LLMs, pre-service teachers, assessment, and responsible/human-centered AI); a purple community on pedagogical application across educational stages (teacher education, K–12, assessment, and academic writing/feedback); a blue cluster emphasizing medical/health education (ML/DL, curricula, surveys, and systematic reviews); and a green cluster on technology acceptance/attitudes. The position and size of nodes underscore the centrality of ethics/criticality and the practical salience of pre-service teacher contexts, which anticipates our cohort comparison.
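A keyword co-occurrence network rests on pairwise counts of keywords that appear in the same record; these counts become the edge weights that a community-detection algorithm such as Walktrap then partitions. The minimal sketch below covers only the counting step, assuming each record contributes a list of keywords; the records are hypothetical, and the Walktrap step itself (available, for example, as `community_walktrap` in python-igraph) is not reproduced here.

```python
from collections import Counter
from itertools import combinations

def keyword_cooccurrence(documents):
    """Count how often each pair of keywords appears in the same document.

    documents: iterable of keyword lists (e.g., author keywords per record).
    Keywords are lower-cased and de-duplicated within a document, and pairs
    are stored in alphabetical order so (a, b) and (b, a) are counted together.
    """
    counts = Counter()
    for keywords in documents:
        unique = sorted({k.strip().lower() for k in keywords})
        counts.update(combinations(unique, 2))
    return counts

# Hypothetical bibliographic records, for illustration only.
records = [
    ["AI literacy", "ethics", "ChatGPT"],
    ["ChatGPT", "pre-service teachers", "ethics"],
    ["AI literacy", "Ethics", "assessment"],
]
for pair, n in keyword_cooccurrence(records).most_common(3):
    print(pair, n)
```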

Our measurement strategy aligns with these cartographies: coverage is robust for AI4K12 BI2–BI3 (representation/reasoning; learning) and substantial for BI5 (societal impact), but minimal for BI4 (natural interaction) and, by design, absent for BI1 (perception/robotics), as already suggested by Licardo et al. (2025). This may explain why competencies linked to human–AI interaction and robotics, which appear in the maps as emerging or niche themes, are underrepresented among the differences we detected. We therefore interpret several “non-differences” cautiously, as possibly reflecting measurement coverage rather than cohort equivalence alone.