Two Hierarchical Guidance Laws of Pursuer in Orbital Pursuit–Evasion–Defense Game

Abstract

1. Introduction

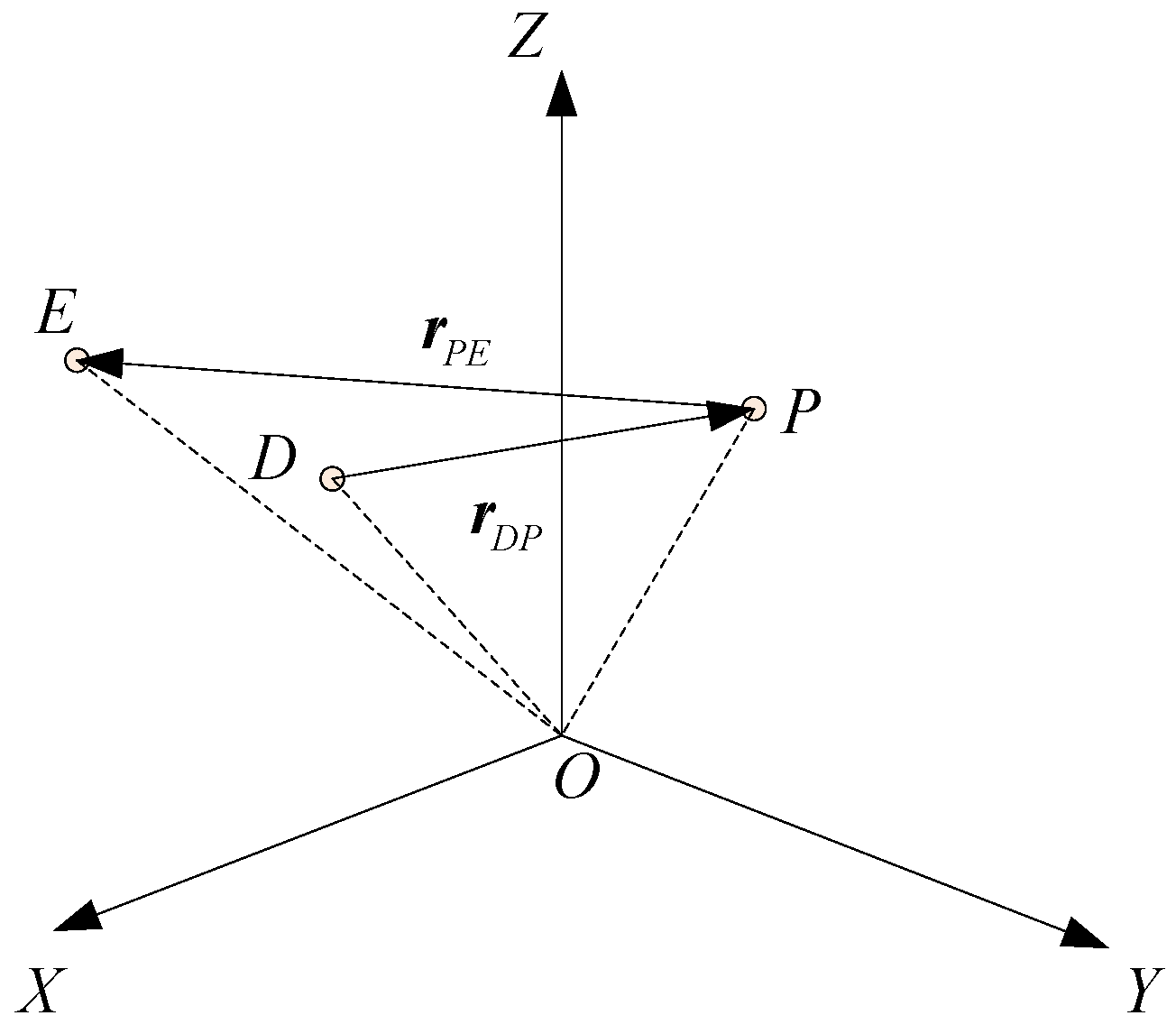

2. Mathematical Modeling of Spacecraft Pursuit–Evasion–Defense Game

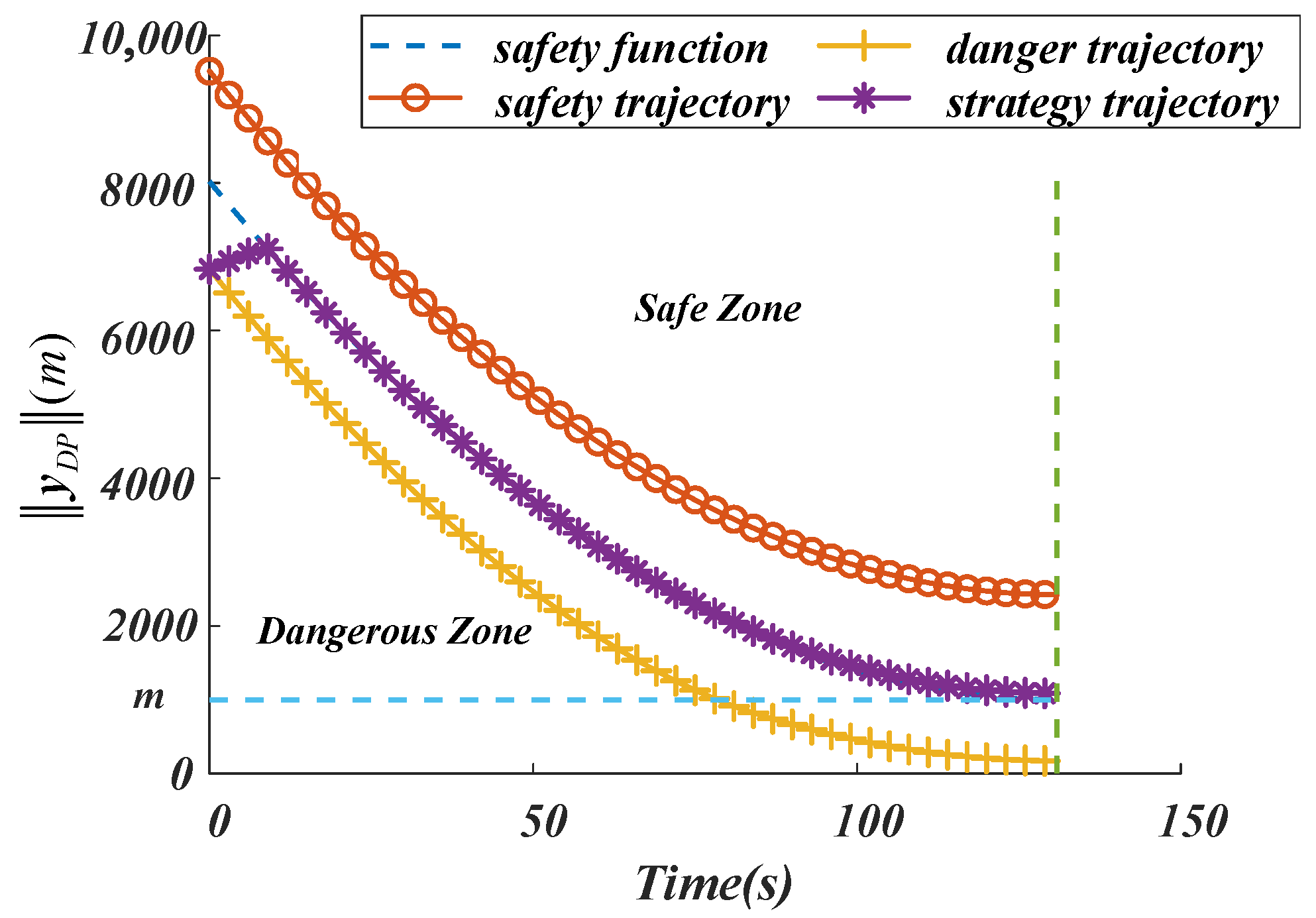

- (1) How does the pursuer weigh the two goals of pursuing the evader and evading the defender in the orbital pursuit–evasion–defense game?

- (2) Once the pursuer has determined its game target at the current moment (the evader or the defender), how exactly should its guidance law be designed?
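The two questions above split the pursuer's problem into an upper-layer decision (which goal to serve right now) and a lower-layer guidance law for the chosen target. The Python sketch below illustrates only the upper layer, under loudly stated assumptions: the `State` class, the straight-line closing-time estimate in `closing_time`, and the switching rule in `select_goal` with its `margin` parameter are all hypothetical illustrations. They are not the authors' conservative or radical guidance laws, and orbital dynamics are ignored entirely.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class State:
    """Hypothetical point-mass state: position (m) and velocity (m/s)."""
    pos: Vec3
    vel: Vec3


def closing_time(chaser: State, target: State) -> float:
    """Assumed time-to-go estimate: range divided by closing speed.

    Straight-line kinematics only (no orbital dynamics). Returns math.inf
    when the two bodies are not closing on each other.
    """
    r = [t - c for c, t in zip(chaser.pos, target.pos)]  # relative position
    v = [t - c for c, t in zip(chaser.vel, target.vel)]  # relative velocity
    rng = math.sqrt(sum(x * x for x in r))
    if rng == 0.0:
        return 0.0
    closing_speed = -sum(x * y for x, y in zip(r, v)) / rng
    return rng / closing_speed if closing_speed > 0.0 else math.inf


def select_goal(pursuer: State, evader: State, defender: State,
                margin: float = 1.0) -> str:
    """Hypothetical upper-layer rule (an assumption, not the paper's law).

    Keep pursuing the evader if the defender is not closing on the pursuer,
    or if the pursuer is expected to reach the evader at least `margin`
    seconds before the defender reaches the pursuer; otherwise switch to
    evading the defender.
    """
    t_pursue = closing_time(pursuer, evader)
    t_threat = closing_time(defender, pursuer)
    if math.isinf(t_threat) or t_pursue + margin < t_threat:
        return "pursue_evader"
    return "evade_defender"


if __name__ == "__main__":
    p = State((0.0, 0.0, 0.0), (10.0, 0.0, 0.0))
    e = State((100.0, 0.0, 0.0), (0.0, 0.0, 0.0))
    d = State((-1000.0, 0.0, 0.0), (20.0, 0.0, 0.0))
    print(select_goal(p, e, d))  # pursue_evader
```

In this sketch, raising `margin` makes the rule switch to evasion earlier. A faithful implementation would likely replace `closing_time` with a time-to-go estimate derived from the relative orbital dynamics, which this example deliberately omits.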

3. Simple Pursuit–Evasion Problem Guidance Law Design

3.1. Form of Optimal Guidance Law

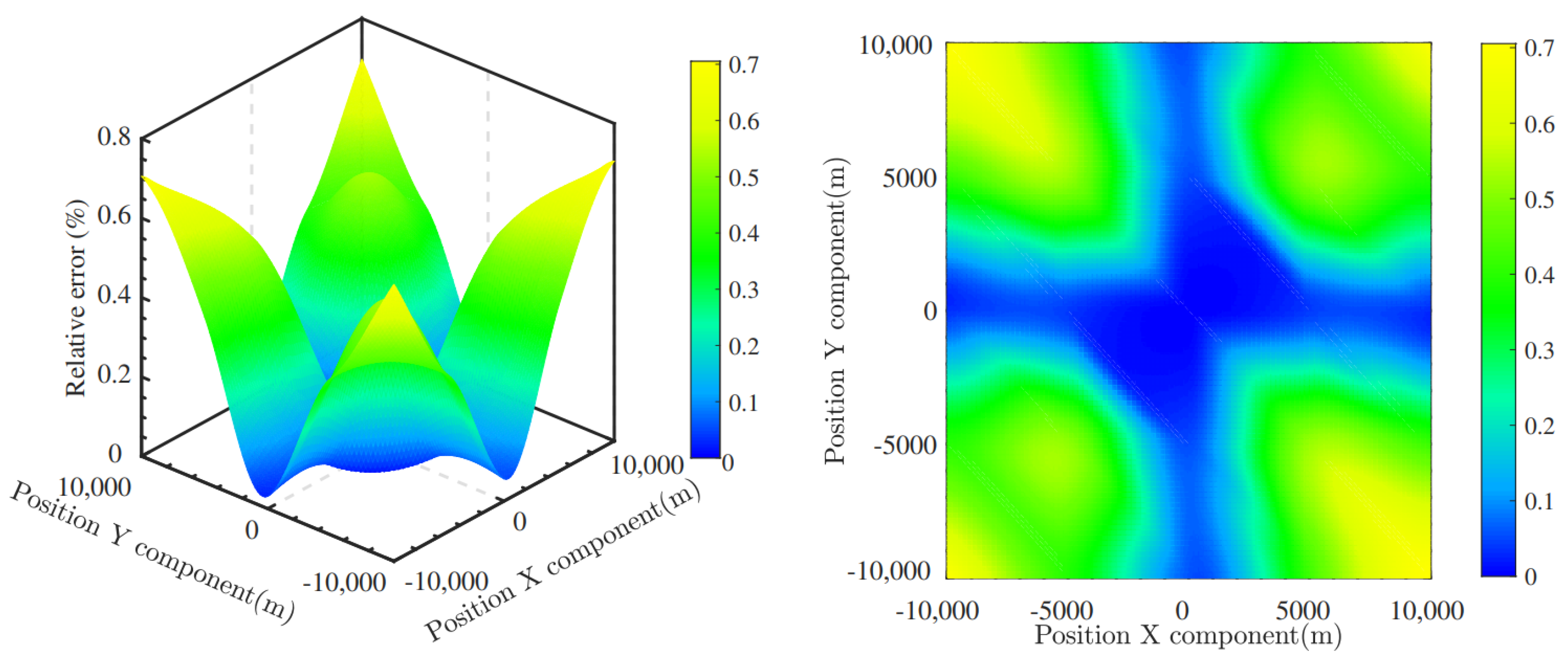

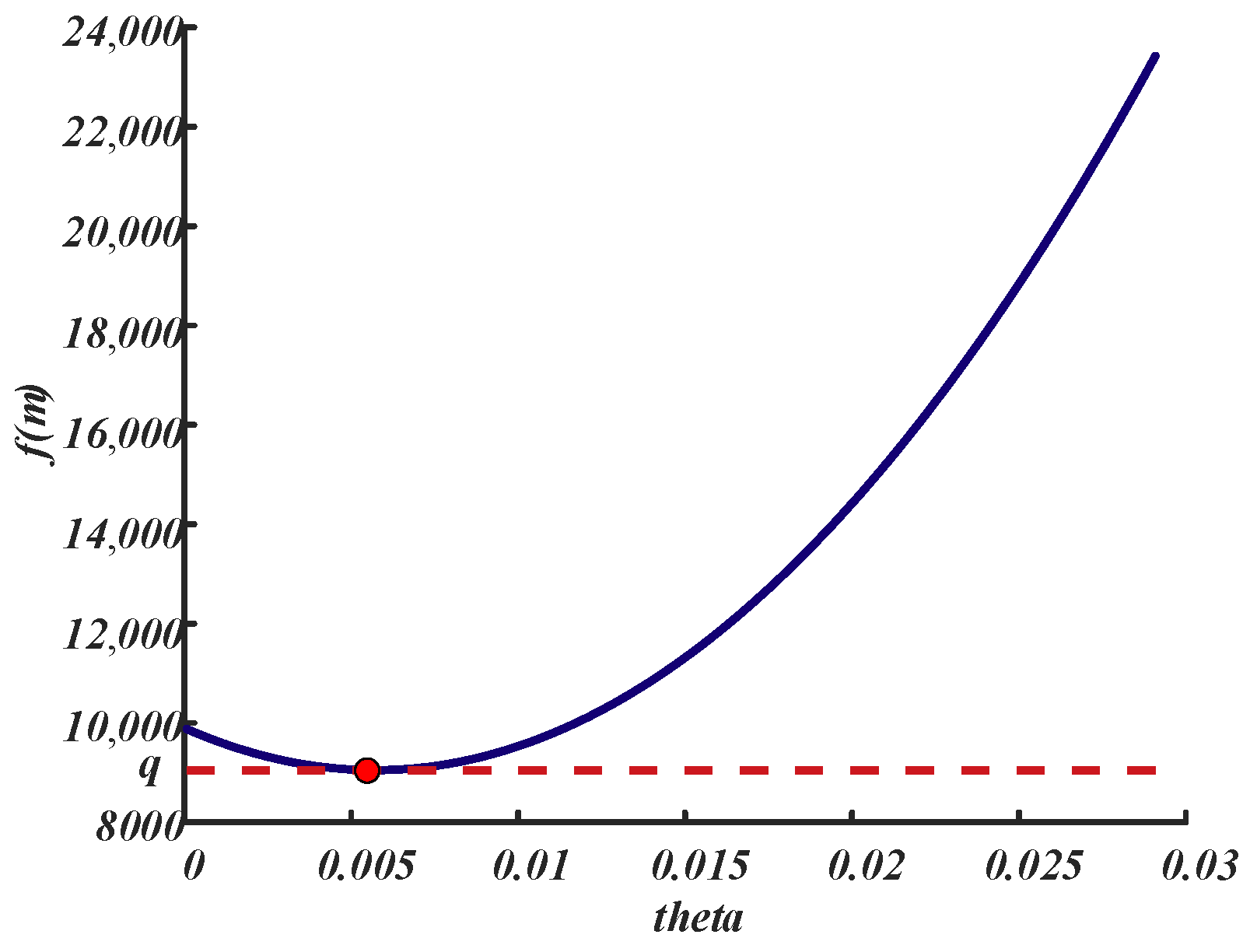

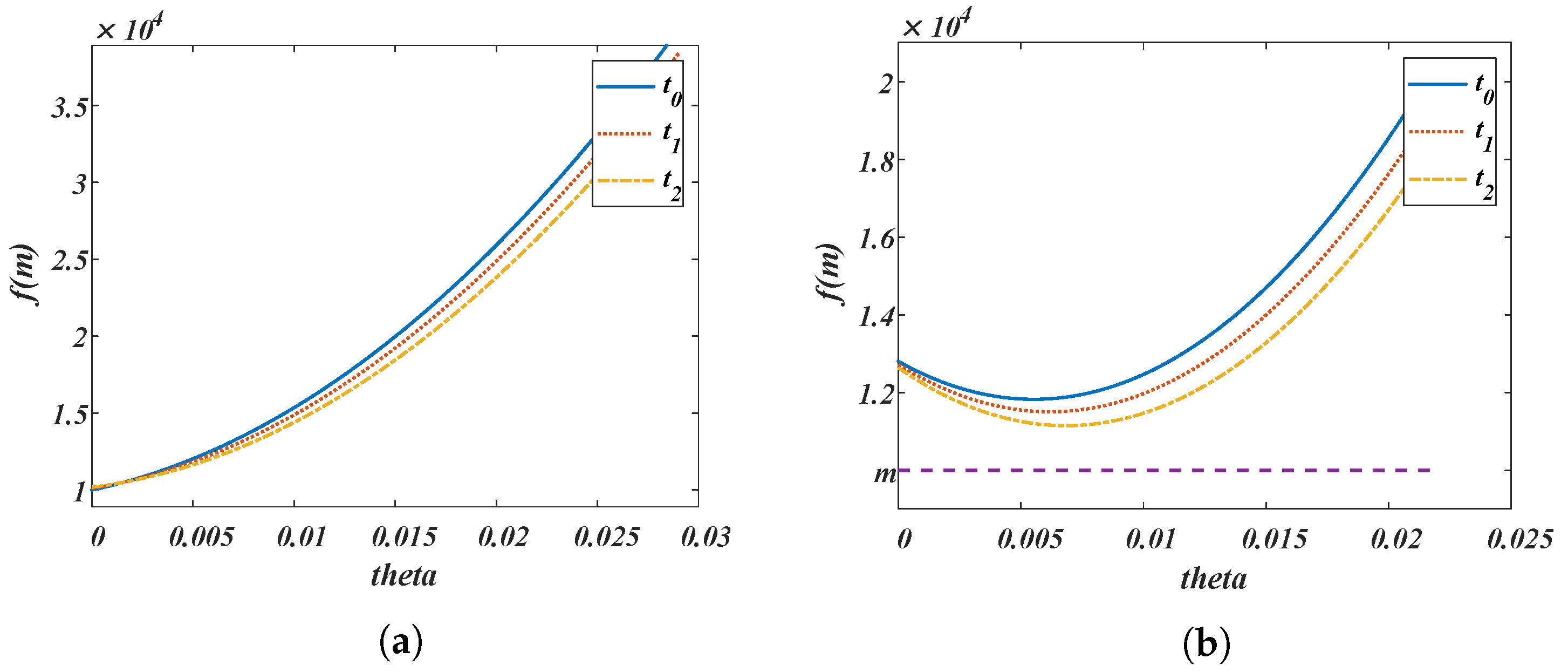

3.2. Solving for Time-to-Go

4. Pursuit–Evasion–Defense Problem Guidance Law Design

4.1. Conservative Guidance Law

4.2. Radical Guidance Law

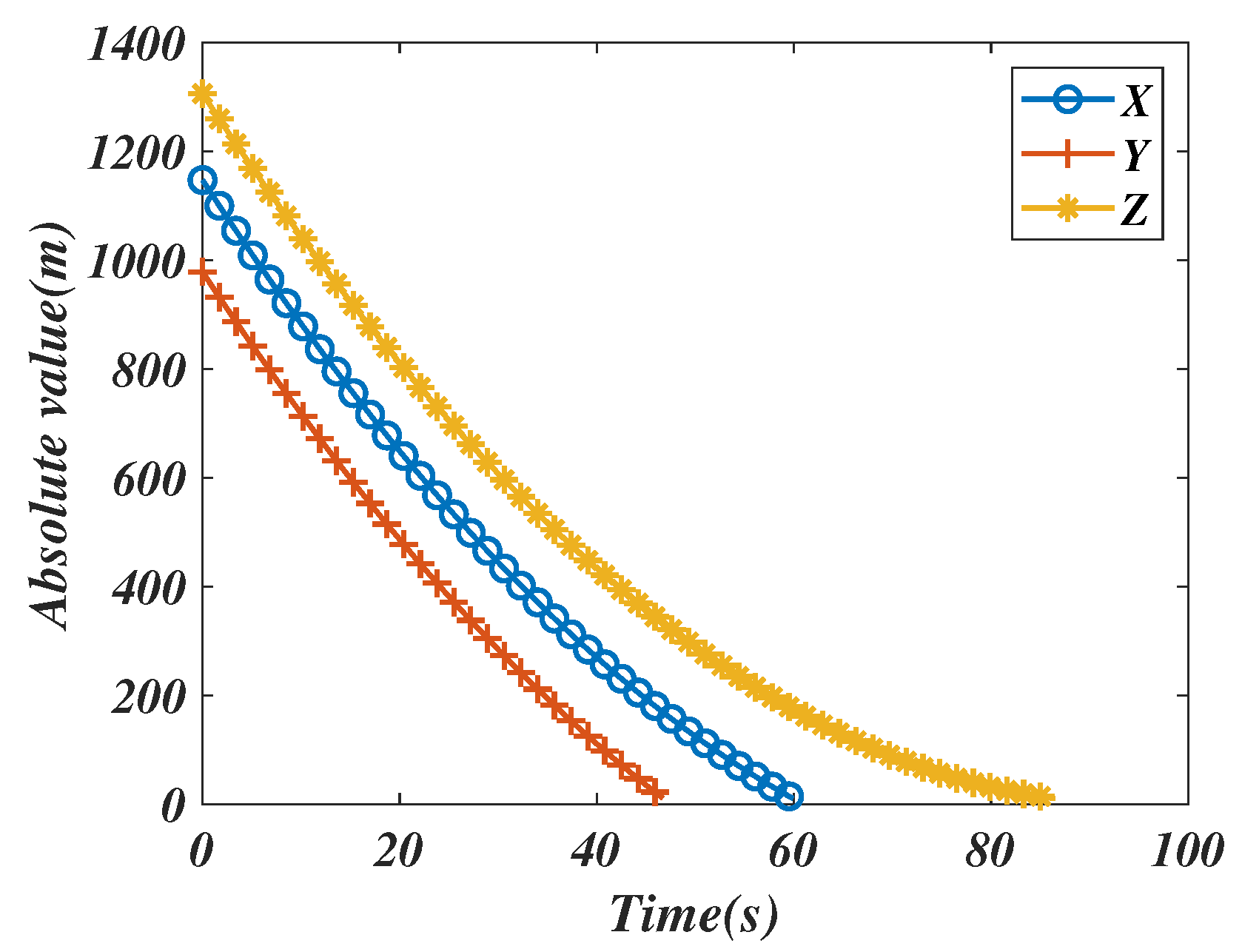

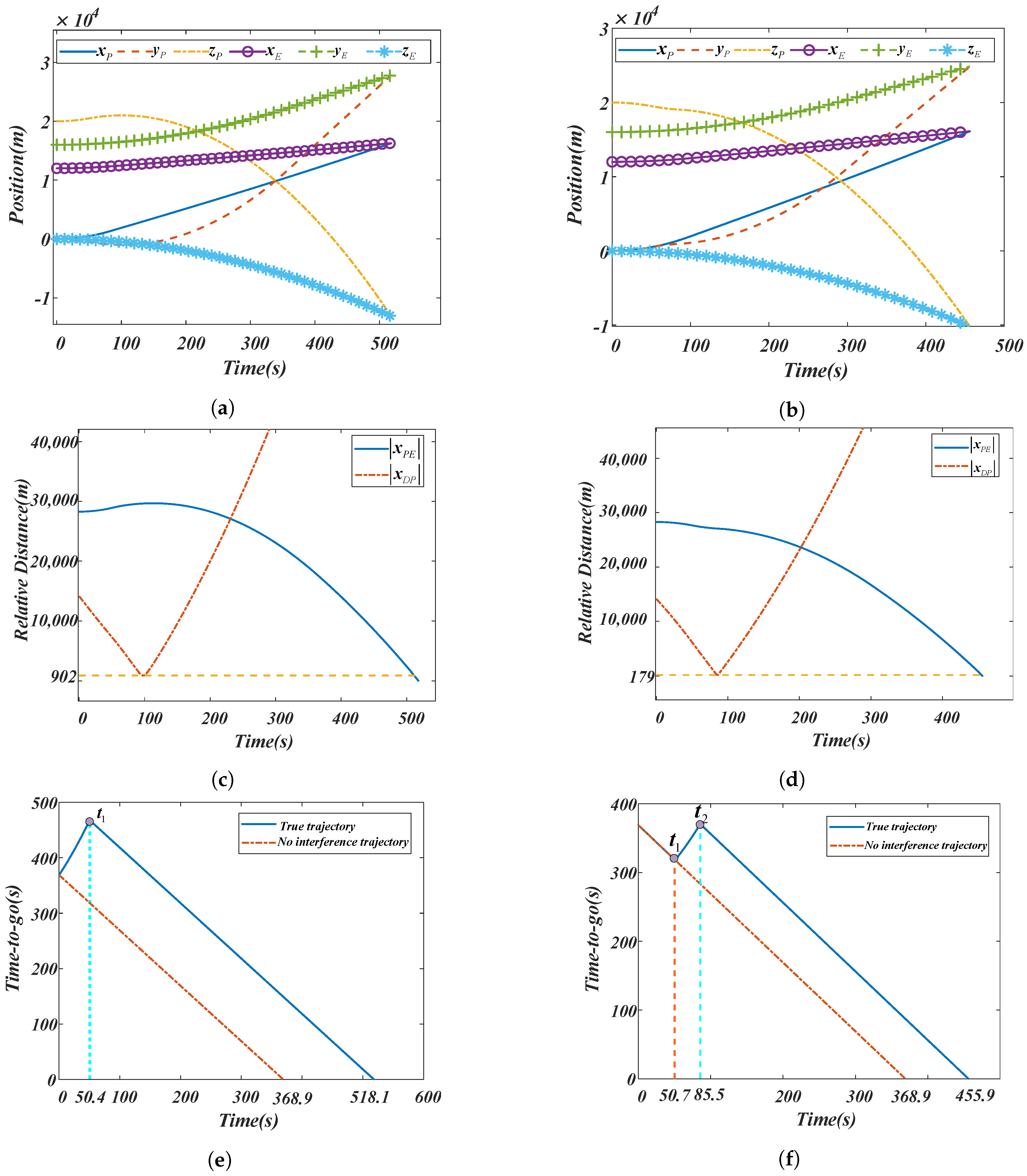

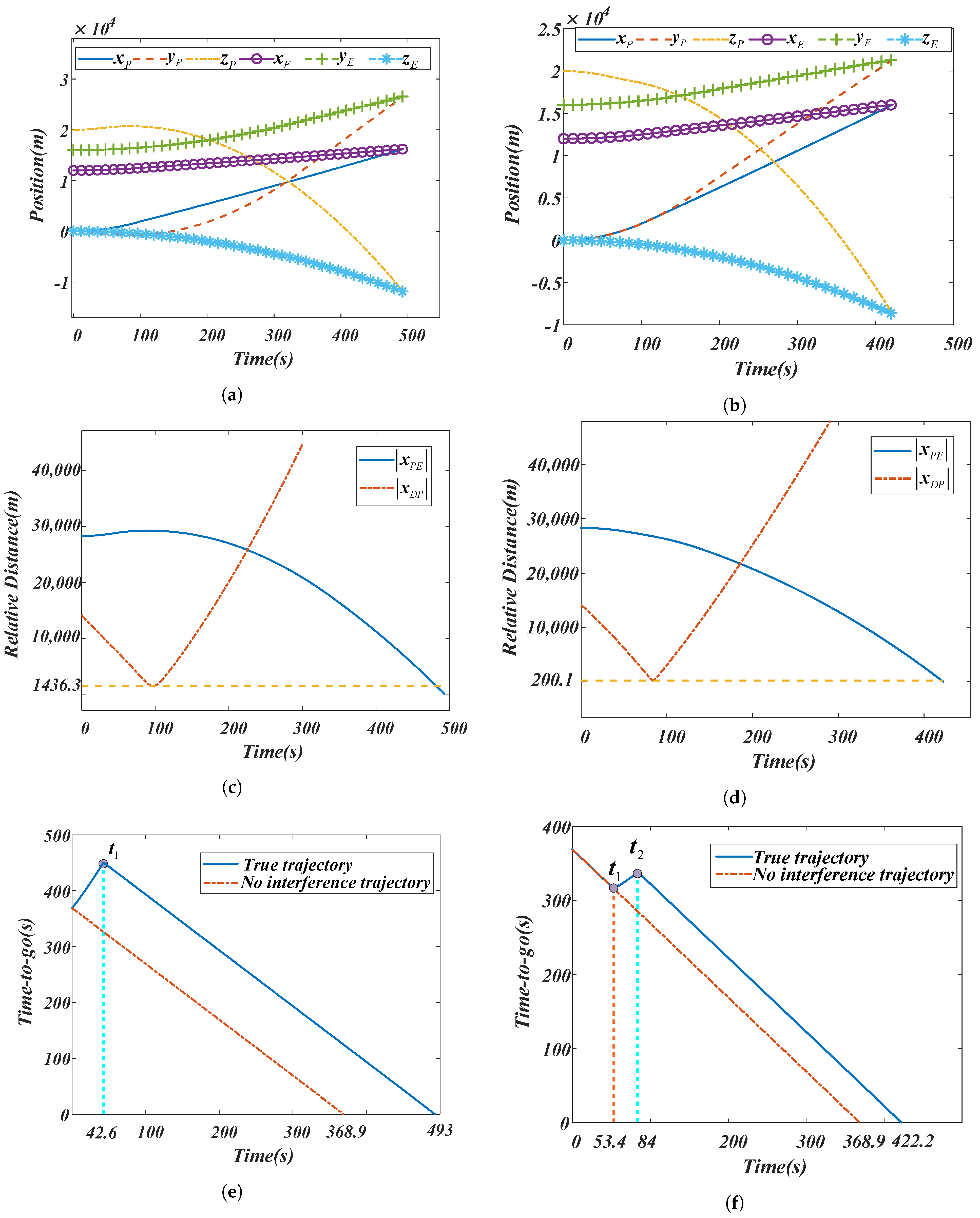

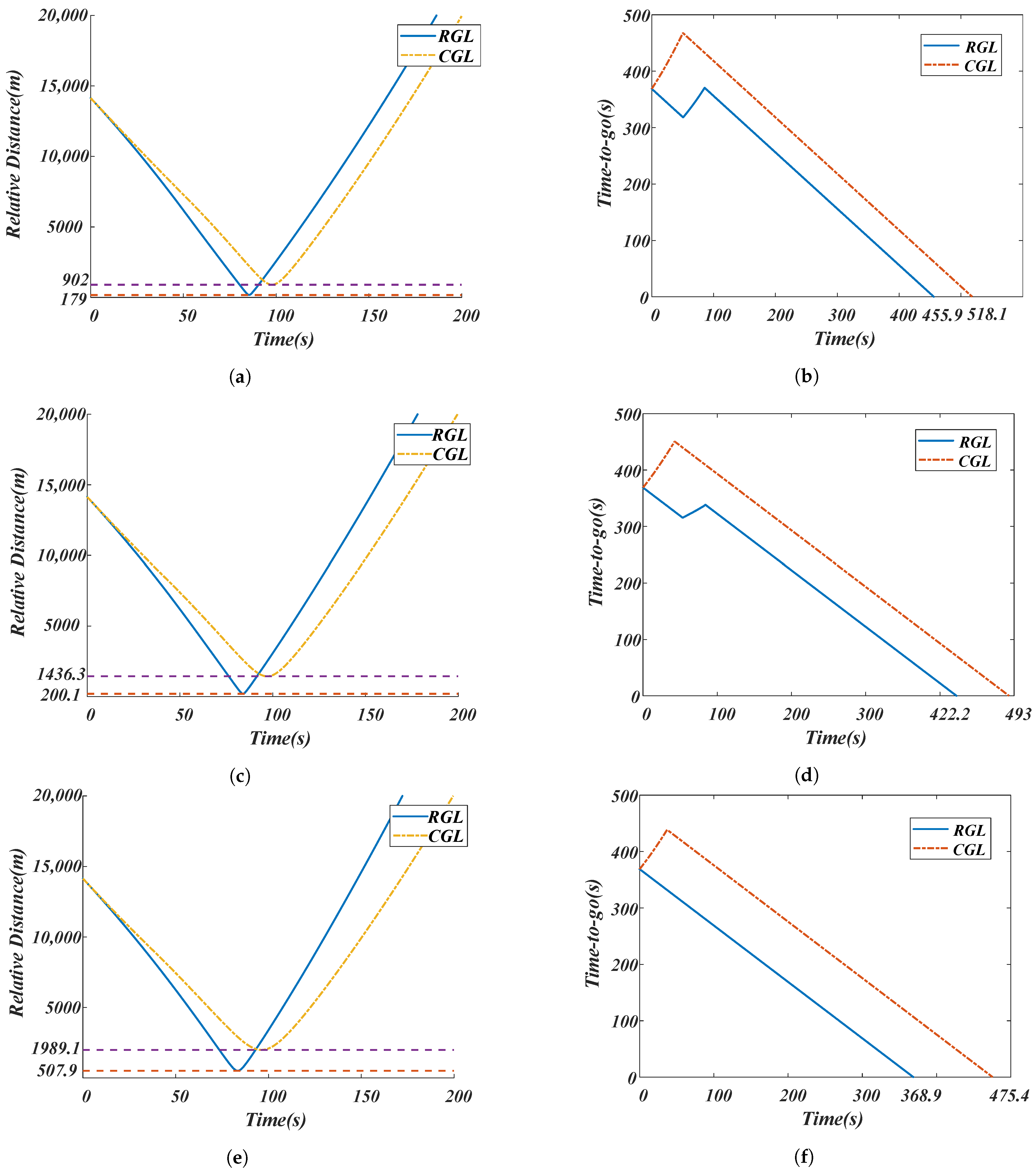

5. Simulations and Discussions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, Y.; Liu, T.; Wei, C.; Zhang, R.; Gu, H. Two Hierarchical Guidance Laws of Pursuer in Orbital Pursuit–Evasion–Defense Game. Aerospace 2023, 10, 668. https://doi.org/10.3390/aerospace10080668


Chicago/Turabian style: Wei, Yongshang, Tianxi Liu, Cheng Wei, Ruixiong Zhang, and Haiyu Gu. 2023. "Two Hierarchical Guidance Laws of Pursuer in Orbital Pursuit–Evasion–Defense Game." Aerospace 10, no. 8: 668. https://doi.org/10.3390/aerospace10080668
