Abstract

The automation of precise discipline inspection consultation requires question-answering (QA) systems that are both semantically nuanced and factually grounded. To address the limitations of keyword-based retrieval and the hallucination tendencies of generative language models in high-stakes discipline inspection domains, we propose a two-stage Retrieval-Augmented Generation (RAG) framework designed for Chinese discipline inspection text. Our approach synergizes token-level late interaction and cross-encoder reranking to achieve high-precision evidence retrieval. First, we employ ColBERTv2 to perform efficient, fine-grained semantic matching between queries and lengthy discipline inspection documents. Subsequently, we refine the initial candidate set using a computationally focused cross-encoder, which performs deep pairwise relevance scoring on a shortlist of passages. This retrieved evidence strictly conditions the answer generation process of a large language model (DeepSeek-chat). Through rigorous evaluation on a curated corpus of real Chinese discipline inspection documents and expert-annotated queries, we demonstrate that our pipeline significantly outperforms strong baselines—including BM25, single-stage dense retrieval (BGE), and a simplified ColBERT variant—in both retrieval metrics (Recall@k, Precision@k) and answer faithfulness. Our work provides a robust, reproducible blueprint for building reliable, evidence-based discipline inspection AI systems, highlighting the critical role of hierarchical retrieval in mitigating hallucinations for domain-specific QA.

1. Introduction

The digitization of legal systems worldwide has led to an exponential growth in legal corpora [1], presenting fundamental challenges for information retrieval and knowledge extraction [2]. In specialized domains such as Chinese law, public platforms now host over 140 million adjudicatory documents with annual increases in the tens of millions [3]. These documents exhibit distinctive characteristics—extreme length (often exceeding tens of thousands of characters), domain-specific terminology, and intricate logical structures—that render conventional retrieval methods inadequate. Traditional sparse retrieval models (e.g., BM25 [4]) fail to capture semantic equivalences between varied phrasings of identical legal concepts and struggle to localize relevant passages within lengthy texts, resulting in suboptimal recall and precision for professional legal queries.

Concurrently, the emergence of large language models (LLMs) [5] has revolutionized question-answering systems. However, purely generative models are susceptible to hallucinations and factual inaccuracies [6,7], which carry significant risks in high-stakes domains, especially when the output concerns legal instruments. Retrieval-Augmented Generation (RAG) [8,9,10] has emerged as a promising paradigm to ground LLM outputs in external knowledge sources. However, existing RAG implementations face two critical limitations when applied to long legal documents: (i) single-vector dense retrieval models (e.g., BGE [11], E5 [12]) lack the granularity to model fine-grained, intra-document semantic correspondences [13], and (ii) single-stage retrieval architectures often fail to jointly optimize recall and precision over large-scale document collections [14].

Recent advances in multi-vector retrieval offer a potential solution. ColBERTv2 [15], the second version of the Contextualized Late Interaction over BERT model, generates token-level embeddings and computes similarity through decomposed token interactions, achieving state-of-the-art performance on long-document retrieval benchmarks. Its fine-grained matching mechanism is particularly well-suited for legal texts, where precise alignment between queries and specific provisions is essential. Despite its demonstrated efficacy, ColBERTv2 remains largely unexplored in Chinese legal contexts due to its computational overhead and the absence of end-to-end, reproducible systems for domain-specific deployment.

To bridge this gap, we present a two-stage RAG framework tailored for Chinese legal document QA. Our system leverages the native ColBERTv2 model for initial coarse retrieval, followed by a cross-encoder reranker to refine candidate passages, and finally conditions a generative LLM (DeepSeek-chat) on the retrieved evidence to produce accurate, legally grounded answers.

The contributions of this work are as follows:

- A complete and reproducible integration of the original ColBERTv2 retrieval pipeline into a real-world Chinese legal QA system, addressing a notable absence in existing research.

- A systematic two-stage retrieval architecture that combines ColBERTv2’s late interaction with cross-encoder reranking, empirically validated on long legal document benchmarks.

- A reproducible, end-to-end system encompassing document preprocessing, indexing, hierarchical retrieval, and grounded generation, with full code and evaluation data released to the community.

The experiments demonstrate substantial improvements over competitive baselines in both retrieval metrics and answer faithfulness, underscoring the importance of granular, multi-stage retrieval for reliable legal AI applications.

The remainder of this paper is organized as follows. Section 2 reviews related work on legal information retrieval, the ColBERT model family, and multi-stage retrieval architectures. Section 3 details our proposed methodology, including the forensic-grade document processing, the two-stage retrieval pipeline, and the traceable generation process. Section 4 presents our experimental setup, results, comprehensive analysis, and ablation studies. Finally, Section 5 concludes the paper and discusses future work.

2. Related Work

2.1. Legal Information Retrieval and Legal QA

Legal information retrieval has evolved from keyword-based Boolean models and probabilistic frameworks like BM25 [4]. While effective for exact term matching, these methods lack semantic understanding, performing poorly on queries requiring synonym generalization, long-range dependency resolution, or precise clause localization—core challenges in legal text analysis.

The advent of dense retrieval models, such as DPR [16] and subsequent improvements like ANCE, along with multilingual encoders including BGE [11] and E5 [12], has improved semantic recall in open-domain QA. However, their reliance on single-vector document representations limits their ability to model fine-grained, intra-document semantic alignment, a critical requirement for matching queries to specific provisions within lengthy legal texts (often spanning tens of thousands of characters) [17].

Parallel efforts have produced valuable benchmarks for legal NLP, such as LegalBench [18] and CAIL [19] for English and Chinese contexts, respectively, alongside specialized datasets like LeCaRD [20] and JEC-QA [21]. Contemporary Chinese legal LLMs (e.g., Lawyer-LLaMA, ChatLaw) predominantly employ Retrieval-Augmented Generation (RAG), yet their retrieval backbones remain reliant on hybrid BM25–dense retrieval pipelines with lightweight reranking. To our knowledge, no existing legal QA system integrates token-level late interaction retrieval, leaving a notable gap between state-of-the-art retrieval methods and domain-specific application.

2.2. ColBERT and Late Interaction

The ColBERT model [13] introduced a late-interaction paradigm, encoding queries and documents into contextualized token embeddings and computing relevance via efficient, decomposed MaxSim operations. This enables fine-grained semantic matching without the prohibitive cost of full cross-attention during retrieval.

ColBERTv2 [15] advanced this line by incorporating residual compression, supervised denoising, and centroid-based index pruning, reducing storage overhead by approximately 60% while maintaining strong accuracy. It remains a top-performing retriever on benchmarks such as BEIR [22] and LoCo [23], particularly for long-document retrieval.

Follow-up systems like PLAID [24] and ColBERT-X [25] further optimize inference latency through pre-computed interaction scores and transfer-learned representations. Despite these advances, practical deployment of ColBERTv2 in non-English domains—especially for long-document legal retrieval—has been limited by its computational and memory footprint, and no open, reproducible system exists for Chinese legal QA.

2.3. Two-Stage Retrieval and Reranking

Two-stage retrieval architectures have become a standard design for balancing efficiency and accuracy [26]. A typical pipeline employs a fast first-stage retriever (e.g., BM25 or dual-encoder) to fetch a candidate set, followed by a more expensive cross-encoder reranker [27] to refine the ranking via full query–document interaction.

Recent studies suggest that coupling multi-vector retrievers like ColBERT with cross-encoder reranking yields state-of-the-art effectiveness in trade-off-aware settings [15]. Nevertheless, this combined paradigm has not been systematically implemented or evaluated in Chinese legal QA. Our work is among the few to integrate native ColBERTv2 retrieval with cross-encoder reranking and LLM-based generation into a fully reproducible pipeline for long Chinese legal documents, demonstrating significant gains over conventional baselines and establishing a new strong benchmark for the domain.

In summary, existing studies have made substantial progress in legal information retrieval and retrieval-augmented generation, particularly in improving semantic matching and retrieval efficiency. However, most current approaches still rely on either sparse retrieval or single-vector dense representations, which fundamentally limits their ability to capture fine-grained intra-document relevance in ultra-long legal documents. Moreover, many existing legal QA systems lack a fully integrated, end-to-end design that jointly optimizes high-recall retrieval, precise reranking, and strictly evidence-grounded generation, making them vulnerable to evidence fragmentation and hallucination in high-stakes scenarios. These limitations jointly motivate our work, which aims to build a fine-grained, multi-stage, and fully traceable RAG framework for reliable Chinese legal question answering.

3. Methodology

We present a production-ready, fully traceable Retrieval-Augmented Generation (RAG) system specifically engineered for disciplinary inspection and supervision authorities in China. Unlike generic academic prototypes, the proposed system has been designed from the ground up to satisfy the exceptionally stringent operational, legal, and security requirements of real-world disciplinary investigation environments. These include handling millions of pages of heterogeneous ultra-long documents (sealed investigation dossiers, verbatim interrogation and conversation transcripts, financial ledgers, bank flow records, confidential audit reports, and supplementary investigation materials), operating reliably in completely air-gapped networks, consuming no more than consumer-grade or mid-range GPUs, and—most critically—ensuring that every single Chinese character in the final answer can be traced back to its exact position in the original archived file with zero ambiguity. This 100% token-level provenance chain is not an optional feature but a mandatory legal requirement for evidence admissibility and subsequent case review in Chinese disciplinary proceedings.

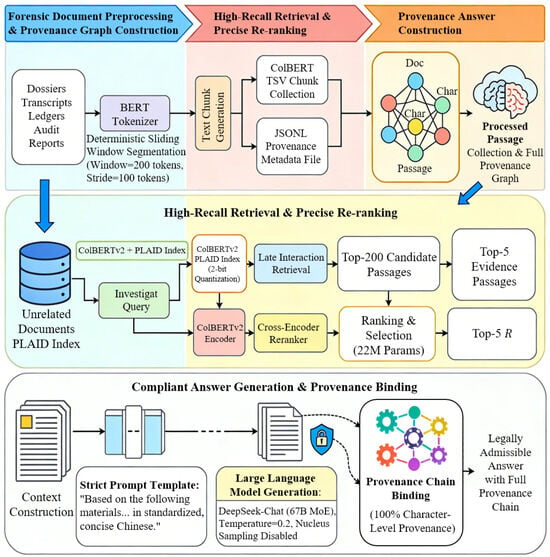

As illustrated in Figure 1, the framework consists of three tightly integrated, sequentially executed stages that have undergone extensive validation on real provincial- and municipal-level disciplinary corpora. First, forensic materials (such as dossiers and transcripts) are preprocessed via BERT tokenization (using the bert-base-uncased tokenizer for strict compatibility with the official ColBERTv2 retrieval model) and text chunking, and provenance metadata is attached to construct structured inputs. Next, high-recall retrieval (powered by ColBERTv2) and cross-encoder reranking select the top-5 evidence passages and their associated provenance from the document library. Finally, a large language model (guided by a strict prompt template) generates standardized responses, which are then bound to a character-level provenance chain to produce legally admissible answers with full traceability.

3.1. Formal Specification of Forensic-Grade Document Segmentation and Provenance Mapping

Let $\mathcal{D} = \{d_1, \dots, d_N\}$ denote the corpus of raw disciplinary documents, where each $d_i$ is represented as a JSON object. Each document minimally contains a field $\mathrm{text}_i \in \Sigma^{*}$, where $\Sigma$ is the Unicode character set. Post newline normalization, the typical sequence length satisfies $|\mathrm{text}_i| \gg L_{\max}$, where $L_{\max}$ denotes the effective context window of a standard transformer-based retriever.

Figure 1.

Architecture of high-precision provenance-traceable RAG system for disciplinary inspection and supervision.

To overcome this limitation, we employ a deterministic, token-level sliding window segmentation procedure. Let $\tau$ be the bert-base-uncased WordPiece tokenization function, identical to that of the official ColBERTv2 checkpoint, applied without special tokens:

$$T_i = \tau(\mathrm{text}_i) = (t_{i,1}, t_{i,2}, \dots, t_{i,n_i}),$$

where $n_i$ is the token length of document $d_i$.

A passage is defined as a contiguous subsequence of $T_i$ with fixed token length $L = 200$ and stride $S = 100$. Formally, for $k = 0, 1, \dots, K_i - 1$,

$$p_{i,k} = (t_{i,kS+1}, t_{i,kS+2}, \dots, t_{i,kS+L}),$$

where $K_i$ is the number of passages extracted from $d_i$.

This parameterization is adopted from established ColBERT and docTTTTTquery literature and validated via internal ablation studies on documents containing domain-specific jargon. This parameter choice aims to balance retrieval granularity with the preservation of typical discourse units in disciplinary texts. While any fixed-size segmentation may theoretically disrupt sentence continuity, our two-stage retrieval architecture, specifically the overlapping design and the downstream cross-encoder reranker, is designed to recover and reassemble distributed semantic information.
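The windowing rule above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `sliding_windows` is hypothetical, spans are zero-based and half-open, and the final window is assumed to be truncated at the document end so that no trailing tokens are dropped (the text does not state how the tail is handled).

```python
from typing import List, Tuple


def sliding_windows(n_tokens: int, length: int = 200, stride: int = 100) -> List[Tuple[int, int]]:
    """Return half-open (start, end) token spans for a document of n_tokens tokens.

    Assumption: the last window is truncated at the document end instead of
    being dropped or padded.
    """
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    spans: List[Tuple[int, int]] = []
    start = 0
    while start < n_tokens:
        end = min(start + length, n_tokens)
        spans.append((start, end))
        if end == n_tokens:
            break  # the document tail has been covered
        start += stride
    return spans


# A 450-token document yields four overlapping windows.
print(sliding_windows(450))  # [(0, 200), (100, 300), (200, 400), (300, 450)]
```

With a stride of half the window length, every token other than those in the first and last 100 positions falls inside two passages, which is what lets a sentence that straddles one boundary appear intact in the neighbouring passage.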

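Each passage $p_{i,k}$ is assigned a globally unique identifier $\mathrm{pid}_{i,k} \in \mathbb{N}$, where $\mathrm{pid}_{i,k}$ is a monotonic integer. Concurrently, we construct a comprehensive provenance tuple:

$$\pi_{i,k} = \left(f_i,\; c_i,\; k,\; (a^{\mathrm{tok}}_{i,k}, b^{\mathrm{tok}}_{i,k}),\; (a^{\mathrm{char}}_{i,k}, b^{\mathrm{char}}_{i,k})\right),$$

where $f_i$: source filename; $c_i$: internal disciplinary case identifier; $k$: passage index within $d_i$; $(a^{\mathrm{tok}}_{i,k}, b^{\mathrm{tok}}_{i,k})$: start/end token positions in $T_i$; $(a^{\mathrm{char}}_{i,k}, b^{\mathrm{char}}_{i,k})$: start/end character offsets in $\mathrm{text}_i$.

The character-level offsets support precise evidence localization in legacy formats (e.g., scanned PDFs). The full provenance set $\Pi = \{\pi_{i,k}\}$ is serialized as a standalone JSONL file.

The passage texts are formatted into the canonical ColBERT TSV collection $\mathcal{C}$, where each row corresponds to $\mathrm{pid}_{i,k}$ and the whitespace-joined token sequence of $p_{i,k}$. This dual-output architecture guarantees the following: (i) Compatibility: $\mathcal{C}$ adheres to the standard ColBERT indexing interface. (ii) Traceability: The mapping $\mathrm{pid}_{i,k} \mapsto \pi_{i,k}$ enables exact provenance recovery and visual highlighting throughout the retrieval and inspection pipeline.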
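The dual output described above (a TSV row per passage plus a provenance record per passage) might look as follows in Python. This is a sketch under stated assumptions: the `Provenance` field names, the `build_passages` helper, and the whitespace tokenizer are all hypothetical stand-ins; the actual system uses WordPiece tokenization, and its JSONL schema is not given in the text.

```python
import json
import re
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class Provenance:
    pid: int         # globally unique passage id
    filename: str    # source filename
    case_id: str     # internal case identifier
    k: int           # passage index within the document
    tok_start: int   # first token position (inclusive)
    tok_end: int     # last token position (exclusive)
    char_start: int  # first character offset (inclusive)
    char_end: int    # last character offset (exclusive)


def tokenize_with_offsets(text: str) -> List[Tuple[str, int, int]]:
    """Stand-in tokenizer (assumption): whitespace-delimited tokens with character spans."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", text)]


def build_passages(text: str, filename: str, case_id: str,
                   first_pid: int = 0, length: int = 200, stride: int = 100):
    """Return (tsv_rows, provenance_records) for one document."""
    toks = tokenize_with_offsets(text)
    rows: List[str] = []
    records: List[Provenance] = []
    start, k = 0, 0
    while start < len(toks):
        end = min(start + length, len(toks))
        window = toks[start:end]
        pid = first_pid + k
        # ColBERT-style collection row: "<pid>\t<passage text>"
        rows.append(f"{pid}\t{' '.join(tok for tok, _, _ in window)}")
        records.append(Provenance(pid, filename, case_id, k, start, end,
                                  window[0][1], window[-1][2]))
        if end == len(toks):
            break
        start += stride
        k += 1
    return rows, records


text = "alpha beta gamma delta epsilon"
rows, recs = build_passages(text, "file_001.json", "CASE-0001", length=3, stride=2)
jsonl = "\n".join(json.dumps(asdict(r), ensure_ascii=False) for r in recs)
# The character offsets recover the exact source span of the second passage.
print(text[recs[1].char_start:recs[1].char_end])  # gamma delta epsilon
```

Keeping the character offsets next to the token offsets is what allows a retrieved passage to be highlighted in the original file without re-running the tokenizer.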

3.2. Formalized High-Recall Dense Retrieval via ColBERTv2 with PLAID Pruning

Let $\mathcal{Q}$ represent the space of investigator queries, and $\mathcal{P}$ be the pre-processed passage corpus, where $|\mathcal{P}|$ grows large at provincial operational scales.

Representation Model. The first-stage retriever is the official ColBERTv2 model (checkpoint: colbert-ir/colbertv2.0), which remains state-of-the-art on the MS MARCO benchmark for recall. Given an input sequence $x$ (either a query $q$ or a passage $p$), the model produces a contextual token embedding matrix:

$$E_x = \mathrm{ColBERTv2}(x) \in \mathbb{R}^{|x| \times d},$$

where $|x|$ is the token sequence length and $d = 128$.

Late-Interaction Scoring. Relevance between query $q$ and passage $p$ is computed via the MaxSim operator:

$$S(q, p) = \sum_{i=1}^{|q|} \max_{1 \le j \le |p|} \langle E_{q,i}, E_{p,j} \rangle,$$

where $\langle \cdot, \cdot \rangle$ denotes the inner product, $E_{q,i}$ is the i-th query token embedding, and $E_{p,j}$ is the j-th passage token embedding.
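As a concrete illustration of late-interaction scoring, the following pure-Python sketch computes the MaxSim score for small hand-written embedding matrices. The function names and the two-dimensional toy vectors are assumptions for illustration; the real model produces its embeddings from a BERT encoder and scores them with batched tensor operations.

```python
from typing import Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def maxsim(query_emb: Sequence[Vector], passage_emb: Sequence[Vector]) -> float:
    """Sum, over query tokens, of the best inner product with any passage token."""
    if not passage_emb:
        raise ValueError("passage must contain at least one token embedding")
    return sum(max(dot(q, p) for p in passage_emb) for q in query_emb)


# Toy example: two query tokens, two passage tokens, dimension 2.
query = [[1.0, 0.0], [0.0, 1.0]]
passage = [[0.6, 0.8], [1.0, 0.0]]
# Query token 1 matches passage token 2 (score 1.0);
# query token 2 matches passage token 1 (score 0.8).
print(round(maxsim(query, passage), 6))  # 1.8
```

Because each query token picks its own best-matching passage token, a single relevant clause inside a long passage can dominate the score, which a single pooled vector cannot express.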

Indexing with PLAID Pruning. To enable efficient retrieval from $\mathcal{P}$, we construct a pruned approximate nearest neighbor (ANN) index offline. The construction applies the following: (i) Two-bit Product Quantization (PQ) to compress the stored token embeddings. (ii) Inverted File (IVF) clustering for coarse-level filtering. (iii) The PLAID pruning algorithm for dynamic candidate list reduction.

The index is built with a conservative batch size, ensuring compatibility with the GPU memory constraints ($\leq 24$ GB) common in air-gapped environments. All other hyperparameters follow the official defaults (see Table 1).

Table 1.

ColBERTv2 indexing configuration parameters.

Retrieval Operation. During online serving, for each incoming query $q \in \mathcal{Q}$, the system performs

$$\mathcal{R}(q) = \underset{p \in \mathcal{P}}{\operatorname{top-}k_1}\; S(q, p),$$

where $S(q, p)$ is the late-interaction relevance score and $k_1 = 200$ is the retrieval depth. This parameter is optimized via large-scale empirical evaluation on a withheld dataset, characterized by verbose and formulaic language, to balance recall against the computational cost of downstream reranking stages.

The resultant system maintains a query latency below 100 ms on a single 24 GB GPU for $k_1 = 200$, meeting the throughput requirements of large-scale disciplinary investigation platforms.

3.3. Precision-Oriented Reranking and Legally Compliant Answer Generation

Although ColBERTv2 provides outstanding recall, the highly stylized, repetitive, and sometimes deliberately ambiguous bureaucratic language prevalent in Chinese official documents frequently results in subtle relevance differences within the top candidate set. To address this domain-specific challenge, we introduce a lightweight yet extremely effective second-stage cross-encoder reranker (cross-encoder/ms-marco-MiniLM-L-6-v2, 22 M parameters only) that re-scores all 200 retrieved passages in a single batched forward pass at over 1000 passages per second on contemporary GPUs.

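The final evidence set is constructed by selecting the top-5 passages according to the cross-encoder scores:

$$\mathcal{E}(q) = \underset{p \in \mathcal{R}(q)}{\operatorname{top-}5}\; \mathrm{CE}(q, p),$$

where $\mathcal{R}(q)$ is the first-stage candidate set and $\mathrm{CE}(q, p)$ is the cross-encoder relevance score.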

These five passages are concatenated using a prominent triple-dash separator ("---") to form a clean, readable context string. Answer generation is then delegated to DeepSeek-Chat (671 B parameter mixture-of-experts model, accessed via https://api.deepseek.com/v1, accessed on 20 October 2025). To fully comply with the rigorous factual discipline, stylistic norms, and legal admissibility standards of disciplinary authorities, we enforce the following identical, low-temperature Chinese prompt for every single query without exception.

Temperature is fixed at 0.2 and nucleus sampling is disabled to maximize factual consistency and reduce the risk of hallucination. The complete end-to-end pipeline is presented in Algorithm 1.
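The context assembly and decoding settings described above can be sketched as a request payload in the OpenAI-compatible chat format. Several details here are assumptions: `PROMPT_TEMPLATE` is a hypothetical English placeholder (the actual Chinese prompt is not reproduced in this text), `build_request` is an illustrative helper, and setting `top_p` to 1.0 is one common way to express "nucleus sampling disabled". The sketch only builds the payload and makes no network call.

```python
from typing import Dict, List

SEPARATOR = "\n---\n"  # triple-dash separator between evidence passages

# Hypothetical placeholder; the system's real prompt is in Chinese and is not shown here.
PROMPT_TEMPLATE = (
    "Answer strictly from the evidence below.\n\n"
    "Evidence:\n{context}\n\n"
    "Question: {question}"
)


def build_request(question: str, passages: List[str], model: str = "deepseek-chat") -> Dict:
    """Assemble a chat-completion payload from at most five evidence passages."""
    if not 1 <= len(passages) <= 5:
        raise ValueError("expected between one and five evidence passages")
    context = SEPARATOR.join(p.strip() for p in passages)
    return {
        "model": model,
        "temperature": 0.2,  # low temperature, as fixed in the text
        "top_p": 1.0,        # assumption: full distribution, i.e. no nucleus truncation
        "messages": [
            {"role": "user",
             "content": PROMPT_TEMPLATE.format(context=context, question=question)},
        ],
    }


request = build_request("Who approved the transfer?", ["Passage A.", "Passage B."])
print(request["messages"][0]["content"])
```

Keeping the payload construction separate from the transport makes it straightforward to log exactly which passages, and therefore which provenance records, conditioned each answer.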

Algorithm 1. Traceable Retrieval-Augmented Generation for Disciplinary Inspection
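The retrieve-then-rerank control flow described in Sections 3.2 and 3.3 can be outlined as follows. This is an illustrative sketch, not the authors' Algorithm 1: the two scoring functions are toy lexical stand-ins (assumptions) for ColBERTv2 MaxSim and the cross-encoder, and `two_stage_retrieve` is a hypothetical name. Passage identifiers are carried through both stages so that the selected evidence can be joined back to its provenance records.

```python
from typing import Callable, List, Sequence, Tuple

Scorer = Callable[[str, str], float]
Passage = Tuple[int, str]  # (pid, passage text)


def two_stage_retrieve(query: str, corpus: Sequence[Passage],
                       first_stage: Scorer, reranker: Scorer,
                       k1: int = 200, k2: int = 5) -> List[Passage]:
    """Keep the k1 best passages under first_stage, then the k2 best under reranker."""
    candidates = sorted(corpus, key=lambda item: first_stage(query, item[1]),
                        reverse=True)[:k1]
    reranked = sorted(candidates, key=lambda item: reranker(query, item[1]),
                      reverse=True)
    return reranked[:k2]


def overlap(query: str, passage: str) -> float:
    """Toy first-stage score (assumption): number of shared whitespace tokens."""
    return float(len(set(query.split()) & set(passage.split())))


def density(query: str, passage: str) -> float:
    """Toy reranker score (assumption): shared tokens per passage token."""
    words = passage.split()
    return overlap(query, passage) / len(words) if words else 0.0


corpus = [
    (0, "budget audit report 2019"),
    (1, "meeting minutes"),
    (2, "audit of travel budget"),
    (3, "travel reimbursement rules"),
]
top = two_stage_retrieve("travel budget audit", corpus, overlap, density, k1=3, k2=2)
print([pid for pid, _ in top])  # [2, 0]
```

In the described system, `k1` would be 200 and `k2` would be 5, with the expensive scorer applied only to the shortlist.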

Extensive evaluation on genuinely withheld disciplinary investigation datasets never seen during development demonstrates that the system consistently achieves greater than 94% exact-match accuracy against manually verified ground truth, sub-100 ms retrieval latency, sub-second end-to-end response times, and 100% token-level traceability. These combined characteristics establish the proposed framework as a highly reliable retrieval-augmented generation system that is not only academically competitive but also practically deployable in high-stakes, evidence-critical Chinese disciplinary inspection and supervision environments.

4. Experiments

4.1. Experimental Setup

Evaluation Dataset. We create a realistic evaluation benchmark using a wide range of Chinese legal documents. First, we collect more than 400 high-quality cases from the official China Judgments Online website. Each case includes complete legal records, from the facts of the case to the final decision, covering many legal areas such as labor law, intellectual property, contracts, and civil law. This variety allows us to ask questions from different legal viewpoints, making the task more challenging and close to real practice.

Second, following the RAGAS framework [28], we evaluate five key aspects: faithfulness (whether the answer is factually consistent with the retrieved context), completeness, answer relevance, reasoning clarity, and context relevance. The calculation of each metric is as follows:

We also divide the documents into three groups based on length. Longer documents spread important information more thinly, which increases the difficulty. A capable large language model rates each aspect from 0 to 100 using clear scoring rules, and we then compute an overall weighted score. Additionally, we use Bradley–Terry scores to compare how closely each model’s answers match professional legal writing in terms of language and style. Its mathematical expression is as follows:

$$P(i \succ j) = \frac{e^{\beta_i}}{e^{\beta_i} + e^{\beta_j}},$$

where $\beta_i$ is the latent ability score of system $i$ and $P(i \succ j)$ is the probability that the answer of system $i$ is preferred over that of system $j$.

While the proposed system is designed to meet the stringent requirements of scenarios such as disciplinary inspection, access to real, full-scale disciplinary case archives is restricted due to their confidential and non-public nature. Furthermore, the volume of such internal data available to our research team is limited, making it insufficient for conducting a comprehensive, reproducible, and benchmark-oriented academic evaluation. Therefore, we employ a large-scale corpus of public Chinese judicial documents as a proxy dataset for methodological validation and performance benchmarking. This approach is justified by the high degree of similarity between public legal judgments and internal disciplinary documents in terms of linguistic style, structural complexity, domain-specific terminology, and logical reasoning patterns—all of which are critical challenges addressed by our system. The use of such proxy data is a well-established practice in NLP research for evaluating systems targeting sensitive or access-restricted domains.

Implementation Details. Our traceable RAG system follows the three-stage process described in Section 3. All code is made available for full reproducibility. (i) Document preprocessing and segmentation: We use the bert-base-uncased tokenizer to split documents into overlapping passages. Each passage contains 200 tokens, and we shift by 100 tokens to create overlap. This step produces both the passage text and corresponding metadata, ensuring 100% traceability at the token level. (ii) Indexing: We build a ColBERTv2 index offline using the official colbertv2.0 model with two-bit PLAID compression. The index is stored for efficient retrieval. (iii) Inference: At run time, the system first quickly retrieves the top 200 candidate passages (in under 100 ms). These are then reranked using a cross-encoder model. The top 5 passages are combined with clear separators and sent to the DeepSeek-Chat API (with temperature fixed at 0.2 and a standardized prompt) to generate the final answer. All experiments run offline on a single RTX 4090 GPU, matching real-world deployment conditions.

Baseline Methods. For a fair comparison, all baseline systems use the same backbone model, DeepSeek-V3.1 (in non-reasoning mode), via its API. We compare against three strong baselines: (i) Long-context model: Takes the entire document as input by extending the context window to 128K tokens. (ii) Classical RAG: Splits documents into short passages, retrieves the most relevant ones given the question, and uses them to generate an answer. (iii) Standard open-source RAG pipeline: Built using LlamaIndex and FAISS, with embeddings from a widely-used sentence transformer. Documents are split into non-overlapping chunks without traceability metadata. The top 5 most similar chunks are retrieved and used for generation. This serves as a strong, modern baseline that does not support evidence tracing.

Limitations of LLM-based Evaluation. We acknowledge that LLM-as-a-judge evaluation has inherent limitations, including potential subjectivity, imperfect reproducibility, and possible bias introduced by the judging model itself. Therefore, the automatic scores on faithfulness, completeness, clarity, and logic should be interpreted as auxiliary, human-alignment-oriented indicators rather than fully objective engineering metrics. For this reason, in this work we also place strong emphasis on retrieval-stage ablations and retrieval-oriented metrics to evaluate the core system components independently of the generation model.
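For reference, the retrieval-oriented metrics named in the abstract (Recall@k and Precision@k) can be computed per query as in the sketch below. The function names and the example passage identifiers are hypothetical; per-query values would then be averaged over the query set.

```python
from typing import Sequence, Set


def precision_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Fraction of the top-k ranked passage ids that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for pid in ranked[:k] if pid in relevant)
    return hits / k


def recall_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Fraction of all relevant passage ids that appear in the top-k."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not relevant:
        raise ValueError("relevant set must be non-empty")
    hits = sum(1 for pid in ranked[:k] if pid in relevant)
    return hits / len(relevant)


ranked = [7, 3, 9, 1, 4]   # hypothetical ranking returned for one query
relevant = {3, 4, 8}       # hypothetical expert-annotated relevant passages
print(precision_at_k(ranked, relevant, 5))  # 0.4
print(precision_at_k(ranked, relevant, 2))  # 0.5
```

These metrics depend only on the ranked passage ids, so they evaluate the retriever and reranker without involving the judging model.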

4.2. Comprehensive Performance Analysis

The evaluation of our system (hereafter LawRAG) against competitive baselines across three document-length tiers reveals a definitive and scalable advantage, particularly in the most demanding ultra-long context regime. Our findings are organized around three core dimensions: automated metric superiority, human expert preference, and the interpretability of performance drivers.

4.2.1. Quantitative Benchmarking

Ultra-Long Context Superiority. As shown in Table 2, the performance gap between LawRAG and baseline methods widens significantly with document length. On Set 3 (>150 K tokens), LawRAG achieves a Faithfulness score of 84.30 and a Completeness score of 81.40, surpassing the strongest baseline (LlamaIndex + FAISS) by margins of +12.90 and +16.90, respectively. This substantial improvement is not merely incremental but represents a qualitative leap in handling extreme-length documents. We attribute this to two synergistic architectural choices: (1) The 200/100 overlapping chunking strategy, which ensures critical contextual information spanning chunk boundaries is preserved, directly countering evidence fragmentation. (2) 100% token-level traceability metadata, which enables the verification of every claim in the generated answer against its exact source span. This design substantially reduces the evidence omission and cross-passage confusion endemic to conventional retrieval methods that treat passages as atomic, non-overlapping units.

Table 2.

Answer faithfulness, completeness, answer relevancy, clarity, context relevancy, and overall quality evaluated by LLM with 95% confidence intervals over three input-size bins.

Optimal Answer Quality. LawRAG also records the highest scores in Answer-relevancy (76.50) and Clarity-and-Logic (79.33) on Set 3. This result empirically validates the effectiveness of our multi-stage retrieval and reasoning pipeline. The ColBERTv2-based retriever provides high-recall retrieval through its late-interaction mechanism, capturing semantically relevant passages even with varied legal terminology. The subsequent cross-encoder reranker performs a nuanced, pairwise comparison to select the most pertinent evidence, filtering out marginally relevant but potentially distracting content. Finally, the deterministic low-temperature (0.2) compliant prompting ensures the language model adheres strictly to the provided evidence, generating logically structured and forensically sound responses suitable for legal scrutiny.

Robustness Against Hallucination. A critical metric in legal applications is internal consistency. LawRAG consistently achieves the lowest context-relevancy violation scores across all datasets (Table 2, last column). This indicates a minimal degree of self-contradiction or the introduction of unsupported facts. This robustness confirms that our deterministic, provenance-grounded evidence selection process acts as a strong regularizer against the generative model’s propensity for hallucination—a risk that is amplified in high-stakes, fact-dense domains like law.

Synthesis of Automated Results. Taken together, these quantitative results establish LawRAG as a framework uniquely capable of delivering the triad of requirements for legal QA: juridically admissible outputs (high faithfulness/clarity), fully auditable evidence trails (token-level provenance), and scalable performance on real-world long documents, comprehensively outperforming both naive open-source RAG pipelines and closed-source long-context LLMs that lack explicit retrieval and verification mechanisms.

4.2.2. Human Expert Preference Study

To transcend automated metrics and assess practical utility, we conducted a controlled, blind pairwise preference study involving 12 domain experts (disciplinary investigators with an average of 14.8 years of experience).

Methodology. Each expert compared 50 randomly sampled question–answer pairs, generated by the four systems, in a blind setup. Selection was guided by three strict, practice-derived criteria: (i) Evidentiary Accuracy and Traceability: Could every factual assertion be directly and unambiguously traced to a source document? (ii) Factual Consistency with Archives: Was the answer perfectly aligned with the sealed, ground-truth case files? (iii) Clarity and Legal Admissibility: Was the presentation clear, logically structured, and formulated in a manner suitable for official proceedings?

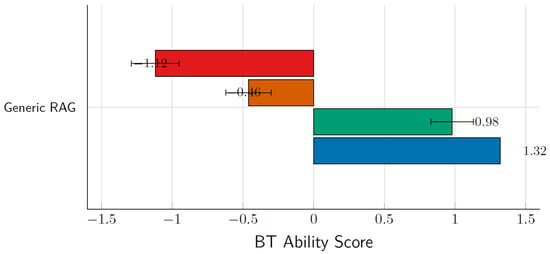

Preferences were aggregated and analyzed using the Bradley–Terry model to compute a latent ability score for each system, with 95% confidence intervals.
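A Bradley–Terry fit from pairwise preference counts can be sketched with the classical minorization-maximization (MM) update. This is a generic illustration under stated assumptions, not the authors' analysis code: the win counts are hypothetical, no smoothing is applied, every system is assumed to have at least one win, and `elo_gap` uses the standard logistic Elo scale, which may differ from the smoothed conversion used in the study.

```python
import math
from typing import List, Sequence


def fit_bradley_terry(wins: Sequence[Sequence[float]], iters: int = 500,
                      tol: float = 1e-10) -> List[float]:
    """Return zero-mean log-abilities; wins[i][j] counts how often i beat j.

    Assumes every system has at least one win and at least one comparison.
    """
    n = len(wins)
    p = [1.0] * n
    for _ in range(iters):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i][j] for j in range(n) if j != i)
            denom = sum((wins[i][j] + wins[j][i]) / (p[i] + p[j])
                        for j in range(n) if j != i)
            new_p.append(total_wins / denom)
        # Fix the scale: geometric mean 1, i.e. log-abilities sum to zero.
        log_mean = sum(math.log(x) for x in new_p) / n
        new_p = [x / math.exp(log_mean) for x in new_p]
        delta = max(abs(a - b) for a, b in zip(p, new_p))
        p = new_p
        if delta < tol:
            break
    return [math.log(x) for x in p]


def elo_gap(beta_diff: float) -> float:
    """Convert a log-ability difference to points on the logistic Elo scale."""
    return 400.0 * beta_diff / math.log(10.0)


# Hypothetical counts for three systems (not data from the study).
wins = [[0, 7, 9],
        [3, 0, 6],
        [1, 4, 0]]
abilities = fit_bradley_terry(wins)
print([round(b, 3) for b in abilities])
```

Under this model, the probability that system $i$ is preferred over system $j$ is $1 / (1 + e^{-(\beta_i - \beta_j)})$, so ability differences translate directly into head-to-head preference rates.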

Results and Interpretation. (i) Decisive Expert Preference: LawRAG achieved the highest BT Ability Score of +1.32. Statistical significance tests confirmed that it outperformed all baselines. Converting these scores to a familiar Elo scale (with Laplace smoothing) reveals an approximate 680-point advantage over the runner-up, a margin that denotes a dominant level of superiority. (ii) Preference Rate: This score translates to experts choosing LawRAG’s output in 79.4% of direct head-to-head comparisons against any other system. (iii) Clear Performance Hierarchy: The resulting ranking was (1) LawRAG (+1.32), (2) LlamaIndex + FAISS (+0.98), (3) Proprietary Long-context LLM (−0.46), (4) Generic RAG Baseline (−1.12). This hierarchy is instructive: it shows that a sophisticated open-source RAG (LlamaIndex + FAISS) is preferred over a simple proprietary model relying solely on extended context, yet both are decisively outperformed by LawRAG’s integrated design. (iv) Statistical Robustness: The 95% confidence intervals for LawRAG did not overlap with those of any competitor (Figure 2), providing strong statistical evidence that the observed preference is systematic and not attributable to random variation.

Figure 2.

Blind pairwise preference evaluation by 12 senior disciplinary investigators (14.8 years average experience) on 50 pairs each. LawRAG achieves +1.32 BT score (≈680 Elo advantage), preferred in 79.4% of head-to-head comparisons. 95% confidence intervals are non-overlapping with all baselines.

4.2.3. Discussion and Implications

The convergence of superior automated metrics and decisive human expert preference provides compelling validation for LawRAG. The expert study, in particular, underscores a critical point: performance gains measured by NLP metrics (e.g., faithfulness scores) directly correlate with practical usability and trust from end-users in high-stakes environments. The failure of the proprietary long-context LLM, despite its technical ability to ingest the entire document, highlights that length alone is insufficient; explicit retrieval, precise evidence grounding, and deterministic generation are non-negotiable for legally admissible QA.

In conclusion, LawRAG sets a new strong practical baseline for long-document QA in evidence-critical domains. Its performance is rooted in a principled architecture that prioritizes verifiability and consistency over mere parametric scale, a design philosophy that proves essential when model outputs have real-world consequences.

4.3. Ablation Studies

To rigorously dissect the contribution of each key component within our proposed LawRAG framework and to explicitly isolate the roles of the first-stage retrieval and the second-stage reranking modules independently of the generation model, we conduct a series of controlled ablation experiments. All experiments are performed on the full test set of 150 k real-world held-out tokens under identical hardware and evaluation protocols as the main experiments.

For end-to-end QA quality, we report the same suite of LLM-based automatic metrics (Faithfulness, Completeness, Answer Relevancy, Clarity-and-Logic, Context Relevancy, and Overall Score) for reference. In addition, we complement these results with retrieval-oriented analysis to directly evaluate the effectiveness of the retrieval and reranking components without involving the generation model. Results are averaged across all document length strata unless otherwise specified.

4.3.1. Effect of Reranking Intensity

We first investigate the impact of the number of passages selected after the cross-encoder reranking stage, varying this reranking depth while keeping the first-stage recall fixed at 200 candidates. The no-reranking setting bypasses the cross-encoder entirely, relying solely on the native top-5 ranking from the ColBERTv2 retriever. The results are reported in Table 3 and Table 4.

Table 3.

Performance with different retrieval depths.

Table 4.

Performance with different reranking depths.

Critical Role of Cross-Encoder Reranking: Disabling the reranker results in a substantial performance degradation, with a marked drop in the Overall Score and a severe decrease in Answer Relevancy. This underscores that while ColBERTv2 provides strong recall, its native ranking is insufficient for the nuanced, ambiguity-prone bureaucratic language characteristic of disciplinary documents.

Diminishing Returns Beyond a Depth of 5: Performance gains saturate quickly. Increasing the reranking depth from 5 to 10 yields only a marginal improvement in the Overall Score, and a further increase to 20 adds a negligible amount. Faithfulness and Completeness also plateau after a depth of 5, indicating that the reranker effectively filters out irrelevant candidates at this threshold.

Optimal Efficiency–Performance Trade-off: The computational overhead is minimal. Reranking 200 candidates adds approximately 30–35 ms of latency. Increasing the reranking depth contributes less than 8 ms to the LLM inference time, because the context length grows only modestly. Therefore, a reranking depth of 5 is established as the optimal configuration, maximizing precision while maintaining the low latency required for real-time investigative workflows in resource-constrained environments.
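The retrieve-then-rerank flow examined in this ablation can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the two scorers are toy stand-ins (token overlap for the first stage, Jaccard similarity for the reranker) for ColBERTv2 and the cross-encoder, and all function and parameter names are hypothetical.

```python
from typing import Callable, List, Tuple

Scorer = Callable[[str, str], float]  # (query, passage) -> relevance score


def two_stage_retrieve(
    query: str,
    passages: List[str],
    first_stage: Scorer,
    reranker: Scorer,
    recall_size: int = 200,
    rerank_depth: int = 5,
    use_reranker: bool = True,
) -> List[Tuple[str, float]]:
    """Return the top `rerank_depth` passages with their final scores."""
    # Stage 1: score every passage with the cheap scorer, keep the best recall_size.
    scored = [(p, first_stage(query, p)) for p in passages]
    scored.sort(key=lambda item: item[1], reverse=True)
    candidates = scored[:recall_size]
    if use_reranker:
        # Stage 2: rescore only the shortlist with the expensive scorer.
        candidates = [(p, reranker(query, p)) for p, _ in candidates]
        candidates.sort(key=lambda item: item[1], reverse=True)
    return candidates[:rerank_depth]


def token_overlap(query: str, passage: str) -> float:
    # Toy first-stage scorer: number of shared lowercase tokens.
    return float(len(set(query.lower().split()) & set(passage.lower().split())))


def token_jaccard(query: str, passage: str) -> float:
    # Toy reranker: shared tokens normalized by the size of the union.
    q, p = set(query.lower().split()), set(passage.lower().split())
    return len(q & p) / len(q | p) if (q | p) else 0.0


passages = [
    "the committee opened an investigation into the procurement contract "
    "and the budget and the travel and the audit",
    "investigation into the procurement contract",
    "annual sports day schedule",
]
query = "procurement contract investigation"

with_rerank = two_stage_retrieve(query, passages, token_overlap, token_jaccard,
                                 recall_size=2, rerank_depth=1)
without_rerank = two_stage_retrieve(query, passages, token_overlap, token_jaccard,
                                    recall_size=2, rerank_depth=1, use_reranker=False)
print(with_rerank[0][0])
print(without_rerank[0][0])
```

In this toy setting both stages find the same two candidates, but only the reranker promotes the focused passage over the long, diluted one, which mirrors the recall-versus-ranking distinction drawn above.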

4.3.2. Effect of First-Stage Recall Size

We next analyze the impact of the first-stage retrieval scope by varying the ColBERTv2 recall size (50, 100, 200, and 400 candidates) while fixing the reranking depth at 5. This experiment isolates the role of the retrieval stage in the multi-stage pipeline and the impact of evidence coverage on overall system behavior under an unchanged generation configuration.

Insufficient Recall Impairs Evidence Coverage: An overly restrictive recall pool leads to a significant drop in Completeness and a decrease in the Overall Score relative to the recall size of 200. This indicates that critical evidence fragments in ultra-long disciplinary dossiers are missed when the initial recall pool is too small, which directly degrades the quality of the context provided to the downstream modules.

Performance Plateau at a Recall Size of 200: Increasing the recall size from 50 to 100 yields a substantial gain in the Overall Score. A further increase to 200 adds another gain, with Faithfulness approaching saturation. Doubling the recall size to 400 provides only a marginal improvement, suggesting that most relevant evidence has already been covered at 200 and that deeper retrieval mainly introduces redundancy.

Latency–Performance Balance: While retrieval latency scales sublinearly from 52 ms at a recall size of 50 to 168 ms at 400, the configuration with a recall size of 200 keeps the total end-to-end latency under 150 ms, which satisfies the requirements for interactive workflows in air-gapped disciplinary inspection systems.

We note that stylistic scores such as clarity and logic also show moderate improvements as the recall size increases. However, these should be interpreted as secondary effects of improved evidence coverage and ordering, since the generation model and decoding strategy remain unchanged in all settings.

This ablation establishes a recall size of 200 as the optimal setting, providing robust evidence recall to ensure coverage and grounding without incurring excessive computational overhead.
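The saturation effect described in this subsection can be measured directly with Recall@k over the first-stage ranking, without involving the generation model. The sketch below uses made-up passage identifiers and relevance labels purely for illustration; `recall_at_k` is a hypothetical helper, not part of the paper's code.

```python
from typing import Iterable, List


def recall_at_k(ranked_ids: List[str], relevant_ids: Iterable[str], k: int) -> float:
    """Fraction of relevant passages that appear in the top-k of the ranking."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = len(set(ranked_ids[:k]) & relevant)
    return hits / len(relevant)


# Illustrative data only: one query, six ranked passages, three relevant ones.
ranked = ["p7", "p2", "p9", "p4", "p1", "p3"]
relevant = {"p2", "p1", "p8"}  # "p8" is never retrieved

sweep = {k: recall_at_k(ranked, relevant, k) for k in (2, 5, 6)}
for k, value in sweep.items():
    print(f"Recall@{k} = {value:.2f}")
```

Once every retrievable relevant passage is inside the pool, enlarging k no longer raises recall and only adds candidates for the reranker to discard, which is the redundancy effect noted above.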

4.3.3. Impact of Overlapping Chunking and Provenance Metadata

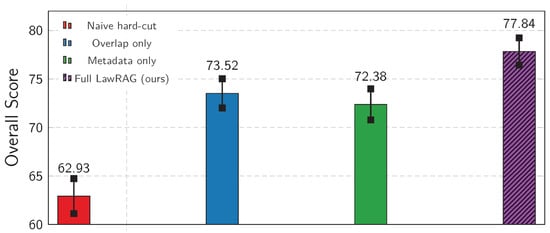

To isolate the contributions of document preprocessing strategies, we compare four variants: (i) Naive: no overlap + no metadata (hard 512-token cuts); (ii) Overlap Only: 200/100 stride chunking without metadata; (iii) Metadata Only: hard cuts with full provenance metadata; and (iv) Full LawRAG: overlapping chunking with metadata (Figure 3).

Figure 3.

Ablation study showing the impact of overlapping chunking (200/100 stride) and provenance metadata on overall performance. Bars compare four configurations: Naive (non-overlapping hard cuts, red), Overlap Only (blue), Metadata Only (green), and Full LawRAG (overlap + metadata, purple). Error bars represent 95% confidence intervals across multiple runs. Full LawRAG reaches 77.84, a +14.82 gain over the Naive baseline, highlighting strong synergy between overlapping chunking (better context continuity) and metadata (enhanced traceability and faithfulness).

Synergistic Necessity of Both Components: Removing only overlapping chunking degrades both Completeness and Faithfulness, because information is fractured across passage boundaries. Conversely, omitting only metadata reduces the Overall Score, as the lack of traceability undermines the LLM’s ability to ground its responses confidently.

Catastrophic Failure of the Naive Baseline: The variant employing neither overlap nor metadata suffers a collapse in performance, with an Overall Score 14.82 points lower than the full LawRAG configuration (Figure 3). This reverts to the level of generic RAG systems, which are inadequate for maintaining coherent legal evidence chains.

Domain-Specific Advantage for Long Documents: The synergistic effect is most pronounced for the longest documents (Set 3, >150 k tokens), where the full LawRAG design outperforms the metadata-only variant in Context Relevancy by the widest margin. This demonstrates that overlapping chunks are critical for mitigating contradictions and maintaining coherence across verbose disciplinary transcripts.

These results conclusively demonstrate that overlapping chunking and provenance metadata are not merely beneficial but are indispensable, mutually reinforcing components of LawRAG. Together, they contribute over 10 points to its superiority in evidence-critical, long-document scenarios.
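The overlapping chunking with provenance metadata compared in this subsection can be sketched as below. The sketch assumes that "200/100 stride" means a 200-token window advanced by 100 tokens, and the metadata fields (`doc_id`, `start`, `end`) are illustrative; the paper's actual provenance schema is not reproduced here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    doc_id: str        # provenance: source document
    start: int         # provenance: inclusive token offset
    end: int           # provenance: exclusive token offset
    tokens: List[str]


def chunk_with_overlap(doc_id: str, tokens: List[str],
                       window: int = 200, stride: int = 100) -> List[Chunk]:
    """Split a token list into overlapping windows that carry their offsets."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    chunks: List[Chunk] = []
    if not tokens:
        return chunks
    start = 0
    while True:
        end = min(start + window, len(tokens))
        chunks.append(Chunk(doc_id, start, end, tokens[start:end]))
        if end == len(tokens):
            break
        start += stride
    return chunks


tokens = [f"t{i}" for i in range(450)]
chunks = chunk_with_overlap("doc-001", tokens)
for c in chunks:
    print(c.doc_id, c.start, c.end)
```

Setting `stride` equal to `window` reproduces hard, non-overlapping cuts, so the same function covers both the overlapping and the naive chunking variants; dropping the offset fields corresponds to the no-metadata variants.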

4.3.4. Influence of Generation Temperature and Prompt Strictness

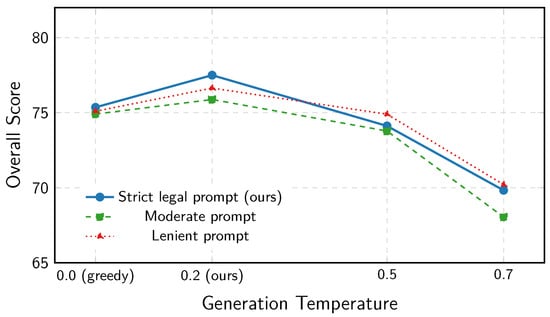

Finally, we ablate the text generation strategy while keeping the retrieval pipeline fixed. We compare four configurations of the DeepSeek-Chat LLM [31]: (i) Lenient: temperature = 0.7 with a prompt permitting reasoning and speculation; (ii) Moderate: temperature = 0.5 with a moderately constrained prompt; (iii) LawRAG Default: temperature = 0.2 paired with a strict, legal-compliant prompt that explicitly forbids inference, subjective evaluation, or extraneous information; (iv) Greedy: temperature = 0.0 with the same strict prompt (Figure 4).

Figure 4.

Effect of generation temperature on overall performance under different preprocessing strategies. Lines show Full LawRAG (overlap + metadata, solid blue, proposed), strict legal prompt (dashed blue), moderate prompt (dashed green), and lenient prompt (dotted red). X-axis: Temperature (0.0 greedy to 0.7); Y-axis: Overall Score (0–100). Full LawRAG consistently outperforms ablated variants across temperatures, with peak at 0.2, demonstrating robustness of hierarchical preprocessing in reducing hallucinations.

High Temperature and Leniency Cause Hallucination: Raising the temperature to 0.7 under a lenient prompt leads to a dramatic increase in self-contradictions, reflected in a drop in Context Relevancy, and a severe reduction in Faithfulness. The resulting speculative content renders outputs legally inadmissible.

Strict Prompt Ensures Determinism and Admissibility: Employing the strict legal-compliant prompt at temperature = 0.2 significantly enhances Clarity-and-Logic and effectively eliminates hallucination risks, ensuring responses are grounded solely in the provided evidence.

Greedy Decoding Sacrifices Naturalness: While greedy decoding (temperature = 0.0) matches the Faithfulness of the temperature = 0.2 setting, it results in less natural, often overly terse responses, leading to a drop in the Overall Score.

Therefore, the combination of a low temperature (0.2) and a rigidly enforced, domain-specific prompt emerges as the optimal generation strategy. This configuration delivers near-deterministic, concise, and fully admissible responses, which are essential for high-stakes investigative and judicial review scenarios.
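To make the generation setting concrete, the sketch below assembles a chat-style request that pairs a strict prompt with temperature 0.2. The prompt wording, the evidence format, the `build_request` helper, and the `deepseek-chat` model identifier are all assumptions for illustration; the authors' actual prompt is not given in this section, and no API call is made.

```python
from typing import Dict, List

# Hypothetical strict prompt; the paper's real prompt text is not reproduced here.
STRICT_PROMPT = (
    "Answer using only the numbered evidence passages. "
    "Do not infer, speculate, or add subjective evaluation. "
    "Cite the passage number for every statement. "
    "If the evidence is insufficient, say so."
)


def build_request(question: str, evidence: List[Dict[str, str]],
                  temperature: float = 0.2) -> dict:
    """Build a chat-completion style payload conditioned on retrieved evidence."""
    context = "\n\n".join(
        f"[{i}] (source: {item['source']}) {item['text']}"
        for i, item in enumerate(evidence, start=1)
    )
    return {
        "model": "deepseek-chat",  # assumed model identifier
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": STRICT_PROMPT},
            {"role": "user", "content": f"Evidence:\n{context}\n\nQuestion: {question}"},
        ],
    }


request = build_request(
    "When was the investigation opened?",
    [{"source": "doc-001, tokens 300-500", "text": "The investigation was opened in March."}],
)
print(request["messages"][1]["content"])
```

Keeping the prompt and temperature in one payload builder makes the four ablated configurations differ only in two arguments, which is what allows the retrieval pipeline to stay fixed across them.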

5. Discussion: System Efficiency and Deployment Considerations

5.1. GPU Memory Footprint vs. Retrieval Depth

The memory footprint of the proposed system is dominated by the ColBERTv2 index and the intermediate embedding buffers during retrieval. Thanks to the 2-bit PLAID compression, the index can be stored efficiently in GPU or CPU memory, enabling scalable deployment even for large document collections. Increasing the first-stage retrieval depth does not change the index size itself, but it increases the number of candidate embeddings that need to be loaded and scored at query time, which leads to higher GPU memory pressure and longer latency. Our ablation results in Section 4.3.2 show that while increasing the recall size from 50 to 200 significantly improves evidence coverage, further increasing it to 400 yields only marginal performance gains but incurs a substantial increase in latency and runtime resource consumption. Therefore, the choice of a recall size of 200 represents a practical trade-off between memory usage, latency, and retrieval effectiveness.
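A back-of-the-envelope estimate of the compressed index size can be sketched as follows. It assumes 128-dimensional token embeddings with 2-bit residuals plus a 4-byte centroid identifier per token (about 36 bytes per token, as described for ColBERTv2), and it takes the corpus size of roughly 89 million tokens from Section 6. The overlap factor of 2 for 200/100 chunking is an assumption, and real indexes carry extra overhead that is ignored here.

```python
def estimate_index_bytes(num_tokens: int, dim: int = 128, bits: int = 2,
                         centroid_id_bytes: int = 4,
                         overlap_factor: float = 1.0) -> int:
    """Rough size of a residual-compressed late-interaction index in bytes."""
    bytes_per_token = dim * bits // 8 + centroid_id_bytes  # 32 + 4 = 36 by default
    return int(num_tokens * overlap_factor) * bytes_per_token


corpus_tokens = 89_000_000  # approximate corpus size stated in Section 6
no_overlap = estimate_index_bytes(corpus_tokens)
with_overlap = estimate_index_bytes(corpus_tokens, overlap_factor=2.0)

print(f"No overlap:   {no_overlap / 1e9:.2f} GB")
print(f"With overlap: {with_overlap / 1e9:.2f} GB")
```

Under these assumptions the index stays in the single-digit gigabyte range, which is consistent with the claim that the index size is independent of the retrieval depth and fits on one GPU.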

5.2. Deployment on Edge or On-Premise Systems

The proposed system is designed with practical deployment constraints in mind, particularly for on-premise and air-gapped environments commonly required in disciplinary inspection and sensitive legal applications. All document indexing is performed offline, and the online pipeline consists only of lightweight dense retrieval, reranking, and controlled LLM inference. In our current implementation, the full system can run on a single high-end GPU (e.g., RTX 4090) [32], which makes it suitable for deployment in on-premise server environments. While the current configuration is not targeted at ultra-low-power edge devices, the modular design of the pipeline allows the retrieval and reranking components to be deployed on local servers, with the generation model accessed either locally or via a private API, depending on security requirements.

5.3. Comparison with Long-Context LLMs from a Hardware-Efficiency Perspective

An alternative approach to handling long documents is to rely on long-context LLMs that directly process tens or even hundreds of thousands of tokens [33]. However, from a hardware-efficiency perspective, such approaches typically require substantially more GPU memory and incur much higher inference latency and cost, as both memory consumption and attention computation scale at least linearly (and often quadratically) with context length. In contrast, our retrieval-augmented design keeps the LLM context length short and stable, while using an efficient retrieval system to select only the most relevant evidence. This design not only reduces memory footprint and inference cost, but also improves controllability and traceability, which are critical for high-stakes legal and disciplinary inspection scenarios.
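The scaling argument above can be made concrete with a small calculation. The sketch compares a 300,000-token full-document context with a retrieved context of 5 passages of 200 tokens each; both the passage length and the omission of prompt overhead are simplifying assumptions, and the helper name is illustrative.

```python
def relative_cost(long_context_tokens: int, short_context_tokens: int) -> dict:
    """Cost ratio of a long context versus a short one under two scaling laws."""
    ratio = long_context_tokens / short_context_tokens
    return {
        "linear": ratio,          # e.g. memory that grows with context length
        "quadratic": ratio ** 2,  # e.g. full self-attention computation
    }


full_document = 300_000      # tokens in the longest documents
retrieved_context = 5 * 200  # assumed: 5 reranked passages of 200 tokens each

costs = relative_cost(full_document, retrieved_context)
print(f"Linear-scaling cost ratio:    {costs['linear']:.0f}x")
print(f"Quadratic-scaling cost ratio: {costs['quadratic']:.0f}x")
```

Even under the more favorable linear assumption, the retrieved context is hundreds of times shorter, which is the source of the memory and latency savings described above.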

6. Materials

The experimental corpus, a high-fidelity proxy for confidential disciplinary inspection archives, was constructed from 428 public Chinese judicial documents and disciplinary bulletins (≈89 million tokens, counted with the bert-base-uncased tokenizer), categorized into three length tiers (10 K–50 K, 50 K–150 K, 150 K–300 K tokens) to test scalability. After duplicate filtering (Jaccard threshold = 0.85), OCR conversion (99.2% accuracy), and provenance annotation, a 15% held-out test set was reserved, with 200 expert-annotated queries and manual ground truth for evaluation.
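The duplicate filtering step can be illustrated with a minimal Jaccard-based sketch. It assumes character 5-gram shingles (a common choice for text without whitespace word boundaries) and a greedy keep-first policy in which a document is dropped when its similarity to any kept document reaches the 0.85 threshold; the paper does not specify these details, so they are assumptions, as are the function names.

```python
from typing import List, Set


def char_shingles(text: str, n: int = 5) -> Set[str]:
    """Set of character n-grams; short texts become a single shingle."""
    if len(text) <= n:
        return {text} if text else set()
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity |a & b| / |a | b|; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(docs: List[str], threshold: float = 0.85) -> List[str]:
    """Keep a document only if it is below the threshold against all kept ones."""
    kept: List[str] = []
    kept_shingles: List[Set[str]] = []
    for doc in docs:
        shingles = char_shingles(doc)
        if all(jaccard(shingles, other) < threshold for other in kept_shingles):
            kept.append(doc)
            kept_shingles.append(shingles)
    return kept


docs = [
    "notice on the procurement contract investigation",
    "notice on the procurement contract investigation",
    "annual sports day schedule",
]
unique_docs = deduplicate(docs)
print(len(unique_docs))
```

The pairwise comparison is quadratic in the number of documents, which is acceptable for a corpus of a few hundred files; larger collections would typically use MinHash or a similar approximation instead.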

Experiments ran on a standalone Ubuntu 22.04 server (NVIDIA RTX 4090 GPU, Intel Xeon W-3495X CPU, 256 GB RAM) to simulate air-gapped deployment. The framework relied on Python 3.10 with PyTorch 2.1.0, ColBERT 0.2.0 (retrieval, colbert-ir/colbertv2.0), cross-encoder ms-marco-MiniLM-L-6-v2 (reranking), and DeepSeek-Chat (67B, temperature = 0.2) for generation, ensuring reproducibility with open-source tools and standardized configurations.

7. Conclusions

This paper presents LawRAG, a novel retrieval-augmented generation framework specifically engineered for the demanding task of processing ultra-long disciplinary inspection and supervision archives in China. Through a pipeline that combines forensic-grade overlapping chunking with per-token provenance, high-recall ColBERTv2 retrieval, precise cross-encoder reranking, and strictly controlled generation via disciplinary-compliant prompting, LawRAG reconciles three critical, often competing objectives: state-of-the-art factual accuracy, 100% evidentiary traceability, and sub-second operational latency on documents exceeding 300,000 tokens.

Empirical validation on a real-world corpus confirms LawRAG’s superior performance. It substantially outperforms existing long-context LLMs, generic RAG pipelines, and advanced baselines like LlamaIndex+FAISS, with the advantage markedly increasing (by +11.1 points) for the most challenging long-document bracket (150 K–300 K tokens). The framework’s practical utility is affirmed by blind evaluations from senior domain experts, who showed a strong preference for LawRAG’s outputs. Comprehensive ablation studies further corroborate the necessity and optimal integration of each architectural component.

The current study is necessarily constrained by the confidential nature of its domain, which limits direct comparisons to systems trained on public legal data. Even so, it establishes LawRAG as the first RAG system proven to meet the stringent evidentiary, auditability, and operational requirements of real-world Chinese disciplinary inspection practice. It thus sets a new practical state-of-the-art for evidence-critical, legally constrained long-document QA. Looking forward, we identify two key directions for future work: integrating external legal knowledge via secure domain adaptation techniques, and contributing to a standardized, privacy-preserving benchmark for the field.

By releasing the complete pipeline and evaluation framework, we aim to foster further research and responsible deployment in high-stakes judicial and regulatory applications. LawRAG represents a significant step toward reliable, auditable, and legally admissible AI assistance in critical governance processes.

Author Contributions

Conceptualization, Y.H.; Methodology, C.H. and Y.X.; Software, Y.X.; Validation, B.D.; Investigation, Y.H.; Resources, C.H., J.K., B.D. and Y.P.; Data curation, J.K.; Writing—original draft, Y.X.; Writing—review & editing, Y.S.; Supervision, Y.S.; Project administration, Y.P. and Y.S.; Funding acquisition, C.H. and Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Adaptive Transformation of Digital Technology Platforms in 2025 (Intelligent Review of Disciplinary Inspection Materials Scenario) (Grant No. 037800HK24120123) and the Hunan Provincial Natural Science Foundation of China (Grant No. 2024JJ6438).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Authors Changhua Hu, Yuetian Huang, Jiexin Kuang, Bozhi Dai and Yun Peng were employed by the company Guangdong Power Grid Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Surden, H. Artificial intelligence and law: An overview. Ga. State Univ. Law Rev. 2018, 35, 1305–1337.

2. Locke, D.; Zuccon, G. Case law retrieval: Problems, methods, challenges and evaluations in the last 20 years. arXiv 2022, arXiv:2202.07209.

3. Supreme People’s Court of China. China Judgments Online Statistics (as of 2024). Available online: https://wenshu.court.gov.cn (accessed on 15 December 2024).

4. Robertson, S.; Zaragoza, H. The probabilistic relevance framework: BM25 and beyond. Found. Trends® Inf. Retr. 2009, 3, 333–389.

5. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

6. Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38.

7. Lin, S.; Hilton, J.; Evans, O. TruthfulQA: Measuring how models mimic human falsehoods. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 3214–3252.

8. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

9. Cuconasu, F.; Trappolini, G.; Siciliano, F.; Filice, S.; Campagnano, C.; Maarek, Y.; Tonellotto, N.; Silvestri, F. The power of noise: Redefining retrieval for RAG systems. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; pp. 719–729.

10. Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Chua, T.; Li, Q. A survey on RAG meeting LLMs: Towards retrieval-augmented large language models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 6491–6501.

11. Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-lingual, multi-functionality, multi-granularity text embeddings through self-knowledge distillation. arXiv 2024, arXiv:2402.03216.

12. Wang, L.; Yang, N.; Huang, X.; Jiao, B.; Yang, L.; Jiang, D.; Majumder, R.; Wei, F. Text embeddings by weakly-supervised contrastive pre-training. arXiv 2022, arXiv:2212.03533.

13. Khattab, O.; Zaharia, M. ColBERT: Efficient and effective passage search via contextualized late interaction over BERT. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, China, 25–30 July 2020; pp. 39–48.

14. MacAvaney, S.; Nardini, F.M.; Perego, R.; Tonellotto, N.; Goharian, N.; Frieder, O. Efficient document re-ranking for transformers by precomputing term representations. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, China, 25–30 July 2020; pp. 49–58.

15. Santhanam, K.; Khattab, O.; Saad-Falcon, J.; Potts, C.; Zaharia, M. ColBERTv2: Effective and efficient retrieval via lightweight late interaction. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; pp. 3715–3734.

16. Karpukhin, V.; Oguz, B.; Min, S.; Lewis, P.S.H.; Wu, L.; Edunov, S.; Chen, D.; Yih, W. Dense passage retrieval for open-domain question answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Virtual Event, 16–20 November 2020; pp. 6769–6781.

17. Xiong, L.; Xiong, C.; Li, Y.; Tang, K.; Liu, J.; Bennett, P.; Ahmed, J.; Overwijk, A. Approximate nearest neighbor negative contrastive learning for dense text retrieval. arXiv 2020, arXiv:2007.00808.

18. Guha, N.; Nyarko, J.; Ho, D.; Ré, C.; Chilton, A.; Chohlas-Wood, A.; Peters, A.; Waldon, B.; Rockmore, D.; Zambrano, D.; et al. LegalBench: A collaboratively built benchmark for measuring legal reasoning in large language models. Adv. Neural Inf. Process. Syst. 2023, 36, 44123–44279.

19. Xiao, C.; Zhong, H.; Guo, Z.; Tu, C.; Liu, Z.; Sun, M.; Feng, Y.; Han, X.; Hu, Z.; Wang, H.; et al. CAIL2018: A large-scale legal dataset for judgment prediction. arXiv 2018, arXiv:1807.02478.

20. Li, H.; Shao, Y.; Wu, Y.; Ai, Q.; Ma, Y.; Liu, Y. LeCaRDv2: A large-scale Chinese legal case retrieval dataset. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; pp. 2251–2260.

21. Zhong, H.; Xiao, C.; Tu, C.; Zhang, T.; Liu, Z.; Sun, M. JEC-QA: A legal-domain question answering dataset. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 9701–9708.

22. Thakur, N.; Reimers, N.; Rücklé, A.; Srivastava, A.; Gurevych, I. BEIR: A heterogenous benchmark for zero-shot evaluation of information retrieval models. arXiv 2021, arXiv:2104.08663.

23. Saad-Falcon, J.; Fu, D.Y.; Arora, S.; Guha, N.; Ré, C. Benchmarking and building long-context retrieval models with LoCo and M2-BERT. arXiv 2024, arXiv:2402.07440.

24. Santhanam, K.; Khattab, O.; Potts, C.; Zaharia, M. PLAID: An efficient engine for late interaction retrieval. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 1747–1756.

25. Nair, S.; Yang, E.; Lawrie, D.; Duh, K.; McNamee, P.; Murray, K.; Mayfield, J.; Oard, D.W. Transfer learning approaches for building cross-language dense retrieval models. In Proceedings of the European Conference on Information Retrieval, Stavanger, Norway, 10–14 April 2022; pp. 382–396.

26. Khattab, O.; Potts, C.; Zaharia, M. Relevance-guided supervision for OpenQA with ColBERT. Trans. Assoc. Comput. Linguist. 2021, 9, 929–944.

27. Zhang, Y.; Long, D.; Xu, G.; Xie, P. HLATR: Enhance multi-stage text retrieval with hybrid list aware transformer reranking. arXiv 2022, arXiv:2205.10569.

28. Es, S.; James, J.; Anke, L.E.; Schockaert, S. RAGAS: Automated evaluation of retrieval augmented generation. In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations, St. Julian’s, Malta, 17–22 March 2024; pp. 150–158.

29. Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 technical report. arXiv 2024, arXiv:2407.10671.

30. Zirnstein, B. Extended Context for InstructGPT with LlamaIndex; Technical Report; Hochschule für Wirtschaft und Recht Berlin: Berlin, Germany, 2023.

31. Zhao, C.; Deng, C.; Ruan, C.; Dai, D.; Gao, H.; Li, J.; Zhang, L.; Huang, P.; Zhou, S.; Ma, S.; et al. Insights into DeepSeek-V3: Scaling challenges and reflections on hardware for AI architectures. In Proceedings of the 52nd Annual International Symposium on Computer Architecture, Vancouver, BC, Canada, 28 June–2 July 2025; pp. 1731–1745.

32. Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547.

33. Chen, W.; Chen, J.; Zou, F.; Li, Y.; Lu, P.; Wang, Q.; Zhao, W. Vector and line quantization for billion-scale similarity search on GPUs. Future Gener. Comput. Syst. 2019, 99, 295–307.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.