Abstract

Artificial intelligence (AI) methods have been integrated in education during the last few decades. Interest in this integration has increased in recent years due to the popularity of AI. The use of explainable AI in educational settings is becoming a research trend. Explainable AI provides insight into the decisions made by AI, increases trust in AI, and enhances the effectiveness of the AI-supported processes. In this context, there is an increasing interest in the integration of AI, and specifically explainable AI, in the education of young children. This paper reviews research regarding explainable AI approaches in primary education in the context of teaching and learning. An exhaustive search using Google Scholar and Scopus was carried out to retrieve relevant work. After the application of exclusion criteria, twenty-three papers were included in the final list of reviewed papers. A categorization scheme for explainable AI approaches in primary education is outlined here. The main trends, tools, and findings in the reviewed papers are analyzed. To the best of the authors’ knowledge, there is no other published review on this topic.

1. Introduction

During the last few decades, there has been an effort to integrate artificial intelligence (AI) in education [1,2,3]. The integration of AI in education involves different aspects. One aspect is the teaching of AI to students. Another aspect concerns the use of AI to assist in teaching and learning tasks. An indicative example of this aspect is the incorporation of AI in interactive learning systems to provide real-time personalized interaction to students and teachers. An alternative example is the use of AI in processing data collected from specific learning settings. A third aspect is the use of AI to assist in policymaking and decision support. An example of using AI in policymaking is the processing of large amounts of data collected from national or international educational assessments and surveys in order to obtain information that could be reflected in future policies [4,5,6]. Examples of using AI in decision support include the identification of geographical regions requiring additional schools or teachers [7] and the determination of optimal school bus routes and stops [8].

One may note that there is sufficient experience in the integration of AI in higher education. First, the teaching of AI has been explored extensively in higher education during the last few decades, because AI is a learning subject at the undergraduate and postgraduate levels. Consequently, there is an abundance of teaching experience, learning content, and learning tools concerning AI. Second, many higher education institutions have explored the use of AI to assist in teaching and learning. On the one hand, this has been done with customized learning tools developed within higher education institutions [9,10] and with other available AI-based tools [11]. On the other hand, research is also conducted about the use of AI in processing data collected from students (e.g., data stored in e-learning platforms and student projects). Moreover, the use of AI in decision support within higher education has been explored (e.g., identification of students facing difficulties in their studies [12]).

There are studies about the integration of AI in the younger age groups as well, in addition to higher education. A recent development is an increasing interest in implementing relevant approaches in primary education, as demonstrated by the publications in this subject during the last few years. According to the International Standard Classification of Education by the United Nations Educational, Scientific, and Cultural Organization (UNESCO), primary education is an educational level following early childhood education and preceding secondary education [13]. The integration of AI in primary education is an emerging research direction for three main reasons: First, technology-enhanced learning is becoming increasingly popular in educational settings concerning the younger age groups. Teachers, children, and parents are now more experienced with technological resources compared to previous time periods, and this assists in the integration of technology in primary education. Educational applications and digital content are available for primary education, facilitating the implementation of technology-enhanced activities. Second, AI is becoming a hot topic at all levels of education, including primary education. The advances in AI during the last decade and the recent popularity of AI applications have provided an impetus for this. Third, the integration of AI in primary education has not been explored to the extent that it has in higher education. This leaves room for research work.

A prerequisite in many fields is the provision of explanations for the decisions made by AI-based systems. Education is not an exception. In certain cases (e.g., various types of rule-based systems and other comprehensible models), there are inherent mechanisms providing explanations for the produced outputs, or straightforward ways of doing this [14,15]. However, several AI methods have neither inherent mechanisms nor straightforward ways of explaining their outputs, and the knowledge they encompass is also not comprehensible. This results in difficulties. Explainable AI (XAI) is an AI field aiming to deal with such issues. Generally speaking, XAI aims to offer comprehensive explanations of the procedures and functions of AI applications, making AI more transparent and understandable to a wider audience [16,17]. Interest in XAI is gradually increasing because it provides the means to enhance the accountability and fairness of AI systems, resulting in increased trust in AI decisions [18]. For these specific reasons, XAI is also gradually being integrated in educational settings [19].

This paper reviews work concerning the integration of XAI in primary education. The main reasons for such a review are the increasing interest in integrating AI in primary education and the increasing interest in XAI in all sectors, including education. A review of the integration of XAI in primary education will attract the interest of researchers and teachers. It will also provide insight to researchers and teachers about the main trends of approaches integrating XAI in primary education and how XAI may generally be useful in primary education. To the best of the authors’ knowledge, there is no other published review on this subject.

This paper is organized as follows: Section 2 presents the methodology, including the research questions and the search procedure. Section 3 outlines the main aspects of the reviewed papers. Section 4 presents the results, discussing the main trends, and briefly presenting reviewed papers according to the category to which they belong. Section 5 discusses aspects of the methodological quality of the reviewed papers. Section 6 presents the discussion. Finally, Section 7 presents the conclusions and the future research directions.

2. Materials and Methods

The preparation of this review was guided by explicit research questions. More specifically, these research questions were as follows:

- (1) In which main categories may the XAI approaches in primary education be discerned?
- (2) What are the main trends of the XAI approaches in primary education?
- (3) What are the main XAI tools or methods used in the XAI approaches in primary education?
- (4) In which primary education learning subjects are the XAI approaches used?
- (5) For which AI methods are XAI tools or methods used to provide explanations in XAI approaches in primary education?
- (6) Are single XAI tools and methods, or a combination of them, preferred in XAI approaches in primary education?
- (7) Taking into consideration the XAI tools or methods most used in XAI approaches in primary education, which of the main functionalities offered are exploited?

An exhaustive search was carried out to retrieve relevant research using two search tools: Google Scholar and Scopus. The search was carried out from January to February 2025. These two search tools were selected because it is very likely that all relevant research can be retrieved from them. The default settings of both search tools were used. In both tools, the search was carried out in the full text of the documents and was not limited to titles, abstracts, and keywords. No limitation on the publication years was defined. All items retrieved by the two search tools were examined.

The search involved research about XAI and primary education. For the first term (i.e., explainable artificial intelligence), two alternative keywords were used in the search: (i) “explainable artificial intelligence” and (ii) “explainable ai”. For the second term (i.e., primary education), four alternative keywords were used in the search: (i) “elementary school”, (ii) “elementary education”, (iii) “primary school”, and (iv) “primary education”. This was done because, internationally, the alternative terms “primary education” and “elementary education” are used for the same educational level. Similarly, the alternative terms “primary school” and “elementary school” are used for schools at this educational level. Based on the aforementioned, the following logical expression was given as an input to both search tools:

(“explainable artificial intelligence” OR “explainable ai”) AND (“elementary school” OR “elementary education” OR “primary school” OR “primary education”).
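As an illustration only, the logical expression above can be assembled from the two keyword groups, and its meaning can be checked against a piece of text. This is a minimal sketch: the helper names `or_group` and `matches` are hypothetical, the query uses straight quotation marks, and the actual matching behaviour of Google Scholar and Scopus is not modelled.

```python
# Keyword groups taken from the search description above.
xai_terms = ["explainable artificial intelligence", "explainable ai"]
level_terms = [
    "elementary school",
    "elementary education",
    "primary school",
    "primary education",
]


def or_group(terms):
    """Join quoted phrases with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


# The two groups are combined with AND, as in the expression given above.
query = or_group(xai_terms) + " AND " + or_group(level_terms)


def matches(text):
    """Return True if text contains at least one phrase from each group."""
    lowered = text.lower()
    has_xai = any(term in lowered for term in xai_terms)
    has_level = any(term in lowered for term in level_terms)
    return has_xai and has_level


print(query)
```

Because the search covered the full text, a document satisfies the expression as soon as one phrase from each group appears anywhere in it, which is consistent with the large number of irrelevant items retrieved initially.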

In total, 1372 items were retrieved by using Google Scholar and Scopus. Duplicate retrieved items were excluded. The set of remaining items was examined to derive the work that would be reviewed. Retrieved items were excluded from the list of reviewed items according to specific criteria. More specifically, retrieved items were excluded in the following cases:

- (a) They involved papers published in journals that were retracted;
- (b) They involved work that was authored in a language other than English;
- (c) They were not accessible in general or through our institution;
- (d) They involved reviews, position papers, overviews, or editorials, and not research studies;
- (e) They did not involve education but some other field;
- (f) They did not involve primary education;
- (g) They did not involve the use of XAI;
- (h) They involved theses or technical reports.

A total of 140 duplicate items were found and omitted. Next, items meeting criteria (a)–(c) were excluded. The numbers of items excluded due to criteria (a), (b), and (c) were 1, 47, and 69, respectively. For many of the remaining items, a decision to exclude them from the list of reviewed items (due to criteria (d)–(h)) could be made by reading their title and/or abstract. In total, 901 items were thus excluded. The remaining 214 items had to be checked in full; 191 of these were excluded due to criteria (e)–(g). In the end, 23 items remained and were used for the review. Table 1 depicts the relevant data. The table provides numerical data about the items excluded due to criteria (d)–(h). As already mentioned, the two search tools were used to search the full text of the papers for the combination of terms. The tools’ scope of search was not restricted to the titles, abstracts, and keywords of the items. This enabled the retrieval of the twenty-three reviewed papers, because in several of them the combination of terms is not included in the title, abstract, and keywords. However, this also meant the initial retrieval of many items that were not relevant and in which the given terms were merely mentioned somewhere in the text.
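The screening counts reported above can be checked with simple arithmetic. The following sketch uses only the numbers stated in the text; the variable names are illustrative.

```python
# Counts reported in the screening description above.
retrieved = 1372
duplicates = 140
excluded_a, excluded_b, excluded_c = 1, 47, 69
excluded_by_title_or_abstract = 901  # criteria (d)-(h)
excluded_after_full_text = 191       # criteria (e)-(g)

# Step-by-step flow from retrieval to the final list.
after_deduplication = retrieved - duplicates
after_criteria_abc = after_deduplication - (excluded_a + excluded_b + excluded_c)
checked_in_full = after_criteria_abc - excluded_by_title_or_abstract
included = checked_in_full - excluded_after_full_text

print(after_deduplication, after_criteria_abc, checked_in_full, included)
# prints: 1232 1115 214 23
```

The intermediate value of 214 and the final value of 23 agree with the numbers of fully checked and reviewed items given in the text.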

Table 1.

Data involving the number of items retrieved with the search tools.

3. Main Aspects of the Reviewed Papers

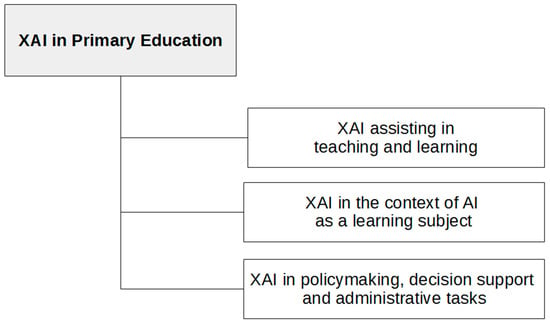

Before presenting the list of reviewed items, a categorization scheme for XAI approaches in primary education will be outlined here. The categorization scheme was derived based on the reviewed work and provides an answer to Research Question 1. More specifically, approaches using XAI in primary education may be divided into three main categories: (a) approaches that use XAI to assist in learning and teaching, (b) approaches that use XAI in the context of AI as a learning subject, and (c) approaches that use XAI in policymaking, decision support, and/or administrative tasks in an educational context. Henceforth, the three aforementioned categories will be referred to as categories A, B, and C, respectively. Note that this categorization scheme was implied in the Introduction.

Generally speaking, approaches in category A may be further divided into (i) approaches using XAI during learning and teaching and (ii) approaches using XAI for analysis and support tasks for learning and teaching. The former may involve XAI in face-to-face, blended, or distance learning activities. The latter usually involve the processing of data collected in the learning environment(s). This is mainly done after the learning procedure, but in some cases it may be done prior to the learning procedure (e.g., processing of pre-tests and questionnaires to obtain information about the status of learners before the learning procedure). Furthermore, XAI may be used in tasks requiring the processing of available data from other sources (e.g., datasets) to acquire information that is useful for teaching and learning. Moreover, XAI may be used to create new content and manage existing content. Approaches in category C may involve the handling of data derived from large-scale research studies at a regional, national, or international level. In addition, educational data may be processed in combination with other types of data. In this category, administrative tasks for teachers, educational personnel, executives, and policymakers are also included. Results derived from studies in category C may indirectly affect teaching and learning.

Figure 1 shows the categorization scheme for XAI approaches in primary education. Table 2 shows indicative tasks concerning XAI in each category. Tasks that have not been presented in the reviewed works (and, thus, constitute unexplored directions) are shown in italics.

Figure 1.

A categorization scheme for the XAI approaches in primary education.

Table 2.

Indicative tasks concerning XAI in each category of approaches.

Table 3 shows the list of the reviewed items. The reviewed items are shown in alphabetical order, based on the surname of the first author. For each item, the following are shown: the citation and the publication type, the category to which it belongs, the country (or countries) of the author(s), the AI method(s) explained, and the XAI tools or methods applied. In case of a study with multiple authors whose institutions are from different countries, all of these countries are shown in the corresponding cell. This was done for two studies [20,21]. The initials “UAE” stand for the United Arab Emirates. The term “Pub. Type” stands for “Publication Type”. The initials “CP” stand for “Conference Proceedings”, and the initials “JP” stand for “Journal Publication”. Table 3 provides answers to Research Questions 3 and 5.

Table 3.

The list of reviewed items.

The approaches were published in the time period 2019–2025. This means that they are recent approaches. This was expected, because in the last few years there has been an increasing interest in integrating AI in primary education. A consequence of this interest is the publication of approaches that specifically deal with XAI in primary education. The majority of the studies (thirteen) were published in journals. The other ten studies were published in conference proceedings.

As far as the categorization of the studies is concerned, the number of studies in categories A, B, and C is fourteen, two, and seven, respectively. Therefore, most approaches belong to category A. Fewer studies belong to category B compared to the other two categories.

One may note that almost all continents are involved in these studies. This is a positive aspect. Most of the studies are from Asia and Europe (ten studies in each continent). Three studies are from the Americas, and one is from Africa. The continent with the largest number of countries involved in the studies is Europe (seven countries), followed by Asia (five countries). Fifteen countries are involved in the studies. The countries with the most studies (i.e., three studies) are Germany and Japan. Seven other countries (i.e., India, the Netherlands, South Korea, Spain, the UAE, the UK and the USA) are involved in two studies, and the remaining countries are involved in a single study.

According to the data shown in Table 3, various XAI tools or methods are used in these studies. Most of the studies use a single XAI tool or method. A few studies use multiple XAI tools or methods. SHAP is the tool that was used in the most studies. In [20], a specific XAI tool or method is not mentioned. Provision of feature-based explanations is the function that XAI performs in most of the studies. In the following sections, the specific functions that XAI implements in the studies are further analyzed. Below, the functionality of the XAI tools or methods shown in Table 3 will be outlined.

SHAP (SHapley Additive exPlanations) is a tool that provides local and global explanations for any AI model. It is based on the SHAP values of features. SHAP values are calculated for each feature and show the positive or negative contribution of the feature in producing each output. SHAP conveniently provides visual explanations in the form of plots. The role of certain types of SHAP plots used in the reviewed papers will be briefly outlined. The global bar plot explains global feature importance by ordering the input features according to their mean absolute SHAP values and using a separate bar for each feature. Note that the bar plot may provide local explanations as well, by passing a row of SHAP values as a parameter to the plot function. In this case, the specific feature value is shown, along with the specific SHAP value. The beeswarm summary plot combines global and local explanations by ordering features according to their contribution to the output and showing the distribution of SHAP values and a coloring of the feature values for each feature across all dataset instances. For each feature, a dataset instance corresponds to a dot in the plot, with blue or red color to denote a low or high feature value, respectively. The individual force plot provides local explanations by showing the strength of the contribution of each feature to the specific output by ordering, coloring, and sizing features accordingly in a single bar line. The collective force plot provides global explanations by using the absolute mean SHAP values. The waterfall plot provides local explanations by showing the positive or negative contribution of each feature in separate bars. Scatter plots show dependencies (e.g., how a specific feature affects the output, or how two features interact). 
The term “force plot” used henceforth refers to the individual force plot, as this is the most frequently used or mentioned type of force plot in the specific studies, in other research, and on the Web. The term “individual force plot” is used henceforth only in the Discussion, to avoid ambiguities with the collective force plot. The term “collective force plot” is explicitly stated henceforth. Details about SHAP are available in the relevant paper [39] and the online documentation of SHAP [40].
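To make the notion of per-feature contributions concrete, the sketch below computes exact Shapley values for a small toy model in plain Python. It does not use the SHAP library and is not the method of any reviewed study. The toy model, the feature names, and the choice of replacing absent features with a single baseline vector are assumptions made for illustration (SHAP itself typically averages over a background dataset and uses efficient approximations).

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one instance x.

    Assumption: a feature outside a coalition takes its baseline value.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    """Hypothetical score model with one interaction term."""
    practice_hours, quiz_score, absences = z
    return (2.0 * practice_hours + 0.5 * quiz_score - 1.0 * absences
            + 0.1 * practice_hours * quiz_score)


baseline = [0.0, 0.0, 0.0]

# Local explanation: signed contribution of each feature for one instance.
x = [3.0, 8.0, 2.0]
phi = shapley_values(toy_model, x, baseline)

# Global importance: mean absolute Shapley value per feature over a dataset,
# which is the quantity that a global bar plot orders.
dataset = [[3.0, 8.0, 2.0], [1.0, 5.0, 0.0], [4.0, 2.0, 6.0]]
all_phi = [shapley_values(toy_model, row, baseline) for row in dataset]
global_importance = [
    sum(abs(p[i]) for p in all_phi) / len(all_phi) for i in range(len(baseline))
]

print(phi)
print(global_importance)
```

In this toy case the local values sum to the difference between the model output for the instance and the output for the baseline, and the negative value for the third feature reflects its negative contribution to the output.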

Integrated gradients is an XAI method introduced in [41] that determines the contribution of each feature to the output. It is a feature attribution method for local explanations. It accumulates gradients along the path from a baseline to the input. The method satisfies two axioms: sensitivity and implementation invariance. According to sensitivity, when an input and the baseline differ in a single feature and yield different predictions, that feature is given a non-zero attribution. Implementation invariance signifies that the same attributions are given to two functionally equivalent models, that is, models that produce the same outputs for all inputs.
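The accumulation of gradients along the path from the baseline to the input can be sketched numerically as follows. This is a minimal illustration under stated assumptions: the toy function, the all-zero baseline, the midpoint Riemann sum, and the finite-difference gradients are choices made here, whereas practical implementations rely on automatic differentiation in a deep learning framework.

```python
def integrated_gradients(f, x, baseline, steps=200, eps=1e-5):
    """Approximate integrated gradients of f at x relative to baseline."""
    n = len(x)
    averaged_gradients = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(n):
            up = point[:]
            down = point[:]
            up[i] += eps
            down[i] -= eps
            # Central finite difference as a stand-in for the true gradient.
            gradient_i = (f(up) - f(down)) / (2 * eps)
            averaged_gradients[i] += gradient_i / steps
    # Scale the path-averaged gradient by the distance from the baseline.
    return [(xi - b) * g for xi, b, g in zip(x, baseline, averaged_gradients)]


def toy_function(z):
    """Hypothetical differentiable model; the third feature is unused."""
    return z[0] ** 2 + z[0] * z[1]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_function, x, baseline)
print(attributions)
```

In this toy case the unused third feature receives a zero attribution, and the attributions add up to the difference between the output at the input and the output at the baseline, a property of the method known as completeness.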

Grad-CAM (Gradient-Weighted Class Activation Mapping) provides visual explanations (heatmaps) denoting the important parts of an image in producing the output of the model. It was introduced in [42]. It is more associated with image-related applications (e.g., image classification and image captioning) and deep neural networks. It focuses on local explanations.

Partial dependence plots are used to provide graphical explanations. More specifically, they denote how the values of one or two features affect the output. Partial dependence plots were introduced in [43]. They can be useful in showing specific values of input features that have a great effect on the output [7]. They provide global explanations.
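The values plotted in a one-feature partial dependence plot can be computed as sketched below: the feature of interest is fixed to each grid value in turn for every dataset instance, and the model outputs are averaged. The toy model, its threshold, the feature meanings, and the data are hypothetical and serve only to show how a specific feature value with a great effect on the output becomes visible.

```python
def partial_dependence(model, dataset, feature_index, grid):
    """Average model output when one feature is fixed to each grid value."""
    curve = []
    for value in grid:
        predictions = []
        for row in dataset:
            modified = list(row)
            modified[feature_index] = value  # override the feature of interest
            predictions.append(model(modified))
        curve.append(sum(predictions) / len(predictions))
    return curve


def toy_model(z):
    """Hypothetical model: the output jumps when the first feature exceeds 25."""
    students_per_teacher, distance_km = z
    jump = 5.0 if students_per_teacher > 25 else 0.0
    return jump + 0.1 * distance_km


dataset = [[20.0, 10.0], [30.0, 20.0], [28.0, 30.0]]
grid = [15.0, 20.0, 25.0, 30.0, 35.0]
curve = partial_dependence(toy_model, dataset, feature_index=0, grid=grid)

for value, average_output in zip(grid, curve):
    print(value, round(average_output, 3))
```

Plotting `curve` against `grid` would give a flat line with a step between the grid values 25 and 30, which is the kind of pattern such plots are meant to reveal.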

BertViz is an XAI tool specifically designed to visualize and interpret the inner workings of transformer-based models, particularly BERT (Bidirectional Encoder Representations from Transformers). According to [44], BertViz plays a crucial role in demystifying black-box behavior in Large Language Models (LLMs), thus contributing to more transparent and trustworthy AI.

ExpliClas is a Web service providing explanations for Weka classifiers. It was introduced in [45] and provides text and graphical explanations. Local and global explanations may be given. The specific version of ExpliClas provides explanations for four classifiers: J48, RepTree, RandomTree, and FURIA (Fuzzy Unordered Rule Induction Algorithm) [46].

LIME (Local Interpretable Model-Agnostic Explanations) is a tool providing local explanations for any AI model. It was introduced in [47]. It identifies the influential features in producing the output for a specific instance. It works by approximating the AI model in the local region of the given instance. To do this, it analyzes how the output of the AI model changes with variations in the given instance.
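The idea of approximating a model in the local region of an instance can be sketched as a proximity-weighted linear fit on perturbed copies of the instance. The code below is a simplified illustration and not the LIME library: Gaussian perturbations, the exponential proximity kernel, its width, the plain weighted least squares fit, and the toy model are all assumptions made here.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a * w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w


def local_surrogate(model, instance, n_samples=500, scale=0.5,
                    kernel_width=0.75, seed=0):
    """Fit a weighted linear model around instance; return (intercept, slopes)."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in instance]
        squared_distance = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        # Perturbations closer to the instance receive larger weights.
        weights.append(math.exp(-squared_distance / kernel_width ** 2))
        rows.append([1.0] + [zi - xi for zi, xi in zip(z, instance)])
        targets.append(model(z))
    d = len(instance) + 1
    # Normal equations of weighted least squares: (X^T W X) w = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(d)] for i in range(d)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets))
            for i in range(d)]
    coefficients = solve_linear_system(xtwx, xtwy)
    return coefficients[0], coefficients[1:]


def toy_model(z):
    """Hypothetical non-linear model standing in for a black box."""
    return z[0] ** 2 + 0.1 * z[1]


instance = [3.0, 1.0]
intercept, slopes = local_surrogate(toy_model, instance)
print(slopes)
```

The slopes of the surrogate indicate how strongly each feature influences the output near the given instance; here the first feature is expected to dominate because the toy model changes much faster along it around this point.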

FAMeX (FEature iMportance-based eXplainable AI algorithm) is an XAI method used in [25], which cites other work that introduced it. This method uses a feature importance process to identify the most relevant features in producing the output. A criticality score is computed for each feature for this purpose.

4. Results

This section presents the main trends and a brief description of the reviewed studies. The presentation is organized according to the category to which the studies belong. First, approaches using XAI to assist in teaching and learning are presented in Section 4.1. In Section 4.2, approaches using XAI in the context of AI as a learning subject are presented. In Section 4.3, approaches using XAI in policymaking, decision support, and administrative tasks are presented.

4.1. Approaches Using XAI to Assist in Teaching and Learning

Various approaches using XAI in teaching and learning may be implemented. Two main types of these approaches may be discerned: (i) approaches in which XAI is used during learning and teaching, and (ii) approaches in which XAI is used in analysis and support tasks for learning and teaching. Four studies involved the use of XAI during learning and teaching [20,26,36,37]. The other studies concerned the use of XAI in analysis and support tasks for learning and teaching.

Relevant approaches are shown in Table 4, along with the corresponding main tasks performed by (X)AI, the learning subject, and the type of the approach. In the second column, the tasks implemented by the AI method that need to be explained are shown in plain text. The specific AI method(s) tested (or used) is (are) shown within parentheses. The tasks implemented by XAI are shown in italics in the corresponding column. The XAI tools explicitly mentioned are shown within parentheses. As shown in Table 4, multiple AI methods are used in some studies. In such studies, if the XAI tasks are based on one of the AI methods and not all of them, the specific AI method is shown in italics in Table 4. If the XAI tasks are based on all AI methods used, the AI methods are shown in plain text. Below, the main trends in these studies are outlined. Afterwards, the main aspects of several studies are briefly described in order to provide further details to the reader.

Table 4.

Main aspects of approaches using XAI to assist in learning and teaching.

4.1.1. Main Trends in the Reviewed Studies Using XAI to Assist in Teaching and Learning

Three studies concerned the use of XAI to provide real-time interaction in the context of e-learning systems [26,36,37]. One of these studies was a revised and extended version of another one [37]. These approaches may support purely distance learning activities and blended learning activities (i.e., a combination of face-to-face and Internet-based learning activities). In this context, XAI may be used to provide real-time explanations and advice to teachers and students. These aspects have been explored in several studies [26,36,37]. Two alternative approaches to the provision of explanations to students and teachers may be implemented. In the former, students and teachers acquire explanations about different aspects [26]. In the latter, teachers acquire the explanations provided to students [36,37].

In Internet-based activities, and especially in purely distance learning ones, teachers need to dedicate time in order to supervise students, deduce their progress, and interact with them in a timely and concise manner. Teachers may obtain feedback from XAI about the students’ overall estimated knowledge, their deficiencies, and their performance in the specific implemented tasks [26,37]. The availability of this information may reduce the amount of time that teachers would have to dedicate in order to deduce it by themselves. This enables them to take the necessary actions and interact accordingly with students. Students may directly benefit from XAI in their interaction with e-learning tools. As they work on their own, and usually in a different space and/or time from teachers, they need assistance in selecting the next tasks to implement. XAI may provide this assistance [26,37].

XAI may be used in classroom and lab activities. Teachers may employ educational presentations and demonstrations using XAI. Educational applications based on XAI may be shown to students by teachers, and students may also use them in technology-enhanced activities. Assessment and interaction in the learning procedure may benefit from XAI. XAI may be also used for classroom orchestration. In [20], the design of a classroom co-orchestration approach is discussed, in which the orchestration load is shared between the teacher and an AI system. In this context, XAI explains decisions to the teacher.

XAI may be used to perform analysis and support tasks for learning and teaching. As shown in Table 2, a type of task concerns the processing of data collected in the specific learning environment(s) using XAI. Relevant data may be collected before (e.g., pre-tests), during, and after learning. Different research directions may be explored. One main direction may concern the analysis of data collected from students. Data about individual student performance and interaction with the e-learning tool may be stored in e-learning tool databases [28]. In [28], data from completed math exercises in a math intelligent tutoring system were analyzed to assess access inequalities in online learning. SHAP was used to identify contributing features. Data in e-learning tools may also concern the interactions among students [38]. For instance, in [38], data from chat-based interactions among students who used an e-learning platform within the classroom were analyzed to predict students with low and high academic performance. SHAP identified the contribution of features in the prediction. Data may be collected from students via other methods as well (e.g., questionnaires, observations, recordings, interviews, discussions, projects, pre-tests, and post-tests) and processed with the assistance of XAI. In [30], students used tablets to interact with educational apps in rural learning centers after school. SHAP plots (i.e., waterfall and beeswarm summary plots) were used to explain the predictions of XGBoost (eXtreme Gradient Boosting) [48] with respect to students’ motivation. In [23], data about students’ eye movements in a virtual reality classroom were collected, with the purpose of identifying gender differences in learning. SHAP was used to perform feature selection in order to improve the performance of the tested classification model, and to provide explanations for the outputs of the best model using global bar and beeswarm summary plots.
A second main direction concerns the analysis of data collected from teachers after the learning procedure, mainly using questionnaires, interviews, and discussions. A third direction is to analyze data concerning the interactions between students and teachers during learning [31]. A fourth main direction is to analyze aspects regarding the mechanisms of an AI-based e-learning system (that is, to explain the decisions made by the e-learning system). In this context, an e-learning approach using reinforcement learning to provide adaptive pedagogical support to students was presented in [35]. It is difficult to interpret the function approximation of the reinforcement algorithm. Therefore, XAI (i.e., integrated gradients) was used to determine the key features affecting the selection of the pedagogical policy by reinforcement learning.

Among the studies that use XAI for analysis and support tasks are those concerning language data in the form of text or oral data [27,31,34]. Various research directions may be explored in this context. One direction is to analyze language data collected from the specific learning environment and acquire information about the learning process. Such an approach is presented in [31], in which students’ and teachers’ classroom dialogues were analyzed using a transformer model, i.e., the Global Variational Transformer Speaker Clustering (GVTSC) model. The XAI method used concerns the visualization of attention weights. This work was extended in [32] using visualization methods for the GVTSC model and a Large Language Model. Another direction is to process available language data from previous studies in order to acquire information assisting in language learning. This direction was explored in [34]. More specifically, linguistic modeling (i.e., proficiency and readability modeling) using available corpora was performed to identify linguistic properties that play an important role in language learning. SHAP was used to identify the most important features. Another direction concerns the creation of new learning content from available text or oral data. Work in this direction, based on translating learning content, is discussed in [27].

XAI may be used to support teaching and learning by assisting in the creation of new learning content and the management of existing learning content. Automated and semi-automated processes save time that may be dedicated to other tasks. This is particularly true in cases of creating/managing text-based content and a large number of educational items. It should be mentioned that the content’s authors may be unavailable or may have limited spare time. Relevant studies include [27,33]. In [27], machine translation with transformer models was used to translate learning content from English to the first language of students, and vice versa. XAI was used to explain the transformer models. SHAP provided plots to denote the contribution of input text features in producing output text. BertViz was used to visualize the attention mechanism of the models (i.e., the strength of weights in connections between tokens). In [33], the identification of educational items in an e-learning platform requiring revision was explored. Outlier detection with explanations and interpretable clustering were applied.

4.1.2. Brief Description of Reviewed Studies Using XAI to Assist in Teaching and Learning

In [23], gender differences in computational thinking skills in the context of an immersive virtual reality classroom were investigated. Eye movements were used as a biometric input to predict gender. Sensors were used to collect data from the participants. Five classification models were evaluated: Support Vector Machine, logistic regression, Random Forest, XGBoost, and LightGBM (Light Gradient-Boosting Machine) [49]. LightGBM exhibited the best performance. SHAP was used for two purposes. First, SHAP was used for feature selection. The performance of all five models improved after feature selection, but LightGBM still outperformed the other models. Second, SHAP was used to explain the outputs of LightGBM, the model with the best performance. A global bar plot was used to rank the features according to their importance and to denote their average impact on the output. A beeswarm summary plot was also used to summarize the positive and negative contribution of each feature across the dataset. This specific approach can be used to provide personalized learning support and assist in the design of the educational setting and adaptive tutoring systems.
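The ranking shown in a global bar plot is the mean absolute SHAP value of each feature across the dataset. The following minimal sketch illustrates that ranking and a simple top-k feature selection based on it. The feature names and SHAP values are invented for illustration and are not taken from [23], and the top-k rule is an assumption about how such a selection could be made.

```python
# Hypothetical per-sample SHAP values (rows: samples, columns: features).
# Names and numbers are illustrative only; they are not data from [23].
feature_names = ["fixation_duration", "saccade_amplitude", "pupil_diameter"]
shap_values = [
    [0.30, -0.05, 0.10],
    [-0.20, 0.02, 0.15],
    [0.40, -0.01, -0.05],
    [-0.10, 0.04, 0.10],
]


def mean_abs_importance(values):
    """Mean absolute SHAP value per feature (the quantity a global bar plot shows)."""
    n_samples = len(values)
    n_features = len(values[0])
    return [
        sum(abs(row[j]) for row in values) / n_samples
        for j in range(n_features)
    ]


def select_top_k(names, importance, k):
    """Return the k feature names with the largest mean absolute SHAP value."""
    ranked = sorted(zip(names, importance), key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in ranked[:k]]


importance = mean_abs_importance(shap_values)
selected = select_top_k(feature_names, importance, k=2)
print(selected)
```

Averaging absolute values prevents positive and negative contributions from cancelling out, which is why a feature can rank highly even when its signed SHAP values are mixed.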

In [26], an approach within the context of an e-learning platform is presented. The student takes a diagnostic test when they initially register on the platform. The approach models a student’s knowledge state using a deep knowledge tracing model [50] and predicts the response to a question in the next step. XGBoost is used to predict a student’s score in the final test based on the results of the diagnostic test. The learning subject is mathematics. Proprietary data from the KOFAC (Korean Foundation for the Advancement of Science and Creativity) were used. Knowledge concepts included in the diagnostic test are associated with Shapley values, which are used to explain the predicted final scores to teachers. Teachers obtain a viewpoint on students’ knowledge state and performance, saving time and assisting them in their online interaction with students. Shapley values are also used to provide advice to students about which activities they ought to do, and in which order. Therefore, the explainable component provides online feedback to teachers and students.
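A Shapley value attributes a prediction to an individual input by averaging that input's marginal contribution over all orderings of the inputs. The sketch below computes exact Shapley values for a toy score model. The concept names, the model, and the choice of an all-zero reference student are assumptions made for illustration; they do not reproduce the XGBoost model or the data in [26].

```python
from itertools import permutations
from math import factorial

# Hypothetical knowledge concepts; not taken from [26].
concepts = ["fractions", "decimals", "geometry"]


def toy_final_score(mastery):
    """Toy predicted final score from per-concept diagnostic results (0 or 1)."""
    fractions, decimals, geometry = mastery
    return 40 + 20 * fractions + 10 * decimals * geometry


def shapley_values(model, instance, baseline):
    """Exact Shapley values; absent features are replaced by baseline values."""
    n = len(instance)
    phi = [0.0] * n
    for order in permutations(range(n)):
        current = list(baseline)
        previous_output = model(current)
        for index in order:
            # Add one feature at a time and record its marginal contribution.
            current[index] = instance[index]
            output = model(current)
            phi[index] += output - previous_output
            previous_output = output
    return [value / factorial(n) for value in phi]


student = [1, 1, 1]    # hypothetical student who mastered all three concepts
reference = [0, 0, 0]  # hypothetical reference student with no concept mastered
phi = shapley_values(toy_final_score, student, reference)
base = toy_final_score(reference)

for name, value in zip(concepts, phi):
    print(f"{name}: {value:+.1f}")
print("base + contributions =", base + sum(phi))
```

The reference score plus the contributions equals the prediction for the student, which is the additivity property that allows a predicted score to be decomposed per concept. Enumerating all orderings is only feasible for a handful of features; libraries such as SHAP rely on model-specific or sampling-based approximations.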

English is the official language in Uganda and is used in school education, but various ethnic languages are the first languages of many students. Luganda is one such language, spoken in rural central Uganda. Being taught in a language other than their first language makes it difficult for students to comprehend the concepts taught in schools. The translation of learning material assists in its comprehension by students. A machine translation approach was carried out to translate primary-school social studies notes from English to Luganda, and vice versa [27]. Transformer models based on the Marian machine translation framework [51] were used for the translation. XAI was used to provide explanations for the outputs produced by the transformers. Two XAI tools were used: SHAP and BertViz. SHAP was used to provide a visualization of the contribution of features to the output. The force plot is explicitly mentioned in the paper. BertViz provided a visualization of the attention mechanism of the transformer models. Connections between tokens were visualized according to the strength of the corresponding attention weights. This study was one of the few to use multiple XAI tools.
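Attention visualizations of this kind display, for each token, a row of weights over the other tokens. A minimal sketch of how such weights arise in scaled dot-product attention is given below. The tokens and the two-dimensional query and key vectors are invented for illustration and do not come from the translation models in [27].

```python
import math

# Hypothetical tokens with 2-D query and key vectors (illustrative values only).
tokens = ["the", "school", "opens"]
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
keys = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention_weights(queries, keys):
    """One row of attention weights per query token (scaled dot-product)."""
    dimension = len(keys[0])
    rows = []
    for query in queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dimension)
            for key in keys
        ]
        rows.append(softmax(scores))
    return rows


weights = attention_weights(queries, keys)
for token, row in zip(tokens, weights):
    print(token, [round(weight, 3) for weight in row])
```

Each row sums to one, so a visualization can map a weight directly to line thickness or color intensity between two tokens.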

Tablets are a convenient technological resource for areas with limited resources. In this context, a mobile learning approach using tablets was implemented after school in seven learning centers in rural parts of India [30]. The learning subject was English as a second language. The approach explored the factors affecting students’ motivation. The ARCS model [52], encompassing four main traits for motivation (i.e., attention, relevance, confidence, and satisfaction), was used. Educational apps for language learning were installed on the tablets, and a specific curriculum was followed. Records were kept for the attendance of students and their usage of tablets. The students also replied to a survey. XGBoost was used to predict the time the students dedicated to using the educational applications. SHAP was used to provide explanations for the derived predictions. Waterfall and beeswarm summary plots were used to show the contribution of the four features in the prediction.

In [20], the design of a co-orchestration approach in technology-enhanced classrooms is presented. It explores how responsibilities for managing social transitions in learning environments can be shared between teachers and AI systems. It aims to reduce teachers’ orchestration load while maintaining their sense of control. It emphasizes balancing teacher and AI system responsibilities to enhance the fluidity of learning transitions. The design and a prototype of the AI system were evaluated by seven teachers from different schools who teach in various grades. A requirement in the design of the AI system is that it explains its decisions, following the notion of XAI. This will enable teachers to assess whether a decision of the system is incorrect, in which case further action is needed by them. The specific type of XAI is not explicitly mentioned in the paper. The approach focuses on supporting the orchestration of social transitions, and not on other types of support.

The evaluation of educational items in e-learning platforms is an interesting topic, due to the large number of items, the large number of users, the large amount of interaction data, and the variety of item content. Automated or semi-automated item evaluation methods enable content improvement, save time for content authors, and may enhance learning and teaching. An approach to identify educational items for revision is presented in [33]. Explainable outlier detection and interpretable clustering were used to identify candidate items for revision. The educational items under consideration cover a wide range of school subjects (i.e., computing, mathematics, and English as a second language) and are available in an e-learning platform. The Isolation Forest algorithm [53] was used for outlier detection. Explanations were provided for the detected outliers to assist content authors. The researchers explored different methods for outlier explanations, including 2D scatter plots, as proposed in the LOOKOUT algorithm [54]. These 2D scatter plots are a type of lossless XAI visualization method that preserves all information in the data [17]. The researchers decided to use simple explanation methods. For each outlier, a listing of item properties with extreme values was presented. The basic boxplot method was used to identify properties with extreme values, and afterwards, related properties were grouped and assigned comprehensible labels. Interpretable clustering concerns clustering approaches in which concise descriptions are given for clusters. These descriptions could be based on combinations of properties that often occur together.
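The boxplot method for extreme values can be sketched as follows, assuming the usual rule that a value is extreme when it lies more than 1.5 interquartile ranges below the first quartile or above the third quartile. The item identifiers, property names, and numbers are hypothetical, and the exact rule used in [33] may differ.

```python
import statistics

# Hypothetical item properties (illustrative values, not data from [33]).
items = {
    "item_01": {"median_time_s": 30, "failure_rate": 0.10},
    "item_02": {"median_time_s": 32, "failure_rate": 0.12},
    "item_03": {"median_time_s": 35, "failure_rate": 0.15},
    "item_04": {"median_time_s": 36, "failure_rate": 0.11},
    "item_05": {"median_time_s": 38, "failure_rate": 0.90},
    "item_06": {"median_time_s": 40, "failure_rate": 0.14},
    "item_07": {"median_time_s": 41, "failure_rate": 0.13},
    "item_08": {"median_time_s": 300, "failure_rate": 0.12},
}


def boxplot_fences(values):
    """Lower and upper fences: quartiles extended by 1.5 interquartile ranges."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - 1.5 * iqr, q3 + 1.5 * iqr


def extreme_properties(items):
    """Map each item to the list of its properties that fall outside the fences."""
    property_names = sorted(next(iter(items.values())))
    fences = {
        name: boxplot_fences([props[name] for props in items.values()])
        for name in property_names
    }
    report = {}
    for item_id, props in items.items():
        flagged = [
            name for name in property_names
            if not fences[name][0] <= props[name] <= fences[name][1]
        ]
        if flagged:
            report[item_id] = flagged
    return report


report = extreme_properties(items)
print(report)
```

A listing of this form names the properties that make an item unusual, which is the kind of plain explanation a content author can act on without knowing how the outlier detector works.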

An approach that could benefit the learning of Portuguese as a second language is presented in [34]. The researchers analyzed available corpora in Portuguese to perform linguistic modeling regarding the proficiency and readability levels. Linguistic complexity measures are associated with the proficiency and readability levels. Types of complexity measures include superficial, lexical, morphological, syntactic, and discourse-based measures. Four types of classifiers (i.e., Support Vector Machine, linear regression, Random Forest, and a multi-layer neural network) were tested for the proficiency and readability levels separately. SHAP was used to provide explanations by examining the contribution of features in the derived results. For this purpose, global bar plots and beeswarm summary plots were used.

In [35], an e-learning system is presented using reinforcement learning to adaptively assist in math learning. The system employs a narrative-based educational approach. Reinforcement learning is used in an AI guide to adaptively select a pedagogical strategy for a specific student (i.e., direct hints, generic encouragement, guided scaffolding prompts, or passive positive acknowledgment). The goal of reinforcement learning is to learn a decision policy by maximizing a reward function that takes into account the learning objectives. Features taken into consideration by reinforcement learning include fixed student features, learning activity features, and features concerning student interaction and performance during learning. The system was tested with students whose knowledge was evaluated with pre-tests and post-tests. The results were positive for students with low performance in the pre-tests. XAI was used offline to explain the decisions of reinforcement learning. This is needed because the policy optimization algorithms used in reinforcement learning to learn a decision policy that maximizes the reward are often difficult to interpret. This is the case for the proximal policy optimization algorithm [55] used in the study. Integrated gradient analysis was used to identify the most important features in policy selection. The results showed that the most important features are math anxiety and pre-test scores.
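Integrated gradients attribute a model output to each input feature by accumulating the gradient of the output along a straight path from a baseline input to the actual input, and scaling it by the difference between the two. The sketch below approximates this integral numerically for a toy score function. The function, its weights, the third feature, and the all-zero baseline are assumptions for illustration; the sketch is not the policy network or the feature set of [35].

```python
import math

feature_names = ["math_anxiety", "pre_test_score", "hints_used"]  # last one is hypothetical


def toy_policy_score(x):
    """Toy differentiable score standing in for one output of a policy network."""
    anxiety, pre_test, hints = x
    z = 1.2 * anxiety - 0.8 * pre_test + 0.1 * hints
    return 1.0 / (1.0 + math.exp(-z))


def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of the gradient of f at x."""
    gradient = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        gradient.append((f(up) - f(down)) / (2 * eps))
    return gradient


def integrated_gradients(f, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of integrated gradients."""
    totals = [0.0] * len(x)
    for step in range(steps):
        alpha = (step + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        gradient = numerical_gradient(f, point)
        totals = [total + g for total, g in zip(totals, gradient)]
    return [(xi - b) * total / steps for xi, b, total in zip(x, baseline, totals)]


student = [0.9, 0.3, 0.5]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_policy_score, student, baseline)
for name, value in zip(feature_names, attributions):
    print(f"{name}: {value:+.4f}")
```

The attributions sum (up to numerical error) to the difference between the score at the input and the score at the baseline, so ranking features by absolute attribution indicates which of them drive a given decision.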

Tsiakas et al. [36,37] presented the architecture of a system intended to support students’ self-regulated skills. The overall concept of the system is based on a cognitive game including parameters whose configuration corresponds to a variety of skills and abilities. The main self-regulated skills supported are goal setting, self-efficacy, and task selection. The training process consists of sessions organized in rounds. An Open Learner Model [56] is used to record the student’s progress. Recommendations are provided to students at the beginning of each session (i.e., target score) and at the end of each session round (i.e., next task configurations). Alternative recommendations are provided based on the students’ profiles and context. Recommendations are based on probability models. For each recommendation, personalized explanations may be provided. Teachers may view and edit recommendations and explanations.

4.2. Approaches Using XAI in the Context of AI as a Learning Subject

AI may be integrated in primary education as a learning subject. It is considered to be useful to teach AI at all educational levels, due to the importance of AI in everyday tasks. AI as a learning subject involves various dimensions. Among others, these concern the acquisition of knowledge about (i) basic AI concepts, technologies, and processes; (ii) the role of AI in society and the responsible use of AI; and (iii) AI as a learning tool. The term “AI literacy” is used to define the knowledge about AI that every student needs to possess as a member of society.

Appropriately designed learning activities that correspond to the age of students are needed for teaching AI. This is also the case for relevant educational applications, presentations, and demonstrations that may be available to students in the classroom and through the Internet. Laboratory activities concerning AI may also be implemented. Alternative approaches may be implemented in the teaching of AI. On the one hand, the teaching of AI may be performed autonomously. On the other hand, the teaching of AI may be carried out in combination with or in the context of the teaching of other subjects (e.g., computational thinking, robotics, science, mathematics, language). XAI may be part of the AI curriculum.

Two studies were found in which XAI was used in the context of AI as a learning subject. Table 5 shows the main aspects of these studies. More specifically, for each study, the following are outlined: (a) the taught subject; (b) the tasks implemented by the AI method(s) shown in parentheses, for which explanations are provided; and (c) the tasks implemented by the XAI tools shown in parentheses. The cell contents concerning (a) and (b) are shown in plain text, whereas the contents concerning (c) are shown in italics. The following paragraphs include a short presentation of the two studies.

Table 5.

Main aspects of approaches using XAI in the context of AI as a learning subject.

Bias inherent in AI is an issue worthy of being taught to children, as AI affects many social sectors. Melsión et al. [21] focused on educating students about gender bias in supervised learning. Gender bias creates discrimination in AI decisions. An online educational platform was used for learning. A dataset consisting of images with gender bias was used to train the model, i.e., a combination of a Convolutional Neural Network (CNN) and a Long Short-Term Memory (LSTM). More specifically, the dataset used was a subset of the Microsoft COCO (Common Objects in Context) public dataset. XAI was incorporated in the platform to facilitate students’ learning. More specifically, Grad-CAM provided visual explanations to help preadolescents understand gender bias in supervised learning. The primary goal was to raise awareness and improve students’ comprehension of bias and fairness in AI decision-making.

Alonso [22] presented an approach for teaching AI to children in the context of workshops in a research center. Children were initially introduced to AI and its applications. They then interacted with a specialized application implemented in Scratch, a popular visual programming environment for children. The application identified the roles of basketball players. For a selected player, four WEKA classifiers (i.e., J48, RepTree, RandomTree, and FURIA) were employed to perform classification. Voting was used to produce an output. Explanations generated by ExpliClas were shown in text and visual format. Children evaluated the explanations and then learned how they were generated. This specific approach combines XAI learning with programming concepts and sports.
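The combination of several classifiers by voting can be sketched as follows, assuming plain majority voting with an alphabetical tie-break. The source does not specify the voting scheme, and the role labels and classifier outputs below are hypothetical.

```python
from collections import Counter


def majority_vote(predictions):
    """Return (winning label, tie flag); ties are broken alphabetically (an assumption)."""
    counts = Counter(predictions)
    top_count = max(counts.values())
    winners = sorted(label for label, count in counts.items() if count == top_count)
    return winners[0], len(winners) > 1


# Hypothetical outputs of the four classifiers for one selected player.
outputs = {
    "J48": "guard",
    "RepTree": "guard",
    "RandomTree": "center",
    "FURIA": "guard",
}
label, tied = majority_vote(list(outputs.values()))
print(label, tied)
```

With an even number of voters a two-way tie is possible, so any concrete implementation needs some tie-breaking rule; the alphabetical one used here is only a placeholder.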

4.3. Approaches Using XAI in Policymaking, Decision Support, and Administrative Tasks

XAI may be used to obtain information from available data, assisting in educational policies, decision-making, and administrative tasks in primary education. Approaches that belong to this category are shown in Table 6. The main goals of these approaches concern the following: (i) student performance prediction, (ii) student performance analysis, (iii) identification of key factors affecting student performance, (iv) prediction of the required number of teachers by region, and (v) prediction of student attendance.

Table 6.

Main aspects of studies using XAI in policymaking, decision support, and administrative tasks.

4.3.1. Main Trends in the Reviewed Studies Using XAI in Policymaking, Decision Support, and Administrative Tasks

Table 6 summarizes the main aspects of the relevant studies. In the second column, the tasks performed by XAI are shown in italics, and the XAI tools explicitly mentioned are shown within parentheses. In the same column, the tasks implemented by the AI method(s) that need to be explained are shown in plain text. The specific AI method(s) tested (or used) is (are) shown within parentheses. In studies using multiple AI methods, if the XAI tasks are based on one of the AI methods, the specific AI method is shown in italics in Table 6. If the XAI tasks are based on all of the AI methods used, the AI methods are shown in plain text.

One may note that student performance is a key aspect in most of these studies (i.e., four studies). An explanation for this is the fact that student performance is an aspect that is frequently investigated in education. A further explanation is that performance in primary education affects performance at subsequent educational levels. Each of the aforementioned goals (iv) and (v) was pursued by a single study. Generally speaking, it is interesting that various aspects concerning education were explored.

Educational data may provide information for decision-making in primary education. However, in addition to educational data, other types of data (e.g., family, financial, and population data) may prove useful as well. Within specific countries, educational and other types of data are available at the national, regional, municipal, or school level. Open government data may assist researchers in conducting research that combines educational data and other types of data. Large-scale educational assessments may also be sources of educational and other types of data [4,5]. Typical examples of large-scale international educational assessments include the PISA (Programme for International Student Assessment), TIMSS (Trends in International Mathematics and Science Study), and PIRLS (Progress in International Reading Literacy Study). Nadaf et al. [5] used data from TIMSS assessments. Silva et al. [4] used data from large-scale educational assessments within Brazil. Sanfo [6] used data from an international survey concerning French-speaking countries.

Several indicative studies combined educational data and other types of data [4,5,6,7,25]. Lee [7] presented an approach predicting the number of teachers required in each region. He used basic educational data such as the numbers of primary education teachers, students, schools, and classes. Further data used included data affecting the size and structure of the population (e.g., numbers of births, deaths, marriages, and divorces), economically active population data, and population movement data. A finding was that the economically active population is the most important factor affecting the prediction of the required number of teachers. Silva et al. [4] combined educational data (e.g., average number of students per class, teachers’ education, average daily teaching hours of teachers, students’ performance in large-scale assessments), economic data (i.e., investments in education), and social well-being data. A finding was that the teachers’ characteristics play the most important role in students’ performance. Sanfo [6] used data from an international survey combining educational data with learning environment data and data about students’ and teachers’ backgrounds. The purpose of the approach was to predict students’ learning outcomes. Nadaf et al. [5] combined educational data with family-related and demographic data. Kar et al. [25] used a dataset derived from a survey in Bangladesh involving students from all educational levels and, among others, including the financial status of the family.

Research may be carried out with datasets that are available beforehand. This was the case in almost all of the studies. However, datasets may also be created as a result of a specific study. For instance, in [29], the dataset was created by the researchers in a country-level study involving several schools. It was based on students’ results in a math test and an English test that followed international standards, and on students’ responses to a questionnaire.

Learning subjects explicitly stated in the studies are mentioned in Table 6. Two of the studies [7,24] do not concern a specific learning subject. In another study [4], specific learning subjects are not mentioned, but it may be assumed that a combination of learning subjects is involved.

4.3.2. Brief Description of Reviewed Studies Using XAI in Policymaking, Decision Support, and Administrative Tasks

Attendance is an important factor in education, as it affects overall student performance and participation in learning activities. By predicting when a student will be absent and the reasons for this, actions can be taken to prevent it. In this context, a theoretical approach for the prediction of school attendance is presented in [24]. The explainable approach is based on the Isabelle Insider and Infrastructure framework and the precondition refinement rule algorithm. A logical rule-based explanation is provided for the output of a black-box AI method (not specifically mentioned). The overall framework is based on computation tree logic (i.e., a type of temporal logic with branching time) [57], attack trees (i.e., a model for risk analysis), and Kripke structures [58].
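To give a flavor of computation tree logic over a Kripke structure, the sketch below computes the set of states from which some path eventually reaches a target state (the CTL property usually written EF p), using a simple fixpoint iteration. The states and transitions are hypothetical, and the code is a plain Python illustration; it is not the Isabelle framework or the precondition refinement rule algorithm used in [24].

```python
# Hypothetical Kripke structure: each state maps to its set of successor states.
# Every state has at least one successor, as a Kripke structure requires.
transitions = {
    "enrolled": {"attending", "ill"},
    "ill": {"attending", "absent"},
    "attending": {"attending"},
    "absent": {"absent"},
}


def states_satisfying_ef(transitions, target_states):
    """States from which some path eventually reaches a target state (CTL: EF p)."""
    satisfied = set(target_states)
    changed = True
    while changed:
        changed = False
        for state, successors in transitions.items():
            # A state satisfies EF p if one of its successors already does.
            if state not in satisfied and satisfied & successors:
                satisfied.add(state)
                changed = True
    return satisfied


can_become_absent = states_satisfying_ef(transitions, {"absent"})
print(sorted(can_become_absent))
```

The result identifies the states in which an absence is still reachable, which is the kind of information a rule-based explanation of an attendance prediction could refer to.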

In the study of Lee [7], an approach predicting the number of required teachers in each region was applied. XGBoost was used for the predictions. Data sources involved Korean national public databases, the Korean Educational Development Institute (KEDI) and the National Statistical Office (NSO). The XAI methods used were feature importance, partial dependence plots, and SHAP. Feature importance showed that the most important features are the following (in descending order of significance): economically active population by city, number of classrooms by city, birth rate by city, number of students by city, and population migration. Partial dependence plots were used to show how numerical changes in a feature (e.g., economically active population) affected the prediction. The number of required teachers increased as the economically active population increased. Four different types of SHAP plots were used: beeswarm summary plots, force plots, scatter plots, and plots accumulating Shapley’s influence on all data. Beeswarm summary plots showed that the economically active population and the number of classrooms were the most important features in the prediction, and the variance of their SHAP values was greater. Force plots showed the contribution of features in teacher number prediction for specific cities and years. Scatter plots showed an above-average increase in the required number of teachers in case a specific value of the economically active population was surpassed.
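A partial dependence curve is obtained by fixing one feature to each value of a grid for every record in the dataset and averaging the resulting predictions. The following minimal sketch shows the computation. The regional records, feature names, and the toy linear model are invented for illustration and stand in for the XGBoost model and the Korean data used in [7].

```python
# Hypothetical regional records (illustrative values only, not data from [7]).
regions = [
    {"active_population_k": 120, "classrooms": 300},
    {"active_population_k": 250, "classrooms": 520},
    {"active_population_k": 400, "classrooms": 900},
]


def toy_teacher_model(record):
    """Toy stand-in for a trained regressor predicting the required teachers."""
    return 0.8 * record["active_population_k"] + 1.1 * record["classrooms"]


def partial_dependence(model, records, feature, grid):
    """Average prediction when `feature` is set to each grid value for all records."""
    curve = []
    for value in grid:
        predictions = [model({**record, feature: value}) for record in records]
        curve.append(sum(predictions) / len(predictions))
    return curve


grid = [100, 200, 300, 400]
curve = partial_dependence(toy_teacher_model, regions, "active_population_k", grid)
for value, average in zip(grid, curve):
    print(value, round(average, 1))
```

Because the remaining features keep their observed values, the curve isolates the average effect of the chosen feature; for the toy linear model it is a straight line, whereas a tree ensemble would typically produce a stepwise curve.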

Miao et al. [29] used SHAP extensively to explore the contribution of predictive factors to students’ math performance. A country-level study was carried out. XGBoost was used for performance predictions. Various SHAP plots were used. A force plot was used to provide a viewpoint on factors contributing positively and negatively to a specific student’s performance. A global bar plot and a beeswarm summary plot were used to derive the most important factors in performance prediction and the range of the SHAP values. The four most important factors were language (i.e., vocabulary and grammar skills), the grade level, reading (i.e., reading comprehension), and self-efficacy in math. It should be mentioned that math is taught in English (second language) in the specific country. The researchers then segmented student performance into four groups (very poor, poor, normal, and outstanding), according to test scores, and studied the SHAP values of various factors separately for each group. Scatter plots were used for this purpose. More specifically, scatter plots were created for each category for the following: (a) language test scores and SHAP values for the language feature, (b) reading test scores and SHAP values for the reading feature, (c) self-efficacy level in math and self-efficacy SHAP values, (d) the ability of students to use reading and problem-solving strategies and corresponding SHAP values, and (e) the ability of students to exercise PISA math learning strategies and corresponding SHAP values. Thresholds in the levels of these features for the corresponding SHAP values to become positive were derived. As expected, better (worse) levels in these features resulted in better (worse) math performance.

In [5], the factors affecting UAE students’ math performance in the TIMSS international assessment were examined. A boosted regression tree model, i.e., CatBoost [59], was used to model students’ performance. SHAP was used to assess the contribution of features to the students’ performance. Two types of plots produced by SHAP were used: beeswarm summary and dependence plots. According to the beeswarm summary plot, the three most important features contributing to math performance were students’ confidence in mathematics, language difficulties, and familiarity with measurements and geometry. As far as the feature “language difficulties” is concerned, the distribution of SHAP values was wide, demonstrating the heterogeneity in its contribution (from very negative to very positive). This observation led the researchers to use a dependence plot for the contribution of language difficulties to math performance. A nonlinear relationship between them was observed.

Sanfo [6] used data from Burkina Faso’s 2019 Program for the Analysis of CONFEMEN Education Systems (PASEC). The initials CONFEMEN stand for Conference of Ministers of Education of French-Speaking States and Governments. Educational data were combined with other types of data (i.e., learning environment data and data about students’ and teachers’ backgrounds). Machine learning models were used to predict students’ learning outcomes. SHAP was used to identify the main features contributing to the results of three machine learning models exhibiting the best performance in classification (KNN, SVM) and regression (Random Forest regressor) from the set of models tested. The global bar plot (i.e., bars are the mean absolute SHAP values for the whole dataset) was used for global explainability, and the waterfall plot (i.e., SHAP values for a specific dataset instance) was used for local explainability. The features identified as most important for KNN and SVM were in the following order of importance: local development, community involvement, school infrastructure, and teacher experience. The features identified as most important for Random Forest regressor were in the following order of importance: school infrastructure, grade repetition, local development, and teacher experience. The use of different machine learning models verified the importance of three of the four features and provided a different viewpoint on one of the four features (grade repetition or community involvement) and the importance ordering.

In the work of Silva et al. [4], open government data and data from large-scale assessments were used for three purposes: (a) to explore the correlation between student performance and other parameters (e.g., teachers’ education, class size, social well-being, municipality investments), (b) to predict student performance based on past data, and (c) to explore the consistency between the correlation of student performance with other parameters and the analysis of feature importance. They used artificial neural networks, linear regression, and Random Forest for student performance prediction. Feature importance analysis was performed only for the Random Forest, which exhibited the best performance. It should be mentioned that the learning subjects involved are not stated in the paper, but they may be deduced. Student performance prediction is based on data concerning the school achievement rate and national exams. A Web search showed that the specific national exams involve at least language (Portuguese) and mathematics. School achievement depends on all or most of the taught learning subjects.

5. Methodological Quality of the Reviewed Papers

This section discusses the methodological quality of the reviewed studies. One may assess the methodological quality of each study in the following dimensions: (i) study design, (ii) sample size, (iii) data source, and (iv) limitations identified. Table 7 presents relevant data.

Table 7.

Main aspects of the methodological quality of the reviewed studies.

The studies concern varying numbers of participants, ranging from fewer than ten to hundreds or thousands. There are also studies in which the number of participants is not mentioned or details are missing, as well as studies in which there were no participants to evaluate the approach.

As far as the data sources are concerned, one may note that most studies relying on available datasets mainly used publicly available datasets. This makes it easier for other researchers to conduct research with other methods and compare the results. In some cases, datasets created in the context of studies were made publicly available, also facilitating further research. In one study, a proprietary dataset was used.

The main limitations indicated concerned missing details (e.g., about the participants and their number, implementation details). Limitations concerning the evaluation were also indicated. There are studies lacking an evaluation with humans, or this evaluation is not thorough. A further limitation concerned the selection of features while implementing the AI models.

6. Discussion

A number of studies concerning XAI in primary education have been presented. XAI has shown its usefulness in different contexts. Teachers and parents of students may have some reservations about the use of AI in educational settings. However, some of these reservations may be overcome with the help of XAI. The following sections discuss various aspects of the reviewed studies.

6.1. Answers to the Research Questions

In Section 2, seven research questions were mentioned, guiding the preparation of this review. Answers to these questions have been given in previous parts of this paper. In this section, we mention previous parts providing the answers, and we also discuss further aspects.

Research Question 1 involves the main categories in which XAI approaches in primary education may be discerned. Three main categories are described in Section 3 (text, Figure 1, Table 2 and Table 3). The relevant studies are presented in Section 4, according to the category to which they belong.

Most studies belong to category A or C. Relatively fewer studies belong to category B. There are a number of reasons for this. The teaching of AI to young students is a more recent development compared to the use of AI to assist in teaching, learning, policymaking, decision support, and administrative tasks at the specific educational level. Furthermore, it is more straightforward to implement approaches belonging to category A or C. Approaches belonging to category B require careful design and the establishment of the corresponding curriculum. According to Chiu [18], there does not seem to be a consensus among researchers about what needs to be taught concerning AI and how it should be taught to children. A further comment is that school teachers and students are obviously not experts in AI. This means that they need to interact with learning content and tools that they comprehend. This is especially the case for students, due to their young age. During the last few decades, experience in teaching AI in higher education has accumulated based on relevant learning outcomes, strategies, content, and tools. A corresponding process is required for primary schools (that is, gaining experience in teaching AI to young students according to learning outcomes and strategies, and using appropriate content and tools that need to be prepared).

Research Question 2 was as follows: “What are the main trends of the XAI approaches in primary education?” Answers to this question are given in Section 3 and Section 4.

Research Question 3 was as follows: “What are the main XAI tools or methods used in the XAI approaches in primary education?” Table 3 summarizes the XAI tools or methods used. In Section 3, the general functionality of the main XAI tools is outlined. In Section 4, it is explained how XAI tools or methods are used in the context of the reviewed studies. The relevant aspects are outlined in Table 4, Table 5 and Table 6.

Research Question 4 was as follows: “In which primary education learning subjects are the XAI approaches used?” In Section 4, learning subjects are mentioned for the reviewed studies, and they are outlined in Table 4, Table 5 and Table 6. These aspects are summarized in Table 8, which outlines the studies per learning subject. The studies involving a specific teaching subject explicitly mentioned are shown in Table 8, along with the two studies concerning general skills (i.e., self-regulated skills). The learning subjects are listed in alphabetical order in the table. The reviewed approaches deal with various learning subjects in primary education, including studies in all categories. It is useful to apply XAI in various learning subjects and examine the derived benefits.

Table 8. Studies per learning subject.

Note that not all reviewed studies involve a specific learning subject. These studies are those of Lee [7] and Kammüller and Satija [24], which concern the prediction of the supply and demand of teachers by region and the prediction of student attendance, respectively. Therefore, they may not be associated with a specific learning subject. Furthermore, in the work of Kar et al. [25] analyzing survey data, no learning subject is mentioned in the dataset.

Furthermore, there are studies involving a combination of learning subjects [4,27,29,33]. The study of Silva et al. [4] concerns a combination of learning subjects because student performance was predicted using data from a large-scale educational assessment within Brazil. The assessment concerned the school achievement rate, which depends on all (or most) of the teaching subjects, and national exams, which involve at least language (Portuguese) and mathematics. For these reasons, the study of Silva et al. [4] is mentioned in the subjects of language and mathematics in Table 8. There is also a separate row in the table mentioning that Silva et al. [4] are associated with all or most of the learning subjects.

One may note that there is a tendency toward using XAI in mathematics. More specifically, eleven of the twenty studies in Table 8 concern mathematics. This may be attributed to the difficulties that certain students face in mathematics specifically. Learning tools and analysis of math learning have attracted the interest of researchers. Examples of interactive learning tools for mathematics are presented in several studies [26,33,35]. Kim et al. [26] considered XAI in dynamically assisting in interactive learning activities. The other four approaches [28,33,35,38] involve XAI in the analysis of data stored in learning tools to provide offline assistance to teachers. Onishi et al. [31,32] also considered offline assistance of XAI to teachers (i.e., analysis of classroom dialogues). Mathematics is also a subject of large-scale assessments in education. Examples of relevant approaches include the works of Miao et al. [29], Nadaf et al. [5], and Sanfo [6]. Some of the reviewed studies have shown the interrelation of mathematics and language; that is, the knowledge level in language affects math learning.

XAI in second-language learning seems to be a direction followed by certain approaches. Table 8 lists six relevant studies among the reviewed papers. Most countries are multilingual, and second-language learning is important for communication among their inhabitants. There are also countries in which the official language taught in schools differs from the first language of a percentage of the population. In addition, there are examples of countries (e.g., the UAE) in which the curriculum is taught in English [29], which differs from the first language of the country’s population. XAI has shown the ability to provide solutions in different scenarios involving second-language learning.

Research Question 5 was as follows: “For which AI methods were XAI tools or methods used to provide explanations in XAI approaches in primary education?” The specific AI methods are outlined in Table 3. Section 4 discusses which AI methods were used in the studies, along with their functionality. Table 4, Table 5 and Table 6 outline the AI methods used and their functionality. In some studies, multiple AI methods were used. In these cases, it is explained in Section 4 whether XAI tasks were implemented for all of these multiple AI methods or for specific ones of them. This is also shown in Table 4, Table 5 and Table 6.

Research Question 6 was as follows: “Were single XAI tools or methods or a combination of them more preferred in XAI approaches in primary education?” The answer to this question is given in Table 3, which outlines the XAI method(s) or tool(s) used in each study. Section 4 analyzes the tasks implemented by XAI, and relevant aspects are outlined in Table 4, Table 5 and Table 6. Based on these, it can be seen that most of the studies used a single XAI tool or method. Few studies explored the combination of multiple XAI tools or methods. Indicative examples include the studies of Kobusingye et al. [27] and Lee [7].

Nevertheless, the combination of different XAI tools or methods may provide advantages. In fact, this research question stems from the first author’s previous work on the combination of intelligent methods (i.e., neuro-symbolic approaches [60] and other types of combinations [61]), which offers benefits by exploiting the advantages of the combined methods. Specifically, the combination of different XAI tools or methods may provide a variety of explanatory viewpoints. A typical example is the work of Lee [7], which combined the explanations provided by feature importance, partial dependence plots, and SHAP. The information provided by feature importance may also be provided by SHAP with the global bar plot. However, the information provided by partial dependence plots about how changes in the value of a feature affect the output value may be usefully blended with the information provided by SHAP plots. In the work of Kobusingye et al. [27], SHAP and BertViz were combined, providing different explanatory viewpoints once again. SHAP plots showed the contribution of input text features, and BertViz visualized the attention mechanism of the transformer models. In Kar et al. [25], LIME, SHAP, and FAMeX were used to compare their results in the identification of features contributing most to the output.
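To illustrate what a partial dependence plot summarizes, the following minimal Python sketch computes a partial dependence curve by hand. The predictor `model`, the feature names, and the data rows are hypothetical and are not taken from Lee [7] or any other reviewed study; a real analysis would use a trained model and a dedicated library.

```python
from statistics import mean

def model(row):
    """Hypothetical score predictor standing in for any trained model."""
    # Assumed toy relationship: attendance helps linearly,
    # and class sizes above 25 reduce the predicted score.
    return 50.0 + 0.4 * row["attendance"] - 1.5 * max(0, row["class_size"] - 25)

def partial_dependence(predict, rows, feature, grid):
    """Average prediction over all rows when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        preds = [predict({**row, feature: value}) for row in rows]
        curve.append((value, mean(preds)))
    return curve

# Hypothetical dataset of three schools.
rows = [
    {"attendance": 60, "class_size": 20},
    {"attendance": 80, "class_size": 30},
    {"attendance": 95, "class_size": 35},
]

pd_curve = partial_dependence(model, rows, "class_size", [20, 25, 30, 35])
for value, avg in pd_curve:
    print(f"class_size={value}: mean prediction {avg:.2f}")
```

In this toy setting, the curve is flat up to a class size of 25 and then declines, which is the kind of feature-to-output relationship that a partial dependence plot makes visible and that can be read alongside per-feature SHAP contributions.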

Research Question 7 was as follows: “Taking into consideration the XAI tool or method mostly used in XAI approaches in primary education, which of the main functionalities offered were exploited?” As shown in Table 3 and already mentioned in Section 3, SHAP is used more frequently than other XAI tools or methods. The functionality of SHAP used in these studies involves, among others, the following:

- (a) Calculation of SHAP values. This is performed in all studies using SHAP.

- (b) Generation of various types of plots (Table 9).

- (c) Depiction of feature contribution in producing the outputs. This is done in all studies using SHAP. SHAP values and the generated plots were used for this purpose.

- (d) Feature selection in order to improve the performance of the tested AI methods. This was done in the work of Gao et al. [23].

- (e) Provision of local and global explanations. Global explanations are provided with the global bar, beeswarm summary, collective force, and scatter plots. Local explanations are provided with waterfall and (individual) force plots, and to a certain degree with the beeswarm summary plots. Local explanations are also considered to be the online feedback to teachers and students provided in the study of Kim et al. [26].

Table 9. Types of SHAP plots mentioned in the reviewed studies.

An interesting aspect is to explore the reasons for which SHAP was the most used tool in the studies. A major reason for this is that SHAP conveniently generates various types of plots that may be interpreted. Another reason is the fact that SHAP provides both global and local explanations. In education, it is necessary to examine both types of explanations in order to explore the overall tendencies and parameters affecting specific outputs. For instance, it is useful to examine features affecting a specific decision for a specific student in a specific learning situation. However, it is also useful to examine how features generally affect all students or specific categories of students. The examination of features affecting specific categories of students yielded useful insights in certain studies.
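The notion of a local explanation can be made concrete with a minimal Python sketch that computes exact Shapley values for a single student. Everything in it is an assumption made for illustration: the predictor `model`, the feature names, and the values are hypothetical, and a single reference row is used as the baseline, which simplifies how the SHAP library averages over a background dataset.

```python
from itertools import combinations
from math import factorial

def model(row):
    """Hypothetical score predictor with an attendance-homework interaction."""
    return (40.0 + 0.3 * row["attendance"] + 4.0 * row["homework"]
            + 2.0 * row["reading"] + 0.05 * row["attendance"] * row["homework"])

def shapley_values(predict, instance, background):
    """Exact Shapley values for one instance relative to a reference row."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        # Features in the coalition take the instance's value;
        # all other features take the reference value.
        row = {f: (instance[f] if f in coalition else background[f]) for f in features}
        return predict(row)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

# Hypothetical reference student and the student being explained.
background = {"attendance": 80, "homework": 3, "reading": 5}
student = {"attendance": 60, "homework": 1, "reading": 7}

phi = shapley_values(model, student, background)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.2f}")
```

The contributions sum to the difference between the prediction for this student and the prediction for the reference row, which is the additivity property that waterfall and force plots visualize. Exact enumeration grows exponentially with the number of features, so it is practical only for small examples such as this one.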

As mentioned in Section 4, different types of SHAP plots were found to be useful in the studies. Table 9 depicts the types of SHAP plots mentioned in the reviewed studies. It should be noted that the contents of this table are an indication of researchers’ tendencies in the types of SHAP plots that they use. However, it is possible that further types of SHAP plots were used in certain studies but were not mentioned in the corresponding papers (e.g., due to space limitations in the presentation of the work). One can note that the beeswarm summary plot is the type of plot used in most studies. This specific type of plot combines local and global explanations, as it shows results for every dataset instance. The second most used type of plot is the global bar plot. This plot provides a convenient global explanation (similar to feature importance) by ordering the input features according to their mean absolute SHAP values in the whole dataset. The (individual) force and scatter plots share the position of the third most used type of plot. Scatter plots are useful to show dependencies. Individual force plots conveniently provide local explanations, showing the magnitude of the contribution of each feature.
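The ordering behind the global bar plot can be sketched in a few lines of Python. The per-student SHAP values below are hypothetical numbers chosen for illustration, and the text-based bars only stand in for the plot that the SHAP library would draw.

```python
from statistics import mean

# Hypothetical per-student SHAP values (one dict per student).
shap_rows = [
    {"attendance": -8.0, "homework": -15.0, "reading": 4.0},
    {"attendance": 3.0, "homework": 6.0, "reading": -1.0},
    {"attendance": 5.0, "homework": -2.0, "reading": 0.5},
]

def global_importance(rows):
    """Rank features by their mean absolute SHAP value over all instances."""
    features = rows[0].keys()
    scores = {f: mean(abs(r[f]) for r in rows) for f in features}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

ranking = global_importance(shap_rows)
for feature, score in ranking:
    # One '#' per unit of mean |SHAP|, as a rough stand-in for a bar.
    print(f"{feature:<12}{'#' * round(score)} {score:.2f}")
```

Taking absolute values before averaging matters here: positive and negative contributions for different students would otherwise cancel out, and a feature with a strong but mixed effect could appear unimportant.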

6.2. Further Issues

There seems to be a tendency toward using XAI to analyze results derived from large-scale educational assessments. The large amount of derived data enables the application of various AI methods. The analysis of the results may be conducted by the organizations or institutions that perform these assessments. However, individual researchers or groups of researchers may also analyze the results, because the relevant datasets are publicly available. The availability of these large datasets creates opportunities to test various AI (and specifically XAI) methods.

A few studies concerned rural regions [27,30], underprivileged regions [30], or regions with limited resources [30]. An interesting aspect of AI-based learning is the implementation of approaches that handle difficulties in such regions and provide solutions to certain problems that education faces. Such approaches demonstrate the social role that AI (and specifically XAI) may play in providing equal opportunities for learning.

The approach discussed by Alonso [22] may provide ideas about how to integrate XAI as a learning subject. The teaching of XAI concepts in combination with other subjects, such as programming, may prove beneficial. Another useful conclusion deriving from this work is the incorporation of XAI in popular learning tools used in education, adjusting the presentation of explanations in order to make them comprehensible to children. Comprehensible AI models may also assist in the generation of adjusted explanations.

It should be mentioned that interactions between humans and AI systems affect the users’ level of trust in AI. In [62], it was demonstrated that VIRTSI (Variability and Impact of Reciprocal Trust States towards Intelligent Systems) could effectively simulate trust transition in human–AI interactions. The model’s ability to represent and adapt to individual trust dynamics suggests its potential for improving the design of AI systems that are more responsive to user trust levels. This approach captures how users’ trust levels evolve in response to AI-generated outputs. As XAI increases trust in AI systems, it would be useful to employ ideas from VIRTSI in order to assess the trust transition in human–XAI interactions in primary education.

An interesting aspect is to obtain measurable data about the impact of XAI on educational outcomes. For instance, these outcomes could involve reductions in workload, improvements in education due to policy changes, and improved student performance. Such data are generally not yet available, and this represents a research gap. Information derived through XAI could not have been obtained otherwise, so it is useful to assess the impact of having such information.

This research has the following limitations. First, research items not authored in English were excluded. This is typical in reviews, for practical reasons, but it may exclude insightful work. Second, research items that were generally not accessible or were not accessible through our institution’s infrastructure during the time period of the search were excluded. This is also typical in reviews, but there is always a possibility that relevant work may be excluded. Third, the term “XAI” has come into common use in research only recently, following the increased interest in the topic. It is possible that certain research carried out in the context of XAI is not explicitly identified as such by the corresponding researchers. Fourth, the search was carried out using two tools (i.e., Scopus and Google Scholar). Further tools could be used to perform the search, such as Web of Science, which is not accessible to members of our institution because the corresponding subscription is unavailable.

7. Conclusions and Future Directions

This paper reviewed research integrating XAI in primary education. XAI holds considerable potential to transform education by making AI-driven decisions transparent, fair, and easier to trust. By helping educators and students understand how AI tools produce their output, XAI fosters greater confidence and enables more effective learning experiences. The categorization scheme proposed here discerns three main types of XAI approaches in primary education. It provides a structured and simple framework through which the application of XAI in primary education can be understood. This framework not only highlights the diverse roles that XAI can play in educational contexts but also offers a foundation for future research and practice, guiding the development of more transparent, ethical, and effective AI solutions in primary education.

Studies from almost all continents have been presented here. Fifteen countries were involved in the studies. As the use of AI (and specifically XAI) in society and education increases, it is expected that more studies will be conducted in these countries and beyond. It should be noted that no studies from Oceania (e.g., Australia and New Zealand) were retrieved. It is expected that studies from this region will be conducted in the near future.