Abstract

This paper presents a comprehensive overview of state-of-the-art autonomous forklifts, with a strong emphasis on sensors, object detection and system functionality. It explores how this technology is evolving and where it is likely headed in the near and long term, and it highlights the latest developments in both academic research and industrial applications. Given the critical importance of object detection and recognition in machine vision and autonomous vehicles, this area receives particular attention. The paper summarizes both commercial and prototype forklifts, discussing key aspects such as design features, capabilities and benefits, and offers a detailed technical comparison. To convey the current state of the art and its limitations, the analysis reviews commercially available autonomous forklifts, and all the data reported pertain to these commercial models. Finally, the paper includes a comprehensive bibliography of research in this field.

1. Introduction

This paper reviews the literature on the latest advances in perception and scanning technology for autonomous forklift systems and evaluates the current state-of-the-art techniques in machine vision for autonomous forklifts. It covers object identification, localization, mapping and path planning, emphasizing the significant challenges and recent advances in each area. Emerging technologies such as deep learning, sensor fusion and real-time perception are also discussed in terms of their impact on the capabilities of autonomous forklifts. Autonomous vehicles are currently an active topic, as the field of self-driving is undergoing rapid development that could revolutionize our lives in terms of safety, reliability and efficiency [1]. These vehicles have made transporting and delivering goods to storage locations easier, improving productivity.

Over the last two decades, there have been exceptional and unprecedented trends in the field of self-driving cars. The idea of self-driving cars first appeared in the 1920s, when the possibility of making an autonomous vehicle began to be seriously considered, as explained by Ondru et al. in [2], which also discusses how autonomous vehicles function. The first promising attempts, although not fully autonomous, date from the 1950s, and the first autonomous vehicles emerged in the 1980s through the efforts of Carnegie Mellon University’s Navlab [3]. As the field progressed, multiple prominent companies and research centers became actively involved in its development. The automotive industry is presently advancing sensor-based solutions to enhance vehicle safety. These systems, known as Advanced Driver Assistance Systems (ADASs), utilize an array of sophisticated sensors [4].

This paper reviews different types of sensors, such as Time of Flight (ToF) sensors and LiDARs, to illustrate the principle of sensor use in autonomous vehicles and to equip the reader with the basic knowledge necessary to understand this technology. It also explains the fundamentals of modern advancements in the key features of self-driving technology. Self-driving forklift trucks are employed in warehouses to move goods between different locations. These autonomous vehicles have already had a considerable effect on logistics operations, with most current self-driving forklifts depending on advanced sensors, as well as vision and Geographic Information System (GIS)-based guidance systems. The scope of this paper covers automated forklifts with multi-level load actuation in warehouse racking systems, which require vertical lifting capabilities to operate effectively. It excludes more basic floor-level automated guided vehicles (AGVs) that do not perform vertical lifting into racking, as they serve different operational purposes.

This paper is structured as follows: Section 1 introduces the focus and objectives of the research, providing an overview of the current advancements in autonomous forklifts. Section 2 reviews related work, highlighting key academic contributions and solutions for developing autonomous vehicle prototypes. Section 3 explains the architecture of autonomous vehicle components, detailing their perception, localization, planning and control systems. Section 4 focuses on machine vision and object detection techniques, emphasizing the models used in autonomous forklifts and deep learning-based detection methods. Section 5 examines industry applications and major manufacturers of autonomous forklifts. Finally, the discussion and conclusions are presented in Sections 6 and 7, respectively.

Methodology and Criteria

To ensure a comprehensive and unbiased analysis, the following approach was used to select relevant research papers. The search was conducted using established academic indexing databases, including Scopus, IEEE Xplore, SpringerLink, ACM Digital Library and ScienceDirect, focusing on keywords such as “Autonomous Forklifts”, “Machine Vision”, “Perception Technology”, “Scanning Systems”, “Object Detection” and “Forklift Automation”. In addition, technical reports and white papers from official company websites indexed by these platforms were included to provide industry insights. Inclusion criteria prioritized peer-reviewed journal articles, conference papers and technical reports. Studies were selected based on their relevance to the main themes of the manuscript, with a particular emphasis on technological advancements, challenges and industry applications in the field of autonomous vehicle perception and scanning systems. Papers lacking sufficient technical detail or falling outside this scope were excluded. This methodology was designed to provide a focused yet comprehensive overview of the current state of the art in this domain.

2. Related Work

Contributions in academia across different domains related to the design and manufacturing of autonomous forklifts have propelled the investigation and advancement of this technology. In this section, the authors provide an overview of such a system’s key building blocks, with the main emphasis on sensing and ranging technology.

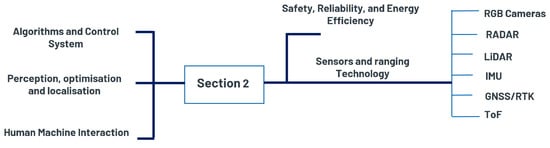

This section is summarized in Figure 1, which highlights the core components discussed in this section.

Figure 1.

Summary of the content of Section 2.

2.1. Algorithms and Control Systems

Researchers, such as the authors of [5,6,7,8,9,10,11,12,13,14,15], have developed novel algorithms and control systems that enable autonomous forklifts to navigate, plan optimal paths, avoid obstacles and efficiently perform material-handling tasks. The work in [5] describes the creation of a self-driving forklift, outlining “a system configuration using vision, laser ranger finder and sonar”. In addition, ref. [16] focuses on a closed-loop fusion controller for path tracking with industrial forklifts carrying heavy loads at high speeds, taking into account factors such as vehicle stability, safety, slippage and comfort. In [17], Park Song introduced a network-oriented distributed method in which system elements are linked to a “shared CAN network” and “control functions” are subdivided into smaller operations that are spread across multiple microcontrollers with limited computational capability.

The article in [10] introduces the omnidirectional setup and control method for the “Mini Heavy Loaded Forklift Autonomous Guided Vehicle (AGV)”, designed for adaptability and agility in tight and confined spaces. Marcuzzi et al. in [18] “focused on enhancing current (AGV) flexibility in non-structured environments”. Their method addresses the problem of recognizing a pallet whose position is uncertain by combining laser range and visual data, and it generates and executes smooth paths in real time to reach the target accurately. An important study by Scheiner in [19] thoroughly examined both existing and cutting-edge radar-based object detection algorithms, including some of the most promising approaches from the image and LiDAR domains. All tests were performed with a conventional automotive radar, and significant attention is given to the point cloud pre-processing that every technique requires. Experimental prototypes were described as early as 2010 in [20], which outlined a multimodal framework for interacting with an autonomous robotic forklift. The robot used audible and visual communication to express its current state and planned maneuvers. The system also enables a seamless shift to manual control: when a person enters the forklift’s enclosure, they can take over its operation, and the forklift remains in manual mode until the person leaves. The authors of [21] present an analysis based on signal temporal logic (STL), a rigorous and unambiguous language for specifying requirements. The proposed framework evaluates test cases against the STL formulas and utilizes “the requirements to automatically detect test cases that do not meet the requirements”. Additionally, in [22], the authors introduced an integrated dual-sensor architecture, combining a laser and a camera, and a novel algorithm named RLPF as a solution to the problem of recognizing and localizing a pallet.

A group of researchers in [23] proposed a system that identifies a pallet in an image using deep learning-based object detection and combines the detection result with measurements from a horizontal 3D light detection and ranging (LiDAR) sensor. In this work, carried out in Japan, the system precisely calculates the gap between the forklift and the pallet in an outdoor area, including the distance as well as the horizontal and vertical offsets. Additionally, “the vertical LiDAR scans the pallet” to ensure accurate adjustment of the fork’s height. The forklift is operated using robust Sliding Mode Control (SMC), which handles disturbances effectively, and the system was tested in real agricultural environments. Ulrich Behrje et al. in [24] introduced an automated guided vehicle (AGV) built on a forklift platform. Although centralized transportation systems are commonly utilized in the industry, these systems are costly to establish and lack adaptability when it comes to scheduling modifications. This forklift utilizes a 3D ToF camera as its navigation sensor and operates without the need for artificial visual markers. Furthermore, in [25], the authors proposed “a novel semifragile data hiding technique for real-time sensor data integrity verification and tamper detection and localization”. In particular, “the proposed data hiding technique utilizes 3-dimensional quantization index modulation (QIM) to insert a binary watermark into the LiDAR data at the time of data acquisition”. Lastly, in the study by L. Lynch et al. [26], the authors reviewed AGVs, their navigation technologies and integrated sensors.
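To make the quantization index modulation idea mentioned for [25] more concrete, the following is a minimal sketch of scalar (one-dimensional) QIM applied to individual range values. It is not the 3-dimensional scheme of [25]; the function names, the step size and the sample ranges are illustrative assumptions.

```python
def qim_embed(value: float, bit: int, step: float) -> float:
    """Quantize `value` onto the lattice selected by `bit` (0 or 1).

    Bit 0 uses multiples of `step`; bit 1 uses the same lattice shifted
    by half a step, so the two lattices interleave.
    """
    offset = bit * step / 2.0
    return step * round((value - offset) / step) + offset


def qim_extract(value: float, step: float) -> int:
    """Return the bit whose lattice lies closest to `value`."""
    errors = [abs(value - qim_embed(value, bit, step)) for bit in (0, 1)]
    return 0 if errors[0] <= errors[1] else 1


# Hypothetical LiDAR ranges in metres and a hypothetical 4-bit watermark.
ranges_m = [12.347, 3.902, 7.551, 20.118]
watermark = [1, 0, 1, 1]
step_m = 0.02  # assumed quantization step; bounds the distortion to step/2

marked = [qim_embed(r, b, step_m) for r, b in zip(ranges_m, watermark)]
recovered = [qim_extract(r, step_m) for r in marked]
```

In this sketch a perturbation well below a quarter of the step leaves the extracted bit unchanged, while a larger modification flips it. This gives a rough intuition for why such a watermark can be called semifragile.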

2.2. Perception, Optimization and Localization

Autonomous forklifts rely on accurate perception and sensing capabilities to understand their environment. Scientific contributions in this area, such as [19,27,28,29,30], focus on improving object detection. Other important articles address further challenges in the autonomous vehicle domain: refs. [22,31,32,33] discuss localization; refs. [34,35] cover mapping; and refs. [36,37] explore sensor fusion techniques. Such work may include developing new sensor technologies, designing robust perception algorithms and addressing challenges related to occlusions, dynamic environments and varying lighting conditions. Articles such as [38] have also contributed to this domain of perception and sensing. Additionally, in [39], an important survey investigated the perception and sensing capabilities of autonomous vehicles in challenging weather conditions.

In scenarios where multiple autonomous forklifts work together, scientific contributions focus on optimizing coordination and task allocation. Research such as that by Krug et al. in [40] explores the predominant challenges linked to autonomous order picking systems in warehouses, shedding light on the constraints in speed, safety and accuracy.

In [41], the authors designed routing systems for intelligent warehouses, where the router addresses real-world operational challenges by ensuring that operations are conflict-free. It handles traffic congestion, creates optimized trajectories for autonomous forklifts, and dynamically adjusts priorities and routes to prevent collisions. In [42], the authors formulated a deep learning approach for task selection within a warehouse setting for a fleet of autonomous vehicles. Moreover, the study in [43] explores an optimization challenge within the inbound warehouse process, focusing on the coordination of multiple resources in a specific type of automated warehouse system. As these examples show, the contributions in this field encompass a wide range of approaches, involving the formulation of algorithms and techniques for tasks such as scheduling, load balancing and resource allocation. These efforts aim to enhance overall effectiveness and productivity.

Additional research on autonomous vehicles (AVs) can be found in [44], which provides an extensive examination of cutting-edge techniques for improving the performance of AV systems in short-range or local vehicle environments. It concentrates on recent studies that employ deep learning sensor fusion algorithms for perception, localization and mapping.

Additionally, notable studies such as [22,45] have concentrated on the localization and picking aspects of industrial forklifts. These works emphasize the development of robust range-based and visual methods that allow the forklift to comprehend its surroundings.

2.3. Human–Machine Interaction (HMI)

Research in this area enhances the interaction between autonomous forklifts and human operators or workers in shared environments. The most prominent and most cited work in this domain is [46], which presents a Human–Machine Interface system that integrates adaptive visual cues to improve the teleoperation of industrial vehicles such as forklifts. This system evaluates the teleoperation context or work state and then presents the most appropriate visual cues on the HMI display.

J. Y. Chew et al. developed a virtual environment in [47] by implementing Human–Machine Interaction in a forklift operation. K. P. Divakarla et al. in [48] provided a detailed overview of key aspects related to autonomous vehicles. In [49], E. Cardarelli et al. presented an innovative Human–Machine Interface designed to enable user interaction with a fleet of automated guided vehicles (AGVs) utilized in industrial logistics operations.

The research in [50] investigates the influence of AGVs combined with the Internet of Things on flexible manufacturing systems. Victoria Friebel, in [51], explored user perceptions of, and usability criteria for, HMI with autonomous vehicles. In [52], a newly developed method for global and behavioral planning for automated forklifts is presented. In [53], the authors concentrated on the effects of autonomous transportation while considering human preferences. Work in this domain may involve designing intuitive interfaces, safety protocols and communication systems to ensure effective collaboration and reduce the risk of accidents.

2.4. Safety, Reliability and Energy Efficiency

Autonomous forklifts operate in dynamic and safety-critical environments, and scientific contributions in this area aim to improve their safety and reliability. The authors of [54] presented a detailed description of safety technologies, together with the outcomes of an experiment assessing how workers and autonomous systems interacted. In [55], the authors showcased a system design that utilizes infrastructure sensors to mitigate human–robot collisions at blind corners, enhancing the safety of automated forklifts.

The paper [56] discusses the key hardware and software components and details experiments highlighting the performance of “the Hot Metal Carrier” (HMC) amidst dynamic objects in a typical worksite. Its focus is on developing fail-safe mechanisms, real-time monitoring systems and advanced planning algorithms that prioritize safety considerations while ensuring efficient operations.

With a growing emphasis on sustainability, scientific contributions in this area aim to optimize the energy consumption of autonomous forklifts. Researchers and scholars are engaged in creating energy-saving navigation algorithms, smart power management systems and the integration of renewable energy sources to diminish ecological consequences and operational expenses.

Many researchers have contributed to this domain. Lam et al. [57] systematically examine two types of cargo-handling equipment, automated guided vehicles and forklifts, within container ports, assessing their economic impact, power consumption and environmental implications. The study by Yasanur Kayikci in [58] emphasizes the advantages of digitizing logistics processes and investigates the sustainability implications of such digitization. It adopts a qualitative approach, employing a single case study focused on Fast-Moving Consumer Goods (FMCG) companies and their transport service providers in Turkey.

In [59], Li Xie describes a study that involved creating a robotic platform, establishing an autonomous navigation control system and developing a verified energy consumption model. This doctoral research aimed to enhance the energy efficiency of the Ilon Mecanum wheel. The paper [60] discusses the need for improved energy efficiency in ports and terminals due to rising energy costs and environmental concerns. It also identifies research gaps and proposes future directions for research in this area.

2.5. Sensors and Ranging Technology

Sensors that use lasers to perform non-contact distance measurements have become an integral part of automation engineering.

In [36], Kocić et al. explore sensors and their fusion in autonomous vehicles, with a particular emphasis on “integrating essential sensors like cameras, radar, and LiDAR”. The study demonstrates that incorporating additional sensors into the fusion system enhances performance and solution robustness. Different simulators that are commonly used to simulate various sensor payloads for autonomous vehicles are summarized in Table 1.

Table 1.

Different simulators and their sensor support for autonomous vehicles.

Table 2 presents a detailed comparison of various types of sensors, highlighting their respective advantages and disadvantages.

Table 2.

Sensor comparison.

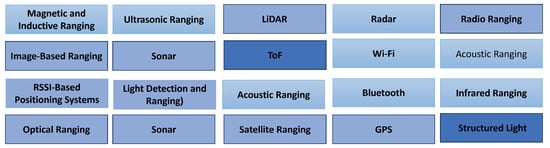

Numerous ranging technologies exist, each defined by its own principles and practical uses. Some typical ranging technologies are shown in Figure 2.

Figure 2.

Different types of ranging technologies.

Table 3 outlines the most prevalent sensors used in perception systems.

Table 3.

Specifications of commonly used sensors in perception systems.

- Frame rate: Indicates the rate at which the sensor captures and processes data per second.

- Size: Refers to the physical dimensions of the sensor, typically described as small, compact, or medium to large.

- Visibility: Indicates the sensor’s performance under different visibility conditions, such as good visibility, which means the sensor performs well regardless of lighting or environmental conditions.

- Field of View: Describes the extent of the scene that the sensor can capture, ranging from wide (covering a large area) to narrow (focusing on a specific region).

- Resolution: Refers to the level of detail captured by the sensor, described as low (less detailed) or high (more detailed).

- Weather Effects: Represents the impact of adverse weather conditions on the sensor’s performance and functionality, with minimal meaning the sensor is relatively unaffected.

- Range: Specifies the maximum distance at which the sensor can effectively detect objects or gather data, varying from short to medium or long distances.

Table 4 compares the sensor technologies used in the manufacturing and design of autonomous forklifts, emphasizing their components, applications and accuracy.

Table 4.

Comparison of sensor technologies for autonomous forklift manufacturing and design.

2.5.1. RGB Cameras

Cameras are of two types, visible (VIS) and infrared (IR). In both, an imaging sensor captures the image, usually “either a charge-coupled device (CCD) or complementary metal oxide semiconductor (CMOS)”, as identified by [61].

High precision and low noise are two of the distinguishing characteristics of CCD image sensors, which are produced through a complex and costly manufacturing process. CMOS devices, by contrast, consume less power than CCDs. For companies today, using video cameras as sensors to detect the environment around self-driving forklifts is one of the most promising options. Compared to radar or LiDAR, cameras are more affordable, easy to use and highly efficient at classifying textures.

2.5.2. Radar

Radar stands for Radio Detection and Ranging. Radar is a “sensor integrated into vehicles for different purposes like adaptive cruise control, blind spot warning, collision warning and collision avoidance”, as defined by [36].

Radar uses radio waves to detect the presence and location of objects around the forklift, even in adverse weather conditions or low-visibility environments. It is particularly effective for detecting objects that might not be visible to cameras or LiDAR.

Oculii is a company at the forefront of AI-powered radar perception software. It has unveiled FALCON and EAGLE, commercially available 4D imaging radar sensors (Figure 3), described as having the highest resolution in the industry.

Figure 3.

Oculii imaging 4D radars.

2.5.3. LiDAR

LiDAR (Light Detection and Ranging) is a technology that uses an infrared laser beam to calculate the distance between the sensor and nearby objects. In many modern LiDAR systems, a rotating mechanism sweeps the laser beam across the field of view. The lasers emit pulses, and these pulses bounce off objects. The resulting reflections generate a collection of data points, known as a point cloud, which accurately represents these objects in digital form. Santiago Royo and Maria Ballesta-Garcia affirmed in [62] that LiDAR imaging systems are currently one of the most popular subjects in the optronics sector, as they will be necessary for perceiving the surroundings of any self-driving vehicle. Their paper also summarizes the characteristics of the light sources and photodetectors most commonly employed in real-world LiDAR imaging systems. Lastly, the authors discussed unresolved issues for the advancement of LiDAR in autonomous vehicles.
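As a minimal sketch of how the returns of a rotating scanner become a point cloud, the following converts one planar scan from polar form (beam angle and range) into Cartesian points in the sensor frame. The function name, the parameter names and the sample values are illustrative assumptions and are not taken from any specific sensor driver.

```python
import math


def scan_to_points(ranges_m, angle_min_rad, angle_increment_rad,
                   max_range_m=float("inf")):
    """Convert a planar scan to (x, y) points in the sensor frame.

    Beam i is assumed to point at angle_min_rad + i * angle_increment_rad.
    Returns that are non-finite, non-positive or beyond max_range_m are
    treated as "no echo" and skipped.
    """
    points = []
    for i, r in enumerate(ranges_m):
        if not math.isfinite(r) or r <= 0.0 or r > max_range_m:
            continue
        theta = angle_min_rad + i * angle_increment_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Three beams at 0, 90 and 180 degrees; the last beam has no echo.
cloud = scan_to_points([1.0, 2.0, float("inf")], 0.0, math.pi / 2)
```

A 3D point cloud follows the same idea with an additional elevation angle per beam.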

Figure 4 shows a SICK TiM-series laser scanner, an example of a 2D LiDAR sensor.

Figure 4.

TiM 150 Series Laser Scanner LiDAR Sensor.

The operating principle of LiDAR imaging is based on time measurement: depth is obtained by measuring time delays in the light emitted by the source and reflected back from the object. Therefore, “LiDAR is an active non-contact range detection technology, in which an optical signal is projected onto an object” as stated in [62]. Table 5 displays the most well-known LiDAR manufacturers.

Table 5.

The leading LiDAR manufacturing companies.
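The time-delay principle described in this subsection can be written as d = c·t/2 for a pulsed sensor, where t is the round-trip time of the light and c is the speed of light. The following minimal sketch applies this relation; the function names and the example timings are illustrative assumptions.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_range_m(round_trip_time_s: float) -> float:
    """Distance to the target from the round-trip time of a light pulse."""
    if round_trip_time_s < 0.0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def range_resolution_m(timing_resolution_s: float) -> float:
    """Smallest range difference resolvable for a given timing resolution."""
    return SPEED_OF_LIGHT_M_S * timing_resolution_s / 2.0


distance_m = tof_range_m(100e-9)          # a 100 ns round trip
resolution_m = range_resolution_m(1e-9)   # 1 ns timing resolution
```

The second function shows why timing electronics matter: under this relation, a timing resolution of one nanosecond corresponds to roughly 15 cm in range.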

2.5.4. IMU

The Inertial Measurement Unit (IMU) is an electronic device that measures a body’s specific force, angular rate and orientation using a combination of accelerometers, gyroscopes and magnetometers. Autonomous forklifts rely on an IMU to sense their movement, including speed, direction and tilt, which is essential for tasks such as maintaining balance, tracking location and planning smooth paths. IMU sensors, as shown in Figure 5, measure the forklift’s orientation, acceleration and angular velocity. By continuously tracking these parameters, IMUs help in estimating the forklift’s motion, mitigating the effects of vibrations and improving overall navigation accuracy. Combining IMU data with data from other sensors allows the forklift to navigate precisely and efficiently, supporting safe and effective operation in diverse environments.

Figure 5.

(a) IMU—MEMSense; (b) IMU—LORD MicroStrain sensing system.

IMUs are commonly employed in the navigation systems of unmanned aerial vehicles (UAVs), satellites and landers to assist in flight control, attitude and heading reference systems. IMU-enabled GPS devices have been developed recently. An IMU-equipped GPS receiver can operate even when GPS signals are not accessible, such as in tunnels, indoors or when electrical interference is present [63,64].
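One way an IMU can bridge a gap in GPS coverage is dead reckoning, in which the last known pose is propagated by integrating the inertial measurements. The following is a minimal planar sketch under simplifying assumptions (flat floor, forward acceleration and yaw rate only, no sensor bias). The class, function and parameter names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanarState:
    x_m: float
    y_m: float
    heading_rad: float
    speed_m_s: float


def dead_reckon_step(state: PlanarState, forward_accel_m_s2: float,
                     yaw_rate_rad_s: float, dt_s: float) -> PlanarState:
    """Propagate the state by one time step using IMU readings only."""
    heading = state.heading_rad + yaw_rate_rad_s * dt_s
    speed = state.speed_m_s + forward_accel_m_s2 * dt_s
    return PlanarState(
        x_m=state.x_m + speed * math.cos(heading) * dt_s,
        y_m=state.y_m + speed * math.sin(heading) * dt_s,
        heading_rad=heading,
        speed_m_s=speed,
    )


# Last known fix: at the origin, heading along x, moving at 1 m/s.
state = PlanarState(0.0, 0.0, 0.0, 1.0)
for _ in range(10):  # one second without GPS, sampled at 10 Hz
    state = dead_reckon_step(state, 0.0, 0.0, 0.1)
```

Because measurement errors are integrated along with the signal, the estimate drifts over time, which is why the pose is normally corrected again as soon as an absolute position fix becomes available.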

2.5.5. GNSS/RTK

Satellite constellations that offer positioning, navigation and timing (PNT) services worldwide are collectively referred to as the Global Navigation Satellite System (GNSS).

The research conducted by Niels Joubert et al. [65] examines several recent developments in modern GNSSs and their potential impact on autonomous driving architectures.

In [66], Andreas Schütz et al. explore the fusion of GNSS-RTK/INS/LiDAR through a loosely coupled Kalman filter for self-driving car applications. More specifically, the research centers on assessing possible LiDAR enhancements by comparing the speed measurements acquired from the GNSS/INS fusion solution with the LiDAR data.

RTK stands for Real-Time Kinematic. Real-Time Kinematic positioning offers precise navigation for autonomous vehicles at an affordable cost [67].

An article by Miguel Tradacete et al. in [68] introduces a “GPS for a self-driving electric vehicle based on a Real-Time Kinematic Global Navigation Satellite System (RTK-GNSS), and an incremental-encoder odometry system”. Both components are combined into a single system using an Extended Kalman Filter (EKF), achieving accuracy down to the centimetre level. Another study [69], conducted by Ng, Johari et al. (2018), included an experimental and comparative analysis to evaluate the performance of RTK-GPS in three situations: flat and unobstructed terrain, uneven and unobstructed terrain, and uneven and obstructed terrain.
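The idea of fusing odometry with satellite positioning can be illustrated with a deliberately simplified, one-dimensional linear Kalman filter: odometry drives the prediction step and a GNSS position fix drives the correction step. This is a sketch, not the EKF of [68]; the function names, the noise values and the sample data are assumptions.

```python
def kf_predict(x, p, odom_delta, process_var):
    """Move the estimate by the odometry increment and grow its variance."""
    return x + odom_delta, p + process_var


def kf_update(x, p, gnss_position, gnss_var):
    """Correct the estimate with a GNSS fix, weighted by the Kalman gain."""
    gain = p / (p + gnss_var)
    return x + gain * (gnss_position - x), (1.0 - gain) * p


# Hypothetical data: travelled distance per step and GNSS fixes, in metres.
odometry_steps_m = [0.50, 0.52, 0.49]
gnss_fixes_m = [0.48, 1.03, 1.50]

x, p = 0.0, 1.0  # initial position estimate and its variance
for delta, fix in zip(odometry_steps_m, gnss_fixes_m):
    x, p = kf_predict(x, p, delta, process_var=0.01)
    x, p = kf_update(x, p, fix, gnss_var=0.0004)  # about 2 cm std. deviation
```

After each update the variance of the estimate is smaller than the variance of the GNSS fix alone, which is the benefit of combining the two sources. An EKF applies the same predict and update structure to a nonlinear vehicle model by linearizing it at each step.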

Figure 6 shows Trimble GNSS/RTK devices. Trimble offers a range of RTK GNSS receivers, antennas and applications, and its RTK solutions are known for their high accuracy, reliability and robustness.

Figure 6.

Trimble GNSS/RTK.

2.5.6. ToF

ToF stands for Time of Flight. The technology was first developed as ToF measurement; since then, the term ToF has largely been replaced by the more precise term LiDAR, as shown in [70].

These sensors are useful for detecting nearby objects, preventing collisions and ensuring safe operation in confined spaces.

LUCID Vision Labs introduced a camera kit that combines ToF and RGB, based on the Helios2+ Time of Flight (ToF) 3D Camera, as shown in Figure 7.

Figure 7.

ToF/RGB Helios 2+ KIT.

3. Architecture of AV Components and AV Categorization

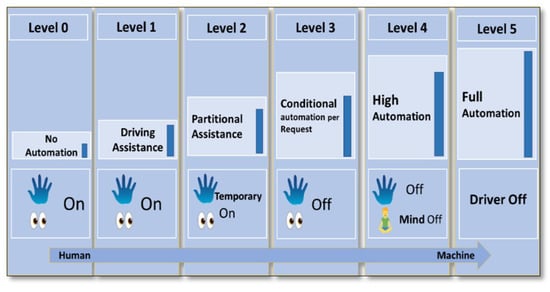

Figure 8 summarizes the key content of this section. Figure 9 illustrates the levels of driving automation and the degree of human intervention required at each level. Autonomous vehicles are categorized into six levels, from 0 to 5, each representing a different degree of self-driving capability.

Figure 8.

Summary of Section 3’s content.

Figure 9.

Levels of automation in AVs—SAE levels.

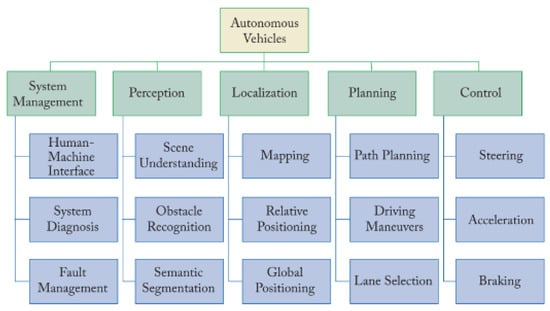

As presented in [1,71] by Kuutti et al., autonomous vehicles usually contain five functional components, as shown in Figure 9:

- Perception;

- Localization;

- Planning;

- Control;

- System management.

Perception senses the vehicle’s surroundings and identifies significant objects such as obstacles. Localization creates a map of the surroundings and accurately determines the position of the vehicle. Planning uses the information from perception and localization to chart the vehicle’s overall actions, encompassing route selection, lane changes and target speed settings. The control module supervises the execution of specific tasks, such as steering, acceleration and braking. Lastly, system management monitors the functioning of all components and offers a user interface for interaction between humans and machines.
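The flow of information between the five components can be sketched as a single processing cycle. The example below is a toy, one-dimensional illustration in which each component is reduced to a few lines. All names, thresholds and gains are hypothetical and do not describe any particular vehicle.

```python
from dataclasses import dataclass


@dataclass
class World:
    obstacle_distance_m: float


@dataclass
class Pose:
    x_m: float


@dataclass
class Command:
    throttle: float
    brake: float


def perceive(range_readings_m) -> World:
    """Perception: reduce raw range readings to the nearest obstacle."""
    return World(obstacle_distance_m=min(range_readings_m))


def localize(previous: Pose, travelled_m: float) -> Pose:
    """Localization: update the position from the distance travelled."""
    return Pose(x_m=previous.x_m + travelled_m)


def plan_speed(world: World, cruise_m_s=1.5, stop_m=1.0, slow_m=3.0) -> float:
    """Planning: pick a target speed that ramps down near obstacles."""
    d = world.obstacle_distance_m
    if d <= stop_m:
        return 0.0
    if d >= slow_m:
        return cruise_m_s
    return cruise_m_s * (d - stop_m) / (slow_m - stop_m)


def control(target_m_s: float, current_m_s: float, gain=0.5) -> Command:
    """Control: proportional throttle or brake toward the target speed."""
    error = target_m_s - current_m_s
    effort = min(1.0, gain * abs(error))
    if error >= 0.0:
        return Command(throttle=effort, brake=0.0)
    return Command(throttle=0.0, brake=effort)


def system_healthy(world: World, command: Command) -> bool:
    """System management: basic plausibility checks on data and outputs."""
    return (world.obstacle_distance_m >= 0.0
            and 0.0 <= command.throttle <= 1.0
            and 0.0 <= command.brake <= 1.0)


def step(range_readings_m, pose, travelled_m, current_m_s):
    """Run one cycle; fall back to full braking if a check fails."""
    world = perceive(range_readings_m)
    pose = localize(pose, travelled_m)
    command = control(plan_speed(world), current_m_s)
    if not system_healthy(world, command):
        command = Command(throttle=0.0, brake=1.0)
    return pose, command


pose, command = step([5.0, 2.0], Pose(0.0), 0.1, current_m_s=1.5)
```

With an obstacle 2 m ahead and the vehicle at cruise speed, the planner halves the target speed and the controller responds with a moderate brake command.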

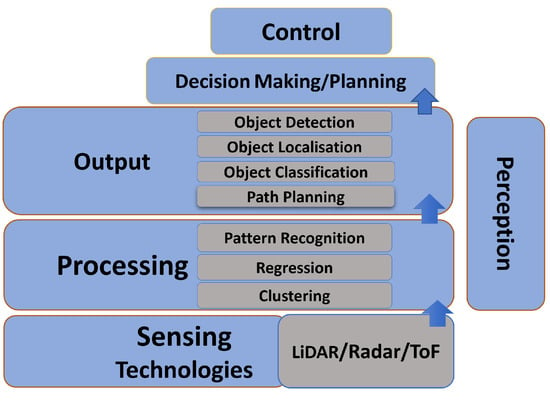

The intricate operation of autonomous vehicles consists of interconnected components, as illustrated in Figure 10.

Figure 10.

Typical autonomous vehicle concept.

The control system serves as the vehicle’s brain. This central intelligence works in tandem with a network of sensors, including cameras, radar and LiDAR, which collectively gather real-time information about the vehicle’s surroundings. These sensory data are then processed by perception algorithms, enabling the vehicle to interpret and comprehend its environment. Following perception, the vehicle’s onboard artificial intelligence undertakes the critical task of planning a course of action based on the assimilated data. This planning process involves decision-making algorithms that analyze factors such as traffic conditions, road obstacles and pedestrian movements. The ultimate goal is to generate a precise sequence of actions that will enable the vehicle to navigate its surroundings with safety and efficiency.

Operating in blended environments demands that autonomous vehicles flawlessly negotiate a diverse range of driving conditions, from bustling city streets to high-speed highways and unpredictable scenarios. To master this adaptability, a fusion of perception and planning is essential, equipping the vehicle to traverse varied landscapes with agility and accuracy.

Vehicle-to-vehicle (V2V) communication is an integral component of the autonomous vehicle revolution, enabling real-time information exchange among vehicles. This interconnectedness fosters enhanced road safety and efficiency. V2V communication facilitates collaborative decision-making, empowering vehicles to anticipate and respond to the actions of their counterparts on the road. For example, if a vehicle detects an obstacle or abrupt braking, it can instantly transmit this information to surrounding vehicles, allowing them to adapt their trajectories accordingly. The seamless interplay between control systems, sensors, perception algorithms, planning strategies, blended environment adaptability and V2V communication lies at the heart of successful autonomous vehicle implementation. This integration enables autonomous vehicles to adapt to dynamically changing surroundings and make informed real-time decisions.
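The obstacle-warning example above can be sketched as a small message exchange. The message layout below is purely hypothetical and does not follow any V2V standard; it only illustrates how a receiving vehicle might decide whether a reported hazard is close enough to require a reaction.

```python
import json
import math
from dataclasses import asdict, dataclass


@dataclass
class HazardMessage:
    sender_id: str
    hazard_type: str
    x_m: float  # hazard position in a shared site frame (assumed)
    y_m: float


def encode(message: HazardMessage) -> str:
    """Serialize the message for transmission."""
    return json.dumps(asdict(message))


def decode(payload: str) -> HazardMessage:
    """Rebuild the message on the receiving vehicle."""
    return HazardMessage(**json.loads(payload))


def should_slow_down(own_x_m: float, own_y_m: float,
                     message: HazardMessage,
                     caution_radius_m: float = 10.0) -> bool:
    """React only to hazards within the caution radius of the receiver."""
    distance = math.hypot(message.x_m - own_x_m, message.y_m - own_y_m)
    return distance <= caution_radius_m


sent = HazardMessage("forklift-07", "obstacle", 3.0, 4.0)
received = decode(encode(sent))
react = should_slow_down(0.0, 0.0, received)  # hazard is 5 m away
```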

3.1. Sensor Fusion and Perception

The integration of multiple sensors stands as a cornerstone in achieving robust perception systems across various technological domains. In fields like autonomous vehicles, robotics and industrial automation, the fusion of sensors such as LiDAR, radar and cameras is essential for understanding the environment.

By combining data from diverse sensors, these systems can compensate for individual sensor limitations, providing a more comprehensive and accurate understanding of the surroundings. This synergy not only improves the reliability of perception but also contributes to heightened safety and efficiency in complex operational scenarios. The seamless integration of multiple sensors represents a key advancement, ushering in a new era of intelligent and adaptive systems across various technological applications. In [72], D. J. Yeong et al. evaluate the functionalities and efficiency of sensors commonly utilized in autonomous vehicles and explore sensor fusion techniques.

The interaction between sensors and a controller in a machine vision system is shown in Figure 11.

Figure 11.

The interaction between sensors and a controller in a machine vision system [73].

Sensors, such as cameras, LiDAR and other specialized devices, capture raw data from the surrounding environment. These data are then relayed to the controller, a central processing unit tasked with interpreting and deciphering the incoming signals. Employing sophisticated algorithms and programming, the controller extracts meaningful insights, facilitating real-time analysis and response. This dynamic exchange between sensors and the controller is crucial in applications like object recognition, tracking and automation.

The fusion of data from cameras, LiDAR and other sensors represents a cutting-edge approach to enhancing perception in various technological domains. By integrating information from diverse sources, fusion techniques create a more comprehensive and accurate understanding of the environment. In applications such as autonomous vehicles, robotics and surveillance systems, this multi-sensor fusion enables a more robust and adaptive perception system. The synergy of camera, LiDAR and other sensor data not only improves object recognition but also enhances overall system reliability, paving the way for more sophisticated and capable technologies.
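One simple way to see how fusion compensates for individual sensor limitations is inverse-variance weighting of independent range estimates. The sketch below is illustrative only: the sensor labels, readings and noise variances are assumptions rather than values from the reviewed systems, and practical systems typically rely on richer estimators such as Kalman filters.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # hypothetical sensor label, e.g. "lidar"
    distance_m: float  # measured distance to the obstacle, in metres
    variance: float    # assumed measurement noise variance, in m^2


def fuse_ranges(readings):
    """Fuse independent range readings by inverse-variance weighting.

    Returns (fused_distance, fused_variance); noisier sensors get less weight.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


# Assumed example: a precise LiDAR return and a noisier camera depth estimate.
readings = [
    RangeReading("lidar", 4.02, 0.01),
    RangeReading("camera", 4.30, 0.09),
]
fused_distance, fused_variance = fuse_ranges(readings)
print(f"fused distance: {fused_distance:.3f} m, variance: {fused_variance:.4f} m^2")
```

The fused variance is smaller than that of either input, which is the formal counterpart of the claim that combined sensors are more reliable than any single one.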

3.2. Real-Time Perception Algorithms and Architectures

As confirmed by [38], real-time perception algorithms and architectures are pivotal components in the realm of computer vision and artificial intelligence. These sophisticated systems enable machines to interpret and understand their surroundings instantaneously, providing a seamless interface between the digital and physical worlds. Whether applied in autonomous vehicles, surveillance systems or augmented reality, real-time perception algorithms harness advanced computational methods to rapidly process vast amounts of visual data. These algorithms, coupled with purpose-built architectures, empower machines to make split-second decisions, enhancing efficiency and responsiveness across diverse applications.

4. Deep Learning-Based Object Detection Techniques for Machine Vision

As widely recognized, machine vision plays a vital role in the overall development and deployment of autonomous vehicles, contributing to various aspects of perception, decision-making and control.

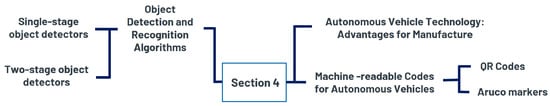

Figure 12 summarizes the highlights of this section. Recent important contributions such as [74,75] often involve advanced techniques such as computer vision, machine learning and sensor fusion. The authors of [76] presented a review of the perception systems and simulators for autonomous vehicles.

Figure 12.

Section 4’s highlights: key points summarized.

There is limited research in the field of autonomous forklifts, as seen in studies such as [77] (2020), [18] (2009), and [20] (2010), which indicate the early stages of research on this technology. Additionally, [11] focuses on vehicles handling wooden pallets, regardless of the type of load.

Moreover, [74] also made a contribution, wherein the vehicle can handle loads of the same size and shape. This underscores the significance of this field.

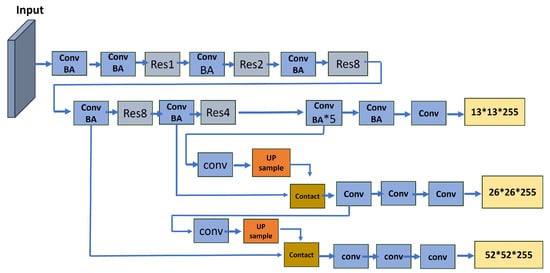

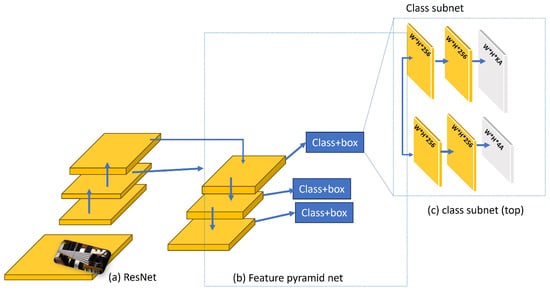

Figure 13 offers a comprehensive overview of the evolutionary landscape of deep learning methods for detection. Another significant advancement in object detection came with the introduction of You Only Look Once (YOLO), a method that divides images into a grid and simultaneously predicts bounding boxes and class probabilities for each region. The Single-Shot Multibox Detector (SSD) further enhanced real-time object detection by more effectively handling objects of varying shapes and sizes. The accompanying figure highlights these advancements, showcasing the remarkable contributions of YOLO, R-CNN and SSD in the continuous evolution of deep learning-based object detection techniques across a wide range of applications.

Figure 13.

Deep learning object detection methods and algorithms.

Deep learning methods have emerged as the cutting-edge approach for object detection, surpassing traditional computer vision techniques. These approaches leverage deep neural networks, specifically convolutional neural networks (CNNs), to autonomously capture and extract complex features from visual data. Object detection techniques based on deep learning not only attain impressive accuracy but also provide improved efficiency and speed. They have made substantial advancements in the realm of computer vision, facilitating diverse applications such as self-driving cars, surveillance systems and image identification.

In this section, we explore some of the key deep learning methods employed in object detection and their contributions to the field. Object detection algorithms can generally be categorized as two-stage or one-stage. Further detail is provided below.

Unfortunately, the precise machine vision model used by each company could not be determined. This is due to several factors:

- Companies often employ a hybrid approach, utilizing various models tailored to the specific tasks and demands of their autonomous forklifts.

- Given the continuous evolution of models, companies may periodically update their systems with newer, enhanced iterations.

- Detailed specifications concerning the models may be deemed proprietary information, thus not publicly disclosed.

Nevertheless, general insights into the typical model categories used in autonomous forklifts can be provided. Object detection is a core function of autonomous forklifts, enabling the recognition and localization of items in their surroundings, such as pallets, obstacles and people.

Prominent models for object detection that can be used for autonomous forklifts include YOLO, Faster R-CNN, Mask R-CNN, EfficientDet, Detection Transformer (DETR) and SSD. There are several popular algorithms and techniques used for object detection, such as convolutional neural networks (CNNs), and region-based convolutional neural networks (R-CNNs). These techniques, along with other variations and advancements, are widely used in the development of computer vision systems for autonomous forklifts.

4.1. Object Detection and Recognition Algorithms

4.1.1. Single-Stage Object Detectors

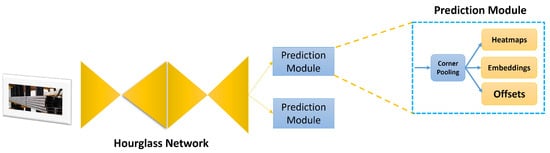

Deep learning-based methods have revolutionized computer vision tasks like object detection. Among these, regression/classification-based techniques such as YOLO, SSD, DETR (Detection Transformer) and CornerNet-2019 have emerged as prominent solutions. CornerNet-2019, shown in Figure 14, is an object detection model that uses a single convolutional neural network to detect each object bounding box as a pair of keypoints, namely its top-left and bottom-right corners. This approach eliminates the need for multiple stages, making CornerNet a one-stage object detection method.

Figure 14.

The architecture of CornerNet (2019), inspired by [78].

The YOLO model is one of the most important algorithms in this field. YOLO, which stands for “You Only Look Once”, is an object detection algorithm commonly used in computer vision and image processing tasks. It was launched in 2016 by Joseph Redmon et al. [79].

As per [80], the mechanism of YOLO is as follows. Each bounding box is predicted from the features of the entire image, and boxes for all classes are predicted simultaneously across the image. The complete image is divided into an S × S grid, and each grid cell predicts B bounding boxes together with a confidence value for each box. The confidence value indicates whether an object is present: a value of zero means that the cell contains no object. When an object is present, the confidence score should equal the intersection over union (IoU) between the predicted bounding box and the real object. Each grid cell also predicts class probabilities. To identify objects within an image, only bounding boxes whose class-specific scores exceed a chosen confidence threshold are retained. Ultralytics presents YOLO-v5 and YOLO-v8, with the latter demonstrating impressive real-time performance.
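The grid assignment and confidence score described above can be sketched as follows. This is a simplified illustration under assumed values (S = 7, a 448 × 448 image, corner-format boxes in pixels, and hypothetical class names); it is not the implementation from [79] or [80].

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def responsible_cell(box, image_w, image_h, s=7):
    """Return (row, col) of the S x S grid cell that contains the box centre."""
    cx = (box[0] + box[2]) / 2.0
    cy = (box[1] + box[3]) / 2.0
    col = min(int(cx / image_w * s), s - 1)
    row = min(int(cy / image_h * s), s - 1)
    return row, col


def class_scores(box_confidence, class_probs):
    """Class-specific score: box confidence times conditional class probability."""
    return {name: box_confidence * p for name, p in class_probs.items()}


def keep_detections(scores, threshold=0.5):
    """Retain only the classes whose score exceeds the confidence threshold."""
    return {name: s for name, s in scores.items() if s > threshold}


truth = (100, 100, 300, 300)      # assumed ground-truth box
predicted = (120, 110, 310, 290)  # assumed predicted box

cell = responsible_cell(truth, 448, 448)
confidence = iou(truth, predicted)  # ideal confidence when an object is present
scores = class_scores(confidence, {"pallet": 0.9, "person": 0.1})  # hypothetical classes
kept = keep_detections(scores)
print(cell, round(confidence, 3), kept)
```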

Figure 15 illustrates the architecture. The latest addition to the YOLO family, YOLOv9, was released in February 2024. It was developed by a different group of developers, including Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao, building upon the advances of its predecessors to deliver improved performance in object detection tasks [81]. Ultralytics, for its part, released YOLO-v5 and later YOLO-v8. While a formal paper for YOLO-v8 is still pending and additional features are yet to be incorporated into its repository, initial comparisons with its predecessors reveal its superiority, positioning it as the new state-of-the-art in the YOLO series [82].

Figure 15.

YOLO architecture pipeline structure.

Figure 15 shows the YOLO architecture. The choice of technique depends on factors such as the requirements of the application, the available computational resources and the desired trade-off between accuracy and real-time performance. Moreover, RetinaNet, a one-stage object detection model, was introduced in the paper “Focal Loss for Dense Object Detection” by Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He and Piotr Dollár, which was presented at ICCV in 2017. Figure 16 illustrates the network architecture of RetinaNet. The architecture of SSD, another one-stage object detector, is shown in Figure 17.
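The loss that gives the RetinaNet paper its title down-weights easy examples so that training concentrates on hard ones. The following is a minimal sketch of the binary form, FL(p_t) = -α_t (1 - p_t)^γ log(p_t); the defaults γ = 2 and α = 0.25 and the example probabilities are assumptions chosen for illustration.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for a single prediction.

    p: predicted probability of the positive (object) class, in (0, 1)
    y: ground-truth label, 1 for object or 0 for background
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    """Standard binary cross-entropy, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


easy_background = focal_loss(0.05, 0)  # background, confidently correct
hard_positive = focal_loss(0.10, 1)    # object, badly missed
print(f"easy background: {easy_background:.6f}, hard positive: {hard_positive:.4f}")
```

With γ = 0 and α = 1 for a positive example, the expression reduces to ordinary cross-entropy, which makes the role of the modulating factor easy to check.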

Figure 16.

The structure of the RetinaNet network architecture.

Figure 17.

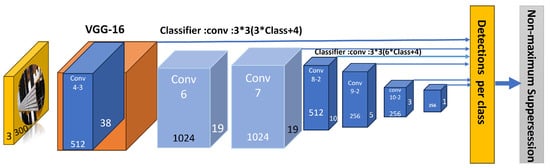

The structure of the SSD architecture.

The structure of the SSD model below is inspired by [83].

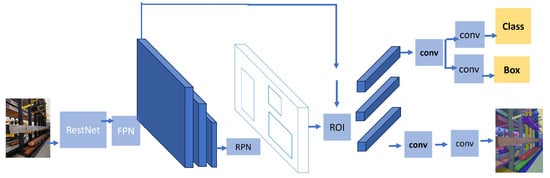

4.1.2. Two-Stage Object Detectors

Two-stage object detectors are a class of deep learning models used in computer vision to identify and locate objects within an image. The process is divided into two stages. The first stage, typically a region proposal network (RPN), analyzes the image to generate a set of candidate regions or proposals where objects are likely to be found. The second stage takes these proposals and refines them by classifying the objects and precisely regressing their bounding boxes. Two-stage object detectors, such as Mask R-CNN, are explained in detail below.

The region-based convolutional neural network (R-CNN) is a two-stage, region proposal-based object detector and the predecessor of models such as Mask R-CNN. Selective search is used to generate about 2000 region proposals from each test image, with only a restricted number of these proposals being retained. A CNN is then applied to generate a fixed-length feature vector from each of the chosen region proposals. A linear SVM scores the extracted feature vectors to classify the object within each region proposal. To mitigate localization errors, a linear regression model is subsequently used to predict the boundaries of the bounding box.
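The bounding-box regression step can be illustrated with the offset parameterization commonly used in R-CNN-style detectors, in which a proposal is shifted and scaled towards the ground-truth box. The sketch below assumes boxes in centre format (cx, cy, w, h); the function names and example boxes are illustrative, and the learned regressor itself is omitted.

```python
import math


def encode_offsets(proposal, target):
    """Regression targets (tx, ty, tw, th) that map a proposal onto a target box."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = target
    return (
        (gx - px) / pw,     # horizontal shift, relative to proposal width
        (gy - py) / ph,     # vertical shift, relative to proposal height
        math.log(gw / pw),  # log-scale change in width
        math.log(gh / ph),  # log-scale change in height
    )


def apply_offsets(proposal, offsets):
    """Refine a proposal with predicted offsets (inverse of encode_offsets)."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = offsets
    return (px + tx * pw, py + ty * ph, pw * math.exp(tw), ph * math.exp(th))


proposal = (150.0, 120.0, 80.0, 60.0)       # assumed region proposal
ground_truth = (160.0, 126.0, 100.0, 48.0)  # assumed annotated box

targets = encode_offsets(proposal, ground_truth)
refined = apply_offsets(proposal, targets)
print(targets, refined)
```

During training the regressor learns to output `targets` from the proposal's features; at test time its predictions are passed to `apply_offsets` to tighten the box.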

The structure of the Mask R-CNN model is shown in Figure 18.

Figure 18.

The structure of the Mask R-CNN architecture.

The architecture shown is inspired by [84]. Mask R-CNN has become a prevalent tool in computer vision applications that demand intricate instance segmentation, including medical image analysis, autonomous vehicle operation and various other scenarios where precise object outlining is essential. Key milestones in Mask R-CNN development are shown in Table 6.

Table 6.

Key milestones in Mask R-CNN development.

Table 7 shows a selection of the most cited and important articles in academia as per the author’s research.

Table 7.

Selected articles in academia covering various deep learning algorithms.

4.2. Autonomous Vehicle Technology: Advantages for Manufacturer

Autonomous forklifts are equipped with technology that enables them to operate independently of human control. Machine vision algorithms play a critical role in allowing these forklifts to navigate, perceive their surroundings and make decisions based on what they see. This section examines the functionality of autonomous vehicle technology. At the heart of this technology lies a fusion of sensors, including cameras, radar and LiDAR, working in unison to perceive the surrounding environment. Advanced algorithms process these data in real time, making critical decisions regarding steering, acceleration and braking [104].

As will be explained in further detail below in Section 4, partially autonomous vehicles with varying levels of automation are currently accessible worldwide to provide humanity with a more confident and safer way of delivering their services.

4.3. Machine-Readable Codes for Autonomous Vehicles

QR codes and ArUco markers are visual markers used in computer vision applications, and they can also play a role in enhancing the capabilities of autonomous vehicles.

In the domain of machine vision and autonomous vehicles, both QR codes and ArUco markers serve as visual markers or codes that can be recognized by cameras and sensors to aid in localization, navigation and object recognition.

Below is a brief comparison of the two types.

4.3.1. QR Codes

QR codes are commonly used for various purposes in the context of autonomous forklifts. Some examples are as follows:

Navigation: QR codes can serve as navigation markers or waypoints for autonomous forklifts. By placing QR codes strategically in the environment, the forklift’s sensors can detect and interpret them to determine its location and orientation. This helps the forklift to navigate accurately and perform tasks efficiently, such as finding the right storage location or following a predefined path.

Object Recognition: QR codes can be used to identify objects or pallets in a warehouse or manufacturing facility. Each item can be labeled with a unique QR code, which can store information about the item, such as its type, weight, destination or handling instructions. When the forklift encounters a QR code, it can scan and interpret it to gather relevant information, allowing it to handle the object appropriately. QR codes are a versatile tool that can enhance the capabilities of autonomous forklifts by providing critical information, aiding navigation and ensuring safe and efficient operations in various industrial settings.
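As an illustration, the two uses above can be sketched as a mapping from decoded QR text to either a known floor position or a pallet record. The payload format (`LOC:` and `PAL:` prefixes with semicolon-separated fields), the marker table and all field names are hypothetical; decoding the QR image itself is assumed to be handled by a separate library.

```python
from dataclasses import dataclass

# Hypothetical surveyed poses of floor markers: id -> (x_m, y_m, heading_deg)
MARKER_POSES = {
    "A01": (2.0, 5.5, 90.0),
    "A02": (2.0, 9.5, 90.0),
}


@dataclass
class PalletLabel:
    pallet_id: str
    weight_kg: float
    destination: str


def interpret_payload(text):
    """Turn decoded QR text into a marker pose tuple or a PalletLabel."""
    kind, _, body = text.partition(":")
    if kind == "LOC":
        # Navigation marker: look up its surveyed pose in the warehouse map.
        if body not in MARKER_POSES:
            raise KeyError(f"unknown location marker: {body}")
        return MARKER_POSES[body]
    if kind == "PAL":
        # Pallet label: parse key=value fields describing the load.
        fields = dict(item.split("=", 1) for item in body.split(";"))
        return PalletLabel(fields["id"], float(fields["kg"]), fields["dest"])
    raise ValueError(f"unrecognised payload: {text!r}")


pose = interpret_payload("LOC:A02")
label = interpret_payload("PAL:id=P-1042;kg=640;dest=RACK-B3")
print(pose, label)
```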

Table 8 provides multiple short abstracts of selected references using QR codes in the field of object detection.

Table 8.

Selected references using QR codes.

4.3.2. ArUco Markers

ArUco markers are a type of augmented reality marker commonly used in computer vision applications. These markers are essentially patterns that are easily recognizable by computer vision systems, making them useful in various tracking and localization tasks. ArUco markers are often used in the field of robotics and autonomous systems, as in [111]. Table 9 provides multiple short abstracts of selected references using ArUco markers in the field of object detection.

Table 9.

Summary of articles discussing ArUco marker applications.
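The identification step for ArUco-style markers can be sketched as matching the marker's inner bit grid against a dictionary of known patterns under the four possible rotations, which yields both the marker ID and its orientation. The 3 × 3 toy dictionary below is an assumption made for brevity; real dictionaries use larger grids and many more patterns, and extracting the bit grid from the camera image is assumed to have been done beforehand.

```python
def rotate_cw(grid):
    """Rotate a square bit grid by 90 degrees clockwise."""
    return tuple(zip(*grid[::-1]))


# Toy dictionary: marker id -> canonical bit pattern (hypothetical values).
TOY_DICTIONARY = {
    7: ((1, 0, 0),
        (0, 1, 0),
        (1, 1, 0)),
    12: ((0, 1, 1),
         (0, 0, 1),
         (1, 0, 0)),
}


def identify_marker(bits):
    """Return (marker_id, turns) or None if no dictionary pattern matches.

    turns is the number of clockwise quarter turns needed to align the
    observed grid with the canonical pattern.
    """
    candidate = tuple(tuple(row) for row in bits)
    for turns in range(4):
        for marker_id, pattern in TOY_DICTIONARY.items():
            if candidate == pattern:
                return marker_id, turns
        candidate = rotate_cw(candidate)
    return None


# Marker 7 as seen after a quarter turn counter-clockwise.
observed = [
    [0, 0, 0],
    [0, 1, 1],
    [1, 0, 1],
]
result = identify_marker(observed)
print(result)
```

Because the recovered rotation is unambiguous, a single marker gives a heading cue as well as an identity, which is what makes such markers useful for localization.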

5. Industry Applications and Manufacturers

Autonomous forklifts are equipped with technology that enables them to operate independently from human control. Also, machine vision algorithms play a critical role in allowing these forklifts to navigate, perceive their surroundings, and make decisions based on what they see.

This section is summarized in Figure 19, which highlights the core components discussed in this chapter. The figure provides a symbolic overview of the section.

Figure 19.

Summary of the content in Section 5.

Machine learning plays a pivotal role, allowing the vehicle to continuously adapt and improve its performance based on accumulated experience. Connectivity features facilitate communication between autonomous vehicles and infrastructure, further enhancing safety and efficiency. This examination of autonomous technology emphasizes the smooth fusion of hardware and software, opening the door to a future in which self-driving vehicles effortlessly integrate into our everyday routines.

5.1. Automated Guided Vehicles (AGVs) and Autonomous Mobile Robots (AMRs)

Automated guided vehicles (AGVs) and autonomous mobile robots (AMRs) are essential tools for modern industrial automation and logistics. Both technologies streamline material handling, inventory management, and intra-facility transportation. While they share the common goal of autonomous navigation, AGVs and AMRs differ significantly in their operational methods, flexibility, and deployment scenarios. These distinctions set them apart from the autonomous vehicles often seen on roads and highways.

This section is summarized in Table 10, which presents a comparison between automated guided vehicles (AGVs) and autonomous mobile robots (AMRs) in the context of industrial automation. The table highlights the key differences in functionality, flexibility, and technological integration, providing a clear overview of their respective roles and advantages in modern industrial applications.

Table 10.

Comparison of AGVs and AMRs in industrial automation inspired by [118].

5.2. Companies Manufacturing Autonomous Forklifts

This research also focuses on automated forklifts designed for multi-level load handling in warehouse racking systems, emphasizing their essential vertical lifting capabilities. Conversely, floor-level automated guided vehicles (AGVs), which do not support vertical lifting into racking systems, are excluded from consideration as they serve different functional purposes.

There are several companies that provide autonomous forklifts or autonomous guided vehicles designed for material handling and warehouse automation. The vehicles described below are specifically designed to handle palletized loads.

Table 11 lists the leading companies in the field of self-driving forklifts, which provide warehouse solutions and fleet management solutions in the market, along with the country in which they originate.

Table 11.

Notable forklift companies adopting autonomous technologies.

Further details about the products of pioneering companies in this domain are given below:

- Toyota Material Handling: Toyota is a well-known manufacturer of forklifts, and they also offer autonomous forklifts under the brand name “Autopilot”. Their AGVs are designed to work alongside human operators or autonomously in warehouses and distribution centers.

- KION Group: KION Group is a leading provider of intralogistics solutions and offers autonomous forklifts through their subsidiary, “Linde Material Handling”. Their AGVs are designed to navigate through warehouses and perform various material handling tasks without human intervention.

- Hyster-Yale Materials Handling: Hyster-Yale is another major manufacturer of forklifts and material-handling equipment. They offer autonomous solutions under the brand name “Hyster Robotic” or “Balyo” (their partner in autonomous technology). Their AGVs can be retrofitted onto existing forklifts, enabling them to operate autonomously.

- Seegrid: Seegrid specializes in autonomous mobile robots for material handling. They offer a range of autonomous pallet trucks and tow tractors that can navigate complex environments using vision-based technology. These AGVs are designed to optimize workflows and increase efficiency in warehouses and distribution centers.

- Aethon (acquired by ST Engineering): Aethon, now part of ST Engineering, offers autonomous mobile robots for material transportation and delivery within hospitals and industrial facilities. While not strictly forklifts, their AGVs are capable of autonomously transporting loads and can be customized to handle specific tasks.

These are just a few examples; the autonomous forklift market is continuously developing, with new entrants joining the industry. Ongoing research is required in this field, as the market for autonomous vehicles is ever-evolving.

These companies are at the forefront of developing autonomous forklift technology, aiming to improve efficiency and safety in warehouse and logistics operations.

Below, different types of autonomous forklifts are presented in order to review the ideas and proposals about this technology in the industrial and academic domains. This section presents the most prominent contributions drawn from articles and conference papers on the design and advancement of autonomous forklifts, and outlines the associated problems and challenges. These contributions, along with many others, advance autonomous forklift technology, making it more capable, efficient, safe and sustainable.

The companies listed are well-known manufacturers in the forklift industry, but whether they are the most well known or top-selling can vary depending on different factors such as geographical location, market segment and specific requirements of customers. These companies have established themselves as leaders in the forklift market, but there are other manufacturers as well who may also be prominent in certain regions or sectors. Additionally, the status of being the top-selling manufacturer can change over time due to various factors such as technological advancements, market trends and competitive strategies.

The following are selected companies that manufacture autonomous forklifts in the market:

- The K-MATIC automated forklift uses smart software to manage tasks, navigate tight spaces and safely interact with other warehouse equipment. Three-dimensional cameras and laser sensors ensure smooth operation and fast adaptation to changing environments.

- Seegrid’s Palion Lift RS1 AMR delivers comprehensive automation for low-lift processes, ensuring safe and reliable material handling from storage to staging areas and work cells. With its advanced Smart Path sensing capabilities and 360° safety coverage, the RS1 performs exceptionally well in complex enterprise environments.

- Crown Equipment offers a forklift that combines manual and automatic control in one machine. It has a single mast that extends forward and can be switched between driver-operated and self-driving modes with a simple control.

- OTTO Motors, acquired by Rockwell Automation in 2023, makes industrial self-driving vehicles designed for heavy-duty material transport. Its autonomous forklift, the OTTO Lifter, is engineered to facilitate the movement of pallets between stands, machines and various floor locations. Utilizing specialized sensors to ensure that the payload remains within safe limits, the OTTO Lifter autonomously and reliably assesses and picks up pallets, even when they are misplaced or wrapped in stretch film. Five 3D cameras aid in detecting overhanging objects, as well as in pallet tracking and docking.

- Vecna Robotics builds AMRs that can handle a variety of tasks, including towing trailers, moving pallets and performing machine tending.

- Balyo offers a fleet of AMRs designed for the agile and efficient movement of goods in warehouses and distribution centers. The VENNY Robotic by Balyo truck is equipped with unique 3D pallet detection.

- The Toyota RAE250 Autopilot is a top-of-the-line forklift designed specifically for warehouse automation. It combines the trusted design of a regular Toyota reach truck with a built-in navigation and safety system, allowing it to operate autonomously. This means that users receive the reliability of a proven forklift with the efficiency and cost-saving benefits of automation.

- Hyster Robotic CB is a self-driving truck that does not need any special adjustments to roads or its surroundings. It uses a laser system (LiDAR) to find its location and avoid obstacles, relying on the existing environment itself as a giant map.

- Combilift: The Combi-AGT offers flexibility by operating autonomously in guided aisles, functioning in free-roaming mode, or being manually driven. Figure 20 depicts the autonomous-guided forklift produced by Combilift.

Figure 20. The Combi-AGT autonomous-guided forklift truck by Combilift.

Table 12 below shows various forklift manufacturers and the different types of sensors used for navigation.

Table 12.

Forklift manufacturers and types of sensors used for navigation.

5.3. Key Industry Applications

One of the most important advantages of using autonomous vehicles is that they are not subject to human control. Firstly, humans may become tired or fatigued during long working hours. Furthermore, other factors such as losing focus while driving or talking on the phone are not present in autonomous vehicles, and therefore, the use of autonomous vehicles can be considered much safer than human-controlled vehicles.

As noted in Section 4.2, partially autonomous vehicles with varying levels of automation are currently available worldwide, offering a more dependable and safer way of delivering this service.

Many expected advantages will be available if autonomous vehicles are used. The first will be a reduction in accidents as a result of minimizing the proportion of human interference with the vehicle while driving. Secondly, the exertion of driving would be alleviated for vehicle drivers, allowing them to perform other tasks or enjoy a moment of respite. Regarding vehicles used for transporting goods, it will be possible to operate those vehicles for longer hours than vehicles driven by humans.

5.4. Performance Benchmarks and Empirical Insights into Autonomous Forklifts

As per a new study [119], autonomous forklifts and intralogistics systems have significantly enhanced operational efficiency and reduced costs across various industries. A focused investigation into small- and medium-sized enterprises (SMEs) within Romania’s forklift industry highlights the transformative impact of artificial intelligence (AI) integration. Table 13 summarizes the influence of AI implementation on key business metrics, showcasing substantial benefits in operational performance and resource optimization.

Table 13.

Impact of AI implementation on key business metrics as per [119].

6. Discussion

For a long time, self-driving vehicle technology was a fantasy; it has now become a reality. These vehicles can provide services such as delivering orders or carrying goods to storage or sales locations.

6.1. Autonomous Forklifts: Economic Impact and Ethical Considerations

While an in-depth examination of empirical validation, economic impact and ethical considerations of autonomous forklifts is beyond the scope of this research, their importance warrants a brief overview. Autonomous forklifts leverage advanced robotics, AI and sensor technologies to revolutionize material-handling processes. Rigorous testing in real-world conditions has demonstrated their reliability, efficiency and safety, as well as their adaptability to diverse warehouse layouts and seamless integration with existing logistics systems.

From an economic perspective, these technologies offer substantial benefits, including reduced labor costs, enhanced operational efficiency and minimized inventory damage, significantly improving profitability and competitiveness. However, ethical considerations, such as potential job displacement, equitable distribution of economic benefits and ensuring safe human–robot interactions, remain critical. Addressing these challenges is vital to achieving the sustainable and socially responsible integration of autonomous forklifts into supply chain operations.

6.2. Challenges and Implications of Integrating Autonomous Technology into Forklifts

Margarita Martínez-Díaz and Francesc Soriguera [120] believe that autonomous vehicle manufacturers do not anticipate commercially releasing completely autonomous vehicles in the near future due to many considerations such as human behavior, ethics and traffic management. This has led to the use of these vehicles in less crowded and less complex environments, such as those in which forklifts operate. Furthermore, Martínez-Díaz and Soriguera claimed that, technically, the clear “detection of obstacles at high speeds and long distances is one of the biggest difficulties” that require attention for the development of viable solutions.

Table 14 showcases selected publications addressing various challenges and the potential solutions that were listed.

Table 14.

Selected publications on key challenges and proposed solutions.

Designing machine vision systems for autonomous forklifts involves numerous complex challenges critical for ensuring safe and efficient operation in industrial environments. One primary challenge is managing the diverse and dynamic conditions these forklifts encounter. They must function effectively under varied lighting conditions, including the bright lights of loading docks, shadows in warehouses and low-light environments. Additionally, they need to handle dynamic obstacles such as other forklifts, workers and varying types of inventory, accurately predicting their movements. Navigating from narrow aisles to open storage areas requires the vision system to understand different spatial configurations and traffic patterns thoroughly.

Accurate and fast object detection and classification are essential, demanding high-speed processing and advanced algorithms to minimize latency. The system must detect small and partially occluded objects, such as pallets, boxes or equipment partially hidden behind shelves, and recognize objects despite variations in appearance due to orientation, distance and environmental conditions. Robustness and reliability are critical, requiring effective sensor fusion that integrates data from multiple sensors like cameras, LiDAR and radar. This integration must be performed efficiently to enhance reliability and ensure that fail-safe mechanisms are in place to handle sensor malfunctions without compromising safety.

Real-time processing poses significant computational demands, requiring a balance between high performance and constraints on power consumption and processing capacity. Efficient algorithms that process data quickly while maintaining accuracy are essential for the real-time operation of autonomous forklifts. Machine learning models must be trained on large and diverse datasets that are comprehensive and representative of operating conditions. Balancing simulation and real-world testing is vital for robust system development, as real-world validation accounts for unpredictable conditions not present in simulations.
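To make the latency constraint concrete, the distance a forklift travels before it can react is approximately its speed multiplied by the end-to-end perception latency. The speed, latency and distance budget below are illustrative assumptions, not measurements from any reviewed system.

```python
def reaction_distance_m(speed_m_s, latency_s):
    """Distance travelled while a frame is still being processed."""
    return speed_m_s * latency_s


def max_latency_s(speed_m_s, distance_budget_m):
    """Largest processing latency that keeps blind travel within the budget."""
    return distance_budget_m / speed_m_s


speed = 2.0  # m/s, assumed travel speed in an aisle

blind_travel = reaction_distance_m(speed, 0.150)  # assumed 150 ms pipeline
latency_budget = max_latency_s(speed, 0.10)       # assumed 10 cm allowance

print(f"blind travel: {blind_travel:.2f} m, latency budget: {latency_budget * 1000:.0f} ms")
```

Under these assumptions a 150 ms pipeline leaves about 0.3 m of travel before any reaction, whereas a 10 cm allowance would require the pipeline to finish within roughly 50 ms.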

Handling edge cases and long-tail problems is another challenge, as the system must manage rare and unusual scenarios, such as navigating around unexpected obstructions or dealing with unusual inventory sizes and shapes. Continuous learning is needed so that the system can adapt to new situations without overfitting or degrading performance in known scenarios.

Several studies address the broader outlook for autonomous vehicles. The research in [136] investigates people’s receptiveness towards autonomous vehicles with respect to their trust and sustainability concerns. The study formulated a technology acceptance model (TAM) and administered a questionnaire to 391 participants. Ref. [137], published in 2021, presents the next challenge for autonomous driving, which it describes as the technological equivalent of the space race of this century. The authors argue that a rethink is required and offer an alternative vision. Sun Tang in [138] presented a comprehensive review and introduction of simulator-based investigation, using user portrayal to close the divide between the present and the future. Todd Litman in [139] examined the effects of autonomous vehicles and their implications for transportation planning. The study suggests that Level 5 autonomous vehicles, capable of operating “without a driver, may be commercially available and legal for use in some jurisdictions by late 2020”, but expects them to become common only once they are affordable, possibly between 2040 and 2060. In [140], the paper “explores differences in perceptions of AV safety across 33,958 individuals in 51 countries”. The master’s thesis by Filip Hucko [141] examines the essential pillars of autonomous technology in general and further addresses the “development of autonomous vehicles and their future implications in a sharing economy”.

Finally, the authors raised concerns specific to their home country of Japan, where the aging workforce is a critical issue. The current techniques for pallet identification and positioning, which rely on a single source of data such as RGB images or point clouds, can result in inaccurate placement or require significant computational resources, increasing costs.

6.3. Additional Considerations

Bundled loads with uneven shapes or protruding elements pose a significant hurdle for autonomous forklifts. These features can prevent the forklift from securely grasping the load, leading to instability during transport. This instability increases the risk of the load shifting or falling, potentially damaging the goods and surrounding infrastructure and injuring nearby personnel. Furthermore, irregular shapes can disrupt the forklift’s sensors, hindering its ability to accurately measure distances and navigate obstacles. Therefore, ensuring the safe handling of such loads is crucial. This necessitates advancements in sensor technology and more sophisticated algorithms to mitigate these risks and maintain the safety standards required for autonomous forklifts.

Autonomous vehicles require perceptual systems to understand their surroundings, which can be categorized into 2D and 3D perception. In the context of cars, perception relies on cameras and other sensors to capture visual information such as pictures and video streams. Algorithms analyze these data to identify objects, interpret traffic signals and recognize pedestrians or other vehicles. This allows the vehicle to perceive the environment and take appropriate actions. However, forklifts operate in complex 3D environments like warehouses with racks, merchandise and other obstacles. In such scenarios, 2D perception alone is insufficient for safe and efficient navigation. Therefore, forklifts often utilize depth-sensing technologies like LiDAR or depth cameras to acquire 3D data about their surroundings. This enables accurate perception of object sizes, shapes and positions in three-dimensional space. Computer vision applications for cars can often reduce the problem to two dimensions, as exemplified by the Bird’s-Eye View (BEV) mapping paradigm used in automotive technology, which employs a 2D top–down perspective, whereas forklifts face the additional complexity of navigating in three dimensions.
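To make the contrast between a 2D top–down view and full 3D perception concrete, the sketch below projects a 3D point cloud onto a BEV occupancy grid. It is a simplified illustration, not the method of any cited system: the coordinate convention (x forward, y left, z up), the grid extent, the cell size and the height band are all assumptions.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in metres, assumed frame


def bev_occupancy(
    points: Iterable[Point],
    x_range: Tuple[float, float] = (0.0, 10.0),
    y_range: Tuple[float, float] = (-5.0, 5.0),
    cell_m: float = 0.5,
    z_min: float = 0.1,    # ignore floor returns below this height
    z_max: float = 2.5,    # ignore returns above the assumed vehicle envelope
) -> List[List[int]]:
    """Mark each grid cell that contains at least one point in the height band."""
    rows = int(round((x_range[1] - x_range[0]) / cell_m))
    cols = int(round((y_range[1] - y_range[0]) / cell_m))
    grid = [[0] * cols for _ in range(rows)]
    for x, y, z in points:
        inside = x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]
        if not inside or not (z_min <= z <= z_max):
            continue
        r = min(int((x - x_range[0]) / cell_m), rows - 1)
        c = min(int((y - y_range[0]) / cell_m), cols - 1)
        grid[r][c] = 1   # the height value z is discarded here
    return grid


cloud = [
    (1.2, 0.3, 0.5),   # pallet at floor level
    (1.2, 0.3, 2.0),   # rack beam directly above it
    (3.0, -4.9, 0.0),  # floor return, filtered out by z_min
]
grid = bev_occupancy(cloud)
```

In this example the floor-level pallet and the rack beam above it fall into the same cell, so the grid cannot distinguish them. This loss of height information is acceptable for many road scenarios but is exactly what a forklift needs when inserting forks under a load or placing it on a rack.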

In all scenarios, additional information enhances the vehicle’s ability to make safe and efficient decisions. Whether the vehicle is a car or a forklift, more data enable a more comprehensive understanding of its surroundings and internal state, which is crucial for effective operation.

Among the various sensors employed in autonomous forklifts, LiDAR and cameras stand out as the most prevalent due to their comprehensive capabilities in mapping, navigation and object detection. LiDAR’s exceptional accuracy in generating 3D maps, coupled with cameras’ versatility in visual recognition tasks, makes them indispensable components in the sensor arrays of these autonomous vehicles.

Moreover, when considering sensor specifications, key factors include frame rate, which is the number of frames captured per second (fps). Size refers to the physical dimensions of the sensor, encompassing overall size and pixel dimensions. Visibility pertains to the sensor’s ability to capture data in different lighting conditions or environments. The field of view describes the extent of the scene captured by the sensor. Resolution denotes the level of detail in the captured images. Weather effects indicate the sensor’s resilience in diverse weather conditions. Range signifies the distance over which the sensor can effectively operate. These specifications vary with the type of sensor and should be matched to the requirements of the specific application.
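Some of these specifications interact in ways that a little geometry makes explicit. The following sketch, assuming an idealized pinhole-style sensor with a horizontal field of view below 180°, relates field of view, resolution and frame rate to the scene width covered, the size of one pixel at a given distance and the distance travelled between frames. The `SensorSpec` class and the example values are hypothetical and do not describe any particular product.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSpec:
    """Hypothetical container for a few of the specifications listed above."""
    name: str
    frame_rate_fps: float
    hfov_deg: float       # horizontal field of view, assumed < 180 degrees
    h_pixels: int         # horizontal resolution
    max_range_m: float    # nominal operating range, kept for reference

    def coverage_width_m(self, distance_m: float) -> float:
        """Width of the scene seen at `distance_m`: 2 * d * tan(FOV / 2)."""
        return 2.0 * distance_m * math.tan(math.radians(self.hfov_deg) / 2.0)

    def metres_per_pixel(self, distance_m: float) -> float:
        """Approximate footprint of one pixel at `distance_m`."""
        return self.coverage_width_m(distance_m) / self.h_pixels

    def travel_per_frame_m(self, speed_mps: float) -> float:
        """Distance the vehicle moves between two consecutive frames."""
        return speed_mps / self.frame_rate_fps


camera = SensorSpec("example camera", frame_rate_fps=30.0, hfov_deg=90.0,
                    h_pixels=1000, max_range_m=20.0)
width = camera.coverage_width_m(5.0)     # scene width at 5 m
pixel = camera.metres_per_pixel(5.0)     # pixel footprint at 5 m
step = camera.travel_per_frame_m(3.0)    # movement per frame at 3 m/s
```

For the assumed 90° field of view, the covered width at 5 m is about 10 m, so each of the 1000 horizontal pixels spans roughly 1 cm, which gives a rough lower bound on the size of features such as pallet pockets that can be resolved at that distance.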

As mentioned in [142,143], numerous leading manufacturers of autonomous forklifts commonly employ a diverse array of machine vision models, including convolutional neural networks (CNNs) and other deep learning models, 3D vision and depth sensing models, semantic segmentation models, instance segmentation models, reinforcement learning models and optical character recognition (OCR) models. CNN-based detectors such as YOLO, SSD and Faster R-CNN are widely adopted for image recognition and object detection due to their accuracy and speed. Deep learning frameworks like TensorFlow and PyTorch facilitate the development of custom models tailored to specific applications. For processing 3D point cloud data from LiDAR or stereo cameras, models such as PointNet, PointNet++ and VoxelNet are frequently utilized. Semantic segmentation models like U-Net, SegNet and DeepLab aid in image segmentation and environmental context understanding, while instance segmentation models like Mask R-CNN provide both object location and segmentation masks. Reinforcement learning models contribute to decision-making and navigation by learning optimal paths and actions through trial and error. Additionally, OCR models such as Tesseract and custom CNN-based OCR models are used to interpret text, such as labels and barcodes, within the forklift’s environment. YOLO and Faster R-CNN are particularly prevalent for their robust real-time object detection performance, which is crucial for the dynamic environments in which autonomous forklifts operate, and Mask R-CNN is also commonly used due to its combined object detection and segmentation capabilities.
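Detectors of this family commonly produce several overlapping candidate boxes for the same object, which are then pruned by non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below is a plain-Python illustration of that post-processing step, not the implementation used by any specific framework or manufacturer; the box format `(x1, y1, x2, y2)` and the 0.5 threshold are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two overlapping detections of one pallet and one separate detection.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the second box overlaps the first with an IoU of about 0.68 and is suppressed, so only the first and third detections are kept.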

Current autonomous forklift technology, despite significant advancements, faces several hurdles on the road to widespread adoption. These challenges include navigating complex and dynamic environments like busy warehouses with human workers and moving equipment. Sensor limitations, particularly in poor lighting and with reflective surfaces, impair navigation and object detection accuracy. Integrating these forklifts into existing infrastructure can be expensive and requires substantial initial investment, posing a barrier for smaller businesses. Additionally, they lack flexibility, often needing significant reprogramming to adapt to new tasks or layout changes.

Safety and regulatory concerns are paramount. Proving the safety of human–robot interactions requires complex and time-consuming efforts. Reliability and maintenance remain ongoing challenges, as technical issues can disrupt operations. Interoperability with human workers presents another hurdle, necessitating sophisticated systems for seamless coordination. Data security and privacy are also critical concerns due to the vast amount of data generated and used. Scaling up from pilot projects to full-scale deployments requires careful planning to handle increased volume and complexity.

Addressing these limitations, shown in Table 15, necessitates ongoing research, development and collaboration among technology providers, warehouse operators and regulatory bodies. Advancements in sensor technology, improved decision-making capabilities for complex environments and increased adaptability to varied tasks are crucial. Additionally, reducing costs, improving safety protocols and establishing robust data security measures are essential for the wider adoption of autonomous forklifts.

Table 15. Critical limitations of current autonomous forklift technology.

7. Conclusions

In conclusion, this article provides a comprehensive overview of the current state-of-the-art solutions for autonomous forklifts, focusing on the crucial role of sensors, machine vision, and object detection models and systems. By examining the various sensor types, machine vision techniques and object detection models, it highlights the key challenges and advancements in this rapidly evolving field. This knowledge serves as a foundation for further research and development in autonomous forklifts, ultimately contributing to increased efficiency, safety and productivity within warehouse and logistics operations.

The article specifically highlights the integration of advanced machine vision algorithms, such as YOLO and DETR, to detect load types and ensure accurate spatial perception for efficient load handling. By providing an overview of machine vision applications in autonomous forklifts, it aims to stimulate further research and development efforts, promoting technological advancement. Additionally, we examined leading forklift manufacturers, reviewing their specifications to understand their technological trajectory, particularly their incorporation of AI-driven solutions and how these developments align with industry needs for automation and precise handling in dynamic warehouse environments.

Author Contributions

M.A.F. was responsible for the primary writing and data preparation. D.T., J.C., P.T. and J.M. provided technical guidance and supported the writing of the methods section. G.D. and P.T. were responsible for revising, reviewing and editing the paper. Visualization was handled by J.M. and J.C. Reviewing, supervision and project administration were performed by D.T. Funding acquisition was carried out by J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Lero—the Science Foundation Ireland Research Centre for Software (www.lero.ie) and Combilift under the project titled: APPS Autonomous Payload Perception Systems: A Technical Feasibility Exploration.

Data Availability Statement

Data is contained within the article.

Acknowledgments

We acknowledge the support of Lero, the Science Foundation Ireland Research Centre for Software, and Combilift for funding this project. The term Blended Autonomous Vehicles (BAV), coined by our team, underscores our emphasis on practical autonomous systems rather than full autonomy.

Conflicts of Interest

Authors Joseph Coleman and James Maguire were employed by the company Combilift. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ToF | Time of Flight |
| LiDAR | Light Detection and Ranging |
| Radar | Radio Detection and Ranging |
| FOV | Field of View |
| CNN | Convolutional Neural Network |
| GNSS | Global Navigation Satellite System |
| AGV | Automated Guided Vehicle |
| AVs | Autonomous Vehicles |
| HMI | Human–Machine Interaction |
| IMU | Inertial Measurement Unit |
| RTK | Real-Time Kinematic |

References

- Kuutti, S.; Fallah, S.; Bowden, R.; Barber, P. Deep Learning for Autonomous Vehicle Control: Algorithms, State-of-the-Art, and Future Prospects; Morgan & Claypool Publishers: San Rafael, CA, USA, 2019.

- Ondruš, J.; Kolla, E.; Vertal’, P.; Šarić, Ž. How do autonomous cars work? In Transportation Research Procedia; Elsevier: Amsterdam, The Netherlands, 2020; Volume 44, pp. 226–233.

- Thorpe, C.; Hebert, M.H.; Kanade, T.; Shafer, S.A. Vision and navigation for the Carnegie-Mellon Navlab. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 362–373.

- Kpmg, C.; Silberg, G.; Wallace, R.; Matuszak, G.; Plessers, J.; Brower, C.; Subramanian, D. Self-Driving Cars: The Next Revolution; Kpmg: Seattle, WA, USA, 2012.

- Tamba, T.A.; Hong, B.; Hong, K.S. A path following control of an unmanned autonomous forklift. Int. J. Control. Autom. Syst. 2009, 7, 113–122.

- Widyotriatmo, A.; Hong, K.-S. Configuration control of an autonomous vehicle under nonholonomic and field-of-view constraints. Int. J. Imaging Robot. 2015, 15, 126–139.

- Mohammadi, A.; Mareels, I.; Oetomo, D. Model predictive motion control of autonomous forklift vehicles with dynamics balance constraint. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–6.