Abstract

Achieving high-quality image reconstruction is a central goal of research in image compressed sensing. Group sparse representation improves the quality of reconstructed images by exploiting their non-local similarity; however, the block matching and dictionary learning involved in constructing image groups lead to long reconstruction times and artifacts in the reconstructed images. To solve these problems, a joint regularized image reconstruction model based on group sparse representation (GSR-JR) is proposed. A group sparse coefficient regularization term ensures the sparsity of the group coefficients and reduces the complexity of the model. A group sparse residual regularization term introduces prior information about the image to improve the quality of the reconstructed image. The alternating direction method of multipliers and an iterative thresholding algorithm are applied to solve the optimization problem. Simulation experiments confirm that the optimized GSR-JR model is superior to other advanced image reconstruction models in terms of reconstructed image quality and visual effect. At a sensing rate of 0.1, compared with the group sparse residual constraint with nonlocal prior (GSRC-NLR) model, the GSR-JR model improves the peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) by up to 4.86 dB and 0.1189, respectively.

1. Introduction

Compressed sensing (CS) [1,2,3] is a signal processing technique that enables signal reconstruction from fewer measurements than Nyquist sampling requires [4]. The framework not only overcomes the constraints of Nyquist sampling but also allows a signal to be sampled and compressed simultaneously, lowering the cost of signal storage, transmission, and processing. Applications that have attracted the interest of researchers include single-pixel imaging [5], magnetic resonance imaging [6], radar imaging [7], wireless sensor networks [8], limited data computed tomography [9], optical diffusion tomography [10], ultrasound tomography [11], and electron tomography [12].

Since the number of measurements is much smaller than the number of pixels in the image, the reconstruction model is ill-posed [13], i.e., the solution to the optimization problem is not unique. To address this issue, image prior information has increasingly been used as a regularization term in the reconstruction model to obtain the optimal solution. In 2006, Candès et al. [14] proposed a minimum total variation (TV) model based on image gradient information [15]. It recovers the smooth areas of the image but destroys fine image structures. In 2013, Zhang et al. [16] introduced non-local similarity [17] as a regularization constraint into the TV model and proposed a non-local [18] regularized total variation model (TV-NLR). This model not only preserves the edges and details of the image but also advanced TV-based image CS reconstruction. In 2007, Gan [19] presented a block-based compressed sensing (BCS) model for natural images, which divides the image into blocks, encodes them, and reconstructs each block individually. However, the reconstructed image contains block artifacts. In 2011, the multi-hypothesis BCS with smoothed projected Landweber reconstruction (MH-BCS-SPL) model [20] was proposed to eliminate block artifacts and improve reconstruction performance. It adopts a multi-hypothesis (MH) prediction strategy, in which each image block is predicted from spatially surrounding blocks within an initial non-predicted reconstruction. Owing to its short reconstruction time, this model is used to construct a preliminary reconstructed image. Meanwhile, sparse representation models based on image blocks have developed rapidly. However, block-based processing ignores the relationships among similar image blocks, and learning dictionaries from natural images has high computational complexity. In 2018, Zha et al. [21] introduced an adaptive sparse non-local regularization CS reconstruction model (ASNR).
The model uses the principal component analysis (PCA) [22] algorithm to learn dictionaries from a preliminary reconstruction of the image rather than from natural images, which reduces computational complexity, and it adds non-local similarity to preserve the edges and details of the image. It further promoted the development of patch-based sparse representation models for image CS reconstruction.

The local and spatial connections in images play an important role in image classification [23]; the goal is to exploit the structural correlation between similar image blocks. In 2014, Zhang et al. [24] proposed a group-based sparse representation image restoration model (GSR). It uses image blocks with similar structures to build image groups as the units of image processing and uses singular value decomposition (SVD) to obtain an adaptive group dictionary, which improves the quality of reconstructed images. In 2018, Zha et al. [25,26] proposed a group-based sparse representation image CS reconstruction model with non-convex regularization and an image reconstruction model with a non-convex weighted nuclear norm. These models promote sparser group sparse coefficients by constraining them with the $\ell_p$-norm or the weighted nuclear norm, reducing the computational complexity of the model while improving the quality of the reconstructed image. In 2020, Keshavarzian et al. [27] proposed an image reconstruction model based on nonconvex LLP regularization of the group sparse representation, which uses the LLP norm, a closer approximation of the $\ell_0$ norm, to promote the sparsity of the group sparse coefficients and thus improve the quality of the reconstructed images. Zhao et al. [28] proposed an image reconstruction model based on group sparse representation and total variation that improves the quality of the reconstructed image by weighting its high-frequency components. Zha et al. [29] proposed an image reconstruction model with a group sparsity residual constraint and non-local prior (GSRC-NLR), which uses the non-local similarity of the image to construct the group sparse residual [30] and converts the optimization problem into one of minimizing the group sparse residual, enhancing the quality of the reconstructed image.
However, the constraint on the group sparse coefficient is disregarded, resulting in longer reconstruction times.

Motivated by group sparse representation and the group sparse residual, this paper proposes an optimization model for group-based sparse representation image CS reconstruction with joint regularization (GSR-JR). The model uses image groups as the unit of image processing. To reduce the complexity of the model and to improve the quality of the reconstructed images, a group coefficient regularization term and a group sparse residual regularization term are added, respectively. The alternating direction method of multipliers (ADMM) [31] framework and an iterative thresholding algorithm [32] are used to solve the model. Extensive simulation experiments verify the effectiveness and efficiency of the proposed model. The rest of this article is organized as follows:

Section 2 introduces the theory of compressed sensing, the construction of image groups, group sparse representation, and the construction of group sparse residuals. Section 3 describes the construction of the GSR-JR model and its solution in detail. Section 4 presents extensive simulation experiments that verify the performance of the GSR-JR model. Section 5 concludes the paper.

2. Related Work

2.1. Compressive Sensing

CS theory shows that an image $x \in \mathbb{R}^N$ that can be sparsely represented in a transform domain can be written as $x = \Psi\alpha$, where $\Psi$ is the sparse transform matrix and $\alpha$ is the sparse coding coefficient vector. The sparse image can be projected into a low-dimensional space through a random measurement matrix $\Phi \in \mathbb{R}^{M\times N}$ ($M \ll N$), which needs to satisfy the restricted isometry property (RIP). The measurements of the image can be expressed as

$$ y = \Phi x = \Phi\Psi\alpha, $$

where $y \in \mathbb{R}^M$ denotes the measurements. The sensing rate is defined as $M/N$. The purpose of CS recovery is to recover $x$ from $y$ as accurately as possible, which is usually expressed as the following optimization problem:

$$ \hat{\alpha} = \arg\min_{\alpha} \frac{1}{2}\|y - \Phi\Psi\alpha\|_2^2 + \lambda\|\alpha\|_p, $$

where $\lambda$ is a regularization parameter and $\|\cdot\|_p$ is the $\ell_p$ norm that constrains the sparse coding coefficients. When $p = 0$, the problem is non-convex and NP-hard, so it cannot in general be solved in polynomial time. When $p = 1$, the problem is convex and can be solved by various convex optimization algorithms. Therefore, the $\ell_0$ problem is usually relaxed to $\ell_1$ minimization [30]. Once the sparsest coding coefficient $\hat{\alpha}$ is obtained, the reconstructed image is given by $\hat{x} = \Psi\hat{\alpha}$.
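The convex ($p = 1$) formulation described above can be illustrated with a minimal Python sketch (NumPy assumed available). It is not the GSR-JR solver: it assumes $\Psi$ is the identity, so the synthetic signal is itself sparse, and it recovers the signal with a plain iterative shrinkage-thresholding algorithm (ISTA). The signal length, number of measurements, sparsity, $\lambda$, and iteration count are illustrative choices, not values from the paper.

```python
import numpy as np

def soft(z, tau):
    """Element-wise soft thresholding: sgn(z) * max(|z| - tau, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def ista(y, A, lam, n_iter=5000):
    """Approximately minimize 0.5*||y - A a||_2^2 + lam*||a||_1 with ISTA."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1/L, L = Lipschitz constant of the gradient
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ a - y)             # gradient of the fidelity term
        a = soft(a - step * grad, step * lam)
    return a

rng = np.random.default_rng(0)
N, M, k = 256, 96, 8                         # signal length, measurements, non-zeros
x = np.zeros(N)
support = rng.choice(N, k, replace=False)
x[support] = rng.choice([-1.0, 1.0], k) * rng.uniform(1.0, 2.0, k)
Phi = rng.normal(0.0, 1.0 / np.sqrt(M), (M, N))   # Gaussian measurement matrix
y = Phi @ x                                  # y = Phi x (Psi = identity in this sketch)

x_hat = ista(y, Phi, lam=0.02)
rel_err = np.linalg.norm(x_hat - x) / np.linalg.norm(x)
print(f"sensing rate M/N = {M / N:.3f}, relative error = {rel_err:.4f}")
```

The step size $1/\|\Phi\|_2^2$ is the usual safe choice for ISTA; with $M = 96$ and $N = 256$ the sensing rate is 0.375.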

2.2. Image Group Construction and Group Sparse Representation

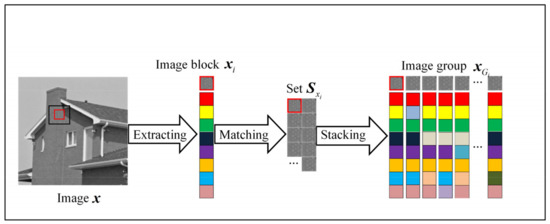

In this paper, image groups are the sparse representation units for image processing. The following describes image group construction and group sparse representation. Figure 1 depicts the image group construction. The initial image $x$ is divided into $n$ overlapping image blocks $x_k$ of size $\sqrt{B}\times\sqrt{B}$ (red area). For each sample block $x_k$, the $m$ most similar blocks are searched for within an $L\times L$ search window (black area) to form the set $S_{G_k}$. All blocks in $S_{G_k}$ are vectorized and stacked column by column into the image group $x_{G_k} \in \mathbb{R}^{B\times m}$. The construction of the image group can be written as $x_{G_k} = R_{G_k}(x)$, where $R_{G_k}(\cdot)$ denotes the operator that extracts the image group from the image.

Figure 1.

The flow chart of image group construction, where Extracting means extracting sample image blocks, Matching means matching similar image blocks, and Stacking means transforming similar image blocks to get image groups.
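The block-matching step can be sketched in Python as follows. The helper `build_group` is hypothetical and written only for illustration: it assumes the $L\times L$ search window is centred on the reference block's top-left corner and clipped at the image border, uses the squared Euclidean distance as the similarity measure, and defaults to the block size (7 × 7), search window (35 × 35), and number of similar blocks (60) chosen in Section 4.1.

```python
import numpy as np

def build_group(img, top, left, b=7, win=35, m=60):
    """Stack the m blocks most similar to the reference block (squared
    Euclidean distance) as the columns of a (b*b, m) image group."""
    H, W = img.shape
    ref = img[top:top + b, left:left + b]
    half = win // 2
    rows = range(max(0, top - half), min(H - b, top + half) + 1)
    cols = range(max(0, left - half), min(W - b, left + half) + 1)
    cands = []
    for i in rows:
        for j in cols:
            dist = np.sum((img[i:i + b, j:j + b] - ref) ** 2)
            # The boolean tie-break puts the reference block itself first.
            cands.append((dist, (i, j) != (top, left), i, j))
    cands.sort()
    best = cands[:m]
    group = np.stack([img[i:i + b, j:j + b].ravel() for _, _, i, j in best], axis=1)
    positions = [(i, j) for _, _, i, j in best]
    return group, positions

rng = np.random.default_rng(0)
img = rng.random((64, 64))
group, pos = build_group(img, 20, 20)
print(group.shape, pos[0])   # (49, 60) (20, 20)
```

Keeping the block positions alongside the group is what later allows each group to be written back to the image.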

For each image group $x_{G_k}$, the PCA dictionary learning algorithm is used to obtain the group dictionary $D_{G_k}$ and the group coefficients $\alpha_{G_k}$. The dictionary is learned directly from each image group, and each group requires only one singular value decomposition. Each image group can then be sparsely represented by solving the following norm minimization problem:

$$ \hat{\alpha}_{G_k} = \arg\min_{\alpha_{G_k}} \frac{1}{2}\|x_{G_k} - D_{G_k}\alpha_{G_k}\|_F^2 + \lambda\|\alpha_{G_k}\|_1 $$
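As a sketch of the per-group dictionary step, assuming NumPy and a PCA basis taken directly from one SVD of the group without mean removal (implementations often centre the data first), the hypothetical `group_dictionary` below returns an orthogonal dictionary and the coefficients $\alpha_{G_k} = D_{G_k}^T x_{G_k}$. The synthetic group is built with rank-2 structure plus small noise to mimic a set of highly similar blocks, so almost all energy lands in the first two coefficient rows.

```python
import numpy as np

def group_dictionary(group):
    """Orthogonal dictionary for one image group from a single SVD.
    The columns of U are the principal directions (no mean removal here)."""
    U, s, Vt = np.linalg.svd(group, full_matrices=False)
    alpha = U.T @ group          # group coefficients alpha = D^T x_G
    return U, alpha, s

rng = np.random.default_rng(1)
# Synthetic group: 60 similar 7x7 blocks = rank-2 structure + small noise.
group = rng.normal(size=(49, 2)) @ rng.normal(size=(2, 60)) + 0.01 * rng.normal(size=(49, 60))
D, alpha, s = group_dictionary(group)

row_energy = np.sum(alpha ** 2, axis=1)
print("energy in first 2 coefficient rows:", row_energy[:2].sum() / row_energy.sum())
```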

Once all image groups are obtained, each group is put back at its corresponding position in the original image to obtain the reconstructed image. Because the image groups contain repeated pixels, pixel averaging is also required. The image restoration operation can be expressed as

$$ x = \frac{\sum_{k=1}^{n} R_{G_k}^{T}\left(D_{G_k}\alpha_{G_k}\right)}{\sum_{k=1}^{n} R_{G_k}^{T}\left(\mathbf{1}_{B\times m}\right)} \triangleq D\circ\alpha, $$

where $R_{G_k}^{T}(\cdot)$ denotes the inverse of the extraction operation, i.e., it puts an image group back at its corresponding position in the image. $\mathbf{1}_{B\times m}$ is a matrix of the image group size with all elements equal to 1, which counts how many times each pixel is covered so that the pixels can be averaged. $D$ denotes the concatenation of all $D_{G_k}$, and $\alpha$ denotes the concatenation of all $\alpha_{G_k}$. The fraction denotes the element-wise division of two vectors, and $D\circ\alpha$ is shorthand for this operation.
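The restoration operation (put each group back at its position, then divide by the per-pixel coverage count) can be sketched in NumPy as below. The helpers `extract` and `aggregate` are hypothetical names; for the check, each group simply holds exact image blocks, so averaging the overlaps must return the original image.

```python
import numpy as np

def extract(img, positions, b):
    """R_Gk: stack the b x b blocks at the given top-left positions as columns."""
    return np.stack([img[i:i + b, j:j + b].ravel() for i, j in positions], axis=1)

def aggregate(groups, positions_list, shape, b):
    """Put every column back (R_Gk^T), count how often each pixel is covered
    (R_Gk^T of the all-ones matrix), then divide element-wise."""
    acc = np.zeros(shape)
    weight = np.zeros(shape)
    for group, positions in zip(groups, positions_list):
        for col, (i, j) in enumerate(positions):
            acc[i:i + b, j:j + b] += group[:, col].reshape(b, b)
            weight[i:i + b, j:j + b] += 1.0
    out = np.zeros(shape)
    np.divide(acc, weight, out=out, where=weight > 0)   # uncovered pixels stay 0
    return out

rng = np.random.default_rng(0)
img = rng.random((16, 16))
b = 4
# Overlapping blocks with stride 2; one "group" per row of blocks for this demo.
positions_list = [[(i, j) for j in range(0, 13, 2)] for i in range(0, 13, 2)]
groups = [extract(img, pos, b) for pos in positions_list]
restored = aggregate(groups, positions_list, img.shape, b)
print(np.allclose(restored, img))   # True
```

In the model itself the columns written back would be $D_{G_k}\alpha_{G_k}$ rather than exact blocks, and the averaging then blends the overlapping estimates.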

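According to the CS reconstruction model in formula (1), image reconstruction can be realized through the following group sparse representation minimization problem:

$$ \hat{\alpha} = \arg\min_{\alpha} \frac{1}{2}\|y - \Phi D\circ\alpha\|_2^2 + \lambda\|\alpha\|_1 $$

After this minimization problem is solved with a suitable algorithm, the reconstructed image is obtained as $\hat{x} = D\circ\hat{\alpha}$.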

2.3. Image Group Sparse Residual Construction

To improve the quality of the reconstructed image, a group sparse residual regularization term is introduced into the reconstruction model. The group sparse residual is the difference between the group sparse coefficients of the initial reconstructed image and the corresponding group sparse coefficients of the original image, which can be defined as

$$ \xi_{G_k} = \alpha_{G_k} - \beta_{G_k}, $$

where the initial reconstructed image $\tilde{x}$ is obtained by the MH-BCS-SPL model, which is chosen because of its short reconstruction time; this model is also compared and analyzed in the following sections. Here, $\alpha_{G_k}$ represents the group sparse coefficients of the initial reconstructed image, which can be obtained by

$$ \alpha_{G_k} = D_{G_k}^{T}\tilde{x}_{G_k}, \qquad \tilde{x}_{G_k} = R_{G_k}(\tilde{x}), $$

since the PCA dictionary $D_{G_k}$ is orthogonal. $\beta_{G_k}$ represents the group sparse coefficients of the original image, which cannot be obtained directly. Inspired by the non-local means filter, it can be estimated from $\alpha_{G_k}$. Let $\alpha_{k,j}$ denote the $j$-th column (vector form) of $\alpha_{G_k}$, and denote the estimated vector by $\hat{\beta}_k$:

$$ \hat{\beta}_{k} = \sum_{j=1}^{m} w_{k,j}\,\alpha_{k,j}, $$

where $w_{k,j}$ is the weight

$$ w_{k,j} = \frac{1}{W}\exp\left(-\frac{\|\tilde{x}_{k,1} - \tilde{x}_{k,j}\|_2^2}{h}\right), $$

where $\tilde{x}_{k,1}$ is the first image block of the $k$-th image group in the initial reconstructed image, $\tilde{x}_{k,j}$ is the $j$-th similar block of the group $\tilde{x}_{G_k}$, $h$ is a constant, and $W$ is a normalization factor. The approximation $\beta_{G_k}$ of the group sparse coefficients of the original image is obtained by replicating $\hat{\beta}_k$ $m$ times.

Therefore, the group sparse residual is obtained as

$$ \xi_{G_k} = \alpha_{G_k} - \beta_{G_k}, \qquad \beta_{G_k} = \left[\hat{\beta}_k, \hat{\beta}_k, \ldots, \hat{\beta}_k\right] \in \mathbb{R}^{B\times m}. $$
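The non-local estimate of the original image's group coefficients (written $\beta_{G_k}$ here) can be sketched as follows. It assumes the weight takes the standard non-local-means form $\exp(-\|\cdot\|_2^2/h)$ normalized to sum to one, with column 0 of the group as the reference block; the function name `estimate_beta` and the value of $h$ are illustrative assumptions.

```python
import numpy as np

def estimate_beta(blocks, alpha, h):
    """Non-local-means estimate of beta_Gk.
    blocks: (B, m) group from the initial reconstruction (column 0 = reference block)
    alpha:  (B, m) group coefficients, one column alpha_{k,j} per block
    h:      filtering constant"""
    d2 = np.sum((blocks - blocks[:, [0]]) ** 2, axis=0)     # ||x_{k,1} - x_{k,j}||^2
    w = np.exp(-d2 / h)
    w /= w.sum()                                            # 1/W normalization
    beta_vec = alpha @ w                                    # sum_j w_{k,j} alpha_{k,j}
    beta = np.tile(beta_vec[:, None], (1, alpha.shape[1]))  # replicate m times
    return beta, w

rng = np.random.default_rng(0)
blocks = rng.random((49, 10))
alpha = rng.normal(size=(49, 10))
beta, w = estimate_beta(blocks, alpha, h=10.0)
xi = alpha - beta                                           # group sparse residual
print(w.round(3), xi.shape)
```

The reference block always receives the largest weight, since its distance to itself is zero.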

3. The Scheme of GSR-JR Model

3.1. The Construction of the GSR-JR Model

The group sparse representation, Equation (6), and group sparsity residuals, Equation (7), are used to construct the optimization (GSR-JR) model, which can be expressed as

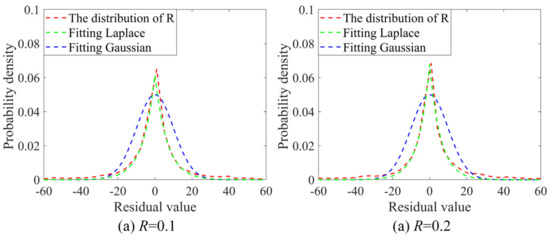

where the constrained norm of the group sparse residuals has not been determined; when , the group coefficient residual distribution satisfies the Laplace distribution; and when , it satisfies the Gaussian distribution. The image “house” is used to analyze the group’s sparse residual distribution in Figure 2.

Figure 2.

The group sparse residual distribution of the original and initial reconstructed images at different sensing rates: (a) , (b) .

Figure 2 shows the distribution curves of the group sparse residuals of “House” at different sensing rates. The Laplace distribution fits the empirical distribution of the group sparse residual better than the Gaussian distribution does. Therefore, the Laplace distribution is used to approximate the statistical distribution of the group sparse residual; in other words, the $\ell_1$ norm ($p = 1$) is used to constrain the group sparse residual.
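The comparison in Figure 2 can be made quantitative by fitting both distributions by maximum likelihood and comparing their log-likelihoods; the higher value indicates the better-fitting model. The sketch below uses synthetic Laplace-distributed samples as a stand-in for the group sparse residuals of “House”, which are not reproduced here.

```python
import numpy as np

def laplace_loglik(r):
    """Maximum-likelihood Laplace fit: location = median, scale = mean |r - median|."""
    mu = np.median(r)
    b = np.mean(np.abs(r - mu))
    return np.sum(-np.log(2 * b) - np.abs(r - mu) / b)

def gauss_loglik(r):
    """Maximum-likelihood Gaussian fit: sample mean and (biased) sample variance."""
    mu, var = r.mean(), r.var()
    return np.sum(-0.5 * np.log(2 * np.pi * var) - (r - mu) ** 2 / (2 * var))

rng = np.random.default_rng(0)
residual = rng.laplace(0.0, 0.5, 20000)   # synthetic stand-in for group sparse residuals
print("Laplace:", laplace_loglik(residual), "Gaussian:", gauss_loglik(residual))
```

The Laplace log-likelihood is the negative $\ell_1$ deviation up to constants, which is why a Laplace fit corresponds to an $\ell_1$ penalty on the residual.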

The final representation of the proposed optimization model is

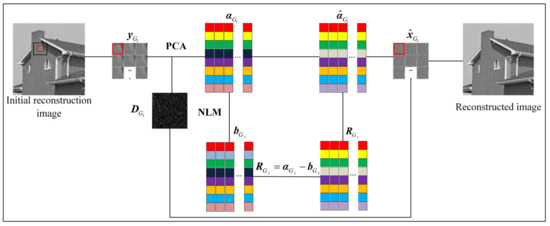

where the first term is a fidelity term, and the second term is a group sparse coefficient regularization term, which ensures the sparsity of the group sparse coefficients and reduces the complexity of the model with a regularization parameter . The third term is the group sparse residual regularization term, which improves the quality of the reconstructed image by increasing the prior information of the image, and the regularization parameter is . Figure 3 depicts the complete flowchart of the optimized GSR-JR model.

Figure 3.

The complete flowchart of the optimized GSR-JR model.

3.2. The Solution of the GSR-JR Model

ADMM is an effective algorithmic framework for solving convex optimization problems. Its core idea is to introduce auxiliary variables and constraints (variable splitting) and then minimize the augmented Lagrangian alternately over the split variables, so that the original problem is decomposed into a series of simpler sub-problems that can be solved separately. In this paper, ADMM is used to solve the model and find the optimal solution. The complete solution process of the optimization model is shown in Algorithm 1.

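First, an auxiliary variable $u$ and the constraint $x = u$, with $u = D\circ\alpha$, are introduced, so that the optimization problem can be transformed into the constrained form

$$ \min_{x,u}\ \frac{1}{2}\|y - \Phi x\|_2^2 + \lambda\|\alpha\|_1 + \mu\|\alpha - \beta\|_1 \quad \text{s.t.}\quad x = u,\ \ u = D\circ\alpha. $$

Its augmented Lagrangian (in scaled form, up to a term that depends on neither $x$ nor $u$) is

$$ L_\rho(x, u, b) = \frac{1}{2}\|y - \Phi x\|_2^2 + \lambda\|\alpha\|_1 + \mu\|\alpha - \beta\|_1 + \frac{\rho}{2}\|x - u - b\|_2^2, $$

which decomposes into three sub-problems:

$$ \begin{aligned} x^{t+1} &= \arg\min_{x} \frac{1}{2}\|y - \Phi x\|_2^2 + \frac{\rho}{2}\|x - u^{t} - b^{t}\|_2^2,\\ u^{t+1} &= \arg\min_{u} \lambda\|\alpha\|_1 + \mu\|\alpha - \beta\|_1 + \frac{\rho}{2}\|x^{t+1} - u - b^{t}\|_2^2,\\ b^{t+1} &= b^{t} - \left(x^{t+1} - u^{t+1}\right), \end{aligned} $$

where $\rho$ is the penalty (regularization) parameter and $b$ is the scaled Lagrange multiplier. The $x$ and $u$ sub-problems are solved below; the iteration index $t$ is omitted for clarity.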

A. Solve the $x$ sub-problem

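Given $u$, the $x$ sub-problem is

$$ \hat{x} = \arg\min_{x} \frac{1}{2}\|y - \Phi x\|_2^2 + \frac{\rho}{2}\|x - u - b\|_2^2, $$

where $\Phi$ is the Gaussian random projection matrix. This problem has the closed-form solution $\hat{x} = (\Phi^{T}\Phi + \rho I)^{-1}\left(\Phi^{T}y + \rho(u + b)\right)$, but computing the inverse of $\Phi^{T}\Phi + \rho I$ in every iteration is expensive. To facilitate the solution, gradient descent is used instead:

$$ \hat{x} = x - \eta d, \qquad d = \Phi^{T}(\Phi x - y) + \rho(x - u - b), $$

where $\eta$ is the step size and $d$ is the gradient of the objective function.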
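A minimal sketch of this gradient step, assuming NumPy and illustrative sizes: it repeats the update $x \leftarrow x - \eta d$ with $d = \Phi^T(\Phi x - y) + \rho(x - u - b)$ and step $\eta = 1/(\|\Phi\|_2^2 + \rho)$, then compares the result with the closed-form solution $(\Phi^T\Phi + \rho I)^{-1}(\Phi^T y + \rho(u + b))$ that the gradient step avoids. Many steps are taken here only to show that the iteration reaches the closed-form solution; `x_step` is a hypothetical helper name.

```python
import numpy as np

def x_step(x, u, b, Phi, y, rho, eta):
    """One gradient-descent step on 0.5||y - Phi x||^2 + rho/2 ||x - u - b||^2."""
    d = Phi.T @ (Phi @ x - y) + rho * (x - u - b)   # gradient direction d
    return x - eta * d

rng = np.random.default_rng(0)
N, M, rho = 64, 20, 1.0
Phi = rng.normal(0.0, 1.0 / np.sqrt(M), (M, N))
y, u, b = rng.normal(size=M), rng.normal(size=N), rng.normal(size=N)
eta = 1.0 / (np.linalg.norm(Phi, 2) ** 2 + rho)      # 1 / Lipschitz constant

x = np.zeros(N)
for _ in range(500):
    x = x_step(x, u, b, Phi, y, rho, eta)

# Closed form, for comparison only: (Phi^T Phi + rho I)^{-1} (Phi^T y + rho (u + b)).
x_exact = np.linalg.solve(Phi.T @ Phi + rho * np.eye(N), Phi.T @ y + rho * (u + b))
print(np.max(np.abs(x - x_exact)))
```

Because the $\rho$ term makes the objective strongly convex, the iteration converges linearly even though $\Phi^T\Phi$ itself is rank-deficient.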

B. Solve the $u$ sub-problem

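Given $x$, the $u$ sub-problem can be rewritten as

$$ \hat{u} = \arg\min_{u} \frac{1}{2}\|u - r\|_2^2 + \frac{\lambda}{\rho}\|\alpha\|_1 + \frac{\mu}{\rho}\|\alpha - \beta\|_1, $$

where $r = x - b$. $\lambda$ and $\mu$ are the two regularization parameters, which are set in the subsequent steps.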

Theorem 1.

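Let $x, r \in \mathbb{R}^N$, $x_{G_k}, r_{G_k} \in \mathbb{R}^{B\times m}$, and let $e(j)$ denote the $j$-th element of the error vector $e = x - r$, $j = 1, \ldots, N$. Assume that the $e(j)$ are independent with zero mean and variance $\sigma^2$. Then, for any $\varepsilon > 0$, the relationship between $\frac{1}{N}\|x - r\|_2^2$ and $\frac{1}{K}\sum_{k=1}^{n}\|x_{G_k} - r_{G_k}\|_F^2$ is

$$ \lim_{N\to\infty,\,K\to\infty} P\left\{\left|\frac{1}{N}\|x - r\|_2^2 - \frac{1}{K}\sum_{k=1}^{n}\|x_{G_k} - r_{G_k}\|_F^2\right| < \varepsilon\right\} = 1, $$

where $P(\cdot)$ represents probability and $K = B\times m\times n$. The proof of the theorem is given in Appendix A.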
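Theorem 1 can be checked numerically. The sketch below draws an i.i.d. Gaussian error image $e = x - r$, gathers $n$ groups of $m$ randomly placed $7\times 7$ blocks (random positions rather than block matching, a simplifying assumption), and compares the image-wide mean squared error with the group-wise average; both should be close to $\sigma^2$. The image size, $\sigma$, $m$, and $n$ are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
H = W = 128
sigma = 2.0
e = rng.normal(0.0, sigma, (H, W))       # error image e = x - r, i.i.d. N(0, sigma^2)

b, m, n = 7, 20, 200                     # block side (B = b*b), blocks per group, groups
sq_sum = 0.0
for _ in range(n):
    tops = rng.integers(0, H - b + 1, m) # block positions (random here, not matched)
    lefts = rng.integers(0, W - b + 1, m)
    for i, j in zip(tops, lefts):
        sq_sum += np.sum(e[i:i + b, j:j + b] ** 2)   # accumulates ||x_Gk - r_Gk||_F^2
K = b * b * m * n

image_avg = np.mean(e ** 2)              # (1/N) ||x - r||_2^2
group_avg = sq_sum / K                   # (1/K) sum_k ||x_Gk - r_Gk||_F^2
print(image_avg, group_avg, sigma ** 2)
```

This is what justifies replacing the image-domain term $\frac{1}{2}\|u - r\|_2^2$ by a sum of group-wise terms in the $u$ sub-problem.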

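By Theorem 1, Formula (22) can be approximated as

$$ \hat{u} = \arg\min_{\alpha} \sum_{k=1}^{n}\left(\frac{1}{2}\|u_{G_k} - r_{G_k}\|_F^2 + \tau_1\|\alpha_{G_k}\|_1 + \tau_2\|\alpha_{G_k} - \beta_{G_k}\|_1\right), $$

where $\tau_1 = \frac{\lambda K}{\rho N}$ and $\tau_2 = \frac{\mu K}{\rho N}$.

The dictionary learned by PCA is orthogonal, so with $u_{G_k} = D_{G_k}\alpha_{G_k}$ we have $\|u_{G_k} - r_{G_k}\|_F = \|\alpha_{G_k} - \gamma_{G_k}\|_F$, and the problem reduces to $n$ independent sub-problems:

$$ \hat{\alpha}_{G_k} = \arg\min_{\alpha_{G_k}} \frac{1}{2}\|\alpha_{G_k} - \gamma_{G_k}\|_F^2 + \tau_1\|\alpha_{G_k}\|_1 + \tau_2\|\alpha_{G_k} - \beta_{G_k}\|_1, $$

where $r_{G_k} = D_{G_k}\gamma_{G_k}$. According to the solution of the convex optimization problem

$$ \hat{a} = \arg\min_{a} \frac{1}{2}\|a - z\|_2^2 + \tau\|a\|_1, $$

the solution is

$$ \hat{a} = \mathrm{soft}(z, \tau) = \mathrm{sgn}(z)\odot\max\left(|z| - \tau, 0\right), $$

where $\mathrm{soft}(\cdot,\cdot)$ denotes the soft-thresholding operator, $\odot$ denotes the element-wise product of two vectors, $\mathrm{sgn}(\cdot)$ is the sign function, and $\max(\cdot,\cdot)$ takes the larger of two elements.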

Then $\hat{\alpha}_{G_k}$ can be obtained for each group.

Therefore, the group sparse coefficients $\hat{\alpha}_{G_k}$ of all groups are obtained, and, combined with the group dictionaries $D_{G_k}$ learned by PCA, the reconstructed high-quality image is obtained as $\hat{u} = D\circ\hat{\alpha}$.
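With both regularizers in $\ell_1$ form, the per-group problem separates into scalar problems $\min_a \frac{1}{2}(a - \gamma)^2 + \tau_1|a| + \tau_2|a - \beta|$, which have two kinks (at $0$ and at $\beta$). One exact way to solve them, sketched below in NumPy, is to enumerate the few candidate minimizers of this one-dimensional strictly convex function and keep the best; this is an illustrative technique, not necessarily the authors' closed form, and `soft` and `prox_joint` are hypothetical helper names.

```python
import numpy as np

def soft(z, tau):
    """Soft thresholding: sgn(z) * max(|z| - tau, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def prox_joint(g, beta, t1, t2):
    """Element-wise argmin_a 0.5*(a - g)^2 + t1*|a| + t2*|a - beta|.

    The minimizer is either a kink (a = 0 or a = beta) or a stationary point
    of one smooth piece, a = g - t1*s1 - t2*s2 with s1, s2 in {-1, +1}.
    Evaluating all six candidates and keeping the best gives the exact answer."""
    g = np.asarray(g, dtype=float)
    beta = np.asarray(beta, dtype=float)
    cands = [np.zeros_like(g), beta]
    for s1 in (-1.0, 1.0):
        for s2 in (-1.0, 1.0):
            cands.append(g - t1 * s1 - t2 * s2)
    cands = np.stack(cands)                                   # shape (6, n)
    obj = 0.5 * (cands - g) ** 2 + t1 * np.abs(cands) + t2 * np.abs(cands - beta)
    idx = np.argmin(obj, axis=0)
    return np.take_along_axis(cands, idx[None, ...], axis=0)[0]

rng = np.random.default_rng(0)
gamma = rng.normal(size=8)      # gamma_Gk, one column of a group (illustrative)
beta = rng.normal(size=8)       # beta_Gk (illustrative)
alpha_hat = prox_joint(gamma, beta, t1=0.2, t2=0.3)
print(alpha_hat)
```

With $\tau_2 = 0$ this reduces to `soft(gamma, tau1)`, and with $\tau_1 = 0$ to `beta + soft(gamma - beta, tau2)`, the residual-only update.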

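Algorithm 1: The optimized GSR-JR for CS image reconstruction

Require: the measurements $y$ and the random matrix $\Phi$.
Initial reconstruction: obtain the initial reconstructed image $\tilde{x}$ from the measurements $y$.
Final reconstruction: initialize $u$ and $b$.
For $t = 0$ to Max-Iteration do
    Update $x^{t+1}$ by Equation (16);
    $r = x^{t+1} - b^{t}$;
    For each group $k = 1, \ldots, n$ do
        Construct the dictionary $D_{G_k}$ by using PCA.
        Update $\alpha_{G_k}$ by computing Equation (8).
        Estimate $\beta_{G_k}$ by computing Equation (9) and Equation (10).
        Update the regularization parameters $\tau_1$, $\tau_2$.
        Update $r_{G_k}$, $\gamma_{G_k}$ by computing $r_{G_k} = R_{G_k}(r)$, $\gamma_{G_k} = D_{G_k}^{T}r_{G_k}$.
        Update $\hat{\alpha}_{G_k}$ by computing Equation (17).
    End for
    Update $\hat{\alpha}$ by concatenating all $\hat{\alpha}_{G_k}$.
    Update $u^{t+1}$ by computing $D\circ\hat{\alpha}$.
    Update $b^{t+1}$ by computing Equation (18).
End for
Output: the final reconstructed image.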
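To show how the steps of Algorithm 1 fit together, here is a toy Python version under strong simplifying assumptions: a 1-D sparse signal instead of an image; an identity dictionary, so $u = \alpha$ and there is no block matching, PCA, or pixel averaging; a given prior $\beta$ (the true signal plus small noise, standing in for the non-local estimate); and fixed $\lambda$, $\mu$, $\rho$ instead of adaptive parameters. The $x$-step is one gradient step, the $u$-step is the exact element-wise solution of the joint $\ell_1$ problem, and the multiplier is updated as $b \leftarrow b - (x - u)$. All names and values are hypothetical.

```python
import numpy as np

def prox_joint(g, beta, t1, t2):
    """Exact element-wise argmin_a 0.5*(a-g)^2 + t1*|a| + t2*|a-beta|: compare the
    kinks (0, beta) with the four stationary points of the smooth pieces."""
    cands = np.stack([np.zeros_like(g), beta]
                     + [g - t1 * s1 - t2 * s2 for s1 in (-1, 1) for s2 in (-1, 1)])
    obj = 0.5 * (cands - g) ** 2 + t1 * np.abs(cands) + t2 * np.abs(cands - beta)
    return np.take_along_axis(cands, np.argmin(obj, axis=0)[None], axis=0)[0]

def admm_toy(y, Phi, beta, lam, mu, rho=1.0, n_iter=2000):
    """Toy ADMM loop in the spirit of Algorithm 1, with an identity dictionary."""
    eta = 1.0 / (np.linalg.norm(Phi, 2) ** 2 + rho)   # gradient step size
    x = Phi.T @ y                                      # crude initial reconstruction
    u = x.copy()
    b = np.zeros_like(x)
    for _ in range(n_iter):
        # x sub-problem: one gradient step on 0.5||y - Phi x||^2 + rho/2 ||x - u - b||^2
        x = x - eta * (Phi.T @ (Phi @ x - y) + rho * (x - u - b))
        # u sub-problem: joint l1 prox of r = x - b with thresholds lam/rho and mu/rho
        u = prox_joint(x - b, beta, lam / rho, mu / rho)
        # multiplier update
        b = b - (x - u)
    return u

rng = np.random.default_rng(0)
N, M, k = 128, 40, 6
x_true = np.zeros(N)
x_true[rng.choice(N, k, replace=False)] = rng.choice([-1.0, 1.0], k) * rng.uniform(1.0, 2.0, k)
Phi = rng.normal(0.0, 1.0 / np.sqrt(M), (M, N))
y = Phi @ x_true
beta = x_true + 0.01 * rng.normal(size=N)   # hypothetical prior estimate

x_hat = admm_toy(y, Phi, beta, lam=0.01, mu=0.05)
init_err = np.linalg.norm(Phi.T @ y - x_true) / np.linalg.norm(x_true)
final_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"initial relative error {init_err:.3f}, ADMM relative error {final_err:.3f}")
```

Taking a single gradient step with $\eta = 1/(\|\Phi\|_2^2 + \rho)$ makes this a linearized ADMM, which still converges under that step-size bound.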

4. Experiment and Discussion

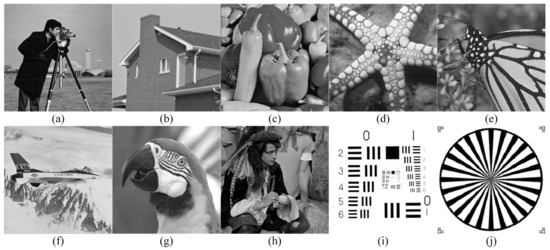

Extensive simulation experiments are conducted to validate the performance of the optimized GSR-JR model. Peak signal-to-noise ratio (PSNR) [33] and structural similarity (SSIM) [34] are used to evaluate the quality of the reconstructed images. Ten standard test images from the University of Southern California image library are used, as shown in Figure 4. All simulations were performed in MATLAB R2020a on a computer with an Intel Core i7-8565U 1.80 GHz CPU and 4 GB of RAM.

Figure 4.

Ten experimental test images: (a) Cameraman, (b) House, (c) Peppers, (d) Starfish, (e) Monarch, (f) Airplane, (g) Parrot, (h) Man, (i) Resolution chart, and (j) Camera test.

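PSNR and SSIM are defined as

$$ \mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left(x(i,j) - \hat{x}(i,j)\right)^2, $$

$$ \mathrm{SSIM}(x,\hat{x}) = l(x,\hat{x})\,c(x,\hat{x})\,s(x,\hat{x}), \quad l = \frac{2\mu_x\mu_{\hat{x}} + C_1}{\mu_x^2 + \mu_{\hat{x}}^2 + C_1}, \quad c = \frac{2\sigma_x\sigma_{\hat{x}} + C_2}{\sigma_x^2 + \sigma_{\hat{x}}^2 + C_2}, \quad s = \frac{\sigma_{x\hat{x}} + C_3}{\sigma_x\sigma_{\hat{x}} + C_3}, $$

where MSE is the mean squared error between the original image $x$ and the reconstructed image $\hat{x}$, 255 is the peak value of an 8-bit image, and $m$ and $n$ are the height and width of the image, respectively. $l$ denotes luminance (brightness), $c$ contrast, and $s$ structure; $\mu_x$ and $\mu_{\hat{x}}$ are the means of $x$ and $\hat{x}$, $\sigma_x^2$ and $\sigma_{\hat{x}}^2$ their variances, $\sigma_{x\hat{x}}$ their covariance, and $C_1$, $C_2$, $C_3$ are small constants.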
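The two metrics can be sketched in NumPy as below. The peak value 255 assumes 8-bit images, and the hypothetical `ssim_global` evaluates the SSIM formula once over the whole image with the commonly used constants $C_1 = (0.01\cdot 255)^2$, $C_2 = (0.03\cdot 255)^2$, and $C_3 = C_2/2$ (which collapses $l\cdot c\cdot s$ into one fraction). The standard SSIM index instead averages the formula over local, typically Gaussian-weighted windows, so values from this sketch will differ from published SSIM scores.

```python
import numpy as np

def psnr(x, x_hat, peak=255.0):
    """PSNR in dB; peak = 255 assumes 8-bit images."""
    mse = np.mean((np.asarray(x, float) - np.asarray(x_hat, float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def ssim_global(x, x_hat, peak=255.0):
    """Single-window SSIM over the whole image with C3 = C2 / 2."""
    x, x_hat = np.asarray(x, float), np.asarray(x_hat, float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mx, my = x.mean(), x_hat.mean()
    vx, vy = x.var(), x_hat.var()
    cov = np.mean((x - mx) * (x_hat - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

rng = np.random.default_rng(0)
img = rng.integers(0, 256, (64, 64)).astype(float)
print(psnr(img, img + 5.0))      # MSE = 25, so 10*log10(255^2/25) ≈ 34.15 dB
print(ssim_global(img, img))     # ≈ 1.0 for identical images
```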

4.1. The Model Parameters Setting

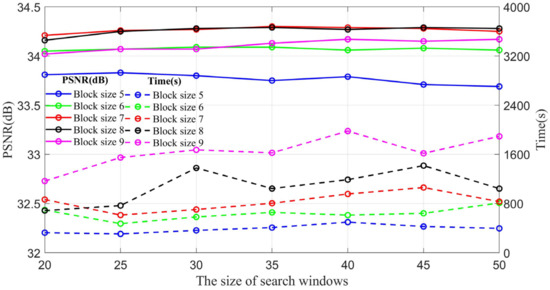

In the simulation experiment, a random Gaussian matrix is used to obtain measurements from image blocks of size 32 × 32. The variance of the noise is set to , and the small constant is set to 0.4. Because the choice of regularization parameters directly affects the performance of the model, two adaptive regularization parameters based on the relationship between sparse representation and maximum a posteriori (MAP) estimation [35] are used. The forms of adaptive regularization parameters are and , where , and represents an estimate of the variance of the group sparse coefficient. The parameter is finally set to 0.01, 0.015, 0.025, 0.07, and 0.042 at different sensing rates. The image block size, the search window size, and the number of similar blocks used in constructing image groups are determined experimentally, as shown in Figure 5 and Figure 6.

Figure 5.

The PSNR and reconstruction time for “House” with different block sizes and search window sizes.

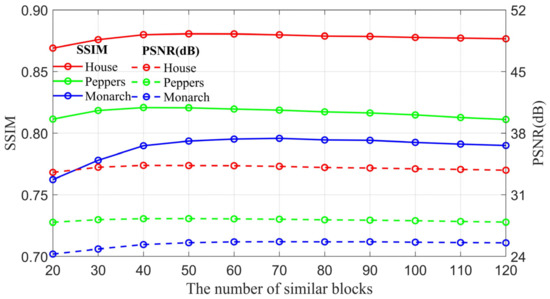

Figure 6.

The SSIM and PSNR of three images versus the number of similar blocks.

Based on previous studies [24,25,26,27,28,29], the PSNR and reconstruction time are examined for image block sizes from 5 × 5 to 9 × 9 and search window sizes from 20 × 20 to 50 × 50. Figure 5 shows that, for a given block size, the PSNR varies little across search windows, indicating that the search window has a small influence on reconstruction quality. In contrast, for a given search window, the PSNR varies considerably across block sizes, indicating that the block size has a large impact on the model. The PSNR is higher for block sizes of 7 × 7 and 8 × 8 and is highest for a 35 × 35 search window. The reconstruction time increases with the block size. Notably, for each block size, the reconstruction time reaches a minimum at a particular search window size, which indicates that the block size and the search window size interact; this is why they are discussed together. Considering model performance and time together, the image block size is set to 7 × 7 and the search window size to 35 × 35.

Figure 6 shows the effect of the number of similar blocks in an image group on the reconstruction model. As the number of similar blocks increases, the SSIM and PSNR of the images first increase and then decrease. A possible reason is that when an image group contains a moderate number of blocks, similar blocks are processed together in the same group, which strengthens the connections between blocks and improves image quality. However, when a group contains too many blocks, blocks with low similarity are also included and processed in multiple image groups, which introduces errors when the pixels of the image groups are averaged. Considering the SSIM and PSNR of the three images, the reconstructed image quality is relatively best when the number of similar blocks is 60; therefore, the number of similar blocks is set to 60. In summary, the image block size is set to 7 × 7, the search window to 35 × 35, and the number of similar blocks to 60.

4.2. The Effect of Group Sparse Coefficient Regularization Constraint

The goal of the group sparse coefficient regularization term is to reduce the complexity of the model by constraining the group sparse coefficients. Mallat showed that, when a signal is represented sparsely, the sparser the representation, the higher the reconstruction accuracy [36]. This section examines the role of the group sparse coefficient regularization term in the model: Table 1 compares the reconstruction performance of the proposed model with and without this term at a sensing rate of 0.1.

Table 1.

The results of the model with and without group sparse coefficient regularization.

Table 1 shows that the PSNR and SSIM of the reconstructed images are higher when the model includes the group sparse coefficient regularization term. Moreover, with this term the reconstruction time is reduced by roughly half. This indicates that the group sparse coefficient regularization term drives the group coefficients to be sparser and reduces the complexity of the model. In short, adding this term improves the efficiency of the model.

4.3. Data Results

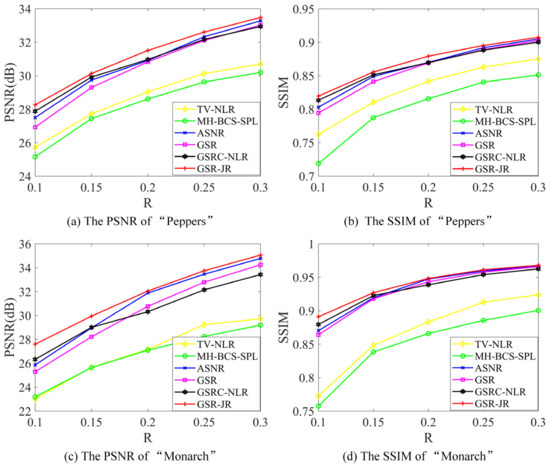

To validate the performance of the proposed GSR-JR model, it is compared with five existing image reconstruction models: TV-NLR, MH-BCS-SPL, ASNR, GSR, and GSRC-NLR. The code of all comparison models was obtained from the authors' websites, and their parameters were set to the authors' default values. Table 2 lists the PSNR and SSIM of the 10 test images for the different reconstruction models at sensing rates from 0.1 to 0.3, with the best values shown in bold. Table 2 shows that the proposed model significantly outperforms the other models at low sensing rates. At a sensing rate of 0.1, the average PSNR (SSIM) of the GSR-JR model is improved by 3.78 dB (0.0929), 3.72 dB (0.1291), 1.40 dB (0.0227), 1.80 dB (0.0298), and 1.164 dB (0.0209) compared with the other models, respectively. The PSNR and SSIM of all models also increase significantly with the sensing rate. To visualize these trends, Figure 7 shows the PSNR and SSIM of “Peppers” and “Monarch” at different sensing rates.

Table 2.

The PSNR (dB) and SSIM of six image reconstruction models at various sensing rates.

Figure 7.

The PSNR and SSIM of different images at all sensing rates. (a) The PSNR of “Peppers”, (b) the SSIM of “Peppers”, (c) the PSNR of “Monarch”, and (d) the SSIM of “Monarch”.

Figure 7 shows the PSNR and SSIM of the six reconstruction models for “Peppers” and “Monarch” at different sensing rates. The PSNR and SSIM increase gradually with the sensing rate, and those of the proposed model are significantly higher than those of the other models. The PSNR curves in Figure 7a,c show that the PSNR growth rate of the different models changes at a sensing rate of 0.2: the PSNR of the ASNR model increases significantly, that of the GSRC-NLR model increases slowly, and that of the proposed GSR-JR model increases steadily.

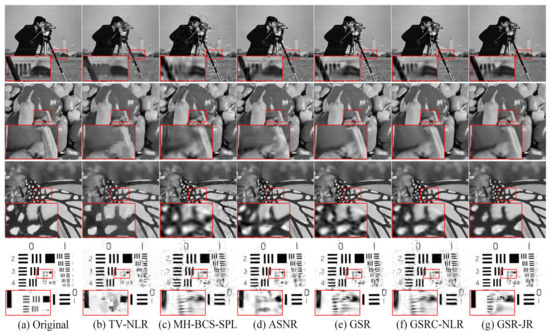

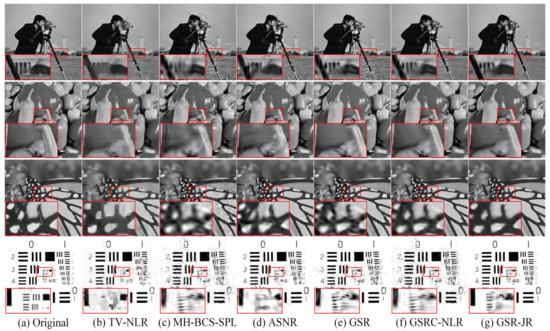

4.4. Visual Effects

Visual perception provides a subjective evaluation of the quality of reconstructed images. To illustrate the visual differences among the images reconstructed by the six models, the reconstructed images of “Cameraman”, “Peppers”, “Monarch”, and “Resolution chart” at sensing rates of 0.1 and 0.2 are shown in Figure 8 and Figure 9. Selected regions are enlarged to show the differences in reconstructed details between the models.

Figure 8.

Visual effects of images reconstructed by various models when the sensing rate is 0.1. (a) Original image, (b) TV-NLR, (c) MH-BCS-SPL, (d) ASNR, (e) GSR, (f) GSRC-NLR, and (g) GSR-JR.

Figure 9.

Visual effects of images reconstructed by various models when the sensing rate is 0.2. (a) Original image, (b) TV-NLR, (c) MH-BCS-SPL, (d) ASNR, (e) GSR, (f) GSRC-NLR, and (g) GSR-JR.

Figure 8 shows the visual effect of the reconstructed images at a sensing rate of 0.1. The images reconstructed by the TV-NLR model are severely blurred, and their contours and texture details can hardly be identified. For the MH-BCS-SPL model, only the contours of “Peppers” and “Monarch” can be identified among the four images. The ASNR model also produces significant blurring, mainly around image details. In the GSR results, although “Monarch” still has artifacts that make it hard to identify, the stem of “Peppers” and the horizontal lines in “Resolution chart” are identifiable. The GSRC-NLR model reconstructs the images with good visual quality, although some artifacts remain. Compared with the other models, the proposed GSR-JR model produces the best visual quality, even though it also has artifacts, and the details in the magnified regions of the four images are easier to identify.

Figure 9 shows the visual effect of the reconstructed images at a sensing rate of 0.2. The TV-NLR model recovers more detail, although “Peppers” is still blurred. The MH-BCS-SPL model recovers more details and textures. The GSRC-NLR model produces relatively serious artifacts, and the GSR model produces some artifacts, although its overall visual quality is good. Only at a sensing rate of 0.2 does the visual quality of the ASNR model reach that of the proposed model.

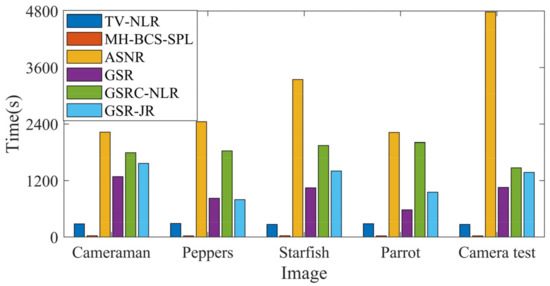

4.5. Reconstruction Time

Reconstruction time is also an important metric for evaluating image reconstruction models. Figure 10 compares the reconstruction times of the six models. The TV-NLR and MH-BCS-SPL models take relatively little time, but the quality of their reconstructed images is also worse. The optimized GSR-JR model takes less time than the ASNR model while producing reconstructed images of comparable quality. Because the GSR-JR model adds the group sparse residual regularization term, its reconstruction time is slightly longer than that of the GSR model; owing to the group sparse coefficient regularization term, it is shorter than that of the GSRC-NLR model. Considering reconstruction quality and reconstruction time together, the proposed GSR-JR model is more practical. However, although the GSR-JR model offers a favorable balance of speed and quality among the group-based reconstruction models, it cannot achieve real-time image reconstruction. This is a limitation of the model, and further reducing its reconstruction time toward real-time reconstruction is a direction for future work.

Figure 10.

The reconstruction time for the various model at .

5. Conclusions

In this paper, image groups are used as the sparse representation units, and the parameters of image group construction are discussed and determined: the image block size is set to 7 × 7, the search window to 35 × 35, and the number of similar blocks to 60. A group coefficient regularization term is introduced to reduce the complexity of the model, and a group sparse residual regularization term is introduced to add prior information about the image and improve the quality of the reconstructed images. The ADMM framework and an iterative thresholding algorithm are used to solve the model. The simulation results verify the effectiveness and efficiency of the GSR-JR model; however, the model cannot achieve real-time image reconstruction. Given the rapid development of convolutional neural networks and several successful cases of image reconstruction that combine traditional algorithms with neural networks, future research will focus on solving the proposed model with neural networks to achieve real-time, high-quality image reconstruction and to further advance image CS reconstruction.

Author Contributions

Concept and structure of this paper, R.W.; resources, Y.Q. and H.Z.; writing—original draft preparation, R.W.; writing—review and editing, R.W., Y.Q. and Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (NSFC) (61675184).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1.

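Let $e = x - r$ and denote its elements by $e(j)$, $j = 1, \ldots, N$. Since each $e(j)$ is assumed to follow an independent distribution with zero mean and variance $\sigma^2$, each $e(j)^2$ is also independent, and its mean is

$$E\left[e(j)^2\right] = D\left[e(j)\right] + \left(E\left[e(j)\right]\right)^2 = \sigma^2, \quad j = 1, \ldots, N,$$

where $E[\cdot]$ and $D[\cdot]$ denote the expectation and the variance, respectively.

By invoking the Law of Large Numbers in probability theory, for any $\varepsilon > 0$, it follows that

$$\lim_{N \to \infty} P\left\{ \left| \frac{1}{N} \sum_{j=1}^{N} e(j)^2 - \sigma^2 \right| < \frac{\varepsilon}{2} \right\} = 1, \quad \text{i.e.,} \quad \lim_{N \to \infty} P\left\{ \left| \frac{1}{N} \|x - r\|_2^2 - \sigma^2 \right| < \frac{\varepsilon}{2} \right\} = 1.$$

Further, let $x_G$ and $r_G$ denote the concatenation of all the groups $x_{G_k}$ and $r_{G_k}$, $k = 1, \ldots, n$, and denote each element of $x_G - r_G$ by $e_G(i)$, $i = 1, \ldots, K$, where $K$ is the total number of elements in all groups. By assumption, the $e_G(i)$ are independent with zero mean and variance $\sigma^2$. Applying the same argument to $e_G(i)^2$ gives

$$\lim_{K \to \infty} P\left\{ \left| \frac{1}{K} \sum_{k=1}^{n} \|x_{G_k} - r_{G_k}\|_F^2 - \sigma^2 \right| < \frac{\varepsilon}{2} \right\} = 1.$$

Combining the two limits with the triangle inequality yields

$$\lim_{N \to \infty,\, K \to \infty} P\left\{ \left| \frac{1}{N} \|x - r\|_2^2 - \frac{1}{K} \sum_{k=1}^{n} \|x_{G_k} - r_{G_k}\|_F^2 \right| < \varepsilon \right\} = 1,$$

which proves the relationship between $\|x - r\|_2^2$ and $\sum_{k=1}^{n} \|x_{G_k} - r_{G_k}\|_F^2$. □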
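The law-of-large-numbers argument in this proof can be checked with a small Monte Carlo experiment. The sketch below is hypothetical and rests on assumptions not taken from the paper: the error between the original image x and its estimate r is i.i.d. Gaussian with standard deviation sigma = 0.1, and the groups are formed from 7 × 7 patches at random positions rather than by block matching. Under these assumptions, the squared error averaged over all N pixels and the squared error averaged over all K group elements should both be close to σ², which is the relationship the proof establishes.

```python
import numpy as np

# Assumed setting (illustration only): i.i.d. zero-mean Gaussian error e = x - r.
rng = np.random.default_rng(1)
sigma = 0.1
side = 256
x = rng.random((side, side))             # stand-in "original" image
e = rng.normal(0.0, sigma, size=x.shape)
r = x - e                                # estimate, so that x - r = e

# Image-level average: (1/N) * ||x - r||_2^2
image_mse = np.sum((x - r) ** 2) / x.size

# Group-level average: (1/K) * sum_k ||x_Gk - r_Gk||_F^2, with n groups of
# c patches of size patch x patch at random positions (hypothetical grouping).
patch, c, n = 7, 60, 200
total = 0.0
for _ in range(n):
    rows = rng.integers(0, side - patch + 1, size=c)
    cols = rng.integers(0, side - patch + 1, size=c)
    for i, j in zip(rows, cols):
        diff = x[i:i + patch, j:j + patch] - r[i:i + patch, j:j + patch]
        total += np.sum(diff ** 2)       # contribution of one patch to ||.||_F^2
K = patch * patch * c * n
group_mse = total / K

print(f"image MSE = {image_mse:.5f}, group MSE = {group_mse:.5f}, sigma^2 = {sigma**2:.5f}")
```

Because the random patches overlap, some error samples are counted more than once, so the group-level terms are not strictly independent as the theorem assumes; the experiment is a sanity check of the argument rather than a proof, and larger values of `side`, `c`, or `n` should tighten the agreement.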

References

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Candès, E.J.; Romberg, J.; Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006, 59, 1207–1223.

- Candès, E.J.; Romberg, J. Sparsity and incoherence in compressive sampling. Inverse Probl. 2007, 23, 969–985.

- Nyquist, H. Certain Topics in Telegraph Transmission Theory. Trans. Am. Inst. Electr. Eng. 1928, 47, 617–644.

- Monin, S.; Hahamovich, E.; Rosenthal, A. Single-pixel imaging of dynamic objects using multi-frame motion estimation. Sci. Rep. 2021, 11, 7712.

- Zheng, L.; Brian, N.; Mitra, S. Fast magnetic resonance imaging simulation with sparsely encoded wavelet domain data in a compressive sensing framework. J. Electron. Imaging 2013, 22, 57–61.

- Tello Alonso, M.T.; Lopez-Dekker, F.; Mallorqui, J.J. A Novel Strategy for Radar Imaging Based on Compressive Sensing. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4285–4295.

- Liu, J.; Huang, K.; Yao, X. Common-innovation subspace pursuit for distributed compressed sensing in wireless sensor networks. IEEE Sens. J. 2019, 19, 1091–1103.

- Courot, A.; Cabrera, D.; Gogin, N.; Gaillandre, L.; Lassau, N. Automatic cervical lymphadenopathy segmentation from CT data using deep learning. Diagn. Interv. Imaging 2021, 102, 675–681.

- Markel, V.A.; Mital, V.; Schotland, J.C. Inverse problem in optical diffusion tomography. III. Inversion formulas and singular-value decomposition. J. Opt. Soc. Am. A 2003, 20, 890–902.

- Wiskin, J.; Malik, B.; Natesan, R.; Lenox, M. Quantitative assessment of breast density using transmission ultrasound tomography. Med. Phys. 2019, 46, 2610–2620.

- Kiesel, P.; Alvarez, V.G.; Tsoy, N.; Maraspini, R.; Gorilak, P.; Varga, V.; Honigmann, A.; Pigino, G. The molecular structure of mammalian primary cilia revealed by cryo-electron tomography. Nat. Struct. Mol. Biol. 2020, 27, 1115–1124.

- Vasin, V.V. Relationship of several variational methods for the approximate solution of ill-posed problems. Math. Notes Acad. Sci. USSR 1970, 7, 161–165.

- Candès, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Di Zenzo, S. A note on the gradient of a multi-image. Comput. Vis. Graph. Image Processing 1986, 33, 116–125.

- Zhang, J.; Liu, S.; Zhao, D.; Xiong, R.; Ma, S. Improved total variation based image compressive sensing recovery by nonlocal regularization. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013; pp. 2836–2839.

- Wang, S.; Liu, Z.W.; Dong, W.S.; Jiao, L.C.; Tang, Q.X. Total variation based image deblurring with nonlocal self-similarity constraint. Electron. Lett. 2011, 47, 916–918.

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 60–65.

- Gan, L. Block Compressed Sensing of Natural Images. In Proceedings of the 2007 15th International Conference on Digital Signal Processing, Wales, UK, 1–4 July 2007; pp. 403–406.

- Chen, C.; Tramel, E.W.; Fowler, J.E. Compressed-sensing recovery of images and video using multihypothesis predictions. In Proceedings of the 2011 Conference Record of the Forty Fifth Asilomar Conference on Signals, Systems and Computers (ASILOMAR), Pacific Grove, CA, USA, 6–9 November 2011; pp. 1193–1198.

- Zha, Z.; Liu, X.; Zhang, X.; Chen, Y.; Tang, L.; Bai, Y.; Wang, Q.; Shang, Z. Compressed sensing image reconstruction via adaptive sparse nonlocal regularization. Vis. Comput. 2018, 34, 117–137.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image Super-Resolution Via Sparse Representation. IEEE Trans. Image Processing 2010, 19, 2861–2873.

- Manzo, M. Attributed Relational SIFT-Based Regions Graph: Concepts and Applications. Mach. Learn. Knowl. Extr. 2020, 3, 233–255.

- Zhang, J.; Zhao, D.; Gao, W. Group-based Sparse Representation for Image Restoration. IEEE Trans. Image Processing 2014, 23, 3336–3351.

- Zha, Z.; Zhang, X.; Wang, Q.; Tang, L.; Liu, X. Group-based Sparse Representation for Image Compressive Sensing Reconstruction with Non-Convex Regularization. Neurocomputing 2017, 296, 55–63.

- Zha, Z.; Zhang, X.; Wu, Y.; Wang, Q.; Liu, X.; Tang, L.; Yuan, X. Non-Convex Weighted Lp Nuclear Norm based ADMM Framework for Image Restoration. Neurocomputing 2018, 311, 209–224.

- Zhao, F.; Fang, L.; Zhang, T.; Li, Z.; Xu, X. Image compressive sensing reconstruction via group sparse representation and weighted total variation. Syst. Eng. Electron. Technol. 2020, 42, 2172–2180. Available online: https://www.sys-ele.com/CN/10.3969/j.issn.1001-506X.2020.10.04 (accessed on 6 December 2021).

- Keshavarzian, R.; Aghagolzadeh, A.; Rezaii, T. LLp norm regularization based group sparse representation for image compressed sensing recovery. Signal Processing: Image Commun. 2019, 78, 477–493.

- Zha, Z.; Yuan, X.; Wen, B.; Zhou, J.; Zhu, C. Group Sparsity Residual Constraint with Non-Local Priors for Image Restoration. IEEE Trans. Image Processing 2020, 29, 8960–8975.

- Zha, Z.; Liu, X.; Zhou, Z.; Huang, X.; Shi, J.; Shang, Z.; Tang, L.; Bai, Y.; Wang, Q.; Zhang, X. Image denoising via group sparsity residual constraint. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 1787–1791.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 2010, 3, 1–122.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Avcibas, I.; Sankur, B.; Sayood, K. Statistical evaluation of image quality measures. J. Electron. Imaging 2002, 11, 206–223.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Processing 2004, 13, 600–612.

- Chang, S.G.; Yu, B.; Vetterli, M. Adaptive wavelet thresholding for image denoising and compression. IEEE Trans. Image Processing 2000, 9, 1532–1546.

- Mallat, S.G.; Zhang, Z. Matching Pursuits with Time-Frequency Dictionaries. IEEE Trans. Signal Processing 1993, 41, 3397–3415.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).