Abstract

In 2020 and 2021, humanity lived in fear of the COVID-19 pandemic. With the development of artificial intelligence technology, however, mankind is attempting to tackle the many challenges posed by still-unpredictable epidemics. Korean society has been exposed to various infectious diseases since the Korean War in 1950, and to overcome them, the six most serious diseases were designated as category I National Notifiable Infectious Diseases (NNIDs). Although most of these infectious diseases have been overcome, viral hepatitis A has been on the rise in Korean society since 2010. Therefore, in this paper, we predicted outbreaks of viral hepatitis A, which is spreading rapidly in Korean society, by region using a deep learning technique and publicly available datasets. For this study, we gathered information from five organizations under the open data policy: Korea Centers for Disease Control and Prevention (KCDC), National Institute of Environmental Research (NIER), Korea Meteorological Agency (KMA), Public Open Data Portal, and Korea Environment Corporation (KECO). Patient information, water environment information, weather information, population information, and air pollution information were acquired, and their correlations were identified. Next, epidemic outbreaks were predicted using data preprocessing and a 3D LSTM. The experimental results were compared with those of various machine learning methods using the RMSE. In this paper, we attempted to predict regional epidemic outbreaks of hepatitis A by linking the open data environment with deep learning. We expect that the experimental process and results will demonstrate the importance and usefulness of establishing an open data environment.

Keywords:

predicting; regional outbreaks; hepatitis A; deep learning; open data; big data; machine learning

1. Introduction

As the spread of COVID-19, SARS, and MERS has shown, the number of victims can be significantly reduced if an epidemic can be predicted. Infectious diseases are regarded as "the existence of fear" by living things, including mankind, because we do not know when and how they will occur [1,2,3].

Recently, many researchers have used machine learning, a form of artificial intelligence, to obtain effective predictions of changes in people's emotions or decision-making from social network data, such as tweets on Twitter, posts on Facebook, and blogs [4,5]. Random Forest, Gradient Boosting, Lasso, Ridge, Linear Regression, KNN, MLP, XGBoost, and CatBoost are machine learning techniques commonly used for data prediction. Consider the advantages and disadvantages of two of them. Linear regression is simple to implement, easy to understand, and quick to train, and it makes predictions directly from the input features. KNN is easy to understand and requires little parameter adjustment. On the other hand, linear regression is limited to linear relationships, which makes it unsuitable for many real-life problems; it assumes by default that the inputs are free of error; and its assumption of independent features may not always hold. For KNN, extra care is required in selecting K, and the computational cost is high for large datasets.

Various disease prediction studies have been published recently. Santos and Matos studied influenza in 2014, but they considered only Portugal [6]. In 2014, Grover and Aujla processed tweets to predict swine flu [7]. In 2017, McGough et al. studied the Zika virus, but they forecast only one parameter [8]. In 2018, Nair et al. studied heart disease; however, their work was not framed as epidemic prediction, and it needed to be linked with a health care service provider to operate in real time [9]. In 2019, Nduwayezu et al. studied malaria, but their study was limited to Nigeria [10]. In 2020, Petropoulos and Makridakis worked on COVID-19, but they did not use machine learning [11].

In Korea, six diseases are designated as category I National Notifiable Infectious Diseases (NNIDs) according to the definition established in 1954, as shown in Table 1. Recently, the rates of cholera, typhoid fever, paratyphoid fever, shigellosis, and enterohemorrhagic Escherichia coli infection have been low in Korea, although typhoid fever, cholera, and shigellosis in particular were highly prevalent in the 1960s. According to an analysis of the nation's hepatitis A antibody retention rate over the 10 years from 2005 to 2014, 7 out of 10 infected people were in their 30s and 40s, so hepatitis A prevention measures for this age group are necessary. Over the past 10 years, Korea has adopted the openness of public data as a national indicator and has released various daily-life data, such as population, meteorological observation, water quality, and air quality data. This has allowed us to use deep learning to examine the relationship between diseases and public daily-life data collected over many years.

Table 1.

Prevalence of National Notifiable Infectious Category I Diseases in Korea (restructured based on [12,13]).

Hence, in this paper, we aim to minimize the costs and damage caused by epidemic outbreaks by predicting regional outbreaks of hepatitis A from publicly available Korean data using recent machine learning algorithms.

2. Prediction System of Hepatitis A

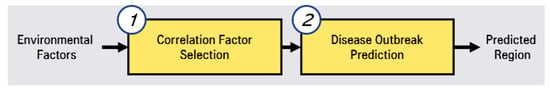

To predict hepatitis A, we conducted a two-phase approach, as shown in Figure 1.

Figure 1.

Two-phase approach of prediction system for hepatitis A.

The first step is correlated factor selection, which chooses the factors used to train the prediction model. In this step, we separate relevant from irrelevant environmental factors through statistical analysis. The second step is disease outbreak prediction using LSTMs (long short-term memory networks) [14,15]. In this phase, we preprocess the selected correlated factors and perform the prediction with LSTMs.

2.1. Correlated Factor Selection

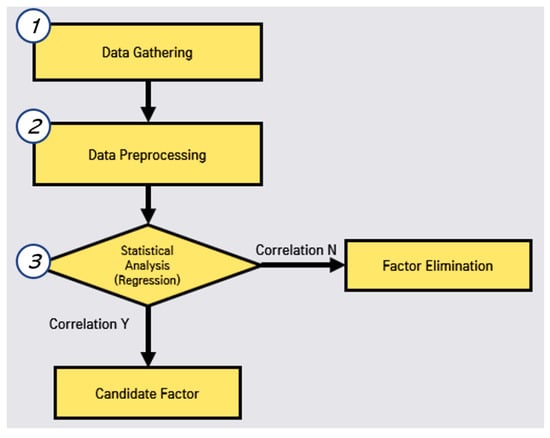

In the correlated factor selection, we conduct data gathering, data preprocessing, and statistical analysis, as shown in Figure 2. First, we perform web crawling to gather open data for each region in Korea from the following open data sites:

Figure 2.

Process of correlated factor selection.

1. Patient information: KCDC (Korea Centers for Disease Control & Prevention), http://www.cdc.go.kr;

2. Water Environment Information: NIER (National Institute of Environmental Research), http://water.nier.go.kr/publicMain/mainContent.do;

3. Weather Information: KMA (Korea Meteorological Agency), https://data.kma.go.kr;

4. Population Information: Public Open Data Portal, https://www.data.go.kr/;

5. Air Pollution Information: KECO (Korea Environment Corporation) AirKorea, https://www.airkorea.or.kr.

Second, we preprocess the data to handle missing values and to regularize the data for individual regions in Korea. Third, we evaluate the correlation between the disease (hepatitis A) and each environmental factor and eliminate the unrelated factors. The remaining factors are the candidate factors used to predict outbreaks.
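As a rough illustration of the third step, the sketch below keeps only the factors whose Pearson correlation with the hepatitis A series clears a threshold. It is not the authors' exact procedure (Section 3.1 reports a multiple regression analysis); the column names and the 0.2 cut-off are hypothetical, and all columns are assumed to be numeric.

```python
import numpy as np
import pandas as pd


def select_correlated_factors(df: pd.DataFrame, target: str, min_abs_r: float = 0.2) -> list:
    """Return factor columns whose |Pearson r| with `target` is at least `min_abs_r`.

    The 0.2 threshold is a hypothetical default, not a value reported in the paper.
    Assumes every column of `df` is numeric.
    """
    # Pearson correlation of every column with the target column (target itself dropped)
    r = df.corr()[target].drop(target)
    # Keep factors whose absolute correlation clears the threshold, strongest first
    kept = r[r.abs() >= min_abs_r]
    return kept.abs().sort_values(ascending=False).index.tolist()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 200
    cod = rng.normal(size=n)                      # hypothetical related factor
    unrelated = rng.normal(size=n)                # hypothetical unrelated factor
    cases = 0.8 * cod + rng.normal(scale=0.5, size=n)
    frame = pd.DataFrame({"hepatitis_a": cases, "COD": cod, "unrelated": unrelated})
    print(select_correlated_factors(frame, "hepatitis_a"))
```

A fixed correlation cut-off is easy to audit, but it only captures linear, one-factor-at-a-time relationships, which is one reason a multivariate check such as regression is still useful afterwards.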

2.2. Disease Outbreak Prediction with Hepatitis A by Regression Analysis

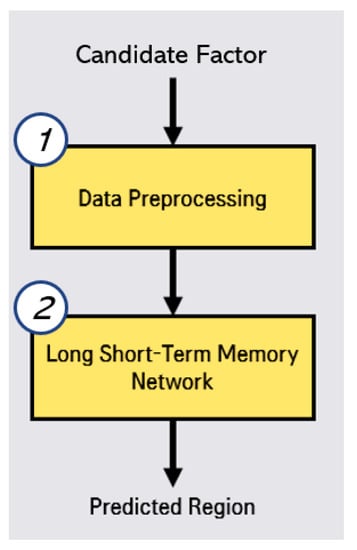

In the disease outbreak prediction, we conduct two steps, data preprocessing and prediction by LSTMs, using the selected correlated factors (candidate factors), as shown in Figure 3. In the preprocessing step, we reorganize the data by living area and scale the features to the range from 0 to 1. In the prediction step, we calculate the RMSE (Root Mean Square Error) [16] for Random Forest [17], Gradient Boosting Regression [18], Lasso [19], Ridge [20], Linear Regression [21], K-Neighbors Regression, MLP (Multi-Layer Perceptron) Regression [22], XGBoost Regression, and CatBoost Regression. These RMSE results are used to adjust the hyper-parameters and to select the optimal algorithm.
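The RMSE used for this comparison is $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}$, where $y_i$ is an observed value and $\hat{y}_i$ its prediction. The sketch below computes it and ranks the seven scikit-learn regressors from the list at their default settings. It is an illustration on synthetic data, not the authors' code; XGBoost and CatBoost are omitted because they live in separate packages, though their regressors could be added to the same dictionary.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import Lasso, LinearRegression, Ridge
from sklearn.neighbors import KNeighborsRegressor
from sklearn.neural_network import MLPRegressor


def rmse(y_true, y_pred) -> float:
    """Root mean square error: sqrt(mean((y - y_hat)^2))."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def compare_models(X_train, y_train, X_test, y_test) -> dict:
    """Fit each candidate regressor with default settings and return its test RMSE."""
    models = {
        "Random Forest": RandomForestRegressor(random_state=0),
        "Gradient Boosting": GradientBoostingRegressor(random_state=0),
        "Lasso": Lasso(),
        "Ridge": Ridge(),
        "Linear Regression": LinearRegression(),
        "KNN": KNeighborsRegressor(),
        "MLP": MLPRegressor(max_iter=2000, random_state=0),
    }
    scores = {}
    for name, model in models.items():
        model.fit(X_train, y_train)
        scores[name] = rmse(y_test, model.predict(X_test))
    # Sort so that the lowest (best) RMSE comes first
    return dict(sorted(scores.items(), key=lambda kv: kv[1]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(size=(120, 5))                # hypothetical scaled features
    y = X @ np.array([0.5, -0.2, 0.1, 0.0, 0.3]) + rng.normal(scale=0.05, size=120)
    for name, score in compare_models(X[:90], y[:90], X[90:], y[90:]).items():
        print(f"{name:18s} {score:.4f}")
```

Because the features are scaled to [0, 1], RMSE values from different models are on the same scale and can be compared directly, as in Table 8.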

Figure 3.

Process of outbreak region prediction for hepatitis A.

3. Experimental Results

3.1. Correlated Factor Selection

We gathered the data from the websites listed in Section 2.1 through web crawling, as shown in Table 2.

Table 2.

Examples of web crawling data.

Measurement data that are missing for various reasons are called missing values. Missing values appear as None, NaN, or blanks in a program, and a dataset with many missing values greatly degrades the quality of the model's statistical predictions. Machine learning models in particular assume that all input values are meaningful, so missing values affect model quality even more. Rubin [23] classified missing data problems into three categories: missing completely at random (MCAR), missing at random (MAR), and missing not at random (MNAR). If the probability of being missing is the same for all cases, the data are MCAR. If the probability of being missing is the same only within groups defined by the observed data, the data are MAR. If neither MCAR nor MAR holds, the data are MNAR. Methods for handling missing values differ between cross-sectional data, which consist of observations of each item at one point in time, and panel (longitudinal) data, which consist of repeated observations of multiple subjects over time. Methods commonly used for cross-sectional data include removing missing values; imputing the mean or median, the most frequent value, 0, or a specific constant; K-NN imputation; MICE (Multivariate Imputation by Chained Equations); and deep learning-based imputation.
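Most of the cross-sectional strategies listed above correspond to standard scikit-learn imputers. The sketch below applies them to a made-up 4×3 matrix (not the study's data). `IterativeImputer` is scikit-learn's MICE-style chained-equation imputer and, at the time of writing, still requires an experimental enabling import.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401  (unlocks IterativeImputer)
from sklearn.impute import IterativeImputer, KNNImputer, SimpleImputer

# Made-up measurements: rows are observations, columns are variables, np.nan marks a gap
X = np.array([
    [7.1, 2.0, np.nan],
    [7.3, np.nan, 1.2],
    [np.nan, 2.4, 1.1],
    [7.0, 2.2, 1.3],
])

# 1) Listwise deletion: keep only fully observed rows
complete_rows = X[~np.isnan(X).any(axis=1)]

# 2) Simple statistics: column mean, column median, most frequent value, or a constant such as 0
mean_imputed = SimpleImputer(strategy="mean").fit_transform(X)
median_imputed = SimpleImputer(strategy="median").fit_transform(X)
mode_imputed = SimpleImputer(strategy="most_frequent").fit_transform(X)
zero_imputed = SimpleImputer(strategy="constant", fill_value=0.0).fit_transform(X)

# 3) K-NN: fill from the k most similar rows (NaN-aware Euclidean distance)
knn_imputed = KNNImputer(n_neighbors=2).fit_transform(X)

# 4) MICE-style: model each column from the others, iterating round-robin
mice_imputed = IterativeImputer(random_state=0).fit_transform(X)

print(mean_imputed)
```

Deletion is only safe when data are MCAR; the model-based options (K-NN, MICE) exploit relationships between columns and are usually preferred when data are MAR.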

In this study, we used machine learning-based imputation, which is widely used because it is generally more accurate than simple methods and can handle encoded features. When an item has too many missing values, the item is removed. We estimated each missing value using a Random Forest regressor, with the five values before and the five values after the missing value used as training data. Because there was little training data, we set the number of estimators to 50 and the maximum depth to 4 to prevent overfitting.
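A minimal sketch of this windowed Random Forest imputation, assuming rows are in time order, the other columns are fully observed and act as features, and "five before and five after" means the observed rows within five positions of each gap. The function name and column names are hypothetical; only `n_estimators=50` and `max_depth=4` come from the text.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor


def rf_impute_column(df: pd.DataFrame, target: str, window: int = 5) -> pd.Series:
    """Fill NaNs in `target` with a Random Forest trained on nearby rows (hypothetical helper).

    For each gap, the training set is the rows within `window` positions before and
    after it whose `target` value is observed; all other columns are used as features.
    """
    features = [c for c in df.columns if c != target]
    filled = df[target].copy()
    for pos in np.flatnonzero(df[target].isna().to_numpy()):
        lo, hi = max(0, pos - window), min(len(df), pos + window + 1)
        neighbours = df.iloc[lo:hi]
        train = neighbours[neighbours[target].notna()]
        if train.empty:
            continue  # nothing observed nearby; leave the gap (such items may be removed)
        model = RandomForestRegressor(n_estimators=50, max_depth=4, random_state=0)
        model.fit(train[features], train[target])
        filled.iloc[pos] = model.predict(df.iloc[[pos]][features])[0]
    return filled


if __name__ == "__main__":
    data = pd.DataFrame({
        "temperature": np.arange(12, dtype=float),   # hypothetical complete feature
        "COD": [2.0, 2.1, 2.3, np.nan, 2.6, 2.7, 2.9, 3.0, np.nan, 3.3, 3.4, 3.6],
    })
    data["COD"] = rf_impute_column(data, "COD")
    print(data)
```

Training a small, shallow forest per gap keeps each model local in time, which matches the intent of using only neighbouring values when little data is available.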

Table 3 shows how the missing values were handled. Missing values are marked with '*' to represent blank information in Table 3 (upper), and they are replaced with new values according to the missing-value policy in Table 3 (lower).

Table 3.

Replacement of missing values (upper: original data, lower: data with missing values replaced).

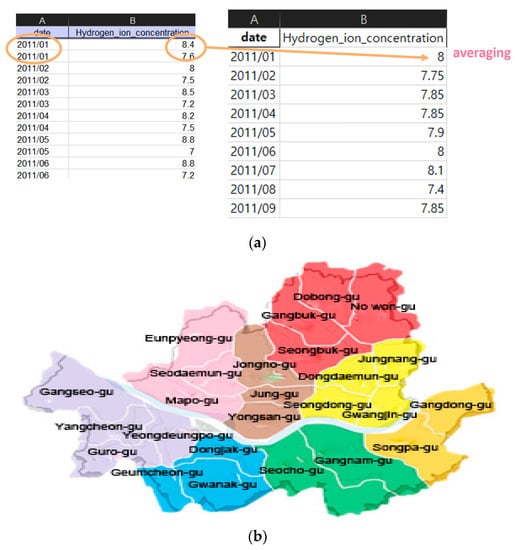

We regularized the data over time and by region, as shown in Figure 4: monthly data were taken as the mean of the measurements in each month, and regions were integrated into living areas together with their correlated environmental data. Figure 4a (left) shows the original data, and Figure 4a (right) shows the monthly means. The publicly available water quality data contain gaps at certain times owing to problems such as the installation of measurement sensors. To solve this problem, we recombined the regions based on living area, as shown in Figure 4b, dividing them into eight areas. The colors were chosen arbitrarily so that the regions can be clearly distinguished.
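The temporal and regional regularization can be sketched with pandas: measurements are averaged per calendar month, and districts are mapped to living areas before averaging. The district-to-area mapping below is hypothetical (the paper's eight-area grouping is shown only in Figure 4b), and the column names `date` and `district` are assumptions.

```python
import pandas as pd

# Hypothetical mapping from measurement districts to living areas (illustrative only)
LIVING_AREA = {"Jongno-gu": "Area A", "Jung-gu": "Area A", "Gangnam-gu": "Area B"}


def monthly_living_area_means(df: pd.DataFrame) -> pd.DataFrame:
    """Average raw measurements per living area and calendar month.

    Expects columns 'date' (datetime-like), 'district', and numeric measurement columns.
    """
    out = df.copy()
    out["month"] = pd.to_datetime(out["date"]).dt.to_period("M")
    out["living_area"] = out["district"].map(LIVING_AREA)
    out = out.drop(columns=["date", "district"])
    # Districts without a mapping get NaN keys and are dropped by groupby
    return out.groupby(["living_area", "month"]).mean().reset_index()


if __name__ == "__main__":
    raw = pd.DataFrame({
        "date": ["2018-01-03", "2018-01-20", "2018-01-11", "2018-02-05"],
        "district": ["Jongno-gu", "Jung-gu", "Gangnam-gu", "Jongno-gu"],
        "COD": [2.0, 4.0, 3.0, 5.0],
    })
    print(monthly_living_area_means(raw))
```

Pooling districts into larger living areas means a month with no reading at one station can still be covered by another station in the same area.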

Figure 4.

Regulation of data (a) left: original data, upper right: mean of monthly data, (b) integrated living area.

We then converted the number of epidemic outbreaks into the number of outbreaks per 100,000 population so that regions with different population sizes can be compared under the same conditions, as shown in Table 4.
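The conversion is a simple rate: cases divided by population, multiplied by 100,000. In the illustrative (made-up) numbers below, 150 cases in a population of 3,000,000 correspond to 5 cases per 100,000.

```python
def rate_per_100k(cases: float, population: float) -> float:
    """Outbreak count normalized to cases per 100,000 residents."""
    if population <= 0:
        raise ValueError("population must be positive")
    # Multiply first so that whole-number inputs stay exact where possible
    return cases * 100_000 / population


if __name__ == "__main__":
    print(rate_per_100k(150, 3_000_000))  # hypothetical region: 150 cases, 3 million residents
```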

Table 4.

Epidemic outbreaks converted to the number of outbreaks per 100,000 population.

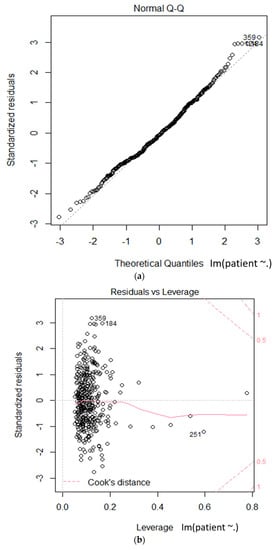

We used multiple regression analysis to identify reliable factors in the relationship between hepatitis A and the environmental factors. We validated the goodness of fit of the model using the R-squared value, as shown in Table 5, and obtained an R-squared value of 0.7054. In Table 5, factors with positive correlations (COD (Chemical Oxygen Demand), total coliform count, total dissolved nitrogen, daily precipitation, and PM10 (particulate matter)) are shown in italic bold blue characters with a $ indicator after the item name. Factors with negative correlations (TOC (Total Organic Carbon), fecal coliform count, monthly precipitation, and SO2) are shown in italic, bold, underlined red characters with a % indicator after the item name. Figure 5 shows the results of the linear-model-based statistical test between hepatitis A and the environmental factors. Based on the test, the differences between the two groups were interpreted as statistically significant.
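A minimal NumPy sketch of the multiple regression step: fit ordinary least squares with an intercept, read the sign of each coefficient as a positive or negative association, and compute R-squared as $1 - SS_{res}/SS_{tot}$. The data below are synthetic; a package such as statsmodels would additionally report the p-values and the residual diagnostics shown in Figure 5.

```python
import numpy as np


def ols_fit(X, y):
    """Ordinary least squares with an intercept; returns (coefficients, R^2).

    coefficients[0] is the intercept; coefficients[1:] follow the columns of X.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])   # prepend an intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)             # residual sum of squares
    ss_tot = float(((y - y.mean()) ** 2).sum())       # total sum of squares
    return coef, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(100, 2))                     # two hypothetical environmental factors
    y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.5, size=100)
    coef, r2 = ols_fit(X, y)
    print("intercept/coefficients:", np.round(coef, 2), "R^2:", round(r2, 4))
```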

Table 5.

Multiple regression analysis results of each environmental factor and hepatitis A.

Figure 5.

Linear model based statistical test on the relationships between hepatitis A and environmental factors (a): theoretical quantiles vs standardized residuals; (b): leverage vs standardized residuals.

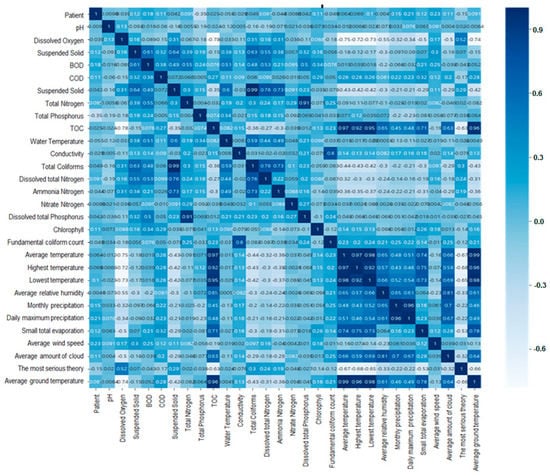

Figure 6 shows a heatmap of the correlation coefficients among the environmental factors. Some environmental predictors used in the regression analysis have low correlations with the other environmental predictors; we therefore concluded that correlations among the predictors did not adversely affect the analysis. The 29 environmental factors correlated with the hepatitis A patient information are hydrogen ion concentration (pH), dissolved oxygen, BOD, COD, suspended solids, total nitrogen, total phosphorus, TOC, mercury, electrical conductivity, total coliform bacteria, dissolved total nitrogen, ammonia nitrogen, nitrate nitrogen, dissolved total phosphorus, phosphate phosphorus, chlorophyll, E. coli bacteria, average temperature, maximum temperature, minimum temperature, average relative humidity, monthly precipitation, highest daily precipitation, total small-pan evaporation, average wind speed, average cloud cover, snow depth, and average ground temperature.
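The heatmap in Figure 6 visualizes a correlation matrix; the same check can be done numerically by listing predictor pairs whose absolute correlation exceeds a cut-off. The 0.8 threshold below is a hypothetical choice, and the matrix itself could be plotted with, for example, `seaborn.heatmap`.

```python
import pandas as pd


def highly_correlated_pairs(df: pd.DataFrame, threshold: float = 0.8) -> list:
    """List predictor pairs whose |Pearson r| exceeds `threshold` (hypothetical cut-off).

    These are the off-diagonal cells a correlation heatmap would highlight.
    """
    corr = df.corr()
    cols = corr.columns
    pairs = []
    for i in range(len(cols)):
        for j in range(i + 1, len(cols)):   # upper triangle only, skipping the diagonal
            r = corr.iloc[i, j]
            if abs(r) > threshold:
                pairs.append((cols[i], cols[j], float(r)))
    return pairs


if __name__ == "__main__":
    predictors = pd.DataFrame({
        "avg_temp": [1.0, 2.0, 3.0, 4.0],
        "max_temp": [2.0, 4.0, 6.0, 8.0],        # perfectly collinear with avg_temp
        "wind_speed": [1.0, -1.0, -1.0, 1.0],    # uncorrelated with both
    })
    print(highly_correlated_pairs(predictors))
```

An empty or short list supports the conclusion that multicollinearity among predictors is not distorting the regression coefficients.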

Figure 6.

Validation of correlation coefficient between environmental factors using heatmap.

3.2. Outbreak Region Prediction of Hepatitis A

Through the correlated factor selection process, we integrated patient information, water environment information, weather information, population information, and air pollution information, and normalized the data per 100,000 population to obtain the results shown in Table 6. During this process, we removed records without patient information or relevant local information. The resulting data cover 17 regions across the country with 50 items, and Seoul was recombined into eight areas based on living areas. The dataset comprises 613 national records from 2016 to 2018 and 769 Seoul records from 2011 to 2018.

Table 6.

Fifty integrated factors (patient information, region information, environmental factors).

Table 7 shows the data normalized by scaling. We use min–max normalization, which rescales each feature to the range [0, 1]. For a feature value $x$, Equation (1) is:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)} \qquad (1)$$

where $\min(x)$ and $\max(x)$ are the minimum and maximum values of that feature.

Table 7.

Example of data scaling (upper: original data, lower: normalized data).

Table 7 (upper) shows the original data before scaling, and Table 7 (lower) shows the data normalized by scaling. In this process, the same scaling is applied to the training and test data.
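Applying "the same scaling" to training and test data usually means taking the minimum and maximum in Equation (1) from the training set only and reusing them for the test set. The sketch below does this by hand and checks it against scikit-learn's `MinMaxScaler`; the arrays are made up.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler


def min_max(x, x_min, x_max):
    """Equation (1): x' = (x - min) / (max - min), applied column-wise."""
    return (x - x_min) / (x_max - x_min)


X_train = np.array([[10.0, 0.5], [20.0, 1.5], [30.0, 1.0]])   # made-up training data
X_test = np.array([[25.0, 2.0]])                               # made-up test data

# Fit the scaling parameters on the training data only, then reuse them for the test data
lo, hi = X_train.min(axis=0), X_train.max(axis=0)
train_scaled = min_max(X_train, lo, hi)
test_scaled = min_max(X_test, lo, hi)

# scikit-learn's MinMaxScaler gives the same result
scaler = MinMaxScaler(feature_range=(0, 1)).fit(X_train)
assert np.allclose(scaler.transform(X_test), test_scaled)
print(test_scaled)
```

Note that a test value outside the training range (here the second feature) maps outside [0, 1]; this is expected and avoids leaking test-set information into the scaling. A constant training column (max equal to min) would need special handling to avoid division by zero.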

We also selected the optimal model among conventional machine learning algorithms for comparison with the LSTM network. Nine algorithms were tested: Random Forest, Gradient Boosting, Lasso, Ridge, Linear Regression, KNN, MLP, XGBoost, and CatBoost. Table 8 compares the nine algorithms to choose candidates for hyper-parameter tuning, using the RMSE (Root Mean Square Error) as the comparison metric. Based on the experimental results, Gradient Boosting, CatBoost, and Random Forest were selected for hyper-parameter tuning. After tuning, the best algorithm was Gradient Boosting, whose RMSE decreased from 0.077935 to 0.0759682. We estimated the optimal parameters for Gradient Boosting using GridSearchCV [24], changing the learning rate from the default value of 0.1 to 0.075, n_estimators from the default value of 100 to 200, and max_depth from the default value of 3 to 4. GridSearchCV is a scikit-learn tool that, given the desired hyper-parameters and their candidate values, automatically trains and evaluates a model for every combination of those values. It then reports the best-performing combination as the final output, according to the evaluation metric set by the user (in this paper, MSE) [24].
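A sketch of the grid search described above, built around the default and tuned values reported in the text. The data, `cv=3`, and `random_state=0` are assumptions. Because scikit-learn maximizes scores, MSE is passed as `neg_mean_squared_error` and its sign is flipped before taking the square root.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV

# Candidate values: scikit-learn defaults alongside the tuned values reported in the text
PARAM_GRID = {
    "learning_rate": [0.075, 0.1],
    "n_estimators": [100, 200],
    "max_depth": [3, 4],
}


def tune_gradient_boosting(X, y, cv=3):
    """Exhaustively evaluate PARAM_GRID; return (best parameters, best cross-validated RMSE)."""
    search = GridSearchCV(
        GradientBoostingRegressor(random_state=0),
        PARAM_GRID,
        scoring="neg_mean_squared_error",   # higher is better, so MSE is negated
        cv=cv,
    )
    search.fit(X, y)
    best_rmse = float(np.sqrt(-search.best_score_))
    return search.best_params_, best_rmse


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(size=(80, 4))                  # hypothetical scaled features
    y = X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.05, size=80)
    params, score = tune_gradient_boosting(X, y)
    print(params, round(score, 4))
```

With an integer `cv`, the folds are contiguous but not time-ordered in the sense of never training on the future; passing `cv=TimeSeriesSplit(n_splits=3)` from `sklearn.model_selection` is an alternative when the rows are a time series.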

Table 8.

RMSE Comparison results for the nine algorithms.

The tests were conducted in one area of Seoul. The training data covered 2016 to March 2018, the validation data covered April to October 2018, and the test data used for the predictions covered November and December 2018. The hepatitis A epidemic is shown in Figure 7, where the blue line is the training data, the orange line is the validation data, and the green line is the test data. Figure 7 thus shows how the training, validation, and test data were selected within the time series, together with the change in the number of hepatitis A patients.
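Because the data form a time series, the split is by date rather than random. A minimal pandas sketch of the periods stated above, assuming monthly records with a hypothetical `date` column:

```python
import pandas as pd


def chronological_split(df: pd.DataFrame, date_col: str = "date"):
    """Split monthly records into train / validation / test periods by date."""
    d = pd.to_datetime(df[date_col])
    train = df[(d >= "2016-01-01") & (d <= "2018-03-31")]   # 2016 to March 2018
    val = df[(d >= "2018-04-01") & (d <= "2018-10-31")]     # April to October 2018
    test = df[(d >= "2018-11-01") & (d <= "2018-12-31")]    # November and December 2018
    return train, val, test


if __name__ == "__main__":
    months = pd.DataFrame({
        "date": pd.date_range("2016-01-01", "2018-12-01", freq="MS"),
        "cases_per_100k": range(36),               # placeholder values
    })
    train, val, test = chronological_split(months)
    print(len(train), len(val), len(test))
```

Keeping the periods in order ensures the model is never validated or tested on months that precede its training data.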

Figure 7.

Visualization of epidemic of hepatitis A dataset (training, validation, test data).

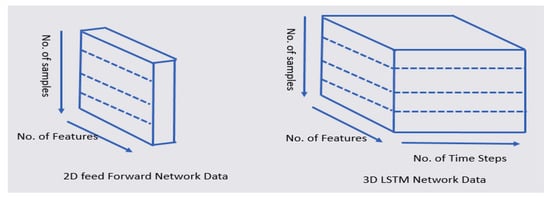

We transformed the 2D data into 3D data, as shown in Figure 8. The 2D data consist of samples and features, whereas the 3D data consist of samples, time steps, and features. To predict the value at time point $y_{t+1}$ using the LSTM, a total of six time steps, from $y_{t-5}$ to $y_t$, were used, as shown in Table 9. Our model is a sequential model consisting of an LSTM layer and a Dense layer. The optimizer is RMSProp (Root Mean Square Propagation), and the loss function is MSE (Mean Square Error). Rather than accumulating all previous squared gradients uniformly, RMSProp uses an exponentially decaying average that emphasizes recent gradients, which prevents the effective learning rate from shrinking too close to zero. MSE, the most commonly used regression loss function, is the mean of the squared differences between the target values and the predicted values. Because the dataset is small, the batch size was set to 2.
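The 2D-to-3D transformation is a sliding window: each sample stacks the feature rows for $t-5, \dots, t$ and is paired with the target at $t+1$, giving an array of shape (samples, time steps, features), the layout Keras LSTM layers expect. A minimal NumPy sketch with hypothetical inputs:

```python
import numpy as np


def make_windows(features, target, n_steps: int = 6):
    """Turn 2D data (samples, features) into 3D LSTM input (samples, n_steps, features).

    Window k holds rows t-n_steps+1 .. t and is paired with target[t+1].
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    if len(features) <= n_steps:
        raise ValueError("need more rows than n_steps to form at least one window")
    X, y = [], []
    for t in range(n_steps - 1, len(features) - 1):
        X.append(features[t - n_steps + 1 : t + 1])   # six consecutive rows ending at t
        y.append(target[t + 1])                        # value to predict at t+1
    return np.stack(X), np.array(y)


if __name__ == "__main__":
    feats = np.arange(20, dtype=float).reshape(10, 2)   # 10 months, 2 hypothetical features
    cases = np.arange(10, dtype=float)
    X3d, y_next = make_windows(feats, cases)
    print(X3d.shape, y_next)
```

With 10 monthly rows and six time steps, only four windows remain, which illustrates why the usable sample count shrinks and a small batch size was needed.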

Figure 8.

Transformation of 2D feed-forward data into 3D LSTM data.

Table 9.

Serial data for LSTM.

We train for up to 15 epochs. Early stopping is used to halt the training of the LSTMs at the right time, avoiding both overfitting and underfitting.
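A minimal Keras sketch of the configuration described in this section: a Sequential model with one LSTM layer and one Dense output, RMSprop, MSE loss, batch size 2, at most 15 epochs, and early stopping on the validation loss. The number of LSTM units (32) and the early-stopping patience (3) are assumptions not stated in the text, and TensorFlow 2.x is assumed to be installed.

```python
import numpy as np
import tensorflow as tf


def build_lstm(n_steps: int, n_features: int, units: int = 32) -> tf.keras.Model:
    """Sequential model: one LSTM layer and a Dense output (units=32 is an assumption)."""
    model = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(n_steps, n_features)),  # (time steps, features)
        tf.keras.layers.LSTM(units),
        tf.keras.layers.Dense(1),                             # next-month value
    ])
    model.compile(optimizer=tf.keras.optimizers.RMSprop(), loss="mse")
    return model


def train(model, X_train, y_train, X_val, y_val):
    """Fit for at most 15 epochs with batch size 2, stopping early on validation loss."""
    stopper = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=3, restore_best_weights=True  # patience=3 is an assumption
    )
    return model.fit(
        X_train, y_train,
        validation_data=(X_val, y_val),
        epochs=15,
        batch_size=2,
        callbacks=[stopper],
        verbose=0,
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_tr = rng.random((20, 6, 4)).astype("float32")   # 20 windows, 6 steps, 4 features
    y_tr = rng.random(20).astype("float32")
    X_va = rng.random((6, 6, 4)).astype("float32")
    y_va = rng.random(6).astype("float32")
    model = build_lstm(6, 4)
    history = train(model, X_tr, y_tr, X_va, y_va)
    print("epochs run:", len(history.history["loss"]))
```

`restore_best_weights=True` returns the weights from the epoch with the lowest validation loss rather than the last epoch, which matters when training stops after the validation loss has started to rise.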

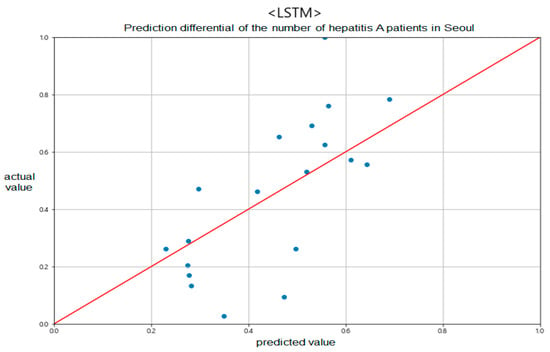

Because the amount of data used in this study is small, early stopping is particularly important for preventing overfitting. Figure 9 compares the predicted and actual values for one area of Seoul.

Figure 9.

Prediction differential of the number of hepatitis A patients in Seoul between predicted and actual values.

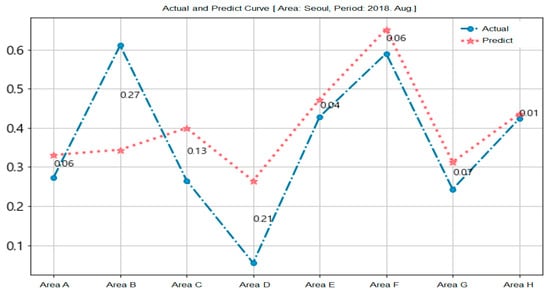

Figure 10 shows the prediction results for the hepatitis A epidemic in Seoul. We used training data from January 2016 to July 2018 and test data from August 2018 for the eight recombined areas of Seoul. The circle symbols are the actual data, and the star symbols are the predicted data for each area.

Figure 10.

Prediction of epidemic of hepatitis A in Seoul.

Areas B and D show large differences between the predictions and the measurements because the weather and air pollution information used in the predictions was measured across Seoul as a whole rather than in each specific area. Another potential source of error is that the eight recombined areas were forcibly mapped onto the district areas of Seoul.

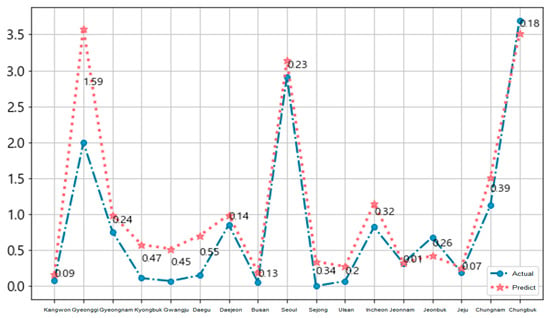

Figures 11 and 12 show the national predictions of the hepatitis A epidemic for the 17 areas of Korea, one per local government unit. We used training data from January 2016 to November 2018 and test data from December 2018. In Figure 11, the blue circles are the actual data and the red stars are the predicted data for each area.

Figure 11.

National prediction of epidemic of hepatitis A per local government unit.

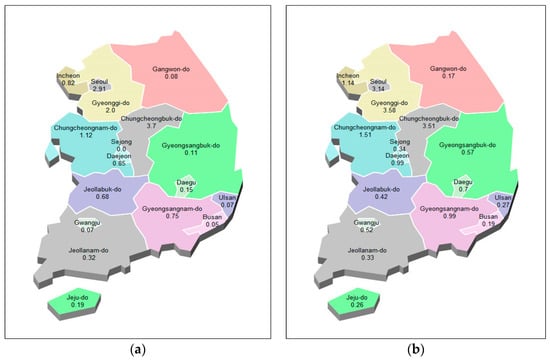

Figure 12.

National prediction map of epidemic of hepatitis A. (a): actual values, (b): predicted values.

4. Conclusions

In this paper, we proposed a prediction model for the hepatitis A epidemic. We analyzed the correlation between environmental factors and hepatitis A based on data collected from public data systems in Korea. The areas of occurrence were predicted with a 3D LSTM, a deep learning method, using water environment, weather, population, air pollution, and hepatitis A patient information.

The prediction of hepatitis A showed high accuracy, with an error of about person per 100,000 population. These results indicate that the environmental information used in this study can predict the prevalence of hepatitis A. In addition, our study found that the fecal coliform count and PM10 were environmental factors of high importance in predicting hepatitis A. In future research, we will identify factors that increase reliability and apply them to more infectious diseases.

Author Contributions

Conceptualization, M.L. and I.N.; data curation, K.L., M.L. and I.N.; formal analysis, K.L. and I.N.; funding acquisition, I.N.; methodology, K.L., M.L. and I.N.; project administration, I.N.; resources, K.L. and M.L.; supervision, I.N.; validation, M.L. and I.N.; visualization, M.L.; writing—original draft, M.L. and I.N.; writing—review and editing, K.L. and I.N. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a research fund from Chosun University, 2020.

Data Availability Statement

We used public data from KCDC to study only information on the number of cases of infectious diseases by region. 1. Patient information: KCDC (Korea Centers for Disease Control & Prevention), http://www.cdc.go.kr; 2. Water Environment Information: National Institute of Environmental Research (NIER), http://water.nier.go.kr/publicMain/mainContent.do; 3. Weather Information: KMA (Korea Meteorological Agency), https://data.kma.go.kr; 4. Population Information: Public Open Data Portal, https://www.data.go.kr/; 5. Air Pollution Information: KECO (Korea Environment Corporation) AirKorea, https://www.airkorea.or.kr.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Lee, M.K.; Paik, J.H.; Na, I.S. (Eds.) Outbreak Prediction of Hepatitis A in Korea based on Statistical Analysis and LSTM Network. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020.

2. Park, S.; Cho, E. National Infectious Diseases Surveillance data of South Korea. Epidemiol. Health 2014, 36, e2014030.

3. Alamo, T.; Reina, D.G.; Mammarella, M.; Abella, A. Covid-19: Open-Data Resources for Monitoring, Modeling, and Forecasting the Epidemic. Electronics 2020, 9, 827.

4. Singh, R.; Singh, R. Applications of sentiment analysis and machine learning techniques in disease outbreak prediction—A review. Mater. Today Proc. 2021.

5. Hong, T.; Pinson, P.; Fan, S.; Zareipour, H.; Troccoli, A.; Hyndman, R.J. Probabilistic energy forecasting: Global Energy Forecasting Competition 2014 and beyond. Int. J. Forecast. 2016, 32, 896–913.

6. Santos, J.C.; Matos, S. Analysing Twitter and web queries for flu trend prediction. Theor. Biol. Med. Model. 2014, 11, 1–11.

7. Grover, S.; Aujla, G.S. Prediction model for Influenza epidemic based on Twitter data. Int. J. Adv. Res. Comput. Commun. Eng. 2014, 3, 7541–7545.

8. McGough, S.F.; Brownstein, J.S.; Hawkins, J.B.; Santillana, M. Forecasting Zika Incidence in the 2016 Latin America Outbreak Combining Traditional Disease Surveillance with Search, Social Media, and News Report Data. PLOS Negl. Trop. Dis. 2017, 11, e0005295.

9. Nair, L.R.; Shetty, S.D.; Shetty, S.D. Applying spark based machine learning model on streaming big data for health status prediction. Comput. Electr. Eng. 2018, 65, 393–399.

10. Nduwayezu, M.; Satyabrata, A.; Han, S.Y.; Kim, J.E.; Kim, H.; Park, J.; Hwang, W.J. Malaria Epidemic Prediction Model by Using Twitter Data and Precipitation Volume in Nigeria. J. Korea Multimed. Soc. 2019, 22, 588–600.

11. Petropoulos, F.; Makridakis, S. Forecasting the novel coronavirus COVID-19. PLoS ONE 2020, 15, e0231236.

12. Korea Centers for Disease Control and Prevention. 2013 Infectious Diseases Surveillance Yearbook; KCDC: Osong, Korea, 2014; pp. 50–63.

13. Korea Centers for Disease Control and Prevention. Public Health Weekly Report Disease Surveillance Statistics, 10th ed.; KCDC: Osong, Korea, 2018.

14. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

15. Cho, W.; Kim, S.; Na, M.; Na, I. Forecasting of Tomato Yields Using Attention-Based LSTM Network and ARMA Model. Electronics 2021, 10, 1576.

16. Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)?—Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014, 7, 1247–1250.

17. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

18. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

19. Tibshirani, R. Regression shrinkage and selection via the lasso: A retrospective. J. R. Stat. Soc. Ser. B 2011, 73, 273–282.

20. Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67.

21. Schneider, A.; Hommel, G.; Blettner, M. Linear Regression Analysis. Dtsch. Aerzteblatt Online 2010.

22. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

23. Rubin, D.B. Inference and Missing Data. Biometrika 1976, 63, 581–590.

24. sklearn.model_selection.GridSearchCV. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.GridSearchCV.html (accessed on 7 October 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).