Abstract

Distance learning has become essential to higher education, yet its application in military officer training presents unique academic, operational, and security challenges. For Lithuania’s future officers, remote education must foster not only knowledge acquisition but also decision-making, leadership, and operational readiness, competencies traditionally developed in immersive, in-person environments. This study addresses these challenges by integrating System Dynamics Modelling, Contemporary Risk Management Standards (ISO 31000:2022; Dynamic Risk Management Framework), and Learning Analytics to evaluate the interdependencies among twelve critical factors influencing the resilience and effectiveness of distance military education. Data were collected from fifteen domain experts through structured pairwise influence assessments, and the fuzzy DEMATEL method was applied to map causal relationships between criteria. The results identified Feedback Loop Effectiveness, Scenario Simulation Capability, and Predictive Intervention Effectiveness as key causal drivers that most strongly influence downstream outcomes such as learner engagement, risk identification, and instructional adaptability. These findings emphasize the strategic importance of upstream feedback, proactive risk planning, and advanced analytics in enhancing operational readiness. By bridging theoretical modelling, contemporary risk governance, and advanced learning analytics, this study offers a scalable decision-making framework for complex, high-stakes education systems. The causal relationships revealed here provide a blueprint not only for optimizing military distance education but also for enhancing system resilience and adaptability in other critical domains.

1. Introduction

Distance learning has become a defining feature of contemporary higher education, offering flexibility, scalability, and broader access to knowledge [1,2]. Yet, its application in military officer training introduces distinct academic and operational complexities that differ significantly from civilian contexts [3,4]. For Lithuania’s future officers, educational programs must not only convey theoretical knowledge but also cultivate decision-making, leadership, and operational readiness—competencies traditionally developed in immersive, in-person environments [5,6]. This dual requirement creates inherent tensions between pedagogical effectiveness, technological infrastructure, and the security-sensitive nature of military training.

From a disciplinary perspective, distance education for cadets spans multiple problem domains. The transition from classroom-based to virtual instruction disrupts established teaching models, challenging the delivery of practical skills, situational awareness, and collaborative problem-solving [7,8]. Ensuring assessment reliability and academic integrity is particularly difficult in distributed environments, especially when high-stakes evaluations underpin professional accreditation and readiness [9]. Sustaining motivation, discipline, and engagement—already complex in higher education—is intensified by the strictly structured yet physically decentralized cadet context [10]. Moreover, the integration of classified or sensitive operational content into online platforms demands stringent cybersecurity measures, which are often absent from civilian e-learning systems [11]. These challenges are further amplified by the nature of military higher education as a complex adaptive system, where pedagogical processes are tightly coupled with operational demands, personnel rotations, and evolving national security contexts [12,13]. Research in system dynamics and risk management has demonstrated the value of adaptive modelling for understanding interdependent subsystems such as instructional design, learner performance, and technological reliability [14,15,16]. However, applications of such modelling approaches to the specific requirements of military distance learning remain limited, despite the fact that performance outcomes in this domain have direct implications for national defence readiness.

In Lithuania, the development of distance education for armed forces personnel is shaped by the National Security Strategy [17], the Law on Higher Education and Research [18], and the Long-Term Development Programme of the Lithuanian Armed Forces [19]. These policy documents emphasize the need for flexible, technology-enabled training solutions to ensure operational readiness, lifelong learning opportunities for cadets and officers, and alignment with NATO standards for interoperability. The General Jonas Žemaitis Military Academy of Lithuania (MAL) has integrated e-learning and blended formats into officer training, especially during periods of limited mobility, international deployments, and the COVID-19 pandemic. Under a Commandant’s order, the Academy shifted operations to Moodle, enabling remote lectures, tasks, and exams to ensure continuity [20]. It also delivered the Troop Leading Procedures in Movement Course for Ukrainian cadets under NATO’s Defence Education Enhancement Program entirely online, showcasing MAL’s capacity to apply distance learning in both national and international contexts [21]. Within this framework, distance military education is not only a pedagogical innovation but also a security requirement, ensuring continuity of professional development under hybrid threat conditions and during periods of national emergency.

The existing literature reveals three converging but under-integrated approaches that inform the present study. First, system dynamics modelling offers a means to capture feedback loops, delays, and non-linear effects within educational systems, providing tools for scenario simulation and system resilience analysis [22,23]. Second, contemporary risk management frameworks, including ISO 31000:2022 and the Dynamic Risk Management (DRM) framework, provide structured processes for identifying, assessing, and adapting to evolving threats and uncertainties in both pedagogical and operational contexts [24,25]. Third, learning analytics enables continuous performance monitoring, the detection of early warning indicators of learning gaps, and evidence-based instructional interventions [26,27].

Despite advances in each of these areas, their integration into a unified decision-making framework for security-sensitive distance education has not been systematically explored. The purpose of this study is to address this gap by integrating system dynamics modelling, modern risk management standards, and learning analytics into a tailored decision-making methodology for Lithuanian military cadet distance learning. This integration helps to identify and mitigate academic and operational risks proactively, ranging from skill loss to personnel attrition, while enabling the dynamic allocation of instructional and technological resources. The study aims to contribute both to the theoretical understanding of distance learning in complex, security-sensitive domains and to the practical development of resilient, adaptive education systems. By combining these three methodological perspectives, it seeks to identify the main causal factors, prioritize intervention points, and increase overall system resilience in military e-learning environments.

The article is structured as follows. Section 2 reviews the relevant literature and justifies the selection of study criteria. Section 3 details the methodology, including research design, classification of dimensions, justification for fuzzy DEMATEL (Decision-Making Trial and Evaluation Laboratory), and data collection. Section 4 reports the analytical results, while Section 5 discusses their implications for distance military education in complex, risk-sensitive environments. Section 6 concludes with key findings, practical implications, and future research directions.

2. Literature Review

Military distance education presents challenges that extend beyond those typically addressed in civilian e-learning [28]. Whereas civilian contexts prioritize efficiency, learner satisfaction, and employability, military programs must simultaneously ensure operational readiness, interoperability with allied standards, and continuity under deployment or crisis conditions [29]. Instructional materials often involve classified or security-sensitive content, requiring strict adherence to information assurance frameworks and overall system resilience against cyber intrusion. Cadets also operate within rigid routines and readiness demands, intensifying difficulties in sustaining motivation, discipline, and engagement. Moreover, officer training must cultivate leadership and decision-making competencies that are not easily transferable through conventional online methods [30,31]. These distinct requirements position military distance learning not merely as a pedagogical adaptation but as a strategic capability tied to force development and national security. Accordingly, analytical frameworks for this domain must integrate operational risk, technological robustness, and pedagogical dynamics, rather than relying on models derived from civilian distance-education contexts [32].

Designing a risk-aware, resilient distance-learning model for military officer education requires criteria grounded in three complementary knowledge streams: system dynamics of complex socio-technical systems, contemporary risk management, and learning analytics for data-driven decision-making. These domains are not independent but mutually reinforcing: system dynamics provides the modelling grammar for causal structures and feedback loops, risk management standards define the evaluative lens through which uncertainties are classified and mitigated, and learning analytics offers the continuous empirical signals that inform adaptive interventions. The integration of these three elements establishes a theoretical mechanism where models, norms, and data converge to guide resilient decision-making.

From a system dynamics perspective, cadet distance learning can be conceptualized as a tightly coupled complex system in which instructional design, technology reliability, and organizational processes co-evolve. Seminal system dynamics studies emphasize feedback loops, delays, nonlinearity, and policy resistance, highlighting how interventions in one part of the system can trigger unexpected effects elsewhere. For example, stricter assessment rules may discourage collaboration, or increased online monitoring could reduce student trust and engagement [33,34,35]. Strategy support and decision-focused modelling further motivate criteria on adaptivity (the capacity to adjust processes in response to changing conditions), system resilience, and resource scalability, because policy levers in education frequently create unintended consequences that must be revealed through causal maps and simulation [36]. In this study, system dynamics is positioned as the structural modelling core into which risk management requirements and learning analytics indicators can be embedded.

Contemporary risk management prioritizes the structured identification, analysis, and treatment of uncertainty. ISO 31000 provides organization-level guidance for establishing risk criteria, controls, and continual improvement [37], while Aven clarifies risk conceptualization under deep uncertainty, informing the inclusion of assessment integrity, technology reliability, and cybersecurity and information assurance (IA) as explicit risk domains [38]. Resilience engineering extends this to everyday performance, emphasizing an organization’s capacity to anticipate, monitor, respond, and learn, which directly motivates criteria on adaptive risk monitoring and organizational learning loops in distance programs [39].

Learning analytics (LA) offers a data-driven capability to surface early-warning indicators of disengagement, skill decay, and academic integrity risks. Foundational LA work and systematic reviews establish the role of behavioural traces, dashboards, and interventions in improving outcomes, but also stress the ethics, privacy, and governance constraints that must be built into any monitoring architecture [40,41,42]. Meta-analytic evidence from online and blended learning links teaching, social, and cognitive presence to actual learning and satisfaction [43], while recent scoping reviews show that LA research tends to privilege behavioural measures (clicks, time-on-task), highlighting the need to include multi-dimensional engagement criteria (behavioural, cognitive, affective) [44].

Regarding assessment fairness, mixed but converging evidence suggests that online exam misconduct remains a material risk; proctoring can reduce inflated scores, although equity, privacy, and validity concerns persist [45,46,47,48,49,50]. Given the defence context, cybersecurity and information assurance are non-negotiable. NIST SP 800-171 specifies concrete safeguards for Controlled Unclassified Information (CUI), which often appears in military curricula and training artefacts [51,52,53,54]. Vulnerability analyses of widely used learning management system (LMS) platforms confirm persistent exposure to issues tracked in the Common Vulnerabilities and Exposures (CVE) list, justifying criteria on platform security posture and secure interoperability with defence systems [55]. At the same time, technology and service quality and instructor digital competence affect learning effectiveness at scale in online programs, reinforcing criteria on platform reliability and availability and on instructor readiness for data-informed pedagogy [56].

Taken together, this literature base establishes a triangulated theoretical mechanism: system dynamics provides the structural logic of causal interactions, risk management supplies the evaluative criteria for handling uncertainty, and learning analytics ensures continuous data-driven feedback to adjust both models and controls. This synthesis justifies applying the fuzzy Decision-Making Trial and Evaluation Laboratory (DEMATEL) method to structure the criteria into cause and effect clusters, which can then be embedded in system dynamics modelling and used for adaptive risk treatment planning [57].

3. Methodology

3.1. Research Design

The present study adopts a Fuzzy Decision-Making Trial and Evaluation Laboratory (Fuzzy DEMATEL) approach to model and prioritize the complex causal relationships among critical factors affecting the overall system resilience and effectiveness of distance military education. The methodology integrates System Dynamics Modelling (SDM), Contemporary Risk Management Standards (CRMS), and Learning Analytics (LA), reflecting the study’s three primary dimensions identified in the introduction. This integration supports both qualitative expert judgment and quantitative network analysis within a multi-criteria decision-making (MCDM) framework.

The three dimensions of the study are operationalized into twelve evaluation criteria that address pedagogical, technological, operational, and security-specific challenges (Table 1). Each criterion is based on previous research, ensuring conceptual validity.

Table 1.

Criteria for Fuzzy DEMATEL and representative sources.

3.2. Classification of Study Dimensions and Criteria

The study is structured around three interconnected dimensions that reflect the complex nature of distance military education. System Dynamics Modelling (SDM) captures the feedback loops, interdependencies, and time delays inherent in military training systems, enabling simulation of instructional interventions under operational constraints. Contemporary Risk Management Standards (CRMS), specifically ISO 31000:2022 and the Dynamic Risk Management Framework (DRMF), provide a structured approach for identifying, assessing, and mitigating both pedagogical and operational risks in a security-sensitive context. Learning Analytics (LA) leverages real-time educational data to monitor cadet performance, predict potential failures, and inform adaptive instructional strategies.

The specific criteria selected for each of the three dimensions are presented in Table 2.

Table 2.

Selected dimensions and criteria.

These three dimensions are represented by twelve criteria, each justified by the literature to ensure theoretical validity and practical significance. To analyze the complex cause–effect relationships among these criteria, the study employs a multi-stage MCDM approach to evaluate and optimize the overall system resilience of distance learning for Lithuanian military cadets, integrating system dynamics modelling, contemporary risk management standards, and learning analytics. The methodological design follows a complex systems perspective, acknowledging the interdependence of educational, technological, and operational subsystems.

3.3. Justification for Fuzzy DEMATEL

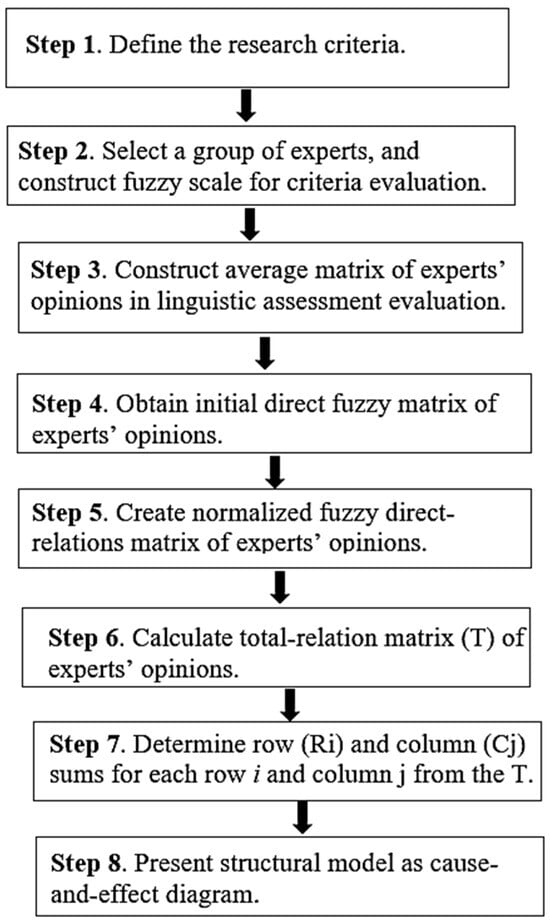

The DEMATEL (Decision-Making Trial and Evaluation Laboratory) technique is well suited to identifying cause–effect structures in complex systems, and its fuzzy extension was chosen because it handles the uncertainty and subjectivity of expert judgments while still revealing the most influential and the most dependent factors in the system [57,58]. In military education, the interplay between operational constraints, technology readiness, and pedagogical outcomes is assessed through subjective judgments, which makes crisp numerical evaluations insufficient. The logical procedures of the fuzzy DEMATEL method are presented in previous studies [59,60,61].

Figure 1.

Fuzzy DEMATEL method implementation steps.

Accordingly, the full fuzzy-triangular DEMATEL procedure can be carried out in eight sequential steps of expert opinion examination (see Figure 1). First, the problem context is defined, and relevant evaluation criteria are established based on the literature and expert validation. Second, a panel of domain experts is purposively selected to ensure adequate knowledge coverage and asked to provide pairwise influence assessments between criteria using predefined linguistic terms. Third, the experts’ linguistic assessments are translated into triangular fuzzy numbers to capture the uncertainty and imprecision of human judgment. Fourth, the individual fuzzy matrices are aggregated to obtain a consolidated group direct-relation matrix. Fifth, normalization is applied to ensure comparability of scales across expert responses. Sixth, the total-relation matrix is computed, which incorporates both direct and indirect influences among criteria. Seventh, prominence (Ri + Ci) and relation (Ri − Ci) values are derived, enabling the classification of factors into cause (driver) and effect (dependent) groups. Finally, the structural model is constructed as a cause-and-effect diagram. This structured approach ensures methodological transparency, while the triangular fuzzy representation maintains robustness by handling the vagueness inherent in expert-based evaluations.
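To make the third and fourth steps concrete, the following Python sketch converts linguistic judgments into triangular fuzzy numbers and averages them across experts. It is an illustration under stated assumptions, not the study’s implementation: the label abbreviations and the (l, m, u) values in `SCALE` follow a five-level scale commonly used in the fuzzy DEMATEL literature and may differ from Table 3, element-wise averaging is assumed as the aggregation rule, and the helper names `to_fuzzy` and `aggregate` are hypothetical.

```python
import numpy as np

# ASSUMED scale (a common five-level fuzzy DEMATEL scale); Table 3 may differ.
SCALE = {
    "No": (0.00, 0.00, 0.25),  # No influence
    "VL": (0.00, 0.25, 0.50),  # Very low influence
    "L": (0.25, 0.50, 0.75),   # Low influence
    "H": (0.50, 0.75, 1.00),   # High influence
    "VH": (0.75, 1.00, 1.00),  # Very high influence
}


def to_fuzzy(expert_matrix):
    """Convert one expert's n x n matrix of labels into an (n, n, 3) array of (l, m, u)."""
    n = len(expert_matrix)
    z = np.zeros((n, n, 3))
    for i in range(n):
        for j in range(n):
            if i != j:  # diagonal entries (self-influence) are not assessed
                z[i, j] = SCALE[expert_matrix[i][j]]
    return z


def aggregate(expert_matrices):
    """Element-wise mean of the individual fuzzy matrices (assumed aggregation rule)."""
    return np.mean([to_fuzzy(m) for m in expert_matrices], axis=0)


# Two hypothetical experts rating two criteria; "-" marks the unassessed diagonal
expert_a = [["-", "VH"], ["L", "-"]]
expert_b = [["-", "H"], ["No", "-"]]
z = aggregate([expert_a, expert_b])
print(z[0, 1])  # aggregated fuzzy influence of criterion 1 on criterion 2
```

Averaging keeps each aggregated entry a valid triangular fuzzy number (l ≤ m ≤ u), because each individual entry already satisfies that ordering.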

From the third step onward, the procedure is grounded in the mathematical formulations of the fuzzy-triangular DEMATEL method, which are explained step by step below.

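- Step 3. First, the direct-relation matrix is designed. The set of criteria for evaluating and optimizing the overall system resilience of distance learning for Lithuanian military cadets is defined as $C = \{C_1, C_2, \ldots, C_n\}$, where $C_i$ denotes the $i$-th criterion, $i = 1, 2, \ldots, n$ (here $n = 12$). The set of experts is denoted as $E = \{E_1, E_2, \ldots, E_K\}$, where $E_k$ corresponds to the $k$-th expert, $k = 1, 2, \ldots, K$ (here $K = 15$). To capture expert opinions, a set of linguistic terms $L = \{L_1, L_2, \ldots, L_S\}$ is introduced, where $L_s$ represents the $s$-th linguistic term (here $S = 5$). Each expert $E_k$ completes an individual direct-relation matrix, expressed as Equation (1):

$$A^{k} = \left[a_{ij}^{k}\right]_{n \times n}, \qquad a_{ij}^{k} \in L \ \text{ for } i \neq j, \tag{1}$$

where $a_{ij}^{k}$ is the linguistic term that expert $E_k$ assigns to the influence of criterion $C_i$ on criterion $C_j$; diagonal entries (self-influence) are not assessed.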

- Step 4. The linguistic terms in the direct-relation matrices are then converted into triangular fuzzy numbers using the established scale [61], shown in Table 3.

Table 3. Linguistic assessment scale based on triangular fuzzy values.


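- Step 5. Construct the initial fuzzy direct-relation matrix from the pairwise comparisons. For each expert $k = 1, 2, \ldots, K$, where $K$ is the number of experts, the fuzzy direct-relation matrix $Z^{k} = \left[\tilde{z}_{ij}^{k}\right]_{n \times n}$ collects the fuzzy pairwise influences between criteria, where $\tilde{z}_{ij}^{k} = \left(l_{ij}^{k}, m_{ij}^{k}, u_{ij}^{k}\right)$ is the triangular fuzzy number assigned by expert $k$ to the influence of criterion $i$ on criterion $j$. The individual matrices are aggregated by averaging into the general fuzzy direct-relation matrix $Z = \left[\tilde{z}_{ij}\right]_{n \times n}$:

$$\tilde{z}_{ij} = \left(l_{ij}, m_{ij}, u_{ij}\right) = \left(\frac{1}{K}\sum_{k=1}^{K} l_{ij}^{k},\ \frac{1}{K}\sum_{k=1}^{K} m_{ij}^{k},\ \frac{1}{K}\sum_{k=1}^{K} u_{ij}^{k}\right). \tag{2}$$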

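- Step 6. Calculate the normalized fuzzy direct-relation matrix $D = \left[\tilde{d}_{ij}\right]_{n \times n}$ from the general fuzzy direct-relation matrix $Z$, whose entries are $\tilde{z}_{ij} = \left(l_{ij}, m_{ij}, u_{ij}\right)$, using Equation (3):

$$\tilde{d}_{ij} = \frac{\tilde{z}_{ij}}{r} = \left(\frac{l_{ij}}{r}, \frac{m_{ij}}{r}, \frac{u_{ij}}{r}\right), \qquad r = \max_{1 \le i \le n} \sum_{j=1}^{n} u_{ij}. \tag{3}$$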

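- Step 7. Compute the total-relation matrix $T$ using Equation (4). The lower, middle, and upper values are calculated separately:

$$T^{l} = D^{l}\left(I - D^{l}\right)^{-1}, \qquad T^{m} = D^{m}\left(I - D^{m}\right)^{-1}, \qquad T^{u} = D^{u}\left(I - D^{u}\right)^{-1}, \tag{4}$$

where $D^{l}$, $D^{m}$, and $D^{u}$ are the matrices formed by the lower, middle, and upper components of the normalized matrix $D$, and $I$ is the $n \times n$ identity matrix.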

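- Step 8. Define the row sum $R_i$ and the column sum $C_j$ for each row $i$ and column $j$ of the total-relation matrix $T$, using its crisp (defuzzified) entries $t_{ij}$:

$$R_i = \sum_{j=1}^{n} t_{ij}, \qquad i = 1, 2, \ldots, n, \tag{5}$$

$$C_j = \sum_{i=1}^{n} t_{ij}, \qquad j = 1, 2, \ldots, n. \tag{6}$$

$R_i$ is the total influence exerted by criterion $i$, and $C_j$ is the total influence received by criterion $j$.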

- Step 9. Construct the causal diagram with $R_i + C_i$ on the horizontal axis and $R_i - C_i$ on the vertical axis. The horizontal axis, named Prominence, represents the overall importance of each criterion in the system. The vertical axis, named Relation, captures its net causal role: positive Relation values identify criteria that act as causal drivers shaping system behaviour, whereas negative values indicate effect criteria that are primarily influenced by others. This distinction enables driver factors to be prioritized as leverage points for strengthening system resilience.
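Steps 6 to 9 can be sketched in Python with NumPy as follows. This is a minimal sketch under assumptions, not the authors’ code: normalization divides by the largest row sum of upper values (the usual DEMATEL choice, which keeps I − D invertible for typical data), defuzzification uses the simple centroid (l + m + u)/3 because this section does not name the defuzzification method, and the function name `fuzzy_dematel` and the three-criterion input are hypothetical.

```python
import numpy as np


def fuzzy_dematel(z):
    """Sketch of Steps 6-9 for an aggregated fuzzy direct-relation matrix.

    z has shape (n, n, 3); z[i, j] holds the triangular fuzzy number (l, m, u)
    for the influence of criterion i on criterion j.
    Returns the crisp prominence (R + C) and relation (R - C) vectors.
    """
    n = z.shape[0]
    # Step 6 (assumed form): divide all components by the largest row sum of upper values
    r = z[:, :, 2].sum(axis=1).max()
    d = z / r
    # Step 7: T = D (I - D)^-1, computed separately for the l, m and u components
    identity = np.eye(n)
    t = np.stack(
        [d[:, :, k] @ np.linalg.inv(identity - d[:, :, k]) for k in range(3)], axis=2
    )
    # Defuzzify with the simple centroid (l + m + u) / 3 (an assumption)
    t_crisp = t.mean(axis=2)
    # Step 8: row sums (influence exerted) and column sums (influence received)
    R = t_crisp.sum(axis=1)
    C = t_crisp.sum(axis=0)
    # Step 9: coordinates of each criterion in the causal diagram
    return R + C, R - C


# Hypothetical aggregated matrix for three criteria (not data from the study)
z = np.array([
    [[0.0, 0.0, 0.0], [0.5, 0.75, 1.0], [0.25, 0.5, 0.75]],
    [[0.0, 0.25, 0.5], [0.0, 0.0, 0.0], [0.5, 0.75, 1.0]],
    [[0.0, 0.0, 0.25], [0.0, 0.25, 0.5], [0.0, 0.0, 0.0]],
])
prominence, relation = fuzzy_dematel(z)
for name, p, rel in zip(["C1", "C2", "C3"], prominence, relation):
    group = "cause" if rel > 0 else "effect"
    print(f"{name}: R+C = {p:.3f}, R-C = {rel:+.3f} ({group})")
```

Because the sum of all row sums equals the sum of all column sums, the relation values always add up to zero, which gives a quick sanity check on any implementation.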

3.4. Data Collection Procedure

Prior to the evaluation process, a set of twelve criteria was developed based on an extensive review of the scientific literature and aligned with the three analytical dimensions of the study. These criteria were subjected to expert validation, during which participants assessed their significance, clarity, and comprehensiveness. Minor refinements in terminology were introduced to ensure alignment with operational and policy-specific language in the military education context. Following validation, experts conducted pairwise comparisons to evaluate the direct influence of each criterion on every other criterion. These assessments were expressed on a five-point linguistic scale ranging from ‘No influence’ to ‘Very high influence’ (see Table 3).

The empirical stage of the study relied on expert-based judgments to capture the complex interdependencies among the criteria relevant to military distance education. Given the security-sensitive and domain-specific nature of the research, a purposive sampling strategy was employed to ensure that participants possessed the necessary knowledge, experience, and operational insight to provide reliable judgments. Selection criteria included: (1) a minimum of ten years of professional experience in military education, instructional design, or defence-related IT and cybersecurity; (2) direct involvement in the planning, implementation, or evaluation of distance or blended learning programs; and (3) familiarity with risk management practices in military contexts. Data collection took place in May 2025 using a questionnaire designed as a relational matrix of twelve factors, where experts assessed the degree of influence between each pair. In total, fifteen experts participated: eight instructional staff members from the Lithuanian Military Academy, four defence information technology and cybersecurity specialists, and three military education policy and risk management officers. Each expert possessed at least ten years of professional experience, thereby providing both operational familiarity and strategic insight.

The experts’ linguistic evaluations were subsequently transformed into triangular fuzzy numbers in accordance with established fuzzy DEMATEL protocols [59]. This enabled systematic management of uncertainty and subjectivity inherent in human judgment. The overall methodological sequence is illustrated in Figure 1, which demonstrates the structured process of expert selection, criteria validation, pairwise influence assessment, fuzzy number conversion, and group matrix aggregation. This procedure ensured that the results were both analytically robust and representative of the domain expertise required in the military education context.

4. Results

This study applies fuzzy set theory in combination with the Decision-Making Trial and Evaluation Laboratory (DEMATEL) method to develop a structured and systematic approach for assessing the overall system resilience and effectiveness of distance learning for Lithuanian military cadets. The integration of fuzzy logic with DEMATEL enables the modelling of expert judgments under uncertainty, allowing for a nuanced and quantitative analysis of the complex interdependencies among critical factors. This approach is particularly suited to military education contexts, where operational and pedagogical variables are interlinked and decision-making often occurs under conditions of incomplete or imprecise information.

As described in the Methodology Section, the analysis is grounded in expert-based evaluation. Fifteen subject-matter experts with extensive experience in military education, operational planning, and risk management participated in structured pairwise influence assessments. They evaluated twelve essential criteria that together shape the sustainability and operational readiness potential of distance learning for military cadets (see Table 2): C1: Instructional Design Adaptability; C2: Feedback Loop Effectiveness; C3: Learner Engagement Dynamics; C4: Scenario Simulation Capability; C5: Risk Identification Completeness; C6: Risk Assessment Accuracy; C7: Contingency Planning Robustness; C8: Real-Time Risk Monitoring; C9: Early Warning Indicators; C10: Data-Driven Personalization; C11: Performance Monitoring Granularity; C12: Predictive Intervention Effectiveness. These criteria span pedagogical adaptability, operational risk management, and analytics-driven decision-making, reflecting the multidimensional nature of military distance education. As the first step of the fuzzy DEMATEL procedure, the experts’ linguistic assessments were compiled (see Table 4).

Table 4.

Expert evaluations expressed in linguistic terms.

The assessments presented in Table 4 were converted into a fuzzy direct-relation matrix (see Table 5), following the fuzzy DEMATEL methodology, so that the causal relationships among the critical factors affecting the resilience and effectiveness of distance military education could be modelled and prioritized through rigorous mathematical analysis. Next, Equation (3) was applied to normalize the direct-relation matrix, producing the normalized direct-relation matrix for the subsequent stages of the multi-stage MCDM analysis. Specifically, each fuzzy value was divided by the maximum entry identified in the matrix, thereby standardizing the scale of influence judgments while preserving the relative significance of inter-criterion relationships. This step is critical in the analysis of complex systems, as it prepares the input data for reliable cross-dimensional integration of system dynamics modelling, contemporary risk management standards, and learning analytics.

Table 5.

The initial direct relation matrix.

Following normalization, the total-relation matrix was derived and subsequently defuzzified, converting the triangular fuzzy values into crisp values. This transformation enables quantitative evaluation across interdependent educational, technological, and operational subsystems.
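The defuzzification method is not named in this section. One common choice in fuzzy DEMATEL studies is the CFCS (Converting Fuzzy data into Crisp Scores) procedure of Opricovic and Tzeng; the sketch below shows how it could be applied to an array of triangular fuzzy numbers. It is offered as an assumed alternative, not as the procedure used in this study, and `cfcs_defuzzify` is a hypothetical helper name.

```python
import numpy as np


def cfcs_defuzzify(tfn):
    """CFCS defuzzification of an array of triangular fuzzy numbers with shape (..., 3).

    The minimum lower value and maximum upper value are taken over the whole array,
    so every entry is scaled to a common [0, 1] range before its crisp score is computed.
    """
    l, m, u = tfn[..., 0], tfn[..., 1], tfn[..., 2]
    lo, hi = l.min(), u.max()
    delta = hi - lo  # assumes a non-degenerate array (hi > lo)
    xl, xm, xu = (l - lo) / delta, (m - lo) / delta, (u - lo) / delta
    # Left and right normalized scores
    xls = xm / (1 + xm - xl)
    xrs = xu / (1 + xu - xm)
    # Total normalized crisp value, mapped back to the original scale
    x = (xls * (1 - xls) + xrs * xrs) / (1 - xls + xrs)
    return lo + x * delta


scores = cfcs_defuzzify(np.array([[0.0, 0.0, 0.25], [0.0, 0.5, 1.0], [0.75, 1.0, 1.0]]))
print(scores)
```

Unlike the plain centroid, CFCS rescales all entries against the range of the whole matrix, so the crisp value of a symmetric number such as (0, 0.5, 1) stays at its midpoint while skewed numbers are pulled toward their modal value.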

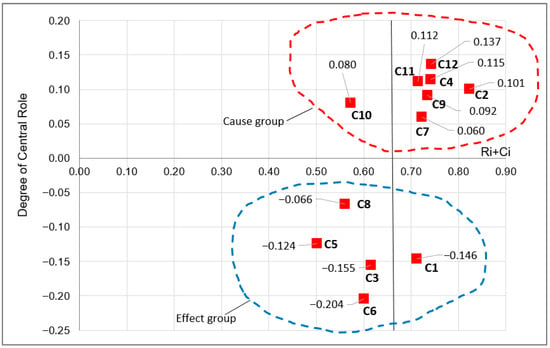

In Step 7, causal structures within the system were identified by calculating the row (Ri) and column (Ci) sums of the total-relation matrix. The derived prominence (Ri + Ci) and relation (Ri − Ci) values provide a quantitative basis for distinguishing causal drivers from dependent effects, in line with the core objective of the DEMATEL methodology: to uncover directional cause–effect dynamics within complex decision environments. In the context of distance military education, this process reveals the hierarchical influence structure of the twelve criteria and indicates how system resilience can be improved through targeted interventions (see Table 6).

Table 6.

Centrality (R + C) and causality (R − C) values for 12 criteria.

The hierarchical influence structure of the study criteria is described by two measures of the systemic role of each criterion: the degree of centrality (Ri + Ci) and the degree of causality (Ri − Ci). Centrality indicates the overall importance of a criterion within the system by capturing both the influence it exerts (Ri) and the influence it receives (Ci). Higher Ri + Ci values reflect criteria that are highly interconnected and thus structurally central to system resilience and adaptability.

According to the calculations, the criteria with the highest centrality scores are Feedback Loop Effectiveness (C2, 0.823), Predictive Intervention Effectiveness (C12, 0.743), Scenario Simulation Capability (C4, 0.742), and Early Warning Indicators (C9, 0.734). These results suggest that adaptive information exchange, the ability to anticipate risks, the simulation of operational scenarios, and early detection mechanisms are essential for shaping the overall effectiveness of distance military education, with feedback loops playing a central role in maintaining instructional and operational resilience.

Conversely, Risk Assessment Accuracy (C6, 0.600) and Learner Engagement Dynamics (C3, 0.615) are positioned at the lower end of centrality, suggesting that while important, they are more peripheral outcomes that depend strongly on upstream drivers.

The causality measure (Ri − Ci) distinguishes driver (cause) criteria from dependent (effect) criteria. Positive values (Ri − Ci > 0) identify drivers that exert net influence on the system, while negative values indicate dependent factors that are primarily influenced by others:

- Driver criteria (Ri − Ci > 0): Seven criteria fall into this group (C2, C4, C7, C9, C10, C11, and C12), including Predictive Intervention Effectiveness (C12, 0.137), Scenario Simulation Capability (C4, 0.115), Performance Monitoring Granularity (C11, 0.112), and Early Warning Indicators (C9, 0.092). These are leverage points within the system: strengthening them is expected to propagate improvements to the dependent factors. Notably, these drivers span all three analytical dimensions, System Dynamics Modelling (SDM), Contemporary Risk Management Standards (CRMS), and Learning Analytics (LA), underscoring the interdependent nature of the decision environment.

- Effect criteria (Ri − Ci < 0): The remaining five criteria (C1, C3, C5, C6, and C8), including Instructional Design Adaptability (C1, −0.146), Learner Engagement Dynamics (C3, −0.155), and Risk Assessment Accuracy (C6, −0.204), are classified as outcomes. They reflect the end-state performance of the distance education system and improve only when upstream drivers are effectively enhanced.
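The sign rule behind the cause and effect groups can be illustrated with the Ri − Ci values quoted in this section. The sketch below uses only those values; C5, C7, C8, and C10 are left out because their values appear only in Table 6, so the listing is deliberately partial. The function name `classify` is hypothetical.

```python
# Relation (Ri - Ci) values quoted in the text; C5, C7, C8 and C10 are omitted
# because their values are reported only in Table 6.
relation = {
    "C12": 0.137, "C4": 0.115, "C11": 0.112, "C2": 0.101, "C9": 0.092,
    "C1": -0.146, "C3": -0.155, "C6": -0.204,
}


def classify(rel):
    """Split criteria into cause (Ri - Ci > 0) and effect (Ri - Ci < 0) groups,
    each sorted from the highest to the lowest Ri - Ci value."""
    causes = sorted((c for c, v in rel.items() if v > 0), key=rel.get, reverse=True)
    effects = sorted((c for c, v in rel.items() if v < 0), key=rel.get, reverse=True)
    return causes, effects


causes, effects = classify(relation)
print("cause:", causes)
print("effect:", effects)
```

Sorting each group by descending Ri − Ci reproduces, for the criteria whose values are quoted, the order used in the cause and effect sequences of the causal diagram discussion.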

The final step of the analysis involved constructing the cause-and-effect relationship diagram (see Figure 2), which provides a visual representation of the interdependencies among educational, technological, and operational subsystems.

Figure 2.

Causal diagram of interdependencies among resilience criteria in military distance education: cause group comprises C2, C4, C7, C9, C10, C11, and C12; the effect group includes C1, C3, C5, C6, and C8.

The diagram (see Figure 2) provides a structured basis for decision-makers to identify leverage points and formulate evidence-based strategies for strengthening the sustainability and resilience of distance learning in military education. The classification of criteria into causal factors (C12 > C4 > C11 > C2 > C9 > C10 > C7) and effect factors (C8 > C5 > C1 > C3 > C6), each ordered from the highest to the lowest Ri − Ci value, is particularly significant, as it indicates which criteria require practical intervention to generate system-wide improvements. Because causal criteria exert net influence over the dependent effect criteria, they should be given priority in decision-making and resource allocation.

Furthermore, the joint interpretation of centrality (Ri + Ci) and causality (Ri − Ci) reveals a layered structure in which driver criteria act as enablers of overall system resilience and adaptability, while effect criteria serve as performance indicators. The results indicate that strengthening Feedback Loop Effectiveness (C2) and Scenario Simulation Capability (C4) not only enhances real-time adaptability (C1, Instructional Design Adaptability) but also improves learner motivation (C3, Learner Engagement Dynamics). Similarly, bolstering Early Warning Indicators (C9) and Predictive Intervention Effectiveness (C12) strengthens downstream operational safeguards such as Risk Identification Completeness (C5) and Real-Time Risk Monitoring (C8), thereby contributing to the overall resilience of the distance-learning system.

Additionally, the analysis indicates that strategic priority should go first to the highest-ranked driver criteria: Predictive Intervention Effectiveness (C12; Ri + Ci = 0.743, Ri − Ci = 0.137), Scenario Simulation Capability (C4; Ri + Ci = 0.742, Ri − Ci = 0.115), and Performance Monitoring Granularity (C11; Ri + Ci = 0.715, Ri − Ci = 0.112). All three combine high centrality with positive causality, confirming their role as systemic drivers. Because these criteria influence multiple downstream effects (Instructional Design Adaptability, Learner Engagement Dynamics, and Risk Identification Completeness), investments in them are expected to generate the strongest cascading impact across the entire system.
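The prioritization rule described here, giving precedence to criteria that combine positive causality with high centrality, can be expressed as a filter and sort over (Ri + Ci, Ri − Ci) pairs. The values below are those reported for C12, C4, and C11; the min_prominence cut-off is a hypothetical parameter for illustration, not a threshold used in the study.

```python
from typing import Dict, List, Tuple

# (Ri + Ci, Ri - Ci) as reported in this section for the top-ranked drivers.
REPORTED: Dict[str, Tuple[float, float]] = {
    "C12": (0.743, 0.137),
    "C4": (0.742, 0.115),
    "C11": (0.715, 0.112),
}


def prioritize(scores: Dict[str, Tuple[float, float]],
               min_prominence: float = 0.0) -> List[str]:
    """Rank driver criteria (Ri - Ci > 0) by prominence Ri + Ci, highest first.

    min_prominence is a hypothetical cut-off for 'high centrality';
    the default of 0.0 keeps every driver.
    """
    drivers = [name for name, (prom, rel) in scores.items()
               if rel > 0 and prom >= min_prominence]
    return sorted(drivers, key=lambda name: scores[name][0], reverse=True)


if __name__ == "__main__":
    print(prioritize(REPORTED))                       # every reported driver
    print(prioritize(REPORTED, min_prominence=0.72))  # hypothetical stricter cut
```

Criteria with negative Ri − Ci are excluded regardless of their centrality, which mirrors the argument that effect criteria are not direct intervention targets.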

Conversely, effect criteria such as Instructional Design Adaptability (C1, Ri − Ci = −0.146), Learner Engagement Dynamics (C3, Ri − Ci = −0.155), and Risk Assessment Accuracy (C6, Ri − Ci = −0.204) exhibit negative causality, meaning they are dependent outcomes rather than drivers. Efforts to refine pedagogy or engagement strategies directly are therefore unlikely to succeed unless supported by upstream reinforcement of feedback loops (C2, Ri − Ci = 0.101), simulation environments (C4), and predictive analytics (C12). This is consistent with the systems-thinking principle that improving outcomes requires strengthening their causal drivers rather than addressing symptoms in isolation.

Finally, the top-ranked driver criteria come from all three dimensions of the study: System Dynamics Modelling (Scenario Simulation Capability, C4; Feedback Loop Effectiveness, C2), Risk Management Standards (Contingency Planning Robustness, C7), and Learning Analytics (Predictive Intervention Effectiveness, C12; Performance Monitoring Granularity, C11; Early Warning Indicators, C9). This result provides empirical support for the view that overall system resilience in distance military education cannot be achieved through a single disciplinary lens. Instead, a unified decision-support framework that integrates system dynamics, risk management, and learning analytics is needed to ensure adaptable instructional processes, robust platforms and procedures, and operational readiness and security in training environments.

5. Discussion

The fuzzy DEMATEL analysis reveals a clear cause–effect structure among the twelve criteria, each aligned with one of the study’s three conceptual dimensions: System Dynamics Modelling, Contemporary Risk Management Standards, and Learning Analytics. This structure is consistent with theoretical expectations from prior literature and also provides a domain-specific interpretation for distance military education systems operating in complex, risk-sensitive environments.
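The fuzzy step that precedes the DEMATEL computation is not detailed in this section. As a minimal sketch, assuming a five-level linguistic scale mapped to triangular fuzzy numbers (l, m, u), arithmetic-mean aggregation across experts, and centroid defuzzification (l + m + u) / 3, expert judgements can be converted into the crisp direct-relation matrix that DEMATEL then processes. The scale values, the term names, and the defuzzification rule are assumptions; fuzzy DEMATEL studies also use other choices, such as trapezoidal numbers or the CFCS procedure.

```python
from typing import Dict, List, Tuple

TFN = Tuple[float, float, float]

# Assumed linguistic scale (a common choice, not necessarily the study's).
SCALE: Dict[str, TFN] = {
    "No": (0.0, 0.0, 0.25),
    "VeryLow": (0.0, 0.25, 0.5),
    "Low": (0.25, 0.5, 0.75),
    "High": (0.5, 0.75, 1.0),
    "VeryHigh": (0.75, 1.0, 1.0),
}


def aggregate(terms: List[str]) -> TFN:
    """Average the experts' triangular fuzzy numbers component-wise."""
    tfns = [SCALE[t] for t in terms]
    k = len(tfns)
    return (sum(t[0] for t in tfns) / k,
            sum(t[1] for t in tfns) / k,
            sum(t[2] for t in tfns) / k)


def centroid(tfn: TFN) -> float:
    """Defuzzify a triangular fuzzy number by its centroid."""
    low, mid, up = tfn
    return (low + mid + up) / 3.0


def crisp_direct_matrix(ratings: List[List[List[str]]]) -> List[List[float]]:
    """ratings[e][i][j] is expert e's linguistic rating of criterion i's influence on j."""
    n = len(ratings[0])
    return [[0.0 if i == j else centroid(aggregate([r[i][j] for r in ratings]))
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    # Three hypothetical experts rating two hypothetical criteria.
    ratings = [
        [["No", "High"], ["Low", "No"]],
        [["No", "VeryHigh"], ["VeryLow", "No"]],
        [["No", "Low"], ["Low", "No"]],
    ]
    print(crisp_direct_matrix(ratings))
```

The resulting crisp matrix can be passed to a standard DEMATEL routine to obtain Ri, Ci, Ri + Ci, and Ri − Ci.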

From a system dynamics perspective, the identification of strong causal variables, particularly C2 (Feedback Loop Effectiveness), C4 (Scenario Simulation Capability), and C12 (Predictive Intervention Effectiveness), is consistent with previous modelling studies, which emphasize feedback-rich leverage points as determinants of system adaptability and performance under dynamic conditions [14,15,16]. In this study, these criteria form a reinforcing mechanism: feedback loops (C2) capture real-time learning signals, scenario simulations (C4) operationalize those signals in a training context, and predictive interventions (C12) ensure timely corrective action. Prior studies in defence simulation training similarly conclude that simulation-based adaptive design accelerates skill acquisition while enhancing decision-making under uncertainty [28].

In the risk management dimension, causal criteria such as C7 (Contingency Planning Robustness) and C9 (Early Warning Indicators) strongly influence effect variables such as C5 (Risk Identification Completeness), C6 (Risk Assessment Accuracy), and C8 (Real-Time Risk Monitoring). This aligns with ISO 31000:2022 principles, under which upstream contingency planning and early warning systems are prerequisites for precise risk identification and assessment [24]. The results of this study extend those principles to a learning environment, showing that well-structured contingency protocols not only mitigate operational risks but also enhance the responsiveness of instructional systems during disruptions, a finding supported by recent research in digital education risk governance [62].

The learning analytics dimension is marked by the causal positioning of C10 (Data-Driven Personalization), C11 (Performance Monitoring Granularity), and C12 (Predictive Intervention Effectiveness). These findings are consistent with earlier studies demonstrating that robust analytics infrastructures do not merely provide passive reporting but actively shape instructional strategies and learner engagement trajectories [27,43]. In this study’s context, analytics-driven personalization can dynamically align cadets’ learning paths with their performance data, which may reduce attrition risk and help maintain training quality across geographically distributed cohorts.

Taken together, the causal criteria act as strategic control levers: when optimized, they trigger improvements in the effect variables, which serve as system performance indicators. This interplay reinforces the need for an integrated governance model that combines feedback-rich system design, proactive risk control, and real-time analytics. Such a model is essential for educational institutions tasked with preparing military officers for unpredictable, high-stakes environments.

Beyond the immediate military training setting, these findings resonate with the governance of other complex socio-technical systems where adaptability, overall system resilience, and data-driven decision-making are essential, such as critical infrastructure management, emergency response planning, and large-scale remote education. The emphasis on feedback loops, scenario simulation, and predictive interventions mirrors strategies adopted in sectors like civil aviation and disaster management, underscoring the transferability of this study’s recommendations.

While the present study provides a robust causal map grounded in expert consensus, it is limited by its reliance on a single institutional context. Future research should conduct longitudinal studies across multiple military academies and operational scenarios to test the stability of the identified relationships. Moreover, simulation-based optimization and AI-enhanced predictive analytics could further refine intervention strategies, enabling real-time system reconfiguration in response to emerging threats or performance anomalies.

6. Conclusions

This study applied a structured fuzzy multi-criteria causal analysis to assess twelve interrelated criteria shaping the effectiveness and overall system resilience of distance military education. Using three interconnected dimensions, namely System Dynamics Modelling, Contemporary Risk Management Standards, and Learning Analytics, we distinguished causal drivers: Feedback Loop Effectiveness (C2), Scenario Simulation Capability (C4), Contingency Planning Robustness (C7), Early Warning Indicators (C9), Data-Driven Personalization (C10), Performance Monitoring Granularity (C11), and Predictive Intervention Effectiveness (C12), and effect indicators such as Instructional Design Adaptability (C1), Learner Engagement Dynamics (C3), Risk Identification Completeness (C5), Risk Assessment Accuracy (C6), and Real-Time Risk Monitoring (C8).

The dominance of feedback-rich and simulation-oriented drivers underscores that adaptability depends on timely data exchange and scenario-based rehearsal. Risk management functions, particularly upstream planning and early warning, are critical for accurate risk identification, while granular learning analytics and predictive interventions sustain learner engagement and operational readiness.

To put these insights into practice at the Lithuanian Military Academy, we propose a three-tiered implementation strategy:

- Short-term efforts should enhance feedback loops and pilot scenario simulations to improve adaptive instructional processes.

- Medium-term steps should develop predictive analytics dashboards and train instructors in learning analytics tools to operationalize monitoring and early warning.

- Long-term priorities should integrate these systems with secure defence IT infrastructure and extend the framework to allied military academies, ensuring interoperability and scalability.

This approach prioritizes high-impact causal drivers, aligning resource allocation with systemic influence to maximize improvement outcomes. The integration of system dynamics, risk governance, and advanced analytics strengthens overall system resilience and adaptability in complex, high-stakes training environments. Furthermore, the framework is transferable to other security-sensitive domains such as emergency management, critical infrastructure protection, and large-scale online education, where robust, data-driven decision-making is essential.

Overall, this study provides a practical roadmap for designing resilient, adaptive, and data-informed military learning ecosystems capable of operating effectively under uncertainty and rapidly evolving conditions, bridging theoretical insights and actionable implementation.

Author Contributions

Conceptualization, A.V.V. and S.B.; methodology, A.V.V. and S.B.; software, S.B.; validation, A.V.V. and S.B.; formal analysis, S.B.; investigation, A.V.V. and S.B.; resources, A.V.V. and S.B.; data curation, S.B.; writing—original draft preparation, A.V.V. and S.B.; writing—review and editing, A.V.V. and S.B.; visualization, S.B.; supervision, S.B.; project administration, A.V.V.; funding acquisition, A.V.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of National Defence of Lithuania under the Study Support Project No. V-820 (15 December 2020), General Jonas Žemaitis Military Academy of Lithuania, Vilnius, Lithuania.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| DEMATEL | Decision-Making Trial and Evaluation Laboratory technique |
| MCDM | Multi-Criteria Decision-Making |
| SDM | System Dynamics Modelling |
| RMS | Risk Management Standards |
| LA | Learning Analytics |

References

- Allen, I.E.; Seaman, J. Digital Compass Learning: Distance Education Enrollment Report 2017; Babson Survey Research Group: Babson Park, MA, USA, 2017.

- Bates, T. Teaching in a Digital Age: Guidelines for Designing Teaching and Learning; Tony Bates Associates Ltd.: Vancouver, BC, Canada, 2015.

- Starr-Glass, D. Experiences with Military Online Learners: Toward a Mindful Practice. J. Online Learn. Teach. 2013, 9, 353.

- Volk, F.; Floyd, C.G.; Shaler, L.; Ferguson, L.; Gavulic, A.M. Active Duty Military Learners and Distance Education: Factors of Persistence and Attrition. Am. J. Distance Educ. 2020, 34, 106–120.

- Abdurachman, D. Military Leadership Development in the Digital Era. J. Ekon. 2022, 11, 1678–1683.

- Vonitsanos, G.; Armakolas, S.; Lazarinis, F.; Panagiotakopoulos, T.; Kameas, A. Blending Traditional and Online Approaches for Soft Skills Development in Higher Education. In Proceedings of the Innovating Higher Education Conference 2024, Limassol, Cyprus, 23–25 October 2024; p. 2.

- Means, B.; Toyama, Y.; Murphy, R.; Bakia, M.; Jones, K. Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies; U.S. Department of Education: Washington, DC, USA, 2010.

- Al Lily, A.E.A.; Ismail, A.F.; Abunasser, F.M.; Alhajhoj Alqahtani, R.H. Distance Education as a Response to Pandemics: Coronavirus and Arab Culture. Technol. Soc. 2020, 63, 101317.

- King, C.G.; Guyette, R.W.; Piotrowski, C. Online Exams and Cheating: An Empirical Analysis of Business Students’ Views. J. Educ. Online 2009, 6, 1–11.

- Holden, O.L.; Norris, M.E.; Kuhlmeier, V.A. Academic Integrity in Online Assessment: A Research Review. Front. Educ. 2021, 6, 639814.

- Hart, C. Factors Associated with Student Persistence in an Online Program of Study: A Review of the Literature. J. Interact. Online Learn. 2012, 11, 19–42.

- Udroiu, A.M. The Cybersecurity of eLearning Platforms. In Proceedings of the 13th eLearning and Software for Education Conference (eLSE), Bucharest, Romania, 27–28 April 2017; Carol I National Defence University Publishing House: Bucharest, Romania, 2017; pp. 374–379.

- Sweeney, L.B.; Sterman, J.D. Bathtub Dynamics: Initial Results of a Systems Thinking Inventory. Syst. Dyn. Rev. 2000, 16, 249–286.

- Toma, R.A.D.A.; Popa, C.D.C. The Military Higher Education System Transformations in the New Security Context. J. Военен журнал 2023, 4, 345–358. Available online: https://www.ceeol.com/search/article-detail?id=1211869 (accessed on 5 September 2025).

- Flagg, M.; Nadolski, M.; Sanchez, J.; McDermott, T.; Anton, P.S. The Nature of the Defense Innovation Problem; RAND Corporation: Santa Monica, CA, USA, 2022.

- Kunc, M.; O’Brien, F.A. Exploring the Development of a Methodology for Scenario Use: Combining Scenario and Resource Mapping Approaches. Technol. Forecast. Soc. Change 2017, 124, 150–159.

- Government of the Republic of Lithuania. National Security Strategy. Approved by Resolution No. XIII-202 of the Seimas of the Republic of Lithuania on 17 January 2017. Available online: https://kam.lt/en/the-seimas-approved-the-reviewed-national-security-strategy/ (accessed on 5 September 2025).

- Republic of Lithuania, Seimas. Law on Higher Education and Research. Law No. XI-242, Adopted 30 April 2009, with Amendments Up to 2016–2017. Available online: https://e-seimas.lrs.lt/portal/legalAct/lt/TAD/548a2a30ead611e59b76f36d7fa634f8 (accessed on 5 September 2025).

- Ministry of National Defence of the Republic of Lithuania. Long-Term Development Programme of the National Defence System for 2022–2035. Approved by the Parliament of the Republic of Lithuania. 2022. Available online: https://kam.lt (accessed on 5 September 2025).

- Lithuanian Armed Forces FAQ on Education Continuity. Available online: https://kariuomene.lt/en/newsevents/frequently-asked-questions-about-the-lithuanian-armed-forces-in-the-context-of-covid19/21863?utm (accessed on 5 September 2025).

- Distance Course for Ukrainian Cadets (TLP Course). Available online: https://thedefensepost.com/2022/11/07/lithuania-distance-training-ukraine/?utm (accessed on 5 September 2025).

- Tamim, S.R. Analyzing the Complexities of Online Education Systems: A Systems Thinking Perspective. TechTrends 2020, 64, 740–750.

- Woods, D.D. Resilience Engineering: Concepts and Precepts; CRC Press: Boca Raton, FL, USA, 2017.

- ISO 31000:2022; Risk Management—Guidelines. International Organization for Standardization: Geneva, Switzerland, 2022.

- Bamber, C.; Elezi, E. Enterprise-Wide Risk Management in Higher Education: Beyond the Paradigm of Managing Risk by Categories. Bus. Process Manag. J. 2024.

- Siemens, G.; Long, P. Penetrating the Fog: Analytics in Learning and Education. Educ. Rev. 2011, 46, 31–40.

- Ifenthaler, D.; Yau, J.Y.-K. Utilising Learning Analytics for Study Success: Reflections on Current Empirical Findings. In Utilizing Learning Analytics to Support Study Success; Springer: Cham, Switzerland, 2019; pp. 27–36.

- Starr-Glass, D. Military learners: Experience in the design and management of online learning environments. J. Online Learn. Teach. 2011, 7, 147–158.

- Joiner, K.F.; Tutty, M.G. A Tale of Two Allied Defence Departments: New Assurance Initiatives for Managing Increasing System Complexity, Interconnectedness and Vulnerability. Aust. J. Multi-Discip. Eng. 2018, 14, 4–25.

- Erkinovich, S.Z.; Sharofiddinovna, I.M. Innovative Methods for Developing Future Young Officers’ Soft Skills: Principles of the “Best Commander” Correctional Program. Web Teach. Indersci. Res. 2025, 3, 165–170.

- Sottilare, R.A. Examining the Role of Knowledge Management in Adaptive Military Training Systems. In Adaptive Instructional Systems. HCII 2024; Sottilare, R.A., Schwarz, J., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2024; Volume 14727, pp. 300–313.

- Barana, A.; Fissore, C.; Floris, F.; Fradiante, V.; Marchisio Conte, M.; Roman, F.; Spinello, E. Digital Education: Theoretical Frameworks and Best Practices for Teaching and Learning in the Security and Defence Area. In Handbook on Teaching Methodology for the Education, Training and Simulation of Hybrid Warfare; Ludovika University Press: Budapest, Hungary, 2025; pp. 13–41.

- Sterman, J.D. Business Dynamics: Systems Thinking and Modeling for a Complex World; McGraw-Hill: Boston, MA, USA, 2000.

- Meadows, D.H. Thinking in Systems: A Primer; Chelsea Green Publishing: White River Junction, VT, USA, 2008.

- Torres, J.P.; Kunc, M.; O’Brien, F.A. Supporting Strategy Using System Dynamics. Eur. J. Oper. Res. 2017, 260, 1085–1097.

- Kunc, M. Strategic Analytics: Integrating Management Science and Strategy; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- ISO 31000:2018; Risk Management—Guidelines. ISO: Geneva, Switzerland, 2018.

- Aven, T. Further reflections on EFSA’s work on uncertainty in scientific assessments. J. Risk Res. 2017, 24, 553–561.

- Hollnagel, E. Epilogue: RAG—The Resilience Analysis Grid. In Resilience Engineering in Practice; CRC Press: Boca Raton, FL, USA, 2017; pp. 275–296.

- Leitner, P.; Khalil, M.; Ebner, M. Learning Analytics in Higher Education—A Literature Review. In Learning Analytics: Fundaments, Applications, and Trends; Springer: Cham, Switzerland, 2017; pp. 1–23.

- Slade, S.; Prinsloo, P. Learning Analytics: Ethical Issues and Dilemmas. Am. Behav. Sci. 2013, 57, 1510–1529.

- Martin, F.; Wu, T.; Wan, L.; Xie, K. A Meta-Analysis on the Community of Inquiry Presences and Learning Outcomes in Online and Blended Learning Environments. Online Learn. 2022, 26, 325–359.

- Parta, I.B.M.W.; Soelistya, D.; Hanafiah, H.; Basri, T.H.; Saputri, D.Y. Advancing teacher professional development for interactive learning technologies in digitally enriched classrooms. J. Educ. Manag. Instr. (JEMIN) 2025, 5, 138–148.

- Sabrina, F.; Azad, S.; Sohail, S.; Thakur, S. Ensuring academic integrity in online assessments: A literature review and recommendations. Int. J. Inf. Educ. Technol. 2022, 12, 60–70.

- Newton, P.M. How Common Is Cheating in Online Exams and Did It Increase During COVID-19? A Review of the Evidence. Ethics Educ. 2024, 19, 323–343.

- Dendir, S. Cheating in Online Courses: Evidence from Online Proctoring. Int. Rev. Econ. Educ. 2020, 35, 100176.

- Lee, K.; Fanguy, M. Online exam proctoring technologies: Educational innovation or deterioration? Br. J. Educ. Technol. 2022, 53, 475–490.

- Chan, J.C.; Ahn, D. Unproctored Online Exams Provide Meaningful Assessment of Student Learning. Proc. Natl. Acad. Sci. USA 2023, 120, e2302020120.

- Arnold, I.J. Online Proctored Assessment during COVID-19: Has Cheating Increased? J. Econ. Educ. 2022, 53, 277–295.

- National Institute of Standards and Technology (NIST). SP 800-171 Rev. 2: Protecting Controlled Unclassified Information in Nonfederal Systems and Organizations; NIST: Gaithersburg, MD, USA, 2020.

- National Institute of Standards and Technology (NIST). Protecting Controlled Unclassified Information in Nonfederal Systems and Organizations—Overview. 2021. Available online: https://csrc.nist.gov/publications/fips (accessed on 13 August 2025).

- Zhang, D.; Lawrence, C.; Sellitto, M.; Wald, R.; Schaake, M.; Ho, D.E.; Altman, R.; Grotto, A. Enhancing International Cooperation in AI Research: The Case for a Multilateral AI Research Institute. Stanford Institute for Human-Centered Artificial Intelligence, 2022. Available online: https://hai-production.s3.amazonaws.com/files/2022-05/HAI%20Policy%20White%20Paper%20-%20Enhancing%20International%20Cooperation%20in%20AI%20Research.pdf (accessed on 13 August 2025).

- Akacha, S.A.-L.; Awad, A.I. Enhancing Security and Sustainability of e-Learning Software Systems: A Comprehensive Vulnerability Analysis and Recommendations for Stakeholders. Sustainability 2023, 15, 14132.

- Korać, D.; Damjanović, B.; Simić, D. A model of digital identity for better information security in e-learning systems. J. Supercomput. 2022, 78, 3325–3354.

- Xu, Y.; Yin, D.; Zhou, D. Investigating Users’ Tagging Behavior in Online Academic Community Based on Growth Model: Difference between Active and Inactive Users. Inf. Syst. Front. 2019, 21, 761–772.

- Lin, R.; Chu, J.; Yang, L.; Lou, L.; Yu, H.; Yang, J. What are the determinants of rural-urban divide in teachers’ digital teaching competence? Empirical evidence from a large sample. Humanit. Soc. Sci. Commun. 2023, 10, 422.

- Si, S.L.; You, X.Y.; Liu, H.C.; Zhang, P. DEMATEL Technique: A Systematic Review of the State-of-the-Art Literature on Methodologies and Applications. Math. Probl. Eng. 2018, 2018, 3696457.

- Navickiene, O.; Bekesiene, S.; Vasiliauskas, A.V. Optimizing Unmanned Combat Air Systems Autonomy, Survivability, and Combat Effectiveness: A Fuzzy DEMATEL Assessment of Critical Technological and Strategic Drivers. In Proceedings of the 2025 International Conference on Military Technologies (ICMT), Brno, Czech Republic, 20–23 May 2025; IEEE: New York, NY, USA, 2025; pp. 1–8.

- Bekesiene, S.; Smaliukienė, R.; Vaičaitienė, R.; Bagdžiūnienė, D.; Kanapeckaitė, R.; Kapustian, O.; Nakonechnyi, O. Prioritizing Competencies for Soldier’s Mental Resilience: An Application of Integrative Fuzzy-Trapezoidal Decision-Making Trial and Evaluation Laboratory in Updating Training Program. Front. Psychol. 2024, 14, 1239481.

- Bekesiene, S.; Meidute-Kavaliauskiene, I.; Hošková-Mayerová, Š. Military Leader Behavior Formation for Sustainable Country Security. Sustainability 2021, 13, 4521.

- Vasiliauskas, A.V.; Bekesiene, S.; Navickienė, O. Importance of Modern Aerial Combat Systems in National Defence: Key Factors in Acquiring Aerial Combat Systems for Lithuania’s Needs. In Proceedings of the 2025 International Conference on Military Technologies (ICMT), Brno, Czech Republic, 20–23 May 2025; IEEE: New York, NY, USA, 2025; pp. 1–8.

- Rusu, T.M.; Odagiu, A.; Pop, H.; Paulette, L. Sustainability Performance Reporting. Sustainability 2024, 16, 8538.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).