1. Introduction

The global diffusion of Korean culture and South Korea’s expanding economic integration have substantially increased demand for Korean language education [1,2,3]. This growth has significantly increased the workload of educational institutions and organizations responsible for evaluating language proficiency [2,3,4]. Writing assessment, a fundamental component of assessing linguistic competence, presents a particularly significant challenge [5]. The manual grading of essays is a notoriously labor-intensive, time-consuming, and expensive process [6,7]. Furthermore, it is susceptible to significant variability, including inconsistencies between different raters (inter-rater reliability) and even inconsistencies from the same rater over time (intra-rater reliability) [8,9]. These challenges are magnified in the context of high-stakes, large-scale examinations such as the Test of Proficiency in Korean (TOPIK), where fairness, consistency, and efficiency are paramount [10,11].

To address these systemic bottlenecks, the field of educational technology has increasingly turned to Automated Essay Scoring (AES). AES systems leverage computational methods to analyze and score written text, offering a powerful technological intervention to augment or automate the grading process [12]. The primary promise of AES lies in its potential to deliver immediate, consistent, and scalable feedback, thereby decreasing the burden on human educators and providing learners with timely evaluations to guide their development [13]. As demand continues to rise, robust and reliable AES systems have become essential to the sustainable expansion of high-quality language education [14].

The field of AES has undergone a significant technological evolution since its emergence. The earliest pioneering systems, such as the Project Essay Grader (PEG) developed in the 1960s, operated on the principle of feature engineering [15]. These systems analyzed writing based on a set of hand-crafted, quantifiable proxies (“proxes”) for the intrinsic qualities of writing (“trins”) that cannot be measured directly. The proxies included surface-level linguistic features such as word and sentence counts, grammatical error frequencies, vocabulary diversity metrics, and readability scores like the Flesch–Kincaid grade level [16]. While effective to a degree, these models were limited in their ability to comprehend the deeper semantic content or logical structure of an essay [17].

The advent of deep learning marked a paradigm shift in AES [18,19,20]. Architectures like Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and, more recently, Transformers (e.g., BERT) made manual feature engineering unnecessary [19,21]. These models could learn complex, high-dimensional representations of syntax and semantics directly from raw text, leading to substantial improvements in scoring accuracy [21,22,23]. However, this shift often came at the cost of interpretability, creating “black box” models whose decision-making processes lacked transparency [23,24].

The state of the art has shifted to an integrative paradigm enabled by large language models (LLMs) [25]. AES development follows a U-shaped trajectory, from transparent, engineered features, through a period of end-to-end deep learning with limited interpretability, and now toward a principled synthesis of both [19,25]. LLMs function as modules within hybrid systems for zero-shot evaluation, generation of explanatory feedback, and, in this study, construction of high-quality reference texts for fine-grained semantic comparison [26]. This work adopts that paradigm, combining the reliability of engineered linguistic features with the semantic modeling capacity of LLMs [25]. The approach in [25] utilizes an LLM (GPT-3) primarily as a feature encoder. It generates neural context embeddings from existing, human-graded essays to compute sophisticated features, such as the local semantic coherence between adjacent sentences. In that model, the “gold standard” for discourse is derived from a pre-existing, human-annotated corpus. The challenge of creating reliable benchmarks is particularly acute in the Korean context, where the field of LLM evaluation is still developing. While several benchmarks like the Open Ko-LLM Leaderboard and HAE-RAE Bench have been introduced, much of the existing evaluation infrastructure relies on the direct translation of English benchmarks, an approach that may not fully capture the unique linguistic and cultural nuances of Korean. This highlights a critical need for native evaluation methodologies and resources [27,28]. Our work contributes to this area by proposing and validating a method for generating high-quality, Korean-specific reference texts for assessment, a necessary step for building more reliable evaluation tools.

While AES for English has been extensively researched, its application to other languages, particularly for non-native (L2) learners, presents a distinct set of challenges [29]. L2 writing is characterized by unique linguistic phenomena that can confound systems trained primarily on native-speaker text [30]. These include linguistic transfer, in which L1 grammar and writing conventions influence L2 production, together with more frequent error types that standard AES models may misinterpret [31].

These general L2 challenges are compounded by the specific linguistic characteristics of the Korean language [32]. Korean encodes grammatical relations primarily via case-marking particles (조사, josa) and verbal inflections, creating difficulties for learners and for automated analysis [32,33]. Indeed, analyses of learner corpora show that errors in particle usage are among the most frequent mistakes made by Korean L2 learners. Furthermore, the relative scarcity of large-scale, publicly available, and well-annotated corpora of Korean L2 writing has historically hindered research and development in this area, creating a significant gap compared to the resources available for English [34,35]. An entire subfield of Korean NLP has emerged to address this challenge, developing sophisticated methods for the annotation, detection, and correction of these specific error types. A successful AES system for Korean L2 writing must therefore be designed to be robust to these specific linguistic features and error patterns [33,36,37].

This paper proposes a novel AES system designed to address the diverse and interrelated challenges of evaluating Korean L2 writing. The proposed solution is conceived not as a monolithic algorithm but as a holistic, multi-component socio-technical system for application in the educational domain. The system’s design is motivated by the central hypothesis that a more accurate, reliable, and human-like assessment can be achieved by systematically integrating two complementary sets of features, mirroring the dual focus of human graders on both form and content. While conceptually similar in its hybrid nature to previous work [25], our work diverges from this approach in its fundamental application of the LLM. Rather than using the LLM as an encoder of existing texts, we employ it as a generator of the benchmark itself. Our system prompts a state-of-the-art LLM to create a diverse set of twenty new, high-quality “ideal” reference essays for each topic. The resulting semantic feature, therefore, measures global topical relevance against this generated benchmark. This is a distinct methodological choice aimed at evaluating content alignment, particularly for under-resourced languages like Korean, where large, expertly graded L2 corpora are less available.

The first component of our system consists of a suite of traditional linguistic features. These quantifiable metrics, such as lexical diversity, syntactic complexity, and readability, provide a robust and interpretable measure of a writer’s foundational linguistic proficiency. They effectively assess how the learner constructs language.

The second, and novel, component is an LLM-powered semantic feature. This feature directly evaluates the substance of the writing such as its coherence, topical relevance, and content quality. It is calculated by measuring the semantic similarity between the learner’s essay and an “ideal” reference answer generated by an LLM. This approach uses the LLM as a proxy for the world knowledge and topical understanding that a human expert brings to the grading process. This feature assesses what the learner has written.

By integrating these two distinct feature sets, the proposed hybrid architecture leverages the respective strengths of both established and emerging paradigms: the precision and interpretability of engineered linguistic features and the deep semantic comprehension of LLMs. This systemic approach aims to capture a more complete and nuanced picture of a learner’s writing ability than could be achieved by either approach in isolation. Furthermore, the system is designed for practical application within real-world educational frameworks by utilizing the official Korean Language Learner Corpus from the National Institute of the Korean Language (NIKL) and aligning its 6-point scoring output with the established TOPIK proficiency levels.

The primary contributions of this research are fourfold:

The design and implementation of a novel hybrid AES system specifically for assessing the Korean writing of non-native speakers.

The introduction of a new feature for semantic evaluation based on calculating the similarity between a student’s essay and a high-quality reference answer generated by an LLM.

A comprehensive evaluation of the proposed system using the official Korean Language Learner Corpus from the National Institute of the Korean Language, with performance benchmarked against the 6-level TOPIK proficiency scale.

An empirical demonstration that the proposed hybrid, systemic approach significantly outperforms models based on either traditional linguistic or semantic features alone.

The remainder of this paper is organized as follows:

Section 2 details the materials and methods, including the dataset, system architecture, and feature engineering processes.

Section 3 describes the experimental setup and evaluation protocol.

Section 4 presents and analyzes the results of our experiments.

Section 5 discusses the interpretation and implications of these findings, as well as the limitations of the study.

Finally, Section 6 provides concluding remarks.

2. Materials and Methods

This section details the methodology employed to develop and validate the hybrid AES system. We first describe the dataset used for training and evaluation. Next, we present the overall system architecture, followed by a detailed explanation of the two distinct feature engineering pipelines, one for traditional linguistic features and another for the novel LLM-based semantic feature. Finally, we describe the predictive model used to generate the final proficiency scores.

2.1. Dataset: The Korean Language Learner Corpus

The foundation of this study is the Korean Language Learner Corpus (한국어 학습자 말뭉치), a large-scale linguistic resource compiled and maintained by the NIKL of the Republic of Korea. This corpus is specifically designed for research in second language acquisition and Korean language education by collecting texts produced by non-native learners of Korean. The first phase of its construction, completed in 2022, gathered approximately 6.2 million eojeols (the Korean spacing unit, roughly comparable to a word or short phrase) from learners representing 143 countries and 99 native language backgrounds.

For this study, we utilized a subset of the corpus containing written essays. Each essay in the dataset is accompanied by metadata, including the learner’s proficiency level, which is graded on the 6-level scale corresponding to the TOPIK framework (Levels 1–6) (Table 1).

This expert-assigned proficiency level serves as the ground-truth label and the target variable for our predictive model. The corpus provides a rich and diverse collection of authentic L2 writing, encompassing the typical error patterns, grammatical structures, and lexical choices characteristic of learners at different stages of proficiency [38]. Access to the corpus for research purposes is managed through the NIKL’s “Korean Language Learner Corpus Sharing Center”.

To ensure sufficient coverage and comparability across prompts, we developed and evaluated the AES system using essays drawn from the 20 most frequently occurring topics in the corpus (Table 2). Topic frequencies were computed from the corpus metadata, and essays were filtered accordingly. Focusing on the highest-frequency topics thus yields a dataset that remains representative of common communicative tasks while enabling rigorous, topic-aware analysis of model performance.

2.2. System Architecture

The proposed AES system employs a hybrid, feature-based architecture designed to conduct a holistic assessment of writing quality by evaluating both linguistic form and semantic content.

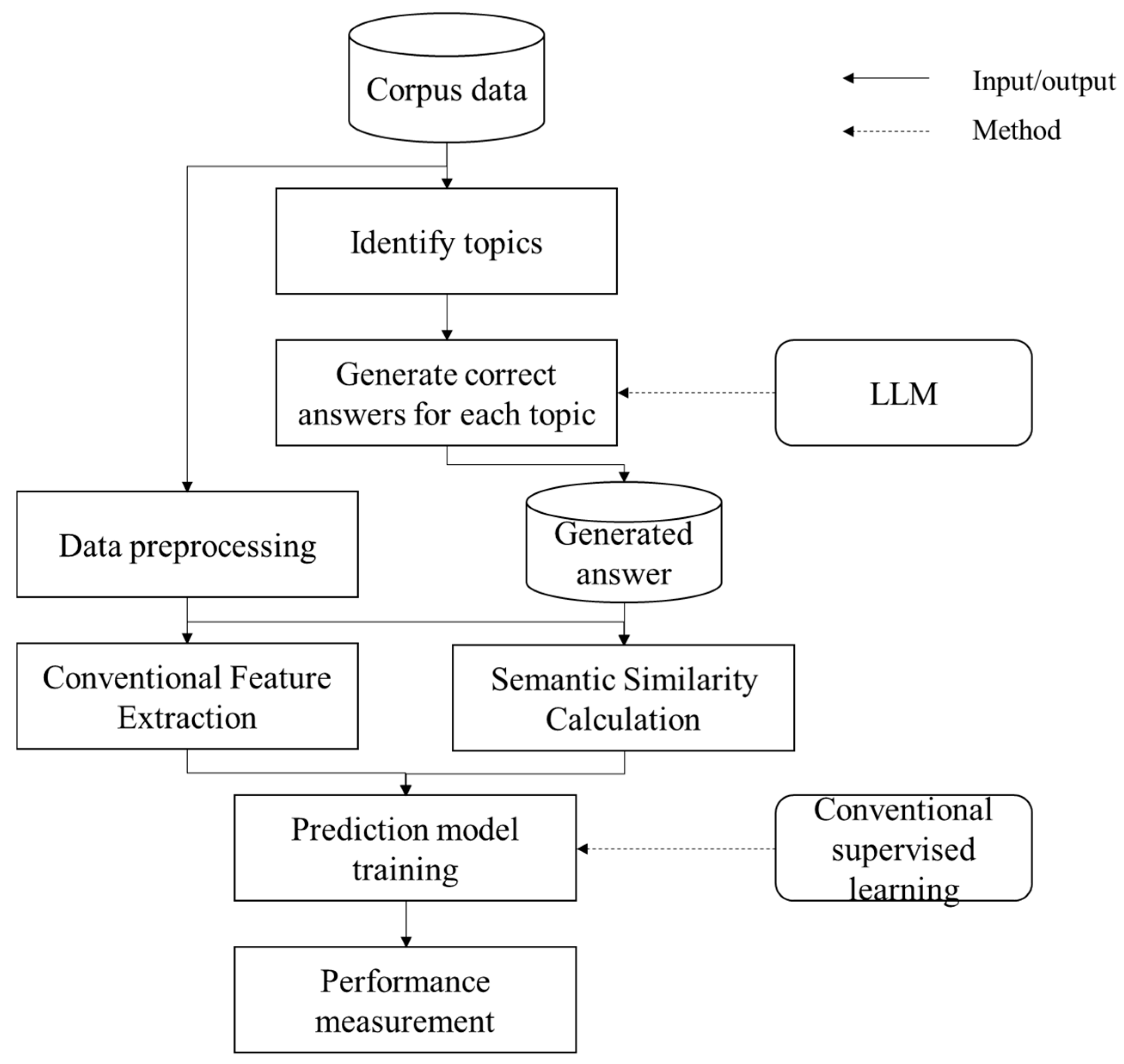

Figure 1 presents an end-to-end architecture that converts raw learner essays into TOPIK-aligned proficiency predictions. The pipeline begins with topic identification from corpus metadata. As mentioned in Section 2.1, essays associated with the 20 most frequent prompts are retained to ensure adequate sample sizes per prompt and comparability across prompts.

For each retained topic, a large language model generates a set of high-quality reference responses subject to length and style constraints. These references function as semantic anchors rather than as training labels. Essays are then normalized through script cleanup, sentence segmentation, tokenization, and metadata validation. Two parallel feature branches are constructed. In the semantic branch, each essay and its topic-specific references are embedded with a sentence-level encoder, and similarity statistics are computed. We use top-k cosine similarities and aggregate descriptors such as mean, maximum, variance, and coverage indicators to summarize content alignment at the topic level. In the conventional branch, interpretable descriptors of form are extracted, including character/word level length measures, lexical diversity indices, syntactic complexity indicators, discourse and cohesion cues, and surface error rates. Feature quality control removes degenerate vectors, caps extreme values, and records missingness flags.

The branches are concatenated after scaling and type handling to form a unified feature vector. Supervised learning is applied to the concatenated representation to predict proficiency levels 1–6. To prevent leakage, topic identifiers are excluded from the learner, text normalization is performed prior to any feature computation, and cross-validation is conducted with topic-aware folds so that prompts do not span training and validation partitions. Model selection relies on cross-validation with a bounded hyperparameter search and class-balanced sampling where appropriate. Outputs are evaluated using Quadratic Weighted Kappa (QWK), Mean Absolute Error (MAE), and Root Mean Squared Error (RMSE). Confusion matrices provide label-space diagnostics, and ablations quantify the marginal contribution of the semantic and conventional branches. This architecture integrates interpretable linguistic evidence with topic-aware semantic evidence derived from LLM-generated references, enabling reliable, content- and form-sensitive assessment while maintaining reproducibility and control over prompt effects.

2.3. Feature Engineering I: Linguistic Form Analysis

This pipeline extracts a set of established linguistic features that serve as proxies for writing proficiency. These features are designed to be robust and interpretable, capturing foundational aspects of a learner’s command of the Korean language.

Lexical Features: These features measure the richness and diversity of the vocabulary used [5,39].

Word and Morpheme Counts: Total number of words (eojeols), unique words, morphemes, and unique morphemes [40,41].

Type-Token Ratio (TTR): Calculated as the number of unique morphemes (types) divided by the total number of morphemes (tokens). A higher TTR generally indicates greater lexical diversity [42].

Syntactic Complexity Features: These features assess the sophistication of the grammatical structures employed by the learner [43].

Sentence-Level Metrics: Total number of sentences and average sentence length (in words and morphemes). Longer, more complex sentences are often characteristic of higher proficiency levels [44].

Subordination and Clause Complexity: Metrics derived from syntactic parsing, such as the average depth of the parse tree and the ratio of subordinate clauses to main clauses, are used to quantify grammatical complexity [45].
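As an illustration of the count-based features listed above, the following minimal sketch computes morpheme counts, TTR, and average sentence length. It assumes the essay has already been split into sentences and morphemes by some morphological analyzer; the function name `lexical_features` and the hand-segmented toy input are hypothetical and are not taken from the system described here.

```python
def lexical_features(sentences):
    """Compute simple lexical and sentence-level features.

    `sentences` is a list of sentences, each given as a list of morpheme
    strings (assumed to come from an upstream morphological analyzer).
    """
    morphemes = [m for sent in sentences for m in sent]
    n_tokens = len(morphemes)
    n_types = len(set(morphemes))
    return {
        "n_sentences": len(sentences),
        "n_morphemes": n_tokens,
        "n_unique_morphemes": n_types,
        # TTR = unique morphemes (types) / total morphemes (tokens)
        "ttr": n_types / n_tokens if n_tokens else 0.0,
        # Average sentence length measured in morphemes
        "avg_sentence_len": n_tokens / len(sentences) if sentences else 0.0,
    }


# Toy, hand-segmented example (illustrative segmentation only).
example = [
    ["저", "는", "학생", "이", "ㅂ니다"],
    ["저", "는", "한국어", "를", "배우", "ㅂ니다"],
]
feats = lexical_features(example)
print(feats)
```

Because TTR tends to fall as texts get longer, a length-normalized variant may be preferable when essay lengths vary widely; that choice is outside what the text specifies.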

2.4. Feature Engineering II: LLM-Based Semantic Content Analysis

This pipeline introduces a novel feature to evaluate the quality and relevance of the essay’s content, addressing a common limitation of traditional AES systems that focus primarily on form. The process involves two steps, (1) generating a benchmark answer and (2) calculating similarity.

First, for each essay prompt in the dataset, a Large Language Model (GPT-4o) is prompted to generate an exemplary essay [46]. The prompt instructs the LLM to write a well-structured, coherent, and topically rich response that would be representative of a TOPIK Level 6 performance. This LLM-generated text serves as a “golden” or ideal answer, encapsulating the key concepts and logical structure expected for a high-scoring response on that topic. This approach leverages LLMs’ ability to produce high-quality, contextually relevant text that can serve as a reliable benchmark for comparison.

Prompt 1: ideal answer generation

System: You are an expert Korean writing instructor and TOPIK rater.

User: Generate exemplar responses under the following constraints.

[Input]

- Topic: {TOPIC}

[Task]

- Produce 20 distinct Korean essays representative of TOPIK Level 6 for the given topic.

- Ensure clear diversity across essays, minimize lexical and phrasal overlap.

[Form and language]

- Register: formal written Korean suitable for an exam response

- Structure: introduction, development, and conclusion

- Length: ~580 characters on average; ~152 eojeols (±10) on average

- Sentences: preferably 10–14 per essay

- Linguistic requirements: correct spacing and punctuation; appropriate case particles and verbal endings; natural cohesion

- Prohibitions: no bullet lists, no mention of the instructions, no external quotations or sources, no code-switching

[Output format]

- Return a single JSON object whose sole key is the topic string and whose value is an array of 20 essay strings.

- Example: {"{TOPIC}": ["Essay 1", "Essay 2", …, "Essay 20"]}

- Output valid UTF-8 JSON only, with no additional commentary
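Since the prompt requests a strict JSON structure, the returned text would typically be checked before the essays are used as references. The sketch below is one possible validation step under that assumption; `parse_reference_bank` is a hypothetical helper, and the duplicate check is an added safeguard rather than something the prompt itself guarantees.

```python
import json


def parse_reference_bank(raw, topic, expected_n=20):
    """Validate an LLM response against the output format in Prompt 1.

    Returns the list of essay strings, or raises ValueError if the
    structure deviates from {topic: [essay_1, ..., essay_n]}.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or list(data.keys()) != [topic]:
        raise ValueError("expected a JSON object whose sole key is the topic")
    essays = data[topic]
    if not isinstance(essays, list) or len(essays) != expected_n:
        raise ValueError(f"expected an array of {expected_n} essays")
    if not all(isinstance(e, str) and e.strip() for e in essays):
        raise ValueError("every essay must be a non-empty string")
    if len(set(essays)) != len(essays):
        raise ValueError("essays must be distinct")
    return essays


# Toy response standing in for real model output.
raw = json.dumps({"여행": [f"에세이 {i}" for i in range(20)]}, ensure_ascii=False)
refs = parse_reference_bank(raw, "여행")
print(len(refs))
```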

After answer generation, semantic similarities are calculated to quantify how well a student’s essay aligns with the ideal answer.

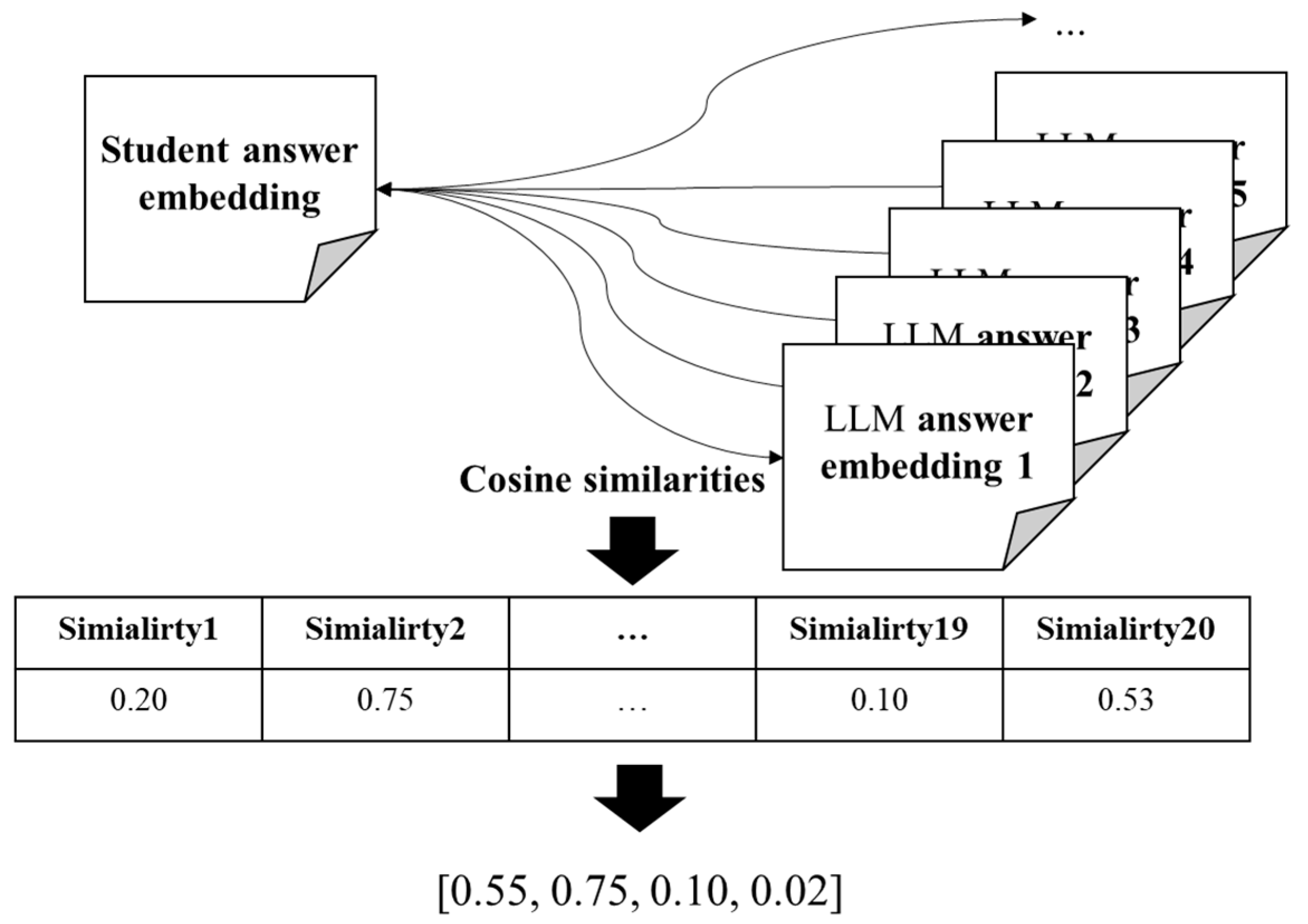

Figure 2 illustrates the semantic branch of the hybrid AES. For each topic, both the student essay and 20 reference essays produced by an LLM are embedded with the same sentence-transformer encoder and L2-normalized. Pairwise cosine similarities are then calculated between the student vector and all reference vectors. The resulting similarity distribution is summarized by the top-k values (k = 5 in our main experiments) together with aggregate descriptors such as the maximum, mean, and variance. These statistics quantify topic-level content alignment and are concatenated with conventional linguistic features for supervised learning.

We measure the semantic similarity between the two texts. Both the student’s essay and the LLM-generated reference answer are converted into high-dimensional vector representations (embeddings) using a pre-trained sentence-transformer model (Sentence-BERT) [47]. These models are adept at capturing the semantic meaning of text, going beyond simple keyword matching. The cosine similarity between the two vectors is then calculated as below [48].

$$\operatorname{sim}(\mathbf{u}, \mathbf{v}) = \frac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert \, \lVert \mathbf{v} \rVert}$$

which equals the cosine of the angle between $\mathbf{u}$ and $\mathbf{v}$, the embedding vectors of the student essay and the reference answer. The resulting score, ranging from −1 to 1 (but typically 0 to 1 for this task), serves as the semantic feature. A score closer to 1 indicates a high degree of semantic overlap and topical relevance between the student’s writing and the high-proficiency benchmark.
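The similarity statistics described in this section (top-k values, maximum, mean, and variance over the reference bank) can be sketched as follows. This is a minimal illustration that assumes the embeddings have already been produced by some encoder and are passed in as plain lists of floats; the function names, the dictionary keys, and the use of population variance are assumptions, not details confirmed by the text.

```python
import math
from statistics import mean, pvariance


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def cosine(u, v):
    """Cosine similarity, computed as the dot product of unit vectors."""
    u, v = l2_normalize(u), l2_normalize(v)
    return sum(a * b for a, b in zip(u, v))


def semantic_features(essay_vec, reference_vecs, k=5):
    """Summarize similarities between one essay and its topic references."""
    sims = sorted((cosine(essay_vec, r) for r in reference_vecs), reverse=True)
    top_k = sims[:k]
    return {
        "top_k": top_k,
        "top_k_mean": mean(top_k),
        "max": sims[0],
        "mean": mean(sims),
        "variance": pvariance(sims),  # population variance (an assumption)
    }


# Toy 2-D vectors standing in for sentence embeddings.
essay = [1.0, 0.0]
references = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sem = semantic_features(essay, references, k=2)
print(sem)
```

In practice the reference bank would hold 20 vectors per topic and k would be 5, as stated for the main experiments.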

2.5. Predictive Modeling

We formulate automated essay scoring as an ordinal prediction task with target levels 1–6 aligned to the TOPIK scale. Three feature spaces are considered. The first feature space is a conventional set capturing surface form, lexical diversity, syntactic complexity, cohesion cues, and error indicators. The second feature set is a semantic set derived from similarity statistics between each student essay and a bank of twenty topic-specific reference responses. The last set is a combined feature set obtained by concatenation after scaling and type handling.

Five supervised learners are evaluated to represent linear, kernel, and tree-based families, which include L2-regularized linear regression (Ridge), support vector regression with an RBF kernel (SVR), random forest, histogram-based gradient boosting (HistGB), and gradient-boosted decision trees (XGBoost). Models are trained in regression mode to respect the ordinal structure of the labels.

Ridge penalizes the squared magnitude of coefficients to stabilize estimation and reduce variance. It also mitigates multicollinearity and yields well-conditioned solutions in high-dimensional feature spaces [49].

SVR optimizes an ε-insensitive loss with margin maximization, producing sparse solutions based on support vectors. Nonlinear relations are modeled via kernels, with $C$ and $\gamma$ governing regularization and function complexity [50,51].

Random Forest constructs an ensemble of decision trees using bootstrap sampling and random feature subsetting at each split. Aggregating decorrelated trees captures nonlinear interactions and provides robustness to overfitting [52].

HistGB discretizes continuous features into histogram bins and fits additive decision trees via gradient boosting. Generalization and efficiency are controlled by learning rate, tree depth, regularization, and early stopping [53,54].

XGBoost implements a regularized gradient-boosting framework with second-order optimization and sparsity-aware split finding. Shrinkage and column/row subsampling, together with L1/L2 penalties, provide effective control of overfitting and computational cost [55].

At inference, continuous outputs are clipped to [1, 6] and rounded to the nearest integer for label-based diagnostics. For Ridge and SVR, numeric predictors are standardized to zero mean and unit variance, and tree-based models are fit on the raw scale. Feature quality control removes degenerate vectors, caps extreme values, and records missingness indicators; median imputation is applied where required.
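The clipping and rounding step can be illustrated with a short sketch. The helper name `to_level` is hypothetical, and the tie-breaking rule (halves round up) is an assumption, since the text only states that outputs are rounded to the nearest integer.

```python
import math


def to_level(score, low=1, high=6):
    """Map a continuous regression output to a TOPIK level in [low, high]."""
    clipped = min(max(score, low), high)
    # Round to the nearest integer; exact halves round up (assumed rule).
    return int(math.floor(clipped + 0.5))


raw_outputs = [0.2, 2.49, 3.5, 7.9]
levels = [to_level(s) for s in raw_outputs]
print(levels)
```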

Model selection uses nested, topic-aware cross-validation to prevent prompt leakage. The outer evaluation employs grouped folds with topics as grouping units and stratification on proficiency level within each fold. The inner loop performs hyperparameter tuning by grid or randomized search restricted to bounded ranges. Early stopping is used for gradient-boosting models based on a validation split drawn from the training fold. Where supported, class weights inversely proportional to label frequencies are enabled as a robustness check. Random seeds are fixed for reproducibility.

2.6. Performance Measurement

We employed a 5-fold topic-aware grouped cross-validation to ensure that essays written for the same prompt did not appear in both the training and test sets of any fold. Within each training fold, a further 90/10 split was used to create a validation set for hyperparameter tuning and early stopping in the gradient boosting models. We evaluate the model’s performance using three standard metrics in AES research, which together provide a comprehensive view of the prediction performance. Because the target value is an ordinal six-level proficiency grade, model performance is quantified using (i) QWK, a chance-corrected coefficient of agreement adjusted for ordinal categories, (ii) MAE, and (iii) RMSE.
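To make the grouping constraint concrete, the sketch below assigns whole topics to folds so that no prompt spans training and test partitions. It is a simplified, greedy illustration with a hypothetical function name; it balances fold sizes only and does not implement the stratification on proficiency level or the inner 90/10 validation split described in the text.

```python
from collections import Counter


def grouped_folds(topics, n_folds=5):
    """Return one fold index per essay, keeping each topic in a single fold.

    Topics are assigned greedily, largest first, to the currently
    smallest fold so that fold sizes stay roughly balanced.
    """
    counts = Counter(topics)
    fold_sizes = [0] * n_folds
    topic_to_fold = {}
    for topic, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        fold = fold_sizes.index(min(fold_sizes))
        topic_to_fold[topic] = fold
        fold_sizes[fold] += n
    return [topic_to_fold[t] for t in topics]


# Toy data: 200 essays spread evenly over 20 topics.
topics = [f"topic_{i % 20:02d}" for i in range(200)]
folds = grouped_folds(topics, n_folds=5)

for test_fold in range(5):
    train_topics = {t for t, f in zip(topics, folds) if f != test_fold}
    test_topics = {t for t, f in zip(topics, folds) if f == test_fold}
    # No prompt may appear on both sides of the split.
    assert train_topics.isdisjoint(test_topics)
print(Counter(folds))
```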

2.6.1. Quadratic Weighted Kappa (QWK)

Quadratic Weighted Kappa (QWK) is a statistical measure that evaluates the level of agreement between two raters (e.g., a human and an AES model) on an ordinal scale [56]. It is a variant of Cohen’s Kappa that is specifically designed for ordered categories, making it ideal for essay scoring. QWK not only corrects for agreement that could occur by chance but also penalizes larger disagreements more heavily than smaller ones. For example, a model predicting a ‘1’ for an essay that a human scored as a ‘6’ is penalized more than a model predicting a ‘5’ for the same essay. The formula for QWK is defined as below.

$$\kappa = 1 - \frac{\sum_{i,j} w_{i,j}\, O_{i,j}}{\sum_{i,j} w_{i,j}\, E_{i,j}}$$

where $O_{i,j}$ is the observed number of essays that received score $i$ from the human and score $j$ from the model. $E_{i,j}$ is the expected number of agreements by chance, calculated from the marginal totals of scores for the human and the model. $w_{i,j}$ is the weight matrix, which defines the penalty for disagreement. For QWK, the weights are calculated quadratically based on the distance between the scores:

$$w_{i,j} = \frac{(i - j)^2}{(N - 1)^2}$$

$N$ is the number of possible score categories. A QWK score of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate agreement worse than chance.
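The agreement statistic described above can be computed directly from the observed score matrix, its chance-expected counterpart, and the quadratic weights. The following is a minimal sketch in plain Python; the function name and argument names are illustrative, and it assumes integer scores in a known range and at least some disagreement expected by chance (otherwise the denominator is zero).

```python
def quadratic_weighted_kappa(human, model, min_score=1, max_score=6):
    """Quadratic Weighted Kappa between two lists of integer scores."""
    n_cat = max_score - min_score + 1
    n = len(human)

    # Observed matrix: rows index human scores, columns index model scores.
    observed = [[0] * n_cat for _ in range(n_cat)]
    for h, m in zip(human, model):
        observed[h - min_score][m - min_score] += 1

    # Marginal totals for each rater.
    hist_h = [sum(row) for row in observed]
    hist_m = [sum(observed[i][j] for i in range(n_cat)) for j in range(n_cat)]

    num = 0.0
    den = 0.0
    for i in range(n_cat):
        for j in range(n_cat):
            weight = (i - j) ** 2 / (n_cat - 1) ** 2
            expected = hist_h[i] * hist_m[j] / n  # chance-expected count
            num += weight * observed[i][j]
            den += weight * expected
    return 1.0 - num / den


perfect = quadratic_weighted_kappa([1, 2, 3, 4, 5, 6], [1, 2, 3, 4, 5, 6])
reversed_scores = quadratic_weighted_kappa([1, 2, 3], [3, 2, 1], 1, 3)
print(perfect, reversed_scores)
```

The two toy calls illustrate the ends of the scale: identical scores give a value of 1, while a fully reversed ordering on a three-level scale gives a negative value.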

2.6.2. Mean Absolute Error (MAE)

Mean Absolute Error (MAE) measures the average magnitude of the errors in a set of predictions, without considering their direction [57]. It is calculated as the average of the absolute differences between the predicted scores and the actual scores. MAE is easily interpretable because it is in the same units as the essay scores. For instance, an MAE of 0.5 means the model’s predictions are, on average, half a point away from the human scores. Because it does not square the errors, MAE is less sensitive to large, infrequent errors (outliers) than RMSE. The formula for MAE is:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

where $n$ is the total number of essays, $y_i$ is the true human-assigned score for the $i$-th essay, and $\hat{y}_i$ is the score predicted by the model for the $i$-th essay.

2.6.3. Root Mean Squared Error (RMSE)

The RMSE is the square root of the average of the squared differences between the predicted and actual scores [58]. Like MAE, RMSE measures the average error in the same units as the scores. However, the key difference is that RMSE squares the errors before averaging them, so large errors are given relatively high weight: a four-point error contributes 16 times as much to the squared-error sum as a one-point error. RMSE is therefore particularly sensitive to outliers and is a useful metric for penalizing models that produce large and severe scoring errors. The formula for RMSE is:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

where $n$ is the total number of essays, and $y_i$ and $\hat{y}_i$ are the human-assigned and model-predicted scores for the $i$-th essay.
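A small worked example makes the difference between the two error metrics visible. The sketch below uses made-up scores (not data from this study) in which one prediction is off by four levels and another by one level.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between human and predicted scores."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error between human and predicted scores."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


# Toy scores: errors are 0, 1, 0, and 4 levels.
y_true = [1, 2, 3, 6]
y_pred = [1, 3, 3, 2]

mae_value = mae(y_true, y_pred)    # (0 + 1 + 0 + 4) / 4
rmse_value = rmse(y_true, y_pred)  # sqrt((0 + 1 + 0 + 16) / 4)
print(mae_value, rmse_value)
```

Here the single four-point error accounts for 16 of the 17 units of squared error, which is why RMSE rises well above MAE on the same predictions.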

2.6.4. Statistical Significance Testing

To determine whether the observed differences in model performance were statistically significant, we conducted pairwise comparisons of the Quadratic Weighted Kappa (QWK) scores. We employed the DeLong test, a non-parametric method for comparing the Area Under the Curve (AUC) of two correlated Receiver Operating Characteristic (ROC) curves, which can be adapted for ordinal agreement metrics like QWK. The null hypothesis was that the two models have equal performance, and we used a significance level of α = 0.05 to evaluate our claims of model superiority.

3. Results

This section presents the empirical results of our experiments, evaluating the performance of various regression models using three distinct feature sets: conventional linguistic features, semantic similarity scores, and a combination of both. The models were assessed using QWK, MAE, and RMSE to provide a comprehensive view of their scoring accuracy.

3.1. Overall Model Performance

The primary experiment involved training and evaluating five different prediction models (Ridge, SVR, RandomForest, HistGB, and XGBoost) on the three feature configurations. The overall performance of each model across the entire test set is summarized in Table 3. The results clearly demonstrate significant performance differences based on both the selection of model and the feature set employed.

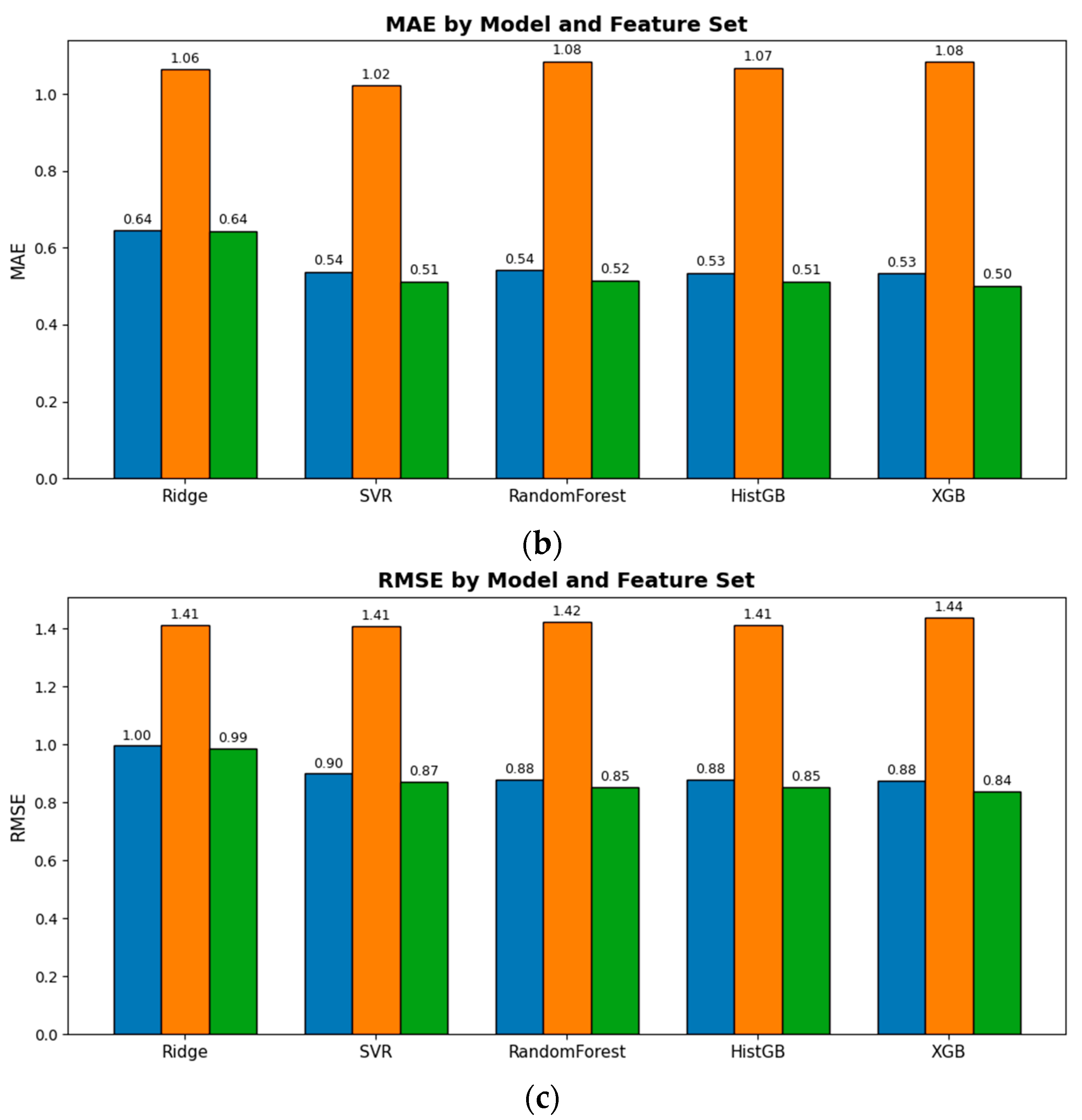

The results clearly indicate that the hybrid approach yields superior performance. The models trained exclusively on the semantic similarity feature performed poorly, with QWK scores near zero, suggesting that content similarity alone is insufficient for nuanced scoring. The models using conventional linguistic features established a strong baseline, with the XGBoost model achieving a QWK of 0.782. However, the highest accuracy was consistently achieved with the combined feature set (Figure 3).

Among the algorithms, the tree-based ensemble models (RandomForest, HistGB, and XGBoost) outperformed the Ridge and SVR models. The best result was obtained by the XGBoost model using the combined feature set, which achieved a QWK of 0.801, an MAE of 0.501, and an RMSE of 0.839. This result validates our central hypothesis that integrating traditional linguistic analysis with LLM-based semantic content evaluation provides a more robust and accurate scoring system. Crucially, pairwise statistical tests confirmed that the QWK score of the hybrid XGBoost model was significantly superior to all other model configurations and baselines (DeLong’s test,

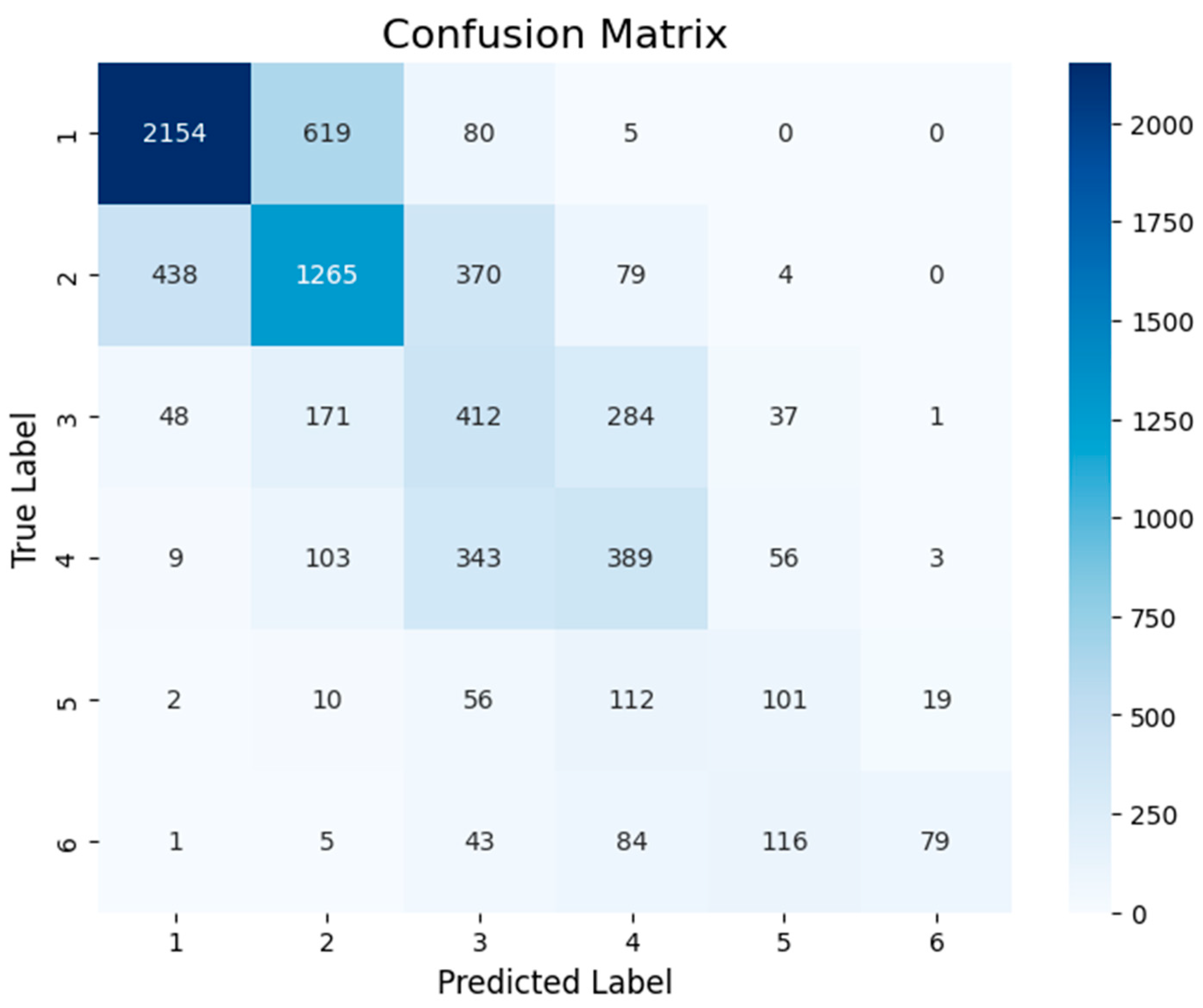

p < 0.05). To aid interpretability, we also report label-space accuracies for the best configuration (XGB + combined). Exact-match accuracy was 0.587, while 92.4% of predictions fell within ±1 level of the human score on the 6-point TOPIK scale. Class-wise exact-match rates monotonically decreased with proficiency level as follows: 0.754, 0.587, 0.432, 0.431, 0.337, 0.241 (

Figure 4). A pattern consistent with the increasing lexical/syntactic variability of higher-level responses. Pearson and Spearman correlations between predicted and reference scores were 0.807 and 0.773, respectively.
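The label-space diagnostics reported here (exact-match accuracy, agreement within ±1 level, and class-wise exact-match rates) can be computed as in the following sketch. The function names and the toy scores are illustrative only and do not reproduce the study's data.

```python
from collections import defaultdict


def exact_and_adjacent_accuracy(human, model):
    """Return (exact-match accuracy, share of predictions within +/-1 level)."""
    n = len(human)
    exact = sum(h == m for h, m in zip(human, model)) / n
    adjacent = sum(abs(h - m) <= 1 for h, m in zip(human, model)) / n
    return exact, adjacent


def per_level_exact_match(human, model):
    """Exact-match rate computed separately for each human-assigned level."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for h, m in zip(human, model):
        totals[h] += 1
        hits[h] += int(h == m)
    return {level: hits[level] / totals[level] for level in sorted(totals)}


# Toy scores for illustration.
human = [1, 1, 2, 3, 6, 6]
model = [1, 2, 2, 3, 4, 6]

exact, adjacent = exact_and_adjacent_accuracy(human, model)
by_level = per_level_exact_match(human, model)
print(exact, adjacent, by_level)
```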

The model demonstrates high accuracy for essays at the lower end of the score scale. For essays with a true score of 1, the model is correct in approximately 75% of cases (2154 out of 2858). Performance remains strong for score 2, with an accuracy of around 59% (1265 out of 2156). At these levels, writing quality is often distinguished by clear, surface-level linguistic markers such as high frequencies of grammatical errors, simple syntactic structures, and limited lexical diversity. The conventional features in our model are well-suited to detect these strong negative signals, allowing for reliable classification.

The model’s predictive power diminishes significantly for mid-range and high-end scores. Accuracy drops to approximately 43% for scores 3 and 4, further to 33% for score 5, and to just 24% for score 6. This decline can be explained by three primary factors:

As writers become more proficient, the utility of surface-level features as differentiators decreases. Essays scored 5 and 6 are both likely to exhibit low error rates, high lexical diversity, and complex syntax. The distinction between these scores lies in more abstract qualities such as rhetorical sophistication, argumentative nuance, and originality of thought. The current feature set is not designed to capture these subtle, higher-order attributes, resulting in a “ceiling effect” where the model can identify an essay as “good” but cannot reliably distinguish it from an “excellent” one.

For highly proficient writers, the semantic similarity score may become a confounding factor. An exceptional essay (score 6) might present a more creative, complex, or unconventional argument that deviates semantically from the standardized, LLM-generated reference answer. In contrast, a competent but less exceptional essay (score 4 or 5) might adhere more closely to a conventional line of reasoning, thereby achieving a higher similarity score. This can paradoxically cause the model to penalize originality and underestimate the quality of the most advanced essays.

The confusion matrix reveals a significant imbalance in the dataset. There are far more examples of low-scoring essays (2858 instances of score 1) than high-scoring ones (300 instances of score 5 and 328 of score 6). This skew means the model has substantially less data from which to learn the defining characteristics of top-tier writing. Consequently, the model is biased toward the more heavily represented lower and middle scores and exhibits a strong tendency to underestimate the quality of high-performing essays, as seen in the large number of true score 6 essays misclassified as 5 or 4.

3.2. Comparison with Direct LLM Scoring Baselines

A critical question for any hybrid system is whether its architectural complexity is justified when compared to direct scoring by a state-of-the-art Large Language Model. To address this, we benchmarked our system against direct scoring by GPT-4o under both zero-shot and few-shot conditions. In the zero-shot setting, the model was provided only with the essay and the official TOPIK scoring rubric. In the few-shot setting, the prompt was augmented with five representative essay examples for each of the six TOPIK levels.
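To make the two prompting conditions concrete, the sketch below assembles a zero-shot prompt (rubric and essay only) and a few-shot prompt (rubric, essay, and five scored examples per level). The function name `build_scoring_prompt`, the instruction wording, and the placeholder texts are hypothetical; the exact prompts used in the study are not reproduced in this section.

```python
def build_scoring_prompt(rubric, essay, examples_by_level=None):
    """Assemble a zero-shot (no examples) or few-shot scoring prompt.

    The wording is hypothetical; it only illustrates the structure.
    """
    parts = [
        "You are grading a Korean L2 essay on the 6-level TOPIK scale.",
        "Scoring rubric:",
        rubric,
    ]
    if examples_by_level:  # few-shot: add scored examples for each level
        parts.append("Scored examples:")
        for level in sorted(examples_by_level):
            for text in examples_by_level[level]:
                parts.append(f"[Level {level}] {text}")
    parts += ["Essay to score:", essay, "Answer with a single integer from 1 to 6."]
    return "\n".join(parts)

# Placeholder inputs (assumptions, not real rubric or essay text).
rubric = "<official TOPIK rubric text>"
essay = "<learner essay>"
examples = {
    level: [f"<example {i} for level {level}>" for i in range(1, 6)]
    for level in range(1, 7)
}

zero_shot = build_scoring_prompt(rubric, essay)
few_shot = build_scoring_prompt(rubric, essay, examples)
print(few_shot.count("[Level "))  # 30 (5 examples x 6 levels)
```

The resulting string would then be sent to the LLM through whichever client is in use; that call is deliberately omitted here.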

The results, presented in Table 4, are striking and decisively demonstrate the necessity of our hybrid architecture. Direct scoring by GPT-4o, even with few-shot prompting, performed very poorly, achieving a QWK of just 0.24. The high error rates (MAE of 1.28 and RMSE of 1.62) indicate that the model’s predictions were, on average, incorrect by more than a full proficiency level. This performance is substantially worse than even our baseline models using only conventional linguistic features.

In contrast, our hybrid XGBoost model achieved a QWK of 0.80, with MAE and RMSE values of 0.50 and 0.84, respectively. Relative to the GPT-4o QWK of 0.24, this is an improvement of more than 230% ((0.80 − 0.24)/0.24 ≈ 2.33). These findings provide clear validation for our hybrid approach. They suggest that while a powerful LLM possesses general language understanding, it struggles to reliably apply the nuanced, multi-level criteria of the TOPIK rubric in a zero- or few-shot setting. The integration of explicit linguistic features acts as an essential structural guardrail, allowing the model to ground its semantic understanding in the formal properties of the text. The hybrid architecture is therefore not merely beneficial; it is indispensable for achieving accurate and reliable scoring.
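For readers who wish to reproduce this kind of comparison, the following sketch implements the three reported metrics (QWK, MAE, RMSE) in plain Python and checks the relative QWK gap from the two reported values. It uses the standard quadratic-weight formulation of Cohen's kappa; the function names and toy labels are assumptions, and the study's own evaluation code may differ.

```python
import math

def quadratic_weighted_kappa(y_true, y_pred, min_level=1, max_level=6):
    """Cohen's kappa with quadratic disagreement weights on an ordinal scale."""
    n = max_level - min_level + 1
    observed = [[0] * n for _ in range(n)]
    for t, p in zip(y_true, y_pred):
        observed[t - min_level][p - min_level] += 1
    total = len(y_true)
    row = [sum(r) for r in observed]                                  # true-level counts
    col = [sum(observed[i][j] for i in range(n)) for j in range(n)]   # predicted-level counts
    num = den = 0.0
    for i in range(n):
        for j in range(n):
            w = (i - j) ** 2 / (n - 1) ** 2
            num += w * observed[i][j]                # observed weighted disagreement
            den += w * row[i] * col[j] / total       # disagreement expected by chance
    # Note: den is zero if all true and predicted labels share one level.
    return 1.0 - num / den

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Toy labels (illustrative only).
y_true = [1, 2, 3, 4, 5, 6]
y_pred = [1, 2, 3, 4, 5, 5]
print(quadratic_weighted_kappa(y_true, y_pred), mae(y_true, y_pred), rmse(y_true, y_pred))

# Relative QWK gap between the reported hybrid (0.80) and GPT-4o (0.24) scores.
relative_gap = (0.80 - 0.24) / 0.24
print(round(relative_gap, 2))  # 2.33, i.e. roughly 233%
```

Because the weights grow with the square of the level difference, QWK penalizes a Level 6 essay scored as Level 3 far more heavily than one scored as Level 5, which is why it is preferred over plain accuracy for ordinal scales.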

3.3. Model Performance by Topic

To understand the model’s robustness and limitations, we analyzed the performance of our best-performing configuration (XGBoost with combined features) on a per-topic basis. Table 5 presents the evaluation metrics for each of the 20 distinct essay topics in the test set, revealing a significant variance in performance that correlates strongly with the nature of the prompt.
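A per-topic breakdown of the kind reported in Table 5 can be sketched by grouping predictions by prompt and applying a metric to each group. The helper below is a hypothetical illustration: it uses MAE for brevity, but any metric that accepts (true, predicted) pairs, such as QWK, could be passed in. The records are toy values, not the study's data.

```python
from collections import defaultdict

def mean_absolute_error(pairs):
    """MAE over a list of (true_level, predicted_level) pairs."""
    return sum(abs(t - p) for t, p in pairs) / len(pairs)

def metric_by_topic(records, metric=mean_absolute_error):
    """Group (topic, true_level, predicted_level) records and score each topic."""
    grouped = defaultdict(list)
    for topic, true_level, predicted_level in records:
        grouped[topic].append((true_level, predicted_level))
    return {topic: metric(pairs) for topic, pairs in grouped.items()}

# Toy records (illustrative only).
records = [
    ("Self-Introduction", 1, 1),
    ("Self-Introduction", 2, 2),
    ("Self-Introduction", 3, 2),
    ("What Matters Most in Life", 5, 3),
    ("What Matters Most in Life", 6, 4),
    ("What Matters Most in Life", 4, 4),
]

scores = metric_by_topic(records)
# List topics from weakest to strongest (highest MAE first).
for topic, value in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{topic}: MAE = {value:.2f}")
```

Sorting by the metric makes the weakest prompts immediately visible, which is the comparison the per-topic analysis relies on.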

These performance differences show a clear pattern. The model excels on concrete, descriptive, and narrative topics, but struggles significantly on abstract, argumentative, or highly personal topics.

The model achieved the highest agreement on topics such as “Self-Introduction” (QWK 0.797) and “Comprehensive Narrative” (QWK 0.660). These topics typically elicit responses with predictable structures and a relatively limited range of themes and vocabulary. For example, self-introductions are likely to discuss family, hobbies, and personal goals. This thematic consistency makes both the conventional linguistic features and the semantic similarity scores highly effective. Linguistic features can reliably model fluency and complexity in these familiar contexts, while semantic similarity scores accurately measure the relevance of content to the generated reference responses, which capture the common elements of the essays.

Conversely, the model’s performance deteriorated sharply on abstract and argumentative topics, with QWK scores near zero for “Using the Internet Correctly” (0.007) and “What Matters Most in Life” (0.029). This failure can be attributed to two key limitations of the feature set when applied to these prompts:

Failure of the Semantic Similarity Feature: For abstract topics, there is no single “right” answer. A high-quality essay can present any of several valid and logical arguments, whereas the reference answers generated by the LLM represent only twenty possible perspectives. Consequently, a student’s creative, nuanced, and unconventional argument, while potentially excellent, may be semantically distant from every reference text. In this case, the semantic similarity score becomes a misleading feature, penalizing originality and rewarding adherence to a narrow, arbitrary set of reference viewpoints. The model implicitly assumes that proximity to the LLM answers is a measure of quality, which does not hold for complex, open-ended questions.

Inadequacy of Conventional Linguistic Features: The quality of an argumentative or philosophical essay is determined by factors such as logical coherence, depth of insight, and strength of reasoning. The conventional linguistic features used in this model (e.g., sentence length, lexical diversity, error rate) fail to adequately reflect this deeper structure. An essay may be grammatically perfect and syntactically complex, yet logically flawed and superficial. Conversely, a profound argument may be expressed in simple and clear language. Because this model relies on these surface-level features, it fails to distinguish between well-structured and poorly structured arguments, and its scores are unreliable for topics where argumentation is the primary criterion for quality.

4. Discussion

This section interprets the empirical results presented in Section 3, connecting them to the working hypotheses and situating them within the broader landscape of AES research. We discuss the performance of the hybrid model, analyze its strengths and weaknesses across proficiency levels and topic types, and consider the wider implications for Korean L2 writing assessment and pedagogy.

4.1. Validation of the Hybrid System

The central hypothesis of this study, that integrating linguistic form with semantic content would yield a more accurate assessment, is strongly validated by our findings. The results clearly show that the hybrid model significantly outperforms models trained exclusively on either conventional or semantic features. This outcome empirically demonstrates that neither form nor content is sufficient in isolation; rather, their synergistic combination is essential for a robust evaluation that approximates human judgment.

This finding aligns with the “U-shaped” trajectory of AES development, where the field is returning to a sophisticated integration of engineered features with LLMs after a period dominated by opaque, end-to-end deep learning models. Our work contributes to a growing body of evidence showing that hybrid models, which combine the strengths of interpretable linguistic features with the deep semantic understanding of modern neural architectures, represent the current state of the art.

The catastrophic failure of the semantic-only model is a profoundly important diagnostic result. It reveals that while an LLM can generate high-quality reference texts, raw semantic similarity is an inadequate proxy for writing quality. It effectively measures topical relevance but fails to capture the quality of the discourse. A student could simply list relevant keywords in grammatically incoherent sentences and still achieve a high similarity score, showing that the semantic feature in isolation is blind to linguistic proficiency. The success of the hybrid model, therefore, stems from the linguistic features acting as a crucial “gatekeeper.” They establish a baseline of formal competence, after which the semantic feature can contribute a meaningful signal about content relevance.

4.2. Interpreting Model Performance Across Proficiency Levels

As detailed in Section 3, our model exhibits a clear performance gradient across proficiency levels. This pattern is consistent with the ceiling effect reported in AES research. Lower proficiency is characterized by frequent, easily detectable errors (e.g., particle misuse, simple syntax, restricted vocabulary), which our conventional linguistic features capture effectively. As proficiency increases, these negative cues become sparse. Distinguishing a very good essay (Level 5) from an excellent one (Level 6) depends more on higher-order aspects such as discourse organization, argument development, originality, and creativity, which are not fully represented in the current feature set.

Severe class imbalance in the NIKL corpus (2858 Level 1 essays vs. 328 Level 6 essays) is not merely a technical inconvenience. It is a primary causal factor that compounds the ceiling effect. Supervised models learn by discovering patterns associated with labels, and the scarcity of Level 6 examples prevents the learner from forming a reliable statistical profile of top-tier writing. In the current feature space, Level 5 and Level 6 essays tend to appear similar because both show low error rates and high lexical diversity, while the true discriminators lie in dimensions not captured by our features. The combination of a limited feature representation for advanced skills and a sparse sample of high-level essays produces a compounding limitation. The model lacks both the tools (features) and the experience (data) needed to make fine-grained distinctions at the top of the scale. This issue reflects a broader challenge in L2 AES, namely, the difficulty of assembling large, well-annotated corpora of high-proficiency learner writing, and it exemplifies the imbalanced learning problem in which models are biased toward the majority class.

4.3. Implications for Korean L2 Writing Assessment and Pedagogy

This study makes a significant contribution by developing and validating one of the first hybrid AES systems specifically for Korean L2 writing. It addresses a notable gap in a field historically dominated by English and tackles the unique challenges posed by Korean’s agglutinative morphology. By training on the official NIKL corpus and aligning with the TOPIK scale, our system provides a valuable and relevant benchmark for future research in this area.

The system’s topic-dependent performance profile dictates its appropriate pedagogical use. It is a highly promising tool for providing formative feedback to beginner and intermediate learners (Levels 1–4) engaged in descriptive and narrative writing tasks. More precisely, it serves as a strong baseline for foundational writing where topical relevance is a key signal of quality. The immediate, consistent feedback on linguistic form can help learners notice errors, facilitate revision, and promote autonomy, thereby alleviating some of the grading burden on instructors. Conversely, the system is not suitable for the high-stakes evaluation of advanced, open-ended argumentative writing, where its reliance on topical similarity can penalize originality.

The system’s limitations on abstract tasks also indicate a necessary reframing for the field. The objective should not be to construct a single all-purpose AES that replaces human graders across contexts. A more viable direction is a toolkit of specialized systems. Our model constitutes a successful prototype within this toolkit, functioning as a “foundational writing tutor” for Korean L2 learners. Such targeted deployment supports responsible integration of AI in education and positions these systems as aids rather than substitutes for human educators.

5. Limitations and Future Work

This study demonstrates the promise of a hybrid approach to AES for Korean L2 writing, but it also has limitations that motivate a clear agenda for future work. This section transparently discusses the current model’s constraints and outlines several promising avenues for subsequent research.

5.1. Limitations

While our hybrid system represents a significant advance, its performance and methodology are subject to several important limitations that must be acknowledged.

A primary limitation, evident from our results, is the model’s diminished accuracy when evaluating high-level writing (TOPIK Levels 5–6). This “ceiling effect” arises because the current feature set, while effective at identifying foundational linguistic competence, is not equipped to capture the higher-order qualities that distinguish “very good” writing from “excellent” writing. These qualities, such as rhetorical sophistication, argumentative nuance, logical depth, and originality, are not easily quantified by metrics like sentence length or lexical diversity. The model can reliably identify well-formed prose but struggles to assess the quality of the ideas within it.

The use of cosine similarity with a finite set of LLM-generated reference essays has inherent flaws. Although we generate twenty distinct essays per prompt to create a broad semantic space, this approach primarily measures topical relevance, the degree of overlap in vocabulary and concepts, rather than the logical coherence or quality of an argument. Consequently, an exceptionally creative or unconventional essay that is well-argued but deviates from the semantic space of the reference texts may be unfairly penalized. The semantic feature, in its current form, is a proxy for topicality, not a true measure of content quality.
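The behavior described above can be illustrated with a small sketch that scores an essay embedding against a set of reference embeddings using cosine similarity. Two points are assumptions rather than details confirmed in this section: the embeddings are tiny hand-made vectors standing in for real sentence or document embeddings, and the per-reference similarities are aggregated with `max` (a mean would be an equally plausible choice).

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def semantic_similarity_feature(essay_vec, reference_vecs, aggregate=max):
    """Aggregate the essay's similarity to each reference (aggregation is assumed)."""
    return aggregate(cosine_similarity(essay_vec, ref) for ref in reference_vecs)

# Hypothetical 3-dimensional "embeddings" (real ones would have hundreds of dimensions).
references = [[1.0, 0.0, 0.0], [0.8, 0.6, 0.0]]   # stand-ins for the LLM-generated references
conventional = [0.9, 0.1, 0.0]                     # stays close to the reference space
unconventional = [0.0, 0.0, 1.0]                   # well argued, but in a direction no reference covers

print(semantic_similarity_feature(conventional, references))
print(semantic_similarity_feature(unconventional, references))  # 0.0
```

The second essay receives the lowest possible score purely because of where it sits relative to the references, which is the sense in which the feature measures topicality rather than quality.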

The performance of the model is constrained by the skewed data distribution within the NIKL corpus, which contains far fewer examples of high-proficiency essays than beginner-level ones. This severe class imbalance means the model has insufficient data from which to learn the defining statistical characteristics of top-tier writing, thereby exacerbating the ceiling effect and biasing its predictions toward the more heavily represented lower and middle proficiency levels.

Finally, the current study does not include a fairness audit to assess whether the model exhibits performance disparities based on learners’ L1 backgrounds. Given that the NIKL corpus includes writers from 99 native language backgrounds, it is possible that linguistic transfer from different native languages could influence the model’s predictions. This represents an unexamined potential for bias that must be acknowledged as a limitation.

5.2. Future Work

The limitations identified above provide a clear and ambitious roadmap for future research. We have identified four key areas for development.

The most critical next step is to move beyond simple semantic similarity. A promising direction is the integration of features derived from dynamic entity embeddings and knowledge graphs to model the logical structure of an essay by representing its claims and evidence as a graph. This could be complemented by incorporating argument-mining techniques to detect, classify, and evaluate claims, premises, and their logical relations. Such a framework would represent a significant leap from measuring what an essay is about to how well it argues its point.

To better capture the specific challenges faced by Korean L2 learners, the linguistic feature set should be expanded to include more sophisticated, error-specific features. Given that particle errors are among the most frequent and persistent mistakes for learners of Korean, integrating a dedicated module for Korean particle error detection would be a particularly high-impact addition. Additionally, incorporating computational measures of discourse coherence, such as entity-grid models, would help capture the higher-order skills that define advanced writing.

To mitigate the problem of data imbalance at higher proficiency levels, future research should explore advanced data augmentation techniques. This could include methods such as back-translation or the controlled, LLM-based synthesis of new, high-quality L2 essays that exhibit the characteristics of TOPIK Levels 5 and 6. Furthermore, adapting feature-space synthesizers such as SMOTE or ADASYN to the hybrid representation could help create more balanced training sets.
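The core idea behind feature-space synthesizers such as SMOTE is to create new minority-class points by interpolating between an existing minority sample and one of its nearest minority neighbors. The sketch below is a simplified, SMOTE-style illustration in plain Python, not the reference algorithm or a library API; the function name, the two-dimensional feature vectors, and the parameter defaults are all assumptions. In practice, an established implementation such as the one in the imbalanced-learn package would normally be used.

```python
import random

def smote_like_oversample(minority, n_new, k=2, seed=0):
    """Generate n_new synthetic points by interpolating toward near neighbours.

    Simplified SMOTE-style sketch: requires at least two minority samples.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base sample (excluding itself)
        neighbours = sorted(
            (x for x in minority if x is not base),
            key=lambda x: sum((a - b) ** 2 for a, b in zip(base, x)),
        )[:k]
        neighbour = rng.choice(neighbours)
        lam = rng.random()  # interpolation weight in [0, 1)
        synthetic.append([a + lam * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Hypothetical Level 6 feature vectors, e.g. [lexical diversity, semantic similarity].
level6 = [[0.90, 0.80], [0.85, 0.75], [0.95, 0.90]]
synthetic = smote_like_oversample(level6, n_new=5)
print(len(synthetic))  # 5
```

Every synthetic point lies on a line segment between two real minority samples, so this approach densifies the existing Level 6 region but cannot introduce characteristics of top-tier writing that the current features do not already encode.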

A responsible AES system must be both fair and transparent. A crucial direction for future work is to conduct a fairness audit to investigate whether the model exhibits performance disparities across learners from different L1 backgrounds, ensuring the system is equitable for all users. Additionally, to enhance the system’s pedagogical value, deeper interpretability analyses using methods like SHAP or LIME should be conducted. Generating instance-level explanations that link a score to specific textual evidence would move the system from a simple grading tool to a powerful diagnostic and feedback generation engine for learners.

6. Conclusions

The rapid global growth of Korean language education has created an urgent demand for scalable, objective, and consistent writing assessment tools. This study responds by designing, implementing, and evaluating a hybrid Automated Essay Scoring system for Korean L2 learners. The approach integrates two complementary feature sets: a robust set of conventional linguistic indicators that capture writing form, and a semantic feature, derived from the cosine similarity between a student essay and LLM-generated reference responses, that evaluates content. Experiments on the official NIKL Korean Language Learner Corpus yield four principal findings. First, the proposed hybrid system achieves QWK = 0.80 and significantly outperforms baselines that use only linguistic or only semantic features, supporting the hypothesis that accurate assessment requires joint evidence on form and content. Second, the system attains high accuracy for beginner and intermediate levels but shows a ceiling effect at the highest levels, a limitation exacerbated by severe class imbalance. Third, performance is highly sensitive to the essay prompt and is stronger on concrete, descriptive topics than on abstract, argumentative topics, where reliance on a limited set of reference responses can penalize originality and creativity. Finally, the evidence supports the use of the system for formative assessment in foundational L2 writing contexts.

This study makes four contributions. It presents a novel hybrid AES architecture for Korean L2 writers, introduces the use of large language models to generate a semantic benchmark, provides a comprehensive evaluation against the official TOPIK proficiency scale, and empirically demonstrates the advantage of a hybrid approach. Although the current model has limitations, particularly in assessing argumentation and addressing equity, the work constitutes a practical advance and establishes a foundation for future research on more nuanced and educationally useful AI-based tools for Korean-language learners worldwide.