Abstract

Topic modeling of large news streams is widely used to reconstruct economic and political narratives, which requires coherent topics with low lexical overlap while remaining interpretable to domain experts. We propose TF-SYN-NER-Rel, a structural–semantic term weighting scheme that extends classical TF-IDF by integrating positional, syntactic, factual, and named-entity coefficients derived from morphosyntactic and dependency parses of Russian news texts. The method is embedded into a standard Latent Dirichlet Allocation (LDA) pipeline and evaluated on a large Russian-language news corpus from the online archive of Moskovsky Komsomolets (over 600,000 documents), with political, financial, and sports subsets obtained via dictionary-based expert labeling. For each subset, TF-SYN-NER-Rel is compared with standard TF-IDF under identical LDA settings, and topic quality is assessed using the C_v coherence metric. To assess robustness, we repeat model training across multiple random initializations and report aggregate coherence statistics. Quantitative results show that TF-SYN-NER-Rel improves coherence and yields smoother, more stable coherence curves across the number of topics. Qualitative analysis indicates reduced lexical overlap between topics and clearer separation of event-centered and institutional themes, especially in political and financial news. Overall, the proposed pipeline relies on CPU-based NLP tools and sparse linear algebra, providing a computationally lightweight and interpretable complement to embedding- and LLM-based topic modeling in large-scale news monitoring.

1. Introduction

One of the key unresolved problems of contemporary topic modeling is the simultaneous achievement of high topic coherence, reduced token overlap between clusters, and preservation of interpretability for domain experts. Topic modeling is increasingly used to analyze large flows of textual data in economics, finance, and management; however, the quality of the resulting topics and their interpretability largely depend on how documents are represented in the feature space. Traditional term weighting schemes based on frequency and positional characteristics take into account neither the syntactic structure of utterances, nor role relations between entities, nor relational links between documents and sources, which leads to mixing the lexicon of different storylines within the same topics and reduces their coherence in a substantive rather than purely formal–statistical sense. As a result, a gap arises between formal quality indicators of models (including traditional coherence metrics) and their real explanatory power in applied tasks related to the analysis of the information environment and economic narratives: topics may be statistically acceptable, but insufficiently clear and stable from the standpoint of expert interpretation. Under these conditions, the development of structural–semantic weighting schemes that integrate statistical, syntactic, factual, and graph-based features and are thus aimed at increasing topic coherence and reducing token overlap between clusters while preserving interpretability becomes particularly important. As the main integral topic quality metric used in this study, we employ the classical topic coherence measure $C_v$, which is based on co-occurrence statistics of terms and does not require constructing dense co-occurrence matrices for the entire vocabulary, making it computationally feasible for evaluating models on massive corpora.

The TF-SYN-NER-Rel approach developed in this work continues the line of research on statistical term weighting in information retrieval. Starting from the classical notions of term specificity and its influence on retrieval quality [1,2], generalized evolutionary schemes for choosing weights [3], new variants of TF-IDF [4], regularization with regard to relational links between terms [5], and models using random walks and term position in a document [6,7] have been proposed. Additionally, temporal relevance of documents [8], synonymic substitutions [9], and term position in the text [10] have been taken into account. Taken together, these studies show that the simple frequency TF component must be complemented with structural and semantic factors, which conceptually aligns with the rationale of TF-SYN-NER-Rel and is directly related to increasing topic coherence and reducing overlap of key tokens between topics.

At the level of topic analysis, the starting point is the probabilistic Latent Dirichlet Allocation (LDA) model [11]. Numerous modifications of LDA and related models are systematized in surveys on topic modeling [12], including works devoted to multi-label topic models [13] and applied analysis of social media texts and online communications [14]. In these studies, topic modeling is treated as a basic tool for extracting latent structure from large text corpora, while emphasizing the need for topics to be coherent, with low lexical overlap between topics, and interpretable for experts in the relevant subject areas. In the present study, the fulfilment of these requirements is quantitatively evaluated using the classical topic coherence measure $C_v$, which ensures comparability of the obtained results with prevailing empirical practice in topic modeling and does not require resorting to computationally heavy embedding-based or neural coherence metrics.

Early work on topic quality assessment proposed automatic coherence metrics based on statistical relationships between words in the corpus and their co-occurrence [15,16,17,18]. As a result, a family of topic coherence metrics emerged, within which the classical coherence value modification has de facto become the standard for quantitative evaluation of topic models in applied research, as it combines substantive interpretability with moderate computational costs and the ability to operate on large sparse corpora. This laid the foundations for formalized analysis of coherence; however, such metrics by themselves do not guarantee reduced token overlap between different topics. Subsequent studies broadened the range of indicators by analyzing the relationship between coherence and topic interpretability, the influence of the underlying corpus (full text versus abstracts), and the choice of topic descriptors [19,20,21,22]. The results of these works underline that even with high values of formal coherence scores, expert interpretability of topics and the clarity of their substantive boundaries remain a separate problem requiring special procedures for configuring the feature space. The problem of model stability and choosing the number of topics is discussed in the context of partition stability and parameter sensitivity [23]. Stability of the partition and robustness to parameter settings are directly related to the practically observed sensitivity of the cluster structure to minor changes in the corpus, which leads to “floating” topics and, as a consequence, complicates expert interpretation and the comparability of results over time. Bibliometric and comparative studies document the rapid growth in the number of publications on topic modeling, the diversity of application domains, and the heterogeneity of quality criteria used [24,25,26,27]. 
This heterogeneity of quality criteria, combined with the growing volume of work, exacerbates the problem of consistent comparison of models in terms of coherence and the degree of lexical overlap between identified topics. Against the backdrop of emerging neural and embedding-based topic models [28,29,30], including approaches that operate in contextual embedding spaces such as Top2Vec, the Combined Topic Model (CTM) and BERTopic [29], a number of studies show that automatic coherence metrics only partially correlate with expert assessments of interpretability and that estimating the reliability of topic-modeling results often remains problematic [31,32,33]. In this context, the use of the classical topic coherence measure $C_v$
as the main quality criterion appears to be a compromise between established practice, ease of implementation, and computational efficiency on large, sparse corpora. At the same time, neural architectures themselves, including large language models (LLMs) and related Transformer-based solutions, are typically characterized by high computational complexity, require substantial memory resources and specialized hardware, and therefore cannot always be used as a routine topic modeling tool for monitoring large streams of textual data. Building on these advances, recent work has also explored LLM-driven topic extraction pipelines, in which large language models are used to cluster documents, generate topic labels or refine topic descriptors, further increasing computational demands and dependence on specific model implementations [28,29,30,31,32,33]. This stimulates the development of combined validation schemes that integrate machine-based indicators with expert evaluation procedures [28,29,30,31,32,33]. For the purposes of the present study, it is particularly important that such combined validation schemes are oriented not only toward formally increasing coherence, but also toward reducing token overlap between topics and enhancing result transparency for experts, which directly imposes requirements on the structure of the underlying feature space.

The objective of this paper is to investigate whether structural–semantic term weighting can improve topic coherence and reduce token overlap while preserving expert interpretability in LDA-based modeling of Russian news. We introduce TF-SYN-NER-Rel as a general structural–semantic term weighting scheme that can, in principle, be combined with a wide range of topic modeling algorithms and applied to different languages and domains. In this paper, however, we focus on large-scale corpora of articles from the Russian newspaper Moskovsky Komsomolets and treat this setting as a large-scale empirical study of structurally informed topic modeling in a real-world news monitoring environment. Specifically, we address the following research questions:

(R1) Does TF-SYN-NER-Rel systematically increase topic coherence compared with classical TF-IDF across different news domains (political, financial, sports) within a common LDA configuration?

(R2) Does structural–semantic weighting reduce lexical overlap between topics and lead to more interpretable, actor- and event-centered topic structures as judged by domain experts?

(R3) Can these gains be achieved within a computationally lightweight pipeline that remains practical for large-scale monitoring of Russian news streams on non-specialized hardware?

A deeper consideration of utterance structure, needed to increase substantive topic coherence and to explicitly identify actors and events from the expert’s perspective, can be achieved through integration of Semantic Role Labeling (SRL). A recent SRL overview [34] and work on deep neural architectures [35] rely on the conclusion about the key role of syntactic analysis for correct extraction of predicate–argument structures [36]. For term weighting tasks, this opens up the possibility of moving from purely frequency-based schemes to accounting for the role and syntactic functions of a word in a sentence, which in TF-SYN-NER-Rel is implemented via syntactic and factual coefficients and contributes to the identification of clearer event cores within topics.

In applied tasks of analyzing digital text streams, topic modeling and structural–semantic term weighting are viewed as components of broader big data analytics systems [37]. In such systems, special attention is paid to ensuring that topic clusters reflect stable, coherent economic storylines and enable experts to detect significant changes in the structure of information flows. In the financial domain, textual and event-based features are linked to regime shifts in markets and changes in entropy characteristics of price dynamics [38]. Studies on the digital economy and the management of a firm’s information capital, as well as research on “natural digital information” in marketing, show that the state of the information environment can be described through topical and structural characteristics of corporate and market texts [39,40]. For practical use of such descriptions in managerial and financial models, it is necessary to minimize token overlap between topics; otherwise, the interpretation of shifts in the information environment becomes ambiguous. To increase the stability and informativeness of the feature space, constructions of associative tokens and topic hyperspaces, graph models of “semantic entanglement” of sources, and structural–semantic schemes for assessing the influence of the information environment on financial indicators have been proposed [41,42,43,44]. The transition to associative and graph-based representations of topics is thus directly related to the task of increasing structural coherence of the topical space and its suitability for expert analysis. Substantively, this is consistent with institutional analysis of economic narratives [45] and the concept of “narrative economics,” according to which the propagation of stories and narratives significantly affects macroeconomic dynamics [46].

Integration of textual features into econometric and forecasting models requires robust regression methods under conditions of high dimensionality and sparsity, including modifications of partial least squares and generalized ridge regression [47,48]. The quality of topical features, including their coherence and low degree of mutual overlap, directly affects the stability and interpretability of the resulting regression models. To describe the spread of information across networks of documents and sources, models of random walks and Laplacian diffusion are used [49,50]. Vector representations of words and documents obtained in GloVe and word2vec models [51,52] serve as standard building blocks for neural topic models and for assessing semantic similarity between terms, which makes them a natural basis for embedding-based modifications of the weighting schemes used in TF-SYN-NER-Rel. At the same time, the use of embedding representations in combination with computationally lightweight weighting schemes such as TF-SYN-NER-Rel makes it possible to shift part of the load away from computationally heavy LLM-class models to the stage of feature space construction.

Thus, the current state of research imposes the following requirements on the feature space for topic modeling: (1) accounting for term position, temporal relevance, and contextual substitutions; (2) integration of syntactic and role structure of text; (3) the possibility of graph-based and associative feature enrichment; (4) compatibility with neural and embedding-based models at reasonable computational cost; and (5) suitability for subsequent econometric and narrative interpretation in terms of coherence, low lexical overlap between topics, and expert interpretability. The proposed TF-SYN-NER-Rel model satisfies these requirements by replacing flat term frequency with a structural–semantic metric and forming a more interpretable and coherent feature space for topic analysis, which reduces overlap of key tokens between clusters without resorting to computationally heavy large language model-based approaches and, in particular, leads to higher values of the classical topic coherence measure $C_v$ on the corpora used in this study.

2. Materials and Methods

All experiments in this paper are conducted on a single large-scale corpus of Russian-language news articles from the online archive of the newspaper Moskovsky Komsomolets (MK). The corpus comprises more than 600,000 online articles published up to October 2025, which were collected directly from the public MK website using an iterative web parser and stored in a single CSV file (Нoвoсти_МК_full_финал_2.csv) containing full article texts and associated metadata.

In the present study, we use only this MK corpus; no other newspapers, languages, or domains are considered in the experiments. The thematic subsets (political, financial, and sports news) were obtained via dictionary-based manual labeling, i.e., by applying expert-curated keyword lexicons to the MK articles to assign them to the corresponding domain. During preprocessing, we applied standard topic-modeling cleaning steps, including removal of high-frequency functional and auxiliary lemmas (e.g., the Russian equivalents of “to be”), along with additional domain-specific filtering where appropriate. The full corpus (or its public distribution package, depending on copyright constraints) and all scripts used for preprocessing, term weighting, and topic modeling are publicly available in the GitHub repository “TF-SYN-NER-Rel (v.1.0.0)” (https://github.com/polytechinvest/TF-SYN-NER-Rel accessed on 10 December 2025), which also contains the universal stopword list and an example of domain-specific filter lists (e.g., for financial news). Details on data access and availability of corpus artifacts are provided in the Data Availability Statement.
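The dictionary-based assignment of articles to thematic subsets can be illustrated with a minimal sketch. The lexicons, the `min_hits` threshold, and the rule of choosing the domain with the most matches are assumptions made for illustration; the expert-curated lists and the exact assignment rule are not reproduced here.

```python
from collections import Counter

# Hypothetical mini-lexicons standing in for the expert-curated keyword lists.
LEXICONS = {
    "political": {"election", "deputy", "party", "president"},
    "financial": {"ruble", "dollar", "tariff", "exchange"},
    "sports": {"football", "champion", "medal", "match"},
}


def label_domain(lemmas, lexicons=LEXICONS, min_hits=2):
    """Return the domain whose lexicon matches the most lemmas.

    Returns None when the best domain has fewer than `min_hits` matches
    (the threshold is an illustrative assumption).
    """
    hits = Counter({
        domain: sum(lemma in words for lemma in lemmas)
        for domain, words in lexicons.items()
    })
    domain, count = hits.most_common(1)[0]
    return domain if count >= min_hits else None


article = ["president", "sign", "decree", "before", "election"]
label = label_domain(article)
```

In this toy case the article matches two political keywords and is assigned to the political subset, while a text with no keyword matches stays unlabeled.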

The aim of the next section is to provide a formal justification for the proposed TF-SYN-NER-Rel method, designed to mitigate the syntactic blindness of the classical TF-IDF model, i.e., its insensitivity to syntactic structure. The method combines the statistical robustness of TF-IDF with linguistic enrichment that takes into account syntactic structure and the factual content of the text.

NER analysis remains part of corpus preprocessing: it is used to merge multi-word named entities (for example, “State Corporation Rosatom”) into single tokens for subsequent processing. In addition, NER results are incorporated into term weighting through a dedicated NER-based coefficient.
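A minimal sketch of the entity-merging step is given below. It assumes that an NER tagger has already returned token spans as `(start, end)` pairs with an exclusive end; the function name, the span format, and the underscore joiner are illustrative choices, not the repository's actual interface.

```python
def merge_entities(tokens, entity_spans, joiner="_"):
    """Collapse each (start, end) token span into a single token.

    Spans are assumed to be non-overlapping, with `end` exclusive.
    """
    span_end = {start: end for start, end in entity_spans}
    merged, i = [], 0
    while i < len(tokens):
        end = span_end.get(i)
        if end is not None and end > i:
            # Join all tokens of the entity into one vocabulary item.
            merged.append(joiner.join(tokens[i:end]))
            i = end
        else:
            merged.append(tokens[i])
            i += 1
    return merged


tokens = ["State", "Corporation", "Rosatom", "signed", "a", "contract"]
spans = [(0, 3)]  # hypothetical output of an NER tagger
merged = merge_entities(tokens, spans)
```

After merging, the entity is counted as one term, so its parts no longer contribute separately to term frequencies.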

In our method, term weights depend on four coefficients: a positional coefficient, a syntactic coefficient, a factual coefficient, and an NER coefficient that amplifies the contribution of named entities.

The classical TF-IDF (Term Frequency–Inverse Document Frequency) assigns each term $t$ in a document $d$ a weight:

$$w_{\text{TF-IDF}}(t, d) = \mathrm{tf}(t, d) \cdot \mathrm{idf}(t),$$

where $\mathrm{tf}(t, d)$ is the frequency of term $t$ in document $d$,

$$\mathrm{idf}(t) = \log \frac{N}{\left|\{d \in D : t \in d\}\right|},$$

and $N$ is the total number of documents in the corpus $D$. This model is simple and effective, but it completely ignores syntactic and semantic-role relations. The two sentences “Company A bought Company B” and “Company B bought Company A” will obtain almost identical vector representations, which makes TF-IDF syntactically “blind”.
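The syntactic blindness can be made concrete with a small sketch. It uses raw term counts and an unsmoothed logarithmic IDF, which is one common variant assumed here for illustration; in this plain bag-of-words setting the two sentences receive exactly the same vector.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one {term: weight} dict per tokenized document.

    Weight = raw term count * log(N / document frequency).
    """
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({t: counts[t] * math.log(n_docs / doc_freq[t]) for t in counts})
    return vectors


docs = [
    "company_a bought company_b".split(),
    "company_b bought company_a".split(),
    "the ruble fell against the dollar".split(),  # unrelated filler document
]
vec_ab, vec_ba, _ = tfidf_vectors(docs)
same = vec_ab == vec_ba  # word order is discarded, so the vectors coincide
```

Who bought whom is invisible to the representation, which is the gap that role-aware weighting is meant to address.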

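The collection of document vectors $\mathbf{x}_d$, $d \in D$, forms a feature matrix $X$ of size $|D| \times |V|$, where $V$ is the vocabulary:

$$X = \left[x_{d,t}\right]_{d \in D,\ t \in V},$$

where each element $x_{d,t}$ defines the TF-IDF weight of term (token) $t$ in document $d$. This matrix is the standard input to topic models, but it does not encode any linguistic structure.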

The central hypothesis of the TF-SYN-NER-Rel method is that the importance of a term is determined not only by its frequency, but also by its structural and semantic role. Each occurrence of a token $t_i$ receives an individual weight $w(t_i)$, which is computed as the product of four coefficients: a positional coefficient, a syntactic coefficient, a factual coefficient, and an NER-based coefficient:

$$w(t_i) = k_{\mathrm{pos}}(t_i) \cdot k_{\mathrm{syn}}(t_i) \cdot k_{\mathrm{fact}}(t_i) \cdot k_{\mathrm{NER}}(t_i).$$

The positional coefficient $k_{\mathrm{pos}}(t_i)$ reflects the internal structure of a news article based on the journalistic “inverted pyramid” principle, according to which key information is concentrated at the beginning of the text.

This distribution increases the weight of words that define the main topic and attenuates the influence of secondary contexts.

The syntactic coefficient $k_{\mathrm{syn}}(t_i)$ reduces role ambiguity by assigning higher weights to words depending on their syntactic function in the sentence.

Thus, the predicate receives the highest weight as the action core, while actors and action targets are assigned slightly lower but still substantial priority.

The factual coefficient $k_{\mathrm{fact}}(t_i)$ amplifies tokens that belong to extracted facts of the form “subject–action–object”. Based on the dependency tree, for each sentence we construct a set of facts $F$ that represents the factual core of the document.

This adjustment makes the model more sensitive to substantive events and increases the weight of the semantic cores of sentences.
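The fact-extraction idea can be sketched on a toy dependency parse. The sketch assumes Universal Dependencies relation labels (`nsubj`, `obj`) and a boost value of 1.3; both are illustrative assumptions, since neither the parser's label set nor the actual coefficient values are specified in this passage.

```python
from typing import NamedTuple


class Token(NamedTuple):
    idx: int     # 1-based position in the sentence
    lemma: str
    head: int    # index of the syntactic head, 0 for the root
    deprel: str  # dependency relation label (UD-style, assumed)


def extract_facts(sentence):
    """Return (subject, action, object) index triples for one parsed sentence."""
    facts = []
    for pred in sentence:
        subjects = [t.idx for t in sentence if t.head == pred.idx and t.deprel == "nsubj"]
        objects = [t.idx for t in sentence if t.head == pred.idx and t.deprel == "obj"]
        facts.extend((s, pred.idx, o) for s in subjects for o in objects)
    return facts


def factual_coefficient(token, facts, boost=1.3):
    """Hypothetical rule: boost tokens inside any fact, keep the rest at 1.0."""
    return boost if any(token.idx in fact for fact in facts) else 1.0


sentence = [
    Token(1, "company_a", 2, "nsubj"),
    Token(2, "buy", 0, "root"),
    Token(3, "company_b", 2, "obj"),
    Token(4, "yesterday", 2, "advmod"),
]
facts = extract_facts(sentence)
coefficients = [factual_coefficient(t, facts) for t in sentence]
```

Here the subject, predicate, and object form one fact and are boosted, while the adverbial modifier keeps a neutral weight.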

The NER coefficient $k_{\mathrm{NER}}(t_i)$ takes into account whether a token belongs to a recognized named entity. For each occurrence $t_i$ we define the rule:

$$k_{\mathrm{NER}}(t_i) = \begin{cases} 1.5, & \text{if } t_i \text{ is part of a named entity}, \\ 1, & \text{otherwise}. \end{cases}$$

Thus, tokens that are parts of named entities (companies, organizations, geographic locations, persons) receive a moderate weight boost. Choosing the coefficients 1 and 1.5 increases the importance of such entities without drastically distorting the frequency distribution within the document.

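The aggregate frequency of a term in a document is computed as the sum of the weights of all its occurrences:

$$\mathrm{TF}_{\mathrm{new}}(t, d) = \sum_{t_i \in \mathrm{Occ}(t, d)} w(t_i),$$

where $\mathrm{Occ}(t, d)$ denotes the set of all occurrences of term $t$ in document $d$.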
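A minimal sketch of the occurrence-level weighting and its aggregation follows. The NER values (1.5 and 1) are taken from the text; the positional, syntactic, and factual values in the example are placeholders assumed for illustration, and the class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Occurrence:
    term: str
    k_pos: float    # positional coefficient (placeholder value)
    k_syn: float    # syntactic coefficient (placeholder value)
    k_fact: float   # factual coefficient (placeholder value)
    is_entity: bool


def ner_coefficient(is_entity):
    # 1.5 for tokens inside a named entity, 1 otherwise, as stated in the text.
    return 1.5 if is_entity else 1.0


def occurrence_weight(occ):
    """Product of the four coefficients for a single occurrence."""
    return occ.k_pos * occ.k_syn * occ.k_fact * ner_coefficient(occ.is_entity)


def tf_new(occurrences):
    """Sum occurrence weights per term within one document."""
    totals = defaultdict(float)
    for occ in occurrences:
        totals[occ.term] += occurrence_weight(occ)
    return dict(totals)


doc = [
    Occurrence("rosatom", k_pos=2.0, k_syn=1.0, k_fact=1.0, is_entity=True),
    Occurrence("rosatom", k_pos=1.0, k_syn=1.0, k_fact=1.0, is_entity=True),
    Occurrence("contract", k_pos=1.0, k_syn=1.0, k_fact=1.0, is_entity=False),
]
weights = tf_new(doc)
```

With all coefficients set to 1 and no entities, the aggregate reduces to the raw term count, so classical term frequency is a special case of this scheme.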

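The final weight of a term in a document follows the TF-IDF structure, but with a modified local component:

$$w_{\mathrm{new}}(t, d) = \mathrm{TF}_{\mathrm{new}}(t, d) \cdot \mathrm{idf}(t),$$

where $\mathrm{TF}_{\mathrm{new}}(t, d)$ is the aggregate structural frequency defined above and $\mathrm{idf}(t)$ is the inverse document frequency of the classical scheme.

The set of new vectors forms an extended feature matrix $X_{\mathrm{new}}$:

$$X_{\mathrm{new}} = \left[x^{\mathrm{new}}_{d,t}\right],$$

where $x^{\mathrm{new}}_{d,t} = w_{\mathrm{new}}(t, d)$.

This matrix is used as input for topic models, providing structural enrichment of the feature space.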
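The construction of the extended matrix can be sketched as follows. The sketch assumes an unsmoothed IDF of the form log(N / df) and stores each document row as a sparse `{column: weight}` dictionary; the function name and the storage format are illustrative assumptions rather than the published implementation.

```python
import math


def build_weight_rows(tf_new_per_doc):
    """Combine per-document structural frequencies with a corpus-level IDF.

    Input: list of {term: aggregate structural frequency} dicts, one per document.
    Output: sorted vocabulary and one sparse {column index: weight} row per document.
    """
    n_docs = len(tf_new_per_doc)
    doc_freq = {}
    for doc in tf_new_per_doc:
        for term in doc:
            doc_freq[term] = doc_freq.get(term, 0) + 1
    # Unsmoothed IDF; the exact variant is an assumption of this sketch.
    idf = {t: math.log(n_docs / df) for t, df in doc_freq.items()}
    vocab = sorted(doc_freq)
    column = {t: j for j, t in enumerate(vocab)}
    rows = [{column[t]: w * idf[t] for t, w in doc.items()} for doc in tf_new_per_doc]
    return vocab, rows


corpus = [
    {"rosatom": 4.5, "contract": 1.0},
    {"contract": 2.0, "ruble": 1.0},
]
vocab, rows = build_weight_rows(corpus)
```

Because only the local component changes, the result has the same shape and sparsity pattern as a classical TF-IDF matrix and can be passed to the same topic-modeling code.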

The TF-SYN-NER-Rel method preserves the statistical foundation of TF-IDF, but replaces flat term frequency with a structural–semantic metric that reflects the distribution of roles in the text. This approach enables topic models to distinguish between actors and action targets, focus on the semantic core of events, and increase topic interpretability. Experimental results show that using the four weighting components (positional, syntactic, factual, and named-entity) provides consistent improvements in topic coherence as measured by $C_v$ on thematic corpora and enhances the substantive structural organization of the resulting topics.

3. Results

To evaluate the effectiveness of the TF-SYN-NER-Rel model, we compared it with classical TF-IDF in the task of topic modeling of news texts. Four corpora were considered: the combined news stream (general, political, financial, sports, and other news combined into a single corpus) and three subcorpora consisting of financial, political, and sports news, respectively.

In all experiments, the same LDA configuration was used; the only difference was the construction of the document–term matrix: either classical TF-IDF or the proposed TF-SYN-NER-Rel weighting scheme incorporating positional, syntactic, factual, and NER-based coefficients. Model quality was assessed using the $C_v$ metric. For each corpus, we computed the dependence of $C_v$ on the number of topics, and the optimal number of topics K was selected based on these curves.

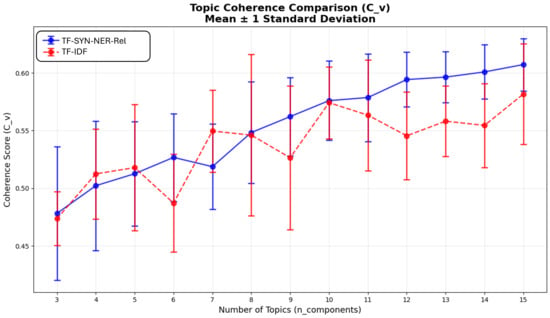

In addition to these corpus-level experiments, we performed a robustness analysis to quantify how sensitive topic coherence is to random LDA initialization and to assess the method’s stability across repeated runs. For this purpose, we drew a reproducible random subset of 15,000 articles from the combined MK news corpus using a fixed sampling seed and constructed two document–term matrices on this sample: a standard TF-IDF representation and the TF-SYN-NER-Rel representation. For each number of topics K and for each weighting scheme, LDA was fitted ten times with different random seeds, and the topic coherence $C_v$ was computed for every run. We then aggregated these values across seeds and, for each K, report the mean coherence and its standard deviation. The resulting mean $C_v$ curves with error bars corresponding to ±1 standard deviation are shown in Figure 1.
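The aggregation across seeds can be sketched as below. LDA fitting and coherence computation are left outside the sketch; the input scores are placeholder numbers, not results from this study, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the estimator is not specified.

```python
from statistics import mean, stdev


def aggregate_coherence(runs):
    """Group (K, seed, coherence) records by K and return {K: (mean, std)}.

    Uses the sample standard deviation, so each K needs at least two runs.
    """
    by_k = {}
    for k, _seed, score in runs:
        by_k.setdefault(k, []).append(score)
    return {k: (mean(scores), stdev(scores)) for k, scores in sorted(by_k.items())}


# Placeholder scores for two values of K and two seeds each.
runs = [
    (3, 0, 0.50),
    (3, 1, 0.54),
    (4, 0, 0.60),
    (4, 1, 0.60),
]
summary = aggregate_coherence(runs)
```

Each entry of `summary` supplies one point and one error bar of a coherence curve of the kind described above.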

Figure 1.

Topic coherence comparison between TF-SYN-NER-Rel and classical TF-IDF under repeated LDA random initializations. Mean $C_v$ coherence is shown as a function of the number of topics K on a reproducible random subset of 15,000 MK news articles; error bars indicate ±1 standard deviation across LDA seeds.

All coherence plots in this section use identical axis conventions for comparability. The horizontal axis reports the number of topics K, and the vertical axis reports the $C_v$ coherence score. Each plot contains two curves corresponding to the two weighting schemes (standard TF-IDF and TF-SYN-NER-Rel based on the TF-new document–term matrix).

Figure 1 shows that coherence increases with the number of topics for both representations, while TF-SYN-NER-Rel consistently attains higher mean $C_v$
values than classical TF-IDF across most K. Moreover, the variability across LDA initializations is lower for TF-SYN-NER-Rel in the majority of settings, as reflected by narrower error bars, suggesting that structural–semantic weighting yields more stable topic solutions under repeated runs. Because running multi-seed LDA grid searches on the full 600k-document corpus is computationally expensive, this robustness analysis is reported on a 15k-document subset as a proxy. This proxy is sufficient for assessing initialization sensitivity because the effect of random seeds primarily manifests through the stochastic optimization dynamics rather than corpus size alone. Importantly, when training models on the full corpus (without multi-seed repetition), we observe the same qualitative pattern in topic structure: TF-SYN-NER-Rel produces more distinct, event- and actor-centered topics with lower apparent lexical overlap, whereas TF-IDF more often merges institutional and event dimensions into broader macro-topics.

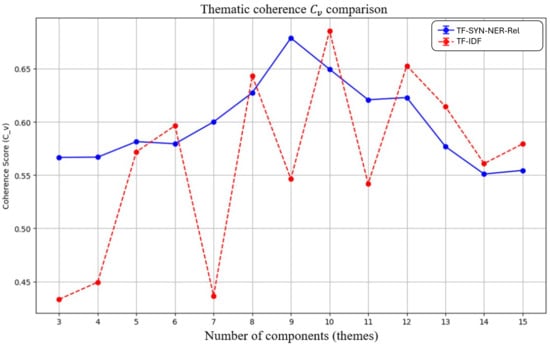

The first experiment consisted of computing and comparing coherence values for classical TF-IDF and TF-SYN-NER-Rel on the full news corpus. The dependence of $C_v$ on the number of topics for this experiment is shown in Figure 2.

Figure 2.

Coherence value as a function of the number of topics computed on the full news corpus.

On the combined corpus, both models exhibit a non-monotonic dependence of $C_v$
on the number of topics K, but the shapes of the curves differ markedly. For TF-SYN-NER-Rel, the increase in coherence is relatively smooth: the values gradually grow from K = 3 to a stable plateau in the range K = 7–9 (coherence value approximately 0.60–0.68), followed by a mild decline with small oscillations. For standard TF-IDF, by contrast, the curve is much less stable: after very low values at a small number of topics (0.43–0.45 at K = 3–4), there is a sharp jump at K = 6–8, followed by a series of local maxima and minima in the interval K = 8–13. As a result, the two models intersect several times at similar values of K, but TF-SYN-NER-Rel delivers a more stable and predictable coherence level over a wider range, whereas standard TF-IDF is sensitive to the precise choice of the number of topics.

Qualitative analysis shows that this smoother behavior reflects less diffuse and better structured topics. The proposed method reduces undesirable token overlap between clusters: political discourse splits into external (“president”, “USA”, “Trump”, “Ukraine”) and domestic (“Russia”, “Putin”, “RF” (stands for Russian Federation), “Vladimir”) components, whereas under TF-IDF these tokens often co-occur within a single, overly general topic. Similarly, incident-related news separates into a law-enforcement cluster (“court”, “detain”, “criminal”, “police”) and transport/technological accidents (“occur”, “die”, “explosion”, “driver”, “car”), while in the classical model they form a semantically diffuse topic. Economic themes under TF-SYN-NER-Rel acquire event-level specificity (“electric vehicle”, “station”, “infrastructure”, “Irkutsk”, “holding”), whereas TF-IDF tends to emphasize a more abstract macroeconomic background (“ruble”, “dollar”, “oil”, “exchange rate”). Thus, the evolution of coherence values is accompanied by increased topical sharpness and reduced overlap of key tokens, indicating better interpretability of topics obtained with our approach. These results motivate a more detailed analysis of the method on more specialized subsets.
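One simple way to quantify the token overlap discussed above is the mean pairwise Jaccard similarity between the top-token sets of topics. This measure is an illustrative assumption and is not reported as the procedure used in the qualitative analysis; the first two token lists are taken from the examples in the text, while the `merged` topic is hypothetical.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two token collections (0.0 for two empty sets)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mean_pairwise_overlap(topics):
    """Average Jaccard similarity over all unordered topic pairs."""
    pairs = list(combinations(topics, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


external = ["president", "USA", "Trump", "Ukraine"]
domestic = ["Russia", "Putin", "RF", "Vladimir"]
merged = ["president", "USA", "Putin", "Russia"]  # hypothetical overly general topic

separated = mean_pairwise_overlap([external, domestic])
mixed = mean_pairwise_overlap([external, merged])
```

Lower values indicate sharper topic boundaries: the cleanly separated pair shares no tokens, whereas the pair that includes the general topic shares a third of its combined vocabulary.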

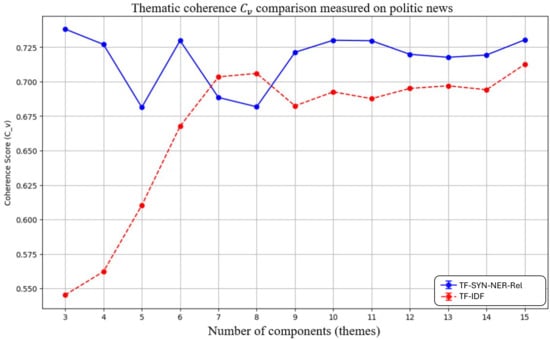

We next compare coherence values computed only on the politically oriented subset of the same news corpus. The dependence of $C_v$ on the number of topics for this experiment is shown in Figure 3.

Figure 3.

Coherence value as a function of the number of topics computed on the political news subcorpus.

In the political subcorpus, the two models exhibit different coherence dynamics as the number of topics increases. For TF-SYN-NER-Rel, the curve immediately reaches a high level (about 0.73 at three topics) and then fluctuates within a narrow interval of 0.68–0.73 without sharp drops. For classical TF-IDF, by contrast, low values persist for a long time (from 0.54 to 0.61 at K = 3–5), followed by a gradual increase, and only under strong fragmentation of the political discourse—at approximately seven to fifteen topics—does the model reach a plateau around 0.69–0.71. This indicates that the proposed method delivers more stable and predictable coherence already at a small number of components, whereas standard TF-IDF requires a substantial increase in the number of topics to achieve a comparable quality level.

An analysis of the resulting content clusters confirms this difference. With three topics, TF-SYN-NER-Rel produces three relatively clear blocks with minimal token overlap: (1) the domestic political scene with an emphasis on actors and electoral procedures (“president”, “deputy”, “party”, “election”, “Trump”, “Putin”, “Vladimir”); (2) a regional socio-economic agenda centered on energy and tariffs (“Irkutsk”, “tariff”, “capacity”, “energy sector”, “company”, “mining”); (3) the external conflict contour (“Ukraine”, “Russia”, “USA”, “president”, “RF”, “state that”). In the classical TF-IDF model with the optimal fifteen topics, political content splits into numerous partially overlapping groups: the same tokens (“Russia”, “Ukraine”, “Putin”, “USA”, “president”) recur across multiple topics (1, 2, 5, 6, 9, 12, 14, 15), which indicates fuzzy boundaries between clusters and substantial token overlap. Thus, for political news, the new method makes it possible to obtain more homogeneous and interpretable topic structures with a smaller number of topics.

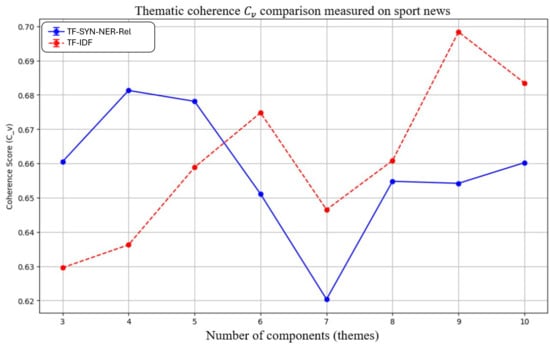

We next compare coherence values computed only on the sports-related subset of the same news corpus. The dependence of $C_v$ on the number of topics for this experiment is shown in Figure 4.

Figure 4.

Coherence value as a function of the number of topics computed on the sports news subcorpus.

In the sports subcorpus, both models exhibit a different coherence pattern compared with the political and combined news corpora. For our method, coherence already reaches about 0.66–0.68 at three to four topics and then fluctuates within a relatively narrow interval: after a slight decrease at six to seven topics, it partially recovers to around 0.65–0.66 at eight to ten topics. For classical TF-IDF, the dynamics are more monotonic: coherence gradually increases from 0.63 at three topics to a maximum of about 0.70 at nine topics, and then decreases slightly. This means that in sports news the standard approach outperforms our method in terms of the coherence metric under strong fragmentation (nine to ten topics), whereas TF-SYN-NER-Rel reaches its quality “ceiling” more quickly and maintains a comparatively stable coherence level already with a compact structure of three to five topics.

A qualitative analysis of topics at four clusters shows that the proposed method produces a more structured and less diffuse partition at a small number of topics. The first topic concentrates on club football: “footballer”, “football”, “Spartak”, “Zenit”, “Moscow”, “CSKA”, clearly highlighting league competitions and specific clubs. The second topic describes international tournaments and national team performances: “Russia”, “world”, “Russian”, “champion”, “medal”, “win”, “national team”, “bout”, “secure victory”, indicating an event-centric structure around wins and medals. The third topic reflects the political and governance dimension of sport: “president”, “state that”, “head”, “Russia”, “Ukraine”, “country”, “decision”, “international”. The fourth topic forms a separate cluster capturing law-enforcement and incident-related contexts at the intersection with sport: “report”, “detain”, “police”, “court”, “case”, “occur”, “officer”. In the standard model with the same number of topics, club football and international competitions are represented by similar token sets, but the politico-sport topic overlaps with the lexicon of the game itself (“football” appearing in a political cluster with “president”, “Putin”, “FIFA”), while the law-enforcement topic additionally “mixes in” the geopolitical marker “Ukraine”. This increases token overlap between topics and makes their boundaries less clear. Thus, in the sports subcorpus, our method provides a more structured and interpretable topical space at a small number of topics, whereas standard TF-IDF attains higher coherence only at the cost of strong fragmentation and partially overlapping clusters.

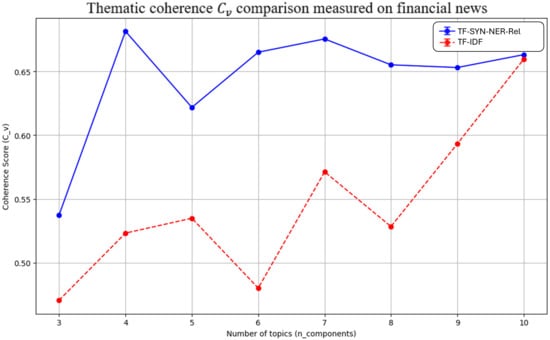

Finally, we compare coherence values on the most practically relevant subset, the corpus of financial news. The dependence of C_v on the number of topics for this experiment is shown in Figure 5.

Figure 5. Coherence value as a function of the number of topics computed on the financial news subcorpus.

In the financial subcorpus, the differences between the models are particularly pronounced. For the proposed weighting scheme, coherence is already at a moderate level for the minimum number of topics and, when moving from three to more topics, rapidly reaches a stable plateau around 0.65–0.66. Further increases in the number of topics lead only to minor fluctuations without changing the overall quality level. For classical TF-IDF, by contrast, low and unstable values are observed in the range of 3–7 topics; coherence then starts to increase and only at ten topics approaches the values reached much earlier by our method. Thus, on financial news, the new weighting scheme provides a higher and at the same time more stable level of topic coherence for virtually any reasonable number of components.

The structure of the extracted topics shows how this advantage is achieved. In our model, with four topics we obtain semantically clear clusters with limited token overlap. A separate regional block emerges related to infrastructure and tariffs: “fire”, “tariff”, “Irkutsk”, “capacity”, “region”, “oblast”, “house”, “rescuer”, corresponding to a set of stories about technological incidents and tariff policy in the energy sector. The second topic accumulates the law-enforcement context (“court”, “case”, “detain”, “police”, “protest”), the third describes macroeconomic and sanctions-related issues (“president”, “Ukraine”, “USA”, “government”, “minister”, “sanctions”, “exchange rate”), and the fourth captures investments in electromobility and the associated infrastructure (“electric vehicle”, “station”, “infrastructure”, “holding”). In the standard TF-IDF model with the same number of topics, the picture is noticeably more diffuse: electromobility “dissolves” within a generic macroeconomic topic (“exchange rate”, “oil”, “trading”, “currency”, “stock exchange”), the law-enforcement cluster is mixed with general urban incidents, and one of the clusters merges heterogeneous stories about courts, airlines, sports matches, and companies. This indicates a higher degree of token overlap and less clear topic boundaries in the standard variant, whereas our method makes it possible to separate infrastructure–financial, sanctions and exchange-rate, and socio-legal stories into distinct, interpretable topical blocks.

To assess the computational efficiency of the proposed TF-SYN-NER-Rel weighting scheme, we measured runtimes on a political-news subset of 181,602 documents drawn from a larger collection of over 600,000 Russian news articles across several domains. All experiments were run on a standard workstation with a multi-core CPU and 32 GB of DDR5 4800 MHz RAM. The NLP stack relied on open-source tools for Russian: Razdel v0.5.0 for sentence and token segmentation, Pymorphy2 v0.8 for lemmatization, Navec v0.10.0 embeddings (navec_news_v1_1B_250K_300d_100q.tar) as a base semantic layer, and Slovnet v0.6.0 models (slovnet_morph_news_v1.tar, slovnet_syntax_news_v1.tar, slovnet_ner_news_v1.tar) for morphological tagging, dependency parsing and named entity recognition. The implementation uses CPU-based libraries (Pymorphy2, Natasha/Slovnet, Navec, scikit-learn, Gensim, pandas, NumPy) and does not require GPU acceleration or large language models. The full NLP pipeline (tokenization, lemmatization, POS tagging, dependency parsing, NER) processed the 181,602-document subset in approximately 48 min 39 s, which corresponds to about 62 documents per second. Computation of structural–semantic TF-SYN-NER-Rel weights (TF_new) for all documents took about 6 min 33 s, i.e., roughly 462 documents per second. The most expensive part of the workflow is topic number selection: training LDA models and evaluating topic quality with C_v for K from 3 to 15 for both the standard TF-IDF and TF-SYN-NER-Rel representations took about 2 h 16 min. In total, the complete cycle “from raw texts to the optimal number of topics” on 181,602 documents required around 3.2 h, corresponding to a throughput of about 57,000 documents per hour.
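The throughput figures follow directly from the reported stage durations. The short calculation below is a sanity check using only the numbers stated in this paragraph; the variable and function names are illustrative.

```python
N_DOCS = 181_602  # size of the political-news subset reported in the text

def seconds(hours=0, minutes=0, secs=0):
    """Convert a duration to seconds."""
    return hours * 3600 + minutes * 60 + secs

nlp_s = seconds(minutes=48, secs=39)     # full NLP pipeline
weights_s = seconds(minutes=6, secs=33)  # TF_new weight computation
grid_s = seconds(hours=2, minutes=16)    # LDA training + coherence, K = 3..15, both representations

total_s = nlp_s + weights_s + grid_s
nlp_rate = N_DOCS / nlp_s                # documents per second, NLP stage
weights_rate = N_DOCS / weights_s        # documents per second, weighting stage
total_hours = total_s / 3600
docs_per_hour = N_DOCS / total_hours
grid_share = grid_s / total_s            # fraction of runtime spent on topic number selection

print(f"NLP stage:       {nlp_rate:.1f} docs/s")
print(f"Weighting stage: {weights_rate:.1f} docs/s")
print(f"Total:           {total_hours:.2f} h, {docs_per_hour:,.0f} docs/h")
print(f"Grid search share of runtime: {grid_share:.0%}")
```

The calculation also makes the cost structure explicit: the grid search over K accounts for roughly 70% of the total runtime, while the structural–semantic weighting step is a small fraction of it.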

From a complexity standpoint, once the NLP annotations are available, TF-SYN-NER-Rel is computed in a single linear pass over each document, and the resulting document–term matrix remains high-dimensional but sparse (in the reported experiment, 181,602 × 159,554). Each token used in TF_new is enriched with its lemma, morphological tag, dependency relation and, when applicable, named entity type, so that the structural–semantic weighting operates on linguistically informed but still sparse features. A key factor behind the lightweight nature of the overall approach is the use of the classical C_v as the main topic quality metric. In the Gensim implementation employed here, the coherence value is based on co-occurrence statistics of lemmatized tokens in a sliding window and operates directly on a sparse bag-of-words corpus and a term dictionary, without constructing a dense co-occurrence matrix for the full vocabulary. This makes it possible to compare the standard TF-IDF and TF-SYN-NER-Rel feature spaces over a grid of topic numbers on a large sparse corpus within a few hours on a CPU-only setup, in contrast to embedding-based or neural coherence measures that rely on dense high-dimensional representations and are substantially more demanding in terms of computation and memory.
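To illustrate why sliding-window coherence statistics stay sparse, the following minimal sketch counts boolean window occurrences only for a small set of topic top words and derives a normalized pointwise mutual information (NPMI) score from them. It is a simplified illustration under stated assumptions, not the Gensim implementation and not the full C_v measure (which additionally aggregates such scores into context vectors and compares them by cosine similarity). The toy documents, the window size, and the function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations

def window_counts(docs, vocab, window=3):
    """Count sliding windows containing each tracked word and each tracked word pair.

    Only words in `vocab` (e.g. the top words of the topics) are tracked, so the
    statistics live in sparse Counters, not in a dense vocabulary-by-vocabulary matrix.
    """
    single, pair, n_windows = Counter(), Counter(), 0
    for tokens in docs:
        # A document shorter than the window contributes exactly one window.
        n = max(1, len(tokens) - window + 1)
        for start in range(n):
            seen = sorted(set(tokens[start:start + window]) & vocab)
            n_windows += 1
            single.update(seen)
            pair.update(combinations(seen, 2))
    return single, pair, n_windows

def npmi(w1, w2, single, pair, n_windows):
    """Normalized pointwise mutual information of two words over the counted windows."""
    a, b = sorted((w1, w2))
    p1, p2 = single[a] / n_windows, single[b] / n_windows
    p12 = pair[(a, b)] / n_windows
    if p12 == 0:
        return -1.0  # the words never share a window
    if p12 == 1:
        return 1.0   # the words share every window
    return math.log(p12 / (p1 * p2)) / -math.log(p12)

# Toy lemmatized documents (hypothetical, for illustration only).
docs = [
    ["court", "detain", "police", "case"],
    ["police", "detain", "officer"],
    ["tariff", "region", "capacity"],
    ["tariff", "capacity", "region", "oblast"],
]
vocab = {"police", "detain", "tariff", "region"}

single, pair, n_windows = window_counts(docs, vocab, window=3)
print(n_windows, dict(single), dict(pair))
print("police/detain:", npmi("police", "detain", single, pair, n_windows))
print("police/tariff:", npmi("police", "tariff", single, pair, n_windows))
print("tariff/region:", round(npmi("tariff", "region", single, pair, n_windows), 4))
```

Because only pairs of tracked words that actually co-occur are stored, memory grows with the number of topic top words, not with the square of the vocabulary size.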

Overall, these measurements show that TF-SYN-NER-Rel provides a structurally richer and more interpretable feature space for topic modeling while preserving the computational footprint of a classical LDA plus C_v workflow. The additional cost of structural–semantic weighting is small relative to the cost of topic-model training and selection, and the entire pipeline remains practical for large-scale news streams without resorting to GPU-accelerated inference or large language models.

4. Discussion

The starting point of this study was the observation, articulated in the introduction, that standard feature spaces for topic modeling (in particular, TF-IDF) are syntactically “blind”, prone to high lexical overlap between topics and only partially aligned with expert-oriented notions of interpretability. The central working hypothesis behind TF-SYN-NER-Rel was that term importance should reflect not only frequency, but also structural and semantic roles in the sentence (predicate, actor, object, named entity, factual core). The experimental results across several news domains support this hypothesis.

First, in all considered corpora the dependence of C_v on the number of topics is smoother and more stable for TF-SYN-NER-Rel than for classical TF-IDF. On the combined news corpus, TF-SYN-NER-Rel quickly reaches a plateau and then exhibits only mild oscillations, whereas TF-IDF shows pronounced local maxima and minima and strong sensitivity to the exact choice of the number of topics. Similar patterns are observed in the political and financial subcorpora, where TF-SYN-NER-Rel delivers high coherence already at small K, while TF-IDF approaches comparable values only under substantial fragmentation of the discourse. Importantly, this pattern remains consistent under repeated LDA runs with different random initializations, indicating that the observed differences are not driven by a single favorable seed. This behavior is consistent with previous findings that purely frequency-based representations are sensitive to lexical noise and corpus perturbations, and that the stability of topic models is closely tied to the choice of feature space and hyperparameters.
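One way to make "smoother and more stable" operational is to aggregate coherence over seeds for each K and to measure how much the mean curve moves between neighbouring values of K. The sketch below is a hedged illustration: the roughness statistic (mean absolute difference between consecutive points of the mean curve) is an assumption, not a measure defined in this study, and the input values are synthetic placeholders, not results from the experiments.

```python
from statistics import mean, stdev

def summarize(curves):
    """Aggregate coherence curves over random seeds.

    curves: {seed: [coherence at K_0, K_1, ...]}, all lists of equal length.
    Returns (per-K mean, per-K sample standard deviation, roughness), where
    roughness is the mean absolute change of the mean curve between adjacent K.
    """
    per_k = list(zip(*curves.values()))
    means = [mean(vals) for vals in per_k]
    stds = [stdev(vals) for vals in per_k]
    roughness = mean([abs(b - a) for a, b in zip(means, means[1:])])
    return means, stds, roughness

# Synthetic placeholder curves for three seeds and four values of K.
# These numbers are invented for illustration and are NOT experimental results.
smooth_curves = {0: [0.60, 0.64, 0.65, 0.65],
                 1: [0.62, 0.66, 0.65, 0.67],
                 2: [0.61, 0.65, 0.65, 0.66]}
jagged_curves = {0: [0.50, 0.62, 0.48, 0.63],
                 1: [0.54, 0.58, 0.52, 0.67],
                 2: [0.52, 0.60, 0.50, 0.65]}

smooth_means, smooth_stds, smooth_rough = summarize(smooth_curves)
jagged_means, jagged_stds, jagged_rough = summarize(jagged_curves)

print("smooth roughness:", round(smooth_rough, 4))
print("jagged roughness:", round(jagged_rough, 4))
```

A lower roughness value corresponds to a curve that plateaus early and oscillates little, while the per-K standard deviation summarizes sensitivity to the random initialization.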

Second, qualitative inspection of topics confirms that structural–semantic weighting reduces token overlap and sharpens topic boundaries, particularly in domains with rich institutional structure. In political news, TF-SYN-NER-Rel separates domestic political processes and electoral procedures from external conflict narratives, yielding topics that closely correspond to the institutional and narrative dimensions described in the literature on political communication and narrative economics. In financial news, the method disentangles sanctions and exchange-rate narratives, regional tariff and infrastructure stories, and law-enforcement contexts, which is in line with prior work that links textual indicators to market regimes and information shocks. In contrast, TF-IDF tends to merge these dimensions into broader, less interpretable macro-topics where the same high-frequency tokens recur across multiple clusters.

Third, the sports subcorpus provides an informative counterpoint. Here the lexicon is more homogeneous and event templates are simpler. TF-SYN-NER-Rel still produces well-structured topics at small K (club competitions, international tournaments, governance and politics of sport, incident-related news), but TF-IDF attains slightly higher coherence values when the number of topics is large. This asymmetry highlights an important point from the introduction: automatic coherence metrics only partially capture expert notions of interpretability, and gains in coherence at high K may correspond to over-fragmentation and increased overlap in semantic terms. In other words, TF-SYN-NER-Rel aligns more closely with the narrative and institutional structure of the domain at a compact topic structure (a small number of topics), whereas TF-IDF tends to optimize coherence by splitting the corpus into many narrowly focused but partially redundant clusters.

Overall, the results are consistent with earlier work on enhanced term weighting and structural features in information retrieval, while extending these ideas to the domain of topic modeling. By incorporating positional, syntactic, factual, and NER-based coefficients into the local term frequency component, TF-SYN-NER-Rel operationalizes the requirement, formulated in previous research, that topic spaces should reflect the underlying event and actor structure rather than just word co-occurrence statistics.
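The idea of folding structural coefficients into the local term frequency can be sketched as a single pass over annotated tokens. The example below is a minimal illustration and not the weighting formula of this study: the multiplicative combination rule, every coefficient value, and the `Token` fields are hypothetical choices made for the sketch, and the factual coefficient is omitted.

```python
from collections import defaultdict
from typing import NamedTuple, Optional

class Token(NamedTuple):
    lemma: str
    deprel: str          # dependency relation, e.g. "root", "nsubj", "obj"
    ner: Optional[str]   # named-entity type such as "PER", "ORG", "LOC", or None
    sent_index: int      # index of the sentence within the document

# Hypothetical coefficients chosen only for illustration; not the values used in the paper.
SYNTAX_BOOST = {"root": 1.5, "nsubj": 1.3, "obj": 1.2}
NER_BOOST = 1.4
LEAD_BOOST = 1.2  # positional boost for tokens in the first sentence

def structural_tf(tokens):
    """Accumulate a structurally weighted term frequency per lemma in one linear pass."""
    weights = defaultdict(float)
    for tok in tokens:
        w = SYNTAX_BOOST.get(tok.deprel, 1.0)
        if tok.ner is not None:
            w *= NER_BOOST
        if tok.sent_index == 0:
            w *= LEAD_BOOST
        weights[tok.lemma] += w
    return dict(weights)  # sparse: only lemmas present in the document appear

# Toy annotated document (hypothetical).
doc = [
    Token("bank", "nsubj", "ORG", 0),
    Token("raise", "root", None, 0),
    Token("rate", "obj", None, 0),
    Token("rate", "nmod", None, 1),
    Token("analyst", "nsubj", None, 1),
]

tf_weighted = structural_tf(doc)
for lemma, weight in tf_weighted.items():
    print(lemma, round(weight, 3))
```

In this toy document the named-entity subject and the predicate of the lead sentence receive more weight than a plain count would give them, which is the qualitative effect described above; the output is a sparse mapping that can be placed into a document–term matrix and multiplied by a standard IDF factor.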

From a methodological perspective, the proposed scheme can be viewed as a bridge between classical bag-of-words topic models and structurally informed, narrative-oriented analyses. By amplifying predicates, actors, objects and named entities, the feature space becomes more sensitive to patterns of “who did what to whom”, which facilitates mapping topics onto economic and institutional narratives and supports downstream econometric and forecasting applications.

At the same time, TF-SYN-NER-Rel preserves the computational footprint of a standard LDA plus C_v workflow. The entire pipeline relies on CPU-based tools (Razdel, Pymorphy2, Navec, Slovnet, scikit-learn, Gensim) and sparse linear algebra. The full NLP stack (tokenization, lemmatization, dependency parsing, NER) and the TF_new computation complete within minutes to tens of minutes, while the dominant cost remains the standard grid search over the number of topics. The choice of the C_v metric is crucial here: in the Gensim implementation it operates on sparse bag-of-words data and sliding-window co-occurrence counts, avoiding dense vocabulary-by-vocabulary matrices and making large sparse corpora tractable without GPU acceleration or LLM-based evaluation. For reproducibility, the repository also provides the universal stop word list and examples of domain-specific filter lists (e.g., for financial news).
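A back-of-the-envelope calculation shows why avoiding a dense vocabulary-by-vocabulary matrix matters on the reported hardware. The vocabulary size, RAM size, and largest K are taken from the text; the 8-byte cell size and the number of top words per topic are assumptions made for this illustration.

```python
import math

VOCAB = 159_554        # vocabulary size of the reported document-term matrix
RAM_GB = 32            # workstation memory reported in the text
BYTES_PER_CELL = 8     # assumption: one float64 per co-occurrence cell
TOP_N = 20             # assumption: number of top words scored per topic
MAX_K = 15             # largest number of topics in the reported grid

# Dense co-occurrence matrix over the full vocabulary.
dense_cells = VOCAB * VOCAB
dense_gb = dense_cells * BYTES_PER_CELL / 1e9

# Word pairs that actually need statistics when only topic top words are scored.
pairs_per_topic = math.comb(TOP_N, 2)
pairs_needed = pairs_per_topic * MAX_K

print(f"dense matrix: {dense_cells:,} cells, about {dense_gb:.1f} GB "
      f"({dense_gb / RAM_GB:.1f}x the available RAM)")
print(f"top-word pairs at K={MAX_K}: at most {pairs_needed:,}")
```

Under these assumptions a dense matrix would need roughly 200 GB, several times the available memory, whereas scoring only the top words of each topic requires statistics for a few thousand word pairs.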

In this sense, TF-SYN-NER-Rel complements, rather than competes with, neural and LLM-based topic models. It provides a lightweight, interpretable feature space that can be used both within classical probabilistic models such as LDA and as an input or regularization signal for more complex architectures.

Several limitations of the present study should be acknowledged.

First, all experiments were conducted on a single source: the online archive of the Russian-language newspaper Moskovsky Komsomolets (articles published up to October 2025). The behavior of TF-SYN-NER-Rel in other genres (social media, long-form analysis, corporate reports) and languages remains to be investigated. Differences in syntactic structure, named-entity distributions and discourse patterns may affect the relative benefits of structural–semantic weighting.

Second, LDA was used as the sole topic modeling framework. This choice facilitates comparison with a large body of prior work and emphasizes the contribution of the feature space, but does not cover neural topic models or embedding-based approaches, which operate in fundamentally different representation spaces. The interaction between TF-SYN-NER-Rel and such models is not addressed here.

Third, the evaluation relies primarily on a single automatic coherence metric. Although C_v is widely used and has desirable computational properties, previous studies have shown that automatic metrics only partially correlate with human judgments of interpretability and may be sensitive to corpus characteristics and parameter settings. In this work, coherence analysis was complemented by qualitative inspection of topics and robustness checks across random initializations, but a systematic, large-scale expert evaluation protocol was not implemented.

Fourth, the method depends on a specific pipeline for morphological analysis, dependency parsing and NER. Errors in these components propagate to term weights and may influence topic boundaries. In addition, the construction of the political/financial/sports subsets relies on dictionary-based expert labeling; while transparent and reproducible, this procedure may introduce assignment noise in borderline cases. The sensitivity of TF-SYN-NER-Rel to the quality of linguistic annotations, labeling heuristics, and alternative NLP toolchains has not yet been quantified.

These limitations suggest several directions for further work. A first avenue is to integrate TF-SYN-NER-Rel with neural and embedding-based topic models. Structural–semantic weights could be used to shape input representations, to re-weight token-level contributions in attention mechanisms, or to regularize topic-word distributions toward predicate–argument structures extracted from the text.

A second direction is to extend the current approach with graph-based and associative representations. Factual links and shared named entities naturally define edges in document or source networks, which could be combined with TF-SYN-NER-Rel in models of semantic entanglement, random walks and multiscale clustering of information flows.

Third, a more systematic human-in-the-loop evaluation is needed. Established protocols for assessing topic interpretability could be applied in specific domains (e.g., monetary policy, energy markets, regional development), comparing expert judgments of topic coherence, distinctness and usefulness for decision support under TF-SYN-NER-Rel and TF-IDF.

Fourth, the integration of structurally enriched topical features into econometric and forecasting models deserves detailed study. This would allow a quantitative assessment of how clearly separated narrative themes relate to financial indicators, regime shifts and institutional changes, thereby connecting the present work more directly to the literature on narrative economics and information capital.

Finally, cross-lingual and multilingual extensions—combining language-specific linguistic pipelines with shared embedding spaces—could test whether the advantages of structural–semantic weighting carry over to other languages and media systems.

5. Conclusions

This paper introduced TF-SYN-NER-Rel, a structural–semantic term weighting scheme that extends classical TF-IDF by incorporating positional, syntactic, factual and named-entity information derived from dependency-parsed news texts. In line with the hypotheses formulated in the introduction, the method yields smoother and more stable coherence curves, higher coherence levels at moderate numbers of topics K, and substantially reduced token overlap between topics in political and financial news. In sports news, TF-SYN-NER-Rel produces compact and interpretable topic structures at small K, while TF-IDF achieves slightly higher coherence only under strong fragmentation.

Taken together, these findings indicate that TF-SYN-NER-Rel moves topic modeling closer to the requirements of narrative and institutional analysis: topics become less like arbitrary clusters of co-occurring words and more like structured groupings of events, actors and institutions. At the same time, the method remains computationally lightweight, relying on CPU-based NLP tools, sparse document–term matrices and the classical C_v metric, while exhibiting robustness across repeated runs with different random initializations. Within the scope of the present empirical setting (a single Russian-language news source and an LDA-based pipeline), TF-SYN-NER-Rel provides a practical, interpretable and scalable complement to more resource-intensive neural and LLM-based approaches for large-scale news monitoring and the reconstruction of economic and political narratives.

Author Contributions

Conceptualization, G.G. and P.Y.; Methodology, G.G.; Software, G.G.; Validation, D.R., P.Y. and E.K.; Formal analysis, E.K.; Investigation, G.G.; Resources, D.R.; Data curation, E.K.; Writing – original draft, G.G.; Writing – review & editing, G.G.; Visualization, G.G.; Supervision, E.K.; Project administration, E.K.; Funding acquisition, D.R. All authors have read and agreed to the published version of the manuscript.

Funding

The data supporting the findings of this study are openly available in the “TF-SYN-NER-Rel” repository at https://github.com/polytechinvest/TF-SYN-NER-Rel (accessed on 10 December 2025). The repository provides a link to the full corpus of Moskovsky Komsomolets (MK) online articles (up to October 2025) and associated metadata, as well as all scripts required to reproduce preprocessing, TF-SYN-NER-Rel term weighting, and LDA topic modeling. The release also includes the universal stop word list and examples of domain-specific filter lists (e.g., for financial news).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in this study are openly available at the following link: https://disk.yandex.com/d/OYhwgBWCRFx_Nw (accessed on 10 December 2025).

Acknowledgments

The work was carried out within the framework of the project “Development of methodology for the formation of a tool base for analysis and modeling of spatial socio-economic development of systems in the conditions of digitalization with reliance on internal reserves” (FSEG-2023-0008).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| TF-IDF | Term Frequency–Inverse Document Frequency |
| TF-SYN-NER-Rel | Term Frequency with Syntactic, Named Entity and Relational weighting |
| LDA | Latent Dirichlet Allocation |
| NLP | Natural Language Processing |
| NER | Named Entity Recognition |
| SRL | Semantic Role Labeling |
| LLM | Large Language Model |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.