Abstract

In smart education, adaptive e-learning systems personalize the educational process by tailoring it to individual learning styles. Traditionally, identifying these styles relies on learners completing surveys and questionnaires, which can be tedious and may not reflect their true preferences. Additionally, this approach assumes that learning styles are fixed, whereas identifying styles automatically from e-learning platform behaviors suffers from a cold-start problem for new learners. To address these challenges, we propose a novel approach that implements the Felder–Silverman Learning Style Model (FSLSM) to identify learning styles automatically and annotates unlabeled student feedback using multi-layer topic modeling. Upon logging into the e-learning platform, learners answer four FSLSM-based questions and provide personal information such as age, gender, and cognitive characteristics (prior academic performance), which are weighted using fuzzy logic. We then analyze learners’ behaviors and activities using web usage mining techniques and classify their learning sequences into specific styles with a Transformer-based deep learning model that uses LSTM positional encoding. Additionally, we analyze textual feedback using latent Dirichlet allocation (LDA) topic modeling combined with aspect-based sentiment analysis to further enhance the learning experience. The experimental results demonstrate that our approach outperforms existing models in accurately detecting learning styles and improves the overall quality of personalized content delivery.

Keywords:

e-learning system; smart education; sentiment analysis; fuzzy weights; FSLSM model; deep learning; LSTM; LDA

1. Introduction

In recent years, electronic learning (e-learning) systems have emerged as a transformative force, reshaping the landscape of smart education. Online learning systems offer significant advantages, making education more accessible, flexible, and personalized. By eliminating geographical and socioeconomic barriers, these platforms enable individuals from diverse backgrounds to access educational resources from anywhere in the world [1]. This accessibility is especially beneficial for those who might not be able to attend traditional classroom settings due to work or family commitments [2]. Additionally, online systems allow for a high degree of customization, catering to different learning styles and paces, which can enhance student engagement and improve learning outcomes. Moreover, online courses tend to be more cost-effective than their traditional counterparts, reducing financial barriers for learners and institutions alike. These platforms also provide students with an abundance of resources, including videos, interactive content, and extensive databases, further enriching the learning experience.

Developing a successful adaptive e-learning system depends on creating a detailed student model that incorporates various attributes of the student. Students have unique ways of engaging with learning materials that shape their individual learning styles [3]. These styles reflect their preferred methods for processing and understanding information, and recognizing them is crucial for e-learning systems to tailor content effectively. Traditionally, learning styles have been identified by having students complete questionnaires, an approach known as explicit identification [4]. This method has several drawbacks: filling in questionnaires is often boring and time-consuming; students may not fully understand or be aware of their learning styles, which leads to arbitrary responses; and the results are static, failing to account for changes in learning styles over time. These issues highlight the need for a more dynamic and engaging approach to ascertaining students’ learning preferences.

To address these limitations, various automated methods have been developed that identify students’ learning styles by analyzing their interactions with e-learning systems. These automatic identification techniques offer several advantages over traditional methods. First, they eliminate the need for time-consuming questionnaires by gathering data directly from students’ activities within the system [5]. Second, unlike the static results of questionnaires, the learning styles they identify are dynamic: they adapt to shifts in students’ behaviors, ensuring a more accurate and personalized learning experience. Such an approach is known as implicit identification [6]. The implicit approach avoids the main drawbacks of the explicit approach, but it faces a cold-start problem when new users log into the system and no behavioral data are yet available. To overcome this issue, this paper combines both approaches: it collects a minimal set of input characteristics, namely learners’ previous scores (categorized using fuzzy weights), age, gender, and answers to four questions, each representing one FSLSM dimension.

Implementing automatic identification of learning styles requires a learning style (LS) model, which classifies students based on their preferred learning methods [7]. Several learning style models are discussed in this paper; however, recent studies identify the Felder–Silverman Learning Style Model (FSLSM) as particularly effective for adaptive e-learning systems [8]. The FSLSM was therefore chosen for this paper, for the reasons described in Section 3.1.3.

This paper proposes a hybrid approach to improving e-learning systems that integrates automatic learning style identification with sentiment analysis to further improve the quality of learning objects. To identify learning styles automatically, we obtain a minimal set of attributes from learners when they log into the e-learning platform and analyze their behavior using web usage mining techniques. Data on student activities are collected from the platform’s log files and organized into sequences, each consisting of the learning objects a student accessed during one session. These learning objects are then aligned with the learning style combinations defined in the FSLSM. The sequences of student activities, the learners’ input attributes, and the corresponding learning objects are used as input to a classifier built on long short-term memory (LSTM), a recurrent neural network (RNN) architecture capable of learning long-term dependencies in sequential data. This classifier maps the sequences to learning style categories.

In addition, this paper applies LDA to learners’ textual feedback as the basis for sentiment analysis, so that learning objects can be improved further. It proposes a novel approach to annotating unlabeled student feedback using multi-layer topic modeling and introduces and implements a novel algorithm for sentiment extraction and mapping. By combining the implicit and explicit approaches to learning style identification, the proposed method removes the limitations that each approach has individually. The experimental results show that the approach not only outperforms existing models in accurately detecting learning styles but also enhances the quality of learning objects, improving adaptive e-learning systems overall.

This paper contributes the following points:

- Using minimal learner inputs and learners’ learning sequences, this paper designs a classifier with LSTM positional encoding to identify learners’ learning styles; this novel approach improves the accuracy of learning style identification compared to traditional machine learning algorithms. It also addresses the limitations of traditional questionnaire-based methods, such as the time learners spend filling in forms, their lack of self-awareness, and static learning style results.

- This paper proposes a novel approach to annotating unlabeled student feedback using multi-layer topic modeling.

- This paper also introduces and implements a novel rule-based algorithm for sentiment extraction and mapping. Incorporating LDA to analyze textual feedback from learners, this research paper offers a nuanced understanding of learners’ perspectives on the educational content and the overall e-learning experience. This sentiment analysis allows for the continuous refinement of e-learning content to better align with learner needs and preferences.

The structure of this paper is outlined as follows: Section 2 reviews related work. Section 3 provides prerequisites and introduces the proposed methodology. Section 4 presents the experiments conducted and their results. Section 5 discusses the results and compares the proposed approach with other models. Section 6 concludes the paper and discusses its limitations and future work.

2. Related Works

In academic research, a range of classification methods have been applied to automatically determine learning styles and sentiment across different models. Researchers have utilized these techniques to better understand and cater to individual learning preferences, enhancing the personalization of educational content.

According to Graf [9], a data-driven approach utilizing artificial intelligence algorithms has been developed to automatically detect learning styles from learner interactions within a system. This method uses real behavioral data as input, with the algorithm outputting the learner’s style preferences, thereby enhancing accuracy. To ensure meaningful data for classification, web mining techniques are employed to extract detailed behavioral information, as detailed by Mahmood [10], making the system both effective and efficient in adapting to individual learning needs.

In [11], a novel method was introduced to identify each learner’s style using the FSLSM by extracting behaviors from Moodle logs. Decision trees were utilized for dynamic classification based on these styles, with the method’s accuracy evaluated by comparing behaviors to quiz results provided at the end of a course. This approach, however, was tested with a limited sample of 35 learners in a single Moodle-based online course.

In [12], the authors integrated fuzzy logic with neural networks to train an algorithm capable of recognizing various learning styles. However, the algorithm’s effectiveness was limited to classifying just three dimensions of the FSLSM model: perception, input, and understanding.

In [13], the authors employed Bayesian networks to analyze learner data from logs of chats, forums, and processing activities, detecting only three Felder–Silverman dimensions: perception, processing, and understanding. Their study illustrates the use of predictive modeling to discern specific educational traits from interactive online behaviors.

Fuzzy logic has been utilized to automatically determine learners’ styles, as demonstrated by Troussas [14]. Expanding on this, Crockett [15] developed a fuzzy classification tree within a predictive model that employs independent variables captured through natural language dialogue, enhancing the precision of style assessments.

In [16], the authors applied the Fuzzy C-Means (FCM) algorithm to categorize learning behavioral data into FSLSM categories. This clustering approach enabled a structured analysis of behavioral patterns, aligning them with defined learning styles to enhance personalized education strategies.

In [17], a model named the adaptive e-learning recommender model using learning style and knowledge-level modeling (AERM-KLLS) was developed to enhance student engagement and performance by delivering personalized materials based on questionnaires and adaptive feedback. Another study [18] designed an adaptive learning system based on an artificial intelligence model (ALSAI) for personalized learning environments, drawing on adaptive learning, machine learning, natural language processing, and deep learning to enhance online teaching.

Additionally, a framework was proposed in [19] for the automatic recognition of learning styles based on the FSLSM, using machine learning techniques that include a decision tree–hidden Markov model and a Bayesian model. Another contribution [20] developed a model, grounded in a literature review and machine learning algorithms, for automatically detecting learning styles in learning management systems (LMSs).

A systematic review of machine learning techniques for identifying learning styles was conducted in [21], covering neural networks, deep learning, the FSLSM, and the visual, aural, read/write, and kinesthetic (VARK) model, with a primary focus on e-learning enhancement. Furthermore, a convolutional neural network learning feature descriptor (CNN-LFD) model for predicting learning styles in e-learning environments was developed in [22], combining convolutional neural networks (CNNs), Levy flight distribution, and machine learning.

A survey [23] examined the automatic prediction of learning styles, reviewing FSLSM-based classification, clustering, and hybrid methods. The identification of learning styles in adaptive e-learning systems was improved using deep multi-target prediction and artificial neural networks [24]. Lastly, [25] demonstrated that automatically modeling learning styles and affective states in web-based learning management systems is more appropriate than traditional questionnaires for detecting them.

The literature indicates that learners’ learning styles can be automatically identified using models such as the FSLSM, which is prominently featured in these studies. Despite this, most of these investigations rely on behavioral data from just one course, limiting the robustness of their classifications. There is a noted deficiency in validating these classification processes across multiple courses, which would provide a more accurate and reliable identification of learning styles according to the FSLSM.

3. Materials and Methods

3.1. Preliminaries

This subsection explores the core concepts behind adaptive learning: web usage mining (WUM) for pattern analysis, learning style models (LSM) for categorizing information processing preferences, the Felder–Silverman Learning Style Model (FSLSM) for personalized education, and long short-term memory (LSTM) networks for sequential data handling.

3.1.1. Learning Style

The term learning styles (LSs) has several definitions in the modern literature. Pashler [26] defines learning styles as distinctive modes of perception, memory, thought processes, problem-solving, and decision-making. According to [27], learning styles are the combination of typical affective, cognitive, and physiological variables that serve as generally stable markers of how a learner sees, interacts with, and responds to an instructional environment. Several learning style models have been developed to categorize learners into certain groups to improve their learning potential by adapting educational content to their preferences. Some of the most famous learning style models are the Honey and Mumford learning style model, the Kolb learning style model, the Myers–Briggs Type Indicator learning style model, the VARK learning style model, and lastly the FSLSM [28].

3.1.2. Web Usage Mining Techniques

Web usage mining (WUM) is the discovery and automatic extraction of usage patterns from web data [29]. Its main focus is on identifying learners’ online behaviors by examining how they interact with websites. Log files, which record learners’ activities as they access material on web servers, are the main source of data for WUM; they are plain-text files containing data on user activity on the e-learning platform. According to [30], the usage mining process consists of three steps. The first, data preprocessing, involves the essential tasks of cleaning and transforming the data to prepare it for analysis. The second, pattern discovery, uses data mining and machine learning approaches to uncover hidden insights and patterns in the processed data. In the final stage, pattern analysis [31], attention is directed toward extracting noteworthy patterns from the outcomes of pattern discovery, which ensures that only the most pertinent patterns are preserved for further investigation and practical use. Finally, the learners’ learning sequences are generated; a learning sequence describes the order in which a learner accesses learning objects, that is, the learner’s way of learning.
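
The preprocessing and sequence-generation steps can be illustrated with a minimal Python sketch. The comma-separated log format, the learner and learning-object identifiers, and the 30-minute inactivity timeout used to split sessions are all assumptions for illustration; the paper does not specify them.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical raw log: "learner_id,ISO timestamp,learning_object" per line.
RAW_LOG = """\
s01,2024-01-10T09:00:00,video_intro
s01,2024-01-10T09:05:00,diagram_grammar
s01,2024-01-10T09:05:00,diagram_grammar
s02,2024-01-10T09:10:00,text_summary
s01,2024-01-10T11:00:00,quiz_unit1
s01,2024-01-10T11:04:00,forum_post
"""

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed inactivity gap that ends a session


def parse_log(raw):
    """Preprocessing: skip blank lines and exact duplicate records, parse fields."""
    seen, records = set(), []
    for line in raw.splitlines():
        line = line.strip()
        if not line or line in seen:
            continue
        seen.add(line)
        learner, ts, obj = line.split(",")
        records.append((learner, datetime.fromisoformat(ts), obj))
    return records


def build_sequences(records):
    """Split each learner's time-ordered clicks into sessions.

    Returns (learner_id, session_id, [learning objects]) tuples.
    """
    by_learner = defaultdict(list)
    for learner, ts, obj in sorted(records, key=lambda r: (r[0], r[1])):
        by_learner[learner].append((ts, obj))

    sequences = []
    for learner, events in by_learner.items():
        session_id, last_ts, current = 0, None, []
        for ts, obj in events:
            if last_ts is not None and ts - last_ts > SESSION_TIMEOUT:
                sequences.append((learner, session_id, current))
                session_id, current = session_id + 1, []
            current.append(obj)
            last_ts = ts
        if current:
            sequences.append((learner, session_id, current))
    return sequences


for sequence in build_sequences(parse_log(RAW_LOG)):
    print(sequence)
```

Running it prints one tuple per session; learner `s01` gets two sequences, `['video_intro', 'diagram_grammar']` and `['quiz_unit1', 'forum_post']`, because the gap between 09:05 and 11:00 exceeds the assumed timeout.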

3.1.3. Felder–Silverman Learning Style Model

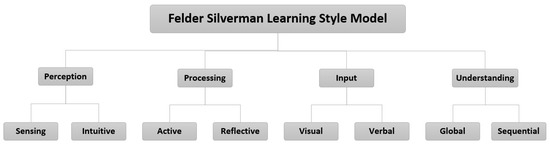

Richard Felder and Linda Silverman originally developed the Felder–Silverman learning style model to assist instructors in teaching engineering students. It has since gained popularity as a learning style paradigm and is increasingly being incorporated into online learning platforms. In this work, we employed the FSLSM [32] primarily because it is more comprehensive than other models that categorize learners into fewer styles [33]. The FSLSM offers an in-depth exploration of learner styles, making it a suitable choice [34]. It defines four key dimensions: perception of information, preferred input type, information processing, and comprehension of information. Within the perception dimension, there are two variants: sensing (concrete) and intuitive (abstract/imaginative) [20]. Input preferences fall into two categories: visual or verbal. Information processing occurs in two distinct ways: actively, through experimentation, and reflectively, through observation [35]. Understanding is also categorized into two approaches: sequential (following a specific order) and global (working without a fixed sequence) [36]. These dimensions and their respective values are depicted in Figure 1. Selecting one value from each of the four dimensions yields a total of 16 unique learning style combinations [37], as shown in Table 1. The FSLSM’s emphasis on learning inclinations is also noteworthy: it acknowledges that even a learner who strongly prefers a certain style may occasionally display behavior consistent with a different style [38].

Figure 1.

Overview of the FSLSM: its four key dimensions, and their associated values [39].

Table 1.

FSLSM learning styles: 16 combinations [40].
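
The count of 16 follows from choosing one of two poles in each of four dimensions (2^4 = 16). The short sketch below enumerates the combinations; the dictionary keys and ordering are illustrative and need not match the numbering used in Table 1.

```python
from itertools import product

# The four FSLSM dimensions and their two poles (Section 3.1.3).
FSLSM_DIMENSIONS = {
    "processing": ("active", "reflective"),
    "perception": ("sensing", "intuitive"),
    "input": ("visual", "verbal"),
    "understanding": ("sequential", "global"),
}


def fslsm_combinations():
    """Return every style combination: one pole chosen per dimension."""
    names = list(FSLSM_DIMENSIONS)
    return [dict(zip(names, poles)) for poles in product(*FSLSM_DIMENSIONS.values())]


combos = fslsm_combinations()
print(len(combos))  # 16
print(combos[0])    # {'processing': 'active', 'perception': 'sensing', 'input': 'visual', 'understanding': 'sequential'}
```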

3.1.4. Long Short-Term Memory

Long short-term memory (LSTM) networks, a specialized subset of recurrent neural networks (RNNs), excel at learning long-term dependencies, which sets them apart from conventional RNNs that primarily capture short-term dependencies [41]. LSTMs are often used in sequence-to-sequence architectures, which are particularly beneficial when the input and output sizes vary: an encoder summarizes the input information over a given number of time steps, and a decoder interprets this condensed representation to generate outputs [42]. In the configuration described in [43], the LSTM processes inputs of 4098 units per time step, with an encoder spanning 24 time steps and a decoder producing outputs of 10 units. In this paper, the LSTM configuration is aligned with the Felder–Silverman learning style model. The model’s original formulation had five dimensions (perception, input, organization, processing, and understanding); the four-dimension version used in this paper omits organization. In this way, the LSTM helps classify learners and offers a nuanced understanding of individual learning preferences, contributing to more tailored educational approaches.
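
To make the gating mechanism concrete, the following NumPy sketch implements one step of a standard LSTM cell (input, forget, and output gates plus a candidate cell state) and runs it over a toy sequence. The sizes, random weights, and gate ordering are assumptions chosen for illustration; this is not the 4098-unit configuration cited above or the paper’s trained model.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias,
    stacked in the order [input gate, forget gate, candidate, output gate].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])             # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])         # forget gate: how much old memory to keep
    g = np.tanh(z[2 * H:3 * H])     # candidate cell content
    o = sigmoid(z[3 * H:4 * H])     # output gate
    c_t = f * c_prev + i * g        # long-term memory update
    h_t = o * np.tanh(c_t)          # hidden state passed to the next step
    return h_t, c_t


def lstm_forward(xs, W, U, b):
    """Run the cell over xs of shape (T, D) and return the final hidden state."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h


rng = np.random.default_rng(0)
D, H, T = 8, 4, 24  # toy sizes; T = 24 echoes the 24 encoder steps mentioned above
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h_final = lstm_forward(rng.normal(size=(T, D)), W, U, b)
print(h_final.shape)  # (4,)
```

In a classifier, the final hidden state (or the full sequence of hidden states) would feed a dense layer that outputs scores over the learning style categories.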

3.2. Methodology

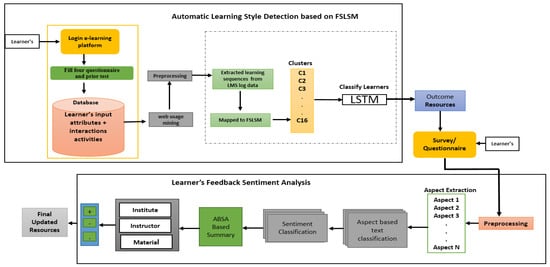

This paper aims to improve adaptability in e-learning systems through learning style identification and sentiment analysis. It addresses two critical issues: the inefficient identification of learning styles through traditional questionnaire-based methods, which are time-consuming and do not respond dynamically to individual learner preferences [44], and the lack of incorporation of learners’ sentiments and feedback, which are vital for refining e-learning content and improving learner experiences. To tackle these challenges, this paper introduces a novel hybrid approach that integrates automatic learning style identification and sentiment analysis. The approach dynamically identifies learners’ preferences and sentiments towards educational content, facilitating a more personalized and engaging learning experience, with the ultimate aim of improving learner engagement, satisfaction, and educational outcomes.

The workflow starts when learners access the e-learning platform. Initially, they answer four questions, each based on one FSLSM dimension [45], and provide personal information such as age, gender, and prior academic performance. Because grade classification is uncertain, fuzzy weights are used to evaluate prior academic performance; for example, a score of 75% might be seen as both somewhat good and somewhat excellent. As learners interact with learning objects on the platform, their behaviors are extracted using web usage mining and stored in the database, and their learning sequences are captured to identify their learning styles dynamically.
To use a supervised learning algorithm, the sequences of learner interactions extracted from log files must be converted into a suitable input format. A learner’s interaction sequence is characterized by the learning objects the learner engages with during a session; each sequence is described by sequence, session, and learner identifiers together with the list of learning objects accessed in that session. After extraction, the sequences are categorized according to the FSLSM, with each sequence associated with a distinct combination of learning styles. This paper uses a Transformer model with LSTM positional encoding to classify learners by learning style automatically, because the LSTM component handles sequential data and retains long-term dependencies better than the other algorithms considered. The classifier takes into account learner attributes including age, previous academic performance, gender, and learning sequences. This enables an automated process that determines students’ learning styles along the FSLSM dimensions without requiring them to complete the full 44-question FSLSM questionnaire, a process known for being long and tedious. Additionally, the approach uses the LDA algorithm to extract key topics from student feedback. As students engage with the e-learning platform, feedback is collected through surveys and questionnaires and preprocessed to ensure accurate and meaningful analysis. Using LDA, we identify prominent aspects within the feedback, shedding light on student preferences, concerns, and sentiments.
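
A minimal sketch of this conversion step, assuming each learning object is mapped to an integer id and every sequence is truncated or right-padded to a fixed length (the `pad_size` used in Section 3.4). The reserved padding and unknown ids and the helper names `build_vocab` and `encode` are hypothetical.

```python
PAD, UNK = 0, 1  # hypothetical reserved ids for padding and unseen learning objects


def build_vocab(sequences):
    """Assign an integer id to every learning object seen in training."""
    vocab = {"<pad>": PAD, "<unk>": UNK}
    for seq in sequences:
        for obj in seq:
            vocab.setdefault(obj, len(vocab))
    return vocab


def encode(seq, vocab, pad_size):
    """Map objects to ids, then truncate or right-pad to exactly pad_size ids."""
    ids = [vocab.get(obj, UNK) for obj in seq][:pad_size]
    return ids + [PAD] * (pad_size - len(ids))


train = [["video_intro", "diagram_grammar"], ["text_summary", "quiz_unit1", "forum_post"]]
vocab = build_vocab(train)
print(encode(["video_intro", "new_podcast"], vocab, pad_size=4))  # [2, 1, 0, 0]
```

Each encoded sequence would then be paired with its FSLSM label and the learner’s static attributes to form one training example.
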
Furthermore, the extracted aspects and sentiments are subjected to aspect-based sentiment analysis (ABSA), which assesses the sentiment associated with each aspect. For this purpose, we develop a novel algorithm for analyzing aspect-oriented feedback that determines the sentiment orientation (positive, negative, or neutral) of each piece of feedback. Its purpose is to categorize user feedback automatically by sentiment, helping to reveal overall user sentiment towards different aspects. This multifaceted analysis provides critical insights that guide improvements in the overall e-learning experience. A sentiment classification algorithm, such as a convolutional neural network (CNN), assigns sentiment labels to learner feedback, categorizing it as positive, negative, or neutral based on sentiment scores calculated using a sentiment lexicon. In the final stage of the workflow, the approach summarizes the ABSA results and sends them to educational institutions and instructors. This summary drives the updating of course materials and resources in line with the feedback and sentiments expressed by learners, paving the way for more engaging, adaptable, and satisfying e-learning experiences. The overall framework is shown in Figure 2.
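
The lexicon-based scoring step can be sketched with a small rule-based example. It is not the paper’s CNN classifier or its novel ABSA algorithm: the toy lexicon, the aspect keyword sets (standing in for LDA-derived aspects), the clause splitting, and the one-word negation rule are all assumptions chosen to show how aspect-level labels can be derived from lexicon scores.

```python
import re

# Hypothetical lexicon and aspect keywords, standing in for a real sentiment
# lexicon and for the aspects that LDA would extract from the feedback.
LEXICON = {"clear": 1, "helpful": 1, "engaging": 1, "boring": -1, "confusing": -1, "slow": -1}
NEGATIONS = {"not", "never", "no"}
ASPECTS = {
    "video": {"video", "videos", "lecture"},
    "quiz": {"quiz", "quizzes", "test"},
}


def label(score):
    """Map a summed polarity score to a sentiment orientation."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def aspect_sentiment(feedback):
    """Return {aspect: label} for every aspect mentioned in the feedback."""
    scores = {}
    # Split into clauses so each opinion attaches to the aspect in its own clause.
    for clause in re.split(r"[.,;!?]|\bbut\b", feedback.lower()):
        tokens = re.findall(r"[a-z']+", clause)
        score = 0
        for j, token in enumerate(tokens):
            polarity = LEXICON.get(token, 0)
            if polarity and j > 0 and tokens[j - 1] in NEGATIONS:
                polarity = -polarity  # "not helpful" counts as negative
            score += polarity
        for aspect, keywords in ASPECTS.items():
            if keywords & set(tokens):
                scores[aspect] = scores.get(aspect, 0) + score
    return {aspect: label(total) for aspect, total in scores.items()}


print(aspect_sentiment("The videos were clear but the quiz was not helpful"))
# {'video': 'positive', 'quiz': 'negative'}
```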

Figure 2.

Overall proposed framework.

3.3. Learning Style Identification

This study integrates an automatic identification of learning styles (AILS) module into our system. Drawing on the FSLSM framework, the module uses minimal input attributes and learning sequences extracted from learners’ activities. It relies on a tailored Transformer model that incorporates LSTM-based positional encoding and GloVe word vectors to classify students efficiently into their specific learning styles [46]. The Transformer model is first trained on a designated dataset and then predicts the learning style category for each instance. To ascertain students’ learning styles, the Learnenglish platform considers the following student attributes:

- Age: Age significantly influences learning styles; younger students prefer visual learning, while older students engage in detail-oriented, competitive, listening, and reading activities [47]. Younger students also favor peer collaboration, list-making, and direct communication [48]. Learnenglish treats learning styles as dynamic traits, recognizing that they can change with age and other characteristics. At their first interaction, students select one of four age groups: prepubescent (6–12 years old), younger (13–18 years old), average (19–45 years old), and elder (46+ years old).

- Gender: This is a significant factor affecting the recognition of learning styles, as indicated in previous studies [49]. Both male and female students can benefit from individualized instruction that aligns with their learning preferences to improve their acquisition of knowledge and development of skills [50]. For instance, male learners tend to favor auditory and reading activities, while female learners are more inclined toward composition activities. Learnenglish collects gender information during the learner’s initial interaction with options for “female” and “male”.

- Before the first interaction with Learnenglish, students are prompted to answer four questions, each aligned with one of the FSLSM dimensions [32]: understanding, perception, input, and processing. These questions, chosen for their relevance and representativeness, play a crucial role in identifying students’ learning styles. The four questions are as follows:

- When approaching a new subject, do you prefer (a) learning the material in clear steps and maintaining focus, or (b) developing a broad understanding of the connections between the topic and related subjects? This question addresses the understanding dimension.

- In instructional settings, do you prefer an emphasis on (a) specific data or factual material, or (b) theoretical concepts and overarching principles? This question addresses the perception dimension.

- When presented with data and information, do you tend to focus on (a) figures, charts, and graphs, or (b) oral or written explanations summarizing the findings? This question addresses the input dimension.

- Regarding learning preferences, do you prefer (a) collaborative study in a group setting, or (b) independent study alone? This question addresses the processing dimension.

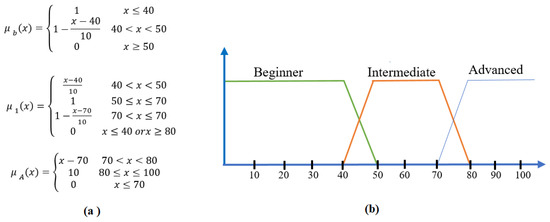

- Previous academic performance: This plays a crucial role in identifying learning styles [51], as it is linked to students’ preferred teaching methods. High-achieving students tend to favor more complex knowledge delivery methods, such as diagrams and mathematical equations, while lower-achieving students lean towards examples and images [52]. Prior academic performance is determined during the learner’s initial interaction with Learnenglish, when the learner answers preliminary questions on English-language domain knowledge. However, assessing academic performance is not straightforward, because it is fraught with uncertainty. For instance, a score of 70/100 cannot be categorized definitively as either intermediate or advanced. To solve this problem, fuzzy logic uses three fuzzy weights, beginner, intermediate, and advanced [53], each represented by a membership function of the student’s score x, that is, the score obtained by the student in a subject (Figure 3). The membership functions determine how well this score aligns with each of the predefined categories. The first case categorizes learner scores into advanced, intermediate, and beginner [54]; the second case offers a more detailed classification, from intermediate to advanced and beginner; and the third case refines the classification further, providing intermediate, intermediate to advanced, and beginner categories.
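
The registration inputs listed above can be turned into a fixed-length feature vector for the classifier and into an initial style guess that sidesteps the cold-start problem. The sketch below assumes one-hot encoding for age group and gender and assumes that answer (a) maps to the first pole of each dimension (sequential, sensing, visual, active); these encoding choices and the function names are hypothetical. Prior academic performance is left out because it is handled separately by the fuzzy weights.

```python
# Age groups, gender options, and question order as listed above.
AGE_GROUPS = [("prepubescent", 6, 12), ("younger", 13, 18), ("average", 19, 45), ("elder", 46, None)]
GENDERS = ["female", "male"]
QUESTION_DIMENSIONS = ["understanding", "perception", "input", "processing"]
# Assumed mapping of answers (a)/(b) to FSLSM poles for each question.
POLES = {
    "understanding": ("sequential", "global"),
    "perception": ("sensing", "intuitive"),
    "input": ("visual", "verbal"),
    "processing": ("active", "reflective"),
}


def age_group(age):
    """Return the name of the age group containing `age`."""
    for name, low, high in AGE_GROUPS:
        if age >= low and (high is None or age <= high):
            return name
    raise ValueError(f"age {age} is outside the supported groups")


def initial_profile(age, gender, answers):
    """One-hot encode the registration inputs and derive a cold-start style guess."""
    if gender not in GENDERS:
        raise ValueError(f"unknown gender option: {gender}")
    if len(answers) != 4 or any(a not in ("a", "b") for a in answers):
        raise ValueError("expected four answers, each 'a' or 'b'")
    group = age_group(age)
    features = [1.0 if name == group else 0.0 for name, _, _ in AGE_GROUPS]
    features += [1.0 if g == gender else 0.0 for g in GENDERS]
    style = {}
    for dim, answer in zip(QUESTION_DIMENSIONS, answers):
        pole = 0 if answer == "a" else 1
        features.append(float(pole))
        style[dim] = POLES[dim][pole]
    return features, style


features, style = initial_profile(21, "female", ["a", "b", "a", "a"])
print(features)  # [0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0]
print(style)     # {'understanding': 'sequential', 'perception': 'intuitive', 'input': 'visual', 'processing': 'active'}
```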

Figure 3. Fuzzy weighting for a student’s prior academic performance. (a) Mathematical Definitions for Membership Functions (b) Graphical Representation of Membership Functions.

The membership functions described above assign each student’s prior academic performance a degree of membership in each of the three fuzzy sets (Figure 3). These degrees range from 0 to 1, where 1 denotes full membership in the corresponding knowledge level. The three sets form a fuzzy partition of the score domain: for any score, the membership degrees across the three sets always sum to 1. The fuzzy sets and their membership function thresholds were determined by a panel of 16 experts from various domains: 10 computer science experts from the computer science departments of public universities and 6 pedagogical experts from the education departments of public universities. These experts, each with over 10 years of experience, provided descriptive assessments of student progress and performance levels and defined the success interval for each knowledge level.
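
A minimal sketch of such a fuzzy partition, using piecewise-linear membership functions. The breakpoints (beginner fading out between 40 and 60, advanced fading in between 70 and 85) are hypothetical placeholders, not the expert-defined thresholds of Figure 3; the point is only that overlapping ramps yield membership degrees that always sum to 1.

```python
def ramp_down(x, a, b):
    """1 for x <= a, 0 for x >= b, linear in between (requires a < b)."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def fuzzy_weights(score, beg_end=(40, 60), adv_start=(70, 85)):
    """Membership of a 0-100 score in the beginner, intermediate, and advanced sets.

    The breakpoints are hypothetical placeholders, not the expert-defined
    thresholds of Figure 3.
    """
    if beg_end[1] > adv_start[0]:
        raise ValueError("beginner and advanced ramps must not overlap")
    beginner = ramp_down(score, *beg_end)
    advanced = 1.0 - ramp_down(score, *adv_start)
    intermediate = 1.0 - beginner - advanced  # trapezoid between the two ramps
    return {"beginner": beginner, "intermediate": intermediate, "advanced": advanced}


for s in (30, 50, 75, 95):
    print(s, {k: round(v, 2) for k, v in fuzzy_weights(s).items()})
```

With these placeholders, a score of 75 belongs to the intermediate set with degree about 0.67 and to the advanced set with degree about 0.33, matching the intuition that such a score is partly intermediate and partly advanced.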

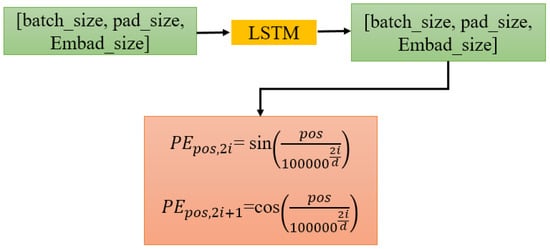

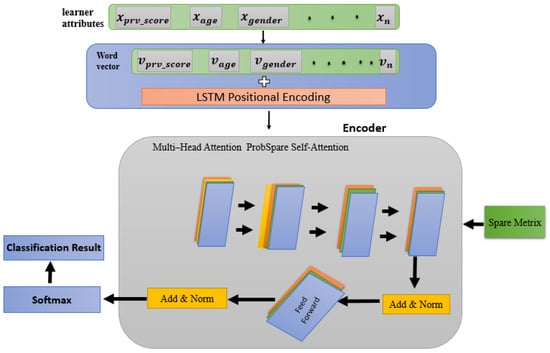

3.4. Learning Style Classification

A modified Transformer design, illustrated in Figure 4, is used to classify students’ learning styles. Its input words are represented with Global Vectors (GloVe), an unsupervised technique that addresses limitations of the traditional word-to-vector (Word2Vec) approach [55]. Word2Vec relies on a one-to-one correspondence between words and vectors learned from local context windows and therefore struggles with synonyms and polysemous terms; GloVe additionally exploits global co-occurrence statistics gathered over the whole corpus, which [56] reports helps it handle such words. This enriches the grammatical and semantic information in the word feature representation, making GloVe a valuable alternative to Word2Vec.

Figure 4.

Design of the LSTM positional encoding architecture in our research.

The total number of unique terms in our domain-specific vocabulary is denoted by $V$. Every word $i$ has a word vector $w_i$ and an associated bias term $b_i$. The number of times word $j$ occurs in the context of word $i$ is denoted $X_{ij}$, and $X_i = \sum_{k} X_{ik}$ is the total count of words that co-occur with word $i$. We use Equation (1) to compute the probability of word $j$ appearing in the context of word $i$:

$$P_{ij} = P(j \mid i) = \frac{X_{ij}}{X_i} \tag{1}$$

Co-occurrences are weighted by a weighting function $f(X_{ij})$. This function is often chosen as $f(x) = (x/x_{\max})^{\alpha}$ for $x < x_{\max}$ and $f(x) = 1$ otherwise, where $x_{\max}$ is the maximum co-occurrence count in the corpus [57] and $\alpha$ is a hyperparameter that governs the weighting and plays an important role in GloVe. GloVe's optimization objective is to minimize the loss function in Equation (2), subject to the constraint in Equation (3).

$$J = \sum_{i,j=1}^{V} f(X_{ij})\left(w_i^{\top}\tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij}\right)^2 \qquad (2)$$
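The quantities above can be sketched in NumPy. This is an illustration of the standard GloVe objective, not the study's training code; the defaults `x_max=100` and `alpha=0.75` are the values suggested in the original GloVe paper and are assumptions here, since the settings used in this work are not stated.

```python
import numpy as np


def cooccurrence_prob(X):
    """P_ij = X_ij / X_i, where X_i = sum_k X_ik (rows with no co-occurrences give NaN)."""
    X = np.asarray(X, dtype=float)
    return X / X.sum(axis=1, keepdims=True)


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting f(x) = (x / x_max)^alpha for x < x_max, and 1 otherwise; note f(0) = 0."""
    x = np.asarray(x, dtype=float)
    return np.where(x < x_max, (x / x_max) ** alpha, 1.0)


def glove_loss(X, W, W_tilde, b, b_tilde, x_max=100.0, alpha=0.75):
    """Weighted least-squares GloVe objective J.

    X: (V, V) co-occurrence counts; W, W_tilde: (V, d) word and context vectors;
    b, b_tilde: (V,) word and context biases.
    """
    i, j = np.nonzero(X)  # log X_ij is undefined for zero counts, and f(0) = 0 anyway
    pred = np.sum(W[i] * W_tilde[j], axis=1) + b[i] + b_tilde[j]
    err = pred - np.log(X[i, j])
    return float(np.sum(glove_weight(X[i, j], x_max, alpha) * err ** 2))
```

Summing only over nonzero entries is equivalent to the full double sum in Equation (2), because the weighting function assigns zero weight to zero counts.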

The same weighting scheme, with $x_{\max}$ and $\alpha$ controlling the weighting, is widely adopted [58]. We further enrich the input sentence representation with positional encoding obtained through a long short-term memory (LSTM) network, as shown in Figure 4. This allows the model to take the order and position of words into account, which is crucial in text classification. The input data have the shape “[batch_size, pad_size, Embad_size]”, where “batch_size” is the number of sequences processed in each batch, “pad_size” is the sequence length after padding to a uniform length, and “Embad_size” is the dimension of the word embeddings. The positional encodings $PE_{(pos,2i)}$ and $PE_{(pos,2i+1)}$ provide information about the position of each word in the input sentence. They are computed with the sine and cosine functions, respectively, from the position $pos$ of the word in the sequence and the embedding dimension $d$.
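A minimal sketch of sinusoidal positional encodings for inputs of shape [batch_size, pad_size, Embad_size] follows. It assumes the standard formulation from the original Transformer, $PE_{(pos,2i)} = \sin(pos/10000^{2i/d})$ and $PE_{(pos,2i+1)} = \cos(pos/10000^{2i/d})$; the base 10000 is taken from that paper and is an assumption here, and the LSTM stage of the proposed design is not shown.

```python
import numpy as np


def sinusoidal_positional_encoding(pad_size: int, emb_size: int) -> np.ndarray:
    """Return a (pad_size, emb_size) matrix with sin on even and cos on odd dimensions."""
    pos = np.arange(pad_size)[:, None]            # word positions 0 .. pad_size-1
    two_i = np.arange(0, emb_size, 2)[None, :]    # even dimension indices 2i
    angles = pos / np.power(10000.0, two_i / emb_size)
    pe = np.zeros((pad_size, emb_size))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles[:, : emb_size // 2])  # slicing also handles odd emb_size
    return pe
```

Because the encoding has shape (pad_size, emb_size), NumPy broadcasting adds it to every sequence in a batch, e.g. `embeddings + sinusoidal_positional_encoding(pad_size, emb_size)` for `embeddings` of shape (batch_size, pad_size, emb_size).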

LSTM Positional Encoding

Because the Transformer lacks inherent temporal information, it has difficulty exploiting both preceding and following context effectively [59]. LSTMs often handle temporally sensitive problems better, because their dedicated hidden memory units retain input information over long spans [60]. We therefore place an LSTM layer before the positional information is derived; the procedure is illustrated in Figure 5. For the dot-product attention to be computed efficiently, the attention probability distributions of the relevant queries must be non-uniform [61]. Using a Kullback–Leibler (KL) divergence-based measure of query sparsity, we identify the most important queries and disregard the rest. Equation (4) gives the probability formulation of this max-mean measurement.

The measurement uses “Log-Sum-Exp” to estimate the peak of each query's attention scores and compares it with their average. A larger measurement value indicates a more concentrated, less uniform attention probability distribution, and hence a more important query. This selection filters out less important queries and yields a sparse query matrix $\bar{Q}$ that contains only the most relevant rows of $Q$. Sampling only a subset of keys when computing the dot products further reduces the computational complexity. The dot products then yield the confidence-score matrix P, which highlights the positions that receive the most attention; higher confidence scores indicate stronger associations. Equation (5) gives the formula for P.
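The query selection described above resembles the max-mean measurement of Informer's ProbSparse attention (Zhou et al., 2021). The sketch below assumes that formulation: $M(q_i, K) = \mathrm{LSE}_j(s_{ij}) - \mathrm{mean}_j(s_{ij})$ with $s_{ij} = q_i k_j^{\top}/\sqrt{d}$, which equals the KL divergence between a uniform distribution and the query's attention distribution plus the constant $\log L_K$. Equation (4) of this work may differ in detail, and the function names and the `sample_k` parameter are hypothetical.

```python
import numpy as np


def query_sparsity(Q, K, sample_k=None, seed=0):
    """Max-mean sparsity score per query: logsumexp_j(s_ij) - mean_j(s_ij).

    A uniform attention distribution gives the minimum value, log(L_K); peaked
    distributions score higher. If sample_k is given, only a random subset of
    keys is scored, which reduces the number of dot products.
    """
    d = Q.shape[1]
    if sample_k is not None:
        rng = np.random.default_rng(seed)
        K = K[rng.choice(K.shape[0], size=sample_k, replace=False)]
    s = Q @ K.T / np.sqrt(d)                                    # scaled scores (L_Q, L_K)
    s_max = s.max(axis=1, keepdims=True)
    lse = s_max[:, 0] + np.log(np.exp(s - s_max).sum(axis=1))   # numerically stable log-sum-exp
    return lse - s.mean(axis=1)


def top_queries(Q, K, u, **kwargs):
    """Indices of the u queries with the largest sparsity score (the rows kept in Q-bar)."""
    return np.argsort(-query_sparsity(Q, K, **kwargs))[:u]
```

A query whose scores are all equal attends uniformly and receives the lowest score, so it is the first to be dropped.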

Figure 5.

The proposed framework for classifying learning styles.

A top-n selection then extracts the relevant information from P according to the confidence scores. For each row of P, we keep the n highest scores, forming a pool of key indices, where n is set to c. The remaining values are set to zero, so the model retains only the most important scores while preserving the essential information. Applying the softmax function then converts P into a sparse attention matrix, a step that is also an integral part of the regularization procedure. Equation (6) gives the final result, S.
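The top-n step can be sketched as follows. Here the discarded scores are masked with $-\infty$ before the softmax so that they receive exactly zero weight afterwards, which is one common reading of "set to zero"; this masking choice, the value matrix `V`, and the output $S = \mathrm{softmax}(P_{\text{masked}})V$ are assumptions rather than the exact form of Equation (6).

```python
import numpy as np


def top_n_sparse_attention(P, V, n):
    """Keep the n largest scores in each row of P, softmax them, and apply the weights to V."""
    P = np.asarray(P, dtype=float)
    keep = np.argsort(-P, axis=1)[:, :n]              # column indices of the top-n scores per row
    masked = np.full_like(P, -np.inf)
    np.put_along_axis(masked, keep, np.take_along_axis(P, keep, axis=1), axis=1)
    # Row-wise softmax; exp(-inf) = 0, so masked entries get exactly zero weight.
    masked -= masked.max(axis=1, keepdims=True)
    weights = np.exp(masked)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ V, weights
```

Each row of `weights` is a probability distribution with at most n nonzero entries, so the attention output draws only on the highest-scoring positions.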

Using a mask, the model applies the Kullback–Leibler (KL) divergence-based measurement to filter and assess attention points, thereby extracting and refining the attention. Figure 5 shows a diagram of this process.

3.5. Final Output Layer

The feature vector from the previous layer is passed to a softmax classifier. The classifier's output probability for class j is the probability that the text belongs to that category, as given in Equation (7).

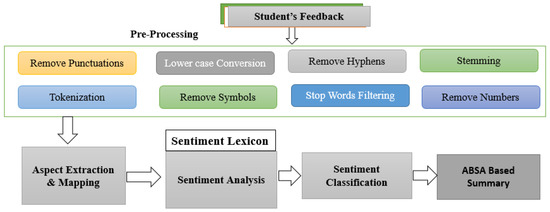

3.6. Aspect-Based Sentiment Analysis

After the learners' learning styles were identified, learning materials were provided to each learner according to their individual preferences, and feedback was collected from each learner at the same time to further improve the quality of the materials. We developed an automated and robust system for analyzing the textual student feedback collected during the teaching–learning process. The system tags and processes the textual data and produces concise annotated results, including aspect terms and their sentiments, which are presented to the institute's management and study board and then forwarded to the relevant authorities for analysis and decision-making. To deliver results promptly, the system is trained on offline data. In this way, the system turns student feedback into meaningful information about students' experiences. The framework of our approach is illustrated in Figure 6.

Figure 6.

Proposed framework for aspect-based sentiment classification.

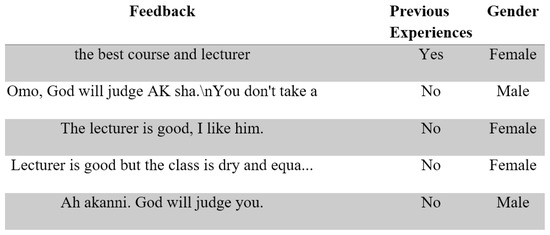

3.7. Dataset

The dataset used in this paper is publicly available and originates from a prominent university in northern India, where it serves as the basis for generating institutional reports from student feedback [62]. It covers six categories, including teaching quality, course content, lab experiences, library facilities, and the environment of the institute. Each category is represented by two columns, so that each feedback entry can be assigned a sentiment label: 0 (neutral), 1 (positive), or −1 (negative). The dataset provides a comprehensive view of student opinions on various facets of the university experience; a sample is shown in Figure 7.

Figure 7.

Dataset sample used in our research.

3.8. Preprocessing

The data were cleaned during preprocessing [63] to enable efficient computation. In the initial stage of text preprocessing, our approach applies a series of essential steps to ensure the consistency and quality of the textual data for subsequent analysis. These steps are as follows.

- Word Tokenization: Word tokenization segments the text into individual words or tokens, so that each unit is typically a word [64]. For example, the sentence “The quick brown fox” is transformed into the tokens [“The”, “quick”, “brown”, “fox”]. Tokenization is essential because it transforms unstructured text into a structured format that computers can process. It enables subsequent analysis, such as counting word frequencies, determining sentence structure, and identifying significant terms.

- Lowercase Conversion: Lowercase conversion involves changing all the tokens in the text to lowercase [65]. For instance, “Word” becomes “word”. This step ensures uniformity in text analysis by treating words with different letter cases as identical. Lowercasing simplifies text analysis by reducing the influence of letter case variations, allowing for more accurate word matching and counting.

- Removal of Numbers and Punctuation: This step removes numerical characters and punctuation marks from the text [66]. For example, transforming “The price is $100” into “The price is” eliminates the number and punctuation. This simplification removes non-textual elements and thus makes the text more amenable to the extraction of meaningful information.

- Exclusion of Symbols and Hyphens: Symbols like “@” or “#” and hyphens are excluded from the text. These characters often do not carry significant linguistic meaning and can interfere with text analysis [67]. Excluding symbols and hyphens maintains the focus on the textual content, reducing noise and distractions from non-textual elements.

- Stop Word Removal: Stop words are common, uninformative words such as “of”, “they”, “is”, and “on”. In this step, these words are removed from the text. Removing stop words is critical for improving feature extraction because it reduces the influence of frequently occurring but less meaningful words [68]. This enhances the quality of text analysis and gives more attention to content-rich terms.

- Stemming and Lemmatization: Stemming and lemmatization are techniques to reduce words to their base forms. Stemming typically removes suffixes, resulting in a root form (e.g., “running” → “run”) [69]. Lemmatization focuses on semantic relevance, ensuring that words are reduced to their dictionary form (e.g., “better” → “good”).
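The first five steps above can be sketched in plain Python. The stop-word list is a small hypothetical sample rather than the list used in this work. Stemming and lemmatization are omitted; in practice they are often delegated to a library such as NLTK (for example its `PorterStemmer` and `WordNetLemmatizer` classes).

```python
import re

# Hypothetical, deliberately small stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "of", "they", "is", "on", "and", "was", "were", "to", "in"}


def preprocess(text: str) -> list:
    """Tokenize, lowercase, and drop numbers, punctuation, symbols, hyphens, and stop words."""
    # Hyphens and symbols such as "@" or "#" become spaces, so "lab-sessions" splits into two words.
    text = re.sub(r"[-@#$%&*]", " ", text)
    # Tokenization: keep runs of letters only, which also discards digits and punctuation.
    tokens = re.findall(r"[A-Za-z]+", text)
    # Lowercase conversion.
    tokens = [token.lower() for token in tokens]
    # Stop-word removal.
    return [token for token in tokens if token not in STOP_WORDS]
```

For instance, `preprocess("The price is $100!")` yields `['price']`.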

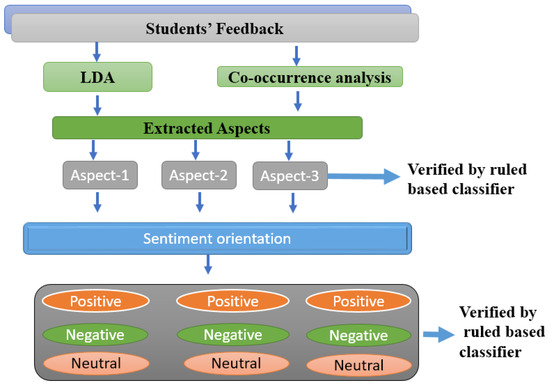

3.9. Aspect Extraction and Mapping

Our aspect modeling framework has two layers, as shown in Figure 8. The first layer extracts aspects using both LDA in its various forms [70] and co-occurrence analysis, which together identify relevant topics and their associated terms. A rule-based algorithm then checks each aspect derived from these topics and terms to confirm that it is supported by both LDA and the co-occurrence analysis. The second layer extracts the sentiment of each comment and labels it by correlating the comment with predefined polarities, thereby mapping sentiments to the extracted aspects.

Figure 8.

Multi-level framework for aspect extraction and labeling.

We use LDA to extract aspect terms from the unlabeled textual feedback of students. An unsupervised LDA model discovers the hidden aspects in student feedback in the same way that it discovers latent topics in documents. In LDA's generative process, each document is a mixture over latent topics, and each topic is characterized by topic-specific word probabilities. This allowed us to uncover hidden aspects of student feedback effectively [71].

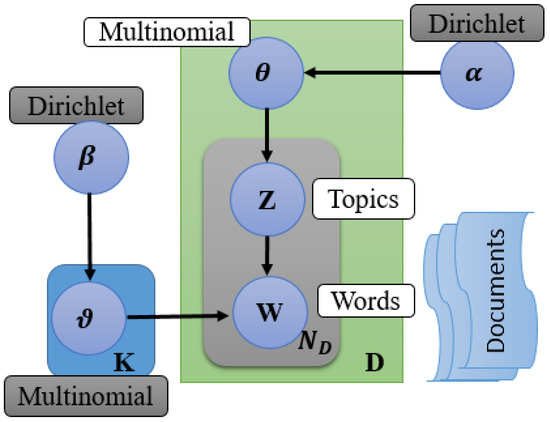

The generative process of LDA is shown in Figure 9, which presents an overview of its key elements. Here, ‘D’ denotes the corpus of documents, ‘z’ denotes topics, and ‘w’ denotes the words in these documents. In this representation, ‘α’ and ‘β’ signify Dirichlet distributions, while ‘θ’ and ‘φ’ denote multinomial distributions.

Figure 9.

The architectural illustration of the LDA topic modeling approach.

Equation (8) gives the LDA probability model. The left-hand side is the probability of a document as a mixture of latent topics, and the right-hand side is the product of four factors. The first two factors serve as the foundation of LDA, while the latter two act as its driving mechanisms. Each factor is a probability, and their product gives the final document probability. Specifically, the first factor on the right-hand side corresponds to topics and follows a Dirichlet distribution, and the second represents words and also follows a Dirichlet distribution. Likewise, the third factor is associated with topics and the fourth with words; both follow multinomial distributions.
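For reference, the LDA joint distribution is commonly written as follows, with the four factors in the order just described: $\theta_d \sim \mathrm{Dir}(\alpha)$ gives the topic proportions of document d, $\varphi_t \sim \mathrm{Dir}(\beta)$ gives the word distribution of topic t, and the topic assignments $z_{d,n}$ and words $w_{d,n}$ are drawn from multinomial distributions. The index names (D documents, $N_d$ words in document d, T topics) are assumptions of this sketch, which may differ in notation from Equation (8).

$$
p(\mathbf{w}, \mathbf{z}, \boldsymbol{\theta}, \boldsymbol{\varphi} \mid \alpha, \beta)
= \prod_{d=1}^{D} p(\theta_d \mid \alpha)
  \prod_{t=1}^{T} p(\varphi_t \mid \beta)
  \prod_{d=1}^{D} \prod_{n=1}^{N_d} p(z_{d,n} \mid \theta_d)\, p(w_{d,n} \mid \varphi_{z_{d,n}})
$$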

We implemented LDA with Gensim and customized it for our dataset. We extensively tuned the hyperparameters α and β and optimized the number of topics T to match the characteristics of the student feedback. The hyperparameter settings tailored to our dataset are listed in Table 2.
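Gensim's `LdaModel` fits LDA with online variational Bayes. To show what is being estimated without depending on Gensim, the following sketch instead uses collapsed Gibbs sampling, a different but standard inference method for the same model. Here `alpha`, `beta`, and `num_topics` play the roles of α, β, and T, but their default values are arbitrary assumptions, not the settings in Table 2.

```python
import numpy as np


def lda_gibbs(docs, vocab_size, num_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of documents, each a list of word ids in [0, vocab_size).
    Returns theta (documents x topics) and phi (topics x words); each row is a distribution.
    """
    rng = np.random.default_rng(seed)
    n_dk = np.zeros((len(docs), num_topics))   # words in document d assigned to topic k
    n_kw = np.zeros((num_topics, vocab_size))  # times word w is assigned to topic k
    n_k = np.zeros(num_topics)                 # total words assigned to topic k
    z = [rng.integers(num_topics, size=len(doc)) for doc in docs]  # random initial topics
    for d, doc in enumerate(docs):
        for w, k in zip(doc, z[d]):
            n_dk[d, k] += 1
            n_kw[k, w] += 1
            n_k[k] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the current assignment from the counts.
                n_dk[d, k] -= 1
                n_kw[k, w] -= 1
                n_k[k] -= 1
                # Full conditional p(z = k | all other assignments), up to a constant.
                p = (n_dk[d] + alpha) * (n_kw[:, w] + beta) / (n_k + vocab_size * beta)
                k = rng.choice(num_topics, p=p / p.sum())
                z[d][i] = k
                n_dk[d, k] += 1
                n_kw[k, w] += 1
                n_k[k] += 1
    theta = (n_dk + alpha) / (n_dk + alpha).sum(axis=1, keepdims=True)
    phi = (n_kw + beta) / (n_kw + beta).sum(axis=1, keepdims=True)
    return theta, phi
```

In Gensim, the corresponding knobs are the `num_topics`, `alpha`, and `eta` arguments of `LdaModel`, where `eta` plays the role of β.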

Table 2.

LDA hyperparameters.

3.10. Aspects Mapping

To validate the aspects extracted by LDA, we developed and applied Algorithm 1. The algorithm systematically reviews each student's feedback and matches it against aspect terms and their synonyms; the synonyms were collected manually and organized into separate files [72,73]. Part-of-speech (POS) tagging was also used to link specific aspect terms to the related comments, as exemplified in Table 3. The matched terms are associated with their corresponding feedback and recorded in the “extracted aspects” column.
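The synonym-matching step can be sketched as a set intersection over preprocessed tokens. The aspect categories follow the teacher, course, and university groups reported for Table 10, but the synonym sets and function name are hypothetical stand-ins for the manually curated files, and POS tagging (for example with NLTK's `pos_tag`) is not shown.

```python
# Hypothetical aspect -> synonym sets; the manually curated synonym files are not reproduced.
ASPECT_SYNONYMS = {
    "teacher": {"teacher", "instructor", "lecturer", "faculty"},
    "course": {"course", "syllabus", "content", "curriculum", "subject"},
    "university": {"university", "campus", "library", "lab", "facilities"},
}


def extract_aspects(tokens, aspect_synonyms=ASPECT_SYNONYMS):
    """Return, in alphabetical order, every aspect whose term or a synonym occurs in the tokens."""
    present = set(tokens)
    return sorted(aspect for aspect, synonyms in aspect_synonyms.items() if present & synonyms)
```

For example, the tokens of "the lecturer explained the syllabus" map to `['course', 'teacher']`.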

Table 3.

Part-of-speech (POS) tagging.

When combined with feature modification techniques, the algorithm improves accuracy, indicating that it performs well on real-world data. In addition, multi-core parallel processing improves efficiency and reduces processing time, further supporting the algorithm's suitability for practical applications.

Algorithm 1 Sentiment Extraction and Mapping

3.11. Extracting and Mapping of Sentiments

We designed and evaluated a sentiment classification algorithm (Algorithm 1) that assigns sentiment labels according to sentiment orientation, using a sentiment lexicon [74] containing 2006 positive and 4783 negative expressions. We computed a sentiment score for each feedback comment with this lexicon and, based on these scores, classified each comment as positive, negative, or neutral.
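A minimal sketch of lexicon-based scoring follows, assuming the simplest rule: the score is the number of positive terms minus the number of negative terms, and its sign gives the label. The tiny word sets stand in for the full lexicon, and the zero threshold is an assumption. The labels follow the dataset's scheme (1 positive, −1 negative, 0 neutral).

```python
# Tiny hypothetical lexicons; the lexicon used in this work has 2006 positive and 4783 negative entries.
POSITIVE = {"good", "great", "excellent", "helpful", "clear", "interesting"}
NEGATIVE = {"bad", "poor", "boring", "confusing", "late", "unhelpful"}


def sentiment_score(tokens):
    """Number of positive tokens minus number of negative tokens."""
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)


def sentiment_label(tokens):
    """Map the score's sign to 1 (positive), -1 (negative), or 0 (neutral)."""
    score = sentiment_score(tokens)
    return (score > 0) - (score < 0)
```

With this rule, a comment containing one positive and one negative term, such as "good but confusing", is labeled neutral.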

3.12. Sentiment Classification

This study used a two-layer convolutional neural network (CNN) for sentiment classification. CNNs operate only on fixed-length inputs, so each input is standardized to length ‘l’ by truncating longer sentences or padding shorter ones with zeros. Here, $x$ denotes an input instance and $x_i$ denotes the i-th word within that instance.

Layer 0: This initial layer maps words to Word2Vec embeddings, representing each word as a low-dimensional vector $\mathbf{x}_i \in \mathbb{R}^k$, where ‘k’ is the dimension of the word vectors. These word vectors are concatenated to form the input instance $\mathbf{x}_{1:l} = \mathbf{x}_1 \oplus \mathbf{x}_2 \oplus \cdots \oplus \mathbf{x}_l$.

Layer 1: In this layer, a one-dimensional convolution with filter weights $\mathbf{m}$ of width ‘n’ is applied. For each word vector $\mathbf{x}_i$, a feature is generated from the window of n words starting at position i, capturing local contextual information. The output is computed with the ReLU activation function:

$$c_i = \mathrm{ReLU}\left(\mathbf{m} \cdot \mathbf{x}_{i:i+n-1} + b\right)$$

where b is a bias term.

Layer 2: Similar to layer 1, this layer includes a one-dimensional convolution operation followed by max pooling.

Layer 3: The output from layer 2 is passed through a fully connected layer with ReLU activation and dropout regularization.

Layer 4: The final layer is another fully connected layer with sigmoid activation for classification.

This structure is a CNN with two convolutional layers. The model is first trained on source-domain data and then fine-tuned on target-domain data to improve its performance and adapt it to the specific domain.
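The layer stack above can be traced with a NumPy forward pass. This is an inference-only sketch with random, untrained weights: all sizes (embedding dimension, filter width, number of filters, hidden units, and three sigmoid outputs) are illustrative assumptions, token id 0 is assumed to be reserved for padding, and the source-to-target fine-tuning is not shown.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv1d(x, w, b):
    """'Valid' 1-D convolution over time. x: (l, k_in), w: (n, k_in, k_out), b: (k_out,)."""
    n = w.shape[0]
    windows = np.stack([x[t:t + n] for t in range(x.shape[0] - n + 1)])  # (l-n+1, n, k_in)
    return np.einsum("tnc,nco->to", windows, w) + b


def init_params(vocab_size, k=8, n=3, filters=16, hidden=32, outputs=3, seed=0):
    """Random (untrained) weights; every size here is an illustrative assumption."""
    rng = np.random.default_rng(seed)
    p = {
        "emb": rng.normal(0, 0.1, (vocab_size, k)),
        "w1": rng.normal(0, 0.1, (n, k, filters)), "b1": np.zeros(filters),
        "w2": rng.normal(0, 0.1, (n, filters, filters)), "b2": np.zeros(filters),
        "w3": rng.normal(0, 0.1, (filters, hidden)), "b3": np.zeros(hidden),
        "w4": rng.normal(0, 0.1, (hidden, outputs)), "b4": np.zeros(outputs),
    }
    p["emb"][0] = 0.0  # id 0 is reserved for padding and maps to a zero vector
    return p


def forward(token_ids, p, l=20):
    ids = (list(token_ids) + [0] * l)[:l]                  # zero-pad or truncate to length l
    x = p["emb"][ids]                                      # Layer 0: (l, k) word vectors
    h1 = relu(conv1d(x, p["w1"], p["b1"]))                 # Layer 1: convolution + ReLU
    h2 = relu(conv1d(h1, p["w2"], p["b2"])).max(axis=0)    # Layer 2: convolution + max pooling over time
    h3 = relu(h2 @ p["w3"] + p["b3"])                      # Layer 3: dense + ReLU (dropout inactive at inference)
    return 1.0 / (1.0 + np.exp(-(h3 @ p["w4"] + p["b4"])))  # Layer 4: dense + sigmoid
```

Stacking the (l, k) embedding matrix is equivalent to the concatenation in Layer 0, and max pooling over time turns the variable-position features into a fixed-size vector for the dense layers.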

4. Experiments and Results Analysis

This section presents the experimental results and their interpretation, compares the proposed approach with existing models, and draws conclusions from the experiments.

4.1. Evaluation Metrics

Loss metrics and a confusion matrix were used to evaluate the effectiveness of the proposed methodology and the accuracy of the classification algorithms used in this study. A confusion matrix tabulates actual versus predicted classes, which allows the model's performance to be analyzed for each category. For a confusion matrix over ‘n’ distinct classes, we can determine the counts of true positives (TTPs), true negatives (TTNs), false positives (TFPs), and false negatives (TFNs) for each category.

False negatives (FNs) represent the count of instances that the classifier incorrectly labels as negative when they are actually positive. False positives (FPs) indicate the instances that the classifier mistakenly predicts as positive, though they are, in reality, negative. True negatives (TNs) denote the instances that are negative and are accurately classified by the classifier as such. True positives (TPs) are the positive instances that the classifier rightly identifies as positive.

To assess the effectiveness of a classification model, specific metrics can be calculated, including accuracy (A), precision (P), recall (R), and the F1 score (F1), each serving as critical indicators of validation performance.

Precision (P): The proportion of correctly predicted positive observations among all predicted positives, $P = TP/(TP + FP)$.

Recall (R): The proportion of correctly predicted positive observations among all actual positives, $R = TP/(TP + FN)$.

Accuracy (A): The proportion of all predictions that the model classified correctly, $A = (TP + TN)/(TP + TN + FP + FN)$.

F1 score: The harmonic mean of precision and recall, $F1 = 2PR/(P + R)$, which balances the two metrics.
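For more than two classes, these metrics are computed per class from the confusion matrix and then averaged. The sketch below assumes macro averaging (an unweighted mean over classes); whether this work uses macro or weighted averaging is not stated. Classes with no predicted or actual instances would cause a division by zero and are not handled.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy and macro-averaged precision, recall, and F1.

    cm[i, j] is the number of instances of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class c but actually another class
    fn = cm.sum(axis=1) - tp   # actually class c but predicted as another class
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return {"accuracy": accuracy, "precision": precision.mean(),
            "recall": recall.mean(), "f1": f1.mean()}
```

For the two-class matrix `[[5, 1], [2, 2]]`, seven of ten instances lie on the diagonal, so the accuracy is 0.7.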

4.2. Experiment and Results

This section demonstrates the effectiveness of the proposed hybrid deep learning approach in enhancing e-learning systems. The approach has two key components: automatic learning style identification and sentiment analysis. To assess the proposed learning style identification method, we conducted an experiment with 126 students. First, when students logged into an LMS such as Sup'Management Group, a free and open-source platform created to help students and educators advance their learning experiences, they answered four questions aligned with the FSLSM dimensions and the full 44-question Index of Learning Styles (ILS) based on the FSLSM, and provided basic information such as age and gender. As part of this tailored approach, we also administered a preliminary test to evaluate each student's prior academic achievement and analyzed the test scores with fuzzy weight logic to determine each student's knowledge level accurately. Second, we gathered 1235 sequences from the e-learning platform that reflect learners' behaviors, with each student contributing between 1 and 35 sequences. The resulting dataset comprises the students' demographic information (such as age and gender), their prior academic performance, their responses to the four FSLSM-aligned questions, and the 1235 learning sequences; these elements serve as the inputs from which our algorithm predicts individual learning styles. The experiments show that the proposed model accurately categorizes students into FSLSM learning styles, as validated by accuracy, precision, recall, and F1 score. The approach achieved a learning style prediction accuracy of 95.54%, a precision of 95.54%, a recall of 95.55%, and an F1 score of 95.54%. For sentiment analysis, student feedback was further explored with LDA topic modeling.
This uncovers key aspects and sentiments within the feedback, giving a nuanced picture of student opinions on different facets of the e-learning system. Sentiment classification was evaluated with a two-layer convolutional neural network (CNN), which achieved a peak accuracy of 93.81%.

4.3. Learning Style Identification

The first experiment demonstrates the reliability of the proposed approach in determining learning types from learning behaviors relevant to various courses. We randomly selected a course and 126 students and gathered data to identify their learning styles according to the FSLSM dimensions. The test sequences are categorized into eight FSLSM categories: verbal, sequential, visual, active, reflective, sensing, intuitive, and global. The final clusters are obtained after 210 iterations, and the clustering outcome is shown in Table 4.

Table 4.

The number of sequences generated by algorithms for each cluster.

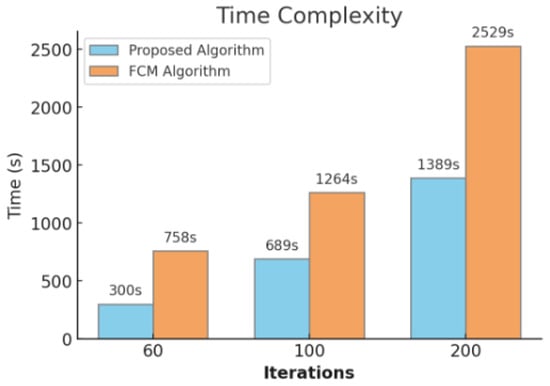

The sequences are labeled with the 16 FSLSM categories. Because some sequences belong to more than one cluster on the basis of their feature values, the total number of sequences clustered by the LSTM algorithm is 1298, which exceeds the 1235 input sequences. In our analysis, n corresponds to the number of data points, k to the number of clusters, c to the number of dimensions, and i to the number of iterations (up to 200). The results of our computation and a comparison of the time complexity of the two techniques are shown in Table 5.

Table 5.

The time complexity of the proposed approach and FCM (Fuzzy C-Means) based on the number of iterations.
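To make the roles of n, k, c, and i concrete, the following is a minimal sketch of the standard Fuzzy C-Means baseline (not the proposed method). It assumes the usual fuzzifier m = 2 and random initial memberships. In this vectorized form, each iteration costs O(nkc) for the centers and distances plus O(nk²) for the membership update, so the total time grows linearly with the number of iterations i.

```python
import numpy as np


def fuzzy_c_means(X, k, m=2.0, iters=300, tol=1e-6, seed=0):
    """Standard fuzzy c-means. X: (n, c) data; returns centers (k, c) and memberships U (n, k)."""
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], k))
    U /= U.sum(axis=1, keepdims=True)                   # each point's memberships sum to 1
    for _ in range(iters):
        W = U ** m                                       # fuzzified memberships
        centers = (W.T @ X) / W.sum(axis=0)[:, None]     # membership-weighted means, O(n k c)
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)  # (n, k), O(n k c)
        dist = np.fmax(dist, 1e-12)                      # avoid division by zero at a center
        # u_ij = 1 / sum_l (d_ij / d_il)^(2/(m-1)); the (n, k, k) ratio tensor costs O(n k^2)
        ratio = (dist[:, :, None] / dist[:, None, :]) ** (2.0 / (m - 1.0))
        U_new = 1.0 / ratio.sum(axis=2)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return centers, U
```

The returned memberships are soft, so a point can belong partially to several clusters; taking `U.argmax(axis=1)` gives a hard assignment when one is needed.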

Table 6 shows the cross-validation outcomes for every dimension and algorithm. Although the proposed method achieves the highest precision, recall, and accuracy scores, its cross-validation score is only 78%. Other algorithms with high cross-validation scores are logistic regression, random forest, and linear discriminant analysis. How will this help researchers and practitioners? Discussing two significant aspects of this research helps answer this question. One is the difference and similarity in consistency between manual marking and machine prediction, as shown in Table 7.

Table 6.

Results of each dimension’s cross-validation and different algorithms.

Table 7.

The degree of consistency between machine prediction and manual marking about Gardner’s multiple intelligence theory.

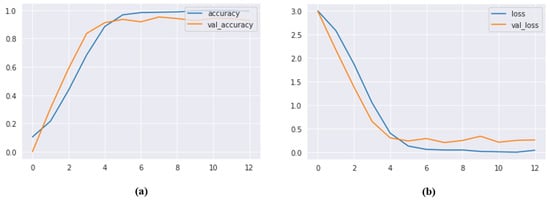

4.4. Learning Style Classification

Understanding students' diverse learning styles is fundamental to creating tailored educational experiences. To this end, we combined the Transformer architecture with GloVe (Global Vectors for Word Representation) word embeddings and assessed the performance of the designed Transformer with accuracy and loss metrics. The model achieved an accuracy of 95.54%, as shown in Figure 10. The confusion matrices in Table 8 give detailed insight into the classification accuracy for each learning style cluster. These results support the effectiveness of the proposed approach and its potential for personalized education delivery.

Figure 10.

Performance evaluation based on accuracy and loss metrics: (a) accuracy and validation accuracy; (b) training and validation loss.

Table 8.

Confusion matrices for the classification of student learning styles.

We also evaluated the proposed model's performance for each learning style, as shown in Table 9. This per-category analysis shows the model's accuracy within specific learning style categories, supporting its effectiveness in personalized learning assessment.

Table 9.

Evaluation of performance for each learning style classification.

4.5. Aspect-Based Sentiment Analysis

We used LDA topic modeling to identify topics in the feedback provided by learners. The results are shown in Table 10: the first column contains the student feedback, and the second column lists the aspect terms, organized into three categories: teacher, course, and university. These categories were determined through LDA analysis using the id2word vocabulary built from our corpus. Table 11 presents the results of applying Algorithm 1 for sentiment orientation and mapping, showing its effectiveness in sentiment classification. Each sentiment label was examined by two subject matter experts, one specializing in English linguistics and the other in text mining. To score each feedback comment, we used a sentiment dictionary that includes 2006 positive terms and 4783 negative terms [75], and we then labeled each comment as positive, negative, or neutral according to its score. Our sentiment analysis thus relies on a tailor-made rule-based classifier that assigns each comment to the positive, negative, or neutral category depending on the detected polarity. Finally, Table 12 presents the extracted topics together with their keywords, topic contribution percentages, illustrative text, and the coherence scores obtained with LDA in Gensim [76].

Table 10.

Student feedback and aspect extraction.

Table 11.

The outcomes of proposed Algorithm 1 for sentiment analysis.

Table 12.

Extracted topics and their corresponding terms obtained through LDA.

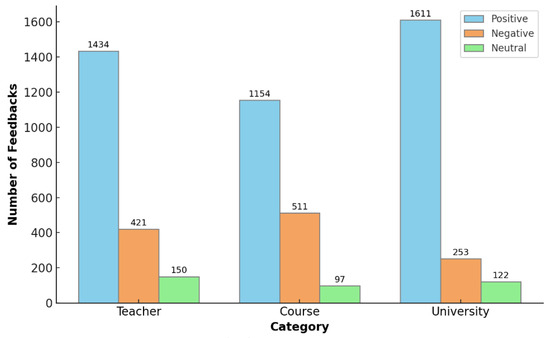

Figure 11 indicates the sentiment orientation concerning feedback aspects.

Figure 11.

Sentiment orientation based on extracted aspects.

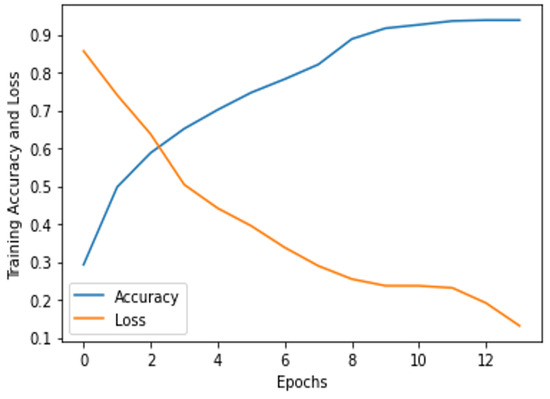

4.6. Sentiment Classification

We used a two-layer convolutional neural network to classify the sentiment of student feedback in e-learning systems. After tuning the hyperparameters for this classification task, the approach achieved a peak accuracy of 93.81%. Figure 12 shows the accuracy and loss curves used to assess the performance of our sentiment classification models.

Figure 12.

Performance evaluation of the model by analyzing accuracy and loss graphs based on our specific dataset.

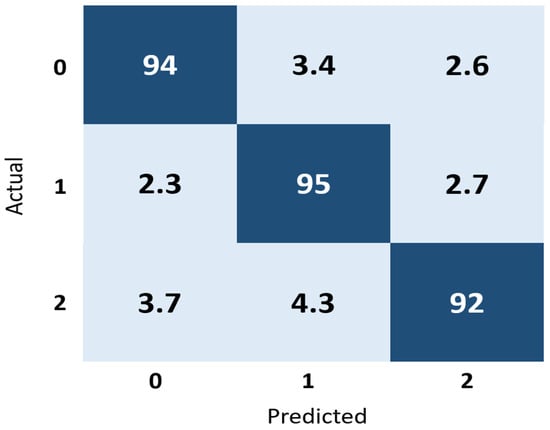

In sentiment classification, a confusion matrix shows how accurately the algorithm predicts each class, revealing which classes are predicted correctly and where the classifier falls short. Figure 13 shows the confusion matrix obtained with the two-layer convolutional neural network for classifying student feedback into positive, negative, and neutral categories.

Figure 13.

Illustrating the performance of our trained models using a confusion matrix.

5. Discussion and Comparison

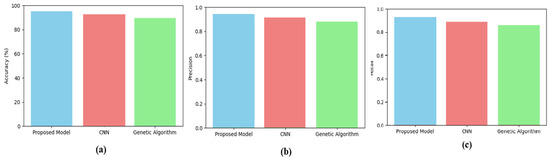

In this section, the proposed models are compared with existing models in terms of overall accuracy in learning style identification and sentiment classification. First, to assess the efficacy of the proposed model, we compare it with two established methods: a convolutional neural network (CNN) and a genetic algorithm. The results, shown as bar plots in Figure 14, indicate clear advantages of the proposed model over these alternatives.

Figure 14.

Comparative evaluation of the proposed model with other existing models. In this context, (a–c) signify the performance metrics concerning accuracy, precision, and recall, respectively.

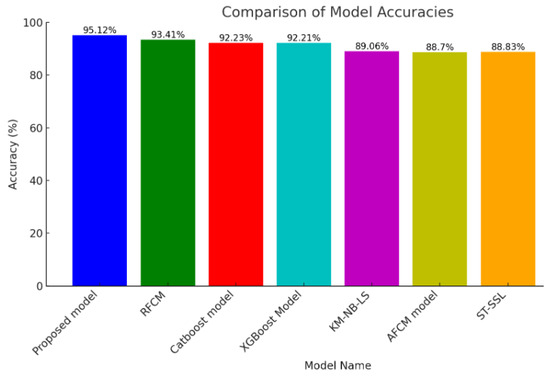

Second, the accuracy of the proposed approach is compared with the accuracies reported in existing studies. Figure 15 shows that the proposed model achieves 95.12% accuracy in identifying learning styles, the highest among the compared models, which indicates that it captures individual learner preferences well. Other models, such as the “Robust Fuzzy C-Means algorithm (RFCM)” [77], “Category Boosting (Catboost)”, and “Extreme Gradient Boosting (XGBoost)” [78], also achieve accuracies above 92% but remain below the proposed model. Models with lower accuracies, such as the “K-modes Naive Bayes Learning Styles (KM-NB-LS)” [79], “Adaptive Fuzzy C-Mean model (AFCM)” [80], and “Self-Taught Semi-Supervised Learning (ST-SSL)” [81], hover around 88–89%, which may suggest they are less sensitive to the subtleties of learning styles.

Figure 15.

Proposed model vs. other models for learning style identification.

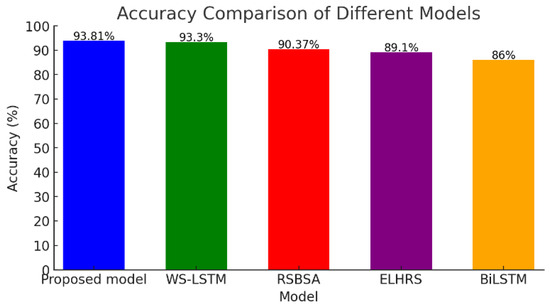

In the bar chart for sentiment analysis of learner feedback (Figure 16), the proposed model again performs best, with an accuracy of 93.81%. This suggests that the model not only identifies patterns useful for categorization but also interprets the nuances of language, a key requirement for analyzing feedback. The “Weakly Supervised Long Short-Term Memory (WS-LSTM)” [82] is only slightly less accurate and also shows strong sentiment analysis capability. Further down the list, models such as the “Recommendation System Based on Sentiment Analysis (RSBSA)” [83], “Enhanced e-Learning Hybrid Recommender System (ELHRS)” [84], and “Bidirectional Long Short-Term Memory (BiLSTM)” [85] show a more pronounced drop in accuracy, indicating possible difficulty in fully capturing learner sentiment, especially for subtle or complex expressions.

Figure 16.

Proposed model vs. other models for sentiment classification performance.

Figure 17 compares the time complexity of the proposed algorithm with that of the FCM (Fuzzy C-Means) algorithm over different iteration counts. The execution time of the proposed algorithm grows more slowly than that of FCM as the iteration count increases: at 200 iterations, the proposed algorithm takes 1389 s, compared with 2529 s for FCM. This better scalability suggests that the proposed algorithm handles large-scale data more efficiently, a desirable trait for e-learning systems that must process information from many users simultaneously.

Figure 17.

Proposed model and FCM algorithm time complexity comparison.

6. Conclusions and Future Work

This paper proposed a hybrid deep-learning approach to improve e-learning systems. This involves two key components: automatic learning style identification and sentiment analysis. The first experiment was carried out to demonstrate the reliability of the proposed approach in determining the learning style. This approach selected an English course and 126 students to gather the data to find the learning style according to FSLSM dimensions and the response data from students were categorized into eight FSLSM categories: verbal sequential, visual, active, reflective, sensing, intuitive, and global. After 210 iterations, the final clusters were obtained. Initially when student logged into to an LMS such as Sup’Management Group, a free and open-source platform created to assist students and educators in advancing their learning experiences, they filled in four questions aligned with the dimensions of the FSLSM and the entirety of the 44-question Index of Learning Styles (ILS) based on FSLSM, as well as basic information such as age and gender. We administered a preliminary test as part of a tailored proposed approach to evaluate the students’ prior academic achievements. Implementing fuzzy weights logic to analyze the test scores helped in accurately determining each student’s level of knowledge. Secondly, from the e-learning platform, we collected 1235 sequences that reflect the behaviors of learners, with each student contributing between 1 and 35 sequences. This dataset includes the demographic information of students and their prior academic scores, and their responses to four key questions aligned with the FSLSM dimensions and 1235 learning sequences. These elements serve as inputs for our designed Transformer architecture in combination with GloVe (Global Vectors for Word Representation) word embeddings. The amalgamation of these cutting-edge technologies allowed us to navigate the complexities of student learning styles with precision and efficiency. 
The experiments show that the proposed model accurately categorizes students into learning styles based on the FSLSM, as validated by accuracy, precision, recall, and F1 score. The proposed approach achieved a learning style prediction accuracy of 95.54%, a precision of 95.54%, a recall of 95.55%, and an F1 score of 95.54%. The sentiment analysis component further explores student feedback, using LDA for topic modeling. This approach uncovers key aspects and sentiments within the feedback, providing a nuanced understanding of student opinions on different facets of the e-learning system. For sentiment classification, a two-layer convolutional neural network (CNN) achieved a peak accuracy of 93.81%. In conclusion, the proposed hybrid deep learning method improves e-learning by tailoring content precisely to individual learning styles, which can enhance student engagement and success. By analyzing sentiment, it also offers valuable insights into student feedback, enabling continuous improvement. Together, these components can make online education more effective and accessible and support better learning outcomes. In practice, the approach helps educators deliver more personalized, responsive, and impactful e-learning experiences.
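The reported scores are standard multi-class classification metrics. The following sketch computes accuracy together with macro-averaged precision, recall, and F1 from true and predicted learning style labels. Macro averaging is an assumption, since the averaging scheme behind the reported figures is not stated in this section, and the example labels are hypothetical.

```python
from typing import Dict, List, Sequence


def classification_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 for multi-class labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions: List[float] = []
    recalls: List[float] = []
    f1s: List[float] = []
    for label in labels:
        # One-vs-rest counts for this class
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Hypothetical labels for four students on the visual/verbal dimension
    y_true = ["visual", "visual", "verbal", "verbal"]
    y_pred = ["visual", "verbal", "verbal", "verbal"]
    print(classification_metrics(y_true, y_pred))
```

With macro averaging, each FSLSM category contributes equally regardless of how many students it contains; a support-weighted average would instead give more influence to frequent categories.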

In the future, we aim to apply the proposed learning style identification approach across various domains and diverse age groups, including tertiary education students. We also plan to develop an integrated learning style (LS) model that combines the FSLSM with other cognitive frameworks, allowing a more comprehensive understanding of the factors that influence e-learning; these hybrid learning styles will be identified automatically using advanced intelligent techniques. The proposed aspect-based sentiment analysis approach currently handles only English-language comments. However, most students in Pakistan write their feedback in Roman Urdu, so supporting Roman Urdu would make the system more inclusive and effective and would allow future versions of the approach to capture the nuances of student feedback more fully. Furthermore, students often use symbols and emoticons to express their opinions in online feedback systems, so a key direction for future research is to examine how these visual elements correlate with the sentiments expressed. By systematically analyzing the weight and sentiment of each attribute, future work aims to refine sentiment analysis techniques and classify sentiments more precisely.

Author Contributions

Conceptualization, T.H., L.Y., M.A. and A.A.; methodology, T.H., L.Y., M.A. and A.A.; software, T.H., L.Y. and M.A.; validation, L.Y., M.A. and M.A.W.; formal analysis, L.Y., M.A. and M.A.W.; investigation, M.A. and M.A.W.; resources, L.Y. and M.A.W.; writing—original draft, T.H.; writing—review and editing, L.Y., M.A., A.A. and M.A.W.; supervision, L.Y.; funding acquisition, M.A. and M.A.W. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank Prince Sultan University for paying the APC of this article.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

This work was supported by EIAS Data Science Lab, College of Computer and Information Sciences, Prince Sultan University. The authors would like to thank Prince Sultan University for their support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Imran, M.; Almusharraf, N.; Abdellatif, M.S.; Ghaffar, A. Teachers’ perspectives on effective English language teaching practices at the elementary level: A phenomenological study. Heliyon 2024, 10, e29175.

- Farooq, U.; Naseem, S.; Mahmood, T.; Li, J.; Rehman, A.; Saba, T.; Mustafa, L. Transforming educational insights: Strategic integration of federated learning for enhanced prediction of student learning outcomes. J. Supercomput. 2024, 1–34.

- Sivarajah, R.T.; Curci, N.E.; Johnson, E.M.; Lam, D.L.; Lee, J.T.; Richardson, M.L. A review of innovative teaching methods. Acad. Radiol. 2019, 26, 101–113.

- Karagiannis, I.; Satratzemi, M. An adaptive mechanism for Moodle based on automatic detection of learning styles. Educ. Inf. Technol. 2018, 23, 1331–1357.

- Granić, A. Educational technology adoption: A systematic review. Educ. Inf. Technol. 2022, 27, 9725–9744.

- Shoeibi, A.; Khodatars, M.; Jafari, M.; Ghassemi, N.; Sadeghi, D.; Moridian, P.; Khadem, A.; Alizadehsani, R.; Hussain, S.; Zare, A.; et al. Automated detection and forecasting of COVID-19 using deep learning techniques: A review. Neurocomputing 2024, 577, 127317.

- Zhang, H.; Huang, T.; Liu, S.; Yin, H.; Li, J.; Yang, H.; Xia, Y. A learning style classification approach based on deep belief network for large-scale online education. J. Cloud Comput. 2020, 9, 1–17.

- Muhammad, B.A.; Qi, C.; Wu, Z.; Ahmad, H.K. An evolving learning style detection approach for online education using bipartite graph embedding. Appl. Soft Comput. 2024, 152, 111230.

- Graf, S. Adaptivity in Learning Management Systems Focussing on Learning Styles. Ph.D. Thesis, Technische Universität Wien, Vienna, Austria, 2007. Available online: http://hdl.handle.net/20.500.12708/10843 (accessed on 27 March 2024).

- Jalal, A.; Mahmood, M. Students’ behavior mining in e-learning environment using cognitive processes with information technologies. Educ. Inf. Technol. 2019, 24, 2797–2821.

- Abdullah, M.A. Learning style classification based on student’s behavior in moodle learning management system. Trans. Mach. Learn. Artif. Intell. 2015, 3, 28.

- Zatarain-Cabada, R.; Barrón-Estrada, M.L.; Angulo, V.P.; García, A.J.; García, C.A.R. A learning social network with recognition of learning styles using neural networks. In Proceedings of the Advances in Pattern Recognition: Second Mexican Conference on Pattern Recognition, MCPR 2010, Puebla, Mexico, 27–29 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 199–209.

- García, P.; Amandi, A.; Schiaffino, S.; Campo, M. Evaluating Bayesian networks’ precision for detecting students’ learning styles. Comput. Educ. 2007, 49, 794–808.

- Troussas, C.; Chrysafiadi, K.; Virvou, M. An intelligent adaptive fuzzy-based inference system for computer-assisted language learning. Expert Syst. Appl. 2019, 127, 85–96.

- Crockett, K.; Latham, A.; Mclean, D.; O’Shea, J. A fuzzy model for predicting learning styles using behavioral cues in an conversational intelligent tutoring system. In Proceedings of the 2013 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Hyderabad, India, 7–10 July 2013; IEEE: New York, NY, USA, 2013; pp. 1–8.

- Kolekar, S.V.; Pai, R.M.; MM, M.P. Prediction of Learner’s Profile Based on Learning Styles in Adaptive E-learning System. Int. J. Emerg. Technol. Learn. 2017, 12, 31–51.

- Aziz, A.S.; El-Khoribi, R.A.; Taie, S.A. Adaptive E-learning recommendation model based on the knowledge level and learning style. J. Theor. Appl. Inf. Technol. 2021, 99, 5241–5256.

- Kaouni, M.; Lakrami, F.; Labouidya, O. The design of an adaptive E-learning model based on Artificial Intelligence for enhancing online teaching. Int. J. Emerg. Technol. Learn. 2023, 18, 202.

- Madhavi, A.; Nagesh, A.; Govardhan, A. A framework for automatic detection of learning styles in e-learning. AIP Conf. Proc. 2024, 2802, 120012.

- Rashid, A.B.; Ikram, R.R.R.; Thamilarasan, Y.; Salahuddin, L.; Abd Yusof, N.F.; Rashid, Z.B. A Student Learning Style Auto-Detection Model in a Learning Management System. Eng. Technol. Appl. Sci. Res. 2023, 13, 11000–11005.

- Essa, S.G.; Celik, T.; Human-Hendricks, N. Personalised adaptive learning technologies based on machine learning techniques to identify learning styles: A systematic literature review. IEEE Access 2023, 11, 48392–48409.

- Alshmrany, S. Adaptive learning style prediction in e-learning environment using levy flight distribution based CNN model. Clust. Comput. 2022, 25, 523–536.

- Raleiras, M.; Nabizadeh, A.H.; Costa, F.A. Automatic learning styles prediction: A survey of the State-of-the-Art (2006–2021). J. Comput. Educ. 2022, 9, 587–679.

- Gomede, E.; Miranda de Barros, R.; de Souza Mendes, L. Use of deep multi-target prediction to identify learning styles. Appl. Sci. 2020, 10, 1756.

- Khan, F.A.; Akbar, A.; Altaf, M.; Tanoli, S.A.K.; Ahmad, A. Automatic student modelling for detection of learning styles and affective states in web based learning management systems. IEEE Access 2019, 7, 128242–128262.

- Pashler, H.; McDaniel, M.; Rohrer, D.; Bjork, R. Learning styles: Concepts and evidence. Psychol. Sci. Public Interest 2008, 9, 105–119.

- Hauptman, H.; Cohen, A. The synergetic effect of learning styles on the interaction between virtual environments and the enhancement of spatial thinking. Comput. Educ. 2011, 57, 2106–2117.

- Jegatha Deborah, L.; Baskaran, R.; Kannan, A. Learning styles assessment and theoretical origin in an E-learning scenario: A survey. Artif. Intell. Rev. 2014, 42, 801–819.

- Choudhary, L.; Swami, S. Exploring the Landscape of Web Data Mining: An In-depth Research Analysis. Curr. J. Appl. Sci. Technol. 2023, 42, 32–42.

- Roy, R.; Giduturi, A. Survey on pre-processing web log files in web usage mining. Int. J. Adv. Sci. Technol. 2019, 29, 682–691.

- Fawzia Omer, A.; Mohammed, H.A.; Awadallah, M.A.; Khan, Z.; Abrar, S.U.; Shah, M.D. Big Data Mining Using K-Means and DBSCAN Clustering Techniques. In Big Data Analytics and Computational Intelligence for Cybersecurity; Springer: Berlin/Heidelberg, Germany, 2022; pp. 231–246.

- Nafea, S.M.; Siewe, F.; He, Y. On recommendation of learning objects using felder-silverman learning style model. IEEE Access 2019, 7, 163034–163048.

- Deng, Y.; Lu, D.; Chung, C.J.; Huang, D.; Zeng, Z. Personalized learning in a virtual hands-on lab platform for computer science education. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–8.

- Hu, J.; Peng, Y.; Chen, X.; Yu, H. Differentiating the learning styles of college students in different disciplines in a college English blended learning setting. PLoS ONE 2021, 16, e0251545.

- Hmedna, B.; El Mezouary, A.; Baz, O. How does learners’ prefer to process information in MOOCs? A data-driven study. Procedia Comput. Sci. 2019, 148, 371–379.

- Reardon, M.; Derner, S. Strategies for Great Teaching: Maximize Learning Moments; Taylor & Francis: Abingdon, UK, 2023.

- Seghroucheni, Y.Z.; Chekour, M. How Learning Styles Can Withstand the Demands of Mobile Learning Environments? Int. J. Interact. Mob. Technol. 2023, 17, 84–99.

- Othmane, Z.; Derouich, A.; Talbi, A. A comparative study of the Most influential learning styles used in adaptive educational environments. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 520–528.

- Sihombing, J.H.; Laksitowening, K.A.; Darwiyanto, E. Personalized e-learning content based on felder-silverman learning style model. In Proceedings of the 2020 8th International Conference on Information and Communication Technology (ICoICT), Yogyakarta, Indonesia, 24–26 June 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

- Hidayat, N.; Wardoyo, R.; Sn, A.; Surjono, H.D. Enhanced performance of the automatic learning style detection model using a combination of modified K-means algorithm and Naive Bayesian. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 638–648.

- Staudemeyer, R.C. Applying long short-term memory recurrent neural networks to intrusion detection. S. Afr. Comput. J. 2015, 56, 136–154.

- Kumaravel, G.; Sankaranarayanan, S. PQPS: Prior-Art Query-Based Patent Summarizer Using RBM and Bi-LSTM. Mob. Inf. Syst. 2021, 2021, 1–19.

- Pamir; Javaid, N.; Javaid, S.; Asif, M.; Javed, M.U.; Yahaya, A.S.; Aslam, S. Synthetic theft attacks and long short term memory-based preprocessing for electricity theft detection using gated recurrent unit. Energies 2022, 15, 2778.