Abstract

As the demands of multimedia and data services increase, efficient communication systems are being investigated to meet high data rate requirements. Joint source-channel coding (JSCC) schemes have been proposed to improve overall system performance. However, existing JSCC systems may suffer a symbol error rate (SER) performance loss when the residual source redundancy is not fully exploited. This paper presents a novel, low-complexity JSCC system, which consists of a fixed-length source block code and an irregular convolutional channel code. A simple approach is proposed to design source codes that minimize the SER of source detection and guarantee the convergence of iterative source-channel decoding (ISCD). To improve the waterfall performance of ISCD, the channel code is optimized using the extrinsic information transfer (EXIT) chart and the concept of irregular codes. The channel code is composed of recursive non-systematic convolutional (RNSC) subcodes. The weights of the subcodes are optimized so that the EXIT curves of the channel decoder and the source decoder are well matched, and a near-capacity performance is therefore achieved. Simulation results show that the proposed system achieves gains of more than 1 dB and 0.3 dB compared to the separate source-channel code system and the other optimized JSCC systems, respectively. Additionally, the performance of the proposed system is within 1 dB of the Shannon limit.

1. Introduction

With the growth in the number of cellular mobile phones and the popularity of wireless networks, high data rate transmission is required. However, the wireless channel is restricted by transmission power and limited available bandwidth, so challenges still exist in designing reliable and efficient communication systems. In classical communication systems, the source encoder and the channel encoder are designed separately. The source encoder removes redundancy from the source to improve the transmission rate, and the channel encoder re-inserts controlled redundancy to resist the channel noise. According to Shannon’s source and channel separation theorem, the system can achieve a near-capacity performance if the source and channel coding are both optimal [1]. However, under the severe delay and computational complexity restrictions of practical applications, the assumptions of the theorem are not met, and the separate source and channel code (SSCC) system usually incurs a loss of optimality. This motivates the study of joint source-channel code (JSCC) systems to narrow the gap to the global optimum.

JSCC systems can exploit the residual redundancy in source-coded parameters to realize a better transmission performance. In [2], the authors proposed a soft-bit source decoder (SBSD), which uses the a priori knowledge of source statistics to calculate the reliability of source-coded bits. Then, the SBSD was applied in an iterative source-channel decoding (ISCD) structure, improving the error-correction capability of the channel decoder [3]. Moreover, the source encoder and the bit-interleaved coded modulation were serially concatenated [4] to further improve the bandwidth and power efficiency of the system by exchanging extrinsic information between the source decoder, the channel decoder, and the demodulator.

These previous studies on ISCD systems usually used near-entropy codes for source compression. Because very limited redundancy is left in the source-coded bits after compression, the iteration gain of ISCD is limited [5]. It has been found that using source codes with deliberately introduced redundancy as an alternative to classical entropy codes can achieve a significant performance improvement. Several redundant source codes have been proposed, including variable-length codes (VLCs) [6,7,8,9] and fixed-length codes (FLCs). VLCs were designed with an increased free distance to improve the error-correction performance [6]. Recently, several efficient algorithms were proposed to construct near-optimal VLCs that have the minimum average codeword length with low search complexity [7,8,9]. VLCs are sensitive to error propagation and suffer from a high decoding complexity, while FLCs have the advantages of inherent synchronization and low complexity because the symbol positions in the coded bitstream are fixed. Thus, FLCs have been investigated in many studies.

The nonredundant index assignment for quantized source symbols proposed in [10] is the first joint source-channel code scheme based on FLCs. However, this JSCC scheme has a limited error-correction capability, since the index has no redundant bits. To solve this problem, the authors in [11] utilized a linear block code (LBC) to realize a redundant index assignment for source symbols. The introduced redundancy of the source code was exploited by the SBSD at the receiving side, and the convergence performance of ISCD was improved. Additionally, the generator matrices of LBCs were further optimized to maximize the minimum Hamming distance of the codewords [12]. This optimization of source codes reduced the error floor of ISCD. Similarly, the authors in [13] proposed simple algorithms to generate a class of short block codes that have diverse minimum Hamming distance values. In multimedia communication, these block codes are used to deliberately impose additional redundancy on the source-coded video bitstream [14]. In [15], the effects of the Hamming distance were investigated for an FLC-based JSCC system. An improvement in the SER performance was observed with the increase in the minimum Hamming distance. The authors in [16] compared the performance of three different types of JSCC schemes using FLCs and convolutional codes, namely, nonconvergent serial-concatenated coding, self-concatenated coding, and convergent serial-concatenated coding. In [17], the author proposed a three-stage, serially concatenated JSCC scheme, which was constituted by the source block code, the unity-rate convolutional code, and the space–time code. The bit error rate (BER) floor is averted due to the employment of a recursive unity-rate code.
In addition, due to the outstanding error-correction performance of low-density parity-check (LDPC) codes and polar codes, JSCC schemes have been proposed in which double low-density parity-check (D-LDPC) codes [18] and double polar codes [19] are used as the source code and channel code for binary independent and identically distributed sources. Source statistics, which greatly affect the performance of the JSCC system, were subsequently raised in [20] and discussed in depth in [21]. In noisy communication with nonuniform sources, the source codeword assignment can greatly affect how well the JSCC decoder exploits the residual source redundancy, and the symbol error rate performance gap between optimized codeword assignments and bad codeword assignments can be significant [22].

Moreover, the extrinsic information transfer (EXIT) chart can effectively analyze the convergence performance of JSCC. With the aid of an EXIT chart, near-capacity performance can be obtained by optimizing all components of the system. One optimization method is irregular codes, such as irregular convolutional codes (IrCCs) [23,24,25], irregular VLCs (IrVLCs) [26,27], irregular redundant index assignments [11], and irregular unity-rate codes (IrURCs) [28]. These irregular codes were employed in the JSCC system, making the EXIT curves of the source decoder and channel decoder accurately matched.

In this paper, we propose a novel joint source-channel code system based on fixed-length source code and irregular channel code in order to both improve symbol error rate performance and reduce calculation complexity. The main novelties and contributions are summarized as follows.

First, we derive the closed-form expression of the source symbol error rate (SER) performance of a joint source-channel code system based on the soft-bit source decoder.

Second, we propose a source coding scheme based on the fixed-length source code. By optimizing the fixed-length codeword assignment with the aid of a binary switch algorithm (BSA), the proposed scheme achieves nearly the same decoding complexity as the linear block code in [13], while the symbol error rate and convergence performance are much better than the traditional schemes.

Third, based on EXIT chart analysis, we design the joint source-channel code system by introducing a low-complexity, irregular recursive nonsystematic convolutional (RNSC) code. The subcodes are chosen from unity-rate RNSC codes with small constraint lengths, such that the overall decoding complexity of the channel decoder is reduced.

Last, via simulation results, we show that the proposed joint source-channel code system realizes a near-capacity iterative decoding performance with a relatively low calculation complexity as compared to benchmark schemes.

The rest of this paper is organized as follows: Section 2 introduces the model of our JSCC system. Section 3 discusses the design guidelines of source block codes and provides the algorithm for generating the fixed-length codewords. Section 4 presents the optimization of the irregular channel code based on EXIT chart analysis. Then, Section 5 discusses the simulation results. Finally, Section 6 concludes the study.

2. Joint Source-Channel Code System

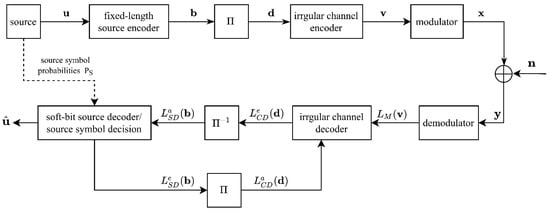

The system model of our joint source-channel code (JSCC) scheme is illustrated in Figure 1.

Figure 1.

The system model of proposed JSCC scheme.

We consider the case where a nonuniform source-symbol distribution is inherent in the source signal. The memoryless source generates a frame of symbols from a discrete alphabet. The source symbols are encoded by a source encoder, which is a fixed-length block code. The source encoder maps each symbol to a binary codeword and concatenates the codewords into a bit sequence. The block code maintains the nonuniform probabilities of the source symbols and introduces some artificial redundancy. These nonuniform probabilities are transmitted as side information and are reliably protected against transmission errors by a low-rate error-correcting code. Thus, the probabilities are perfectly available at the receiver and can later be exploited by the soft source decoder as additional a priori knowledge, leading to enhanced error protection.

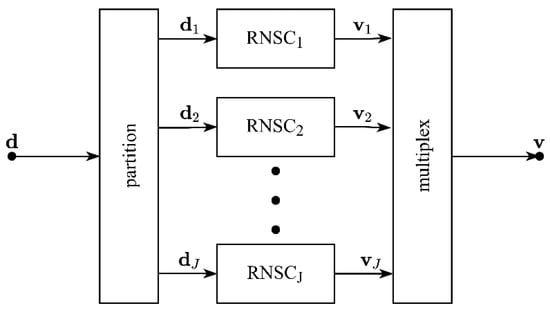

After source encoding, the bit sequence is permuted by a random bit-interleaver, and the interleaved bit sequence is then encoded by a channel encoder. According to [29], a necessary condition for a serially concatenated system to be capacity-achieving is that the inner component is a recursive convolutional code. We therefore employ an irregular, recursive nonsystematic convolutional (RNSC) code with unity rate as the channel encoder. The structure of the irregular encoder is depicted in Figure 2. The input sequence is partitioned into subsequences according to the weight vector. Every subsequence is individually encoded by one of the RNSC sub-encoders. Then, the outputs of all sub-encoders are multiplexed into a single bit sequence. The rate of channel coding can be increased by randomly puncturing some bits of this sequence.
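The partition, encode, and multiplex structure of the irregular encoder can be illustrated with a minimal Python sketch. The function names and the two rate-1 recursive stand-in subcodes (a plain accumulator and a two-step delayed accumulator) are assumptions chosen for brevity; they are not the RNSC subcodes selected in this paper, and puncturing is omitted.

```python
def partition_by_weights(bits, weights):
    """Split `bits` into consecutive subsequences whose lengths follow `weights`."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    n = len(bits)
    parts, start = [], 0
    for k, w in enumerate(weights):
        # The last part takes whatever is left so that no bit is dropped.
        end = n if k == len(weights) - 1 else min(n, start + round(w * n))
        parts.append(bits[start:end])
        start = end
    return parts


def accumulator(bits):
    """Hypothetical rate-1 recursive subcode: y[t] = x[t] XOR y[t-1]."""
    out, state = [], 0
    for b in bits:
        state ^= b
        out.append(state)
    return out


def delayed_accumulator(bits):
    """Hypothetical rate-1 recursive subcode: y[t] = x[t] XOR y[t-2]."""
    out, s1, s2 = [], 0, 0  # s1 = y[t-1], s2 = y[t-2]
    for b in bits:
        y = b ^ s2
        out.append(y)
        s1, s2 = y, s1
    return out


def irregular_encode(bits, weights, sub_encoders):
    """Encode each subsequence with its own subcode and multiplex the outputs."""
    coded = []
    for part, encoder in zip(partition_by_weights(bits, weights), sub_encoders):
        coded.extend(encoder(part))
    return coded
```

Because every stand-in subcode has rate 1, the multiplexed output has the same length as the input, which mirrors the unity-rate property described above.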

Figure 2.

Irregular channel encoder.

Finally, the encoded sequence is segmented into m-bit labels. The mapper maps each label to a symbol from the M-ary constellation set according to Gray mapping. After modulation, the modulated symbols are transmitted over a noisy memoryless channel. At the receiver, each received symbol is the transmitted symbol plus independent and identically distributed complex Gaussian noise with zero mean.

The receiver processes the received symbols to produce an estimate of the source symbols through iterative decoding between the channel decoder and the soft-bit source decoder (SBSD). In this study, the soft channel decoder and the SBSD are implemented in the logarithmic domain, and the reliability information exchanged between them is represented by log-likelihood ratios (LLRs).

The received symbols are demodulated to posterior LLRs before they enter the iterative decoding process. The irregular channel decoder demultiplexes the posterior LLRs into subsequences according to the partitioning performed inside the irregular encoder. Each of these subsequences is individually decoded by applying the BCJR algorithm [30] to the trellis of the corresponding RNSC subcode, and the resulting extrinsic LLRs are multiplexed into a single LLR sequence.

After channel decoding, the extrinsic LLRs are deinterleaved to obtain the a priori LLRs of the source-coded bits, which are then input to the SBSD. The SBSD is realized by calculating the a posteriori probabilities of each bit of the codewords. In the calculation, both the a priori LLRs of the coded bits and the occurrence probabilities of the source symbols are exploited. The extrinsic output for the n-th bit of a codeword, Equation (1), is the logarithm of the ratio of two sums over the codebook: one over the subset of codewords whose n-th bit is 0 and one over the subset whose n-th bit is 1. Each codeword contributes its a priori knowledge, which is determined by the occurrence probability of the corresponding source symbol, combined with the a priori information of its other bits. The resulting extrinsic LLRs are interleaved and fed back to the channel decoder.
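The SBSD computation described above can be sketched in a few lines of Python. This is a minimal log-domain illustration under stated assumptions: the LLR convention is ln P(bit = 0) / P(bit = 1), codewords are tuples of 0/1, and the function names are hypothetical. It is meant to show the structure of the calculation, not to reproduce the exact expression of Equation (1).

```python
import math


def _logsumexp(values):
    """Numerically stable log of a sum of exponentials (-inf for an empty list)."""
    if not values:
        return -math.inf
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sbsd_extrinsic(codebook, priors, llr_apriori):
    """Extrinsic LLRs for the bits of one codeword position.

    codebook    : list of equal-length tuples of 0/1, one per source symbol
    priors      : occurrence probability of each source symbol
    llr_apriori : a priori LLR of each coded bit, ln P(0)/P(1) (assumed convention)
    """
    length = len(llr_apriori)
    extrinsic = []
    for n in range(length):
        log_weights = {0: [], 1: []}
        for word, prob in zip(codebook, priors):
            # Log-weight of this codeword from its prior and all bits except bit n.
            metric = math.log(prob)
            for j in range(length):
                if j != n and word[j] == 1:
                    metric -= llr_apriori[j]
            log_weights[word[n]].append(metric)
        extrinsic.append(_logsumexp(log_weights[0]) - _logsumexp(log_weights[1]))
    return extrinsic
```

With a single-parity-check codebook, strong a priori LLRs on two bits yield a strong extrinsic LLR on the third, whereas the nonredundant natural mapping with uniform priors returns zero extrinsic information regardless of the input.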

This process is repeated until no more gains in the extrinsic information are achieved or an appropriate stopping criterion is satisfied. After the iterative process is finished, the final LLRs are used to perform a maximum a posteriori probability (MAP) estimation of the source symbols, as follows:

where the maximum a posteriori probability is calculated as

3. Source Block Code Design

Each symbol of the source alphabet is assigned one codeword from the codebook. All the codewords have the same length, and each symbol in the alphabet has a known occurrence probability.

3.1. Iterative Convergence and Error-Correction Criterion

For the JSCC system in Figure 1, iterative decoding lets the source decoder and the channel decoder assist each other by exchanging extrinsic information. Near-entropy source codes with limited redundancy are not suited to this system because the iterative gain is limited. Hence, to improve the achievable ISCD performance gain, we artificially introduce redundancy into the source-coded bitstream using redundant fixed-length codes. In fact, iterative decoding is capable of attaining an infinitesimally low decoded bit error rate (BER) if the EXIT curves of the inner and outer decoder components intersect at the (1, 1) point [29]. The relevant design criterion for the fixed-length block codes is that the codewords should have a minimum Hamming distance of at least 2. According to the SBSD in Equation (1), for codewords with a minimum Hamming distance of at least 2, when the a priori information on the other bits of a codeword is perfect, the considered bit is uniquely determined, and perfect extrinsic information can be obtained. Thus, the EXIT curve of the SBSD can reach the (1, 1) point.

Apart from the convergence behavior of iterative decoding, the design of the source code also needs to consider the end-to-end source distortion. The analog source is quantized and discretized before it is input to our source encoder. Thus, in this paper, we focus on a discrete source. Our goal is to perfectly reconstruct the discrete source symbols, i.e., to minimize the symbol error rate (SER) [31],

where the expectation is taken over the source symbols and the indicator function equals 1 if its argument is true, and 0 otherwise. For the memoryless source, the SER can be determined as

where each term weights the occurrence probability of a source symbol by the pairwise error probability (PEP) of erroneously detecting a different symbol in its place. According to the estimate of the source symbol in Equation (3), the symbol decision is determined by the input LLRs of each coded bit. Because the LLRs are permuted by a long random bit-interleaver during decoding, their statistics can be assumed to be independent and identically distributed [32]. Hence, the PEP can be characterized by the crossover probability of the coded bits. Then, the sum of PEPs can be formulated as:

which depends on the Hamming distance between the two codewords involved. By substituting Equation (6) into Equation (5), the SER can be computed as follows:

It is difficult to calculate the value of the crossover probability theoretically. However, since this paper focuses on the estimate of the source symbols after well-converged iterative decoding, the error analysis assumes nearly perfect LLRs, and it is reasonable to set a small value for the crossover probability.
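For reference, one form of the SER expressions that is consistent with the definitions above can be written as follows. The notation (source symbols $s_i$ with codewords $c_i$, alphabet size $N_s$, codeword length $L$, crossover probability $p$, Hamming distance $d_H$) and the binary-symmetric approximation of the PEP are assumptions made for this sketch; they are not necessarily the exact expressions of Equations (4) to (7).

$$\mathrm{SER} = E\{\mathbb{1}(\hat{s} \neq s)\} = \sum_{i=1}^{N_s} P(s_i) \sum_{j \neq i} P(s_i \to s_j)$$

$$P(s_i \to s_j) \approx p^{\,d_H(c_i, c_j)} (1-p)^{\,L - d_H(c_i, c_j)}$$

$$\mathrm{SER} \approx (1-p)^{L} \sum_{i=1}^{N_s} P(s_i) \sum_{j \neq i} \left(\frac{p}{1-p}\right)^{d_H(c_i, c_j)}$$

Under this approximation, the factor $(1-p)^{L}$ does not depend on the codeword assignment, so only the double sum matters when comparing assignments. For small $p$, the sum is dominated by the codeword pairs with the smallest Hamming distance, weighted by the probability of the transmitted symbol.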

In addition, to avoid symbol decision errors, the mapping from codewords back to the source symbols should be unique and unambiguous. This means that different source symbols must be mapped to different codewords. Hence, the design of the source codewords can be formulated as the following optimization problem:

The cost function can be further simplified by eliminating the irrelevant terms:

This paper proposes a simple solution to this optimization problem by using the binary switch algorithm (BSA) [33]. The BSA is the best-known method for bit-mapping search, and it attempts to minimize the cost function by switching pairs of bit labels. Here, a set of fixed-length binary codewords is generated. Then, codewords are chosen from the set and assigned to the source symbols by using the BSA so as to minimize the cost function in Equation (9) while guaranteeing that the codewords have the required minimum Hamming distance. The algorithm for generating the source codebook is formulated as follows (Algorithm 1):

| Algorithm 1 Binary Switch Algorithm (BSA) |

| . |

| . |

| according to (9). |

| Step 4: Rearrange the codewords to provide a minimum cost function by using the BSA: |

| ; |

| according to (9) |

| then |

| end if |

| end for |

| end for |

| rearranged codewords form the set . Check the minimum Hamming distance of each codeword in . If all the codewords have a minimum Hamming distance , then output the set as the source code. If some codewords have a minimum Hamming distance , remove them from the set . Then, search the codeword of in order, and add the codeword that has a minimum Hamming distance, , into . |

The source code can be obtained from the steps described above. It should be noted that the codeword length L must be large enough for such a code to exist. For a binary block code with a fixed length of L, there are, at most, $2^{L-1}$ codewords with a pairwise Hamming distance of at least 2. Therefore, to ensure that the codewords are unambiguous, the size of the source alphabet must not exceed $2^{L-1}$, i.e., L must be at least one bit larger than the base-2 logarithm of the alphabet size.
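The switching search can be illustrated with a short Python sketch. It is a simplified stand-in for Algorithm 1 under several assumptions that are not taken from the paper: the cost is the sum over symbol pairs of the symbol probability times (p / (1 - p)) raised to the Hamming distance, the candidate pool is restricted to even-weight words (which guarantees a pairwise distance of at least 2 by construction), and a swap is accepted greedily whenever it lowers the cost. All function names are hypothetical.

```python
import itertools


def hamming(a, b):
    """Hamming distance between two codewords stored as integers."""
    return bin(a ^ b).count("1")


def cost(assignment, probs, p):
    """Assumed cost: sum_i P(s_i) * sum_{j != i} (p / (1 - p)) ** d_H(c_i, c_j)."""
    ratio = p / (1.0 - p)
    total = 0.0
    for i, ci in enumerate(assignment):
        for j, cj in enumerate(assignment):
            if i != j:
                total += probs[i] * ratio ** hamming(ci, cj)
    return total


def min_distance(assignment):
    """Minimum pairwise Hamming distance of the assigned codewords."""
    return min(hamming(a, b) for a, b in itertools.combinations(assignment, 2))


def binary_switch(probs, length, p=0.01, max_sweeps=50):
    """Greedy switching search over even-weight codewords of the given length."""
    n_symbols = len(probs)
    pool = [w for w in range(2 ** length) if bin(w).count("1") % 2 == 0]
    if len(pool) < n_symbols:
        raise ValueError("codeword length too small: need 2**(length-1) >= alphabet size")
    assignment = pool[:n_symbols]
    best = cost(assignment, probs, p)
    for _ in range(max_sweeps):
        improved = False
        for i in range(n_symbols):
            for word in pool:
                if word == assignment[i]:
                    continue
                trial = list(assignment)
                if word in trial:
                    # Switch the codewords of two symbols.
                    j = trial.index(word)
                    trial[i], trial[j] = trial[j], trial[i]
                else:
                    # Switch in an unused codeword from the pool.
                    trial[i] = word
                trial_cost = cost(trial, probs, p)
                if trial_cost < best - 1e-15:
                    assignment, best, improved = trial, trial_cost, True
        if not improved:
            break
    return assignment, best
```

For example, `binary_switch([0.5, 0.3, 0.15, 0.05], length=4)` returns four distinct 4-bit codewords and the corresponding cost. The greedy search only reaches a local minimum, so the result may depend on the initial assignment.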

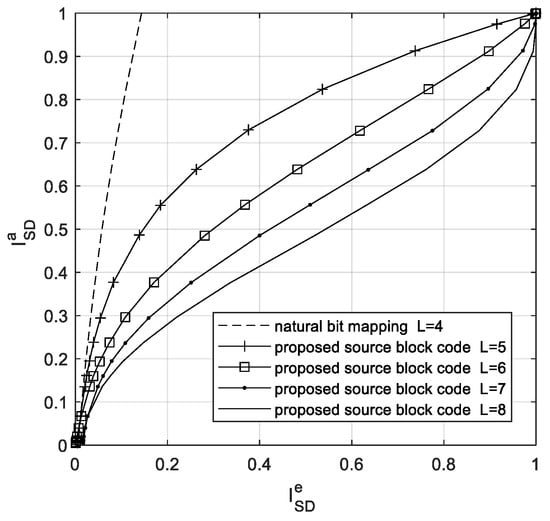

3.2. Design Example

As an example, a Gaussian distribution source [26] is used. The discrete source symbols obey the occurrence probabilities that resulted from the 16-ary Lloyd–Max quantization of independent Gaussian-distributed source. The source entropy is bits/symbol. Set the crossover probability . For the codeword length , the source codes are generated by applying Algorithm 1. The resulting codewords are detailed in Table 1, along with their corresponding minimum Hamming distances . For the proposed block codes, inverse EXIT curves of the SBSD are illustrated in Figure 3, and the EXIT curve of natural bit mapping [10] is also illustrated in the same figure.

Table 1.

Parameters of optimized source codewords with different code lengths.

Figure 3.

The EXIT curves of different source codes.

The natural bit mapping approach maps the source symbols to the binary representation of their indices. For the 16-ary source, the codewords have a length of 4 bits and do not contain any redundant bits. Even though this source code retains the redundancy of the nonuniform source-symbol distribution, there is limited redundancy in the source codewords. As can be seen from Figure 3, the SBSD provides only a small amount of extrinsic information, even if perfect a priori information is provided. The early intersection with the EXIT curve of the channel decoder leads to poor convergence of iterative decoding. Thus, source codes with low redundancy cannot benefit from iterative decoding.

By increasing the codeword’s redundancy, i.e., decreasing the source code rate, the SBSD can benefit more from the a priori information provided by the channel decoder. This can be seen for our proposed codes in Figure 3. It is also shown that if the codewords have a minimum Hamming distance of at least 2, the EXIT curve of the SBSD reaches the (1, 1) point. In this case, it is possible to design an appropriate channel encoder that ensures reliable extrinsic information can be obtained by iterative decoding. On the other hand, the coding efficiency of FLCs may be reduced due to the high residual redundancy. In our joint system design, to keep the overall rate of the system constant, the code rate loss caused by the source coding redundancy is compensated by a higher-rate channel code.

The convergence of the iterative decoder relies on the amount of redundancy at both sides of the interleaver. If a source decoder has neither redundancy nor channel measures, the iterative decoding is almost useless and does not perform much better than separate decoding.

4. Irregular Channel Code for Iterative Source Channel Decoding

The EXIT chart is an efficient tool for designing iterative decoding systems [34], and it provides a fast approach for predicting the performance of the iterative decoder accurately. In this section, the channel encoder is optimized based on the EXIT chart analysis. In particular, the EXIT chart of the proposed system is first analyzed concerning the design guidelines. Then, an appropriate irregular channel code is designed to make the iterative decoding have a good convergence.

4.1. EXIT Chart Analysis for Iterative Source Channel Decoding

Generally, the EXIT chart of a serially concatenated system has two EXIT curves, i.e., the EXIT curve of the inner decoder and the inverse EXIT curve of the outer decoder. The EXIT curve exploits the mutual information (MI) between the data bits at the transmitter and the LLRs at the receiver to describe the input–output relation of each constituent decoder [32].

For our JSCC system, illustrated in Figure 1, the irregular channel decoder and the SBSD serve as the inner decoder and the outer decoder, respectively. The EXIT curve of the channel decoder and the inverse EXIT curve of the SBSD can be expressed as:

and

where each mutual information term is measured between a bit sequence and the corresponding a priori or extrinsic LLRs at the input or output of a decoder. Meanwhile, the EXIT curve of the channel decoder depends on the posterior LLRs of the demodulator, and the EXIT curve of the SBSD is also affected by the source statistics. These EXIT curves can be obtained through Monte Carlo simulation.
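As an illustration of how one point of an EXIT curve might be measured in a Monte Carlo simulation, the following Python sketch uses the common time-average estimator of the mutual information between bits and their LLRs. The estimator assumes consistent LLRs with the convention ln P(bit = 0) / P(bit = 1); the Gaussian a priori model in `gaussian_llrs` and both function names are assumptions for this sketch rather than details taken from the paper.

```python
import math
import random


def mutual_information(bits, llrs):
    """Estimate I(bit; LLR) as 1 - mean(log2(1 + exp(-s * llr))), with s = +1 for bit 0."""
    total = 0.0
    for b, llr in zip(bits, llrs):
        sign = 1.0 if b == 0 else -1.0
        z = -sign * llr
        # Stable evaluation of log2(1 + exp(z)).
        total += (max(z, 0.0) + math.log1p(math.exp(-abs(z)))) / math.log(2.0)
    return 1.0 - total / len(bits)


def gaussian_llrs(bits, sigma, rng):
    """Hypothetical a priori channel: consistent Gaussian LLRs with mean +/- sigma^2 / 2."""
    llrs = []
    for b in bits:
        sign = 1.0 if b == 0 else -1.0
        llrs.append(sign * sigma ** 2 / 2.0 + sigma * rng.gauss(0.0, 1.0))
    return llrs
```

Sweeping `sigma` generates a priori LLRs of increasing reliability. Feeding them to a decoder and applying `mutual_information` to its extrinsic output would trace one EXIT curve point per value of `sigma`.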

The design of the encoders is based on a property of the EXIT chart, i.e., the system can achieve an infinitesimally low BER by iterative decoding only if there is an open tunnel between the EXIT curves [29]. Thus, the encoders should be designed in such a way that, at a low SNR, the EXIT curve of the channel decoder lies above the inverse EXIT curve of the SBSD over the whole range of a priori information up to the (1, 1) point, so that the system achieves a near-capacity performance. An effective way to design well-matched encoders is to apply the concept of irregular codes [23].

The irregular source block codes are not fit for our scheme. In the irregular block codes, high-rate codes have to be used together with low-rate codes. High-rate block codes naturally have a lower minimum Hamming distance and, therefore, a lower symbol error-correction capability. In order to ensure the error-correction capability, we still adopt regular source block code in our scheme. Furthermore, the well-matching EXIT curves are attained by designing an irregular inner channel code for the given source code.

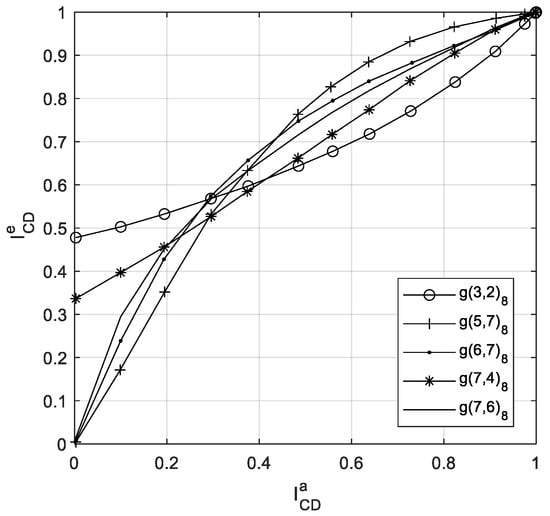

4.2. Irregular Channel Code Design

Irregular convolutional codes as inner codes have been successfully employed in the context of iterative source-channel decoding in [10]. Here, we use recursive nonsystematic convolutional (RNSC) codes with rate 1 as the subcodes. Considering the computational complexity of the RNSC decoder, we limit the maximum constraint length of the subcodes, which leaves a finite set of candidate RNSC codes. In addition, the RNSC subcodes should have diverse shapes in their EXIT curves, so that the EXIT curve of the irregular channel code can match the EXIT curve of the source code more accurately. Thus, we select the five RNSC codes with the most dissimilar EXIT curves, as shown in Figure 4.

Figure 4.

EXIT curves of different RNSC codes, AWGN channel, SNR = 8 dB, 16QAM Gray mapping.

Each RNSC subcode is assigned a weight, and the weights are collected in a weight vector. The overall EXIT characteristic of the channel code is the weighted superposition of the EXIT characteristics of the different RNSC subcodes, i.e.:

Then, at a low SNR, the EXIT curve of the channel decoder should lie above the inverse EXIT curve of the SBSD over the whole range of a priori information. Considering sample points of the EXIT curves, the curve-fitting problem can be formulated as the optimization problem:

subject to

The matrix contains the sample points of each RNSC decoder EXIT curve, one column per subcode. The vector contains the sample points of the SBSD inverse EXIT curve. The width of the decoding tunnel is the difference between the weighted channel-decoder curve and the SBSD curve at each sample point. Constraint (14) ensures an open decoding tunnel, while constraints (15) and (16) guarantee the validity of the weights. As all subcodes have rate 1, no additional rate constraint needs to be considered.
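The curve-fitting idea can be sketched in Python as follows. This is not the steepest descent method of [23]: an exhaustive grid search over the weight simplex is swapped in because it is short and easy to verify, and it is only practical for a handful of subcodes. The squared-gap objective, the `margin` parameter, and the function names are assumptions for this sketch, and the curves in the usage note are synthetic numbers for illustration, not measured EXIT data.

```python
import itertools


def combine(weights, curves):
    """Weighted superposition of subcode EXIT curves sampled on a common grid."""
    n_points = len(curves[0])
    return [sum(w * c[k] for w, c in zip(weights, curves)) for k in range(n_points)]


def search_weights(curves, target, step=0.05, margin=0.0):
    """Find weights that keep the tunnel open and minimize the squared gap to `target`.

    curves : list of subcode EXIT curves (lists of equal length)
    target : samples of the source decoder's inverse EXIT curve on the same grid
    margin : minimum tunnel width required at every sample point
    Returns (weights, error), or (None, None) if no grid point keeps the tunnel open.
    """
    n = len(curves)
    steps = round(1.0 / step)
    best_err, best_w = None, None
    for combo in itertools.product(range(steps + 1), repeat=n - 1):
        if sum(combo) > steps:
            continue  # weights must be nonnegative and sum to 1
        weights = [c / steps for c in combo] + [(steps - sum(combo)) / steps]
        gaps = [m - t for m, t in zip(combine(weights, curves), target)]
        if min(gaps) < margin:
            continue  # tunnel closed at some sample point
        err = sum(g * g for g in gaps)
        if best_err is None or err < best_err:
            best_err, best_w = err, weights
    return best_w, best_err
```

With the synthetic curves `[[0.2, 0.5, 0.9], [0.6, 0.7, 0.8]]` and the target `[0.3, 0.55, 0.8]`, the search selects weights of 0.7 and 0.3. In practice, the sample grid should exclude the (1, 1) endpoint, where the two curves are meant to meet.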

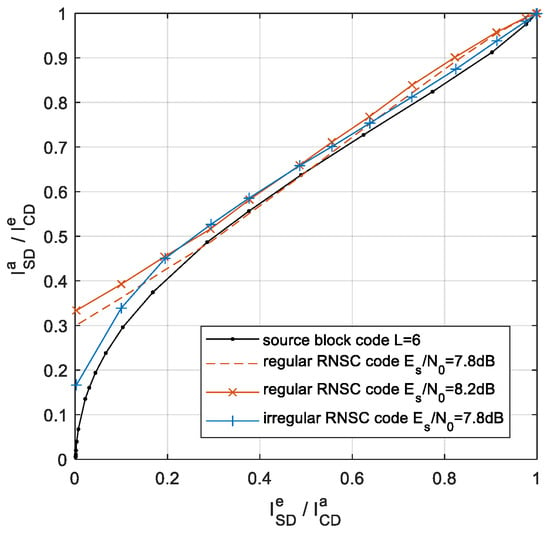

The optimization problem can be solved by the steepest descent algorithm described in [23]. As an example, the optimum weights for the source code with length L = 6 are summarized in Table 2, and the EXIT curves of the irregular and regular RNSC decoders and the SBSD are illustrated in Figure 5. With the irregular channel code, an open tunnel between the inverse EXIT curve of the SBSD and the EXIT curve of the channel decoder is obtained at a lower SNR than with the regular channel code, for which an open tunnel is not obtained until 8.2 dB. The waterfall performance of ISCD is thus improved by employing the irregular channel code.

Table 2.

Parameters of channel code matched with L = 6 source code.

Figure 5.

EXIT curves of the optimized channel code for the source code with L = 6.

5. Results and Discussion

5.1. Systems Parameters

In this section, to verify the performance of the proposed JSCC system in both symbol error rate and decoding complexity, we choose four benchmark schemes for comparison: (1) the separate source-channel code (SSCC), (2) the VLC-IrRNSC, (3) the LBC-RSC, and (4) LBC-IrRNSC. Simulation with the Gaussian distribution source [26] is performed. The discrete source symbols obey the occurrence probabilities that resulted from the 16-ary Lloyd–Max quantization of independent Gaussian distributed source. The source entropy is bits/symbol. Table 3 lists the constituent encoders of different systems. For all systems, 16QAM Gray mapping is used as the modulation scheme, and a random interleaver is used. The number of source symbols per frame is set to 5000. An additive white Gaussian noise (AWGN) channel is assumed for transmission. For a fair comparison between different systems, all systems are designed to have the same overall code rate of about R = 0.66, i.e., 2.64 bits/channel-use for 16QAM, and the Shannon limit capacity for the efficiency of 2.64 bits/channel-use is about dB. To satisfy the requirement for overall code rate, the channel code rate and source code rate are accordingly adjusted in each system.

Table 3.

Parameters of different source channel coding schemes.

The system consisting of the Huffman code and Turbo code is taken as the benchmarks of the separate source-channel code (SSCC) system. The Turbo code is used to protect the Huffman coded bits from the channel error. The Turbo code consists of two recursive systematic convolutional (RSC) codes with generator polynomial , and its output bits are punctured to obtain the code rate 2/3. At the receiver, noniterative source-channel decoding is used.

Iterative source-channel decoding is used in JSCC systems, i.e., the VLC-IrRNSC scheme, the LBC-RSC scheme, the LBC-IrRNSC, and our scheme. The VLC-IrRNSC scheme employs a VLC as the source code of and an irregular RNSC code as the channel code. In this scheme, the VLC [6] is constructed for a given free distance of , and the attained codewords have an average length . The output bits of VLC encoder are passed through an interleaver and forwarded to the irregular RNSC encoder with a rate 33/32. The irregular code is composed of RNSC subcodes and with optimum weights of 0.86 and 0.14. In the linear block code (LBC)-based JSCC schemes [13], the LBCs are generated based on the linear parity check code. The LBC-RSC scheme employs an LBC with codeword length as the source code and an 8/9 rate RSC code as the channel code. The LBC adds a single parity bit to the 4-bit quantized source symbol, and the optimized RSC code with generator polynomial also introduces additional redundancy for error correction. The LBC-IrRNSC scheme employs an LBC with codeword length as the source code and an irregular RNSC code as the channel code. The LBCs are generated based on the rate 2/3 linear parity check code in [15]. The subcodes of the irregular encoder used are and , and their optimum weights are 0.08 and 0.92. The output bits of the irregular code are randomly punctured such that the channel code rate was increased as a result of 22/21. In the proposed scheme, the optimized block code with length is generated by Algorithm 1, and the parameters of the irregular RNSC code are listed in Table 4

Table 4.

Parameters of irregular RNSC code matched with different source code.

5.2. Convergence and SER Performance

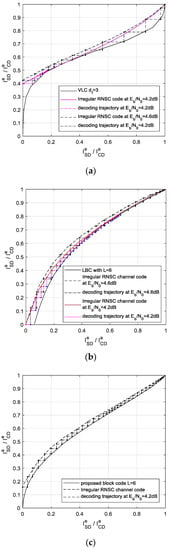

We use EXIT curves to analyze the convergence performance of the schemes in Table 3. In Figure 6a–c, the well-matched EXIT curves of the outer SBSD and the irregular channel decoder are illustrated. The decoding trajectories are also acquired by recording the mutual information at the input and output of the decoders during the Monte Carlo simulation. It can be seen that, at a bit SNR of 4.2 dB, an open EXIT tunnel is obtained and the decoding trajectory can reach the (1, 1) point in the proposed scheme. This indicates that the convergence threshold of iterative decoding is 4.2 dB. Similarly, open EXIT tunnels are obtained at 4.6 dB for both the LBC-IrRNSC scheme and the VLC-IrRNSC scheme. With the aid of the EXIT chart, the convergence threshold of each scheme is obtained, and the thresholds are listed in Table 5 for comparison. It can be concluded that the SSCC scheme has the worst convergence performance, and our scheme with L = 6 achieves the best convergence performance among the JSCC schemes.

Figure 6.

The EXIT chart of the different JSCC schemes using Irregular RNSC codes. (a) The EXIT chart of the VLC-IrRNSC scheme. (b) The EXIT chart of the LBC-IrRNSC scheme with L = 6. (c) The EXIT chart of the proposed scheme with L = 6.

Table 5.

Convergence performance of different source channel coding schemes.

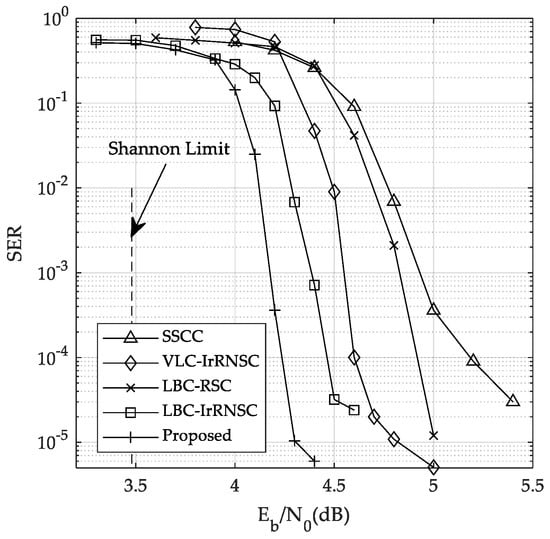

The SER performance of all the schemes is illustrated in Figure 7. It can be seen that the proposed scheme achieves the best performance among the compared schemes. The proposed scheme reaches the waterfall region within 1 dB of the Shannon limit. The simulation results agree with the EXIT chart predictions.

Figure 7.

The SER performance of different systems over the AWGN channel.

Compared to the SSCC scheme, the proposed scheme obtains about a 1 dB gain at the SER of 10−4. The parameters of source and channel encoders are jointly optimized in our scheme. Therefore, the residual redundancy in the source-coded bits is efficiently exploited by ISCD, resulting in SNR gains. In comparison with the VLC-IrRNSC scheme, our scheme has a 0.5 dB gain. The performance improvement can be attributed to the inherent synchronization of the fixed-length codes and the well-matched IrRNSC code. The VLC codes suffer from error propagation in source symbol decisions when some errors remain after iterative decoding, while our fixed-length block code avoids the problem. Additionally, the fixed-length codes also have a low decoding complexity.

Compared with the LBC-RSC scheme and the LBC-IrRNSC scheme, our scheme obtains gains of 0.3 dB and 0.8 dB, respectively, at an SER of $10^{-4}$. The improvement is obtained by optimizing the error-correction properties of the fixed-length codewords and the irregular channel code without decreasing the transmission efficiency.
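Gains quoted at a fixed SER are typically read off by interpolating each simulated curve at the target SER. The following is a minimal sketch of that step, assuming linear interpolation in log10(SER) between simulated points; the function name `snr_at_target_ser` is hypothetical, and the sample curves in the usage lines are synthetic values, not data from Figure 7.

```python
import math
from typing import Sequence

def snr_at_target_ser(snr_db: Sequence[float],
                      ser: Sequence[float],
                      target: float) -> float:
    """Return the SNR (dB) at which a decreasing SER curve crosses 'target'.
    Interpolates linearly in log10(SER); points with SER == 0 are not usable."""
    for i in range(len(snr_db) - 1):
        y0, y1 = ser[i], ser[i + 1]
        if y0 >= target >= y1 and y1 > 0.0:   # target lies inside this segment
            l0, l1, lt = math.log10(y0), math.log10(y1), math.log10(target)
            if l0 == l1:                       # flat segment sitting on the target
                return snr_db[i]
            frac = (l0 - lt) / (l0 - l1)
            return snr_db[i] + frac * (snr_db[i + 1] - snr_db[i])
    raise ValueError("target SER is not bracketed by the curve")

if __name__ == "__main__":
    # Synthetic curves, for illustration only.
    curve_a = ([4.0, 4.5, 5.0], [1e-2, 1e-4, 1e-6])
    curve_b = ([5.0, 5.5, 6.0], [1e-2, 1e-3, 1e-5])
    gain = snr_at_target_ser(*curve_b, 1e-4) - snr_at_target_ser(*curve_a, 1e-4)
    print(f"gain at SER 1e-4: {gain:.2f} dB")
```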

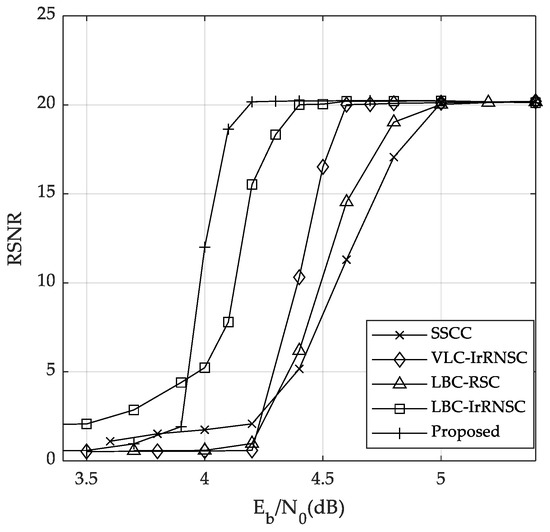

For the quantized source symbols, the reconstruction SNR (RSNR) is shown in Figure 8. In the region of dB, our proposed scheme with L = 6 also has the best RSNR performance among the schemes. This result can be attributed to its superior SER performance.

Figure 8.

The RSNR performance of different systems over the AWGN channel.
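For orientation, the RSNR of a reconstructed sequence can be computed as sketched below. The sketch assumes the common definition RSNR = 10 log10(Σ u² / Σ (u − û)²); if the definition given earlier in the paper differs, that definition takes precedence. The function name `rsnr_db` is hypothetical.

```python
import math
from typing import Sequence

def rsnr_db(original: Sequence[float], reconstructed: Sequence[float]) -> float:
    """Reconstruction SNR in dB: signal energy over reconstruction-error energy."""
    if len(original) != len(reconstructed) or len(original) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    signal = sum(u * u for u in original)
    error = sum((u - v) ** 2 for u, v in zip(original, reconstructed))
    if error == 0.0:
        return math.inf                     # perfect reconstruction
    return 10.0 * math.log10(signal / error)

if __name__ == "__main__":
    u = [1.0, -1.0, 2.0, -2.0]
    u_hat = [1.1, -1.1, 2.1, -2.1]          # illustrative reconstruction
    print(f"RSNR = {rsnr_db(u, u_hat):.2f} dB")
```

A symbol error replaces a sample by a different quantization level, which enlarges the error energy; this is why a lower SER translates into a higher RSNR.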

5.3. Decoding Complexity Analysis

We measured the decoding time of each scheme on a PC with an Intel Core i5 3.1 GHz CPU and 4 GB of RAM. The Monte Carlo simulations were run in MATLAB 2018, and all schemes were executed on a single core. The simulated decoding times under different SNRs are listed in Table 6.

Table 6.

Simulation decoding time of different source channel coding schemes.

For the JSCC schemes with a source block code, namely the LBC-RSC scheme, the LBC-IrRNSC scheme, and our scheme, the decoding time ranges from 8.7 s to 19.5 s. The SSCC scheme takes about 20 s for each frame, whereas the decoding time of the VLC-IrRNSC scheme ranges from 122.5 s to 217.9 s depending on the SNR. This indicates that the proposed scheme has a low decoding complexity.

The decoding complexity mainly depends on the computational complexity of the constituent decoders and on the number of decoding iterations. In our scheme, the decoder of the source block code is realized by Equation (1), and its decoding complexity depends on the size of the source alphabet and the codeword length. The channel decoder employs the BCJR algorithm. In our simulations, the size of the source alphabet is 16 and the codeword length satisfies . Compared to the convolutional channel decoders, which run the BCJR algorithm on a long code trellis, the source block decoder has a lower computational complexity.
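The dependence on alphabet size and codeword length can be made concrete with a small sketch. Equation (1) is not reproduced here, so the code assumes a generic memoryless soft-bit source decoder that combines bit LLRs (defined as ln P(b=0)/P(b=1)) with symbol priors; the codebook and priors in the usage lines are hypothetical toy values, not the code designed in this paper. Each symbol decision costs on the order of M·L additions for an alphabet of size M and codeword length L.

```python
import math
from typing import List, Sequence

def symbol_posteriors(llrs: Sequence[float],
                      codebook: Sequence[Sequence[int]],
                      priors: Sequence[float]) -> List[float]:
    """A posteriori symbol probabilities from bit LLRs and symbol priors.
    One pass over M codewords of L bits each: O(M * L) operations."""
    metrics = []
    for word, prior in zip(codebook, priors):
        metric = math.log(prior)
        for llr, bit in zip(llrs, word):
            # log P(b = bit) up to a constant shared by all codewords
            metric += 0.5 * llr * (1 - 2 * bit)
        metrics.append(metric)
    peak = max(metrics)                              # for numerical stability
    weights = [math.exp(m - peak) for m in metrics]
    total = sum(weights)
    return [w / total for w in weights]

def map_symbol(posteriors: Sequence[float]) -> int:
    """Index of the most probable symbol."""
    return max(range(len(posteriors)), key=lambda k: posteriors[k])

if __name__ == "__main__":
    # Toy example: 4 symbols, even-parity codewords of length 3 (hypothetical).
    codebook = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
    priors = [0.4, 0.3, 0.2, 0.1]
    post = symbol_posteriors([4.0, -4.0, -4.0], codebook, priors)
    print(map_symbol(post), [round(p, 4) for p in post])
```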

The VLC decoder is modeled by a bit-level trellis whose state space is the set of internal nodes of the VLC binary tree. The size of a trellis can be considered a measure of its computational decoding complexity [35]. Because the maximum constraint length of the channel code is limited to , the average state number of the channel code satisfies . The VLC with free distance has states, which is at least 10 times . Hence, the decoding complexity of the VLC decoder is much higher than that of the channel decoder and the source block decoder. Therefore, the VLC-IrRNSC scheme takes the longest decoding time.
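The state count used in this comparison can be sketched as follows: the internal nodes of a VLC code tree are exactly the distinct proper prefixes of its codewords (the empty prefix being the root), while a convolutional code with m binary memory elements has 2^m trellis states. The function names and the example code are hypothetical; the example is a small Huffman-style code, not the VLC used in the simulations.

```python
from typing import Iterable, Set

def vlc_trellis_states(codewords: Iterable[str]) -> int:
    """Number of internal nodes (root included) of the binary code tree,
    i.e., the number of distinct proper prefixes of the codewords."""
    internal: Set[str] = set()
    for word in codewords:
        for k in range(len(word)):       # word[:0] == "" is the root
            internal.add(word[:k])
    return len(internal)

def conv_code_states(memory: int) -> int:
    """Trellis states of a binary convolutional code with 'memory' delay elements."""
    return 2 ** memory

if __name__ == "__main__":
    toy_vlc = ["0", "10", "110", "111"]   # illustrative code only
    print(vlc_trellis_states(toy_vlc), conv_code_states(2))
```

Codes with longer codewords, such as VLCs designed for a large free distance, have deeper trees and therefore more internal nodes, which is consistent with the larger trellis discussed above.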

6. Conclusions

This paper presents a novel, low-complexity joint source-channel code system consisting of a fixed-length source block code and an irregular channel code. Block codes with the minimum Hamming distance are designed to guarantee the convergence of ISCD and to reduce the SER of the source symbol decision. In addition, a well-matched irregular channel code is employed to further improve the waterfall performance of ISCD. The irregular channel code is composed of unity-rate RNSC codes with small constraint lengths and therefore has a low decoding complexity. EXIT charts are used to analyze the convergence behavior of ISCD. The proposed system achieves near-capacity performance by exploiting both the statistical redundancy of the source and the redundancy artificially introduced by the source code. Simulation results for Gaussian-distributed sources show that the proposed system outperforms the SSCC system by more than 1 dB. Compared to the JSCC system based on LBC, the optimized block code has better error-correction properties. Furthermore, compared with the JSCC system based on VLC, the proposed scheme achieves a gain of about 0.5 dB and also reduces the decoding complexity. Our future work will extend the JSCC system to other nonstandard coding channels and investigate its use in multimedia applications.

Author Contributions

Conceptualization, methodology, and writing: all authors; software and data curation: H.B.; supervision and project administration: C.Z. and S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61571416 and 61271282, and the Award Foundation of the Chinese Academy of Sciences, grant number 2017-6-17.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shannon, C.E.; Weaver, W. The Mathematical Theory of Communication; University of Illinois Press: Champaign, IL, USA, 1949.

- Bauer, R.; Hagenauer, J. On variable length codes for iterative source/channel decoding. In Proceedings of the DCC 2001, Data Compression Conference, Snowbird, UT, USA, 27–29 March 2001; pp. 273–282.

- Hagenauer, J.; Bauer, R. The turbo principle in joint source channel decoding of variable length codes. In Proceedings of the 2001 IEEE Information Theory Workshop (Cat. No.01EX494), Cairns, QLD, Australia, 2–7 September 2001; pp. 33–35.

- Clevorn, T.; Brauers, J.; Adrat, M.; Vary, P. Turbo decodulation: Iterative combined demodulation and source-channel decoding. IEEE Commun. Lett. 2005, 9, 820–822.

- Jaspar, X.; Vandendorpe, L. Design and performance analysis of joint source-channel turbo schemes with variable length codes. IEEE Int. Conf. Commun. 2005, 1, 526–530.

- Wu, T.Y.; Chen, P.N. On the Design of Variable-Length Error-Correcting Codes. IEEE Trans. Commun. 2013, 61, 3553–3565.

- Huang, C.; Wu, T.; Chen, P.; Alajaji, F.; Han, Y.S. An Efficient Tree Search Algorithm for the Free Distance of Variable-Length Error-Correcting Codes. IEEE Commun. Lett. 2018, 22, 474–477.

- Chen, Y.; Wu, F.; Li, C.; Varshney, P.K. An Efficient Construction Strategy for Near-Optimal Variable-Length Error-Correcting Codes. IEEE Commun. Lett. 2019, 23, 398–401.

- Chen, Y.-M.; Wu, F.-T.; Li, C.-P.; Varshney, P.K. On the Design of Near-Optimal Variable-Length Error-Correcting Codes for Large Source Alphabets. IEEE Trans. Commun. 2020, 68, 7896–7910.

- Adrat, M.; Vary, P. Iterative Source-Channel Decoding: Improved System Design Using EXIT Charts. EURASIP J. Adv. Signal Process. 2005, 2005, 178541.

- Schmalen, L.; Adrat, M.; Clevorn, T.; Vary, P. EXIT Chart Based System Design for Iterative Source-Channel Decoding with Fixed-Length Codes. IEEE Trans. Commun. 2011, 59, 2406–2413.

- Schmalen, L.; Vary, P. Iterative Source–Channel Decoding with Reduced Error Floors. IEEE J. Sel. Top. Signal Process. 2011, 5, 1577–1587.

- Khalil, A.; Minallah, N.; Awan, M.A.; Khan, H.U.; Khan, A.S.; Rehman, A.U. On the Performance of Wireless Video Communication Using Iterative Joint Source Channel Decoding and Transmitter Diversity Gain Technique. Wirel. Commun. Mob. Comput. 2020, 2020, 88.

- Minallah, N.; Ullah, K.; Ullah, K.; Khan, I.U.; Khattak, K.S. Efficient Wireless Video Communication using Sophisticated Channel Coding and Transmitter Diversity Gain Technique. 2020. Available online: https://assets.researchsquare.com/files/rs-35714/v1_covered.pdf?c=1631836661 (accessed on 10 July 2021).

- Khan, H.U.; Minallah, N.; Masood, A.; Khalil, A.; Frnda, J.; Nedoma, J. Performance Analysis of Sphere Packed Aided Differential Space-Time Spreading with Iterative Source-Channel Detection. Sensors 2021, 21, 5461.

- Minallah, N.; Butt, M.F.U.; Khan, I.U.; Ahmed, I.; Khattak, K.S.; Qiao, G.; Liu, S. Analysis of Near-Capacity Iterative Decoding Schemes for Wireless Communication Using EXIT Charts. IEEE Access 2020, 8, 124424–124436.

- Minallah, N.; Ahmed, I.; Frnda, J.; Khattak, K.S. Averting BER Floor with Iterative Source and Channel Decoding for Layered Steered Space-Time Codes. Sensors 2021, 21, 6502.

- Bocharova, I.E.; Fabregas, A.G.i.; Kudryashov, B.D.; Martinez, A.; Campo, A.T.; Vazquez-Vilar, G. Low-complexity fixed-to-fixed joint source-channel coding. In Proceedings of the 8th International Symposium on Turbo Codes and Iterative Information Processing (ISTC), Bremen, Germany, 18–22 August 2014; pp. 132–136.

- Dong, Y.; Niu, K.; Dai, J.; Wang, S.; Yuan, Y. Joint source and channel coding using double polar codes. IEEE Commun. Lett. 2021, 25, 2810–2814.

- He, J.; Wang, L.; Chen, P. A joint source and channel coding scheme base on simple protograph structured codes. In Proceedings of the 2012 International Symposium on Communications and Information Technologies (ISCIT), Gold Coast, QLD, Australia, 2–5 October 2012; pp. 65–69.

- Chen, C.; Wang, L.; Xiong, Z. Matching criterion between source statistics and source coding rate. IEEE Commun. Lett. 2015, 19, 1504–1507.

- Wu, C.; Chung, W. Iterative source-channel decoding design using distortion based index assignment and joint redundant information. In Proceedings of the SiPS 2013, Taipei, Taiwan, 16–18 October 2013; pp. 95–99.

- Tuchler, M.; Hagenauer, J. EXIT charts of irregular codes. In Proceedings of the Conference on Information Sciences and Systems, Princeton University, Princeton, NJ, USA, 20–22 March 2002.

- Minallah, N.; Ullah, K.; Frnda, J.; Cengiz, K.; Javed, M.A. Transmitter Diversity Gain Technique Aided Irregular Channel Coding for Mobile Video Transmission. Entropy 2021, 23, 235.

- Schmalen, L.; Vary, P. Error resilient turbo compression of source codec parameters using inner irregular codes. In Proceedings of the 2010 International ITG Conference on Source and Channel Coding (SCC), Siegen, Germany, 18–21 January 2010; pp. 1–6.

- Maunder, R.G.; Hanzo, L. Near-capacity irregular variable length coding and irregular unity rate coding. IEEE Trans. Wirel. Commun. 2009, 8, 5500–5507.

- Hanzo, L. Near-Capacity Variable-Length Coding: Regular and EXIT-Chart-Aided Irregular Designs; John Wiley & Sons: Hoboken, NJ, USA, 2010; Volume 20.

- Maunder, R.G.; Zhang, W.; Wang, T.; Hanzo, L. A Unary Error Correction Code for the Near-Capacity Joint Source and Channel Coding of Symbol Values from an Infinite Set. IEEE Trans. Commun. 2013, 61, 1977–1987.

- Ashikhmin, A.; Kramer, G.; Brink, S.T. Extrinsic information transfer functions: Model and erasure channel properties. IEEE Trans. Inf. Theory 2004, 50, 2657–2673.

- Bahl, L.; Cocke, J.; Jelinek, F.; Raviv, J. Optimal decoding of linear codes for minimizing symbol error rate (corresp.). IEEE Trans. Inf. Theory 1974, 20, 284–287.

- Jaspar, X.; Guillemot, C.; Vandendorpe, L. Joint Source–Channel Turbo Techniques for Discrete-Valued Sources: From Theory to Practice. Proc. IEEE 2007, 95, 1345–1361.

- El-Hajjar, M.; Hanzo, L. EXIT Charts for System Design and Analysis. IEEE Commun. Surv. Tutor. 2014, 16, 127–153.

- Schreckenbach, F.; Gortz, N.; Hagenauer, J.; Bauch, G. Optimization of symbol mappings for bit-interleaved coded Modulation with iterative decoding. IEEE Commun. Lett. 2003, 7, 593–595.

- Nguyen, H.V.; Xu, C.; Ng, S.X.; Hanzo, L. Near-Capacity Wireless System Design Principles. IEEE Commun. Surv. Tutor. 2015, 17, 1806–1833.

- Jaspar, X.; Vandendorpe, L. Joint source-channel codes based on irregular turbo codes and variable length codes. IEEE Trans. Commun. 2008, 56, 1824–1835.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).