Abstract

In an attempt to mitigate the effects of the COVID-19 lockdown, most countries have recently authorized and promoted the adoption of e-learning and remote teaching technologies, often with the support of teleconferencing platforms. Unfortunately, not all students can benefit from such a surrogate of their usual school. We were asked to devise a way to allow a community of children affected by Rett syndrome, a genetic disorder that leaves them unable to communicate verbally, in writing or by gestures, to actively participate in remote rehabilitation and special education sessions by exploiting eye-gaze tracking. As not all subjects have access to commercial eye-tracking devices, we investigated new ways to facilitate access to eye gaze-based interaction for this specific case. The adopted communication platform is videoconferencing software, so all we had at our disposal was a live video stream of the child. As a solution, we developed software (named SWYG) that runs only on the “operator” side of the communication, alongside the videoconferencing software, and does not require any additional software to be installed on the child’s computer. The preliminary results are very promising and the software is ready to be deployed on a larger scale. At the time of writing, several children are finally able to communicate with their caregivers from home, without relying on expensive and cumbersome devices.

1. Introduction

Since its outbreak, the COVID-19 pandemic has had disruptive effects on the school education of millions of children all around the world. The loss of learning time for students of every age varies from country to country, according to the local patterns of contagion and the consequent government actions; however, the effects will be severe everywhere. In an attempt to mitigate such effects, most countries have authorized and promoted the adoption of e-learning and remote teaching technologies, often with the support of teleconferencing platforms such as Microsoft Teams, Zoom, Cisco Webex, Google Meet and others. With the support of such platforms, both teachers and students have gradually and partially recovered their previous habits, meeting daily in “virtual classrooms” where they can interact almost as in person, talking to and seeing each other. On the one hand, the effectiveness of such a surrogate of the “normal” school is still to be assessed and is widely criticized; on the other hand, it is undoubtedly an effective emergency solution that could be promptly deployed thanks to the widespread availability of its enabling technologies.

Unfortunately, not all students can benefit from the adoption of teleconferencing platforms as a surrogate of their usual school. Children and young students with disabilities face severe barriers that, in several cases, prevent them from accessing any kind of remote education at all [1]. Among children with disabilities, those with Multiple Disabilities (MD) face the greatest difficulties and, at the same time, need remote education and interventions more than others, as they cannot receive the usual help from caregivers and professionals. People affected by MD have severe difficulty communicating their needs, moving their bodies freely to access and engage with their world, and learning abstract concepts and ideas. The lockdown is heavily affecting their lives, as it deprives them of the spark of light that comes from their periodic meetings with caregivers and, in some cases, even with their loved ones. Moreover, rehabilitation interventions are in general more effective during the early stages after the onset of the disease [2,3]; therefore, the ability to continue cognitive rehabilitation and special education interventions becomes crucial.

Rett Syndrome (RTT) is a complex genetic disorder, caused by mutations in the gene encoding methyl CpG binding protein 2 (MECP2) [4], that induces severe multiple disabilities. As RTT is a rare disease, affecting 1 in 10,000 girls by age 12 (almost all RTT subjects are female), there are few specialized centers and therapists, and accessing the needed treatments was a major challenge for the subjects and their families even before the lockdown.

RTT subjects suffer from severe impairments in physical interaction, which in some cases leave them able to communicate and interact only by means of their eye gaze [5]. As a consequence, participating in online, interactive educational activities is particularly difficult for them. On the other hand, technology-mediated cognitive rehabilitation and special education approaches have been shown to be very effective in treating RTT subjects [6,7]. The influence of digital media on Typically Developing (TD) children has been extensively investigated in the last few years, due to the need for an adequate balance between their use and other activities [6,8,9,10,11,12,13]. In the case of RTT subjects, however, studies examining the effectiveness of multimedia technologies have shown very positive effects on the subjects’ cognitive, communicative and motivational abilities [14]. Furthermore, some studies have found that subjects with RTT have the prerequisites underlying Theory of Mind (ToM) abilities, such as gaze control and pointing [15,16,17]. Other studies have shown that ToM is not impaired in RTT [18].

For all these theoretical reasons, we think that RTT subjects may benefit from the possibility of actively joining social school interactions, as these interactions may offer opportunities for games, surprises, learning and so on. However, as the interaction between the subject and the digital platform must be based mostly on eye-gaze direction estimation, specialized hardware must be acquired and installed on the subject’s side. Such hardware can be expensive and adds a further degree of complexity to already strained family routines. On the one hand, innovative software and hardware platforms are being developed to deliver increasingly effective rehabilitation and educational services in the near future [19,20]; on the other hand, the current COVID-19 lockdown and, more generally, the uneven geographical distribution and “physical” availability of the needed rehabilitation and special education services have recently produced a real and immediate emergency.

In the case of TD subjects, teleconferencing platforms and applications could be adopted easily, mainly because the hardware and technological infrastructure supporting them were already available to most families. This is not the case for RTT subjects and, in general, for many MD subjects, because such teleconferencing applications do not support eye-gaze tracking.

In this paper we describe the motivations, design, implementation and early results of a remote eye-tracking architecture that aims to enhance the most common teleconferencing and video chat applications with the ability to remotely estimate the eye-gaze direction of their users. The proposed approach enables the user on one side of the communication channel (the operator) to automatically estimate the eye-gaze direction of the user on the other side (the subject), thus enabling eye gaze-based interaction without requiring the subject to install additional software or hardware. Moreover, the data acquired through this software can be saved and used as the basis for machine learning applications and advanced analysis techniques that provide decision-making support to therapists, educators or researchers [21].

2. Materials and Methods

The target population for this work is a community of about 300 girls diagnosed with RTT in Italy and their families. From this community, we considered the subjects who communicate only with eye gaze, a pool of about 270 subjects. These subjects cannot actively participate in remote education and rehabilitation programs and are therefore at risk of not receiving the treatments they need during the lockdown.

We propose a solution named SWYG (Speak With Your Gaze) that requires only the operator to install some additional software (no special hardware is needed) and use it together with the preferred Video Conferencing System (VCS), while the subjects are not required to install any additional software or hardware.

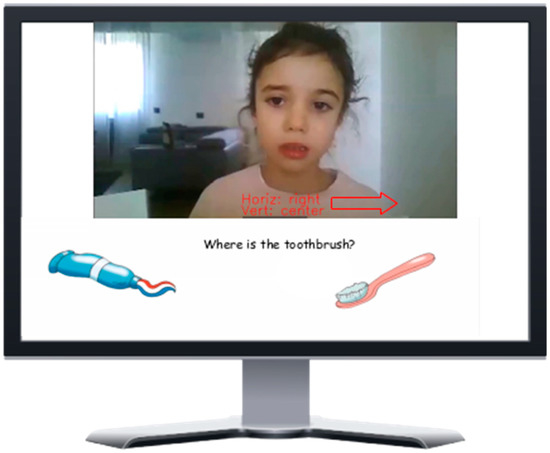

SWYG (the word also means “silent” in Afrikaans) runs alongside the VCS: it acquires the content of the videoconferencing window, analyses it in near real-time and visualizes the eye-gaze direction of the person appearing in the window. As a result, the operator can easily and promptly receive feedback from the subject’s eye gaze (Figure 1).

Figure 1.

With SWYG (Speak With Your Gaze), the operator receives an automatic indication of the subject’s eye-gaze direction. Note that the subject is actually looking to her right.

SWYG is composed of four modules: the Video Acquisition Module (VAM); the Face Detection and Analysis Module (FDAM); the Eye-Gaze Tracking Module (EGTM) and the Visualization Module (VM).

The VAM identifies the VCS window through its application name and acquires its content (i.e., the video). It is based on the well-established open-source screencasting library “Screen Capture Lite”, freely available online [22].

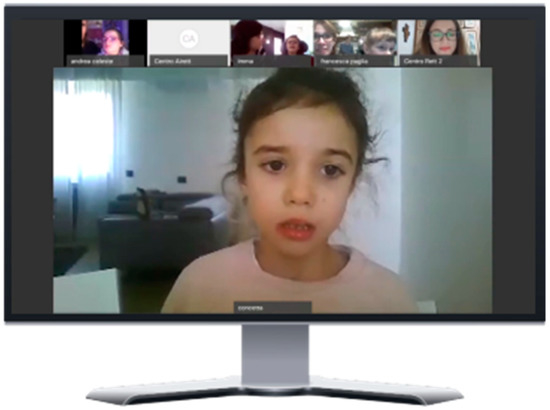

The FDAM receives the video stream from the VAM and processes it, detecting the largest face in each frame and estimating its orientation. Only the largest face is considered because other persons might be present during the session at the subject’s place and, moreover, the video from the VCS often shows the user currently selected in a larger frame, and several other users in smaller frames (Figure 2).

Figure 2.

Most Video Conferencing Systems (VCS) show several users at a time on the screen.
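The largest-face rule used by the FDAM can be sketched in a few lines. This is a minimal illustration rather than the SWYG code: it assumes the detector returns rectangles in the (x, y, width, height) layout used by OpenCV’s cascade detectors, and the function name `largest_face` is hypothetical.

```python
from typing import List, Optional, Tuple

# A face box as (x, y, width, height); assumed to match the layout of
# OpenCV cascade detections.
Box = Tuple[int, int, int, int]


def largest_face(boxes: List[Box]) -> Optional[Box]:
    """Return the face box with the largest area, or None if no face was found.

    Smaller boxes are assumed to belong to bystanders at the subject's place
    or to thumbnail tiles of other VCS participants, so they are discarded.
    """
    if not boxes:
        return None
    return max(boxes, key=lambda b: b[2] * b[3])


# Example: a main speaker tile plus two thumbnail tiles.
detections = [(40, 60, 220, 240), (600, 20, 60, 64), (600, 110, 58, 60)]
print(largest_face(detections))  # (40, 60, 220, 240)
```

When two boxes have the same area, `max` keeps the first one, so the choice is deterministic for a given detector output.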

In the FDAM, face detection is performed with the effective and efficient face detector from the OpenCV library [23]. The face orientation is then estimated with the approach described in [16,24], and the eyes are detected using the DLib machine learning library [25]. The face position and orientation, together with the eye positions relative to the face, are then passed to the EGTM, which performs the eye-gaze estimation.
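The paper does not describe the data exchanged between the FDAM and the EGTM beyond the quantities listed above. As a hypothetical sketch, the per-frame hand-off could be a small immutable record; all class and field names below are illustrative assumptions, and head orientation is assumed to be expressed as yaw, pitch and roll angles in degrees.

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass(frozen=True)
class HeadPose:
    """Head orientation in degrees (hypothetical convention)."""
    yaw: float    # left/right rotation
    pitch: float  # up/down rotation
    roll: float   # in-plane rotation


@dataclass(frozen=True)
class FaceObservation:
    """Hypothetical per-frame record passed from the FDAM to the EGTM."""
    frame_index: int
    face_box: Box        # in frame coordinates
    pose: HeadPose
    left_eye_box: Box    # relative to the top-left corner of face_box
    right_eye_box: Box   # relative to the top-left corner of face_box

    def eye_box_in_frame(self, eye: str) -> Box:
        """Convert one eye box from face-relative to frame coordinates."""
        if eye == "left":
            ex, ey, ew, eh = self.left_eye_box
        elif eye == "right":
            ex, ey, ew, eh = self.right_eye_box
        else:
            raise ValueError("eye must be 'left' or 'right'")
        fx, fy, _, _ = self.face_box
        return (fx + ex, fy + ey, ew, eh)


obs = FaceObservation(0, (40, 60, 220, 240), HeadPose(12.0, -3.0, 1.5),
                      (50, 80, 40, 20), (130, 80, 40, 20))
print(obs.eye_box_in_frame("right"))  # (170, 140, 40, 20)
```

Keeping the eye boxes relative to the face lets the EGTM crop them from the same frame without re-running detection, and `frozen=True` prevents a downstream module from mutating an observation that another module may still be reading.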

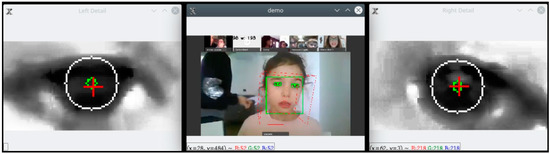

As SWYG must run in near real-time on a general-purpose, mid-range notebook, we had to respect strict computational constraints. In particular, the EGTM should not require a powerful Graphics Processing Unit (GPU) or specialized hardware for Deep Neural Network computation. We therefore developed our own eye-gaze tracking software, which is still undergoing final testing and will be open-sourced soon. Our EGTM is designed to work under both natural and near-infrared illumination and does not rely on special hardware. The main difficulties to overcome were the low resolution of the input eye image, the non-uniform illumination, and the dependency of the eye-gaze direction on both head and camera orientation. We relied on the accurate head orientation and eye position estimates produced by the FDAM to address the first and third issues, and on an accurate local histogram equalization to deal with the non-uniform illumination. A preliminary version of the tracker is demonstrated in Figure 3, where the face orientation and the positions of the left and right pupils are shown.

Figure 3.

Eye-gaze tracking and head orientation estimation.
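The paper does not say which local equalization variant the EGTM uses. The sketch below assumes the simplest one: each tile of the eye image is histogram-equalized independently, with no contrast limiting and no blending between tiles (CLAHE, available in OpenCV as `cv2.createCLAHE`, adds both). Images are plain lists of 8-bit gray levels to keep the example dependency-free, and the `tile` parameter is hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale, values 0..255, row-major


def equalize_tile(img: Image, r0: int, r1: int, c0: int, c1: int, out: Image) -> None:
    """Histogram-equalize the block img[r0:r1][c0:c1] and write it into out."""
    hist = [0] * 256
    for r in range(r0, r1):
        for c in range(c0, c1):
            hist[img[r][c]] += 1
    n = (r1 - r0) * (c1 - c0)
    cdf, running = [0] * 256, 0
    for v in range(256):
        running += hist[v]
        cdf[v] = running
    cdf_min = next(x for x in cdf if x > 0)  # count of the darkest level present
    for r in range(r0, r1):
        for c in range(c0, c1):
            v = img[r][c]
            if n == cdf_min:  # flat tile: nothing to stretch
                out[r][c] = v
            else:
                out[r][c] = round((cdf[v] - cdf_min) * 255 / (n - cdf_min))


def local_equalize(img: Image, tile: int = 8) -> Image:
    """Equalize each tile x tile block independently (no blending between tiles)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r0 in range(0, h, tile):
        for c0 in range(0, w, tile):
            equalize_tile(img, r0, min(r0 + tile, h), c0, min(c0 + tile, w), out)
    return out


# A dark half and a bright half are each stretched to the full range.
print(local_equalize([[10, 20, 200, 210], [10, 20, 200, 210]], tile=2))
# [[0, 255, 0, 255], [0, 255, 0, 255]]
```

Because each region is stretched on its own, a shadow across one side of the face no longer compresses the gray levels of the other side; the visible seams at tile borders are what CLAHE’s interpolation is designed to remove.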

Once the pose (i.e., position and spatial orientation) of the head has been estimated and the eyes have been detected, each eye is analyzed and the relative pupil orientation is determined by a correlation matching algorithm enhanced with a Bayesian approach that constrains the position of the pupil in the eye image. Figure 4 illustrates this very simple algorithm. Each eye image undergoes histogram equalization and gray-level filtering to improve image quality; then a correlation matching filter is applied, producing an image in which the points with a higher probability of being the center of the pupil have higher brightness. This likelihood is then weighted by a 2D Gaussian mask determined, for each frame and each eye, from the size and shape of the eye image in that frame. This mask acts as a “prior” that constrains the position of the pupil within the eye according to the eye’s size and shape. Finally, the point with the Maximum A Posteriori (MAP) probability of being the center of the pupil is selected.

Figure 4.

The eye-gaze tracking process pipeline.
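The likelihood-times-prior step described above can be sketched as follows. This is an illustrative reconstruction, not the authors’ tracker: it assumes zero-mean normalized cross-correlation as the matching score (clipped at zero so that it can serve as a likelihood), a dark-pupil template supplied by the caller, and a Gaussian prior centred on the eye image whose spread is a hypothetical fraction `sigma_frac` of the eye height and width.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale, row-major


def ncc_map(eye: Image, tmpl: Image) -> Image:
    """Zero-mean normalized cross-correlation of tmpl at every valid offset.

    Entry [r][c] scores the template with its top-left corner at (r, c).
    Negative scores are clipped to 0 so the map can act as a likelihood.
    """
    th, tw = len(tmpl), len(tmpl[0])
    t_vals = [v for row in tmpl for v in row]
    t_mean = sum(t_vals) / len(t_vals)
    t_dev = [v - t_mean for v in t_vals]
    t_norm = math.sqrt(sum(d * d for d in t_dev))
    scores = []
    for r in range(len(eye) - th + 1):
        row_scores = []
        for c in range(len(eye[0]) - tw + 1):
            patch = [eye[r + i][c + j] for i in range(th) for j in range(tw)]
            p_mean = sum(patch) / len(patch)
            p_dev = [v - p_mean for v in patch]
            denom = t_norm * math.sqrt(sum(d * d for d in p_dev))
            ncc = sum(a * b for a, b in zip(t_dev, p_dev)) / denom if denom else 0.0
            row_scores.append(max(ncc, 0.0))
        scores.append(row_scores)
    return scores


def gaussian_prior(map_h: int, map_w: int, eye_h: int, eye_w: int,
                   sigma_frac: float = 0.25) -> Image:
    """2D Gaussian centred on the score map; its spreads scale with the eye size."""
    cy, cx = (map_h - 1) / 2, (map_w - 1) / 2
    sy, sx = sigma_frac * eye_h, sigma_frac * eye_w
    return [[math.exp(-((r - cy) ** 2 / (2 * sy ** 2) + (c - cx) ** 2 / (2 * sx ** 2)))
             for c in range(map_w)] for r in range(map_h)]


def map_pupil_center(eye: Image, tmpl: Image) -> Tuple[int, int]:
    """(row, col) in eye-image coordinates of the MAP pupil centre."""
    th, tw = len(tmpl), len(tmpl[0])
    if th > len(eye) or tw > len(eye[0]):
        raise ValueError("template larger than eye image")
    like = ncc_map(eye, tmpl)
    prior = gaussian_prior(len(like), len(like[0]), len(eye), len(eye[0]))
    best, best_rc = -1.0, (0, 0)
    for r, row in enumerate(like):
        for c, lik in enumerate(row):
            post = lik * prior[r][c]  # unnormalized posterior
            if post > best:
                best, best_rc = post, (r, c)
    # Shift from the template's top-left corner to its centre.
    return best_rc[0] + th // 2, best_rc[1] + tw // 2


# Synthetic 9x15 eye: bright sclera (200) with a dark 3x3 pupil centred at (4, 10).
eye = [[200.0] * 15 for _ in range(9)]
for r in range(3, 6):
    for c in range(9, 12):
        eye[r][c] = 30.0
# 5x5 template: dark 3x3 disc on a bright border.
tmpl = [[30.0 if 1 <= r <= 3 and 1 <= c <= 3 else 200.0 for c in range(5)] for r in range(5)]
print(map_pupil_center(eye, tmpl))  # (4, 10)
```

Without the prior, any dark blob of roughly pupil size near the border of a loosely cropped eye image (an eyelash shadow, part of the eyebrow) could win the argmax; multiplying by the Gaussian favours candidates near the middle of the eye, which is what turns the correlation peak into a MAP estimate.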

The orientations of the two eyes are then averaged to obtain a more reliable estimate of the eye-gaze direction. An ad hoc calibration procedure is applied to map the averaged pupil direction onto the screen of the device. As the subject cannot be asked to cooperate with a lengthy calibration procedure, only 3 calibration points are used in our case: center, left and right. Each subject is asked to fixate each calibration point for a few seconds, so that a set of discrimination parameters can be acquired. The accuracy obtained, initially measured over about 100 tests with 8 different non-RTT subjects, is 7 degrees of visual angle on average, which is by no means comparable with that of modern hardware-based gaze trackers [26]; however, we judged it acceptable for our purposes.
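The paper gives no details of its “discrimination parameters”. One plausible reading, sketched below purely as an assumption, is a nearest-centroid classifier: the two eyes’ horizontal pupil offsets are averaged, the averages recorded while the subject fixates each of the three calibration targets are reduced to one mean per target, and later samples are assigned to the closest mean. Offsets are assumed to be normalized to the eye width, and all function names and sample values are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def averaged_offset(left_eye: float, right_eye: float) -> float:
    """Average of the two eyes' horizontal pupil offsets.

    Offsets are assumed normalized to the eye width (-1 = leftmost position
    in the eye image, +1 = rightmost).
    """
    return (left_eye + right_eye) / 2


def calibrate(samples: Dict[str, List[float]]) -> Dict[str, float]:
    """One centroid per calibration target: the mean averaged offset."""
    return {target: mean(values) for target, values in samples.items()}


def classify(offset: float, centroids: Dict[str, float]) -> str:
    """Assign an averaged offset to the nearest calibration centroid."""
    return min(centroids, key=lambda target: abs(offset - centroids[target]))


# Offsets recorded while the subject fixated each of the 3 calibration points.
samples = {
    "left":   [averaged_offset(-0.42, -0.38), averaged_offset(-0.40, -0.36)],
    "center": [averaged_offset(0.02, -0.02), averaged_offset(0.00, 0.04)],
    "right":  [averaged_offset(0.35, 0.41), averaged_offset(0.39, 0.37)],
}
centroids = calibrate(samples)
print(classify(averaged_offset(-0.30, -0.26), centroids))  # left
```

Because each centroid is labeled by the target the subject was asked to look at, whether or not the video is mirrored is absorbed by the calibration itself. For scale, if the subject sits about 60 cm from the screen (an assumed distance, not reported in the paper), the reported average error of 7° corresponds to roughly 60 × tan 7° ≈ 7.4 cm on screen.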

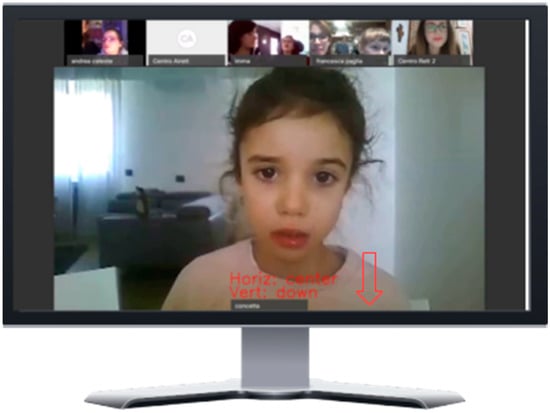

The VM is a simple graphical interface that opens a window and shows in near real-time the video of the subject with the information regarding the gaze direction. This is the video that the operator uses to receive the eye gaze-based feedback from the subject (Figure 5).

Figure 5.

The Visualization Module showing the output of SWYG.

3. Preliminary Experiments

We conducted a preliminary experimental campaign on a sample of 12 subjects, all female, of late primary and secondary school age. Subjects were selected among those who also had access to a commercial eye-tracking device, which was used as the reference ground truth for our experiments. The videoconferencing software used by the involved families was Cisco Webex, acquired within a different, ongoing remote rehabilitation project [19]. The commercial eye tracker available to the subjects was the Tobii 4C eye-tracking USB bar.

The families of the subjects were asked in advance for written informed consent to the use of images from the testing sessions for scientific purposes (including publication).

The recruited subjects were already trained with eye gaze-based communication, as they frequently used an eye tracker during their rehabilitation sessions before the COVID-19 lockdown.

The experiments aimed to evaluate the reliability of the tracker in discriminating the response intended by the subject when answering a question by means of her eye gaze.

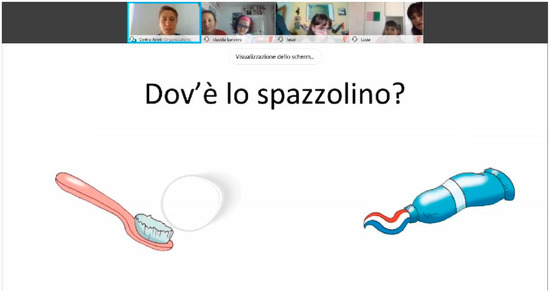

We ran 12 sessions, one for each subject. Each session started with a short briefing for the family, explaining the aim of the experiment and providing instructions on the setup. Up to 10 min were then devoted to finding a suitable setting for the session: correct illumination, reasonably smooth and without deep shadows on the subject’s face, and a scene with only one face in the foreground were preferred. Then a short presentation started, showing a short graphical story with some verbal comments from the operator. The presentation was run on the operator’s PC and its window was shared with the subject through the Webex software. Finally, 8 questions were asked during a 10 min question session, for a total of 8 × 12 = 96 questions, equally distributed among the 12 subjects. The subjects were asked to answer each question by means of their eye gaze. Figure 6 is a screenshot of the user interface on the subject’s side, showing a question to be answered; the gaze trace of the reference Tobii eye tracker is shown as a translucent circle on the screen.

Figure 6.

The user interface on the subject’s side.

For our application, the computer screen was divided into 9 zones (three rows by three columns), so the required resolution for the eye tracker is 3 × 3. The results are very encouraging: provided that the illumination conditions are favorable and that only the subject is in the foreground of the video, the agreement between the SWYG eye tracker and the Tobii eye tracker is about 98%, i.e., for 94 questions out of 96, SWYG estimated the same answer as the Tobii tracker.
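The zone lookup and the agreement figure can be reproduced with a short sketch. It assumes gaze points expressed in screen pixels and zones numbered row-major from the top-left corner; the function names are hypothetical, and points falling off-screen are clamped to the nearest border zone.

```python
from typing import List


def zone_of(x: float, y: float, width: float, height: float,
            rows: int = 3, cols: int = 3) -> int:
    """Row-major index (0 .. rows*cols - 1) of the screen zone containing (x, y)."""
    col = max(0, min(int(x * cols / width), cols - 1))   # clamp to the screen
    row = max(0, min(int(y * rows / height), rows - 1))
    return row * cols + col


def agreement(answers_a: List[int], answers_b: List[int]) -> float:
    """Fraction of questions on which two trackers selected the same zone."""
    if len(answers_a) != len(answers_b) or not answers_a:
        raise ValueError("need two non-empty answer lists of equal length")
    return sum(a == b for a, b in zip(answers_a, answers_b)) / len(answers_a)


print(zone_of(960, 540, 1920, 1080))   # 4 (central zone)
print(zone_of(1919, 10, 1920, 1080))   # 2 (top-right zone)
```

With the figures reported above, identical answers on 94 of 96 questions give an agreement of 94/96 ≈ 0.979, i.e., the roughly 98% quoted.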

Of course, these are only preliminary results and several aspects of our approach still have to be refined; however, given these results, we decided to run more experiments with more subjects as soon as the lockdown comes to an end. In the meantime, the software will be used over the coming months to support the rehabilitation and special education services for the subjects involved in the project.

4. Discussion

We were recently asked to devise a way to allow a community of girls diagnosed with Rett syndrome, and thus unable to communicate verbally, in writing or by gestures, to actively participate in remote rehabilitation and special education sessions. As the hardware devices needed for eye tracking were not available to all subjects, we investigated new ways to facilitate access to eye gaze-based interaction for the specific case of simplified communication, i.e., communication through the direct selection of one out of a small number of predefined choices. As the adopted communication platform was videoconferencing software, all we had at our disposal was a live video stream of the subject.

We considered the following use case. A cognitive therapist or special education teacher (the operator) is in charge of a group of RTT subjects distributed over a possibly large geographical area. The families cannot afford the expense and commuting time needed to reach the operator periodically, so adopting a teleconferencing platform would be both economically and logistically convenient. However, all or most subjects lack verbal or written communication abilities. Moreover, most subjects also lack fine motor control and therefore cannot communicate by gestures. As a consequence, only eye-gaze interaction can be used to communicate with the operator.

If each family had an eye-tracking device, the simplest solution would be to share the operator’s desktop with each subject and show very simple questions with three or four options. The subject could then choose the preferred option simply by fixating their gaze on it. Unfortunately, not all families have an eye-tracking device at home, and some of them can only rely on a tablet or a smartphone, so using specialized software is not feasible. Without eye-gaze tracking, the subjects cannot send feedback to the operator, who therefore has no way to estimate the subjects’ degree of comprehension of the content being administered or to receive responses to his/her questions.

Eye-gaze technology has been investigated for more than 20 years [26]. Most commercially available products rely on specialized hardware devices able to detect the eyes and the gaze direction in real time, but these devices are often expensive and do not support smartphones or tablets (except for some very recent and rather expensive ones). As a consequence, an inclusive approach must leverage common webcams and computer vision algorithms to detect the face of the subject and his/her eye-gaze direction [27,28,29,30,31,32]. Moreover, to the best of our knowledge, current videoconferencing platforms do not support software-based eye-gaze analysis, so the computer vision algorithms must be applied outside the video conferencing software (VCS) while working on the same video stream managed by that software. As VCSs are usually not open source, modifying them is not a viable solution. Furthermore, most families are not willing to install yet more software on their devices.

As a solution to the problem, we developed software (named SWYG) that runs only on the “operator” side of the communication, i.e., it does not require other software to be installed on the subject’s computer. Moreover, it does not require powerful hardware on the operator’s side and works with virtually any videoconferencing software, on Windows, Linux and Apple operating systems. Finally, it does not rely on any “cloud” or client-server data analysis and storage services, so it does not introduce further issues with privacy and personal data management.

The preliminary results are very promising and the software is ready to be deployed on a larger scale. At the time of writing, several subjects are finally able to communicate with their caregivers from home, without relying on expensive and cumbersome devices.

We are now planning to extend the SWYG platform to support a wider range of interaction channels, in order to serve other groups of users with disabilities as well. In particular, we are investigating remote gesture recognition [33,34] and remote activity recognition to assist elderly subjects [35]. In combination with software-defined networking [36], the proposed approach can also be deployed at the local and campus level to build real-time video communication and “rapid” surveillance architectures on top of existing infrastructures and videoconferencing platforms.

Author Contributions

All authors collaborated jointly and on an equal basis on this research work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

[1] Global Education Monitoring Report. How Is the Coronavirus Affecting Learners with Disabilities? 30 March 2020. Available online: https://gemreportunesco.wordpress.com/2020/03/30/how-is-the-coronavirus-affecting-learners-with-disabilities/ (accessed on 10 May 2020).

[2] Guralnick, M.J. Early Intervention for Children with Intellectual Disabilities: An Update. J. Appl. Res. Intellect. Disabil. 2016, 30, 211–229.

[3] Gangemi, A.; Caprì, T.; Fabio, R.A.; Puggioni, P.; Falzone, A.; Martino, G. Transcranial Direct Current Stimulation (tDCS) and Cognitive Empowerment for the functional recovery of diseases with chronic impairment and genetic etiopathogenesis. In Advances in Genetic Research; Nova Science Publishers: New York, NY, USA; Volume 18, pp. 179–196. ISBN 978-1-53613-264-9.

[4] Neul, J.; Kaufmann, W.E.; Glaze, D.G.; Christodoulou, J.; Clarke, A.J.; Bahi-Buisson, N.; Leonard, H.; Bailey, M.E.S.; Schanen, N.C.; Zappella, M.; et al. Rett syndrome: Revised diagnostic criteria and nomenclature. Ann. Neurol. 2010, 68, 944–950.

[5] Vessoyan, K.; Steckle, G.; Easton, B.; Nichols, M.; Siu, V.M.; McDougall, J. Using eye-tracking technology for communication in Rett syndrome: Perceptions of impact. Augment. Altern. Commun. 2018, 34, 230–241.

[6] Fabio, R.A.; Magaudda, C.; Caprì, T.; Towey, G.E.; Martino, G. Choice behavior in Rett syndrome: The consistency parameter. Life Span Disabil. 2018, 21, 47–62.

[7] Fabio, R.A.; Caprì, T.; Nucita, A.; Iannizzotto, G.; Mohammadhasani, N. Eye-gaze digital games improve motivational and attentional abilities in rett syndrome. J. Spec. Educ. Rehabil. 2019, 19, 105–126.

[8] Caprì, T.; Gugliandolo, M.C.; Iannizzotto, G.; Nucita, A.; Fabio, R.A. The influence of media usage on family functioning. Curr. Psychol. 2019, 1, 1–10.

[9] Dinleyici, M.; Çarman, K.B.; Ozturk, E.; Dagli, F.S.; Guney, S.; Serrano, J.A. Media Use by Children, and Parents’ Views on Children’s Media Usage. Interact. J. Med. Res. 2016, 5, e18.

[10] Caprì, T.; Santoddi, E.; Fabio, R.A. Multi-Source Interference Task paradigm to enhance automatic and controlled processes in ADHD. Res. Dev. Disabil. 2019, 97, 103542.

[11] Cingel, D.P.; Krcmar, M. Predicting Media Use in Very Young Children: The Role of Demographics and Parent Attitudes. Commun. Stud. 2013, 64, 374–394.

[12] McDaniel, B.T.; Radesky, J.S. Technoference: Parent Distraction With Technology and Associations With Child Behavior Problems. Child Dev. 2017, 89, 100–109.

[13] Mohammadhasani, N.; Caprì, T.; Nucita, A.; Iannizzotto, G.; Fabio, R.A. Atypical Visual Scan Path Affects Remembering in ADHD. J. Int. Neuropsychol. Soc. 2019, 1–10.

[14] Fabio, R.A.; Caprì, T. Automatic and controlled attentional capture by threatening stimuli. Heliyon 2019, 5, e01752.

[15] Djukic, A.; Rose, S.A.; Jankowski, J.J.; Feldman, J.F. Rett Syndrome: Recognition of Facial Expression and Its Relation to Scanning Patterns. Pediatr. Neurol. 2014, 51, 650–656.

[16] Fabio, R.A.; Caprì, T.; Iannizzotto, G.; Nucita, A.; Mohammadhasani, N. Interactive Avatar Boosts the Performances of Children with Attention Deficit Hyperactivity Disorder in Dynamic Measures of Intelligence. Cyberpsychol. Behav. Soc. Netw. 2019, 22, 588–596.

[17] Baron-Cohen, S.; Ring, H. A model of the mindreading system: Neuropsychological and neurobiological perspectives. In Children’s Early Understanding of Mind: Origins and Development; Lewis, C., Mitchell, P., Eds.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1994; pp. 183–207.

[18] Castelli, I.; Antonietti, A.; Fabio, R.A.; Lucchini, B.; Marchetti, A. Do rett syndrome persons possess theory of mind? Some evidence from not-treated girls. Life Span Disabil. 2013, 16, 157–168.

[19] Caprì, T.; Fabio, R.A.; Iannizzotto, G.; Nucita, A. The TCTRS Project: A Holistic Approach for Telerehabilitation in Rett Syndrome. Electronics 2020, 9, 491.

[20] Benham, S.; Gibbs, V. Exploration of the Effects of Telerehabilitation in a School-Based Setting for At-Risk Youth. Int. J. Telerehabil. 2017, 9, 39–46.

[21] Nucita, A.; Bernava, G.; Giglio, P.; Peroni, M.; Bartolo, M.; Orlando, S.; Marazzi, M.C.; Palombi, L. A Markov Chain Based Model to Predict HIV/AIDS Epidemiological Trends. Appl. Evol. Comput. 2013, 8216, 225–236.

[22] Screen Capture Lite. Available online: https://github.com/smasherprog/screen_capture_lite (accessed on 14 May 2020).

[23] Itseez. Open Source Computer Vision Library. Available online: https://github.com/itseez/opencv (accessed on 31 May 2020).

[24] Iannizzotto, G.; Lo Bello, L.; Nucita, A.; Grasso, G.M. A Vision and Speech Enabled, Customizable, Virtual Assistant for Smart Environments. In Proceedings of the 2018 11th International Conference on Human System Interaction (HSI), Gdańsk, Poland, 4–6 July 2018; pp. 50–56.

[25] King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

[26] Kar, A.; Corcoran, P. Performance Evaluation Strategies for Eye Gaze Estimation Systems with Quantitative Metrics and Visualizations. Sensors 2018, 18, 3151.

[27] Kar, A.; Corcoran, P. A Review and Analysis of Eye-Gaze Estimation Systems, Algorithms and Performance Evaluation Methods in Consumer Platforms. IEEE Access 2017, 5, 16495–16519.

[28] Iannizzotto, G.; La Rosa, F. Competitive Combination of Multiple Eye Detection and Tracking Techniques. IEEE Trans. Ind. Electron. 2010, 58, 3151–3159.

[29] Crisafulli, G.; Iannizzotto, G.; La Rosa, F. Two competitive solutions to the problem of remote eye-tracking. In Proceedings of the 2009 2nd Conference on Human System Interactions, Catania, Italy, 21–23 May 2009; pp. 356–362.

[30] Marino, S.; Sessa, E.; Di Lorenzo, G.; Lanzafame, P.; Scullica, G.; Bramanti, A.; La Rosa, F.; Iannizzotto, G.; Bramanti, P.; Di Bella, P. Quantitative Analysis of Pursuit Ocular Movements in Parkinson’s Disease by Using a Video-Based Eye Tracking System. Eur. Neurol. 2007, 58, 193–197.

[31] Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. MPIIGaze: Real-World Dataset and Deep Appearance-Based Gaze Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 162–175.

[32] Lemley, J.; Kar, A.; Drimbarean, A.; Corcoran, P. Convolutional Neural Network Implementation for Eye-Gaze Estimation on Low-Quality Consumer Imaging Systems. IEEE Trans. Consum. Electron. 2019, 65, 179–187.

[33] Iannizzotto, G.; La Rosa, F. A Modular Framework for Vision-Based Human Computer Interaction. In Image Processing; IGI Global: Hershey, PA, USA, 2013; pp. 1188–1209.

[34] Iannizzotto, G.; La Rosa, F.; Costanzo, C.; Lanzafame, P. A Multimodal Perceptual User Interface for Collaborative Environments. In Proceedings of the 13th International Conference on Image Analysis and Processing, ICIAP 2005, Cagliari, Italy, 6–8 September 2005; Volume 3617, pp. 115–122.

[35] Cardile, F.; Iannizzotto, G.; La Rosa, F. A vision-based system for elderly patients monitoring. In Proceedings of the 3rd International Conference on Human System Interaction, Rzeszów, Poland, 13–15 May 2010; pp. 195–202.

[36] Leonardi, L.; Ashjaei, M.; Fotouhi, H.; Lo Bello, L. A Proposal Towards Software-Defined Management of Heterogeneous Virtualized Industrial Networks. In Proceedings of the 2019 IEEE 17th International Conference on Industrial Informatics (INDIN), Helsinki, Finland, 22–25 July 2019; Volume 1, pp. 1741–1746.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).