Abstract

Underwater images frequently exhibit color distortion, detail blurring, and contrast degradation due to absorption and scattering by the underwater medium. This study proposes a progressive color correction strategy integrated with a vision-inspired image enhancement framework to address these issues. Specifically, the progressive color correction process includes adaptive color quantization-based global color correction, followed by guided filter-based local color refinement, aiming to restore accurate colors while enhancing visual perception. Within the vision-inspired enhancement framework, the color-adjusted image is first decomposed into a base layer and a detail layer, corresponding to low- and high-frequency visual information, respectively. Subsequently, detail enhancement and noise suppression are applied in the detail pathway, while global brightness correction is performed in the structural pathway. Finally, results from both pathways are fused to yield the enhanced underwater image. Extensive experiments on four datasets verify that the proposed method effectively handles the aforementioned underwater enhancement challenges and significantly outperforms state-of-the-art techniques.

1. Introduction

With the growing exploration of marine resources, underwater images have become a critical medium for transmitting marine information, playing a vital role in various fields such as visual navigation of underwater robots, seabed topographic mapping, marine engineering inspection, and aquaculture monitoring [1]. However, due to the influence of light attenuation and low-light conditions, underwater images often suffer from quality degradation issues, including color distortion, blurred details, and low contrast. Enhancing image quality to achieve visually clear results has thus garnered significant attention.

Over the past two decades, a large number of studies on improving the visibility of underwater images have been proposed, encompassing traditional methods (such as model-free image enhancement and model-based image restoration) as well as data-driven techniques. Among these, model-free image enhancement methods include color equalization-based [2], color balance-based [3,4,5], and color channel compensation-based approaches [6,7,8]. By contrast, model-based methods typically utilize underwater imaging models and prior knowledge to obtain high-quality underwater images by inverting the degradation process. Variational models [9,10,11,12], dark channel prior [13,14,15], red channel prior [16,17], light attenuation prior [18,19,20,21], and Retinex theory [22,23] are widely applied in model-based underwater image restoration to yield visually pleasing results. Although such methods exhibit a certain level of image enhancement performance, their stability is comparatively limited when applied to complex underwater scenes, thereby negatively impacting the restoration results. Notably, data-driven methods restore and enhance degraded underwater images by learning the nonlinear relationships between ground-truth images and their degraded counterparts. These approaches, which include Convolutional Neural Networks (CNN) [24,25], Generative Adversarial Networks (GAN) [26,27,28,29,30], Transformer Networks [31,32], and contemporary mainstream Mamba architectures [33,34], have reshaped the field of underwater image restoration. Despite their remarkable performance, the requirement for large-scale training datasets, the complexity of model architecture design, and generalization challenges may limit their applicability in practical scenarios.

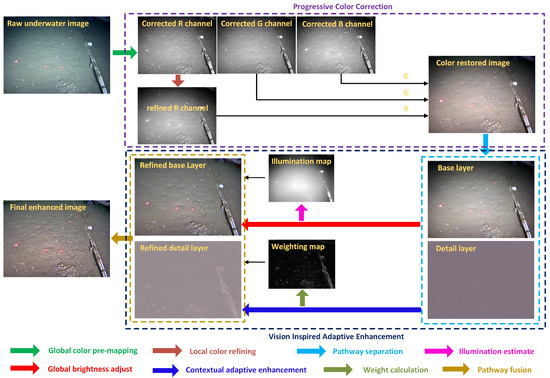

While existing model-free underwater image enhancement approaches have demonstrated promising performance, they still suffer from limitations such as oversaturation, undersaturation, and uneven brightness. In this paper, we propose a novel model-free underwater image enhancement method that integrates a progressive color correction module with a vision-inspired image enhancement framework. As illustrated in Figure 1, the progressive color correction module processes degraded underwater images via global color mapping and local color adjustment to achieve effective color recovery. Within the visual perception-driven enhancement module, the color-corrected image is first decomposed into a base layer (encoding low-frequency information) and a detail layer (encoding high-frequency information). Subsequently, the base layer undergoes non-uniform illumination correction using an adaptive gain function; meanwhile, the detail layer is adaptively sharpened via a local energy-weighted filter to accentuate fine-grained structures. Finally, these layers are fused to yield the restored image.

Figure 1. Overview of our proposed method.

The key ideas and contributions of this work are summarized as follows:

- (1) A progressive underwater image color correction algorithm is proposed to enhance color balance while systematically mitigating the risk of overcompensation.

- (2) A vision-inspired underwater image enhancement algorithm is designed to uncover latent information within the image while preserving the naturalness of the scene and fine-grained details.

- (3) The proposed method is extensively evaluated on multiple datasets and benchmarked against state-of-the-art methods. It is assessed in terms of quantitative metrics, qualitative results, and computational efficiency.

2. Related Work

Approaches to improving underwater image quality can be broadly classified into three categories: model-driven image restoration methods, model-free image enhancement methods, and data-driven methods.

Model-driven image restoration methods typically utilize hand-crafted priors to identify parameters for underwater optical imaging, followed by model inversion to recover a clear image. Such priors include the underwater dark channel prior [14], haze line prior [35], light scattering prior [36], and minimal color loss [4,37], etc. For example, in Sea-Thru [38], the traditional imaging model is modified to account for dependencies between optical water types and illumination conditions. Galdran et al. [39] constructed an image restoration model by leveraging the characteristic of rapid attenuation of red light in underwater environments. In [13], a generalized dark channel prior was utilized to estimate transmission maps and mitigate color casts. This method adaptively adjusts kernel sizes based on image characteristics, thereby effectively reducing artifacts in regions affected by bright objects or artificial light interference. Similarly, Liang et al. proposed a generalized underwater dark channel prior [14], which integrates multiple priors for transmission map estimation. Liu et al. [40] proposed the rank-one prior (ROP) to mathematically characterize the superimposition properties of clear images, thus facilitating the restoration of degraded images under various conditions. Building upon the haze imaging model, Liang et al. [15] proposed a color decomposition and contrast restoration method that integrates color balance, detail enhancement, and contrast improvement procedures to enhance underwater image quality. Although effective in most underwater scenes, the brightness improvement of this method is generally limited for low-light underwater images, as the haze imaging model is physically invalid under such conditions. Variational strategies, as specialized techniques in image restoration, are often integrated with underwater imaging models or priors to enhance the perceptual quality of underwater images. 
For instance, leveraging the complete underwater image formation model, Xie et al. [41] developed a red-channel sparse prior-guided variational framework, which enables simultaneous dehazing and deblurring of underwater images. In HLRP [10], hyper-Laplacian reflectance priors, established with an $\ell_{1/2}$-norm penalty on the first-order and second-order gradients of the reflectance, are used to regularize the Retinex variational model. This model estimates reflectance and illumination separately yet simultaneously, and both are then used to enhance underwater images. By accounting for underwater noise and texture priors, Zhou et al. [9] also introduced a Retinex variational model to effectively decouple illumination and reflectance components in underwater imagery. Despite these notable advances, the aforementioned methods do not comprehensively account for the factors that influence imaging quality.

Model-free image enhancement methods typically leverage existing image enhancement techniques to enhance the visual quality of underwater images by adjusting pixel values of degraded images. A variety of effective enhancement techniques, including Retinex-based methods [22,23], histogram stretching methods [2,42], color compensation methods [7,43], and multiscale fusion methods [44], are widely adopted. For instance, Zhang et al. [42] proposed an effective underwater image color correction method by measuring the proportional relationship between the dominant color channel and other lower color channels. Ancuti et al. [5] performed brightness correction and detail sharpening on the white-balanced image, respectively, and employed a multi-weight fusion strategy to derive the restored underwater image. Furthermore, Zhang et al. [45] proposed an approach that employs sub-interval linear transformation-based color correction and bi-interval histogram-based contrast equalization, effectively mitigating color distortion, enhancing image visibility, and emphasizing fine details. In the Color Balance and Lightness Adjustment (CBLA) method [3], both the channel-adaptive color restoration module and the information-adaptive contrast enhancement module are strategically designed for color correction and contrast enhancement, respectively. Owing to their favorable performance in image enhancement, multi-scale texture enhancement and fusion techniques have also been widely applied. For instance, both ref. [46] and Zhang et al. [47] employed a weighted wavelet-based visual perception fusion strategy to integrate high- and low-frequency components across multiple scales, thereby enhancing the quality of underwater images. In the Structural Patch Decomposition Fusion (SPDF) method [44], the complementary preprocessed images are first decomposed into mean intensity, contrast, and structure components via structural patch decomposition. 
Subsequently, tailored fusion strategies are employed to integrate these components for reconstructing the restored underwater image. In the High-Frequency Modulation (HFM) method [6], both pixel intensity-based and global gradient-based weighting mechanisms are designed to fuse underwater images with improved visibility and contrast.

Data-driven methods have attracted considerable attention owing to their superiority in learning nonlinear mappings from large-scale training data for high-quality image reconstruction. For instance, Li et al. [25] proposed a convolutional neural network, namely WaterNet, which directly reconstructs clear images by leveraging an underwater scene prior. Sun et al. [27] introduced an integrated learning framework termed UMGAN, embedding a noise reduction network into the GAN to recover underwater images across diverse scenes. In PUGAN [29], a well-designed GAN framework comprising two subnetworks—the parameter estimation and interaction enhancement subnetworks—is employed to generate visually pleasing images. In [48], a dual-Unet network is incorporated into the diffusion model to simultaneously enable distribution transformation of degraded images and elimination of latent noise. To enhance attention toward different color channels and spatial regions, thereby better eliminating color artifacts and color casts, the U-shaped Transformer network (UTransNet) [31] integrates both channel-level and spatial-level transformer modules. Furthermore, Wang et al. [49] proposed a two-stage domain adaptation network that incorporates a triple alignment network and a ranking-based image quality assessment network to simultaneously bridge the inter-domain and intra-domain gaps, thus rendering the generated images more realistic. In PixMamba [50], both the patch-level Mamba Net and pixel-level Mamba Net are designed to efficiently model global dependencies in underwater scenes. To capitalize on the distinctions between learning-based and physics-based compensation methods, Ouyang et al. [51] proposed a perceptual underwater enhancement technique that adapts the performance of data-driven semantic transfer and scene-relevant reconstruction. 
Although these deep learning-based methods offer notable advantages in handling complex and dynamic underwater scenes, their heavy reliance on large datasets may limit their robustness and practicality.

3. Methodology

In the following sections, we first present the progressive color correction strategy for underwater images, which comprises global color pre-mapping and local color refinement, followed by a detailed explanation of the vision-inspired image enhancement framework with adaptive mechanisms.

3.1. Progressive Color Correction

Due to the selective scattering and absorption of light, different wavelengths of light undergo varying degrees of attenuation, resulting in underwater images that predominantly display blue or green tones. This degradation in visual quality, induced by the underwater environment and lighting conditions, greatly obscures critical information within the scene.

3.1.1. Global Color Pre-Mapping

Extensive research has demonstrated that the three-channel histograms of natural images closely conform to a Rayleigh distribution with a high degree of inter-channel overlap [9]. Leveraging this characteristic, this study first fits each color channel histogram of underwater images using the Rayleigh function, then applies pixel remapping to minimize disparities in their color distributions.

For a given underwater image $I$, the probability distribution of the histogram of each channel is first calculated as follows:

$$P_c(i) = \frac{h_c(i)}{\sum_{j=0}^{255} h_c(j)} \tag{1}$$

where $P_c$ represents the probability density function of channel $c \in \{R, G, B\}$, and $h_c(i)$ denotes the histogram function that counts the occurrences of intensity value $i$ ($i \in [0, 255]$) within color channel $c$.
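The normalized-histogram step described above can be sketched in a few lines of Python. This is only an illustrative sketch, assuming 8-bit intensities; the function name `channel_pdf` and the toy data are hypothetical and are not taken from the paper.

```python
def channel_pdf(channel, levels=256):
    """Return the normalized histogram (empirical PDF) of one color channel.

    `channel` is a flat iterable of integer intensities in [0, levels - 1].
    """
    hist = [0] * levels
    for value in channel:
        hist[value] += 1          # count occurrences of each intensity
    total = sum(hist)
    if total == 0:
        raise ValueError("channel must contain at least one pixel")
    return [count / total for count in hist]


# Toy 2x3 "red channel", flattened row by row (illustrative values only).
red = [0, 0, 10, 10, 10, 255]
pdf = channel_pdf(red)
print(pdf[0], pdf[10], pdf[255])
```

Running the same function on each of the three channels gives the per-channel distributions that the subsequent fitting step operates on.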

A least squares-based parameter estimation strategy is then employed to estimate the mean and variance of the Rayleigh distribution being fitted. Given the probability density function $P_c$, the parameters of the Rayleigh distribution can be expressed as follows:

$$(\mu_c, \sigma_c^2) = \arg\min_{\mu,\,\sigma^2} \sum_{i=0}^{255} \left( P_c(i) - R(i; \mu, \sigma^2) \right)^2$$

where $\mu_c$ and $\sigma_c^2$ are the mean and variance of the Rayleigh distribution $R$, respectively, which govern the Rayleigh probability density distribution. Once the color channel-related parameters $\mu_c$ and $\sigma_c^2$ are determined, the distribution differences among the color channels become apparent.
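To make the fitting step concrete, the following is a minimal sketch of a least-squares Rayleigh fit. It assumes the standard one-parameter Rayleigh density and uses a brute-force grid search in place of whatever optimizer the authors actually employed; `rayleigh_pdf`, `fit_rayleigh`, and the search grid are illustrative assumptions.

```python
import math


def rayleigh_pdf(x, scale):
    """Standard Rayleigh density with scale parameter `scale` (> 0)."""
    return (x / scale ** 2) * math.exp(-x * x / (2.0 * scale ** 2))


def fit_rayleigh(pdf, scales=None):
    """Least-squares fit of a Rayleigh density to an empirical PDF.

    Returns (scale, mean, variance) of the best-fitting distribution.
    The grid of candidate scales is an assumption made for illustration.
    """
    if scales is None:
        scales = [0.5 * k for k in range(2, 513)]   # 1.0, 1.5, ..., 256.0
    best_scale, best_err = None, float("inf")
    for s in scales:
        err = sum((p - rayleigh_pdf(i, s)) ** 2 for i, p in enumerate(pdf))
        if err < best_err:
            best_scale, best_err = s, err
    mean = best_scale * math.sqrt(math.pi / 2.0)        # Rayleigh mean
    variance = (4.0 - math.pi) / 2.0 * best_scale ** 2  # Rayleigh variance
    return best_scale, mean, variance


# Synthetic check: sample a known Rayleigh density and recover its scale.
synthetic = [rayleigh_pdf(i, 40.0) for i in range(256)]
scale, mean, variance = fit_rayleigh(synthetic)
print(scale, mean, variance)
```

The channel with the largest fitted mean would then play the role of the dominant channel in the step that follows.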

To mitigate the distribution differences among the color channels in underwater scenes, drawing on the gray world algorithm [5], we first use the following formula to determine the dominant color channel:

$$D = \max(\mu_R, \mu_G, \mu_B)$$

where $\mu_c$ is the fitted mean of channel $c \in \{R, G, B\}$, and $\max(\cdot)$ and $D$ denote the maximum operation and dominant mean, respectively. Subsequently, we utilize the dominant value to perform adaptive color quantization, which is defined as follows:

where denotes the quantized pixel intensity at location in color channel . The gain parameter adjusts the post-quantization value range and is set to 0.05 in experiments to optimally balance visual perception and quantitative metrics. To enhance the naturalness of image intensity, an additional equalization is applied to .

3.1.2. Local Color Refinement

Due to selective absorption in underwater environments, the red channel typically undergoes the most significant attenuation among color channels. Although the proposed global color pre-mapping strategy delivers a preliminary correction for color cast, local red artifacts may emerge due to overcompensation. Therefore, further local color refinement is applied to restore color-accurate images with enhanced visual perception. Based on the strong correlation between the red and green channels [7], we first propose a guided filter-based local color correction approach for the red channel, which is mathematically formulated as follows:

where is a window centered at the pixel point i, is the average value of within , is the variance in over , and is a regularization parameter that is set as .
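For readers unfamiliar with guided filtering, the sketch below implements the classic box-window guided filter on small 2D lists. It is a hedged illustration, not the authors' implementation: using the green channel as the guide and the red channel as the input is an assumption motivated by the red-green correlation mentioned above, and the radius `r`, the regularizer `eps`, and all function names are placeholders.

```python
def box_mean(img, r):
    """Mean over each pixel's (2r+1)x(2r+1) window, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            total = sum(img[yy][xx] for yy in ys for xx in xs)
            out[y][x] = total / (len(ys) * len(xs))
    return out


def combine(a, b, fn):
    """Apply fn element-wise to two equally sized 2D lists."""
    return [[fn(p, q) for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def guided_filter(guide, src, r=1, eps=1e-3):
    """Filter `src` using `guide`: local linear model q = a * guide + b."""
    mul = lambda p, q: p * q
    mean_g, mean_s = box_mean(guide, r), box_mean(src, r)
    corr_gg = box_mean(combine(guide, guide, mul), r)
    corr_gs = box_mean(combine(guide, src, mul), r)
    var_g = combine(corr_gg, combine(mean_g, mean_g, mul), lambda c, m: c - m)
    cov_gs = combine(corr_gs, combine(mean_g, mean_s, mul), lambda c, m: c - m)
    a = combine(cov_gs, var_g, lambda c, v: c / (v + eps))
    b = combine(mean_s, combine(a, mean_g, mul), lambda m, am: m - am)
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return combine(combine(mean_a, guide, mul), mean_b, lambda p, q: p + q)


# Toy example: a smooth "green" guide and a noisier "red" input.
green = [[0.1 * (x + y) for x in range(4)] for y in range(4)]
red = [[0.05 * (x + y) + (0.02 if (x + y) % 2 else -0.02) for x in range(4)]
       for y in range(4)]
refined_red = guided_filter(green, red, r=1, eps=1e-3)
print([round(v, 3) for v in refined_red[0]])
```

A larger `eps` pushes the output toward the local mean of the input, while a smaller one makes it follow the guide's structure more closely.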

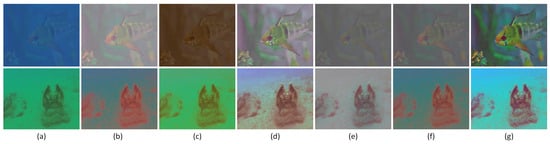

The processed red channel data are then fused with the blue and green channels derived from global color remapping to generate the color-corrected image. In addition, Figure 2 compares our progressive color correction method against other state-of-the-art methods. For the first test image, the results obtained by Gray-Edge [52], WB_sRGB [53], 3C [7], NUCE [54], and Shades-of-Gray [55] contain limited color information. For the second test image, both Gray-Edge [52] and Shades-of-Gray [55] exhibit significant overcompensation artifacts. In contrast, our method generates corrected images that are free of color distortion and dominant color cast, while simultaneously preserving all critical image details.

Figure 2. Visual comparison of different color correction methods. From left to right: (a) images from test datasets; (b) Gray-Edge [52] results; (c) WB_sRGB [53] results; (d) NUCE [54] results; (e) 3C [7] results; (f) Shades-of-Gray [55] results; (g) our progressive color correction results.

3.2. Vision-Inspired Adaptive Image Enhancement

The proposed progressive color correction process effectively eliminates color casts; however, it does not generate an enhanced image with improved visibility. Therefore, this study proposes a pathway separation and adaptive enhancement approach to tackle the issues of contrast degradation and detail blurring in underwater scenes.

3.2.1. Pathway Separation

Underwater images typically contain significant noise, and directly enhancing their brightness or contrast may amplify such noise, thereby complicating noise suppression and removal. Therefore, to improve their visual quality, it is critical to suppress or eliminate such noise during the image enhancement process.

The input underwater image $I$ can be decomposed into two distinct components, the base layer $I_B$ and the detail layer $I_D$, i.e.,

$$I = I_B + I_D \tag{9}$$

Since structural information typically resides in the base layer, while fine details and noise reside in the detail layer, we draw inspiration from biological visual information processing mechanisms [56] and handle the two layers in separate pathways. Specifically, we first adopt a Gaussian curvature filter-based image decomposition approach to decompose the input image into the two layers, and then achieve luminance adaptation and detail-preserving contrast enhancement by processing the two layers separately.

The Gaussian curvature filter model can be expressed as the following total variation-based framework:

where denotes the scalar regularization parameter. This objective function includes two parts: the first is a data-fitting term that preserves meaningful scene structures; the second is the Gaussian curvature regularization term, defined as . Minimizing this term is equivalent to rendering the image as developable as possible.

The base layer $I_B$ is first obtained by implicitly minimizing Equation (10) using Gaussian curvature filtering [57]. This method searches for the tangent plane closest to the current pixel among those formed by all neighboring pixels, thereby iteratively adjusting pixel values. The detail layer $I_D$ is then computed by subtracting the base layer from the input image $I$:

$$I_D = I - I_B \tag{11}$$
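The base/detail split can be illustrated with a short sketch. Note the substitution: a plain box (mean) filter stands in for the Gaussian curvature filter, which is considerably more involved, so the code below shows only the additive structure of the decomposition and not the authors' smoothing operator. The names `box_blur` and `decompose` are hypothetical.

```python
def box_blur(img, r=1):
    """Mean filter with a (2r+1)x(2r+1) window, clipped at the borders.

    Stand-in smoother; the paper uses Gaussian curvature filtering instead.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            total = sum(img[yy][xx] for yy in ys for xx in xs)
            out[y][x] = total / (len(ys) * len(xs))
    return out


def decompose(img, r=1):
    """Split a single-channel image into base (smooth) and detail (residual)."""
    base = box_blur(img, r)
    detail = [[p - b for p, b in zip(row_i, row_b)]
              for row_i, row_b in zip(img, base)]
    return base, detail


# Toy single-channel image with one bright pixel acting as "detail".
image = [[0.2, 0.2, 0.2],
         [0.2, 0.9, 0.2],
         [0.2, 0.2, 0.2]]
base, detail = decompose(image)
print(round(base[1][1], 4), round(detail[1][1], 4))
```

Because the detail layer is defined as the residual, adding the two layers back together reproduces the input regardless of which smoother is used for the base layer.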

3.2.2. Global Brightness Adjustment

To tackle the problem of uneven illumination induced by lighting conditions, we estimate the illumination field of the underwater image and adjust it for brightness correction. Given a base-layer image $I_B$, an initial illumination $\hat{T}$ can be obtained by taking the minimum value across the RGB channels as the illumination value for each pixel, which is expressed as follows:

$$\hat{T}(x) = \min_{c \in \{R, G, B\}} I_B^c(x) \tag{12}$$

where $I_B^c$ denotes the $c$-th color channel of the base-layer image, and $x$ is the pixel position. To preserve salient structures while eliminating redundant texture details, the following objective function is introduced to derive the desired illumination map $T$:

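$$\min_{T} \sum_{x} \left[ \left( T(x) - \hat{T}(x) \right)^2 + \alpha \sum_{d \in \{h, v\}} W_d(x) \left( \partial_d T(x) \right)^2 \right] \tag{13}$$

where $\hat{T}$ is the initial illumination, $\alpha$ is the balancing parameter, and $W_d$ is the weight matrix defined as follows: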

Here, $|\cdot|$ denotes the absolute value operator; $\Omega(x)$ is the local window centered at pixel $x$, and $\epsilon$ is a small constant to prevent division by zero. $\partial_d$ represents the first-order derivative operator, which includes $\partial_h$ (horizontal) and $\partial_v$ (vertical).

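Since the objective function (Equation (13)) involves only quadratic terms, it can be rewritten in matrix form as follows: $\|\mathbf{t} - \hat{\mathbf{t}}\|_2^2 + \alpha \left( \|\tilde{W}_h D_h \mathbf{t}\|_2^2 + \|\tilde{W}_v D_v \mathbf{t}\|_2^2 \right)$. Here, $\mathbf{t}$ and $\hat{\mathbf{t}}$ represent vertical vectors formed by concatenating all elements of $T$ and $\hat{T}$, respectively. $\tilde{W}_h$ and $\tilde{W}_v$ denote diagonal matrices formed by the square roots of $W_h$ and $W_v$, respectively; $D_h$ and $D_v$ are Toeplitz matrices corresponding to discrete gradient operators with forward differences. Setting the gradient of this quadratic form with respect to $\mathbf{t}$ to zero shows that the vector $\mathbf{t}$ that minimizes Equation (13) can be uniquely determined by solving the linear system:

$$(\mathbf{I} + \alpha L)\,\mathbf{t} = \hat{\mathbf{t}}, \qquad L = D_h^{\top} \tilde{W}_h^{2} D_h + D_v^{\top} \tilde{W}_v^{2} D_v$$

where $\mathbf{I}$ denotes an identity matrix, and $L$ is a five-point positive-definite Laplacian matrix. Once $\mathbf{t}$ is obtained, the refined illumination $T$ can be recovered via inverse vectorization of $\mathbf{t}$.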
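The structure of this linear system is easiest to see in one dimension, where the weighted Laplacian becomes tridiagonal and can be solved directly. The sketch below is a simplified 1D analogue under stated assumptions: the weights are taken as the reciprocal of the absolute forward difference of the initial estimate plus a small constant (a hypothetical choice, since the exact weight definition is not reproduced here), and `alpha`, `eps`, and the function name are illustrative.

```python
def refine_illumination_1d(t_hat, alpha=0.5, eps=1e-3):
    """Solve (I + alpha * D^T W D) t = t_hat for a 1D signal.

    D is the forward-difference operator and W holds edge-aware weights,
    so smoothing is weaker across large jumps in the initial estimate.
    """
    n = len(t_hat)
    if n == 0:
        return []
    # One weight per forward difference (n - 1 of them).
    w = [1.0 / (abs(t_hat[i + 1] - t_hat[i]) + eps) for i in range(n - 1)]
    # Tridiagonal coefficients: lower[i] couples i with i-1, upper[i] with i+1.
    lower = [0.0] + [-alpha * w[i] for i in range(n - 1)]
    upper = [-alpha * w[i] for i in range(n - 1)] + [0.0]
    diag = [1.0 - lower[i] - upper[i] for i in range(n)]
    # Thomas algorithm: forward elimination.
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = t_hat[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (t_hat[i] - lower[i] * d[i - 1]) / denom
    # Back substitution.
    t = [0.0] * n
    t[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        t[i] = d[i] - c[i] * t[i + 1]
    return t


# Noisy two-level illumination profile (illustrative values only).
initial = [0.20, 0.25, 0.18, 0.80, 0.85, 0.78]
refined = refine_illumination_1d(initial)
print([round(v, 3) for v in refined])
```

In two dimensions the same system has five nonzero diagonals and is typically handled with a sparse solver rather than the Thomas algorithm.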

The resulting illumination indicates which regions of the image will be lightened or darkened. Specifically, areas with insufficient luminous intensity require incremental adjustment. To this end, we formulate the following pixel-wise brightness correction factor:

As is evident from the formulation, this factor adapts to the illumination values: when the luminous intensity is below 0.5, the correction factor exceeds 1, so the corresponding region is brightened. Finally, the brightness-adjusted base layer is defined as follows:

3.2.3. Contextual Adaptive Enhancement

Using the base-detail decomposition in Equations (9)–(11), high-frequency details and noise are retained in the detail pathway. For relatively smooth regions containing primarily noise, their local energy tends to be low; in contrast, regions containing both noise and texture details generally exhibit high local energy. Based on this analysis, we can calculate the detail preservation weights by evaluating the energy values within the local neighborhood of the detail layer, thereby achieving noise suppression and detail preservation. The specific formula is as follows:

where ∗ denotes the convolution operator and represents a Gaussian filter. A smaller indicates that the current pixel is not located in texture regions and can be smoothed. Conversely, a larger suggests the presence of texture details that should be preserved. Therefore, the proposed detail-preserving and noise-suppression mechanism in the detail pathway can be formulated as follows:

where the exponential parameter is introduced to tune the contrast of details and is set to for optimal visual and quantitative results. To generate the final enhanced image, the processed layers are fused as follows:

where denotes the adaptively enhanced underwater image, and controls the trade-off between noise suppression and detail enhancement. To avoid over-enhancement of image details, we set in this work. The workflow of our vision-inspired adaptive enhancement algorithm for underwater images is summarized in Algorithm 1.

| Algorithm 1: Vision-Inspired Adaptive Enhancement for Underwater Images |

1 Input: Color-corrected image , parameters , , , ; Output: Enhanced image ; 6 Obtain the detail-preserving enhanced detail layer via Equation (20); 7 Obtain the enhanced image via Equation (21); |

4. Results and Analysis

Comparative Methods. We compare our method with 12 state-of-the-art methods, including Attenuated Color Channel Correction and Detail-Preserved Contrast Enhancement (ACDC) [58], Pixel Distribution Remapping and Multi-Prior Retinex Variational Model (PDRMRV) [9], Minimal Color Loss and Locally Adaptive Contrast Enhancement (MMLE) [4], Weighted Wavelet Visual Perception Fusion (WWPF) [47], Multi-Color Components and Light Attenuation (MCLA) [21], HFM [6], Hyper-Laplacian Reflectance Priors (HLRP) [10], L2UWE [59], SPDF [44], UDHTV [60], WaterNet [25], and U-shape Transformer (UTransNet) [31]. Specifically, PDRMRV, MCLA, UDHTV, and HLRP are categorized as restoration-based methods; ACDC, MMLE, WWPF, HFM, L2UWE, and SPDF as enhancement-based methods; and WaterNet and UTransNet as data-driven approaches. To ensure a fair comparison, the parameters of the compared algorithms are set to the values recommended by the original authors.

Benchmark Datasets. All the aforementioned methods were evaluated using four benchmark underwater image datasets: UIEB [25], RUIE [61], OceanDark [59], and Color-Check7 [5]. The UIEB dataset contains 890 degraded images captured under diverse challenging conditions, such as various color casts, poor illumination, turbid water, and deep-sea environments. Notably, it includes a substantial number of images featuring marine organisms and artificial objects, thus making it particularly suitable for evaluating the robustness of underwater image enhancement algorithms. In contrast, the RUIE dataset, collected using waterproof cameras near Zhangzi Island, provides complementary test scenarios for evaluation. The OceanDark dataset comprises 183 low-light underwater images acquired by Ocean Networks Canada. Additionally, the Color-Check7 dataset consists of seven underwater images captured by various cameras in a shallow swimming pool, each containing a color checker.

Evaluation Metrics. Several commonly used quantitative metrics are employed to evaluate the effectiveness of the various methods, including Average Gradient (AG) [62], Edge Intensity (EI) [54], the Colorfulness, Contrast, and Fog density index (CCF) [63], the Fog Aware Density Evaluator (FADE) [64], the Underwater Image Quality Metric (UIQM) [65], the Underwater Color Image Quality Evaluation metric (UCIQE) [66], and CIEDE2000 [67]. Among these metrics, higher AG values indicate improved image sharpness; higher UCIQE values reflect a better balance among chroma, saturation, and contrast; lower FADE scores correspond to lower fog density; higher EI values indicate better detail preservation; and higher CCF and UIQM scores suggest improved contrast and perceptual quality, respectively. Unlike the aforementioned metrics, which rely on global statistical features, CIEDE2000 specifically quantifies the color differences between the recovered image and the reference ground truth, with lower values indicating superior color restoration.
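As an illustration of how one of these no-reference metrics is computed, the sketch below implements a commonly used definition of the Average Gradient on a single-channel image: the mean over pixels of the root of half the sum of squared horizontal and vertical forward differences. This is an assumption about the definition; the formulation in [62] may differ in normalization or boundary handling, and the function name is hypothetical.

```python
import math


def average_gradient(img):
    """Average Gradient of a 2D single-channel image (list of rows).

    Uses forward differences and averages over the (h-1) x (w-1) interior grid.
    """
    h, w = len(img), len(img[0])
    if h < 2 or w < 2:
        raise ValueError("image must be at least 2x2")
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            gx = img[y][x + 1] - img[y][x]   # horizontal difference
            gy = img[y + 1][x] - img[y][x]   # vertical difference
            total += math.sqrt((gx * gx + gy * gy) / 2.0)
    return total / ((h - 1) * (w - 1))


# A flat image has no gradient; a horizontal ramp has a constant one.
flat = [[0.5] * 4 for _ in range(4)]
ramp = [[float(x) for x in range(4)] for _ in range(4)]
print(average_gradient(flat), average_gradient(ramp))
```

Because the metric grows with any local intensity variation, it rewards sharper detail but can also increase when noise is amplified, which is one reason it is reported alongside the other metrics rather than on its own.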

4.1. Parameter Settings

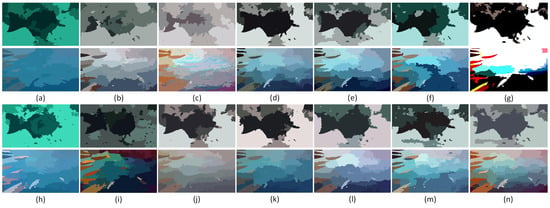

The proposed algorithm involves two key parameters: the adjustment parameter for detail enhancement and the fusion weight parameter . To determine their appropriate values, we randomly selected several images and conducted experiments following these strategies: (1) fixing while varying ; (2) fixing while varying . As shown in the results of Figure 3, when increases from 0.8 to 1.6, the details of the resulting images gradually become richer; however, an excessively large leads to over-enhancement. Similarly, a smaller risks weakening detail contrast, while a larger makes the enhanced image appear unnatural. Therefore, to balance the visual naturalness and contrast of the enhanced image, we empirically set and .

Figure 3.

The effect of different values of and on the enhanced image. Specifically, in the first to second rows, the results correspond to different values of with the parameter fixed at 3.0; in the third to fourth rows, the results correspond to different values of with the parameter fixed at 1.2.

4.2. Comparisons on the Color-Check7 Dataset

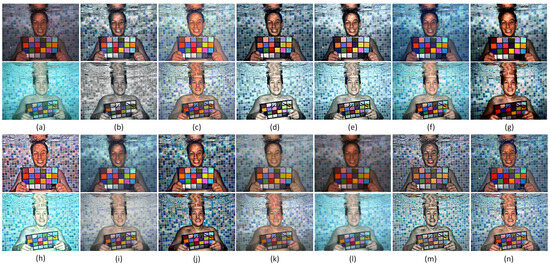

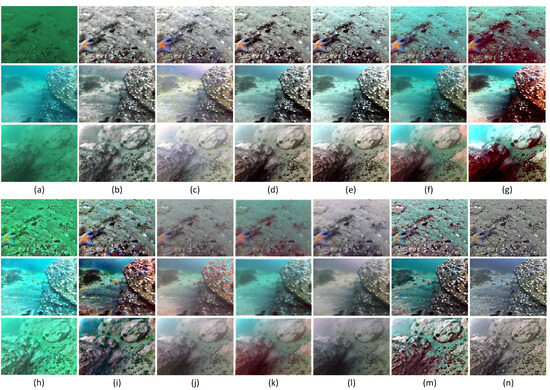

To evaluate the robustness and accuracy of underwater color correction and white balance methods, we conducted comparative experiments on the Color-Check7 dataset. As shown in the first row of Figure 4, ACDC and UTransNet produce visually unsatisfactory results for red-tinged underwater images, with unnatural color reproduction. While MMLE, WWPF, HLRP, and UDHTV partially correct color shifts, their outputs exhibit spatially inconsistent color distributions. Notably, MCLA and SPDF introduce a systematic blue cast, whereas L2UWE and WaterNet fail to sufficiently suppress residual red tones. Although PDRMRV, HFM, and our proposed method successfully eliminate the red cast, PDRMRV and HFM yield weaker contrast and less effective detail enhancement compared with our approach. For the blue-tinged underwater image in the second row of Figure 4, ACDC yields excessively dark outputs, compromising overall color clarity. MCLA, L2UWE, and WaterNet retain excessive blue chromaticity, failing to achieve balanced color representation. SPDF produces low-contrast results with inadequate detail preservation, while HFM introduces undesirable artifacts due to overcompensation. By contrast, the proposed method demonstrates superior detail preservation and a more natural appearance. Evaluation across the Color-Check7 dataset indicates that our approach consistently outperforms existing methods in color distortion correction and contrast enhancement.

Figure 4. Visual performance comparison of different enhancement methods on the Color-Check7 dataset. (a) Raw images; (b) enhanced results of ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.

For quantitative analysis, the CIEDE2000 values for all images are presented in Table 1. While the proposed method does not achieve the lowest CIEDE2000 value for every image, its overall average score is 11.067, which is lower (better) than those of the compared methods.

Table 1. Quantitative comparison of CIEDE2000 on the Color-Check7 dataset.

4.3. Comparisons on the UIEB Dataset

As illustrated in Figure 5, three underwater images randomly selected from the UIEB dataset—exhibiting diverse color casts and degradations, such as greenish tones, backscatter effects, and bluish tones—are employed for comparative experiments. For the greenish underwater image (first row), MCLA, L2UWE, HFM, WaterNet, and UTransNet fail to correct the color cast. PDRMRV introduces a reddish cast in the upper left corner due to overcompensation of the red channel. Similarly, SPDF produces noticeable blue artifacts, primarily due to its inappropriate local color correction strategy. Both HLRP and SPDF fail to effectively remove the greenish cast; instead, they introduce varying degrees of color distortion, resulting in overall visual darkening of the image. Although ACDC, MMLE, and WWPF mitigate these issues, their visual effects are not sufficiently natural, and detail preservation remains inadequate. For the bluish image (third row), PDRMRV produces noticeable blue artifacts, while MMLE, WWPF, SPDF, and UDHTV exhibit a purple cast in distant scenes. MCLA, HLRP, L2UWE, HFM, and UTransNet fail to eliminate the blue distortion; additionally, MCLA and HLRP introduce a red cast. In contrast, the proposed method effectively reduces color deviations and artifacts while markedly improving the visibility of underwater scenes. The quantitative evaluation results for the entire UIEB dataset are summarized in Table 2. Compared with competing methods, the proposed method achieves the best performance in FADE, UIQM, and UCIQE, with values of 0.833, 3.588, and 0.603, respectively. Although its AG and EI values are slightly lower than those of SPDF, the proposed method outperforms all counterparts in overall metrics.

Figure 5. Visual performance comparison of various methods on the UIEB dataset. (a) Raw images; (b) enhanced results of ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.

Table 2. Quantitative performance analyses on the UIEB and RUIE datasets. The best performance value for each metric is highlighted in red, and the second-best is marked in blue.

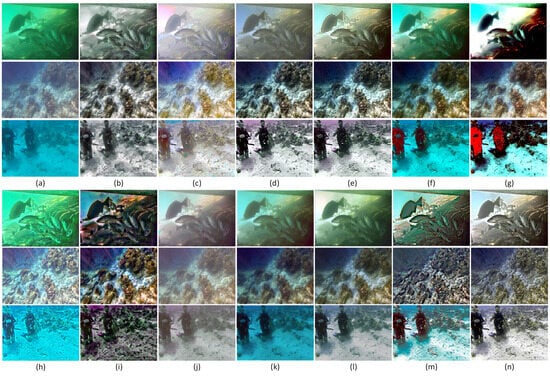

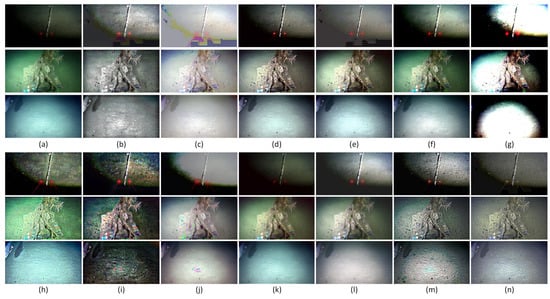
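For readers unfamiliar with the gradient-based metrics in Table 2, the following is a minimal sketch of how AG (average gradient) and EI (edge intensity) are commonly computed. The exact definitions used in this paper are not restated in this section, so the formulas below are assumptions based on common usage: AG as the mean of the root-mean-square of forward differences, and EI as the mean Sobel gradient magnitude. The function names `average_gradient` and `edge_intensity` are hypothetical.

```python
import numpy as np


def average_gradient(gray):
    """Average gradient (AG) of a 2-D grayscale image.

    Assumed definition: mean over pixels of sqrt((dx^2 + dy^2) / 2),
    where dx and dy are forward differences.
    """
    g = np.asarray(gray, dtype=np.float64)
    dx = g[:-1, 1:] - g[:-1, :-1]   # horizontal forward difference
    dy = g[1:, :-1] - g[:-1, :-1]   # vertical forward difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def edge_intensity(gray):
    """Edge intensity (EI) of a 2-D grayscale image.

    Assumed definition: mean magnitude of the 3x3 Sobel gradient,
    with the border handled by edge replication.
    """
    g = np.pad(np.asarray(gray, dtype=np.float64), 1, mode="edge")
    # Sobel response along x (right column minus left column).
    gx = (g[:-2, 2:] + 2.0 * g[1:-1, 2:] + g[2:, 2:]) - (
        g[:-2, :-2] + 2.0 * g[1:-1, :-2] + g[2:, :-2]
    )
    # Sobel response along y (bottom row minus top row).
    gy = (g[2:, :-2] + 2.0 * g[2:, 1:-1] + g[2:, 2:]) - (
        g[:-2, :-2] + 2.0 * g[:-2, 1:-1] + g[:-2, 2:]
    )
    return float(np.mean(np.hypot(gx, gy)))
```

Both functions expect a single-channel array; a color result would first be converted to grayscale. Higher values indicate stronger local contrast and sharper edges, which is why a method can score well on AG and EI while still leaving a color cast uncorrected.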

4.4. Comparisons on the RUIE and OceanDark Datasets

Figure 6 presents images randomly selected from the RUIE dataset. Notably, the PDRMRV method suffers from color overcompensation, with all three restored images exhibiting distinct blue blocks. In the dark regions of the images, MCLA, HLRP, SPDF, and HFM introduce significant red casts after enhancement. While the L2UWE method enhances texture details, it fails to mitigate the inherent color cast in the original images. For white shell-like objects, the UDHTV method also produces obvious blue blocks. Furthermore, images processed by UTransNet exhibit a slight red cast in distant regions. As shown in the quantitative results in Table 2, the proposed method ranks slightly behind SPDF in EI and marginally behind WWPF in FADE. Nevertheless, it outperforms competing methods in the remaining four metrics: AG, CCF, UIQM, and UCIQE.

Figure 6.

Visual performance comparison of various methods on the RUIE dataset. (a) Raw images; (b) enhanced results of ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.

Figure 7 shows images randomly chosen from the OceanDark dataset. The degraded underwater images suffer not only from color distortion but also from non-uniform lighting. For instance, in the first low-light underwater image, methods such as MMLE, MCLA, HLRP, L2UWE, SPDF, UDHTV, WaterNet, and UTransNet enhance brightness and contrast to some extent but fail to reveal underlying information in dark regions. Although ACDC and PDRMRV yield relatively uniform brightness, color artifacts emerge due to excessive color compensation. Notably, for the second (greenish) image and the third (bluish) image, methods like WWPF, MCLA, HLRP, L2UWE, and HFM are ineffective in eliminating color distortion. In contrast, the results of ACDC and the proposed method for these two images are superior to those of the aforementioned methods. Table 3 summarizes the quantitative evaluations of all compared methods on the entire OceanDark dataset. The proposed method outperforms the others in most cases across the five reported metrics, confirming its effectiveness in improving color and brightness for underwater images captured under suboptimal lighting conditions.

Figure 7.

Visual performance comparison of various methods on the OceanDark dataset. (a) Raw images; (b) enhanced results of ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.

Table 3.

Quantitative performance analyses on the OceanDark dataset. The best performance value for each metric is highlighted in red, and the second-best is marked in blue.

4.5. Ablation Study

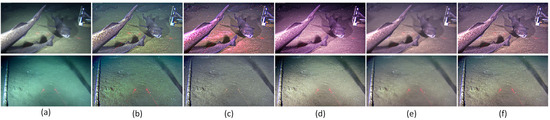

To verify the effectiveness of each component in the proposed method, a series of ablation experiments is conducted on the OceanDark dataset, including (1) our method without color correction (-w/o CC); (2) our method without local color refinement (-w/o LCR); (3) our method without global brightness adjustment (-w/o GBA); and (4) our method without contextual adaptive enhancement (-w/o CAE). Figure 8 presents a visual comparison of the ablation variants. The -w/o CC variant preserves details but fails to rectify color distortion. The -w/o LCR variant has limited ability to correct subtle color deviations in the enhanced images. Similarly, the -w/o GBA variant fails to meaningfully improve visibility, particularly in underexposed regions of the underwater scene. While the -w/o CAE variant improves overall visibility, it shows a pronounced deficiency in high-frequency details. Additionally, Table 4 summarizes the quantitative evaluations of all ablation configurations. The complete framework, which integrates all proposed components, achieves the best performance, indicating that each component plays an important role in the proposed method.

Figure 8.

Ablation study of the proposed method. (a) Raw images; (b) Results of -w/o CC; (c) Results of -w/o LCR; (d) Results of -w/o GBA; (e) Results of -w/o CAE; (f) Proposed method.

Table 4.

Quantitative evaluations of ablation results on the OceanDark dataset. The best performance for each metric is highlighted in red.

4.6. Runtime Analysis

For computational efficiency analysis, we quantitatively evaluated the average running time of various methods on 1280 × 720-pixel images from the OceanDark dataset and 400 × 300-pixel images from the RUIE dataset. All traditional algorithms were implemented in MATLAB 2019b and executed on a Windows 11 PC equipped with a 13th Gen Intel(R) Core(TM) i9-13900KF CPU (3.00 GHz) and 32 GB RAM. Additionally, a 24 GB NVIDIA GeForce RTX 4090 GPU was employed to run deep learning-based algorithms implemented using the PyTorch 1.10.0 framework. As summarized in Table 5, the proposed method achieves an average processing time of 4.51 s for 1280 × 720-pixel images, making it considerably faster than most traditional methods (namely PDRMRV, MCLA, HLRP, L2UWE, SPDF, UDHTV, and HFM). Although our algorithm is slower than ACDC, MMLE, and WWPF, its quantitative evaluation results in the preceding experiments are superior to those of these algorithms.

Table 5.

Comparison of running times for images with different resolutions (in seconds).

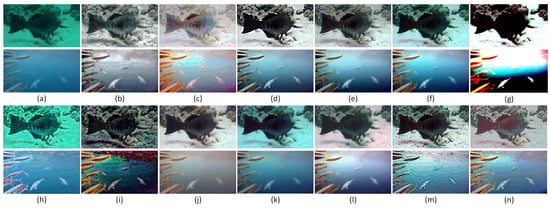
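As an illustration of how a per-image average running time like those in Table 5 can be measured, the following is a minimal Python sketch. It is not the timing code used in this study (the traditional methods were run in MATLAB); the function name `average_runtime`, the `enhance` callable, and the warm-up count are hypothetical choices for illustration.

```python
import time


def average_runtime(enhance, images, warmup=1):
    """Return the mean wall-clock time in seconds of enhance(img) over images.

    `enhance` is any callable taking one image (hypothetical stand-in for an
    enhancement method). The first `warmup` images are processed once before
    timing so that one-off costs such as lazy initialization are excluded.
    """
    images = list(images)
    if not images:
        raise ValueError("at least one image is required")
    for img in images[:warmup]:
        enhance(img)                      # untimed warm-up pass
    start = time.perf_counter()
    for img in images:
        enhance(img)                      # timed pass over every image
    return (time.perf_counter() - start) / len(images)
```

Timing all images of one resolution in a single loop and dividing by the count, as done here, averages out per-call jitter. For GPU-based methods, the device would additionally need to be synchronized before reading the clock, which this sketch does not cover.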

4.7. Application to Image Segmentation

The primary objective of image enhancement is to provide high-quality input images for subsequent high-level vision applications, thereby facilitating more effective feature extraction in advanced computer vision tasks. To rigorously evaluate the enhancement performance of our method, we employ a robust color image segmentation algorithm, superpixel-based fast FCM clustering (SFFCM) [68], to partition images into mutually exclusive and homogeneous regions.

Figure 9 shows the results of various methods on images randomly selected from the OceanDark dataset, with the corresponding segmentation outcomes presented in Figure 10. With our enhanced images, SFFCM extracts fish-body contours more accurately, whereas the outputs of the comparative methods yield inferior contour localization. These results indicate that our method generates images with enhanced structural details, superior contrast, and uniform illumination distribution.

Figure 9.

Visual performance comparison of various methods on the OceanDark dataset. (a) Raw images; (b) enhanced results of ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.

Figure 10.

Segmentation performance of the SFFCM algorithm on images provided by (a) Raw images; (b) ACDC [58]; (c) PDRMRV [9]; (d) MMLE [4]; (e) WWPF [47]; (f) MCLA [21]; (g) HLRP [10]; (h) L2UWE [59]; (i) SPDF [44]; (j) UDHTV [60]; (k) HFM [6]; (l) WaterNet [25]; (m) UTransNet [31]; (n) proposed method.
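To make the clustering step behind this evaluation more concrete, the following is a minimal sketch of standard fuzzy c-means (FCM) applied directly to pixel colors. It is not SFFCM [68]: the superpixel pre-segmentation and the speed-ups of that algorithm are omitted, and only the alternating update of cluster centers and memberships is shown. The function names, the fuzzifier `m = 2`, the iteration count, and the random seed are all assumptions made for illustration.

```python
import numpy as np


def fuzzy_c_means(X, n_clusters=2, m=2.0, n_iter=50, seed=0):
    """Plain fuzzy c-means on feature vectors X of shape (N, D).

    Returns (centers, U): centers has shape (n_clusters, D) and U has shape
    (N, n_clusters), with each row of U summing to 1.
    """
    X = np.asarray(X, dtype=np.float64)
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], n_clusters))
    U /= U.sum(axis=1, keepdims=True)          # random fuzzy partition
    for _ in range(n_iter):
        W = U ** m
        # Centers are membership-weighted means of the data.
        centers = (W.T @ X) / W.sum(axis=0)[:, None]
        # Distance of every sample to every center (small offset avoids 0).
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2) + 1e-12
        # Membership update: u_ik proportional to d_ik^(-2 / (m - 1)).
        inv = d ** (-2.0 / (m - 1.0))
        U = inv / inv.sum(axis=1, keepdims=True)
    return centers, U


def segment_image(img, n_clusters=2):
    """Cluster the pixels of an (H, W, C) image and return an (H, W) label map."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape[:2]
    _, U = fuzzy_c_means(img.reshape(h * w, -1), n_clusters=n_clusters)
    return U.argmax(axis=1).reshape(h, w)
```

Because the clustering operates on color features, residual color casts or low contrast in the input blur the separation between object and background clusters, which is why segmentation quality can serve as an indirect indicator of enhancement quality.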

5. Conclusions

In this work, we propose an effective and robust underwater image enhancement method comprising two key modules: progressive color correction and vision-inspired adaptive image enhancement. During the color correction stage, we first employ a Rayleigh distribution prior to develop a globally color-balanced pre-mapping strategy for color shift correction. Subsequently, we design a guided filter-based local color refinement method that explicitly models inter-channel correlations to alleviate potential local reddish artifacts caused by overcompensation. Within the vision-inspired adaptive enhancement framework, we introduce a global brightness adjustment module to tackle non-uniform illumination caused by lighting conditions. Additionally, a contextually adaptive enhancement module derived from the local energy characteristics of detail layers is designed to jointly suppress noise and enhance structural details. Extensive experiments conducted across multiple underwater image benchmarks verify the proposed method’s superior performance in color cast correction, brightness adjustment, and structural detail enhancement.

Author Contributions

Z.L. proposed the original idea and wrote the manuscript. W.L. and J.W. collected materials and wrote the manuscript. Y.Y. supervised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Key Research and Development (R&D) Plan of Shanxi Province (No. 2023-ZDLGY-15), the Guangdong Basic and Applied Basic Research Foundation (No. 2023A1515110770), the Non-funded Science and Technology Research Program of Zhanjiang (No. 2024B01051), and the Program for Scientific Research Start-up Funds of Guangdong Ocean University (Nos. 060302112319 and 060302112309).

Data Availability Statement

The underwater datasets used in this study are publicly available on GitHub via the following URL: https://github.com/xahidbuffon/Awesome_Underwater_Datasets (accessed on 15 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An overview of underwater vision enhancement: From traditional methods to recent deep learning. J. Mar. Sci. Eng. 2022, 10, 241.

- Zhou, J.; Pang, L.; Zhang, D.; Zhang, W. Underwater image enhancement method via multi-interval subhistogram perspective equalization. IEEE J. Ocean. Eng. 2023, 48, 474–488.

- Jha, M.; Bhandari, A.K. Cbla: Color-balanced locally adjustable underwater image enhancement. IEEE Trans. Instrum. Meas. 2024, 73, 1–11.

- Zhang, W.; Zhuang, P.; Sun, H.-H.; Li, G.; Kwong, S.; Li, C. Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2017, 27, 379–393.

- An, S.; Xu, L.; Member, I.S.; Deng, Z.; Zhang, H. Hfm: A hybrid fusion method for underwater image enhancement. Eng. Appl. Artif. Intell. 2024, 127, 107219.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Sbert, M. Color channel compensation (3c): A fundamental pre-processing step for image enhancement. IEEE Trans. Image Process. 2019, 29, 2653–2665.

- Subramani, B.; Veluchamy, M. Pixel intensity optimization and detail-preserving contextual contrast enhancement for underwater images. Opt. Laser Technol. 2025, 180, 111464.

- Zhou, J.; Wang, S.; Lin, Z.; Jiang, Q.; Sohel, F. A pixel distribution remapping and multi-prior retinex variational model for underwater image enhancement. IEEE Trans. Multimed. 2024, 26, 7838–7849.

- Zhuang, P.; Wu, J.; Porikli, F.; Li, C. Underwater image enhancement with hyper-laplacian reflectance priors. IEEE Trans. Image Process. 2022, 31, 5442–5455.

- Hou, G.; Li, J.; Wang, G.; Yang, H.; Huang, B.; Pan, Z. A novel dark channel prior guided variational framework for underwater image restoration. J. Vis. Commun. Image Represent. 2020, 66, 102732.

- Zhou, J.; Chen, S.; Zhang, D.; He, Z.; Lam, K.-M.; Sohel, F.; Vivone, G. Adaptive variational decomposition for water-related optical image enhancement. ISPRS J. Photogramm. Remote Sens. 2024, 216, 15–31.

- Peng, Y.-T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Liang, Z.; Ding, X.; Wang, Y.; Yan, X.; Fu, X. Gudcp: Generalization of underwater dark channel prior for underwater image restoration. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4879–4884.

- Liang, Z.; Zhang, W.; Ruan, R.; Zhuang, P.; Xie, X.; Li, C. Underwater image quality improvement via color, detail, and contrast restoration. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 1726–1742.

- Huo, G.; Wu, Z.; Li, J. Underwater image restoration based on color correction and red channel prior. In Proceedings of the 2018 IEEE International Conference on Systems, Man and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 3975–3980.

- Cui, Z.; Yang, C.; Wang, S.; Wang, X.; Duan, H.; Na, J. Underwater image enhancement by illumination map estimation and adaptive high-frequency gain. IEEE Sens. J. 2024, 24, 24677–24689.

- Wang, Y.; Liu, H.; Chau, L.-P. Single underwater image restoration using adaptive attenuation-curve prior. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 65, 992–1002.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In Advances in Multimedia Information Processing–PCM 2018, Proceedings of the Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 678–688.

- Liu, K.; Liang, Y. Underwater image enhancement method based on adaptive attenuation-curve prior. Opt. Express 2021, 29, 10321–10345.

- Zhou, J.; Wang, Y.; Li, C.; Zhang, W. Multicolor light attenuation modeling for underwater image restoration. IEEE J. Ocean. Eng. 2023, 48, 1322–1337.

- Zhuang, P.; Li, C.; Wu, J. Bayesian retinex underwater image enhancement. Eng. Appl. Artif. Intell. 2021, 101, 104171.

- Li, D.; Zhou, J.; Wang, S.; Zhang, D.; Zhang, W.; Alwadai, R.; Alenezi, F.; Tiwari, P.; Shi, T. Adaptive weighted multiscale retinex for underwater image enhancement. Eng. Appl. Artif. Intell. 2023, 123, 106457.

- Yeh, C.-H.; Lai, Y.-W.; Lin, Y.-Y.; Chen, M.-J.; Wang, C.-C. Underwater image enhancement based on light field-guided rendering network. J. Mar. Sci. Eng. 2024, 12, 1217.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

- Li, F.; Li, W.; Zheng, J.; Wang, L.; Xi, Y. Contrastive feature disentanglement via physical priors for underwater image enhancement. Remote Sens. 2025, 17, 759.

- Sun, B.; Mei, Y.; Yan, N.; Chen, Y. Umgan: Underwater image enhancement network for unpaired image-to-image translation. J. Mar. Sci. Eng. 2023, 11, 447.

- Fu, F.; Liu, P.; Shao, Z.; Xu, J.; Fang, M. Mevo-gan: A multi-scale evolutionary generative adversarial network for underwater image enhancement. J. Mar. Sci. Eng. 2024, 12, 1210.

- Cong, R.; Yang, W.; Zhang, W.; Li, C.; Guo, C.-L.; Huang, Q.; Kwong, S. Pugan: Physical model-guided underwater image enhancement using gan with dual-discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Peng, L.; Zhu, C.; Bian, L. U-shape transformer for underwater image enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

- Tang, Y.; Iwaguchi, T.; Kawasaki, H.; Sagawa, R.; Furukawa, R. Autoenhancer: Transformer on u-net architecture search for underwater image enhancement. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1403–1420.

- An, G.; He, A.; Wang, Y.; Guo, J. Uwmamba: Underwater image enhancement with state space model. IEEE Signal Process. Lett. 2024, 31, 2725–2729.

- Guan, M.; Xu, H.; Jiang, G.; Yu, M.; Chen, Y.; Luo, T.; Song, Y. Watermamba: Visual State Space Model for Underwater Image Enhancement. arXiv 2024, arXiv:2405.08419.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2822–2837.

- Zhou, J.; Wang, Y.; Zhang, W. Underwater image restoration via information distribution and light scattering prior. Comput. Electr. Eng. 2022, 100, 107908.

- Li, C.-Y.; Guo, J.-C.; Cong, R.-M.; Pang, Y.-W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Akkaynak, D.; Treibitz, T. Sea-thru: A method for removing water from underwater images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1682–1691.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

- Liu, J.; Liu, R.W.; Sun, J.; Zeng, T. Rank-one prior: Real-time scene recovery. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 8845–8860.

- Xie, J.; Hou, G.; Wang, G.; Pan, Z. A variational framework for underwater image dehazing and deblurring. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3514–3526.

- Zhang, W.; Pan, X.; Xie, X.; Li, L.; Wang, Z.; Han, C. Color correction and adaptive contrast enhancement for underwater image enhancement. Comput. Electr. Eng. 2021, 91, 106981.

- Balta, H.; Ancuti, C.O.; Ancuti, C. Effective underwater image restoration based on color channel compensation. In Proceedings of the OCEANS 2021: San Diego–Porto, San Diego, CA, USA, 20–23 September 2021; pp. 1–4.

- Kang, Y.; Jiang, Q.; Li, C.; Ren, W.; Liu, H.; Wang, P. A perception-aware decomposition and fusion framework for underwater image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 988–1002.

- Zhang, W.; Dong, L.; Zhang, T.; Xu, W. Enhancing underwater image via color correction and bi-interval contrast enhancement. Signal Process. Image Commun. 2021, 90, 116030.

- Zhang, T.; Su, H.; Fan, B.; Yang, N.; Zhong, S.; Yin, J. Underwater image enhancement based on red channel correction and improved multiscale fusion. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–20.

- Zhang, W.; Zhou, L.; Zhuang, P.; Li, G.; Pan, X.; Zhao, W.; Li, C. Underwater image enhancement via weighted wavelet visual perception fusion. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 2469–2483.

- Lu, S.; Guan, F.; Zhang, H.; Lai, H. Underwater image enhancement method based on denoising diffusion probabilistic model. J. Vis. Commun. Image Represent. 2023, 96, 103926.

- Wang, Z.; Shen, L.; Xu, M.; Yu, M.; Wang, K.; Lin, Y. Domain adaptation for underwater image enhancement. IEEE Trans. Image Process. 2023, 32, 1442–1457.

- Lin, W.-T.; Lin, Y.-X.; Chen, J.-W.; Hua, K.-L. Pixmamba: Leveraging state space models in a dual-level architecture for underwater image enhancement. In Proceedings of the Asian Conference on Computer Vision, Hanoi, Vietnam, 8–12 December 2024; pp. 3622–3637.

- Ouyang, W.; Liu, J.; Wei, Y. An underwater image enhancement method based on balanced adaption compensation. IEEE Signal Process. Lett. 2024, 31, 1034–1038.

- Van De Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.

- Afifi, M.; Price, B.; Cohen, S.; Brown, M.S. When color constancy goes wrong: Correcting improperly white-balanced images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1535–1544.

- Azmi, K.Z.M.; Ghani, A.S.A.; Yusof, Z.M.; Ibrahim, Z. Natural-based underwater image color enhancement through fusion of swarm-intelligence algorithm. Appl. Soft Comput. 2019, 85, 105810.

- Finlayson, G.D.; Trezzi, E. Shades of gray and colour constancy. In Proceedings of the Color and Imaging Conference, Scottsdale, AZ, USA, 9 November 2004; Society of Imaging Science and Technology: Springfield, VA, USA, 2004; Volume 12, pp. 37–41.

- Yang, K.-F.; Zhang, X.-S.; Li, Y.-J. A biological vision inspired framework for image enhancement in poor visibility conditions. IEEE Trans. Image Process. 2019, 29, 1493–1506.

- Gong, Y.; Sbalzarini, I.F. Curvature filters efficiently reduce certain variational energies. IEEE Trans. Image Process. 2017, 26, 1786–1798.

- Zhang, W.; Wang, Y.; Li, C. Underwater image enhancement by attenuated color channel correction and detail preserved contrast enhancement. IEEE J. Ocean. Eng. 2022, 47, 718–735.

- Marques, T.P.; Albu, A.B. L2uwe: A framework for the efficient enhancement of low-light underwater images using local contrast and multi-scale fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 538–539.

- Li, Y.; Hou, G.; Zhuang, P.; Pan, Z. Dual high-order total variation model for underwater image restoration. arXiv 2024, arXiv:2407.14868.

- Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

- Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10561–10570.

- Wang, Y.; Li, N.; Li, Z.; Gu, Z.; Zheng, H.; Zheng, B.; Sun, M. An imaging-inspired no-reference underwater color image quality assessment metric. Comput. Electr. Eng. 2018, 70, 904–913.

- Choi, L.K.; You, J.; Bovik, A.C. Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Gómez-Polo, C.; Muñoz, M.P.; Luengo, M.C.L.; Vicente, P.; Galindo, P.; Casado, A.M.M. Comparison of the cielab and ciede2000 color difference formulas. J. Prosthet. Dent. 2016, 115, 65–70.

- Lei, T.; Jia, X.; Zhang, Y.; Liu, S.; Meng, H.; Nandi, A.K. Superpixel-based fast fuzzy c-means clustering for color image segmentation. IEEE Trans. Fuzzy Syst. 2018, 27, 1753–1766.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).