Abstract

A distance-independent background light estimation method is proposed for underwater overhead images. The method addresses the challenge of the absence of the farthest point in underwater overhead images by adopting a global perspective to select the optimal solution and estimate the background light by minimizing the loss function. Moreover, to enhance the information retention in the images, a translation function is employed to adjust the transmission map values within the range of [0.1, 0.95]. Additionally, the method capitalizes on the redundancy of image information and the similarity of adjacent frames, resulting in higher computational efficiency. The comparative experimental results show that the proposed method has better restoration performance on underwater images in various scenarios, especially in handling color bias and preserving information.

1. Introduction

The development of modern technology has made unmanned underwater vehicles (UUVs) suitable oceanic equipment for performing various underwater tasks. In political, economic, and military fields, UUVs play an irreplaceable role. They are widely used for tasks such as pipeline tracking, underwater terrain scanning, laying underwater cables, and developing oceanic resources. As the tasks performed by UUVs become more complex, acoustic technology is unable to meet the accuracy requirements for fine operations. In contrast, optical technology is more suitable for close-range, delicate operations. The visual quality of underwater optical images directly affects the performance of subsequent operations, such as target detection, feature extraction, and pose estimation. Therefore, obtaining clear and realistic underwater images is crucial for precise underwater operations. However, the special properties of the underwater environment degrade the quality of underwater images. Because light of different wavelengths attenuates at different rates underwater and is scattered by underwater impurities, underwater images often suffer from low contrast, blurred object edges, high noise, and blue-green color deviation. These problems greatly affect the effectiveness of UUVs in underwater tasks and may even lead to task failure. Therefore, image processing technology for underwater images plays a crucial role in UUV underwater operations.

This paper aims to address the issue of poor image restoration caused by the absence of the farthest point in underwater overhead images. To achieve this, a distance-independent real-time underwater image restoration method suitable for CPUs is proposed. Specifically, a loss function is constructed to minimize information loss and achieve histogram distribution equalization. For the severely attenuated red channel, the background light is calculated based on the minimum difference principle of gray value. In addition, this method also optimizes the transmission map estimation method [1] using a translation function to reduce information loss. To address real-time issues, two acceleration strategies are proposed based on the redundancy of image information and the similarity of adjacent frames. Experimental results show that the proposed method can restore underwater images with richer colors and more information.

The rest of the paper is organized as follows. In Section 2, the existing underwater image processing methods and their shortcomings are reviewed. In Section 3, the underwater imaging model is introduced. In Section 4, the proposed method for real-time underwater image restoration is explained. In Section 5, the experimental results are presented and discussed. Finally, Section 6 is the conclusion.

2. Related Work

In recent years, many methods have been proposed to enhance and restore underwater images to tackle these issues. Image enhancement algorithms [2] include histogram equalization, white balancing, wavelet transform, and fusion methods. These algorithms enhance image contrast and sharpen image details to improve the quality without relying on the underwater imaging model. While these methods are easy to operate and have simple principles, they do not address the root cause of the degradation of underwater images. Image restoration algorithms based on the Jaffe–McGlamery underwater imaging model [3,4] address this limitation. The core of this approach is to solve for two unknowns in the model, the transmission map and the background light, and then restore the image. After the pioneering work of He et al. [5], many related methods and variants emerged [6,7]. While these image restoration algorithms can be applied to image dehazing and deblurring, there is still room for improvement [8]. For better results, combined approaches [8,9,10,11,12,13,14,15,16] have been proposed that pair image enhancement methods, such as Gray-World assumption theory, semantic white balance, low-pass filtering, and polarization technology, with image restoration algorithms. While these methods can achieve better results, they require more processing time and more complex computations.

Most of the methods mentioned above attempt to determine the distance between the camera and the target and approximate the intensity value of the farthest point as the background light. For instance, the dark channel prior (DCP) algorithm proposed by He et al. [5] exploits the fact that scattered light can increase the luminance of the dark channel. As the depth of the scene increases, the dark channel luminance also increases. The background light can be obtained by calculating the average gray value of the top 1% of the brightest pixels in the dark channel. Furthermore, the transmission map can be obtained by substituting the dark channel value into the Jaffe–McGlamery underwater imaging model [3,4] after assuming that the dark channel value of a clear and fog-free image tends to zero. Drews et al. [6] proposed the underwater dark channel prior (UDCP) algorithm, which is a variant of the DCP algorithm that removes the effect of the red channel by considering the severe attenuation of the red channel in underwater images. Carlevaris-Bianco et al. [1] calculated the difference between the red channel and the maximum value of the blue and green channels to obtain the transmittance, which decreases with increasing distance. This law leads to the proposed MIP algorithm, where the grayscale value at the lowest transmittance, which corresponds to the farthest distance, is the value of the background light. Dai et al. [14] exploited the fact that objects farther from the camera are more blurred than closer objects. They used a quadtree to find the small area in the image that is the flattest, has the least color variation, and is the most blurred, and took the mean grayscale value of this area as the value of the background light. Additionally, Peng et al. [15] combined several of the above methods to solve for the unknowns in the Jaffe–McGlamery underwater imaging model [3,4].

Furthermore, there have been various deep learning-based methods proposed recently for scene depth and lighting estimation. For instance, Wang et al. [17] presented an occlusion-aware light field depth estimation network with channel and view attention, which uses a coarse-to-fine approach to fuse sub-aperture images from different viewpoints, enabling robust and accurate depth estimation even in the presence of occlusions. Song et al. [7] used deep learning to find the linear relationship between the maximum value of the blue-green channel and the maximum value of the red channel and the distance. Similarly, the transmission map is found, and the grayscale value at the farthest distance is the value of background light. Ke et al. [16] comprehensively consider color, saturation, and detail information to construct the scene depth and edge maps for estimating the transmission map. Zhan et al. [18] proposed a lighting estimation framework that combines regression-based and generation-based methods to achieve precise regression of lighting distribution with the inclusion of a depth branch. These methods rely on estimating the background light value by approximating the farthest point in the underwater image as a point at infinity from the camera. Therefore, these methods only work well when processing horizontally captured images, as shown in Figure 1a. However, in areas such as underwater pipeline tracking, underwater mine clearance, underwater terrain exploration, and seafood fishing, the cameras on UUVs are typically pointed downwards vertically or diagonally. The captured images are shown in Figure 1b, where each point is very close to the camera. In this situation, the distance-based background light estimation methods are unsuitable for such images. Moreover, the DCP algorithm, which depends on the background light to solve for the transmission map, tends to introduce errors in the results, degrading the quality further.

Figure 1.

Underwater images. (a) Image taken horizontally; (b) Image taken overhead.

To solve this problem, image restoration methods that do not rely on the farthest point from the camera in the image are needed. Currently, the commonly used solution is to create an end-to-end network that directly outputs the restored image after inputting the original image [19,20,21,22,23,24,25,26,27]. For instance, Tang et al. [25] unified the unknown variables of the underwater imaging model. They predicted a single-variable linear physical model through a lightweight convolutional neural network (CNN) to generate clear images directly. Zhang et al. [26] enhanced the three-channel features of the image and fused them based on CNN to solve the problem of non-uniform illumination. Han et al. [27] utilized contrastive learning and generative adversarial networks to maximize the mutual information between original information for image restoration. Although these methods perform well in underwater scenes, they require graphics processing units (GPUs) with high performance and large memory, which makes them poorly suited to UUVs with limited hardware resources. This motivates the underwater overhead image restoration method proposed here, which is suitable for central processing units (CPUs).

Recently, Li et al. [8] proposed a simple and effective underwater image restoration method based on the principles of minimum information loss and histogram priors. This method is distance-independent and can be implemented on CPUs. Inspired by this, these principles and prior knowledge are applied to propose a distance-independent background light calculation method. The novelty of the method is that it takes a global perspective and constructs a loss function based on the expected effect of image restoration, thus obtaining the background light without relying on distance. In addition, considering the real-time requirement of image processing, Jamil et al. [28] classified the information in the image into three categories (useful, redundant, and irrelevant) and discussed the pros and cons of various lossy image compression methods. The background light is a global variable: it is related to the overall color tone of the entire image but not to the details in the image. Inspired by this, when solving for the background light, this paper adopts a lossy compression method to reduce the resolution of the image, thereby improving the efficiency of the algorithm. The main contributions of this work are as follows:

- A distance-independent method for solving background light is proposed, which is more suitable for color correction and does not require additional image enhancement operations or hardware resources.

- By utilizing spatial resolution and similarity between adjacent frames, the proposed method offers high computational efficiency.

3. Background

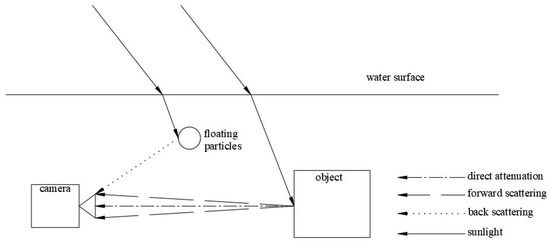

Underwater images are generally degraded due to the absorption and scattering of light. The medium of water causes the absorption of light, reducing the energy of light based on its wavelength and depth. As different wavelengths of light have varying attenuation rates in water, this leads to color distortion. In addition, the scattering of light is caused by suspended particles in the water reflecting light in other directions, resulting in image blurring. The Jaffe–McGlamery underwater imaging model suggests that the light received by the camera in an underwater scene contains three components: the direct attenuation component, the forward scattering component, and the backscattering component, as shown in Figure 2. Therefore, an underwater image can be represented as a linear superposition of these three components. The model can be expressed as

$$E_T = E_d + E_f + E_b \quad (1)$$

where $E_T$ is the light arriving at the camera, $E_d$ is the direct attenuation, $E_f$ is the forward scattering, and $E_b$ is the backscattering.

Figure 2.

Jaffe–McGlamery underwater imaging model.

Direct attenuation is the attenuation due to the main radiation within the medium with increasing propagation distance. It is mathematically defined as

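$$E_d(x, \lambda) = J(x, \lambda)\, t(x, \lambda) \quad (2)$$

where $x$ denotes a pixel, $\lambda$ is the wavelength of light, $J(x, \lambda)$ is the non-degraded image, and $t(x, \lambda)$ is the transmission map.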

Forward scattering is the light reflected from the target object that reaches the camera through scattering. It can be expressed as

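$$E_f(x, \lambda) = E_d(x, \lambda) * g(x, \lambda) \quad (3)$$

where $g(x, \lambda)$ is the point spread function and $*$ denotes convolution; because forward scattering has little effect on image quality, it is usually neglected.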

Backscattering is the light reaching the camera due to the scattering effect of impurities in the water. It can be expressed as

$$E_b(x, \lambda) = B(\lambda)\left(1 - t(x, \lambda)\right) \quad (4)$$

where $B(\lambda)$ is the background light. It represents the color of the body of water, and its value can be expressed as the grayscale value of a point at infinite distance from the camera.

The simplified underwater imaging model [25] can be expressed as

$$I(x) = J(x)\, t(x) + B\left(1 - t(x)\right) \quad (5)$$

where $I(x)$ is the observed image.

Equation (5) can be rearranged into Equation (6):

$$J(x) = \frac{I(x) - B}{t(x)} + B \quad (6)$$

The main task of underwater image recovery is therefore to estimate the transmission map $t(x)$ and the background light $B$.

Methods for solving the background light and the transmission map often involve estimating the distance between the camera and the target. This is because the background light represents the grayscale value of a point at infinity from the camera. In addition, according to the Beer–Lambert law [29], as shown in Equation (7), the transmission map decreases exponentially as the distance increases.

$$t(x) = e^{-c\, d(x)} \quad (7)$$

where $c$ is the attenuation coefficient and $d(x)$ is the distance between the camera and the target.
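
As an illustration of how the simplified imaging model and the Beer–Lambert law fit together, the following minimal sketch degrades a single gray value and then inverts the model. The function names, the numerical values, and the lower bound `t_floor` are assumptions chosen for the example and are not taken from the paper.

```python
import math

def transmission(distance_m, attenuation):
    """Beer-Lambert transmission: t = exp(-c * d)."""
    return math.exp(-attenuation * distance_m)

def degrade(j, t, b):
    """Simplified imaging model: I = J * t + B * (1 - t)."""
    return j * t + b * (1.0 - t)

def restore(i, t, b, t_floor=0.1):
    """Inverse model: J = (I - B) / t + B, with t bounded away from zero."""
    return (i - b) / max(t, t_floor) + b

# Hypothetical values: a target 2 m away, attenuation coefficient 0.4 per metre.
t = transmission(distance_m=2.0, attenuation=0.4)
observed = degrade(j=0.8, t=t, b=0.3)
recovered = restore(observed, t, b=0.3)
print(round(t, 4), round(observed, 4), round(recovered, 4))
```

When the transmission and the background light are known exactly, the inversion returns the original gray value; in practice both quantities are estimates, which is why their accuracy dominates the restoration quality.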

4. The Proposed Method

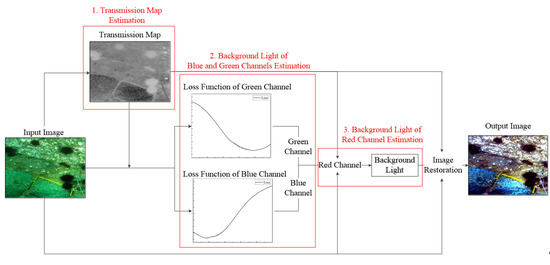

This section introduces the proposed method for restoring overhead underwater images, as well as strategies for accelerating the process. The operational steps of the proposed method are shown in Figure 3. Firstly, the transmission map is calculated, and the values of the transmission map outside the range [0.1, 0.95] are transferred by a translation function to reduce information loss. Secondly, the background light for the blue and green channels is estimated by minimizing the loss function. Thirdly, the background light for the red channel is determined based on the principle of minimizing the average grayscale difference between the three channels. Finally, the degraded underwater image is restored based on the obtained background light and transmission map.

Figure 3.

Operation process of the proposed method.

4.1. Transmission Map Estimation

To achieve accurate transmission map solutions and avoid compounding errors, this paper utilizes the MIP algorithm’s [1] transmission map solution method instead of the DCP algorithm, which depends on the background light. In the MIP algorithm, the largest differences among the three different color channels are calculated as follows:

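$$D(x) = \max_{y \in \Omega(x)} I^{r}(y) - \max_{y \in \Omega(x),\, c \in \{g, b\}} I^{c}(y) \quad (8)$$

where $D(x)$ is the largest difference among the three color channels, and $\Omega(x)$ is a local patch in the image.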

The transmission map is then

$$t(x) = D(x) + \left(1 - \max_{x} D(x)\right) \quad (9)$$

As shown by Equation (5), t(x) and (1 − t(x)), respectively, represent the contribution of the main radiation and the background radiation to the image. The value of t(x) decreases as the background radiation dominates the grayscale value. However, as the background radiation is usually not as bright as the main radiation, the minimum value of t(x) is kept at 0.1 to avoid an overly dark recovered image. In addition, to preserve the image’s authenticity, a portion of the fog is retained in the recovered image. Hence, the maximum value of t(x) is set to 0.95 [5]. This leads to a transmission map range of [0.1, 0.95].
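
The MIP-style estimate can be sketched as follows. The sketch assumes the form given by Carlevaris-Bianco et al. [1]: the patch maximum of the red channel minus the patch maximum of the green and blue channels, shifted so that the largest value of the map equals 1. Images are plain nested lists with values in [0, 1], and the names `patch_max` and `mip_transmission` as well as the patch radius are illustrative assumptions.

```python
def patch_max(channel, radius):
    """Maximum of `channel` over a (2*radius+1)^2 window around each pixel."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            rows = range(max(0, y - radius), min(h, y + radius + 1))
            cols = range(max(0, x - radius), min(w, x + radius + 1))
            out[y][x] = max(channel[r][c] for r in rows for c in cols)
    return out

def mip_transmission(red, green, blue, radius=1):
    """D = patch-max(red) - patch-max(max(green, blue)); t = D + (1 - max D)."""
    gb = [[max(g, b) for g, b in zip(g_row, b_row)]
          for g_row, b_row in zip(green, blue)]
    max_r, max_gb = patch_max(red, radius), patch_max(gb, radius)
    d = [[r - m for r, m in zip(r_row, m_row)]
         for r_row, m_row in zip(max_r, max_gb)]
    d_max = max(max(row) for row in d)
    return [[v + (1.0 - d_max) for v in row] for row in d]

# Tiny 1 x 2 example with a patch radius of 0 (per-pixel differences).
t_map = mip_transmission([[0.3, 0.1]], [[0.5, 0.6]], [[0.4, 0.7]], radius=0)
print(t_map)
```

Because the estimate depends only on channel differences, it does not require the background light, which is the property that motivates its use here instead of the DCP transmission estimate.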

However, simply cutting off the transmission map values beyond the range of values will lead to the loss of information. Therefore, in this paper, the transmission map is transformed as follows to transfer the information in the transmission map to the range [0.1, 0.95] as much as possible.

where is the quantity of the transformation, is the minimum value of the transmission map, is the maximum value of the transmission map, and is the transmission map after the transformation.
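
The idea of translating the transmission map rather than simply truncating it can be illustrated with the sketch below. The exact translation function of the paper is not reproduced here; the rule used (shift the whole map by the largest offset that removes overflow on one side without creating overflow on the other, then clip whatever still lies outside) is an assumption, as are the names `translate_transmission`, `lo`, and `hi`.

```python
def translate_transmission(t_values, lo=0.1, hi=0.95):
    """Shift a transmission map toward [lo, hi] before clipping (assumed rule)."""
    t_min, t_max = min(t_values), max(t_values)
    if t_max > hi and t_min > lo:
        # Overflow at the top, slack at the bottom: shift the map down.
        delta = -min(t_max - hi, t_min - lo)
    elif t_min < lo and t_max < hi:
        # Overflow at the bottom, slack at the top: shift the map up.
        delta = min(lo - t_min, hi - t_max)
    else:
        delta = 0.0
    # Values still outside the range after the shift are clipped.
    return [min(hi, max(lo, t + delta)) for t in t_values]

original = [0.4, 0.7, 1.0]
clipped = [min(0.95, max(0.1, t)) for t in original]
shifted = translate_transmission(original)
print(clipped, shifted)
```

In this example plain clipping reduces the difference between the two largest values from 0.30 to 0.25, whereas the shifted map keeps it at 0.30, which is the sense in which a translation preserves contrast inside the map.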

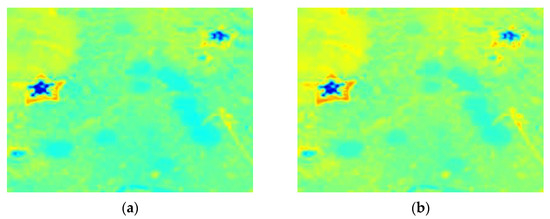

This transformation technique retains some transmission map information beyond the effective range while maintaining the contrast of the original transmission map. The transmission maps before and after the transformation are compared in Figure 4a,b. Their entropy values are 5.5424 and 5.5433, respectively, indicating that the transformed transmission map retains slightly more information.

Figure 4.

(a) Transmission map before transfer; (b) Transmission map after transfer.

4.2. Background Light of Blue and Green Channels Estimation

As mentioned above, it is not appropriate to use one or the mean value of a few pixels in the image to represent the background light in an overhead image because there is no point far enough in the image that can be reasonably approximated as background light. To overcome this limitation, this paper calculates the value of the background light through inference rather than relying on any specific pixel value in the image. The value of the background light is inferred from the desired image result, which provides a more accurate representation of the background light.

Firstly, motivated by Li et al. [30], who determined the transmission map by minimizing information loss, this paper aims to find the background light by minimizing the information loss of the image. To ensure that the grayscale value of the restored image is between 0 and 1, any values outside this range are directly assigned to 0 or 1. If there are too many pixels outside the grayscale range, large black or white areas will appear in the image, leading to information loss from the original image.

To solve this problem, the existing approach is to use the stretching function.

where V represents the grayscale range before stretching and v represents the grayscale value involved in the calculation.

The grayscale difference between the two pixels can be expressed as

where represents the difference between the two pixels before stretching, and represents the difference between the two pixels after stretching.

While the method is effective when the difference between the maximum and minimum grayscale values is less than 1, it is unsuitable for cases where this difference exceeds 1. In such scenarios, the method compresses the original grayscale values, resulting in decreased contrast between pixels. This can lead to loss of important information and reduced overall image quality. Therefore, compressing the contrast of the entire image to retain a few bright or dark pixels that contain minimal information is not a reasonable approach.

When the grayscale difference is greater than 1, the method described above would either compress the contrast or directly clip the values that fall below 0 or above 1. To limit information loss while maintaining the contrast of the image, this paper instead minimizes the number of pixels that fall outside the grayscale range, so that as few pixels as possible are clipped.

where M and N represent the length and width of the image and is the number of pixels whose grayscale values fall between 0 and 1 in the image recovered by taking the background light .
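
The information-loss term can be illustrated with a short sketch. It assumes that the loss is the fraction of restored pixels falling outside [0, 1], that is, one minus the in-range count divided by M × N, and it restores each pixel with the inverse of the simplified imaging model. The function names and the flat-list image representation are assumptions made for the example.

```python
def restore_channel(observed, transmission, b):
    """Invert I = J*t + B*(1 - t) pixel by pixel for a candidate B."""
    return [(i - b) / t + b for i, t in zip(observed, transmission)]

def information_loss(observed, transmission, b):
    """Fraction of restored pixels that would be clipped to 0 or 1."""
    restored = restore_channel(observed, transmission, b)
    in_range = sum(1 for v in restored if 0.0 <= v <= 1.0)
    return 1.0 - in_range / len(restored)

observed = [0.3, 0.5, 0.7, 0.95]          # one channel, flattened
transmission = [0.5, 0.5, 0.5, 0.5]
for b in (0.5, 0.7):
    print(b, information_loss(observed, transmission, b))
```

For this toy channel, a candidate background light of 0.5 pushes one of four pixels out of range, while 0.7 pushes out two, so the first candidate is preferred by this term.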

In addition, Li et al. [30] observed that the histograms of clear, fog-free images are distributed relatively uniformly without sharp points, based on their analysis of image datasets of five natural scenes. However, the histograms of underwater images are typically concentrated in a small range. In light of this, the present study aims to produce restored images with the most balanced grayscale distribution. This is achieved by minimizing the variance of the histogram of the restored image, which can be expressed as

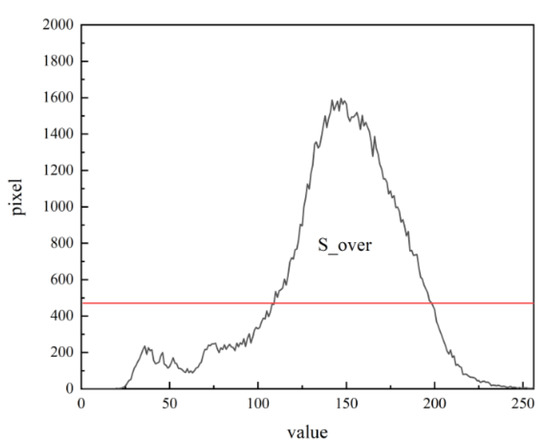

where is the area of the histogram of the recovered images over the desired histogram (the desired histogram is the most uniform distribution of image grayscale, with the same number of pixels falling on each gray value as (M × N)/255), indicating the degree of unevenness of the histogram distribution, as shown in Figure 5.

Figure 5.

The area of .
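
The histogram term can be sketched as the total count that rises above the uniform level. This is one reading of the area shown in Figure 5 and is an assumption; the sketch also uses `len(values) / bins` as the uniform level, with `bins` left as a parameter, rather than fixing the divisor. The name `histogram_excess` is hypothetical.

```python
def histogram_excess(values, bins=256):
    """Total histogram count lying above the uniform level len(values)/bins."""
    counts = [0] * bins
    for v in values:
        v = min(1.0, max(0.0, v))                   # clip to [0, 1]
        counts[min(bins - 1, int(v * bins))] += 1   # bin index of this value
    uniform = len(values) / bins
    return sum(c - uniform for c in counts if c > uniform)

concentrated = [0.1, 0.1, 0.1, 0.9]
spread_out = [0.1, 0.3, 0.6, 0.9]
print(histogram_excess(concentrated, bins=4), histogram_excess(spread_out, bins=4))
```

A histogram concentrated in a few bins yields a large excess, whereas a perfectly even histogram yields zero, so minimizing this quantity favors background light values that spread the restored gray levels.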

In summary, the loss function for obtaining the background light values in the range [0, 1] is defined as follows:

Then the background light of the blue and green channels is

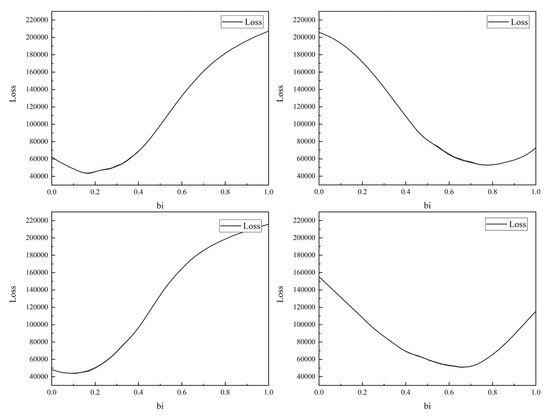

Through several experiments, it is found that the loss function is generally unimodal (convex-like), i.e., it first decreases and then increases as the background light increases, as shown in Figure 6. Therefore, borrowing from loss function optimization in deep learning, the background light that minimizes the loss function can be found by gradient descent, as shown in Equation (17), instead of evaluating the loss at every candidate value in the range of the background light. The minimizer is obtained after several steps.

where is the learning rate.

Figure 6.

The shape of the loss function.
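
A one-dimensional gradient descent over the background light can be sketched as follows. The sketch uses a central finite difference for the gradient and a smooth toy loss with its minimum at 0.3; the step size, the difference width `eps`, the starting point, and the toy loss itself are all assumptions. A loss built from pixel counts is piecewise constant, so in practice `eps` would need to be wide enough for the difference to be non-zero.

```python
def minimize_1d(loss, b0=0.5, lr=0.1, eps=1e-3, steps=200):
    """Gradient descent on a scalar loss, keeping the estimate inside [0, 1]."""
    b = b0
    for _ in range(steps):
        grad = (loss(b + eps) - loss(b - eps)) / (2 * eps)  # central difference
        b = min(1.0, max(0.0, b - lr * grad))               # update and clamp
    return b

def toy_loss(b):
    """Smooth, decreasing-then-increasing stand-in for the real loss."""
    return (b - 0.3) ** 2 + 0.1

print(round(minimize_1d(toy_loss), 6))
```

Compared with evaluating the loss on a dense grid of candidate values, this approach needs only two loss evaluations per step, which matters because each evaluation requires restoring the image for a candidate background light.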

4.3. Background Light of Red Channel Estimation

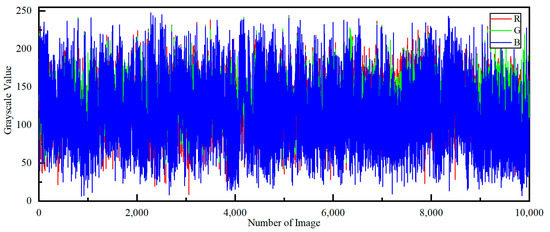

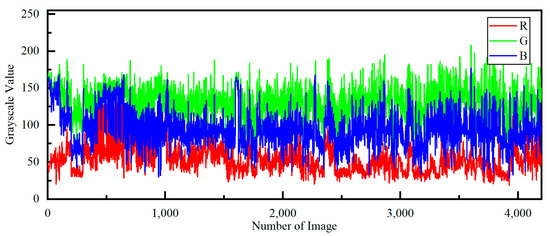

Due to the red channel’s limited information, it is challenging to accurately determine the background light using only this channel. Therefore, this paper infers the red channel background light by leveraging information from the recovered blue-green channels. The study analyzes the mean values of the red, green, and blue channels of both natural and underwater image datasets, as illustrated in Figure 7 and Figure 8. The details of the datasets are as follows:

Figure 7.

The mean values of the red, green, and blue channels of the natural image dataset.

Figure 8.

The mean values of the red, green, and blue channels of the underwater image dataset.

- The Caltech-UCSD Birds-200-2011 Dataset (http://www.vision.caltech.edu/datasets/cub_200_2011/, accessed on 20 March 2023) [31]. This is a natural image dataset for bird image classification, which includes 11,788 images covering 200 bird species.

- CBCL Street Scenes Dataset (http://cbcl.mit.edu/software-datasets/streetscenes/, accessed on 20 March 2023) [32]. This is a dataset of street scene images captured by a DSC-F717 camera from Boston and its surrounding areas in Massachusetts, belonging to the category of natural image datasets, with a total of 3547 images.

- Real World Underwater Image Enhancement dataset (https://github.com/dlut-dimt/Realworld-Underwater-Image-Enhancement-RUIE-Benchmark, accessed on 20 March 2023) [33]. This underwater image dataset was collected from a real ocean environment testing platform consisting of 4231 images. The dataset is characterized by its large data size, diverse degree of light scattering effects, rich color tones, and abundant detection targets.

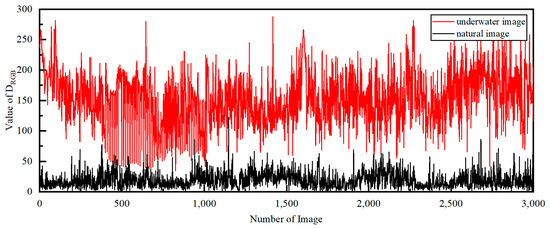

The natural images exhibit similar three-channel mean values, whereas the underwater images demonstrate a clear demarcation. To further investigate this difference, Equation (18) calculates the sum of the differences between the three-channel means, and Figure 9 displays the results. The analysis shows that the values for underwater images are significantly larger than those for natural images.

where , , and are the mean values of the red, green, and blue channels of the observed image.

Figure 9.

The values of the underwater and natural images.
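
The channel-mean statistic can be illustrated with the sketch below. It assumes that the sum of differences is taken over the absolute pairwise differences of the three channel means; the function names and the sample values are hypothetical and serve only to show the contrast between a balanced and a blue-green image.

```python
def mean(values):
    return sum(values) / len(values)

def channel_mean_spread(red, green, blue):
    """Sum of absolute pairwise differences between the three channel means."""
    r, g, b = mean(red), mean(green), mean(blue)
    return abs(r - g) + abs(g - b) + abs(r - b)

# Hypothetical flattened channels: a balanced image and a blue-green one.
natural = channel_mean_spread([0.5, 0.6], [0.5, 0.6], [0.55, 0.55])
underwater = channel_mean_spread([0.1, 0.1], [0.5, 0.5], [0.4, 0.4])
print(round(natural, 3), round(underwater, 3))
```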

Therefore, this paper aims to minimize the values. Specifically, the gray mean value of the blue and green channels is utilized as the gray mean value of the red channel to derive the red channel background light. This approach allows for correcting the color shift problem in underwater images without additional image enhancement operations.

where , , and are the mean values of the red, green, and blue channels of the restored image, is the mean value of the transmission map.

In some cases, there may be a significant difference between the mean value of the blue channel and the mean value of the green channel , leading to a color bias towards red in the resulting image. To address this issue, this paper proposes a solution where the background light of the channel with the higher mean value is kept, and its recovery map is used to estimate the background light of the remaining two channels. For instance, if the mean value of the green channel is larger, the background light of the other two channels can be estimated as follows:

The recovered image is obtained by bringing the transmission map and background light into Equation (6).
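
The red-channel step can be sketched under two stated assumptions: the target red mean is the average of the restored green and blue means, and the mean of the product of the restored image and the transmission map is approximated by the product of their means. Taking the mean of the simplified imaging model then gives the background light as (mean of the observed red channel minus target mean times mean transmission) divided by (1 minus mean transmission). The names below are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)

def red_background_light(red_observed, t_map, green_restored, blue_restored):
    """Solve mean(I_r) = target * mean(t) + B_r * (1 - mean(t)) for B_r."""
    target = 0.5 * (mean(green_restored) + mean(blue_restored))
    i_bar, t_bar = mean(red_observed), mean(t_map)
    b_red = (i_bar - target * t_bar) / (1.0 - t_bar)   # requires mean(t) < 1
    return min(1.0, max(0.0, b_red))                   # keep B_r in [0, 1]

b_red = red_background_light(
    red_observed=[0.3, 0.3],
    t_map=[0.5, 0.5],
    green_restored=[0.3, 0.5],
    blue_restored=[0.4, 0.4],
)
print(round(b_red, 4))
```

In the example the restored green and blue means are both 0.4, and the resulting red background light of 0.2 is exactly the value for which the restored red mean also equals 0.4.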

4.4. Strategies to Speed Up

Considering the need for higher computational efficiency, this paper aims to improve the operation speed by addressing two key factors: the spatial resolution of images and the similarity of neighboring images.

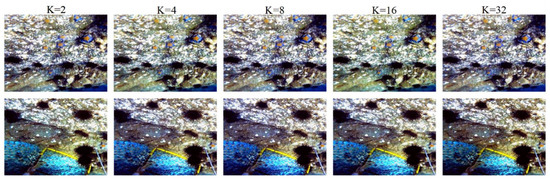

First, the proposed background light calculation method is based on the global consideration of the picture and is not sensitive to the size of the picture. As shown in Figure 10, the recovery effect of the picture is not affected too much after reducing the size of the picture to 1/K of the original one. Therefore, when applied in practice, the K value can be adjusted appropriately, weighing the imaging effect as well as the running speed.

Figure 10.

Results of image restoration using the background light calculated after reducing the image by a factor of K.

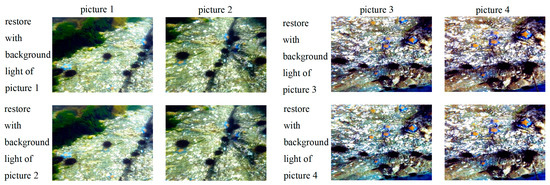

Second, in the continuous working state, the scene of several adjacent frames does not change much, and the background light values can be shared to further improve the running speed. As shown in Figure 11, the recovery images are almost the same after the neighboring images swap the background light values.

Figure 11.

The results of image restoration by sharing the background light between several adjacent frames.
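
Both acceleration strategies can be sketched together. The sketch assumes that the reduction by K is applied as a stride of K along each image axis (a crude stand-in for proper resizing) and that the background light is recomputed every fixed number of frames; the class name, the refresh interval, and the placeholder estimator `mean_intensity` are hypothetical and do not represent the loss-based estimator of Section 4.2.

```python
def subsample(image, k):
    """Keep every k-th pixel along both axes."""
    return [row[::k] for row in image[::k]]

class SharedBackgroundLight:
    """Re-estimate the background light only every `refresh_every` frames."""

    def __init__(self, estimator, k=4, refresh_every=5):
        self.estimator = estimator
        self.k = k
        self.refresh_every = refresh_every
        self._frames_seen = 0
        self._value = None

    def get(self, frame):
        if self._frames_seen % self.refresh_every == 0:
            # Estimate on the reduced image, then reuse for the next frames.
            self._value = self.estimator(subsample(frame, self.k))
        self._frames_seen += 1
        return self._value

def mean_intensity(image):
    """Placeholder estimator used only for this illustration."""
    pixels = [v for row in image for v in row]
    return sum(pixels) / len(pixels)

frames = [[[0.1 * (i + 1)] * 8 for _ in range(8)] for i in range(6)]
shared = SharedBackgroundLight(mean_intensity, k=2, refresh_every=3)
print([round(shared.get(f), 2) for f in frames])
```

The refresh interval trades speed against responsiveness to scene changes, in the same way that K trades speed against the fidelity of the estimate.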

5. Experimental Results and Discussion

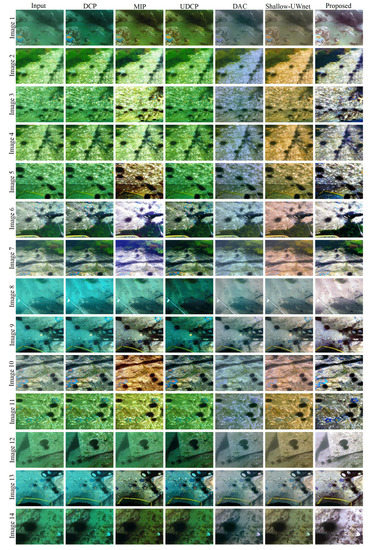

The proposed approach is compared with five existing techniques: the dark channel prior (DCP) method [5], underwater dark channel prior (UDCP) method [6], Carlevaris-Bianco’s (MIP) method [1], Differential Attenuation Compensation (DAC) method [2] and Shallow-UWnet method [34]. Underwater images were obtained from the Real World Underwater Image Enhancement dataset [33]. Qualitative and quantitative evaluations are carried out to assess the performance of different methods.

In this study, different types of images are selected from the dataset (images with a green tone, images with a blue tone, and images with less color bias), and the proposed method is compared with the other methods in restoring these real underwater images. The qualitative results for underwater overhead images are shown in Figure 12. The proposed method eliminates the effects of light absorption, corrects the blue-green color cast, and restores rich and vibrant colors that agree with how the scenes would be expected to look. In addition, the proposed method eliminates the effects of scattering and makes the images clearer. The other methods improve the underwater images to different extents but still leave room for improvement. Although the DCP and UDCP methods improve the clarity of the images, they do not correct the color bias. The MIP method successfully defogs the images but introduces an undesirable yellow color bias. The DAC algorithm overcomes the color bias problem, but its restored images are blurrier than those of the other methods, and the corrected colors are relatively dim. The Shallow-UWnet algorithm successfully corrects the color bias of blue-tone underwater images, but for images with a green tone or less color bias, the restored images are over-corrected, resulting in additional yellow or red color distortion. The proposed method takes into account the fact that every point in an underwater overhead image is near the camera: it does not use a distance-based background light solution but instead chooses the best global solution for the background light. As a result, it produces more natural results with better color and detail than the other methods.

Figure 12.

Subjective evaluation results.

Table 1 shows the average processing time and hardware requirements for different algorithms to process one image, as well as their integrated development environments (IDE). The DCP method [5], UDCP method [6], MIP method [1], DAC method [2], and the proposed method run on the Windows 10 operating system, with 16 GB of memory and 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80 GHz (8 CPUs). The Shallow-UWnet method [34] runs on a Tesla T4 GPU. As shown in Table 1, among the algorithms running on the CPUs, the DCP algorithm has the fastest speed. The proposed method in this paper is slightly slower than MIP and UDCP. The Shallow-UWnet algorithm has good real-time performance but requires additional hardware resources.

Table 1.

The average processing time, hardware requirements, and IDE of each method.

To show the proposed method’s advantages quantitatively, comparisons are made with other restoration methods using three underwater quality evaluation metrics: the Underwater Color Image Quality Evaluation Metric (UCIQE) [35], the Underwater Image Quality Measure (UIQM) [36], and the entropy.

UCIQE is an objective evaluation expressed as a linear combination of chroma, saturation, and contrast:

$$\mathrm{UCIQE} = c_1 \sigma_c + c_2\, con_l + c_3 \mu_s$$

where $c_1$, $c_2$, and $c_3$ are the scale factors, set as in the original paper [35]; $\sigma_c$ is the standard deviation of chroma, $con_l$ is the contrast of brightness, and $\mu_s$ is the saturation average.

UIQM evaluates the quality of underwater images through a linear combination of its three components: sharpness measure, colorfulness measure, and contrast measure.

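$$\mathrm{UIQM} = c_1 \cdot \mathrm{UICM} + c_2 \cdot \mathrm{UISM} + c_3 \cdot \mathrm{UIConM}$$

where $c_1$, $c_2$, and $c_3$ are the scale factors, set as in the original paper [36]; UICM is the colorfulness measure, UISM is the sharpness measure, and UIConM is the contrast measure.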

The entropy of the system reflects the degree of chaos, and the higher the entropy, the more information the image contains, which means the clearer the image.
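
For reference, the entropy of a grayscale image is commonly computed as the Shannon entropy of its gray-level histogram. The sketch below assumes 8-bit integer gray levels and a base-2 logarithm, so the result is in bits; the function name is hypothetical, and the paper does not state which logarithm base it uses.

```python
import math

def image_entropy(gray_levels, bins=256):
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit image."""
    counts = [0] * bins
    for g in gray_levels:
        counts[g] += 1
    n = len(gray_levels)
    # Empty bins contribute nothing, so they are skipped.
    return -sum((c / n) * math.log2(c / n) for c in counts if c)

print(image_entropy([0, 0, 255, 255]), image_entropy([0, 85, 170, 255]))
```

An image that uses only two gray levels equally often has an entropy of 1 bit, and one that uses four levels equally often has 2 bits, which matches the intuition that a wider, more even spread of gray levels carries more information.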

Table 2 and Table 3 report the UCIQE and UIQM scores of images shown in Figure 12. The scores in bold denote the best results. The proposed method has stronger robustness and optimal overall performance in various scenarios, as indicated by its maximum average value. While the UCIQE and UIQM scores for some of the images processed by the proposed method were slightly lower than those of other methods, these methods exhibited larger fluctuations, with high scores in some images and low scores in others. In contrast, the proposed method demonstrates a more stable restoration performance for various types of images. However, it should be noted that some of the restoration images with higher scores were too focused on enhancing contrast, neglecting color correction.

Table 2.

UCIQE scores * of images shown in Figure 12.

Table 3.

UIQM scores * of images shown in Figure 12.

To further demonstrate the superiority of the proposed method in color correction, the colorfulness measures in the UCIQE and UIQM scores (the chroma standard deviation $\sigma_c$ and UICM) are additionally listed in Table 4 and Table 5. The results show that the proposed method achieved the highest or near-highest scores in both evaluation metrics, indicating that the algorithm can effectively improve the color level of the restored images. This is because the balance of the histogram distribution was considered when constructing the loss function, and the value of the background light was obtained by minimizing this loss function. Therefore, the calculated background light can make the restored images more colorful and vivid.

Table 4.

The colorfulness measure * in UCIQE scores of images shown in Figure 12.

Table 6 gives the entropy values of the images shown in Figure 12. The images restored by the proposed method carry more information. This is because the translation function used in this study preserved more of the information in the transmission map, and the loss of information was considered when constructing the loss function. As a result, the calculated background light allows the restored image to retain more information.

Table 6.

Entropy * values of images shown in Figure 12.

6. Conclusions

In this paper, a new image restoration method has been proposed for overhead images taken by UUVs performing tasks such as underwater pipeline tracking, underwater mine clearance, underwater terrain detection, and seafood fishing. First, a distance-independent background light calculation method has been proposed for overhead images, in which all points are close to the camera; this differs from previous methods, which use the most distant point in the image to approximate the background light. Next, an optimization function based on the overall information loss and the uniformity of the histogram distribution has been used to calculate the background light values of the blue and green channels. Then, based on statistical results, the mean value of the red channel has been determined by minimizing the sum of the differences between the mean values of the three channels, and this mean has been used to invert the background light value of the red channel. Moreover, a translation function has been used to keep the values of the transmission map between 0.1 and 0.95, which retains the information carried by the transmission map. Finally, to meet real-time requirements, two strategies have been proposed to speed up processing, based on the spatial resolution of the image and the similarity of adjacent frames. The experimental results show that the proposed method is robust across various underwater environments and can effectively correct the blue-green color cast in underwater images while preserving more information. Importantly, the method runs on a CPU without the need for additional hardware resources. The average processing time per frame is 0.3345 s (about 3 frames per second), demonstrating good real-time performance.
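To illustrate why mapping the transmission values into the range [0.1, 0.95] can retain more information than simply clipping them, a small Python sketch is given below. It is not the translation function of this paper, whose exact form is not restated in this section; a linear min-max mapping is assumed purely for illustration, and both function names are hypothetical.

```python
def rescale_transmission(t, t_min=0.1, t_max=0.95):
    """Linearly map transmission values onto [t_min, t_max] (assumed form, for illustration)."""
    lo, hi = min(t), max(t)
    if hi == lo:
        # A flat map carries no variation; place it at the middle of the target range.
        return [(t_min + t_max) / 2.0] * len(t)
    scale = (t_max - t_min) / (hi - lo)
    return [t_min + (v - lo) * scale for v in t]


def clip_transmission(t, t_min=0.1, t_max=0.95):
    """Hard clipping to [t_min, t_max]; distinct values outside the range collapse."""
    return [min(max(v, t_min), t_max) for v in t]


raw = [0.0, 0.05, 0.5, 1.0]           # hypothetical flattened transmission map
mapped = rescale_transmission(raw)     # all four values remain distinct
clipped = clip_transmission(raw)       # 0.0 and 0.05 both become 0.1
```

In this toy example, clipping merges the two smallest transmission values, whereas the range mapping keeps their ordering and relative spacing, which is the sense in which the information carried by the transmission map is retained.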

However, the proposed method still has some limitations. The method focuses on color restoration and information preservation without taking measures to enhance contrast. In future work, efforts will be made to enhance the contrast of underwater images to make image details clearer.

Author Contributions

Conceptualization, Y.W., A.Y. and S.Z.; methodology, A.Y.; software, A.Y.; validation, Y.W., A.Y. and S.Z.; formal analysis, A.Y.; investigation, Y.W.; resources, S.Z.; data curation, A.Y.; writing—original draft preparation, A.Y.; writing—review and editing, Y.W.; visualization, A.Y.; supervision, Y.W. and S.Z.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code of the proposed method is available at GitHub: https://github.com/YADyuaidi/underwater, accessed on 4 May 2023.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Carlevaris-Bianco, N.; Mohan, A.; Eustice, R.M. Initial results in underwater single image dehazing. In Proceedings of the OCEANS 2010 MTS/IEEE Seattle, Seattle, WA, USA, 20–23 September 2010; pp. 1–8.

- Lai, Y.; Zhou, Z.; Su, B.; Zhe, X.; Tang, J.; Yan, J.; Liang, W.; Chen, J. Single underwater image enhancement based on differential attenuation compensation. Front. Mar. Sci. 2022, 9, 1047053.

- McGlamery, B. A computer model for underwater camera systems. In Proceedings of the Ocean Optics VI; SPIE: Bellingham, WA, USA, 1980; pp. 221–231.

- Jaffe, J.S. Computer modeling and the design of optimal underwater imaging systems. IEEE J. Ocean. Eng. 1990, 15, 101–111.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Drews, P., Jr.; do Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission Estimation in Underwater Single Images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 825–830.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In Proceedings of the Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; pp. 678–688.

- Li, C.; Quo, J.; Pang, Y.; Chen, S.; Wang, J. Single underwater image restoration by blue-green channels dehazing and red channel correction. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1731–1735.

- Liang, Z.; Wang, Y.; Ding, X.; Mi, Z.; Fu, X. Single underwater image enhancement by attenuation map guided color correction and detail preserved dehazing. Neurocomputing 2021, 425, 160–172.

- Ding, X.; Liang, Z.; Wang, Y.; Fu, X. Depth-aware total variation regularization for underwater image dehazing. Signal Process. Image Commun. 2021, 98, 116408.

- Emberton, S.; Chittka, L.; Cavallaro, A. Underwater image and video dehazing with pure haze region segmentation. Comput. Vis. Image Underst. 2018, 168, 145–156.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Sbetr, M. Color Channel Transfer for Image Dehazing. IEEE Signal Process. Lett. 2019, 26, 1413–1417.

- Li, T.; Wang, J.; Yao, K. Visibility enhancement of underwater images based on active polarized illumination and average filtering technology. Alex. Eng. J. 2022, 61, 701–708.

- Dai, C.; Lin, M.; Wu, X.; Wang, Z.; Guan, Z. Single underwater image restoration by decomposing curves of attenuating color. Opt. Laser Technol. 2020, 123, 105947.

- Peng, Y.-T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Ke, K.; Zhang, C.; Wang, Y.; Zhang, Y.; Yao, B. Single underwater image restoration based on color correction and optimized transmission map estimation. Meas. Sci. Technol. 2023, 34, 055408.

- Wang, X.; Tao, C.; Zheng, Z. Occlusion-aware light field depth estimation with view attention. Opt. Lasers Eng. 2023, 160, 107299.

- Zhan, F.; Yu, Y.; Zhang, C.; Wu, R.; Hu, W.; Lu, S.; Ma, F.; Xie, X.; Shao, L. GMLight: Lighting estimation via geometric distribution approximation. IEEE Trans. Image Process. 2022, 31, 2268–2278.

- Wang, Y.; Yu, X.; An, D.; Wei, Y. Underwater image enhancement and marine snow removal for fishery based on integrated dual-channel neural network. Comput. Electron. Agric. 2021, 186, 106182.

- Wang, K.; Shen, L.; Lin, Y.; Li, M.; Zhao, Q. Joint Iterative Color Correction and Dehazing for Underwater Image Enhancement. IEEE Robot. Autom. Lett. 2021, 6, 5121–5128.

- Zong, X.; Chen, Z.; Wang, D. Local-CycleGAN: A general end-to-end network for visual enhancement in complex deep-water environment. Appl. Intell. 2021, 51, 1947–1958.

- Zhu, S.; Luo, W.; Duan, S. Enhancement of Underwater Images by CNN-Based Color Balance and Dehazing. Electronics 2022, 11, 2537.

- Hong, L.; Wang, X.; Xiao, Z.; Zhang, G.; Liu, J. WSUIE: Weakly Supervised Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2021, 6, 8237–8244.

- Gui, X.; Zhang, R.; Cheng, H.; Tian, L.; Chu, J. Multi-Turbidity Underwater Image Restoration Based on Neural Network and Polarization Imaging. Laser Optoelectron. Prog. 2022, 59, 0410001.

- Tang, Z.; Li, J.; Huang, J.; Wang, Z.; Luo, Z. Multi-scale convolution underwater image restoration network. Mach. Vis. Appl. 2022, 33, 85.

- Zhang, W.; Liu, W.; Li, L.; Jiao, H.; Li, Y.; Guo, L.; Xu, J. A framework for the efficient enhancement of non-uniform illumination underwater image using convolution neural network. Comput. Graph. 2023, 112, 60–71.

- Han, J.; Shoeiby, M.; Malthus, T.; Botha, E.; Anstee, J.; Anwar, S.; Wei, R.; Armin, M.A.; Li, H.; Petersson, L. Underwater Image Restoration via Contrastive Learning and a Real-World Dataset. Remote Sens. 2022, 14, 4297.

- Jamil, S.; Piran, M.J.; Rahman, M.; Kwon, O.-J. Learning-driven lossy image compression: A comprehensive survey. Eng. Appl. Artif. Intell. 2023, 123, 106361.

- Han, M.; Lyu, Z.; Qiu, T.; Xu, M. A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 1820–1832.

- Li, C.-Y.; Guo, J.-C.; Cong, R.-M.; Pang, Y.-W.; Wang, B. Underwater Image Enhancement by Dehazing With Minimum Information Loss and Histogram Distribution Prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Welinder, P.; Branson, S.; Mita, T.; Wah, C.; Schroff, F.; Belongie, S.; Perona, P. Caltech-UCSD birds 200. In Computation & Neural Systems Technical Report, 2010–001; California Institute of Technology: Pasadena, CA, USA, 2010.

- Korc, F.; Förstner, W. eTRIMS Image Database for Interpreting Images of Man-Made Scenes; Tech. Rep. TR-IGG-P-01; University of Bonn: Bonn, Germany, 2009.

- Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-World Underwater Enhancement: Challenges, Benchmarks, and Solutions Under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

- Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed Model for Underwater Image Enhancement (Student Abstract). In Proceedings of the 35th AAAI Conference on Artificial Intelligence/33rd Conference on Innovative Applications of Artificial Intelligence/11th Symposium on Educational Advances in Artificial Intelligence, Virtual, 2–9 February 2021; pp. 15853–15854.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).