Abstract

Approximately 24% of the global land area is mountainous, and about 10% of the world’s population relies on these areas for cultivated land. Accurate statistics and monitoring of cultivated land in mountainous regions are crucial for ensuring food security, formulating scientific land use policies, and protecting the ecological environment. However, the fragmented nature of cultivated land in these complex terrains limits the effectiveness of existing extraction methods. To address this issue, this study proposes a cascaded network based on an improved semantic segmentation model (DeepLabV3+), called Cascade DeepLab Net, designed to accurately extract fragmented cultivated land from remote sensing images. The model improves extraction accuracy in complex terrains by incorporating the Style-based Recalibration Module (SRM), Spatial Attention Module (SAM), and Refinement Module (RM). Experimental results on high-resolution satellite images of mountainous areas in southern China show that the improved model achieved an overall accuracy (OA) of 92.33% and an Intersection over Union (IoU) of 82.51%, a significant improvement over models such as U-shaped Network (UNet), Pyramid Scene Parsing Network (PSPNet), and DeepLabV3+. This method enhances the efficiency and accuracy of monitoring cultivated land in mountainous areas and offers a scientific basis for policy formulation and resource management, aiding ecological protection and sustainable development. Additionally, this study presents new ideas and methods for future cultivated land monitoring in other complex terrain regions.

1. Introduction

Cultivated land is essential for human survival, providing the foundation for food production and playing a critical role in maintaining ecological balance and promoting sustainable development [1,2]. However, rapid global urbanization and industrialization have exacerbated the loss of cultivated land due to desertification, urban expansion, and over-exploitation [3,4]. This threatens food security and impacts biodiversity, water resources, and climate stability [5,6]. These problems are particularly serious in mountainous regions. Mountainous regions cover about 24% of the Earth’s land area, supporting approximately 10% of the global population. Despite challenges like steep topography, soil erosion, and climate change, cultivated land in these regions is crucial for agricultural production [7]. Therefore, developing efficient and accurate monitoring methods for cultivated land in these areas is of significant practical importance.

Unlike flat plains with regular agricultural plots, cultivated land in mountainous areas is often interspersed with forests, shrubs, and rocks, resulting in highly fragmented and irregularly shaped plots. This complex topography and diverse ecological environment make it difficult to apply traditional land survey and remote sensing methods.

Traditional surveying methods typically rely on manual fieldwork, which is time-consuming, labor-intensive, and prone to human error, limiting the ability to collect large-scale, accurate data quickly [8]. For example, China’s first national land survey, covering an area of about 9.6 million km², took over ten years to complete, from 1984 to 1997. In contrast, the second survey, which utilized remote sensing technology, took just two years (2007–2009) [9]. While remote sensing has significantly improved upon manual methods, challenges remain, particularly when dealing with the fragmented landscapes and irregularly shaped plots in mountainous regions. Traditional pixel-based or object-based classification methods often fail in such areas. Pixel-based methods overlook the spatial context, leading to issues such as “same object, different spectrum” or “different object, same spectrum” [10], while object-based methods depend on manually set parameters, which are not well suited for the diverse and variable scale of cultivated land in mountainous areas [11]. Thus, a method that can accurately extract fragmented cultivated land without excessive reliance on manual feature selection remains an urgent need.

Recent advances in deep learning have greatly enhanced remote sensing image analysis, especially for land cover mapping [12,13], disaster monitoring [14,15], and change detection [16,17]. Deep learning techniques, particularly convolutional neural networks (CNNs) [18], have become a leading approach for automated cultivated land extraction [19]. CNNs can automatically learn and extract multi-layer image features, such as edges, textures, and spectral properties, often providing more discriminative results than manually designed features. These methods are highly adaptable, adjusting to task-specific and data-specific needs, making them particularly effective for cultivated land extraction in diverse remote sensing datasets. Currently, there are many classic CNN models, such as FCN [20], UNet [21], ResNet [22], and the DeepLab series [23]. Additionally, related studies have successfully applied these classic CNN models to extract cultivated land from remote sensing images, achieving certain results [8,19].

However, existing generic computer vision models are not specifically designed for satellite imagery, and applying them directly to the task of cultivated land extraction from satellite images often results in poor extraction outcomes, such as edge misclassification, holes, and noise [24]. These problems are particularly severe in mountainous regions, where cultivated land is irregular and fragmented. Current solutions to address these issues generally fall into two categories: one involves post-processing techniques (such as dilation, erosion, and smoothing) to optimize the morphology and address the issue of holes [25]; the other involves multi-task parallel network frameworks, where the main network is responsible for identifying cultivated land, and branch networks are used for edge detection, with edge features fused with cultivated land features to guide the extraction [26,27]. While these methods have been successful in flat terrain, they still face challenges in complex mountainous environments. Post-processing methods lack dynamic adaptability and rely heavily on prior knowledge, while edge-guided extraction methods depend on edge accuracy, which is difficult to achieve in mountainous regions due to the complex land morphology and fuzzy edges.

To address the challenges of cultivated land extraction in complex mountainous areas, this study proposes a novel cascaded deep learning network model—Cascade DeepLab Net. This model abandons post-processing methods and strategies relying on cultivated land edge prediction. Instead, it adopts a cascaded refinement approach, progressively improving the accuracy of cultivated land extraction by combining the predicted results and surrounding features on the basis of coarse prediction results, ultimately achieving precise extraction. The Cascade DeepLab Net model innovatively integrates a style recalibration module (SRM) and a Spatial Attention Module (SAM) into the DeepLabV3+ framework, thereby enhancing the overall cultivated land recognition accuracy. Furthermore, the model incorporates a Refinement Module (RM) for further optimization of the detailed morphology of the results. This module, based on coarse prediction, can refine the output by combining surrounding features and existing cultivated land extraction results, without the need for manual parameter settings or reliance on cultivated land edge information. To a certain extent, this approach addresses the limitations of existing methods in complex mountainous environments. This paper details the design, specific implementation, and experimental evaluation of the model, demonstrating its significant advantages in fragmented cultivated land environments in mountainous areas.

2. Materials and Methods

2.1. Study Area

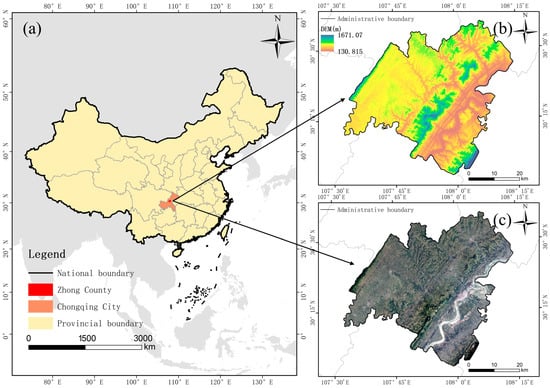

This study focuses on Zhong County in Chongqing Municipality, China. Located in the eastern part of Chongqing and the middle and upper reaches of the Yangtze River, the study area’s coordinates are 107°30′ to 108°06′ E and 30°03′ to 30°33′ N. The region experiences a warm, humid, mountainous climate in the subtropical southeastern monsoon zone, ideal for agriculture (Figure 1). The terrain consists mainly of hills and low mountains, with significant elevation variations averaging between 200 and 800 m. Cultivated land is primarily found in river valleys, flat areas, and gentle slopes, characterized by fragmentation and irregular shapes typical of mountainous regions. This complex terrain and fragmented land distribution present challenges for land identification but also provide an excellent platform to apply deep learning techniques in remote sensing for cultivated land identification.

Figure 1.

Geographical location maps of the study area: (a) the location of the study area in China’s administrative zoning map; (b) digital elevation model (DEM) of the study area; (c) remote sensing image of the study area.

2.2. Data and Sample Construction

Due to the high cost of acquiring sub-meter multispectral remote sensing imagery, this study opted to use high-resolution imagery from Google Earth to minimize data acquisition expenses. Google Earth offers free, publicly accessible high-resolution remote sensing imagery for most of the world, sourced from various platforms, including the Landsat and Sentinel series, the commercial QuickBird satellite, and aerial data [28]. Imagery in densely populated cities or critical regions is updated frequently, typically every one to three years, while updates in remote areas occur less often, depending on the data source and satellite orbit. For instance, Landsat images are updated every 16 days, while Sentinel-2 provides updates every five days. Commercial satellites offer even more frequent updates, with near-real-time, high-resolution imagery. Given that much of the cultivated land in southwest China is in populated areas, the high resolution and update frequency of the images in this region align well with the needs of this study.

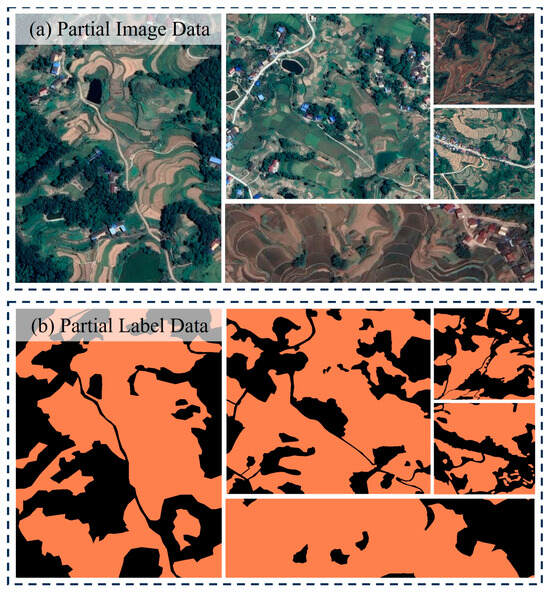

The satellite images of the study area were obtained from Google Earth level 18 imagery, which provides three RGB bands at a spatial resolution of about 0.54 m, suitable for cultivated land extraction. The data were acquired with the open-source geographic information system QGIS 3.26 (available from https://www.qgis.org/download/, accessed on 1 January 2025), and the satellite images were loaded and exported through the QuickMapServices plugin (available from https://github.com/nextgis/quickmapservices, accessed on 1 January 2025). Because the area is frequently cloudy and rainy, the images were composited from multiple acquisitions between August 2021 and August 2022. After labeling and cleaning, the dataset comprised 40 sheets, each 1000 × 1000 pixels in size. Given hardware limitations, data compression and cropping were necessary. We cropped the data into 256 × 256 pixel tiles with an overlap rate of 0.3. To ensure sample quality and to balance positive and negative samples, we used pixel histograms to eliminate tiles with over 80% background or 100% cultivated land. To maintain sample diversity, we applied data augmentation techniques such as flipping, random rotation, and random color jittering. Ultimately, we obtained 23,251 sample tiles, with 80% used for training and 20% for validation. Some samples are shown in Figure 2.

Figure 2.

Display of some samples.
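The cropping and filtering steps described above can be illustrated with a minimal sketch. It is not the preprocessing code used in this study: the function names `tile_origins` and `keep_tile` are hypothetical, the stride is assumed to be the tile size times (1 − overlap) rounded down, and the final tile is assumed to be placed flush with the sheet border.

```python
from typing import List, Sequence


def tile_origins(size: int, tile: int = 256, overlap: float = 0.3) -> List[int]:
    """Top-left offsets along one axis for overlapping tiles (hypothetical helper)."""
    if size < tile:
        raise ValueError("sheet is smaller than one tile")
    # Assumption: stride = tile * (1 - overlap), rounded down (256 * 0.7 -> 179 px).
    stride = max(1, int(tile * (1.0 - overlap)))
    origins = list(range(0, size - tile + 1, stride))
    # Assumption: add a last tile flush with the border so no pixels are dropped.
    if origins[-1] != size - tile:
        origins.append(size - tile)
    return origins


def keep_tile(mask: Sequence[Sequence[int]], max_background: float = 0.8) -> bool:
    """Keep a tile unless it is >80% background or 100% cultivated land.

    `mask` is a binary label tile: 1 = cultivated land, 0 = background.
    """
    total = sum(len(row) for row in mask)
    cultivated = sum(sum(row) for row in mask)
    background = total - cultivated
    return background <= max_background * total and cultivated < total


if __name__ == "__main__":
    offsets = tile_origins(1000)           # offsets along one axis of a 1000 px sheet
    print(offsets, len(offsets) ** 2)      # tiles per sheet before filtering
```

Under these assumptions, each 1000 × 1000 sheet yields six offsets per axis; the number of samples reported above additionally reflects filtering and augmentation, which this sketch does not reproduce.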

2.3. Methodology

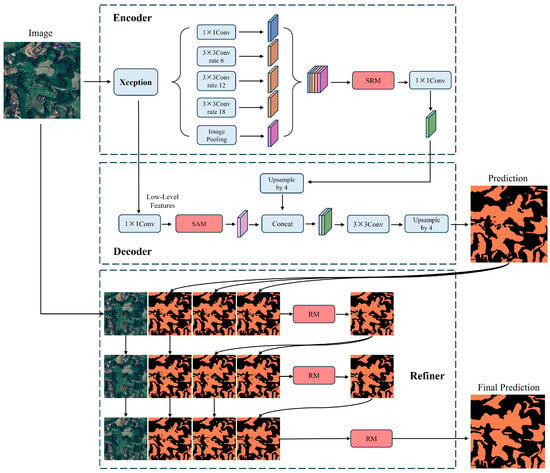

This study proposes the Cascade DeepLab Net to enhance the accuracy of cultivated land extraction. The framework is based on Google’s DeepLabV3+ model, known for its efficient multi-scale contextual information capture and strong performance. Its encoder–decoder architecture is well suited to remote sensing image processing tasks. The network structure comprises three main components: encoder, decoder, and refiner, as shown in Figure 3.

Figure 3.

The structure of Cascade DeepLab Net.

First, the encoder uses Xception as the backbone for feature extraction, followed by the ASPP (Atrous Spatial Pyramid Pooling) module to capture richer multi-scale information for more detailed feature extraction. The SRM within the ASPP module enhances the network’s focus on, and integration of, multi-scale information. Second, the decoder module fuses high-level and low-level features and introduces a Spatial Attention Module, allowing the model to concentrate on important features such as the location and texture of cultivated land. The initial mask output is then generated by the classification head.

To further optimize the segmentation results, we introduce the RM, proposed by Cheng et al. at CVPR 2020 [32], for refining segmentation outcomes. The initial mask output is combined with the remote sensing image as input, and the input channel size is kept constant by copying the input mask. At the first cascade level, the RM refines the segmentation; one input channel is then replaced by the linearly upsampled output, and this process is repeated until the final level. This design allows the network to incrementally correct segmentation errors while preserving initial details. The multi-stage processing effectively enhances the extracted morphology of the cultivated land. Additionally, a hybrid loss function supervises the extraction, improving the model accuracy and robustness by jointly supervising the segmentation results at different stages.

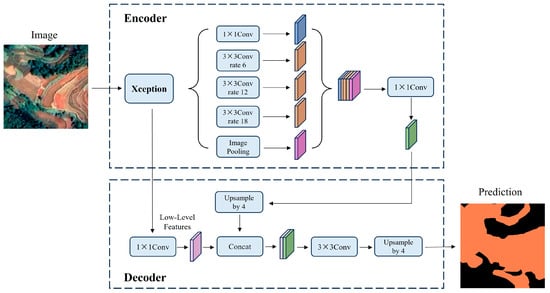

2.3.1. DeepLabV3+

DeepLabV3+ is an advanced semantic segmentation model developed by Google for precise image segmentation [29]. It enhances DeepLabV3 by incorporating an encoder–decoder structure to capture spatial and semantic information more effectively. The encoder uses a deep convolutional neural network for feature extraction and employs atrous convolution to capture multi-scale contextual information. The decoder achieves high-resolution predictions through stepwise upsampling and combining low-level features to accurately define target boundaries.

DeepLabV3+’s efficient semantic segmentation is particularly useful for extracting cultivated land from satellite or aerial images. It identifies and segments cultivated land boundaries using multi-scale feature extraction and atrous convolution while maintaining computational efficiency. The decoder’s fine upsampling ensures a precise depiction of cultivated land boundaries. Despite its strong performance, DeepLabV3+ has shortcomings in extracting details of cultivated land with fuzzy boundaries or complex shapes, leading to insufficient accuracy in some areas [11]. This study aims to address these limitations to enhance the model’s effectiveness in extracting fragmented cultivated land. The network structure is shown in Figure 4.

Figure 4.

The structure of the DeepLabV3+ network.
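To make the role of atrous convolution concrete, the following one-dimensional sketch shows how the dilation rate enlarges the receptive field without adding weights. It is an illustration only, not part of the network code: the function names and the example signal and kernel are assumptions chosen for clarity.

```python
from typing import List, Sequence


def effective_kernel_size(k: int, rate: int) -> int:
    """Span covered by a k-tap kernel whose taps are `rate` samples apart."""
    return k + (k - 1) * (rate - 1)


def atrous_conv1d(signal: Sequence[float], kernel: Sequence[float],
                  rate: int = 1) -> List[float]:
    """'Valid' 1-D atrous convolution (cross-correlation form, no padding)."""
    span = effective_kernel_size(len(kernel), rate)
    out = []
    for start in range(len(signal) - span + 1):
        # Each kernel tap reads the input `rate` samples after the previous tap.
        out.append(sum(w * signal[start + i * rate] for i, w in enumerate(kernel)))
    return out


if __name__ == "__main__":
    ramp = [1, 2, 3, 4, 5, 6, 7]
    diff = [1, 0, -1]                       # same three weights in both calls
    print(atrous_conv1d(ramp, diff, rate=1))
    print(atrous_conv1d(ramp, diff, rate=2))
    print(effective_kernel_size(3, 2))
```

With the same three weights, a rate of 2 compares samples four positions apart instead of two, which is why stacking several rates in parallel (as ASPP does) captures context at several scales at once.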

2.3.2. Style-Based Recalibration Module (SRM)

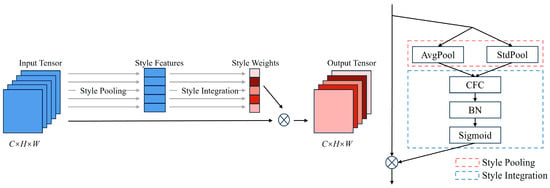

The Style-based Recalibration Module (SRM) is an advanced neural network technique that enhances the feature representation capabilities of convolutional neural networks (CNNs) [30]. The SRM improves the model’s discriminative power by recalibrating the response of each feature channel and integrating style information into the feature extraction process. Specifically, SRM introduces an adaptive mechanism to adjust channel weights based on input data features, including global average pooling, inter-channel correlations, and nonlinear activation functions. This dynamic adjustment of the feature map distribution allows SRM to capture and utilize critical information from input images more effectively, enhancing performance across various computer vision tasks. The module structure is shown in Figure 5.

Figure 5.

The structure of the Style-based Recalibration Module (SRM).

Cultivated land extraction requires accurately identifying and segmenting cultivated land areas from remote sensing images, necessitating high feature extraction and discriminative abilities from the model. With SRM, the model adaptively adjusts feature channel weights to enhance cultivated land-related feature representation while suppressing background and noise interference. SRM dynamically adjusts the feature map distribution through global average pooling, inter-channel correlations, and nonlinear activation functions, improving the aggregation and processing of multi-scale information. This adaptive calibration enhances feature representation flexibility and robustness, maintaining high accuracy in complex environments. Therefore, incorporating SRM significantly improves the effectiveness of cultivated land extraction.
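The channel recalibration idea can be sketched as follows. This is a simplified illustration rather than the module used in this study: it assumes that each channel is summarized by its mean and standard deviation, that a per-channel linear combination of the two drives a sigmoid gate, and that any normalization layer is omitted. The weights `w_mean` and `w_std` stand in for learned parameters and are hypothetical.

```python
import math
from typing import List, Sequence


def srm_recalibrate(features: Sequence[Sequence[float]],
                    w_mean: Sequence[float],
                    w_std: Sequence[float]) -> List[List[float]]:
    """Rescale each channel by a gate computed from its own statistics.

    `features[c]` holds the flattened activations of channel c.
    """
    out = []
    for c, channel in enumerate(features):
        n = len(channel)
        mean = sum(channel) / n                               # global average pooling
        std = math.sqrt(sum((v - mean) ** 2 for v in channel) / n)
        # Assumption: a channel-wise linear map of (mean, std), then a sigmoid.
        z = w_mean[c] * mean + w_std[c] * std
        gate = 1.0 / (1.0 + math.exp(-z))
        out.append([gate * v for v in channel])
    return out


if __name__ == "__main__":
    feats = [[1.0, 3.0], [2.0, 2.0]]       # channel 0 varies, channel 1 is flat
    print(srm_recalibrate(feats, w_mean=[0.0, 0.0], w_std=[10.0, 10.0]))
```

In this toy case, the channel with spatial variation receives a gate close to 1, while the flat channel is halved, which mirrors the intent of emphasizing informative channels and damping uninformative ones.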

2.3.3. Spatial Attention Module (SAM)

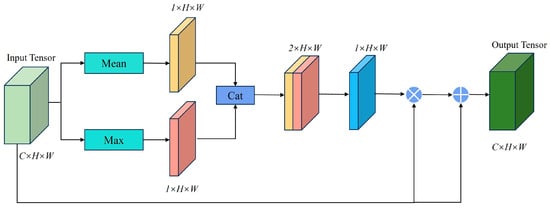

The Spatial Attention Module (SAM) is a widely used technique in deep learning, particularly in computer vision, designed to enhance feature extraction by assigning different attentional weights in the spatial dimension [31]. The core idea is to improve the model’s recognition and localization of target objects by learning the importance of each position in the input feature map, highlighting key regions, and suppressing irrelevant backgrounds. SAM typically uses convolution operations to calculate the importance score of each location in the feature map, generating an enhanced feature map through weighted fusion with the original feature map. This mechanism significantly improves the model’s performance and robustness while providing more intuitive interpretations, making the decision-making process more transparent. Studies show that spatial attention mechanisms excel in various visual tasks such as image classification, object detection, and image segmentation, proving their effectiveness and wide applicability. The module structure is shown in Figure 6.

Figure 6.

The structure of the Spatial Attention Module (SAM).

Using SAM to extract cultivated land information from RGB remote sensing images is highly effective. Remote sensing images typically contain complex and detail-rich surface information, and cultivated land representation may vary due to different lighting conditions, vegetation cover, soil types, and other factors. Introducing SAM enhances the model’s ability to recognize cultivated land features at different spatial locations. Specifically, SAM can automatically learn and highlight areas in the image related to cultivated land while suppressing background interference and other extraneous areas. SAM also addresses issues of scale variation and terrain complexity common in remote sensing images. By adaptively adjusting attention weights, the model can more flexibly handle cultivated land areas of different scales and complex terrain, which is crucial for extracting fragmented cultivated land in mountainous areas.
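A minimal sketch of spatial attention is given below, under stated assumptions. Each pixel is summarized by the average and maximum of its activations across channels, the two summaries are combined into a score, and a sigmoid turns the score into a weight that rescales every channel at that pixel. For brevity the sketch combines the two summaries with scalar weights (`w_avg`, `w_max`, `bias`, all hypothetical) instead of a learned convolution over a spatial neighborhood, so it is not the module used in this study.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]


def spatial_attention(features: Sequence[Sequence[Sequence[float]]],
                      w_avg: float = 1.0, w_max: float = 1.0,
                      bias: float = 0.0) -> Tuple[List[Grid], Grid]:
    """Return (reweighted features, attention map) for features[c][y][x]."""
    channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    attn = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = [features[c][y][x] for c in range(channels)]
            # Channel-wise average and maximum describe this location.
            score = w_avg * sum(values) / channels + w_max * max(values) + bias
            attn[y][x] = 1.0 / (1.0 + math.exp(-score))
    # Every channel is scaled by the same per-pixel weight.
    out = [[[features[c][y][x] * attn[y][x] for x in range(width)]
            for y in range(height)] for c in range(channels)]
    return out, attn


if __name__ == "__main__":
    feats = [[[0.0, 4.0]], [[0.0, 2.0]]]   # 2 channels, 1 x 2 pixels
    weighted, attention = spatial_attention(feats)
    print(attention)
```

Here the pixel with strong responses receives a weight near 1 and the silent pixel a weight of 0.5, so locations with strong evidence are emphasized relative to the background.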

2.3.4. Refinement Module (RM)

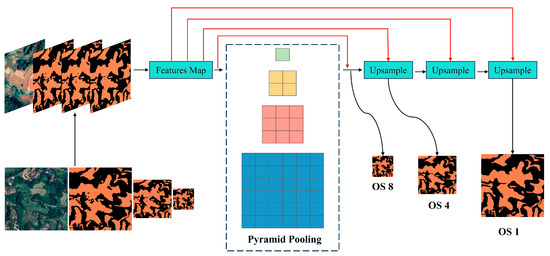

The Refinement Module (RM), proposed at CVPR 2020, is designed to enhance image segmentation accuracy [32]. Its core concept is to refine and optimize preliminary segmentation results by fusing features from the image and the coarse mask through multi-scale feature fusion. RM concatenates the image with the preliminary mask and extracts multi-scale features using pyramid pooling. These features are then fused to create segmentation masks of different resolutions. RM integrates these masks, combining information from each scale, and applies fusion corrections to produce a refined mask. The module structure is shown in Figure 7.

Figure 7.

The structure of the Refinement Module (RM), taking three levels of segmentation as inputs to refine the segmentation with different output strides (OS) in different branches.

The introduction of RM is crucial for the task of cultivated land extraction. Cultivated land morphology and edges are key, particularly in mountainous and fragmented areas where land shapes are irregular and edges are blurred. Traditional segmentation methods struggle with such complexity. The RM significantly improves the accuracy of recognizing cultivated land morphology and edges through multi-scale feature fusion and refinement. By processing the image and preliminary segmentation mask at multiple levels, RM captures richer spatial and textural information, enhancing the accuracy of edge localization and reducing boundary blurring.
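The cascade logic, in which the mask inputs are initialized with copies of the coarse prediction and progressively replaced by refined outputs, can be sketched as a short loop. This is a structural illustration only: `refine_fn` is a hypothetical stand-in for the RM, `toy_refine` is a made-up rule that exists solely to make the example runnable, and all masks are assumed to share one resolution, so the linear upsampling step is omitted.

```python
from typing import Callable, List, Sequence

Mask = List[float]
RefineFn = Callable[[Sequence[float], Sequence[Mask]], Mask]


def cascade_refine(image: Sequence[float], coarse_mask: Sequence[float],
                   refine_fn: RefineFn, levels: int = 3) -> Mask:
    """Run `levels` refinement passes with a constant number of mask inputs."""
    # The coarse mask is copied into every slot so the input size never changes.
    slots: List[Mask] = [list(coarse_mask) for _ in range(levels)]
    refined: Mask = list(coarse_mask)
    for level in range(levels):
        refined = refine_fn(image, slots)
        if level < levels - 1:
            # Replace one copy of the coarse mask with the newest output.
            slots[levels - 1 - level] = refined
    return refined


def toy_refine(image: Sequence[float], slots: Sequence[Mask]) -> Mask:
    """Stand-in for the RM: pull the averaged masks halfway toward image evidence."""
    out = []
    for i, pixel in enumerate(image):
        prior = sum(slot[i] for slot in slots) / len(slots)
        evidence = 1.0 if pixel > 0.5 else 0.0
        out.append(0.5 * prior + 0.5 * evidence)
    return out


if __name__ == "__main__":
    print(cascade_refine([0.9, 0.1], [0.4, 0.6], toy_refine))
```

In the toy run, a coarse mask that is wrong at both pixels moves toward the image evidence with each pass, which is the incremental error correction that the cascade is meant to provide.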

2.3.5. Evaluation Metrics

To evaluate the model’s performance in the cultivated land extraction task, we selected several accuracy metrics commonly used in semantic segmentation: overall accuracy (OA), Intersection over Union (IoU), and F1 score. OA measures global pixel classification accuracy, calculated as the ratio of correctly classified pixels to total pixels. However, in cases of class imbalance, OA alone may be insufficient. For instance, if non-cultivated areas vastly outnumber cultivated areas, a high OA may mainly reflect correct classification of the non-cultivated areas, neglecting the cultivated ones. Thus, additional metrics are necessary for a comprehensive assessment of model performance. IoU quantifies the overlap between predicted and actual segmentations. A higher IoU indicates a better overlap between the predicted and actual cultivated land areas, offering a precise measure of the model’s segmentation accuracy. Moreover, IoU is robust to data imbalance and provides an objective performance assessment, even when the sizes of cultivated land and non-cultivated land samples differ significantly. The F1 score is the harmonic mean of precision and recall. Precision measures the proportion of predicted cultivated land areas that are correctly classified, while recall indicates the proportion of actual cultivated land correctly identified. Ideally, we want the model to accurately identify all cultivated land (high recall) and minimize the misclassification of non-cultivated land as cultivated land (high precision). The F1 score balances the two, with higher scores indicating a better trade-off between precision and recall. Together, these metrics offer a comprehensive assessment of the model’s performance, reflecting its accuracy, reliability, and consistency from different perspectives. The formulas for calculating these metrics are as follows:

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP, FP, FN, and TN are the number of true positives, false positives, false negatives, and true negatives, respectively.
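The metrics described in this section can be computed from the four confusion-matrix counts as in the sketch below. The function name and the example counts are illustrative assumptions and are not taken from the experiments; the second example shows why OA alone can mislead under class imbalance.

```python
from typing import Dict


def segmentation_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """OA, IoU, precision, recall, and F1 for the cultivated land class."""
    total = tp + fp + fn + tn
    oa = (tp + tn) / total
    # Guard against empty denominators when no positives are present or predicted.
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"OA": oa, "IoU": iou, "Precision": precision,
            "Recall": recall, "F1": f1}


if __name__ == "__main__":
    # Hypothetical counts for a reasonable prediction.
    print(segmentation_metrics(tp=80, fp=10, fn=10, tn=900))
    # Hypothetical counts for a model that predicts background everywhere.
    print(segmentation_metrics(tp=0, fp=0, fn=100, tn=900))
```

In the second case OA is still 0.90 although no cultivated pixel is found, whereas IoU and F1 both drop to zero.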

2.4. Experimental Environment and Parameter Details

The code for this study was written in Python 3.9 and implemented with PyTorch 2.1. The hardware comprised an NVIDIA RTX 4090 GPU with 24 GB of video memory and an Intel Core i9-12900K CPU with 64 GB of RAM. For model training, ReLU was selected as the activation function, weights were initialized using the He-Normal method, and dropout was applied to mitigate overfitting. Hyperparameters were kept constant throughout the training process, with a batch size of 32, 100 epochs, and 3 input channels. The loss function was binary cross-entropy. The optimizer was stochastic gradient descent (SGD) with an initial learning rate of 0.01, a momentum of 0.9, and a weight decay coefficient of 0.005. To improve training effectiveness and speed up convergence, a polynomial decay learning rate schedule with an exponent of 0.9 was employed. Additionally, the comparative models were implemented using the MMSegmentation framework, which offers various common segmentation models and algorithms for model comparison and performance evaluation.
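The polynomial decay schedule can be written in a few lines. The sketch below assumes the common form lr = base_lr × (1 − step / total_steps)^power with a final learning rate of zero; whether the schedule was stepped per iteration or per epoch is not stated here, so the 100-step example is an assumption, and the function name is hypothetical.

```python
def poly_lr(base_lr: float, step: int, total_steps: int, power: float = 0.9) -> float:
    """Polynomial decay from `base_lr` at step 0 to 0 at `total_steps`."""
    # Clamp so that steps outside [0, total_steps] do not produce invalid values.
    progress = min(max(step, 0), total_steps) / total_steps
    return base_lr * (1.0 - progress) ** power


if __name__ == "__main__":
    # Assumption: the schedule is stepped once per epoch over 100 epochs.
    for epoch in (0, 25, 50, 75, 100):
        print(epoch, round(poly_lr(0.01, epoch, 100), 6))
```

With an exponent below 1, the learning rate falls slightly more slowly than a linear ramp for most of training and then drops quickly near the end.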

3. Results and Analysis

3.1. Comparison of Different Methods: Quantitative Analysis

To evaluate the effectiveness of our proposed method, we conducted comparative experiments against widely used methods for cultivated land extraction, namely, UNet, PSPNet, and DeepLabV3+. UNet uses an encoder–decoder structure to capture multi-scale feature information, making it suitable for images with rich details. PSPNet captures multi-scale contextual information globally via a pyramid pooling module, performing well in complex backgrounds with varying target sizes. DeepLabV3+ combines atrous convolution with an encoder–decoder structure, excelling in detail restoration and boundary processing. All of the models were trained with identical parameters: a batch size of 12, 100 epochs, and an SGD optimizer.

As shown in Table 1, UNet performed the worst of the four methods. DeepLabV3+ slightly outperformed UNet and PSPNet because its combination of atrous convolution and the ASPP module enhances feature extraction, particularly for targets at different scales. UNet’s simple encoder–decoder structure led to poor detail recovery, blurred boundaries, and more pronounced salt-and-pepper noise. PSPNet improved multi-scale contextual information capture through the pyramid pooling module but still struggled with detail processing. Our proposed method outperformed all the other methods on all the indicators. This success is attributed to the ASPP module capturing multi-scale information, the SRM enhancing the aggregation and processing of various scales, and the Spatial Attention Module focusing on location and texture features. Additionally, the RM captured different levels of structural and boundary information by taking the imperfect segmentation masks as input and adaptively fusing multi-scale features, resulting in significant improvements in segmentation accuracy and boundary processing.

Table 1.

Comparison of accuracy of different methods.

3.2. Comparison of Different Methods: Qualitative Analysis

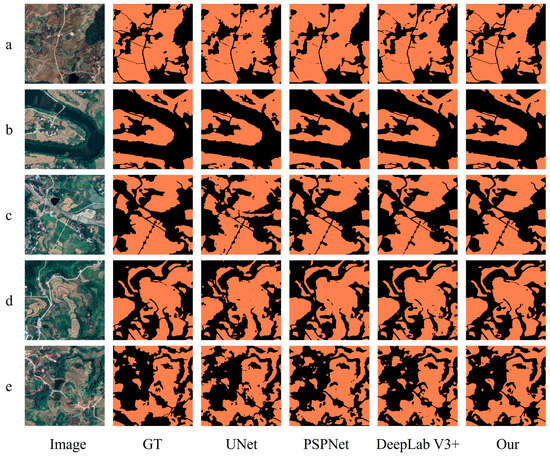

To further assess the effectiveness of these four methods, we compared them across five typical cultivated land scenes (Figure 8): a simple homogeneous scene (a); a scene mixed with a water body (b); a scene with multiple features such as settlements, roads, and mulch (c); a mixture of different cultivated and uncultivated land styles (d); and a fragmented, complex cultivated land scene (e).

Figure 8.

Comparison of extraction results of different methods in the test area. (a) illustrates a simple homogeneous landscape, (b) depicts a scenario characterized by the coexistence of water bodies and cultivated land, (c) portrays a complex and varied scene, (d) exemplifies a mosaic of diverse agricultural patterns, and (e) represents an intricately fragmented and heterogeneous environment.

In group a, a simple homogeneous scene, all of the models showed good segmentation but struggled with narrow roads between fields. UNet exhibited more pronounced salt-and-pepper noise. Our model provided complete segmentation, clear roads, and minimal salt-and-pepper noise. In group b, UNet often missed or misclassified boundaries between water bodies and cultivated land, while PSPNet and DeepLabV3+ improved the boundary processing slightly but still had misclassifications. Our model accurately differentiated water bodies from cultivated land, reducing misclassifications. In group c, complex scenes led UNet and PSPNet to misclassify non-cultivated land as cultivated. DeepLabV3+ improved on this but still had fragmented misclassifications. Our model excelled in detailed accuracy. In group d, with mixed styles of cultivated land, UNet and DeepLabV3+ showed increased salt-and-pepper noise and misdetection. Our model improved land identification and suppressed salt-and-pepper noise effectively. In group e, very fragmented and complex scenes caused severe salt-and-pepper noise, misclassification, and poor detail handling in UNet, PSPNet, and DeepLabV3+. Our model recognized the overall morphology and details better, with clear edges.

Overall, UNet suffers from blurring and salt-and-pepper noise at boundaries and details due to its encoder–decoder structure. PSPNet and DeepLabV3+ mitigate this issue somewhat through pyramid pooling and atrous convolution but still lack detail. Our model enhances boundary details using the ASPP module and SRM. In complex scenes, UNet and PSPNet struggle with multi-scale feature fusion, leading to poor feature differentiation. DeepLabV3+ improves multi-scale features with atrous convolution but still needs better detail and boundary processing. Our model improves the adaptability and segmentation accuracy in complex scenes by introducing the SAM and RM. Differentiating between various styles of cultivated land, such as fallow versus cropping periods, requires strong feature representation and multi-scale information capture. Our model enhances this differentiation by fusing high- and low-level features with SAM and SRM. For fragmented and complex cultivated land, accurately capturing different levels of structural and boundary information is crucial. UNet and PSPNet perform poorly here, while DeepLabV3+ performs somewhat better. Our model refines the segmentation results with the RM, effectively identifying the overall morphology and details of complex cultivated land. In summary, our model provides better segmentation and detail processing in complex scenarios, significantly improving the accuracy and reliability of cultivated land extraction in mountainous areas.

3.3. Validation of the Improvement Mechanism

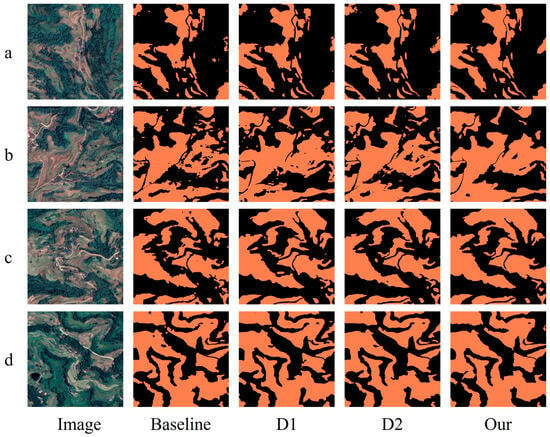

To validate our improvements, we conducted ablation experiments. Using DeepLabV3+ as the baseline, we first added the SRM to its encoding stage to create method D1. Next, we added the SAM to the decoding stage of D1, resulting in method D2. Finally, we added the RM to D2, producing the method used in this study.

Table 2 shows the results of the ablation experiments, and Figure 9 compares the details of the extraction results from different modules. Each row shows the segmentation results for a specific region when different modules are applied. Since all of the experiments use the same backbone network, the overall segmentation outcomes are relatively consistent. However, the performance of different modules varies in more complex areas, such as fuzzy edges or scattered scrub in cultivated land. When the SRM is added to the baseline model, the overall performance improves significantly, with the OA, IoU, and F1 scores increasing by 1.27%, 1.53%, and 1.28%, respectively. As shown in the images, the SRM helps suppress background noise, particularly in complex settings, and enhances the separation between the background and the target. Additionally, the SRM improves multi-scale information processing, allowing the model to extract cultivated land features more accurately in complex scenes and adapt to changes in scale. After incorporating the SAM into the D1 model, the OA, IoU, and F1 scores increase by 0.67%, 0.92%, and 0.82%, respectively. By focusing on specific location and texture features, the SAM improves the model’s performance in regions with more distinct textures, as demonstrated by the D2 extraction results for region d in Figure 9. Finally, adding the RM to the D2 model yields the largest improvement of the three modules, with the OA, IoU, and F1 scores rising by 3.52%, 3.56%, and 3.06%, respectively. As illustrated in the images, the RM is particularly effective in boundary processing and in handling fine fragmentation. It fills in the details that are not adequately handled by the other modules, thus enhancing the overall accuracy and reliability of the segmentation results.

Table 2.

Results of the validation of the effectiveness of the improved method.

Figure 9.

Comparison of cultivated land extraction results with different modules. (a–d) depict four distinct cultivated land scenarios, each illustrating varying levels of fragmentation.

The ablation experiments demonstrate that the gradual addition of the SRM, SAM, and RM significantly enhances the model’s performance. Each module plays a crucial role in handling multi-scale information, feature fusion, detail processing, and boundary refinement, leading to a Cascade DeepLab Net that outperforms the base DeepLabV3+ model.

3.4. Optimization Effect on the Morphology of Fragmented Cultivated Land

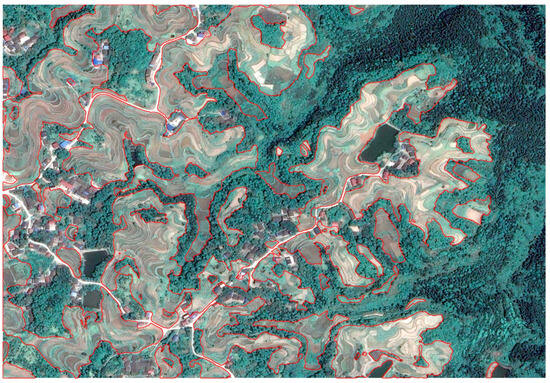

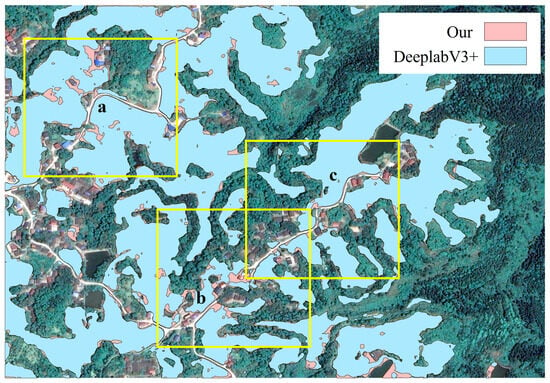

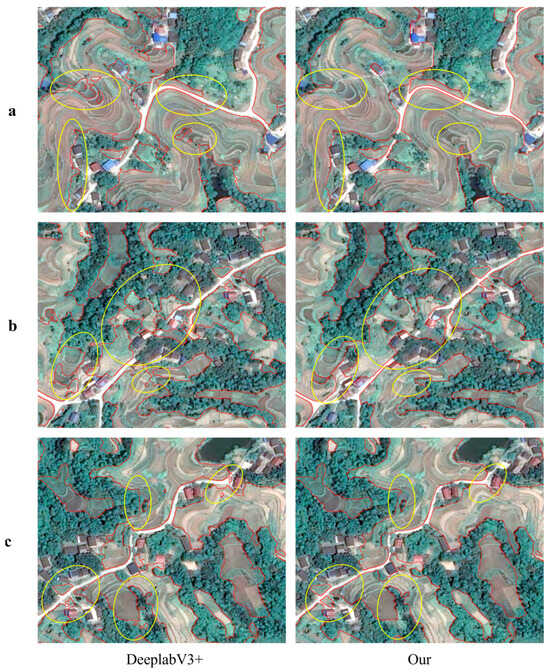

To further validate the improved model’s ability to optimize the morphology of fragmented cultivated land, we selected an image that was never used in training as a verification sample. Figure 10 presents the extraction results obtained by our improved model, while Figure 11 overlays the extraction results of the baseline model (DeepLabV3+) and the improved model (ours). The overlay shows that the improved model effectively addresses issues present in the baseline results, such as fragmented patches and blurred edges. Figure 12 further shows the details for the three regions where the extraction results differ most, namely, regions a, b, and c.

Figure 10.

Cultivated land extraction results of the improved model. The red frame delineates the cultivated land area as extracted by the improved model.

Figure 11.

Overlay of the cultivated land extraction results of the baseline model (DeepLabV3+) and the improved model. The differences between the two models are most evident in areas a, b, and c.

Figure 12.

Detailed comparison of the cultivated land extraction results of the baseline model (DeepLabV3+) and the improved model. In areas (a–c), the differences between the improved model and the baseline model are most evident. The red frames delineate the cultivated land area extracted by each model, and the yellow circles highlight areas where the differences are particularly pronounced.

As shown in Figure 12, regions a, b, and c all feature complex terrain, with cultivated land interlaced with forest land and other land types. In these regions, the baseline model (DeepLabV3+) frequently produces inaccurate cultivated land boundaries. For instance, in the terraced fields of region a, the extracted boundary is jagged; around the villages in region b, the extracted cultivated land is misaligned; and where cultivated land meets forest in region c, its boundary is confused with that of other land types. These issues degrade the accuracy and integrity of the extracted cultivated land morphology, leading to inaccurate area statistics and to an extracted morphology that is more fragmented than the actual one.

In contrast, our improved method shows clear advantages in these three regions. For cultivated land in complex terrain such as the terraced fields of region a, the extraction result of the improved method (ours) has smoother edges that fit the actual boundary closely and better preserve the hierarchy and continuity of the terraces. In region b, where villages and cultivated land are interlaced, the method detects small plots such as vegetable fields, avoiding missed detections and bringing the total cultivated land area closer to the actual value. In the transition zone between cultivated land and forest in region c, the improved method pays more attention to the key characteristic areas of the cultivated land and overcomes vegetation interference. It separates cultivated land from forest land accurately and maintains the coherence of long, narrow parcels, avoiding both the misidentification of forest land and breaks in narrow cultivated strips. As a result, the extracted cultivated land area is more consistent with the actual situation, and the error rate is significantly reduced.

In summary, our improved method performs excellently in optimizing the morphology of fragmented cultivated land in mountainous regions, achieving remarkable improvements in the overall accuracy, morphological integrity, and detailed processing capabilities.
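The morphological comparison above is qualitative. One simple way to put a number on "more fragmented than the actual one" is to count connected patches in each extraction mask. The sketch below does this with a 4-connected flood fill; the patch count is an illustrative proxy chosen here, not a metric reported in this study, and the function name and toy masks are hypothetical.

```python
from collections import deque


def count_patches(mask):
    """Return the sizes of all 4-connected groups of 1-pixels in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill starting from an unvisited cultivated pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            sizes.append(size)
    return sizes


# Toy example: one long, narrow parcel, extracted coherently and in broken form.
coherent_strip = [
    [0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0],
]
broken_strip = [
    [0, 0, 0, 0, 0, 0],
    [1, 1, 0, 1, 0, 1],
    [0, 0, 0, 0, 0, 0],
]
coherent_sizes = count_patches(coherent_strip)
broken_sizes = count_patches(broken_strip)
print(len(coherent_sizes), len(broken_sizes))
```

In this toy case the broken extraction yields more patches and a smaller total area than the coherent one, mirroring the two effects described for region c: discontinuity of narrow parcels and underestimated area.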

4. Discussion

4.1. Possible Value of This Study

This study focused on the mountainous region of southern China, using high-resolution satellite images to accurately extract cultivated land information in complex terrains. Unlike UAV data acquisition methods [33], this study leverages satellite imagery for extensive land extraction, offering a broader application potential. Previous research has enhanced the cultivated land extraction accuracy by developing joint-type edge enhancement loss functions with constraints and introducing polarized self-attention mechanisms [26,34]. While these methods achieved a high accuracy, they were primarily applied in plain areas. In our study, we enhanced DeepLabV3+ by integrating the SRM and SAM and cascading the RM, improving the model accuracy for cultivated land extraction in complex terrains. Using our improved model with high-resolution satellite data, we achieved an OA of 92.33% and an IoU of 82.51% in cultivated land extraction. Compared with the results of other studies [26,34], our results show slight performance differences, mainly due to the characteristics of our study area: while those studies focused on plains, our research addresses the complex terrain of the southern mountainous region. Despite the slightly lower precision, our findings remain comparable and demonstrate good applicability to this scenario.

4.2. The Combined Effects of Methodological Parametric Volume

As shown in Table 3, the Cascade DeepLab Net model proposed in this study has a parameter count of 129.26M, significantly higher than classical architectures like UNet, PSPNet, and DeepLabV3+. However, as shown in Table 4, the SRM [35] and SAM [36] remain lightweight, each with fewer than 1M parameters, which indicates that the increase in parameters comes primarily from the RM. This is due to two structural factors: first, the RM includes an independent backbone network for feature reconstruction [37]; second, to improve the fine-grained identification of cultivated land boundaries, we adopted a three-level iterative RM architecture. While this design increases the model complexity, as shown in Table 2 and Figure 11, the RM plays a crucial role in detecting fragmented cultivated land in mountainous areas [38]. Its introduction improved the IoU by more than 3%, significantly enhancing cultivated land recognition. This accuracy boost is particularly important in food security monitoring, where even a 3% improvement could represent tens of thousands of hectares of overlooked cultivated land. Additionally, given the prevalence of fragmented small plots in mountainous regions, the increased parameter cost is justified by the practical need for detailed cultivated land monitoring.

Table 3.

Count of different model parameters.

Table 4.

Count of different module parameters.

However, it is noteworthy that the SRM and SAM together achieve an accuracy gain of nearly 2% with fewer than 1M parameters each, revealing an imbalance in parameter efficiency within the model. This highlights two key areas for optimization in future research: (i) reducing redundant parameters in the RM using lightweight techniques such as depthwise separable convolutions, and (ii) developing a dynamic inference mechanism that adjusts the number of RM iterations based on the spatial complexity of the image. In homogeneous areas such as plains, for example, reducing the number of RM iterations could substantially decrease the computational overhead. These optimization strategies will be the focus of our next phase of research.
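The two directions above can be illustrated with a rough sketch. The first part compares the weight count of a standard convolution with that of a depthwise separable one (bias terms omitted); the 3 x 3 kernel and 256 channels are example values, not the RM's actual configuration. The second part is one hypothetical way to choose the number of RM iterations: it uses the share of neighbouring pixel pairs with different labels in a coarse mask as a stand-in for spatial complexity. The thresholds and all function names are assumptions made for illustration only.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    return kernel * kernel * c_in + c_in * c_out


def boundary_density(mask):
    """Fraction of adjacent pixel pairs (right and down neighbours) whose labels differ."""
    rows, cols = len(mask), len(mask[0])
    pairs = differing = 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                pairs += 1
                differing += mask[r][c] != mask[r][c + 1]
            if r + 1 < rows:
                pairs += 1
                differing += mask[r][c] != mask[r + 1][c]
    return differing / pairs if pairs else 0.0


def choose_rm_iterations(coarse_mask, low=0.05, high=0.20, max_iterations=3):
    """Map boundary density to 1..max_iterations passes (thresholds are hypothetical)."""
    density = boundary_density(coarse_mask)
    if density < low:
        return 1
    if density < high:
        return min(2, max_iterations)
    return max_iterations


# Part (i): example layer with a 3 x 3 kernel and 256 input/output channels.
standard = standard_conv_params(3, 256, 256)
separable = depthwise_separable_params(3, 256, 256)
print(standard, separable, round(separable / standard, 3))

# Part (ii): a homogeneous tile versus a highly fragmented (checkerboard) tile.
plain_tile = [[1] * 4 for _ in range(4)]
fragmented_tile = [[(r + c) % 2 for c in range(4)] for r in range(4)]
print(choose_rm_iterations(plain_tile), choose_rm_iterations(fragmented_tile))
```

For this example layer the separable variant needs roughly 11.5% of the weights of the standard convolution. Whether such a substitution would preserve the RM's boundary accuracy is an open question that the sketch does not address.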

4.3. Limitations of the Current Study

This study focuses on extracting cultivated land in mountainous regions using high-resolution remote sensing images from a single time point. However, the method faces significant challenges in practical applications due to the complexity of the mountainous ecological environment. In these areas, cultivated land, scrubland, forest, and other features are interspersed and have similar spectral characteristics [39]. This issue is particularly pronounced when crops grow luxuriantly, making them difficult to distinguish from other land features in single-date imagery. Additionally, mixed cropping is common, with multiple crops often grown in the same plot [40]. This results in uneven spectral features within a plot, further complicating the classification of remote sensing images. Relying solely on imagery from a single time period significantly reduces the classification accuracy, particularly in the off-season, when crop phenology is less distinct and spectral differences are minimal [41]. Therefore, the current method depends heavily on the timing of image acquisition.

To address these challenges, future research should incorporate multi-temporal remote sensing imagery and integrate crop phenology data. By leveraging spectral changes across different seasons and crop growth stages, it is possible to enhance the accuracy of cultivated land extraction. Given the frequent cloud cover and rainy conditions in southwest China, future studies should also explore multi-source data fusion, particularly with synthetic aperture radar (SAR) data. SAR’s polarization characteristics and interferometric information can help distinguish different vegetation types, thus improving the accuracy of cultivated land extraction, even in challenging weather conditions.

5. Conclusions

In this study, we developed and evaluated the Cascade DeepLab Net model for the accurate extraction of fragmented cultivated land in mountainous regions. By integrating the Style-based Recalibration Module (SRM), Spatial Attention Module (SAM), and Refinement Module (RM) into the DeepLabV3+ architecture, our model addresses key challenges such as irregular land morphology, unclear boundaries, and the fragmentation common in such terrains. The experimental results demonstrate that Cascade DeepLab Net outperforms traditional models like UNet, PSPNet, and DeepLabV3+ in both quantitative metrics (overall accuracy, Intersection over Union, and F1 score) and qualitative assessments, with an overall accuracy of 92.33%, an IoU of 82.51%, and an F1 score of 91.77%. The model’s significant improvement in boundary precision and segmentation clarity and its ability to handle complex and fragmented land structures make it highly suitable for remote sensing applications in agricultural land monitoring, particularly in mountainous areas. Despite these advantages, challenges remain, such as the reliance on high-resolution imagery and the need for multi-temporal data to capture crop phenology accurately. Future studies should explore multi-source data fusion, particularly incorporating synthetic aperture radar (SAR) imagery to enhance the model’s robustness under varied environmental conditions. This work not only contributes to more accurate land monitoring in mountainous regions but also provides a foundation for further advancements in cultivated land extraction methods, particularly in areas where traditional methods fall short.

Author Contributions

M.L. and R.W. conceived the study and contributed to the conception, analysis, and interpretation of data. M.L., L.Z. and A.D. designed and managed the investigation. R.W. and A.D. contributed to software development. R.W., W.Z. and L.X. were involved in data validation. M.L. and R.W. performed the formal analysis. G.Y. provided resources and supervised the study. R.W., L.Z. and W.Z. curated the data. M.L. prepared the original draft of the manuscript, with review and editing by R.W., W.Y. and G.Y. M.L. and A.D. contributed to visualization. W.Y. and G.Y. managed the project and acquired funding. All authors contributed to the preparation of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guizhou Provincial Key Technology R&D Program (No. Qiankehe key [2023] normal 176), the Guizhou Provincial Basic Research Program (Natural Science) (No. Qiankehe base-ZK [2024] normal 445), and the Guizhou Provincial Key Technology R&D Program (No. Qiankehe Key [2024] normal 138).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fan, J.; Wang, L.; Qin, J.; Zhang, F.; Xu, Y. Evaluating cultivated land stability during the growing season based on precipitation in the Horqin Sandy Land, China. J. Environ. Manag. 2020, 276, 111269.

- Pang, X.; Xie, B.; Lu, R.; Zhang, X.; Xie, J.; Wei, S. Spatial–Temporal Differentiation and Driving Factors of Cultivated Land Use Transition in Sino–Vietnamese Border Areas. Land 2024, 13, 165.

- Li, M.; Li, J.; Haq, S.U.; Nadeem, M. Agriculture land use transformation: A threat to sustainable food production systems, rural food security, and farmer well-being? PLoS ONE 2024, 19, e0296332.

- Wang, Y.; Lopez-Carr, D.; Gong, J.; Gao, J. Editorial: Sustainable cultivated land use and management. Front. Environ. Sci. 2023, 11, 1162769.

- Guo, X.N.; Chen, R.Q.; Li, Q.; Su, W.C.; Liu, M.; Pan, Z.Z. Processes, mechanisms, and impacts of land degradation in the IPBES Thematic Assessment. Acta Ecol. Sin. 2019, 39, 6567–6575.

- The Intergovernmental Panel on Climate Change. Special Report: Special Report on Climate Change and Land. IPCC Website. 2019. Available online: https://www.ipcc.ch/srccl/ (accessed on 1 January 2025).

- Liang, X.; Jin, X.; Yang, X.; Xu, W.; Lin, J.; Zhou, Y. Exploring cultivated land evolution in mountainous areas of Southwest China, an empirical study of developments since the 1980s. Land Degrad. Dev. 2021, 32, 546–558.

- Miao, L.; Li, X.; Zhou, X.; Yao, L.; Deng, Y.; Hang, T.; Zhou, Y.; Yang, H. SNUNet3+: A Full-Scale Connected Siamese Network and a Dataset for Cultivated Land Change Detection in High-Resolution Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4400818.

- Chen, X.; Yu, L.; Du, Z.; Liu, Z.; Qi, Y.; Liu, T.; Gong, P. Toward sustainable land use in China: A perspective on China’s national land surveys. Land Use Policy 2022, 123, 106428.

- Rapinel, S.; Clément, B.; Magnanon, S.; Sellin, V.; Hubert-Moy, L. Identification and mapping of natural vegetation on a coastal site using a Worldview-2 satellite image. J. Environ. Manag. 2014, 144, 236–246.

- Cao, Q.; Li, M.; Yang, G.; Tao, Q.; Luo, Y.; Wang, R.; Chen, P. Urban Vegetation Classification for Unmanned Aerial Vehicle Remote Sensing Combining Feature Engineering and Improved DeepLabV3+. Forests 2024, 15, 382.

- Storie, C.D.; Henry, C.J. Deep learning neural networks for land use land cover mapping. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 3445–3448.

- Šćepanović, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-area land cover mapping with Sentinel-1 imagery using deep learning semantic segmentation models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374.

- Wiguna, S.; Adriano, B.; Mas, E.; Koshimura, S. Evaluation of deep learning models for building damage mapping in emergency response settings. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5651–5667.

- Cheng, G.; Wang, Z.; Huang, C.; Yang, Y.; Hu, J.; Yan, X.; Tan, Y.; Liao, L.; Zhou, X.; Li, Y. Advances in Deep Learning Recognition of Landslides Based on Remote Sensing Images. Remote Sens. 2024, 16, 1787.

- Dahiya, N.; Singh, G.; Gupta, D.K.; Kalogeropoulos, K.; Detsikas, S.E.; Petropoulos, G.P.; Singh, S.; Sood, V. A novel Deep Learning Change Detection approach for estimating Spatiotemporal Crop Field Variations from Sentinel-2 imagery. Remote Sens. Appl. Soc. Environ. 2024, 35, 101259.

- Huang, Y.; Li, X.; Du, Z.; Shen, H. Spatiotemporal enhancement and interlevel fusion network for remote sensing images change detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5609414.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Liu, Z.; Li, N.; Wang, L.; Zhu, J.; Qin, F. A multi-angle comprehensive solution based on deep learning to extract cultivated land information from high-resolution remote sensing images. Ecol. Indic. 2022, 141, 108961.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III; Springer: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Zhang, X.; Huang, J.; Ning, T. Progress and Prospect of Cultivated Land Extraction from High-Resolution Remote Sensing Images. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1582–1590.

- Li, Z.; Chen, S.; Meng, X.; Zhu, R.; Lu, J.; Cao, L.; Lu, P. Full Convolution Neural Network Combined with Contextual Feature Representation for Cropland Extraction from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 2157.

- Dong, Z.; Li, J.; Zhang, J.; Yu, J.; An, S. Cultivated land extraction from high-resolution remote sensing images based on BECU-Net model with edge enhancement. Natl. Remote Sens. Bull. 2023, 27, 2847–2859.

- Xu, Y.; Zhu, Z.; Guo, M.; Huang, Y. Multiscale Edge-Guided Network for Accurate Cultivated Land Parcel Boundary Extraction From Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4501020.

- Liang, J.; Gong, J.; Li, W. Applications and impacts of Google Earth: A decadal review (2006–2016). ISPRS J. Photogramm. Remote Sens. 2018, 146, 91–107.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Lee, H.; Kim, H.-E.; Nam, H. SRM: A style-based recalibration module for convolutional neural networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1854–1862.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Cheng, H.K.; Chung, J.; Tai, Y.-W.; Tang, C.-K. CascadePSP: Toward class-agnostic and very high-resolution segmentation via global and local refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8890–8899.

- Lu, H.; Fu, X.; Liu, C.; Li, L.-G.; He, Y.-X.; Li, N.-W. Cultivated land information extraction in UAV imagery based on deep convolutional neural network and transfer learning. J. Mt. Sci. 2017, 14, 731–741.

- Li, Q.; Zhang, D.; Pan, Y.; Dai, J. High-resolution cropland extraction in Shandong province using MPSPNet and UNet network. Natl. Remote Sens. Bull. 2023, 27, 471–491.

- Shi, X.Q.; Li, P.; Wu, H.; Chen, Q.D.; Zhu, H.Y. A lightweight image splicing tampering localization method based on MobileNetV2 and SRM. IET Image Process. 2023, 17, 1883–1892.

- Liu, Y.; Zhang, X.Y.; Bian, J.W.; Zhang, L.; Cheng, M.M. SAMNet: Stereoscopically Attentive Multi-Scale Network for Lightweight Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 3804–3814.

- Zhai, Y.J.; Fan, D.P.; Yang, J.F.; Borji, A.; Shao, L.; Han, J.W.; Wang, L. Bifurcated Backbone Strategy for RGB-D Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 8727–8742.

- Chen, G.J.; Chen, H.Z.; Cui, T.; Li, H.H. SFMRNet: Specific Feature Fusion and Multibranch Feature Refinement Network for Land Use Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 16206–16221.

- Naboureh, A.; Li, A.N.; Bian, J.H.; Lei, G.B.; Amani, M. A Hybrid Data Balancing Method for Classification of Imbalanced Training Data within Google Earth Engine: Case Studies from Mountainous Regions. Remote Sens. 2020, 12, 3301.

- Zhang, J.C.; He, Y.H.; Yuan, L.; Liu, P.; Zhou, X.F.; Huang, Y.B. Machine Learning-Based Spectral Library for Crop Classification and Status Monitoring. Agronomy 2019, 9, 496.

- Longchamps, L.; Philpot, W. Full-Season Crop Phenology Monitoring Using Two-Dimensional Normalized Difference Pairs. Remote Sens. 2023, 15, 5565.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).