Abstract

Accurate identification of tea plant varieties during the harvest period is a critical prerequisite for developing intelligent multi-variety tea harvesting systems. Different tea varieties exhibit distinct chemical compositions and require specialized processing methods, making varietal purity a key factor in ensuring product quality. However, reliable classification under real-world field conditions is challenging because of variable illumination, complex backgrounds, and subtle phenotypic differences among varieties. To address these challenges, this study constructed a diverse canopy image dataset and systematically evaluated 14 convolutional neural network models through transfer learning. The best-performing model was chosen as the baseline, and its training strategy was then comprehensively optimized. Experimental analysis demonstrated that the combination of the Adamax optimizer, an input size of 608 × 608, a training-to-validation split ratio of 80:20, a learning rate of 0.0001, a batch size of 8, and 20 epochs produced the most stable and accurate results. The final optimized model achieved an accuracy of 99.32%, a 2.20 percentage-point improvement over the baseline. This study not only demonstrates the feasibility of highly accurate tea variety identification from canopy imagery but also provides a transferable deep learning framework and optimized training pipeline for intelligent tea harvesting applications.

1. Introduction

Tea is one of the most widely consumed non-alcoholic beverages, valued not only for its distinctive flavor but also for its health-promoting properties, which are attributed to its abundance of polyphenols, amino acids, caffeine, and volatile aromatic compounds [,]. The tender buds and leaves of the tea plant serve as the primary raw materials for tea production. Tea plants are cultivated mainly in Asia and Africa, in countries including China, India, Sri Lanka, and Japan []. Among these, China has a long history of tea culture and produces the widest diversity of teas, India and Sri Lanka mainly produce black tea, and Japan primarily produces green tea. According to statistics from the Food and Agriculture Organization of the United Nations, both global tea cultivation area and production have shown a steady upward trend, expanding from 3.15 million hectares and 19.53 million tons in 2010 to 4.83 million hectares and 32.18 million tons in 2023 []. Harvesting is a crucial stage in tea production and is highly seasonal and labor-intensive. Labor shortages and the loss of agricultural workers pose significant challenges to the development of the tea industry [,]. With the emergence of artificial intelligence technology, mechanized and intelligent tea harvesting offers an opportunity to address this challenge. However, current research primarily focuses on detecting harvest targets and locating picking points, while relatively few studies have addressed the identification of tea plant varieties during the harvest period.

A visual perception system is the core of an intelligent harvesting platform, enabling environmental sensing, target detection, and decision-making []. In tea plantations, multi-variety planting patterns are widely adopted to mitigate risks from natural disasters and pests, stagger peak harvest periods, and increase economic returns []. However, these patterns require harvesting platforms to adapt to different varieties in real time. Because different tea varieties differ in chemical composition and processing requirements, accurate identification during harvesting is essential to maintain varietal purity and ensure product quality [,]. During harvesting, these varieties also exhibit distinct phenotypic traits, particularly in canopy architecture and shoot morphology. To meet the requirements of multi-variety tea harvesting, two approaches can be applied to visual perception systems: (1) developing a single target detection model applicable across multiple varieties and sorting the harvested tea by variety for post-harvest processing; or (2) developing multiple single-variety target detection models and automatically activating the appropriate one based on the variety being harvested. To determine which variety is in the working area, the harvesting platform could simply be pre-set according to the planting scheme. However, this approach is unreliable when different varieties are planted in adjacent plots or within the same plot, and it hinders flexible scheduling of multiple harvesting platforms. Real-time perception of the tea variety within the operational area is therefore required so that the harvesting platform can activate the appropriate detection and localization models. This information also allows the platform to store harvested tea leaves separately by variety.

Currently, two primary approaches are employed for rapid and accurate crop variety identification: spectral analysis and image processing. Differences among varieties in the chemical composition (e.g., chlorophyll, moisture, and amino acids) and physical structure (e.g., thickness and surface texture) of seeds and leaves lead to distinct spectral characteristics, including unique absorption peaks and reflectance patterns []. Techniques such as near-infrared spectroscopy [], multispectral imaging [], and Raman spectroscopy [] capture these characteristics across different wavelengths and, when combined with chemometric methods, enable rapid and non-destructive crop variety identification. Although highly accurate, these methods require specialized spectral sensors, which increase cost and complicate deployment on intelligent harvesting platforms. In contrast, image-based methods using RGB or depth cameras are more practical, as they support both variety identification and harvest-target detection and are easier to integrate into edge devices [,]. Early studies often extracted color, texture, and shape features from seeds [], fruits [], and leaves [] and then applied machine learning algorithms such as support vector machines [], backpropagation neural networks [], k-nearest neighbors [], and random forests [] for variety classification. However, such feature-engineered methods demand substantial domain expertise and iterative experimentation, and their performance often deteriorates under real-world field conditions with variable lighting and complex backgrounds.

Deep learning has revolutionized image-based agricultural applications by automatically extracting task-relevant features from raw data, capturing both low-level (color and texture) and high-level semantic representations [,]. It has been successfully applied to field management [,], autonomous farm machinery [,], and intelligent harvesting [,]. For crop variety identification, Pan et al. used deep learning to identify wild grape varieties from leaf images, achieving an accuracy of 98.64% across 23 varieties []. To enable rapid and accurate identification of olive varieties in natural environments, Zhu et al. improved the EfficientNet model by integrating bilinear networks and attention mechanisms, achieving an accuracy of 90.28% for four varieties based on fruit images []. Zhang et al. combined MobileNetV3 with multi-task learning for fine-grained classification of jujube varieties, reaching 91.79% accuracy across 20 varieties []. Despite these advances, few studies have investigated tea plant variety identification in field environments, particularly during the harvest period. Moreover, existing studies often rely on single-organ images (e.g., leaves or fruits) [,], which are unsuitable for operational harvesting platforms that require canopy-level recognition.

Although deep learning provides a versatile framework for visual recognition tasks, specialized models for agricultural crop variety identification are still lacking []. Current practice often relies on modifying existing network architectures to improve performance, but this approach requires extensive experimentation and advanced algorithmic expertise []. To address this gap, the present study focuses on tea plant variety identification during the harvest period using canopy images. A dataset of 6600 images representing 11 tea varieties was constructed under diverse field conditions, including varying seasons, lighting environments, shooting angles, and distances, to capture real-world variability. Fourteen deep learning models were developed using transfer learning, and their performance was systematically compared to identify the baseline model. Furthermore, a comprehensive analysis of training strategies was conducted, examining the effects of optimizers, input image size, dataset split ratio, and training parameters. By optimizing the training process, the performance of tea plant variety identification was improved. This study demonstrates the feasibility of rapid and accurate tea variety identification from canopy images during the harvest period, thereby providing critical technical support for intelligent tea harvesting.

2. Materials and Methods

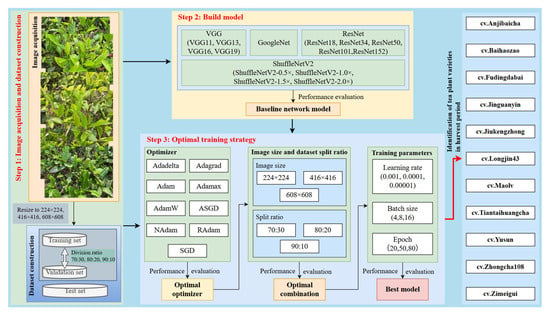

To achieve tea plant variety identification based on canopy images during the harvest period, the technical workflow of this study was designed as outlined in Figure 1 and consisted of three main steps. Step 1: Dataset construction. Canopy images of tea plants were collected in the field during the harvest period under varying seasons, lighting conditions, shooting angles, and distances to enhance the generalizability of the proposed method. These images formed the tea plant variety identification dataset, which served as the foundation for model training and performance evaluation. Step 2: Baseline network model selection. To identify a suitable deep learning framework, 14 convolutional neural networks of varying sizes and structural complexities were evaluated. Each network was trained using transfer learning, and the resulting models were compared to determine the baseline network with the best identification performance. Step 3: Training strategy optimization. The effects of different optimizers, input image sizes, dataset split ratios, and training parameter combinations on model performance were analyzed using a controlled-variable approach, in which one group of factors was varied while the others were held fixed. The optimal training strategy was then used to improve the identification performance of the baseline network model.

Figure 1.

Overall technical route of the proposed tea plant variety identification method based on canopy images during the harvest period.

2.1. Image Acquisition and Dataset Construction

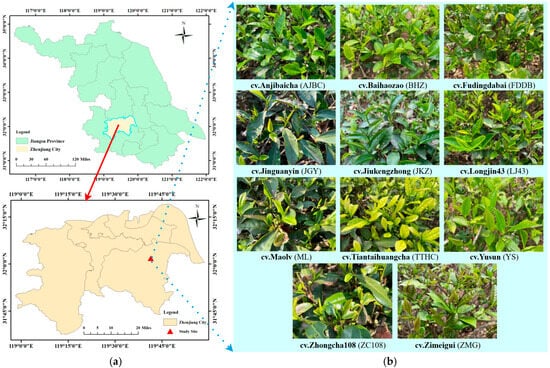

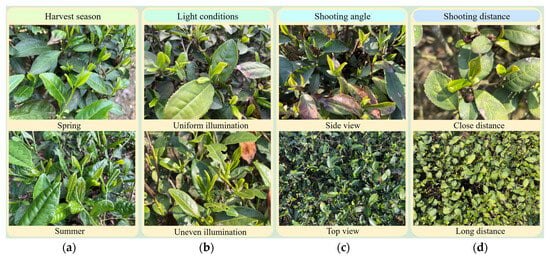

The images used in this study were acquired in 2024 during the spring tea harvest period from 8 to 21 April and the summer tea harvest period from 3 to 15 July at the Yinchunbiya tea plantation (elevation 6.7 m), Zhenjiang City, Jiangsu Province, China (latitude 32°01′35″ N, longitude 119°40′21″ E) (Figure 2a). The tea plantation follows a standardized production pattern, with pruning conducted in October 2023 and June 2024 so that tea shoots were concentrated at the canopy surface during the harvest period. Canopy images of 11 tea plant varieties (Figure 2b) were captured using two smartphones (Xiaomi 12, Xiaomi, Beijing, China; iPhone 12, Apple, Shenzhen, China) and a digital camera (Canon PowerShot SX30 IS, Canon, Tokyo, Japan), with resolutions of 4000 × 3000, 4032 × 3024, and 4320 × 3240 pixels, respectively. To ensure the generalizability of the variety identification model across different environmental backgrounds, canopy images were collected across various seasons (spring and summer) (Figure 3a), lighting conditions (uniform and non-uniform) (Figure 3b), shooting angles (side and top views) (Figure 3c), and shooting distances (long and close) (Figure 3d). Specifically, images were captured between 7:00 a.m. and 6:00 p.m. under various weather conditions (sunny and cloudy), at angles of 30° to 90°, and at distances of 20 to 70 cm from the tea plant canopy. A total of 6600 canopy images were obtained, 600 per variety.

Figure 2.

Experimental site and selected tea plant varieties. (a) and (b) represent the experimental site and the selected tea plant varieties, respectively.

Figure 3.

Tea plant canopy image samples under different conditions. (a–d) represent the canopy image samples captured under different seasons, light conditions, shooting angles, and distances.

To objectively evaluate the model’s performance in real-world scenarios, 120 images were randomly selected from each variety (1320 images in total) to form the test set. These images were excluded from training and remained unseen by the trained models. The remaining 480 images per variety were randomly divided into training and validation sets at three split ratios, 70:30, 80:20, and 90:10, yielding 336 and 144, 384 and 96, and 432 and 48 training and validation images per variety, respectively. The training set was used to optimize the network weights through backpropagation and gradient descent to minimize the loss function, while the validation set was used to monitor generalization during training, guide the tuning of training settings, and detect overfitting. For each split ratio, the images in the training, validation, and test sets were resized to each of three sizes (224 × 224, 416 × 416, and 608 × 608) using nearest-neighbor interpolation. In total, nine datasets covering all combinations of split ratio and image size were constructed.
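The per-variety split described above can be sketched as follows. This is a minimal illustration, assuming each variety’s images are identified by a list of file names and that a fixed random seed is used so that the same 120 test images are held out for every split ratio; the function name `split_variety`, the seed, and the file names are hypothetical and not taken from the original pipeline.

```python
import random

TEST_PER_VARIETY = 120  # held-out test images per variety, as in the text


def split_variety(image_ids, train_ratio, seed=0):
    """Split one variety's images into (train, val, test) lists.

    A fixed seed reproduces the same shuffle, so the test images are
    identical across split ratios; only the train/val boundary moves.
    """
    ids = sorted(image_ids)           # deterministic starting order
    random.Random(seed).shuffle(ids)  # seeded, reproducible shuffle
    test, rest = ids[:TEST_PER_VARIETY], ids[TEST_PER_VARIETY:]
    n_train = round(len(rest) * train_ratio)
    return rest[:n_train], rest[n_train:], test


if __name__ == "__main__":
    # Hypothetical file names for one variety with 600 images.
    images = [f"variety01_{i:03d}.jpg" for i in range(600)]
    for ratio in (0.7, 0.8, 0.9):
        train, val, test = split_variety(images, ratio)
        print(ratio, len(train), len(val), len(test))
```

Running it for one variety reproduces the per-variety counts given in the text (336/144, 384/96, and 432/48, each with 120 test images); applying it to all 11 varieties and pooling the lists gives the full datasets.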

2.2. Convolutional Neural Network

Convolutional neural networks (CNNs) are a class of deep learning models specifically designed to process grid-structured data, such as images, videos, and audio. In classification tasks, CNNs extract informative features layer by layer through stacked convolutional layers, pooling layers, and activation functions []. This hierarchical feature extraction mechanism underlies their strong representation capabilities. The lower layers primarily capture low-level visual features, such as color, texture, and edges, and progressively higher-level semantic features are learned as network depth increases.
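To make the stacking of convolution, activation, and pooling concrete, the NumPy sketch below applies one hand-picked 3 × 3 vertical-edge kernel, a ReLU, and 2 × 2 max pooling to a toy 10 × 10 image. It illustrates the operations only; real CNN layers learn many multi-channel kernels, and the kernel and image here are hypothetical.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 'valid' cross-correlation, the operation CNN layers call convolution."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)


def max_pool2x2(x):
    """2 x 2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    h, w = (x.shape[0] // 2) * 2, (x.shape[1] // 2) * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


if __name__ == "__main__":
    image = np.zeros((10, 10))
    image[:, 5:] = 1.0                             # dark left half, bright right half
    kernel = np.array([[-1.0, 0.0, 1.0]] * 3)      # responds to left-to-right brightening
    features = max_pool2x2(relu(conv2d_valid(image, kernel)))
    print(features.shape)                          # (4, 4)
    print(features)
```

The 10 × 10 input shrinks to an 8 × 8 feature map after the unpadded convolution and to 4 × 4 after pooling, while the nonzero responses stay at the boundary between the dark and bright halves. Repeating such stages is what allows deeper layers to combine low-level cues into higher-level features.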

Four CNN architectures were selected for developing tea variety identification models in this study: VGG [], GoogleNet [], ResNet [], and ShuffleNetV2 []. The VGG series, developed by the Visual Geometry Group, features a simple architecture composed primarily of stacked 3 × 3 convolutions. GoogleNet, proposed by Google Research, expands network width through multiscale convolution kernels and pooling operations in its Inception module, enhancing performance by fusing features from different scales. The ResNet series, developed by Microsoft Research, consists primarily of stacked residual blocks. Skip connections within these blocks allow input signals to bypass intermediate layers, so that low-level features propagate directly to deeper layers, improving information flow and mitigating gradient degradation. The ShuffleNetV2 series is an efficient, lightweight, real-time architecture proposed by Megvii as an improvement over ShuffleNetV1. It uses channel shuffle operations, introduced in ShuffleNetV1 to overcome the isolation of channel groups in group convolutions, to exchange information between channel groups and enhance feature fusion while maintaining high computational efficiency, which makes it particularly suitable for deployment on resource-constrained devices.
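The channel shuffle operation mentioned above is a fixed permutation of channels rather than a learned layer. The NumPy sketch below uses the common reshape, transpose, reshape formulation on an (N, C, H, W) array; the function name and toy input are illustrative.

```python
import numpy as np


def channel_shuffle(x, groups):
    """Interleave channels across groups for an (N, C, H, W) array."""
    n, c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    x = x.reshape(n, groups, c // groups, h, w)   # split channels into groups
    x = x.transpose(0, 2, 1, 3, 4)                # swap group and within-group axes
    return x.reshape(n, c, h, w)                  # flatten back to C channels


if __name__ == "__main__":
    x = np.arange(6).reshape(1, 6, 1, 1)          # channel k holds the value k
    print(channel_shuffle(x, groups=2).ravel())   # [0 3 1 4 2 5]
```

With six channels in two groups, channels 0 to 2 (first group) and 3 to 5 (second group) are interleaved as 0, 3, 1, 4, 2, 5, so the next group-wise operation receives channels originating from both groups.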

2.3. Transfer Learning

Compared with traditional machine learning algorithms, deep learning-based network models contain a large number of parameters, which enables them to achieve excellent performance in complex application scenarios []. In the output stage of a network model, a fully connected layer maps the final extracted feature representation to a vector of logits, one per task category. These logits are then transformed into a probability distribution by the Softmax function, and the category with the highest probability is taken as the prediction. To achieve satisfactory performance, training typically requires a large amount of labeled image data and substantial computing resources so that the network can fully learn task-relevant features. This requirement presents a challenge for developing a tea plant variety identification model. Transfer learning provides an effective solution by leveraging the general knowledge (e.g., edge and texture features) learned by models pre-trained on large datasets to provide a favorable initialization for specific tasks []. In this study, transfer learning was employed to develop the tea plant variety identification model. Pre-trained weights from ImageNet [] were used to initialize the network parameters, and the final fully connected layer was modified from 1000 to 11 output nodes to match the number of tea plant varieties under investigation. This approach enabled efficient training and improved model generalization despite limited domain-specific data.
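The output stage and head replacement described above can be sketched with NumPy. This is a minimal illustration, assuming a 2048-dimensional pooled feature vector (the width of ResNet50’s final stage) and random numbers standing in for backbone features. In PyTorch with torchvision’s ResNet models, the corresponding replacement is typically written as `model.fc = torch.nn.Linear(model.fc.in_features, 11)`; the uniform initialization range used here is an assumption chosen to resemble PyTorch’s default for `nn.Linear`.

```python
import numpy as np

FEATURE_DIM = 2048       # length of ResNet50's globally pooled feature vector
NUM_TEA_VARIETIES = 11   # replaces the 1000 ImageNet classes


def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def new_classifier_head(in_features, num_classes, rng):
    """Freshly initialized fully connected layer, uniform in +-1/sqrt(in_features)."""
    bound = 1.0 / np.sqrt(in_features)
    weight = rng.uniform(-bound, bound, size=(num_classes, in_features))
    bias = rng.uniform(-bound, bound, size=num_classes)
    return weight, bias


def predict(features, weight, bias):
    """Map pooled features to logits, then to probabilities and predicted class indices."""
    logits = features @ weight.T + bias
    probs = softmax(logits)
    return probs, probs.argmax(axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for features a pre-trained backbone would produce for 4 images.
    features = rng.standard_normal((4, FEATURE_DIM))
    weight, bias = new_classifier_head(FEATURE_DIM, NUM_TEA_VARIETIES, rng)
    probs, preds = predict(features, weight, bias)
    print(probs.shape, preds)
```

Only the head is new; in transfer learning the backbone that produces `features` keeps its pre-trained weights as the starting point and is then fine-tuned together with the head.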

2.4. Training Process Optimization

To improve the classification performance of deep learning-based models in specific application scenarios, this study optimized the training process. Specifically, the effects of different optimizers, input image sizes, dataset split ratios, and training parameters (Table 1) on model identification performance were analyzed to determine the optimal training conditions. In deep learning frameworks, the optimizer is the algorithm that updates model parameters to minimize the loss function: it computes the gradient of the loss with respect to the parameters and adjusts them according to specific update rules, guiding the network toward an optimal solution []. An appropriate input image size preserves enough detail for the model to distinguish categories, while an appropriate split ratio balances the amount of training data against the reliability of validation feedback, which helps prevent overfitting. Training parameters (including learning rate, batch size, and number of epochs) have been shown in previous studies to significantly affect model performance [,]. However, most of these studies analyzed the effects of these parameters in isolation and incrementally, without considering how their interactions jointly affect model performance.

Table 1.

Model training process settings.

2.5. Experimental Platform Configuration

The experiments in this study were conducted on a 64-bit Windows 10 PC with 48 GB of RAM, an AMD Ryzen 7 3700X CPU (3.6 GHz, 8 cores) (Advanced Micro Devices, Santa Clara, CA, USA), and an NVIDIA GeForce RTX 3080 Ti GPU (NVIDIA, Santa Clara, CA, USA) with 12 GB of memory. The development environment consisted of Python 3.10.13, PyTorch 1.13.1, and CUDA 11.7.

2.6. Performance Evaluation Metrics

This study evaluated model performance using both identification metrics, including accuracy, precision, specificity, and F1-score, and computational metrics, including model size, number of parameters, floating-point operations (FLOPs), and frames per second (FPS). Accuracy quantifies the proportion of correctly classified samples among all samples, reflecting the overall performance of the model. Precision measures the reliability of predictions for each variety and is defined as the number of correctly predicted samples for a given variety divided by the number of all samples predicted as that variety. Specificity assesses the model’s ability to correctly reject non-target categories, i.e., the proportion of samples from other varieties that are not misclassified as the target variety. The F1-score, the harmonic mean of precision and recall, is a comprehensive metric that reflects the model’s capability to reduce both false positives and false negatives. The formulas for the identification performance metrics are as follows:
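A formulation consistent with these definitions and with the symbols defined below, assuming that per-variety scores are macro-averaged (each variety weighted equally), is given here. The per-variety F1 term is written in the form 2TP/(2TP + FP + FN), which equals the harmonic mean of that variety’s precision and recall; some implementations instead combine macro-averaged precision and recall, which can give slightly different values.

$$
\begin{aligned}
\text{Accuracy} &= \frac{1}{N}\sum_{i=1}^{n} TP_i \\
\text{Precision} &= \frac{1}{n}\sum_{i=1}^{n} \frac{TP_i}{TP_i + FP_i} \\
\text{Specificity} &= \frac{1}{n}\sum_{i=1}^{n} \frac{TN_i}{TN_i + FP_i} \\
\text{F1} &= \frac{1}{n}\sum_{i=1}^{n} \frac{2\,TP_i}{2\,TP_i + FP_i + FN_i}
\end{aligned}
$$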

where $n$ is the number of categories, which corresponds to the 11 tea plant varieties in this study; $N$ is the total number of samples used for performance evaluation; $TP_i$ is the number of samples correctly predicted as belonging to the $i$-th tea plant variety; $FP_i$ is the number of samples from other varieties incorrectly predicted as the $i$-th variety; $TN_i$ is the number of samples correctly identified as not belonging to the $i$-th variety; and $FN_i$ is the number of samples from the $i$-th variety incorrectly predicted as other varieties.
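All of the per-variety counts defined above can be read off a confusion matrix. The NumPy sketch below computes them and macro-averages the per-variety scores with equal weight; the macro-averaging, the function names, and the toy three-variety matrix are assumptions for illustration, and a variety that is never predicted is given a score of 0 rather than an undefined ratio.

```python
import numpy as np


def _safe_div(num, den):
    """Element-wise num/den, returning 0 where den == 0."""
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)


def macro_metrics(cm):
    """cm[i, j] = number of samples of true variety i predicted as variety j."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()                 # N
    tp = np.diag(cm)                 # TP_i
    fp = cm.sum(axis=0) - tp         # predicted as i, actually another variety
    fn = cm.sum(axis=1) - tp         # actually i, predicted as another variety
    tn = total - tp - fp - fn        # neither actually i nor predicted as i
    return {
        "accuracy": tp.sum() / total,
        "precision": _safe_div(tp, tp + fp).mean(),
        "specificity": _safe_div(tn, tn + fp).mean(),
        "f1": _safe_div(2 * tp, 2 * tp + fp + fn).mean(),
    }


if __name__ == "__main__":
    toy_cm = [[8, 1, 1],
              [0, 9, 1],
              [1, 0, 9]]
    for name, value in macro_metrics(toy_cm).items():
        print(f"{name}: {value:.4f}")
```

For the study’s setting, `cm` would be an 11 × 11 matrix built from the 1320 test predictions.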

3. Results and Analysis

3.1. Performance of Different CNN-Based Models

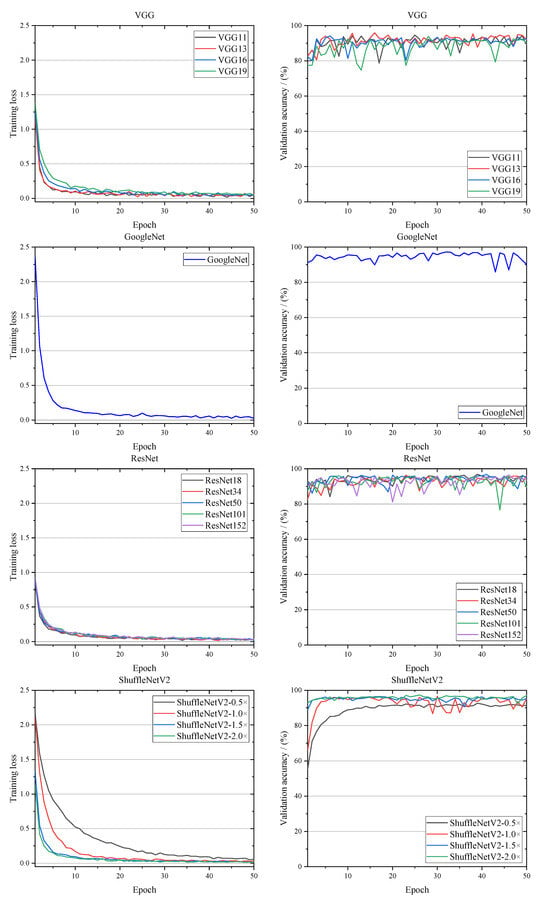

To develop a high-performance model for identifying tea plant varieties from canopy images during the harvest period, this study fine-tuned different CNNs using transfer learning. A total of 14 CNN architectures were selected: VGG (VGG11, VGG13, VGG16, and VGG19), GoogleNet, ResNet (ResNet18, ResNet34, ResNet50, ResNet101, and ResNet152), and ShuffleNetV2 (ShuffleNetV2-0.5×, ShuffleNetV2-1.0×, ShuffleNetV2-1.5×, and ShuffleNetV2-2.0×). During training, input images were resized to 416 × 416, and the training and validation sets were randomly split at a ratio of 80:20. The AdamW optimizer was used with a batch size of 4, an initial learning rate of 0.0001, and 50 epochs. The training process of the different models is shown in Figure 4. Most models exhibited a gradual decrease in training loss and converged. An exception was ShuffleNetV2-0.5×, whose loss was still declining at the 50th epoch, likely because its relatively simple network structure requires additional iterations to fully fit the training data. Benefiting from transfer learning, the validation accuracy of all models increased rapidly during the initial epochs and then stabilized with minor fluctuations, demonstrating the effectiveness of leveraging pre-trained weights for this task.

Figure 4.

Training process of different models.

The performance of the different models is shown in Table 2. All models achieved their highest accuracy on the training set, as their parameters were directly optimized on the training data through backpropagation and gradient descent. Validation and test set accuracies were comparable; because the test set consisted of unseen images, this indicates strong generalization across all models. In principle, increasing model complexity (e.g., depth or width) enhances feature extraction ability. For example, within the ShuffleNetV2 series, accuracy improved from 92.50% for ShuffleNetV2-0.5× to 97.05% for ShuffleNetV2-2.0×, accompanied by an increase in model size from 1.49 MB to 20.72 MB. However, excessive complexity can make optimization more difficult (e.g., through vanishing or exploding gradients) and increase the risk of overfitting, reducing generalization. This trend is evident in the VGG series: accuracy increased from 94.85% for VGG11 to 96.52% for VGG13 but declined to 94.24% for VGG19. Among these models, ShuffleNetV2-0.5× was the most deployment-friendly, with a model size of 1.49 MB, 0.15 G FLOPs, and 0.35 M parameters, but it yielded the lowest identification performance, with an accuracy of 92.50%. By contrast, VGG19 exhibited the highest complexity, with a model size of 532.69 MB, 67.61 G FLOPs, and 139.63 M parameters, imposing substantial demands on storage and computational resources during deployment. Notably, ResNet50 achieved the best identification performance, with an accuracy of 97.12%, while its model size, FLOPs, and parameters were only 16.91%, 21.08%, and 16.69% of those of VGG19, respectively.

Table 2.

Identification performance of different models.

3.2. Training Process Optimization

Through a comprehensive comparison and evaluation of the different models’ performance, ResNet50 was selected as the baseline network for subsequent experiments. To further improve its effectiveness in identifying tea plant varieties during the harvest period, this study systematically evaluated the impact of different optimizers, input image sizes, dataset split ratios, and training parameters on model performance.

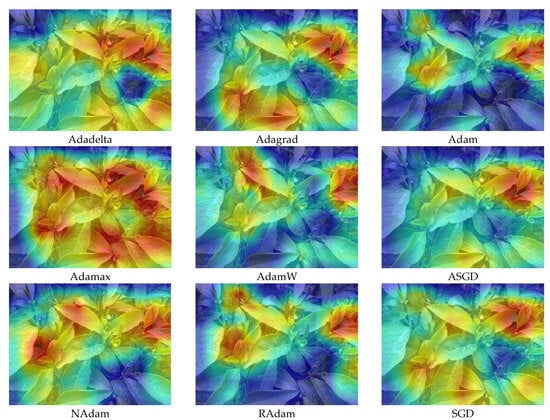

3.2.1. Optimizers

In this study, models trained with nine optimizers were compared: Adadelta, Adagrad, Adam, Adamax, AdamW, ASGD, NAdam, RAdam, and SGD (Table 3). Among these, Adamax achieved the best identification performance, with an accuracy of 98.03%, whereas Adadelta yielded the worst, with an accuracy of 85.61%. During the harvest period, tea shoot morphology varies with light conditions, nutritional status, and shooting angle. Consequently, canopy images of different tea plant varieties may exhibit similar features, which can cause gradient oscillations during training with optimizers that use simple update rules, such as Adadelta and SGD, and prevent the model from converging to an optimal solution. Adamax is a variant of Adam that replaces the second-moment estimate with an exponentially weighted infinity norm (a decaying maximum of past gradient magnitudes), which yields a simpler bound on the effective learning rate and ties each parameter’s step size to the largest gradient component observed during training. This mechanism may allow it to better learn fine-grained differences between visually similar varieties. The regions of interest for the different models were visualized using gradient-weighted class activation mapping (Grad-CAM) [] (Figure 5). The heatmaps showed that all models focused primarily on tea shoot regions and paid less attention to background elements such as older leaves and stems. Notably, the Adamax-trained model exhibited the broadest attention distribution and the highest intensity, consistent with a stronger capability to extract discriminative features for variety classification. Adamax was therefore selected as the optimizer. Replacing AdamW with Adamax in ResNet50 increased identification accuracy from 97.12% to 98.03%, an improvement of 0.91 percentage points.
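The Adamax update referred to above can be written in a few lines. The NumPy sketch below follows the Adamax rule from the Adam paper (momentum estimate, decaying infinity norm, bias correction of the momentum term only) and keeps the denominator positive by adding a small `eps` to the gradient magnitude, similar to the guard in PyTorch’s `torch.optim.Adamax`. The quadratic objective, the learning rate of 0.05, and the function names are illustrative assumptions, not the study’s training setup.

```python
import numpy as np


def adamax_step(theta, grad, m, u, t, lr=0.002, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adamax update; t is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad              # first-moment (momentum) estimate
    u = np.maximum(beta2 * u, np.abs(grad) + eps)     # exponentially weighted infinity norm
    step_size = lr / (1.0 - beta1 ** t)               # bias correction for m only
    theta = theta - step_size * m / u
    return theta, m, u


def minimize_quadratic(target, steps=2000, lr=0.05):
    """Minimize f(theta) = ||theta - target||^2 starting from zeros."""
    target = np.asarray(target, dtype=float)
    theta = np.zeros_like(target)
    m = np.zeros_like(target)
    u = np.zeros_like(target)
    for t in range(1, steps + 1):
        grad = 2.0 * (theta - target)                 # gradient of the quadratic
        theta, m, u = adamax_step(theta, grad, m, u, t, lr=lr)
    return theta


if __name__ == "__main__":
    print(minimize_quadratic([3.0, -1.0, 0.5]))
```

Because the momentum term is divided by a running maximum of gradient magnitudes, each parameter moves by roughly at most `lr` per step regardless of the raw gradient scale; this is the simpler step-size bound that distinguishes Adamax from Adam.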

Table 3.

Identification performance of trained models with different optimizers.

Figure 5.

Heatmap visualization of trained models with different optimizers.
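The class-activation weighting behind heatmaps such as those in Figure 5 can be sketched in NumPy. Following the general Grad-CAM recipe, each channel of a chosen convolutional layer’s output is weighted by the spatial average of the class score’s gradient with respect to that channel, the weighted channels are summed, and negative values are clipped. In this minimal sketch the activations and gradients are assumed to have been obtained already (for example, through forward and backward hooks on the last convolutional stage), the toy arrays are hypothetical, and upsampling the map to the input resolution and overlaying it on the image are omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one image and one class.

    activations, gradients: arrays of shape (K, H, W) from one convolutional layer.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))              # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, activations, axes=1)   # sum_k alpha_k * A_k
    cam = np.maximum(cam, 0.0)                         # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam


if __name__ == "__main__":
    acts = np.zeros((2, 3, 3))
    acts[0, 0, 0] = 1.0                                # channel 0 fires top-left
    acts[1, 2, 2] = 1.0                                # channel 1 fires bottom-right
    grads = np.stack([np.full((3, 3), 0.5), np.full((3, 3), -0.5)])
    print(grad_cam(acts, grads))
```

In the toy example only the channel whose gradient supports the class contributes, so the map highlights the top-left cell and suppresses the bottom-right one.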

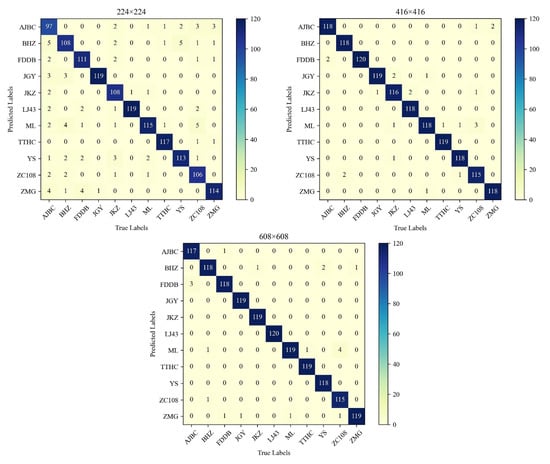

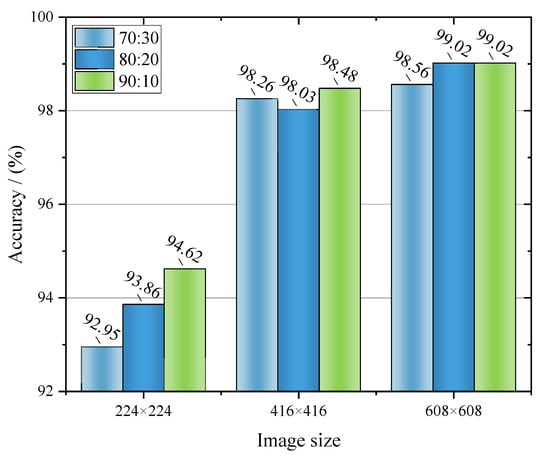

3.2.2. Input Image Size and Dataset Split Ratio

CNNs extract image features hierarchically while progressively downsampling the input, so the input resolution determines how much fine-grained detail reaches the deeper layers, and the dataset split ratio determines how much data is available for learning versus validation. Both factors should therefore be evaluated when developing deep learning–based classification models. Based on the above results, Adamax was adopted to update model parameters during training. To determine the optimal combination of input image size and dataset split ratio, this study compared training outcomes for three input image sizes (224 × 224, 416 × 416, and 608 × 608) and three training-to-validation split ratios (70:30, 80:20, and 90:10) (Table 4). Figure 6 illustrates the confusion matrices for models trained with different input sizes at a training-to-validation split ratio of 70:30. When smaller images are used, the model receives limited fine-grained information, making it difficult to distinguish tea plant varieties with high phenotypic similarity. Increasing the input size allows the model to capture more discriminative features during training, thereby improving classification accuracy. However, higher-resolution images also increase computational demands and reduce real-time inference speed: as the input size increased from 224 × 224 to 608 × 608, FLOPs rose from 4.13 G to 30.44 G and FPS fell from 76.81 to 37.90.
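The growth in FLOPs with input size follows from how convolution cost scales. For a backbone that is convolutional up to a global pooling layer, as ResNet50 is, each convolution’s cost is proportional to the number of spatial positions in its output, which grows with the square of the input side length, while the small cost of the final fully connected layer stays fixed. The sketch below applies this approximation to the reported 224 × 224 value; the function is hypothetical and ignores padding and rounding effects at stride-2 layers.

```python
def scale_flops(flops_ref, side_ref, side_new):
    """Approximate FLOPs at a new square input size, assuming cost grows with side^2."""
    return flops_ref * (side_new / side_ref) ** 2


if __name__ == "__main__":
    for side in (224, 416, 608):
        print(f"{side} x {side}: ~{scale_flops(4.13, 224, side):.2f} G FLOPs")
```

This gives roughly 14.24 G at 416 × 416 and 30.43 G at 608 × 608, in line with the reported 30.44 G. By contrast, FPS fell by only about a factor of two (76.81 to 37.90) while FLOPs grew by a factor of about 7.4, so inference speed in this setup did not scale in proportion to FLOPs.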

Table 4.

Effects of image size and dataset split ratio on model identification performance.

Figure 6.

Confusion matrix for trained models with different image sizes.

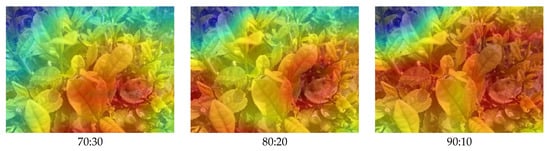

A well-chosen dataset split ratio is critical for balancing model training and validation. The training set must contain enough samples for the network to learn key discriminative features, while the validation set provides an estimate of generalization performance during training and helps detect overfitting []. In this study, the impact of different training-to-validation split ratios on identification performance was evaluated across multiple input image sizes. The results showed that the model’s accuracy varied with the split ratio, reflecting the trade-off between the quantity of training data and the reliability of validation feedback (Figure 7).

Figure 7.

Identification accuracy of trained models with different image sizes and dataset split ratios.

When the input size was 224 × 224, accuracy improved consistently as the proportion of training data increased, rising from 92.95% to 94.62%. This trend likely reflects the limited fine-grained information in smaller images, which necessitates a larger training set to capture sufficient detail for accurate classification. With more training samples, the model was able to learn a richer set of discriminative features for variety identification (Figure 8). For input images of 416 × 416, accuracy first decreased and then increased as the training set proportion grew: it fell from 98.26% at a split ratio of 70:30 to 98.03% at 80:20 before rising to 98.48% at 90:10. This pattern may be attributed to the balance between training and validation data. With a split ratio of 70:30, the relatively large validation set could better reflect generalization performance and guide parameter tuning, partially offsetting the underfitting risk of having fewer training samples. When the validation set became smaller at a split ratio of 80:20, the reduced feedback may have caused the model to converge to a local optimum. At a split ratio of 90:10, the larger training set enabled the model to learn more robust feature representations, improving accuracy. For an input size of 608 × 608, accuracy first increased with a larger training set and then plateaued. Larger images inherently provide more detailed visual features, and once a sufficient number of training samples is available, additional data has minimal impact on performance.
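Because the test set is fixed at 1320 images, each reported accuracy corresponds to a whole number of misclassified images, and one image is worth about 0.076 percentage points. The sketch below converts accuracies to error counts, assuming the accuracies discussed here are computed on that 1320-image test set; it is a reading aid, not part of the original analysis, and the helper name is hypothetical.

```python
TEST_IMAGES = 11 * 120  # 11 varieties x 120 held-out images = 1320


def misclassified(accuracy_percent, n_images=TEST_IMAGES):
    """Number of wrong predictions implied by an accuracy reported to two decimals."""
    correct = round(accuracy_percent / 100 * n_images)
    return n_images - correct


if __name__ == "__main__":
    for split, acc in (("70:30", 98.26), ("80:20", 98.03), ("90:10", 98.48)):
        print(f"416 x 416, {split}: {acc}% -> {misclassified(acc)} errors")
```

For the 416 × 416 results this gives 23, 26, and 20 misclassified images, so the differences between split ratios amount to a handful of test images; the 224 × 224 range (92.95% to 94.62%) corresponds to 93 versus 71 errors.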

Figure 8.

Heatmaps of trained models with different dataset split ratios for an image size of 224 × 224.

The model achieved the highest identification performance when trained with an input size of 608 × 608 and a split ratio of 80:20. Adjusting the input image size and dataset split ratio thus further improved the model’s identification accuracy from 98.03% to 99.02%.

3.2.3. Training Parameters

A suitable configuration of training parameters is essential for obtaining a high-performance model. Accordingly, this study systematically evaluated the effects of learning rate, batch size, and number of epochs on model performance. In these experiments, Adamax was employed as the optimizer, the input image size was set to 608 × 608, and the training and validation sets were split at a ratio of 80:20. The parameters examined included learning rates of 0.001, 0.0001, and 0.00001; batch sizes of 4, 8, and 16; and 20, 50, and 80 epochs (Table 5). The learning rate determines the step size of parameter updates during training. Excessively high values can cause oscillations or prevent convergence, whereas overly low values slow convergence and may leave the model trapped in poor local minima. The batch size defines the number of samples processed in each iteration. Large batch sizes produce smoother gradient estimates, which can lead the model to converge to sharp minima, whereas small batch sizes may introduce excessive gradient noise. The number of epochs is the number of complete passes over the training dataset; too few epochs may hinder full feature learning, whereas too many can result in overfitting and reduced generalization.
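The nine parameter levels above combine into a 3 × 3 × 3 grid of 27 training runs. The sketch below enumerates that grid and, as a rough proxy for training cost, counts optimizer updates per run. It assumes the 80:20 split (384 training images per variety, 4224 in total), that every training image is used once per epoch, and that a final partial batch still counts as one update; the helper names are hypothetical.

```python
from itertools import product

LEARNING_RATES = (1e-3, 1e-4, 1e-5)
BATCH_SIZES = (4, 8, 16)
EPOCHS = (20, 50, 80)
TRAIN_IMAGES = 384 * 11  # 80:20 split: 384 training images x 11 varieties = 4224


def num_updates(batch_size, epochs, n_train=TRAIN_IMAGES):
    """Optimizer steps in one run, counting a final partial batch as a step."""
    steps_per_epoch = -(-n_train // batch_size)  # ceiling division
    return steps_per_epoch * epochs


grid = list(product(LEARNING_RATES, BATCH_SIZES, EPOCHS))

if __name__ == "__main__":
    print(len(grid), "runs")
    for lr, bs, ep in grid:
        print(f"lr={lr:g} batch={bs} epochs={ep}: {num_updates(bs, ep)} updates")
```

Under these assumptions, a batch size of 8 with 20 epochs amounts to 10,560 updates and 20 passes over the training images, whereas a batch size of 4 with 50 epochs (the setting used for the model comparison in Section 3.1) amounts to 52,800 updates and 2.5 times as many passes.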

Table 5.

Effect of training parameters on model identification performance.

The effects of learning rate, batch size, and number of epochs on model performance are summarized in Table 5. At a learning rate of 0.001, identification performance generally improved with increasing batch size across the different epoch settings. For batch sizes of 8 and 16, performance increased with the number of epochs, whereas for a batch size of 4 it first improved and then declined. The highest accuracy at this learning rate, 98.64%, was achieved with a batch size of 16 and 80 epochs. At a learning rate of 0.0001, no consistent relationship was observed between performance and either batch size or number of epochs: with a batch size of 4, performance improved as epochs increased; with a batch size of 8, it declined as epochs increased; and with a batch size of 16, it first declined and then improved. The best performance at this learning rate, an accuracy of 99.32%, was obtained with a batch size of 8 and 20 epochs. At a learning rate of 0.00001, performance first improved and then declined as epochs increased for a given batch size, and for a given number of epochs it decreased as the batch size increased.

These results indicate that model performance varies considerably across training parameter configurations. At a relatively high learning rate, larger batch sizes and more epochs were more suitable, whereas at lower learning rates, smaller batch sizes tended to yield better results. A comprehensive comparison of all combinations identified the optimal configuration as a learning rate of 0.0001, a batch size of 8, and 20 epochs. Under these conditions, the model achieved an identification accuracy of 99.32% for tea plant varieties, which not only improved identification performance but also reduced training time, since only 20 epochs were required.
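The selection step can be expressed as a small helper. This sketch ranks configurations by accuracy and, as an assumed tie-break, prefers fewer epochs because they shorten training. Only the two accuracies quoted in the text are filled in; the other cells of Table 5 are deliberately left out rather than guessed, and the helper name is illustrative.

```python
from typing import Dict, Tuple

# (learning_rate, batch_size, epochs)
Config = Tuple[float, int, int]


def select_best(results: Dict[Config, float]) -> Config:
    """Return the configuration with the highest accuracy.

    Ties are broken in favor of fewer epochs (shorter training), an assumed rule.
    """
    return max(results, key=lambda cfg: (results[cfg], -cfg[2]))


# Only the two accuracies (%) quoted in the text; other Table 5 cells are omitted.
reported: Dict[Config, float] = {
    (1e-3, 16, 80): 98.64,  # best setting at learning rate 0.001
    (1e-4, 8, 20): 99.32,   # best setting at learning rate 0.0001
}

print(select_best(reported))  # (0.0001, 8, 20)
```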

3.3. Identification Performance Under Different Environmental Conditions

To validate the suitability of the model for complex field conditions, this study analyzed its identification performance across seasons and lighting conditions (Table 6). As shown in Figure 3, during the spring harvest the new leaves and buds appear light green, while the older leaves are darker, and some leaves have withered due to early spring frosts. During the summer harvest, new leaves and buds turn dark green, resembling mature leaves. This seasonal variation is attributable to differences in pigment synthesis: under the mild sunlight and lower temperatures of spring, tea plants accumulate large amounts of chlorophyll a, carotenoids, and anthocyanins, whereas in summer, intense sunlight promotes the synthesis of chlorophyll b. Despite these differences, the model’s identification performance remained consistent across seasons, with accuracies of 99.40% in spring and 99.24% in summer.

Under uniform illumination, detailed features in canopy images are fully preserved. Under uneven lighting, in contrast, light spots and shadows cause partial information loss, preventing the model from fully extracting the features critical for distinguishing varieties. Lighting therefore affected performance more than season did: accuracy was 99.85% under uniform illumination and 98.79% under non-uniform illumination.
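Per-environment accuracies such as those in Table 6 come from grouping test predictions by an environment tag and scoring each group separately. The sketch below assumes that each test record carries a hypothetical condition label; the helper names and toy records are illustrative, not the authors’ code.

```python
from collections import defaultdict


def accuracy_by_condition(records):
    """Accuracy (%) computed separately for each environmental condition.

    records: iterable of (condition, true_label, predicted_label) tuples,
    where condition is a hypothetical tag such as "spring" or "non-uniform".
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, y_true, y_pred in records:
        total[condition] += 1
        correct[condition] += int(y_true == y_pred)
    return {c: 100.0 * correct[c] / total[c] for c in total}


def pooled_accuracy(per_condition, counts):
    """Recombine subgroup accuracies (%) into an overall accuracy weighted by subgroup size."""
    n = sum(counts.values())
    return sum(per_condition[c] * counts[c] for c in counts) / n


# Toy records (not study data): one AJBC image misread as BHZ under uneven light.
toy = [
    ("uniform", "BHZ", "BHZ"),
    ("uniform", "ZC108", "ZC108"),
    ("non-uniform", "AJBC", "BHZ"),
    ("non-uniform", "AJBC", "AJBC"),
]
print(accuracy_by_condition(toy))  # {'uniform': 100.0, 'non-uniform': 50.0}
```

When the two subsets of a partition are the same size, the pooled accuracy is simply their mean. Both partitions reported here average to the overall 99.32%, since (99.40 + 99.24)/2 = (99.85 + 98.79)/2 = 99.32, which is consistent with, though not proof of, each partition dividing the test set into roughly equal halves.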

Table 6.

Identification performance of the model under different environments.

4. Discussion

4.1. Comparison with Methods Proposed in Other Studies

Compared with other studies on tea plant variety identification, the method proposed in this study achieves higher accuracy (Table 7). Previous research [,,] identified tea plant varieties using spectral analysis, in which spectral information from leaves or canopies was processed to select characteristic wavelengths or spectral indices as inputs for machine learning classifiers. However, the chemical composition of tea plants varies with growth stage and nutritional status, and spectral data are highly sensitive to lighting conditions. Consequently, spectral analysis–based methods show lower accuracy in tea plant variety identification. Moreover, integrating spectral acquisition devices into an intelligent tea harvesting platform increases both deployment complexity and cost. Tea plants reproduce through both sexual and asexual means, resulting in some varieties exhibiting highly similar leaf phenotypes, which makes leaf image–based models less effective for accurate variety discrimination [,]. In this study, variety identification focuses on the harvest period, when canopy images are less complex than those captured across the full growth cycle (within one year) [], enabling the developed model to achieve higher accuracy. In addition, image processing–based variety identification models can share camera sensors with tea bud detection or positioning systems on intelligent tea harvesting platforms, thereby enhancing deployment convenience.

Table 7.

Comparison results with other studies.

4.2. Comparison with Other Convolutional Neural Network Models

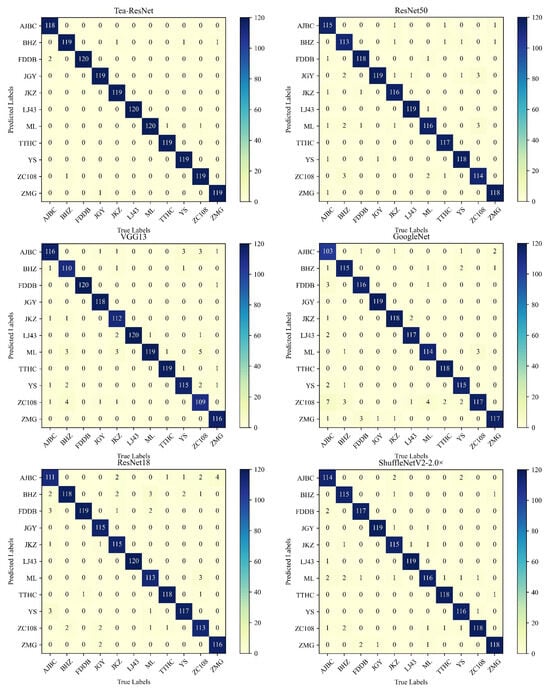

This study achieved accurate identification of tea plant varieties from harvest-period canopy images using deep learning, and systematic optimization of the training strategy improved performance further. With ResNet50 trained under the optimal strategy (Adamax optimizer, 608 × 608 input images, an 80:20 training-to-validation split, a learning rate of 0.0001, a batch size of 8, and 20 epochs), the model achieved an accuracy of 99.32%. This optimized model, named Tea-ResNet, was compared with several models listed in Table 2 (Figure 9). The similarity among canopy images of different tea varieties, together with complex lighting and environmental backgrounds, is the main factor limiting identification performance. For ResNet50, VGG13, GoogleNet, ResNet18, and ShuffleNetV2-2.0×, the varieties with the lowest identification performance in the test set were BHZ, ZC108, AJBC, AJBC, and AJBC, respectively, with 113, 109, 103, 111, and 114 correctly identified samples. In contrast, Tea-ResNet correctly identified 118, 119, and 119 samples for AJBC, BHZ, and ZC108, respectively. Optimizing the training process thus enabled the model to capture finer discriminative details between varieties, reducing misidentification.
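Adamax, the optimizer used for Tea-ResNet, is the infinity-norm variant of Adam introduced by Kingma and Ba (2015). The scalar sketch below (one parameter, not a full training loop) illustrates why its learning rate behaves like a step size: the bias-corrected gradient average is divided by a running maximum of gradient magnitudes, so under a steady gradient each update moves the parameter by about `lr`, whatever the gradient’s scale. The class name is hypothetical; β1 = 0.9 and β2 = 0.999 are the defaults proposed for Adamax, and the small `eps` term is an added safeguard against division by zero, not part of the original formulation.

```python
class Adamax:
    """Scalar Adamax optimizer (Kingma and Ba, 2015), the infinity-norm variant of Adam.

    beta1/beta2 follow the defaults proposed for Adamax; eps is an added safeguard
    against division by zero. lr=1e-4 mirrors the learning rate chosen in this study.
    """

    def __init__(self, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # exponential moving average of gradients (first moment)
        self.u = 0.0  # exponentially weighted infinity norm of past gradients
        self.t = 0    # step counter used for bias correction

    def step(self, theta, grad):
        """Return the parameter value after one Adamax update."""
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.u = max(self.beta2 * self.u, abs(grad) + self.eps)
        step_size = self.lr / (1 - self.beta1 ** self.t)  # corrects the zero-initialized m
        return theta - step_size * self.m / self.u


if __name__ == "__main__":
    # Gradients differing by six orders of magnitude give nearly the same first step.
    for g in (1000.0, 0.001):
        opt = Adamax(lr=1e-4)
        print(g, opt.step(0.0, g))  # both updates are close to -1e-4
```

Because the update size is tied to the learning rate rather than to the raw gradient magnitude, changing the learning rate from 0.001 to 0.00001 directly scales how far each update moves the weights, which is the lever varied in Table 5.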

Figure 9.

Confusion matrix for different model identification results.
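The per-variety counts discussed above are the diagonal entries of the confusion matrices in Figure 9. A minimal sketch for turning such a matrix into per-variety recall and overall accuracy follows; rows are assumed to index the true variety and columns the predicted one, and the 3 × 3 matrix is a toy example rather than data from Figure 9. Converting the reported counts (e.g., 118 for AJBC) into recall additionally requires the per-variety test-set size, which this section does not state.

```python
def per_class_recall(matrix, labels):
    """Per-class recall (%) from a confusion matrix.

    matrix[i][j] counts test images of true class labels[i] predicted as labels[j],
    so matrix[i][i] is the number of correct identifications for that class.
    """
    recalls = {}
    for i, label in enumerate(labels):
        row_total = sum(matrix[i])
        recalls[label] = 100.0 * matrix[i][i] / row_total if row_total else 0.0
    return recalls


def overall_accuracy(matrix):
    """Overall accuracy (%): trace of the matrix divided by the total count."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


# Toy 3 x 3 example using three of the variety codes; the counts are made up.
labels = ["AJBC", "BHZ", "ZC108"]
cm = [
    [8, 1, 1],
    [0, 10, 0],
    [1, 0, 9],
]
print(per_class_recall(cm, labels))  # {'AJBC': 80.0, 'BHZ': 100.0, 'ZC108': 90.0}
print(overall_accuracy(cm))          # 90.0
```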

Tea-ResNet is distinguished by its use of real-world canopy imagery covering 11 varieties, without relying on specialized instrumentation or controlled laboratory conditions. For comparison, a contemporaneous study applying dense convolutional architectures to canopy images of 16 cultivars reported a peak accuracy of 97.81% with DenseNet201 after similar training refinements, on a somewhat larger dataset of 8000 images []. For specialized cultivars, a transfer learning approach using MobileNetV2 on 1800 images of three purple-leaf varieties reached 99.67% accuracy, but it covered a narrower range of varieties and used isolated leaf samples rather than whole canopies []. Electrochemical and fluorescence-based methods have occasionally exceeded 98% accuracy for 3–9 varieties in controlled settings, but unlike the non-destructive, image-based approach used here, they require dedicated hardware []. Tea-ResNet therefore exceeds many earlier methods in accuracy and field applicability and offers a practical foundation for intelligent harvesting systems; lightweight variants suitable for edge computing in agricultural settings warrant further investigation.

4.3. Limitations and Future Work

Our experiments were conducted on tea plant varieties cultivated in specific regions. Although the study considered canopy images from diverse environments and constructed a dataset of 11 varieties, the number of varieties remains relatively limited, and the model’s adaptability and robustness in other regions require further verification. Future work will collect canopy images from a wider range of tea-producing regions to build a dataset covering more geographic areas and varieties. High-performance modules and model compression methods will also be used to improve the model’s generalizability, identification performance, and ease of deployment.

5. Conclusions

In multi-variety tea plantations, precise and rapid variety identification during harvest is vital for improving the intelligence and efficiency of automated harvesting systems. Accurate identification allows variety-specific algorithms for bud detection and localization to be activated and supports efficient post-harvest management.

This study introduced a deep learning method for variety identification from canopy images captured under field conditions. A dataset of 6600 images covering 11 varieties was constructed, incorporating diverse factors such as harvest period, lighting conditions, shooting angle, and shooting distance. Fourteen CNN models based on architectures including VGG, GoogleNet, ResNet, and ShuffleNetV2 were evaluated, with ResNet50 delivering the best initial results. The training process was then optimized through detailed evaluations of optimizers, dataset split ratios, input image sizes, and hyperparameters. The optimal setup used the Adamax optimizer, an input image size of 608 × 608, an 80:20 training-to-validation split, a learning rate of 0.0001, a batch size of 8, and 20 epochs. The final optimized model achieved an accuracy of 99.32%, an improvement of 2.20 percentage points over the baseline.

This study not only demonstrates the feasibility of highly accurate tea variety identification from canopy imagery but also provides a transferable deep learning framework and optimized training pipeline for intelligent tea harvesting applications. Compared with existing methods, this approach broadens the scope of variety identification by using low-cost RGB canopy images. Although it may have higher computational requirements, it addresses critical gaps and supports advances in precision agriculture and variety-specific applications.

Author Contributions

Conceptualization: Z.Z.; data curation: Z.Z., Y.L. and P.L.; formal analysis: Z.Z. and P.L.; funding acquisition: Y.L.; investigation: Z.Z. and Y.L.; methodology: Z.Z. and P.L.; project administration: Z.Z. and Y.L.; resources: Z.Z.; software: Z.Z. and Y.L.; supervision: Z.Z. and Y.L.; validation: Z.Z. and P.L.; visualization: Z.Z. and P.L.; writing—original draft: Z.Z. and Y.L.; writing—review and editing: Z.Z., Y.L. and P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD-2023-87).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The principal authors would like to express their gratitude to the School of Agricultural Engineering, Jiangsu University, for providing essential instruments without which this work would not have been possible. We would like to thank the anonymous reviewers for their careful attention.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhou, H.; Fu, H.; Wu, X.; Wu, B.; Dai, C. Discrimination of tea varieties based on FTIR spectroscopy and an adaptive improved possibilistic c-means clustering. J. Food Process. Preserv. 2020, 44, e14795.

- Wu, X.; He, F.; Wu, B.; Zeng, S.; He, C. Accurate classification of Chunmee tea grade using NIR spectroscopy and fuzzy maximum uncertainty linear discriminant analysis. Foods 2023, 12, 541.

- Pan, S.; Nie, Q.; Tai, H.; Song, X.; Tong, Y.; Zhang, L.; Wu, X.; Lin, Z.; Zhang, Y.; Ye, D.; et al. Tea and tea drinking: China’s outstanding contributions to the mankind. Chin. Med. 2022, 17, 27.

- Food and Agriculture Organization of the United Nations. Available online: https://www.fao.org (accessed on 15 July 2025).

- Zhang, Z.; Lu, Y.; Zhao, Y.; Pan, Q.; Jin, K.; Xu, G.; Hu, Y. TS-YOLO: An All-Day and lightweight tea canopy shoots detection model. Agronomy 2023, 13, 1411.

- Luo, Y.; Wei, L.; Xu, L.; Zhang, Q.; Liu, J.; Cai, Q.; Zhang, W. Stereo-vision-based multi-crop harvesting edge detection for precise automatic steering of combine harvester. Biosyst. Eng. 2022, 215, 115–128.

- Andronie, M.; Lăzăroiu, G.; Karabolevski, O.; Ștefănescu, R.; Hurloiu, I.; Dijmărescu, A.; Dijmărescu, I. Remote big data management tools, sensing and computing technologies, and visual perception and environment mapping algorithms in the internet of robotic things. Electronics 2022, 12, 22.

- Zhang, Z.; Lu, Y.; Yang, M.; Wang, G.; Zhao, Y.; Hu, Y. Optimal training strategy for high-performance detection model of multi-cultivar tea shoots based on deep learning methods. Sci. Hortic. 2024, 328, 112949.

- Li, Y.; Yu, S.; Yang, S.; Ni, D.; Jiang, X.; Zhang, D.; Zhou, J.; Li, C.; Yu, Z. Study on taste quality formation and leaf conducting tissue changes in six types of tea during their manufacturing processes. Food Chem. X 2023, 18, 100731.

- Wong, M.; Sirisena, S.; Ng, K. Phytochemical profile of differently processed tea: A review. J. Food Sci. 2022, 87, 1925–1942.

- Ge, X.; Sun, J.; Lu, B.; Chen, Q.; Xun, W.; Jin, Y. Classification of oolong tea varieties based on hyperspectral imaging technology and BOSS-LightGBM model. J. Food Process Eng. 2019, 42, e13289.

- Li, X.; Wu, J.; Bai, T.; Wu, C.; He, Y.; Huang, J.; Li, X.; Shi, Z.; Hou, K. Variety classification and identification of jujube based on near-infrared spectroscopy and 1D-CNN. Comput. Electron. Agric. 2024, 223, 109122.

- Cao, Q.; Yang, G.; Wang, F.; Chen, L.; Xu, B.; Zhao, C.; Duan, D.; Jiang, P.; Xu, Z.; Yang, H. Discrimination of tea plant variety using in-situ multispectral imaging system and multi-feature analysis. Comput. Electron. Agric. 2022, 202, 107360.

- Saletnik, A.; Saletnik, B.; Puchalski, C. Raman method in identification of species and varieties, assessment of plant maturity and crop quality—A Review. Molecules 2022, 27, 4454.

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time detection and location of potted flowers based on a ZED camera and a YOLO V4-Tiny deep learning algorithm. Horticulturae 2021, 8, 21.

- Wang, W.; Xi, Y.; Gu, J.; Yang, Q.; Pan, Z.; Zhang, X.; Xu, G.; Zhou, M. YOLOV8-TEA: Recognition Method of tender shoots of tea based on instance segmentation algorithm. Agronomy 2025, 15, 1318.

- Chen, X.; Xun, Y.; Li, W.; Zhang, J. Combining discriminant analysis and neural networks for corn variety identification. Comput. Electron. Agric. 2009, 71, S48–S53.

- Osako, Y.; Yamane, H.; Lin, S.; Chen, P.; Tao, R. Cultivar discrimination of litchi fruit images using deep learning. Sci. Hortic. 2020, 269, 109360.

- Wang, B.; Li, H.; You, J.; Chen, X.; Yuan, X.; Feng, X. Fusing deep learning features of triplet leaf image patterns to boost soybean cultivar identification. Comput. Electron. Agric. 2022, 197, 106914.

- Larese, M.; Granitto, P. Finding local leaf vein patterns for legume characterization and classification. Mach. Vis. Appl. 2015, 27, 709–720.

- Baldi, A.; Pandolfi, C.; Mancuso, S.; Lenzi, A. A leaf-based back propagation neural network for oleander (Nerium oleander L.) cultivar identification. Comput. Electron. Agric. 2017, 142, 515–520.

- Altuntas, Y.; Kocamaz, A.; Yeroglu, C. Identification of apricot varieties using leaf characteristics and KNN classifier. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019; pp. 1–6.

- Hong, P.; Hai, T.; Lan, L.; Hoang, V.; Hai, V.; Nguyen, T. Comparative study on vision based rice seed varieties identification. In Proceedings of the 2015 Seventh International Conference on Knowledge and Systems Engineering (KSE), Ho Chi Minh City, Vietnam, 8–10 October 2015; pp. 377–382.

- Khosravi, H.; Saedi, S.I.; Rezaei, M. Real-time recognition of on-branch olive ripening stages by a deep convolutional neural network. Sci. Hortic. 2021, 287, 110252.

- Zhang, Z.; Lu, Y.; Peng, Y.; Yang, M.; Hu, Y. A lightweight and High-Performance YOLOV5-Based model for tea shoot detection in field conditions. Agronomy 2025, 15, 1122.

- Wu, M.; Liu, S.; Li, Z.; Ou, M.; Dai, S.; Dong, X.; Wang, X.; Jiang, L.; Jia, W. A review of intelligent orchard sprayer technologies: Perception, control, and system integration. Horticulturae 2025, 11, 668.

- Wang, Y.; Zhang, Z.; Jia, W.; Ou, M.; Dong, X.; Dai, S. A review of environmental sensing technologies for targeted spraying in orchards. Horticulturae 2025, 11, 551.

- Jiang, L.; Xu, B.; Husnain, N.; Wang, Q. Overview of agricultural machinery automation technology for sustainable agriculture. Agronomy 2025, 15, 1471.

- Zhou, X.; Chen, W.; Wei, X. Improved field obstacle detection algorithm based on YOLOV8. Agriculture 2024, 14, 2263.

- Ji, W.; Zhang, T.; Xu, B.; He, G. Apple recognition and picking sequence planning for harvesting robot in a complex environment. J. Agric. Eng. 2023, 55, 1549.

- Xu, Z.; Liu, J.; Wang, J.; Cai, L.; Jin, Y.; Zhao, S.; Xie, B. Realtime picking point decision algorithm of trellis grape for high-speed robotic cut-and-catch harvesting. Agronomy 2023, 13, 1618.

- Pan, B.; Liu, C.; Su, B.; Ju, Y.; Fan, X.; Zhang, Y.; Sun, L.; Fang, Y.; Jiang, J. Research on species identification of wild grape leaves based on deep learning. Sci. Hortic. 2024, 327, 112821.

- Zhu, X.; Chen, F.; Zheng, Y.; Li, Z.; Zhang, X. Identification of olive cultivars using bilinear networks and attention mechanisms. Trans. Chin. Soc. Agric. Eng. 2023, 39, 183–192.

- Zhang, R.; Yuan, Y.; Meng, X.; Liu, T.; Zhang, A.; Lei, H. A multitask model based on MobileNetV3 for fine-grained classification of jujube varieties. J. Food Meas. Charact. 2023, 17, 4305–4317.

- De Nart, D.; Gardiman, M.; Alba, V.; Tarricone, L.; Storchi, P.; Roccotelli, S.; Ammoniaci, M.; Tosi, V.; Perria, R.; Carraro, R. Vine variety identification through leaf image classification: A large-scale study on the robustness of five deep learning models. J. Agric. Sci. 2024, 162, 19–32.

- Quan, W.; Shi, Q.; Fan, Y.; Wang, Q.; Su, B. Few-shot learning for identifying wine grape varieties with limited data. Trans. Chin. Soc. Agric. Eng. 2025, 41, 211–219.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Attri, I.; Awasthi, L.; Sharma, T.; Rathee, P. A review of deep learning techniques used in agriculture. Ecol. Inform. 2023, 77, 102217.

- You, J.; Li, D.; Wang, Z.; Chen, Q.; Ouyang, Q. Prediction and visualization of moisture content in Tencha drying processes by computer vision and deep learning. J. Sci. Food Agric. 2024, 104, 5486–5494.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for Large-Scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ma, N.; Zhang, X.; Zheng, H.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Computer Vision–ECCV 2018; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; pp. 122–138.

- Zhao, S.; Peng, Y.; Liu, J.; Wu, S. Tomato leaf disease diagnosis based on improved convolution neural network by attention module. Agriculture 2021, 11, 651.

- Simhadri, C.; Kondaveeti, H. Automatic recognition of rice leaf diseases using transfer learning. Agronomy 2023, 13, 961.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Li, F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Wen, X.; Zhou, M. Evolution and role of optimizers in training deep learning models. IEEE/CAA J. Autom. Sin. 2024, 11, 2039–2042.

- Ramos, L.; Casas, E.; Bendek, E.; Romero, C.; Rivas-Echeverría, F. Hyperparameter optimization of YOLOv8 for smoke and wildfire detection: Implications for agricultural and environmental safety. Artif. Intell. Agric. 2024, 12, 109–126.

- Lee, Y.; Patil, M.; Kim, J.; Seo, Y.; Ahn, D.; Kim, G. Hyperparameter optimization of apple leaf dataset for the disease recognition based on the YOLOv8. J. Agric. Food Res. 2025, 21, 101840.

- Selvaraju, R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Pawluszek-Filipiak, K.; Borkowski, A. On the importance of train–test split ratio of datasets in automatic landslide detection by supervised classification. Remote Sens. 2020, 12, 3054.

- Cao, Q.; Xu, Z.; Xu, B.; Yang, H.; Wang, F.; Chen, L.; Jiang, X.; Zhao, C.; Jiang, P.; Wu, Q.; et al. Leaf phenotypic difference analysis and variety recognition of tea cultivars based on multispectral imaging technology. Ind. Crops Prod. 2024, 220, 119230.

- Cao, Q.; Zhao, C.; Bai, B.; Cai, J.; Chen, L.; Wang, F.; Xu, B.; Duan, D.; Jiang, P.; Meng, X.; et al. Oolong tea cultivars categorization and germination period classification based on multispectral information. Front. Plant Sci. 2023, 14, 1251418.

- Sun, L.; Shen, J.; Mao, Y.; Li, X.; Fan, K.; Qian, W.; Wang, Y.; Bi, C.; Wang, H.; Xu, Y.; et al. Discrimination of tea varieties and bud sprouting phenology using UAV-based RGB and multispectral images. Int. J. Remote Sens. 2025, 46, 6214–6234.

- Liu, Z.; Zhou, T.; Fu, D.; Peng, H. Extraction of fresh tea leaf image features based on color and shape with application in tea plant variety identification. Jiangsu Agric. Sci. 2021, 49, 168–172.

- Sun, D.; Ding, Z.; Liu, J.; Liu, H.; Xie, J.; Wang, W. Classification method of multi-variety tea leaves based on improved SqueezeNet model. Trans. Chin. Soc. Agric. Mach. 2023, 54, 223–230.

- Zhang, Z.; Yang, M.; Pan, Q.; Jin, X.; Wang, G.; Zhao, Y.; Hu, Y. Identification of tea plant cultivars based on canopy images using deep learning methods. Sci. Hortic. 2024, 339, 113908.

- Ding, Y.; Huang, H.; Cui, H.; Wang, X.; Zhao, Y. A Non-Destructive Method for Identification of Tea Plant Cultivars Based on Deep Learning. Forests 2023, 14, 728.

- Wu, T.; Zhou, L.; Zhao, Y.; Qi, H.; Pu, Y.; Zhang, C.; Liu, Y. Applications of Deep Learning in Tea Quality Monitoring: A Review. Artif. Intell. Rev. 2025, 58, 342.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).