How AI Improves Sustainable Chicken Farming: A Literature Review of Welfare, Economic, and Environmental Dimensions

Abstract

1. Introduction

- What role AI currently plays in sustainable chicken farming

- What challenges are encountered in its practical deployment

- What future directions are emerging

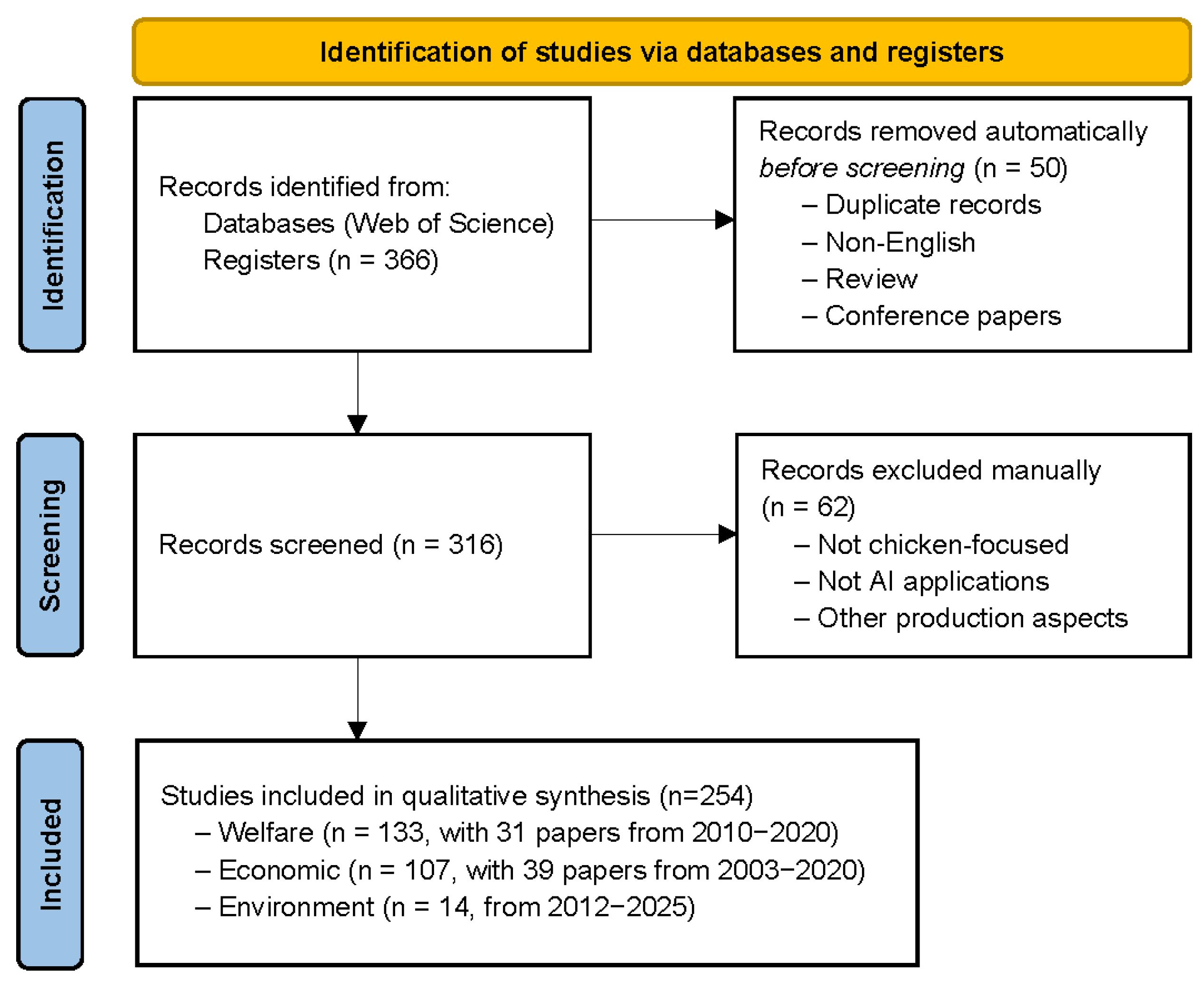

2. Methodology

2.1. Search Strategy

2.2. Selection of Search Terms

TS=((farm OR breed OR house) AND (broiler OR chicken OR chick OR cock OR hen) AND (artificial intelligence OR machine learning OR deep learning OR neural network OR natural language processing OR transformer OR generative adversarial network)).

2.3. Inclusion and Exclusion Criteria

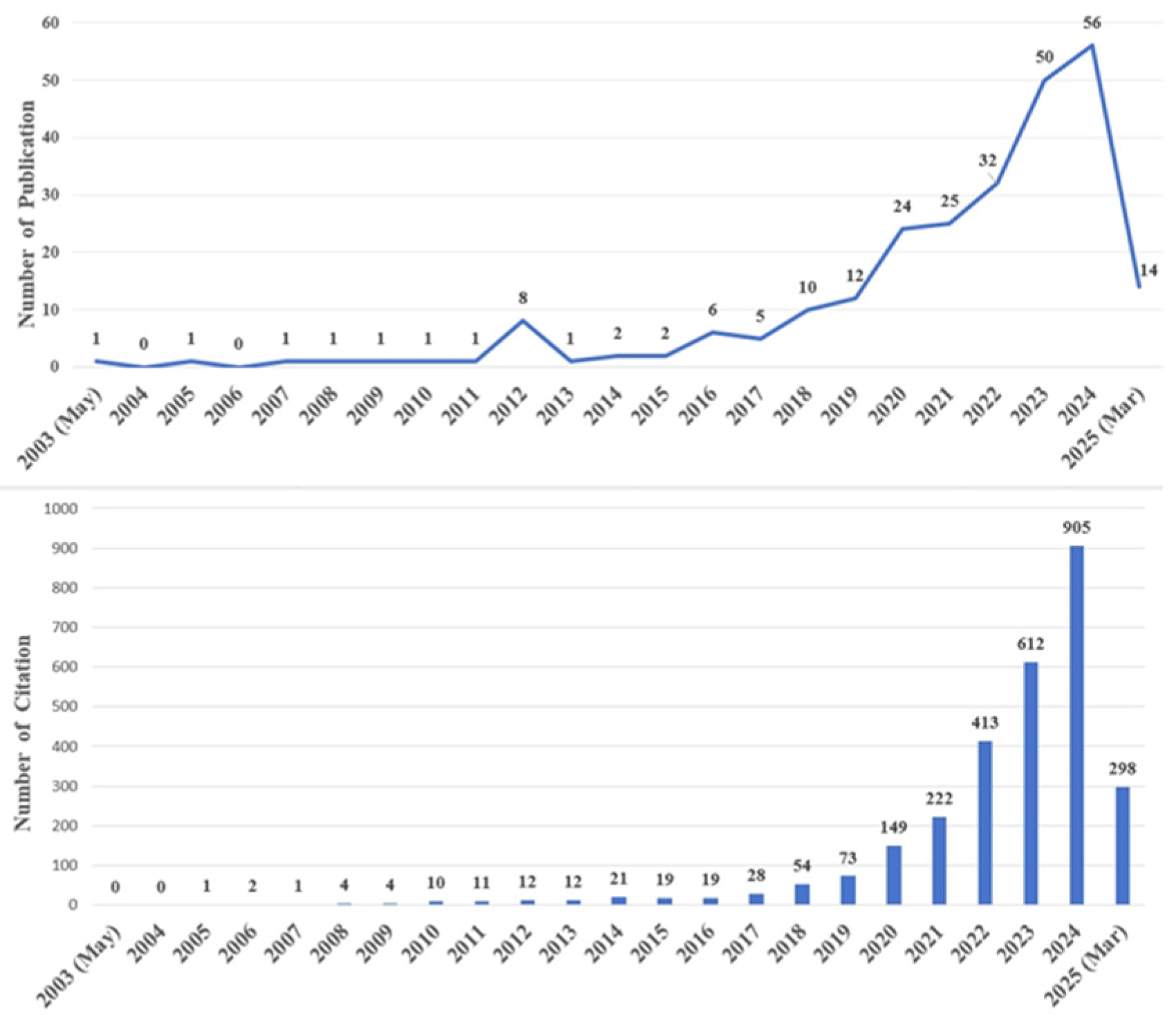

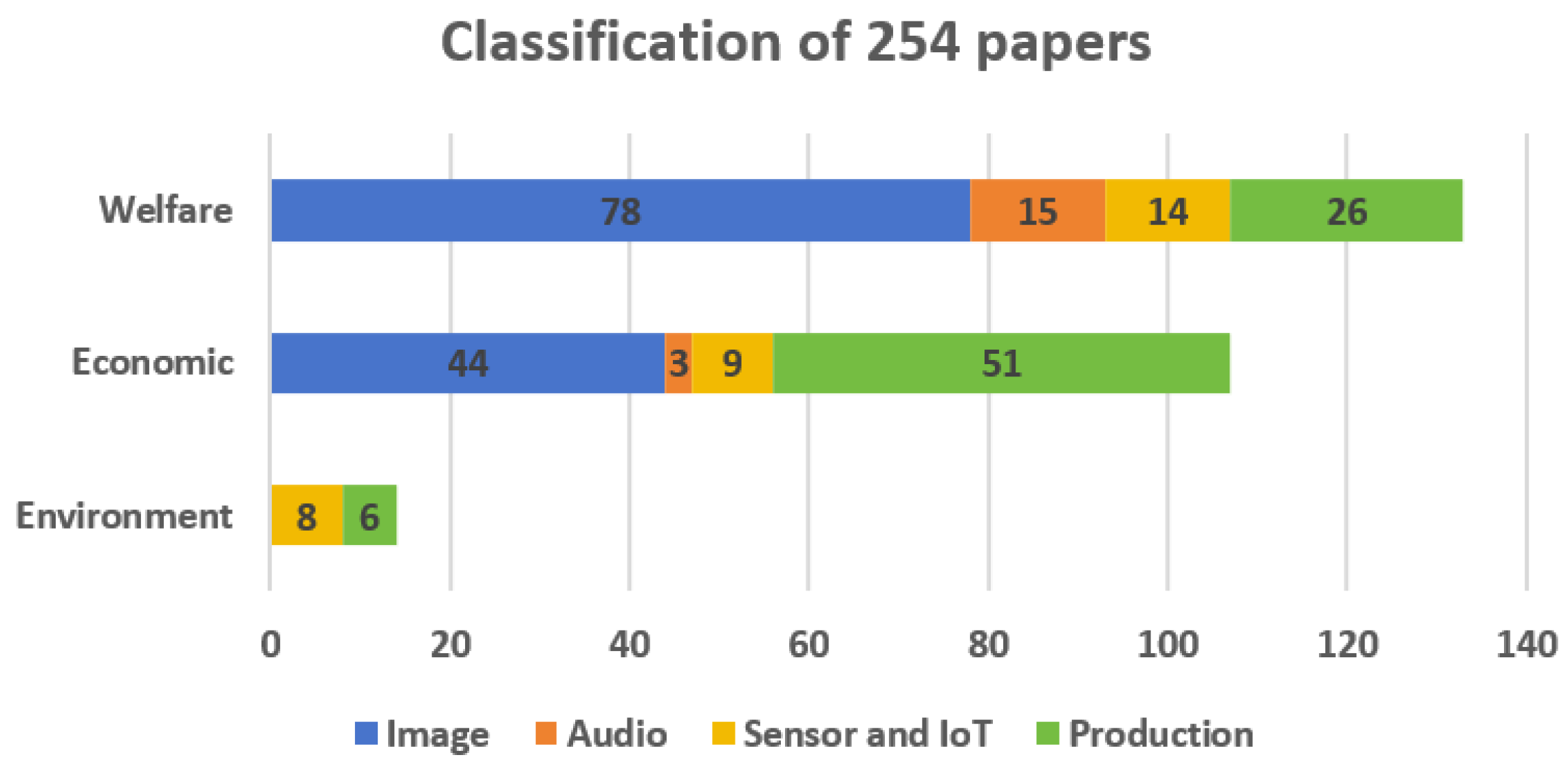

2.4. Data Extraction from Included Papers

- (1)

- computed annual publication and citation counts.

- (2)

- aggregated author keywords across all papers and retained those occurring more than 10 times, then grouped these frequent keywords into Method, Object, and Purpose categories.

- (3)

- (4)

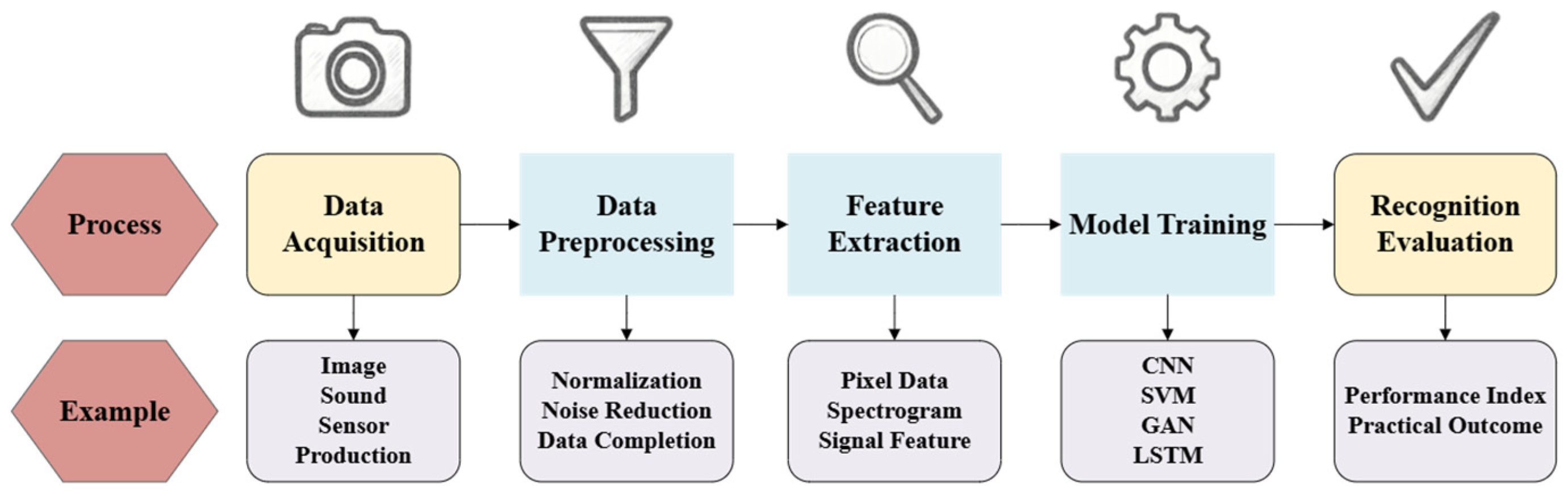

- summarized the typical AI processing workflow for sustainable chicken farming.

- (5)

- used the contribution tallies to address what role AI currently plays in sustainable chicken farming.

- (6)

- synthesized the above analyses to discuss the challenges of practical deployment and the emerging directions.

3. Results

3.1. Overall Description of Literature

3.2. AI-Driven Enhancements to Chicken Welfare

3.3. Economic Impacts of AI Applications in Chicken Farming

3.4. AI-Driven Environmental Optimization in Chicken Farming

4. Discussion

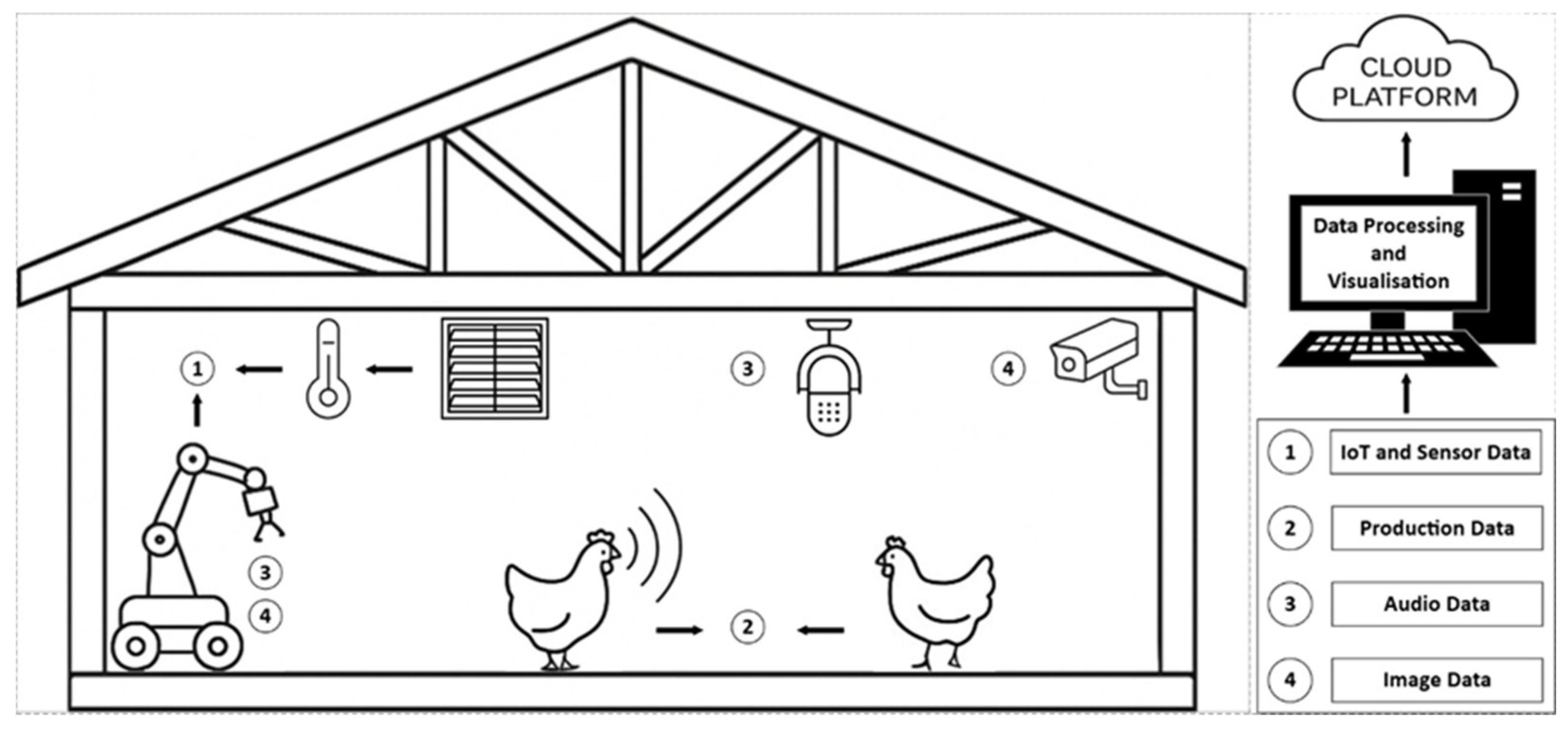

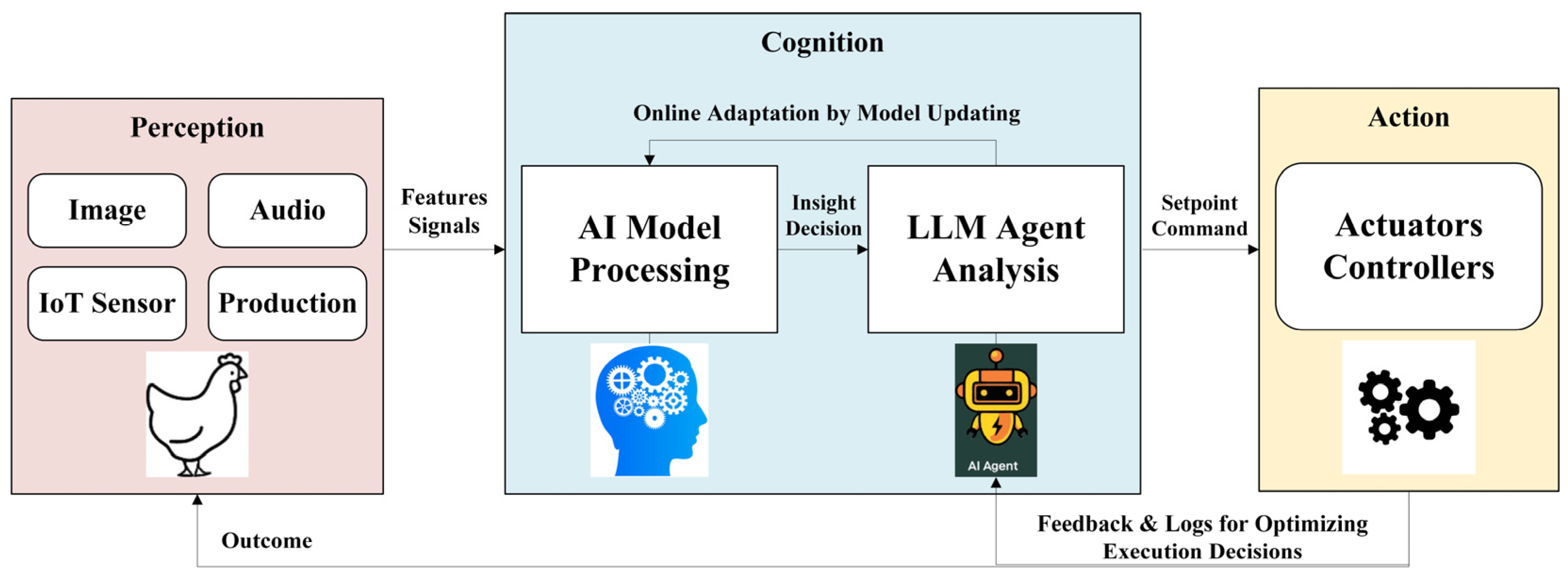

4.1. What Role AI Currently Plays in Sustainable Chicken Farming

4.2. What Challenges Are Encountered in Practical Deployment

4.2.1. Lack of Standardized Model-Optimization Pipelines

4.2.2. Long-Term, Real-Time Use of Multi-Modal Sensor Networks

4.2.3. Disconnect Between Detection and Control

4.2.4. Economic Considerations for AI Adoption

4.3. What Future Directions Are Emerging

4.4. Study Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| IoT | Internet of Things |

| PLF | Precision Livestock Farming |

| CNN | Convolutional Neural Network |

| SVM | Support Vector Machine |

| GAN | Generative Adversarial Network |

| LSTM | Long Short-Term Memory |

| RFID | Radio-Frequency Identification |

| IMU | Inertial Measurement Unit |

| NH3 | Ammonia |

References

- Abbas, A.O.; Nassar, F.S.; Al Ali, A.M. Challenges of Ensuring Sustainable Poultry Meat Production and Economic Resilience under Climate Change for Achieving Sustainable Food Security. Res. World Agric. Econ. 2025, 6, 159–171. [Google Scholar] [CrossRef]

- Usturoi, M.G.; Rațu, R.N.; Crivei, I.C.; Veleșcu, I.D.; Usturoi, A.; Stoica, F.; Radu Rusu, R.M. Unlocking the Power of Eggs: Nutritional Insights, Bioactive Compounds, and the Advantages of Omega-3 and Omega-6 Enriched Varieties. Agriculture 2025, 15, 242. [Google Scholar] [CrossRef]

- Reynolds, S. Breaking the Expected: Rethinking the Impact of Bird Losses on Egg Prices. 2025. Available online: https://opus.govst.edu/research_day/2025/thurs/9/ (accessed on 25 September 2025).

- Kagaya, S.; Widmar, N.O.; Kilders, V. The price of attention: An analysis of the intersection of media coverage and public sentiments about eggs and egg prices. Poult. Sci. 2025, 104, 104482. [Google Scholar] [CrossRef]

- Brundtland, G.H. Our Common Future World Commission on Environment and Developement. Available online: https://sustainabledevelopment.un.org/content/documents/5987our-common-future.pdf (accessed on 25 September 2025).

- FAO. Production|Gateway to Poultry Production and Products|Food and Agriculture Organization of the United Nations. Available online: https://www.fao.org/poultry-production-products/production/en/ (accessed on 25 September 2025).

- Vaarst, M.; Steenfeldt, S.; Horsted, K. Sustainable development perspectives of poultry production. World’s Poult. Sci. J. 2015, 71, 609–620. [Google Scholar] [CrossRef]

- Bist, R.B.; Bist, K.; Poudel, S.; Subedi, D.; Yang, X.; Paneru, B.; Mani, S.; Wang, D.; Chai, L. Sustainable poultry farming practices: A critical review of current strategies and future prospects. Poult. Sci. 2024, 103, 104295. [Google Scholar] [CrossRef]

- Berckmans, D. General introduction to precision livestock farming. Anim. Front. 2017, 7, 6–11. [Google Scholar] [CrossRef]

- Werner, A.; Jarfe, A. (Eds.) Programme Book of the Joint Conference of ECPA-ECPLF; Wageningen Academic: Wageningen, The Netherlands, 2003; p. 846. Available online: https://www.cabidigitallibrary.org/doi/full/10.5555/20033115161 (accessed on 25 September 2025).

- Norton, T.; Berckmans, D. Engineering advances in precision livestock farming. Biosyst. Eng. 2018, 173, 1–3. [Google Scholar] [CrossRef]

- Roy, A.; Rana, T. Precision Livestock Farming and Its Advantage to the Environment. Epidemiol. Environ. Hyg. Vet. Public Health 2025, 6, 343–347. [Google Scholar] [CrossRef]

- Bretas, I.L.; Dubeux, J.C., Jr.; Cruz, P.J.; Oduor, K.T.; Queiroz, L.D.; Valente, D.S.; Chizzotti, F.H. Precision livestock farming applied to grazingland monitoring and management—A review. Agron. J. 2024, 116, 1164–1186. [Google Scholar] [CrossRef]

- Hsieh, C.W.; Hsu, S.Y.; Chao, C.H.; Lee, M.C.; Chen, C.Y.; Wu, C.H. Implementing an intelligent chicken aviary using deep learning techniques. Multimed. Tools Appl. 2025, 84, 1–30. [Google Scholar] [CrossRef]

- Van Hertem, T.; Rooijakkers, L.; Berckmans, D.; Fernández, A.P.; Norton, T.; Vranken, E. Appropriate data visualisation is key to Precision Livestock Farming acceptance. Comput. Electron. Agric. 2017, 138, 1–10. [Google Scholar] [CrossRef]

- Kaswan, S.; Chandratre, G.A.; Upadhyay, D.; Sharma, A.; Sreekala, S.M.; Badgujar, P.C.; Ruchay, A. Applications of sensors in livestock management. In Engineering Applications in Livestock Production; Academic Press: Cambridge, MA, USA, 2024; pp. 63–92. [Google Scholar] [CrossRef]

- Brassó, L.D.; Komlósi, I.; Várszegi, Z. Modern technologies for improving broiler production and welfare: A Review. Animals 2025, 15, 493. [Google Scholar] [CrossRef] [PubMed]

- Rowe, E.; Dawkins, M.S.; Gebhardt-Henrich, S.G. A systematic review of precision livestock farming in the poultry sector: Is technology focussed on improving bird welfare? Animals 2019, 9, 614. [Google Scholar] [CrossRef] [PubMed]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. bmj 2021, 372, 71. [Google Scholar] [CrossRef] [PubMed]

- Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep learning for precision agriculture: A bibliometric analysis. Intell. Syst. Appl. 2022, 16, 200102. [Google Scholar] [CrossRef]

- Zhu, J.; Liu, W. A tale of two databases: The use of Web of Science and Scopus in academic papers. Scientometrics 2020, 123, 321–335. [Google Scholar] [CrossRef]

- Ali, W.; Din, I.U.; Almogren, A.; Rodrigues, J.J. Poultry Health Monitoring With Advanced Imaging: Toward Next-Generation Agricultural Applications in Consumer Electronics. IEEE Trans. Consum. Electron. 2024, 70, 7147–7154. [Google Scholar] [CrossRef]

- Bist, R.B.; Yang, X.; Subedi, S.; Bist, K.; Paneru, B.; Li, G.; Chai, L. An automatic method for scoring poultry footpad dermatitis with deep learning and thermal imaging. Comput. Electron. Agric. 2024, 226, 109481. [Google Scholar] [CrossRef]

- Dayan, J.; Goldman, N.; Halevy, O.; Uni, Z. Research Note: Prospects for early detection of breast muscle myopathies by automated image analysis. Poult. Sci. 2024, 103, 103680. [Google Scholar] [CrossRef]

- Elmessery, W.M.; Gutiérrez, J.; El-Wahhab, G.G.A.; Elkhaiat, I.A.; El-Soaly, I.S.; Alhag, S.K.; Al-Shuraym, L.A.; Akela, M.A.; Moghanm, F.S.; Abdelshafie, M.F. YOLO-based model for automatic detection of broiler pathological phenomena through visual and thermal images in intensive poultry houses. Agriculture 2023, 13, 1527. [Google Scholar] [CrossRef]

- Tong, Q.; Zhang, E.; Wu, S.; Xu, K.; Sun, C. A real-time detector of chicken healthy status based on modified YOLO. Signal Image Video Process. 2023, 17, 4199–4207. [Google Scholar] [CrossRef]

- Qin, W.; Yang, X.; Liu, C.; Zheng, W. A deep learning method based on YOLOv5 and SuperPoint-SuperGlue for digestive disease warning and cage location backtracking in stacked cage laying hen systems. Comput. Electron. Agric. 2024, 222, 108999. [Google Scholar] [CrossRef]

- Pakuła, A.; Paśko, S.; Marć, P.; Kursa, O.; Jaroszewicz, L.R. AI Classification of Eggs’ Origin from Mycoplasma synoviae-Infected or Non-Infected Poultry via Analysis of the Spectral Response. Appl. Sci. 2023, 13, 12360. [Google Scholar] [CrossRef]

- Nakrosis, A.; Paulauskaite-Taraseviciene, A.; Raudonis, V.; Narusis, I.; Gruzauskas, V.; Gruzauskas, R.; Lagzdinyte-Budnike, I. Towards early poultry health prediction through non-invasive and computer vision-based dropping classification. Animals 2023, 13, 3041. [Google Scholar] [CrossRef] [PubMed]

- Yang, X.; Zhang, J.; Paneru, B.; Lin, J.; Bist, R.B.; Lu, G.; Chai, L. Precision Monitoring of Dead Chickens and Floor Eggs with a Robotic Machine Vision Method. AgriEngineering 2025, 7, 35. [Google Scholar] [CrossRef]

- Khanal, R.; Wu, W.; Lee, J. Automated Dead Chicken Detection in Poultry Farms Using Knowledge Distillation and Vision Transformers. Appl. Sci. 2024, 15, 136. [Google Scholar] [CrossRef]

- Ma, W.; Wang, X.; Yang, S.X.; Xue, X.; Li, M.; Wang, R.; Yu, L.; Song, L.; Li, Q. Autonomous inspection robot for dead laying hens in caged layer house. Comput. Electron. Agric. 2024, 227, 109595. [Google Scholar] [CrossRef]

- Luo, S.; Ma, Y.; Jiang, F.; Wang, H.; Tong, Q.; Wang, L. Dead laying hens detection using TIR-NIR-depth images and deep learning on a commercial farm. Animals 2023, 13, 1861. [Google Scholar] [CrossRef]

- Fodor, I.; van der Sluis, M.; Jacobs, M.; de Klerk, B.; Bouwman, A.C.; Ellen, E.D. Automated pose estimation reveals walking characteristics associated with lameness in broilers. Poult. Sci. 2023, 102, 102787. [Google Scholar] [CrossRef]

- Nasiri, A.; Yoder, J.; Zhao, Y.; Hawkins, S.; Prado, M.; Gan, H. Pose estimation-based lameness recognition in broiler using CNN-LSTM network. Comput. Electron. Agric. 2022, 197, 106931. [Google Scholar] [CrossRef]

- Sun, Z.; Zhang, M.; Liu, J.; Wang, J.; Wu, Q.; Wang, G. Research on white feather broiler health monitoring method based on sound detection and transfer learning. Comput. Electron. Agric. 2023, 214, 108319. [Google Scholar] [CrossRef]

- Cuan, K.; Zhang, T.; Li, Z.; Huang, J.; Ding, Y.; Fang, C. Automatic Newcastle disease detection using sound technology and deep learning method. Comput. Electron. Agric. 2022, 194, 106740. [Google Scholar] [CrossRef]

- Welch, M.; Sibanda, T.Z.; De Souza Vilela, J.; Kolakshyapati, M.; Schneider, D.; Ruhnke, I. An initial study on the use of machine learning and radio frequency identification data for predicting health outcomes in free-range laying hens. Animals 2023, 13, 1202. [Google Scholar] [CrossRef] [PubMed]

- Mei, W.; Yang, X.; Zhao, Y.; Wang, X.; Dai, X.; Wang, K. Identification of aflatoxin-poisoned broilers based on accelerometer and machine learning. Biosyst. Eng. 2023, 227, 107–116. [Google Scholar] [CrossRef]

- Ahmed, G.; Malick, R.A.S.; Akhunzada, A.; Zahid, S.; Sagri, M.R.; Gani, A. An approach towards IoT-based predictive service for early detection of diseases in poultry chickens. Sustainability 2021, 13, 13396. [Google Scholar] [CrossRef]

- Ram Das, A.; Pillai, N.; Nanduri, B.; Rothrock, M.J., Jr.; Ramkumar, M. Exploring pathogen presence prediction in pastured poultry farms through transformer-based models and attention mechanism explainability. Microorganisms 2024, 12, 1274. [Google Scholar] [CrossRef]

- Jeon, K.M.; Jung, J.; Lee, C.M.; Yoo, D.S. Identification of Pre-Emptive Biosecurity Zone Areas for Highly Pathogenic Avian Influenza Based on Machine Learning-Driven Risk Analysis. Animals 2023, 13, 3728. [Google Scholar] [CrossRef]

- Liu, Y.; Zhuang, Y.; Yu, L.; Li, Q.; Zhao, C.; Meng, R.; Zhu, J.; Guo, X. A Machine learning framework based on extreme gradient boosting to predict the occurrence and development of infectious diseases in laying hen farms, taking H9N2 as an example. Animals 2023, 13, 1494. [Google Scholar] [CrossRef]

- Pillai, N.; Ayoola, M.B.; Nanduri, B.; Rothrock, M.J., Jr.; Ramkumar, M. An ensemble learning approach to identify pastured poultry farm practice variables and soil constituents that promote Salmonella prevalence. Heliyon 2022, 8. [Google Scholar] [CrossRef]

- Merenda, V.R.; Bodempudi, V.U.; Pairis-Garcia, M.D.; Li, G. Development and validation of machine-learning models for monitoring individual behaviors in group-housed broiler chickens. Poult. Sci. 2024, 103, 104374. [Google Scholar] [CrossRef]

- Paneru, B.; Bist, R.; Yang, X.; Chai, L. Tracking dustbathing behavior of cage-free laying hens with machine vision technologies. Poult. Sci. 2024, 103, 104289. [Google Scholar] [CrossRef]

- Yang, X.; Dai, H.; Wu, Z.; Bist, R.B.; Subedi, S.; Sun, J.; Lu, G.; Li, C.; Liu, T.; Chai, L. An innovative segment anything model for precision poultry monitoring. Comput. Electron. Agric. 2024, 222, 109045. [Google Scholar] [CrossRef]

- Teterja, D.; Garcia-Rodriguez, J.; Azorin-Lopez, J.; Sebastian-Gonzalez, E.; Nedić, D.; Leković, D.; Knežević, P.; Drajić, D.; Vukobratović, D. A Video Mosaicing-Based Sensing Method for Chicken Behavior Recognition on Edge Computing Devices. Sensors 2024, 24, 3409. [Google Scholar] [CrossRef] [PubMed]

- Jensen, D.B.; Toscano, M.; van der Heide, E.; Grønvig, M.; Hakansson, F. Comparison of strategies for automatic video-based detection of piling behaviour in laying hens. Smart Agric. Technol. 2025, 10, 100745. [Google Scholar] [CrossRef]

- Bist, R.B.; Subedi, S.; Yang, X.; Chai, L. A novel YOLOv6 object detector for monitoring piling behavior of cage-free laying hens. AgriEngineering 2023, 5, 905–923. [Google Scholar] [CrossRef]

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking pecking behaviors and damages of cage-free laying hens with machine vision technologies. Comput. Electron. Agric. 2023, 204, 107545. [Google Scholar] [CrossRef]

- Fujinami, K.; Takuno, R.; Sato, I.; Shimmura, T. Evaluating behavior recognition pipeline of laying hens using wearable inertial sensors. Sensors 2023, 23, 5077. [Google Scholar] [CrossRef]

- Shahbazi, M.; Mohammadi, K.; Derakhshani, S.M.; Groot Koerkamp, P.W. Deep learning for laying hen activity recognition using wearable sensors. Agriculture 2023, 13, 738. [Google Scholar] [CrossRef]

- Oso, O.M.; Mejia-Abaunza, N.; Bodempudi, V.U.C.; Chen, X.; Chen, C.; Aggrey, S.E.; Li, G. Automatic analysis of high, medium, and low activities of broilers with heat stress operations via image processing and machine learning. Poult. Sci. 2025, 104, 104954. [Google Scholar] [CrossRef]

- Yeh, Y.H.; Chen, B.L.; Hsieh, K.Y.; Huang, M.H.; Kuo, Y.F. Designing an Autonomous Robot for Monitoring Open-Mouth Behavior of Chickens in Commercial Chicken Farms. J. ASABE 2025, 68, 25–36. [Google Scholar] [CrossRef]

- Yan, Y.; Sheng, Z.; Gu, Y.; Heng, Y.; Zhou, H.; Wang, S. Research note: A method for recognizing and evaluating typical behaviors of laying hens in a thermal environment. Poult. Sci. 2024, 103, 104122. [Google Scholar] [CrossRef] [PubMed]

- Bai, Y.; Zhang, J.; Chen, Y.; Yao, H.; Xin, C.; Wang, S.; Yu, J.; Chen, C.; Xiao, M.; Zou, X. Research into Heat Stress Behavior Recognition and Evaluation Index for Yellow-Feathered Broilers, Based on Improved Cascade Region-Based Convolutional Neural Network. Agriculture 2023, 13, 1114. [Google Scholar] [CrossRef]

- de Carvalho Soster, P.; Grzywalski, T.; Hou, Y.; Thomas, P.; Dedeurwaerder, A.; De Gussem, M.; Tuyttens, F.; Devos, P.; Botteldooren, D.; Antonissen, G. Automated detection of broiler vocalizations a machine learning approach for broiler chicken vocalization monitoring. Poult. Sci. 2025, 104, 104962. [Google Scholar] [CrossRef] [PubMed]

- Srinivasagan, R.; El Sayed, M.S.; Al-Rasheed, M.I.; Alzahrani, A.S. Edge intelligence for poultry welfare: Utilizing tiny machine learning neural network processors for vocalization analysis. PLoS ONE 2025, 20, e0316920. [Google Scholar] [CrossRef]

- Lev-Ron, T.; Yitzhaky, Y.; Halachmi, I.; Druyan, S. Classifying vocal responses of broilers to environmental stressors via artificial neural network. Animal 2025, 19, 101378. [Google Scholar] [CrossRef]

- Lv, M.; Sun, Z.; Zhang, M.; Geng, R.; Gao, M.; Wang, G. Sound recognition method for white feather broilers based on spectrogram features and the fusion classification model. Measurement 2023, 222, 113696. [Google Scholar] [CrossRef]

- Massari, J.M.; Moura, D.J.D.; Nääs, I.D.A.; Pereira, D.F.; Oliveira, S.R.D.M.; Branco, T.; Barros, J.D.S.G. Sequential Behavior of Broiler Chickens in Enriched Environments under Varying Thermal Conditions Using the Generalized Sequential Pattern Algorithm: A Proof of Concept. Animals 2024, 14, 2010. [Google Scholar] [CrossRef]

- Solis, I.L.; de Oliveira-Boreli, F.P.; de Sousa, R.V.; Martello, L.S.; Pereira, D.F. Using Thermal Signature to Evaluate Heat Stress Levels in Laying Hens with a Machine-Learning-Based Classifier. Animals 2024, 14, 1996. [Google Scholar] [CrossRef]

- De-Sousa, K.T.; Deniz, M.; Santos, M.P.D.; Klein, D.R.; Vale, M.M.D. Decision support system to classify the vulnerability of broiler production system to heat stress based on fuzzy logic. Int. J. Biometeorol. 2023, 67, 475–484. [Google Scholar] [CrossRef]

- Zhang, X.; Xu, T.; Zhang, Y.; Gao, Y.; Pan, J.; Rao, X. FCS-Net: Feather condition scoring of broilers based on dense feature fusion of RGB and thermal infrared images. Biosyst. Eng. 2024, 247, 132–142. [Google Scholar] [CrossRef]

- Lamping, C.; Derks, M.; Koerkamp, P.G.; Kootstra, G. ChickenNet-an end-to-end approach for plumage condition assessment of laying hens in commercial farms using computer vision. Comput. Electron. Agric. 2022, 194, 106695. [Google Scholar] [CrossRef]

- Lins, A.C.D.S.; Lourençoni, D.; Yanagi, T.; Miranda, I.B.; Santos, I.E.D.A. Neuro-fuzzy modeling of eyeball and crest temperatures in egg-laying hens. Eng. Agrícola 2021, 41, 34–38. [Google Scholar] [CrossRef]

- Ansarimovahed, A.; Banakar, A.; Li, G.; Javidan, S.M. Separating Chickens’ Heads and Legs in Thermal Images via Object Detection and Machine Learning Models to Predict Avian Influenza and Newcastle Disease. Animals 2025, 15, 1114. [Google Scholar] [CrossRef] [PubMed]

- Shetty, S.; Shetty, M. Poultry Disease Detection: A Comparative Analysis of CNN, SVM, and YOLO v3 Algorithms for Accurate Diagnosis. Gener. Artif. Intell. Concepts Appl. 2025, 155–171. [Google Scholar] [CrossRef]

- Pal, S.; Ghosh, A.; Sarkar, S.K. Development of Efficient Algorithm for Detection and Tracking of Infected Chicken at an Early Stage of Bird Flu with a Suitable Surveillance System Using RFID Technology. Power Devices Internet Things Intell. Syst. Des. 2025, 303–325. [Google Scholar] [CrossRef]

- Khan, I.; Peralta, D.; Fontaine, J.; de Carvalho, P.S.; Martinez-Caja, A.M.; Antonissen, G.; Tuyttens, F.; De Poorter, E. Monitoring Welfare of Individual Broiler Chickens Using Ultra-Wideband and Inertial Measurement Unit Wearables. Sensors 2025, 25, 811. [Google Scholar] [CrossRef]

- Hui, X.; Zhang, D.; Jin, W.; Ma, Y.; Li, G. Fine-tuning faster region-based convolution neural networks for detecting poultry feeding behaviors. Int. J. Agric. Biol. Eng. 2025, 18, 64–73. [Google Scholar] [CrossRef]

- Smith, B.; Long, Y.; Morris, D. An Automated LED Intervention System for Poultry Piling. In 2025 ASABE Annual International Meeting; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2025; p. 1. [Google Scholar] [CrossRef]

- Xue, H.; Ma, J.; Yang, Y.; Qu, H.; Wang, L.; Li, L. Aggressive behavior recognition and welfare monitoring in yellow-feathered broilers using FCTR and wearable identity tags. Comput. Electron. Agric. 2025, 235, 110284. [Google Scholar] [CrossRef]

- Karatsiolis, S.; Panagi, P.; Vassiliades, V.; Kamilaris, A.; Nicolaou, N.; Stavrakis, E. Towards understanding animal welfare by observing collective flock behaviors via AI-powered Analytics. In Proceedings of the 2024 19th Conference on Computer Science and Intelligence Systems (FedCSIS), Belgrade, Serbia, 8–11 September 2024; IEEE: New York, NY, USA, 2024; pp. 643–648. [Google Scholar] [CrossRef]

- Neethirajan, S. Decoding Vocal Indicators of Stress in Laying Hens: A CNN-MFCC Deep Learning Framework. Smart Agric. Technol. 2025, 11, 101056. [Google Scholar] [CrossRef]

- van Veen, L.A.; van den Brand, H.; van den Oever, A.C.; Kemp, B.; Youssef, A. An adaptive expert-in-the-loop algorithm for flock-specific anomaly detection in laying hen production. Comput. Electron. Agric. 2025, 229, 109755. [Google Scholar] [CrossRef]

- Niu, J.; Li, T.; Qi, K.; Liu, Y.; Deng, H.; Hu, Y.; Xu, D.; Wu, L.; Amevor, F.K.; Wang, Y.; et al. Research Note: Application of Convolutional Neural Networks for Feather Classification in Chickens. Poult. Sci. 2025, 104, 105254. [Google Scholar] [CrossRef]

- Zhou, H.; Zhu, Q.; Norton, T. Cough sound recognition in poultry using portable microphones for precision medication guidance. Comput. Electron. Agric. 2025, 237, 110541. [Google Scholar] [CrossRef]

- Zheng, H.; Ma, C.; Liu, D.; Huang, J.; Chen, R.; Fang, C.; Yang, J.; Berckmans, D.; Norton, T.; Zhang, T. Weight prediction method for individual live chickens based on single-view point cloud information. Comput. Electron. Agric. 2025, 234, 110232. [Google Scholar] [CrossRef]

- Campbell, M.; Miller, P.; Díaz-Chito, K.; Irvine, S.; Baxter, M.; Del Rincón, J.M.; Hong, X.; McLaughlin, N.; Arumugam, T.; O’COnnell, N. Automated precision weighing: Leveraging 2D video feature analysis and machine learning for live body weight estimation of broiler chickens. Smart Agric. Technol. 2025, 10, 100793. [Google Scholar] [CrossRef]

- Oh, Y.; Lyu, P.; Ko, S.; Min, J.; Song, J. Enhancing Broiler Weight Estimation through Gaussian Kernel Density Estimation Modeling. Agriculture 2024, 14, 809. [Google Scholar] [CrossRef]

- Li, X.; Wu, J.; Zhao, Z.; Zhuang, Y.; Sun, S.; Xie, H.; Gao, Y.; Xiao, D. An improved method for broiler weight estimation integrating multi-feature with gradient boosting decision tree. Animals 2023, 13, 3721. [Google Scholar] [CrossRef]

- Wu, Z.; Yang, J.; Zhang, H.; Fang, C. Enhanced Methodology and Experimental Research for Caged Chicken Counting Based on YOLOv8. Animals 2025, 15, 853. [Google Scholar] [CrossRef]

- Guo, Y.; Wu, Z.; Su, Z.; Zhao, J.; Li, X. PCCNet: A Point Supervised Dense Chickens Flock Counting Network. Smart Agric. Technol. 2025, 10, 100795. [Google Scholar] [CrossRef]

- Li, X.; Cai, M.; Tan, X.; Yin, C.; Chen, W.; Liu, Z.; Wen, J.; Han, Y. An efficient transformer network for detecting multi-scale chicken in complex free-range farming environments via improved RT-DETR. Comput. Electron. Agric. 2024, 224, 109160. [Google Scholar] [CrossRef]

- Liu, Y.; Zhou, H.; Ni, Z.; Jiang, Z.; Wang, X. An Accurate and Lightweight Algorithm for Caged Chickens Detection based on Deep Learning. Pak. J. Agric. Sci. 2024, 61, 403–415. [Google Scholar] [CrossRef]

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking floor eggs with machine vision in cage-free hen houses. Poult. Sci. 2023, 102, 102637. [Google Scholar] [CrossRef]

- Ren, Y.; Huang, Y.; Wang, Y.; Zhang, S.; Qu, H.; Ma, J.; Wang, L.; Li, L. A high-performance day-age classification and detection model for chick based on attention encoder and convolutional neural network. Animals 2022, 12, 2425. [Google Scholar] [CrossRef] [PubMed]

- Amirivojdan, A.; Nasiri, A.; Zhou, S.; Zhao, Y.; Gan, H. ChickenSense: A Low-Cost Deep Learning-Based Solution for Poultry Feed Consumption Monitoring Using Sound Technology. AgriEngineering 2024, 6, 2115–2129. [Google Scholar] [CrossRef]

- Nasiri, A.; Amirivojdan, A.; Zhao, Y.; Gan, H. Estimating the feeding time of individual broilers via convolutional neural network and image processing. Animals 2023, 13, 2428. [Google Scholar] [CrossRef] [PubMed]

- Xin, C.; Li, H.; Li, Y.; Wang, M.; Lin, W.; Wang, S.; Zhang, W.; Xiao, M.; Zou, X. Research on an identification and grasping device for dead yellow-feather broilers in flat houses based on deep learning. Agriculture 2024, 14, 1614. [Google Scholar] [CrossRef]

- Zhang, Y.; Lai, Z.; Wang, H.; Jiang, F.; Wang, L. Autonomous navigation using machine vision and self-designed fiducial marker in a commercial chicken farming house. Comput. Electron. Agric. 2024, 224, 109179. [Google Scholar] [CrossRef]

- Li, G.; Chesser, G.D.; Purswell, J.L.; Magee, C.; Gates, R.S.; Xiong, Y. Design and development of a broiler mortality removal robot. Appl. Eng. Agric. 2022, 38, 853–863. [Google Scholar] [CrossRef]

- Pirompud, P.; Sivapirunthep, P.; Punyapornwithaya, V.; Chaosap, C. Application of machine learning algorithms to predict dead on arrival of broiler chickens raised without antibiotic program. Poult. Sci. 2024, 103, 103504. [Google Scholar] [CrossRef]

- You, J.; Lou, E.; Afrouziyeh, M.; Zukiwsky, N.M.; Zuidhof, M.J. A supervised machine learning method to detect anomalous real-time broiler breeder body weight data recorded by a precision feeding system. Comput. Electron. Agric. 2021, 185, 106171. [Google Scholar] [CrossRef]

- Johansen, S.V.; Jensen, M.R.; Chu, B.; Bendtsen, J.D.; Mogensen, J.; Rogers, E. Broiler FCR optimization using norm optimal terminal iterative learning control. IEEE Trans. Control Syst. Technol. 2019, 29, 580–592. [Google Scholar] [CrossRef]

- Ma, C.; Zhang, T.; Zheng, H.; Yang, J.; Chen, R.; Fang, C. Measurement method for live chicken shank length based on improved ResNet and fused multi-source information. Comput. Electron. Agric. 2024, 221, 108965. [Google Scholar] [CrossRef]

- Zhu, R.; Li, J.; Yang, J.; Sun, R.; Yu, K. In vivo prediction of breast muscle weight in broiler chickens using X-ray images based on deep learning and machine learning. Animals 2024, 14, 628. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Zheng, J.; An, B.; Ma, X.; Ying, F.; Kong, F.; Wen, J.; Zhao, G. Several models combined with ultrasound techniques to predict breast muscle weight in broilers. Poult. Sci. 2023, 102, 102911. [Google Scholar] [CrossRef] [PubMed]

- Castro, S.L.D.; Silva, I.J.D.; Nazareno, A.C.; Mota, M.D.O. Computer vision for morphometric evaluation of broiler chicken bones. Eng. Agrícola 2022, 42, e20210150. [Google Scholar] [CrossRef]

- Ji, H.; Xu, Y.; Teng, G. Predicting egg production rate and egg weight of broiler breeders based on machine learning and Shapley additive explanations. Poult. Sci. 2025, 104, 104458. [Google Scholar] [CrossRef]

- Bumanis, N.; Kviesis, A.; Paura, L.; Arhipova, I.; Adjutovs, M. Hen egg production forecasting: Capabilities of machine learning models in scenarios with limited data sets. Appl. Sci. 2023, 13, 7607. [Google Scholar] [CrossRef]

- Qin, X.; Lai, C.; Pan, Z.; Pan, M.; Xiang, Y.; Wang, Y. Recognition of abnormal-laying hens based on fast continuous wavelet and deep learning using hyperspectral images. Sensors 2023, 23, 3645. [Google Scholar] [CrossRef]

- Oliveira, E.B.; Almeida, L.G.B.D.; Rocha, D.T.D.; Furian, T.Q.; Borges, K.A.; Moraes, H.L.D.S.; Nascimento, V.P.D.; Salle, C.T.P. Artificial neural networks to predict egg-production traits in commercial laying breeder hens. Braz. J. Poult. Sci. 2022, 24, eRBCA-2021. [Google Scholar] [CrossRef]

- Quintana-Ospina, G.A.; Alfaro-Wisaquillo, M.C.; Oviedo-Rondon, E.O.; Ruiz-Ramirez, J.R.; Bernal-Arango, L.C.; Martinez-Bernal, G.D. Effect of environmental and farm-associated factors on live performance parameters of broilers raised under commercial tropical conditions. Animals 2023, 13, 3312. [Google Scholar] [CrossRef]

- Akinsola, O.M.; Sonaiya, E.B.; Bamidele, O.; Hassan, W.A.; Yakubu, A.; Ajayi, F.O.; Ogundu, U.; Alabi, O.O.; Adebambo, O.A. Comparison of five mathematical models that describe growth in tropically adapted dual-purpose breeds of chicken. J. Appl. Anim. Res. 2021, 49, 158–166. [Google Scholar] [CrossRef]

- Peng, J.; Xiao, R.; Wu, C.; Zheng, Z.; Deng, Y.; Chen, K.; Xiang, Y.; Xu, C.; Zou, L.; Liao, M.; et al. Characterization of the prevalence of Salmonella in different retail chicken supply modes using genome-wide and machine-learning analyses. Food Res. Int. 2024, 191, 114654. [Google Scholar] [CrossRef]

- Spyrelli, E.D.; Ozcan, O.; Mohareb, F.; Panagou, E.Z.; Nychas, G.J.E. Spoilage assessment of chicken breast fillets by means of fourier transform infrared spectroscopy and multispectral image analysis. Curr. Res. Food Sci. 2021, 4, 121–131. [Google Scholar] [CrossRef]

- Sekulska-Nalewajko, J.; Gocławski, J.; Korzeniewska, E.; Kiełbasa, P.; Dróżdż, T. The verification of hen egg types by the classification of ultra-weak photon emission data. Expert Syst. Appl. 2024, 238, 122130. [Google Scholar] [CrossRef]

- Chen, Z.; He, P.; He, Y.; Wu, F.; Rao, X.; Pan, J.; Lin, H. Eggshell biometrics for individual egg identification based on convolutional neural networks. Poult. Sci. 2023, 102, 102540. [Google Scholar] [CrossRef] [PubMed]

- Bischof, G.; Januschewski, E.; Juadjur, A. Authentication of laying hen housing systems based on egg yolk using 1H NMR spectroscopy and machine learning. Foods 2024, 13, 1098. [Google Scholar] [CrossRef] [PubMed]

- Cheng, T.; Li, P.; Ma, J.; Tian, X.; Zhong, N. Identification of four chicken breeds by hyperspectral imaging combined with chemometrics. Processes 2022, 10, 1484. [Google Scholar] [CrossRef]

- Seo, D.; Cho, S.; Manjula, P.; Choi, N.; Kim, Y.-K.; Koh, Y.J.; Lee, S.H.; Kim, H.-Y.; Lee, J.H. Identification of target chicken populations by machine learning models using the minimum number of SNPs. Animals 2021, 11, 241. [Google Scholar] [CrossRef]

- Horkaew, P.; Kupittayanant, S.; Kupittayanant, P. Noninvasive in ovo sexing in Korat chicken by pattern recognition of its embryologic vasculature. J. Appl. Poult. Res. 2024, 33, 100424. [Google Scholar] [CrossRef]

- Ghaderi, M.; Mireei, S.A.; Masoumi, A.; Sedghi, M.; Nazeri, M. Fertility detection of unincubated chicken eggs by hyperspectral transmission imaging in the Vis-SWNIR region. Sci. Rep. 2024, 14, 1289. [Google Scholar] [CrossRef]

- Groves, I.; Holmshaw, J.; Furley, D.; Manning, E.; Chinnaiya, K.; Towers, M.; Evans, B.D.; Placzek, M.; Fletcher, A.G. Accurate staging of chick embryonic tissues via deep learning of salient features. Development 2023, 150, dev202068. [Google Scholar] [CrossRef]

- Jia, N.; Li, B.; Zhao, Y.; Fan, S.; Zhu, J.; Wang, H.; Zhao, W. Exploratory study of sex identification for chicken embryos based on blood vessel images and deep learning. Agriculture 2023, 13, 1480. [Google Scholar] [CrossRef]

- Wang, J.; Cao, R.; Wang, Q.; Ma, M. Nondestructive prediction of fertilization status and growth indicators of hatching eggs based on respiration. Comput. Electron. Agric. 2023, 208, 107779. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, T.; Cuan, K.; Fang, C.; Zhao, H.; Guan, C.; Yang, Q.; Qu, H. Sex detection of chicks based on audio technology and deep learning methods. Animals 2022, 12, 3106. [Google Scholar] [CrossRef] [PubMed]

- Cuan, K.; Li, Z.; Zhang, T.; Qu, H. Gender determination of domestic chicks based on vocalization signals. Comput. Electron. Agric. 2022, 199, 107172. [Google Scholar] [CrossRef]

- Li, X.; Chen, X.; Wang, Q.; Yang, N.; Sun, C. Integrating bioinformatics and machine learning for genomic prediction in chickens. Genes 2024, 15, 690. [Google Scholar] [CrossRef]

- Bouwman, A.C.; Hulsegge, I.; Hawken, R.J.; Henshall, J.M.; Veerkamp, R.F.; Schokker, D.; Kamphuis, C. Classifying aneuploidy in genotype intensity data using deep learning. J. Anim. Breed. Genet. 2023, 140, 304–315. [Google Scholar] [CrossRef]

- Serva, L.; Marchesini, G.; Cullere, M.; Ricci, R.; Dalle Zotte, A. Testing two NIRs instruments to predict chicken breast meat quality and exploiting machine learning approaches to discriminate among genotypes and presence of myopathies. Food Control 2023, 144, 109391. [Google Scholar] [CrossRef]

- Klotz, D.F.; Ribeiro, R.; Enembreck, F.; Denardin, G.W.; Barbosa, M.A.; Casanova, D.; Teixeira, M. Estimating and tuning adaptive action plans for the control of smart interconnected poultry condominiums. Expert Syst. Appl. 2022, 187, 115876. [Google Scholar] [CrossRef]

- Shams, M.Y.; Elmessery, W.M.; Oraiath, A.A.T.; Elbeltagi, A.; Salem, A.; Kumar, P.; El-Messery, T.M.; El-Hafeez, T.A.; Abdelshafie, M.F.; El-Wahhab, G.G.A.; et al. Automated on-site broiler live weight estimation through YOLO-based segmentation. Smart Agric. Technol. 2025, 10, 100828. [Google Scholar] [CrossRef]

- Pangestu, G. Real-Time Chicken Counting System using YOLO for FCR Optimization in Small and Medium Poultry Farms. J. Dev. Res. 2025, 9, 1–9. [Google Scholar] [CrossRef]

- Wu, Z.; Zhang, H.; Fang, C. Research on machine vision online monitoring system for egg production and quality in cage environment. Poult. Sci. 2025, 104, 104552. [Google Scholar] [CrossRef] [PubMed]

- Yang, X.; Zhu, L.; Jiang, W.; Yang, Y.; Gan, M.; Shen, L.; Zhu, L. Machine Learning-Based Prediction of Feed Conversion Ratio: A Feasibility Study of Using Short-Term FCR Data for Long-Term Feed Conversion Ratio (FCR) Prediction. Animals 2025, 15, 1773. [Google Scholar] [CrossRef] [PubMed]

- Adejola, Y.A.; Sibanda, T.Z.; Ruhnke, I.; Boshoff, J.; Pokhrel, S.; Welch, M. Analyzing the Risk of Short-Term Losses in Free-Range Egg Production Using Commercial Data. Agriculture 2025, 15, 743. [Google Scholar] [CrossRef]

- Hélène, O.K.; Kuradusenge, M.; Sibomana, L.; Mwaisekwa, I.I. TinyML and IoT-Enabled System for Automated Chicken Egg Quality Analysis and Monitoring. Smart Agric. Technol. 2025, 12, 101162. [Google Scholar] [CrossRef]

- Sari, O.F.; Bader-El-Den, M.; Leadley, C.; Esmeli, R.; Mohasseb, A.; Ince, V. AI-driven food safety risk prediction: A transformer-based approach with RASFF database. British Food J. 2025, 127, 3427–3445. [Google Scholar] [CrossRef]

- Kong, Y.; Wen, Z.; Cai, X.; Tan, L.; Liu, Z.; Wang, Q.; Li, Q.; Yang, N.; Wang, Y.; Zhao, Y. Genetic traceability, conservation effectiveness, and selection signatures analysis based on ancestral information: A case study of Beijing-You chicken. BMC Genom. 2025, 26, 402. [Google Scholar] [CrossRef]

- Zhi, Y.; Geng, W.; Li, S.; Chen, X.; Challioui, M.K.; Chen, B.; Wang, D.; Li, Z.; Tian, Y.; Li, H.; et al. Advanced molecular system for accurate identification of chicken genetic resources. Comput. Electron. Agric. 2025, 231, 109989. [Google Scholar] [CrossRef]

- Gao, Z.; Zheng, J.; Xu, G. Molecular Mechanisms and Regulatory Factors Governing Feed Utilization Efficiency in Laying Hens: Insights for Sustainable Poultry Production and Breeding Optimization. Int. J. Mol. Sci. 2025, 26, 6389. [Google Scholar] [CrossRef]

- Rodriguez, M.V.; Phan, T.; Fernandes, A.F.; Breen, V.; Arango, J.; Kidd, M.T.; Le, N. Facial Chick Sexing: An Automated Chick Sexing System From Chick Facial Image. Smart Agric. Technol. 2025, 12, 101044. [Google Scholar] [CrossRef]

- Saetiew, J.; Nongkhunsan, P.; Saenjae, J.; Yodsungnoen, R.; Molee, A.; Jungthawan, S.; Fongkaew, I.; Meemon, P. Automated chick gender determination using optical coherence tomography and deep learning. Poult. Sci. 2025, 104, 105033. [Google Scholar] [CrossRef]

- Barbosa, L.V.S.; Lima, N.D.d.S.; Barros, J.d.S.G.; de Moura, D.J.; Estellés, F.; Ramón-Moragues, A.; Calvet-Sanz, S.; García, A.V. Predicting Risk of Ammonia Exposure in Broiler Housing: Correlation with Incidence of Health Issues. Animals 2024, 14, 615. [Google Scholar] [CrossRef]

- Liu, Y.; Zhuang, Y.; Ji, B.; Zhang, G.; Rong, L.; Teng, G.; Wang, C. Prediction of laying hen house odor concentrations using machine learning models based on small sample data. Comput. Electron. Agric. 2022, 195, 106849. [Google Scholar] [CrossRef]

- Weng, X.; Kong, C.; Jin, H.; Chen, D.; Li, C.; Li, Y.; Ren, L.; Xiao, Y.; Chang, Z. Detection of volatile organic compounds (VOCs) in livestock houses based on electronic nose. Appl. Sci. 2021, 11, 2337. [Google Scholar] [CrossRef]

- Cosan, A.; Atilgan, A. Setting up of an expert system to determine ammonia gas in animal livestock. J. Food Agric. Environ. 2012, 10, 910–912. Available online: https://hero.epa.gov/hero/index.cfm/reference/details/reference_id/2044779 (accessed on 25 September 2025).

- Kim, S.J.; Lee, M.H. Design and implementation of a malfunction detection system for livestock ventilation devices in smart poultry farms. Agriculture 2022, 12, 2150. [Google Scholar] [CrossRef]

- Silva, W.; Moura, D.; Carvalho-Curi, T.; Seber, R.; Massari, J. Evatuation system of exhaust fans used on ventilation system in commercial broiler house. Eng. Agrícola 2017, 37, 887–899. [Google Scholar] [CrossRef]

- Gonzalez-Mora, A.F.; Rousseau, A.N.; Larios, A.D.; Godbout, S.; Fournel, S. Assessing environmental control strategies in cage-free aviary housing systems: Egg production analysis and Random Forest modeling. Comput. Electron. Agric. 2022, 196, 106854. [Google Scholar] [CrossRef]

- Martinez, A.A.G.; Nääs, I.D.A.; de Carvalho-Curi, T.M.R.; Abe, J.M.; da Silva Lima, N.D. A Heuristic and data mining model for predicting broiler house environment suitability. Animals 2021, 11, 2780. [Google Scholar] [CrossRef]

- Yelmen, B.; Şahin, H.; Cakir, M.T. The use of artificial neural networks in energy use modeling in broiler farms: A case study of Mersin province in the Mediterranean region. Appl. Ecol. Environ. Res. 2019, 17, 13169–13183. [Google Scholar] [CrossRef]

- López-Andrés, J.J.; Aguilar-Lasserre, A.A.; Morales-Mendoza, L.F.; Azzaro-Pantel, C.; Pérez-Gallardo, J.R.; Rico-Contreras, J.O. Environmental impact assessment of chicken meat production via an integrated methodology based on LCA, simulation and genetic algorithms. J. Clean. Prod. 2018, 174, 477–491. [Google Scholar] [CrossRef]

- Rico-Contreras, J.O.; Aguilar-Lasserre, A.A.; Méndez-Contreras, J.M.; Cid-Chama, G.; Alor-Hernández, G. Moisture content prediction in poultry litter to estimate bioenergy production using an artificial neural network. Rev. Mex. De Ing. Química 2014, 13, 933–955. Available online: https://www.scielo.org.mx/pdf/rmiq/v13n3/v13n3a24.pdf (accessed on 25 September 2025).

- Karadurmus, E.; Cesmeci, M.; Yuceer, M.; Berber, R. An artificial neural network model for the effects of chicken manure on ground water. Appl. Soft Comput. 2012, 12, 494–497. [Google Scholar] [CrossRef]

- Leite, M.V.; Abe, J.M.; Souza, M.L.H.; de Alencar Nääs, I. Enhancing Environmental Control in Broiler Production: Retrieval-Augmented Generation for Improved Decision-Making with Large Language Models. AgriEngineering 2025, 7, 12. [Google Scholar] [CrossRef]

| Group (AND) | Keyword (OR) |

|---|---|

| Farming environment | “farm”, “breed”, “house” |

| Chicken type | “broiler”, “chicken”, “chick”, “cock”, “hen” |

| AI technologies | “artificial intelligence”, “machine learning”, “deep learning”, “neural network”, “natural language processing”, “transformer”, “generative adversarial network” |

| Category | Definition | Decision Rule |

|---|---|---|

| Welfare | Research that benefits the birds directly: improved health, reduced stress, better behavior, lower pain or handling time | Assign when the main objective and endpoints concern bird condition, health status, stress or behavior indices, mortality or lameness reduction, longer productive lifespan |

| Economic | Research that improves production efficiency or profitability | Assign when primary outcomes focus on productivity, cost, labor, feed efficiency, yield or uniformity, product quality grades, or return on investment |

| Environment | Research that improves environmental management or reduces environmental burden | Assign when endpoints center on emissions, waste handling, microclimate quality, energy consumption, or environmental impact indicators (for example ammonia levels) |

| Modality | Decision Rule | Example |

|---|---|---|

| Image | Images or video are the main data source used by the model | Object detection and tracking, segmentation, pose or activity recognition |

| Audio | Acoustic signals are the main data source | Vocalization analysis, distress or cough detection, barn acoustics anomalies |

| IoT Sensor | Non-image, non-audio physical sensors and integrated systems | Temperature and humidity sensing, ammonia or CO2 monitoring, RFID or accelerometers, robotics, ventilation telemetry |

| Production | Structured production or biological records are the main data source | Growth and weight records, egg counts and quality grades, feed intake, mortality, genetic or breeding records |

| Category | Keyword | Year of First Appearance | Number |

|---|---|---|---|

| Method | Deep Learning | 2020 | 46 |

| Machine Learning | 2019 | 43 | |

| System | 2019 | 31 | |

| Computer Vision | 2016 | 26 | |

| Artificial Intelligence | 2019 | 18 | |

| Machine Vision | 2021 | 13 | |

| Artificial Neural Network | 2010 | 13 | |

| Object | Chickens | 2012 | 21 |

| Poultry | 2016 | 15 | |

| Laying Hens | 2020 | 16 | |

| Purpose | Animal Welfare | 2019 | 29 |

| Performance | 2011 | 27 | |

| Behavior | 2016 | 27 | |

| Prediction | 2003 | 26 | |

| Classification | 2016 | 11 | |

| Growth | 2007 | 12 |

| Welfare Objective | Modality | AI Research Cases | References |

|---|---|---|---|

| Disease Monitoring and Prevention | Image | Deep neural networks analyze external features such as feathers, head, and feet to enable real-time monitoring and early prevention of diseases including fowlpox, avian cholera, footpad dermatitis, avian influenza, and others | [22,23,24,25,26] |

| Image | Image recognition of abnormal appearance in chicken droppings, eggs, and other products allows indirect inference of flock health, enabling indirect disease detection and welfare assurance | [27,28,29] | |

| Image | An automated dead-bird detection and alarm system based on image recognition prevents disease spread and safeguards overall flock welfare | [30,31,32,33] | |

| Image | Computer vision analysis of chicken locomotion postures accurately and promptly identifies lameness and other disorders, improving monitoring of locomotor health | [34,35] | |

| Audio | Acoustic analysis and machine learning monitor and classify abnormal sounds such as coughing to rapidly detect Newcastle disease, avian influenza, and similar illnesses, strengthening health surveillance | [36,37] | |

| IoT Sensor | Machine-learning analysis of RFID sensor data identifies parasitic infestations and aflatoxin poisoning, enabling early intervention and improved welfare | [38,39,40] | |

| Production | AI tools analyze feces and carcass sample data to quickly identify potential pathogens in the production environment, optimizing disease-control strategies and enhancing overall welfare | [41,42,43,44] | |

| Behavior Monitoring | Image | Computer vision automatically monitors daily behaviors such as feeding, drinking, dust-bathing, and activity level, quantifies comfort, and supports environmental optimization to improve welfare | [45,46,47,48] |

| Image | AI-based video analysis detects abnormal behaviors like feather pecking and piling in real time, enabling timely intervention and preventing injury or stress | [49,50,51] | |

| IoT Sensor | Sensors such as IMU and RFID record daily behavioral data; machine-learning algorithms evaluate aggressive and abnormal acts to ensure flock safety and quality of life | [52,53] | |

| Stress Monitoring | Image/IoT Sensor | Combining computer-vision analysis of mouth movements (open beak, gaping) with ambient temperature and humidity data automatically identifies heat stress, improving environmental control and welfare | [54,55,56,57] |

| Audio | Deep-learning models recognize and classify vocal expressions of different emotional states, providing real-time monitoring and management of stress and emotional welfare | [58,59,60,61] | |

| Production | AI analyses behavioral data to automatically detect and categorize degrees of heat stress, facilitating environmental adjustments and welfare improvement | [62,63,64] | |

| Health Scoring | Image | Image recognition assesses feather quality to evaluate individual growth and health status, supporting management decisions and enhancing flock welfare | [65,66] |

| Image | Thermal imaging monitors eye and comb temperatures to ensure environmental comfort and health | [67] | |

| Audio | AI analyses vocal characteristics after vaccination to evaluate vaccine efficacy and health status, optimizing immunization strategies and improving overall welfare | [68] |

| Economic Objective | Modality | AI Research Cases | References |

|---|---|---|---|

| Farming Management Optimization | Image | Use of 2D/3D computer vision to estimate body weight accurately, enabling automated individual and flock management and lowering labor costs while raising efficiency | [80,81,82,83] |

| Image | Neural-network video analysis for automatic bird counting, reducing manual inventory and improving management efficiency | [84,85,86,87] | |

| Image | Rapid floor-egg detection via image recognition, cutting losses and increasing egg yield and quality | [88] | |

| Image | Image recognition for fast and accurate estimation of chick age, supporting precision rearing and cost reduction | [89] | |

| Audio/IoT Sensor | AI analysis of feed intake patterns to optimize ration allocation and lower feed costs | [90,91] | |

| IoT Sensor | Robot patrols and automated dead-bird/egg collection to improve daily management while reducing labor and boosting efficiency | [92,93,94] | |

| Production | Machine-learning analysis of dead-on-arrival rates during transport to cut economic losses and raise logistics efficiency | [95] | |

| Production | Real-time AI analysis of body-weight data to detect anomalies and optimize feed-conversion ratio | [96,97] | |

| Growth and Performance Scoring | Image/IoT Sensor | Neural networks accurately measure body dimensions and weights for precise performance evaluation and management optimization | [98,99,100,101] |

| Production | Machine-learning models predict and evaluate laying performance, improving production planning and profits | [102,103,104,105] | |

| Production | AI integrates environmental factors within the farm to fine-tune conditions and optimize growth and productivity | [106,107] | |

| Product Quality Monitoring | Image/IoT Sensor | Spectral imaging, whole-genome sequencing, and machine learning assess meat quality and Salmonella risk, enhancing food safety, uniformity, and market competitiveness | [108,109] |

| Image/IoT Sensor | AI combining image and optical-sensor data automatically grades eggs, boosting market value | [110,111] | |

| Image/IoT Sensor/Production | High-spectral data traced by AI to verify meat and egg provenance, strengthening branding and consumer trust | [112,113,114] | |

| Gender Identification and Genetic Improvement | Image | Neural-network analysis of candled-egg images for early detection of fertilization and embryo sex, improving hatchery efficiency and profitability | [115,116,117,118,119] |

| Audio | AI analysis of chick vocalizations for early sex determination, lowering labor costs and increasing productivity | [120,121] | |

| Production | Integration of bioinformatics and machine learning for genomic prediction, reducing hereditary disease risk and enhancing large-scale economic returns | [122,123,124] | |

| Production | Deep learning combined with genetic algorithms for adaptive feeding control, significantly improving feed-conversion ratio and reducing costs | [125] |

| Environmental Objective | Modality | AI Research Cases | References |

|---|---|---|---|

| Odor-concentration prediction and correlation | Production | A multilayer neural network trained on health records and ammonia levels at different stocking densities predicts in-house ammonia exposure and links it to respiratory-disease incidence | [138] |

| IoT Sensor | A gradient-boosted decision-tree model, fed with small samples from ammonia (NH3) and hydrogen-sulfide sensors, forecasts odor levels in layer houses and confirms ammonia as the dominant driver | [139] | |

| IoT Sensor | Gas-chromatography-mass-spectrometry data combined with “electronic-nose” readings and a support-vector machine identify abnormal volatile compounds in real time | [140] | |

| IoT Sensor | An AI expert system that relies on low-cost ammonia sensors provides continuous NH3 monitoring and automatically adjusts the ventilation rate | [141] | |

| Ventilation-performance monitoring | IoT Sensor | A recurrent neural network analyses time-series data from fan sensors and controllers, detecting faults and abnormal airflow | [142] |

| IoT Sensor | Design a deep-learning-based platform that merges camera analysis to evaluate exhaust-fan performance in real time and issue maintenance alerts | [143] | |

| Environmental-control strategies | IoT Sensor | Principal-component and linear-discriminant analyses extract key features in cage-free houses; a random-forest model then simulates egg-production changes under alternative control strategies | [144] |

| IoT Sensor | A decision-tree classifier labels conditions as suitable, marginal, or unsuitable based on temperature, humidity, air speed and ammonia concentration, guiding environmental adjustments | [145] | |

| Production | A neural network fed with feed, energy-use and output records estimates total farm energy demand and suggests retrofit options for saving power | [146] | |

| Environmental-impact assessment | Production | Within a life-cycle-assessment framework, Monte-Carlo simulation and a genetic algorithm optimize the entire broiler-production chain, lowering environmental impact per kilogram of meat | [147] |

| Production | A neural network coupled with risk simulation predicts manure moisture content, evaluating its value as a bio-energy feedstock | [148] | |

| Production | A neural network refined by the Levenberg–Marquardt method models groundwater-contamination risk, forecasting how chicken manure affects fecal-coliform counts in nearby water sources | [149] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Z.; Willems, S.; Liu, D.; Norton, T. How AI Improves Sustainable Chicken Farming: A Literature Review of Welfare, Economic, and Environmental Dimensions. Agriculture 2025, 15, 2028. https://doi.org/10.3390/agriculture15192028

Wu Z, Willems S, Liu D, Norton T. How AI Improves Sustainable Chicken Farming: A Literature Review of Welfare, Economic, and Environmental Dimensions. Agriculture. 2025; 15(19):2028. https://doi.org/10.3390/agriculture15192028

Chicago/Turabian StyleWu, Zhenlong, Sam Willems, Dong Liu, and Tomas Norton. 2025. "How AI Improves Sustainable Chicken Farming: A Literature Review of Welfare, Economic, and Environmental Dimensions" Agriculture 15, no. 19: 2028. https://doi.org/10.3390/agriculture15192028

APA StyleWu, Z., Willems, S., Liu, D., & Norton, T. (2025). How AI Improves Sustainable Chicken Farming: A Literature Review of Welfare, Economic, and Environmental Dimensions. Agriculture, 15(19), 2028. https://doi.org/10.3390/agriculture15192028