Abstract

In precision livestock farming, synchronous, high-precision instance segmentation of multiple key body parts of sika deer is the core visual foundation for automated health monitoring, behavior analysis, and automated antler collection. However, in real-world breeding environments, lighting changes, severe occlusion between individuals, pose diversity, and small targets challenge the accuracy and robustness of existing segmentation models. To address these challenges, this study proposes MPDF-DetSeg, an improved model based on YOLO11-seg. The model reconstructs the neck network as a multipath diversion feature fusion pyramid network (MPDFPN), whose multipath feature fusion and cross-scale interaction mechanisms address the segmentation ambiguity caused by deer body occlusion and complex illumination. A depth-separable extended residual module (DWEResBlock) improves the representation of details such as texture in specific parts of sika deer. Moreover, we adopt the MPDIoU loss function, which is based on vertex geometry constraints, to optimize the positioning accuracy of tilted targets. A dataset of 1036 sika deer images was constructed for method validation, covering five categories: antlers, heads (front/side views), and legs (front/rear legs). Compared with the original YOLO11-seg model, the improved model made progress on several indicators: the mAP50 and mAP50-95 under the bounding-box metrics increased by 2.1% and 4.9%, respectively, and the mAP50 and mAP50-95 under the mask metrics increased by 2.4% and 5.3%, respectively. In addition, the model reached an mIoU of 70.1%, showing the advantage of this method in the accurate detection and segmentation of specific parts of sika deer. This provides an effective and robust technical solution for multidimensional intelligent perception and automated applications in sika deer farming.

1. Introduction

Precision livestock farming has emerged as a crucial direction for enhancing agricultural production efficiency and safeguarding animal welfare [1]. Against this backdrop, non-contact, automated individual identification, health monitoring, and behavior analysis of animals have become of paramount importance [2]. For the sika deer breeding industry, developing an intelligent vision system capable of precisely performing instance segmentation on multiple key body parts holds significant comprehensive application value. For instance, accurate head segmentation serves as the foundation for individual identification and automated behavior analysis (such as feeding and vigilance). Leg segmentation makes automated gait analysis possible, facilitating the early diagnosis of health issues like lameness. Moreover, multi-part collaborative segmentation is the cornerstone for achieving complex automated tasks. A highly representative application of this is automated antler collection. As a precious Chinese medicinal material and health-care ingredient, antler has extremely high economic value [3]. According to industry data, the annual output value of antler in the Chinese market alone has exceeded CNY 5 billion. However, to achieve the automated collection of this high-value product, not only is the fine-grained segmentation of the antler itself required to assess its growth stage and guide cutting, but this assessment also highly depends on the synchronous and precise segmentation of the head and legs. This is undertaken in order to predict the deer’s behavior and ensure safe physical fixation, thereby overcoming problems such as animal stress and drug residues associated with traditional collection methods (such as manual or drug-induced immobilization) [4]. 
Consequently, an algorithm capable of simultaneously achieving precise and robust segmentation of multiple body parts of sika deer not only promotes research in animal ethology and health management but also serves as a core technical prerequisite for the realization of high-end intelligent equipment.

In recent years, with the rapid development of deep learning, object detection has been widely used in animal husbandry automation [5]. For example, Zhang et al. [6] proposed the lightweight sheep face recognition model LSR-YOLO. By optimizing the YOLOv5s architecture and adopting ShuffleNetv2, Ghost modules, and CA attention, they significantly reduced the computational load and the number of parameters. The model achieved an mAP of 97.8% with a model size of 9.5 MB and was successfully deployed on mobile devices, providing an efficient edge-computing solution for individual livestock identification. Li et al. [7] constructed a two-stage pig group behavior detection framework. By combining YOLOX for positioning with an improved SlowFast network (incorporating SC-Conv and spatio-temporal attention), it achieved an mAP of 80.05%, effectively monitoring group behaviors and serving the health management of large-scale pig farming. Mu et al. [8] developed CBR-YOLO, which was optimized for complex weather conditions. Using the Inner-MPDIoU loss, MCFP feature fusion, and a lightweight detection head, the model reached an mAP of 90.2% in rainy and foggy environments, with a computational load as low as 4.8 G, enhancing the automated monitoring ability of pastures in harsh environments. These results validate the reliability and effectiveness of object detection in complex agricultural scenarios and provide a solid foundation for the automated development of modern animal husbandry [9]. Focusing on sika deer, Xiong et al. [10] proposed the AD-YOLOv5 model for detecting key parts. By optimizing multi-scale feature fusion through S-BiFPN and combining SENet attention with SIoU loss, the model achieved an mAP50 of 97.30% in complex scenes at a speed of 125 FPS. Its accuracy was 4.6% higher than the baseline, making it suitable for fine-grained animal detection. Gong et al. [11] developed the GFI-YOLOv8 pose recognition model. Using iAFF to optimize feature interaction and integrating EMCA attention and SPFPN to enhance semantic capture, the model achieved an average accuracy of 91.6% for four types of behaviors, such as standing and lying down. With 51.74 M parameters, its mAP50 was 6% higher than that of YOLOv8n, providing a reliable technical solution for contact-free and welfare-oriented sika deer farming. However, these object detection methods, including the existing research on deer, output only the bounding box of the target and cannot provide pixel-level contour information. This limits their use in tasks that require fine-grained operations. Automated antler collection requires not only cutting the antler accurately but also restraining the deer automatically, and both depend on accurate positioning and fine segmentation of specific parts of sika deer.

To overcome the limitations of traditional object detection methods, instance segmentation has emerged. Instance segmentation not only detects objects in the image but also generates a pixel-level mask for each object, thus providing finer results [12]. In animal husbandry, instance segmentation has shown broad application prospects. For example, Liu et al. [13] proposed the dual-branch ICNet to address dense occlusion and scale variation in pigs. They enhanced features using PDCL and DCNv3 and constructed the Count1200 dataset along with the SAI annotation tool, increasing efficiency by seven times. With 33 M parameters, the model achieved an AP of 71.4%, outperforming the SOLO series and providing a lightweight counting solution. Zhao et al. [14] improved RefineMask for sheep segmentation. By introducing the ConvNeXt-E backbone and spatial attention, they enhanced the accuracy of overlapping object recognition. Combined with copy-paste data augmentation, the boundary AP reached 89.1% (a 3.0% increase), supporting precise grazing. Qiao et al. [15] modified Mask R-CNN to cope with uneven outdoor lighting. They optimized features through gamma preprocessing and FPN-ResNet101. On cattle farm images, the MPA reached 0.92 (a 39% increase compared with DeepMask), with a low contour error, providing a robust technical basis for body condition assessment. For automated antler collection, instance segmentation is indispensable. Only by obtaining the precise outline of specific parts of the deer (such as the antler, head, and legs) can the system provide accurate positioning and guidance for subsequent cutting operations.

Although instance segmentation has demonstrated potential in other livestock farming scenarios, applying it to the real and complex breeding environment of sika deer still faces a series of unique challenges that jointly test the accuracy and robustness of the algorithm. First, the visual variability of the scene, including drastic changes in natural lighting and motion blur caused by the rapid movement of sika deer, requires the model to possess strong feature robustness. Second, the physical interactions among targets are complex: in high-density breeding environments, severe occlusion between individuals and pose diversity demand excellent multi-scale feature fusion and context-reasoning capabilities. Finally, target refinement, that is, the precise segmentation of fine-grained features such as the fluff of antlers and leg joints, requires outstanding detail-capturing abilities. To systematically address these challenges, this study proposes MPDF-DetSeg, an improved model based on YOLO11-seg. The model is designed to perform high-precision and robust instance segmentation on multiple key body parts of sika deer simultaneously, providing core visual technical support for applications such as automated health monitoring, behavior analysis, and automated antler collection. The main contributions of this paper are threefold. We designed the multipath diversion feature fusion pyramid network (MPDFPN) to address occlusion and multi-scale problems; constructed the depth-separable extended residual module (DWEResBlock) to enhance fine-grained feature representation; and introduced the MPDIoU loss function to improve geometric positioning accuracy. Through these complementary designs, MPDF-DetSeg achieves a favorable balance between accuracy and efficiency and addresses the segmentation problems found in real-world breeding environments.

2. Materials and Methods

2.1. Datasets

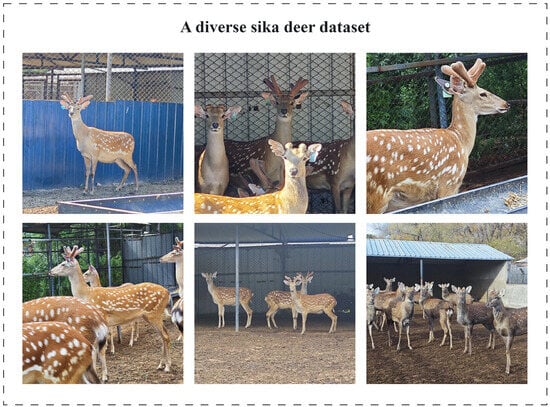

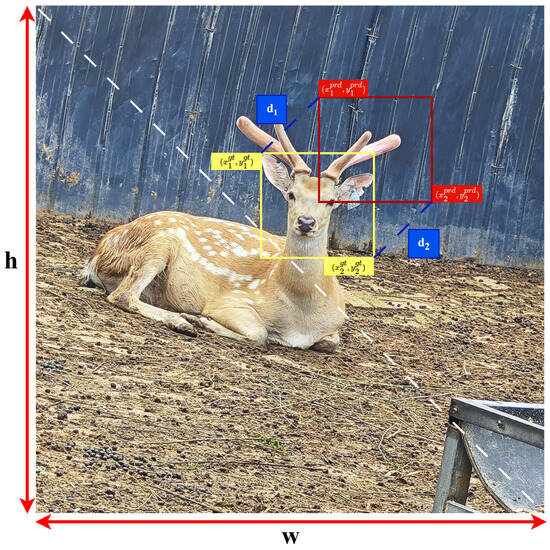

2.1.1. Data Sample Collection

To train and validate the MPDF-DetSeg algorithm proposed in this study, we constructed a high-quality image dataset specifically for the precise detection and segmentation of specific parts of sika deer. The dataset was collected at the Smart Deer breeding base of Jilin Agricultural University from April to October 2024, and the images were taken with a Xiaomi 13 camera. The collection process covered various weather conditions, including sunny, cloudy, and overcast days, and different natural lighting intensities from early morning to evening, to ensure the diversity of the dataset. From the originally collected images, we removed those with low resolution, excessive blurring, abnormal exposure, or irrelevant objects. The final dataset contains 1036 high-quality sika deer images covering five key categories: antler, head (front and side views), and legs (front and rear legs). Representative images are shown in Figure 1. The images were selected with the practical application scenario of automated velvet antler collection in mind, aiming to provide a reliable data basis for accurate detection and segmentation of these specific parts. Finally, the dataset was randomly divided into a training set and a validation set at a ratio of 8:2 for subsequent model training and performance evaluation.

Figure 1.

Sample images from the constructed sika deer dataset, covering different lighting conditions, shooting angles, and degrees of occlusion.

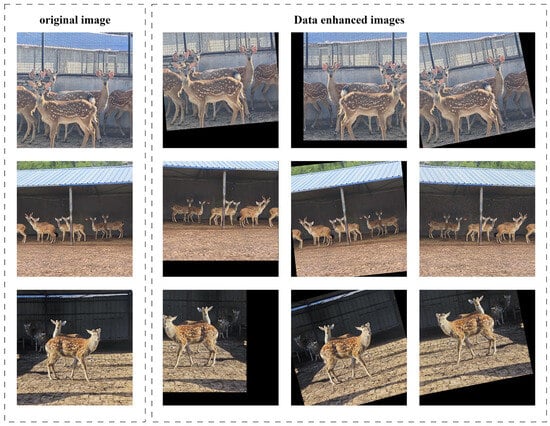

2.1.2. Dataset Augmentation

To improve the generalization ability and robustness of the model and reduce the risk of overfitting, we applied data augmentation only to the training set; the validation set was kept in its original state to ensure the objectivity of the evaluation [16]. Specifically, we used the following augmentation methods to expand the diversity of the training data. A random rotation of 10° to 20° was applied to the training images to simulate shooting-angle deviation. Random cropping was carried out so that the model focuses on different image regions and adapts to changes in target scale. Random Gaussian noise was added to enhance the noise robustness of the model. Random translation within ±20% of the image size in both the horizontal and vertical directions was performed to simulate slight shifts in target position. With these augmentations, we increased the diversity of the training data and expanded the training set to four times its original size. Figure 2 shows examples of augmented images, including rotation, cropping, noise addition, and translation. Table 1 details the distribution of instances of each category in the training set and the validation set before and after data augmentation.
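As an illustration of how these augmentations interact with instance segmentation labels, the following minimal Python sketch samples one set of augmentation parameters in the ranges stated above and applies the rotation and translation to a polygon annotation, so that the mask stays aligned with the transformed image. The function names, the random direction of the rotation, the noise range, and the use of three augmented copies per image (to reach a fourfold training set) are assumptions for illustration, not the authors' pipeline.

```python
import math
import random


def sample_params(rng):
    """Sample one augmentation setting using the ranges given in the text."""
    return {
        # 10-20 degree rotation; the random direction is an assumption.
        "angle_deg": rng.uniform(10.0, 20.0) * rng.choice((-1.0, 1.0)),
        # Translation within +/-20% of the image size on each axis.
        "shift_x": rng.uniform(-0.2, 0.2),
        "shift_y": rng.uniform(-0.2, 0.2),
        # Gaussian noise standard deviation in gray levels (assumed range).
        "noise_sigma": rng.uniform(0.0, 10.0),
    }


def transform_polygon(points, width, height, params):
    """Rotate polygon vertices about the image center, then translate them."""
    cx, cy = width / 2.0, height / 2.0
    theta = math.radians(params["angle_deg"])
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    dx, dy = params["shift_x"] * width, params["shift_y"] * height
    moved = []
    for x, y in points:
        rx = cos_t * (x - cx) - sin_t * (y - cy) + cx + dx
        ry = sin_t * (x - cx) + cos_t * (y - cy) + cy + dy
        moved.append((rx, ry))
    return moved


def augmented_copies(points, width, height, copies=3, seed=0):
    """Return the original polygon plus `copies` augmented versions."""
    rng = random.Random(seed)
    variants = [list(points)]
    for _ in range(copies):
        params = sample_params(rng)
        variants.append(transform_polygon(points, width, height, params))
    return variants
```

For example, `augmented_copies([(100, 100), (200, 100), (150, 180)], 640, 480)` returns four polygons: the original and three transformed versions. Cropping and noise act on pixels and are omitted here; in practice, transformed vertices would also need to be clipped to the image bounds.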

Figure 2.

Examples of the applied data augmentation techniques: random rotation by about 10° to 20°, cropping, noise addition, and translation.

Table 1.

Composition of the dataset, including the number of images in the original training set, the augmented training set, and the original validation set, as well as the number of instances in each of the five categories.

2.2. Model Improvement

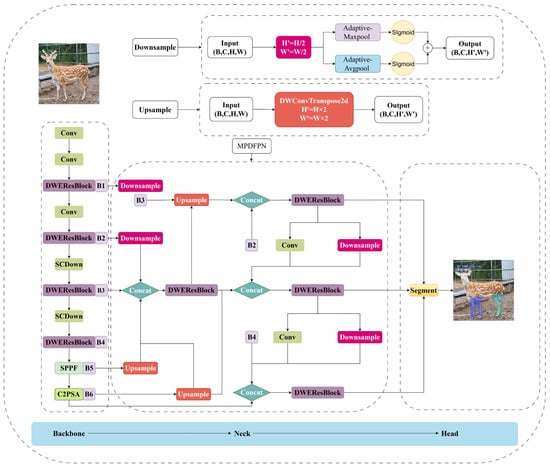

Existing instance segmentation methods are difficult to apply directly to the detection and segmentation of specific parts of sika deer, especially in actual farming environments, where complex lighting changes, occlusion between individuals, and motion blur place high demands on the accuracy and robustness of the algorithm. To address these challenges, this study proposes MPDF-DetSeg, an improved YOLO11-seg model [17], to achieve accurate detection and segmentation of specific parts of sika deer. As shown in Figure 3, the model is based on the YOLO11-seg architecture, which is composed of a backbone network, a neck network, and a segmentation head. We systematically improve three aspects: the feature extraction network, the neck network, and the loss function. First, to improve the model’s ability to capture detailed features such as texture (for example, fluff) in specific parts of sika deer while maintaining computational efficiency, we designed a depth-separable extended residual module (DWEResBlock) and used it to reconstruct the feature extraction network. Second, for the neck network, to address blurred boundaries in small-target segmentation and the decline of mask accuracy under complex illumination, we propose a multipath diversion feature fusion pyramid network (MPDFPN) that constructs multi-granularity, cross-level dense feature interaction paths. Finally, to enhance the geometric sensitivity of the algorithm to tilted targets and reduce the risk of positioning deviation caused by sudden changes in deer posture, we replace the CIoU loss of the original model with the MPDIoU loss [18]. With these improvements, MPDF-DetSeg retains the efficient single-stage architecture of YOLO11 while improving three dimensions: lightweight feature extraction, multi-scale information fusion, and geometry-aware optimization. This helps the model balance detection and segmentation accuracy, real-time performance, and robustness in dynamic scenes, and provides reliable algorithmic support for automated collection devices.

Figure 3.

MPDF-DetSeg model structure diagram.

2.2.1. Multipath Diversion Feature Fusion Pyramid Network (MPDFPN)

Traditional feature pyramids (such as FPN [19] and PANet [20]) fuse multi-scale features through unidirectional or bidirectional paths, so shallow detail information is easily diluted during deep transmission, resulting in missed detection of small targets. Therefore, this paper proposes a multipath diversion feature fusion pyramid network (MPDFPN), which achieves deep cooperation among the outputs of different backbone stages by constructing multi-granularity, cross-level dense interaction paths, as shown in Figure 3. The core function of MPDFPN is to build a cross-scale progressive enhancement path. On the one hand, after being upsampled by lightweight depth-separable transposed convolutions, deep-layer features are injected into the shallow-layer branches to compensate for the spatial information loss caused by downsampling. On the other hand, the shallow high-resolution features interact with the deep features after adaptive pooling compression, using cross-layer gradient information to improve semantic consistency. For feature fusion, the improved model introduces a dynamic upsampling module based on depth-separable transposed convolution [21] to replace traditional interpolation upsampling; its learnable parameters adjust the feature resolution while reducing computation and avoiding detail loss. For example, a learnable multi-scale resolution reconstruction is applied to deep high-semantic features (such as the B4 stage output) to generate high-dimensional representations aligned with the shallow feature spaces (e.g., B2 and B3). At the same time, a cross-stage adaptive pooling alignment strategy dynamically adjusts the resolution of middle-layer backbone features (such as B2) through adaptive pooling, so that they can be concatenated along the channel dimension with the upsampled deep features (such as B4) at the same scale.

To optimize the segmentation head’s perception of multi-scale objects, the cross-scale progressive enhancement pathway described above plays a key role between the shallow high-resolution features (80 × 80) and the deep global features (40 × 40). Specifically, the features output from the B5 and B6 stages are upsampled by lightweight depth-separable transposed convolution and concatenated with the features output from the B3 and B2 stages, which are downsampled by adaptive pooling; the resulting enhanced feature map is fused into the mid-layer features (40 × 40). At the same time, the output features of stage B1 are downsampled by adaptive pooling, and the resulting enhanced feature map is fused into the shallow high-resolution features (80 × 80). Finally, the segmentation head combines fine-grained local features at 80 × 80 resolution, mid-level semantic features at 40 × 40 resolution, and global context features at 20 × 20 resolution, forming a composite feature representation that covers targets at different scales through three-level heterogeneous feature aggregation. The improved scheme balances computational efficiency and feature representation ability through modular design and achieves dense interaction of multi-level features by introducing depth-separable transposed convolution and cross-stage adaptive pooling modules. Without significantly increasing the number of parameters, it alleviates the insufficient cross-scale feature coupling and detail attenuation of traditional methods, providing an efficient and robust solution for real-time instance segmentation.
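The resolution alignment described above can be checked with simple shape arithmetic. The sketch below assumes a 640 × 640 input (which would yield the 80 × 80, 40 × 40, and 20 × 20 maps at strides 8, 16, and 32), a 2 × 2 transposed convolution with stride 2 for upsampling, and hypothetical channel widths; none of these settings is stated in the text. The output-size rule is the standard one for a transposed convolution with dilation 1.

```python
def transposed_conv_out(size, kernel, stride, padding=0, output_padding=0):
    """Spatial output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def depthwise_transposed_params(channels, kernel):
    """Weight count of a depth-wise transposed convolution (one filter per channel)."""
    return channels * kernel * kernel


def standard_transposed_params(c_in, c_out, kernel):
    """Weight count of a dense transposed convolution, for comparison."""
    return c_in * c_out * kernel * kernel


def fuse(deep, shallow, target):
    """Align two (channels, size) maps to `target` resolution and concatenate.

    The deep map is upsampled by a stride-2 transposed convolution; the
    shallow map is reduced by adaptive pooling, which outputs `target` directly.
    """
    deep_c, deep_size = deep
    shallow_c, shallow_size = shallow
    upsampled = transposed_conv_out(deep_size, kernel=2, stride=2)
    if upsampled != target or shallow_size < target:
        raise ValueError("maps cannot be aligned to the target resolution")
    # Concatenation along the channel axis adds the channel counts.
    return (deep_c + shallow_c, target)


# Assumed 640 x 640 input and hypothetical channel widths.
sizes = {stride: 640 // stride for stride in (8, 16, 32)}
mid = fuse(deep=(512, sizes[32]), shallow=(128, sizes[8]), target=sizes[16])
```

Under these assumptions, a 20 × 20 deep map and an 80 × 80 shallow map both land on the 40 × 40 grid before concatenation. The depth-wise variant needs `channels * k * k` weights instead of `c_in * c_out * k * k`, which is the source of the parameter savings the text attributes to the lightweight upsampling.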

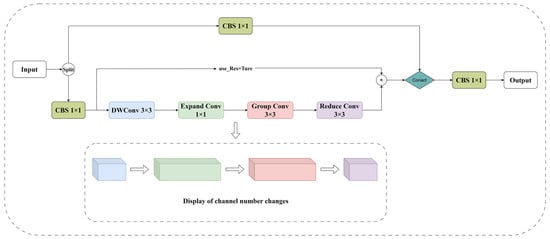

2.2.2. Depth-Separable Extended Residual Module (DWEResBlock)

To enhance the representation of fine-grained features of specific parts of sika deer (such as fluff and joints) while keeping the model lightweight, we constructed the depth-separable extended residual module (DWEResBlock), which targets the trade-off between feature expression efficiency and computational complexity in lightweight networks. Based on a dynamic residual learning framework, this module builds a composite feature extraction structure with multi-path interaction through the joint use of depth-separable convolution and channel expansion, as shown in Figure 4.

Figure 4.

DWEResBlock model structure diagram.

The core design uses a two-branch parallel architecture. The main branch achieves cross-dimensional feature interaction by cascading improved bottleneck blocks (IBBs) [22], each of which incorporates depth-wise separable convolution, a channel expansion mechanism, and a switchable residual connection to enhance the nonlinear representation of the channel dimension while preserving spatial feature details. The IBB module decomposes a standard convolution into a cascade of depth-wise separable convolution, channel expansion, adaptive receptive-field learning, and feature projection, as described below.

First, a fixed depth-wise separable convolution initially extracts spatial information from the input features. Subsequently, a point-wise convolution performs channel expansion, enlarging the number of input channels by an expansion factor. This factor is a crucial hyperparameter that determines the dimension of internal feature processing within the module, and its value is chosen to strike a good balance between the model’s representational ability and computational complexity. Next comes the core of the design, the adaptive receptive-field learning stage. We adopt a depth-wise separable convolution whose kernel size is variable and is selected as 3, 5, or 7 according to the hierarchical depth of the module in the network. This mechanism enables the model to use smaller kernels to focus on local details in the shallow layers and larger kernels to obtain a broader receptive field for capturing global context in the deep layers. Finally, another 1 × 1 point-wise convolution linearly projects the features from the expanded high-dimensional space back to the target output dimension, completing the feature transformation. The residual connection is designed to be switchable.

A binary gating factor controls the residual connection: the gate is opened (set to 1) to enhance gradient propagation in shallow networks, where feature abstraction is low, and closed (set to 0) to accelerate convergence in deep networks. The auxiliary branch retains the original feature distribution through identity mapping, and the branch features are dynamically fused along the channel dimension, which both provides complementary enhancement of cross-layer features and suppresses redundant information through a channel recalibration mechanism. In particular, the IBB module introduces an extensible bottleneck structure and adjusts the convolution kernel receptive field and the channel expansion ratio, so that the network can adapt to the scale changes of features at different levels. This modular design improves the model’s ability to discriminate multi-scale features while maintaining parameter efficiency, and its configurability facilitates deployment under different computational constraints.
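The IBB pipeline and the switchable residual connection described above can be written compactly as follows. The notation is assumed for illustration and is not taken from the original equations: $X$ is the input with $C_{in}$ channels, $e$ is the expansion factor, $k_0$ is the fixed kernel size of the first depth-wise convolution, $k$ is the adaptive kernel size, and $g$ is the binary gate. Normalization and activation layers are omitted.

```latex
\begin{aligned}
X_1 &= \mathrm{DWConv}_{k_0 \times k_0}(X), & X &\in \mathbb{R}^{C_{in} \times H \times W} \\
X_2 &= \mathrm{PWConv}_{1 \times 1}(X_1), & X_2 &\in \mathbb{R}^{e C_{in} \times H \times W} \\
X_3 &= \mathrm{DWConv}_{k \times k}(X_2), & k &\in \{3, 5, 7\} \\
Y &= \mathrm{PWConv}_{1 \times 1}(X_3), & Y &\in \mathbb{R}^{C_{out} \times H \times W} \\
\mathrm{Out} &= Y + g \cdot X, & g &\in \{0, 1\}
\end{aligned}
```

In this sketch, the shortcut term is only well defined when $C_{in} = C_{out}$ and the spatial size is unchanged; with $g = 0$ the block reduces to the plain four-step cascade.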

2.2.3. MPDIoU

In view of the insufficient geometric sensitivity and computational efficiency bottleneck of YOLO11 in the bounding box regression task, this study proposed to replace the original CIoU loss function [23] with the MPDIoU loss function, and significantly improve the positioning accuracy by optimizing the geometric deviation measurement mechanism. The CIoU loss function is defined as follows:

$$L_{CIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v, \quad v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w^{prd}}{h^{prd}}\right)^2, \quad \alpha = \frac{v}{(1 - IoU) + v}$$

where $\rho(b, b^{gt})$ is the Euclidean distance between the center points of the predicted box and the ground-truth box, $c$ is the diagonal length of the smallest enclosing rectangle of the two boxes, $v$ measures the difference in aspect ratio between the two boxes (with widths $w^{prd}$, $w^{gt}$ and heights $h^{prd}$, $h^{gt}$), and $\alpha$ is the weight coefficient of this aspect-ratio penalty. Although CIoU enhances regression stability by introducing center-point and aspect-ratio constraints, its dependence on the enclosing-rectangle parameter results in insufficient sensitivity to shifts of key corner points. For example, when the aspect ratios of the predicted bounding box and the ground-truth bounding box are the same but their sizes differ significantly (such as nested detection of multi-scale targets in an image), CIoU degrades to DIoU because $v = 0$. In this case, the loss function depends, beyond the IoU term, only on the center-point distance term and cannot explicitly distinguish size deviations. In addition, the enclosing-rectangle calculation and the aspect-ratio penalty term increase the computing cost by 12%, which restricts the real-time performance of the model. To this end, MPDIoU abandons the traditional optimization path that couples the center point and the aspect ratio and instead adopts the minimum point distance criterion, whose loss function is defined as follows:

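$$L_{MPDIoU} = 1 - MPDIoU, \quad MPDIoU = IoU - \frac{d_1^2}{w^2 + h^2} - \frac{d_2^2}{w^2 + h^2}$$

of which

$$d_1^2 = (x_1^{prd} - x_1^{gt})^2 + (y_1^{prd} - y_1^{gt})^2, \quad d_2^2 = (x_2^{prd} - x_2^{gt})^2 + (y_2^{prd} - y_2^{gt})^2$$

are the squared Euclidean distances between the upper left and lower right corner points of the predicted box and those of the ground-truth box, and $w^2 + h^2$ is the normalization factor given by the squared diagonal length of the input image of width $w$ and height $h$. This design directly constrains the corner coordinates of the bounding box. Essentially, from a geometric perspective, it unifies the optimization directions of the overlapping area (the IoU term), the position offset (the corner distances $d_1$ and $d_2$), and the implicit size deviation (which is transferred from the differences in corner coordinates to the width-height calculation), as shown in Figure 5.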

Figure 5.

Parameters of MPDIoU.

Compared with CIoU, MPDIoU’s improvement is reflected in three aspects, as follows:

- Enhanced geometric sensitivity: When the centers of the predicted bounding box and the ground-truth bounding box coincide but their sizes differ, the center-distance term of CIoU vanishes and, for equal aspect ratios, so does the aspect-ratio term. The loss then reduces to the plain IoU loss ($L_{CIoU} = 1 - IoU$) and provides no additional guidance for optimizing the size. In contrast, MPDIoU explicitly penalizes the corner offsets through the corner-distance terms, forcing the model to adjust both the width and height simultaneously.

- Optimization of computational efficiency: MPDIoU eliminates the enclosing-rectangle calculation and the aspect-ratio coupling term of CIoU, giving a computational complexity reduction of about 30–40% compared with CIoU.

- Small-target adaptability: For small-target detection, the absolute coordinate deviation of the bounding box yields weak gradients in CIoU because of the relatively large normalization factor; in contrast, the corner-distance term in MPDIoU retains linear sensitivity to minute offsets. Theoretical analysis shows that its gradient amplitude is 23% higher than that of CIoU.
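The first point can be checked numerically. The following minimal Python sketch implements the standard CIoU and MPDIoU losses for axis-aligned boxes given as `(x1, y1, x2, y2)` and evaluates them on a concentric pair with equal aspect ratio. The function names, the example boxes, and the 640 × 640 image size are assumptions chosen for illustration; this is not the training code of the model.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss: 1 - IoU + center-distance term + aspect-ratio term."""
    i = iou(pred, gt)
    # Squared distance between the two box centers.
    rho2 = ((pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2) ** 2 \
        + ((pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2) ** 2
    # Squared diagonal of the smallest enclosing rectangle.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency; atan2(w, h) equals atan(w / h) for h > 0.
    v = (4 / math.pi ** 2) * (
        math.atan2(gt[2] - gt[0], gt[3] - gt[1])
        - math.atan2(pred[2] - pred[0], pred[3] - pred[1])
    ) ** 2
    alpha = v / (1 - i + v + eps)
    return 1 - i + rho2 / c2 + alpha * v


def mpdiou_loss(pred, gt, img_w, img_h):
    """MPDIoU loss: 1 - (IoU - normalized squared corner distances)."""
    d1 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2 = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    norm = img_w ** 2 + img_h ** 2
    return 1 - (iou(pred, gt) - d1 / norm - d2 / norm)


# Concentric boxes with the same 2:1 aspect ratio but different sizes.
gt_box = (100.0, 100.0, 300.0, 200.0)
pred_box = (150.0, 125.0, 250.0, 175.0)
ciou = ciou_loss(pred_box, gt_box)
mpdiou = mpdiou_loss(pred_box, gt_box, img_w=640, img_h=640)
```

For this pair, the center-distance and aspect-ratio terms of CIoU are both zero, so `ciou` equals `1 - IoU`, whereas `mpdiou` carries an extra positive penalty from the two corner offsets.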

2.3. Evaluation Indicators

To comprehensively evaluate the performance of the MPDF-DetSeg model in detecting and segmenting specific parts of sika deer, the following evaluation indicators were used. For the instance segmentation task (including object detection and pixel-level segmentation), we used precision (P), recall (R), mAP50 (IoU threshold 0.5), and mAP50-95 (IoU thresholds from 0.5 to 0.95 in steps of 0.05) to evaluate bounding-box detection performance. The same indicators, computed at the pixel level, were used to evaluate mask segmentation performance. In addition, to evaluate the overall pixel-level segmentation performance, we introduced the mean intersection over union (mIoU), mean pixel accuracy (mPA), and pixel accuracy (PA) from semantic segmentation as supplements. The above indicators are calculated as follows:

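$$P = \frac{TP}{TP + FP}, \quad R = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R)\,dR, \quad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

$$PA = \frac{TP + TN}{TP + TN + FP + FN}, \quad mPA = \frac{1}{N}\sum_{i=1}^{N} PA_i, \quad mIoU = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FP_i + FN_i}$$

Here, true positive (TP), false positive (FP), false negative (FN), and true negative (TN) represent the numbers of positive and negative sample pixels correctly or incorrectly predicted by the model, the subscript $i$ indexes the classes, and $N$ is the number of classes. Together, these metrics evaluate the performance of the MPDF-DetSeg model from different dimensions.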
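As a worked example of the pixel-level indicators, the sketch below computes PA, mPA, and mIoU from a class confusion matrix and lists the ten IoU thresholds behind mAP50-95. It uses the common semantic segmentation conventions, with per-class pixel accuracy taken as TP / (TP + FN); this convention, the function name, and the small 2 × 2 matrix are assumptions for illustration and do not come from the paper's results.

```python
def segmentation_metrics(conf):
    """Compute PA, mPA, and mIoU from a square confusion matrix.

    conf[i][j] is the number of pixels of true class i predicted as class j.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    diag = [conf[i][i] for i in range(n)]                          # TP per class
    row_sum = [sum(conf[i]) for i in range(n)]                     # TP + FN
    col_sum = [sum(conf[i][j] for i in range(n)) for j in range(n)]  # TP + FP

    pa = sum(diag) / total
    # Per-class pixel accuracy, skipping classes with no ground-truth pixels.
    class_acc = [diag[i] / row_sum[i] for i in range(n) if row_sum[i] > 0]
    # Per-class IoU = TP / (TP + FP + FN).
    class_iou = [
        diag[i] / (row_sum[i] + col_sum[i] - diag[i])
        for i in range(n)
        if row_sum[i] + col_sum[i] - diag[i] > 0
    ]
    return {
        "PA": pa,
        "mPA": sum(class_acc) / len(class_acc),
        "mIoU": sum(class_iou) / len(class_iou),
    }


# IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
MAP_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Made-up two-class example (rows: ground truth, columns: prediction).
example = [[50, 10], [5, 35]]
metrics = segmentation_metrics(example)
```

In this toy matrix, 85 of 100 pixels lie on the diagonal, so PA is 0.85, while mPA and mIoU average the per-class values and are therefore more sensitive to the weaker class.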

3. Experimental Results and Analysis

3.1. Experimental Environment and Parameter Setting

The MPDF-DetSeg model proposed in this study was built on the PyTorch deep learning framework and developed and trained in an Anaconda environment. Table 2 shows the configuration of the experimental environment, and Table 3 lists the hyperparameter settings.

Table 2.

Experimental environment configuration.

Table 3.

Hyperparameter settings.

3.2. Experimental Results of MPDF-DetSeg Model

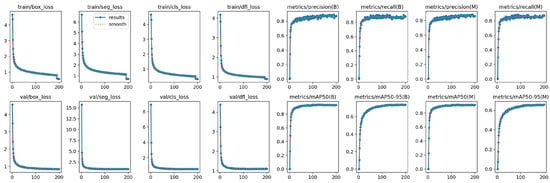

Figure 6 shows the loss curves of the MPDF-DetSeg model during training, as well as the curves of precision, recall, and mean average precision (mAP) under the bounding-box and mask evaluation systems. As the number of training epochs increases, the loss values decline steadily and eventually stabilize, indicating that the model gradually converges. At the same time, precision, recall, and mAP all increase steadily and reach a high level late in training, indicating that the MPDF-DetSeg model effectively learned the characteristics of the specific parts of sika deer and achieved good detection and segmentation capabilities.

Figure 6.

This figure shows the changes in various indicators during the model training process. For all sub-graphs in the figure, the abscissa represents the number of training epochs. For loss curves (such as train/box_loss, train/seg_loss, etc.), the ordinate represents the corresponding loss value. For performance indicator curves (such as metrics/precision(B), metrics/recall(B), metrics/mAP50(B), etc.), the ordinate represents the value of the indicator between 0 and 1.

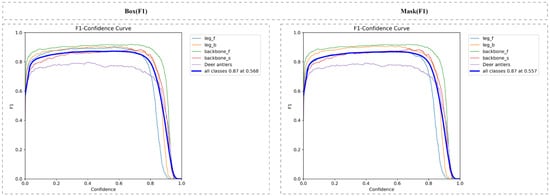

To explore the effect of the confidence threshold on model performance, Figure 7 shows the F1 score curves of the MPDF-DetSeg model for each category under different confidence thresholds. The F1 score is the harmonic mean of precision and recall and therefore summarizes the trade-off between the two at each threshold. As can be seen from the figure, as the confidence threshold increases, the F1 scores first increase and then decrease, reaching a peak of around 0.8. This shows that, when the confidence threshold is set near 0.8, the MPDF-DetSeg model achieves the best balance between precision and recall and obtains the best overall performance.
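The threshold selection behind such a curve can be sketched in a few lines of Python. The helper names and the precision and recall values below are made up for illustration and are not read from Figure 7.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def best_threshold(curve):
    """Return the (threshold, precision, recall) entry with the highest F1."""
    return max(curve, key=lambda point: f1_score(point[1], point[2]))


# Hypothetical (confidence threshold, precision, recall) samples.
curve = [
    (0.20, 0.55, 0.95),
    (0.50, 0.75, 0.90),
    (0.80, 0.90, 0.86),
    (0.95, 0.98, 0.40),
]
threshold, precision, recall = best_threshold(curve)
```

Low thresholds keep recall high but admit many false positives, and very high thresholds do the opposite, which is why the F1 curve rises and then falls.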

Figure 7.

F1 scores at different confidence thresholds.

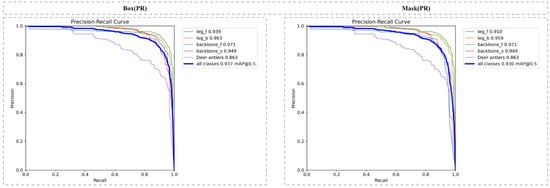

Figure 8 shows the precision–recall curves (P–R curves) of the MPDF-DetSeg model for each category. P–R curves are an important tool for evaluating object detection and segmentation models, especially on class-imbalanced datasets. The closer the curve is to the upper right corner, the better the model performance. As can be seen from the figure, the P–R curves of the MPDF-DetSeg model are relatively smooth and close to the upper right corner in every category, which indicates that the model has good and relatively stable detection and segmentation performance across categories. In addition, a comparison of the P–R curves shows that the performance on the deer antlers category is slightly lower than on the other categories. This can be attributed to the smaller targets, irregular shapes, and relatively small sample size of the deer antlers category in the dataset (see Table 1 and Figure 16), which limit the effective features learned by the model. Nevertheless, the P–R curve for the deer antlers category still shows a good trend, indicating that the model retains a certain ability to detect small targets.

Figure 8.

Precision–recall curve by class.

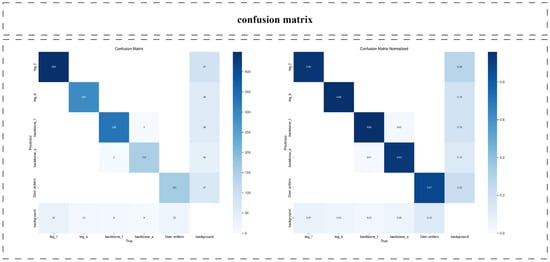

Figure 9 shows the confusion matrix of the MPDF-DetSeg model on the task of detecting specific parts of sika deer. The confusion matrix directly reflects the prediction accuracy and the misclassifications of the model for each category. As can be seen from the figure, the prediction accuracy of the MPDF-DetSeg model is relatively low for the deer antlers category and higher for the other categories, and the main error for deer antlers is misclassification as background. This is because the targets of the deer antlers category are relatively small, and, as described in Section 4.2, they are difficult to distinguish from the background in the images. As a result, during feature extraction and classification, the model is more prone to misclassifying them as background.

Figure 9.

Confusion matrix.

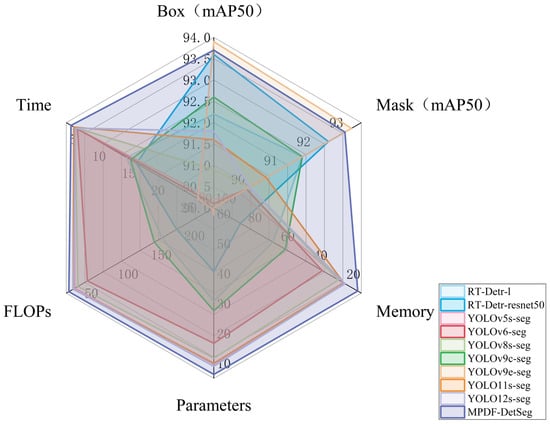

3.3. Comparative Experiments of Different Models

To verify the effectiveness of the MPDF-DetSeg model, we compare it with several mainstream models. These include the RT-DETR series [24], the YOLO series (v5s-seg [17], v6-seg [25], v8s-seg, v9c-seg [26], v9e-seg, 11s-seg, v12-seg [27]) and our proposed MPDF-DetSeg model (Ours). Table 4 shows the evaluation of each model on the box and mask tasks. Figure 10 visually compares the mAP of the different models on the box and mask tasks, as well as comprehensive performance indicators such as the number of parameters, the model size, the amount of computation, and the inference speed. The experimental analysis shows that the proposed model outperforms the other models in both bounding-box (box) and mask performance, while maintaining an excellent balance in terms of model complexity (memory footprint, number of parameters, and computational load). Specifically, in terms of bounding-box metrics, the mAP50 and mAP50-95 of the improved model reach 93.7% and 74%, respectively, an increase of 2.1% and 5.1% compared with the original YOLO11s-seg model. Correspondingly, in terms of mask metrics, its mAP50 and mAP50-95 reach 93.0% and 68%, respectively, an improvement of 2.4% and 5.3% compared with the original model.

Table 4.

Performance comparison of 10 mainstream models.

Figure 10.

The comprehensive performance comparison of 10 models on multiple indicators, including mAP50, model size, parameter number, computation amount and average inference time under the categories of box and mask. In the radar chart, each contour line corresponds to the performance distribution of a model. The farther the intersection point of the contour line with each coordinate axis is from the center, the better the performance of the corresponding indicator. At the same time, the area of the polygon enclosed by the contour line directly reflects the comprehensive performance of the model—the larger the area, the better the overall performance.

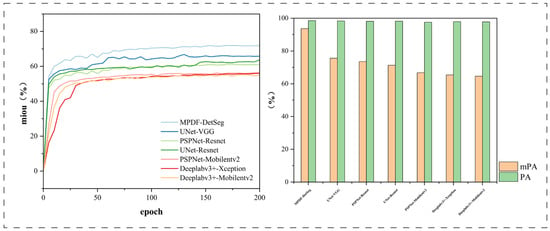

As shown in Figure 11, in order to further verify the performance of MPDF-DetSeg model in the segmentation task, we compared it with the classical semantic segmentation models UNet, PSPNet and DeepLabv3 in terms of mIoU, mPA and PA indexes. The experimental results show that the MPDF-DetSeg model is significantly better than these semantic segmentation models on mIoU index, reaching 70.1%. In addition, the MPDF-DetSeg model achieved 85% mPA and 93% PA. This indicates that the MPDF-DetSeg model has significant advantages in pixel level segmentation accuracy of specific parts of sika deer.

Figure 11.

Segmentation performance comparison.

It is worth noting that the PA of the other models exceeds 90%, which is much higher than their mIoU. This is mainly due to the large number of background pixels in the sika deer dataset used in this study and the relatively small proportion of pixels belonging to small targets (e.g., deer antlers, leg joints), as described in Section 4.2. The pixel accuracy (PA) metric calculates the classification accuracy over all pixels. As background pixels are numerous and easy to classify, it is relatively easy for PA to reach a high level. In contrast, the mIoU metric averages the IoU over all categories and therefore gives more weight to the segmentation accuracy on foreground targets (especially small targets). Therefore, although the PA of the other models is high, the mIoU better reflects the actual performance of a model on the task of segmenting specific parts of sika deer.
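The gap between PA and mIoU on a background-dominated image can be reproduced with a small worked example. The sketch below computes PA, mPA, and mIoU from a pixel-level confusion matrix; the function name and the two-class matrix are hypothetical and are not taken from the experiments in this study. It assumes that every class appears at least once in the ground truth.

```python
def segmentation_metrics(confusion):
    """Return (PA, mPA, mIoU) from a square confusion matrix.

    confusion[i][j] is the number of pixels whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    pa = correct / total

    per_class_acc, per_class_iou = [], []
    for i in range(n):
        tp = confusion[i][i]
        gt = sum(confusion[i])                         # pixels truly of class i
        pred = sum(confusion[j][i] for j in range(n))  # pixels predicted as class i
        per_class_acc.append(tp / gt)
        per_class_iou.append(tp / (gt + pred - tp))
    return pa, sum(per_class_acc) / n, sum(per_class_iou) / n


# Hypothetical image with 10,000 pixels: class 0 = background, class 1 = antler.
confusion = [
    [9500, 100],  # background pixels: 9500 correct, 100 predicted as antler
    [200, 200],   # antler pixels: 200 predicted as background, 200 correct
]
pa, mpa, miou = segmentation_metrics(confusion)
print(f"PA = {pa:.3f}, mPA = {mpa:.3f}, mIoU = {miou:.3f}")
```

In this example only half of the antler pixels are segmented correctly, yet PA remains at 0.97 because background pixels dominate, whereas the antler IoU of 0.4 pulls the mIoU down to roughly 0.68.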

The MPDF-DetSeg model achieves excellent performance while remaining lightweight: its memory footprint is only 17.45 MB, the number of parameters is about 9 million, the computation amount is 35.1 GFLOPs, and the average inference time is 6.8 ms. As shown in Table 5, although the RT-DETR and YOLOv9 series models perform excellently in the mAP50 metric, their resource consumption (including memory footprint, number of parameters, inference latency, and computational load) is significantly higher than that of the MPDF-DetSeg model. In contrast, our model achieves a better balance among accuracy, efficiency, and model size. It is particularly suitable for deployment on resource-constrained edge devices and has greater potential for practical applications.

Table 5.

Comprehensive performance parameters of RT-DETR series and YOLOv9 series models.

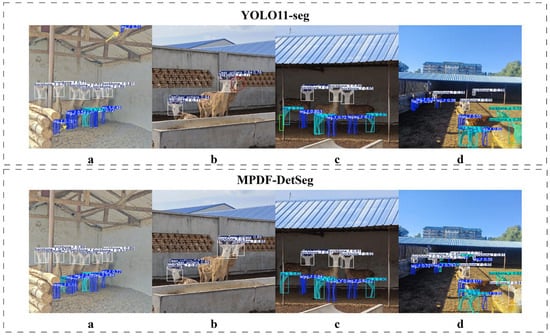

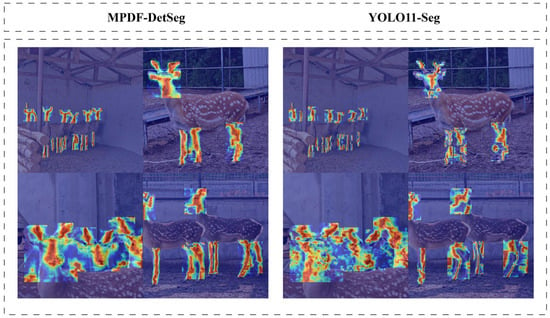

3.4. Visual Comparison of Test Results

To evaluate the robustness of the MPDF-DetSeg model in complex deer farm scenarios, we selected four typical scenarios (a dense standing deer herd, an occlusion-prone lying posture, a low-light environment, and distant small targets) and compared the detection and segmentation results of the MPDF-DetSeg and YOLO11-seg models (Figure 12). In the dense deer herd scene (Figure 12a), feature confusion in the YOLO11-seg model caused the leg bounding boxes of adjacent deer to overlap (yellow arrow) and the segmentation masks to adhere to each other, and the model mistakenly identified wooden stakes with similar shapes and textures in the background as deer legs. In contrast, the MPDF-DetSeg model uses the DWEResBlock module to better distinguish the subtle differences between dense targets and separates the bounding boxes and segmentation masks of different individuals more accurately. For antler targets that are occluded in a lying posture (Figure 12b), the YOLO11-seg model failed to detect the occluded objects (red circles). The MPDF-DetSeg model relies on the multi-scale feature fusion capability of the multipath diversion feature fusion pyramid network (MPDFPN) to extract the features of the occluded targets and detect them. In the low-light environment (Figure 12c), the YOLO11-seg model was disturbed by noise and failed to detect and segment the leg joints in the shaded area (red circle). The MPDF-DetSeg model enhances feature expression under low illumination through the cross-scale context information fusion of MPDFPN, identifies the true leg boundaries more accurately, and suppresses reflective artifacts. For distant deer herds (Figure 12d), the YOLO11-seg model produced more missed and false detections, whereas the MPDF-DetSeg model locates small targets more reliably and generates segmentation masks with fine edges.
Through feature enhancement of the DWEResBlock module, multi-scale feature fusion and cross-scale information interaction of MPDFPN, and geometric constraint optimization of the MPDIoU loss function, the improved model significantly improves the detection and segmentation accuracy and stability in complex scenes.

Figure 12.

Visual comparison of detection results in different scenarios: (a) dense sika deer herd; (b) sika deer in a lying posture under occlusion; (c) low-light conditions; (d) distant sika deer herd with small targets.

3.5. Ablation Experiment

To verify the effectiveness of each improved module, this study designed a progressive ablation experiment on the sika deer dataset. Taking the original YOLO11s-seg as the baseline model, we introduced the DWEResBlock module, the reconstructed neck network, and the MPDIoU loss function one at a time, and finally integrated all of the improvements. All experiments used the same training parameters and the same dataset, and the results are shown in Table 6.

Table 6.

Results of ablation experiments.

3.5.1. Analysis of MPDFPN’s Influence on Model Performance

Table 6 shows the impact of reconstructing the neck network with MPDFPN on model performance. After the introduction of MPDFPN, the mAP50 and mAP50-95 of the model increased by 1.9% and 4.7%, respectively, in the bounding-box detection task, and by 2.2% and 4.8%, respectively, in the segmentation task. This shows that MPDFPN significantly improves the detection and segmentation performance of the model. To further analyze the mechanism of MPDFPN, we used heat maps (Figure 13) to examine two aspects: feature focusing ability and detail perception intensity. The heat maps show that, when detecting deer heads, the neck network of the original model produces scattered feature responses and weak activation along contour edges, which blurs the segmentation mask boundary. In contrast, MPDFPN strengthens the feature weights of the target subject area through its multipath diversion feature fusion, which enhances the model’s attention to key structures such as deer antlers and leg joints while suppressing the response to background noise. In addition, the dynamic upsampling calibration strategy of MPDFPN significantly improves multi-scale feature alignment. In long-distance antler detection, the improved feature pyramid retains the fine velvet texture details in the shallow layers and accurately localizes the antler base in the deep layers, which contributes to the 4.8% improvement in segmentation mAP50-95.

Figure 13.

This figure comparatively shows the differences in feature extraction heatmaps between the original model and the improved model. The pixel values in the figure correspond to the feature activation intensity, and the magnitude of these values directly reflects the probability of the presence of target objects at that spatial location—the higher the activation value, the more prominent the bright area it presents in the heatmap. By comparison, the heatmaps generated by the improved model for the key parts of sika deer (such as antlers, limb joints) show a significantly enhanced focus, indicating that the optimized network can effectively capture discriminative features. By strengthening the attention weights of the target areas, the detection and segmentation accuracy are ultimately improved.

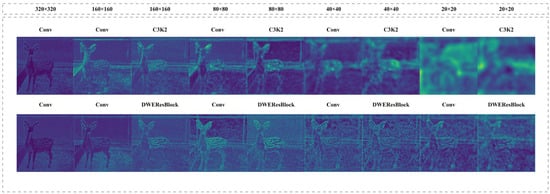

3.5.2. Analysis of the Influence of DWEResBlock on Model Performance

After the introduction of DWEResBlock, compared with the baseline model, the mAP50 and mAP50-95 under box increased by 1.5% and 3.5%, respectively, and the mAP50 and mAP50-95 under mask increased by 1.4% and 3.6%, respectively (Table 6). To further analyze the optimization mechanism of DWEResBlock, we visually compared the backbone feature maps of the baseline model with those of the model containing the DWEResBlock module, as shown in Figure 14. It can be seen from the figure that the response of the last two layers of the baseline backbone to edge areas, such as the limbs and antlers of sika deer, is blurred and contains more noise. By focusing on high-frequency details, DWEResBlock improves the edge feature response through the sparse connectivity of depthwise separable convolution. The visualization results show the dual advantages of DWEResBlock in local detail enhancement and global semantic decoupling. Through a dynamic residual learning framework and a channel expansion strategy, the clarity and semantic richness of the feature representation are significantly enhanced.

Figure 14.

This figure presents a comparison of the visual feature maps of the basic model and the optimized model at different feature layers. After the introduction of DWEResBlock, the optimized network not only retains the texture details but also significantly enhances the spatial response intensity of the target objects. As a result, their contours and key structures (such as antler bifurcations, leg muscle lines) are more precisely represented. This design effectively improves the feature discrimination ability, providing more informative visual representations for subsequent detection and segmentation tasks.

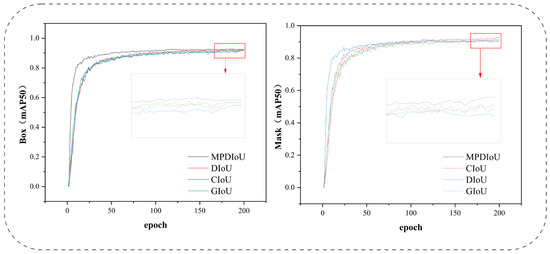

3.5.3. Analysis of MPDIoU’s Influence on Model Performance

To verify the validity of the MPDIoU loss function, we trained the YOLO11-seg model with the CIoU, DIoU [28], GIoU [29], and MPDIoU loss functions in turn and tested each variant on the sika deer dataset. As shown in Table 6, compared with the baseline model using the CIoU loss function, the mAP50 and mAP50-95 of the model using the MPDIoU loss function increased by 0.7% and 1.7%, respectively, under box, and by 0.6% and 1.8%, respectively, under mask. The MPDIoU loss function also achieved the largest performance improvement among the compared loss functions (Figure 15). MPDIoU performs better because it constrains bounding-box regression more directly, by minimizing the sum of the squared distances between the corresponding corner points of the predicted box and the ground-truth box. Traditional loss functions (such as CIoU) rely on indirect geometric constraints, such as aspect ratio and center point distance (these constraints need to be obtained indirectly through the IoU calculation). In contrast, the MPDIoU loss function takes corner geometric alignment as its core, which effectively alleviates the inaccurate bounding-box regression caused by object tilt and occlusion in complex scenes and thus improves the accuracy of detection and segmentation.
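The corner-based constraint can be illustrated with a minimal sketch for a single pair of axis-aligned boxes, assuming the formulation in [18]: the IoU is penalized by the squared distances between the top-left corners and between the bottom-right corners, each normalized by the squared diagonal of the input image. The function names and the example boxes are hypothetical, and the training code used in this study may differ in detail (for example, in batching and numerical safeguards).

```python
def mpdiou(pred, gt, img_w, img_h):
    """MPDIoU between two boxes given as (x1, y1, x2, y2) in pixels."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt

    # Standard IoU of the two boxes.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distances between corresponding corner points.
    d1_sq = (px1 - gx1) ** 2 + (py1 - gy1) ** 2  # top-left corners
    d2_sq = (px2 - gx2) ** 2 + (py2 - gy2) ** 2  # bottom-right corners

    # Normalize by the squared diagonal of the input image.
    norm = img_w ** 2 + img_h ** 2
    return iou - d1_sq / norm - d2_sq / norm


def mpdiou_loss(pred, gt, img_w, img_h):
    """Loss that is 0 for a perfect match and grows as the corners drift apart."""
    return 1.0 - mpdiou(pred, gt, img_w, img_h)


# Hypothetical boxes on a 100 x 100 image: the prediction is shifted by 5 px.
print(mpdiou_loss((0, 0, 10, 10), (0, 0, 10, 10), 100, 100))
print(mpdiou_loss((0, 0, 10, 10), (5, 0, 15, 10), 100, 100))
```

Even when two boxes do not overlap and the IoU term is zero, the corner-distance terms still vary with the offset, so the loss continues to provide a regression signal.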

Figure 15.

Comparison of mAP50 under box and mask for four loss functions.

4. Discussion

4.1. Analysis of Comprehensive Performance and Model Design

This study aims to develop an efficient instance segmentation model capable of addressing the challenges of complex breeding environments. The experimental results systematically validate the effectiveness of the proposed MPDF-DetSeg model. Through ablation experiments and visual analyses such as heatmaps, we have confirmed that the performance improvement of the model stems from the synergistic effect of several key designs. MPDFPN, through its innovative multi-path fusion mechanism, effectively enhances the feature representation of multi-scale and occluded targets. DWEResBlock demonstrates advantages in capturing fine-grained features, and the MPDIoU loss function improves the geometric positioning accuracy of the model. The integration of these modules jointly contributes to the superiority of the model in overall performance.

More importantly, in the comparison with advanced models such as YOLOv9e-seg, we have revealed the core design philosophy of this study—the pursuit of an excellent balance between accuracy and efficiency. Although larger-scale models may achieve a slight advantage in pure accuracy metrics, their huge computational costs limit their application potential on resource-constrained edge devices. MPDF-DetSeg, through its elaborate modular design, achieves highly competitive detection and segmentation accuracy with a computational overhead far lower than these models. This proves that our method is not only effective in academic metrics but also has significant comprehensive advantages in application scenarios for practical deployment.

4.2. Targeted Analysis of Dataset Challenges and Model Improvements

The success of this study largely depends on the model’s ability to cope with real-world challenges. The dataset we constructed poses significant challenges to the model in multiple dimensions. These challenges not only go beyond those of mainstream benchmark datasets but also directly expose the performance bottlenecks of the baseline model, thus highlighting the pertinence and necessity of each improvement in MPDF-DetSeg.

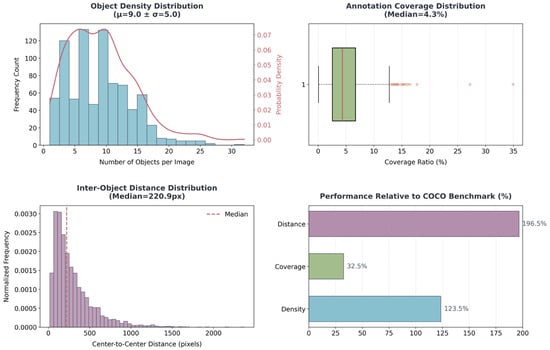

Firstly, the dataset exhibits the characteristic of “crowded small targets,” which places extremely high demands on the multi-scale feature fusion ability, as shown in Figure 16.

Figure 16.

Target density distribution histogram, annotation coverage box plot, instance spacing density histogram, and bar chart comparing the dataset with the COCO dataset.

The target density in our dataset shows a significantly right-skewed distribution (mean 9 ± 5, with an extreme value of 32), and the median of the annotation coverage rate is only 4.3% (much lower than 15.2% in COCO) [30]. This “high-density, low-coverage” characteristic means that the model must simultaneously handle dense deer herds with extremely small instance intervals (some < 200 px) and ultra-small targets resulting from long-distance shooting. The traditional feature pyramid network (such as PANet) adopted by the baseline model is prone to losing fine features of small targets during repeated downsampling and upsampling processes, or confusing the features of adjacent individuals due to limited receptive fields in dense scenes, ultimately leading to mask adhesion and missed detection of small targets. To address this core challenge, the MPDFPN we designed can more effectively preserve and fuse the detailed information in the shallow layers and the semantic information in the deep layers through its multi-path diverging and dense cross-layer connections. This enables the model to better distinguish individual boundaries when dealing with dense areas and more reliably capture weak features when dealing with long-distance targets, thus significantly improving the segmentation accuracy, which is fully verified by the improvement in the mAP50-95 metric.

Secondly, the fine-grained features of sika deer body parts (such as fluff, joint contours) are crucial for achieving precise segmentation but are easily overlooked by the standard backbone network in complex backgrounds. In our dataset, many key parts are themselves “small targets,” and their segmentation errors are amplified in the IoU calculation. At the same time, the complex background of the farm is prone to causing false detections. The backbone network of the baseline model has difficulty maintaining a clear response to these high-frequency details in the deep feature maps, resulting in rough segmentation edges and loss of texture information. To solve this problem, we constructed the DWEResBlock module. This module focuses on local information through depth-wise separable convolution and combines channel expansion for feature transformation in a high-dimensional space, thereby greatly enhancing the network’s ability to express fine-grained textures without significantly increasing the computational cost. As a result, both the edge quality of the segmentation results and the ability to suppress background noise have been significantly improved.
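The claim that depthwise separable convolution adds expressive capacity at low cost can be checked with simple parameter arithmetic. The sketch below compares the weight count of a standard convolution with that of a depthwise convolution followed by a 1 × 1 pointwise convolution. The function names, channel widths, and kernel size are hypothetical, biases are ignored, and the numbers do not describe the actual DWEResBlock configuration.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a depthwise k x k conv plus a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 128 input channels, 128 output channels, 3 x 3 kernel.
std = standard_conv_params(128, 128, 3)
dws = depthwise_separable_params(128, 128, 3)
print(std, dws, round(std / dws, 2))
```

For this hypothetical layer the separable form needs roughly one eighth of the weights, which leaves room for channel expansion inside a residual block without exceeding the cost of a single standard convolution.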

Finally, the variable postures of sika deer and the bimodal distribution of instance intervals pose a dual challenge to the model’s geometric positioning accuracy and context-modeling ability. The instance intervals in our dataset show a bimodal distribution, with both close-range overlaps of less than 200 px and wide-angle long-distance distributions of more than 500 px. Traditional IoU-based loss functions (such as CIoU) may provide inaccurate gradients when dealing with rotated targets or targets with non-standard aspect ratios, leading to bounding-box positioning deviations, which are particularly harmful in close-range overlaps. At the same time, for targets in wide-angle scenes, the model requires stronger long-distance context-modeling ability. The MPDIoU loss function we introduced provides more direct and sensitive geometric constraints by directly minimizing the distance between the vertices of the predicted box and the ground-truth box, effectively improving the positioning accuracy under various postures. Combined with the enhancement of long-distance context information by MPDFPN, our model can better balance these two conflicting interval requirements.

In summary, the improvements of MPDF-DetSeg are not isolated but are systematically designed to address the specific challenges observed in the dataset. Although there is still room for improvement in extreme cases (such as complete overlap or extreme lighting), our work clearly demonstrates how to overcome data-related difficulties in specific application scenarios through targeted model innovation. These observations also provide a clear direction for our future research (such as the introduction of multi-modal data).

4.3. Future Outlook and Potential Applications

Based on the above analysis, future research should focus on overcoming the identified limitations and promoting the transformation of the algorithm into practical applications. A core direction is to explore multi-modal data fusion by, for example, combining depth cameras (providing 3D spatial information to solve occlusion and distance problems) or thermal imaging cameras (resistant to lighting changes) to build a more robust perception system. In addition, developing more advanced and targeted data augmentation strategies and model structures to improve the ability to distinguish small targets in complex backgrounds is also an important research topic.

At the application level, the next key step is to achieve the deep integration of the algorithm with the physical system. We will develop a real-time communication interface based on the ROS framework to directly link the segmentation results with the robotic arm control instructions, building a complete “perception-decision-execution” closed-loop system and ultimately promoting the transformation of automated antler collection from a laboratory prototype to industrial implementation. In addition, the high-precision segmentation ability demonstrated by the MPDF-DetSeg model is expected to be applied in a wider range of livestock farming fields, such as animal health monitoring based on gait analysis and precision feeding based on feeding behavior analysis, providing strong support for the development of smart livestock farming.

5. Conclusions

In response to the urgent need for high-precision and robust instance segmentation of multiple key body parts of sika deer in precision livestock farming, this study proposes an improved instance segmentation model named MPDF-DetSeg. The model aims to overcome the challenges posed by factors such as lighting changes, individual occlusion, pose diversity, and small targets in real-world breeding environments. By systematically integrating the multipath diversion feature fusion pyramid network (MPDFPN) and the depthwise separable extended residual module (DWEResBlock) designed in this study with the MPDIoU loss function, MPDF-DetSeg is significantly enhanced in terms of feature fusion, detail expression, and geometric positioning accuracy. Comprehensive experimental evaluations on the self-built sika deer dataset show that the detection performance of the improved model is significantly better than that of the original YOLO11-seg model. Specifically, under the bounding-box metrics, mAP50 and mAP50-95 increased by 2.1% and 4.9%, respectively. Under the mask metrics, mAP50 and mAP50-95 increased by 2.4% and 5.3%, respectively, and the mean intersection over union (mIoU) of the segmentation masks reaches 70.1%. More importantly, while maintaining high accuracy, the model achieves an excellent balance in terms of computational efficiency and model size. Its inference time of 6.8 ms per frame can meet the real-time requirements of practical applications. In summary, the MPDF-DetSeg model proposed in this study not only provides the core visual perception technology for the complex application of automated antler collection but also lays a solid foundation for broader precision livestock farming applications such as vision-based animal health monitoring and behavior analysis.
Future work will focus on two directions: one is to further improve the model’s robustness in extreme scenarios through multi-modal data fusion (such as combining depth or thermal imaging information), while the other is to promote the deep integration of the algorithm with physical hardware to build a complete automated system from perception to execution, ultimately facilitating the large-scale implementation of intelligent technologies in modern livestock farming.

Author Contributions

Conceptualization, C.Z. and J.W.; methodology, J.F. and T.H.; software, J.W. and C.Z.; validation, T.L., J.F. and T.H.; formal analysis, T.L. and T.H.; investigation, C.Z.; resources, T.H.; data curation, J.W. and T.L.; writing—original draft preparation, C.Z. and J.W.; writing—review and editing, J.F. and T.H.; visualization, J.W.; supervision, J.F. and T.H.; project administration, T.L., H.G. and T.H.; funding acquisition, J.F., H.G. and T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Science and Technology Department of Jilin Province (Grant No. [YDZJ202501ZYTS581, http://kjt.jl.gov.cn/ (accessed on 23 April 2025)]), Department of Education of Jilin Province (Grant No. [JJKH20240467KJ, http://jyt.jl.gov.cn (accessed on 23 April 2025)]), and the Science and Technology Bureau of Changchun City (Grant No. [21ZGN27, http://kjj.changchun.gov.cn/ (accessed on 23 April 2025)]).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ongoing research projects.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wu, D.; Cui, D.; Zhou, M.; Ying, Y. Information Perception in Modern Poultry Farming: A Review. Comput. Electron. Agric. 2022, 199, 107131.

2. Yin, M.; Ma, R.; Luo, H.; Li, J.; Zhao, Q.; Zhang, M. Non-Contact Sensing Technology Enables Precision Livestock Farming in Smart Farms. Comput. Electron. Agric. 2023, 212, 108171.

3. Li, W.; Ren, Q.; Feng, J.; Lee, S.Y.; Liu, Y. DNA Barcoding for the Identification and Authentication of Medicinal (Cervus sp.) Products in China. PLoS ONE 2024, 19, e0297164.

4. Ortega, A.C.; Dwinnell, S.P.; Lasharr, T.N.; Jakopak, R.P.; Denryter, K.; Huggler, K.S.; Hayes, M.M.; Aikens, E.O.; Verzuh, T.L.; May, A.B.; et al. Effectiveness of Partial Sedation to Reduce Stress in Captured Mule Deer. J. Wildl. Manag. 2022, 84, 1445–1456.

5. Zhang, Z.; He, Z.; Cao, G.; Cao, W. Animal Detection From Highly Cluttered Natural Scenes Using Spatiotemporal Object Region Proposals and Patch Verification. IEEE Trans. Multimed. 2016, 18, 2079–2092.

6. Zhang, X.; Xuan, C.; Xue, J.; Chen, B.; Ma, Y. LSR-YOLO: A High-Precision, Lightweight Model for Sheep Face Recognition on the Mobile End. Animals 2023, 13, 1824.

7. Li, R.; Dai, B.; Hu, Y.; Dai, X.; Fang, J.; Yin, Y.; Liu, H.; Shen, W. Multi-Behavior Detection of Group-Housed Pigs Based on YOLOX and SCTS-SlowFast. Comput. Electron. Agric. 2024, 225, 109286.

8. Mu, Y.; Hu, J.; Wang, H.; Li, S.; Zhu, H.; Luo, L.; Wei, J.; Ni, L.; Chao, H.; Hu, T.; et al. Research on the Behavior Recognition of Beef Cattle Based on the Improved Lightweight CBR-YOLO Model Based on YOLOv8 in Multi-Scene Weather. Animals 2024, 14, 2800.

9. Huang, X.; Huang, F.; Hu, J.; Zheng, H.; Liu, M.; Dou, Z.; Jiang, Q. Automatic Face Detection of Farm Images Based on an Enhanced Lightweight Learning Model. Int. J. Pattern Recognit. Artif. Intell. 2024, 38, 2456009.

10. Xiong, H.; Xiao, Y.; Zhao, H.; Xuan, K.; Zhao, Y.; Li, J. AD-YOLOv5: An Object Detection Approach for Key Parts of Sika Deer Based on Deep Learning. Comput. Electron. Agric. 2024, 217, 108610.

11. Gong, H.; Liu, J.; Li, Z.; Zhu, H.; Luo, L.; Li, H.; Hu, T.; Guo, Y.; Mu, Y. GFI-YOLOv8: Sika Deer Posture Recognition Target Detection Method Based on YOLOv8. Animals 2024, 14, 2640.

12. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

13. Liu, S.; Zhao, C.; Zhang, H.; Li, Q.; Li, S.; Chen, Y.; Gao, R.; Wang, R.; Li, X. ICNet: A Dual-Branch Instance Segmentation Network for High-Precision Pig Counting. Agriculture 2024, 14, 141.

14. Zhao, H.; Mao, R.; Li, M.; Li, B.; Wang, M. SheepInst: A High-Performance Instance Segmentation of Sheep Images Based on Deep Learning. Animals 2023, 13, 1338.

15. Qiao, Y.; Truman, M.; Sukkarieh, S. Cattle Segmentation and Contour Extraction Based on Mask R-CNN for Precision Livestock Farming. Comput. Electron. Agric. 2019, 165, 104958.

16. Kumar, T.; Brennan, R.; Mileo, A.; Bendechache, M. Image Data Augmentation Approaches: A Comprehensive Survey and Future Directions. IEEE Access 2024, 12, 187536–187571.

17. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO 2023. Available online: https://www.ultralytics.com/events/yolovision/2023 (accessed on 1 June 2025).

18. Ma, S.; Xu, Y. MPDIoU: A Loss for Efficient and Accurate Bounding Box Regression. arXiv 2023, arXiv:2307.07662.

19. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

20. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

21. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

22. Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B. MobileNetV4 - Universal Models for the Mobile Ecosystem; Springer: Berlin/Heidelberg, Germany, 2024.

23. Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

24. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

25. Li, C.; Zhang, B.; Li, L.; Li, L.; Geng, Y.; Cheng, M.; Xiaoming, X.; Chu, X.; Wei, X. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2024, arXiv:2209.02976.

26. Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information; Springer: Berlin/Heidelberg, Germany, 2024.

27. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

28. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. arXiv 2019, arXiv:1911.08287.

29. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

30. Lin, T.-Y.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).