Abstract

As software systems grow larger and their logic becomes more complex, a large number of bug reports are submitted to bug repositories daily. Due to tight schedules and limited human resources, developers may not have enough time to inspect all the bugs, so they often concentrate on the bugs that have large impacts. However, two main challenges limit automated techniques that would help developers become aware of high-impact bug reports early: low data quality and imbalanced class distribution. To address these two challenges, we propose an approach to identify high-impact bug reports that combines data reduction with imbalanced learning strategies. In the data reduction phase, we combine feature selection with instance selection to build a small-scale, high-quality set of bug reports by removing bug reports and words that are redundant or noninformative; in the imbalanced learning phase, we handle the imbalanced distribution of bug reports with four imbalanced learning strategies. Our experiments show that combining data reduction with imbalanced learning strategies can effectively identify high-impact bug reports.

1. Introduction

Bug tracking systems, such as Bugzilla [1] and JIRA [2], help developers manage the bug reports collected from various sources, including development teams, testing teams, and end users [3]. Due to the increasing scale and complexity of software projects, bug tracking systems receive a large number of bug reports daily. For example, the Mozilla bug repository receives an average of 135 new bug reports each day [4]. With so many bugs submitted every day, manual bug classification is time-consuming and inaccurate, so it is becoming increasingly important to identify high-impact bug reports automatically and to verify their severity correctly. However, we face two challenges: low-quality data and imbalanced data distributions. Since bug reports are submitted by people all over the world, and each person describes bugs in their own natural language and understands them differently, the data contain excessive noise [5]. Noisy data may mislead data analysis techniques, and large-scale data may increase the cost of data processing [6]. Low-quality bug reports accumulate in bug repositories and grow in scale. Furthermore, most bug reports are not high impact; in other words, the training set often has an imbalanced distribution. This is a disadvantage for most existing classification approaches, which were largely developed under the assumption that the training set is evenly distributed. These two challenges, low data quality and class imbalance, degrade the performance of bug classification. To resolve problems in software projects quickly, developers often need to prioritize and concentrate on high-impact bug reports. Therefore, an automated technique that tells developers whether a bug report is high impact would help improve productivity.

Moreover, only a small percentage of the bug reports submitted daily are high-impact bug reports, and both the noise in their descriptions [5] and the scale of the data [6,7,8] hinder analysis. Manual bug classification is also time-consuming and inaccurate: in manual bug classification in Eclipse, 44% of the bugs were misallocated, and the time between opening a bug and its first classification averaged 19.3 days [9]. To avoid the expensive cost of manual bug classification, Anvik et al. [10] proposed an automatic bug classification method that uses text classification to predict the developer responsible for a bug report. In this method, a bug report is mapped to a document, and the relevant developer is mapped to the label of the document. A text classifier, such as Naive Bayes [11], is then used to assign the bug reports automatically, and human triagers assign new bugs based on the classification results and their own expertise. However, the low quality and imbalanced class distribution of bug reports in bug repositories adversely affect automatic bug classification techniques. Since bug reports are free-form text written by developers, the bug datasets must be processed into high-quality data before such techniques can be applied [6]. Xuan et al. proposed a method that combines feature selection with instance selection to reduce bug datasets and obtain high-quality bug data, and they examined how the order of reduction affects the performance of bug classification.
Due to the huge size and noise of bug repositories, it remains difficult to reduce a bug dataset to a completely high-quality one. Yang [12] investigated four widely used imbalanced learning strategies (i.e., random under-sampling (RUS), random over-sampling (ROS), the synthetic minority over-sampling technique (SMOTE), and the cost-matrix adjuster (CMA)) to address the imbalanced class distribution of bug reports from four open source projects. However, bug reports are written in natural language and contain much noise, so internal methods such as CMA are unsuitable for balancing the distribution of bug reports [13]. In addition, RUS can cause under-fitting, whereas ROS can cause over-fitting [14,15,16,17]. SMOTE is based on low-dimensional random sampling and has poor generalization ability [18,19]. Furthermore, random sampling (RUS, ROS, and SMOTE) introduces a degree of uncertainty, and some sampling results may not match the real distribution of the dataset.

In this paper, we propose an approach for identifying high-impact bug reports that combines data reduction, named Data Reduction based on Genetic algorithm (DRG), with imbalanced learning strategies. In the data reduction phase, to address the low quality of bug reports, we reduce both the bug report dimension and the word dimension. For feature reduction (GA(FS)), we use four feature selection (FS) algorithms (i.e., one rule (OneR), information gain (IG), chi-square (CHI), and filtered selection (Relief); described in Appendix A.2) to select the top 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90% of the attributes, yielding 36 feature selection schemes. For instance reduction (GA(IS)), we randomly generate 36 instance selection schemes and compare the results with the instance selection (IS) algorithms condensed nearest neighbor (CNN), edited nearest neighbor (ENN), minimal consistent set (MCS), and iterative case filter (ICF). Simultaneous feature and instance reduction (GA(FS+IS)) combines the 36 feature selection strings with the 36 instance selection strings into 36 joint extraction schemes. The imbalanced learning strategies are then applied to the reduced dataset. In the imbalanced learning strategies phase, we investigate four widely used imbalanced learning strategies (RUS, ROS, SMOTE, and CMA) and four popular classification algorithms (naive Bayes (NB), naive Bayes multinomial (NBM), support vector machine (SVM), and K-nearest neighbors (KNN); described in Appendix A.1), and combine them into 16 different variants to identify high-impact bug reports from the reduced datasets. Comprehensive experiments have been conducted on public datasets obtained from real-world bug repositories (Mozilla [20], GCC [21], and Eclipse [22]). In addition, we verify the performance of our method under different combinations of FS and IS and of data reduction and imbalanced learning strategies.

The main contributions of this paper are as follows:

- We present a data reduction method based on the genetic algorithm to reduce the data used for identifying high-impact bug reports. The method has two aims: (a) to simultaneously reduce the scale of the bug report dimension and the word dimension and (b) to improve the ability to identify high-impact bug reports.

- We verify the ability of our method to identify the high-impact bug reports in the case of different combinations of FS and IS, data reduction and imbalanced learning strategies.

- Five evaluation criteria are used in the experiments to evaluate the proposed method. The results on three public bug repository datasets (Mozilla, GCC, and Eclipse) show that our method can effectively identify high-impact bug reports.

The remainder of this paper is organized as follows: the related studies and the motivation of our approach are discussed in Section 2, the design of our approach is discussed in Section 3, the experimental design and results are presented in Section 4 and Section 5, and the conclusions are discussed in Section 6.

2. Background Knowledge and Motivation

In our work, we propose a high-impact bug report identification approach that combines data reduction, i.e., Data Reduction based on the Genetic algorithm (DRG), and imbalanced learning strategies. Thus, in this section, we introduce some background knowledge concerning bug report management. Moreover, we present the motivation of our study.

Bug reports are valuable to software maintenance activities [23,24,25,26]. Automatic support for bug report classification can facilitate understanding, resource allocation, and planning.

Antoniol et al. applied text mining techniques to the descriptions of bug reports to determine whether a bug report is a real bug or a feature request [27]. They used techniques such as decision trees, logistic regression, and the Naive Bayes classifier for this purpose. Menzies et al. used rule learning techniques to predict the severity of bug reports [28]; their approach was applied to five projects supplied by NASA’s Independent Verification and Validation Facility. Tian et al. [29] used a machine learning framework to predict bug report priority by considering factors such as time, text, author, related reports, severity, and product. Hooimeijer et al. [30] built a predictive model to identify high-quality bug reports; it distinguishes high-quality from low-quality bug reports based on descriptive information extracted about the bug reports, products, operating systems, and bug report submitters. Runeson et al. [31] proposed a method for detecting duplicate bug reports based on information retrieval. This method treats each bug report as a document and finds bug reports similar to the current one by computing the similarity between the current bug report and the existing bug reports. Sun et al. [32] proposed a feature-based partitioning model that identifies similarities between bug reports. Subsequently, Sun et al. [33] proposed a retrieval model based on multiple features, which matches the most similar features across bug reports.

Xia et al. [34] found that for approximately 80% of bug reports, the values of one or more fields (including the severity and fixer fields) are reassigned. They also showed that bug reports whose field values are reassigned consume more time and cost than bug reports without field reassignment. To address this problem, automatic approaches to severity prediction and semiautomatic fixer recommendation are needed. Zhang [35] proposed a new approach for severity prediction and semiautomatic fixer recommendation to replace manual work by developers. In this approach, the top k nearest neighbors of a new bug report are extracted by computing a similarity measure called REP topic, an enhanced version of REP. Then, severity prediction and semiautomatic fixer recommendation are performed based on the characteristics of these k neighbors, such as the participating developers and their textual similarity to the given bug report.

Feng et al. [36] applied test report prioritization to crowdsourced testing. They designed dynamic strategies to select the riskiest and most diverse test reports for inspection in each iteration. In subsequent work, Feng et al. [37] proposed a new technique to prioritize test reports for inspection by software developers, which combines image-understanding techniques with traditional text-based techniques, especially for crowdsourced testing of mobile applications. They proposed prioritization approaches based on text descriptions, screenshot images, and a combination of both. Assuming that rich training data are available, Wang et al. [38] proposed a cluster-based classification approach for crowdsourced test report classification. However, sufficient training data are often unavailable. Subsequently, Wang et al. [39] proposed local-based active classification (LOAF) to address the local bias problem and the lack of labeled historical data in automated crowdsourced test report classification.

3. Methodology

In this section, we describe in detail our method for identifying high-impact bug reports under an imbalanced class distribution.

3.1. Overview

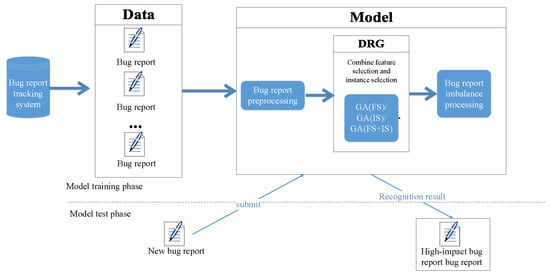

In this section, we propose a high-impact bug report identification approach that combines data reduction, i.e., Data Reduction based on Genetic algorithm (DRG), with imbalanced learning strategies. The approach consists of three parts: (1) Data preprocessing: we use a text categorization technique to convert each bug report into a word vector based on the vector space model [11,40]. (2) Data reduction: low-quality bug reports may cause the classifier to assign bug reports to the wrong category [41,42,43,44]. To obtain high-quality bug reports, we use the proposed DRG approach to produce a reduced dataset with one of three reduction methods: feature reduction (GA(FS)), instance reduction (GA(IS)), or simultaneous reduction of features and instances (GA(FS+IS)). (3) Imbalanced learning strategies: to address the class imbalance of the datasets, we use four imbalanced learning strategies, i.e., RUS, ROS, SMOTE, and CMA, to balance the datasets [19,45,46,47]. Figure 1 shows the overall framework of our proposed method.

Figure 1.

The framework of our model.
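To make the three resampling strategies in part (3) concrete, here is a minimal sketch of textbook RUS, ROS, and SMOTE on plain Python lists. It is not the authors' implementation (the paper does not say which toolkit was used), the function names are hypothetical, and CMA is not sketched because it reweights misclassification costs inside the classifier rather than resampling the data.

```python
import random


def _group_by_class(X, y):
    """Group feature rows by class label, preserving input order."""
    groups = {}
    for row, label in zip(X, y):
        groups.setdefault(label, []).append(row)
    return groups


def random_under_sample(X, y, seed=0):
    """RUS: randomly keep only as many rows of each class as the smallest class has."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_min = min(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        for row in rng.sample(rows, n_min):
            X_out.append(row)
            y_out.append(label)
    return X_out, y_out


def random_over_sample(X, y, seed=0):
    """ROS: randomly duplicate rows of smaller classes up to the size of the largest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_max = max(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        extra = [rng.choice(rows) for _ in range(n_max - len(rows))]
        for row in rows + extra:
            X_out.append(row)
            y_out.append(label)
    return X_out, y_out


def smote(minority, n_new, k=5, seed=0):
    """SMOTE: interpolate between a minority sample and one of its k nearest
    minority neighbours. Requires at least two minority samples."""
    rng = random.Random(seed)

    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [m for j, m in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda m: sq_dist(base, m))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic
```

Because every synthetic SMOTE point lies on a segment between two real minority samples, it stays inside the region they span; RUS and ROS only discard or repeat existing rows, which is the source of the under-fitting and over-fitting risks noted in the Introduction.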

3.2. DRG Algorithm

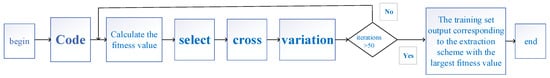

The DRG algorithm mainly includes the following six steps: gene coding, population initialization, selection, crossover, mutation and termination criteria judgment. Its execution process is shown in Figure 2:

Figure 2.

DRG algorithm execution process.

Gene coding: The feature sequence in the dataset is represented as a vector of size N, where N is the total number of features. The population size, i.e., the number of extraction schemes in a population, differs among GA(FS), GA(IS), and GA(FS+IS). A combination of selected features, i.e., a feature selection scheme, is represented as a binary string of length N in which each gene corresponds to one feature and takes one of two values: 0 means that the corresponding feature is not selected, and 1 means that it is selected. Similarly, the instance sequence in the dataset is represented as a vector of size M, where M is the total number of instances (that is, the number of bug reports). Each combination of selected instances is an instance selection scheme, represented as a binary string of length M: a gene value of 0 means that the corresponding instance is not selected, and 1 means that it is selected.
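The following minimal sketch shows how a pair of binary strings could be decoded into a reduced dataset, assuming the bug reports are held as an M x N list of word-count rows. The function name `decode` is hypothetical; for GA(FS) the instance string would be all ones, and for GA(IS) the feature string would be all ones.

```python
def decode(X, y, feature_genes, instance_genes):
    """Apply a feature scheme (length N) and an instance scheme (length M).

    X is an M x N list of word-count rows and y holds the M labels. A gene
    value of 1 keeps the corresponding column (word) or row (bug report);
    a gene value of 0 drops it.
    """
    kept_cols = [j for j, g in enumerate(feature_genes) if g == 1]
    X_red, y_red = [], []
    for row, label, keep in zip(X, y, instance_genes):
        if keep == 1:
            X_red.append([row[j] for j in kept_cols])
            y_red.append(label)
    return X_red, y_red
```

For example, decoding the feature string `[1, 0, 1]` and the instance string `[1, 0, 1]` keeps the first and last words of the first and last bug reports.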

Population initialization. In GA(FS), population initialization generates the initial feature selection schemes. Each of the four basic feature selection algorithms (IG, CHI, OneR, and Relief) ranks the features by importance from high to low, and the top 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90% of the ranked features are taken, yielding 4 × 9 = 36 feature selection schemes. In GA(IS), population initialization generates the initial instance selection schemes: for each gene of each scheme, a random number in [0, 1] is generated; if it is greater than or equal to 0.5, the gene is set to 1, otherwise it is set to 0. This yields 36 instance selection schemes. The initial population of GA(FS+IS) is generated in two steps: first, 36 feature selection schemes and 36 instance selection schemes are generated with the GA(FS) and GA(IS) methods; then, the feature selection schemes and instance selection schemes are combined pairwise to obtain the 36 extraction schemes of the initial population.
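A minimal sketch of the three initialization procedures follows. It assumes each feature selection algorithm has already produced a ranking of feature indices (most important first); the dictionary layout, the function names, and the rounding rule (floor, but at least one feature) are assumptions, since the paper does not specify them.

```python
import random

PERCENTAGES = [10, 20, 30, 40, 50, 60, 70, 80, 90]


def init_fs_population(rankings, n_features):
    """rankings: {algorithm name: feature indices sorted from most to least important}.

    With four rankers (IG, CHI, OneR, Relief) this yields 4 x 9 = 36 schemes."""
    population = []
    for order in rankings.values():
        for pct in PERCENTAGES:
            k = max(1, n_features * pct // 100)  # assumed rounding rule
            chosen = set(order[:k])
            population.append([1 if j in chosen else 0 for j in range(n_features)])
    return population


def init_is_population(n_instances, size=36, seed=0):
    """Each gene is 1 if a uniform random number is >= 0.5, otherwise 0."""
    rng = random.Random(seed)
    return [[1 if rng.random() >= 0.5 else 0 for _ in range(n_instances)]
            for _ in range(size)]


def init_fs_is_population(fs_population, is_population):
    """Pair the i-th feature scheme with the i-th instance scheme."""
    return list(zip(fs_population, is_population))
```

Pairing by position is one reading of "combined"; any one-to-one pairing of the two lists would also produce 36 joint schemes.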

Selection: The fitness value of each extraction scheme is calculated with the fitness function. The extraction scheme with the largest fitness value is copied a preset number of times, and these copies are placed in the next-generation population, which guarantees that the extraction scheme with the highest fitness is retained. The remaining extraction schemes of the next-generation population are then generated by roulette wheel selection.
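The selection step can be sketched as elitism followed by roulette wheel selection. This is an illustrative version under two assumptions: fitness values are non-negative, and `n_elite` (a hypothetical name) stands for the preset number of copies of the best scheme.

```python
import random


def select(population, fitnesses, n_elite, seed=0):
    """Copy the best scheme n_elite times, then fill the rest by roulette wheel."""
    rng = random.Random(seed)
    best = max(range(len(population)), key=lambda i: fitnesses[i])
    next_gen = [list(population[best]) for _ in range(n_elite)]
    total = sum(fitnesses)
    while len(next_gen) < len(population):
        r = rng.uniform(0, total)  # spin the wheel
        acc = 0.0
        for scheme, fit in zip(population, fitnesses):
            acc += fit  # each scheme owns a slice proportional to its fitness
            if acc >= r:
                next_gen.append(list(scheme))
                break
    return next_gen
```

A scheme with zero fitness owns an empty slice of the wheel, so it can only survive through the elitist copies if it happens to be the best.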

Crossover: All extraction schemes in the population are divided into two groups of equal size. The extraction schemes of the two groups are paired in order, and single-point crossover is applied to each pair. For each pair, a crossover probability is generated at random; if it is greater than or equal to the crossover probability lower bound and less than or equal to the crossover probability upper bound, the two extraction schemes are crossed over; otherwise, they are left unchanged. If crossover is performed, a crossover point is randomly generated, and all gene positions after this point are exchanged between the two extraction schemes.
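A minimal sketch of this crossover rule, assuming the i-th scheme of the first half is paired with the i-th scheme of the second half and that each scheme has at least two genes; the parameter names `p_low` and `p_high` are hypothetical stand-ins for the crossover probability bounds.

```python
import random


def crossover(population, p_low, p_high, seed=0):
    """Single-point crossover between the two halves of the population."""
    rng = random.Random(seed)
    offspring = [list(s) for s in population]  # work on copies
    half = len(offspring) // 2
    for i in range(half):
        a, b = offspring[i], offspring[half + i]  # i-th scheme of each group
        pc = rng.random()  # randomly generated crossover probability
        if p_low <= pc <= p_high:
            point = rng.randint(1, len(a) - 1)
            # exchange every gene after the crossover point
            a[point:], b[point:] = b[point:], a[point:]
    return offspring
```

Note that, as described, crossover happens when the random draw falls inside the window between the two bounds, so the effective crossover rate is the width of that window rather than a single threshold.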

Mutation. We define a mutation rate. For each extraction scheme in the population, a mutation probability is randomly generated; if it is less than the mutation rate, the mutation operation is performed: a preset number of gene positions (the number of mutated genes) are randomly selected in the extraction scheme, and each selected gene is flipped, that is, 0 is changed to 1 and 1 is changed to 0.
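The mutation step can be sketched as follows, assuming the selected gene positions are distinct; `n_genes` is a hypothetical name for the number of mutated genes.

```python
import random


def mutate(population, mutation_rate, n_genes, seed=0):
    """Flip n_genes distinct genes in each scheme whose random draw is below the rate."""
    rng = random.Random(seed)
    mutated = []
    for scheme in population:
        scheme = list(scheme)
        if rng.random() < mutation_rate:
            for pos in rng.sample(range(len(scheme)), n_genes):
                scheme[pos] = 1 - scheme[pos]  # 0 -> 1, 1 -> 0
        mutated.append(scheme)
    return mutated
```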

Termination criteria judgment. The iteration is terminated when the defined number of iterations T is reached.

3.3. Reduction Algorithm (GA)

In Sections 3.3 and 3.4, the input parameters of the algorithms are as follows:

- the training set;
- the population size;
- T: the number of iterations;
- N: the number of features;
- M: the number of bug reports;
- the lower bound of the crossover probability;
- the upper bound of the crossover probability;
- the mutation rate;
- the number of mutated genes.

Algorithm 1 performs only feature selection (GA(FS)) or only instance selection (GA(IS)) on the dataset with the genetic algorithm. In line 1, both the population and the best extraction scheme are initialized to null. In lines 2–5, Algorithm 2 is called to initialize the population if the algorithm performs feature selection, and Algorithm 3 is called if it performs instance selection. In lines 7–8, the fitness value of each extraction scheme in the population is calculated with the fitness function defined in Section 3.5, and the extraction scheme with the largest fitness value is recorded. Lines 9–11 perform the selection operation: the extraction scheme with the largest fitness value is copied and passed to the next generation, where the copies make up a preset fraction of the population, and the remaining extraction schemes are generated by roulette wheel selection. Lines 12–23 describe the crossover operation and lines 24–30 the mutation operation; both are detailed in Section 3.2. The mutation loci give the number of mutated genes. In lines 30–31, the population is updated with all extraction schemes after the genetic operations. In line 32, after T iterations, the optimal extraction scheme is decoded into the corresponding reduced dataset, which is returned in line 33.
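For orientation, the overall loop of Algorithm 1 can be sketched as below. This is a compact, self-contained illustration, not the authors' code: `fitness` is any function that scores a 0/1 list with a non-negative number, the default operator settings are placeholders rather than the values in Table 3 (only T = 50 comes from the experiments), and the best scheme is tracked across all generations.

```python
import random


def ga_reduce(init_population, fitness, T=50, n_elite=1, p_low=0.6, p_high=0.9,
              mutation_rate=0.1, n_genes=1, seed=0):
    """Evolve binary extraction schemes; return the best scheme and its fitness."""
    rng = random.Random(seed)
    population = [list(s) for s in init_population]
    best, best_fit = None, float("-inf")

    def record(pop, fits):
        nonlocal best, best_fit
        i = max(range(len(pop)), key=fits.__getitem__)
        if fits[i] > best_fit:
            best, best_fit = list(pop[i]), fits[i]
        return i

    for _ in range(T):
        fits = [fitness(s) for s in population]
        i_best = record(population, fits)
        # Selection: elitist copies of the best scheme, then roulette wheel.
        nxt = [list(population[i_best]) for _ in range(n_elite)]
        total = sum(fits)
        while len(nxt) < len(population):
            r, acc = rng.uniform(0, total), 0.0
            for s, f in zip(population, fits):
                acc += f
                if acc >= r:
                    nxt.append(list(s))
                    break
        # Crossover: pair the i-th scheme of each half, single-point exchange.
        half = len(nxt) // 2
        for i in range(half):
            a, b = nxt[i], nxt[half + i]
            if p_low <= rng.random() <= p_high:
                pt = rng.randint(1, len(a) - 1)
                a[pt:], b[pt:] = b[pt:], a[pt:]
        # Mutation: flip n_genes distinct positions with probability mutation_rate.
        for s in nxt:
            if rng.random() < mutation_rate:
                for pos in rng.sample(range(len(s)), n_genes):
                    s[pos] = 1 - s[pos]
        population = nxt

    record(population, [fitness(s) for s in population])  # score the last generation
    return best, best_fit
```

In the paper's setting, `fitness` would decode a scheme into a reduced dataset and score it with the Section 3.5 fitness function; a toy fitness such as `sum` is enough to exercise the loop.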

Algorithm 2 initializes the population for feature selection with the genetic algorithm. In line 1, the population is initialized to null. In line 2, the four feature selection methods (IG, CHI, OneR, and Relief) are defined. In lines 3–9, each of the four feature selection algorithms ranks all features of the training set by importance from high to low, and the first 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90% of the ranked features are added, respectively, to a feature selection scheme. In line 10, the genes corresponding to the selected features are set to 1 and the others to 0, and the population is returned.

Algorithm 3 initializes the population for instance selection with the genetic algorithm. In line 1, the initial population is set to null. In lines 2–13, each instance selection scheme is organized as a binary string of size M. Then, a number between 0 and 1 is randomly generated for each gene position in each extraction scheme; if this number is greater than or equal to 0.5, the corresponding gene position is set to 1; otherwise, it is set to 0. Each generated instance selection scheme is added to the population. In line 14, all generated extraction schemes are returned.

3.4. GA(FS+IS) Algorithm

GA(FS+IS) performs feature selection and instance selection on the dataset simultaneously with the genetic algorithm; Algorithm 4 details the method. In line 1, the population and the best extraction scheme are initialized to null. In lines 2–5, the initialized feature selection schemes and instance selection schemes are combined. In lines 7–8, in each iteration, the fitness value of each extraction scheme in the population is calculated with the fitness function described in Section 3.5, and the extraction scheme with the largest fitness value is recorded as the best extraction scheme. Lines 9–11 perform the selection operation: the extraction scheme with the largest fitness value is copied to the next generation, where the copies make up a preset fraction of the population, and the rest of the population is generated by roulette wheel selection. Lines 12–22 perform the crossover operation on both the feature selection scheme and the instance selection scheme. In lines 23–35, mutation is performed separately on the feature selection scheme and the instance selection scheme. Line 46 indicates that the population is updated after each iteration. Line 38 indicates that, after T iterations, the best extraction scheme is retained and decoded into the corresponding reduced dataset, which the algorithm finally returns.
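A joint GA(FS+IS) scheme can be represented as a pair (feature genes, instance genes). The sketch below applies single-point crossover and bit-flip mutation separately to each part, as the text describes. Using an independent crossover point for each part is our assumption, the function names are hypothetical, and whether crossover or mutation happens at all would be decided as in the single-string case.

```python
import random


def crossover_combined(parent_a, parent_b, rng):
    """Single-point crossover applied separately to the feature part and the instance part.

    Each parent is a (feature_genes, instance_genes) pair of 0/1 lists.
    """
    child_a, child_b = [], []
    for part_a, part_b in zip(parent_a, parent_b):
        a, b = list(part_a), list(part_b)
        pt = rng.randint(1, len(a) - 1)  # independent crossover point per part
        a[pt:], b[pt:] = b[pt:], a[pt:]
        child_a.append(a)
        child_b.append(b)
    return tuple(child_a), tuple(child_b)


def mutate_combined(scheme, n_feature_genes, n_instance_genes, rng):
    """Flip a fixed number of distinct genes in each part of a combined scheme."""
    mutated = []
    for part, n_flip in zip(scheme, (n_feature_genes, n_instance_genes)):
        part = list(part)
        for pos in rng.sample(range(len(part)), n_flip):
            part[pos] = 1 - part[pos]
        mutated.append(part)
    return tuple(mutated)
```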

Algorithm 1. Reduction algorithm (GA).

3.5. Fitness Function

Individuals are selected according to their fitness values when the dataset is reduced by the genetic algorithm. The fitness function measures the classification ability of an individual: the higher the fitness value, the better the individual. The fitness function is defined as follows [48]:

In this definition, one term represents the fuzzy distance between different categories, and the other represents the fuzzy distance within the same category. Samples can be separated because they are located in different regions of the feature space; the larger the between-category distance and the smaller the within-category distance, the better the classification effect. The specific calculation method is described as follows:

Algorithm 2. Initialize_FS.

Algorithm 3.

We adopt the following Euclidean distance [24]:

When calculating the distance between different categories, the two vectors in the Euclidean distance are the mean vectors of the two categories. The mean vector of a category is obtained by the following formula:

where i ranges over the two categories, the mean vector of category i is its category center feature vector, and the average is taken over all bug reports in category i.
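The original formulas did not survive extraction. Under a plain (non-fuzzy) reading of the description above, the Euclidean distance, the category mean vector, and the between-category distance take the standard forms below; the notation ($\mathbf{x}$, $\mathbf{m}_i$, $C_i$, $n_i$, $D_b$) is ours and may differ from the authors' symbols, while N is the number of features.

$$
d(\mathbf{x}, \mathbf{y}) = \sqrt{\sum_{k=1}^{N} (x_k - y_k)^2}
$$

$$
\mathbf{m}_i = \frac{1}{n_i} \sum_{\mathbf{x} \in C_i} \mathbf{x}, \qquad i \in \{1, 2\}
$$

$$
D_b = d(\mathbf{m}_1, \mathbf{m}_2)
$$

Here $C_i$ is the set of bug reports in category $i$ and $n_i = |C_i|$.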

Algorithm 4. GA(FS+IS).

When calculating the distance within the same category, the two vectors in the Euclidean distance are two different bug reports A and B of that category. The intra-class distance is calculated over the pairs of different bug reports A and B within each category, and the intra-class distances of the two categories are added to obtain the within-category distance. The definition is as follows:

where the two sums run over the bug reports of the first and the second category, respectively.
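The sketch below computes a fitness value for a reduced two-class dataset. Because the fitness formula from [48] was lost in extraction, it makes two assumptions that are not from the paper: the fitness is the ratio of the between-category distance to the within-category distance (larger is better, consistent with the text), and plain Euclidean distances are used instead of fuzzy distances. Each unordered pair of reports is counted once.

```python
import math
from itertools import combinations


def euclidean(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def mean_vector(rows):
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def fitness(X, y, eps=1e-12):
    """Assumed fitness: between-category distance / within-category distance.

    Expects exactly two categories in y, each with at least one row."""
    classes = sorted(set(y))
    groups = [[x for x, label in zip(X, y) if label == c] for c in classes]
    between = euclidean(mean_vector(groups[0]), mean_vector(groups[1]))
    within = sum(euclidean(a, b) for g in groups for a, b in combinations(g, 2))
    return between / (within + eps)  # eps avoids division by zero
```

For two tight, well-separated clusters, for example reports at (0, 0), (0, 1) in one category and (10, 0), (10, 1) in the other, the class means are 10 apart and the within-category distance is 2, giving a fitness of about 5; mixing the categories drives the between-category distance, and hence the fitness, toward zero.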

3.6. Feature Selection Approach

Since many feature selection algorithms have been investigated for text categorization, we select four typical algorithms (OneR, IG, CHI, Relief) in our work [49].
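As an illustration of how one of these algorithms produces the feature ranking used to build the initial feature selection schemes, here is a minimal information gain (IG) ranker. It treats each word as a binary present/absent attribute, which is an assumption about how the word-count matrix is discretized; OneR, CHI, and Relief would produce their rankings differently and are not shown.

```python
import math


def entropy(labels):
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def information_gain(column, labels):
    """IG of a word column, splitting reports by whether the word occurs."""
    present = [l for v, l in zip(column, labels) if v > 0]
    absent = [l for v, l in zip(column, labels) if v <= 0]
    n = len(labels)
    conditional = (len(present) / n) * entropy(present) + (len(absent) / n) * entropy(absent)
    return entropy(labels) - conditional


def rank_features_by_ig(X, y):
    """Return feature indices sorted from highest to lowest information gain."""
    n_features = len(X[0])
    scores = [information_gain([row[j] for row in X], y) for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: scores[j], reverse=True)
```

A word that occurs in exactly the severe reports has an IG of 1 bit on a balanced two-class sample, while a word spread evenly across both classes has an IG of 0.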

4. Experimental Design

The experimental design used to validate the performance of our approach is described in this section.

4.1. Experimental Datasets

To demonstrate the effectiveness of the proposed approach, we carry out a series of experiments on the bug repositories of three large open source projects, namely, Mozilla, GCC, and Eclipse. We select the bug reports whose resolution status is fixed as the experimental data. The severity field of these datasets takes seven values: normal, enhancement, major, critical, blocker, trivial, and minor. Bug reports labeled major, critical, or blocker are treated as severe, and those labeled trivial or minor are treated as nonsevere. Table 1 summarizes the three datasets: the total number of bug reports, the number of severe bug reports, the number of nonsevere bug reports, and the imbalance ratio. All the datasets are imbalanced: the imbalance ratio is 1.3015 in Mozilla, 2.311197 in Eclipse, and 3.6735 in GCC. In Table 1, the second column gives the size of each dataset; the third and fourth columns list the severity labels counted as severe (major, critical, and blocker) and as nonsevere (trivial and minor), respectively; the fifth and sixth columns give the numbers of severe and nonsevere bug reports, respectively; and the last column gives the imbalance ratio.

Table 1.

The original datasets for Mozilla, GCC, and Eclipse.

We preprocess the Mozilla, GCC, and Eclipse datasets by removing the bug reports labeled normal and enhancement, keeping only those labeled major, critical, blocker, trivial, and minor, and then applying text preprocessing. The description of each bug report is tokenized, stop words are removed, and the remaining words are stemmed and converted into a text matrix in which each row represents a bug report and each column represents a word. Words whose frequency is less than 5 are deleted. The resulting datasets are divided into parts in chronological order; the earlier parts are used as training sets, and the last parts are used as test sets. Table 2 shows the final experimental datasets: the second column gives the size of each dataset after removing words with frequency less than 5, the third column gives the proportion of deleted bug reports, and the last two columns give the numbers of bug reports with severe and nonsevere labels in the training and test sets, respectively.
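The preprocessing pipeline can be sketched as follows. The stop-word list, the `train_fraction` parameter, and the function names are hypothetical, word frequency is assumed to mean total occurrences across the corpus, and stemming is omitted for brevity (a stemmer such as Porter's would be applied to each token after stop-word removal).

```python
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "is", "in", "on", "when", "to", "and", "of", "it"}  # hypothetical


def tokenize(text):
    """Lowercase, split on non-alphanumerics, and drop stop words (no stemming here)."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS]


def build_matrix(reports, min_freq=5):
    """reports: (timestamp, description, label) tuples.

    Returns the vocabulary, word-count rows, and labels, ordered by time."""
    reports = sorted(reports, key=lambda r: r[0])
    docs = [tokenize(desc) for _, desc, _ in reports]
    freq = Counter(tok for doc in docs for tok in doc)
    vocab = sorted(w for w, c in freq.items() if c >= min_freq)  # drop words seen < 5 times
    index = {w: j for j, w in enumerate(vocab)}
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for tok in doc:
            if tok in index:
                row[index[tok]] += 1
        rows.append(row)
    labels = [label for _, _, label in reports]
    return vocab, rows, labels


def chronological_split(rows, labels, train_fraction):
    """Earlier reports form the training set, later reports the test set."""
    cut = int(len(rows) * train_fraction)
    return (rows[:cut], labels[:cut]), (rows[cut:], labels[cut:])
```

Sorting by timestamp before splitting keeps the evaluation realistic: the classifier is never trained on reports filed after the ones it is tested on.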

Table 2.

The preprocessed datasets for Mozilla, GCC, and Eclipse.

4.2. Experimental Parameter Setting

The genetic algorithm is used to reduce the attributes and instances. The specific parameters of GA(FS), GA(IS) and GA(FS+IS) are shown in Table 3. The parameters in Table 3 are the parameter settings in the DRG algorithm.

Table 3.

The parameters of the GA(FS), GA(IS), GA(FS+IS).

4.3. Evaluation Metrics

We use accuracy, precision, recall, the F-measure, and the Area Under the Curve (AUC) as our evaluation metrics. These metrics are commonly used to evaluate classification performance [24,50]. They can be derived from the confusion matrix, which captures all four possible classification results, as presented in Table 4. The number of true positives (TP) is the number of low-impact bug reports that are correctly classified as low impact. The number of false positives (FP) is the number of high-impact bug reports that are incorrectly classified as low impact. The number of false negatives (FN) is the number of low-impact bug reports that are incorrectly classified as high impact. The number of true negatives (TN) is the number of high-impact bug reports that are correctly classified as high impact. Thus, TP + TN is the number of correctly classified bug reports, and FP + FN is the number of incorrectly classified bug reports. Based on the values of TP, FP, FN, and TN, the metrics are calculated as follows.

Table 4.

Confusion matrix, which can be used to calculate many evaluation metrics.

Accuracy: The accuracy of the model is the number of correct classifications divided by the total number of classifications. The accuracy is defined as follows:

Precision: The percentage of bug reports predicted as a given severity class (non-high-impact or high-impact) that are correctly predicted. We therefore compute a separate precision for each severity class, defined formally as follows:

Recall: The percentage of bug reports that actually belong to a given class (non-high-impact or high-impact) and are correctly predicted as that class. As with precision, we compute a separate recall for each severity class, defined formally as follows:
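The accuracy, precision, and recall formulas were lost in extraction. Given the confusion-matrix definitions above (low-impact, i.e., non-high-impact, reports are the positive class), they take the standard forms below; the subscript notation is ours.

$$
\text{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN}
$$

$$
P_{\text{nonhigh}} = \frac{TP}{TP + FP}, \qquad P_{\text{high}} = \frac{TN}{TN + FN}
$$

$$
R_{\text{nonhigh}} = \frac{TP}{TP + FN}, \qquad R_{\text{high}} = \frac{TN}{TN + FP}
$$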

F-measure: Precision and recall are usually not discussed in isolation. Instead, either the value of one measure is compared at a fixed value of the other, or both are combined into a single measure, such as the F-measure, which is the weighted harmonic mean of recall and precision. If either recall or precision is low, the F-measure is also low; therefore, the F-measure is an effective evaluation criterion for classification on imbalanced datasets. The F-measure includes a weighting parameter: if it is greater than 1, precision is more important than recall, whereas if it is less than 1, recall is more important. In this case study, we set the weighting parameter to 1 so that recall and precision are weighted equally.

To obtain an overall F-measure for a bug repository dataset, we combine the F-measures of the non-high-impact and high-impact classes into a single F-measure using two weighting parameters, defined as follows:
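The sketch below computes the per-class precision, recall, and F1 (weighting parameter equal to 1) from the confusion matrix, then combines the two F1 values into one score. Because the combination formula and the constraints on its weights were lost, the sketch assumes a weighted sum whose two weights add up to 1, and by default it uses the share of actual non-high-impact reports as the non-high-impact weight; both choices are assumptions, not the paper's definition.

```python
def per_class_metrics(tp, fp, fn, tn):
    """Precision, recall, and F1 for each class; low impact is the positive class."""
    def safe(num, den):
        return num / den if den else 0.0

    def f1(p, r):
        return safe(2 * p * r, p + r)

    p_non, r_non = safe(tp, tp + fp), safe(tp, tp + fn)
    p_high, r_high = safe(tn, tn + fn), safe(tn, tn + fp)
    return {"nonhigh": (p_non, r_non, f1(p_non, r_non)),
            "high": (p_high, r_high, f1(p_high, r_high))}


def weighted_f_measure(tp, fp, fn, tn, w_nonhigh=None):
    """Assumed combination: w * F_nonhigh + (1 - w) * F_high."""
    m = per_class_metrics(tp, fp, fn, tn)
    if w_nonhigh is None:
        w_nonhigh = (tp + fn) / (tp + fp + fn + tn)  # share of actual non-high-impact reports
    return w_nonhigh * m["nonhigh"][2] + (1 - w_nonhigh) * m["high"][2]
```

With class-proportion weights, a classifier that labels every report as low impact still earns credit from the majority class, which is why the per-class values are worth reporting alongside the combined one.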

AUC (Area Under the Curve): The ROC curve is commonly used to evaluate classifiers. However, comparing curves visually can be cumbersome, especially when the curves are close together. Therefore, the AUC is calculated as a single number that summarizes the curve. The AUC is the area under a two-dimensional curve in which the true positive rate of the corresponding class (non-high-impact or high-impact) is plotted on the Y axis and the false positive rate on the X axis as the threshold T on the predicted probabilities varies. An AUC close to 0.5 indicates that the classifier is practically random, whereas a value close to 1.0 indicates practically perfect predictions. This value enables more rational comparisons of the accuracies of different classifiers [51]:
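The area under the ROC curve equals the probability that a randomly chosen positive report receives a higher score than a randomly chosen negative one (the Mann–Whitney interpretation). The minimal sketch below uses that equivalence instead of integrating the curve; it takes scores for one class and 0/1 labels with that class as 1, and counts ties as one half. The quadratic pairwise loop is for clarity, not efficiency.

```python
def auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative), ties = 1/2."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

A perfect ranking gives 1.0, constant scores give 0.5 (the "practically random" case in the text), and a fully inverted ranking gives 0.0. Computing it once with the non-high-impact class as 1 and once with the high-impact class as 1 gives the two per-class values that are then combined.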

where
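Integrating the ROC curve gives the same value as the probability that a randomly chosen positive report receives a higher score than a randomly chosen negative one, with ties counted as one half. This is the Mann–Whitney interpretation of AUC. The sketch below uses that pairwise form. It is quadratic in the number of reports and intended only as an illustration. The function `auc` and the toy scores are hypothetical, and label 1 stands for the class being evaluated (e.g. highimpact).

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# One positive (0.4) is outranked by one negative (0.8): 5 of 6 pairs are correct.
print(auc([0.9, 0.8, 0.4, 0.3, 0.2], [1, 0, 1, 0, 0]))
```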

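To obtain an overall AUC for a bug repository dataset, we combine the per-class values AUC(nonhighimpact) and AUC(highimpact) into a single AUC, defined as follows, where $w_1$ and $w_2$ are the weighting parameters of the two classes:

$$\mathrm{AUC} = w_1 \cdot \mathrm{AUC}(\text{nonhighimpact}) + w_2 \cdot \mathrm{AUC}(\text{highimpact})$$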

5. Experimental Results

In this section, the experimental results are discussed in relation to the specific research questions.

5.1. RQ1: Do the Reduced or the Unreduced Datasets Yield Better Classification Results?

In the first research question, we investigate whether the reduced or the unreduced datasets yield better classification performance for the Mozilla, GCC and Eclipse projects.

To answer this question, we first use NB, NBM, KNN, and SVM to classify the original datasets and record the results. Then, we apply the genetic algorithm for feature selection on the original datasets, running it for 50 iterations. After finding the optimal individual, GA(FS), we use the basic filter feature selection methods, i.e., IG, CHI, OneR, and Relief, to reduce the datasets to the same size as the genetic algorithm result and record the results. Finally, we apply the genetic algorithm for instance selection on the original datasets, again running it for 50 iterations. After finding the optimal individual, GA(IS), we use the basic instance selection methods, i.e., CNN, ENN, MCS, and ICF, to reduce the datasets to the same size as the genetic algorithm result and record the results. We use the five evaluation metrics mentioned above (accuracy, precision, recall, F-measure and AUC) to analyze the experimental results. Table 5, Table 6 and Table 7 present the performance of the feature selection and instance selection approaches with the four classifiers on Mozilla, GCC, and Eclipse, respectively.
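This section specifies the genetic algorithm only by its 50 iterations. The sketch below shows GA-based feature selection under several assumptions: a bitmask chromosome (1 = keep the word), binary tournament selection, one-point crossover, bit-flip mutation and elitism. In the paper's setting the fitness would presumably be a classifier's performance on the reduced data. Here a toy fitness stands in for it, and all names, rates and the population size are hypothetical. Using the same routine with one bit per bug report instead of one bit per word would sketch GA(IS).

```python
import random
from typing import Callable, List, Tuple

Mask = List[int]  # one bit per feature: 1 = keep the word, 0 = drop it


def tournament(pop: List[Mask], fitness: Callable[[Mask], float],
               rng: random.Random) -> Mask:
    """Binary tournament: the fitter of two random individuals wins."""
    a, b = rng.sample(pop, 2)
    return a if fitness(a) >= fitness(b) else b


def ga_select(n_features: int, fitness: Callable[[Mask], float],
              pop_size: int = 20, generations: int = 50,
              crossover_rate: float = 0.8, mutation_rate: float = 0.02,
              seed: int = 0) -> Tuple[Mask, List[float]]:
    """Return the best mask found and the best fitness after each generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        children = [best[:]]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, fitness, rng), tournament(pop, fitness, rng)
            if rng.random() < crossover_rate:
                cut = rng.randrange(1, n_features)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
        best = max(pop, key=fitness)
        history.append(fitness(best))
    return best, history


# Toy fitness (hypothetical): words 0, 3 and 5 are informative; each kept word costs 0.1.
USEFUL = {0, 3, 5}


def toy_fitness(mask: Mask) -> float:
    return sum(mask[i] for i in USEFUL) - 0.1 * sum(mask)


best_mask, history = ga_select(10, toy_fitness)
print(best_mask, history[-1])
```

Elitism guarantees that the best fitness never decreases from one generation to the next. This property matters when the GA run is cut off at a fixed number of iterations.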

Table 5.

The results of feature selection and instance selection in the Mozilla dataset compared to the results of the raw Mozilla data.

Table 6.

The results of feature selection and instance selection in the GCC dataset compared to the results of the raw GCC data.

Table 7.

The results of feature selection and instance selection in the Eclipse dataset compared to the results of the raw Eclipse data.

Table 5, Table 6 and Table 7 show that classifiers trained on the datasets reduced by feature selection or instance selection identify high-impact bug reports better than classifiers trained on the original datasets. For example, Table 5 shows that for the Mozilla dataset, the GA(IS) instance selection method with the NBM classifier works best for identifying high-impact bug reports. It achieves the largest AUC value, 0.8545, and its accuracy, F-measure, precision, and recall values are 0.8026, 0.803, 0.8238, and 0.8026, respectively. Compared with classification on the original data without reduction, the F-measure shows the greatest improvement. For the Mozilla dataset after feature reduction with CHI, the SVM classifier has the best performance for identifying high-impact bug reports. Its accuracy, F-measure, precision, recall, and AUC values are 0.8219, 0.8227, 0.8326, 0.8219, and 0.8288, respectively, and these are the maximum accuracy, F-measure, precision and recall values for this dataset.

Table 6 shows that for the GCC dataset, the GA(FS) method with the NBM classifier yields the greatest improvement in accuracy, recall and AUC. Its accuracy for identifying high-impact bug reports is 0.7814, its recall is 0.7814, and its AUC is 0.8. Table 7 shows that for the Eclipse dataset, the OneR reduction method with the NBR classifier yields the greatest improvement in identifying high-impact bug reports, especially in accuracy, F-measure, recall and AUC, with values of 0.6399, 0.6467, 0.6399, and 0.7707, respectively.

Therefore, the experimental results show that the reduced datasets yield better classification performance than the unreduced datasets.

5.2. RQ2: How Does the Order of the Feature Selection and Instance Selection on the Datasets Impact the Experimental Performance?

In this research question, we want to investigate whether the order of the feature selection and instance selection on the Mozilla, GCC and Eclipse datasets has an impact on the experimental results. We consider four feature selection methods, i.e., IG, CHI, OneR, and Relief, and four instance selection methods, i.e., CNN, ENN, MCS, and ICF. We use the five evaluation metrics mentioned above (accuracy, precision, recall, F-measure and AUC) to analyze the experimental results.

To answer this question, we first use the genetic algorithm to perform approximate reduction of features and instances simultaneously on the original datasets, i.e., GA(FS+IS), and record the results. Second, we use the genetic algorithm to perform feature selection on the datasets. Starting from the obtained optimal individual, we then use the genetic algorithm to perform instance selection, i.e., GA(FS_IS), and record the results. Third, we use the genetic algorithm to perform instance selection first. Starting from the obtained optimal individual, we then use the genetic algorithm to perform feature selection, i.e., GA(IS_FS), and record the results. Finally, NBM achieved the best classification performance among the NB, NBM, KNN, and SVM classifiers in the first experiment, so Table 8 reports only the results obtained with NBM.
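This section does not state how GA(FS+IS) encodes its individuals. The hypothetical sketch below assumes one natural encoding: a single chromosome that concatenates one bit per word with one bit per bug report, so that both reductions are searched jointly. GA(FS_IS) and GA(IS_FS) would instead fix one mask first and then search the other on the already reduced data. Their search spaces therefore differ, and so can their results. All names and the data are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # rows = bug reports, columns = words


def decode_joint(chromosome: List[int], n_features: int) -> Tuple[List[int], List[int]]:
    """Split a joint chromosome into its feature bits and its instance bits."""
    return chromosome[:n_features], chromosome[n_features:]


def apply_masks(X: Matrix, y: List[str], feature_mask: List[int],
                instance_mask: List[int]) -> Tuple[Matrix, List[str]]:
    """Keep only the selected columns (words) and rows (bug reports)."""
    cols = [j for j, keep in enumerate(feature_mask) if keep]
    rows = [i for i, keep in enumerate(instance_mask) if keep]
    return [[X[i][j] for j in cols] for i in rows], [y[i] for i in rows]


# Hypothetical data: 3 bug reports x 4 words; 7 bits = 4 feature bits + 3 instance bits.
X = [[1.0, 0.0, 2.0, 0.0],
     [0.0, 3.0, 0.0, 1.0],
     [4.0, 0.0, 0.0, 5.0]]
y = ["highimpact", "nonhighimpact", "highimpact"]
feature_mask, instance_mask = decode_joint([1, 0, 1, 0, 1, 0, 1], n_features=4)
X_red, y_red = apply_masks(X, y, feature_mask, instance_mask)
print(X_red, y_red)  # [[1.0, 2.0], [4.0, 0.0]] ['highimpact', 'highimpact']
```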

Table 8.

The effect of the feature selection and instance selection order on the experimental results for the Mozilla and GCC datasets when using NBM as a classifier.

Table 8 shows that for the Mozilla dataset, NBM achieves its best performance in identifying high-impact bug reports after instance reduction. Accuracy, F-measure, and recall show the most obvious improvements, with values of 0.8026, 0.803, and 0.8026, respectively. For the GCC dataset, the GA(FS_IS) method (that is, feature selection is performed first and instance selection second) achieves the best performance in identifying high-impact bug reports. The most obvious improvements are in accuracy and recall, which are both 0.796. For the Eclipse dataset, the GA(FS+IS) method (that is, feature selection and instance selection are performed simultaneously) performs best. The most obvious improvements are in accuracy, F-measure and recall, which are 0.6907, 0.6983 and 0.6907, respectively.

Therefore, the experiments on the Mozilla, GCC and Eclipse datasets show that the order of feature selection and instance selection affects how well high-impact bug reports are identified.

5.3. RQ3: What Is the Effect of the Balance Processing and Reduction Denoising Order on the Experimental Results?

In this research question, we want to investigate the effect of the balance processing and reduction denoising order on the experimental results for the Mozilla, GCC and Eclipse datasets.

To answer this question, we compare two orders for each reduction scheme. In the first order, the data are reduced first and then balanced with each of the imbalanced processing strategies RUS, ROS, SMOTE and CMA; these results are recorded as GA(·)_imbalance. In the second order, the original data are balanced first with the same strategies and then reduced; these results are recorded as imbalance_GA(·). The five reduction schemes are as follows:

- Simultaneous feature and instance selection: balancing the preprocessed datasets after GA(FS+IS) gives GA(FS+IS)_imbalance, and performing feature and instance selection simultaneously on the balanced original datasets gives imbalance_GA(FS+IS).

- Feature selection only: balancing after feature selection gives GA(FS)_imbalance, and performing feature selection on the balanced original datasets gives imbalance_GA(FS).

- Instance selection only: balancing after instance selection gives GA(IS)_imbalance, and performing instance selection on the balanced original datasets gives imbalance_GA(IS).

- Feature selection followed by instance selection: balancing after both gives GA(FS_IS)_imbalance, and balancing first and then performing feature selection followed by instance selection gives imbalance_GA(FS_IS).

- Instance selection followed by feature selection: balancing after both gives GA(IS_FS)_imbalance, and balancing first and then performing instance selection followed by feature selection gives imbalance_GA(IS_FS).

We use the five evaluation metrics mentioned above (accuracy, precision, recall, F-measure and AUC) for the comparisons and report only the results with NBM as the classifier. Table 9, Table 10, Table 11, Table 12 and Table 13 show how the order of balance processing and reduction denoising affects the identification of high-impact bug reports for Mozilla, GCC and Eclipse.
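For readers unfamiliar with the resampling strategies, the following minimal sketch implements RUS, ROS and SMOTE on dense feature vectors. It assumes a two-class problem in which the minority class is the smaller one and has at least two examples. The function names and toy data are hypothetical. CMA is not shown because it adjusts misclassification costs rather than resampling the data. As in the original SMOTE formulation by Chawla et al., each synthetic example lies on the segment between a minority example and one of its k nearest minority neighbours.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def _split(y: Sequence[str], minority: str) -> Tuple[List[int], List[int]]:
    minority_idx = [i for i, c in enumerate(y) if c == minority]
    majority_idx = [i for i, c in enumerate(y) if c != minority]
    return minority_idx, majority_idx


def random_under_sample(X: List[Vector], y: List[str], minority: str,
                        rng: random.Random) -> Tuple[List[Vector], List[str]]:
    """RUS: keep all minority examples and an equally sized random majority subset."""
    mino, majo = _split(y, minority)
    keep = mino + rng.sample(majo, len(mino))
    return [X[i] for i in keep], [y[i] for i in keep]


def random_over_sample(X: List[Vector], y: List[str], minority: str,
                       rng: random.Random) -> Tuple[List[Vector], List[str]]:
    """ROS: duplicate random minority examples until both classes are equally sized."""
    mino, majo = _split(y, minority)
    extra = [rng.choice(mino) for _ in range(len(majo) - len(mino))]
    keep = list(range(len(y))) + extra
    return [X[i] for i in keep], [y[i] for i in keep]


def smote(X_min: List[Vector], n_new: int, k: int, rng: random.Random) -> List[Vector]:
    """SMOTE: interpolate between a minority example and one of its k nearest minority neighbours."""
    def dist2(a: Vector, b: Vector) -> float:
        return sum((p - q) ** 2 for p, q in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(X_min))
        others = sorted((j for j in range(len(X_min)) if j != i),
                        key=lambda j: dist2(X_min[i], X_min[j]))
        nn = X_min[rng.choice(others[:k])]
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(X_min[i], nn)])
    return synthetic


rng = random.Random(0)
X = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [6.0, 6.0], [7.0, 7.0], [8.0, 8.0], [9.0, 9.0]]
y = ["highimpact"] * 2 + ["nonhighimpact"] * 5
Xr, yr = random_under_sample(X, y, "highimpact", rng)  # 2 + 2 reports
Xo, yo = random_over_sample(X, y, "highimpact", rng)   # 5 + 5 reports
new = smote(X[:2], n_new=3, k=1, rng=rng)               # points between (0, 0) and (1, 1)
print(len(yr), len(yo), new)
```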

Table 9.

The results of GA(FS+IS)_imbalance and imbalance_GA(FS+IS) on the Mozilla, GCC and Eclipse datasets.

Table 10.

The results of GA(FS)_imbalance and imbalance_GA(FS) on the Mozilla, GCC and Eclipse datasets.

Table 11.

The results of GA(IS)_imbalance and imbalance_GA(IS) on the Mozilla, GCC and Eclipse datasets.

Table 12.

The results of GA(FS_IS)_imbalance and imbalance_GA(FS_IS) on the Mozilla, GCC and Eclipse datasets.

Table 13.

The results of GA(IS_FS)_imbalance and imbalance_GA(IS_FS) on the Mozilla, GCC and Eclipse datasets.

Table 9 shows that the imbalance_GA(FS+IS) method (that is, first balancing the dataset with SMOTE and then applying feature selection and instance selection simultaneously) achieves the largest performance improvement for identifying high-impact bug reports in the Mozilla dataset. Its accuracy, F-measure, precision, recall and AUC values are 0.7435, 0.7444, 0.7615, 0.7435, and 0.8322, respectively. For the GCC and Eclipse datasets, the GA(FS+IS)_imbalance method (that is, first applying feature selection and instance selection to the dataset and then balancing it with ROS) performs better in identifying high-impact bug reports. For the GCC dataset, the accuracy, F-measure, precision, recall, and AUC values are 0.7562, 0.7725, 0.805, 0.7562, and 0.7853, respectively. For the Eclipse dataset, the accuracy, F-measure, precision, recall, and AUC values are 0.6251, 0.6303, 0.7207, 0.6251, and 0.7761, respectively.

Table 10 shows that the imbalance_GA(FS) method (that is, first balancing the dataset with SMOTE and then applying feature selection only) yields the greatest improvement for identifying high-impact bug reports in the Mozilla dataset. Its accuracy, F-measure, precision, recall and AUC values are 0.762, 0.7616, 0.7926, 0.762, and 0.846, respectively. For the GCC dataset, the GA(FS)_imbalance method (that is, the dataset is first processed by feature selection and then balanced with CMA) performs better in identifying high-impact bug reports. Its accuracy, F-measure, precision, recall and AUC values are 0.7778, 0.7878, 0.8054, 0.7778, and 0.799, respectively. For the Eclipse dataset, the GA(FS)_imbalance method (that is, feature selection is applied first and the dataset is then balanced with ROS) performs better in identifying high-impact bug reports. Its accuracy, F-measure, precision, recall and AUC values are 0.5697, 0.5686, 0.7319, 0.5697, and 0.734, respectively.

Table 11 shows that for the Mozilla and GCC datasets, the GA(IS)_imbalance method (that is, the dataset is first processed by instance selection and then balanced with CMA) performs better in identifying high-impact bug reports. For the Mozilla dataset, the accuracy, F-measure, precision, recall, and AUC values are 0.8044, 0.8048, 0.8251, 0.8044, and 0.8547, respectively. For the GCC dataset, the accuracy, F-measure, precision, recall and AUC values are 0.7855, 0.7981, 0.827, 0.7855, and 0.8052, respectively. For the Eclipse dataset, the GA(IS)_imbalance method (that is, instance selection is performed first and the dataset is then balanced with SMOTE) performs better for identifying high-impact bug reports. Its accuracy, F-measure, precision, recall and AUC values are 0.6061, 0.6092, 0.7465, 0.6061 and 0.7475, respectively.

Table 12 shows that the imbalance_GA(FS_IS) method achieves the largest performance improvement for identifying high-impact bug reports on all three datasets, with a different balancing strategy in each case. For the Mozilla dataset, the dataset is first balanced with SMOTE, then reduced by feature selection, and then reduced by instance selection. The accuracy, F-measure, precision, recall and AUC values are 0.7952, 0.796, 0.8078, 0.7952, and 0.8633, respectively. For the GCC dataset, the dataset is first balanced with CMA, then reduced by feature selection, and then reduced by instance selection. The accuracy, F-measure, precision, recall, and AUC values are 0.7887, 0.797, 0.8109, 0.7887, and 0.8108, respectively. For the Eclipse dataset, the dataset is first balanced with ROS, then reduced by feature selection, and then reduced by instance selection. The accuracy, F-measure, precision, recall, and AUC values are 0.5779, 0.5779, 0.7368, 0.5779, and 0.7417, respectively.

Table 13 shows that the GA(IS_FS)_imbalance method (that is, instance selection first, then feature selection, and finally an imbalanced learning strategy to balance the dataset) achieves the best classification performance. For the Mozilla and Eclipse datasets, with ROS as the balancing method, the accuracy, F-measure, precision, recall, and AUC values are 0.7724, 0.7734, 0.7889, 0.7724, and 0.8303 for Mozilla and 0.5928, 0.5961, 0.7307, 0.5928, and 0.7342 for Eclipse. For the GCC dataset, with SMOTE as the balancing method, the accuracy, F-measure, precision, recall and AUC values are 0.7978, 0.8048, 0.8158, 0.7978, and 0.7973, respectively.

Therefore, for the Mozilla dataset, the imbalance_GA(FS_IS) method (that is, first balancing the dataset with SMOTE, then reducing it with feature selection, and then with instance selection) achieved the highest AUC value, 0.8633, for identifying high-impact bug reports. For the GCC dataset, balancing with CMA after instance reduction achieved the highest precision value, 0.827. For the Eclipse dataset, balancing with ROS after simultaneous FS and IS reduction achieved the highest AUC value, 0.7761.

6. Conclusions

In this paper, we propose an approach for identifying high-impact bug reports that combines data reduction, i.e., Data Reduction based on a Genetic algorithm (DRG), with imbalanced learning strategies. We use four feature selection algorithms (i.e., One Rule (OneR), information gain (IG), chi-squared (CHI), and filtered selection (Relief)) to extract the important attributes, reducing the original bug report data by removing noisy or noninformative words. Then, we use imbalanced processing techniques (i.e., random under-sampling (RUS), random over-sampling (ROS), the synthetic minority over-sampling technique (SMOTE), and the cost-matrix adjuster (CMA)) to reduce the imbalance of the small-scale, high-quality training sets obtained after feature selection and instance selection. Our approach reduces the word dimension of the original training set, which improves its quality, and it also improves the ability to identify high-impact bug reports under an imbalanced distribution. Comprehensive experiments on public datasets obtained from real-world bug repositories indicate that our approach can effectively improve the identification of high-impact bug reports.

Author Contributions

Data curation, M.W., S.W. and T.L.; Formal analysis, H.L.; Methodology, S.G. and C.G.; Writing—review and editing, R.C.

Funding

This research was supported by the National Natural Science Foundation of China (grant numbers 61902050, 61672122, 61602077, 61771087, 51879027, 51579024, and 71831002), the Program for Innovative Research Team in University of Ministry of Education of China (No. IRT 17R13), the Fundamental Research Funds for the Central Universities (Nos. 3132019501 and 3132019502, JLU), and the CERNET Innovation Project (Nos. NGII20181203 and NGII20181205).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Classifiers

Many classifiers are used in text categorization. In this section, we introduce the four classifiers used in this paper: naive Bayes (NB), naive Bayes multinomial (NBM), support vector machine (SVM) and K-nearest neighbors (KNN).

Appendix A.1.1. Naive Bayes

The naive Bayes classifier works as follows: for a given unclassified item, it computes the probability of each category conditioned on the item and assigns the item to the category with the largest probability.

Suppose $x = \{a_1, a_2, \ldots, a_m\}$ is an unclassified item, where each $a$ is a feature attribute of $x$. The category set is $C = \{y_1, y_2, \ldots, y_n\}$, and the probabilities that $x$ belongs to each category are $P(y_1 \mid x), P(y_2 \mid x), \ldots, P(y_n \mid x)$.

If $P(y_k \mid x) = \max\{P(y_1 \mid x), P(y_2 \mid x), \ldots, P(y_n \mid x)\}$, then $x \in y_k$.

Appendix A.1.2. Naive Bayes Multinomial

Multinomial naive Bayes implements the naive Bayes algorithm for discrete features that follow a multinomial distribution. For each class $y$, the distribution is parameterized by a vector $\theta_y = (\theta_{y1}, \ldots, \theta_{yn})$. Here $n$ is the number of features, that is, the size of the word vector, and $\theta_{yi} = P(x_i \mid y)$ is the probability that feature $i$ appears in a sample of class $y$. The parameter $\theta_y$ is estimated by smoothed maximum likelihood: $\hat{\theta}_{yi} = \frac{N_{yi} + \alpha}{N_y + \alpha n}$. In this estimate, $N_{yi}$ is the number of occurrences of feature $i$ in the samples of class $y$ in the training set $T$, and $N_y = \sum_{i} N_{yi}$ is the total count of all features in class $y$. The smoothing coefficient $\alpha > 0$ accounts for features that do not appear in the training set and prevents zero probabilities. Setting $\alpha = 1$ is called Laplace smoothing.
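The compact sketch below implements multinomial naive Bayes with the smoothed estimate above. It works in log space to avoid numerical underflow. It also applies the naive Bayes decision rule by returning the class with the largest posterior score. The tokenized toy reports, the function names, and the choice to skip words outside the training vocabulary are assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Model = Tuple[Dict[str, float], Dict[str, Dict[str, float]]]


def train_nbm(docs: List[List[str]], labels: List[str], alpha: float = 1.0) -> Model:
    """Estimate log P(y) and log theta_yi = log((N_yi + alpha) / (N_y + alpha * n))."""
    vocab = sorted({w for d in docs for w in d})
    n = len(vocab)  # size of the word vector
    class_counts = Counter(labels)
    word_counts: Dict[str, Counter] = defaultdict(Counter)
    for doc, y in zip(docs, labels):
        word_counts[y].update(doc)  # N_yi: occurrences of word i in class y
    log_prior = {y: math.log(c / len(labels)) for y, c in class_counts.items()}
    log_theta = {}
    for y in class_counts:
        n_y = sum(word_counts[y].values())  # N_y: all word occurrences in class y
        log_theta[y] = {w: math.log((word_counts[y][w] + alpha) / (n_y + alpha * n))
                        for w in vocab}
    return log_prior, log_theta


def predict_nbm(model: Model, doc: List[str]) -> str:
    """Return the class with the largest log posterior; unseen words are skipped."""
    log_prior, log_theta = model

    def score(y: str) -> float:
        return log_prior[y] + sum(log_theta[y][w] for w in doc if w in log_theta[y])

    return max(log_prior, key=score)


docs = [["crash", "freeze", "crash"], ["crash", "data", "loss"],
        ["typo", "label"], ["typo", "tooltip", "color"]]
labels = ["highimpact", "highimpact", "nonhighimpact", "nonhighimpact"]
model = train_nbm(docs, labels)
print(predict_nbm(model, ["crash", "loss"]))   # highimpact
print(predict_nbm(model, ["typo", "color"]))   # nonhighimpact
```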

Appendix A.1.3. K-Nearest Neighbors

The K-nearest neighbor algorithm works on a training set whose data and labels are known. It compares the features of a test sample with those of the training samples and finds the K training samples most similar to it. The test sample is then assigned the category that occurs most often among those K samples. The algorithm is as follows:

- Calculate the distance between the test sample and each training sample;

- Sort the training samples in increasing order of distance;

- Select the K samples with the smallest distances;

- Count how often each category occurs among these K samples;

- Return the most frequent category among the K samples as the predicted category of the test sample.
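The five steps above translate almost line by line into the following sketch. Euclidean distance, K = 3 and the toy two-dimensional points are assumptions. For bug reports the vectors would be word features, and this section does not state the distance measure used in the experiments. The function name is hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def knn_predict(train_X: List[Sequence[float]], train_y: List[str],
                x: Sequence[float], k: int = 3) -> str:
    # Step 1: distance from x to every training sample (Euclidean, assumed).
    dists = [(math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y)]
    # Steps 2-3: sort by increasing distance and keep the k closest samples.
    nearest = sorted(dists, key=lambda pair: pair[0])[:k]
    # Steps 4-5: count the categories among them and return the most frequent.
    return Counter(label for _, label in nearest).most_common(1)[0][0]


train_X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]]
train_y = ["nonhighimpact"] * 3 + ["highimpact"] * 3
print(knn_predict(train_X, train_y, [0.2, 0.3]))  # nonhighimpact
print(knn_predict(train_X, train_y, [5.5, 5.5]))  # highimpact
```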

Appendix A.1.4. Support Vector Machine

SVM is a supervised learning method. It searches a high-dimensional space for a hyperplane that separates the sample points of different categories while maximizing the margin between them. The classifier corresponding to this maximum-margin hyperplane is called the maximum-margin classifier; it minimizes the empirical error while maximizing the geometric margin.
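This section does not name the solver behind the SVM results. As one illustrative way to find a maximum-margin linear separator, the sketch below uses the Pegasos stochastic sub-gradient method. Pegasos minimizes the hinge loss plus an L2 penalty, and the regularization constant `lam` trades margin width against training errors. The sketch omits the bias term, so it assumes data separable through the origin or a constant feature appended to each vector. The names, parameters and toy data are hypothetical.

```python
import random
from typing import List


def pegasos(X: List[List[float]], y: List[int], lam: float = 0.01,
            iters: int = 1000, seed: int = 0) -> List[float]:
    """Train a bias-free linear SVM with Pegasos; labels must be -1 or +1."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    for t in range(1, iters + 1):
        i = rng.randrange(len(X))
        eta = 1.0 / (lam * t)  # decreasing step size
        margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
        w = [(1.0 - eta * lam) * wj for wj in w]  # shrink: sub-gradient of the L2 term
        if margin < 1.0:  # hinge loss active: move w towards y_i * x_i
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def svm_predict(w: List[float], x: List[float]) -> int:
    """Sign of the decision function w . x."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


X = [[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]]
y = [1, 1, -1, -1]
w = pegasos(X, y)
print([svm_predict(w, x) for x in X])  # [1, 1, -1, -1]
```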

Appendix A.2. Feature Selection Algorithm

In this section, we introduce the four feature selection algorithms used in this paper: One Rule (OneR), Information Gain (IG), Chi-square (CHI) and Filtered Selection (Relief).

Appendix A.2.1. OneR

The basic idea of the OneR algorithm is to build, for each attribute, a one-level rule that predicts the class from that attribute alone, and to rate the attribute by the accuracy of its rule. First, each value of each attribute is assigned to the class in which that value occurs most often. Then, the instances correctly classified by these assignments are counted over all values of the attribute, which gives the total accuracy of that attribute. The OneR algorithm selects the attributes with the highest accuracy, as shown in Algorithm A1. C represents the categories of the original training set, A represents all the attributes of the original training set (a is one of the attributes of A), and s represents the feature extraction range.
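The following minimal sketch ranks attributes with OneR, scoring each attribute by the training accuracy of its one-attribute rule. It assumes nominal (already discretized) attribute values; Holte's original OneR additionally discretizes numeric attributes, which is omitted here. The function names and toy data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def oner_accuracy(column: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Accuracy of the rule 'predict the majority class for each attribute value'."""
    by_value: Dict[Hashable, Counter] = defaultdict(Counter)
    for v, c in zip(column, y):
        by_value[v][c] += 1
    # For each value, the majority class is right for exactly its count of instances.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return correct / len(y)


def oner_select(X: List[List[Hashable]], y: Sequence[Hashable], s: int) -> List[int]:
    """Rank attributes by OneR accuracy and return the indices of the top s."""
    n_attrs = len(X[0])
    scores = [oner_accuracy([row[a] for row in X], y) for a in range(n_attrs)]
    return sorted(range(n_attrs), key=lambda a: scores[a], reverse=True)[:s]


# Attribute 0 separates the classes perfectly; attribute 1 is uninformative.
X = [[1, 0], [1, 1], [0, 0], [0, 1]]
y = ["highimpact", "highimpact", "nonhighimpact", "nonhighimpact"]
print(oner_select(X, y, 1))  # [0]
```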

Appendix A.2.2. IG

The IG algorithm is often used to assess the quality of features in machine learning. It measures how much knowing whether an attribute (word) is present reduces the uncertainty, or entropy, of the class. The attribute's weight is the difference between the class entropy without the attribute and the class entropy given the attribute. In other words, IG measures the importance of a word by how much information it provides to the classification algorithm: the more information a word provides, the more important it is, as shown in Algorithm A2.

Algorithm A1. OneR

Algorithm A2. IG
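Information gain is usually defined through entropy: $IG(C; a) = H(C) - \sum_{v} P(a = v)\, H(C \mid a = v)$. For a binary word-presence attribute, it can be sketched as follows, with entropies measured in bits. The names and toy data are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy of the class distribution, in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(word_present: Sequence[bool], y: Sequence[Hashable]) -> float:
    """IG = H(C) - sum over v of P(word = v) * H(C | word = v)."""
    n = len(y)
    conditional = 0.0
    for v in (True, False):
        subset = [c for p, c in zip(word_present, y) if p == v]
        if subset:
            conditional += len(subset) / n * entropy(subset)
    return entropy(y) - conditional


y = ["highimpact", "highimpact", "nonhighimpact", "nonhighimpact"]
print(information_gain([True, True, False, False], y))  # 1.0: the word determines the class
print(information_gain([True, False, True, False], y))  # 0.0: the word says nothing about it
```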

Appendix A.2.3. CHI

The CHI algorithm assesses the degree of dependence between words and categories. It is a common statistical test of the independence of two variables. It measures the deviation between the observed counts and the counts expected if the two were independent. The larger the CHI value, the more relevant the attribute is to the category, as shown in Algorithm A3. M represents the number of times word a and category c occur together; N represents the number of times a appears without c; J represents the number of times c appears without a; and Q represents the number of times neither a nor c is present.

Algorithm A3. CHI
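With the counts M, N, J and Q defined above, the usual 2×2 form of the statistic is $\chi^2(a, c) = \frac{T\,(MQ - NJ)^2}{(M+J)(N+Q)(M+N)(J+Q)}$ with $T = M + N + J + Q$. The sketch below assumes this standard form, and the function name is hypothetical. When the word and the category are independent, MQ equals NJ and the statistic is 0.

```python
def chi_square(M: int, N: int, J: int, Q: int) -> float:
    """Chi-square statistic for word a and category c from the 2x2 contingency counts.

    M: a and c together; N: a without c; J: c without a; Q: neither.
    """
    T = M + N + J + Q
    denom = (M + J) * (N + Q) * (M + N) * (J + Q)
    return T * (M * Q - N * J) ** 2 / denom if denom else 0.0


print(chi_square(10, 5, 2, 20))  # about 13.49: word and category are strongly associated
print(chi_square(2, 2, 2, 2))    # 0.0: no association
```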

Appendix A.2.4. Relief

The Relief algorithm is a feature weighting algorithm that assigns each feature a weight according to its relevance to the category. Features whose weights fall below a certain threshold are removed. Relief judges the relevance of a feature by its ability to distinguish between nearby samples. The algorithm randomly selects a sample R from the training set D. It then finds the nearest neighbor H among the samples of the same class as R, called the near hit, and the nearest neighbor M among the samples of a different class, called the near miss. The weight of each feature is then updated as follows. If, on a given feature, R is closer to its near hit than to its near miss, the feature helps distinguish neighbors of the same class from neighbors of different classes, and its weight is increased. Conversely, if R is farther from its near hit than from its near miss on that feature, the feature hinders this distinction, and its weight is decreased. This process is repeated m times, and the average weight of each feature is obtained. The greater the weight of a feature, the stronger its classification ability. The running time of Relief increases linearly with the number of sampling iterations m and the number of original features N, so the algorithm is highly efficient. Algorithm A4 gives the specific steps.

Algorithm A4. Relief
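The following sketch implements the two-class Relief procedure described above under several assumptions: numeric features scaled to [0, 1], Manhattan distance for the neighbour search, per-feature differences |R_f − X_f|, and at least two samples in each class. The names, the seed and the toy data are hypothetical.

```python
import random
from typing import List


def relief(X: List[List[float]], y: List[int], m: int, seed: int = 0) -> List[float]:
    """Two-class Relief: return one weight per feature (features scaled to [0, 1])."""
    rng = random.Random(seed)
    n_feat = len(X[0])
    w = [0.0] * n_feat

    def dist(a: List[float], b: List[float]) -> float:
        return sum(abs(p - q) for p, q in zip(a, b))  # Manhattan distance

    for _ in range(m):
        i = rng.randrange(len(X))
        r = X[i]
        hits = [X[j] for j in range(len(X)) if j != i and y[j] == y[i]]
        misses = [X[j] for j in range(len(X)) if y[j] != y[i]]
        near_hit = min(hits, key=lambda v: dist(r, v))
        near_miss = min(misses, key=lambda v: dist(r, v))
        for f in range(n_feat):
            # Reward features that differ on the near miss but agree on the near hit.
            w[f] += (abs(r[f] - near_miss[f]) - abs(r[f] - near_hit[f])) / m
    return w


# Feature 0 separates the classes; feature 1 is noise.
X = [[0.0, 0.1], [0.1, 0.9], [0.9, 0.2], [1.0, 0.8]]
y = [0, 0, 1, 1]
print(relief(X, y, m=20))  # feature 0 gets a positive weight, feature 1 a negative one
```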

References

- Kumaresh, S.; Baskaran, R. Mining software repositories for defect categorization. J. Commun. Softw. Syst. 2015, 11, 31–36.

- Bertram, D.; Voida, A.; Greenberg, S.; Walker, R. Communication, collaboration, and bugs: The social nature of issue tracking in small, collocated teams. In Proceedings of the 2010 ACM Conference on Computer Supported Cooperative Work, Savannah, GA, USA, 6–10 February 2010; pp. 291–300.

- Xia, X.; Lo, D.; Wang, X.; Zhou, B. Accurate developer recommendation for bug resolution. In Proceedings of the 2013 20th Working Conference on Reverse Engineering (WCRE), Koblenz, Germany, 14–17 October 2013; pp. 72–81.

- Liu, C.; Yang, J.; Tan, L.; Hafiz, M. R2Fix: Automatically generating bug fixes from bug reports. In Proceedings of the 2013 IEEE Sixth International Conference on Software Testing, Verification and Validation, Luxembourg, 18–22 March 2013; pp. 282–291.

- Lang, G.; Li, Q.; Guo, L. Discernibility matrix simplification with new attribute dependency functions for incomplete information systems. Knowl. Inf. Syst. 2013, 37, 611–638.

- Guo, S.; Chen, R.; Wei, M.; Li, H.; Liu, Y. Ensemble Data Reduction Techniques and Multi-RSMOTE via Fuzzy Integral for Bug Report Classification. IEEE Access 2018, 6, 45934–45950.

- Zhu, X.; Wu, X. Cost-constrained data acquisition for intelligent data preparation. IEEE Trans. Knowl. Data Eng. 2005, 17, 1542–1556.

- Zhao, H.; Yao, R.; Xu, L.; Yuan, Y.; Li, G.; Deng, W. Study on a novel fault damage degree identification method using high-order differential mathematical morphology gradient spectrum entropy. Entropy 2018, 20, 682.

- Jeong, G.; Kim, S.; Zimmermann, T. Improving bug triage with bug tossing graphs. In Proceedings of the 7th Joint Meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering, Amsterdam, The Netherlands, 24–28 August 2009; pp. 111–120.

- Anvik, J.; Hiew, L.; Murphy, G.C. Who should fix this bug? In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; ACM: New York, NY, USA, 2006; pp. 361–370.

- Deng, W.; Zhao, H.; Zou, L.; Li, G.; Yang, X.; Wu, D. A novel collaborative optimization algorithm in solving complex optimization problems. Soft Comput. 2017, 21, 4387–4398.

- Yang, X.L.; Lo, D.; Xia, X.; Huang, Q.; Sun, J.L. High-Impact Bug Report Identification with Imbalanced Learning Strategies. J. Comput. Sci. Technol. 2017, 32, 181–198.

- Naganjaneyulu, S.; Kuppa, M.R.; Mirza, A. An efficient wrapper approach for class imbalance learning using intelligent under-sampling. Int. J. Artif. Intell. Appl. Smart Dev. 2014, 2, 23–40.

- Cieslak, D.A.; Chawla, N.V. Learning decision trees for unbalanced data. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Antwerp, Belgium, 14–18 September 2008; pp. 241–256.

- Chen, R.; Guo, S.; Wang, X.; Zhang, T. Fusion of Multi-RSMOTE with Fuzzy Integral to Classify Bug Reports with an Imbalanced Severity Distribution. IEEE Trans. Fuzzy Syst. 2019.

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Mani, I.; Zhang, I. kNN approach to unbalanced data distributions: a case study involving information extraction. In Proceedings of the Workshop on Learning From Imbalanced Datasets, Washington DC, USA, 21 August 2003; p. 126.

- Li, H.; Gao, G.; Chen, R.; Ge, X.; Guo, S.; Hao, L. The Influence Ranking for Testers in Bug Tracking Systems. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 93–113.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Mozilla. Available online: http://Mozilla.apache.org/ (accessed on 3 September 2019).

- GCC. Available online: http://GCC.apache.org/ (accessed on 3 September 2019).

- Eclipse. Available online: http://Eclipse.apache.org/ (accessed on 3 September 2019).

- Jiang, H.; Li, X.; Ren, Z.; Xuan, J.; Jin, Z. Toward Better Summarizing Bug Reports with Crowdsourcing Elicited Attributes. IEEE Trans. Reliab. 2019, 68, 2–22.

- Anvik, J. Evaluating an assistant for creating bug report assignment recommenders. Workshop Eng. Comput. Hum. Interact. Recomm. Syst. 2016, 1705, 26–39.

- Ai, J.; Su, Z.; Li, Y.; Wu, C. Link prediction based on a spatial distribution model with fuzzy link importance. Phys. A Stat. Mech. Appl. 2019, 527, 121155.

- Deng, W.; Xu, J.; Zhao, H. An improved ant colony optimization algorithm based on hybrid strategies for scheduling problem. IEEE Access 2019, 7, 20281–20292.

- Antoniol, G.; Ayari, K.; Di Penta, M.; Khomh, F.; Guéhéneuc, Y.G. Is it a bug or an enhancement?: A text-based approach to classify change requests. In Proceedings of the CASCON 2008, 18th Annual International Conference on Computer Science and Software Engineering, Conference of the Centre for Advanced Studies on Collaborative Research, Richmond Hill, ON, Canada, 27–30 October 2008.

- Menzies, T.; Marcus, A. Automated severity assessment of software defect reports. In Proceedings of the 2008 IEEE International Conference on Software Maintenance, Beijing, China, 28 September–4 October 2008; pp. 346–355.

- Tian, Y.; Lo, D.; Sun, C. DRONE: Predicting Priority of Reported Bugs by Multi-factor Analysis. In Proceedings of the 2013 IEEE International Conference on Software Maintenance, Eindhoven, The Netherlands, 22–28 September 2013; pp. 200–209. [Google Scholar]

- Hooimeijer, P.; Weimer, W. Modeling bug report quality. In Proceedings of the Twenty-Second IEEE/ACM International Conference on Automated Software Engineering, Atlanta, GA, USA, 5–9 November 2007; pp. 34–43. [Google Scholar]

- Runeson, P.; Alexandersson, M.; Nyholm, O. Detection of Duplicate Defect Reports Using Natural Language Processing. In Proceedings of the 29th International Conference on Software Engineering, Washington, DC, USA, 20–26 May 2007; pp. 499–510. [Google Scholar]

- Sun, C.; Lo, D.; Wang, X.; Jiang, J.; Khoo, S.C. A discriminative model approach for accurate duplicate bug report retrieval. In Proceedings of the 32nd ACM/IEEE International Conference on Software, Cape Town, South Africa, 1–8 May 2010; pp. 45–54. [Google Scholar]

- Sun, C.; Lo, D.; Khoo, S.C.; Jiang, J. Towards more accurate retrieval of duplicate bug reports. In Proceedings of the 2011 26th IEEE/ACM International Conference on Automated Software Engineering, Washington, DC, USA, 6–10 November 2011; pp. 253–262.

- Xia, X.; Lo, D.; Wen, M.; Shihab, E.; Zhou, B. An empirical study of bug report field reassignment. In Proceedings of the 2014 Software Evolution Week—IEEE Conference on Software Maintenance, Reengineering, and Reverse Engineering (CSMR-WCRE), Antwerp, Belgium, 3–6 February 2014; pp. 174–183.

- Zhang, T.; Chen, J.; Yang, G.; Lee, B.; Luo, X. Towards more accurate severity prediction and fixer recommendation of software bugs. J. Syst. Softw. 2016, 117, 166–184.

- Feng, Y.; Chen, Z.; Jones, J.A.; Fang, C.; Xu, B. Test report prioritization to assist crowdsourced testing. In Proceedings of the 10th Joint Meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering, Bergamo, Italy, 30 August–4 September 2015; pp. 225–236.

- Feng, Y.; Jones, J.A.; Chen, Z.; Fang, C. Multi-objective test report prioritization using image understanding. In Proceedings of the 2016 31st IEEE/ACM International Conference on Automated Software Engineering (ASE), Singapore, 3–7 September 2016; pp. 202–213.

- Wang, J.; Cui, Q.; Wang, Q.; Wang, S. Towards Effectively Test Report Classification to Assist Crowdsourced Testing. In Proceedings of the 10th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Ciudad Real, Spain, 8–9 September 2016.

- Wang, J.; Wang, S.; Cui, Q.; Wang, Q. Local-based active classification of test report to assist crowdsourced testing. In Proceedings of the 2016 31st IEEE/ACM International Conference on Automated Software Engineering (ASE), Singapore, 3–7 September 2016; pp. 190–201.

- Cubranic, D.; Murphy, G.C. Automatic bug triage using text categorization. In Proceedings of the SEKE 2004: Sixteenth International Conference on Software Engineering & Knowledge Engineering 2004, Banff, AB, Canada, 20–24 June 2004; pp. 92–97.

- Xuan, J.; Jiang, H.; Ren, Z.; Yan, J.; Luo, Z. Automatic bug triage using semi-supervised text classification. In Proceedings of the 22nd International Conference on Software Engineering and Knowledge Engineering (SEKE 2010), Redwood City, San Francisco Bay, CA, USA, 1–3 July 2010; pp. 209–214.

- Zhao, H.; Zheng, J.; Xu, J.; Deng, W. Fault diagnosis method based on principal component analysis and broad learning system. IEEE Access 2019.

- Bettenburg, N.; Just, S.; Schröter, A.; Weiss, C.; Premraj, R.; Zimmermann, T. What makes a good bug report. In Proceedings of the SIGSOFT 2008/FSE-16, 16th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Atlanta, GA, USA, 9–15 November 2008; pp. 308–318.

- Gao, K.; Khoshgoftaar, T.M.; Seliya, N. Predicting high-risk program modules by selecting the right software measurements. Softw. Qual. J. 2012, 20, 3–42.

- Deng, W.; Zhao, H.; Yang, X.; Xiong, J.; Sun, M.; Li, B. Study on an improved adaptive PSO algorithm for solving multi-objective gate assignment. Appl. Soft Comput. 2017, 59, 288–302.

- Xuan, J.; Jiang, H.; Hu, Y.; Ren, Z.; Zou, W.; Luo, Z.; Wu, X. Towards Effective Bug Triage with Software Data Reduction Techniques. IEEE Trans. Knowl. Data Eng. 2015, 27, 264–280.

- Xuan, J.; Jiang, H.; Zhang, H.; Ren, Z. Developer recommendation on bug commenting: a ranking approach for the developer crowd. Sci. China Ser. Inf. Sci. 2017, 60, 072105.

- Liu, S.; Hou, H.; Li, X. Feature Selection Method Based on Genetic and Simulated Annealing Algorithm. Comput. Eng. 2005, 31, 157–159.

- Jiang, H.; Nie, L.; Sun, Z.; Ren, Z.; Kong, W.; Zhang, T.; Luo, X. ROSF: Leveraging information retrieval and supervised learning for recommending code snippets. IEEE Trans. Serv. Comput. 2016, 12, 34–46.

- Huang, G. An Insight into Extreme Learning Machines: Random Neurons, Random Features and Kernels. Cogn. Comput. 2014, 6, 376–390.

- Guo, S.; Chen, R.; Li, H.; Zhang, T.; Liu, Y. Identify Severity Bug Report with Distribution Imbalance by CR-SMOTE and ELM. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 139–175.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).