Abstract

Skin cancer is one of the most common and life-threatening diseases. Early detection remains a significant challenge, particularly in remote and underserved regions with limited internet access. Traditional skin cancer detection systems often rely on deep learning image classifiers that require constant internet connectivity, which creates barriers in areas with poor infrastructure. To address this limitation, this study runs a convolutional neural network (CNN) directly on low-computing Internet of Things (IoT) devices, enabling on-device machine learning. The CNN model is trained on 10,000 dermoscopic images spanning seven classes from the Harvard skin lesion dataset (HAM10000). Unlike previous research, which seldom offers detailed performance evaluations on IoT hardware, this work benchmarks the CNN model on multiple single-board computers (SBCs), including low-computing devices such as Raspberry Pi and Jetson Nano. The evaluation focuses on classification accuracy and hardware efficiency, analyzing the impact of varying training dataset sizes to assess the model’s scalability and effectiveness on resource-constrained devices. The results demonstrate the feasibility of deploying accurate and efficient skin cancer detection systems directly on low-power hardware. The proposed method achieves an accuracy of 98.25%, and the fastest hardware, the Raspberry Pi 5, achieves a detection time of 0.01 s.

1. Introduction

With millions of cases recorded each year, skin cancer ranks as one of the most prevalent and potentially deadly diseases worldwide. Early identification is essential for improving survival rates, as it allows for prompt medical intervention and care. Dermoscopy, a non-invasive imaging technique, has become a crucial instrument for diagnosing skin lesions by offering detailed visual representations of the skin’s surface. Nevertheless, interpreting these images correctly demands considerable expertise, which is frequently scarce in remote or resource-limited areas.

In recent years, progress in artificial intelligence (AI) has resulted in the creation of automated systems for detecting skin cancer. Convolutional neural networks (CNNs) have shown exceptional effectiveness in tasks related to image classification, particularly in medical imaging fields. CNNs are highly effective at extracting features, recognizing patterns, and classifying skin lesion images into different types like melanoma, benign nevi, and keratosis. For example, the HAM10000 dataset, comprising 10,015 dermoscopic images of skin cancer, has been widely utilized in training CNN models to reach high classification precision.

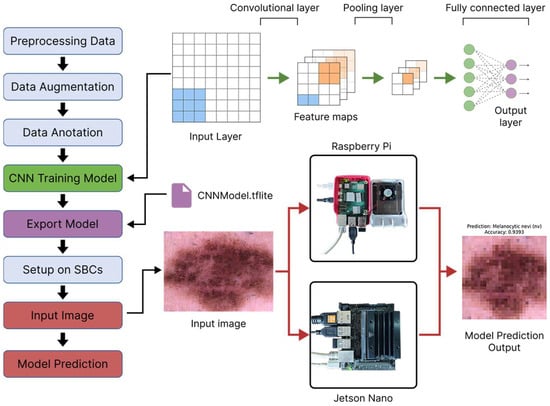

Figure 1 depicts the development flow model for implementing deep learning on single-board computers, which provides a formal framework for maximizing CNN performance on resource-constrained IoT devices. The integration of deep learning models into IoT devices is a rapidly expanding topic, notably in healthcare. Raspberry Pi (Raspberry Pi Ltd., Cambridge, UK) and NVIDIA Jetson Nano (Nvidia, Santa Clara, CA, USA) are affordable platforms for deploying CNN-based models in medical imaging. CNNs excel in feature extraction and image classification, making them well suited to skin lesion analysis. However, deploying CNNs on low-power IoT devices raises issues with computational efficiency, memory utilization, and energy consumption. This study assesses a CNN model tailored for embedded systems and compares its classification accuracy and hardware performance on Raspberry Pi and Jetson Nano. The objective is to provide skin cancer diagnosis in resource-constrained areas without relying on cloud computing.

Figure 1. Flow development model for deep learning on single-board computers.

Although CNNs are very effective, implementing them on low-computing IoT devices is difficult because of resource limitations, including memory, computational power, and energy use. This study tackles these problems by assessing the effectiveness of a CNN-based model. The research compares the classification accuracy and hardware efficiency of the model on Raspberry Pi and Jetson Nano, providing insights into the feasibility of skin cancer detection in resource-limited settings.

The primary contributions of this research are an analysis of the proposed deep learning model and architecture for skin cancer detection, a benchmark of CNN performance on single-board computers for skin cancer detection, and an assessment of hardware efficiency in terms of processing speed, memory usage, and power consumption. The findings seek to close the gap between cutting-edge deep learning models and their practical implementation in low-computing environments, so that skin cancer can be tackled more effectively, especially in areas with limited infrastructure.

The rest of this article is organized as follows. Section 2 discusses related works relevant to this research. Section 3 describes the materials and methods, including dataset preprocessing, model architecture, performance metric evaluation, and single-board computer platforms. Section 4 presents the results and discussion, including the model performance evaluation and the hardware implementation performance. Finally, Section 5 provides the conclusions and directions for future research.

2. Related Works

Convolutional neural networks (CNNs), a class of deep learning models, are widely used for computer vision problems such as segmentation, object detection, classification, and image recognition. In research by Abayomi-Alli et al., the SqueezeNet model obtained 92.18% accuracy in skin cancer classification, suggesting that its lightweight design is suitable for resource-limited settings. Its main disadvantage is significantly lower accuracy than more complex models [1]. Similarly, Rakesh Kumar et al. (2024) demonstrated CNNs’ ability to diagnose skin diseases with 94.91% accuracy, highlighting possibilities in telemedicine, early detection, and mobile-based healthcare access for rural populations. However, the absence of real-world testing in varied populations raises concerns regarding generalizability [2].

Deep learning advancements have improved methods for detecting skin cancer. Prominent research using the VGG-19 model obtained 97.29% accuracy via transfer learning with pre-trained weights, demonstrating the model’s robust feature extraction capability. Although this accuracy is high, the computing demands of the VGG-19 model may make real-time implementation difficult [3]. Other studies that leveraged fastai’s high-level API focused on constructing CNN models for melanoma diagnosis, obtaining excellent accuracy through data augmentation and hyperparameter tuning. These studies underline fastai’s effectiveness in streamlining model construction, although it has disadvantages such as dependency on preset APIs, which may limit customization [4,5].

Further advancements have been investigated using models such as MobileNetV2 and DenseNet201. In their research, Muhammad Zia Ur Rehman et al. improved these models by using Grad-CAM visualization and extra convolutional layers to increase feature interpretability. Their updated DenseNet201 framework obtained a leading accuracy of 95.50%, demonstrating its efficacy in identifying crucial aspects for diagnosis. However, the model’s increased complexity raises doubts about its suitability for low-power devices [6]. Preprocessing procedures and data augmentation were also highlighted as critical stages to ensure data quality and model robustness, with one suggested deep CNN model achieving 90.48% accuracy. While this strategy is successful, it may be time-consuming for large datasets [7].

The EfficientNet model family has also demonstrated potential in skin cancer diagnosis. Karar Ali et al. found that the EfficientNet B4 model achieved a Top-1 Accuracy of 87.91% and an F1-score of 87%, exhibiting superior performance with fewer parameters than traditional models. However, the lower accuracy shows that there is still space for improvement in feature extraction for complicated scenarios. Raed A. Said et al. created an automated approach employing the MobileNet optimizer that achieved 90% accuracy in early skin cancer detection and classification, demonstrating its promise for lightweight, mobile applications [8,9].

In another CNN-related study, R and Shrivastava (2023) built a CNN-based model for skin disease categorization using the Jetson Nano Developer Kit. On the 200-image PH2 dataset, their proposed CNN achieved 93.33% accuracy, surpassing MobileNet (80%) and ResNet50 (56.66%). However, the dataset’s limited size (139 training and 60 test images) may restrict its generalizability [10]. A CNN-based model also performed well in the experiment by V. P. K. and S. P., categorizing skin problems into seven distinct groups with 95% accuracy. This highlights the potential for deep learning approaches to automate the diagnosis process, reduce reliance on manual evaluation, and improve clinical decision making in dermatology [11].

In addition, MobileNet, a lightweight convolutional neural network (CNN) which employs depth-wise separable convolutions, has demonstrated potential by obtaining an accuracy of 91.23%. Its architecture minimizes computing complexity, making it suited for resource-constrained environments and real-time applications. Despite its benefits, MobileNet lacks built-in interpretability, which is vital in clinical diagnostics where understanding the reasoning behind predictions is essential. Furthermore, it is susceptible to image quality fluctuations [12].

Recent research has also investigated explainable AI and hybrid models, emphasizing their influence on model reliability. For example, domain-specific visualization approaches combined with Grad-CAM have increased diagnostic transparency [13]. Similarly, preprocessing steps geared towards resource-constrained settings allowed MobileNet to reach remarkable performance metrics, with automated techniques attaining Top-1 accuracy of 90% for early-stage detection [14].

These innovations underscore the potential for integrating cutting-edge methods, such as advanced CNN architectures, transfer learning, and visualization techniques, into skin cancer diagnostics to enhance detection accuracy, efficiency, and usability. While these approaches improve diagnostic performance and model explainability, challenges such as high computational costs, limited applicability in resource-constrained environments, and the need for robust real-world validation remain areas for further exploration. Nonetheless, these advancements collectively expand access to healthcare through telemedicine and automated screening solutions, paving the way for more inclusive and reliable diagnostic systems.

3. Materials and Methods

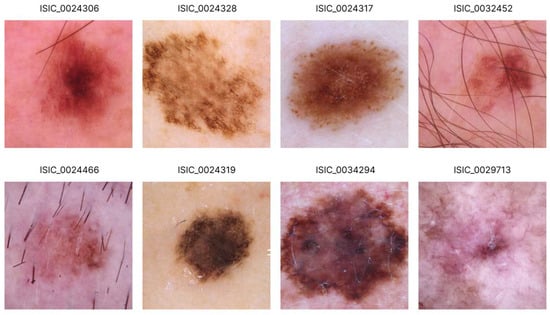

In this research, a methodology consisting of several stages was used, as shown in Figure 2. The initial stage involved data preprocessing, including dividing the dataset into training and testing sets, data augmentation to increase diversity, and image labeling for annotations. The convolutional neural network (CNN) model was then trained using the training data to recognize patterns in the images. The performance of the proposed model was evaluated using metrics like F1-score, recall, precision, and Mean Average Precision (MAP). Following that, the trained model was implemented on hardware devices such as Jetson Nano and Raspberry Pi. In the final stage, the model’s efficiency and accuracy were evaluated by considering various data parameters. This methodology allowed for the development, evaluation, and implementation of an efficient and accurate model for image recognition.

Figure 2. Research stages.

3.1. Data Preprocessing

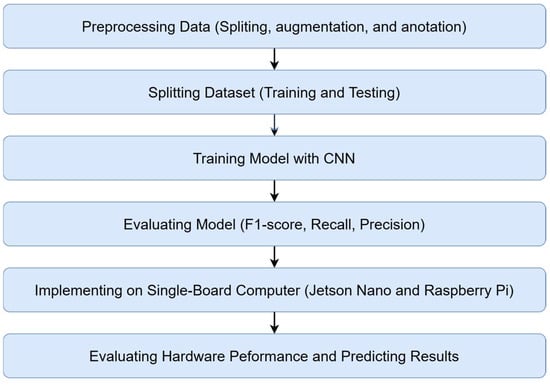

The dataset used in this study is the Human Against Machine with 10,000 training images skin cancer dataset (HAM10000) from the Harvard Dataverse [15]. The dataset contains 10,015 dermatoscopic images of pigmented human skin lesions. The images were collected using various tools and methods and are categorized into 7 classes.

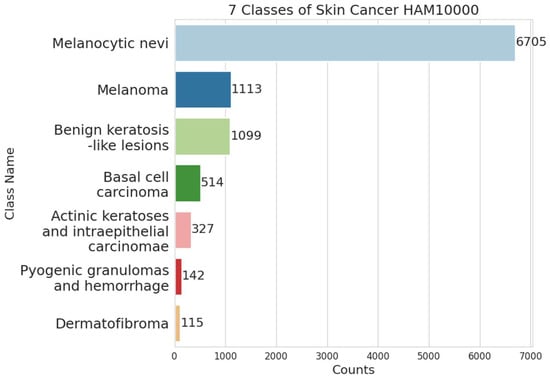

As shown in Figure 3, the dataset is divided into 7 class categories, dominated by the melanocytic nevi class with 6705 images, while the other categories together contain 3310 images. The dataset, obtained from the Harvard Dataverse, is distributed as 2 zip files that together contain 10,015 JPEG images, as shown in Figure 4. The dataset also provides metadata to track lesions in the images. The HAM10000 dataset was also used as a training set for the 2018 ISIC Challenge.

Figure 3. Classes of skin cancer dataset HAM10000.

Figure 4. Sample images of HAM10000 dataset.

The dataset covers 3 gender categories, with 5406 male, 4552 female, and 57 unknown entries. In the initial stage of preprocessing, we applied a Random Over-Sampler technique to address class imbalance within the dataset. Random Over-Sampling artificially increases the number of samples in minority classes by duplicating existing instances, ensuring a more balanced class distribution. After Random Over-Sampling, the dataset grows to 46,935 samples. The metadata provides the original label for each image. This research converts the dataset into a 4-dimensional array (batch, height, width, channel), with image dimensions of 28 × 28 × 3 using RGB color channels. The dataset is then divided randomly into 2 subsets, with 75% for training and 25% for testing, and the labels and image features are stored for the next stage.
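The oversampling and splitting steps described above can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' code: the function names, the toy labels, and the fixed seed are assumptions, and the order (oversample first, then split) simply mirrors the description in the text. Note that 46,935 equals 7 × 6705, which is consistent with every class being raised to the size of the largest class.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples at random until every class
    matches the size of the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(items) for items in by_class.values())
    out_x, out_y = [], []
    for y, items in by_class.items():
        # Draw the missing items with replacement from the existing ones.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for x in items + extra:
            out_x.append(x)
            out_y.append(y)
    return out_x, out_y


def train_test_split(samples, labels, test_fraction=0.25, seed=0):
    """Shuffle indices and hold out `test_fraction` of them for testing."""
    rng = random.Random(seed)
    idx = list(range(len(samples)))
    rng.shuffle(idx)
    n_test = int(len(idx) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return ([samples[i] for i in train_idx], [samples[i] for i in test_idx],
            [labels[i] for i in train_idx], [labels[i] for i in test_idx])


# Toy stand-in for the image data: 6 "nv" items against 2 "mel" and 1 "df".
labels = ["nv"] * 6 + ["mel"] * 2 + ["df"]
samples = list(range(len(labels)))

bal_x, bal_y = random_oversample(samples, labels)
counts = Counter(bal_y)
x_train, x_test, y_train, y_test = train_test_split(bal_x, bal_y)
print(counts, len(x_train), len(x_test))
```

In practice a library implementation such as a random over-sampler from an imbalanced-learning package would replace the first helper; the sketch only shows the mechanism.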

3.2. Convolutional Neural Network Model Training

This study proposes a multi-layer convolutional neural network (CNN) whose output predicts one of 7 skin cancer class categories. The model was built using the TensorFlow and Keras libraries, with each layer designed to support the feature learning process.

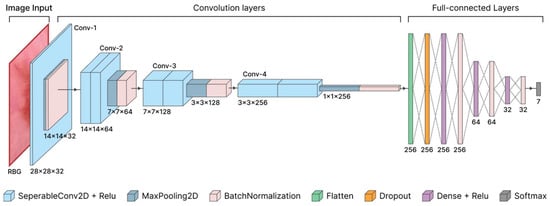

The proposed CNN architecture consists of multiple stages and layers, as shown in Figure 5. The model is designed to accept 28 × 28 × 3 RGB input images. It uses 2D separable convolution layers, which reduce the number of parameters compared to standard convolution. This technique separates the process into depthwise and pointwise convolution. Depthwise convolution extracts spatial features independently in each channel, while pointwise convolution combines information between channels to capture the relationship between features. The he_normal initialization is used to speed up the convergence of the model and ensure better weight distribution at the start of training.

Figure 5. Architecture of the proposed convolutional neural network.
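To make the parameter saving of separable convolution concrete, the short sketch below counts weights for a standard 3 × 3 convolution and for its depthwise plus pointwise counterpart. The helper names are illustrative, a single bias per output filter is assumed, and the channel pairs assume that each stage receives the filter count of the previous stage, which the text does not state explicitly.

```python
def standard_conv_params(k, c_in, c_out, bias=True):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv_params(k, c_in, c_out, bias=True):
    """Weights of a depthwise separable convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise + (c_out if bias else 0)


# Assumed (input channels, filters) for the first layer of each stage.
for c_in, c_out in [(3, 32), (32, 64), (64, 128), (128, 256)]:
    std = standard_conv_params(3, c_in, c_out)
    sep = separable_conv_params(3, c_in, c_out)
    print(f"{c_in:>3} -> {c_out:<3} standard={std:>6} separable={sep:>5} "
          f"ratio={std / sep:.1f}x")
```

The saving grows with the channel count, because the pointwise term replaces the k × k × c_in × c_out product of a standard layer.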

In the initial Conv-1 stage, the model uses a 2D separable convolution with 32 filters, a 3 × 3 kernel, and Rectified Linear Unit (ReLU) activation, followed by 2D MaxPooling and Batch Normalization to normalize the data distribution. The ReLU non-linearity helps the layer learn more complex patterns. The combination of MaxPooling and Batch Normalization supports stable gradient updates and mitigates internal covariate shift.

At the Conv-2 stage, the model has an output size of 14 × 14 × 64. The model uses two 2D separable convolutional layers, each with 64 filters and kernel size 3 × 3, followed by 2D MaxPooling and Batch Normalization to improve stability during training.

At the Conv-3 stage, the model has an output size of 7 × 7 × 128, with two 2D separable convolutional layers with 128 filters and a 3 × 3 kernel, followed by 2D MaxPooling and Batch Normalization. At the Conv-4 stage, the model has an output of 3 × 3 × 256, with two 2D separable convolutional layers using 256 filters and a 3 × 3 kernel. This layer is followed by 2D MaxPooling and Batch Normalization to normalize the data distribution and speed up the convergence process.
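The feature-map sizes quoted for each stage can be traced with a few lines of arithmetic. The sketch assumes 'same' padding in the convolutions and 2 × 2 max pooling with stride 2, neither of which is stated in the text; under those assumptions the quoted sizes correspond to the convolution output of each stage, before that stage's pooling.

```python
def trace_shapes(input_size=28, filters=(32, 64, 128, 256), pool=2):
    """Return (conv_output, pooled_output) shapes for each stage."""
    size = input_size
    shapes = []
    for f in filters:
        conv_shape = (size, size, f)   # 'same' padding keeps height and width
        size = size // pool            # 2 x 2 max pooling, stride 2, floor
        shapes.append((conv_shape, (size, size, f)))
    return shapes


for stage, (conv, pooled) in enumerate(trace_shapes(), start=1):
    print(f"Conv-{stage}: conv output {conv} -> after pooling {pooled}")
```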

After the convolution layer, the extracted features are flattened by the flatten layer and passed to multiple fully connected layers (dense layers). Regularization is applied using the Dropout layer to reduce the risk of overfitting. The weights in the dense layers are initialized using he_normal, and additional regularization is applied to the last layer before output.

3.3. Convolutional Neural Network Performance Evaluation

Measuring the performance of a CNN model is an important step in evaluating how effectively the model classifies an object. In this research, several evaluation metrics are used to measure the performance of the model detector in the SCDS. These metrics include accuracy (A), precision (P), recall (R), and F1-score.

A True Positive (TP) occurs when the model correctly identifies an object as positive. A True Negative (TN) occurs when the model correctly identifies an object as negative. A False Positive (FP) occurs when the model incorrectly identifies an object as positive, and a False Negative (FN) occurs when the model incorrectly identifies a positive object as negative.

Accuracy (A) measures the proportion of all samples that the model classifies correctly, using the formula in (1). Precision indicates the proportion of correct positive predictions among all positive predictions made by the model, calculated with the formula in (2). Recall measures the extent to which the model captures all positive samples that are present in the dataset, using the formula in (3). The higher the recall, the better the model is at recognizing all relevant objects. The F1-score is the harmonic mean of precision and recall and serves as a reference for the balance between the two metrics. It is calculated with the formula in (4).
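Assuming formulas (1) to (4) follow the standard definitions of these metrics in terms of TP, TN, FP, and FN, they can be written as:

```latex
\begin{align}
A &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1} \\
P &= \frac{TP}{TP + FP} \tag{2} \\
R &= \frac{TP}{TP + FN} \tag{3} \\
F1 &= \frac{2 \cdot P \cdot R}{P + R} \tag{4}
\end{align}
```

For a seven-class problem, P, R, and F1 are typically computed per class in a one-vs-rest fashion, treating the class of interest as positive and all other classes as negative.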

3.4. Convolutional Neural Network Implementation on SBC Platform

The research uses multiple single-board computer platforms to evaluate performance with several parameters: detection time, power consumption, RAM utilization, and dissipated energy. Detection time indicates how quickly the model detects objects. The smaller the detection time, the faster the model identifies the object. Power consumption measures the amount of power drawn by the single-board computer during the process. RAM utilization shows how much memory the skin cancer classification system uses while running.

The dissipated energy parameter in (5) describes the total energy dissipated while the system runs on a single-board computer, calculated by multiplying the power by the detection time. This helps determine how efficient the power consumption is while the system is running.
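As a worked example of the power × time relation, the sketch below computes the dissipated energy for the three configurations reported in Section 4.2. It assumes the reported power figures are average power in watts and the detection times are in seconds, so the product is in joules; the helper names and the conversion to milliwatt-hours (1 mWh = 3.6 J) are additions for illustration and not part of the original formula.

```python
def dissipated_energy_joules(power_w, detection_time_s):
    """Energy = power x time, in joules when power is in watts."""
    return power_w * detection_time_s


def joules_to_milliwatt_hours(energy_j):
    """1 Wh = 3600 J, so 1 mWh = 3.6 J."""
    return energy_j / 3.6


# (power, detection time) pairs as reported in Section 4.2.
measurements = {
    "Raspberry Pi 5": (4.825, 0.010),
    "Jetson Nano (MAXN)": (3.385, 0.029),
    "Jetson Nano (5 W)": (2.948, 0.048),
}

energy_mwh = {}
for device, (power, seconds) in measurements.items():
    joules = dissipated_energy_joules(power, seconds)
    energy_mwh[device] = joules_to_milliwatt_hours(joules)
    print(f"{device}: {joules:.5f} J = {energy_mwh[device]:.4f} mWh")
```

Expressed in milliwatt-hours, the three products round to 0.013, 0.027, and 0.039, which is numerically in line with the dissipated-energy values quoted in Section 4.2.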

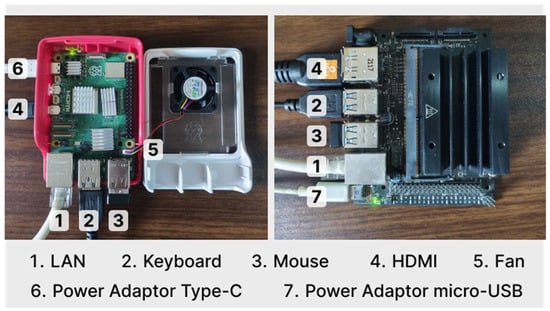

Table 1 lists the specifications of the single-board computers used in this research, with detailed parameters. The CNN model is stored in TensorFlow Lite format, which is suitable for low-power devices such as those used in this study. Several libraries need to be installed, including tensorflow, numpy, matplotlib, psutil, and pandas. Example images are prepared to test the performance of the model, the hardware, and the classification results. The Jetson Nano is connected to a micro-USB power adapter and an HDMI cable for a monitor, while the Raspberry Pi devices use a Type-C power adapter and an HDMI cable for a monitor. For installation and initial setup, a LAN cable can also be used for internet access, as shown in Figure 6.

Table 1. Hardware specifications.

Figure 6. Setup of single-board computer.

To evaluate the hardware performance of each SBC platform, this paper uses 4 parameters: detection time (s), power consumption (W/h), RAM utilization (MB), and dissipated energy (mJ). Detection is performed on 10 random images prepared from the dataset. These 10 images were then drawn at random to evaluate each device 100 times. The average values of the 4 parameters are shown in Table 4.
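The repeated-measurement procedure can be sketched as a small timing loop. This is an illustration under stated assumptions: `dummy_infer` is a hypothetical placeholder for the real TensorFlow Lite inference call, the images are synthetic lists rather than dataset samples, and only detection time is measured (power and RAM would need device-specific tooling such as psutil).

```python
import random
import statistics
import time


def benchmark(infer, images, runs=100, seed=0):
    """Time `infer` on randomly drawn images and return summary statistics."""
    rng = random.Random(seed)
    timings = []
    for _ in range(runs):
        image = rng.choice(images)          # draw one of the prepared images
        start = time.perf_counter()
        infer(image)
        timings.append(time.perf_counter() - start)
    return {
        "runs": len(timings),
        "mean_s": statistics.mean(timings),
        "stdev_s": statistics.stdev(timings),
    }


def dummy_infer(image):
    """Hypothetical stand-in for the on-device model call."""
    return sum(image) % 7


# Ten synthetic "images", each flattened to 28 * 28 * 3 values.
images = [[i] * (28 * 28 * 3) for i in range(10)]
result = benchmark(dummy_infer, images)
print(f"{result['runs']} runs, mean {result['mean_s']:.6f} s")
```

Reporting the standard deviation alongside the mean makes it easier to judge whether differences between devices exceed run-to-run noise.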

4. Results and Discussion

4.1. Model Performance Evaluation

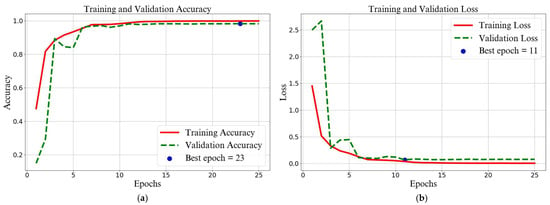

The model achieved its lowest validation loss of 0.0685 at epoch 11 and its highest validation accuracy of 98.30% at epoch 23. Accuracy increased sharply in the early epochs and stabilized after epoch 11, with the learning rate gradually decreasing at each epoch to avoid overfitting. The small and stable gap between the training and validation curves indicates that the model generalizes well, as shown in Figure 7.

Figure 7. Metrics of (a) training and validation accuracy and (b) training and validation loss.

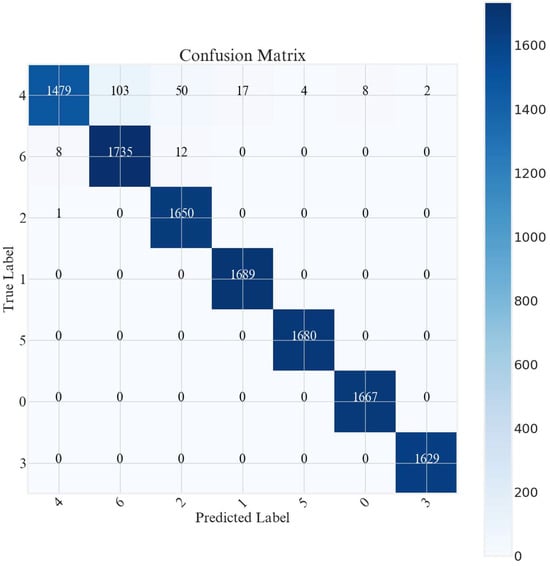

The model performed very well, with most predictions falling within the main diagonal of the confusion matrix, indicating a high level of accuracy. However, there were some significant misclassifications in class 4, with 103 samples misclassified as class 6, 50 samples misclassified as class 2, and 17 samples misclassified as class 1. In the other classes, these were very small or zero, indicating that the model had excellent precision and recall for most of the class categories shown in Figure 8. Improvements can be made by further exploring the features that distinguish classes 4, 6, and 2, and by increasing the amount of other training data to reduce the bias between classes.

Figure 8. Confusion matrix of proposed model.
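Per-class precision, recall, and F1-score can be read off a confusion matrix in a one-vs-rest fashion, as the following sketch shows. The 3 × 3 matrix is invented purely for illustration and is not the matrix in Figure 8; the function name is likewise an assumption.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # true class c, predicted elsewhere
        fp = sum(cm[r][c] for r in range(n)) - tp   # other classes predicted as c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"precision": precision, "recall": recall, "f1": f1})
    accuracy = sum(cm[c][c] for c in range(n)) / total
    return accuracy, results


# Toy matrix: class 1 leaks 5 samples each into classes 0 and 2.
toy_cm = [
    [50, 0, 0],
    [5, 40, 5],
    [0, 0, 50],
]
accuracy, metrics = per_class_metrics(toy_cm)
print(f"accuracy={accuracy:.3f}")
for c, m in enumerate(metrics):
    print(c, {k: round(v, 3) for k, v in m.items()})
```

In the toy example, the class whose samples leak into other classes keeps perfect precision but loses recall, while the classes that absorb those samples keep perfect recall but lose precision.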

Compared with the other models in Table 2, the model proposed in this paper shows the best performance with 98% accuracy. It surpasses the CNN method of R and Shrivastava (2023) [10], which reached 93.33% accuracy on the PH2 dataset, and that of Salomi et al. (2024) [13], which reached 89.31% accuracy on the ISIC dataset. On the same HAM10000 dataset, the CNN method of Barbadekar et al. (2023) [3] reached 97.29% accuracy. The proposed model also outperforms the TinyML method of Watt et al. (2024) [16], which had the lowest accuracy at 85%, while the AlexNet-based CNN of Ali et al. (2023) [14] also achieved a high accuracy of 98%. In addition, the proposed model was successfully implemented on a single-board computer (SBC), as were several of the other models, which shows that it can be deployed efficiently. The proposed model therefore combines the highest accuracy with implementation flexibility, making it a strong solution for classification on the HAM10000 dataset.

Table 2. Comparison of proposed model with state of the art.

The results of our experiment illustrate both the value and effectiveness of the proposed convolutional neural network (CNN) model for skin cancer classification. When tested on the HAM10000 dataset, as shown in Table 3, the model attained an overall accuracy of 98%, surpassing other cutting-edge approaches significantly. In terms of individual class performance, the model achieved high precision, recall, and F1-scores in all seven disease categories. Notably, it obtained perfect scores of 1.00 for the akiec, bcc, df, and vasc classes, indicating error-free detection in these categories. The mel class likewise performed well, with a precision of 0.94, recall of 0.99, and F1-score of 0.97. The nv class had a slightly reduced recall of 0.89 but a high precision of 0.99, resulting in an F1-score of 0.94.

Table 3. Proposed model classification result.

4.2. Hardware Implementation Performance

Figure 9 is an example of the output obtained by running the program on a single-board computer.

Figure 9. Model detection results on single-board computers.

In the TensorFlow Lite file format, the model has a relatively small size of 976 KB. TensorFlow Lite reduces the model size significantly from its original H5 format, which was 3 MB. The lightweight TensorFlow Lite model is well suited for implementation on low-cost computing devices such as those used in this paper. With a lightweight model, the detection process becomes faster while still producing good accuracy. In the first image, the model detects the melanoma class with a confidence of 99.88%. In the next image, the model detects the benign keratosis-like lesion class with a confidence of 97.42%.

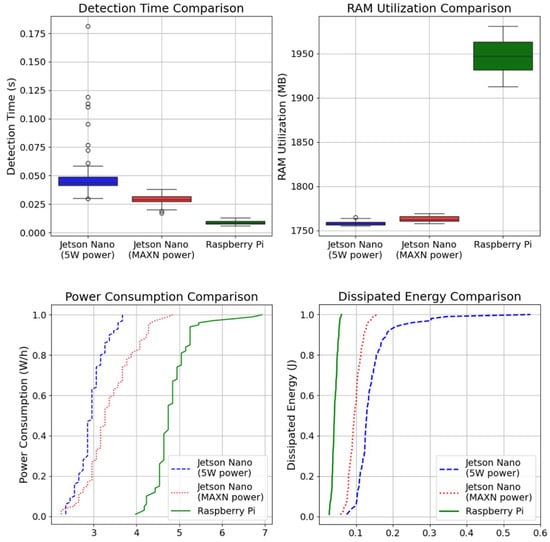

For the hardware implementation on single-board computers (SBCs), especially the Raspberry Pi 5 Model B and the NVIDIA Jetson Nano, the model produced excellent performance results, as shown in Table 4. Raspberry Pi achieved the fastest detection time of 0.01 s, making it suitable for real-time applications, while Jetson Nano in MAXN mode demonstrated an efficient balance between speed and power consumption. Raspberry Pi consumed 4.825 W/h of power while utilizing 1948 MB of RAM, whereas Jetson Nano in MAXN power mode had a detection time of 0.029 s, with a power consumption of 3.385 W/h and RAM utilization of 1763 MB. In its low-power 5 Watt mode, the Jetson Nano had a slightly slower detection time of 0.048 s but consumed just 2.948 W/h, with a RAM utilization of 1960 MB. These results confirm that SBCs offer an effective balance between energy efficiency and real-time classification speed, making them ideal candidates for on-device AI applications in low-resource environments.

Table 4. Hardware performance evaluation results.

Deploying this approach in places with restricted internet connectivity raises additional considerations. Because the proposed model runs purely on-device with TensorFlow Lite, it does not require constant internet access for inference. This makes it well suited to offline deployment in rural healthcare settings, where general practitioners or paramedics may use it as a decision support tool for early diagnosis. However, one significant shortcoming of this technique is that, without access to centralized model updates, the model may become obsolete over time as more varied data become available.

To overcome this issue in future research, Federated Learning (FL) offers a possible solution. Instead of requiring centralized training, FL enables edge devices to train locally on fresh patient data and only send model updates (rather than raw patient data) when connectivity is available. This decentralized method protects patient privacy while allowing the model to improve over time. A realistic FL-based implementation would have the following workflow. A healthcare professional in a rural clinic uses the system for real-time inference, while the device also performs local training to fine-tune the model. When the healthcare professional visits a hospital or a central facility with internet connectivity, the trained model parameters (rather than raw patient data) are sent to a central server. The server aggregates multiple updates from different locations using techniques like FedAvg, improving overall model accuracy without compromising privacy [17]. The updated global model is then redistributed to all connected edge devices, ensuring continuous improvement across diverse healthcare settings.
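The server-side aggregation step of this workflow can be illustrated with a minimal FedAvg sketch, in which each client's parameters are weighted by the number of local samples it trained on. The flat parameter lists, clinic names, and sample counts are hypothetical, and a real deployment would aggregate full model tensors and handle communication and security, which are omitted here.

```python
def fed_avg(client_weights, client_sizes):
    """Sample-size-weighted average of client parameter vectors."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical clinics with different amounts of local data.
clinic_a = [0.10, 0.50]   # trained on 100 local samples
clinic_b = [0.30, 0.10]   # trained on 300 local samples
global_weights = fed_avg([clinic_a, clinic_b], [100, 300])
print(global_weights)
```

Weighting by sample count means the clinic with more local data pulls the global parameters closer to its own values.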

The dissipated energy measurements validated the SBCs’ energy efficiency throughout the experiment. Raspberry Pi had the lowest dissipated energy of 0.013 mJ, demonstrating that, despite its greater power consumption, its faster processing speed substantially reduces energy loss. In contrast, Jetson Nano in 5 W mode had the maximum dissipated energy of 0.039 mJ, demonstrating that reduced power consumption does not always imply superior efficiency. Interestingly, Jetson Nano’s MAXN mode, with a dissipated energy of 0.027 mJ, showed a better balance of power efficiency and processing capabilities, suggesting that deliberately allocating more power can enhance overall energy efficiency.

Given these findings, Raspberry Pi remains the best choice for applications that need high-speed inference, although Jetson Nano in 5 W mode may be better suited to power-constrained situations, as shown in Figure 10. However, when contemplating the long-term scalability of edge AI systems, particularly those utilizing Federated Learning (FL), energy efficiency becomes an important consideration for future research.

Figure 10. Comparison of hardware performance metrics.

Rather than investigating different hardware platforms separately, this work demonstrates how incorporating the TensorFlow Lite-based model into small, low-cost SBCs provides a feasible step forward in AI-assisted skin cancer diagnosis. The fast inference speed of Raspberry Pi and the combination of power efficiency and processing power of Jetson Nano show that AI-assisted diagnosis can be efficiently implemented on low-cost hardware. These results demonstrate that SBCs are not only capable of classification, but also provide a cost-effective and scalable option for mobile, power-efficient medical AI applications. Furthermore, such a system can enable remote monitoring of patients in rural or underserved areas, ensuring access to early diagnosis and treatment recommendations without the need for high-end medical infrastructure.

However, this study has limitations, including the lack of diverse clinical images, which may affect the generalizability of the model. Computational constraints of edge devices also limit the complexity of the model. Further validation in real-world settings is required to address environmental variability. Network conditions, sensor inconsistencies and patient movement can affect applicability. Explainable AI (XAI) can improve model interpretability for medical practitioners. Expanding the dataset with diverse skin colors and lesion types is critical for robustness. Future work should optimize accuracy and efficiency for edge devices.

5. Conclusions

The HAM10000 dataset was used in this study to create and test a convolutional neural network (CNN) model for skin cancer classification on single-board computer (SBC) devices. The technique included data preprocessing, image modification with UV spot detection and pigment comparison, model training and assessment, and hardware implementation. The proposed CNN model obtained 98% accuracy, outperforming other state-of-the-art techniques. Performance criteria including accuracy, recall, and F1-score showed consistent reliability across all seven disease categories.

Experiments demonstrated that the model performed well when implemented on SBCs such as Raspberry Pi 5 Model B and NVIDIA Jetson Nano. Raspberry Pi had the quickest detection time of 0.01 s, whereas Jetson Nano in its MAXN power mode combined efficient power use with a detection time of 0.029 s. Jetson Nano’s low-power 5 W mode, which consumed 2.948 W/h, proved practical for energy-efficient applications.

These findings demonstrate the viability of using the CNN model in real-time, portable skin cancer detection devices with little hardware. Furthermore, the findings identify areas for further improvement in practical healthcare diagnostic applications.

Future research should improve the model’s adaptation to low-connectivity conditions. While the system functions offline, it does not receive constant updates, which may reduce its usefulness. Federated Learning (FL) is a promising method that allows for on-device training from remote places while syncing updates when internet connectivity is available. FL enables edge devices such as Raspberry Pi and Jetson Nano to learn on localized data while maintaining patient privacy. This strategy improves model performance without transferring raw medical data. However, issues including communication efficiency, resource restrictions, and model heterogeneity must be addressed.

Furthermore, Explainable AI (XAI) can increase model interpretability and thereby build confidence among healthcare practitioners. Broader use will also require further optimization for real-time deployment on edge devices, so that skin cancer detection remains scalable, privacy-preserving, and efficient across a variety of medical scenarios.

Author Contributions

Conceptualization, N.S.; Methodology, V. and N.S.; Software, V.; Validation, V., G.D. and N.S.; Investigation, V., G.D. and N.S.; Resources, N.S.; Data curation, V. and G.D.; Writing-original draft preparation, V., G.D. and N.S.; Writing-review and editing, V., G.D. and N.S.; Visualization, V., G.D. and N.S.; Funding acquisition, N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the Harvard Dataverse as the HAM10000 dataset [15]. These data were derived from the following resource available in the public domain:

https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T (accessed on 1 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Abayomi-Alli, O.O.; Damasevicius, R.; Misra, S.; Maskeliunas, R.; Abayomi-Alli, A. Malignant skin melanoma detection using image augmentation by oversampling nonlinear lower-dimensional embedding manifold. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 2600–2614.

2. Gupta, M.; Kumar, R.; Pradhan, A.K.; Obaid, A.J. Skin Disease Detection Using Neural Networks. In Proceedings of the 2024 International Conference on Advancements in Smart, Secure and Intelligent Computing (ASSIC), Bhubaneswar, India, 27–29 January 2024; pp. 1–4.

3. Barbadekar, A.; Ashtekar, V.; Chaudhari, A. Skin cancer classification and detection using VGG-19 and DenseNet. In Proceedings of the 2023 International Conference on Computational Intelligence, Networks and Security (ICCINS), Mylavaram, India, 22–23 December 2023; pp. 1–6.

4. Mia, M.S.; Mim, S.M.; Alam, M.J.; Razib, M.; Mahamudullah; Bilgaiyan, S. An approach to detect melanoma skin cancer using fastai CNN models. In Proceedings of the 2023 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 23–25 January 2023.

5. Ornek, H.K.; Yilmaz, B.; Yasin, E.; Koklu, M. Deep Learning-Based Classification of Skin Lesion Dermoscopic Images for Melanoma Diagnosis. Intell. Methods Eng. Sci. 2024, 3, 70–81.

6. Zia Ur Rehman, M.; Ahmed, F.; Alsuhibany, S.A.; Jamal, S.S.; Zulfiqar Ali, M.; Ahmad, J. Classification of skin cancer lesions using explainable deep learning. Sensors 2022, 22, 6915.

7. Mahalle, P.; Shinde, S.; Raka, P.; Lodha, K.; Mane, S.; Malbhage, M. Skin cancer detection using CNN. In Proceedings of the International Conference on Computer Science and Emerging Technologies (CSET), Bangalore, India, 10–12 October 2023; pp. 1–5.

8. Ali, K.; Shaikh, Z.A.; Khan, A.A.; Laghari, A.A. Multiclass skin cancer classification using EfficientNets-a first step towards preventing skin cancer. Neurosci. Inform. 2022, 2, 100034.

9. Said, R.A.; Raza, H.; Muneer, S.; Amjad, K.; Mohammed, A.S.; Akbar, S.S.; Aslam, M.A. Skin cancer detection and classification based on deep learning. In Proceedings of the International Conference on Cyber Resilience (ICCR), Dubai, United Arab Emirates, 6–7 October 2022.

10. Shrivastava, V.K. Skin Disease Classification Using Deep Convolutional Neural Network on Jetson Nano Developer Kit. In Proceedings of the Third International Conference on Applied Electromagnetics, Signal Processing, & Communication (AESPC), Bhubaneswar, India, 24–26 November 2023.

11. Vasishtamol, P.K.; Soni, P. Advances in deep learning for dermatology: Convolutional neural networks for automated skin disease detection and diagnosis. In Proceedings of the 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–5.

12. Raut, P.; Sahu, N.; Sudam, A.; Shirvastava, A.; Mumbaikar, S. Skin lesion detection using MobileNet CNN. In Proceedings of the 3rd Asian Conference on Innovation in Technology (ASICON), Pune, India, 25–27 August 2023; pp. 1–6.

13. Salomi, M.; Daram, G.; Harshitha, S.S. Early skin cancer detection using CNN-ABCD rule-based feature extraction classification and K-means clustering algorithm through Android mobile application. In Proceedings of the Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024; pp. 1–5.

14. Ali, M.A.; Khan, M.; Sherwani, K.I. Early skin cancer detection using deep learning. In Proceedings of the 3rd International Conference on Smart Generation Computing, Communication and Networking (SMART GENCON), Bangalore, India, 29–31 December 2023; pp. 1–6.

15. Tschandl, P. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions (Version 4). Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T (accessed on 1 January 2025).

16. Watt, T.; Chrysoulas, C.; Barclay, P.J. Moving healthcare AI-support systems for visually detectable diseases onto constrained devices. arXiv 2024, arXiv:2408.08215.

17. Abreha, H.G.; Hayajneh, M.; Serhani, M.A. Federated Learning in Edge Computing: A Systematic Survey. Sensors 2022, 22, 450.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).