Abstract

With the increasing prevalence of Internet of Things (IoT) devices in areas like authentication, data protection, and access control, general-purpose microcontrollers (MCUs) have become the primary platform for security-critical applications. However, the cost of hardware attacks, fault injection attacks (FIA) in particular, has decreased significantly in recent years, making them a viable threat to MCU-based devices. We present a framework-driven perspective with a comparative survey of MCU fault injection resilience for IoT. The survey supports, and is organized around, the procedural evaluation framework we introduce. We first discuss the basic security requirements and then categorize the common types of hardware attack, including side-channel attacks, fault injection attacks, and invasive methods. We synthesize reported security technologies employed by MCU vendors, such as TrustZone/TEE, Physical Unclonable Functions (PUF), secure boot, flash encryption, secure debugging, and tamper detection, in the context of FIA scenarios. A comparison of representative MCUs (STM32U585, NXP LPC55S69, Nordic nRF54L15, Espressif ESP32-C6, and Renesas RA8M1) highlights cost–security trade-offs relevant to token-class deployments. We position this work as a framework/perspective: an evidence-first FI evaluation protocol for token-class MCUs, a portable checklist unifying PSA/SESIP/CC expectations, and a set of concrete case studies (e.g., ESP32-C6 secure boot hardening). We do not claim a formal systematic review.

1. Introduction

Over the past decade, security architects have increasingly relied on resource-constrained microcontrollers (MCUs)—typically Arm Cortex-M or compact RISC-V parts with features such as TrustZone-M, secure boot, flash/OTP encryption, and authenticated debug—as the primary protection boundary in IoT and token-class systems [1]. These MCUs often anchor authentication tokens and access badges and serve as roots of trust in devices whose economics favor a streamlined supply chain, a single firmware image, predictable edge latency, and aggressive platform reuse. As a result, many products now treat an MCU—not a companion secure element—as the first line of defense for boot integrity, key protection, lifecycle control, and update assurance.

At the same time, the bar for practical hardware-assisted attacks has dropped. Software-assisted fault injection (FIA) has shown that privileged code can modulate voltage and frequency within allowed operating ranges to induce exploitable timing faults without invasive equipment [2]. In parallel, microarchitectural channels exploiting caches, TLBs, prefetchers, performance/power sensors, and related structures have demonstrated that secrets can be encoded into and recovered from shared microarchitectural state, even on comparatively simple cores [3]. Phenomena such as DRAM disturbance effects further illustrate how physical behaviors can erode higher-level isolation if not treated as first-class threats [4]. Together, these trends narrow the implicit safety margins of embedded designs.

This raises a practical question for IoT practitioners: how resilient are “security-enhanced yet general-purpose” MCUs to low-barrier attacks—especially FIA—and can they be trusted to underpin authentication tokens and similar anchors? Addressing this requires moving beyond checklists (e.g., “has TrustZone-M”) toward capability-driven reasoning that ties concrete attack preconditions (clock/voltage control, lifecycle/debug state, firmware update surface) to deployable controls (secure boot chains, key vaults, flash encryption, TRNG/PUF quality, side-channel hardening, and tamper/monitor subsystems).

1.1. Problem Statement and Motivation

Assumptions about privilege separation at microarchitectural boundaries have been systematically challenged [5]. Even where MCUs avoid speculative out-of-order cores, the method—encode secrets into microarchitectural state and recover them via robust timing/power observables—ports to simpler structures such as TLBs, PMUs, prefetchers, and thermal sensors [6]. In parallel, DVFS-class FIA demonstrates that software-exposed power/clock controls can create fault windows affecting control flow, cryptographic checks, and boot-time verification [7].

Our motivation is twofold:

- (i) Provide a vendor-neutral, evidence-weighted evaluation of security-enhanced MCUs considered for tokens and trust roots;

- (ii) Bridge the vocabulary of MCU features (TrustZone-M partitions, secure boot chains, key-vault isolation, lifecycle/authenticated debug, flash encryption, TRNG/PUF health) with contemporary attack mechanisms (DVFS-triggered faults, lifecycle/debug misuse, and microarchitectural channels) to enable holistic residual-risk reasoning.

These aims align with the research questions formalized in Section 2.

Primary contribution. The core contribution is a procedural evaluation framework in Section 5. The comparative survey and device-level narratives exist to instantiate and stress-test that framework on representative MCU families.

We therefore emphasize framework-guided reasoning over “leaderboard”-style rankings.

1.2. Scope and Threat Model

In scope. Commodity and security-enhanced MCUs used as primary trust anchors in IoT/token-class deployments (e.g., Cortex-M33/M85 with TrustZone-M; securely booted RISC-V MCUs with flash/OTP encryption). We consider adversaries with physical proximity and board-level access who can (a) induce transient faults via power/clock or EM glitching; (b) exercise lifecycle/debug transitions and update paths; and (c) exploit microarchitectural observables (timing/power). The goal is to evaluate integrity (boot/firmware), confidentiality (keys/entropy/PUF), availability (fault tolerance/rollback safety), and policy correctness (lifecycle/debug scope).

Out of scope. High-assurance secure elements and smartcards engineered for invasive resistance (different cost/assurance models), as well as full decapsulation/lab-grade invasive attacks. Our focus is on realistic, board-level FIA/SCA conditions and software-assisted preconditions that practitioners can and should design against.

Assumptions. Systems commonly deploy a single production firmware per device class; board designs are cost-sensitive; and product teams value reuse across SKUs. Where DVFS is software-exposed, its operational envelope is assumed to be policy-controlled but not necessarily fault-proof.

1.3. Challenges in Evaluating MCU Suitability for Tokens

Feature–attack coupling. Vendor datasheets enumerate protections (secure boot, flash encryption, debug authentication), but the mitigation mechanics and their coupling to concrete attack preconditions are unevenly documented [8]. Establishing whether a given feature breaks an attacker’s prerequisites often requires cross-referencing silicon behavior, board power integrity, and firmware policy.

Realistic fault models. DVFS-class FIA and analog glitching during boot or crypto can defeat integrity checks unless monitors/resets, brown-out/clock-quality detectors, and retry logic are robust and correctly parameterized [9]. Fault windows are narrow and device-/board-specific, complicating reproducibility and validation.

Lifecycle/debug scoping. Manufacturing and RMA workflows necessitate privileged lifecycle/debug states. If entitlements, scoping, and revocation are permissive—or if rollback counters and error budgets are not atomically enforced—bypass opportunities arise, especially when combined with FIA/SCA [10].

Heterogeneous evidence. Targets, tools, and metrics vary widely across studies. Evidence quality ranges from peer-reviewed replications to single-lab demonstrations with limited parameter disclosure, requiring careful weighting rather than simple vote-counting [11].

1.4. Contributions

Taxonomy and normalization. We standardize terms across vendors, covering TrustZone-M/TEE partitioning, TRNG/DRBG health testing, key-vault isolation, flash/OTP encryption, secure boot chains, lifecycle/debug policies, tamper/monitoring, and certification language (PSA/SESIP/CC).

Attack-to-defense mapping with measurable artifacts. We align concrete vectors (DVFS/EMFI faults; cache/TLB/PMU/thermal/prefetcher channels; lifecycle/debug misuse) to deployable defenses (detection/monitoring, infective checks, partitioning, board-level conditioning) and emphasize observable artifacts such as SUIT manifests, TRNG health tests, lifecycle/debug transcripts, and fault-detection logs.

Evaluation framework for token suitability. Section 5 defines measurable criteria that score resilience along attacker control over power/clock and update surfaces, observable microarchitectural vantage points, fault detectability, rollback resistance, secret-exposure surface, channel SNR, and recoverability under noise.

Practitioner guidance. We distill fault-aware design patterns (boot-time hardening, credential minimization, short-lived manufacturing entitlements, lifecycle segregation) into checklists that complement certification efforts and enable threat-tailored device selection without endorsing specific models.

1.5. Positioning and Relation to Prior Work

Prior work on transient/speculative behaviors, microarchitectural channels, and software-assisted undervolting demonstrates that software and physics interact in ways that can subvert isolation—even absent invasive equipment [12]. Most demonstrations target desktop/server-class SoCs; this study extends the discussion to MCU-class devices, bridging silicon/board/firmware controls with realistic attack preconditions, and providing a framework that evaluates deployability and assurance context rather than only feature presence [13].

1.6. Paper Organization

Section 2 details the survey methodology: information sources, eligibility, screening workflow, coding schema, quality appraisal, and synthesis approach. Section 3 provides background and a threat taxonomy tailored to MCU-class FIA/SCA/invasive techniques and MCU-relevant surfaces. Section 4 offers comparative, cross-vendor device narratives that emphasize deployable controls and measurable artifacts. Section 5 presents the MCU-oriented FIA evaluation framework, discusses design implications and certification alignment, and concludes.

2. Survey Methodology

This section presents an a priori protocol for collecting, screening, and synthesizing evidence on the security of commodity microcontrollers (MCUs) against hardware-assisted threats—especially fault injection (FIA)—and on defenses commonly deployed in IoT/token-class designs. We explicitly scope the search to software-exposed DVFS fault phenomena to motivate the inquiry [14,15,16] and acknowledge converging evidence on microarchitectural transients and side channels (e.g., speculation, port/interconnect contention, prefetchers, PCIe/GPU vectors, and DRAM disturbance) that show how software and physics interact to undermine isolation even on simple cores [17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32]. Methodological guardrails follow established SLR practice and transparent reporting norms [33,34], are grounded in engineering baselines to keep findings actionable [35], and incorporate community policies on artifacts and reproducibility to set technical admission thresholds [36,37,38]. Data/metadata stewardship aligns with FAIR principles [39]. Selection of industry talks adheres to formal briefing requirements to avoid promotional content [40].

Research Questions (RQs)

RQ1. Within 2015–2025, what MCU-class vulnerabilities and attack surfaces are most relevant to FIA/SCA/invasive techniques?

RQ2. Which countermeasures demonstrate verifiable and reproducible effectiveness in MCU/token-class deployments?

RQ3. How do certification/assurance clauses map to measurable artifacts in practice (e.g., SUIT manifests, TRNG health tests, lifecycle/debug transcripts)?

RQ4. How is evidence distributed across MCU families and security primitives, and where are the gaps?

2.1. Information Sources and Search Strategy

Time window. 2015–2025 (inclusive), capturing a decade of MCU-security evolution.

Last update. 28 August 2025.

Channels.

- (1) Peer-reviewed venues (e.g., IEEE/ACM/USENIX/NDSS/IACR) for primary technical evidence.

- (2) Standards/certification materials to normalize assurance language (not as a stand-alone proxy for resilience).

- (3) Curated industry venues (e.g., Black Hat, Hardwear.io) limited to talks/whitepapers with enough technical detail (setup, parameters, measurements) for independent reproduction.

- (4) Vendor technical materials (security advisories, application notes, reference manuals) with concrete configuration guidance (marketing content excluded).

Representative queries (Boolean combinations of MCU primitives with attack/defense terms):

“MCU secure boot” AND (“fault injection” OR glitch OR EMFI)

“TrustZone-M” AND (attestation OR “secure update” OR SUIT)

“flash encryption” AND (bypass OR DFA)

“authenticated debug” OR lifecycle OR “JTAG lock”

“TRNG 800-90B” AND (“ring oscillator” OR “entropy health”)

“PUF” AND (SRAM OR “helper data”) AND (IoT OR MCU)

Snowballing. Forward/backward snowballing from seed papers and standards was applied until no new MCU-relevant technical contributions appeared in the citation neighborhood [37].

Screening and documentation practices follow community reproducibility guidance and briefing policies to maintain rigor and auditability [36,38,39,40]. For inter-rater reliability procedures we adopt well-accepted evidence-synthesis conventions [41]. Entropy-source terminology used in search strings was harmonized to authoritative definitions to reduce ambiguity [42].

2.2. Eligibility Criteria

Population. Commodity or security-enhanced MCUs (e.g., Arm Cortex-M, compact RISC-V) used as a primary protection boundary in IoT/token-class deployments; MCU-centric SoCs where boot/update/crypto/lifecycle subsystems define trust.

Concept. Empirical evidence, analyses, or implementation detail about FIA, side-channel attacks (SCA), invasive techniques, and/or mitigations relevant to secure boot chains; key/flash protection; entropy/PUF; lifecycle/debug; and update/attestation.

Context. Embedded settings where physical proximity and board-level conditions make FIA/SCA realistic and where silicon + board + firmware + process controls determine resilience.

Included study types. Peer-reviewed papers; official standards/certification documents; vendor advisories and application notes; vetted industry talks/whitepapers with auditable technical substance. English only.

Exclusions. Dedicated secure elements/TPMs and smartcards engineered for high-cost invasive resistance (different cost/assurance models); promotional content without technical detail; non-English sources lacking reliable technical translation; informal materials without reproducible detail (e.g., no attack parameters, measurement methods, or configuration listings).

Outcomes. At least one of (a) concrete attack/mitigation evidence on MCU-class platforms; (b) implementation/configuration details enabling replication; (c) standards-aligned control descriptions tied to measurable artifacts (e.g., SUIT manifests, TRNG health tests, lifecycle/debug transcripts).

2.3. Screening and Selection Workflow

Records were de-duplicated by DOI/title/author/venue/year. Two reviewers independently screened titles/abstracts against Section 2.2; candidates then underwent full-text assessment using a structured form aligned with Section 2.4. Disagreements were resolved by a third reviewer. Cohen’s κ was computed at both title/abstract and full-text stages to quantify agreement. To keep the narrative focused on technical synthesis, key counts (N0: retrieved; N1: de-duplicated; N2: title/abstract includes; N3: full-text assessed; N4: included) and a PRISMA-style flow diagram appear in an appendix, together with a transparent audit trail of inclusion/exclusion reasons.
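As an illustration of the agreement statistic mentioned above, the following is a minimal sketch of Cohen's κ for two reviewers. The function name and the screening decisions are hypothetical and are not taken from the survey's data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item list")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical title/abstract screening decisions for six records.
reviewer_1 = ["include", "exclude", "include", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.3f}")
```

Running the same function separately on the title/abstract and full-text decisions gives the two per-stage values.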

2.4. Data Extraction and Coding

For each included item we extracted MCU family and core; clocking and memory/bus characteristics; security primitives (TrustZone/TEE, secure boot chain, flash/key protection, TRNG/DRBG, PUF, lifecycle/debug policies, tamper/monitors); update/attestation controls; and stated certifications (when applicable).

Normalization schema (five-tuple) for cross-study aggregation without over-fitting to any single vendor/toolchain:

Mechanism: FIA/SCA/invasive/transient/microarchitectural.

Carrier: voltage/clock/EM/laser/cache/PMU/prefetcher/…

Observable: control-flow upset, MAC/tag mismatch, entropy bias, PUF BER drift, timing/power leakage.

Surface: boot, key unwrap/flash, TRNG, PUF, lifecycle/debug, application crypto.

Defense: detection/monitoring, infective, partitioning, board-level conditioning.
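One possible encoding of the five-tuple is sketched below, assuming each coded finding is stored as an immutable record so that cross-study counts are simple aggregations. The class name `Finding` and the three example records are hypothetical; only the field names and value vocabularies come from the schema above.

```python
from collections import Counter
from dataclasses import dataclass

# Mechanism vocabulary taken from the schema; other fields are left free-form.
MECHANISMS = {"FIA", "SCA", "invasive", "transient", "microarchitectural"}


@dataclass(frozen=True)
class Finding:
    mechanism: str
    carrier: str
    observable: str
    surface: str
    defense: str

    def __post_init__(self):
        # Reject codes outside the agreed vocabulary at extraction time.
        if self.mechanism not in MECHANISMS:
            raise ValueError(f"unknown mechanism: {self.mechanism}")


# Hypothetical coded findings, for illustration only.
findings = [
    Finding("FIA", "voltage", "control-flow upset", "boot", "detection/monitoring"),
    Finding("FIA", "EM", "MAC/tag mismatch", "key unwrap/flash", "infective"),
    Finding("SCA", "PMU", "timing/power leakage", "application crypto", "partitioning"),
]

by_mechanism = Counter(f.mechanism for f in findings)
by_surface = Counter(f.surface for f in findings)
print(by_mechanism, by_surface)
```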

An appendix provides an MCU-specific terminology concordance (e.g., microarchitectural includes prefetch/cache manipulations that induce timing anomalies; transient includes short-duration voltage/clock disturbances that flip control flow). Entropy-source and health-testing terms are harmonized to authoritative recommendations [42].

Evidence tiers (reproducibility/verification):

T3: peer-reviewed with artifacts/data and at least one independent replication;

T2: peer-reviewed or official advisory with sufficient technical detail to replicate;

T1: vetted talk/whitepaper with concrete technique but limited replication.

Claims lacking sufficient technical detail were excluded. When a single study met multiple criteria, the highest verifiable tier was assigned for synthesis.

2.5. Quality Appraisal and Risk of Bias

Each study was scored on four axes (0 = insufficient, 1 = partial, 2 = sufficient):

Internal validity: controls/ablations, calibrated timing/power resolution, noise modeling, statistical power.

Reproducibility/verification: availability of artifacts/data/scripts/configurations; corroboration by independent studies or vendor bulletins.

External validity: applicability to MCU/token contexts; explicit assumptions/limits.

Bias risk: selective reporting, idealized lab conditions (power integrity, temperature) not representative of field settings, over-generalization beyond tested silicon/revisions.

A weighted sum W = I + R + E − 0.5·B, where I, R, E, and B are the internal-validity, reproducibility, external-validity, and bias-risk scores, was mapped to tiers: W ≥ 5 → T3; 3 ≤ W < 5 → T2; W < 3 → T1. Lower-quality results were used cautiously and only when consistent with higher-tier evidence. Scores informed narrative weighting in later sections; no single numeric meta-aggregate was enforced.
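The scoring rule can be sketched as follows. The function names are hypothetical, and the sketch assumes that a higher bias score means more bias risk, since that axis is subtracted.

```python
def weighted_score(internal, reproducibility, external, bias):
    """W = I + R + E - 0.5*B, with each axis scored 0, 1, or 2."""
    for name, value in (("internal", internal), ("reproducibility", reproducibility),
                        ("external", external), ("bias", bias)):
        if value not in (0, 1, 2):
            raise ValueError(f"{name} score must be 0, 1, or 2")
    return internal + reproducibility + external - 0.5 * bias


def evidence_tier(w):
    """Map a weighted score onto the T1..T3 tiers using the stated cut-offs."""
    if w >= 5:
        return "T3"
    if w >= 3:
        return "T2"
    return "T1"


# Hypothetical appraisals: (I, R, E, B)
for scores in [(2, 2, 2, 0), (2, 1, 1, 1), (1, 1, 1, 2)]:
    w = weighted_score(*scores)
    print(scores, w, evidence_tier(w))
```

Under this rule W ranges from −1 to 6, so T3 requires full marks on at least two of the three positive axes.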

2.6. Synthesis Methods

Given heterogeneity in targets, tooling, and effect measures, we employed narrative synthesis rather than meta-analysis. Results are organized along three axes: (i) threat taxonomy (FIA/SCA/invasive) and MCU-relevant surfaces; (ii) mappings from attacks to defenses with measurable artifacts; and (iii) cross-vendor device narratives emphasizing deployable controls and assurance context over checklist comparisons. Two predefined subgroup analyses were performed: by MCU family/ISA and security primitive, and by defense type (detection, infective, partitioning, board-level conditioning). A sensitivity analysis repeated the main conclusions using T2 + T3 evidence only to assess robustness. Descriptive statistics (e.g., proportions by attack class or targeted primitive) are discussed qualitatively to avoid implying uniform metrics across diverse setups.

2.7. Data Management and Reproducibility

We maintain a de-duplicated bibliography with screening decisions and exclusion reasons, a structured codebook, and extraction sheets. Appendices provide the PRISMA-style flow diagram, evidence-tier assignments, and aggregation tables needed to verify selection and synthesis logic.

Governance follows transparency and reusability principles, without distributing copyrighted full texts; artifact availability and replication status are reflected in tiering and carried into the background/taxonomy (Section 3), device comparisons (Section 4), and the FIA evaluation framework (Section 5).

Entropy-source metrics and terminology remain consistent throughout the process [42].

3. Background and Theory

This section consolidates the core concepts and evaluation axes for MCU/SoC-based token security and ties them to publishable, auditable artifacts that engineering teams can reproduce on a bench. We align terminology with widely adopted guidance on entropy sources, firmware resiliency, cryptographic module boundaries, and IoT/token assurance so that a single body of evidence can satisfy multiple schemes.

Deterministic DRBG integration and health management must follow companion recommendations to avoid silent degradation in constrained firmware workflows [43].

Platform firmware resiliency principles clarify what must survive resets, power anomalies, and recovery paths and how these behaviors are verified end-to-end [44].

For entropy sources, evaluators should combine population characterization with on-device health tests consistent with established evaluation methodologies for TRNGs [45].

Where a cryptographic boundary is claimed, roles, zeroization behavior, and lifecycle interactions must be articulated in the language of module validation programs to avoid ambiguous scoping in MCU settings [46].

Token-oriented assurance vocabularies help normalize claims about roots of trust, lifecycle/debug discipline, and attack potential so that evidence maps cleanly to certification language without vendor-specific ambiguity [47].

Baseline IoT security guidance further constrains update, configuration, and vulnerability-handling practices that intersect with token deployments at scale [48].

3.1. Cryptography and Security Requirements for Tokens

Five Pillars and Auditable, Engineering-Grade Artifacts

We map token security pillars to MCU/SoC controls and to publishable evidence so reviewers can check claims without privileged access. Update and rollback guarantees must be machine-verifiable through standard manifests and tools [49]. Attestation should bind nonce, measurements, lifecycle/debug state, and rollback counters, with public verifier scripts that reproduce pass/fail outcomes deterministically [50].

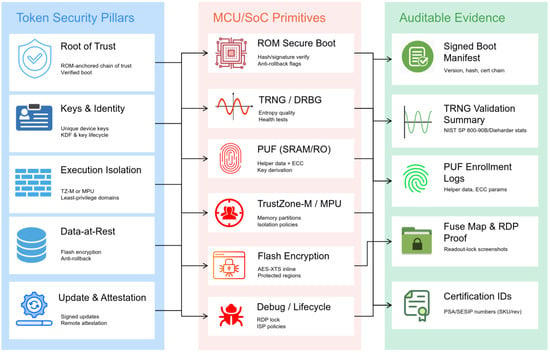

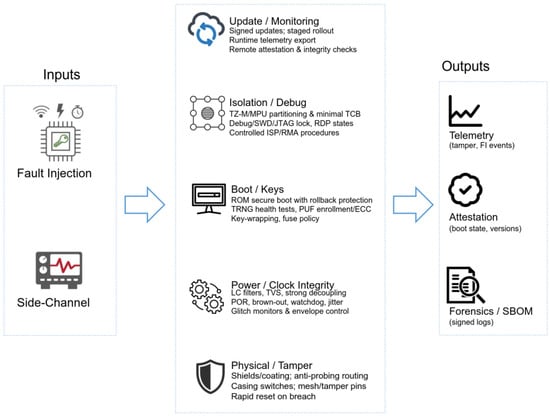

Before diving into mechanisms, we map high-level security pillars to MCU/SoC controls and to the publishable evidence reviewers should expect. Figure 1 conceptually links pillars → primitives → artifacts.

Figure 1.

Token pillars–evidence map.

- Confidentiality. Robust entropy (TRNG/DRBG) for key generation; device-bound KEKs (PUF/fuses); flash/OTP encryption; debug locked by default, with health failures that halt keygen rather than falling back. Population characterization and run-time health logs must accompany releases.

- Integrity. Immutable RoT or verifiably secure first stage; chained secure boot; anti-rollback via monotonic counters; measurements and counter states carried inside attestation artifacts that tools can replay deterministically.

- Availability. Brown-out and clock-quality monitors, watchdogs, and safe-restart strategies with documented thresholds, detection latencies, and fault injection (FI) behavior across calibrated envelopes.

- Updatability. Authenticated, policy-checked updates with machine-verifiable manifests and transparent failure handling; third parties should reproduce verifier outcomes from vendor-published artifacts.

- Attestation. Nonce-bound evidence of firmware state, rollback counters, lifecycle/debug status, and relevant monitors, conveyed in standardized structures that are script-checkable by external verifiers.
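To make the attestation pillar concrete, the sketch below shows a script-checkable verifier that binds the nonce, firmware measurement, lifecycle/debug state, and rollback counter under one authentication tag. It is an illustration under stated assumptions: HMAC-SHA256 with a shared key stands in for the device's attestation signature, JSON stands in for a standardized token encoding, and all function names, field names, and values are hypothetical.

```python
import hashlib
import hmac
import json
import secrets


def make_evidence(key, nonce, measurement, lifecycle, debug_locked, rollback_counter):
    """Device side: bind all claims under one tag (HMAC stands in for a signature)."""
    claims = {
        "nonce": nonce,
        "measurement": measurement,
        "lifecycle": lifecycle,
        "debug_locked": debug_locked,
        "rollback_counter": rollback_counter,
    }
    payload = json.dumps(claims, sort_keys=True).encode()
    return {"claims": claims, "tag": hmac.new(key, payload, hashlib.sha256).hexdigest()}


def verify_evidence(key, evidence, nonce, measurement, min_rollback):
    """Verifier side: returns (ok, reason); same input always gives the same result."""
    payload = json.dumps(evidence["claims"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, evidence["tag"]):
        return False, "bad tag"
    claims = evidence["claims"]
    if claims["nonce"] != nonce:
        return False, "stale or replayed nonce"
    if claims["measurement"] != measurement:
        return False, "unexpected firmware measurement"
    if claims["lifecycle"] != "PROD" or not claims["debug_locked"]:
        return False, "lifecycle/debug policy violated"
    if claims["rollback_counter"] < min_rollback:
        return False, "rollback counter below minimum"
    return True, "ok"


# Hypothetical exchange: verifier issues a nonce, device answers with evidence.
key = secrets.token_bytes(32)
nonce = secrets.token_hex(16)
golden = hashlib.sha256(b"hypothetical firmware image").hexdigest()
evidence = make_evidence(key, nonce, golden, "PROD", True, 7)
result = verify_evidence(key, evidence, nonce, golden, min_rollback=7)
replayed = verify_evidence(key, evidence, secrets.token_hex(16), golden, min_rollback=7)
print(result, replayed)
```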

To turn this mapping into actionable steps for engineering teams, Table 1 associates each pillar with concrete MCU/SoC primitives and with the verification evidence that should be published.

Table 1.

Pillars–primitives–evidence checklist.

Implementation notes and common pitfalls:

- TRNG/DRBG: never fail silently; log SP 800-90B health-test failures and halt key generation.

- Boundary and zeroization: define a cryptographic boundary (FIPS 140-3) and publish standardized zeroization tests for lifecycle/RMA.

- Secure boot and rollback: include anti-rollback counters in attestation; ship verifier code for SUIT and chain-integrity checks.

- Lifecycle/debug: debug off by default once past DEV, with access only after authentication; unlock permitted in RMA; lifecycle transitions DEV→PROD→RMA irreversible; for unlocked devices, publish fuse maps and release transcripts.

- Tamper/FI observers: publish thresholds, detection latency characteristics, and failure logs; report behavior under N attempts.
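The first note above (never fail silently, log the failure, halt key generation) can be sketched with the repetition count test from SP 800-90B. The cutoff formula C = 1 + ⌈−log2(α)/H⌉ follows that recommendation; the function names, the sample data, and the SHA-256 step used as placeholder conditioning are assumptions for illustration, not a vetted DRBG.

```python
import hashlib
import math


class HealthTestFailure(Exception):
    """Raised when the entropy source fails an on-device health test."""


def rct_cutoff(min_entropy_per_sample, alpha_exponent=20):
    """Repetition count cutoff C = 1 + ceil(-log2(alpha) / H), alpha = 2**-alpha_exponent."""
    return 1 + math.ceil(alpha_exponent / min_entropy_per_sample)


def repetition_count_test(samples, cutoff):
    """Fail as soon as any value repeats `cutoff` times in a row."""
    last, run = None, 0
    for sample in samples:
        if sample == last:
            run += 1
        else:
            last, run = sample, 1
        if run >= cutoff:
            raise HealthTestFailure(f"value {sample} repeated {run} times (cutoff {cutoff})")


def generate_key(samples, cutoff, log):
    """Log the failure and halt; never fall back to a weaker source."""
    try:
        repetition_count_test(samples, cutoff)
    except HealthTestFailure as exc:
        log.append(str(exc))
        raise
    # Placeholder conditioning for the sketch only.
    return hashlib.sha256(bytes(samples)).digest()


log = []
cutoff = rct_cutoff(8.0)             # assumes 8 bits of min-entropy per byte sample
key = generate_key(list(range(32)), cutoff, log)
try:
    generate_key([7] * 10, cutoff, log)   # hypothetical stuck-at source
except HealthTestFailure:
    print("key generation halted:", log[-1])
```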

3.2. Hardware Attack Taxonomy

We summarize prior taxonomies of adversarial pressure on tokens into FIA, SCA, and invasive attacks. The first two dominate practical risk for MCU/SoC tokens; the third informs scoping and completeness statements.

Table 2 contrasts the access model, cost, and common goals of each class side by side, providing a concise reference when studying the threat model.

Table 2.

FIA vs. SCA vs. invasive summary.

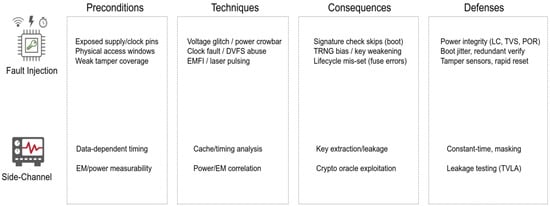

To anchor the taxonomy visually and tie it to concrete failure modes in tokens, Figure 2 illustrates the attack classes, their prerequisites, the most likely consequences, and the defensive hooks that can be published and independently verified.

Figure 2.

Hardware attack taxonomy overview.

3.2.1. Fault Injection Attacks (FIA)

Prior work shows that voltage, clock, and EM transients can induce instruction skips or state corruptions near signature checks and key schedules, subverting secure boot or crypto [54]. Software-assisted DVFS abuse (e.g., CLKSCREW, Plundervolt, VoltJockey) reduces reliance on probes by misusing power-management interfaces, contrary to TEE isolation assumptions [58]. To make FI analysis practical for engineering teams, Table 3 lists the FI vectors, the windows in which each is most effective, the typical effects, and what should be monitored and documented.

Table 3.

FI vectors, windows, effects, instrumentation.

Mitigations and measurable hooks: Monitors must be fast and well tuned. Defensive measures should include temporal/logical redundancy around critical checks, micro-jitter that desynchronizes the FI window, and halt-on-failure with a forensic log.

DVFS limits must be enforced in hardware: operating-performance points (OPPs) should be held in privileged registers that are locked (e.g., by BIOS/microcode), and vendors should publish negative tests demonstrating that software cannot bypass these security boundaries.

Low-cost setups (e.g., ChipWhisperer) are sufficient to produce bypass heatmaps inexpensively, in a form that reviewers can reproduce.

3.2.2. Side-Channel Attacks (SCA)

Power/EM/timing channels remain effective if crypto is not constant-time or lacks masking/shuffling; near-field EM and CPA are standard tools. In SoC-class tokens, shared microarchitectural resources (caches, PMU) also leak if secure and non-secure code co-locate.

To summarize the most influential channels and matching remedies in embedded deployments, Table 4 lists practical combinations and the necessary controls.

Table 4.

Microarchitectural channels and mitigations.

Artifacts to publish. CPA leakage plots; EM traces; cache-timing distributions with no secret-dependent separation; PMU access-control tests showing counters are gated/disabled for secure tasks. If MCU designs lack the shared features, document the negative tests and rationale.

3.2.3. Invasive Attacks (Scope and Completeness)

Invasive methods, such as decapsulation, microprobing, FIB/laser editing, and optical faulting, are typically out of scope for inexpensive tokens, but the scope statement must say so explicitly. Defenses combine active measures (sensing grids, temperature/voltage sensors) with passive ones (shields, epoxy coating). When mapping to SESIP/CC, state which attack potentials (equipment, cost, time) are excluded and justify the exclusion, and state which are considered in scope alongside the FI/SCA mitigation strategies.

3.3. Illustrative Cases (Grounding FIA and SCA Consequences)

It is useful to tie the taxonomy to failure modes that recur in practice. The following cases draw on results from prior research that do not depend on third-party involvement, and they state specifically what vendors should release to demonstrate robustness.

3.3.1. FI Bypasses of Boot/Rollback

Multiple studies have demonstrated that a single instruction skip or a small byte corruption near the cryptographic verification gates (e.g., signature checks, anti-rollback comparisons, attestation logic) can defeat the secure boot chain even when the cryptography itself is correct.

The issue is worse when DVFS guardrails allow software to induce a remote-like FI (without probes), as seen in CLKSCREW, Plundervolt, and VoltJockey.

To make bypass claims verifiable, vendors should publish bypass probability heatmaps (glitch amplitude × delay), monitor behavior (detection latency vs. fault magnitude), and negative tests demonstrating that post-patch software can no longer cross OPP guardrails.
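A bypass-probability heatmap of this kind is an aggregation over a parameter sweep. The sketch below assumes each glitch attempt is logged as a hypothetical (amplitude, delay, outcome) record; the units, outcome labels, and values are illustrative and do not correspond to any specific tool or device.

```python
from collections import defaultdict


def bypass_heatmap(records):
    """Return {(amplitude, delay): bypass probability} from per-attempt records."""
    attempts = defaultdict(int)
    bypasses = defaultdict(int)
    for amplitude, delay, outcome in records:
        cell = (amplitude, delay)
        attempts[cell] += 1
        if outcome == "bypass":
            bypasses[cell] += 1
    return {cell: bypasses[cell] / attempts[cell] for cell in attempts}


def worst_cell(heatmap):
    """Cell with the highest bypass probability (the point to retest after a fix)."""
    return max(heatmap, key=heatmap.get)


# Hypothetical sweep log: amplitude in mV of droop, delay in clock cycles after reset.
records = [
    (300, 120, "normal"), (300, 120, "bypass"),
    (300, 121, "detected"),
    (350, 120, "bypass"), (350, 120, "bypass"),
    (350, 121, "normal"),
]
heatmap = bypass_heatmap(records)
for cell in sorted(heatmap):
    print(cell, f"{heatmap[cell]:.2f}")
print("worst cell:", worst_cell(heatmap))
```

Publishing the raw attempt log alongside the aggregated grid lets reviewers recompute every cell rather than trust a rendered image.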

3.3.2. DFA-Assisted Key Recovery

Single-fault DFA typically recovers the AES key by corrupting late-round state bytes; RSA can be defeated by transient changes to the modulus or exponent during critical operations. To demonstrate infeasibility, teams must show that FI detection halts execution before the vulnerable windows are reached and that additional temporal redundancy protects the key schedules and final rounds, and they should also publish negative CPA results.
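To illustrate why a single fault can be enough, the sketch below uses the well-known RSA-CRT fault attack (often called the Bellcore attack), which is a related textbook variant rather than the modulus/exponent faults named above. The primes are toy values, the fault is modelled as a one-bit flip in one CRT half, and all names are hypothetical. The `sign_checked` function shows a verify-before-release countermeasure in the spirit of the redundancy discussed above.

```python
from math import gcd

# Toy textbook parameters (real keys are far larger). Requires Python 3.8+ for pow(x, -1, m).
p, q, e = 61, 53, 17
N = p * q
d = pow(e, -1, (p - 1) * (q - 1))
dp, dq, q_inv = d % (p - 1), d % (q - 1), pow(q, -1, p)


def sign_crt(m, flip_sp_bit=False):
    """RSA-CRT signature; optionally models a fault as a 1-bit flip in s_p."""
    sp, sq = pow(m, dp, p), pow(m, dq, q)
    if flip_sp_bit:
        sp ^= 1  # fault model: single transient bit flip in one CRT half
    h = (q_inv * (sp - sq)) % p  # Garner recombination
    return sq + h * q


def sign_checked(m, flip_sp_bit=False):
    """Countermeasure sketch: verify the result before release, halt on mismatch."""
    s = sign_crt(m, flip_sp_bit)
    if pow(s, e, N) != m % N:
        raise RuntimeError("fault detected: signature withheld")
    return s


m = 65
good = sign_crt(m)
faulty = sign_crt(m, flip_sp_bit=True)
# The faulty signature is still correct mod q but wrong mod p, so the gcd exposes q.
recovered = gcd((pow(faulty, e, N) - m) % N, N)
print("recovered factor:", recovered, "cofactor:", N // recovered)
```

The attacker needs only one faulty signature and the public key, which is why the check in `sign_checked` must itself be protected against a second, skip-style fault.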

3.3.3. SCA Pitfalls in SoC Tokens

When trusted logic shares a cache with untrusted code, Flush + Reload and Prime + Probe produce high-fidelity signals; PMU power proxies (e.g., PLATYPUS) also leak execution patterns. Beyond constant-time cryptography, practical hardening requires cache partitioning or disabling for secure tasks and PMU gating by privilege level. Reviewers should see cache-timing experiments with no statistically significant separation for secret paths and PMU access-control tests that block access to secure-task counters.
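One common way to test for "no statistically significant separation" is Welch's t-test between two timing populations, as used in TVLA-style leakage assessment. The sketch below is illustrative: the timing values are hypothetical, the |t| > 4.5 threshold is a widely used convention assumed here rather than a requirement from the text, and real campaigns use far more measurements than eight per class.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic for two samples with possibly unequal variances."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    stderr = math.sqrt(var_a / len(a) + var_b / len(b))
    if stderr == 0.0:
        raise ValueError("both samples have zero variance")
    return (statistics.fmean(a) - statistics.fmean(b)) / stderr


def leaks(a, b, threshold=4.5):
    """True if the two timing distributions are separated beyond the threshold."""
    return abs(welch_t(a, b)) > threshold


# Hypothetical cycle counts for two secret-dependent input classes.
class_0 = [100, 101, 99, 100, 102, 98, 100, 101]
class_1_hardened = [100, 99, 101, 100, 98, 102, 101, 100]   # same distribution
class_1_leaky = [t + 5 for t in class_0]                    # secret-dependent delay

print("hardened leaks:", leaks(class_0, class_1_hardened))
print("leaky leaks:", leaks(class_0, class_1_leaky))
```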

3.4. Standards and Assurance Context

Building a single, script-verifiable artifact pack—boundary/zeroization proofs, lifecycle/debug transcripts, monitor thresholds/latencies, attestation/verifier outputs, and FI/SCA parameter sweeps—keeps claims portable across schemes and reduces redundant effort for product teams [67].

Heterogeneous trusted-execution work highlights that shared interconnects and schedulers in mixed-IP SoCs must be treated as part of the attack surface when mapping MCU-level claims; the same discipline applies when an MCU offloads to accelerators [68].

Engineering teams often face multiple, separate assurance requests (PSA, SESIP, CC, cryptographic-module requirements). To avoid building redundant artifacts, we suggest creating a single artifact pack that satisfies all of them. Table 5 maps update and attestation controls to concrete evidence so that evidence produced once can be reused.

Table 5.

Update/attestation controls to evidence.

Lifecycle and debug discipline directly affect auditability and field risk; requirements and evidence should be independently script-checked by external stakeholders [69]. Isolating these controls and the specific documents released allows external stakeholders to verify the policy programmatically. As shown in Table 6, the requirements include debug disabled by default, authenticated unlock, rate-limited RMA access, and irreversible lifecycle transitions with attested state.

Table 6.

Lifecycle and debug requirements and evidence.
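The requirements summarized for Table 6 lend themselves to a programmatic check. The sketch below assumes a policy exported as a flat dictionary; every field name and the RMA limit of three unlocks per day are hypothetical, and "default of debug" is read here as debug closed by default.

```python
# Hypothetical field names for an exported lifecycle/debug policy.
REQUIRED_FLAGS = {
    "debug_default_closed": True,
    "debug_auth_required": True,
    "lifecycle_irreversible": True,
    "lifecycle_state_attested": True,
}


def check_policy(policy, max_rma_unlocks_per_day=3):
    """Return a list of findings; an empty list means the policy passes."""
    findings = []
    for field, expected in REQUIRED_FLAGS.items():
        if policy.get(field) is not expected:
            findings.append(f"{field} must be {expected}")
    limit = policy.get("rma_unlocks_per_day")
    if not isinstance(limit, int) or not 0 <= limit <= max_rma_unlocks_per_day:
        findings.append("rma_unlocks_per_day missing or above the allowed limit")
    return findings


if __name__ == "__main__":
    policy = {
        "debug_default_closed": True,
        "debug_auth_required": True,
        "lifecycle_irreversible": True,
        "lifecycle_state_attested": False,
        "rma_unlocks_per_day": 1,
    }
    for finding in check_policy(policy):
        print("FAIL:", finding)
```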

Where DVFS is present, governance must be demonstrated, not asserted: show that software cannot violate operating-performance points (OPPs), lock privileged registers, and publish monitor coverage/latency under calibrated FI with regression tests proving that prior proofs-of-concept no longer work post-patch [70].

For tokens built on DVFS-enabled SoCs, governance must be proven. Engineers should include negative tests demonstrating that hardware OPP enforcement is effective and that software lacks the privilege to override it, along with coverage and latency graphs. A practical list of checkpoints is given in Table 7.

Table 7.

DVFS governance checkpoints and artifacts.

3.5. Consolidated Engineering Guidance (What to Build, What to Publish)

To make assertions that are both credible and portable across PSA/SESIP/CC, we provide a single reference for controls, artifacts, and acceptance rules. It is intended both as a means of verifying that a build is constructed as intended and as a template for release. Refer to Table 8 for the full matrix.

Table 8.

Consolidated controls, artifacts, criteria.

3.6. Relation to Literature and Threat-Model Calibration

Our synthesis mirrors recurring results in the literature on FIA windows and DFA feasibility, software-assisted FI via DVFS that undermines enclave assumptions, and SCA in SoC settings with shared caches/PMU. PSA/SESIP provide IoT/MCU-appropriate assurance language; CC contributes attack potential and scoping vocabulary; FIPS 140-3 clarifies crypto-module boundaries. The goal is not to introduce a new framework, but to aggregate controls and artifacts into a portable, auditable checklist consistent with these bodies of work.

4. Comparative Analysis of Selected MCUs

This section provides a security-oriented comparison of five microcontrollers commonly considered for low-cost security tokens and small-scale IoT devices: STMicroelectronics STM32U585, NXP LPC55S69, Nordic nRF54L15, Espressif ESP32-C6, and Renesas RA8M1. We assess CPU architecture and frequency, integrated security features (TrustZone-M where applicable, TRNG/DRBG, secure boot, flash/key protection, PUF), lifecycle/debug policies, indicative certification posture, and price bands.

4.1. Scope and Methodology

Our primary concern is hardware and protections that can be deployed without extra security chips (e.g., a discrete secure element or TPM-class co-processor), because BOM pressure often excludes external controllers from low-cost tokens. Selection criteria: (i) Arm Cortex-M or RISC-V cores with proven toolchains and active ecosystems; (ii) first-party security blocks with on-die flash/key protection; (iii) availability of TRNG/DRBG and lifecycle controls; and (iv) publicly documented certifications where applicable (PSA Certified/Common Criteria) [71,72]. Price bands are based on public-distributor volume pricing and are indicative only; they differ by region, package, and order size.

Selection rationale (why these five). We select STM32U585, LPC55S69, nRF54L15, ESP32-C6, and RA8M1 to span (i) heterogeneous cores (Armv8-M TZ-M vs. RISC-V without TZ-M); (ii) different identity/storage baselines (SRAM-PUF vs. eFuse keying); (iii) varying on-die security blocks (SCE/PRINCE/OTF flash encryption); (iv) lifecycle/debug mechanisms with public collateral; (v) availability and tooling maturity; and (vi) distinct price bands. This coverage creates contrasting FI/SCA trade-offs without forcing an external secure element.

4.2. Comparative Summary Table

Before moving on to device narratives, we summarize essential attributes across two compact tables to improve readability. Table 9 concentrates on compute and core security capabilities—micro-architecture, maximum clock frequency, PUF availability and the presence of TrustZone, TRNG, and secure boot. Table 10 complements this with storage and lifecycle protections, certification signals, and indicative price bands. Collectively, these five MCUs occupy distinct points on the security–cost–performance frontier—each prioritizing different security features relative to performance and price.

Table 9.

Comparative summary—compute and core security.

Table 10.

Comparative summary—storage, lifecycle and certification.

Note: Values reflect publicly accessible datasheets and technical references at the time of writing; certification level and scope depend on the specific SKU and version. Price bands are based on public-distributor volume pricing. “TrustZone-M” denotes Armv8-M TrustZone. “Helium” denotes the Arm M-Profile Vector Extension (MVE).

For deployments with moderate security requirements, our analysis suggests that the Nordic nRF family is the most practical choice, technically and economically, for a physical token that stores keys. This follows from the attack-attribute mapping developed in this work: nRF devices offer a comprehensive set of isolation attributes and lifecycle controls that match non-invasive FI/SCA threat models while keeping integration effort and testing time acceptable for a broad market. In practical terms, once the debug/RMA interfaces are permanently disabled, the secure boot and key management policies are enforced, and basic FI hardening is applied at the board level (power/clock filtering, temporal margins, fault detection), the remaining risk profile is compatible with consumer and enterprise “moderate-assurance” goals.

4.3. Device-Level Analyses

4.3.1. STM32U585

The STM32U5 family targets low-power designs with substantial security: TrustZone-M partitioning, on-chip TRNG, AES-256 on-the-fly (OTF) flash decryption, ROM-based secure boot, and readout protection (RDP). Public collateral covers secure provisioning and boot chain configuration across multiple part numbers [73,74].

Security posture. Entropy availability, a mature ROM boot chain, and production-grade lifecycle controls are the principal strengths. However, FI windows around verification logic are a cross-vendor risk; secure ROM alone does not imply FI resistance. Practical designs should pair silicon features with board-level countermeasures (filtering/decoupling/TVS, tight supply supervision) and lock RDP Level 2 as late as possible in manufacturing but before field exposure. Representative bypass narratives in the literature motivate these practices [75,76].

When appropriate. Mid-range tokens that require signed updates, face moderate physical exposure, and need TZ-M isolation for crypto stacks.

4.3.2. NXP LPC55S69

LPC55S69 remains a capable M33-class security platform. Its SRAM-PUF enables device-unique keys without long-term NVM storage (valuable against forensic reads), with helper-data/ECC flows documented by the vendor [77,78]. PRINCE inline region encryption provides low-latency protection for selected flash regions [79], and the ROM secure boot chain is well described. CASPER (big-number) and PowerQuad DSP accelerate ECC/RSA and signal processing.

Trade-offs. SCA robustness varies if developers bypass vendor engines or use non-constant-time software paths; analog tamper protection is more modest than in high-end secure controllers. For “near-secure” MCU builds, the PUF-anchored identity + PRINCE + lifecycle controls + family-level PSA coverage form a practical baseline [80].

When appropriate. Tokens prioritizing unclonability via PUF and low-latency encrypted regions; cost-sensitive builds with a mature ecosystem.

4.3.3. Nordic nRF54L15

The nRF54L15 emphasizes ultra-low-power wireless with secure boot, robust separation between protocol and application domains, and mature radio/software ecosystems. Public collateral for the nRF54L line highlights multi-domain partitioning and secure key services integrated with radio scheduling [81]. PSA certification is well represented across Nordic families (e.g., nRF5340), informing lifecycle/debug expectations and attestable posture [82].

Trade-offs. Compute headroom is tuned for energy efficiency rather than heavy cryptography; analog hardening is limited; no public PUF. For radio-centric, low-duty-cycle tokens the match is strong; FI-intense environments may need external conditioning or companion parts.

When appropriate. BLE tokens, low-duty authenticators, and nodes prioritizing radio posture over raw CPU performance.

USB note. nRF54L15 lacks native USB; a USB token needs an external PHY/bridge or a different SoC. A close family variant (nRF54LM20A) with HS-USB was difficult to procure at writing; nRF5340 serves as a practical USB-capable baseline with TZ-M, hardware crypto, and PSA-style lifecycle controls under a similar threat model [82].

4.3.4. Espressif ESP32-C6

ESP32-C6 combines a single-core RISC-V (~160 MHz) with Wi-Fi 6 and BLE 5.3 and offers a strong price/performance ratio. Its security layer comprises RSA-based secure boot, AES-XTS flash encryption, eFuse keying, HMAC, and TRNG, with flows documented in the TRM and IDF guides [83,84]. Debug/JTAG can be disabled and ROM flows support anti-rollback policies.

Trade-offs. No native PUF; limited analog tamper sensing; and no TrustZone-M (not applicable to this RISC-V core). With strict lifecycle policy, MPU/privilege isolation, constant-time crypto, code hardening, and physical FI conditioning, the C6 achieves a practical “good-enough” posture for cost-optimized tokens.

When appropriate. Cost-efficient authenticators, badges, and mass-scale IoT where SB + flash encryption + eFuse keying suffice.

4.3.5. Renesas RA8M1

At the high end, RA8M1 integrates Arm Cortex-M85 (with Helium vector extension) up to ~480 MHz. The Secure Crypto Engine (SCE) supports key wrap, secure key storage, and crypto acceleration, and is positioned as an on-die foundation for secure development [85,86]. Some SKUs expose tamper pins and voltage/time monitors; PUF is not listed as native.

Trade-offs. Pricing is premium, but headroom for protocol stacks and hardened cryptography reduces the need for external accelerators. For designs needing TZ-M isolation, high-throughput crypto, and long lifecycles, RA8M1 is compelling.

When appropriate. Premium tokens with TZ-M compartmentalization and abundant compute where lifecycle and performance justify cost.

4.4. Threat-Driven Discussion

Fault Injection (FI). All five families implement ROM-anchored secure boot with TRNG support, but immunity to voltage/clock glitches ultimately depends on timing slack, monitors, and board-level conditioning.

Designs should add randomized timing, redundant checks (e.g., double verification via diverse code paths), robust power supervision, and envelope protection (TVS/LC, fast POR retries). Devices with tamper pins and sag monitors should enable and calibrate them; production logging/locking behavior must balance DoS risk and bypass prevention.

Side-Channel Leakage (SCA). Only a subset of paths are side-channel hardened by default. Software AES/ECC must be constant-time/masked and validated under realistic EM/CPA conditions; cache-timing pitfalls and power proxies remain relevant in MCU/SoC settings. Use vendor engines where available and verify residual leakage empirically.

Lifecycle and Debugging. All devices support debug locks (JTAG/SWD, lifecycle states, RDP). Production flows should enforce irreversible lock after release with controlled RMA/unlock transcripts to maintain auditability and avoid CWE-1191 class errors (improper debug access control).

Key at Rest and Identity. Devices without PUFs rely on eFuse-rooted keying and key-wrap; PUF-anchored identities (e.g., SRAM-PUF) reduce cloning risk under invasive reads but require robust enrollment, helper-data protection, and error correction, aligned with random bit generation recommendations (RBG) for key material quality [87].

PUF schemes and operational caveats (stability, BER/temperature drift) should be addressed in enrollment and lifecycle handling [88].

Secure Upgrade. Verified delivery and rollback protection (e.g., SUIT manifests, monotonic version counters) are essential for token-class longevity [88].
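The interaction between verified delivery and a monotonic version counter can be sketched as follows. This is a simplified model, not a SUIT implementation: HMAC-SHA256 stands in for the asymmetric signature a real update pipeline would use, and the `UpdateGate` class and its field names are hypothetical.

```python
import hashlib
import hmac


def sign(key, version, image):
    """Stand-in authenticator: HMAC-SHA256 over version || image."""
    return hmac.new(key, version.to_bytes(4, "big") + image, hashlib.sha256).digest()


class UpdateGate:
    """Accepts an update only if it is authentic and not older than the counter."""

    def __init__(self, key, counter=0):
        self._key = key
        self.counter = counter  # models a monotonic (e.g., fuse-backed) counter

    def accept(self, version, image, tag):
        if not hmac.compare_digest(sign(self._key, version, image), tag):
            return False  # verification failed: reject regardless of version
        if version < self.counter:
            return False  # authentic but older image: rollback attempt
        self.counter = version  # the counter only ever moves forward
        return True


if __name__ == "__main__":
    key = b"demo-key"
    gate = UpdateGate(key)
    print(gate.accept(2, b"fw-v2", sign(key, 2, b"fw-v2")))
    print(gate.accept(1, b"fw-v1", sign(key, 1, b"fw-v1")))
```

Binding the version into the authenticated data matters: otherwise an attacker could relabel an old, validly signed image with a newer version number.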

For RISC-V SoCs (e.g., ESP32-C6), vendor “IDF security” controls (secure boot v2, flash encryption policy, JTAG disable) provide concrete hooks for deterministic verification by integrators and external reviewers.

4.5. Design Guidance

Before device-specific choices, we highlight two baseline hardening principles that are repeatedly validated across literature and practice: (i) use constant-time/masked crypto and measure leakage (CPA/EM) rather than assuming it away [89,90]; (ii) place FI checks close to security-critical comparisons, combine detection with temporal/logical redundancy, and log/lock on detection rather than recovering silently [91].

Different application constraints lead to distinct “best fit” choices:

Cost-first mass authenticators—ESP32-C6. Enforce strict secure boot + flash encryption policies; fuse JTAG early; add board-level FI countermeasures; adopt constant-time crypto libraries and avoid dynamic key exposure. (Vendor IDF hardening points are available for verifier automation.)

SRAM-PUF unclonability in a low-cost M33—LPC55S69. Use PUF-derived KEKs with PRINCE regions for data-at-rest; prefer CASPER for big-number operations; pair with masked software crypto if using software paths [92].

Ultra-low-power radio tokens—nRF54L15. Lean on PSA posture and Nordic’s protocol isolation; adopt reduced crypto footprints and offload heavy compute to the backend; configure lifecycle/debug authentication rigorously. For ESP32-class SoCs, integrators can map controls to the vendor’s “IDF Security Features” to script repeatable checks [93].

Balanced TZ-M + OTF encryption without PUF—STM32U585. Harden boot paths against transient FI with monitor + redundancy; fully lock RDP Level 2 and validate TRNG output in provisioning; align partitioning and call gates with the Armv8-M TZ-M model to minimize trusted computing base [94].

High-performance secure controller—RA8M1. Use SCE for key wrap/inline crypto, configure tamper pins/monitors where available, rely on TZ-M for compartmentalization, and exploit M85/Helium headroom for protocol stacks and hardened crypto [95].

4.6. Limitations of the Comparative Analysis

Certification claims (PSA/SESIP/CC) are SKU- and revision-specific; integrators must verify the exact device and evaluation depth for the intended package/lot/date code. Price ranges are indicative and vary by package and market conditions. While Table 9 and Table 10 focus on silicon features, real FI/SCA resilience is strongly shaped by system-level factors (board layout and power integrity, shielding, tamper sensors/grids, manufacturing controls, and disciplined debug/RMA procedures), without which silicon primitives can be bypassed. Debug discipline must also be auditable: irreversible lock, authenticated RMA, and clear transcripts are needed to avoid CWE-1191-class pitfalls [96]. Finally, where PUFs are used, enrollment stability, helper-data protection, and field drift must be engineered and evidenced to the standard of specialized PUF literature [97].

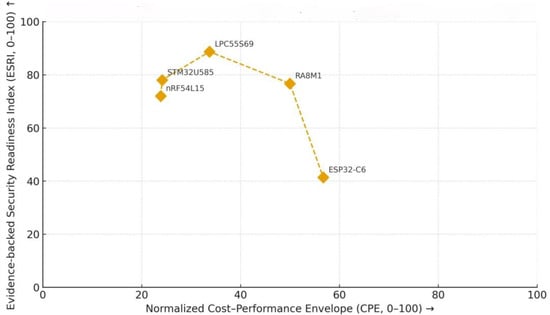

Evidence-supported Security Readiness Index (ESRI). We score eight dimensions on a 0–5 scale: Boot-path Resilience (s_BPR), Crypto Fault Tolerance (s_CFT), Entropy and PUF Assurance (s_EPA), Partitioning and Containment (s_PAC), Lifecycle/Debug Governance (s_LDG), Update and Attestation Rigor (s_UAR), Board-Level Integrity (s_BLI), and SCA Hardening (s_SCH). Each score s_i ∈ {0, 1, 2, 3, 4, 5}. We use weights w = {0.18, 0.12, 0.12, 0.10, 0.18, 0.12, 0.10, 0.08} (sum = 1).
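The weighted sum behind ESRI can be sketched as below. Two points are assumptions rather than statements from the text: the weights are paired with the dimensions in the order both are listed, and the result is left on the 0–5 scale because no rescaling is specified. The example scores are placeholders, not results for any device.

```python
# Assumption: weights map to dimensions in the order both are listed in the text.
WEIGHTS = {
    "BPR": 0.18, "CFT": 0.12, "EPA": 0.12, "PAC": 0.10,
    "LDG": 0.18, "UAR": 0.12, "BLI": 0.10, "SCH": 0.08,
}


def esri(scores):
    """Weighted sum of the eight dimension scores, each an integer in 0..5."""
    if set(scores) != set(WEIGHTS):
        raise ValueError("scores must cover exactly the eight ESRI dimensions")
    for name, value in scores.items():
        if value not in range(6):
            raise ValueError(f"{name} must be an integer from 0 to 5")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


if __name__ == "__main__":
    # Placeholder scores for illustration only.
    example = {"BPR": 4, "CFT": 3, "EPA": 2, "PAC": 4,
               "LDG": 5, "UAR": 3, "BLI": 2, "SCH": 3}
    print(round(esri(example), 2))
```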

Cost–Performance Envelope (CPE). CPE is bounded to [0, 100] by combining normalized performance and price (lower price is better). P_norm is the min-max normalized performance proxy; Price_norm is the min-max normalized public-distributor volume price. The performance proxy is P_raw = 0.5 × f_norm + 0.3 × a_norm + 0.2 × RAM_norm, where f_norm normalizes max clock across compared MCUs, a_norm ∈ [0, 1] reflects on-die crypto/accelerator presence, and RAM_norm normalizes on-chip RAM. Both P_norm and Price_norm use 5–95% percentile clipping before min-max.
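The performance proxy and the clipped min-max step can be sketched as follows. The percentile method (linear interpolation between order statistics) is an assumption, since the text does not name one, and the input values are placeholders. The final combination of normalized performance and price into CPE is not reproduced here because its exact form is not given in this section.

```python
def percentile(values, q):
    """Percentile by linear interpolation between order statistics, q in [0, 100]."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def clipped_minmax(values, lo_q=5, hi_q=95):
    """Clip to the [lo_q, hi_q] percentile range, then min-max scale to [0, 1]."""
    lo, hi = percentile(values, lo_q), percentile(values, hi_q)
    if hi == lo:
        return [0.0 for _ in values]
    return [(min(max(v, lo), hi) - lo) / (hi - lo) for v in values]


def p_raw(f_norm, a_norm, ram_norm):
    """Performance proxy: 0.5 * f_norm + 0.3 * a_norm + 0.2 * RAM_norm."""
    return 0.5 * f_norm + 0.3 * a_norm + 0.2 * ram_norm


if __name__ == "__main__":
    # Placeholder inputs, not measured or datasheet values.
    proxies = [p_raw(0.2, 0.5, 0.3), p_raw(0.6, 1.0, 0.5), p_raw(1.0, 1.0, 1.0)]
    print([round(v, 3) for v in clipped_minmax(proxies)])
```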

The ESRI–CPE trade-offs for the five MCU families considered in this work are illustrated in Figure 3.

Figure 3.

Evidence-supported Security Readiness Index (ESRI) versus Cost–Performance Envelope (CPE) for five MCU families. ESRI is the weighted sum of eight evidence-backed dimensions (see Section 3 and Table 8); CPE is a normalized combination of performance characteristics and indicative price. The arrows on the vertical and horizontal axes indicate increasing ESRI (from bottom to top) and increasing normalized CPE (from left to right), respectively. Inputs and scoring rubric are provided in the supplementary data.

5. FIA-Oriented Security Evaluation

This section is the paper’s normative contribution: a reproducible protocol to evaluate fault injection (FI) resistance of token-class MCUs and their boards. Unlike Section 3 (which is purely background and terminology), Section 5 defines what to test, how to measure it, and how to report it, culminating in quantitative scores used by the paper’s comparative graphics. Concretely, we bind FI modalities (voltage, clock, EM/laser) to testable requirements for secure boot, TRNG/DRBG, PUF, flash encryption/key-wrap, debug/ISP lock, and TZ-M/MPU partitioning; and we specify pass/fail metrics, coverage criteria, and auditable artifacts that can underpin certification-grade evidence [98].

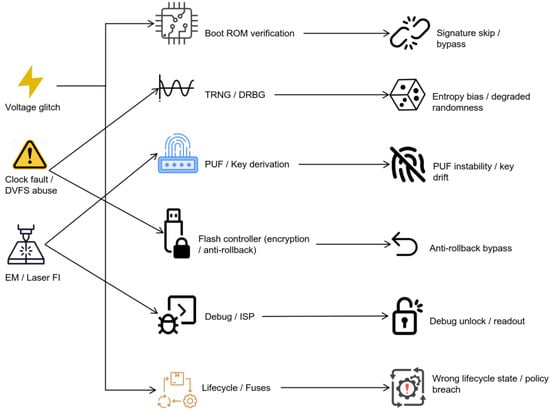

We provide (i) a concise FI surface/effect taxonomy focused on evaluation outcomes; (ii) a cross-mapping matrix linking FI types to bypass/degradation paths, pre-conditions, mitigations, and instrumentation; (iii) case studies grounding the matrix (including a concrete ESP32-C6 walk-through); (iv) a practical bring-up checklist; and (v) the scoring formulas that feed the manuscript’s aggregate plots. Figure 4 provides a high-level view of the resulting FIA exposure landscape across token-class MCU subsystems.

Figure 4.

FIA exposure landscape across token-class MCU subsystems.

5.1. Scope, Terminology, and Threat Focus

Scope. We target FI vectors widely demonstrated on embedded platforms: voltage interference (crowbar, fast VDD transients, deliberate undervoltage), clock faults (overshoot/undershoot, duty-cycle distortion, unlocked PLLs), and optical/EMFI injections that induce localized logic faults [99,100]. Practical semi-invasive optical and EM methods on MCUs are well-documented across academic and practitioner literature [101,102,103,104]. Secure boot bypass narratives (e.g., instruction skip and mis-branch near signature checks) motivate concrete timing-window tests in our protocol [105,106]. While software-controlled DVFS is largely an application-class SoC phenomenon, frequency injection and clock-path abuse remain relevant to MCUs and are in scope (see TRNG sensitivity later) [107].

Terminology. “Bypass” = transient/persistent circumvention of a security check (e.g., signature verification). “Degradation” = reduced reliability/assurance (e.g., entropy bias in TRNGs, PUF instability). “Sensors” include on-die undervoltage/brown-out and clock-anomaly monitors, tamper pins, and lifecycle/debug state machines.

Threat focus. We assume adversaries can obtain brief physical access and wield low-/mid-cost tooling capable of sub-microsecond perturbations. Our pass/fail criteria emphasize evidence that is practical to obtain under lab-accessible conditions and scalable to third-party replication.

Practical Tooling and Cost Bands

We categorize setups by cost and reproducibility—all protocol steps are feasible without bespoke equipment:

Low-cost (under ~$1 k): open-hardware glitchers, programmable PSUs, basic probes; useful for voltage/clock sweeps and coarse timing maps [108].

Mid-cost (~$1 k–$10 k): calibrated EMFI heads, higher-bandwidth injectors, stable optical benches; enables localized faulting and tighter synchronization [109].

High-cost (>$10 k): pulsed lasers, precision stages, synchronized multi-domain injectors; extends coverage but is not required for our base pass/fail metrics; we design the protocol so low/mid tiers remain valid [110,111].

5.2. Taxonomy of FIA Effects on MCU Security Functions

Control-flow timing upsets. Clock/VDD perturbations near compare/branch windows can skip signature checks, misread anti-rollback counters, or mis-evaluate debug locks. These typically occur during ROM boot or lifecycle transitions and are reproducible with narrow timing sweeps.

Data-path corruption. Optical/EMFI or severe glitching flips bits during fetch/decode or memory access, yielding faulty MAC/tag comparisons, spurious eFuse reads, or incorrect key unwrapping.

Analog/entropy degradation. Frequency injection against ring-oscillator TRNGs can phase-lock or correlate oscillators, lowering entropy; if TRNG seeds DRBG or keys, this is critical [112,113].

PUF stability and reconstruction. SRAM/RO PUFs exhibit temperature/voltage sensitivity; FI can inflate BER during enrollment or reconstruction, stressing helper-data workflows and ECC margins [114,115,116].

Lifecycle/debug FSM desynchronization. FI during fusing/ISP can cause partial locks or inconsistent state records; robust acceptance criteria require monotonic fuses and verifiable transcripts. Online TRNG/DRBG tests should be run under stress to ensure detection of degraded entropy rather than silent failure [117].

5.3. FIA–Primitive Mapping Matrix (Bypass and Degradation Paths)

To make evaluation guidance easier to read, we split the threat-mapping matrix into two coordinated parts. Table 11 lists representative primary mitigations, minimal instrumentation/setup for evaluation, and the targeted operation(s). Table 12 pairs each row with pass/fail criteria and metrics, coverage notes, and representative references. Together, Table 11 and Table 12 cross-map fault injection attack (FIA) types to affected security primitives and provide practical hooks for lab reproduction and reporting.

Table 11.

FIA × security primitive—mitigations, setup and targeted operations.

Table 12.

FIA × security primitive—pass/fail, coverage and references.

Note: “Minimal Test” assumes baseline lab gear (a ChipWhisperer-class glitcher, a programmable power supply, and a logic analyzer); higher-end equipment is optional but improves fault localization and repeatability.

“Infective checks” means computations corrupted by faults deterministically fail as a whole, rather than branching into an error path that could be exploited as an oracle.

All evidence—fault heatmaps, monitor/BO/clock logs, reproduction scripts, build/config options, and fuse/state readouts—must be packaged into a reproducible artifact bundle.

5.4. Case Studies and Empirical Patterns

5.4.1. Secure Boot + Voltage/Clock Glitching

Observed Behavior. Practical studies and conference reports show that precisely synchronized voltage or clock transients can cause instruction skips or erroneous branch decisions in secure ROM boot flows, allowing signature-check bypass or rollback-protection failure [98,105,110,111]. The classic symptom is a single-cycle mismatch or condition-code flip that allows unverified firmware to run.

Mitigations. Timing randomization (jitter), duplicate/heterogeneous cryptographic checks (two independent implementations or code paths), infective verification patterns (errors propagate to a global failure), and strict POR/power supervision that resets upon out-of-envelope events [98,99,100]. Our acceptance criteria are consistent with non-invasive FI test expectations codified in ISO/IEC 17825 and widely adopted industry practice [110,120].

5.4.2. TRNG/DRBG + Frequency/EM Injection

Observed Behavior. Frequency injection can lock or correlate RO-based TRNGs, reducing entropy to unacceptable levels; EM coupling or clock stress can impair metastability. If the TRNG seeds a DRBG or keys, predictability follows [107].

Mitigations. Online health checks (per the spirit of NIST SP 800-90B), multiple entropy sources (RO + avalanche), cross-domain conditioning (XOR mixing), and rate-limiting so health tests can reject low-entropy periods [107,117]. Where possible, use shielding or circuit hardening and decouple the entropy path from noisy power/clock domains.
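As an illustration of an online health check in the spirit of NIST SP 800-90B, the sketch below implements a repetition count test: it flags a failure when the same sample value repeats at least C times in a row, with C = 1 + ceil(−log2(α)/H). The false-positive rate α = 2^−20 and the per-sample min-entropy H are inputs that a real design must justify; the values used here are examples only.

```python
import math


def rct_cutoff(min_entropy_per_sample, alpha_log2=20):
    """Cutoff C = 1 + ceil(-log2(alpha) / H), with alpha = 2**-alpha_log2."""
    return 1 + math.ceil(alpha_log2 / min_entropy_per_sample)


def repetition_count_test(samples, cutoff):
    """Return the index where a run first reaches the cutoff, or None if none does."""
    run_value, run_length = object(), 0  # sentinel never equals a real sample
    for index, sample in enumerate(samples):
        if sample == run_value:
            run_length += 1
            if run_length >= cutoff:
                return index
        else:
            run_value, run_length = sample, 1
    return None


if __name__ == "__main__":
    cutoff = rct_cutoff(1.0)  # example: 1 bit of min-entropy per sample
    stuck = [1, 0, 1] + [0] * 40  # models a source that locks up under stress
    print("cutoff:", cutoff, "failure at index:", repetition_count_test(stuck, cutoff))
```

A repetition count test catches a stuck source but not subtler bias or correlation, which is why it is normally paired with further tests and conservative entropy claims.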

5.4.3. PUF Enrollment/Stability + Laser/Voltage Stress

Observed Behavior. SRAM/RO PUFs are sensitive to environmental corners; FI during power-up/readout inflates BER and can corrupt auxiliary data [112,113,114,115,116].

Mitigations. Enroll at voltage/temperature corners; use ECC (e.g., BCH/RS); authenticate helper data; treat tamper events as key-invalidating and force controlled re-enrollment [113,114,115,116].
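The helper-data flow can be illustrated with a code-offset construction. This sketch deliberately substitutes a 5-bit repetition code for the BCH/RS codes mentioned above to keep it short, and it assumes a separate device key (`auth_key`, hypothetical) is available to authenticate the helper data; how that key is provisioned is outside the sketch.

```python
import hashlib
import hmac

REP = 5  # repetition factor; corrects up to 2 bit flips per 5-bit block


def encode(bits):
    return [b for b in bits for _ in range(REP)]


def decode(bits):
    return [int(sum(bits[i:i + REP]) > REP // 2) for i in range(0, len(bits), REP)]


def xor(a, b):
    return [x ^ y for x, y in zip(a, b)]


def helper_tag(auth_key, helper):
    return hmac.new(auth_key, bytes(helper), hashlib.sha256).digest()


def enroll(response, secret_bits, auth_key):
    """Helper data = PUF response XOR codeword of the secret, plus a MAC."""
    if len(response) != len(secret_bits) * REP:
        raise ValueError("response length must equal len(secret_bits) * REP")
    helper = xor(response, encode(secret_bits))
    return helper, helper_tag(auth_key, helper)


def reconstruct(noisy_response, helper, tag, auth_key):
    """Authenticate the helper data first, then decode the noisy codeword."""
    if not hmac.compare_digest(helper_tag(auth_key, helper), tag):
        raise ValueError("helper data failed authentication")
    return decode(xor(noisy_response, helper))
```

Enrolling at voltage/temperature corners corresponds to choosing a code whose correction capacity covers the worst observed bit error rate; a repetition code is rarely adequate for that in practice.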

5.4.4. Flash Encryption/Key-Wrap + DFA/EMFI

Observed Behavior. EMFI/laser may corrupt MAC/tag checks or key-expansion logic; “check-and-abort” designs can become oracles; faults in AES or RSA-CRT enable classical DFA/key recovery [98,99,100,119].

Mitigations. Infective checks (error → global failure), redundant tag verification (diverse paths), masking/blinding for asymmetric ops, and strict compartmentalization so decrypted material never crosses trust boundaries [98,99,100].
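The contrast between a "check-and-abort" design and an infective one can be shown with a small key-unwrap sketch. Here the recomputed tag is folded into the unwrapping pad, so a mismatch yields an unusable key instead of a branch that an attacker could glitch or observe. The construction is a simplified illustration with hypothetical function names, not a vetted key-wrap scheme, and Python itself offers no fault resistance.

```python
import hashlib
import hmac


def _pad(kek, payload):
    """Pad derived from the KEK and the tag recomputed over the payload."""
    tag = hmac.new(kek, payload, hashlib.sha256).digest()
    return hashlib.sha256(kek + tag).digest()


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def wrap(kek, payload, key):
    """Bind a 32-byte key to the integrity of the payload."""
    if len(key) != 32:
        raise ValueError("key must be 32 bytes")
    return _xor(key, _pad(kek, payload))


def infective_unwrap(kek, payload, blob):
    """No comparison, no abort: a wrong payload silently yields a wrong key."""
    return _xor(blob, _pad(kek, payload))


if __name__ == "__main__":
    kek, key = b"K" * 32, bytes(range(32))
    blob = wrap(kek, b"firmware-header", key)
    print(infective_unwrap(kek, b"firmware-header", blob) == key)
    print(infective_unwrap(kek, b"firmware-headeR", blob) == key)
```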

5.4.5. ESP32-C6 Secure Boot Voltage-Glitch Hardening (Walk-Through)

Target. ESP32-C6 with secure boot v2 and AES-XTS flash encryption; eFuse keying; JTAG disabled.

Setup. (i) Board with stable 3.3 V rail and test points; (ii) ChipWhisperer-class glitcher + programmable PSU; (iii) logic analyzer for POR/monitor pins; (iv) ESP-IDF with secure boot/flash encryption enabled; (v) SUIT/OTA verifier scripts for post-boot attestation.

Attack procedure.

- (1) Baseline: program signed image with rollback counter N; verify normal boot; capture ROM-verify timing.

- (2) Glitch sweep: inject negative VDD transients around ROM signature check; build heatmap (delay × width). Record (a) unverified code execution, (b) spurious POR, (c) clean reset.

- (3) Rollback attempt: downgrade to counter N−1 and repeat sweep.

Hardening steps.

- (A) Enable redundant verification: add a second, heterogeneous signature verification in early user code; ensure different code/data paths (“infective” style).

- (B) Randomize boot timing: insert ROM-adjacent jitter not visible to application timing.

- (C) Power integrity: add TVS at 3.3 V input, increase bulk + high-freq decoupling, tighten return paths; supervise rails with fast POR re-assertion.

- (D) Monitors: wire brown-out detector to fail-safe reset; log and rate-limit faults.

- (E) Lifecycle: burn eFuses to disable JTAG; enforce rollback counters in attestation.

Pass/fail and evidence.

- Pass: no unverified code runs across the tested envelope; downgrade always rejected; monitor alarms asserted under attempted faults; SUIT/attestation transcripts verify counter monotonicity.

- Artifacts to publish: glitch heatmaps; POR/monitor logs; verifier outputs; fuse maps; build configs (secure boot/flash encryption options); scripted reproduction (delay/width sweeps).
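The glitch sweep and the first pass criterion of the walk-through can be scripted along the following lines. The `attempt` callback is hypothetical: it stands for whatever code drives the glitcher and classifies the target's response, and the simulated target below exists only so the sketch runs without hardware. The `NORMAL` outcome is an added category beyond the three recorded in the procedure.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    NORMAL = "normal boot"  # added category for unaffected boots
    UNVERIFIED_EXEC = "unverified code execution"
    SPURIOUS_POR = "spurious POR"
    CLEAN_RESET = "clean reset"


def sweep(attempt, delays_ns, widths_ns, repeats=1):
    """Build a heatmap keyed by (delay, width) with outcome counts per cell."""
    heatmap = {}
    for delay in delays_ns:
        for width in widths_ns:
            heatmap[(delay, width)] = Counter(
                attempt(delay, width) for _ in range(repeats)
            )
    return heatmap


def passes(heatmap):
    """Pass only if no cell ever recorded unverified code execution."""
    return all(cell[Outcome.UNVERIFIED_EXEC] == 0 for cell in heatmap.values())


if __name__ == "__main__":
    def simulated_target(delay_ns, width_ns):
        # Toy model: a narrow vulnerable window, otherwise a clean reset.
        if 110 <= delay_ns <= 130 and width_ns >= 40:
            return Outcome.UNVERIFIED_EXEC
        return Outcome.CLEAN_RESET

    result = sweep(simulated_target, range(100, 160, 10), (20, 40, 60), repeats=3)
    print("pass:", passes(result))
```

The remaining pass conditions (downgrade rejection, monitor alarms, attestation transcripts) need their own checks; the heatmap dictionary is the raw material for the published glitch heatmaps.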

5.5. From Mapping to Architecture: Hardening and Design Rules

- Boot-path resilience. Use diverse redundant verification (e.g., ROM verify + shadow check in immutable OTP microcode), time randomization, and strict POR so any out-of-envelope rail/clock aberration forces clean reset. Lifecycle/debug locks must be one-way with multi-step commits and signed RMA workflows [98,105,110,120].

- Crypto fault tolerance. For AES/RSA/ECC, adopt infective countermeasures and blinding; avoid oracle-like aborts that leak control flow or error codes; audit vendor engines under injection [98,99,100].

- Entropy and PUF. Architect diverse entropy (RO + avalanche, multi-domain mixing) and enforce online diagnostics; for PUF, plan ECC, helper-data protection, and re-enrollment strategies that treat tamper as key-invalidating [107,112,113,114,115,116,117].

- Partitioning and containment. With TrustZone-M/MPU, strictly contain key handling/decryption; never expose plaintext keys to non-TZ code; treat debug/ISP as lifecycle-governed capabilities with immutable kill fuses.

- Board-level defenses. Add TVS diodes, LC filtering, robust decoupling, clean clock return paths, and shielding for sensitive traces. Expose tamper pins; wire BO/clock monitors to fail-safe policies. Shield PUF/TRNG regions and minimize coupling.

5.6. Limitations and Open Issues

Limitations. (i) Results can drift with silicon revision, package, and board layout; certification is SKU- and revision-specific. (ii) Over-sensitive sensors can trade bypass for denial-of-service; tuning is context-dependent. (iii) Field assurance of TRNGs under EM/clock stress remains challenging; online tests detect but do not explain all degradations. (iv) Fine-grained mapping of FI impact on PUF stability/modeling resistance still lacks standard BER/Hamming-distance reporting.

Future work. (1) A community rubric for FI reporting: required fault-heatmap resolution, monitor trigger latency, and accept-on-fault rate thresholds. (2) Standardized PUF-under-FI campaigns with public BER/HD datasets across voltage/temperature/fault windows. (3) DVFS/PLL governance tests formalized for MCU-class parts (explicit negative tests and firmware-lock proofs). (4) Unified artifact packs (verifier scripts, monitor logs, fuse maps) reusable across PSA/SESIP/CC, enabling third-party reproducibility at low-/mid-cost tool tiers.

6. Discussion and Recommendations

Designing trustworthy IoT tokens on mass-market MCUs requires evidence that explicitly addresses fault injection (FI) techniques recognized by established evaluation bodies (JIL/Common Criteria FI methods) and scheme owners (PSA/SESIP) [121,122,123]. Beyond check-box features, systems must assume realistic tamper capabilities (voltage/clock glitches, EMFI/laser) and apply layered countermeasures—from silicon and board layout to boot/update/attestation processes—grounded in tamper-resistance principles [124]. Our recommendations below translate those expectations into actionable design, measurement, and operational guidance.

6.1. Key Lessons for IoT Security Devices

Features ≠ posture. Secure boot, flash encryption, TRNG/PUF and lifecycle/debug locks are necessary but insufficient. The system must fail closed under FI, preferably with infective verification and diversity so that single-point faults cannot yield “accept-on-fault” behavior [125,126].

Layering wins. A defensible posture emerges from coordinated silicon hardening, board-level power/clock discipline, and lifecycle governance tied to production states—rather than any single on-chip feature [121,122,123].

Assurance is operational. Certifications communicate scope and depth but are SKU- and revision-specific; they must be complemented by application-specific FI tests and operational controls (attestation, telemetry, RMA policies) [122,123].

6.2. Layered Architecture for FIA-Resilient Tokens

Figure 5 (conceptual) organizes countermeasures across (i) physical/board, (ii) boot and crypto, (iii) partitioning and key-handling domains, (iv) update/attestation, and (v) fleet operations. Each layer either prevents useful faulting, detects and contains it, or produces audit-worthy evidence. Cryptographic verification adopts infective and diverse patterns to avoid exploitable branches [125,126], and side-channel-aware implementations are assumed in tandem with FI resilience [127].

Figure 5.

Layered architecture for FIA-resilient IoT tokens.

Takeaway. No MCU option compensates for poor board power integrity, misconfigured lifecycle/debug, or a weak update/attestation pipeline [121,122,123,124,125,126].

6.3. MCU Selection Recommendations

Certification-first. Prefer devices with PSA-L2/L3 or SESIP-2/3/4 collateral where FI test scope is documented and reproducible [122,123].

Baseline alignment. Map product requirements to consumer/enterprise IoT baselines (ETSI EN 303 645 [128]; NISTIR 8259A [129]) and to cryptographic module expectations (ISO/IEC 19790 [130]).

Concrete families (illustrative). STM32U585/nRF54L15/LPC55S69/RA8M1/ESP32-C6: trade-offs in TZ-M presence, PUF availability, SCE/inline crypto, and certification maturity should be weighed against cost, power, and toolchain readiness [122,123,128,129,130].

What to verify before tape-out/PO. Certificate exactness (part #, mask, package), ROM-boot chain immutability, TRNG with online health tests, lifecycle fuses, and debug kill semantics.

6.4. Physical and Board-Level Protections

Physical and board-level controls complement silicon and firmware hardening by turning marginal, knife-edge behavior into well-defined safe states. The goal is not merely to add parts but to shape the device’s response under stress, so that probing or fault-injection attempts end in safe resets and sealed secrets rather than partial, exploitable operation.

- Anti-probing and FI shielding.

Start with power integrity so injected transients deterministically collapse into reset: TVS diodes, LC filtering, tight decoupling on MCU rails, and fast POR. Beyond the rails, minimize observation/injection paths with RF cans over the MCU and especially over TRNG/PUF regions; keep entropy nets short and shielded; where feasible, separate entropy and logic domains to reduce cross-coupling. Package intrusion and light sensors near die area further raise attack cost; higher-assurance variants may justify active meshes that invalidate keys on intrusion. These measures align with platform evaluation and industrial component security baselines that emphasize physical tamper resistance and controlled failure behaviors. Use small-pitch packages and conformal coating to deter micro-probing; wire physical security pins to enforced interrupt policies (zeroize/lock), not passive logs [131,132].

- Clock discipline.

Treat time as an asset: protect external clock references against duty-cycle distortion; enable and calibrate clock-anomaly monitors to catch real abuse (not normal jitter); avoid exposing clock/DVFS controls to untrusted flows so software cannot steer the device into vulnerable windows.

6.5. Firmware and Crypto Hardening for FIA

Infective and diverse verification. Secure boot should employ diverse redundant verification (e.g., a shadow check implemented differently) so single-cycle faults cannot yield accept-on-fault.
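The infective pattern can be illustrated with a small host-side model. It is a sketch under stated assumptions, not a bootloader implementation: the names `digest_a`, `digest_b`, and `infective_release` are hypothetical, the expected digest is assumed to come from a manifest whose signature has already been authenticated, and the sealed key is assumed to be 32 bytes. The point is that no pass/fail branch exists to be skipped; any mismatch in either measurement corrupts the key that the next stage needs.

```python
import hashlib


def digest_a(image: bytes) -> bytes:
    """Primary measurement: one-shot SHA-256 over the image."""
    return hashlib.sha256(image).digest()


def digest_b(image: bytes, chunk: int = 64) -> bytes:
    """Shadow measurement: same hash, computed incrementally over chunks.

    On real hardware the two paths would differ more strongly
    (for example, accelerator versus software implementation).
    """
    h = hashlib.sha256()
    for i in range(0, len(image), chunk):
        h.update(image[i:i + chunk])
    return h.digest()


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def infective_release(image: bytes, expected_digest: bytes, sealed_key: bytes) -> bytes:
    """Return the next-stage key; it is only correct if both checks agree.

    There is no 'if ok:' decision. The differences between the computed and
    expected digests are folded into a mask that is all-zero only when both
    differences are all-zero.
    """
    if len(expected_digest) != 32 or len(sealed_key) != 32:
        raise ValueError("this sketch assumes 32-byte digest and key")
    d1 = _xor(digest_a(image), expected_digest)  # all-zero iff check A matches
    d2 = _xor(digest_b(image), expected_digest)  # all-zero iff check B matches
    spread = hashlib.sha256(d1 + d2).digest()    # diffuse any nonzero bit
    neutral = hashlib.sha256(bytes(64)).digest() # value of 'spread' when d1 = d2 = 0
    return _xor(sealed_key, _xor(spread, neutral))
```

With a matching image the function returns `sealed_key` unchanged; with any modified image the returned bytes differ from the key, so a fault that forces execution past the check still leaves the attacker without usable key material.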

Crypto engines and constant time. Prefer hardened vendor accelerators; add blinding (RSA/ECC) and redundant MAC/tag checks for wrapped content. Use constant-time paths and normalize error signaling to avoid oracle behaviors that pair with FI.
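A minimal sketch of a redundant tag check with normalized error signaling follows. The blob layout (payload followed by a 32-byte HMAC-SHA256 tag) and the names `unwrap` and `UnwrapError` are assumptions made for illustration; in firmware the second check would typically be placed at a different point in the control flow or use a different code path.

```python
import hashlib
import hmac

TAG_LEN = 32  # HMAC-SHA256 tag length in bytes


class UnwrapError(Exception):
    """Single opaque error raised for every failure cause."""


def unwrap(mac_key: bytes, blob: bytes) -> bytes:
    """Authenticate 'payload || tag' twice and return the payload.

    Every failure raises the same exception with the same message, so the
    caller (and an attacker observing it) cannot tell which check failed.
    """
    if len(blob) < TAG_LEN:
        raise UnwrapError("unwrap failed")
    payload, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]

    # First check: recompute the tag and compare in constant time.
    ok1 = hmac.compare_digest(hmac.new(mac_key, payload, hashlib.sha256).digest(), tag)
    # Second check: recomputed from scratch, so one corrupted comparison
    # result is not sufficient to accept the blob.
    ok2 = hmac.compare_digest(hmac.new(mac_key, payload, hashlib.sha256).digest(), tag)

    if not (ok1 and ok2):
        raise UnwrapError("unwrap failed")
    return payload
```

`hmac.compare_digest` is used instead of `==` because its running time does not depend on where the first differing byte occurs.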

TRNG health and PUF stability. Run online health tests and rate-limit consumers so the system can throttle when anomalies appear. Treat PUF helper data as sensitive; provision error-correcting codes (BCH/RS), characterize BER across V/T corners, and define re-enrollment on tamper.
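As one concrete instance of an online health test, the sketch below models the Repetition Count Test described in NIST SP 800-90B, whose cutoff is C = 1 + ceil(-log2(α)/H) for a false-positive probability α and an assessed min-entropy of H bits per sample. The class and function names are hypothetical, and the choice α = 2^-20 is only an example value; a real design would take H from the entropy-source assessment of the specific part.

```python
import math


def rct_cutoff(h_min: float, alpha_exp: int = 20) -> int:
    """Cutoff C = 1 + ceil(-log2(alpha) / H) with alpha = 2**-alpha_exp."""
    return 1 + math.ceil(alpha_exp / h_min)


class RepetitionCountTest:
    """Flags the source when one sample value repeats C times in a row."""

    def __init__(self, cutoff: int) -> None:
        self.cutoff = cutoff
        self.last = None
        self.run = 0

    def feed(self, sample: int) -> bool:
        """Consume one raw sample; return False once the cutoff is reached."""
        if sample == self.last:
            self.run += 1
        else:
            self.last, self.run = sample, 1
        return self.run < self.cutoff
```

A consumer-facing driver could stop releasing random bytes, or throttle requests, as soon as `feed` returns `False`. A stuck-at fault induced by a glitch on the entropy source is the kind of failure this test is meant to catch.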

Compartmentalization. Use TrustZone-M/MPU to isolate key unwrap and plaintext key domains; where TZ-M is absent, rely on strict least privilege, MPU regions, and audited context boundaries.

6.6. Update, Attestation, and Lifecycle

Standards-aligned update (SUIT). Adopt the IETF firmware-package model with cryptographic protection (CMS) and anti-rollback counters; design for dual-bank/A/B fallback to avoid bricking during recovery [133,134].
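The interaction between anti-rollback counters and A/B fallback can be sketched as a slot-selection rule. This is an illustrative model only: the `Slot` fields, `select_slot`, and `counter_after_confirm` are hypothetical names, and the signature result is assumed to come from a separate manifest verification step.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Slot:
    name: str
    security_counter: int  # monotonic version carried in the signed manifest
    signature_ok: bool     # outcome of the manifest/image signature check


def select_slot(slots: Sequence[Slot], min_counter: int) -> Optional[Slot]:
    """Pick the newest slot that is both authentic and not a rollback.

    Returns None when no slot qualifies, in which case the device should
    stay in a recovery state rather than boot anything.
    """
    candidates = [
        s for s in slots
        if s.signature_ok and s.security_counter >= min_counter
    ]
    return max(candidates, key=lambda s: s.security_counter, default=None)


def counter_after_confirm(slot: Slot, min_counter: int) -> int:
    """New stored minimum after the booted image confirms itself healthy.

    The stored value never decreases; advancing it only after confirmation
    keeps the older bank usable as a fallback during a failed update.
    """
    return max(min_counter, slot.security_counter)
```

Advancing the stored counter before the new image has confirmed itself would make the fallback bank unbootable, which is the bricking scenario the dual-bank design is meant to avoid.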

Measured boot and remote attestation. Bind measured firmware to device identity chains suitable for verifier consumption: per-device DICE roots, PSA threat-model alignment for RoT services, and scheme documentation that clarifies device-security semantics [135,136,137]. Then integrate a RATS evidence flow and token formats appropriate for constrained devices (e.g., CWT) so backends can enforce allow/deny/quarantine policy [138,139,140,141,142].
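A backend appraisal rule implementing allow/deny/quarantine might look like the sketch below. The evidence field names (`signature_valid`, `nonce`, `measurement`, `tamper_events`) are hypothetical and do not correspond to any specific token profile; the split between deny and quarantine is likewise one possible policy, chosen here for illustration.

```python
from enum import Enum
from typing import Mapping, Set


class Verdict(Enum):
    ALLOW = "allow"
    QUARANTINE = "quarantine"
    DENY = "deny"


def appraise(
    evidence: Mapping,
    expected_nonce: bytes,
    reference_measurements: Set[str],
    max_tamper_events: int = 0,
) -> Verdict:
    """Map attestation evidence to a service decision."""
    # Unauthenticated or replayed evidence carries no weight: deny outright.
    if not evidence.get("signature_valid", False):
        return Verdict.DENY
    if evidence.get("nonce") != expected_nonce:
        return Verdict.DENY
    # Authentic evidence about an unknown firmware state or a tampered
    # device: isolate the device but keep it reachable for investigation.
    if evidence.get("measurement") not in reference_measurements:
        return Verdict.QUARANTINE
    if evidence.get("tamper_events", 0) > max_tamper_events:
        return Verdict.QUARANTINE
    return Verdict.ALLOW
```

Missing fields are treated as failures, so an evidence record that omits the signature result or the measurement can never be allowed.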

Sequencing guidance for roots and measurements. Where server/PC literature is more mature, reuse its lessons for robust ordering and evidence capture in embedded roots-of-trust (e.g., boot-block protections and integrity-measurement handoffs) [143,144].

Lifecycle/debug governance. Enforce one-way fuses for debug disable; require multi-step, signed transcripts for any RMA re-enable; lock ISP before shipment and tie unlock to authenticated procedures.

6.7. Factory and Supply-Chain Controls

Adopt HSM-backed provisioning with per-device certificates/identities, audit trails, and tamper-evident records. Baseline device policy against widely recognized IoT security concerns to reduce exposure from weak defaults and misconfigurations at the factory and in the field [138].

During bring-up, run threat-driven FI tests (voltage/clock/EMFI windows) around known compare/branch regions; tune sensor thresholds and acceptance criteria. Vendor anti-tamper application guidance is useful to translate these controls into layout, coating, and sensor wiring practices on the line [139].
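A bring-up sweep of this kind can be organized as a parameter grid with a per-point tally of outcomes. The sketch below is a host-side harness skeleton under stated assumptions: `attempt` is a hypothetical callable that fires one glitch at a given delay and width and classifies the target's response, the outcome labels are illustrative, and the acceptance criterion shown (zero accept-on-fault results anywhere in the grid) is one possible choice.

```python
from collections import Counter
from itertools import product
from typing import Callable, Dict, Iterable, Tuple

# Illustrative outcome labels returned by the injected 'attempt' callable:
# "normal", "reset", or "accept_on_fault".
Attempt = Callable[[int, int], str]


def sweep(
    attempt: Attempt,
    delays_ns: Iterable[int],
    widths_ns: Iterable[int],
    repeats: int = 10,
) -> Dict[Tuple[int, int], Counter]:
    """Run 'repeats' glitch attempts at every (delay, width) grid point."""
    results: Dict[Tuple[int, int], Counter] = {}
    for delay, width in product(list(delays_ns), list(widths_ns)):
        results[(delay, width)] = Counter(
            attempt(delay, width) for _ in range(repeats)
        )
    return results


def passes(results: Dict[Tuple[int, int], Counter]) -> bool:
    """Acceptance criterion: no grid point ever produced accept-on-fault."""
    return all(c["accept_on_fault"] == 0 for c in results.values())


def hot_spots(results: Dict[Tuple[int, int], Counter]) -> Dict[Tuple[int, int], int]:
    """Grid points with at least one accept-on-fault, for retuning sensors."""
    return {
        point: c["accept_on_fault"]
        for point, c in results.items()
        if c["accept_on_fault"] > 0
    }
```

Keeping the hardware access behind the `attempt` callable lets the same harness drive a voltage, clock, or EMFI rig, and lets the tally logic be exercised against a simulated target before lab time is spent.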

Document a CWE-1247-aware test plan so voltage/clock glitch protections are verified as design and process requirements, not assumptions [145].

6.8. Tiered Hardening Blueprint by Cost Envelope

Match achievable controls to BOM/power budgets. Ultra-low-cost tokens emphasize clean power, minimal TCB, and redundant verification; balanced tiers add shielding, tamper wiring, and attested update; premium tiers justify selective meshes, epoxy, telemetry, and deeper FI hardening. Treat these as process-controlled engineering bundles with documented verification activities to avoid drift over time [146].

Table 13 offers a baseline configuration for three cost tiers. It complements Section 4’s device taxonomy.

Table 13.

Baseline hardening by cost tier.

6.9. Procurement and Certification Checklist

Publish software-identity tags (e.g., CoSWID) with your artifact packs so third parties can correlate firmware, configuration, and evidence [147].

For bootloaders, prefer well-maintained, widely deployed microcontroller loaders that support modern manifest and rollback policies and integrate cleanly with CI/CD signing [148].

To avoid “paper compliance” and ensure real resilience, enforce the checklist in Table 14 during sourcing and design reviews.

Table 14.

Procurement and certification checklist.

6.10. Special Advice for Ultra-Low-Cost Platforms (e.g., ESP32-C6)

When budgets prohibit PSA-L3/SESIP3 MCUs, strict engineering discipline can still achieve practical security for low-risk deployments:

- Harden secure boot with two independent verification passes, random timing jitter, and infective accept logic. Permanently disable JTAG via eFuse as early as possible.

- Enforce constant-time cryptography; prefer hardware acceleration where trustworthy; implement double MAC/tag checking in critical unwrap paths.

- Use MPU-based isolation; minimize TCB; keep key unwrap in a single, small module; never expose plaintext keys to general tasks.

- Design clean power with fast POR; add RF can; instrument light/case sensors to enforce erase-of-wrapped-keys under tamper.

- Integrate SUIT update, anti-rollback, and basic attestation (device certificate + measurements).

- Validate with FI sweeps (timed around compare windows) and continuously monitor TRNG health.

- Treat the device as a policy subject: the backend should quarantine or deny service upon suspicious attestation or repeated tamper.

This approach does not yield the same assurance as a PSA-L3/SESIP3 device, but it raises the bar substantially for opportunistic attackers while preserving the cost advantage.

6.11. Aligning with Ecosystem Baselines

Where feasible, align product requirements with component security, process, and attestation norms already referenced in this section; ensure your internal checklists, acceptance tests, and field-operations documentation trace back to those requirements and to the artifact evidence emitted by your build and provisioning pipelines.

6.12. Concluding Recommendations

Start with threats, not features. Build an application-specific FIA threat model. If attackers can gain physical access to the device (even briefly), plan for FI and demonstrate that signature checking, MAC/tag verification, and debug locks fail closed under fault.

Prefer PSA-L3/SESIP3 devices with TRNG and online health checks; use PUFs when possible (e.g., LPC55S69) to reduce the risk of cloning. For radio tokens or ultra-low-power nodes (e.g., nRF54L15), confirm the PSA level for the specific variant and budget for board-level security enhancements.

For STM32U585-class designs, combine TZ-M, OTF encryption, and infective boot verification with board-level FI mitigation and a disciplined debug lifecycle. For RA8M1-class performance controllers, use SCE and TZ-M to keep unwrapped keys confined to secure domains, and enforce telemetry for TRNG/PUF/tamper events.

For ESP32-C6 or similar cost-conscious platforms, compensate for the lack of TZ-M/PUF with software isolation, redundant verification, constant-time cryptography, and physical shielding, then validate the result with FI sweeps. Deploy such devices behind a backend that enforces attestation policies and responds to tamper telemetry.

Incorporate FI testing into vendor verification plans and statements of work (SOWs). Require proof of passing voltage/clock/EMFI test sets and verify sensor thresholds under realistic board-level operating conditions (temperature, supply voltage fluctuations).

Close the operational loop. Secure update (SUIT), rollback protection, attestation, key rotation, and RMA auditing must be operational realities, not promises in a whitepaper. Treat tampering as a first-order event that can trigger policy actions and key revocation.

In short, trustworthy IoT security is a systemic endeavor: a combination of proven silicon, board/mechanical design, robust boot/cryptography/entropy engineering, supply chain integrity, and attestation-based operations. Only by combining these layers can low-cost tokens withstand FIAs at scale.

7. Conclusions

This work reframes MCU security for IoT from a catalog of features into a verifiable account of system behavior under adversarial stress. We contribute a normative, reproducible protocol that couples concrete fault modalities—voltage, clock, and EM/optical—with testable controls across silicon, board design, firmware, and operations, supplying explicit pass/fail criteria, quantitative scoring, and artifact bundles suitable for third-party certification. Anchored by the cross-mapping matrix and an ESP32-C6 walk-through, the study shows how disciplined power/clock integrity, infective and redundant verification, compartmentalized key handling, health-checked entropy, and rollback-proof, attested updates cohere into defense-in-depth that withstands single-fault conditions where superficially “feature-rich” devices fail. The comparative analysis of STM32U585, LPC55S69, nRF54L15, ESP32-C6, and RA8M1 makes the trade space explicit—TrustZone versus native PUF, isolation depth versus price/performance—and maps parts to tiered hardening profiles aligned with cost envelopes and deployment risk. The central lesson is compositional: credible resilience is an emergent property of coherent interactions among MCU capabilities, mechanical and PCB design, cryptographic and firmware hardening, supply-chain discipline, and attestation-driven operations, rather than any single mechanism in isolation.

At the same time, important boundaries remain and motivate a concrete research and engineering agenda. A cross-SKU experimental campaign quantifying glitch success rates, detector latency, and recovery semantics under FreeRTOS/Zephyr timing constraints would strengthen external validity; standardized datasets and rubrics—fault heatmaps, accept-on-fault thresholds, monitor trigger characteristics—would elevate reproducibility and comparability across labs. Run-time adaptation deserves deeper study, including ML-assisted filters that raise alarms without prohibitive false positives, as do fleet-scale lifecycle/RMA controls that preserve irreversibility without introducing service backdoors. Closer governance of DVFS/PLL surfaces on MCU-class parts, and systematic characterization of TRNG/PUF behavior under combined FI and environmental stress, remain open technical gaps. Operationally, open-sourcing scripts, SUIT/attestation templates, and certification-ready artifact packs can convert guidance into evidence and accelerate independent evaluation. Pursued together, these directions embed fault injection as a first-class design assumption and push cost-sensitive IoT tokens toward demonstrable, field-relevant resilience.

Author Contributions

Conceptualization, M.A. and N.G.; methodology, M.A. and I.S.; validation, Y.A., I.S. and O.N.; formal analysis, M.A.; investigation, M.A., K.B. and O.N.; resources, Y.S. and Y.A.; data curation, O.N. and K.B.; writing—original draft preparation, M.A.; writing—review and editing, N.G., Y.A., I.S., Y.S., K.B., O.N. and M.A.; visualization, O.N. and M.A.; supervision, N.G., Y.S. and Y.A.; project administration, Y.A. and N.G.; funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science Committee of the Ministry of Science and Higher Education of the Republic of Kazakhstan (Grant No. AP26103891). The TSARKA group of companies funded the Article Processing Charge (APC) for this work.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors would like to thank colleagues and reviewers for their valuable suggestions during the preparation of this manuscript.

Conflicts of Interest