Artificial Intelligence-Assisted Wrist Radiography Analysis in Orthodontics: Classification of Maturation Stage

Abstract

1. Introduction

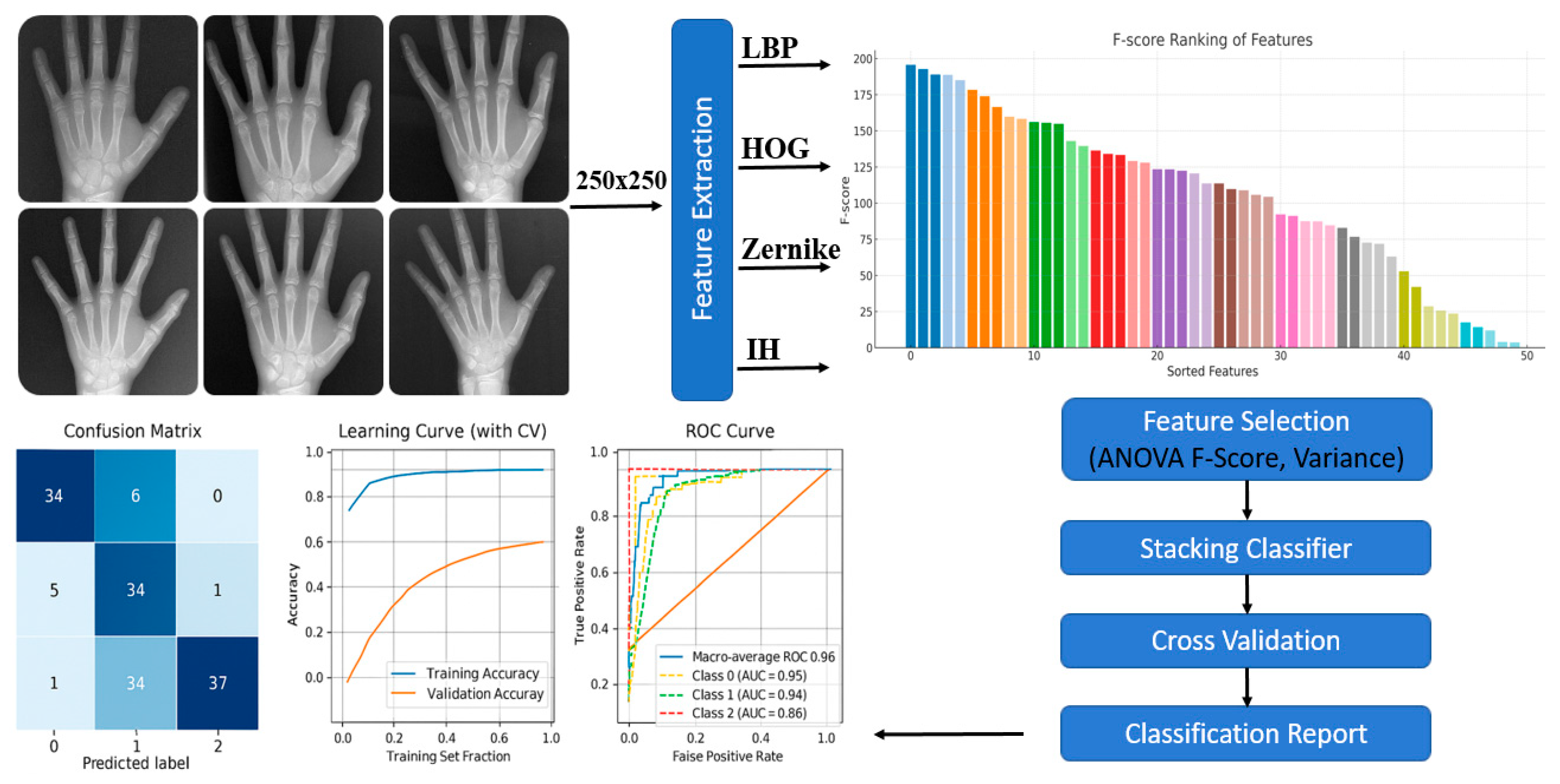

2. Materials and Methods

2.1. Skeletal Maturation Classification

2.2. Feature Extraction

- LBP captures fine textural patterns and micro-morphological variations in bone tissue, providing a local-level structural representation.

- HOG focuses on gradient orientation and edge distribution, emphasizing the contours and morphological boundaries of bone structures.

- Zernike Moments describe the geometric properties of symmetric and asymmetric bone shapes; their magnitudes are rotation-invariant, and normalizing the region to the unit disk also makes them scale-invariant.

- Intensity Histogram analyzes the grayscale pixel distribution, distinguishing bone from surrounding soft tissue based on brightness variations.
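The four descriptor families listed above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes 8-bit grayscale input and uses simplified variants chosen here for illustration, namely a basic 8-neighbour, radius-1 LBP code histogram; a single global gradient-orientation histogram (full HOG additionally divides the image into cells and normalizes overlapping blocks); Zernike moment magnitudes up to order 4 over the disk inscribed in the image; and a 32-bin intensity histogram. All function names and parameter values are hypothetical.

```python
import math

import numpy as np


def lbp_histogram(img: np.ndarray) -> np.ndarray:
    """Basic LBP (8 neighbours, radius 1) as a normalized 256-bin histogram."""
    img = img.astype(np.float64)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


def gradient_orientation_histogram(img: np.ndarray, n_bins: int = 9) -> np.ndarray:
    """Simplified HOG-style descriptor: one global histogram of unsigned gradient
    orientations weighted by gradient magnitude (no cells, no block normalization)."""
    gy, gx = np.gradient(img.astype(np.float64))  # derivatives along rows, then columns
    magnitude = np.hypot(gx, gy)
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned angles in [0, 180)
    hist, _ = np.histogram(orientation, bins=n_bins, range=(0.0, 180.0), weights=magnitude)
    total = hist.sum()
    return hist / total if total > 0 else hist


def zernike_magnitudes(img: np.ndarray, max_order: int = 4) -> np.ndarray:
    """|Z_nm| for 0 <= m <= n <= max_order with n - m even, over the inscribed unit disk."""
    img = img.astype(np.float64)
    h, w = img.shape
    y, x = np.mgrid[0:h, 0:w]
    # Map pixel centres to [-1, 1]; normalizing to image size gives scale invariance.
    xn = (2.0 * x - (w - 1)) / (w - 1)
    yn = (2.0 * y - (h - 1)) / (h - 1)
    rho, theta = np.hypot(xn, yn), np.arctan2(yn, xn)
    inside = rho <= 1.0
    f, rho, theta = img[inside], rho[inside], theta[inside]
    pixel_area = (2.0 / (w - 1)) * (2.0 / (h - 1))
    feats = []
    for n in range(max_order + 1):
        for m in range(n % 2, n + 1, 2):
            # Zernike radial polynomial R_n^m(rho).
            radial = np.zeros_like(rho)
            for k in range((n - m) // 2 + 1):
                coef = ((-1) ** k * math.factorial(n - k)
                        / (math.factorial(k) * math.factorial((n + m) // 2 - k)
                           * math.factorial((n - m) // 2 - k)))
                radial += coef * rho ** (n - 2 * k)
            z = (n + 1) / math.pi * np.sum(f * radial * np.exp(-1j * m * theta)) * pixel_area
            feats.append(abs(z))  # the magnitude discards rotation-dependent phase
    return np.array(feats)


def intensity_histogram(img: np.ndarray, n_bins: int = 32) -> np.ndarray:
    """Normalized grayscale histogram, assuming 8-bit pixel values in [0, 255]."""
    hist, _ = np.histogram(img, bins=n_bins, range=(0, 256))
    return hist / hist.sum()


def extract_features(img: np.ndarray) -> np.ndarray:
    """Concatenate the four descriptors into one fixed-length feature vector."""
    return np.concatenate([
        lbp_histogram(img),
        gradient_orientation_histogram(img),
        zernike_magnitudes(img),
        intensity_histogram(img),
    ])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)  # stand-in for a radiograph crop
    print(extract_features(demo).shape)  # (306,)
```

With these settings each image yields 256 + 9 + 9 + 32 = 306 values, the kind of fixed-length vector a subsequent feature-selection step and classifier would consume. In practice, scikit-image's `skimage.feature.local_binary_pattern` and `skimage.feature.hog` offer more complete LBP and HOG implementations than this sketch.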

2.3. Data Augmentation

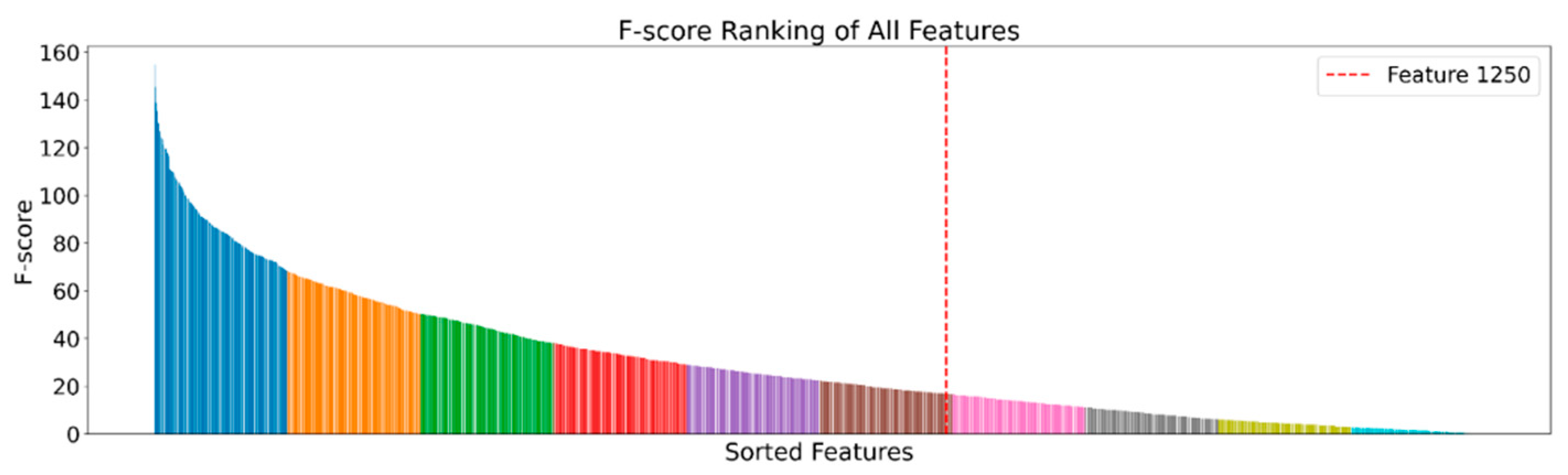

2.4. Feature Selection

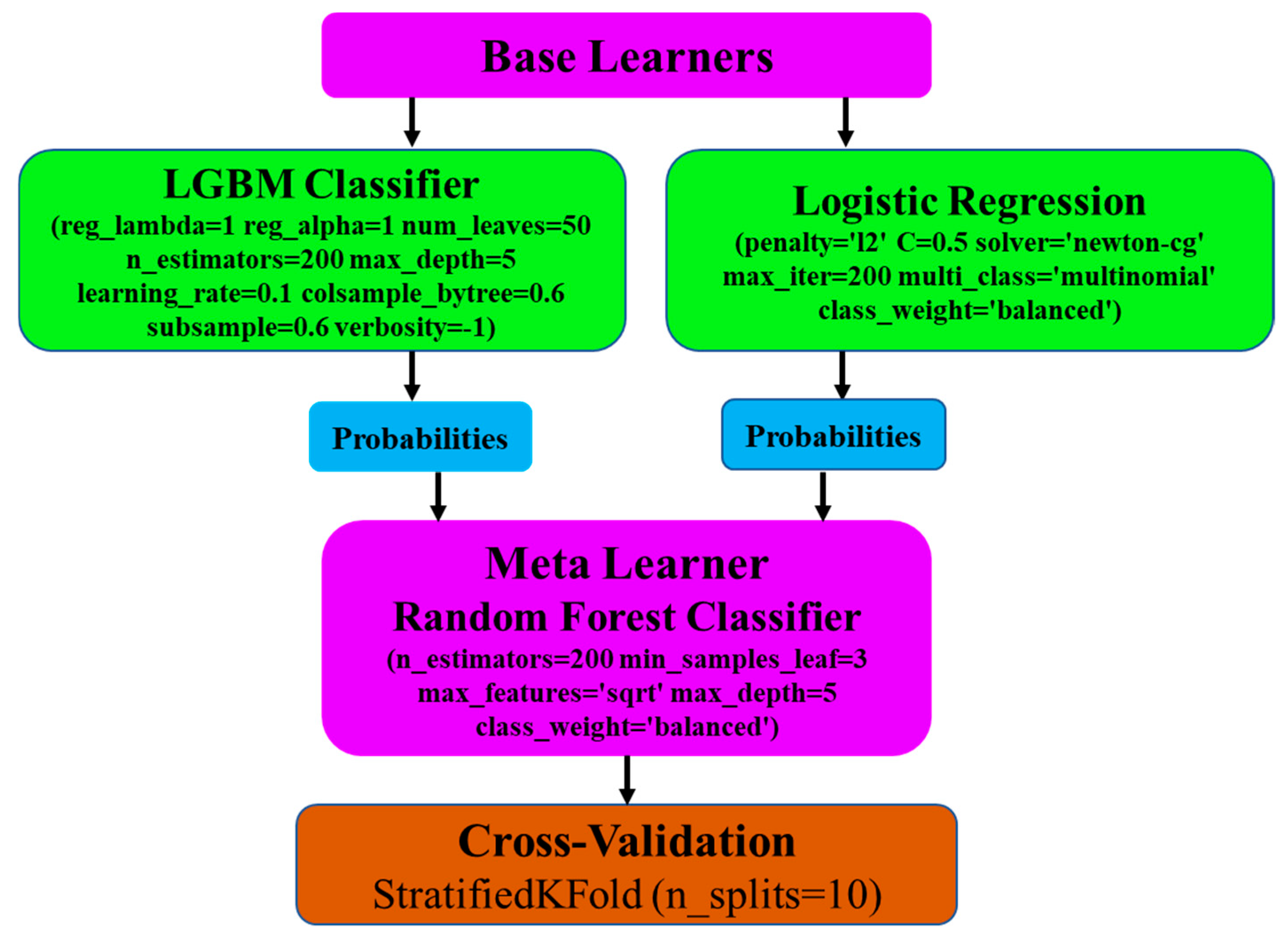

2.5. Base Learners

2.6. Meta Learner

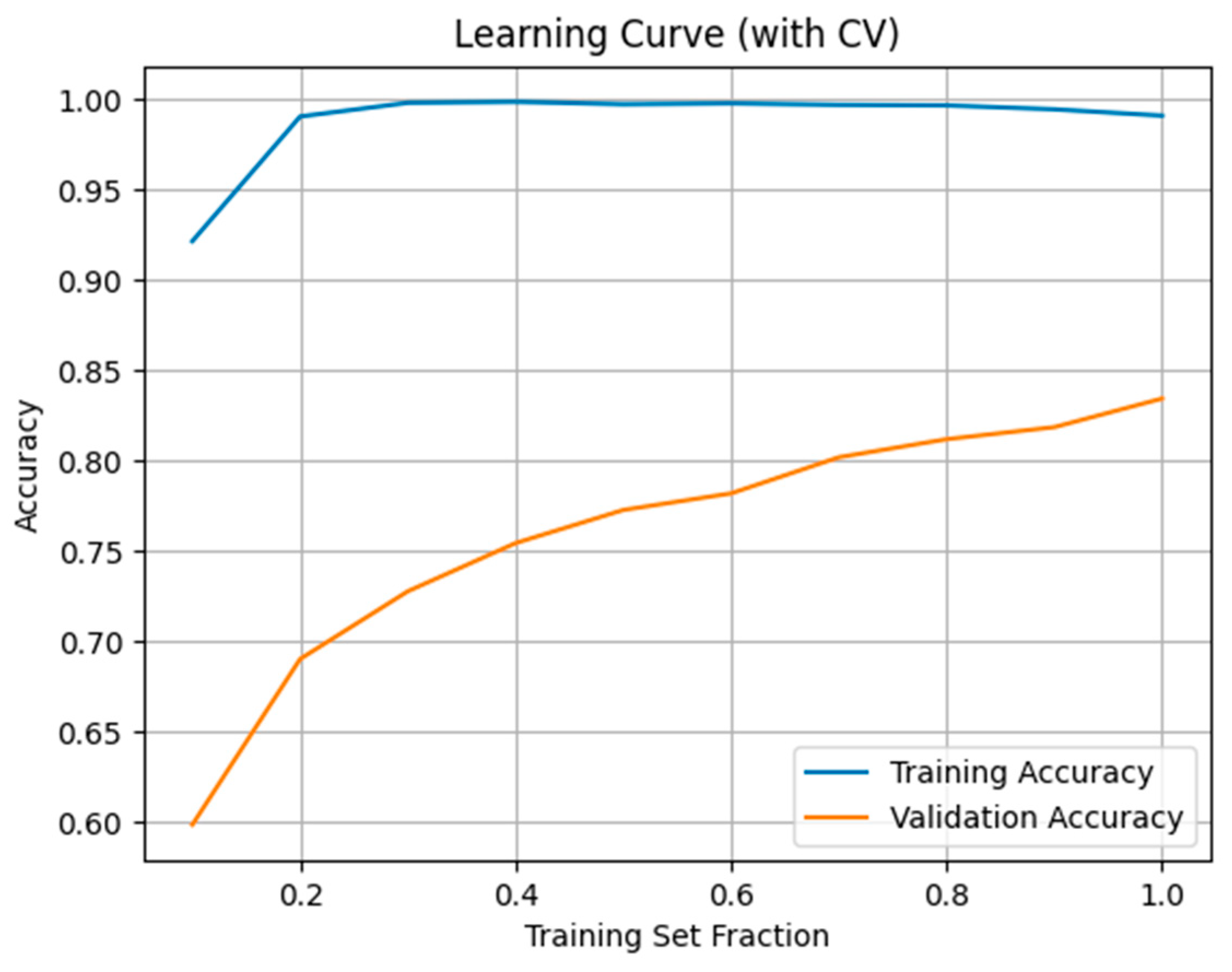

2.7. Model Evaluation and Performance Metrics

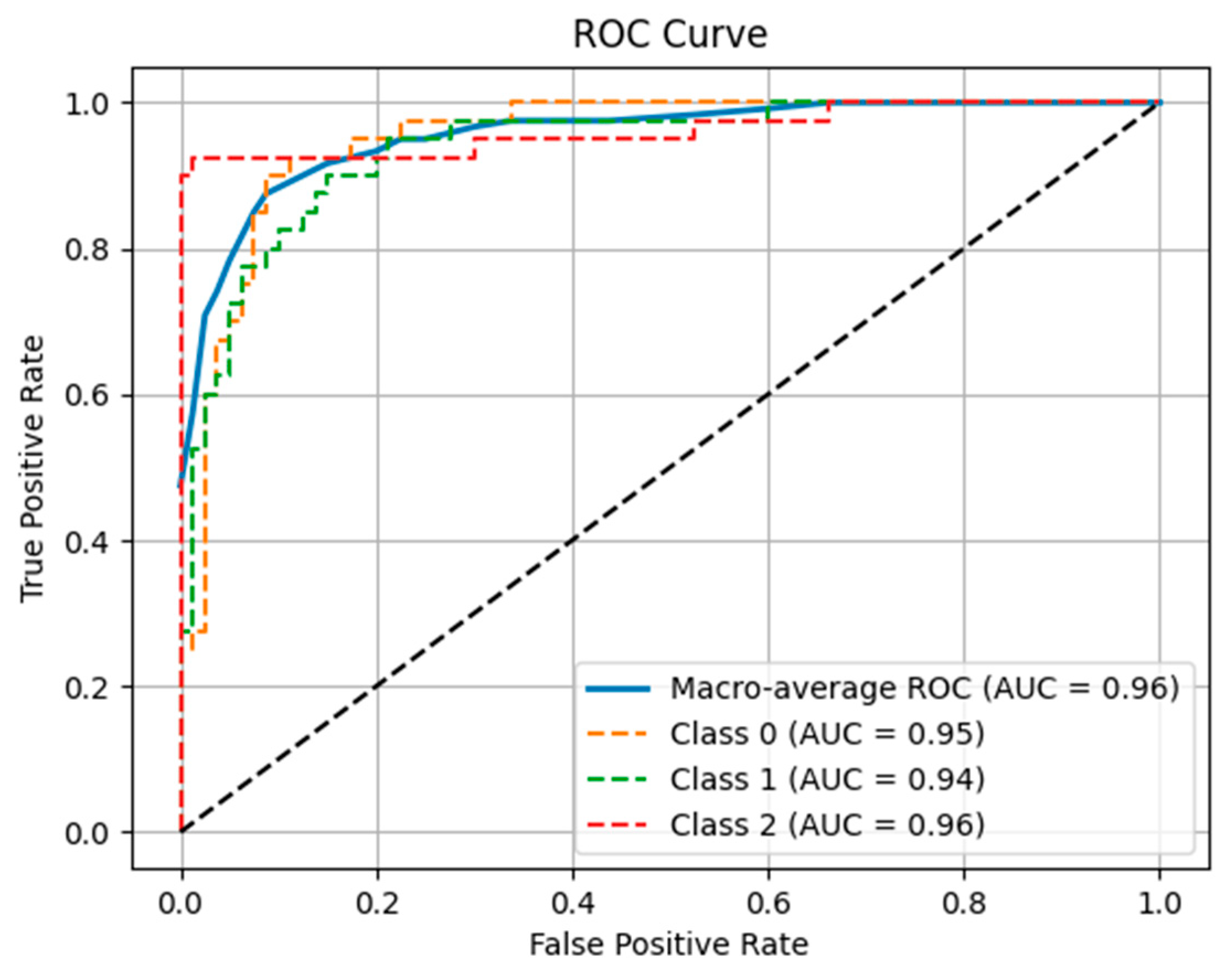

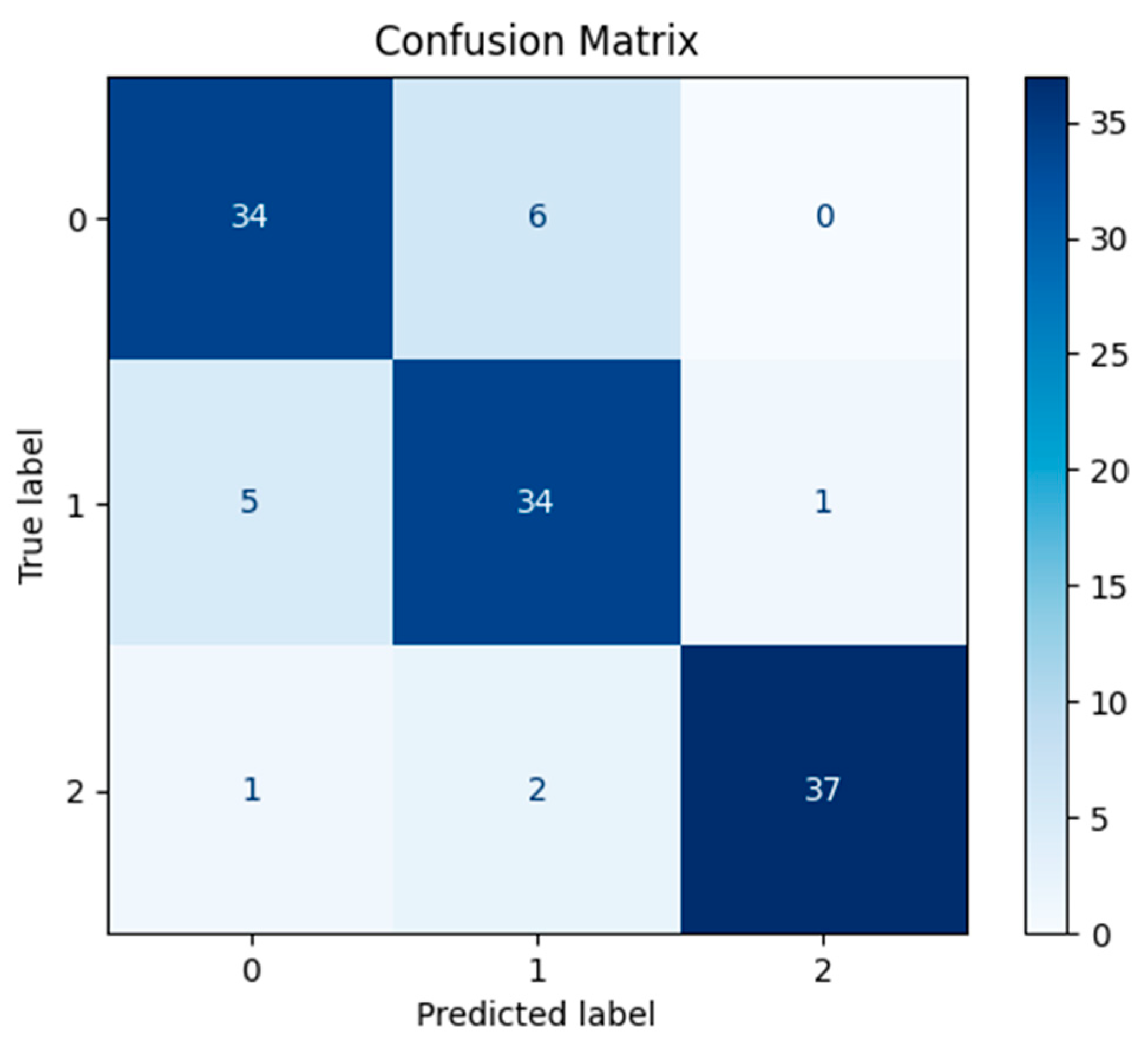

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LBP | Local Binary Pattern |
| HOG | Histogram of Oriented Gradients |
| CNN | Convolutional Neural Network |
| FD | Fractal Dimension |
| SMI | Skeletal Maturation Indicators |
| LGBM | Light Gradient Boosting Machine |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |
| ANOVA | Analysis of Variance |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Square Error |
| XAI | Explainable Artificial Intelligence |
| CBCT | Cone Beam Computed Tomography |
| MRI | Magnetic Resonance Imaging |


| Skeletal Stage | Number of Radiographs (n) | Percentage (%) |
|---|---|---|
| Pre-peak | 400 | 49.44% |
| Peak | 100 | 12.36% |
| Post-peak | 309 | 38.20% |
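The percentages in the table follow directly from the counts over 400 + 100 + 309 = 809 radiographs. A short Python check, using a hypothetical helper name, reproduces them and makes the class imbalance explicit:

```python
def stage_percentages(counts: dict[str, int]) -> dict[str, float]:
    """Share of each skeletal stage, in percent, rounded to two decimals."""
    total = sum(counts.values())
    return {stage: round(100.0 * n / total, 2) for stage, n in counts.items()}


# Counts taken from the table above.
counts = {"Pre-peak": 400, "Peak": 100, "Post-peak": 309}
print(sum(counts.values()))       # 809
print(stage_percentages(counts))  # {'Pre-peak': 49.44, 'Peak': 12.36, 'Post-peak': 38.2}
```

Because the peak stage accounts for only about 12% of the radiographs, a trivial classifier that always predicted the majority pre-peak stage would already reach roughly 49% accuracy, which is why per-class metrics are more informative than overall accuracy alone for this distribution.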


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kavasoglu, N.; Ertugrul, O.F.; Kotan, S.; Hazar, Y.; Eratilla, V. Artificial Intelligence-Assisted Wrist Radiography Analysis in Orthodontics: Classification of Maturation Stage. Appl. Sci. 2025, 15, 11681. https://doi.org/10.3390/app152111681