Deep Learning Application of Fruit Planting Classification Based on Multi-Source Remote Sensing Images

Abstract

1. Introduction

2. Materials and Methods

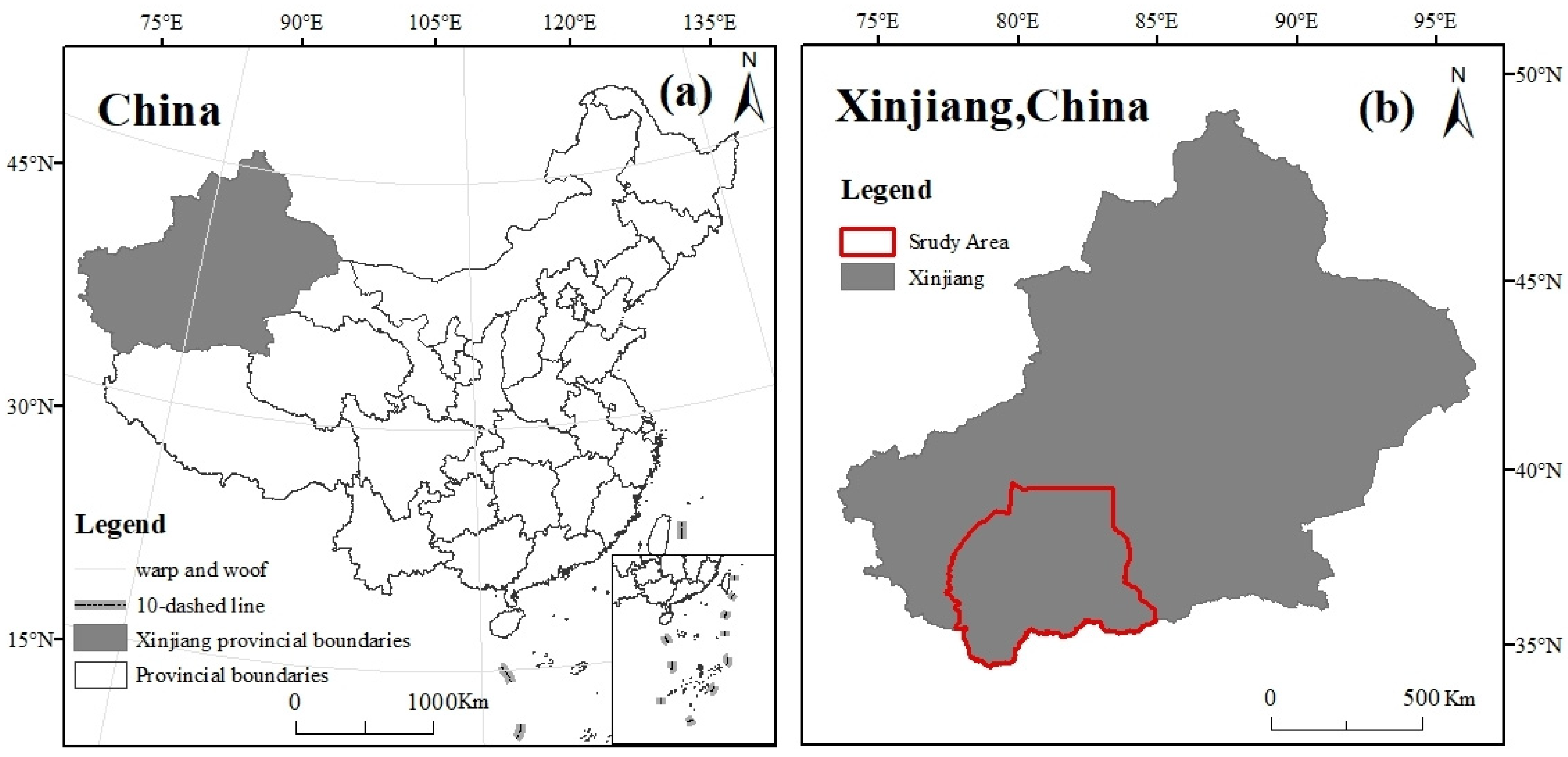

2.1. Study Area

2.2. Satellite Data and Preprocessing

2.2.1. Sentinel-1 Data

2.2.2. Sentinel-2 Data

2.2.3. SRTM Data

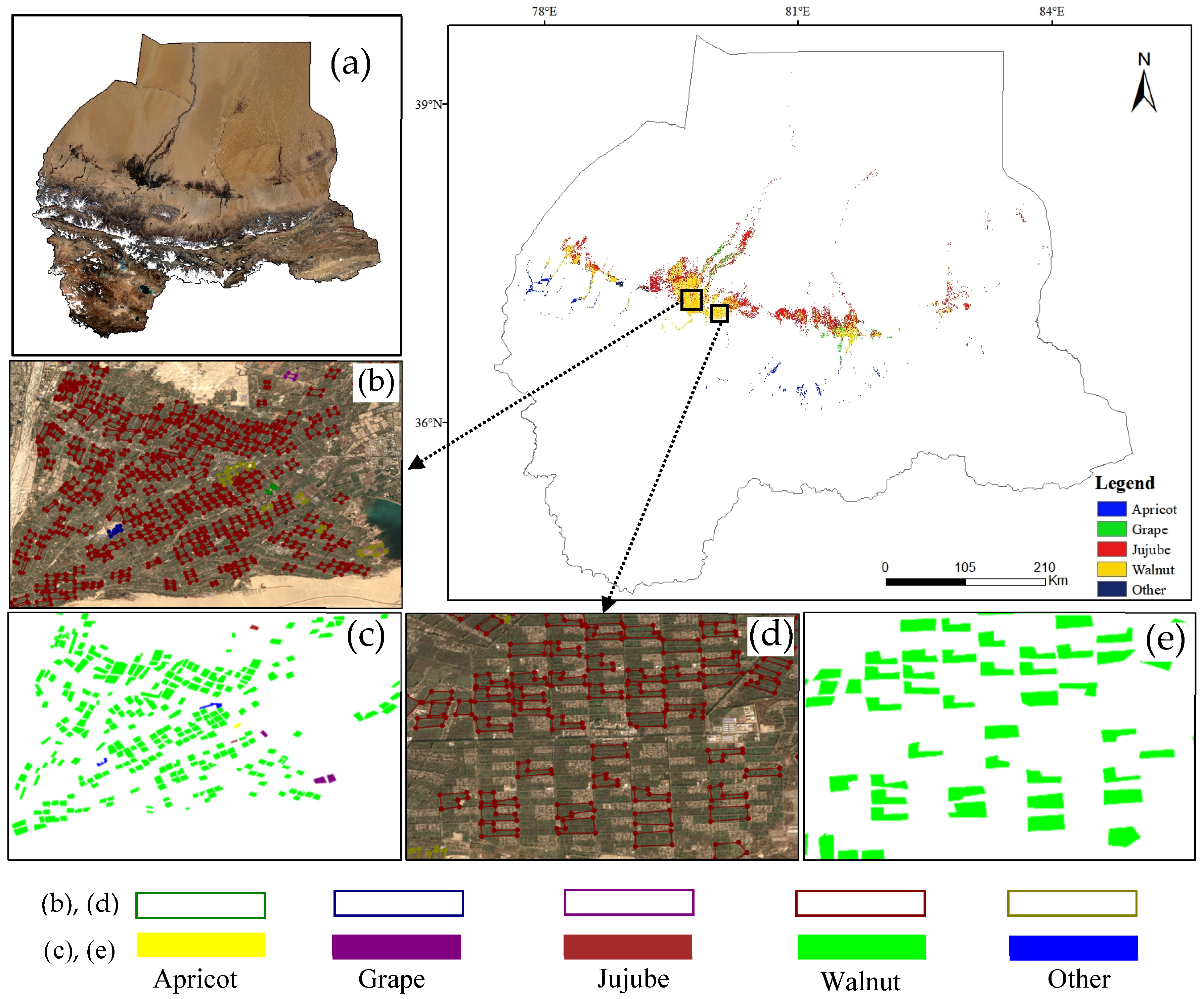

2.3. Sample Data

2.4. Methods

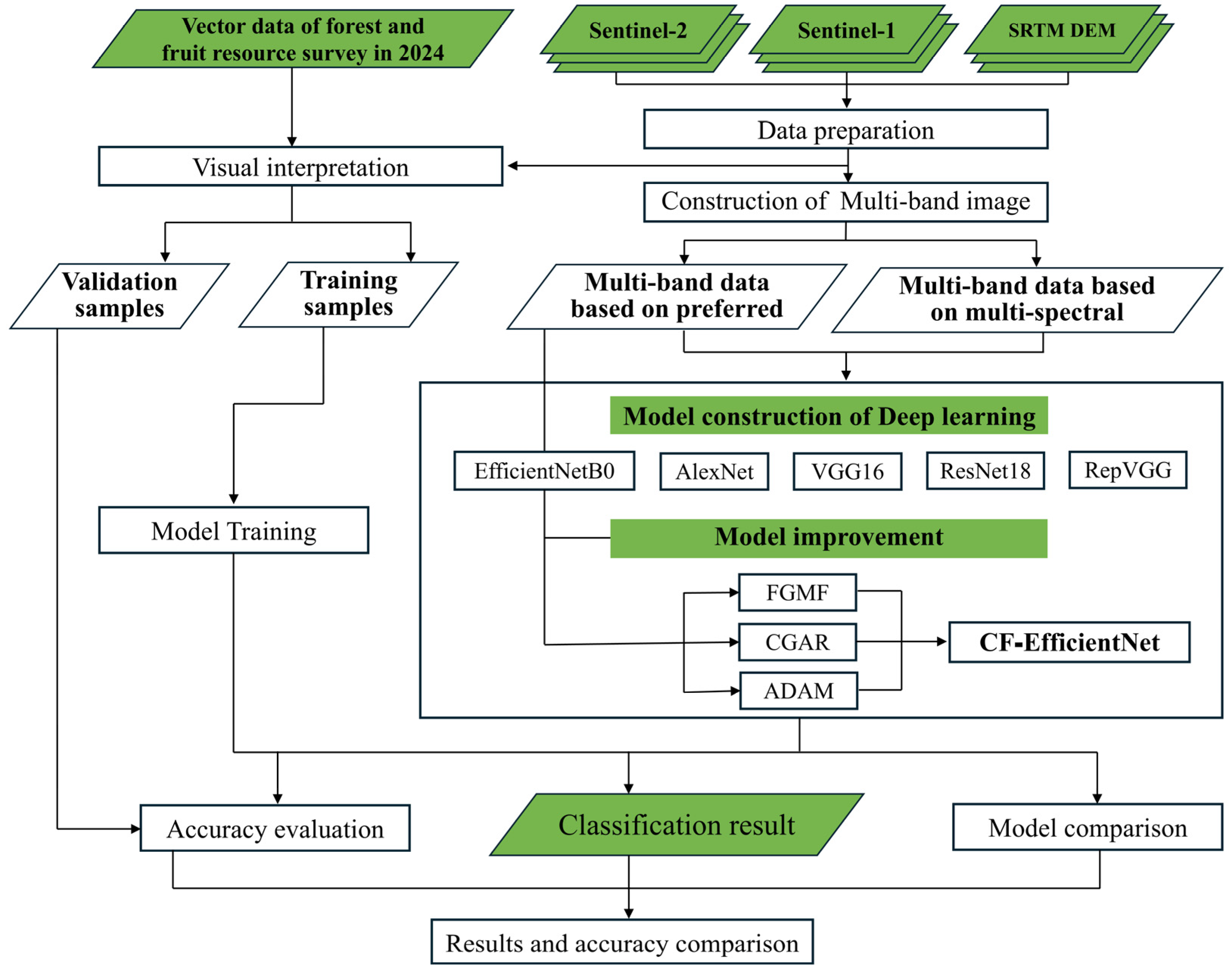

2.4.1. Methodological Overview

2.4.2. Feature Selection

2.4.3. Deep Learning Architectures

2.4.4. Deep Learning Training Strategy

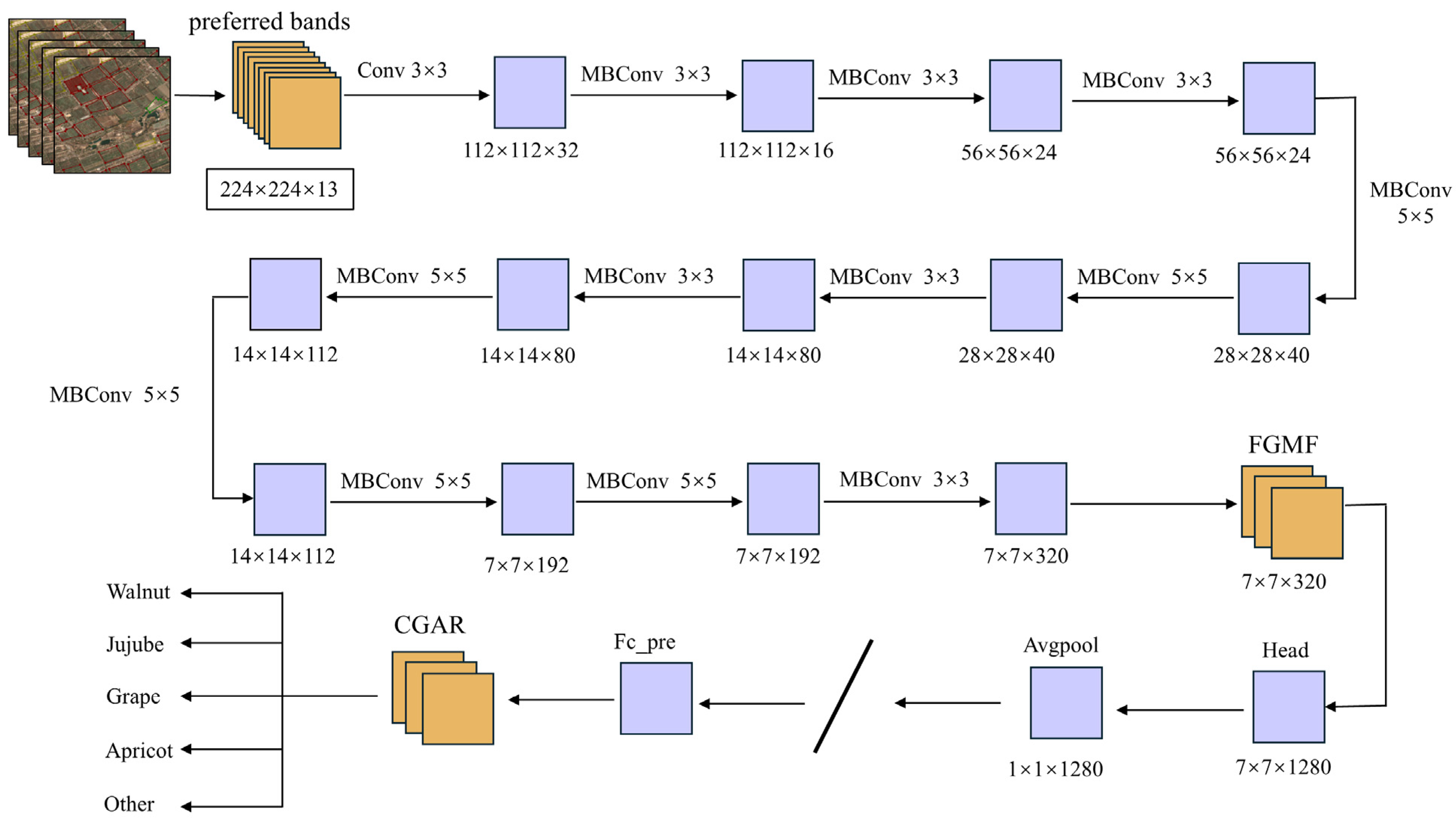

2.4.5. CF-EfficientNet Model

2.4.6. Experiment Design

3. Results

3.1. Preferred Bands

3.2. Classification Based on Multi-Spectral Bands and Preferred Bands

3.3. Structural Optimization and Classification Performance Evaluation of CF-EfficientNet

3.3.1. Ablation Study Analysis

3.3.2. Confusion Matrix Analysis

4. Discussion

4.1. Temporal Spectral and Vegetation Index Analysis

4.2. Advantages and Potential of Feature Selection

4.3. CF-EfficientNet Module Design and Performance Advantages

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Feature Type | Features | Number of Features |
|---|---|---|
| Spectral feature | B2-B8, B8A, B11-B12 | 10 |
| Radar feature | VV, VH | 2 |
| Vegetation index | NDVI, EVI, RVI, DVI, GCVI, REP, LSWI, SAVI, CVI | 9 |
| Terrain feature | Elevation, Slope, Aspect, Hillshade | 4 |
| Texture feature | asm, corr, ent, idm, savg, sent, shade, svar | 8 |
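
The feature inventory in the table above can be written down as a small lookup structure. The following is a minimal sketch, not the authors' code: the group keys, the `channel_index` helper, and the channel ordering are all hypothetical choices made for illustration, and the range "B2-B8" is expanded to the seven bands B2 through B8 so that the spectral group matches the count of 10 given in the table.

```python
# Feature groups copied from the feature table. The dictionary keys and the
# ordering of channels are illustrative assumptions, not taken from the paper.
FEATURE_GROUPS = {
    "spectral": ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"],
    "radar": ["VV", "VH"],
    "vegetation_index": ["NDVI", "EVI", "RVI", "DVI", "GCVI",
                         "REP", "LSWI", "SAVI", "CVI"],
    "terrain": ["Elevation", "Slope", "Aspect", "Hillshade"],
    "texture": ["asm", "corr", "ent", "idm", "savg", "sent", "shade", "svar"],
}


def channel_index(groups):
    """Flatten the groups into a name -> channel position mapping (hypothetical helper)."""
    names = [name for group in groups.values() for name in group]
    if len(names) != len(set(names)):
        raise ValueError("feature names must be unique across groups")
    return {name: position for position, name in enumerate(names)}


CHANNELS = channel_index(FEATURE_GROUPS)
group_sizes = {group: len(names) for group, names in FEATURE_GROUPS.items()}
total_features = len(CHANNELS)  # sum of the "Number of Features" column
```

Summing the last column of the table gives 10 + 2 + 9 + 4 + 8 = 33 candidate features, which is what `total_features` evaluates to.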

| Band Name | Spectral Band | Central Wavelength (nm) |
|---|---|---|
| Blue | B2 | 490 |
| Green | B3 | 560 |
| Red | B4 | 665 |
| Red-Edge | B5 | 705 |
| Red-Edge | B6 | 740 |
| Red-Edge | B7 | 783 |
| NIR | B8 | 842 |
| NIR | B8A | 865 |
| SWIR | B11 | 1610 |
| SWIR | B12 | 2190 |

| Vegetation Index | Abbreviation | Sentinel-2 Expression |
|---|---|---|
| Normalized Difference Vegetation Index | NDVI | (B8 − B4)/(B8 + B4) |
| Enhanced Vegetation Index | EVI | 2.5 × (B8 − B4)/(B8 + 6 × B4 − 7.5 × B2 + 1) |
| Ratio Vegetation Index | RVI | B8/B4 |
| Difference Vegetation Index | DVI | B8 − B4 |
| Green Chlorophyll Vegetation Index | GCVI | (B8/B3) − 1 |
| Red-Edge Position | REP | 700 + 40 × (((B4 + B7)/2) − B5)/(B6 − B5) |
| Land Surface Water Index | LSWI | (B8 − B11)/(B8 + B11) |
| Soil-Adjusted Vegetation Index | SAVI | (B8 − B4) × (1 + 0.5)/(B8 + B4 + 0.5) |
| Chlorophyll Vegetation Index | CVI | (B8/B5) × (B8/B3) |
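
The expressions in the table above that depend only on B2, B3, B4, B8, and B11 translate directly into code. The sketch below is illustrative rather than the authors' implementation: the function name `vegetation_indices` and the `soil_factor` parameter are hypothetical, and the inputs are assumed to be surface reflectance scaled to 0-1, since the constant 1 in EVI and the 0.5 in SAVI presume that scale. REP and CVI are left out only to keep the signature short.

```python
def vegetation_indices(b2, b3, b4, b8, b11, soil_factor=0.5):
    """Compute seven of the tabulated indices from Sentinel-2 reflectance.

    Only arithmetic operators are used, so the arguments may be plain floats
    or NumPy arrays of equal shape. No guard against zero denominators is
    included; masking invalid pixels is left to the caller.
    """
    return {
        "NDVI": (b8 - b4) / (b8 + b4),
        "EVI": 2.5 * (b8 - b4) / (b8 + 6 * b4 - 7.5 * b2 + 1),
        "RVI": b8 / b4,
        "DVI": b8 - b4,
        "GCVI": b8 / b3 - 1,
        "LSWI": (b8 - b11) / (b8 + b11),
        # soil_factor is the SAVI soil adjustment term, 0.5 in the table.
        "SAVI": (b8 - b4) * (1 + soil_factor) / (b8 + b4 + soil_factor),
    }


# Hypothetical reflectance values for a single vegetated pixel.
example = vegetation_indices(b2=0.04, b3=0.08, b4=0.05, b8=0.45, b11=0.20)
```

For this made-up pixel, NDVI is (0.45 − 0.05)/(0.45 + 0.05) = 0.8 and SAVI is 0.40 × 1.5/1.0 = 0.6, which shows how the soil adjustment term pulls the value down relative to NDVI.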

| Experiment | Preferred Bands | Multi-Spectral Bands |
|---|---|---|
| Features | Elevation, RE2, savg, Blue, RE3, VV, SWIR1, REP, NIR, idm, GCVI, Green, RE4 | B2, B3, B4, B5, B6, B7, B8, B8A, B11, B12 |
| Models | AlexNet, VGG16, ResNet18, RepVGG, EfficientNetB0 | AlexNet, VGG16, ResNet18, RepVGG, EfficientNetB0 |
| Model adjustment | CF-EfficientNet | None |

| No. | Base | Adam | FGMF | CGAR | OA (%) | Kappa |
|---|---|---|---|---|---|---|
| a | √ | | | | 87.1 | 0.677 |
| b | √ | √ | | | 88.2 | 0.688 |
| c | √ | √ | √ | | 89.5 | 0.744 |
| d | √ | √ | | √ | 91.8 | 0.782 |
| e | √ | √ | √ | √ | 92.6 | 0.830 |
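
The OA and Kappa values in the ablation table are both derived from a confusion matrix. The source does not spell out the formulas, so the sketch below assumes the standard definitions: overall accuracy as the fraction of correctly classified samples, and Cohen's kappa as the agreement beyond what the row and column totals would produce by chance. The function name and the example matrix are hypothetical and are not results from the paper.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i][j] is the number of samples of true class i that were
    predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(n_classes)) / total

    # Chance agreement from the marginal totals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(n_classes))
                  for j in range(n_classes)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2

    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical two-class matrix: rows are true classes, columns predictions.
oa, kappa = overall_accuracy_and_kappa([[45, 5], [10, 40]])
```

In this made-up example 85 of 100 samples lie on the diagonal, chance agreement is (50 × 55 + 50 × 45)/100² = 0.5, and kappa is (0.85 − 0.5)/(1 − 0.5) = 0.7. Because kappa discounts chance agreement, it is lower than OA whenever chance agreement is nonzero and classification is imperfect.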


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Miao, J.; Gao, J.; Wang, L.; Luo, L.; Pu, Z. Deep Learning Application of Fruit Planting Classification Based on Multi-Source Remote Sensing Images. Appl. Sci. 2025, 15, 10995. https://doi.org/10.3390/app152010995
