Abstract

Bearings are ubiquitous machinery parts. Monitoring and diagnosing their state is essential for reliable functioning. Machine learning techniques are now established tools for anomaly detection. We focus on a less common, although very natural, setup: the data available for training come only from normal behavior, since faults are varied and cannot all be simulated. This setup belongs to semi-supervised learning, and the purpose is to obtain a method that is able to distinguish between normal and faulty data. We focus on the Case Western Reserve University (CWRU) dataset, since it is relevant for bearing behavior. We investigate several methods, among which one based on Dictionary Learning (DL) and another using graph total variation stand out; the former has been less used for anomaly detection, and the latter is a new algorithm. We find that, together with Local Outlier Factor (LOF), these algorithms are able to identify anomalies nearly perfectly, in two scenarios: on the raw time-domain data and also on features extracted from them.

1. Introduction

Continuous machine health monitoring is essential for the rapid identification of faults and has been aided, in recent years, by anomaly detection techniques. It is also the case for rotating machinery, where bearing faults constitute a frequent and important cause of malfunctioning []. Vibrational signals, collected from accelerometers mounted on the machine, are often used for the task, since data acquisition does not interfere with machine functioning.

Goals. The purpose of our work is twofold. In terms of methodology, we argue that the most appropriate anomaly detection approach for bearing fault identification is a semi-supervised solution. It is unreasonable to assume, in most real-life applications involving operating machinery, that a recording of faulty functioning would be available and labeled beforehand, let alone with the same fault characteristics as the ones needing to be identified during machine monitoring. Normal operating data, on the other hand, are accessible and labeled as such. Process monitoring is expected to produce normal data as well as occasional faulty data, as defects occur. In the semi-supervised approach we argue for, training is performed on normal data only, and testing involves a mix of normal and faulty data, with the aim of distinguishing defects. Such an approach is called one-class anomaly detection, since labeled data belong to a single class, the normal one.

Our study shows that, despite the high number of existing anomaly detection algorithms for bearing fault identification, few proposals follow this setup. Moreover, we point out that the variety of machine learning setups present in the literature hinders benchmarking and a fair comparison of the results.

As far as the methods are concerned, the aim of this study is to verify whether bearing faults can be identified using simple models with low computational cost. Although some authors [] argue that nonlinear aspects of faulty bearing functioning are a burden for signal processing methods in identifying defects, recent results suggest that near-perfect detection is achievable with lightweight methods. We propose such a method and compare it to several others, both established and lesser known.

A substantial part of existing work, regardless of the type of methods used, relies on extracting features from the vibrational data. Since feature extraction adds numerical complexity, the present paper also considers the need for data processing and its influence on fault identification performance. While the effect varies with the method, our study shows that, for some of the best performing methods, working with raw data may in fact be better.

Setup. Case Western Reserve University (CWRU) [] is a dataset extensively used for testing machine learning techniques in the detection of bearing faults. Most recent methods, as discussed in Section 2, assume the availability of training data for all types of faults and attempt to identify those types. In such a scenario, there is no place for new types of faults. As previously stated, here, we assume the basic setup, where only normal data are available. Since, in most cases, the immediate repair solution is to replace the faulty bearing with a new one, such a setup is more realistic: the quick detection of a fault is important, not necessarily the type of fault, which can be determined later by a post-mortem analysis, after replacement.

Problem. The above setup defines a semi-supervised anomaly detection problem. We describe it here in its general form. We assume that a set of normal signals is available. A number of the normal signals are utilized for training, as described later in Section 4. The remaining ones, together with faulty signals, are used for assessing the ability of a machine learning method to identify the faults. We denote as the number of such signals.

Contribution. We provide a review of recent works on bearing fault identification using machine learning. We do not aim for an extensive review (several such works are referenced throughout the paper), rather we focus on exposing differences in methodology, as well as some relevant implementation details that put the results in context.

The central contribution of the paper consists in the proposal of a semi-supervised anomaly identification method, One-Class anomaly detection with regularized graph Total Variation (OC-TVreg). Minimizing the total variation of a graph whose nodes are the signals and edges are built based on a similarity distance was used when labels from two classes (normal/faulty) are available. We propose an optimization problem where it is enough to know a set of normal signals.

We tested the method, alongside five other solutions, among which dictionary learning (DL) is less used in anomaly detection, in two experimental conditions on the CWRU dataset. In the first, we assessed the influence of the motor load on the detection performance. Training was performed on the normal data of each available motor load, and testing was also performed separately for each load. The second experiment involved training on a mix of normal data from all motor loads.

Since we are also interested in determining the effect of data processing, in both the above experiments we performed two kinds of tests: on the raw time-series data and by applying a feature extraction technique.

Contents. We review existing solutions on bearing fault identification in semi-supervised, unsupervised, and supervised setups in Section 2. Section 3 presents the algorithms on which we perform the experiments. First, we briefly describe four commonly used anomaly detection methods and proceed with the less common dictionary learning approach. The section ends with the presentation of our proposed method, OC-TVreg.

In Section 4, we describe the CWRU bearing fault dataset, as well as all data treatment steps that we apply in the experiments.

The results are presented in Section 5, which starts with a description of the evaluation metrics and practical aspects concerning the parameters and heuristics of the tested methods. The performance on the two experimental setups (with training on either individual or all motor loads) is then presented, and the section ends with an analysis of execution times. Anticipating the results, we mention here that, in many test cases, the results provided by OC-TVreg, DL, and also LOF ensure perfect classification.

Concluding remarks follow in Section 6.

2. State of the Art

The CWRU dataset has attracted a lot of interest due to its realistic character, the diversity of measurement conditions, and the multitude of methods that can be applied. It has become a de facto benchmark for bearing fault analysis, surpassing other datasets like Paderborn University Bearing Dataset [], Xi’an Jiaotong University Bearing Dataset [], and others; see, for example, [] and its bibliography.

In this section, we will give an overview of machine learning methods that have been used for analyzing the CWRU dataset. Such an endeavor is inherently partial. We will look especially at semi-supervised methods, since they are the most related to our approach. Then, we will present mostly recent results with unsupervised or supervised methods.

More bibliography can be found in review papers dedicated to CWRU: [] (focusing on deep learning methods) and [] (general machine learning methods).

2.1. Semi-Supervised Learning

In semi-supervised learning for anomaly detection problems, it is always assumed that knowledge of normal data is available. However, there are two main approaches regarding the faulty data. One either assumes that faulty data are unavailable for training (the case of interest to us) or that only few faulty data are available. In the latter case, there may be several types of faults, and so the classification may be no longer binary. A review of semi-supervised methods for industrial fault detection, including many applied to CWRU data, can be found in [].

Of special interest to us are the works in which training is made only on normal data; all other data are not labeled. Despite the natural setup of the problem, there are only few papers in this category.

The method from [] starts by computing 19 time and frequency domain features, further reduced to 6 by LDA; classification is performed with distance-preserving self-organizing maps; besides the normal data, it is assumed that the number and nature of classes are known. The resulting accuracy ranges from to .

Although labeled as unsupervised, the domain adaptation method from [] uses healthy samples to generate synthetic faults, a goal being also to balance the classes. The CWRU set is split into 4 classes; the classification accuracy is less than . A similar domain adaptation setup was proposed in [], but using adversarial training. The results were at about the same level, although with somewhat different premises. Unlike these works, we do not make any assumption about the anomalies.

In most of the papers on semi-supervised learning, training is made on data from all classes, although with some limitations, most commonly a (very) small number of labeled faulty signals.

Most methods from around a decade ago compute diverse features and then use standard or adapted classification methods. For example, in [], 42 time, frequency, and wavelet features are extracted, then reduced to 3 by PCA; SVM is used for classification on a 10-class problem; the accuracy is about with less than 10% labeled data, but not much larger with all data. In [], time and wavelet features are the input of a manifold regularization method, followed by clustering, on a 4-class problem. Inference from one load to all loads is applied. In [], Empirical Mode Decomposition and wavelet features are employed, and the method is weighted kernel clustering based on gravitational search; two 4-class problems are considered (one for fault position, the other for fault size), and the accuracy ranges from to 1 (only for some particular subproblems). Other methods use label propagation [], decision making [], ensemble learning [,], and tensors []. In [], an imbalanced 4-class problem is considered, with many normal signals and few anomalies in the training set, but with a balanced test set; over-sampling of faulty signals is used to increase the performance of standard classifiers like LS-SVM, thus obtaining better results (close to 1) than more complicated methods that directly use the imbalanced data. Over-sampling is also used in [], with better results.

Dictionary learning is present among the semi-supervised classification methods for CWRU. In [], label consistent DL with label adaptation is used. On a 10-class problem, an accuracy of is reached. A somewhat related solution is given in [], where non-negative sparse coding and low-rank factorization are used to build a graph of relations between samples for semi-supervised learning. On a 10-class problem, with 10% labeled samples (same for each class), the obtained accuracy is –.

Graph methods are worth mentioning, especially since we propose one. In [], an objective function representing error with respect to known labels, regularized with graph total variation, is minimized. The graph is built by connecting K-nearest neighbors, with ; time and frequency features or raw signals are used as input. On a 4-class problem (fault position), the obtained accuracy is 1 even for 1% labeled signals; however, the signals are long (at least 2048 samples) compared to ours (only 100). A related work is [], where the graph is now directed, and so the Laplacian regularization is different. The length of signals is smaller (1200), and the accuracy is less than 1 even when 90% of the labels are known.

The most recent approaches use various forms of neural networks. Several types of architecture were employed, such as auto-encoders [,,,], graph NN [,] (the latter combined with adaptive feature fusion and giving better results than the former), dual graph NN [], ARMA graph convolutional NN [], convolutional NN [,,], Generative Adversarial Network [,,,,], adversarial network [], meta-learning network [], bidirectional gated recurrent unit (BiGRU) network [], long short-term memory (LSTM) network [], twin deep neural networks for data with noisy labels [], and Dual-Attention Fusion Transformer []. Some of the methods can achieve perfect accuracy if enough labeled signals are available. Thus, the border between semi-supervised and supervised learning is blurred.

2.2. Unsupervised Learning

In unsupervised learning, no information on the data is available. Training is made on all data, without labels. Ideally, after learning, clusters of data are revealed, and they are associated with classes.

In [], time–frequency features are extracted from the spectrogram with an autoencoder, and clustering is performed with the K-means algorithm. Perfect accuracy is reported for the normal/faulty classification. The paper contains relatively few details, and it is not clear which faults are considered.

Many other approaches are based on neural networks, but the number of classes is larger, from 3 to 16. Sometimes, only abnormal signals are considered. Diverse tools are used for clustering. The classification accuracy is often quite large, although not equal to 1.

In [] a sparsifying NN works in the spectral domain and produces features clustered with affinity propagation. Generative adversarial networks are used in [,], in both cases on a 16-class problem. Training is made on one load (speed) and testing on all loads. For some of the fault classes, the detection is perfect. Other notable works are [,], both employing auto-encoders, and [].

2.3. Supervised Learning

In supervised learning, training is made on labeled data from all classes, normal and faulty. The number of faulty classes may range from 1 to 16 for the CWRU dataset, depending on the considered fault location, rotation speed, and fault size. The purpose is to identify the class of new signals. We give more details for papers where transfer learning is used in one form or another, and learning does not use samples from all classes.

In terms of methodology, some of the works provide interesting conclusions. In [], where inference from one faulty dataset to another was examined, it was argued that prediction from one load (speed) to another, as performed in many papers, is much easier than prediction from one fault size to another. Of course, in our semi-supervised setup, we do not use faulty signals at all; hence, we are in the most difficult position. Similarly, in [], data from a type of fault are used only in one of the training, validation, and testing datasets. In particular, binary classification (normal/faulty) is studied. For CWRU, the only method that gives an accuracy equal to 1 is Naive Bayes. Although the classification is perfect, faulty signals are used for training.

Among the approaches partially similar to ours, we can cite [], where the problem is multiclass but with inference from some types of data (normal, inner race fault, outer race fault, all used for training) to another type (ball fault, not used for training). The recognition rate of unknown samples is .

In [], where a neural network is used, only three classes are considered: normal, light damage, heavy damage. Training uses samples from all classes. Fault detection is made for each load separately, and the resulting accuracy nears 1.

Some of the methods from the previous decade do not use NNs but are interesting for employing features. Wavelets and SVM are used in [] and time–frequency features in []. Neither of these works was applied to the CWRU dataset, but a comparison was made in [], the advantage going to the NN approaches.

Currently, NN solutions are prevalent. Different architectures were used, like autoencoders [,], convolutional NN (CNN) [], belief network [], a combination of CNN and mixing layers [], CNN having the input computed with multi-domain time-frequency transform [], with Gram angle field transformation [], with filtering using an optimized Maximum Correlated Kurtosis Deconvolution [], with global and local feature extraction [], or with augmented data via a diffusion model [], fuzzy CNN [], deep transfer reinforcement learning [], self attention and CNN [], transformer-based meta learning [], an attention-based generative adversarial network (AttGAN), and dynamic perceptual convolutional neural network (DPCNN) []. A comparison of several deep learning methods was made in [], where MixCNN [] obtains the best results for the CWRU dataset, even when the training set is small.

In multiclass approaches (where the type of anomaly is also inferred), training and testing datasets are taken from all data.

Although not directly applied to CWRU data, there are some works where DL was used for rotating machinery diagnosis in a supervised manner. In [], a dictionary was learned for each class, then classification was made by comparing representation errors, with examples for wind turbines. In [], convolutional DL was used for qualitative analysis (feature extraction) after training separately for normal data and faulty data; the atoms of the dictionary were used for the analysis of vibration signals.

3. Methods

We use several methods to tackle the semi-supervised anomaly detection problem based on the CWRU data. Some of them are known and need only minimal or no tuning of hyper-parameters. We will describe them briefly in Section 3.1; they are: dictionary learning (DL) [], Local Outlier Factor (LOF) [], Isolation Forest (IF) [], Robust Random Cut Forest (RRCF) [], and One-class Support Vector Machine (OC-SVM) []. LOF, IF, RRCF, and OC-SVM are established methods and are part of state-of-the-art libraries like PyOD []. Although DL was used in anomaly detection, it is not as popular as the above methods; a goal of this paper is to prove its good performance on such problems. We also propose a new method, using the total variation of a neighborhood graph associated with the data; the method is the first of this kind using only training on normal data and is described in Section 3.3.

All methods assume only the knowledge of normal data, and their purpose is to classify new data into two categories: normal and faulty. They compute anomaly scores for each new signal; comparison with the scores of normal signals allows the classification. We denote the score associated with signal . In most anomaly detection libraries, a higher score is more likely associated with an anomaly. In the description below, we give the natural value of the score for each method; if high scores are associated with normal signals, reversing the sign will give a score respecting the convention.
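The score convention described above can be illustrated with a short sketch. The threshold rule used here (a high quantile of the scores obtained on normal signals) is an assumption made only for illustration, as are the function names `to_anomaly_convention` and `classify`; the text only states that classification follows from a comparison with the scores of normal signals.

```python
import numpy as np

def to_anomaly_convention(scores, high_means_normal):
    """Return scores in which larger values indicate anomalies."""
    scores = np.asarray(scores, dtype=float)
    return -scores if high_means_normal else scores

def classify(test_scores, normal_scores, quantile=0.95):
    """Flag test signals whose score exceeds a quantile of the normal scores.

    The quantile threshold is a hypothetical choice, not taken from the text.
    """
    threshold = np.quantile(normal_scores, quantile)
    return np.asarray(test_scores) > threshold  # True = flagged as faulty

# Toy example: a method whose natural score is high for normal signals.
natural_normal = np.array([0.90, 0.95, 0.92, 0.97, 0.91])
natural_test = np.array([0.93, 0.10, 0.94, 0.05])

normal_scores = to_anomaly_convention(natural_normal, high_means_normal=True)
test_scores = to_anomaly_convention(natural_test, high_means_normal=True)
flags = classify(test_scores, normal_scores)
print(flags)
```

In this toy case, the second and fourth test signals are flagged, since their reversed scores lie well above those of the normal signals.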

3.1. A Selection of Known Methods

3.1.1. Local Outlier Factor

LOF is a density-based method for identifying anomalies. The general assumption with density-based algorithms is that normal signals are similar to one another such that they cluster in dense regions of the signal space, whereas anomalies lie in lower-density ones. Since the actual structure of the data may not allow for an explicit assessment of the normal density, LOF considers a relative measure and computes the anomaly score of a signal (named the outlier factor) by comparing its local density to the local density of its neighbors. Locality refers to the K-nearest neighborhood of a signal. Data points whose local density is similar to that of their neighbors, and whose score is therefore close to 1, are considered normal.

3.1.2. Isolation Forest

IForest isolates signals by recursively choosing dimensions and splitting values at random. Intuitively, anomalies require fewer such decisions in order to be separated from the rest of the signals than normal ones do. The method constructs a forest of trees, where the interior nodes correspond to the decisions (or cuts), and the leaves correspond to data points. The trees are obtained by repeated subsampling of the dataset. The anomaly score of a given signal is computed by passing the signal through the forest and returning its normalized mean height: a low height corresponds to anomalies and is translated into a high score.

3.1.3. Robust Random Cut Forest

RRCF views anomalies as responsible for an increase in model complexity when added to the dataset. The method constructs a forest of trees, where each tree corresponds to a random partitioning of the data. The anomaly score of a signal (termed the collusive displacement) is computed by considering the number of structural changes produced by its deletion from the trees: anomalies have a higher number of sibling displacements. This property is achieved by choosing the dimension to cut not uniformly at random, as with IForest, but with probability proportional to its spread.

3.1.4. One-Class Support Vector Machine

OC-SVM also uses only normal signals in the training step. The method projects the signals to a feature space using a kernel function and computes a decision boundary that separates them from the origin, allowing for a maximum margin. In the testing phase, signals are passed to the binary decision function and labeled according to their position with respect to the boundary.
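The one-class usage of three of these detectors can be sketched with scikit-learn, chosen here for brevity (the experiments may rely on a different library, such as PyOD). The synthetic data and all hyper-parameter values below are assumptions for illustration only. RRCF is omitted because scikit-learn does not provide it.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Synthetic stand-ins for the data: normal signals cluster near the origin,
# faulty ones are shifted away from it.
X_train = rng.normal(0.0, 1.0, size=(200, 10))       # normal signals only
X_test = np.vstack([
    rng.normal(0.0, 1.0, size=(50, 10)),             # normal test signals
    rng.normal(6.0, 1.0, size=(50, 10)),             # faulty test signals
])

detectors = {
    "LOF": LocalOutlierFactor(n_neighbors=20, novelty=True),
    "IForest": IsolationForest(n_estimators=100, random_state=0),
    "OC-SVM": OneClassSVM(kernel="rbf", gamma="scale", nu=0.05),
}

anomaly_scores = {}
for name, detector in detectors.items():
    detector.fit(X_train)  # training uses normal signals only
    # decision_function is larger for normal points, so the sign is
    # reversed to obtain "higher score = more anomalous".
    anomaly_scores[name] = -detector.decision_function(X_test)
    print(name,
          anomaly_scores[name][:50].mean(),   # normal test signals
          anomaly_scores[name][50:].mean())   # faulty test signals
```

Setting `novelty=True` is what allows `LocalOutlierFactor` to score signals that were not part of the training set.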

3.2. Dictionary Learning

Dictionary learning for sparse coding (DL) [] is a signal processing technique used to learn sparse approximations of signals.

Given a signal $y \in \mathbb{R}^m$ and a basis (called the dictionary), $D \in \mathbb{R}^{m \times n}$, the aim of sparse coding is to compute a representation of $y$ that only has $s$ nonzero entries. Here, $n$ stands for the number of atoms (columns) in the dictionary and $s$ for the (imposed) sparsity level. We call $x \in \mathbb{R}^n$ the sparse representation; the problem amounts to minimizing $\|y - Dx\|_2$, with $x$ having at most $s$ nonzeros.

In the usual machine learning setup (and other signal processing tasks), the dictionary is not given but needs to be learned from the dataset $Y$, whose columns are the training signals. The DL problem thus consists in minimizing $\|Y - DX\|_F$, jointly on $D$ and $X$, with the constraint that $X$ has at most $s$ nonzeros on each column. In other words, a dictionary is learned to represent all the signals in the dataset, but for each of them, only a few atoms are used for the approximation.

Note that in order to avoid multiplication ambiguity, the atoms of the dictionary are normalized. In the DL setting, the dictionary is said to be overcomplete, that is $n > m$, usually by at least a factor of 2.

Like many signal approximation methods, DL can be used to identify anomalies in semi-supervised or unsupervised scenarios by using the representation error of a signal as anomaly score. Since anomalies are usually few and significantly different from the normal signals, the model is assumed to be better able to identify normal signals, hence producing a smaller error in their case.
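A minimal sketch of this use of DL is given below. It is not the implementation used in the experiments: the coding step uses Orthogonal Matching Pursuit, and the dictionary update is a plain least-squares step (the MOD update), both chosen here for brevity. The toy data, the sizes, and all function names are assumptions for illustration.

```python
import numpy as np

def omp(D, y, s):
    """Greedy s-sparse code of y over dictionary D (Orthogonal Matching Pursuit)."""
    residual, support, coef = y.copy(), [], np.zeros(0)
    for _ in range(s):
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:  # no further progress possible
            break
        support.append(k)
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    x = np.zeros(D.shape[1])
    x[support] = coef
    return x

def learn_dictionary(Y, n, s, n_iter=10, seed=0):
    """Alternate OMP coding and a least-squares (MOD) dictionary update."""
    rng = np.random.default_rng(seed)
    D = Y[:, rng.choice(Y.shape[1], size=n, replace=False)].copy()
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_iter):
        X = np.column_stack([omp(D, y, s) for y in Y.T])
        D = Y @ np.linalg.pinv(X)
        norms = np.linalg.norm(D, axis=0, keepdims=True)
        D /= np.where(norms > 1e-12, norms, 1.0)  # keep atoms normalized
    return D

def representation_error(D, Y, s):
    """Anomaly score: norm of the s-sparse approximation error of each column."""
    return np.array([np.linalg.norm(y - D @ omp(D, y, s)) for y in Y.T])

# Toy data (columns are signals): normal signals combine 2 of 5 sinusoids,
# anomalies are white noise; all signals are scaled to unit norm.
rng = np.random.default_rng(0)
m, n, s = 32, 64, 3
t = np.arange(m)
basis = np.stack([np.sin(2 * np.pi * f * t / m) for f in range(1, 6)], axis=1)

def normal_signals(count):
    coef = np.zeros((5, count))
    for j in range(count):
        coef[rng.choice(5, size=2, replace=False), j] = rng.normal(size=2)
    Y = basis @ coef + 0.01 * rng.normal(size=(m, count))
    return Y / np.linalg.norm(Y, axis=0)

Y_train, Y_normal = normal_signals(200), normal_signals(50)
Y_anomalous = rng.normal(size=(m, 50))
Y_anomalous /= np.linalg.norm(Y_anomalous, axis=0)

D = learn_dictionary(Y_train, n, s)  # trained on normal signals only
err_normal = representation_error(D, Y_normal, s)
err_anomalous = representation_error(D, Y_anomalous, s)
print(err_normal.mean(), err_anomalous.mean())
```

Since the dictionary is adapted to the normal signals, the representation error is expected to be larger on average for the anomalous ones.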

Indeed, DL was used for anomaly detection, including fault detection tasks, such as structural diagnosis in solid media [], vessel cracks in glass melting processes [] or faults in other manufacturing processes []. These works, however, take a different approach than ours; the latter is a supervised solution, while the former ones build two different dictionaries, for representing normal and abnormal data features, respectively. While essentially they both rely on representation errors to compute anomaly scores or label estimates, the exact score computations are particular to each approach.

3.3. One-Class Anomaly Detection with Regularized Graph Total Variation

Several anomaly detection methods, notably [], build an undirected graph based on distances between signals. Let be the graph of distances. A node represents a signal ; the weight associated with an edge can be constructed as follows, based on the K-nearest neighbors principle, where K is a given positive integer. If j is among the nearest K neighbors of i in terms of a distance , or i is among the K nearest neighbors of j, then ; otherwise, . We have used the cosine distance

and the standard Euclidean distance, but any distance can be used, in principle.

Denoting as the matrix of weights and the diagonal degree matrix, with

where is the set of nodes that are immediate neighbors of i, the Laplacian matrix associated with the graph is

The Laplacian is symmetric and positive semidefinite.

Given a graph signal , which will be associated with an anomaly score in the sense that , the total variation (TV) of the signal on the graph is

We would like the graph signal to take the value 1 for normal signals and 0 for anomalies; this choice is not random, as we will see immediately. Recall that we are in the setup where only some of the normal signals are labeled, while other normal signals and the anomalies are not labeled. In [] and other papers, the ideal score values are 1 and , and the setup is different: some normal signals are labeled, but there are also some anomalies.

Minimizing the total variation of the graph makes sense in the context of computing an anomaly score. The TV is minimum when the signal is constant. Since distances should be small between normal signals and large between anomalies and normal signals, a small TV value reflects few changes between nodes, ideally those between some anomalies and some normal nodes; there should be no changes between normal nodes or between anomalies.
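This behavior can be checked on a toy graph. The sketch below assumes that the TV is the quadratic form of the Laplacian, that is, the sum over edges of the weight times the squared difference between the values at the two end nodes; the graph and its weights are hypothetical.

```python
import numpy as np

# Toy graph with 5 nodes: nodes 0-2 form one cluster, nodes 3-4 another,
# and a single weak edge (2, 3) links the two clusters.
W = np.zeros((5, 5))
for i, j, w in [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.0), (3, 4, 1.0), (2, 3, 0.1)]:
    W[i, j] = W[j, i] = w

L = np.diag(W.sum(axis=1)) - W  # graph Laplacian: degree matrix minus weights

def total_variation(x):
    # Quadratic form x^T L x = sum over edges of w_ij * (x_i - x_j)^2
    return float(x @ L @ x)

constant = np.ones(5)
by_cluster = np.array([1.0, 1.0, 1.0, 0.0, 0.0])
scattered = np.array([1.0, 0.0, 1.0, 0.0, 1.0])

print(total_variation(constant))    # no change across any edge
print(total_variation(by_cluster))  # only the weak edge (2, 3) contributes
print(total_variation(scattered))   # several strong edges contribute
```

A signal that is constant on each cluster has a TV that is small but nonzero, which is the situation sought between normal nodes and anomalies.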

Since minimizing only the TV leads to a constant signal, we propose to minimize

subject to the constraint that the graph signal equals 1 on the nodes whose signals are known to be normal. The regularization term, weighted by a trade-off factor, tends to draw the signal towards zero, whereas the TV term tends to minimize changes between neighbors in the solution. The overall objective thus combines these tendencies and distinguishes between normal signals and anomalies: the former will have a larger value than the latter, although not necessarily 1.

Denoting

where and (without loss of generality, we order the signals such that the training ones come first), and taking into account that (where and is a vector of ones), the solution of (5) is

Since is a positive definite matrix, the solution (7) can be computed via a Cholesky factorization or using adequate iterative solvers (e.g., conjugate gradient). Thus, the scores of the unlabeled signals are given by (7).
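For reference, the derivation can be written compactly as follows. The notation is assumed for illustration and may differ from that of the numbered equations: $x$ is the graph signal, $L$ the Laplacian, $\lambda > 0$ the trade-off factor, $\mathbf{1}$ the vector of ones fixed on the labeled normal signals, and $x_2$ the part of $x$ associated with the unlabeled signals. The quadratic forms of the TV and regularization terms are also assumptions, chosen because they lead to a linear system with a positive definite matrix, as stated above.

```latex
\begin{aligned}
&\min_{x} \; x^T L x + \lambda \|x\|_2^2
  \quad \text{s.t. } x_i = 1 \text{ for each labeled normal signal } i, \\
&L + \lambda I =
  \begin{bmatrix} A & B \\ B^T & C \end{bmatrix}, \qquad
  x = \begin{bmatrix} \mathbf{1} \\ x_2 \end{bmatrix}, \\
&\nabla_{x_2}\!\left( \mathbf{1}^T A \mathbf{1} + 2\, x_2^T B^T \mathbf{1} + x_2^T C x_2 \right)
  = 2 B^T \mathbf{1} + 2 C x_2 = 0
  \;\Longrightarrow\; x_2 = -C^{-1} B^T \mathbf{1}.
\end{aligned}
```

Under these assumptions, $C$ is a principal submatrix of the positive definite matrix $L + \lambda I$ and is therefore positive definite itself, so the linear system has a unique solution.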

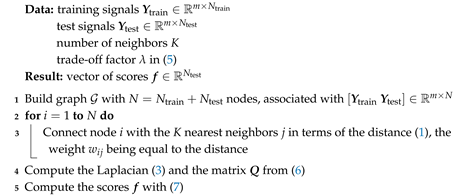

The resulting algorithm, called OC-TVreg (One-Class anomaly detection with Total Variation regularization), is summarized as Algorithm 1.

Algorithm 1: OC-TVreg
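The steps of the method can be sketched in a few lines of NumPy. This is an illustrative reading of the description above, not the reference implementation: unit edge weights, a quadratic regularization term, the default values of `K` and `lam`, the function names, and the synthetic data are all assumptions.

```python
import numpy as np

def knn_weights(Y, K):
    """Symmetric K-nearest-neighbor adjacency from cosine distances.

    Rows of Y are signals. Unit weights are an assumption of this sketch.
    """
    Yn = Y / np.linalg.norm(Y, axis=1, keepdims=True)
    dist = 1.0 - Yn @ Yn.T            # cosine distance
    np.fill_diagonal(dist, np.inf)    # a node is not its own neighbor
    nearest = np.argsort(dist, axis=1)[:, :K]
    W = np.zeros_like(dist)
    rows = np.repeat(np.arange(len(Y)), K)
    W[rows, nearest.ravel()] = 1.0
    return np.maximum(W, W.T)         # edge if i is a neighbor of j or j of i

def oc_tvreg(Y_train, Y_test, K=5, lam=0.1):
    """Graph signal on the test nodes: near 1 = normal-like, near 0 = anomalous."""
    n_train = len(Y_train)
    W = knn_weights(np.vstack([Y_train, Y_test]), K)
    L = np.diag(W.sum(axis=1)) - W    # graph Laplacian
    M = L + lam * np.eye(len(L))
    B = M[:n_train, n_train:]
    C = M[n_train:, n_train:]         # positive definite for lam > 0
    rhs = -B.T @ np.ones(n_train)     # training nodes are fixed to 1
    G = np.linalg.cholesky(C)         # C = G G^T
    return np.linalg.solve(G.T, np.linalg.solve(G, rhs))

# Synthetic data: normal and faulty signals follow two orthogonal shapes.
rng = np.random.default_rng(0)
u, v = np.eye(20)[0], np.eye(20)[1]
normal = u + 0.1 * rng.normal(size=(90, 20))
faulty = v + 0.1 * rng.normal(size=(30, 20))
Y_train, Y_test = normal[:60], np.vstack([normal[60:], faulty])

scores = oc_tvreg(Y_train, Y_test)
print(scores[:30].round(2))   # normal test signals
print(scores[30:].round(2))   # faulty test signals
```

Following the score convention of the other methods, the sign of the returned values would be reversed so that higher scores indicate anomalies.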

Remark 1.

In the ideal case, where the graph has two connected components, one for normal signals and the other for anomalies, it follows that the columns of corresponding to anomalies are all zero and that has a block diagonal structure, with separate blocks for anomalies and normal test signals; it results from (7) that the part of corresponding to anomalies is zero, and so OC-TVreg provides a nearly perfect score distribution: nearly 1 for normal signals and exactly 0 for anomalies. Similarly, all the anomaly nodes that form a connected component get a zero score through (7). Of course, this is less likely to happen in practice, but it suggests the soundness of the proposed heuristic.

Remark 2.

The complexity of OC-TVreg is , since (7) is in fact a system of linear equations. The most time-consuming operation is the Cholesky factorization of the matrix . So, the result can be obtained in reasonable time when is at most a few thousand (which is the case in our experiments).

If the number of test signals is large, the test set can be split in several subsets, and the algorithm can be applied on each subset separately, with the same set of labeled normal signals.

Remark 3.

The training and testing populations must be large enough, especially the testing one. The latter recommendation comes from a simple remark. If the testing set consists of only one signal, let us denote and . Then, the submatrix is in fact a single element; due to (2), it has the expression . Additionally, is a vector representing the last row of without the diagonal; so, . Hence, the solution (7) is

This value, representing the score of the test signal, is smaller than 1, but it would be hard to decide whether it corresponds to a normal signal or to an anomaly. However, when many test signals are involved, the values of the solution are more likely to be smaller for anomalies than for normal signals.
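The single-signal case can be made explicit under assumed notation (introduced here for illustration): $d$ is the degree of the test node, whose neighbors are then all training signals, $\lambda > 0$ is the trade-off factor of a quadratic regularization term, and $C$ and $B$ are the blocks of $L + \lambda I$ corresponding to the test signal and to its coupling with the training signals.

```latex
C = d + \lambda, \qquad
B^T \mathbf{1} = -\sum_{j} w_{ij} = -d, \qquad
x = -C^{-1} B^T \mathbf{1} = \frac{d}{d + \lambda} < 1 .
```

With the hypothetical values $d = 5$ and $\lambda = 0.1$, the score is $5/5.1 \approx 0.98$. Only the degree of the node enters the expression, which is why a single test signal carries little information about its class.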

Example 1.

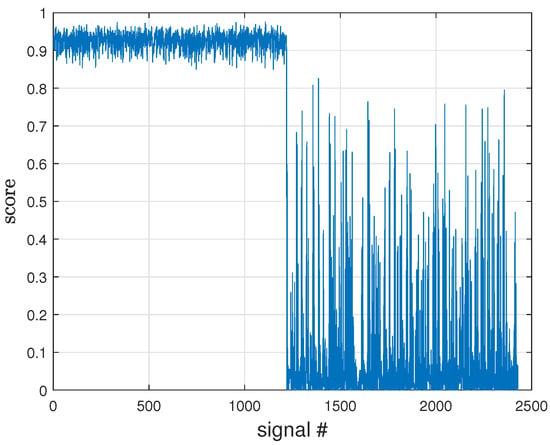

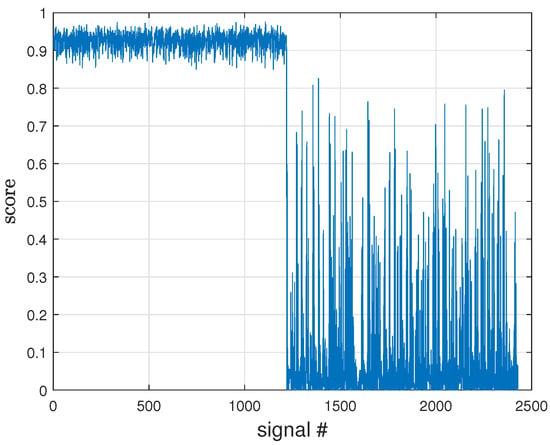

An example of a result, for one of the CWRU experiments to be described later, is shown in Figure 1. The sizes of the datasets are and ; half of the test signals are normal, and half are anomalies. The parameters are , . The solution (7) has large values not far from 1 for the normal signals (first half of the figure) and smaller, although only some near zero, for the anomalies (second half). In this example, the classification is perfect: all the anomalies have lower scores than all normal signals.

Figure 1. An example of solution of (5).

4. The Data

In the CWRU test stand [], two rolling bearings are placed at the drive and fan ends of the motor shaft. The motor operates at four different loads: 0, 1, 2, and 3 horsepower (HP); the rotation speed is different for each load. Vibration data are collected with two accelerometers placed on the motor housing at the drive and fan ends. The experiments consist of both normal and faulty operations. Single-point faults are planted independently on the two bearings at five locations (inner race; ball; and outer race at positions orthogonal, centered, and opposite to the load zone). Three different fault depths (0.007, 0.014, and 0.021 inches) are tested for each location.

Note that although there would be 60 faulty scenarios, data are available only for 45 cases, as many outer race configurations were not tested.

Data are collected at 12 kHz (for both normal and faulty operation setups) and 48 kHz (for the drive-end faults). For the following experiments, we only used fan-end accelerometer data.

As shown in Section 2, the CWRU dataset was the test subject for many methods. Some of them give hints on which setups are easier and which are more difficult. The discussion in [] is significant for the analysis of the CWRU data and the characterization of the difficulty; for example, it is argued that ball faults are the most difficult to diagnose.

4.1. Segmentation

We first segment the time series into non-overlapping windows of 100 samples. We call the resulting windows, which are 8.33 milliseconds in length, signals or segments. For , this corresponds to roughly a quarter of a motor rotation. The choice is motivated by the fact, ignored in many papers, that if two overlapping signals are placed one in the training set and the other in the testing set, the classification task may be easier, and an unfair advantage would be given to the evaluated method.
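The segmentation step can be sketched as follows. The window length of 100 samples follows from the 8.33 ms duration at the 12 kHz sampling rate; the function name `segment` and the choice to drop an incomplete trailing window are assumptions of this sketch.

```python
import numpy as np

def segment(series, window=100):
    """Split a 1-D series into non-overlapping windows.

    A trailing remainder shorter than one window is dropped.
    """
    series = np.asarray(series)
    n_windows = len(series) // window
    return series[: n_windows * window].reshape(n_windows, window)

fs = 12_000                      # sampling rate in Hz
recording = np.arange(1250)      # stand-in for a vibration recording
segments = segment(recording, window=100)
duration_ms = 1000 * segments.shape[1] / fs

print(segments.shape)            # one row per segment
print(round(duration_ms, 2))     # window duration in milliseconds
```

Because the windows do not overlap, no sample of a training segment can reappear in a test segment.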

4.2. Training Methodology

We performed two types of experiments, both in a semi-supervised approach.

In the first experiment, we trained on normal data with a single motor load and tested on both normal and faulty data for each of the four motor loads separately. This allowed us to examine whether the fault detection was influenced by the motor load used in the training set.

In the second experiment, we verified whether training on multiple motor loads increased the performance. The training set is composed of an equal number of normal signals from each motor load. The testing set, as previously, consists of normal and faulty segments for each motor load.

All experiments were performed independently on each fault location and size.

4.3. Train–Test Split

In all the following experiments, regardless of training methodology, the testing set was roughly twice the size of the training set. Training was always performed on normal segments, corresponding to a time series of slightly over 10 s. The testing set contained the same number of normal signals and all available faulty segments for the respective fault location, size, and motor load. There were slight differences in the sizes of faulty datasets, ranging from to for inner roll faults, from to for ball faults, and from to for outer roll faults.

4.4. Feature Extraction

We performed two types of experiments: with raw time-series data and by extracting time and frequency features.

As stated earlier, in the case of time series data, the signals are non-overlapping windows of dimension . Following the notation in Section 3.2, the signal dimension is .

For extracting the features, we followed the indications in [] and used 14 statistical time domain measures. Out of the 13 frequency domain measures in that work, however, we excluded the frequency features and ; both had very large values, and preliminary tests showed they negatively influenced the fault detection performance. In this case, the signal dimension is .

4.5. Normalization

With both time-series and processed data, we performed z-score normalization on the training set and used the scaling and centering parameters to normalize the test set.
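A minimal sketch of this normalization is given below, under stated assumptions: the statistics are computed per coordinate (per time sample or per feature) across the training signals, and the sample standard deviation is used. The text does not specify either choice, and the function names are hypothetical.

```python
import statistics


def fit_zscore(train):
    """Per-coordinate centering and scaling parameters, from training signals only."""
    columns = list(zip(*train))
    means = [statistics.fmean(c) for c in columns]
    # Assumption: sample standard deviation; constant coordinates are left unscaled.
    stds = [statistics.stdev(c) or 1.0 for c in columns]
    return means, stds


def apply_zscore(data, means, stds):
    """Normalize any set of signals with previously fitted parameters."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in data]


train = [[1.0, 10.0], [3.0, 10.0]]
test = [[2.0, 11.0]]

means, stds = fit_zscore(train)              # fitted on the training set
train_n = apply_zscore(train, means, stds)
test_n = apply_zscore(test, means, stds)     # test set reuses the same parameters
```

The point of the design is that no statistic of the test set, which contains faulty signals, leaks into the preprocessing.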

5. Results

In this section, we present and discuss the results for both the proposed and existing methods detailed in Section 3.

We report the Area Under the Receiver Operating Characteristic Curve (ROC AUC) as a performance indicator, calculated as follows. The scores computed by a method are sorted in decreasing order; for a given threshold, the signals with the largest scores are considered anomalies, and the remaining signals are considered normal. The ROC curve has all possible false positive rates on the horizontal axis and the corresponding true positive rates on the vertical axis, both computed by sweeping the threshold over the sorted signals. The ROC AUC is the area below this curve, with the ideal value being 1. All results correspond to the mean over five independent tests.
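The computation described above can be sketched in a few lines of Python. This is an illustrative implementation, not the code used for the reported experiments; the function name and the convention that label 1 marks an anomaly are assumptions.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve; labels are 1 for anomalies and 0 for normal signals."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    # Sort signal indices by decreasing anomaly score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    prev_tpr = prev_fpr = 0.0
    area = 0.0
    i = 0
    while i < len(order):
        # Signals sharing the same score form a single point of the ROC curve.
        j = i
        while j < len(order) and scores[order[j]] == scores[order[i]]:
            if labels[order[j]] == 1:
                tp += 1
            else:
                fp += 1
            j += 1
        tpr, fpr = tp / n_pos, fp / n_neg
        area += (fpr - prev_fpr) * (tpr + prev_tpr) / 2.0  # trapezoid rule
        prev_tpr, prev_fpr = tpr, fpr
        i = j
    return area


perfect = roc_auc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])   # anomalies ranked first
mixed = roc_auc([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])     # one normal signal outranks an anomaly
```

A value of 1 is obtained exactly when every faulty signal receives a larger score than every normal signal.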

In discussing the results below, we start with the experiments where training is performed on a single motor load (Section 5.3) and follow with the ones where all motor loads are included in the training set (Section 5.4). In both cases, we first treat the raw time-series data and continue with time and frequency features.

5.1. Parameters

The DL model has three heuristics: sparsity of the representation, ; dictionary overcompleteness, o; and number of training iterations (alternate minimization steps), . We report results on the following parameter values: , , and .

For OC-TVreg, we tested and . We will report the results for and , which are generally best or nearly best. A discussion on the robustness of this choice is presented in Section 5.5. We have used both the cosine (1) and Euclidean distances. For raw signals, the cosine distance gives much better results. For features, the Euclidean distance gives practically perfect results, and the cosine distance is only slightly behind. We will report the best results in each case.

When testing LOF, we used the default parameters: Euclidean distance metric and neighbors. We also tried the cosine distance, but the results were worse, and we do not report them.

For IF, we used the parameter values suggested by the authors of the method, 100 trees and 256 signals per tree. While empirical tests [] suggest that the optimal number of samples in the tree depends on the properties of the dataset and in some cases might be larger than the default, we took into consideration the relatively small size of our dataset.

The same values were used for RRCF as well, where we set the collusive displacement method to 'maximal'.

Finally, for OC-SVM, we used the default parameters, with the exception of 'KernelScale', which was set to 'auto'.

We used the MATLAB R2023a implementations of LOF, IF, RRCF, and OC-SVM.

The programs implementing OC-TVreg and the tests reported below can be found at https://asydil.upb.ro/software/ (accessed on 4 October 2025).

5.2. Summary of Results

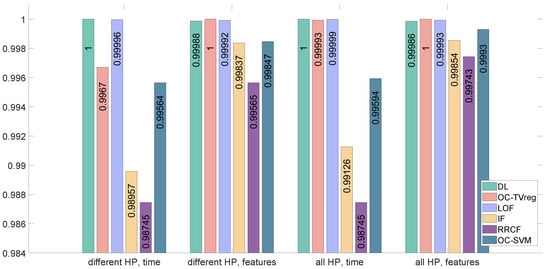

Figure 2 shows a summary of the results obtained in the two motor load training setups and with both types of data: raw time series and features. We discuss next the overall rank of the methods, in terms of the average ROC AUC over the entire experiment batch. The first position is contested by DL and OC-TVreg, with DL having a perfect identification score in experiments performed on time-series data (in both training scenarios) and OC-TVreg in those on time and frequency features, again with a mean ROC AUC of 1. LOF is always second, and in the last three positions, we have OC-SVM, IF, and RRCF, regardless of the experiment or data type. Features clearly give better results than raw signals for OC-TVreg, IF, RRCF, and OC-SVM; for DL and LOF, however, the results are slightly worse.

Figure 2.

Summary of results: average ROC AUC on all combinations of training load (different/all) and signal type (raw/features).

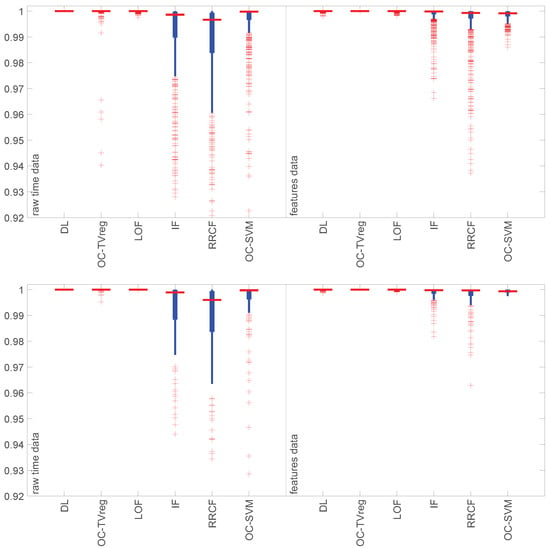

Figure 3 shows the distribution of the ROC AUC values in each test case. The horizontal red line marks the median, the thick blue line corresponds to values between the 25th and 75th percentiles, the thin blue line covers extreme values not considered outliers, and the cross sign corresponds to outliers. For the methods obtaining perfect fault identification in all test cases, only the median is shown. Possible outliers are present for the methods with a mean ROC AUC close to 1.

Figure 3.

Summary of results: distribution of ROC AUC on all combinations of training load (different/all), signal type (raw/features), and independent test runs. Top: training on different motor loads. Bottom: training on all motor loads.

The figure thus shows that DL, OC-TVreg, and LOF are not only the best performing but also the most robust with respect to the experimental conditions: fault size, location, and motor load, and also to the train/test splits. Working with features instead of raw signals reduces the variance for the rest of the methods; among these, OC-SVM has the tightest distribution of results.

We continue by giving detailed results for each setup.

5.3. Training on Individual Motor Loads

Table 1 shows a summary of the results for all methods, when training is performed on raw data. Recall that each algorithm is trained separately on normal data for each motor load and tested on normal and faulty data for the different motor loads, independently. For brevity, we only present the average over the four test motor loads for each individual training set.

Table 1.

Training on different motor loads, with raw time series data. Mean (over test loads and five independent runs) ROC AUC values.

The results, showing the ROC AUC values, are rounded to four decimals; we reserve the integer notation 1 for perfect identification, while 1.0 corresponds to a result that is only rounded to 1. A value of 1 therefore indicates the situation where all anomalies are identified in all four motor load test cases.
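The notation convention can be made concrete with a small sketch. The helper `format_auc` is hypothetical and only illustrates how a perfect score is distinguished from one that merely rounds to 1.

```python
def format_auc(value, decimals=4):
    """Format a mean ROC AUC following the convention used in the tables."""
    if value == 1:
        return "1"                 # perfect identification in every averaged case
    rounded = round(value, decimals)
    if rounded == 1:
        return "1.0"               # below 1, but equal to 1 after rounding
    return f"{rounded:.{decimals}f}"


examples = [format_auc(1.0), format_auc(0.99996), format_auc(0.99991)]
```

With this convention, the three example values are displayed as 1, 1.0, and 0.9999, respectively.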

While the table only presents the results of the best performing DL parameter configuration for each test case, the experiments show that the DL algorithm is robust with respect to its heuristics. In most cases, several if not all the parameter configurations yield an ROC AUC of 1. Moreover, in of the tested scenarios, the , , configuration perfectly identifies the anomalies; for the remaining, an increase to is sufficient to achieve this result. Since the DL heuristics impact speed, either directly (number of iterations) or indirectly (sparsity and dictionary overcompleteness determine model size), robustness also translates into the possibility of using the fastest model for identifying faults.

Note that, although OC-TVreg ranks third overall, it produces the second highest number of complete fault identifications across the individual test scenarios. Its lower overall mean ROC AUC is due to the result for the 0.007 inch ball fault when training with no motor load. Without this case, the overall average of OC-TVreg is 0.99991, very close to that of the frontrunners, DL and LOF. Recall that the ball faults in CWRU are known [] to be harder to identify than faults at the other two locations.

Out of the four established anomaly detection methods we tested (LOF, IF, RRCF, OC-SVM), LOF is by far the best performing.

We now move to the experiments performed on time and frequency features data. Compared with results obtained on time-series data, DL and LOF perform worse, while all other methods see an improvement in the mean ROC AUC when working with data features.

Table 2 shows the detailed results for the experiments performed with time and frequency features. Aside from DL and, to a lesser extent, LOF, all methods benefit from using features. OC-TVreg produces near perfect detection in all cases; the improvement is particularly large for ball faults. Recall that the Euclidean distance was used for OC-TVreg. With the cosine distance, OC-TVreg has only a slightly lower performance, with the overall ROC AUC average being , which is still better than IF, RRCF, and OC-SVM. RRCF and OC-SVM do not achieve perfect detection in any of the fault configurations; however, the overall mean ROC AUC is better than in the raw time signals experiment, for both methods.

Table 2.

Training on different motor loads, with features data. Mean (over test loads and five independent runs) ROC AUC values.

Features seem to introduce more sensitivity to the training conditions, as shown by the results on and for the majority of methods.

5.4. Training on All Motor Loads

Training with raw data from all motor loads increases the performance of all methods (except for RRCF, which has the same average ROC AUC) and does not change the ranking.

Table 3 shows the detailed results for each test configuration. We provide a quick reminder as to why the structure of this table differs from the corresponding experiment on training with different motor loads, namely from Table 1. Indeed, data do not exist for all motor loads, fault locations, and size configurations; Table 3 reflects the dataset structure. In Table 1, however, the results are averaged on the training motor load, which means that if data from at least one fault configuration are available, a mean ROC AUC value is reported. Note also that the second column corresponds to Train HP and Test HP, respectively, in the two tables.

Table 3.

Training on all motor loads, raw time series data. Mean (over five independent runs) ROC AUC values.

Returning now to Table 3, the average OC-TVreg result for the ball fault of size 0.007 on all test motor loads is now 0.9992. This value was only achieved when training on in the experiment with training on different loads (see again Table 1), all other values being lower. This is a significant improvement on what appears to be the most difficult defect in this dataset. Similar improvements can be seen for other methods and other fault configurations, which suggests that training on all motor loads is, in general, beneficial.

Table 4 shows the detailed results for training on features data. The same general observations hold as made for Table 2, with respect to the overall performance of the methods, compared to the case of raw signals. The OC-TVreg results are again only slightly worse for the cosine distance, where the mean ROC AUC is 0.99992.

Table 4.

Training on all motor loads, features data. Mean (over five independent runs) ROC AUC values.

In this case as well, algorithms are more sensitive to test conditions than when trained on raw data, this time with being the most affected case for DL and LOF.

5.5. OC-TVreg: Robustness to Parameter Choice

Our proposed method, OC-TVreg, has two parameters: the number of neighbors K in the graph and the regularization parameter in (5). We present here an experiment, suggesting that OC-TVreg has a robust behavior with respect to the values of these parameters. Table 5 presents the average ROC AUC values given by OC-TVreg with Euclidean distance (like in Table 4) in the case where all motor loads were used for training on features. One can see that the optimal value of 1 is obtained for several combinations of parameters. In the other reported cases, the values are quite close to 1.

Table 5.

Average ROC AUC of OC-TVreg, with Euclidean distance and training on features from all motor loads, for various values of the parameters K and .

Similar behavior is visible in Table 6, where OC-TVreg is run with the cosine distance on time signals from all motor loads (like in Table 3). Note that the maximum value is obtained for , . Again, there are many parameter choices for which the obtained ROC AUC values are near to the best one.

Table 6.

Average ROC AUC of OC-TVreg, with cosine distance and training on time signals from all motor loads, for various values of the parameters K and .

As a general rule, the number of neighbors K should be in the low tens. The value is a popular default choice for methods based on distance to neighbors, including LOF, COF, and SOD from PyOD []. Somewhat lower values are also frequently used. The regularization factor should be small compared to the norm of the Laplacian matrix in (5). Empirically, we noticed that should be 100–1000 times smaller than (the 1-norm is chosen for ease of computation).
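The rule of thumb for the regularization factor can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the graph is taken to be an unweighted, symmetrized K-nearest-neighbor graph built with Euclidean distances, the Laplacian is the combinatorial one (degree matrix minus adjacency matrix), and all function names are hypothetical.

```python
import math


def knn_laplacian(points, k):
    """Combinatorial Laplacian of a symmetrized, unweighted k-NN graph (assumed form)."""
    n = len(points)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        dists = sorted((math.dist(points[i], points[j]), j) for j in range(n) if j != i)
        for _, j in dists[:k]:
            adj[i][j] = adj[j][i] = 1.0   # connect i and j in both directions
    lap = [[-adj[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        lap[i][i] = sum(adj[i])           # node degree on the diagonal
    return lap


def one_norm(matrix):
    """Matrix 1-norm: the maximum absolute column sum."""
    n_cols = len(matrix[0])
    return max(sum(abs(row[j]) for row in matrix) for j in range(n_cols))


def regularization_range(lap):
    """Candidate interval: 1000 to 100 times smaller than the Laplacian 1-norm."""
    norm = one_norm(lap)
    return norm / 1000.0, norm / 100.0


points = [[0.0], [1.0], [2.0], [10.0]]    # toy one-dimensional signals
lap = knn_laplacian(points, k=1)
low, high = regularization_range(lap)
```

The 1-norm only requires column sums, which is why it is cheap to evaluate even for large graphs.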

5.6. Execution Times

We now report the execution times for testing the methods on an Intel Core i9, 256 GB RAM machine. The results in Table 7 present the average execution times on 10 independent tests, in seconds. Since there is no significant difference in terms of data dimensions between the experiments (except, of course for raw vs. features cases), we only provide execution times for testing on inner roll faults of dimension and .

Table 7.

Execution times.

For DL, the training phase is significantly more computationally expensive than the test phase; however, in the semi-supervised approach proposed here, this is not a drawback. In practice, it is feasible and preferable that the training phase, where the dictionary is learned on a normally functioning bearing, be performed offline, prior to the actual operative monitoring. In fact, this also holds for the rest of the methods.

Moreover, the testing in DL amounts to computing the sparse representations for each individual signal in the dataset and the corresponding error . These computations are independent for each signal; hence, parallelization can be employed. In Table 7, we also provide DL times using the MATLAB Parallel Computing Toolbox, version 2024a.

IF and RRCF can also benefit from a similar treatment, and MATLAB implementations allow this option. For IF, using parallel computations decreases the execution times by for raw data and for features. Surprisingly however, with RRCF, there is a increase in execution times when working with raw data and a increase for features. Due to this apparent inconsistency, in Table 7 we only report results on the default non-parallel implementations.

As expected, DL is faster with features, as the dictionary size is dependent on the signal size. Likewise, the heavy reliance on distance computations in LOF makes the algorithm slower on raw time-series data. Similarly, the execution time of OC-SVM grows with the dimensionality of the signal, albeit for different reasons, linked to the larger complexity of the quadratic optimization problem that is solved. While OC-TVreg also initially computes distances between signals (1), all subsequent operations, including the most computationally expensive (7), depend only on the total number of training and testing signals and not their dimension. Since, in theory, IF should also not be affected by the signal size, we attribute the differences between execution times in the two experiments to data validation and other support operations in the MATLAB implementation. For RRCF, the difference between the two experiments is within the standard deviation of the individual tests.

6. Conclusions

We have tested the behavior of several semi-supervised anomaly detection methods on the CWRU bearing dataset. Only normal data are assumed to be labeled and are used for training (in the methods that have an explicit training phase), unlike in most other works with the CWRU data. The best results are obtained by three methods. One of them, OC-TVreg, is proposed in this paper and minimizes a regularized total variation of a neighborhood graph associated with the data. The second is based on Dictionary Learning, a versatile modeling tool, not often used in anomaly detection but quite natural in our semi-supervised setup. The third is a well-established anomaly detection method, namely LOF. Whether training with data from one load or from all loads, and whether using the raw vibration signals or derived features, the methods achieve a perfect ROC AUC for many of the fault types and loads and nearly perfect values in the remaining cases. One can thus argue that anomaly detection on the CWRU data, in the natural semi-supervised setup that we have studied, is not a difficult machine learning problem and also that the lightweight methods we employ are adequate.

Author Contributions

Conceptualization, A.B. and B.D.; methodology, A.B. and B.D.; software, A.B. and B.D.; validation, A.B. and B.D.; investigation, A.B. and B.D.; data curation, A.B.; writing—original draft preparation, A.B. and B.D.; writing—review and editing, A.B. and B.D.; supervision, B.D.; funding acquisition, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

Andra Băltoiu’s work was supported by a grant from the National Program for Research of the National Association of Technical Universities—GNAC ARUT 2023, grant number 207.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The experiments in this paper are performed on the CWRU dataset, publicly available at https://engineering.case.edu/bearingdatacenter (accessed on 15 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| CNN | Convolutional neural network |
| CWRU | Case Western Reserve University (dataset) |
| DL | Dictionary learning |
| IF | Isolation forest |
| LOF | Local outlier factor |
| NN | Neural network |
| OC-TVreg | One-class anomaly detection with total variation regularization |
| ROC AUC | Receiver operating characteristic area under curve |
| RRCF | Robust random cut forest |
| SVM | Support vector machine |
| TV | Total variation |

References

- Nandi, S.; Toliyat, H.A.; Li, X. Condition Monitoring and Fault Diagnosis of Electrical Motors; A Review. IEEE Trans. Energy Convers. 2005, 20, 719–729. [Google Scholar] [CrossRef]

- Wang, S.; Xiang, J.; Zhong, Y.; Zhou, Y. Convolutional neural network-based hidden Markov models for rolling element bearing fault identification. Knowl. Based Syst. 2018, 144, 65–76. [Google Scholar]

- Case Western Reserve University. Bearing Data Center. Available online: https://engineering.case.edu/bearingdatacenter (accessed on 15 August 2025).

- Available online: https://mb.uni-paderborn.de/kat/forschung/kat-datacenter/bearing-datacenter/data-sets-and-download (accessed on 15 August 2025).

- Wang, B.; Lei, Y.; Li, N.; Li, N. A hybrid prognostics approach for estimating remaining useful life of rolling element bearings. IEEE Trans. Reliab. 2018, 69, 401–412. [Google Scholar] [CrossRef]

- Lundström, A.; O’Nils, M. Factory-based vibration data for bearing-fault detection. Data 2023, 8, 115. [Google Scholar]

- Neupane, D.; Seok, J. Bearing fault detection and diagnosis using Case Western Reserve University dataset with deep learning approaches: A review. IEEE Access 2020, 8, 93155–93178. [Google Scholar] [CrossRef]

- Zhang, X.; Zhao, B.; Lin, Y. Machine learning based bearing fault diagnosis using the Case Western Reserve University data: A review. IEEE Access 2021, 9, 155598–155608. [Google Scholar] [CrossRef]

- Ramírez-Sanz, J.; Maestro-Prieto, J.; Arnaiz-González, Á.; Bustillo, A. Semi-supervised learning for industrial fault detection and diagnosis: A systemic review. ISA Trans. 2023, 143, 255–270. [Google Scholar] [CrossRef]

- Li, W.; Zhang, S.; He, G. Semisupervised distance-preserving self-organizing map for machine-defect detection and classification. IEEE Trans. Instrum. Meas. 2013, 62, 869–879. [Google Scholar] [CrossRef]

- Wang, Q.; Taal, C.; Fink, O. Integrating expert knowledge with domain adaptation for unsupervised fault diagnosis. IEEE Trans. Instrum. Meas. 2022, 71, 1–12. [Google Scholar]

- Zhu, Z.; Fang, X.; Zhu, C.; Luo, W.; Zhu, Y. Structured Prediction in Latent Subspace for Unsupervised Fault Diagnosis with Small and Imbalanced Data. IEEE Sens. J. 2024, 24, 25106–25115. [Google Scholar] [CrossRef]

- Zhao, X.; Li, M.; Xu, J.; Song, G. An effective procedure exploiting unlabeled data to build monitoring system. Expert Syst. Appl. 2011, 38, 10199–10204. [Google Scholar] [CrossRef]

- Yuan, J.; Liu, X. Semi-supervised learning and condition fusion for fault diagnosis. Mech. Syst. Signal Process. 2013, 38, 615–627. [Google Scholar] [CrossRef]

- Li, C.; Zhou, J. Semi-supervised weighted kernel clustering based on gravitational search for fault diagnosis. ISA Trans. 2014, 53, 1534–1543. [Google Scholar] [CrossRef]

- Chen, X.; Wang, Z.; Zhang, Z.; Jia, L.; Qin, Y. A semi-supervised approach to bearing fault diagnosis under variable conditions towards imbalanced unlabeled data. Sensors 2018, 18, 2097. [Google Scholar] [CrossRef]

- Razavi-Far, R.; Hallaji, E.; Farajzadeh-Zanjani, M.; Saif, M. A semi-supervised diagnostic framework based on the surface estimation of faulty distributions. IEEE Trans. Ind. Inform. 2018, 15, 1277–1286. [Google Scholar] [CrossRef]

- Jian, C.; Yang, K.; Ao, Y. Industrial fault diagnosis based on active learning and semi-supervised learning using small training set. Eng. Appl. Artif. Intell. 2021, 104, 104365. [Google Scholar] [CrossRef]

- Jian, C.; Ao, Y. Imbalanced fault diagnosis based on semi-supervised ensemble learning. J. Intell. Manuf. 2023, 34, 3143–3158. [Google Scholar]

- Hu, C.; Zhou, Z.; Wang, B.; Zheng, W.; He, S. Tensor transfer learning for intelligence fault diagnosis of bearing with semisupervised partial label learning. J. Sens. 2021, 2021, 6205890. [Google Scholar]

- Wei, J.; Huang, H.; Yao, L.; Hu, Y.; Fan, Q.; Huang, D. New imbalanced bearing fault diagnosis method based on Sample-characteristic Oversampling TechniquE (SCOTE) and multi-class LS-SVM. Appl. Soft Comput. 2021, 101, 107043. [Google Scholar]

- Jiang, W.; Zhang, Z.; Li, F.; Zhang, L.; Zhao, M.; Jin, X. Joint label consistent dictionary learning and adaptive label prediction for semisupervised machine fault classification. IEEE Trans. Ind. Inform. 2016, 12, 248–256. [Google Scholar]

- Zhao, M.; Li, B.; Qi, J.; Ding, Y. Semi-supervised classification for rolling fault diagnosis via robust sparse and low-rank model. In Proceedings of the 2017 IEEE 15th international conference on industrial informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 1062–1067. [Google Scholar]

- Gao, Y.; Yu, D. Fault diagnosis of rolling bearing based on Laplacian regularization. Appl. Soft Comput. 2021, 111, 107651. [Google Scholar] [CrossRef]

- Gao, Y.; Yu, D. Intelligent fault diagnosis for rolling bearings based on graph shift regularization with directed graphs. Adv. Eng. Inform. 2021, 47, 101253. [Google Scholar]

- Li, X.; Li, X.; Ma, H. Deep representation clustering-based fault diagnosis method with unsupervised data applied to rotating machinery. Mech. Syst. Signal Process. 2020, 143, 106825. [Google Scholar] [CrossRef]

- Zhang, S.; Ye, F.; Wang, B.; Habetler, T. Semi-supervised bearing fault diagnosis and classification using variational autoencoder-based deep generative models. IEEE Sens. J. 2021, 21, 6476–6486. [Google Scholar]

- Luo, S.; Huang, X.; Wang, Y.; Luo, R.; Zhou, Q. Transfer learning based on improved stacked autoencoder for bearing fault diagnosis. Knowl. Based Syst. 2022, 256, 109846. [Google Scholar] [CrossRef]

- Wu, X.; Zhang, Y.; Cheng, C.; Peng, Z. A hybrid classification autoencoder for semi-supervised fault diagnosis in rotating machinery. Mech. Syst. Signal Process. 2021, 149, 107327. [Google Scholar] [CrossRef]

- Gao, Y.; Chen, M.; Yu, D. Semi-supervised graph convolutional network and its application in intelligent fault diagnosis of rotating machinery. Measurement 2021, 186, 110084. [Google Scholar] [CrossRef]

- Xie, Z.; Chen, J.; Feng, Y.; He, S. Semi-supervised multi-scale attention-aware graph convolution network for intelligent fault diagnosis of machine under extremely-limited labeled samples. J. Manuf. Syst. 2022, 64, 561–577. [Google Scholar]

- Wang, H.; Wang, J.; Zhao, Y.; Liu, Q.; Liu, M.; Shen, W. Few-shot learning for fault diagnosis with a dual graph neural network. IEEE Trans. Ind. Inform. 2023, 19, 1559–1568. [Google Scholar]

- Kavianpour, M.; Ramezani, A.; Beheshti, M. A class alignment method based on graph convolution neural network for bearing fault diagnosis in presence of missing data and changing working conditions. Measurement 2022, 199, 111536. [Google Scholar] [CrossRef]

- Wang, X.; Liu, F.; Zhao, D. Cross-machine fault diagnosis with semi-supervised discriminative adversarial domain adaptation. Sensors 2020, 20, 3753. [Google Scholar] [CrossRef]

- Li, X.; Zhang, Z.; Gao, L.; Wen, L. A new semi-supervised fault diagnosis method via deep CORAL and transfer component analysis. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 6, 690–699. [Google Scholar] [CrossRef]

- Wu, Y.; Zhao, R.; Jin, W.; He, T.; Ma, S.; Shi, M. Intelligent fault diagnosis of rolling bearings using a semi-supervised convolutional neural network. Appl. Intell. 2021, 51, 2144–2160. [Google Scholar]

- Pan, T.; Chen, J.; Xie, J.; Chang, Y.; Zhou, Z. Intelligent fault identification for industrial automation system via multi-scale convolutional generative adversarial network with partially labeled samples. ISA Trans. 2020, 101, 379–389. [Google Scholar] [CrossRef] [PubMed]

- Xu, J.; Shi, Y.; Shi, L.; Ren, Z.; Lu, Y. Intelligent deep adversarial network fault diagnosis method using semisupervised learning. Math. Probl. Eng. 2020, 2020, 8503247. [Google Scholar] [CrossRef]

- Liang, P.; Deng, C.; Wu, J.; Li, G.; Yang, Z.; Wang, Y. Intelligent fault diagnosis via semisupervised generative adversarial nets and wavelet transform. IEEE Trans. Instrum. Meas. 2020, 69, 4659–4671. [Google Scholar] [CrossRef]

- Xu, M.; Wang, Y. An imbalanced fault diagnosis method for rolling bearing based on semi-supervised conditional generative adversarial network with spectral normalization. IEEE Access 2021, 9, 27736–27747. [Google Scholar] [CrossRef]

- Fu, W.; Jiang, X.; Tan, C.; Li, B.; Chen, B. Rolling bearing fault diagnosis in limited data scenarios using feature enhanced generative adversarial networks. IEEE Sens. J. 2022, 22, 8749–8759. [Google Scholar]

- Zong, X.; Yang, R.; Wang, H.; Du, M.; You, P.; Wang, S.; Su, H. Semi-supervised transfer learning method for bearing fault diagnosis with imbalanced data. Machines 2022, 10, 515. [Google Scholar] [CrossRef]

- Feng, Y.; Chen, J.; Zhang, T.; He, S.; Xu, E.; Zhou, Z. Semi-supervised meta-learning networks with squeeze-and-excitation attention for few-shot fault diagnosis. ISA Trans. 2022, 120, 383–401. [Google Scholar]

- Zhao, K.; Jiang, H.; Wu, Z.; Lu, T. A novel transfer learning fault diagnosis method based on manifold embedded distribution alignment with a little labeled data. J. Intell. Manuf. 2022, 33, 151–165. [Google Scholar]

- Tang, Z.; Bo, L.; Liu, X.; Wei, D. A semi-supervised transferable LSTM with feature evaluation for fault diagnosis of rotating machinery. Appl. Intell. 2022, 52, 1703–1717. [Google Scholar]

- Cheng, C.; Liu, X.; Zhou, B.; Yuan, Y. Intelligent fault diagnosis with noisy labels via semisupervised learning on industrial time series. IEEE Trans. Ind. Inform. 2023, 19, 7724–7732. [Google Scholar]

- Liu, S.; Li, J.; Zhou, N.; Chen, G.; Lu, K.; Wu, Y. Intelligent fault diagnosis of rotating machine via Expansive dual-attention fusion Transformer enhanced by semi-supervised learning. Expert Syst. Appl. 2025, 260, 125398. [Google Scholar]

- Wei, X.; Lee, T.; Söffker, D. A New Unsupervised Learning Approach for CWRU Bearing State Distinction. In Proceedings of the European Workshop on Structural Health Monitoring, Palermo, Italy, 4–7 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 312–319. [Google Scholar]

- Zhao, X.; Jia, M. A novel unsupervised deep learning network for intelligent fault diagnosis of rotating machinery. Struct. Health Monit. 2020, 19, 1745–1763. [Google Scholar]

- Li, X.; Zhang, F. Classification of multi-type bearing fault features based on semi-supervised generative adversarial network (GAN). Meas. Sci. Technol. 2023, 35, 025107. [Google Scholar]

- Li, X.; Zhang, F.L.; Lei, J.; Xiang, W. Deep representation clustering of multi-type damage features based on unsupervised generative adversarial network. IEEE Sens. J. 2024, 24, 25374–25393. [Google Scholar] [CrossRef]

- Kim, T.; Lee, S. A novel unsupervised clustering and domain adaptation framework for rotating machinery fault diagnosis. IEEE Trans. Ind. Inform. 2023, 19, 9404–9412. [Google Scholar] [CrossRef]

- Wu, Y.; Li, C.; Yang, S.; Bai, Y. Multiscale reduction clustering of vibration signals for unsupervised diagnosis of machine faults. Appl. Soft Comput. 2023, 142, 110358. [Google Scholar] [CrossRef]

- Zhang, Y.; Xu, R.; Cheng, G.; Huang, X.; Yu, W. An unsupervised mechanical fault classification method under the condition of unknown number of fault types. J. Mech. Sci. Technol. 2024, 38, 605–622. [Google Scholar] [CrossRef]

- Hendriks, J.; Dumond, P.; Knox, D. Towards better benchmarking using the CWRU bearing fault dataset. Mech. Syst. Signal Process. 2022, 169, 108732. [Google Scholar] [CrossRef]

- Abburi, H.; Chaudhary, T.; Ilyas, H.; Manne, L.; Mittal, D.; Williams, D.; Snaidauf, D.; Bowen, E.; Veeramani, B. A Closer Look at Bearing Fault Classification Approaches. arXiv 2023, arXiv:2309.17001. [Google Scholar] [CrossRef]

- Hu, M.; Luo, C.; Wang, C.; Qiang, Z. Compound fault recognition and diagnosis of rolling bearing in open-set-recognition setting. Measurement 2025, 242, 116132. [Google Scholar]

- Shen, S.; Lu, H.; Sadoughi, M.; Hu, C.; Nemani, V.; Thelen, A.; Webster, K.; Darr, M.; Sidon, J.; Kenny, S. A physics-informed deep learning approach for bearing fault detection. Eng. Appl. Artif. Intell. 2021, 103, 104295. [Google Scholar] [CrossRef]

- Xu, Y.; Xiu, S. A New and Effective Method of Bearing Fault Diagnosis Using Wavelet Packet Transform Combined with Support Vector Machine. J. Comput. 2011, 6, 2502–2509. [Google Scholar] [CrossRef]

- Dhamande, L.; Chaudhari, M. Compound gear-bearing fault feature extraction using statistical features based on time-frequency method. Measurement 2018, 125, 63–77. [Google Scholar]

- Jin, Y.; Qin, C.; Huang, Y.; Liu, C. Actual bearing compound fault diagnosis based on active learning and decoupling attentional residual network. Measurement 2021, 173, 108500.

- Jia, F.; Lei, Y.; Lin, J.; Zhou, X.; Lu, N. Deep neural networks: A promising tool for fault characteristic mining and intelligent diagnosis of rotating machinery with massive data. Mech. Syst. Signal Process. 2016, 72, 303–315.

- Lu, C.; Wang, Z.; Qin, W.; Ma, J. Fault diagnosis of rotary machinery components using a stacked denoising autoencoder-based health state identification. Signal Process. 2017, 130, 377–388.

- Guo, X.; Chen, L.; Shen, C. Hierarchical adaptive deep convolution neural network and its application to bearing fault diagnosis. Measurement 2016, 93, 490–502.

- Shao, H.; Jiang, H.; Wang, F.; Wang, Y. Rolling bearing fault diagnosis using adaptive deep belief network with dual-tree complex wavelet packet. ISA Trans. 2017, 69, 187–201.

- Zhao, Z.; Jiao, Y. A fault diagnosis method for rotating machinery based on CNN with mixed information. IEEE Trans. Ind. Inform. 2022, 19, 9091–9101.

- Dong, H.; Lu, J.; Han, Y. Multi-Stream Convolutional Neural Networks for Rotating Machinery Fault Diagnosis under Noise and Trend Items. Sensors 2022, 22, 2720.

- Zhang, B.; Pang, X.; Zhao, P.; Lu, K. A new method based on encoding data probability density and convolutional neural network for rotating machinery fault diagnosis. IEEE Access 2023, 11, 26099–26113.

- Gao, S.; Shi, S.; Zhang, Y. Rolling bearing compound fault diagnosis based on parameter optimization MCKD and convolutional neural network. IEEE Trans. Instrum. Meas. 2022, 71, 1–8.

- Han, S.; Niu, P.; Luo, S.; Li, Y.; Zhen, D.; Feng, G.; Sun, S. A novel deep convolutional neural network combining global feature extraction and detailed feature extraction for bearing compound fault diagnosis. Sensors 2023, 23, 8060.

- Zhao, P.; Zhang, W.; Cao, X.; Li, X. Denoising diffusion probabilistic model-enabled data augmentation method for intelligent machine fault diagnosis. Eng. Appl. Artif. Intell. 2025, 139, 109520.

- Rajput, D.; Meena, G.; Acharya, M.; Mohbey, K. Fault prediction using fuzzy convolution neural network on IoT environment with heterogeneous sensing data fusion. Meas. Sens. 2023, 26, 100701.

- Yang, D.; Karimi, H.; Pawelczyk, M. A new intelligent fault diagnosis framework for rotating machinery based on deep transfer reinforcement learning. Control Eng. Pract. 2023, 134, 105475.

- Wei, Q.; Tian, X.; Cui, L.; Zheng, F.; Liu, L. WSAFormer-DFFN: A model for rotating machinery fault diagnosis using 1D window-based multi-head self-attention and deep feature fusion network. Eng. Appl. Artif. Intell. 2023, 124, 106633.

- Li, X.; Su, H.; Xiang, L.; Yao, Q.; Hu, A. Transformer-based meta learning method for bearing fault identification under multiple small sample conditions. Mech. Syst. Signal Process. 2024, 208, 110967.

- Qin, N.; You, Y.; Huang, D.; Jia, X.; Zhang, Y.; Du, J.; Wang, T. AttGAN-DPCNN: An Extremely Imbalanced Fault Diagnosis Method for Complex Signals From Multiple Sensors. IEEE Sens. J. 2024, 24, 38270–38285.

- Jiang, D.; He, C.; Chen, Z.; Zhao, J. Are Novel Deep Learning Methods Effective for Fault Diagnosis? IEEE Trans. Reliab. 2025, 74, 4170–4184.

- Han, T.; Jiang, D.; Sun, Y.; Wang, N.; Yang, Y. Intelligent fault diagnosis method for rotating machinery via dictionary learning and sparse representation-based classification. Measurement 2018, 118, 181–193.

- Wang, H.; Wang, J.; Fan, Z. Deep convolutional sparse dictionary learning for bearing fault diagnosis under variable speed condition. J. Frankl. Inst. 2025, 362, 107392.

- Dumitrescu, B.; Irofti, P. Dictionary Learning Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2018.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. SIGMOD Rec. 2000, 29, 93–104.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- Guha, S.; Mishra, N.; Roy, G.; Schrijvers, O. Robust random cut forest based anomaly detection on streams. In Proceedings of the 33rd International Conference on Machine Learning (ICML’16), New York, NY, USA, 20–22 June 2016; Volume 48, pp. 2712–2721.

- Schölkopf, B.; Platt, J.C.; Shawe-Taylor, J.; Smola, A.J.; Williamson, R.C. Estimating the Support of a High-Dimensional Distribution. Neural Comput. 2001, 13, 1443–1471.

- Chen, S.; Qian, Z.; Siu, W.; Hu, X.; Li, J.; Li, S.; Qin, Y.; Yang, T.; Xiao, Z.; Ye, W.; et al. PyOD 2: A Python Library for Outlier Detection with LLM-powered Model Selection. arXiv 2024, arXiv:2412.12154.

- Druce, J.M.; Haupt, J.D.; Gonella, S. Anomaly-sensitive dictionary learning for structural diagnostics from ultrasonic wavefields. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2015, 62, 1384–1396.

- Liu, Y.; Zeng, J.; Wang, Z.; Sheng, W.; Gao, C.; Xie, Q.; Xie, L. Structured Pattern Discovery Using Dictionary Learning for Incipient Fault Detection and Isolation. IEEE Trans. Ind. Inform. 2025, 21, 6679–6689.

- Li, J.; Huang, K.; Wu, D.; Liu, Y.; Yang, C.; Gui, W. Hybrid variable dictionary learning for monitoring continuous and discrete variables in manufacturing processes. Control Eng. Pract. 2024, 149, 105970.

- Smith, W.; Randall, R. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64, 100–131.

- Chabchoub, Y.; Togbe, M.U.; Boly, A.; Chiky, R. An In-Depth Study and Improvement of Isolation Forest. IEEE Access 2022, 10, 10219–10237.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).