Biases in AI-Supported Industry 4.0 Research: A Systematic Review, Taxonomy, and Mitigation Strategies

Abstract

1. Introduction

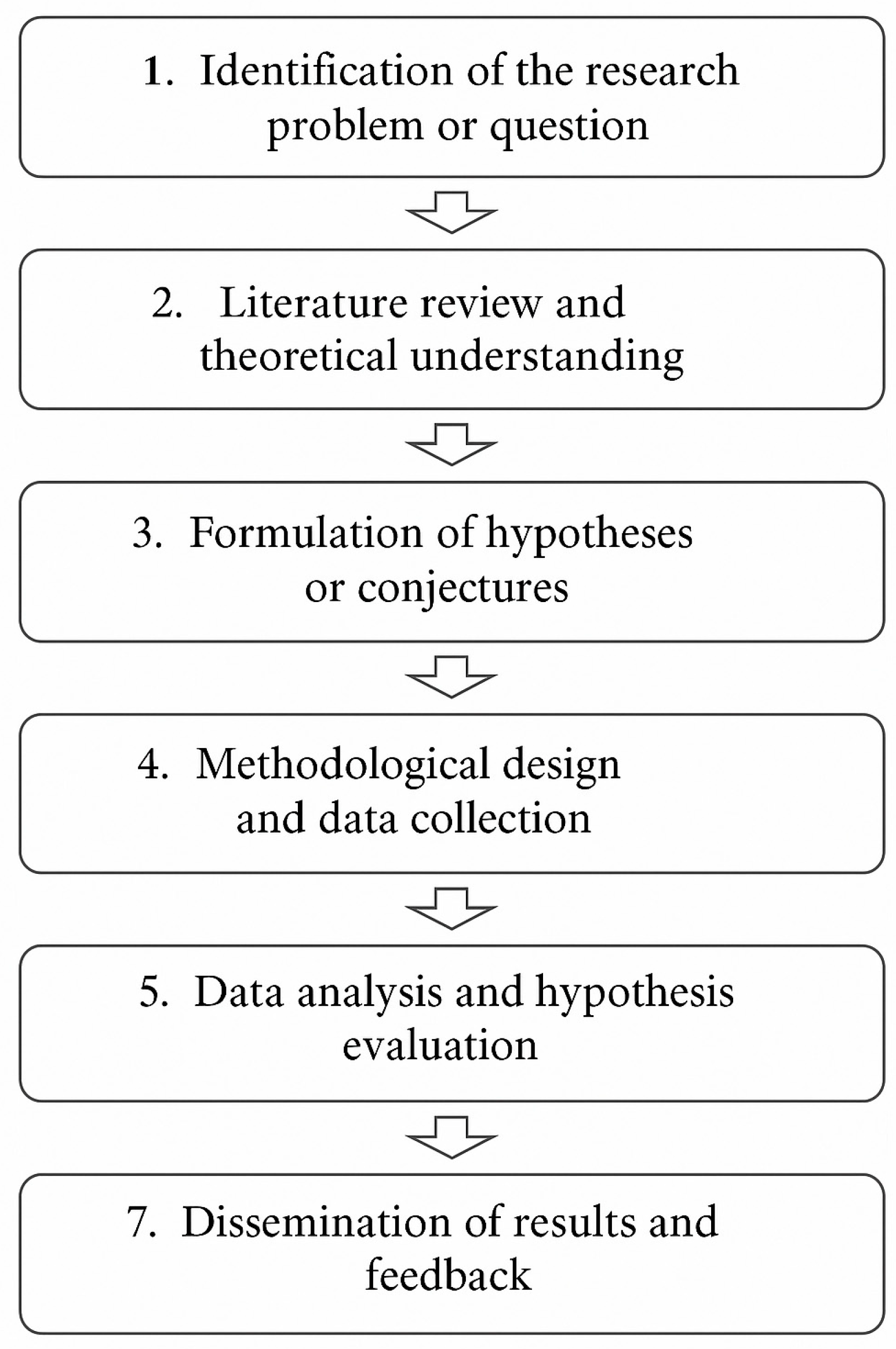

2. Materials and Methods

3. Results

- Those obtained through a quantitative approach based on data processing with advanced computational tools.

- Those derived from a detailed manual review of articles specifically addressing AI within the analyzed corpus.

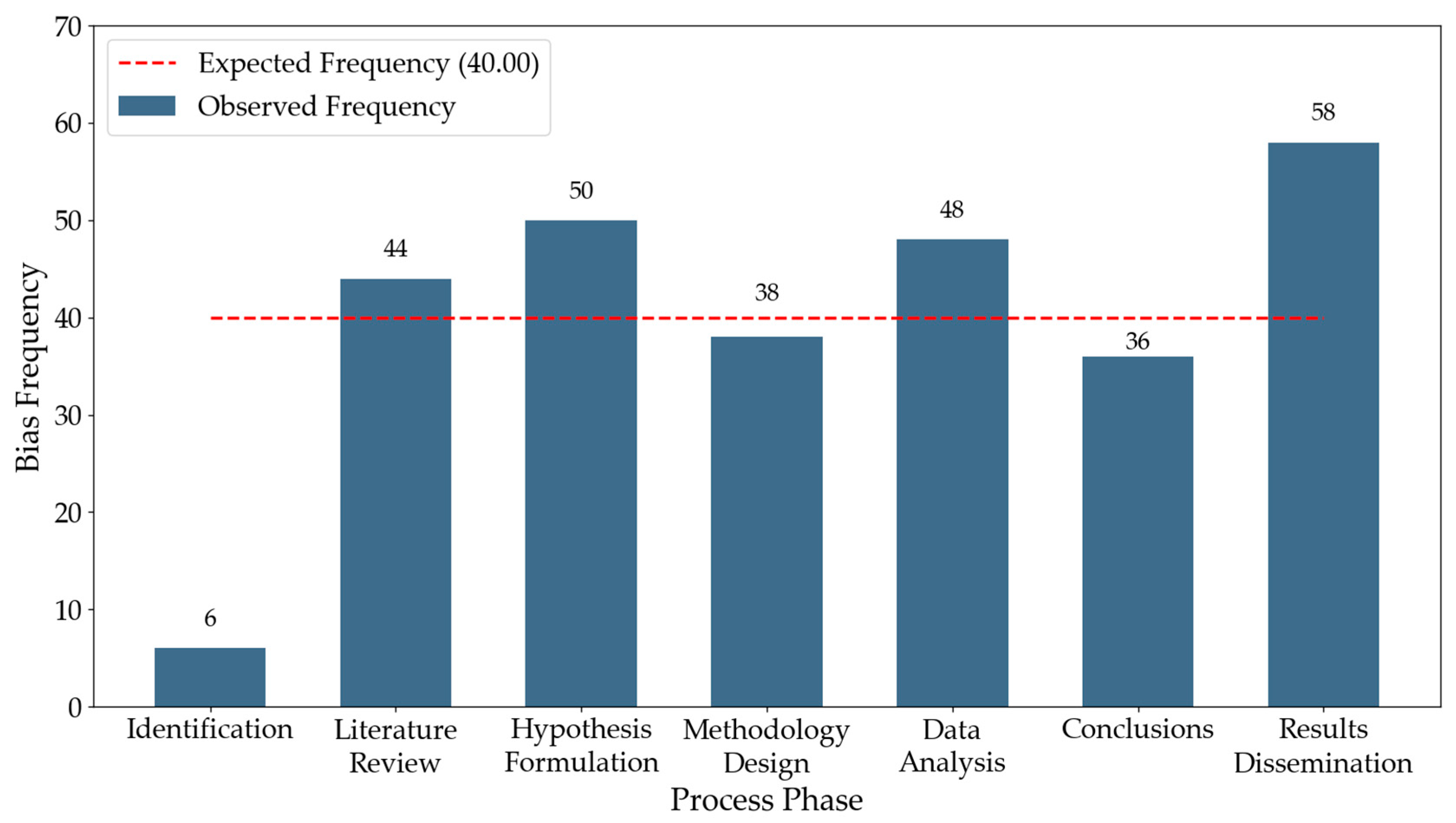

3.1. Data Analysis and Hypothesis Evaluation in Industry 4.0

- Under the null hypothesis of a homogeneous distribution across the k = 7 research phases, the expected frequency for each phase is E = 40.

- The χ² statistic was calculated as the sum, over all phases, of the terms (O − E)²/E, where O represents the observed frequency (Table 4): $\chi^2 = \sum_{i=1}^{k} \frac{(O_i - E_i)^2}{E_i}$.

- The test statistic was therefore computed as

- This value was compared against the critical value of the χ² distribution with k − 1 = 6 degrees of freedom at a significance level of α = 0.05 (approximately 12.59).

- If the computed χ² exceeds the critical value, the null hypothesis is rejected, indicating that the incidence of biases varies significantly across the stages of the research process, as hypothesized. Otherwise, the hypothesis of a homogeneous distribution cannot be rejected.
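The homogeneity test described above can be sketched in Python as follows. This is a minimal, standard-library-only sketch, not the authors' analysis code: the helper names are hypothetical, the closed-form tail probability is valid only for an even number of degrees of freedom (which covers the k − 1 = 6 case used here), and the observed counts in the usage example are invented for illustration and are not the values reported in Table 4.

```python
import math

def chi_square_statistic(observed, expected):
    """Pearson goodness-of-fit statistic: sum of (O_i - E_i)^2 / E_i."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def chi_square_sf_even_df(x, df):
    """Upper-tail probability P(X > x) of a chi-square variable with an even
    number of degrees of freedom, via the closed form
    exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)^j / j!."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only holds for even, positive df")
    half = x / 2.0
    term, total = 1.0, 0.0
    for j in range(df // 2):
        if j > 0:
            term *= half / j  # builds (x/2)^j / j! incrementally
        total += term
    return math.exp(-half) * total

def chi_square_critical_even_df(alpha, df, lo=0.0, hi=200.0, tol=1e-10):
    """Critical value c such that P(X > c) = alpha, found by bisection;
    the survival function is strictly decreasing in x."""
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if chi_square_sf_even_df(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def homogeneity_test(observed, alpha=0.05):
    """Test H0: biases are evenly distributed across the k research phases."""
    k = len(observed)
    expected = [sum(observed) / k] * k  # E = N / k under homogeneity
    stat = chi_square_statistic(observed, expected)
    df = k - 1
    critical = chi_square_critical_even_df(alpha, df)
    return {
        "statistic": stat,
        "df": df,
        "critical": critical,
        "p_value": chi_square_sf_even_df(stat, df),
        "reject_h0": stat > critical,
    }

if __name__ == "__main__":
    # Hypothetical counts for seven phases (N = 280, so E = 40); not Table 4.
    result = homogeneity_test([70, 55, 45, 35, 30, 25, 20])
    print(result)
```

For these invented counts the statistic is 47.5, well above the critical value of about 12.59 for six degrees of freedom, so H0 would be rejected. In practice a library routine such as SciPy's goodness-of-fit test would normally be used instead of the hand-written tail probability.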

3.2. AI-Related Emergent Biases Identified in CPS/IIoT

- Scientific automation bias (Phase: Data analysis and hypothesis evaluation): The tendency to accept AI-generated results without critical examination, neglecting to verify the validity of those results or the quality of input data. In engineering, this is evident when predictive models (e.g., structural behavior or fault analysis) are employed uncritically, leading to flawed decisions in the design or maintenance of infrastructures [126].

- Algorithmic opacity bias (Phase: Literature review and theoretical understanding): Stemming from the lack of transparency in AI models, this bias hampers the detection of inherent errors or distortions in their functioning. In engineering research, automated monitoring systems may exclude or filter critical information without explanation, undermining the reliability of conclusions. In digitalized production environments, where CPS integrate large volumes of real-time data, this opacity compromises decision traceability and may generate failures that are difficult to diagnose [127].

- Knowledge homogenization bias (Phase: Literature review and theoretical understanding): AI models trained on large datasets tend to reinforce established theories and approaches, which may limit the exploration of innovative ideas in engineering. For example, recommendation systems may consistently return the same sources or well-recognized authors, disregarding emerging lines of research. In highly automated industrial contexts, this reduces the diversity of considered solutions and may hinder the adoption of disruptive approaches in areas such as process optimization, materials design, or predictive maintenance [128].

- Cognitive hyperparameterization bias (Phase: Hypothesis formulation): This bias manifests when AI-based methodologies are prioritized over traditional empirical methods, creating problems in engineering fields where physical experimentation is essential to validate models in critical performance scenarios. Excessive reliance on simulations or automated tools may compromise the validity of results in the absence of complementary experimental testing. In advanced production environments, this can lead to design or control decisions based on overfitted models with low generalization capacity, thereby increasing the risk of failures in production systems and infrastructure operation [129].

- Technological dependence in scientific inference (Phase: Methodological design and data collection): Occurs when experimental planning becomes overly conditioned by AI-based tools, sidelining indispensable empirical validation practices. In engineering, this is seen in the uncritical adoption of smart sensors or automated acquisition systems, which, although optimizing data collection, may limit the researcher’s ability to detect anomalies unforeseen by the algorithms. In industrial contexts, such dependence may result in predictive maintenance or quality control processes that reproduce technological system limitations rather than overcoming them, thus compromising decision reliability [130].

- AI-assisted confirmation bias (Phase: Hypothesis formulation): This bias arises when AI tools are employed to search and filter information that validates the researcher’s pre-existing hypotheses, rather than exposing them to contradictory evidence. For instance, an AI-driven academic search engine may prioritize studies aligned with the researcher’s initial belief, thereby reinforcing conviction instead of challenging it [131].

- Database filtering bias (Phase: Literature review and theoretical understanding): The use of AI-based systems for automated literature selection can lead to the exclusion of relevant studies due to biased indexing or filtering criteria, producing a partial view of the state of the art. This is particularly critical in engineering, where diversity of perspectives drives innovation. A search system omitting certain types of publications can thus distort the research landscape [132]. Although both knowledge homogenization bias and database filtering bias may appear related, they originate at different stages and operate through distinct mechanisms. Database filtering bias emerges earlier in the research pipeline, during the automated retrieval and selection of literature, when indexing rules or algorithmic filters exclude relevant studies and thereby constrain the diversity of the knowledge base from the outset. Knowledge homogenization bias, by contrast, manifests later, during the processing and modeling of information, when AI systems trained on large datasets disproportionately reinforce prevailing theories, canonical sources, or widely accepted methodologies. As a result, database filtering bias restricts what information enters the analysis, while knowledge homogenization bias shapes how that information is weighted, interpreted, and reproduced, limiting the exploration of novel hypotheses or disruptive approaches in engineering research [133].

- Cumulative bias in AI models (Phase: Data analysis and hypothesis evaluation): This occurs when AI models are trained on datasets that already contain historical biases, thereby reinforcing prior errors and distorting scientific inference. In engineering, such cumulative bias may undermine failure-prediction systems for critical infrastructure, causing them to perpetuate past error patterns instead of correcting them.

- Algorithmic optimization bias in experimentation (Phase: Methodological design and data collection): Refers to the adjustment of models or experimental parameters to maximize computational efficiency at the expense of fidelity and precision in representing complex phenomena. For instance, numerical simulations may be oversimplified to reduce computation time, compromising their validity in engineering applications by sacrificing detail or realism [134].

- Selective dissemination bias of scientific findings (Phase: Results dissemination and feedback): Describes the tendency of certain AI systems (e.g., publication platforms or automated dissemination networks) to favor and amplify positive results or those aligned with dominant trends, while neglecting rigorous studies with null or negative results. In engineering, this distorts the perceived success of technological developments and may limit innovation by obscuring findings that could be critical for scientific progress but do not fit prevailing narratives [135].
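The trade-off behind algorithmic optimization bias can be illustrated with a deliberately simple toy problem, chosen here for illustration and not drawn from the reviewed studies: integrating exponential decay, dy/dt = −ky, with explicit Euler steps. The function names and parameter values are hypothetical; the point is only that a step size chosen to cut computation time can make a simulation qualitatively wrong rather than merely less precise.

```python
import math

def euler_decay(k, y0, t_end, step):
    """Integrate dy/dt = -k * y from t = 0 to t_end with explicit Euler steps.
    Returns the final value and the number of steps (a proxy for compute cost)."""
    n_steps = round(t_end / step)
    y = y0
    for _ in range(n_steps):
        y += step * (-k * y)
    return y, n_steps

def relative_error(k, y0, t_end, step):
    """Relative error of the Euler result against the exact y0 * exp(-k * t_end)."""
    exact = y0 * math.exp(-k * t_end)
    approx, n_steps = euler_decay(k, y0, t_end, step)
    return abs(approx - exact) / abs(exact), n_steps

if __name__ == "__main__":
    for step in (2.5, 0.5, 0.01):
        err, n = relative_error(k=1.0, y0=1.0, t_end=10.0, step=step)
        print(f"step={step:<5} steps={n:<5} relative error={err:.3g}")
```

With k·step > 2 (here step = 2.5) the explicit Euler scheme is numerically unstable, so the computed "decay" oscillates in sign and grows; step = 0.01 costs 250 times more steps but stays within about 5 percent of the exact value.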

4. Discussion

- Education and awareness (Phases 1–7, Subjects: researchers): Researchers must be systematically trained to recognize the cognitive mechanisms and algorithmic distortions that generate biases, particularly confirmation bias, automation bias, and cumulative bias. This training should address how these distortions emerge at each stage of the research process—from problem definition to dissemination—and equip researchers with methodological literacy to critically interpret AI outputs and ensure transparency and interpretability in industrial contexts [136].

- Process transparency (Phases 3–6, Subjects: researchers and AI systems): AI models used in cyber-physical systems and IIoT environments should integrate explainability components that make their internal decision logic interpretable. Transparent pipelines enable the detection of distortions such as correlation–causation confusion, cumulative bias, and automation bias, facilitating auditing and validation of results during hypothesis formulation, analysis, and dissemination stages [137].

- Data diversification (Phases 2–5, Subjects: researchers and data engineers): Expanding the diversity and representativeness of training and operational datasets mitigates database filtering bias and knowledge homogenization bias. Integrating heterogeneous data sources from multiple industrial contexts reduces structural distortions and enhances robustness and generalizability, improving the reliability of results in data-intensive stages [138].

- Human supervision (Phases 4–6, Subjects: researchers and domain experts): Implementing human-in-the-loop approaches ensures that human expertise validates critical outputs of automated systems, counteracting scientific automation bias and technological dependence in scientific inference. Human oversight is particularly crucial in high-stakes CPS applications, where algorithmic recommendations must be critically assessed before deployment [139].

- Continuous auditing and monitoring (Phases 5–7, Subjects: researchers, institutions, and AI governance bodies): Governance frameworks should define clear responsibilities between human agents and AI systems, ensuring continuous evaluation of model behavior and early detection of bias re-emergence during operation. Periodic bias audits are essential in dynamic industrial environments, where input distributions and operational conditions evolve over time [140].

- Iterative model adjustment (Phases 5–6, Subjects: AI developers and researchers): Reinforcement learning from human feedback (RLHF) and other adaptive techniques should be applied to continuously align model outputs with operational and ethical requirements, reducing the persistence of cumulative and cognitive hyperparameterization biases. This iterative refinement improves both performance and reliability in real-world CPS scenarios [141].

- Multi-phase mitigation techniques (Phases 3–6, Subjects: AI developers and data scientists): Bias control must be implemented throughout the research lifecycle: before, during, and after model training. Pre-processing (e.g., data rebalancing), in-processing (e.g., adversarial debiasing), and post-processing (e.g., output calibration) interventions work together to reduce the impact of biases across methodological design and analysis stages, enhancing reproducibility [142].

- Multidimensional evaluation (Phases 5–6, Subjects: researchers and system evaluators): Model evaluation should go beyond conventional accuracy metrics to include fairness, robustness, explainability, and resilience. Adopting this broader evaluation framework prevents the amplification of subtle biases, such as automation bias or cumulative bias, and supports trustworthy deployment of AI systems in industrial contexts [143].

- Cross-validation methods (Phases 5–6, Subjects: researchers and data scientists): Employing rigorous validation strategies, such as k-fold or stratified cross-validation, mitigates partition-induced sampling and selection effects and yields more reliable error estimates than a single train/test split. These techniques assess the stability and generalizability of results across data subsets and reduce the risk of selecting overfitted models on the basis of a single, favorable or biased data partition [144].

- Interdisciplinary collaboration (Phases 1–7, Subjects: researchers, data scientists, ethicists, and domain experts): Collaboration across disciplines is essential to define boundaries for AI intervention, contextualize findings, and maintain human oversight in decision-making. This integrative approach aligns technical solutions with societal and industrial values, mitigating risks associated with unexamined bias propagation and reinforcing accountability throughout the research process [145].
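The human-supervision strategy in the list above can be made concrete with a simple routing rule that sends a model output to a domain expert instead of accepting it automatically. This is a minimal sketch under stated assumptions: the `Prediction` fields, the confidence threshold of 0.9, and the feature ranges (taken here as the ranges observed during training) are all hypothetical choices, not values prescribed by the reviewed studies.

```python
from dataclasses import dataclass, field

@dataclass
class Prediction:
    value: float                 # model output, e.g. a predicted time to failure
    confidence: float            # model-reported confidence in [0, 1]
    features: dict = field(default_factory=dict)  # inputs that produced the output

def review_reasons(pred, feature_ranges, min_confidence=0.9):
    """Return the reasons a prediction should be escalated to a human expert.
    An empty list means the output may be accepted automatically."""
    reasons = []
    if pred.confidence < min_confidence:
        reasons.append("low confidence")
    for name, (low, high) in feature_ranges.items():
        x = pred.features.get(name)
        if x is None:
            reasons.append(f"missing input: {name}")
        elif not low <= x <= high:
            reasons.append(f"outside training range: {name}")
    return reasons

if __name__ == "__main__":
    # Hypothetical ranges seen during training for two sensor channels.
    ranges = {"temperature_c": (20.0, 80.0), "vibration_mm_s": (0.0, 12.0)}
    ok = Prediction(120.0, 0.97, {"temperature_c": 55.0, "vibration_mm_s": 3.1})
    odd = Prediction(15.0, 0.95, {"temperature_c": 95.0})
    print(review_reasons(ok, ranges))   # []
    print(review_reasons(odd, ranges))
```

Escalating on missing or out-of-range inputs, and not only on low confidence, matters because a model can be confidently wrong outside the conditions it was trained on, which is exactly where uncritical acceptance of AI outputs does the most damage.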
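For the continuous auditing and monitoring strategy in the list above, one widely used way to detect shifting input distributions is the population stability index (PSI), which compares the binned distribution of a feature during operation against a reference sample. The sketch below is illustrative: the equal-width binning on the reference range, the 10 bins, and the small floor `eps` that avoids log(0) are assumptions, and the often-quoted thresholds (PSI below 0.1 stable, above 0.25 a major shift) are an industry rule of thumb rather than a statistical test.

```python
import math

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum_i (c_i - r_i) * ln(c_i / r_i), where r_i and c_i are the shares
    of the reference and current samples falling into equal-width bin i."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate reference sample: all values identical

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) // width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values into edge bins
            counts[idx] += 1
        return [max(c / len(sample), eps) for c in counts]

    r, c = shares(reference), shares(current)
    return sum((ci - ri) * math.log(ci / ri) for ci, ri in zip(c, r))

def drift_level(psi, moderate=0.1, major=0.25):
    """Map a PSI value onto the common rule-of-thumb categories."""
    if psi < moderate:
        return "stable"
    return "moderate shift" if psi < major else "major shift"

if __name__ == "__main__":
    reference = [i / 100 for i in range(1000)]  # values 0.00 .. 9.99
    shifted = [x + 3.0 for x in reference]      # hypothetical drift
    print(drift_level(population_stability_index(reference, reference)))
    print(drift_level(population_stability_index(reference, shifted)))
```

Running such a check periodically per input feature is one simple way to operationalize the "early detection of bias re-emergence" the strategy calls for; a flagged shift would then trigger re-validation rather than automatic retraining.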
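As an example of the pre-processing stage of the multi-phase mitigation techniques listed above, the sketch below implements reweighing, a rebalancing technique usually attributed to Kamiran and Calders, which assigns each training sample the weight P(g)·P(y)/P(g, y) so that group membership g and label y become statistically independent in the weighted data. The choice of this particular technique, the function names, and the toy data (g could stand for, say, the production line a sample came from) are illustrative assumptions; other rebalancing schemes such as resampling are equally valid.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each sample by P(g) * P(y) / P(g, y), estimated from counts,
    so that group and label are independent under the weighted distribution."""
    if len(groups) != len(labels):
        raise ValueError("groups and labels must have the same length")
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    # (n_g / n) * (n_y / n) / (n_gy / n) simplifies to n_g * n_y / (n * n_gy)
    return [n_group[g] * n_label[y] / (n * n_joint[(g, y)])
            for g, y in zip(groups, labels)]

def weighted_positive_rate(groups, labels, weights, group, positive=1):
    """Share of the weighted samples in `group` that carry the positive label."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    pos = sum(w for g, y, w in zip(groups, labels, weights)
              if g == group and y == positive)
    return pos / total

if __name__ == "__main__":
    groups = ["A"] * 6 + ["B"] * 4           # hypothetical source of each sample
    labels = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0]  # e.g. 1 = "fault observed"
    w = reweighing_weights(groups, labels)
    for g in ("A", "B"):
        print(g, round(weighted_positive_rate(groups, labels, w, g), 3))
```

In the toy data the raw fault rate is about 0.83 in group A and 0.25 in group B; after reweighing, both weighted rates equal the overall rate of 0.6, while the weights still sum to the number of samples, so the effective dataset size is unchanged.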
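The stratified cross-validation mentioned among the strategies above can be sketched without external libraries: samples of each class are distributed round-robin over k folds so that every fold preserves the overall class proportions as closely as possible. This is an illustrative implementation under simple assumptions (discrete classification labels, optional shuffling with a fixed seed, hypothetical function names); in practice an established implementation such as scikit-learn's `StratifiedKFold` would normally be preferred.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k=5, seed=None):
    """Split sample indices into k folds, spreading each class evenly."""
    if k < 2:
        raise ValueError("k must be at least 2")
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for y in sorted(by_class, key=str):  # deterministic class order
        members = by_class[y]
        if seed is not None:
            rng.shuffle(members)
        for j, idx in enumerate(members):
            folds[(offset + j) % k].append(idx)
        offset += len(members)  # continue the rotation to keep fold sizes balanced
    return folds

def cross_validation_splits(labels, k=5, seed=None):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    folds = stratified_folds(labels, k, seed)
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield sorted(train), sorted(test)

if __name__ == "__main__":
    labels = [0] * 80 + [1] * 20  # hypothetical imbalanced data: 20% faults
    for train, test in cross_validation_splits(labels, k=5, seed=42):
        faults = sum(labels[i] for i in test)
        print(len(train), len(test), faults)
```

With this hypothetical 80/20 split, every test fold contains 20 samples, 4 of them faults, matching the 20% overall rate; an unstratified random split could instead leave some folds with few or no faults and distort the error estimate.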

4.1. Practical Implications

4.2. Comparative Insights, Limitations, and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Portillo-Blanco, A.; Guisasola, J.; Zuza, K. Integrated STEM Education: Addressing Theoretical Ambiguities and Practical Applications. Front. Educ. 2025, 10, 1568885.

- Correa, J. Science and Scientific Method. Int. J. Sci. Res. 2022, 11, 621–633.

- Wang, R. Active Learning-Based Optimization of Scientific Experimental Design. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Computer Engineering, ICAICE 2021, Hangzhou, China, 5–7 November 2021; pp. 268–274.

- Obioha-Val, O.; Oladeji, O.O.; Selesi-Aina, O.; Olayinka, M.; Kolade, T.M. Machine Learning-Enabled Smart Sensors for Real-Time Industrial Monitoring: Revolutionizing Predictive Analytics and Decision-Making in Diverse Sector. Asian J. Res. Comput. Sci. 2024, 17, 92–113.

- Necula, S.C.; Dumitriu, F.; Greavu-Șerban, V. A Systematic Literature Review on Using Natural Language Processing in Software Requirements Engineering. Electronics 2024, 13, 2055.

- Kong, X.; Jiang, X.; Zhang, B.; Yuan, J.; Ge, Z. Latent Variable Models in the Era of Industrial Big Data: Extension and Beyond. Annu. Rev. Control 2022, 54, 167–199.

- Mienye, I.D.; Swart, T.G. A Comprehensive Review of Deep Learning: Architectures, Recent Advances, and Applications. Information 2024, 15, 755.

- Cachola, I.; Lo, K.; Cohan, A.; Weld, D.S. TLDR: Extreme Summarization of Scientific Documents. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Seattle, WA, USA, 5–10 July 2020; pp. 4766–4777.

- Lee, Y.L.; Zhou, T.; Yang, K.; Du, Y.; Pan, L. Personalized recommender systems based on social relationships and historical behaviors. Appl. Math. Comput. 2022, 437, 127549.

- Werdiningsih, I.; Marzuki; Rusdin, D. Balancing AI and Authenticity: EFL Students’ Experiences with ChatGPT in Academic Writing. Cogent. Arts Humanit. 2024, 11, 2392388.

- Mehdiyev, N.; Majlatow, M.; Fettke, P. Interpretable and Explainable Machine Learning Methods for Predictive Process Monitoring: A Systematic Literature Review. arXiv 2023, arXiv:2312.17584.

- Astleitner, H. We Have Big Data, But Do We Need Big Theory? Review-Based Remarks on an Emerging Problem in the Social Sciences. Philos. Soc. Sci. 2024, 54, 69–92.

- Lee, Y.; Pawitan, Y. Popper’s Falsification and Corroboration from the Statistical Perspectives. In Karl Popper’s Science and Philosophy; Springer: Berlin/Heidelberg, Germany, 2020; pp. 121–147.

- Lamata, P. Avoiding Big Data Pitfalls. Heart Metab. 2020, 82, 33–35.

- Bertrand, Y.; Van Belle, R.; De Weerdt, J.; Serral, E. Defining Data Quality Issues in Process Mining with IoT Data. In Lecture Notes in Business Information Processing; Springer: Berlin/Heidelberg, Germany, 2023; Volume 468, pp. 422–434.

- He, J.; Feng, W.; Min, Y.; Yi, J.; Tang, K.; Li, S.; Zhang, J.; Chen, K.; Zhou, W.; Xie, X.; et al. Control Risk for Potential Misuse of Artificial Intelligence in Science. arXiv 2023, arXiv:2312.06632.

- Anand, G.; Larson, E.C.; Mahoney, J.T. Thomas Kuhn on Paradigms. Prod. Oper. Manag. 2020, 29, 1650–1657.

- Buyl, M.; De Bie, T. Inherent Limitations of AI Fairness. Commun. ACM 2022, 67, 48–55.

- Horvitz, E.; Young, J.; Elluru, R.G.; Howell, C. Key Considerations for the Responsible Development and Fielding of Artificial Intelligence. arXiv 2021, arXiv:2108.12289.

- Da Silva, S.; Gupta, R.; Monzani, D. Editorial: Highlights in Psychology: Cognitive Bias. Front. Psychol. 2023, 14, 1242809.

- Aini, R.Q.; Sya’bandari, Y.; Rusmana, A.N.; Ha, M. Addressing Challenges to a Systematic Thinking Pattern of Scientists: A Literature Review of Cognitive Bias in Scientific Work. Brain Digit. Learn. 2021, 11, 417–430.

- Krenn, M.; Pollice, R.; Guo, S.Y.; Aldeghi, M.; Cervera-Lierta, A.; Friederich, P.; dos Passos Gomes, G.; Häse, F.; Jinich, A.; Nigam, A.; et al. On Scientific Understanding with Artificial Intelligence. Nat. Rev. Phys. 2022, 4, 761–769.

- Vicente, L.; Matute, H. Humans Inherit Artificial Intelligence Biases. Sci. Rep. 2023, 13, 15737.

- Morita, R.; Watanabe, K.; Zhou, J.; Dengel, A.; Ishimaru, S. GenAIReading: Augmenting Human Cognition with Interactive Digital Textbooks Using Large Language Models and Image Generation Models. arXiv 2025, arXiv:2503.07463.

- Jugran, S.; Kumar, A.; Tyagi, B.S.; Anand, V. Extractive Automatic Text Summarization Using SpaCy in Python NLP. In Proceedings of the 2021 International Conference on Advance Computing and Innovative Technologies in Engineering, ICACITE 2021, Greater Noida, India, 4–5 March 2021; pp. 582–585.

- Edwards, A.; Edwards, C. Does the Correspondence Bias Apply to Social Robots?: Dispositional and Situational Attributions of Human Versus Robot Behavior. Front. Robot. AI 2022, 8, 788242.

- Samoilenko, S.A.; Cook, J. Developing an Ad Hominem Typology for Classifying Climate Misinformation. Clim. Policy 2024, 24, 138–151.

- Yoo, S. LLMs as Deceptive Agents: How Role-Based Prompting Induces Semantic Ambiguity in Puzzle Tasks. arXiv 2025, arXiv:2504.02254.

- Peretz-Lange, R.; Gonzalez, G.D.S.; Hess, Y.D. My Circumstances, Their Circumstances: An Actor-Observer Distinction in the Consequences of External Attributions. Soc. Pers. Psychol. Compass. 2024, 18, e12993.

- Wehrli, S.; Hertweck, C.; Amirian, M.; Glüge, S.; Stadelmann, T. Bias, Awareness, and Ignorance in Deep-Learning-Based Face Recognition. AI Ethics 2021, 2, 509–522.

- Rastogi, C.; Zhang, Y.; Wei, D.; Varshney, K.R.; Dhurandhar, A.; Tomsett, R. Deciding Fast and Slow: The Role of Cognitive Biases in AI-Assisted Decision-Making. Proc. ACM Hum. Comput. Interact. 2022, 6, 3512930.

- González-Sendino, R.; Serrano, E.; Bajo, J.; Novais, P. A Review of Bias and Fairness in Artificial Intelligence. Int. J. Interact. Multimed. Artif. Intell. 2024, 9, 5–17.

- Tump, A.N.; Pleskac, T.J.; Kurvers, R.H.J.M. Wise or Mad Crowds? The Cognitive Mechanisms Underlying Information Cascades. Sci. Adv. 2020, 6, eabb0266.

- Suri, G.; Slater, L.R.; Ziaee, A.; Nguyen, M. Do Large Language Models Show Decision Heuristics Similar to Humans? A Case Study Using GPT-3.5. J. Exp. Psychol. Gen. 2023, 153, 1066.

- Cau, F.M.; Tintarev, N. Navigating the Thin Line: Examining User Behavior in Search to Detect Engagement and Backfire Effects. arXiv 2024, arXiv:2401.11201.

- Knyazev, N.; Oosterhuis, H. The Bandwagon Effect: Not Just Another Bias. In Proceedings of the ICTIR 2022—Proceedings of the 2022 ACM SIGIR International Conference on the Theory of Information Retrieval, Madrid, Spain, 11–12 July 2022; pp. 243–253.

- Erbacher, R.F. Base-Rate Fallacy Redux and a Deep Dive Review in Cybersecurity. arXiv 2022, arXiv:2203.08801.

- Kovačević, I.; Manojlović, M. Base Rate Neglect Bias: Can It Be Observed in HRM Decisions and Can It Be Decreased by Visually Presenting the Base Rates in HRM Decisions? Int. J. Cogn. Res. Sci. Eng. Educ. 2024, 12, 119–132.

- Ashinoff, B.K.; Buck, J.; Woodford, M.; Horga, G. The Effects of Base Rate Neglect on Sequential Belief Updating and Real-World Beliefs. PLOS Comput. Biol. 2022, 18, e1010796.

- Fraune, M.R. Our Robots, Our Team: Robot Anthropomorphism Moderates Group Effects in Human–Robot Teams. Front. Psychol. 2020, 11, 540167.

- Gautam, S.; Srinath, M. Blind Spots and Biases: Exploring the Role of Annotator Cognitive Biases in NLP. In Proceedings of the HCI+NLP 2024—3rd Workshop on Bridging Human-Computer Interaction and Natural Language Processing, Mexico City, Mexico, 21 June 2024; pp. 82–88.

- Bremers, A.; Parreira, M.T.; Fang, X.; Friedman, N.; Ramirez-Aristizabal, A.; Pabst, A.; Spasojevic, M.; Kuniavsky, M.; Ju, W. The Bystander Affect Detection (BAD) Dataset for Failure Detection in HRI. arXiv 2023, arXiv:2303.04835.

- Zimring, J.C. Bias with a Cherry on Top: Cherry-Picking the Data. In Partial Truths; Columbia University Press: New York City, NY, USA, 2022; pp. 52–60.

- Kobayashi, T.; Kitaoka, A.; Kosaka, M.; Tanaka, K.; Watanabe, E. Motion Illusion-like Patterns Extracted from Photo and Art Images Using Predictive Deep Neural Networks. Sci. Rep. 2022, 12, 3893.

- Seran, C.E.; Tan, M.J.T.; Karim, H.A.; Aldahoul, N.; Joshua, M.; Tan, T.; Hezerul; Karim, A. A Conceptual Exploration of Generative AI-Induced Cognitive Dissonance and Its Emergence in University-Level Academic Writing. Front. Artif. Intell. 2025, 8, 1573368.

- Zwaan, L. Cognitive Bias in Large Language Models: Implications for Research and Practice. NEJM AI 2024, 1, e2400961.

- Bashkirova, A.; Krpan, D. Confirmation Bias in AI-Assisted Decision-Making: AI Triage Recommendations Congruent with Expert Judgments Increase Psychologist Trust and Recommendation Acceptance. Comput. Hum. Behav. Artif. Hum. 2024, 2, 100066.

- Peters, U. Algorithmic Political Bias in Artificial Intelligence Systems. Philos. Technol. 2022, 35, 25.

- Westberg, M.; Främling, K. Cognitive Perspectives on Context-Based Decisions and Explanations. NEJM AI 2021, 1, e2400961.

- Kliegr, T.; Bahník, Š.; Fürnkranz, J. A Review of Possible Effects of Cognitive Biases on Interpretation of Rule-Based Machine Learning Models. Artif. Intell. 2021, 295, 103458.

- Xiao, Y.; Wang, S.; Liu, S.; Xue, D.; Zhan, X.; Liu, Y. FITNESS: A Causal De-Correlation Approach for Mitigating Bias in Machine Learning Software. arXiv 2023, arXiv:2305.14396.

- Wahle, J.P.; Ruas, T.; Abdalla, M.; Gipp, B.; Mohammad, S.M. Citation Amnesia: On The Recency Bias of NLP and Other Academic Fields. arXiv 2024, arXiv:2402.12046.

- Tao, Y.; Viberg, O.; Baker, R.S.; Kizilcec, R.F. Cultural Bias and Cultural Alignment of Large Language Models. Proc. Natl. Acad. Sci. USA Nexus 2024, 3, 346.

- Cao, Y.; Shui, R.; Pan, L.; Kan, M.Y.; Liu, Z.; Chua, T.S. Expertise Style Transfer: A New Task Towards Better Communication between Experts and Laymen. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Virtual, 7–10 July 2020; pp. 1061–1071.

- Schedl, M.; Lesota, O.; Brandl, S.; Lotfi, M.; Ticona, G.J.E.; Masoudian, S. The Importance of Cognitive Biases in the Recommendation Ecosystem. arXiv 2024, arXiv:2408.12492.

- Leo, X.; Huh, Y.E. Who Gets the Blame for Service Failures? Attribution of Responsibility toward Robot versus Human Service Providers and Service Firms. Comput. Hum. Behav. 2020, 113, 106520.

- Tavares, S.; Ferrara, E. Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies. Sci 2023, 6, 3.

- Horowitz, M.C.; Kahn, L. Bending the Automation Bias Curve: A Study of Human and AI-Based Decision Making in National Security Contexts. Int. Stud. Q. 2023, 68, sqae020.

- Gong, Q. Machine Endowment Cost Model: Task Assignment between Humans and Machines. Humanit. Soc. Sci. Commun. 2023, 10, 129.

- Barkett, E.; Long, O.; Kröger, P. Getting out of the Big-Muddy: Escalation of Commitment in LLMs. arXiv 2025, arXiv:2508.01545.

- Hufendiek, R. Beyond Essentialist Fallacies: Fine-Tuning Ideology Critique of Appeals to Biological Sex Differences. J. Soc. Philos. 2022, 53, 494–511.

- Maneuvrier, A. Experimenter Bias: Exploring the Interaction between Participant’s and Investigator’s Gender/Sex in VR. Virtual Real. 2024, 28, 96.

- Choi, J.; Hong, Y.; Kim, B. People Will Agree What I Think: Investigating LLM’s False Consensus Effect. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2025, Albuquerque, NM, USA, 29 April–4 May 2025; pp. 95–126.

- Pataranutaporn, P.; Archiwaranguprok, C.; Chan, S.W.T.; Loftus, E.; Maes, P. Synthetic Human Memories: AI-Edited Images and Videos Can Implant False Memories and Distort Recollection. In Proceedings of the Conference on Human Factors in Computing Systems—Proceedings, Honolulu, HI, USA, 11–16 May 2024; Volume 1.

- Mou, Y.; Xu, T.; Hu, Y. Uniqueness Neglect on Consumer Resistance to AI. Mark. Intell. Plan. 2023, 41, 669–689.

- Herrebrøden, H. Motor Performers Need Task-Relevant Information: Proposing an Alternative Mechanism for the Attentional Focus Effect. J. Mot. Behav. 2023, 55, 125–134.

- Marwala, T.; Hurwitz, E. Artificial Intelligence and Asymmetric Information Theory. arXiv 2015, arXiv:1510.02867.

- Ulnicane, I.; Aden, A. Power and Politics in Framing Bias in Artificial Intelligence Policy. Rev. Policy Res. 2023, 40, 665–687.

- Saeedi, P.; Goodarzi, M.; Canbaz, M.A. Heuristics and Biases in AI Decision-Making: Implications for Responsible AGI. In Proceedings of the 2025 6th International Conference on Artificial Intelligence, Robotics and Control (AIRC), Savannah, GA, USA, 15 July 2025.

- Gong, C.; Yang, Y. Google Effects on Memory: A Meta-Analytical Review of the Media Effects of Intensive Internet Search Behavior. Front. Public Health 2024, 12, 1332030.

- Wiss, A.; Showstark, M.; Dobbeck, K.; Pattershall-Geide, J.; Zschaebitz, E.; Joosten-Hagye, D.; Potter, K.; Embry, E. Utilizing Generative AI to Counter Learner Groupthink by Introducing Controversy in Collaborative Problem-Based Learning Settings. Online Learn. 2025, 29, 39–65.

- Nicolau, J.L.; Mellinas, J.P.; Martín-Fuentes, E. The Halo Effect: A Longitudinal Approach. Ann. Tour Res. 2020, 83, 102938.

- Wang, J.; Redelmeier, D.A. Cognitive Biases and Artificial Intelligence. NEJM AI 2024, 1, 2400639.

- Noor, N.; Beram, S.; Huat, F.K.C.; Gengatharan, K.; Mohamad Rasidi, M.S. Bias, Halo Effect and Horn Effect: A Systematic Literature Review. Int. J. Acad. Res. Bus. Soc. Sci. 2023, 13, 1116–1140.

- Lyu, Y.; Combs, D.; Neumann, D.; Leong, Y.C. Automated Scoring of the Ambiguous Intentions Hostility Questionnaire Using Fine-Tuned Large Language Models. arXiv 2025, arXiv:2508.10007.

- Vuculescu, O.; Beretta, M.; Bergenholtz, C. The IKEA Effect in Collective Problem-Solving: When Individuals Prioritize Their Own Solutions. Creat. Innov. Manag. 2021, 30, 116–128.

- Nowotny, H. AI and the Illusion of Control. In Proceedings of the Paris Institute for Advanced Study, Paris, France, 2 April 2024; Volume 1, p. 10.

- Xavier, B. Biases within AI: Challenging the Illusion of Neutrality. AI Soc. 2024, 40, 1545–1546.

- Ntoutsi, E.; Fafalios, P.; Gadiraju, U.; Iosifidis, V.; Nejdl, W.; Vidal, M.E.; Ruggieri, S.; Turini, F.; Papadopoulos, S.; Krasanakis, E.; et al. Bias in Data-Driven Artificial Intelligence Systems—An Introductory Survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1356.

- Goette, L.; Han, H.-J.; Leung, B.T.K. Information Overload and Confirmation Bias. 2024. Available online: https://ssrn.com/abstract=4843939 (accessed on 1 October 2025).

- Ciccarone, G.; Di Bartolomeo, G.; Papa, S. The Rationale of In-Group Favoritism: An Experimental Test of Three Explanations. Games Econ. Behav. 2020, 124, 554–568.

- Pacchiardi, L.; Tešić, M.; Cheke, L.; Hernández-Orallo, J. Leaving the Barn Door Open for Clever Hans: Simple Features Predict LLM Benchmark Answers. arXiv 2024, arXiv:2410.11672.

- Kartal, E. A Comprehensive Study on Bias in Artificial Intelligence Systems: Biased or Unbiased AI, That’s the Question! Int. J. Intell. Inf. Technol. 2022, 18, 309582. [Google Scholar] [CrossRef]

- Kim, S.; Sohn, Y.W. The Effect of Belief in a Just World on the Acceptance of AI Technology. Korean J. Psychol. Gen. 2020, 39, 517–542. [Google Scholar] [CrossRef]

- Haliburton, L.; Ghebremedhin, S.; Welsch, R.; Schmidt, A.; Mayer, S. Investigating Labeler Bias in Face Annotation for Machine Learning. Front. Artif. Intell. Appl. 2023, 386, 145–161.

- Zhou, J. A Review of the Relationship Between Loss Aversion Bias and Investment Decision-Making Process. Adv. Econ. Manag. Political Sci. 2023, 27, 143–150.

- Fonseca, J. The Myth of Meritocracy and the Matilda Effect in STEM: Paper Acceptance and Paper Citation. arXiv 2023, arXiv:2306.10807.

- Liao, C.H. The Matthew Effect and the Halo Effect in Research Funding. J. Inf. 2021, 15, 101108.

- Sguerra, B.; Tran, V.-A.; Hennequin, R. Ex2Vec: Characterizing Users and Items from the Mere Exposure Effect. In Proceedings of the 17th ACM Conference on Recommender Systems, RecSys 2023, Singapore, 18–22 September 2023; Volume 1, pp. 971–977.

- Bhatti, A.; Sandrock, T.; Nienkemper-Swanepoel, J. The Influence of Missing Data Mechanisms and Simple Missing Data Handling Techniques on Fairness. arXiv 2025, arXiv:2503.07313.

- Kneer, M.; Skoczeń, I. Outcome Effects, Moral Luck and the Hindsight Bias. Cognition 2023, 232, 105258.

- Tsfati, Y.; Barnoy, A. Media Cynicism, Media Skepticism and Automatic Media Trust: Explicating Their Connection with News Processing and Exposure. Communic. Res. 2025, 00936502251327717.

- Surden, H. Naïve Realism, Cognitive Bias, and the Benefits and Risks of AI. SSRN Electron. J. 2023, 23, 4393096.

- Chiarella, S.G.; Torromino, G.; Gagliardi, D.M.; Rossi, D.; Babiloni, F.; Cartocci, G. Investigating the Negative Bias towards Artificial Intelligence: Effects of Prior Assignment of AI-Authorship on the Aesthetic Appreciation of Abstract Paintings. Comput. Hum. Behav. 2022, 137, 107406.

- Cheung, V.; Maier, M.; Lieder, F. Large Language Models Show Amplified Cognitive Biases in Moral Decision-Making. Proc. Natl. Acad. Sci. USA 2025, 122, e2412015122.

- Owen, M.; Flowerday, S.V.; van der Schyff, K. Optimism Bias in Susceptibility to Phishing Attacks: An Empirical Study. Inf. Comput. Secur. 2024, 32, 656–675.

- Stone, J.C.; Gurunathan, U.; Aromataris, E.; Glass, K.; Tugwell, P.; Munn, Z.; Doi, S.A.R. Bias Assessment in Outcomes Research: The Role of Relative Versus Absolute Approaches. Value Health 2021, 24, 1145–1149.

- Li, W.; Zhou, X.; Yang, Q. Designing Medical Artificial Intelligence for In- and out-Groups. Comput. Hum. Behav. 2021, 124, 106929.

- Sihombing, Y.R.; Prameswary, R.S.A. The Effect of Overconfidence Bias and Representativeness Bias on Investment Decision with Risk Tolerance as Mediating Variable. Indik. J. Ilm. Manaj. Dan Bisnis 2023, 7, 1.

- Borowa, K.; Zalewski, A.; Kijas, S. The Influence of Cognitive Biases on Architectural Technical Debt. In Proceedings of the 2021 IEEE 18th International Conference on Software Architecture (ICSA), Stuttgart, Germany, 22–26 March 2021.

- Montag, C.; Schulz, P.J.; Zhang, H.; Li, B.J. On Pessimism Aversion in the Context of Artificial Intelligence and Locus of Control: Insights from an International Sample. AI Soc. 2025, 40, 3349–3356.

- Kosch, T.; Welsch, R.; Chuang, L.; Schmidt, A. The Placebo Effect of Artificial Intelligence in Human-Computer Interaction. ACM Trans. Comput.-Hum. Interact. 2022, 29, 32.

- Marineau, J.E.; Labianca, G. (Joe). Positive and Negative Tie Perceptual Accuracy: Pollyanna Principle vs. Negative Asymmetry Explanations. Soc. Netw. 2021, 64, 83–98.

- Obendiek, A.S.; Seidl, T. The (False) Promise of Solutionism: Ideational Business Power and the Construction of Epistemic Authority in Digital Security Governance. J. Eur. Public Policy 2023, 30, 1305–1329.

- Gulati, A.; Lozano, M.A.; Lepri, B.; Oliver, N. BIASeD: Bringing Irrationality into Automated System Design. arXiv 2022, arXiv:2210.01122.

- De-Arteaga, M.; Elmer, J. Self-Fulfilling Prophecies and Machine Learning in Resuscitation Science. Resuscitation 2023, 183, 109622.

- Yang, T.; Han, C.; Luo, C.; Gupta, P.; Phillips, J.M.; Ai, Q. Mitigating Exploitation Bias in Learning to Rank with an Uncertainty-Aware Empirical Bayes Approach. In Proceedings of the WWW 2024—Proceedings of the ACM Web Conference, Madrid, Spain, 13–17 May 2024; Volume 1, pp. 1486–1496.

- Candrian, C.; Scherer, A. Reactance to Human versus Artificial Intelligence: Why Positive and Negative Information from Human and Artificial Agents Leads to Different Responses. SSRN Electron. J. 2023, 4397618.

- Wang, P.; Yang, H.; Hou, J.; Li, Q. A Machine Learning Approach to Primacy-Peak-Recency Effect-Based Satisfaction Prediction. Inf. Process. Manag. 2023, 60, 103196.

- Del Giudice, M. The Prediction-Explanation Fallacy: A Pervasive Problem in Scientific Applications of Machine Learning. Methodology 2024, 20, 22–46.

- Gundersen, O.E.; Cappelen, O.; Mølnå, M.; Nilsen, N.G. The Unreasonable Effectiveness of Open Science in AI: A Replication Study. arXiv 2024, arXiv:2412.17859.

- Malecki, W.P.; Kowal, M.; Krasnodębska, A.; Bruce, B.C.; Sorokowski, P. The Reverse Matilda Effect: Gender Bias and the Impact of Highlighting the Contributions of Women to a STEM Field on Its Perceived Attractiveness. Sci. Educ. 2024, 108, 1474–1491.

- Vellinga, N.E. Rethinking Compensation in Light of the Development of AI. Int. Rev. Law Comput. Technol. 2024, 38, 391–412.

- Kim, S. Perceptions of Discriminatory Decisions of Artificial Intelligence: Unpacking the Role of Individual Characteristics. Int. J. Hum. Comput. Stud. 2025, 194, 103387.

- Wu, M.; Li, Z.; Yuen, K.F. Effect of Anthropomorphic Design and Hierarchical Status on Balancing Self-Serving Bias: Accounting for Education, Ethnicity, and Experience. Comput. Hum. Behav. 2024, 158, 108299.

- Lee, M.H.J. Examining the Robustness of Homogeneity Bias to Hyperparameter Adjustments in GPT-4. arXiv 2025, arXiv:2501.02211.

- Oschinsky, F.M.; Stelter, A.; Niehaves, B. Cognitive Biases in the Digital Age—How Resolving the Status Quo Bias Enables Public-Sector Employees to Overcome Restraint. Gov. Inf. Q. 2021, 38, 101611.

- Fabi, S.; Hagendorff, T. Why We Need Biased AI: How Including Cognitive and Ethical Machine Biases Can Enhance AI Systems. arXiv 2022, arXiv:2203.09911.

- Kleinberg, J.; Oren, S.; Raghavan, M.; Sklar, N. Stochastic Model for Sunk Cost Bias. PMLR 2021, 161, 1279–1288.

- Gupta, P.; MacAvaney, S. On Survivorship Bias in MS MARCO. In Proceedings of the SIGIR 2022—Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 2214–2219.

- Phillips, I.; Upadhyayula, A.; Flombaum, J. Tachypsychia—The Subjective Expansion of Time—Happens in Immediate Memory, Not Perceptual Experience. J. Vis. 2020, 20, 1466.

- Choi, S. Temporal Framing in Balanced News Coverage of Artificial Intelligence and Public Attitudes. Mass Commun. Soc. 2024, 27, 384–405.

- Kodden, B. The Art of Sustainable Performance: The Zeigarnik Effect. In The Art of Sustainable Performance; Springer: Berlin/Heidelberg, Germany, 2020; pp. 67–73.

- Korteling, J.E.; Paradies, G.L.; Sassen-van Meer, J.P. Cognitive Bias and How to Improve Sustainable Decision Making. Front. Psychol. 2023, 14, 1129835.

- Ye, A.; Maiti, A.; Schmidt, M.; Pedersen, S.J. A Hybrid Semi-Automated Workflow for Systematic and Literature Review Processes with Large Language Model Analysis. Future Int. 2024, 16, 167.

- Kücking, F.; Hübner, U.; Przysucha, M.; Hannemann, N.; Kutza, J.O.; Moelleken, M.; Erfurt-Berge, C.; Dissemond, J.; Babitsch, B.; Busch, D. Automation Bias in AI-Decision Support: Results from an Empirical Study; IOS Press: Amsterdam, The Netherlands, 2024.

- Chuan, C.H.; Sun, R.; Tian, S.; Tsai, W.H.S. EXplainable Artificial Intelligence (XAI) for Facilitating Recognition of Algorithmic Bias: An Experiment from Imposed Users’ Perspectives. Telemat. Inform. 2024, 91, 102135.

- Daniil, S.; Slokom, M.; Cuper, M.; Liem, C.C.S.; van Ossenbruggen, J.; Hollink, L. On the Challenges of Studying Bias in Recommender Systems: A UserKNN Case Study. arXiv 2024, arXiv:2409.08046.

- Roth, B.; de Araujo, P.H.L.; Xia, Y.; Kaltenbrunner, S.; Korab, C. Specification Overfitting in Artificial Intelligence. Artif. Intell. Rev. 2024, 58, 35.

- Li, S. Computational and Experimental Simulations in Engineering. In Proceedings of the ICCES 2023, Shenzhen, China, 26–29 May 2023; Volume 145.

- Wang, B.; Liu, J. Cognitively Biased Users Interacting with Algorithmically Biased Results in Whole-Session Search on Debated Topics. In Proceedings of the ICTIR 2024—Proceedings of the 2024 ACM SIGIR International Conference on the Theory of Information Retrieval, Washington, DC, USA, 13 July 2024; Volume 1, pp. 227–237.

- Kacperski, C.; Bielig, M.; Makhortykh, M.; Sydorova, M.; Ulloa, R. Examining Bias Perpetuation in Academic Search Engines: An Algorithm Audit of Google and Semantic Scholar. First Monday 2023, 29, 11.

- Suresh, H.; Guttag, J. A Framework for Understanding Sources of Harm throughout the Machine Learning Life Cycle. In Proceedings of the ACM International Conference Proceeding Series, New York City, NY, USA, 20–24 October 2021.

- van Stein, N.; Thomson, S.L.; Kononova, A.V. A Deep Dive into Effects of Structural Bias on CMA-ES Performance along Affine Trajectories. arXiv 2024, arXiv:2404.17323.

- Soleymani, H.; Saeidnia, H.R.; Ausloos, M.; Hassanzadeh, M. Selective Dissemination of Information (SDI) in the Age of Artificial Intelligence (AI). Library Hi Tech News 2023. ahead-of-print.

- Beer, P.; Mulder, R.H. The Effects of Technological Developments on Work and Their Implications for Continuous Vocational Education and Training: A Systematic Review. Front. Psychol. 2020, 11, 535119.

- Marcinkevičs, R.; Vogt, J.E. Interpretable and Explainable Machine Learning: A Methods-Centric Overview with Concrete Examples. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1493.

- Hort, M.; Chen, Z.; Zhang, J.M.; Harman, M.; Sarro, F. Bias Mitigation for Machine Learning Classifiers: A Comprehensive Survey. ACM J. Responsible Comput. 2022, 1, 11.

- Mosqueira-Rey, E.; Hernández-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascarán, J.; Fernández-Leal, Á. Human-in-the-Loop Machine Learning: A State of the Art. Artif. Intell. Rev. 2023, 56, 3005–3054.

- Minkkinen, M.; Laine, J.; Mäntymäki, M. Continuous Auditing of Artificial Intelligence: A Conceptualization and Assessment of Tools and Frameworks. Digit. Soc. 2022, 1, 21.

- Casper, S.; Davies, X.; Shi, C.; Gilbert, T.K.; Scheurer, J.; Rando, J.; Freedman, R.; Korbak, T.; Lindner, D.; Freire, P.; et al. Open Problems and Fundamental Limitations of Reinforcement Learning from Human Feedback. arXiv 2023, arXiv:2307.15217.

- Feldman, T.; Peake, A. End-To-End Bias Mitigation: Removing Gender Bias in Deep Learning. arXiv 2021, arXiv:2104.02532.

- Khakurel, U.; Abdelmoumin, G.; Rawat, D.B. Performance Evaluation for Detecting and Alleviating Biases in Predictive Machine Learning Models. ACM Trans. Probabilistic Mach. Learn. 2025, 1, 1–34.

- Demircioğlu, A. Applying Oversampling before Cross-Validation Will Lead to High Bias in Radiomics. Sci. Rep. 2024, 14, 11563.

- Zhang, C.; Kim, J.; Jeon, J.H.; Xing, J.; Ahn, C.; Tang, P.; Cai, H. Toward Integrated Human-Machine Intelligence for Civil Engineering: An Interdisciplinary Perspective. In Proceedings of the Computing in Civil Engineering 2021—Selected Papers from the ASCE International Conference on Computing in Civil Engineering 2021, Orlando, FL, USA, 12–14 September 2021; pp. 279–286.

- Li, X.; Yang, C.; Møller, C.; Lee, J. Data Issues in Industrial AI System: A Meta-Review and Research Strategy. arXiv 2024, arXiv:2406.15784.

- Rosado Gomez, A.A.; Calderón Benavides, M.L. Framework for Bias Detection in Machine Learning Models: A Fairness Approach. In Proceedings of the WSDM 2024—Proceedings of the 17th ACM International Conference on Web Search and Data Mining, Merida, Mexico, 4–8 March 2024; pp. 1152–1154.

- Razavi, S.; Jakeman, A.; Saltelli, A.; Prieur, C.; Iooss, B.; Borgonovo, E.; Plischke, E.; Lo Piano, S.; Iwanaga, T.; Becker, W.; et al. The Future of Sensitivity Analysis: An Essential Discipline for Systems Modeling and Policy Support. Environ. Model. Softw. 2021, 137, 104954.

- Siddique, S.; Haque, M.A.; George, R.; Gupta, K.D.; Gupta, D.; Faruk, M.J.H. Survey on Machine Learning Biases and Mitigation Techniques. Digital 2024, 4, 1.

| Construct | Gap Addressed in This Study |
|---|---|
| Bias typologies and definitions | Provides a unified taxonomy structured across research stages in Industry 4.0. |
| Phase-specific distribution of biases | Delivers quantitative mapping of bias occurrence across all stages. |
| Emergent AI-related biases in CPS/IIoT | Identifies and formalizes ten novel biases specific to industrial AI contexts. |
| Methodological transparency and explainability | Links explainability challenges to phase-specific manifestations of bias. |
| Human oversight and governance | Outlines practical oversight mechanisms aligned with each stage. |
| Mitigation strategies | Consolidates phase-tailored strategies into an operational framework. |
| Paradigm and epistemic framing | Connects claims of paradigm shift to observed bias patterns. |

| Name | Phase | Ref. |
|---|---|---|
| Actor-observer bias | 1,3,5,7 | [26] |
| Ad hominem | 7 | [27] |
| Ambiguity effect | 3,4,5 | [28] |
| Anchoring effect | 2,3,5 | [29] |
| Argument from ignorance | 3,5,6 | [30] |
| Attentional bias | 2,4,5 | [31] |
| Authority bias | 2,5,6,7 | [32] |
| Availability cascade | 2,3,7 | [33] |
| Availability heuristic | 2,3,5 | [34] |
| Backfire effect | 6,7 | [35] |
| Bandwagon effect | 1,2,3,7 | [36] |
| Base rate fallacy | 3,5 | [37] |
| Base rate neglect | 2,3,5 | [38] |
| Belief bias | 2,3,4,7 | [39] |
| Black sheep effect | 6,7 | [40] |
| Blind spot | 2,7 | [41] |
| Bystander effect | 7 | [42] |
| Cherry picking | 1,2,3,4,5,6,7 | [43] |
| Clustering illusion | 2,3,5 | [44] |
| Cognitive dissonance | 3,5,6 | [45] |
| Cognitive fluency bias | 7 | [46] |
| Confirmation bias | 1,2,3,4,5,6,7 | [47] |
| Conservatism bias | 2,5,6 | [48] |
| Context effect | 4,5,7 | [49] |
| Contrast effect | 2,5,7 | [50] |
| Correlation-causation | 5,6 | [51] |
| Cryptomnesia or false memories | 2,3,7 | [52] |
| Cultural | 2,3,5,6,7 | [53] |
| Curse of knowledge | 7 | [54] |
| Declinism | 2,6,7 | [55] |
| Defensive attribution | 4,5,6 | [56] |
| Distinction bias | 3,5 | [57] |
| Dunning-Kruger effect | 2,3,5 | [58] |
| Endowment effect | 3,4,7 | [59] |
| Escalation of commitment | 4,5,6 | [60] |
| Essentialism fallacy | 2,3,6 | [61] |
| Experimenter bias | 4,5,6 | [62] |
| False consensus effect | 3,6,7 | [63] |
| False memory effect | 3,6,7 | [64] |
| False uniqueness effect | 1,3,7 | [65] |
| Focus effect | 4,5,7 | [66] |
| Framing asymmetry | 3,7 | [67] |
| Framing effect | 5,7 | [68] |
| Funding bias | 1,3,4,7 | |
| Gambler’s fallacy | 3,4,5 | [69] |
| Google effect | 2,3 | [70] |
| Groupthink | 3,4,6,7 | [71] |
| Halo effect | 2,6,7 | [72] |
| Hindsight bias | 6,7 | [73] |
| Horn effect | 5,7 | [74] |
| Hostile attribution bias | 7 | [75] |
| IKEA effect | 4,7 | [76] |
| Illusion of control | 4,5 | [77] |
| Illusion of neutrality | 4,6,7 | [78] |
| Information bias | 2,4,7 | [79] |
| Information overload bias | 2,3,6,7 | [80] |
| Ingroup favoritism | 7 | [81] |
| Internal validity bias | 4,5,6 | [82] |
| Justification bias | 5,6,7 | [83] |
| Just-world hypothesis | 2,3,5,6,7 | [84] |
| Labeling effect | 4 | [85] |
| Loss aversion | 4 | [86] |
| Matilda effect | 7 | [87] |
| Matthew effect | 2,3,5,6,7 | [88] |
| Mere exposure effect | 2,3,5,7 | [89] |
| Missing data bias | 4,5,6,7 | [90] |
| Moral luck | 6,7 | [91] |
| Naïve cynicism | 2,7 | [92] |
| Naïve realism | 2,3,5,6,7 | [93] |
| Negativity bias | 2,5,6 | [94] |
| Omission bias | 4,5,7 | [95] |
| Optimism bias | 3,4,5,6,7 | [96] |
| Outcome bias | 5,6,7 | [97] |
| Outgroup homogeneity effect | 2,3,5,6,7 | [98] |
| Overconfidence effect | 1,3,5,7 | [99] |
| Parkinson’s law of triviality | 7 | [100] |
| Pessimism bias | 3,4,5,6,7 | [101] |
| Placebo effect | 4,5,6 | [102] |
| Pollyanna effect | 6,7 | [103] |
| Pro-innovation bias | 3,4,7 | [104] |
| Pseudocertainty effect | 3,4,5 | [105] |
| Pygmalion effect or self-fulfilling prophecy | 3,4,5 | [106] |
| Ranking bias | 2,3 | [107] |
| Reactance | 4,7 | [108] |
| Recency effect | 2,7 | [109] |
| Regression fallacy | 5,6 | [110] |
| Replication crisis | 4,5,7 | [111] |
| Reverse Matilda effect | 7 | [112] |
| Risk compensation effect | 4,5,7 | [113] |
| Selective perception bias | 2,3,5,6,7 | [114] |
| Self-serving bias | 5,6,7 | [115] |
| Source homogeneity bias | 2,3,5,6,7 | [116] |
| Status quo bias | 3,4,6,7 | [117] |
| Suggestibility | 4,5,7 | [118] |
| Sunk cost fallacy | 3,4,5,6 | [119] |
| Survivorship bias | 4,5,6,7 | [120] |
| Tachypsychia | 7 | [121] |
| Temporal framing effect | 7 | [122] |
| Zeigarnik effect | 4,5,7 | [123] |
| Zero-risk bias | 4,5 | [124] |
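
The phase column above maps each bias to one or more of the seven research phases, so the same table can be read the other way round as a screening checklist: for a given phase, which biases should be watched for. The following is a minimal sketch of that inversion, assuming the phase cells are comma-separated integers and that phases 1 to 7 follow the order of the phase-frequency table. Only a handful of rows are reproduced as an illustrative excerpt, and the names `PHASES`, `TAXONOMY_EXCERPT`, `parse_phases`, and `index_by_phase` are hypothetical.

```python
from collections import defaultdict

# Assumed numbering: phases 1-7 in the order of the phase-frequency table.
PHASES = {
    1: "Identification of the research problem or question",
    2: "Literature review and theoretical understanding",
    3: "Hypothesis formulation",
    4: "Methodological design and data collection",
    5: "Data analysis and hypothesis evaluation",
    6: "Conclusions and paradigm comparison",
    7: "Results dissemination and feedback",
}

# Hypothetical excerpt of the taxonomy table: bias name -> phase cell.
# Cells keep the table's own formatting, including a spaced "3, 5, 6" variant.
TAXONOMY_EXCERPT = {
    "Anchoring effect": "2,3,5",
    "Cherry picking": "1,2,3,4,5,6,7",
    "Cognitive dissonance": "3, 5, 6",
    "Confirmation bias": "1,2,3,4,5,6,7",
    "Correlation-causation": "5,6",
    "Survivorship bias": "4,5,6,7",
    "Zero-risk bias": "4,5",
}


def parse_phases(cell: str) -> set[int]:
    """Parse a phase cell such as '3, 5, 6' into {3, 5, 6}; reject unknown phases."""
    phases = {int(tok) for tok in cell.replace(" ", "").split(",") if tok}
    unknown = phases - set(PHASES)
    if unknown:
        raise ValueError(f"unknown phase(s): {sorted(unknown)}")
    return phases


def index_by_phase(taxonomy: dict[str, str]) -> dict[int, list[str]]:
    """Invert bias -> phases into phase -> alphabetically sorted bias names."""
    index: dict[int, list[str]] = defaultdict(list)
    for name, cell in taxonomy.items():
        for phase in parse_phases(cell):
            index[phase].append(name)
    return {phase: sorted(index[phase]) for phase in sorted(index)}


if __name__ == "__main__":
    for phase, names in index_by_phase(TAXONOMY_EXCERPT).items():
        print(f"Phase {phase} ({PHASES[phase]}): {', '.join(names)}")
```

Extending the dictionary to every row of the table would turn this inversion into a phase-by-phase checklist; validating the cells while parsing also catches typos such as an out-of-range phase number.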

| Phase | Frequency |
|---|---|
| Identification of the research problem or question | 6 |
| Literature review and theoretical understanding | 44 |
| Hypothesis formulation | 50 |
| Methodological design and data collection | 38 |
| Data analysis and hypothesis evaluation | 48 |
| Conclusions and paradigm comparison | 36 |
| Results dissemination and feedback | 58 |
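
Under the homogeneous-distribution hypothesis of Section 3.1, the expected frequency per phase follows directly from the observed counts above. As a worked check of the arithmetic, with k = 7 phases:

```latex
N = \sum_{i=1}^{7} O_i = 6 + 44 + 50 + 38 + 48 + 36 + 58 = 280,
\qquad
E = \frac{N}{k} = \frac{280}{7} = 40,
\qquad
\mathrm{df} = k - 1 = 6.
```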

| Phase | Observed (O) | Expected (E) | (O − E)²/E |
|---|---|---|---|
| 1 | 6 | 40 | 28.90 |
| 2 | 44 | 40 | 0.40 |
| 3 | 50 | 40 | 2.50 |
| 4 | 38 | 40 | 0.10 |
| 5 | 48 | 40 | 1.60 |
| 6 | 36 | 40 | 0.40 |
| 7 | 58 | 40 | 8.10 |
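
The per-phase contributions above can be reproduced, and the test described in Section 3.1 completed, with a short script. The following is a minimal sketch, assuming a Pearson goodness-of-fit test against a uniform expectation (E = N/k). The names `OBSERVED`, `goodness_of_fit`, `chi2_sf_even_df`, and `chi2_critical_even_df` are hypothetical, and the closed-form upper-tail probability is valid only for an even number of degrees of freedom; that condition holds here (k − 1 = 6) and avoids a SciPy dependency.

```python
import math

# Observed frequencies per research phase, copied from the phase-frequency table.
OBSERVED = {
    "Identification of the research problem or question": 6,
    "Literature review and theoretical understanding": 44,
    "Hypothesis formulation": 50,
    "Methodological design and data collection": 38,
    "Data analysis and hypothesis evaluation": 48,
    "Conclusions and paradigm comparison": 36,
    "Results dissemination and feedback": 58,
}


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with even df.

    For df = 2m the survival function is exp(-x/2) * sum_{i<m} (x/2)**i / i!.
    """
    if df <= 0 or df % 2:
        raise ValueError("closed form only valid for positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i          # builds (x/2)**i / i! incrementally
        total += term
    return math.exp(-half) * total


def chi2_critical_even_df(alpha: float, df: int) -> float:
    """Critical value c with P(X > c) = alpha, found by bisection (sf is decreasing)."""
    lo, hi = 0.0, 1000.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if chi2_sf_even_df(mid, df) > alpha:
            lo = mid              # tail still too heavy: move right
        else:
            hi = mid
    return (lo + hi) / 2.0


def goodness_of_fit(observed: list[int]) -> tuple[float, list[float], int]:
    """Pearson chi-square statistic against a uniform expected distribution."""
    k = len(observed)
    expected = sum(observed) / k  # 280 / 7 = 40 for the counts above
    contributions = [(o - expected) ** 2 / expected for o in observed]
    return sum(contributions), contributions, k - 1


if __name__ == "__main__":
    stat, contribs, df = goodness_of_fit(list(OBSERVED.values()))
    crit = chi2_critical_even_df(0.05, df)
    p_value = chi2_sf_even_df(stat, df)
    for phase, c in zip(OBSERVED, contribs):
        print(f"{c:6.2f}  {phase}")
    print(f"chi2 = {stat:.2f}, df = {df}, critical(0.05) = {crit:.3f}, p = {p_value:.2e}")
    print("reject homogeneity" if stat > crit else "cannot reject homogeneity")
```

With these counts the seven contributions sum to χ² = 42.00, which exceeds the 5% critical value for 6 degrees of freedom (approximately 12.59), so the homogeneous-distribution hypothesis would be rejected at α = 0.05. In code that already depends on SciPy, `scipy.stats.chisquare` and `scipy.stats.chi2.ppf` are the more conventional route to the same numbers.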

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Arévalo-Royo, J.; Flor-Montalvo, F.-J.; Latorre-Biel, J.-I.; Jiménez-Macías, E.; Martínez-Cámara, E.; Blanco-Fernández, J. Biases in AI-Supported Industry 4.0 Research: A Systematic Review, Taxonomy, and Mitigation Strategies. Appl. Sci. 2025, 15, 10913. https://doi.org/10.3390/app152010913
