Abstract

Real-time rendering is increasingly used in augmented and virtual reality (AR/VR), interactive design, and product visualisation, where materials must prioritise efficiency and consistency rather than the extreme accuracy required in offline rendering. In parallel, the growing demand for personalised and customised products has created a need for digital materials that can be generated in-house without relying on expensive commercial systems. To address these requirements, this paper presents a low-cost digitisation workflow based on photometric stereo. The system integrates a custom-built scanner with cross-polarised illumination, automated multi-light image acquisition, a dual-stage colour calibration process, and a node-based reconstruction pipeline that produces albedo and normal maps. A reproducible evaluation methodology is also introduced, combining perceptual colour-difference analysis using the CIEDE2000 (ΔE00) metric with angular-error assessment of normal maps on known-geometry samples. By openly providing the workflow, bill of materials, and implementation details, this work delivers a practical and replicable solution for reliable material capture in real-time rendering and product customisation scenarios.

1. Introduction

Physically Based Rendering (PBR) has become the dominant paradigm for material representation in digital environments, supporting a wide range of real-time applications such as AR/VR, interactive product design, projection-based prototyping, and web-based visualisation [,]. By representing appearance through texture maps, PBR enables the scalable and perceptually consistent reproduction of materials across rendering engines [,]. While offline rendering can achieve extreme photorealism through computationally intensive algorithms, real-time rendering prioritises efficiency, consistency, and robustness under limited computational resources [,]. This distinction makes the availability of reliable PBR textures essential for interactive scenarios, where runtime feasibility is as important as visual fidelity. Reliable PBR appearance data can be obtained via three complementary routes: (i) artistic or AI-assisted authoring (procedural and learning-based) [,], (ii) scanning-based acquisition of real samples [,], and (iii) simulation-based synthesis using physically based light-transport models, including path tracing and Metropolis Light Transport (MLT), to generate realistic appearances and large-scale training data [,].

The demand for digital materials is further amplified by the growing trend towards personalisation and customisation in product design []. Designers and companies increasingly require accurate digital counterparts of physical samples that can be produced rapidly and integrated directly into interactive workflows. However, access to such materials remains constrained. Professional-grade scanning devices provide comprehensive capture capabilities but are prohibitively expensive, closed-source, and dependent on specialised training [,]. Low-cost custom-built systems based on consumer hardware have also been explored, but they often lack rigorous optical design, standardised calibration strategies, and reproducible workflows []. This divide forces practitioners to choose between costly precision and limited reliability.

In addition to the currently established scanning approaches, several alternative methods have been suggested in the broader literature as potential directions for future PBR texture acquisition. Digital holography has been investigated for high-precision surface-roughness measurement, which could be combined with photographic textures to enhance geometric detail []. Visual colour display holograms, also known as optoclones, provide analogue replications of texture appearance that could subsequently be digitised using advanced imaging techniques []. Recent work on the digitisation of such holographic representations further demonstrates possible pathways from analogue optoclones to digital appearance data []. Nevertheless, as these approaches are still immature and unsuitable for routine prototyping, a strong need remains for practical in-house solutions for real-time rendering.

Building on these considerations, there is a clear need for digitisation solutions that can be deployed directly in-house, without reliance on expensive commercial systems or external services. Such solutions must substantially reduce costs, in terms of both equipment and operational expenses, while remaining simple to set up and operate through the use of readily available components. At the same time, they must deliver accuracy sufficient for the intended use, ensuring that the generated textures meet the quality requirements of real-time rendering workflows. Equally important, these systems should be reproducible, transparent, and adaptable, allowing practitioners not only to replicate the technology but also to customise it for different materials or application scenarios.

In response, this paper presents a custom-built scanning system and a fully documented workflow for the digitisation of flat materials based on photometric stereo. The system integrates cross-polarised illumination, automated multi-light image acquisition, and a dual-stage colour-calibration strategy, followed by a modular node-based reconstruction pipeline that generates albedo and normal maps. In addition, we introduce a reproducible evaluation methodology that quantifies colour accuracy using the CIEDE2000 (ΔE00) metric and validates geometric fidelity through angular-error analysis of known-geometry samples. By openly providing the workflow, bill of materials, and implementation details, this work delivers an accessible and adaptable solution optimised for real-time rendering. Its combination of affordability, reproducibility, and systematic evaluation distinguishes it from existing alternatives and makes it particularly suitable for interactive design and product-customisation contexts.

2. Related Works

Material scanning techniques can broadly be divided into two main categories: image-based approaches and active sensing systems. The latter, including laser scanners and structured-light devices, are primarily optimised for geometric reconstruction, often achieving sub-millimetre accuracy and excelling at capturing complex object shapes []. However, their treatment of surface appearance is typically secondary: colour is acquired through auxiliary cameras or projected patterns and later mapped onto the reconstructed mesh []. This process is sensitive to registration errors, lighting inconsistencies, and resolution limits, which reduces the reliability of the resulting albedo or normal maps. Consequently, while these systems are highly effective for shape digitisation, they do not provide the photometrically consistent texture maps required for PBR workflows. By contrast, image-based approaches such as photogrammetry and photometric stereo directly capture appearance information. Photogrammetry focuses on producing dense 3D meshes from multi-view image sets, whereas photometric stereo specialises in recovering detailed surface normals and fine-scale textures from controlled lighting variations at a fixed viewpoint, making it suitable when appearance fidelity is the primary objective [].

Photometric stereo was first introduced by Woodham, who demonstrated its potential for estimating object surface normals based on observed changes in illumination []. Classical photometric stereo methods typically assume perfectly diffuse, or Lambertian, reflectance behaviour. However, many real-world objects exhibit non-Lambertian characteristics, including glossy reflections, interreflections, and specular highlights. To address these issues, numerous enhancements to the basic photometric stereo technique have been developed. One group of methods classifies specular reflections as outliers and attempts to eliminate or reduce their impact. Early attempts included selecting illumination conditions under which surfaces appeared more Lambertian, using algorithms based on subsets of available images []. Subsequent techniques adopted robust statistical approaches, such as random sample consensus (RANSAC), robust Singular Value Decomposition (SVD), and Markov random field models [,,]. While effective in some scenarios, these methods typically require capturing a larger number of images for reliable statistical analysis and often degrade in accuracy when applied to materials exhibiting dense, complex specular reflections or strong interreflections. An alternative set of methods explicitly models non-Lambertian reflectance by fitting nonlinear analytic Bidirectional Reflectance Distribution Functions (BRDFs). Examples include the Torrance–Sparrow model, bivariate BRDF models, and symmetry-based approaches [,,]. Unlike the former category, BRDF-based methods utilise all available image data, theoretically improving accuracy. However, these models are inherently complex and must be tailored specifically to different materials or classes of objects, requiring extensive parameter tuning and case-by-case analyses, which limits their general applicability and ease of deployment in practical settings.

The technology of photometric stereo has been effectively implemented in various commercial and custom-built material scanners. These scanners can broadly be classified into professional-grade commercial devices and custom-engineered setups. Professional scanning solutions offer integrated hardware and software, providing high accuracy, repeatability, and ease of use []. For instance, X-Rite’s TAC7 scanner, part of its Total Appearance Capture (TAC) ecosystem, utilises 30 calibrated LED sources and four high-resolution cameras to generate detailed PBR texture maps, including albedo, normal, gloss, and transparency maps []. Its comprehensive software suite (Pantora) enables high-quality capture suitable for industrial and research applications. Nevertheless, the substantial cost (approximately EUR 150,000) significantly limits its accessibility. Another professional-grade device, the HP Z Captis, integrates advanced polarised and photometric stereo techniques to capture texture resolutions up to 8K. Powered by NVIDIA’s Jetson AGX Xavier, it enables real-time analysis with two scanning modes that accommodate different sample sizes and properties []. Despite its comprehensive capabilities and integration with Adobe Substance 3D Sampler, the relatively high price (USD 19,999) remains a barrier to widespread adoption. Alternative professional devices such as the xTex A4 scanner (manufactured by Vizoo in Munich, Germany), the NX Premium Scanner (manufactured by NunoX in Taiwan), and the DMIx SamplR (manufactured by ColorDigital in Cologne, Germany) offer a range of resolutions and capabilities. Still, they are similarly limited by proprietary software, specialised hardware requirements, and relatively high initial investments [,,]. More recently, the TMAC system has emerged as another photometric-stereo-based scanning solution []. It bridges the gap between high-end industrial scanners and experimental research platforms.

On the other hand, custom-built material scanning rigs offer more affordable and adaptable alternatives, constructed using off-the-shelf components such as cameras, LED lighting, polarisers, and programmable controllers. Prominent examples include the smartphone-based scanning setup introduced by Allegorithmic (now Adobe Substance), which integrates seamlessly into Substance 3D workflows, offering an accessible and simplified entry point for users []. Ubisoft engineer Grzegorz Baran proposed a tripod-based configuration optimised for improved lighting distribution and consistency, demonstrating substantial improvement in image quality through careful lighting arrangements []. Additionally, Niklas Hauber developed an automated scanning rig complemented by custom 3D reconstruction algorithms, while the VFX Grace team explored polarisation techniques to minimise glare and accurately capture subtle material details []. Despite these innovations, custom setups typically suffer from critical limitations. Specifically, they often lack scientifically rigorous optical setups, standardised calibration procedures, and clearly defined, reproducible processing workflows. Consequently, scanning results remain inconsistent, heavily dependent on user expertise, material characteristics, and environmental variables.

Existing photometric stereo-based scanning devices, whether commercial or custom-built, have largely overlooked the specific requirements of rapid prototyping and real-time rendering workflows. These contexts demand fast turnaround, reproducibility, and outputs optimised for appearance rather than purely geometric accuracy. This creates the need for a scanning solution that combines scientifically defined optical and mechanical configurations with systematic calibration procedures and a reproducible post-processing pipeline, capable of generating reliable PBR texture maps at a practical cost. The system presented in this paper addresses this gap through a low-cost, fully integrated photometric stereo-based digitisation workflow specifically configured for flat material samples, with an emphasis on supporting time-sensitive prototyping and real-time rendering applications.

3. System Description

This section describes the proposed material scanning system, which integrates a dedicated hardware design with a complementary software workflow. The design follows the principles of photometric stereo to recover both colour and geometric information from flat material samples. The hardware provides controlled acquisition conditions through a modular enclosure, polarised directional illumination, and automated camera–light synchronisation. The software complements this with a two-stage colour calibration procedure, systematic image processing, and a node-based reconstruction workflow that generates reliable PBR texture maps.

3.1. Hardware Design

The hardware design was developed to meet the requirements of photometric stereo, ensuring stable multi-directional illumination and consistent image capture []. It comprises the illumination system, the imaging system, and the structural framework, complemented by a cross-polarisation setup.

3.1.1. Illumination and Camera Configuration

Illumination was designed according to the requirements of photometric stereo, which recovers surface normals from shading variations under multiple directional light sources. Although three sources are sufficient in theory, we employed eight Light Emitting Diode (LED) sources to improve robustness against noise and cast shadows; this number also corresponds to the maximum typically supported by most post-processing software packages []. The eight LEDs are mounted on the octagonal side walls of the enclosure to provide evenly distributed directional illumination, as detailed in Section 3.1.2. Direct-current Multifaceted Reflector 16 (MR16) units were selected due to their low cost, stable power requirements, and wide availability in different optical configurations.
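The role of the eight light directions can be illustrated with the classical least-squares formulation of Lambertian photometric stereo. The following is a minimal sketch and not the reconstruction used in this work, which relies on a photometric stereo node in Adobe Substance 3D Designer. The function names, the 45° elevation, and the synthetic test surface are illustrative assumptions.

```python
import numpy as np

def light_directions(n_lights=8, elevation_deg=45.0):
    """Unit vectors from the sample towards each LED (z points up).

    The LEDs are assumed to be evenly spaced in azimuth; the elevation
    value is an illustrative choice within the 15-60 degree range.
    """
    az = np.deg2rad(np.arange(n_lights) * 360.0 / n_lights)
    el = np.deg2rad(elevation_deg)
    return np.stack([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.full(n_lights, np.sin(el))], axis=1)

def photometric_stereo(intensities, lights):
    """Least-squares Lambertian photometric stereo.

    intensities: (k, h, w) stack, one greyscale image per light.
    lights:      (k, 3) unit light directions.
    Returns unit normals (h, w, 3) and albedo (h, w).
    """
    k, h, w = intensities.shape
    obs = intensities.reshape(k, -1)                    # (k, h*w)
    # Solve lights @ g = obs per pixel, where g = albedo * normal.
    g, *_ = np.linalg.lstsq(lights, obs, rcond=None)    # (3, h*w)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-12)
    return normals.T.reshape(h, w, 3), albedo.reshape(h, w)

# Synthetic single-pixel check with a known normal and albedo.
L = light_directions()
true_n = np.array([0.2, -0.1, 1.0])
true_n /= np.linalg.norm(true_n)
true_albedo = 0.6
images = (true_albedo * L @ true_n).reshape(8, 1, 1)
normals, albedo = photometric_stereo(images, L)
```

With three lights the linear system is exactly determined, whereas eight lights make it overdetermined, so the least-squares solution averages out noise in individual frames. Shadowed or saturated pixels would still need to be masked in practice.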

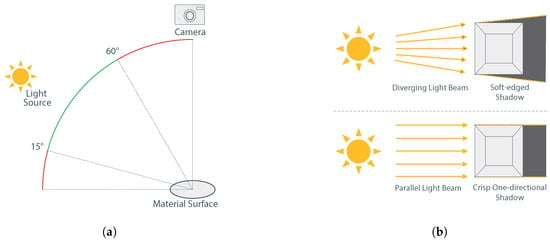

The incidence angle between the incoming light and the sample plane strongly influences the discriminative quality of shading cues. Shallow angles may not produce adequate variation, whereas overly steep angles can lead to self-occlusion. For this reason, the LEDs were positioned within a range of approximately 15° to 60° relative to the surface, a configuration that can be achieved with commercially available adjustable brackets [,]. Similarly, the beam angle of each LED affects the spatial distribution of light: narrow beams enhance contrast and improve the stability of photometric stereo estimation, whereas wide diffusion reduces the distinctiveness of shading information []. The effects of incidence and beam angles on illumination quality are illustrated in Figure 1. Other parameters, such as colour temperature and colour rendering index, were also considered, as they directly influence the fidelity of albedo reconstruction [,,]. Detailed specifications of the LED modules, including power, beam angle, colour temperature, and rendering index, are reported in Appendix A, Table A1.

Figure 1.

Light configuration principles for photometric surface capture: (a) Incidence angles of 15°–60° between light and surface, ensuring effective shading cues without self-occlusion. (b) Effect of beam divergence: diverging beams yield soft shadows, while parallel beams produce sharp one-directional shadows.

In addition to the illumination system, the imaging setup was designed to ensure reliable capture of surface details under controlled conditions. A consumer-grade digital camera equipped with a zoom lens was chosen for its affordability and ease of operation; in our implementation, a Lumix DMC-G80 (manufactured by Panasonic in Kadoma, Japan) was used. To maximise consistency, the camera was operated in full manual mode with fixed focus, exposure, and white balance settings. Manual focusing (MF) ensured that fine surface details remained sharp, while white balance was calibrated against a neutral grey reference (e.g., X-Rite White Balance) and subsequently locked throughout all acquisitions. Exposure parameters were configured to minimise variability: ISO was set to the lowest native value to reduce noise, aperture was stopped down by approximately two f-stops from maximum to guarantee adequate depth of field, and shutter speed was adjusted to the minimum safe value to prevent motion blur [].

To preserve the full dynamic range of the sensor and avoid compression artefacts, all images were recorded in RAW format, which also enabled accurate colour calibration through the application of a Digital Camera Profile (DCP), as described in Section 3.2.1. Remote operation of the camera was achieved through a tip–ring–ring–sleeve (TRRS) interface controlled by the Arduino board, which simulated half-press and full-press shutter states via a resistor network. This configuration allowed the camera to be triggered automatically in synchrony with illumination changes, ensuring repeatable acquisition sequences. The specifications of the imaging system are reported in Appendix A, Table A2.

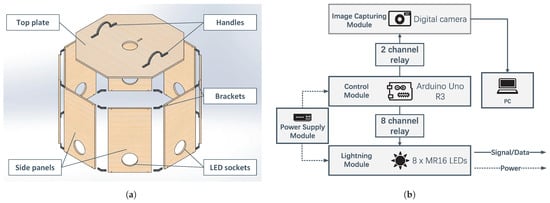

3.1.2. Structural and Electronic Framework

Complementing the illumination and imaging setup, the structural framework and integrated electronics were designed to provide a stable and reproducible acquisition environment. The overall mechanical assembly of the scanner is illustrated in Figure 2a. The enclosure adopts an octagonal geometry to accommodate the eight LED sources, with side panels manufactured from laser-cut plywood and joined by 3D-printed polyethylene terephthalate glycol (PETG) connectors. The opaque construction suppresses ambient light interference, while the overall dimensions were defined to accommodate flat samples up to A4 size (8.27 inches by 11.69 inches). The lower part consists of eight vertical panels with apertures for mounting the LEDs at fixed positions, while the upper part serves as a removable cover that also functions as a camera support. A standard tripod head allows the camera to be mounted vertically with its optical axis aligned to the sample plane, while adjustment slots enable fine alignment of the lens position. For background control, the scanner is placed on a black matte acrylic sheet, which minimises mirror-like reflections and facilitates image segmentation during post-processing. Appendix A, Table A3 provides detailed specifications of the structural components.

Figure 2.

System overview of the custom scanner: (a) mechanical assembly showing the top plate, handles, side panels, LED sockets, and brackets; (b) electronic subsystem organised into power supply, control, lighting, and image-capturing modules.

The electronic subsystem ensures synchronised operation of the scanner and is organised into four functional modules, as illustrated in Figure 2b. A 12 V DC power supply provides a stable current to the system. Control is handled by an Arduino Uno board, which operates both an eight-channel relay for the illumination system and a two-channel relay for triggering the camera shutter via the TRRS interface. The lighting module consists of eight MR16 LED bulbs, which are activated in a predefined sequence. The acquisition cycle is divided into two stages: first, all LEDs are powered simultaneously to capture a uniformly illuminated image for albedo reconstruction; second, the LEDs are switched on one at a time, with each activation synchronised with a camera trigger, yielding eight directional inputs for photometric stereo. The image-capturing module comprises the digital camera, which receives trigger signals and transfers the captured images to the PC for storage and further processing. The component specifications are given in Appendix A, Table A4, so that the electronic subsystem can be reproduced.
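The two-stage acquisition cycle can be summarised as an ordered list of relay states, each followed by one camera trigger. The sketch below only models that ordering; it is not the Arduino firmware, and the frame names and the function `acquisition_sequence` are hypothetical.

```python
def acquisition_sequence(n_leds=8):
    """Return the capture steps as (frame_name, active_led_indices).

    Stage 1 switches all LEDs on for the albedo frame; stage 2 switches
    the LEDs on one at a time for the directional frames. One camera
    trigger is assumed to follow each step.
    """
    steps = [("albedo", tuple(range(n_leds)))]      # stage 1: all LEDs on
    for i in range(n_leds):                         # stage 2: one LED at a time
        steps.append((f"light_{i + 1:02d}", (i,)))
    return steps

sequence = acquisition_sequence()
for name, leds in sequence:
    print(name, leds)
```

Keeping the directional frames in a fixed, named order matters downstream, because the reconstruction step expects each image to be paired with the correct light direction.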

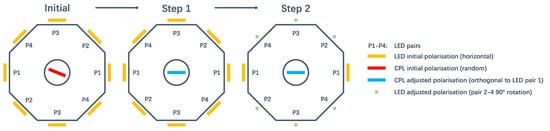

3.1.3. Cross-Polarisation Setup

In addition to the structural and electronic design, a cross-polarisation configuration was implemented to suppress specular reflections and isolate the diffuse component of reflected light []. A schematic illustration of the polarisation setup is shown in Figure 3, while detailed filter specifications are provided in Appendix A, Table A5. Linear polarising films were mounted on the front of all LEDs, initially aligned in the same horizontal orientation, and a screw-in circular polarising filter (CPL) was attached to the camera lens. After the scanner assembly was completed, the CPL was rotated slowly while all LEDs were illuminated, until one opposing pair of LEDs appeared darkest in the camera viewfinder. At this point, the polarisation axes of that LED pair and the camera filter were orthogonal. Once this alignment was reached, the orientation of the CPL was kept fixed.

Figure 3.

Schematic illustration of the polarisation setup: (Initial) Linear polarisers were mounted on all LEDs in a common horizontal orientation, and a CPL was attached to the camera lens in a random orientation. (Step 1) The CPL was rotated until one opposing LED pair appeared darkest. (Step 2) The remaining LED pairs were adjusted by 90° relative to this reference (from horizontal to vertical).

The remaining three opposing LED pairs were then rotated by 90°, using the LED mounting base as a reference to approximate the angle. In this configuration, the LED pair orthogonal to the first one also became cross-polarised with respect to the camera. The two remaining pairs, located at intermediate positions, were instead oriented at 45° relative to the camera polarisation axis. This arrangement maximises glare suppression for two opposing directions while still providing balanced multi-directional illumination. Under this configuration, a single cross-polarised image with all LEDs activated simultaneously can be used to obtain a glare-free albedo map.
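The difference between the crossed pairs and the pairs at 45° can be estimated with Malus's law, which gives the fraction of linearly polarised light transmitted by an analyser as the squared cosine of the angle between the two polarisation axes. The sketch below is an idealised estimate: it assumes perfect polarisers, specular reflections that fully preserve polarisation, and a CPL that behaves as a linear analyser for incoming light. The function name is hypothetical.

```python
import math

def transmitted_fraction(angle_deg):
    """Malus's law: fraction of linearly polarised light passing an
    ideal analyser whose axis is rotated by angle_deg."""
    return math.cos(math.radians(angle_deg)) ** 2

crossed = transmitted_fraction(90)    # LED pairs cross-polarised with the camera
diagonal = transmitted_fraction(45)   # LED pairs at intermediate positions
print(f"crossed: {crossed:.3f}, diagonal: {diagonal:.3f}")
```

Under these assumptions the crossed pairs contribute essentially no specular light, while the pairs at 45° would still pass about half of it, which is consistent with glare suppression being strongest along two opposing directions.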

3.2. Software Workflow

The software workflow was developed to process the acquired data into PBR textures. It comprises a dual-stage colour calibration procedure, an automated acquisition workflow, and a node-based reconstruction process for generating mainly albedo and normal maps.

3.2.1. Colour Calibration

The workflow begins with a dual-stage colour calibration designed to ensure accurate chromatic reproduction. The first stage was performed through camera profiling. A reference image of the ColorChecker Classic (manufactured by X-Rite in Kentwood, MI, USA) was acquired under the same illumination and exposure conditions used for albedo capture (as described in Section 3.1.2). The RAW image was converted to DNG format and processed with the X-Rite Camera Calibration software (Version 2.2.0) to generate a custom Digital Camera Profile (DCP). By aligning the camera’s recorded RGB values with the known reflectance values of the 24 standard patches, this step corrected device-specific spectral deviations such as hue bias and tonal imbalance [].

The second stage refined chromatic accuracy through a three-dimensional Look-Up Table (3D LUT) constructed in the CIELAB space []. Under the same imaging conditions, the camera captured 154 samples from the RAL Classic colour fan deck, covering a broad and balanced distribution of hues. The measured colours were converted to CIELAB and compared with their reference values from the RAL database to construct a discrete correction grid, while trilinear interpolation was used for intermediate values []. The final LUT was encapsulated into a dedicated MATLAB script, providing a reproducible and transferable procedure for subsequent image correction.
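The interpolation step of the second calibration stage can be sketched as follows. This is an illustrative Python version and not the MATLAB script used in this work. It assumes that the correction has already been resampled onto a regular grid in CIELAB, and the grid size, the Lab bounds, and the function name `apply_lut_trilinear` are assumptions.

```python
import numpy as np

def apply_lut_trilinear(lab, lut, lo, hi):
    """Correct one CIELAB colour with a regular 3D LUT.

    lab:    (3,) input colour.
    lut:    (n, n, n, 3) corrected Lab values stored at the grid nodes.
    lo, hi: (3,) Lab bounds covered by the grid.
    """
    n = lut.shape[0]
    # Continuous grid coordinates, clamped to the LUT domain.
    t = (np.asarray(lab, dtype=float) - lo) / (hi - lo) * (n - 1)
    t = np.clip(t, 0, n - 1)
    i0 = np.minimum(t.astype(int), n - 2)   # lower corner of the enclosing cell
    f = t - i0                              # fractional position inside the cell
    out = np.zeros(3)
    # Weighted sum over the eight corners of the cell.
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((f[0] if dx else 1 - f[0]) *
                     (f[1] if dy else 1 - f[1]) *
                     (f[2] if dz else 1 - f[2]))
                out += w * lut[i0[0] + dx, i0[1] + dy, i0[2] + dz]
    return out

# Toy LUT: an identity grid plus a constant offset (hypothetical values).
lo = np.array([0.0, -128.0, -128.0])
hi = np.array([100.0, 127.0, 127.0])
axes = [np.linspace(lo[c], hi[c], 5) for c in range(3)]
grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)
shifted_lut = grid + np.array([1.0, -2.0, 0.5])
corrected = apply_lut_trilinear([52.3, 10.0, -40.0], shifted_lut, lo, hi)
```

Trilinear interpolation reproduces any correction that varies linearly within a cell exactly, so the toy offset is recovered without error. A real correction grid derived from measured samples would vary from node to node.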

3.2.2. Image Processing

After the calibration stage, the images of the scanned material were processed to prepare a dataset. Each acquisition included one uniformly illuminated frame for albedo and eight directionally illuminated frames for normal reconstruction, as described in Section 3.1.2. The RAW files were first imported into Adobe Lightroom, where the previously generated DCP was applied. All images were then exported as 16-bit TIFF files, preserving dynamic range. Subsequently, a dedicated MATLAB (Version R2024b) script was executed to apply the 3D LUT correction, with the output likewise maintained in TIFF format. Once colour correction was complete, the images were cropped into square frames and rescaled to a resolution of 4096 by 4096 pixels using Adobe Photoshop (Version 2024). The corrected TIFF images thus provided a uniform, high-resolution dataset ready for import into Adobe Substance 3D Designer, which natively supports TIFF as an input resource.

3.2.3. Texture Reconstruction

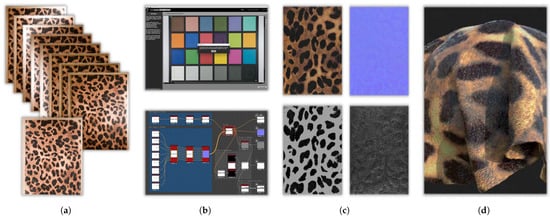

The corrected image set was imported into Adobe Substance 3D Designer for material reconstruction. This software was selected because it combines accessibility with advanced functionality, integrates dedicated nodes for material scanning, and is supported by extensive tutorials that facilitate reproducibility. A reusable node-graph template was developed to streamline the process, structured into colour-coded blocks for clarity and adaptability. The template accepts nine calibrated input images: one uniformly illuminated frame for albedo extraction and eight directionally illuminated frames for normal reconstruction based on photometric stereo.

Within the node graph, two main processing branches were implemented. The albedo branch handles the uniformly illuminated input, applying cropping, colour equalisation, and optional tiling to generate the final albedo map. The normal branch processes the eight directional inputs in sequence, estimating per-pixel surface normals through a photometric stereo node; the correct ordering of light directions is preserved to ensure geometric fidelity. Additional modules, such as multi-cropping, tone balancing, or auto-tiling, can be activated depending on the material properties and user requirements. Real-time previews within the software allow interactive inspection of both 2D textures and 3D renderings, ensuring that reconstruction outcomes can be evaluated and refined before final export.

The reconstruction pipeline is designed to output two core PBR texture maps: albedo and normal. Both maps are generated directly at 4096 × 4096 pixels, which provides high visual quality while maintaining compatibility with real-time rendering performance. This outcome aligns with the theoretical capabilities of photometric stereo, which, by nature, can directly recover only albedo and normal information. Additional maps—including roughness, height, metallic, or ambient occlusion—can be derived using preconfigured nodes integrated into the template and are exported in linear space. The albedo is exported in sRGB colour space, while the normal map follows either the OpenGL or DirectX convention, depending on the target engine. Supported file formats include TIFF, PNG, and PSB, ensuring compatibility with engines such as Unity and Unreal Engine. An overview of the complete workflow is illustrated in Figure 4, using a sample of leopard fabric.
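The two normal-map conventions differ only in the sign of the green (Y) channel, so converting between them amounts to flipping that channel before encoding. The sketch below illustrates this outside the node graph; the function name and the 8-bit encoding are illustrative assumptions and do not describe the export code of Substance 3D Designer.

```python
import numpy as np

def encode_normal_map(normals, convention="opengl"):
    """Encode unit normals in [-1, 1] as 8-bit RGB.

    The OpenGL convention stores +Y in the green channel, whereas the
    DirectX convention stores -Y, so the channel is negated for DirectX.
    """
    n = np.array(normals, dtype=float)      # copy, so the input is not modified
    if convention == "directx":
        n[..., 1] = -n[..., 1]
    elif convention != "opengl":
        raise ValueError("convention must be 'opengl' or 'directx'")
    return np.round((n * 0.5 + 0.5) * 255).astype(np.uint8)

# One-pixel example: a normal tilted towards +Y.
tilted = np.array([[[0.0, 0.6, 0.8]]])
gl_pixel = encode_normal_map(tilted, "opengl")[0, 0]
dx_pixel = encode_normal_map(tilted, "directx")[0, 0]
```

Importing a map with the wrong convention makes bumps appear as dents along the vertical axis, so the convention should be checked against the target engine before export.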

Figure 4.

Workflow of the proposed material scanning system, demonstrated with a leopard fabric sample: (a) captured raw images with the scanner; (b) calibration and processing pipeline in software; (c) exported PBR texture maps; (d) rendered digital material in Adobe Substance 3D.

4. System Evaluation

The evaluation aims to verify both the accuracy and the practical usability of the proposed scanning system. A direct comparison with commercial scanners was deliberately avoided, as their acquisition pipelines are opaque and typically involve undisclosed post-processing. Furthermore, differences in resolution, colour space and other parameters make precise alignment of textures unreliable and prone to bias, meaning that device-to-device benchmarking cannot provide a trustworthy basis for validation. Instead, we adopted an indirect evaluation strategy based on standardised reference targets and controlled experiments. Albedo accuracy is assessed using colour samples with known reference values, while normal map fidelity is evaluated through Computer-Aided Design (CAD)-based reference geometries with computable ground truth normals. Additionally, usability and cost factors are examined to provide a comprehensive view of the system’s efficiency and accessibility.

4.1. Albedo Map Evaluation

4.1.1. Reference Standards and Evaluation Procedure

To evaluate the colour accuracy of the reconstructed albedo maps, 39 samples were selected from the RAL Classic K7 colour fan deck, a widely adopted colour standard in industry []. These samples cover all nine hue-based categories defined by the RAL Classic system, ensuring broad representation of the perceptual colour space and a reliable basis for quantitative analysis. Importantly, although the colour calibration procedure described in Section 3.2.1 was based on the same RAL Classic system, the 39 verification samples did not overlap with those used for calibration. The assessment was therefore performed on independent references rather than on data implicitly used for correction.

Each sample was scanned using the proposed workflow, and the resulting albedo maps were compared against the corresponding reference values defined in the official RAL database. The captured images had already undergone the dual-stage colour calibration described in Section 3.2.1, so that the remaining deviations could be attributed to the performance of the scanning workflow rather than camera or lighting bias. For each reconstructed albedo map, the mean colour of the sample region was extracted, converted into CIELAB coordinates, and compared to its reference values. Perceptual differences were then quantified using the ΔE00 metric, which is a reliable measure of visual colour fidelity because it accounts for the non-uniform sensitivity of human vision to changes in different regions of the colour space [].
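For readers who wish to reproduce the comparison, the CIEDE2000 colour difference between a measured and a reference CIELAB colour can be computed as sketched below. The code follows the standard published formulation with unit parametric factors; it is an illustrative Python version and not the implementation used in this work. The example pair is a commonly used verification pair and is not taken from the RAL measurements.

```python
import math

def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 colour difference between two CIELAB colours."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    # Step 1: rescale a* to compensate for chroma non-uniformity near grey.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar**7 / (c_bar**7 + 25.0**7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360 if c2p else 0.0
    # Step 2: lightness, chroma, and hue differences.
    d_lp = L2 - L1
    d_cp = c2p - c1p
    if c1p * c2p == 0:
        d_hp_deg = 0.0
    else:
        d_hp_deg = h2p - h1p
        if d_hp_deg > 180:
            d_hp_deg -= 360
        elif d_hp_deg < -180:
            d_hp_deg += 360
    d_hp = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp_deg / 2))
    # Step 3: mean values and weighting functions.
    l_bar = (L1 + L2) / 2
    c_bar_p = (c1p + c2p) / 2
    if c1p * c2p == 0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        h_bar = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        h_bar = (h1p + h2p + 360) / 2
    else:
        h_bar = (h1p + h2p - 360) / 2
    t = (1 - 0.17 * math.cos(math.radians(h_bar - 30))
         + 0.24 * math.cos(math.radians(2 * h_bar))
         + 0.32 * math.cos(math.radians(3 * h_bar + 6))
         - 0.20 * math.cos(math.radians(4 * h_bar - 63)))
    d_theta = 30 * math.exp(-(((h_bar - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25.0**7))
    s_l = 1 + 0.015 * (l_bar - 50) ** 2 / math.sqrt(20 + (l_bar - 50) ** 2)
    s_c = 1 + 0.045 * c_bar_p
    s_h = 1 + 0.015 * c_bar_p * t
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c
    # Step 4: combine the weighted terms, including the rotation term.
    tl, tc, th = d_lp / (kL * s_l), d_cp / (kC * s_c), d_hp / (kH * s_h)
    return math.sqrt(tl**2 + tc**2 + th**2 + r_t * tc * th)

JND = 2.3   # perceptual threshold used in this evaluation
example = ciede2000((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485))
print(f"dE00 = {example:.4f}, above JND: {example > JND}")
```

In the evaluation procedure, the first argument would be the mean CIELAB colour of the scanned sample region and the second the corresponding RAL reference value.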

4.1.2. Quantitative Results and Analysis for Albedo Map

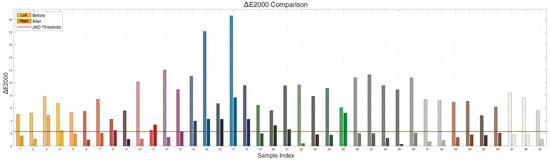

To analyse the colour accuracy of the reconstructed albedo maps, the evaluation was carried out in three stages: first at the level of individual samples, then by aggregating results into hue categories, and finally by examining the overall distribution of errors. At the individual sample level, the average ΔE00 decreased from 8.20 before calibration to 2.29 after calibration, with a maximum residual error of 7.67, as illustrated in Figure 5. While a few samples remained above the Just Noticeable Difference (JND) threshold of 2.30, the majority fell within or below this range []. This indicates that the calibration workflow effectively compresses the error distribution, lowering both the overall deviation and the number of outliers above the perceptual threshold. Detailed per-sample results are reported in Table A7 in Appendix A.

Figure 5.

Comparison of ΔE00 before and after colour calibration across 39 validation samples. The colour of each bar corresponds to the actual colour of the respective test sample. For each sample, the left bar represents ΔE00 before calibration, and the right bar represents ΔE00 after calibration. The red horizontal line indicates the JND threshold at 2.3.

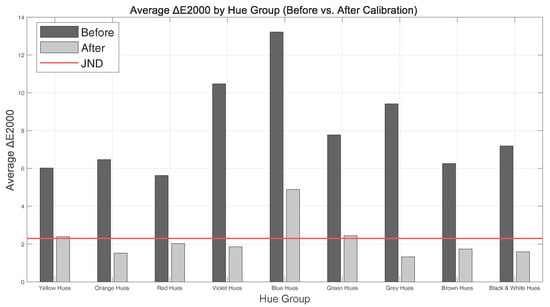

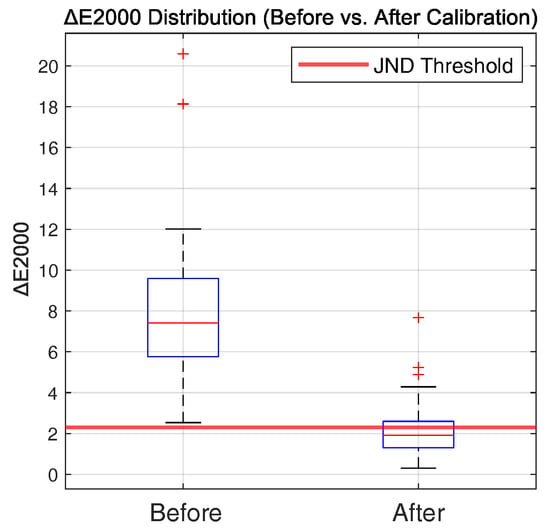

When grouped by hue category, the system showed broadly consistent performance across the perceptual colour space, as reported in Figure 6. Yellow, orange, red, green, grey, brown, and neutral hues were generally reproduced with ΔE00 values close to or below the JND threshold. In contrast, the blue group showed the largest deviations, with a mean ΔE00 of 4.89 after calibration, as listed in Table A6 in Appendix A. This reduced accuracy is consistent with known sensor limitations in the blue channel and the lower exposure response of dark or saturated blues under fixed illumination. The overall distribution further highlights the impact of calibration. As shown in Figure 7, the boxplot illustrates a marked compression of ΔE00 values after correction, with both the mean and variance reduced. Most samples clustered tightly below the JND threshold, with only a limited number of outliers. This indicates not only improved average accuracy but also enhanced stability and predictability across the dataset.

Figure 6.

Comparison of the average ΔE00 before and after colour calibration across the 9 RAL hue groups. For each hue group, the left bar represents the mean ΔE00 before calibration, and the right bar represents the mean ΔE00 after calibration. The red horizontal line indicates the JND threshold at 2.3.

Figure 7.

Box plot comparison of ΔE00 distributions before and after calibration, with the red horizontal line indicating the JND threshold at 2.3. The red crosses represent statistical outliers.
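The hue-group aggregation can be illustrated with a short Python sketch that groups per-sample values by the leading digit of the RAL code and compares each group mean with the JND threshold. The values below are the after-calibration entries for the orange, violet, and blue samples in Table A7; the function and variable names are illustrative assumptions, not part of the original workflow.

```python
from collections import defaultdict
from statistics import mean

JND = 2.30  # threshold used in the text

# (RAL code, dE00 after calibration) for a subset of the samples in Table A7.
after_calibration = [
    (2002, 1.00), (2010, 2.04),                                # orange hues
    (4001, 1.40), (4006, 2.31),                                # violet hues
    (5000, 3.97), (5005, 4.28), (5011, 4.26), (5017, 7.67), (5022, 4.26),  # blue hues
]


def group_means(samples):
    """Average the colour differences per RAL hue group (1xxx ... 9xxx)."""
    groups = defaultdict(list)
    for code, delta_e in samples:
        groups[f"{code // 1000}xxx"].append(delta_e)
    return {name: mean(values) for name, values in groups.items()}


means = group_means(after_calibration)
for name, value in sorted(means.items()):
    status = "above" if value > JND else "at or below"
    print(f"{name}: mean dE00 = {value:.2f} ({status} the JND threshold)")
```

For these three groups the sketch reproduces the corresponding means listed in Table A6 to within rounding.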

4.1.3. Exploratory Comparison with Commercial Scanners

An exploratory comparison was conducted using a commercial scanning device (from Metis Systems) on physical material samples. Due to differences in resolution, a strict pixel-level comparison was not attempted. Instead, a block-wise analysis was performed. After coarse alignment using an affine transformation, the images were divided into non-overlapping blocks of 5 × 5 pixels, and the average RGB value was computed within each block. These averages were then converted to CIELAB space for calculating colour differences against the corresponding blocks in the reference. This strategy smooths out local misalignments and noise, enabling a more stable region-level comparison without requiring exact pixel correspondence or resolution standardisation.
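The block-averaging step can be sketched as follows. This is a minimal pure-Python illustration that assumes the two images are already aligned and stored as nested lists of RGB tuples; the function name `block_means` and the choice to discard incomplete blocks at the image border are assumptions. Conversion of the block means to CIELAB and the colour-difference calculation would follow as separate steps.

```python
def block_means(image, block=5):
    """Average RGB over non-overlapping block x block tiles.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Rows and columns that do not fill a complete block are discarded.
    """
    rows = len(image) // block
    cols = len(image[0]) // block
    grid = []
    for by in range(rows):
        grid_row = []
        for bx in range(cols):
            sums = [0.0, 0.0, 0.0]
            for y in range(by * block, (by + 1) * block):
                for x in range(bx * block, (bx + 1) * block):
                    for ch in range(3):
                        sums[ch] += image[y][x][ch]
            grid_row.append(tuple(s / (block * block) for s in sums))
        grid.append(grid_row)
    return grid


# Toy 10 x 10 image: left half dark, right half light.
toy = [[(10, 20, 30)] * 5 + [(200, 210, 220)] * 5 for _ in range(10)]
grid = block_means(toy)
print(len(grid), len(grid[0]), grid[0][0], grid[0][1])
```

Applying the same function to the reference and test images yields two grids of equal size that can be compared block by block.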

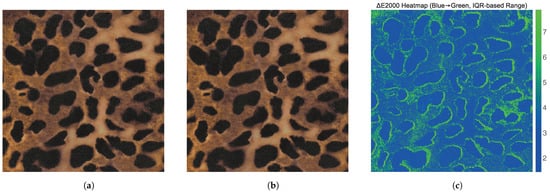

Figure 8 shows the albedo maps of a representative leopard-pattern sample obtained from both systems, together with the corresponding block-wise error heatmap. Errors were generally low in homogeneous areas and increased at the boundaries of dark spots, where local misalignments had a greater impact.

Figure 8.

Visual and numerical comparison of aligned albedo maps: (a) albedo map acquired from a commercial-grade scanning system; (b) albedo map acquired from our custom-built scanner; (c) ΔE00 heatmap, with colour scale rendered from blue (low difference) to green (high difference).

In addition to the leopard-pattern leather shown above, three more samples were tested: a woven fabric, an embossed leather, and a velvet. For clarity, only the leather case is illustrated, while the quantitative results for all four materials are summarised in Table 1. The reported ΔE00 values were obtained using the same block-wise analysis described above. Across the dataset, errors were generally low in uniform regions and increased mainly at high-contrast or structurally complex boundaries, such as fabric stripes, embossed ridges, or the shaded areas of velvet. Mean ΔE00 values ranged between 2.58 and 3.54, exceeding the strict JND threshold of 2.30. These results are influenced by factors such as imperfect alignment, resolution differences, and local texture complexity and should therefore be regarded as indicative rather than absolute measures of perceptual fidelity.

Table 1.

Mean ΔE00 values for four tested materials, with notes on deviation patterns observed in block-wise analysis.

4.2. Normal Map Evaluation

4.2.1. Reference Geometries and Ground Truth Generation

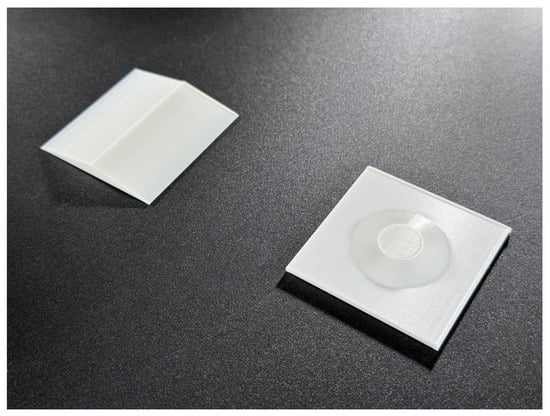

To enable a quantitative evaluation of surface normal accuracy, two reference geometries were specifically designed []. Photographs of the fabricated specimens are shown in Figure 9. The left one depicts a triangular slope composed of two planar regions that meet along a central ridge. Its large inclined surfaces provide a controlled basis for assessing the scanner’s ability to reproduce uniform normals over extended flat regions. The right one is a bevelled cylinder, whose curved sidewall introduces a continuous radial gradient in orientation. This geometry is suitable for evaluating how accurately the system can capture smoothly varying surface normals.

Figure 9.

Reference geometries for normal map validation: triangular slope (left) and bevelled cylinder (right).

Ground truth normal maps for both geometries were directly generated from the corresponding CAD models, providing exact knowledge of the theoretical surface orientations. These maps served as benchmarks for quantitative comparison with the scanned results. Physical specimens were fabricated using a high-precision stereolithography (SLA) 3D printer from Formlabs, which minimised manufacturing deviations and ensured dimensional fidelity to the digital models.

4.2.2. Alignment and Image Preprocessing

Before quantitative evaluation, the scanned normal maps were spatially aligned with the ground truth models to enable valid pixel-wise comparison. Alignment was based on geometric features directly visible in the maps. For the triangular slope, the central ridge line was used as the primary reference to correct rotation and positioning. For the bevelled cylinder, the circular boundary provided a reliable basis for refining centring and scale. These procedures ensured geometric consistency between the reconstructed and theoretical maps before the error analysis.

Following alignment, regions of interest (ROIs) were defined to exclude areas likely to bias the results. In the triangular slope, the ridge zone was omitted, since even minor misalignments in this discontinuous area produce disproportionately large angular errors that do not reflect performance on the planar slopes. In the bevelled cylinder, only the annular sidewall was retained, while the flat top and bottom surfaces were excluded. These planar regions contain little geometric variation and thus contribute minimally to the evaluation of normal accuracy.

Parameter sensitivity was also considered. In Adobe Substance 3D Designer, surface normals are reconstructed using the Multi Angle to Normal node, which requires adjusting a key parameter controlling normal intensity within the range of [0, 1]. Based on preliminary tests and common practice, values below 0.50 tend to produce overly flattened normals, while values above 0.90 exaggerate noise and artefacts. The interval 0.50–0.90 was therefore selected as a practical range for optimisation. Within this range, nine values were tested for each geometry in steps of 0.05. Each reconstructed map was compared against the corresponding ground truth, and the parameter yielding the lowest overall error was selected. The representative error reported for each geometry thus reflects the best achievable performance of the scanner under the current workflow.
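The selection procedure amounts to a one-dimensional grid search, sketched below in Python. Since the reconstruction itself is performed in Adobe Substance 3D Designer, the error callback here is a hypothetical placeholder: in practice it would return the angular error measured for a map exported at the given intensity. The function names and the toy error curve are assumptions for illustration only.

```python
def candidate_intensities(start=0.50, stop=0.90, step=0.05):
    """Return the evenly spaced intensity values to be tested."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(count)]


def select_intensity(candidates, error_fn):
    """Evaluate every candidate and return the one with the lowest error."""
    errors = {value: error_fn(value) for value in candidates}
    best = min(errors, key=errors.get)
    return best, errors


def toy_error(intensity):
    # Placeholder error curve (degrees); not measured data.
    return 2.0 + 40.0 * (intensity - 0.70) ** 2


candidates = candidate_intensities()
best, errors = select_intensity(candidates, toy_error)
print(candidates)
print(best)
```

The default arguments generate the nine values from 0.50 to 0.90 described above.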

4.2.3. Quantitative Results and Analysis for Normal Map

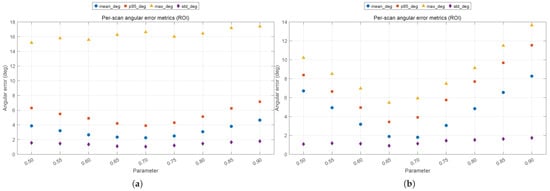

Across the nine tested configurations, the angular error decreased as the intensity parameter increased up to 0.70, after which performance began to degrade. Both geometries therefore achieved their minimum error at a parameter value of 0.70, which was adopted as the representative setting for the present configuration, as illustrated in Figure 10.

Figure 10.

Angular error metrics of surface normals across nine tested configurations for different intensity parameter values: triangular slope (a) and bevelled cylinder (b). In both cases, the minimum error occurred at a parameter value of 0.70, which was adopted as the representative setting.

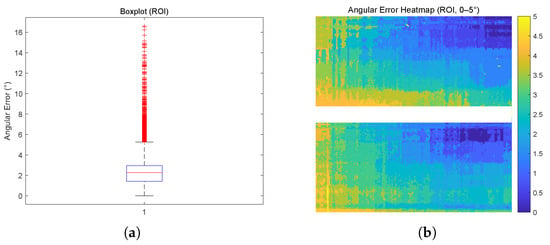

At this setting, the triangular slope yielded a mean angular error of 2.25°, with a median of 2.27°, a 95th percentile of 3.89°, and a maximum error of 16.62°. The boxplot in Figure 11a shows that the majority of pixels fall below 3°, with only a small fraction extending beyond 10°. The corresponding heatmap in Figure 11b further indicates that the higher deviations are mainly confined to the ROI boundaries, while the planar slope regions remain within the range of [0, 5] degrees.

Figure 11.

Evaluation of surface normal accuracy on the triangular slope geometry: (a) angular error distribution boxplot, where the red horizontal line represents the median value and the red crosses denote statistical outliers; (b) spatial error heatmap.

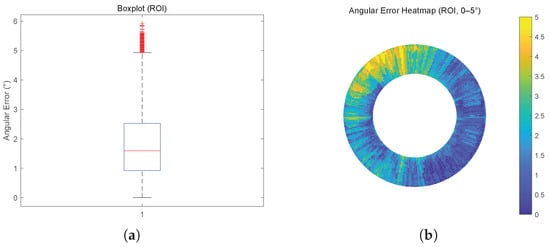

The bevelled cylinder exhibited slightly lower errors overall. At the same parameter value, the mean angular error was 1.80°, with a median of 1.60°, a 95th percentile of 3.91°, and a maximum error of 5.92°. The boxplot in Figure 12a confirms that most pixels lie within 3°, with only a limited tail extending towards 6°. The corresponding heatmap in Figure 12b demonstrates generally uniform accuracy across the annular sidewall, with only small localised regions of higher deviation.

Figure 12.

Evaluation of surface normal accuracy on the bevelled cylinder geometry: (a) angular error distribution boxplot, where the red horizontal line represents the median value and the red crosses denote statistical outliers; (b) spatial error heatmap.
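The angular-error statistics reported for both geometries can be computed along the following lines. This Python sketch assumes 8-bit normal maps in which each channel maps linearly from [0, 255] to [-1, 1], and it uses a nearest-rank percentile; both choices, as well as all function names and the toy pixel values, are assumptions and may differ from the implementation used in this study.

```python
import math
from statistics import mean, median


def decode_normal(rgb):
    """Map an 8-bit RGB normal-map pixel to a unit vector."""
    v = [2.0 * c / 255.0 - 1.0 for c in rgb]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def angular_error_deg(n_est, n_ref):
    """Angle in degrees between two unit normals."""
    dot = sum(a * b for a, b in zip(n_est, n_ref))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def percentile(values, q):
    """Nearest-rank percentile (one of several common definitions)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100.0))
    return ordered[rank - 1]


def summarise(errors):
    """Collect the four statistics reported in the text."""
    return {
        "mean": mean(errors),
        "median": median(errors),
        "p95": percentile(errors, 95),
        "max": max(errors),
    }


# Toy example: scanned versus ground-truth pixels inside an ROI.
scanned = [(128, 128, 255), (140, 128, 250), (128, 120, 252)]
reference = [(128, 128, 255), (128, 128, 255), (128, 128, 255)]
errors = [
    angular_error_deg(decode_normal(s), decode_normal(r))
    for s, r in zip(scanned, reference)
]
print(summarise(errors))
```

Restricting the pixel lists to the ROI defined in Section 4.2.2 keeps ridge and boundary pixels out of the statistics.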

4.3. Usability and Cost Evaluation

4.3.1. Accessibility of Components and Operation

The scanner relies entirely on commercially available components, including MR16 LED bulbs, a consumer-grade digital camera, and an Arduino-based control unit. These elements can be sourced at low cost and assembled without the need for specialised equipment. The structural framework is likewise constructed from standard plywood panels and PETG connectors, both of which can be produced using standard fabrication methods.

In terms of operation, the workflow is designed to require only basic technical skills. Colour calibration is performed using standard reference charts such as the X-Rite ColorChecker and the RAL Classic fan deck. The only manual intervention required during LUT construction is the extraction of measured colour values from the scanned patches—potentially assisted by web-based tools—and the retrieval of the corresponding reference values from the RAL database. Once these values are provided, a MATLAB script automatically generates the LUT. Image pre-processing is performed in widely adopted platforms such as Adobe Lightroom and Photoshop, while texture reconstruction is handled through a preconfigured node graph in Adobe Substance 3D Designer, with parameters and outputs adjustable as required. These tools are extensively documented and familiar to users with general photography or image-processing experience, ensuring that the workflow can be adopted without requiring domain-specific expertise.

4.3.2. Time and Cost Efficiency

Beyond accessibility, the system was also designed to minimise both acquisition time and overall cost. Table 2 summarises the representative time requirements for each stage of the workflow, divided into assembly, calibration, and operation, based on our evaluation of four representative materials: smooth leather, woven fabric, embossed leather, and velvet. Fabrication time for laser-cut or 3D-printed components cannot be precisely determined, as it depends on the equipment available. However, once all parts are obtained, the structural framework can be assembled in 10 min using a screwdriver. The Arduino control circuit, responsible for LED switching and camera triggering, was designed to avoid soldering by relying on a solderless breadboard with jumper wires for logic connections and terminal blocks with silicone wires for power distribution. With this approach, the complete mechanical and electrical assembly can be accomplished within approximately two hours.

Table 2.

Overview of scanner workflow stages, including approximate task durations and frequencies.

Calibration is also performed only once, unless the illumination, camera model, or exposure parameters are changed. The first stage, camera profiling with the X-Rite ColorChecker, requires no more than 10 min, including both image capture and profile generation. The second stage, construction of the 3D LUT, depends on the number of colour samples acquired. In the present study, 154 RAL colours were used, with image capture taking around 30 min and an additional 30 min required for retrieving and organising the reference CIELAB values. Once completed, this calibration can be reused across multiple scanning sessions without repetition.

The operation can then be repeated for any number of material samples. Image acquisition, controlled by the Arduino sequence, takes about 15 s per sample and remains consistent through automation. Post-processing involves three steps: image editing, reconstruction in Adobe Substance 3D Designer to generate albedo and normal maps, and optional tiling for seamless textures. The average post-processing time was approximately 5 min. Such rapid turnaround highlights the system’s suitability for iterative material studies and real-time rendering applications.
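As a rough illustration of how these figures combine, the following Python sketch estimates the total time for a scanning session. The constants are taken from the durations stated above (with camera profiling counted at its 10 min upper bound); the function name and the session size of 20 samples are illustrative assumptions.

```python
ASSEMBLY_MIN = 120.0                   # approx. two hours, performed once
CALIBRATION_MIN = 10.0 + 30.0 + 30.0   # camera profile + RAL capture + reference lookup
ACQUISITION_MIN = 15.0 / 60.0          # about 15 s per sample
POST_PROCESSING_MIN = 5.0              # average per sample


def session_minutes(n_samples, include_setup=False):
    """Estimate the total time in minutes for scanning n_samples materials."""
    per_sample = ACQUISITION_MIN + POST_PROCESSING_MIN
    setup = ASSEMBLY_MIN + CALIBRATION_MIN if include_setup else 0.0
    return setup + n_samples * per_sample


print(session_minutes(20))                      # routine session
print(session_minutes(20, include_setup=True))  # first session, including setup
```

Because assembly and calibration are one-off steps, the per-sample time dominates once the scanner is in regular use.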

In terms of financial cost, the scanner was assembled for approximately EUR 550, which includes the structural framework, the electronic components, and auxiliary materials. The camera was not included in this estimate. By contrast, professional material scanners such as the X-Rite TAC7 are estimated to cost over EUR 100,000, while commercial services typically charge several hundred euros per sample. The modest upfront investment and negligible per-sample operating cost make the proposed scanner a highly cost-effective alternative for research and design workflows.

5. Conclusions

This work presented a fully customisable and cost-effective material scanning system designed to generate ready-to-use PBR texture maps for real-time rendering applications. The hardware configuration follows the principles of photometric stereo, utilising eight fixed-angle LEDs arranged in an octagonal layout with a vertically mounted camera to ensure stable capture. A cross-polarisation setup was integrated to suppress specular reflection and isolate diffuse surface information. On the software side, the workflow incorporates a two-stage colour calibration procedure, consisting of camera profiling through an X-Rite colour chart and fine-grained correction via a 3D LUT built from 154 RAL samples. The calibrated images are then processed in Adobe Substance 3D Designer using a reusable node-based pipeline, which outputs tiled albedo and normal maps at a 4096 by 4096 resolution, directly compatible with standard rendering engines.

The proposed system was evaluated in terms of both colour and geometric accuracy, as well as usability and cost effectiveness. For albedo maps, 39 RAL colour samples were processed through the complete workflow, yielding a mean ΔE00 of 2.29 after calibration, with most samples reproduced within the perceptual JND threshold of 2.30. Only saturated blue hues showed larger deviations, consistent with known limitations in camera sensor sensitivity. For normal maps, reference geometries fabricated from CAD models demonstrated that the scanner accurately captured both uniform planar slopes and smoothly varying cylindrical gradients, with mean angular errors of 2.25° for the triangular slope and 1.80° for the bevelled cylinder, as reported in Section 4.2.3. Beyond accuracy, the workflow was shown to be efficient and accessible: assembly and calibration can be completed with modest effort, while a full acquisition and post-processing sequence can be completed in approximately 5 min. The hardware was assembled for approximately EUR 550, significantly lower than the cost of commercial devices, underscoring the practical viability of the proposed system. Importantly, because the evaluation relies on objective standards, such as colour samples and CAD-derived geometries, rather than device-specific outputs, the same methodology could also serve as a reproducible protocol for assessing other material scanning systems.

While the evaluation confirmed the practicality and accuracy of the proposed workflow, some constraints remain that define its current scope of application. The system is currently configured to digitise A4-sized flat samples, reflecting its optical design and the intended use case of fabrics and leathers. As a result, it cannot be directly applied to curved or complex geometries. Transparent or highly reflective materials also remain challenging, as photometric stereo is not well suited to capturing their optical behaviour []. A possible solution already under consideration is to integrate upward-facing light sources at the base of the scanner, enabling partial acquisition of subsurface scattering or transmissive effects. In addition, the fidelity of the generated textures is inherently affected by the characteristics of the imaging device. As highlighted in our evaluation, consumer-grade cameras may exhibit colour biases stemming from their spectral response, along with limitations in noise performance and dynamic range. Finally, surface roughness cannot be directly measured with the present photometric-stereo hardware: the standard photometric-stereo image stack supports recovery of surface normals (and diffuse albedo) but does not directly yield the microfacet roughness parameter used in PBR models []. While our Substance Designer template includes heuristic nodes that infer roughness from albedo/normal inputs, obtaining spatially varying roughness in a physically reliable manner requires additional reflectance information or priors, either via data-driven Spatially Varying Bidirectional Reflectance Distribution Function (SVBRDF) estimation or specialised measurement with gonioreflectometric setups []. We consider these as potential extensions for future versions of the system.

Future developments will focus on broadening both the material range and the scope of evaluation of the system. Extending the hardware to handle non-flat samples represents a natural next step, together with the exploration of lighting configurations that support partial transparency capture. On the software side, the colour calibration procedure could be refined by adopting denser reference datasets such as the RAL Design Plus system with over 1000 colours, thereby improving coverage of the CIELAB colour space and enabling investigation of whether a larger sample set can mitigate the higher deviations observed in the blue hue group. Roughness estimation will also be further investigated, combining heuristic post-processing with data-driven inference methods and, where feasible, complementary instruments. In addition, a subjective evaluation will be introduced to complement the objective metrics. Reconstructed textures from the proposed scanner and from professional systems will be projected alongside real physical samples, and users will be asked to rate their perceived similarity. These directions aim to consolidate the system into a more comprehensive framework for material digitisation, directly supporting real-time rendering applications.

Author Contributions

Conceptualization, G.C., F.M. and L.W.; methodology, F.M. and L.W.; software, L.W.; validation, L.W.; formal analysis, L.W.; investigation, L.W.; resources, F.M.; data curation, L.W.; writing—original draft preparation, L.W.; writing—review and editing, G.C. and F.M.; visualization, L.W.; supervision, G.C. and F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AR | Augmented Reality |
| VR | Virtual Reality |
| CIEDE2000 | CIE 2000 Colour-Difference Formula (ΔE00) |
| PBR | Physically Based Rendering |
| MLT | Metropolis Light Transport |
| RANSAC | Random Sample Consensus |
| SVD | Singular Value Decomposition |
| BRDF | Bidirectional Reflectance Distribution Function |
| LED | Light Emitting Diode |
| MR | Multifaceted Reflector |
| CRI | Colour Rendering Index |
| MF | Manual Focusing |
| LUT | Lookup Table |
| DCP | DNG Camera Profile |
| TRRS | Tip–Ring–Ring–Sleeve |
| PETG | Polyethylene Terephthalate Glycol |
| CPL | Circular Polarising Filter |
| LCD | Liquid Crystal Display |
| CIELAB | Commission Internationale de l’Éclairage L*a*b* |
| JND | Just Noticeable Difference |
| SLA | Stereolithography |
| ROI | Region of Interest |
| SVBRDF | Spatially Varying Bidirectional Reflectance Distribution Function |

Appendix A

Table A1.

Detailed specifications of the lighting system.

| Parameter | Value/Range | Notes |
|---|---|---|
| LED type | GU 5.3, MR16 | Widely available, cost-effective modules |
| Beam angle | Narrow, ∼15° | Reduces spill light and improves directional control |
| Incidence angle | 20° | Achieved using adjustable LED frames |
| Colour temperature | 6000 K | Selected to approximate neutral daylight conditions |
| Colour rendering index | >95 | Ensures accurate reproduction of surface colours |
| Supply voltage | 12 V, DC | Driven by external regulated power supply |

Table A2.

Detailed specifications of the imaging system.

| Parameter | Value/Setting | Notes |
|---|---|---|
| Camera model | Panasonic Lumix DMC-G80 | Consumer-grade digital camera, readily available |
| Lens | 12–60 mm, ⌀ 58 mm | Versatile focal range, suitable for both framing and detail capture |
| Focus mode | Manual (locked) | Ensures surface micro-details remain sharp |
| Sample–camera distance | 450 mm | Provides full coverage of A4 samples within the field of view (FOV) |
| ISO | ISO 100 (lowest) | Minimises sensor noise |
| Aperture | f/11 (≈two stops below maximum) | Balances sharpness with sufficient depth of field |
| Shutter speed | 1/ (minimum safe exposure) | Avoids motion blur while preserving correct exposure |
| File format | RAW (convertible to DNG, TIFF, JPEG) | Required for later calibration and reconstruction |

Table A3.

Detailed specifications of the structural components.

| Component | Specification | Notes |
|---|---|---|
| Framework material | Laser-cut plywood, thickness 10 mm | Opaque, prevents ambient light interference |
| Connectors | 3D-printed PETG | Provides rigid joints |
| Background plate | Black matte acrylic, thickness 5 mm | Reduces reflections and simplifies image segmentation |
| Camera mount | Manfrotto Tilt Tripod Head, 3-axis | Ensures vertical alignment of lens |

Table A4.

Detailed specifications of the electrical components.

| Component | Specification | Notes |
|---|---|---|
| Control board | Arduino Uno R3 | Manages LED switching and camera trigger |
| Relay modules | 8-channel and 2-channel relay boards | One for LED illumination, one for camera TRRS trigger |
| Power supply | 12 V, 8.5 A, DC switching power supply | Provides current for MR16 LEDs |
| Wiring | Breadboard with jumper wires; connection terminal with silicone wires | Solderless connections for ease of assembly |
| Camera trigger interface | 2.5 mm to 3.5 mm TRRS audio cable, carbon film resistors | Simulates shutter triggering of the Panasonic camera |

Table A5.

Detailed specifications of the polarisation filters.

| Component | Specification | Notes |
|---|---|---|
| LED filter | Linear polariser, adhesive type, transmission 42% ± 2%, extinction ratio 99.9% | Cut and mounted on each LED front surface; initial orientation horizontal |
| Camera filter | Circular polariser, screw-in type, transmission 30% ± 2%, extinction ratio > 99.9% | Mounted on camera lens; rotated to achieve cross-polarisation |

Table A6.

List of the 9 RAL hue groups used for colour accuracy validation.

| RAL Code | Hue Group | Calibration Samples | Validation Samples | Mean ΔE00 Before Calibration | Mean ΔE00 After Calibration |
|---|---|---|---|---|---|
| 1xxx | Yellow hues | 22 | 5 | 6.03 | 2.39 |
| 2xxx | Orange hues | 17 | 2 | 6.47 | 1.52 |
| 3xxx | Red hues | 18 | 4 | 5.63 | 2.02 |
| 4xxx | Violet hues | 30 | 2 | 10.48 | 1.86 |
| 5xxx | Blue hues | 8 | 5 | 13.22 | 4.89 |
| 6xxx | Green hues | 9 | 7 | 7.78 | 2.44 |
| 7xxx | Grey hues | 8 | 7 | 9.42 | 1.32 |
| 8xxx | Brown hues | 27 | 4 | 6.26 | 1.74 |
| 9xxx | White and Black hues | 15 | 3 | 7.19 | 1.59 |

Table A7.

List of the 39 RAL samples used for colour accuracy validation.

| RAL Code | Colour Name | ΔE00 Before | ΔE00 After | RAL Code | Colour Name | ΔE00 Before | ΔE00 After |
|---|---|---|---|---|---|---|---|
| 1004 | Golden yellow | 5.01 | 1.61 | 6012 | Black green | 9.56 | 2.62 |
| 1012 | Lemon yellow | 5.23 | 1.13 | 6017 | May green | 9.66 | 0.42 |
| 1017 | Saffron yellow | 7.83 | 4.88 | 6022 | Olive drab | 7.88 | 1.83 |
| 1023 | Traffic yellow | 6.76 | 2.42 | 6028 | Pine green | 9.16 | 1.74 |
| 1033 | Dahlia yellow | 5.29 | 1.90 | 6037 | Pure green | 6.09 | 5.23 |
| 2002 | Vermilion | 5.52 | 1.00 | 7003 | Moss grey | 10.83 | 2.00 |
| 2010 | Signal orange | 7.41 | 2.04 | 7009 | Green grey | 11.27 | 2.00 |
| 3001 | Signal red | 4.27 | 2.51 | 7015 | Slate grey | 9.59 | 1.26 |
| 3007 | Black red | 5.60 | 1.07 | 7024 | Graphite grey | 8.91 | 0.31 |
| 3014 | Antique pink | 10.14 | 1.42 | 7033 | Cement grey | 10.78 | 2.07 |
| 3020 | Traffic red | 2.53 | 3.37 | 7038 | Agate grey | 7.33 | 0.69 |
| 4001 | Red lilac | 12.02 | 1.40 | 7044 | Silk grey | 7.22 | 0.93 |
| 4006 | Traffic purple | 8.94 | 2.31 | 8001 | Ochre brown | 6.94 | 1.38 |
| 5000 | Violet blue | 11.06 | 3.97 | 8008 | Olive brown | 7.08 | 1.79 |
| 5005 | Signal blue | 18.13 | 4.28 | 8016 | Mahogany brown | 4.84 | 1.71 |
| 5011 | Steel blue | 6.73 | 4.26 | 8024 | Beige brown | 6.18 | 2.07 |
| 5017 | Traffic blue | 20.58 | 7.67 | 9003 | Signal white | 8.38 | 1.84 |
| 5022 | Night blue | 9.59 | 4.26 | 9010 | Pure white | 7.60 | 1.84 |
| 6002 | Leaf green | 6.48 | 1.98 | 9018 | Papyrus white | 5.59 | 1.09 |
| 6007 | Bottle green | 5.64 | 3.27 | | | | |

References

- Burley, B.; Studios, W.D.A. Physically-based shading at disney. In Proceedings of the ACM SIGGRAPH, Los Angeles, CA, USA, 5–9 August 2012. [Google Scholar]

- Sturm, T.; Sousa, M.; Thöner, M.; Limper, M. A unified GLTF/X3D extension to bring physically-based rendering to the web. In Proceedings of the 21st International Conference on Web3D Technology, Anaheim, CA, USA, 22–24 July 2016; pp. 117–125. [Google Scholar] [CrossRef]

- Pharr, M.; Jakob, W.; Humphreys, G. Physically Based Rendering: From Theory to Implementation, 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2016. [Google Scholar] [CrossRef]

- Hu, Y.; Guerrero, P.; Hasan, M.; Rushmeier, H.; Deschaintre, V. Generating Procedural Materials from Text or Image Prompts. In Proceedings of the ACM SIGGRAPH 2023 Conference Proceedings, Los Angeles, CA, USA, 6–10 August 2023. SIGGRAPH ’23. [Google Scholar] [CrossRef]

- Burley, B.; Adler, D.; Chiang, M.J.Y.; Driskill, H.; Habel, R.; Kelly, P.; Kutz, P.; Li, Y.K.; Teece, D. The Design and Evolution of Disney’s Hyperion Renderer. ACM Trans. Graph. 2018, 37, 1–22. [Google Scholar] [CrossRef]

- Bitterli, B.; Wyman, C.; Pharr, M.; Shirley, P.; Lefohn, A.; Jarosz, W. Spatiotemporal reservoir resampling for real-time ray tracing with dynamic direct lighting. ACM Trans. Graph. 2020, 39, 148. [Google Scholar] [CrossRef]

- Aittala, M.; Aila, T.; Lehtinen, J. Reflectance modeling by neural texture synthesis. ACM Trans. Graph. 2016, 35, 1–13. [Google Scholar] [CrossRef]

- Deschaintre, V.; Aittala, M.; Durand, F.; Drettakis, G.; Bousseau, A. Single-image SVBRDF capture with a rendering-aware deep network. ACM Trans. Graph. 2018, 37, 1–15. [Google Scholar] [CrossRef]

- Guarnera, D.; Guarnera, G.; Ghosh, A.; Denk, C.; Glencross, M. BRDF Representation and Acquisition. Comput. Graph. Forum 2016, 35, 625–650. [Google Scholar] [CrossRef]

- Aittala, M.; Weyrich, T.; Lehtinen, J. Practical SVBRDF capture in the frequency domain. ACM Trans. Graph. 2013, 32, 110–121. [Google Scholar] [CrossRef]

- Kronander, J. Physically Based Rendering of Synthetic Objects in Real Environments. Ph.D. Thesis, Linköping University, Linköping, Sweden, 2015. [Google Scholar] [CrossRef]

- Zhang, Y.; Song, S.; Yumer, E.; Savva, M.; Lee, J.Y.; Jin, H.; Funkhouser, T. Physically-Based Rendering for Indoor Scene Understanding Using Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Pech, M.; Vrchota, J. The Product Customization Process in Relation to Industry 4.0 and Digitalization. Processes 2022, 10, 539. [Google Scholar] [CrossRef]

- Merzbach, S.; Weinmann, M.; Klein, R. High-Quality Multi-Spectral Reflectance Acquisition with X-Rite TAC7. In Proceedings of the Workshop on Material Appearance Modeling; Klein, R., Rushmeier, H., Eds.; The Eurographics Association: Eindhoven, The Netherlands, 2017. [Google Scholar] [CrossRef]

- Kavoosighafi, B.; Hajisharif, S.; Miandji, E.; Baravdish, G.; Cao, W.; Unger, J. Deep SVBRDF Acquisition and Modelling: A Survey. Comput. Graph. Forum 2024, 43, e15199. [Google Scholar] [CrossRef]

- Lichy, D.; Wu, J.; Sengupta, S.; Jacobs, D.W. Shape and material capture at home. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6123–6133. [Google Scholar]

- de Groot, P.J.; Deck, L.L.; Su, R.; Osten, W. Contributions of holography to the advancement of interferometric measurements of surface topography. Light. Adv. Manuf. 2022, 3, 258. [Google Scholar] [CrossRef]

- Sarakinos, A.; Lembessis, A. Color Holography for the Documentation and Dissemination of Cultural Heritage: OptoClonesTM from Four Museums in Two Countries. J. Imaging 2019, 5, 59. [Google Scholar] [CrossRef] [PubMed]

- Rabosh, E.; Balbekin, N.; Petrov, N. Analog-to-digital conversion of information archived in display holograms: I. discussion. J. Opt. Soc. Am. A 2023, 40, B47–B56. [Google Scholar] [CrossRef] [PubMed]

- Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photon. 2011, 3, 128–160. [Google Scholar] [CrossRef]

- Callieri, M.; Cignoni, P.; Corsini, M.; Scopigno, R. Masked photo blending: Mapping dense photographic data set on high-resolution sampled 3D models. Comput. Graph. 2008, 32, 464–473. [Google Scholar] [CrossRef]

- Karami, A.; Menna, F.; Remondino, F. Combining Photogrammetry and Photometric Stereo to Achieve Precise and Complete 3D Reconstruction. Sensors 2022, 22, 8172. [Google Scholar] [CrossRef]

- Woodham, R.J. Photometric Method For Determining Surface Orientation From Multiple Images. Opt. Eng. 1980, 19, 191139.

- Solomon, F.; Ikeuchi, K. Extracting the shape and roughness of specular lobe objects using four light photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 449–454.

- Verbiest, F.; Van Gool, L. Photometric stereo with coherent outlier handling and confidence estimation. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Cho, D.; Matsushita, Y.; Tai, Y.W.; Kweon, I.S. Semi-Calibrated Photometric Stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 232–245.

- Chandraker, M.; Agarwal, S.; Kriegman, D. ShadowCuts: Photometric Stereo with Shadows. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Georghiades, A.S. Incorporating the Torrance and Sparrow model of reflectance in uncalibrated photometric stereo. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, pp. 816–823.

- Shi, B.; Tan, P.; Matsushita, Y.; Ikeuchi, K. Bi-Polynomial Modeling of Low-Frequency Reflectances. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1078–1091.

- Lu, F.; Chen, X.; Sato, I.; Sato, Y. SymPS: BRDF Symmetry Guided Photometric Stereo for Shape and Light Source Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 221–234.

- Kavoosighafi, B. Data-Driven Reflectance Acquisition and Modeling for Predictive Rendering. Ph.D. Thesis, Linköping University, Linköping, Sweden, 2025.

- TAC7 Material Scanner. Available online: https://www.xrite.com/categories/appearance/total-appearance-capture-ecosystem/tac7 (accessed on 20 February 2025).

- HP Z Captis. Available online: https://www.hp.com/us-en/workstations/z-captis.html (accessed on 20 February 2025).

- xTex A4. Available online: https://www.vizoo3d.com/xtex-hardware/ (accessed on 20 February 2025).

- NX Premium Scanner. Available online: https://nunox.io/hardware-scanner/ (accessed on 20 February 2025).

- DMIx SamplR. Available online: https://www.dmix.info/samplr/ (accessed on 20 February 2025).

- TMAC: Texture Material Acquisition Capture System. Available online: https://www.tmac.dev/ (accessed on 7 October 2025).

- Your Smartphone Is a Material Scanner. Available online: https://www.adobe.com/learn/substance-3d-designer/web/your-smartphone-is-a-material-scanner?locale=en&learnIn=1 (accessed on 20 February 2025).

- Linen-Photometric Stereo Based PBR Material. Available online: https://gbaran.artstation.com/projects/8w1YeG (accessed on 20 February 2025).

- Details Capture. Available online: https://www.youtube.com/watch?v=IOF0THxnRi4 (accessed on 20 February 2025).

- Chan, J.H.; Yu, B.; Guo, H.; Ren, J.; Lu, Z.; Shi, B. ReLeaPS: Reinforcement Learning-based Illumination Planning for Generalized Photometric Stereo. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 9133–9141.

- Gardi, H.; Walter, S.F.; Garbe, C.S. An Optimal Experimental Design Approach for Light Configurations in Photometric Stereo. arXiv 2022, arXiv:2204.05218.

- Drbohlav, O.; Chantler, M. On optimal light configurations in photometric stereo. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, Beijing, China, 17–21 October 2005; Volume 2, pp. 1707–1712.

- Guo, X.; Houser, K. A review of colour rendering indices and their application to commercial light sources. Light. Res. Technol. 2004, 36, 183–197.

- Sándor, N.; Schanda, J. Visual colour rendering based on colour difference evaluations. Light. Res. Technol. 2006, 38, 225–239.

- Masuda, O.; Nascimento, S.M.C. Best lighting for naturalness and preference. J. Vis. 2013, 13, 4.

- Photogrammetry Basics by Quixel. Available online: https://dev.epicgames.com/community/learning/courses/blA/unreal-engine-capturing-reality-introduction-to-photogrammetry/Ok7l/unreal-engine-capturing-reality-exposure (accessed on 20 February 2025).

- Frost, A.; Mirashrafi, S.; Sánchez, C.M.; Vacas-Madrid, D.; Millan, E.R.; Wilson, L. Digital Documentation of Reflective Objects: A Cross-Polarised Photogrammetry Workflow for Complex Materials. In 3D Research Challenges in Cultural Heritage III: Complexity and Quality in Digitisation; Springer International Publishing: Cham, Switzerland, 2023; pp. 131–155.

- XRite User Manual. Available online: https://www.xrite.com/-/media/xrite/files/manuals_and_userguides/c/o/colorcheckerpassport_user_manual_en.pdf (accessed on 20 February 2025).

- Zeng, H.; Cai, J.; Li, L.; Cao, Z.; Zhang, L. Learning Image-Adaptive 3D Lookup Tables for High Performance Photo Enhancement in Real-Time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2058–2073.

- Liaw, M.J.; Chen, C.Y.; Shieh, H.P.D. Color characterization of an LC projection system using multiple-regression matrix and look-up table with interpolation. In Proceedings of the Projection Displays IV; Wu, M.H., Ed.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 1998; Volume 3296, pp. 238–245.

- Morosi, F.; Caruso, G. High-fidelity Rendering of Physical Colour References for Projected-based Spatial Augmented Reality Design Applications. Comput.-Aided Des. Appl. 2020, 18, 343–356.

- Luo, M.R.; Cui, G.; Rigg, B. The development of the CIE 2000 colour-difference formula: CIEDE2000. Color Res. Appl. 2001, 26, 340–350.

- Zhang, Y.; Gibson, G.M.; Hay, R.; Bowman, R.W.; Padgett, M.J.; Edgar, M.P. A fast 3D reconstruction system with a low-cost camera accessory. Sci. Rep. 2015, 5, 10909.

- Murez, Z.; Treibitz, T.; Ramamoorthi, R.; Kriegman, D. Photometric stereo in a scattering medium. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3415–3423.

- Nayar, S. Photometric Stereo. Monograph FPCV-3-2, First Principles of Computer Vision; Columbia University: New York, NY, USA, February 2025.

- Li, H.; Chen, M.; Deng, C.; Liao, N.; Rao, Z. Versatile four-axis gonioreflectometer for bidirectional reflectance distribution function measurements on anisotropic material surfaces. Opt. Eng. 2019, 58, 124106.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).