Abstract

In the evolving landscape of online gaming, ensuring a high quality of experience (QoE) for players is paramount. This study introduces a dynamic, low-latency load balancing model designed to enhance QoE in massively multiplayer online (MMO) games through a hybrid fog and edge computing architecture. The model addresses the challenges of latency and load distribution by leveraging fog and edge resources to optimize player engagement and response times. All experiments in this study were simulations, which provide a controlled environment for evaluating the proposed model’s performance. Key findings show a 67.5% reduction in average latency, a 60.3% reduction in peak latency, and a 65.8% reduction in latency variability, contributing to a more consistent and immersive gaming experience. Additionally, the proposed model was benchmarked against a base model derived from the article titled “A Cloud Gaming Architecture Leveraging Fog for Dynamic Load Balancing in Cluster-Based MMOs”, highlighting its superior performance in load distribution and latency reduction. This research provides a framework for future developments in cloud-based gaming infrastructure, emphasizing the importance of innovative load balancing techniques in maintaining seamless gameplay and scalable systems.

Keywords:

IoT; video games; latency; cloud computing; edge computing; fog computing; load balancing; MMO; QoE

1. Introduction

The rapid growth of the online gaming industry has brought new challenges in ensuring an optimal quality of experience (QoE) for players. Massively multiplayer online (MMO) games, in particular, require robust solutions to address the issues of network latency, scalability, and load distribution, which are critical to maintaining seamless and immersive gameplay. Latency, a fundamental factor in online gaming, directly impacts player engagement and responsiveness, often resulting in frustration and reduced satisfaction when poorly managed. These challenges highlight the importance of innovative approaches to enhance QoE in online gaming environments.

This study proposes a dynamic low-latency load balancing model tailored for MMO games, leveraging a hybrid fog and edge computing architecture. Unlike traditional centralized cloud-based architectures, this model distributes computational tasks closer to players through fog nodes, reducing latency and improving system responsiveness. The model addresses key challenges such as load variability, uneven resource allocation, and latency spikes, and the simulation results indicate its potential to substantially improve the gaming experience. Previous advances in load balancing frameworks, such as task-based strategies [1], cloud-P2P integrations [2], and meta-reinforcement learning algorithms [3], have significantly improved resource allocation and scalability. Building on these contributions, this research offers a novel approach that combines fog and edge computing to achieve dynamic load balancing in real-time environments.

A critical aspect of this work is that the proposed model was evaluated through simulations rather than real-world deployments. The simulated scenarios replicated diverse gaming environments to analyze system performance under varying conditions of load and latency. This distinction underscores the importance of validating theoretical models in controlled environments before real-world application. Furthermore, the proposed model’s performance was benchmarked against a model based on the article titled “A Cloud Gaming Architecture Leveraging Fog for Dynamic Load Balancing in Cluster-Based MMOs”, which relies primarily on cloud game servers and uses fog servers only as temporary processing support. This comparative analysis demonstrates the improvements achieved in latency reduction and load distribution efficiency.

The main contributions of this article are as follows:

- Development of a dynamic load balancing model that integrates fog and edge computing for MMO games.

- Demonstration, through simulation experiments, of significant reductions in average latency (67.5%) and latency variability (65.8%).

- Comparative analysis with a base model to highlight the effectiveness of the proposed approach.

- Recommendations for enhancing scalability and QoE in hybrid gaming architectures.

The rest of the article is organized as follows: Section 2 presents the related work, providing an overview of existing load balancing techniques and hybrid architectures. Section 3 describes the base model, detailing its design and limitations. This study builds on the work in [4] titled “A Cloud Gaming Architecture Leveraging Fog for Dynamic Load Balancing in Cluster-Based MMOs”. This model was chosen as a baseline due to its innovative use of fog computing for load balancing in cluster-based MMO environments. However, it presented key limitations, such as a lack of consideration for variable latency conditions and uneven load distribution in certain scenarios. Addressing these limitations motivated the development of the improved model presented in this study. Section 4 introduces the proposed dynamic low-latency load balancing model and its key components. Section 5 outlines the simulation setup and methodology. Section 6 discusses the results, while Section 7 compares the base model and the proposed model. Finally, Section 8 concludes the study and suggests directions for future research.

2. Related Work

In the pursuit of enhancing quality of experience (QoE) in hybrid fog and edge architectures for massively multiplayer online (MMO) games, it is essential to analyze previous contributions in the field of load balancing and resource optimization. This analysis provides valuable context for developing the “Dynamic Low Latency Load Balancing Model”.

The article in [5] presents Athlos, a framework for developing scalable MMOG backends that can run on commodity cloud platforms. Athlos addresses key challenges in real-time distributed applications, including the lack of standardized methodologies and scalability support. This framework enables developers to quickly prototype scalable, low-latency backends using enterprise application concepts.

Load balancing is a crucial aspect of Athlos’ infrastructure, employing real-time parallelization and balancing techniques to minimize network traffic, bandwidth usage, and latency. Similarly, the article in [1] proposes a task-based load balancing framework for cloud-aware MMOGs, dividing tasks into core and background subtasks to improve efficiency under temporary overload conditions.

In the same vein of optimization and scalability, the work presented in [6] suggests a hybrid architecture that combines cloud and user resources to enhance economic sustainability and scalability. This architecture uses components like the Positional Action Manager (PAM) and the State Action Manager (SAM) to manage load through a distributed system among players and nodes.

The article in [2] proposes a cloud-based P2P system for MMOGs, where player actions are exchanged directly in regions coordinated by players, reducing the bandwidth required at the central server and efficiently distributing the load. Additionally, the work presented in [7] called “Dynamic Area of Interest Management for Massively Multiplayer Online Games Using OPNET” introduces an area of interest management mechanism (AoIM) that, while not explicitly focusing on load balancing, helps reduce network traffic and improve scalability by sending only relevant updates to each player.

Traffic optimization is the focus of [8], which reduces bandwidth consumption and latency in MMORPGs through header multiplexing and compression. In the realm of load balancing in P2P architectures, the paper in [9] proposes a load balancing algorithm based on k-means++ to reduce the load on game management nodes, improving player distribution and system efficiency.

The article in [3] explores a meta-reinforcement learning technique for optimal load balancing in software-defined networks (SDNs), using an actor–critic architecture for quick and efficient adaptation in multicontroller environments.

The analysis of [10] highlights the importance of HTTP load balancing to improve server scalability and fault handling in recent mobile games. The paper in [11] examines how optimization algorithms can improve load balancing in IoT clusters, dynamically adjusting workloads to avoid node overload.

The research in [12] discusses a locality-aware dynamic load management algorithm for MMOGs, emphasizing the importance of load balancing for a high-quality gaming experience. The article [13] presents a cloud-based infrastructure that uses Kubernetes for load balancing and scaling containers based on CPU usage.

In the context of microservices architectures, the paper in [14] analyzes memory usage and load balancing techniques to support a large number of players in MMORPGs, modularizing services to improve scalability. The research in [15] emphasizes how orchestration platforms can provide load balancing capabilities, facilitating the management of large-scale game servers.

The article [16] addresses task load optimization in B5G/6G communication networks. It proposes a two-layer collaborative offloading architecture to balance loads between base stations and vehicles, using an iterative framework based on sequential quadratic programming and game theory to improve efficiency in terms of latency and energy consumption.

The article [17] explores quality of service (QoS) provisioning in web-based, real-time interactive online game control systems over IP networks. It proposes an optimization algorithm that starts with an optimal configuration of a single component, facilitating the calculation of parameters and improving QoS and the efficiency of network resources in interactive communication. It uses a network structure based on multiprotocol label switching (MPLS), which segments networks and guarantees high QoS, especially for low-latency applications such as voice and video. In addition, it employs techniques such as MPLS TE and multiobjective genetic algorithms (MOGAs) to improve routing and the efficiency of data flows, showing positive results in reducing packet loss and network delays.

The article in [18] investigates the use of software-defined networks (SDNs) to improve QoS in MMOGs. The authors implement their approach in an SDN-enabled network, using the Virtual Production Editor (OVP) application developed with Shark 3D and RTF, and evaluate performance under variable network conditions. The experiments, carried out on the FIBRE testbed, demonstrate that QoS management through SDN improves the user experience by maintaining high levels of QoS, even in adverse network conditions. The authors highlight the flexibility and efficiency of the approach for managing network QoS in MMOGs, which translates into a better user experience and increased revenue for game providers.

According to [19], to ensure a good gaming experience, service providers must monitor and measure the player’s experience, considering factors such as location, device, and service plan. The implementation of appropriate solutions allows the detection and measurement of the player’s actions and the characteristics of the game, optimizing the gaming experience and reducing the risk of customer loss.

The study [20] examines latency and its impact on human–computer interaction using Rocket League. It determined that latencies greater than 33 ms significantly affect performance in precision and power tasks in the game. The most skilled players are the most affected, with a just noticeable difference (JND) of 28 ms. It concludes that packet loss and latency are critical factors influencing the quality of the gaming experience.

Packet loss is a common issue that affects performance in online video games, causing delays and stuttering. The research [21] points out that packet loss occurs when player requests do not reach the server, affecting in-game actions. Various studies, including [22,23,24], have demonstrated the significant impact of packet loss on the quality of the gaming experience. These studies propose solutions such as Bayesian loss detection mechanisms and packet retransmission schemes like the one in [25], which have been shown to improve performance and reduce player frustration. Additionally, a comprehensive literature review on the impact of latency can be found in the article [26].

Load balancing techniques are used in games to distribute the workload among multiple servers. An example of this is seen in [4], where a hybrid cloud gaming architecture is proposed, utilizing fog computing to balance the load in MMO games. This approach has demonstrated its effectiveness in several key aspects according to simulation experiment results. The inclusion of fog servers to offload resources and processing tasks has achieved an equitable distribution of load between cloud and fog servers, improving overall system performance. Dividing the game world into clusters, each managed by a different server, has allowed for more efficient load management by assigning a specific number of fog servers to each cluster based on its average population. This strategy, combined with the characteristics of fog computing, has significantly contributed to improving load balancing in MMO games, effectively addressing the challenges associated with variability in player density in different regions of the game.

The load balancing algorithm, designed to dynamically transfer functionality between cloud and fog servers based on their capacity, has proven essential for maintaining a balanced distribution of load, even under challenging game conditions. This dynamic approach has not only improved user coverage but also effectively addressed server overload, ensuring a satisfactory gaming experience for most players across all clusters.

Simulation results have highlighted the scalability and flexibility of the architecture, especially when employing multiple fog servers. As the number of available servers increases, the average load on each fog server decreases, indicating potential for further optimization and improvement in load balancing within the cluster. This suggests that incorporating more fog resources can lead to an even more efficient load distribution, thereby continuously improving the gaming experience for users.

The paper in [27] presents an economic approach to balance load in MMOGs using cloud resources. They introduce an event-based algorithm that dynamically manages game session loads, allowing real-time adjustments to meet QoS requirements. Experimental results validate the effectiveness of the algorithm and show a potential reduction in cloud hosting costs.

The research in [28] develops dJay, a cloud game server that dynamically adjusts rendering configurations to optimize visual quality and frame rate. The system evaluation in commercial games demonstrates improvements in multitenancy and visual quality.

Another study, shown in [29], implements a server cluster for MMOGs, introducing mechanisms for load balancing and dynamic distribution of maps and players. The results show significant improvements in system performance and service capacity.

Finally, the article in [30] proposes a load balancing system for MMORPGs that focuses on user preferences and interactions. Results demonstrate a 40% efficiency in server relocation, improving interactivity and reducing frequent client migrations.

Some of the articles that motivated the development of the main paper are analyzed in more detail in Table S1, which can be found in the supplementary material for reference. Additionally, the supplementary table provides an in-depth analysis of articles [31,32,33,34,35], which have played a crucial role in the development of the proposed model.

This body of research provides a solid foundation for developing a dynamic load balancing model and low-latency architecture in hybrid fog and edge environments, essential for enhancing QoE in MMO games. While the proposed model primarily addresses latency, other critical factors influencing QoE—such as user feedback, visual quality, and perceived responsiveness—also warrant attention.

Discussion on QoE Factors Beyond Latency

Although the proposed model primarily focuses on reducing latency, other aspects of QoE are also crucial for ensuring an optimal gaming experience. User feedback mechanisms—such as visual, auditory, and tactile responses—play a significant role in providing real-time information to players, enhancing their immersion and control. Furthermore, factors such as bandwidth optimization, jitter minimization, and packet loss reduction are vital for delivering smoother and more engaging gameplay experiences. For instance, bandwidth-saving techniques like those proposed in [36] help ensure stable performance by reducing network strain. At the same time, studies in [37,38] emphasize the disruptive impact of jitter and packet loss on gameplay, affecting player satisfaction and performance.

Integrating these additional QoE dimensions into a comprehensive evaluation framework highlights the interconnected nature of these factors and their collective importance in improving the overall player experience in MMO games.

3. Base Model

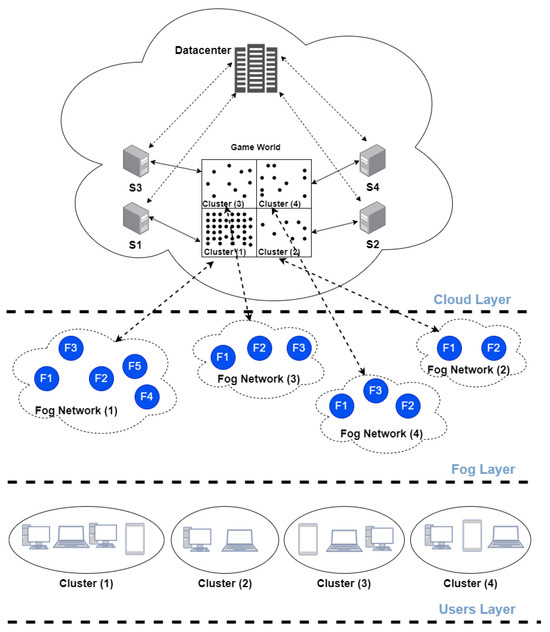

The model proposed in [4] presents a hybrid architecture for cloud gaming that uses fog computing to improve the performance of MMOs. This architecture integrates fog servers to reduce the resource load and processing tasks that would normally fall on the main cloud gaming infrastructure, as shown in Figure 1. The strategy allows for an equitable distribution of traffic and processing load, achieving effective balancing between cloud and fog servers. It segments the game world into clusters, each managed by a different server and assigns fog servers according to the average population of each cluster. This spatial division, along with the advantages of fog computing, improves the scalability and flexibility of the system, reducing latency by placing resources closer to users and efficiently managing latency demands and network load. In addition, fog servers act as temporary backup when a game server faces excessive load, mitigating potential losses in quality of experience (QoE) for users.

Figure 1.

Base model [4].
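The base model assigns fog servers to each cluster according to its average population, but the exact allocation rule used in [4] is not reproduced here. As a minimal sketch, assuming fog servers are split in proportion to average population and rounded with the largest-remainder method, the assignment could look as follows; the function name `allocate_fog_servers` is hypothetical.

```python
import math


def allocate_fog_servers(avg_population: dict[str, float], total_fog: int) -> dict[str, int]:
    """Split total_fog fog servers across clusters in proportion to average population.

    Uses largest-remainder rounding so the per-cluster counts sum exactly to total_fog.
    """
    total_pop = sum(avg_population.values())
    if total_pop == 0:
        return {cid: 0 for cid in avg_population}
    # Ideal (fractional) share of fog servers for each cluster.
    quotas = {cid: total_fog * pop / total_pop for cid, pop in avg_population.items()}
    alloc = {cid: math.floor(q) for cid, q in quotas.items()}
    leftover = total_fog - sum(alloc.values())
    # Give the remaining servers to the clusters with the largest fractional remainders.
    by_remainder = sorted(quotas, key=lambda cid: quotas[cid] - alloc[cid], reverse=True)
    for cid in by_remainder[:leftover]:
        alloc[cid] += 1
    return alloc


print(allocate_fog_servers({"A": 500, "B": 300, "C": 200}, 7))  # {'A': 4, 'B': 2, 'C': 1}
```

Largest-remainder rounding is only one simple way to keep the counts summing to the available fog servers; other rounding or weighting rules would fit the base model's description equally well.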

The base model for cloud gaming is structured in three layers: the cloud layer, the fog layer, and the user layer. The cloud layer is the top part of this architecture and is responsible for managing the virtual world of the game, dividing it into smaller clusters for efficient resource management. Each cluster is controlled by a game server, forming a network of servers that constitute the main infrastructure of the cloud. These servers communicate with each other and with a central data center that hosts the game world and the game’s artificial intelligence (AI), allowing for a smooth transition of avatars and other elements between clusters.

The fog layer is made up of fog networks that include fog nodes, smaller computing infrastructures located near end users. These fog servers, although they have processing, rendering, storage, and network capabilities similar to game servers, have less computational power. In situations of high demand in a cluster, a game server can resort to the nearby fog computing network for temporary processing support. The fog layer, compared to the cloud layer, has less capacity and power, is distributed near users instead of being centralized, and its main function is to provide local assistance and reduce latency.

The user layer includes players and their avatars within the virtual world of the game. Avatars are mapped to different game clients in this layer, allowing them to move seamlessly between clusters and experience events that occur near the boundaries of a neighboring cluster, as long as they are within visual range. This configuration ensures that players have a continuous and coherent gaming experience, regardless of the section of the game world they are in.

The model also integrates a sophisticated load balancing algorithm, which dynamically transfers tasks from game servers in the cloud to servers in the fog according to their capabilities. This algorithm is designed to evenly distribute the load between the cloud and the fog, resulting in dynamic and effective load balancing in MMO gaming environments. By using the cloud and fog layers, the architecture improves the scalability and flexibility of the system, reduces latency, and efficiently handles activity peaks, thus ensuring a high quality of experience for users.
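The description above does not specify how an overloaded game server splits its excess work among fog servers. One plausible reading, sketched below under that assumption, is to offload only the load above the server's capacity and divide it among fog servers in proportion to their spare capacity; `offload_to_fog` and its arguments are hypothetical.

```python
def offload_to_fog(server_load: int, server_capacity: int,
                   fog_free: list[int]) -> tuple[list[int], int]:
    """Shift load above server_capacity to fog servers in proportion to their spare capacity.

    Returns (amount assigned to each fog server, load that could not be placed anywhere).
    """
    excess = max(0, server_load - server_capacity)
    total_free = sum(fog_free)
    if excess == 0 or total_free == 0:
        return [0] * len(fog_free), excess
    placeable = min(excess, total_free)
    # Proportional share, rounded down; never exceeds a node's free capacity.
    shares = [min(free, placeable * free // total_free) for free in fog_free]
    remainder = placeable - sum(shares)
    # Hand out the units lost to rounding to nodes that still have room.
    for i, free in enumerate(fog_free):
        if remainder == 0:
            break
        extra = min(free - shares[i], remainder)
        shares[i] += extra
        remainder -= extra
    return shares, excess - placeable


print(offload_to_fog(110, 100, [20, 10]))  # ([7, 3], 0)
```

Returning the unplaced load separately makes overload visible to the caller, which in the base model corresponds to the situations where QoE may degrade despite fog support.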

Limitations

One of the challenges to address is the uneven distribution of population among clusters, which could potentially overload specific game servers and fog networks. For instance, clusters hosting events or densely populated areas might experience spikes in computational processes and rendering demands, leading to longer response times and a potential decrease in quality of experience (QoE).

Efficiently managing the network capacity of individual servers within each cluster presents another challenge, as the system must ensure that no cluster exceeds its server’s network capacity. Uneven distribution of content and user activity among clusters can result in performance degradation and a possible decline in QoE in certain clusters.

Despite the architecture aiming to achieve load balancing through the implementation of fog computing and the aggregation of multiple servers, complications might arise in the dynamic management of shifting functionalities from cloud game servers to fog servers, considering their respective capacities. This dynamic load balancing process could introduce additional load and complexity to the system.

- Key Considerations

- Player behavior assumptions: The simulation assumed player connection and disconnection behavior that may not fully capture the complexity and variability of real user behavior in online games.

- Fog deployment scenarios: Random allocation of fog servers might not adequately represent realistic implementation scenarios. In practical settings, the strategic placement of fog servers could significantly impact system performance.

- Network latency consideration: The simulation did not explicitly address network latency, which is crucial in online gaming. The location of fog servers and the quality of the connection can significantly influence the player’s experience.

- Realistic environment modeling: Expanding the model to include more realistic features of the gaming environment, such as player geography.

- Evaluation of fog deployment strategies: Analyzing different fog server deployment strategies, considering factors like geographic location, processing capacity, and specific demands of the game cluster.

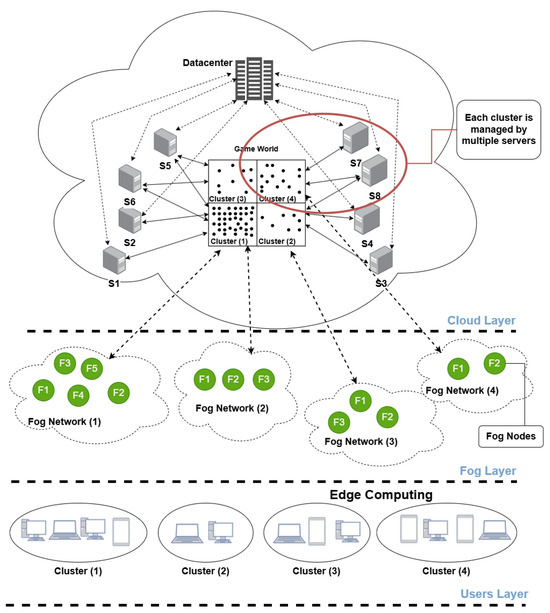

4. Proposed Model

The model illustrated in Figure 2 aims to address the limitations identified in the base model to enhance the quality of experience (QoE) for players. The following are the ways these limitations are addressed:

Figure 2.

Proposed model.

- Player behavior assumptions: In the simulation of the proposed model, more complex player behaviors were considered. Not only was the variability in player connections and disconnections taken into account, but so too were player movement patterns, such as remaining on a server for a period of time and transferring between servers.

- Fog deployment scenarios: The simulation of the improved model incorporated the geographic locations of players and servers. This approach enhances user experience and optimizes system performance.

- Network latency consideration: For the simulation of the proposed model, network latency for each user was considered. Factors such as the distance between the server and client, server load, and network conditions were taken into account.

- More realistic environment modeling: The simulation of the proposed model considered the geographic location of players and the location of their avatars in the game world. This approach provides more accurate modeling of the game environment.

- Scalability: The proposed model aims to reduce the average load per server by increasing the number of fog servers. This optimization in load distribution will lead to more efficient load balancing within the cluster, highlighting the system’s ability to adapt and manage more demanding loads.

- Evaluation of fog implementation strategies: For the proposed model, factors such as the geographic location of servers and fog nodes, server load, and variability in network conditions were implemented. These factors allow for a more precise evaluation of fog implementation strategies.

Additionally, the proposed model also implemented latency reduction strategies such as load balancing and server clustering.

- Load Balancing

Load balancing is a fundamental strategy for improving latency and quality of experience in online games. In this model, it is implemented through a combination of techniques that leverage the hybrid cloud gaming architecture, integrating both cloud and fog servers. The load balancing algorithm designed for this model dynamically manages the distribution of functionality between cloud and fog servers, adapting to server capacity and current system conditions so that the load remains balanced as demand changes. This adaptability distributes the workload efficiently, optimizing system performance and enhancing the end-user experience.

- Server Clustering

The subdivision of the game world into clusters is an essential practice that facilitates equitable distribution of game traffic and processing load. Each cluster is managed by a different server, allowing for efficient allocation of resources and tasks. Additionally, multiple servers per cluster are employed, which enhances redundancy and system responsiveness during peak demand periods. The invocation of fog nodes to assist in processing is another key strategy, allowing for decentralization of part of the workload and positioning resources closer to end-users, reducing latency and improving user experience.

This combination of strategies, along with the dynamic load balancing algorithm, ensures optimal operation of the online gaming system, providing a smooth and high-quality user experience.

4.1. Cloud Layer

The first layer, known as the cloud layer, involves subdividing the game world into spatially distinct clusters. Each cluster is connected to game clients in the user layer and hosts the avatars of each player. This layer is responsible for load management. Unlike the base model, where each cluster is managed by a single server, in this enhanced model each cluster is controlled by multiple game servers, allowing for better load balancing. The servers communicate continuously with a central data center that houses both the game world and the game’s artificial intelligence (AI). This interaction is crucial for facilitating the mobility of avatars between clusters and monitoring events near cluster borders. To reduce latency, the geographic locations of servers and users are considered, so that users are connected to the nearest server when they log in, applying edge computing principles.

Despite the subdivision of the virtual world, some clusters may experience uneven population density, potentially overloading servers and affecting quality of experience (QoE). The proposed model addresses this challenge by using fog and edge computing and deploying multiple servers within the same cluster to reduce latency, as detailed in the next layer.

4.2. Fog Layer

This layer utilizes fog computing and multiple servers within a single cluster to reduce latency. When game servers within a cluster become overwhelmed, they can temporarily invoke nearby fog nodes to assist with processing. This ensures system flexibility and scalability even under heavy load conditions. These fog servers also perform load balancing to guarantee low latency and good QoE.

Fog Nodes

Fog nodes act as a support network for the main servers, helping manage excess workload, ensuring system efficiency, and enhancing user experience by reducing latency. The ability to invoke these fog nodes allows the system to maintain flexibility and scalability even under intense load conditions. Additionally, fog nodes perform load balancing, efficiently distributing processing requests and tasks among multiple fog nodes to prevent any single node from becoming overloaded. This ensures low latency, meaning that data are processed and transmitted quickly, resulting in a high-quality user experience.
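The fog-level balancing described above can be illustrated with a least-utilization dispatcher. This is a minimal sketch, assuming each fog node has a fixed task capacity and that utilization is measured as load divided by capacity; the `FogPool` class and its methods are hypothetical and not part of the authors' ESSP.

```python
from typing import Optional


class FogPool:
    """Sends each task to the fog node with the lowest utilization (hypothetical sketch)."""

    def __init__(self, capacities: dict[str, int]):
        self.capacities = dict(capacities)
        self.load = {node: 0 for node in capacities}

    def utilization(self, node: str) -> float:
        return self.load[node] / self.capacities[node]

    def dispatch(self) -> Optional[str]:
        """Assign one task; return the chosen node, or None if every node is full."""
        candidates = [n for n in self.capacities if self.load[n] < self.capacities[n]]
        if not candidates:
            return None
        # Ties go to the first candidate in insertion order.
        node = min(candidates, key=self.utilization)
        self.load[node] += 1
        return node

    def release(self, node: str) -> None:
        """Mark one task on `node` as finished."""
        if self.load[node] > 0:
            self.load[node] -= 1


pool = FogPool({"f1": 2, "f2": 4})
print([pool.dispatch() for _ in range(7)])  # ['f1', 'f2', 'f2', 'f1', 'f2', 'f2', None]
```

Using utilization rather than raw load keeps a small fog node from being filled as quickly as a larger one, which matches the goal of preventing any single node from becoming overloaded.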

4.3. User Layer

This layer encompasses all users connected to the servers; each user is represented as a point corresponding to their avatar in the game world. These avatars are bounded by their location in the game, that is, by the cluster they are in. Unlike the base model, this enhanced model takes into account the location of each client when selecting a server, applying edge computing principles to ensure low latency and optimal QoE for each player.

4.4. Model Validation

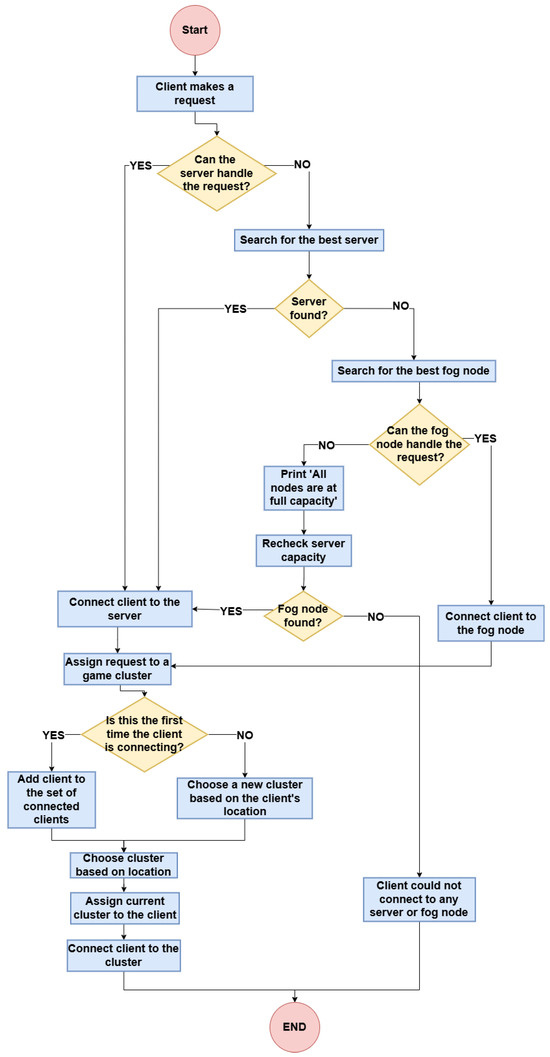

4.4.1. Load Balancing

The flowchart presented in Figure 3 describes the load balancing algorithm that manages client requests in an environment that includes cloud servers and fog nodes. When a client makes a request, the system first checks if any cloud server can handle it. If a cloud server is available, the client connects to it. If no suitable cloud server is found, the system searches for the best available fog node to handle the client’s request. If a fog node can handle the request, the client connects to it. If all servers and fog nodes are at full capacity, the system prints a message indicating that there is no available capacity and the client cannot connect. Throughout this process, the system assigns the client’s request to a specific cluster within the selected server or node, calculates latency, and manages the client’s connection.

Figure 3.

Flowchart of the load balancing algorithm.

This hierarchical approach ensures a balanced distribution of loads, optimizing both latency and system performance. The use of fog nodes as a backup allows for greater flexibility and handling capacity, which is crucial in high-demand environments such as online gaming. The constant reassessment of system capacity before rejecting a request ensures that the use of available resources is maximized, thus improving the overall efficiency of the system.
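The three-step flow in Figure 3 (cloud first, then fog, then rejection) can be sketched in Python as follows. The `Node` type and `assign_client` function are hypothetical simplifications, and the "best" fog node is assumed here to be the one with the most free slots, since the flowchart description does not define the criterion.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A cloud server or fog node with a fixed client capacity (hypothetical)."""
    name: str
    capacity: int
    clients: set[str] = field(default_factory=set)

    def can_handle_request(self) -> bool:
        return len(self.clients) < self.capacity

    def free_slots(self) -> int:
        return self.capacity - len(self.clients)


def assign_client(client_id: str, cloud: list[Node], fog: list[Node]) -> Optional[Node]:
    """Connect a client following the cloud -> fog -> reject order of Figure 3."""
    # Step 1: the first cloud server with spare capacity takes the client.
    for server in cloud:
        if server.can_handle_request():
            server.clients.add(client_id)
            return server
    # Step 2: otherwise use the fog node with the most free slots (assumed meaning of "best").
    available = [node for node in fog if node.can_handle_request()]
    if available:
        best = max(available, key=Node.free_slots)
        best.clients.add(client_id)
        return best
    # Step 3: every server and fog node is full.
    print(f"No available capacity: client {client_id} cannot connect")
    return None
```

For example, with one cloud server of capacity 1 and fog nodes of capacity 1 and 2, five successive clients are placed on the cloud server, the larger fog node, the smaller fog node, the larger fog node again, and the fifth is rejected.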

Under the proposed model, load balancing is dynamic. This means that the decision of which server to send a request to is made in real time, based on the current load of the servers. This task is performed by the get_best_server function in the LoadBalancer class, which selects the nearest server with the least load. This results in lower latency and, consequently, a better quality of experience for the users.
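The text states that get_best_server selects the nearest server with the least load but does not give the exact rule for trading distance against load. The sketch below assumes a weighted score (planar distance plus a load penalty); the `ServerInfo` type and the `load_weight_km` parameter are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServerInfo:
    id: str
    location: tuple[float, float]  # planar (x, y) coordinates in km (assumption)
    capacity: int
    load: int = 0


class LoadBalancer:
    def __init__(self, servers: list[ServerInfo], load_weight_km: float = 500.0):
        self.servers = servers
        # Distance penalty, in km, charged for a completely full server (hypothetical knob).
        self.load_weight_km = load_weight_km

    def get_best_server(self, client_location: tuple[float, float]) -> Optional[ServerInfo]:
        """Return the server with the lowest distance-plus-load score, or None if all are full."""
        candidates = [s for s in self.servers if s.load < s.capacity]
        if not candidates:
            return None

        def score(s: ServerInfo) -> float:
            distance = math.dist(s.location, client_location)
            return distance + self.load_weight_km * s.load / s.capacity

        return min(candidates, key=score)
```

A larger `load_weight_km` favors lightly loaded servers even when they are farther away; setting it to zero reduces the rule to pure nearest-server selection.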

To validate the proposed model, an Events Simulation Software Platform (ESSP) was developed in Python. It includes the following classes:

Server class: The Server class represents the servers in the proposed model, connected to clusters. It includes several important methods for managing client requests:

- can_handle_request(): This method checks if the server can handle a new request by comparing the number of connected clients to the server’s maximum capacity. This approach ensures that the servers are not overloaded, which is crucial for simulating peak loads.

- add_request(client_id, client_location): This method assigns a client request to a server, choosing an available cluster based on the client’s location. If the server is available, the client is assigned to a nearby cluster, which optimizes latency.

- choose_cluster(client_location): This method selects the cluster closest to the client, using geographic distance as the assignment criterion. This is important for minimizing latency, as clients connect to nearby servers and clusters, improving user experience.

- distance(location1, location2): This method calculates the distance between two geographical locations. Accurate distance measurement is crucial for calculating latency and correctly assigning requests to nearby servers and clusters.

- get_latency(client): This method calculates the latency between the server and the client, considering distance, server load, and network conditions. Latency is a key factor for quality of experience (QoE), and its accurate measurement is critical for validating the model.
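The cluster-selection step can be illustrated with a small sketch. Here `choose_cluster` returns the cluster with the smallest Euclidean distance to the client, computed with Python's `math.dist`, which works for 2D and 3D points alike. The `Cluster` dataclass mirrors the "id" and "location" attributes described for the Cluster class. The coordinates in the example are made up.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    id: int
    location: tuple  # position of the cluster, e.g. (x, y)


def distance(location1, location2):
    """Euclidean distance between two points of equal dimension (2D or 3D)."""
    return math.dist(location1, location2)


def choose_cluster(clusters, client_location):
    """Return the cluster whose location is closest to the client."""
    return min(clusters, key=lambda cluster: distance(cluster.location, client_location))


clusters = [Cluster(0, (-0.08, 0.02)), Cluster(1, (0.03, 0.04)), Cluster(2, (0.09, -0.07))]
print(choose_cluster(clusters, (0.02, 0.05)).id)  # 1
```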

Cluster class: The Cluster class, which in the context of an MMO video game symbolizes the various locations on the game map, has two main attributes: "id", which identifies the specific cluster number, and "location", which indicates the position the cluster occupies within the game.

The Server class, for its part, has the following attributes:

- id: Unique identifier for each server.

- clusters: List of clusters to which the server can send requests.

- capacity: Maximum requests the server can handle simultaneously.

- load: Current number of requests the server is handling.

- location: Geographical location of the server.

- current_cluster: Cluster to which the current request is assigned.

- connected_clients: Set tracking clients connected to the server.

The Server class includes the following methods:

- can_handle_request(): Checks whether the server can handle an additional request.

- add_request(): Adds a client's request to the server and assigns the client to a cluster based on its location.

- remove_request(): Removes a client's request from the server, disconnecting the client.

- choose_cluster(): Chooses a cluster for a client's request based on location.

- distance(): Calculates the distance between the server and a client.

- is_capacity_reached(): Checks whether the server load has reached capacity.

- get_latency(): Calculates the network latency for a client.

- average_latency(): Calculates the average latency across all connected clients.
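The capacity bookkeeping behind these methods can be sketched as follows. This is an illustrative reconstruction, not the ESSP code, and it departs from the description in a few assumed ways. `connected_clients` is kept as a dictionary mapping client IDs to locations, rather than a set, so latency can be computed per client. `load` is derived from that dictionary. `get_latency` uses a hypothetical distance-only model with an assumed `ms_per_unit` scale in place of the full formula with load and network terms.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Server:
    id: int
    location: tuple
    capacity: int
    connected_clients: dict = field(default_factory=dict)  # client_id -> location

    @property
    def load(self) -> int:
        return len(self.connected_clients)

    def can_handle_request(self) -> bool:
        return self.load < self.capacity

    def is_capacity_reached(self) -> bool:
        return self.load >= self.capacity

    def add_request(self, client_id, client_location) -> bool:
        if not self.can_handle_request():
            return False
        self.connected_clients[client_id] = client_location
        return True

    def remove_request(self, client_id) -> None:
        self.connected_clients.pop(client_id, None)

    def get_latency(self, client_id, ms_per_unit=1000.0) -> float:
        # Hypothetical distance-only model (assumed scale of 1000 ms per unit).
        return math.dist(self.location, self.connected_clients[client_id]) * ms_per_unit

    def average_latency(self) -> float:
        if not self.connected_clients:
            return 0.0
        return sum(self.get_latency(c) for c in self.connected_clients) / self.load


server = Server(0, (0.0, 0.0), capacity=2)
server.add_request("a", (0.03, 0.04))             # 0.05 units away
server.add_request("b", (0.0, 0.01))              # 0.01 units away
rejected = server.add_request("c", (0.05, 0.05))  # refused: capacity is 2
print(rejected, server.is_capacity_reached(), server.average_latency())
```

The third request is refused because the capacity is two. Under the assumed scale, the average latency of the two connected clients is about 30 ms.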

Load balancer class: The load balancer class represents a load balancer in the proposed model. It distributes client requests among several servers to optimize server resource usage and reduce latency. It includes the following methods:

- get_best_server(): Selects the best server for a given client based on server latency and load.

- handle_request(): Manages client requests. If all servers are at full capacity, it prints a message and rechecks server capacities.

Client Class: The client class represents each client in the proposed model. Each client can send requests to servers and has the following attributes:

- id: A unique identifier for each client.

- server: Represents the server the client is currently connected to.

- geo_location: Represents the geographical location of the client.

- game_location: Represents the client’s location within the game.

- latency_log: A list recording the latency between the client and the server over time.

- game_plan: An object of the GamePlan class representing the sequence of actions the client performs in the game simulation.

This class also includes the following methods:

- connect_to_server(): Attempts to connect the client to a server. If the server can handle the request, the client connects to the server, and the network latency is calculated and added to the client's latency log.

- move(): Allows the client to move to a new location in the game. If the client is connected to a server, it first removes the client's request from the server. Then, it updates the client's game location and attempts to add the client's request to the server again. If successful, it calculates the new latency and adds it to the latency log. If the server cannot handle the request, the method returns False.

- disconnect_from_server(): If the client is connected to a server, it removes the client's request from the server and disconnects the client from the server.

- average_latency(): Calculates the average latency for the client by summing all latencies in the client's latency log and dividing by the number of entries in the log.
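The client-side flow can be sketched as below. `StubServer` is a hypothetical stand-in that exposes only the calls the client needs, with a toy latency of 10 ms per connected client. The `game_plan` attribute is omitted for brevity. One behavior goes beyond the description above and is an assumption: a client whose re-admission fails during `move()` is marked as disconnected, so its state stays consistent with the server's.

```python
from dataclasses import dataclass, field


@dataclass
class StubServer:
    """Hypothetical stand-in for the Server class (capacity + toy latency)."""
    capacity: int
    clients: set = field(default_factory=set)

    def can_handle_request(self) -> bool:
        return len(self.clients) < self.capacity

    def add_request(self, client_id, game_location) -> bool:
        if not self.can_handle_request():
            return False
        self.clients.add(client_id)
        return True

    def remove_request(self, client_id) -> None:
        self.clients.discard(client_id)

    def get_latency(self, client_id) -> float:
        return 10.0 * len(self.clients)  # toy model: 10 ms per connected client


@dataclass
class Client:
    id: int
    geo_location: tuple
    game_location: tuple
    server: object = None
    latency_log: list = field(default_factory=list)

    def connect_to_server(self, server) -> bool:
        if server.can_handle_request() and server.add_request(self.id, self.game_location):
            self.server = server
            self.latency_log.append(server.get_latency(self.id))
            return True
        return False

    def move(self, new_game_location) -> bool:
        if self.server is None:
            return False
        self.server.remove_request(self.id)
        self.game_location = new_game_location
        if self.server.add_request(self.id, self.game_location):
            self.latency_log.append(self.server.get_latency(self.id))
            return True
        self.server = None  # assumption: a failed re-admission leaves the client disconnected
        return False

    def disconnect_from_server(self) -> None:
        if self.server is not None:
            self.server.remove_request(self.id)
            self.server = None

    def average_latency(self) -> float:
        if not self.latency_log:
            return 0.0
        return sum(self.latency_log) / len(self.latency_log)


server = StubServer(capacity=2)
a, b, c = (Client(i, (0.0, 0.0), (i, i)) for i in range(3))
a.connect_to_server(server)         # logs 10.0 ms (one client connected)
b.connect_to_server(server)         # logs 20.0 ms (two clients connected)
full = c.connect_to_server(server)  # False: the server is at capacity
a.move((5, 5))                      # removed, re-added, logs 20.0 ms
b.disconnect_from_server()
print(a.latency_log, a.average_latency(), full)
```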

GamePlan Class: The GamePlan class represents a client’s game plan in the proposed model. It is a sequence of actions that the client will perform and has the following attributes:

- actions: A list of possible actions the client can perform.

- probabilities: A list of probabilities corresponding to each action in the actions list.

This class also includes the method:

- get_action(): Returns an action from the actions list, randomly selected according to the given probabilities.
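Weighted random selection of this kind maps directly onto Python's `random.choices`. The sketch below is one possible get_action, shown with the 60/30/5/5 action mix used in the simulation (Section 5). The action labels are illustrative names. Passing a seeded `random.Random` instance is an assumption made for reproducibility.

```python
import random
from dataclasses import dataclass


@dataclass
class GamePlan:
    actions: list
    probabilities: list

    def get_action(self, rng=random):
        # Weighted sampling; k=1 returns a one-element list, so take its item.
        return rng.choices(self.actions, weights=self.probabilities, k=1)[0]


plan = GamePlan(
    actions=["connect", "disconnect", "move", "stay"],
    probabilities=[0.60, 0.30, 0.05, 0.05],
)
rng = random.Random(42)
counts = {action: 0 for action in plan.actions}
for _ in range(10_000):
    counts[plan.get_action(rng)] += 1
print(counts)  # roughly 6000 / 3000 / 500 / 500
```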

Methods for Measuring Distance and Latency

- Distance measurement: The distance between the client and the server is calculated using the Euclidean distance formula in 2D or 3D space, depending on the dimensionality of the location coordinates. This measure is essential for assigning the client to a nearby cluster, which reduces latency.

- Latency calculation: Latency is calculated using the following formula, where:

  - Distance: The distance between the server and the client.

  - Load factor: The load on the server, represented as the number of connected clients over the server's capacity.

  - Network condition: A random factor that simulates network fluctuations.
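The exact way these three terms are combined is not given here. The sketch below therefore assumes one plausible multiplicative combination, purely for illustration: distance scaled to milliseconds, inflated by the load factor, and multiplied by a random network-condition factor. The `ms_per_unit` scale and the `jitter` range are hypothetical parameters, not values from the study.

```python
import math
import random


def get_latency(server_location, client_location, connected_clients, capacity,
                ms_per_unit=500.0, jitter=0.1, rng=random):
    """Illustrative latency model; the multiplicative combination is assumed.

    distance          : Euclidean distance between server and client
    load factor       : connected_clients / capacity
    network condition : random multiplier drawn from [1 - jitter, 1 + jitter]
    """
    distance = math.dist(server_location, client_location)
    load_factor = connected_clients / capacity
    network_condition = rng.uniform(1 - jitter, 1 + jitter)
    return distance * ms_per_unit * (1 + load_factor) * network_condition


# Half-full server, no jitter: about 0.05 * 500 * (1 + 0.5) = 37.5 ms
print(get_latency((0.0, 0.0), (0.03, 0.04), connected_clients=75, capacity=150, jitter=0.0))
```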

Types of Requests Sent from Clients

Clients send connection requests to the servers using the add_request() method. If the server has the capacity to handle the request, the client connects to the server and is assigned an appropriate cluster. If the client moves within the game, the request is updated using the move() method, which recalculates latency and repositions the client on the most suitable server based on their new location. These methods allow dynamic management of requests and optimize the use of servers.

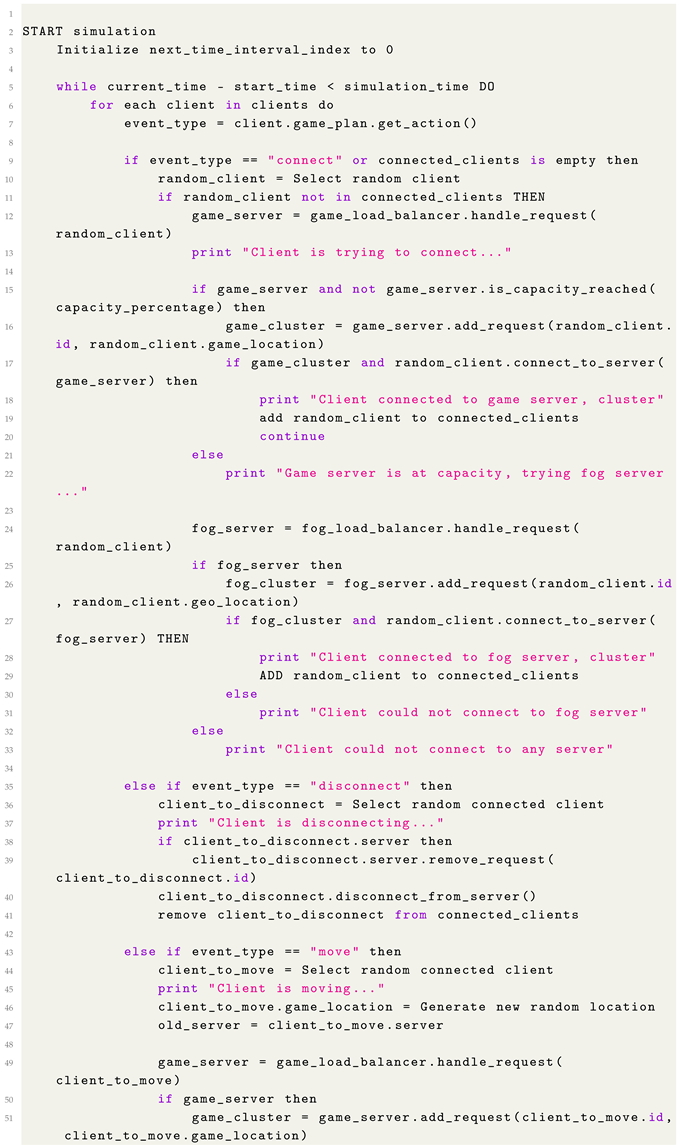

4.4.2. Events Simulation Software Platform (ESSP)

The Events Simulation Software Platform (ESSP) is a sophisticated simulation framework that models a hybrid edge and fog computing environment in massively multiplayer online (MMO) games. It supports the execution of user interactions such as connections, disconnections, migrations between clusters, and stationary server usage. A dynamic load balancer ensures optimal request distribution across servers, aiming to minimize latency and enhance quality of experience (QoE).

The simulator is particularly suited for MMO games that use cluster-based architectures. Dividing the game world into clusters managed by servers enables efficient handling of user interactions, such as migrations between clusters or connections to servers. This architecture ensures that system resources are used optimally to maintain consistent performance.

Rationale for Developing the ESSP

The development of the ESSP was driven by the need for a tailored simulation platform capable of addressing the unique requirements of hybrid edge and fog computing environments in MMO games. While several mature simulators are available, they often lack the flexibility or specific features needed to model the dynamic behaviors of such architectures. In particular:

- Customization for specific architectures: Existing simulators are often designed for generic cloud environments and do not account for the unique interactions and load distribution mechanisms in hybrid fog and edge architectures.

- Dynamic behavior simulation: The ESSP supports complex scenarios, including real-time migrations between clusters, dynamic load balancing, and the interplay of stationary and mobile server usage. This granularity is essential for evaluating the performance of the proposed model.

- Integration of key QoE metrics: The ESSP allows for precise measurement of latency and load distribution, gaming-specific factors that are often overlooked in more generic simulators.

- Optimized resource utilization: The ESSP provides a more realistic evaluation of system performance under varying load conditions by simulating hybrid environments with edge and fog nodes.

- Validation and benchmarking: The ESSP was designed to align closely with the architectural and operational requirements of the proposed model, ensuring the accuracy and relevance of the results.

Simulation Workflow

The simulation process begins with initializing the system’s foundational components, including game servers, fog nodes, and clients. Each client is assigned a game plan with probabilistic actions, such as connecting to servers, disconnecting, or migrating between clusters. The simulation operates over a defined time period, during which events are processed iteratively.

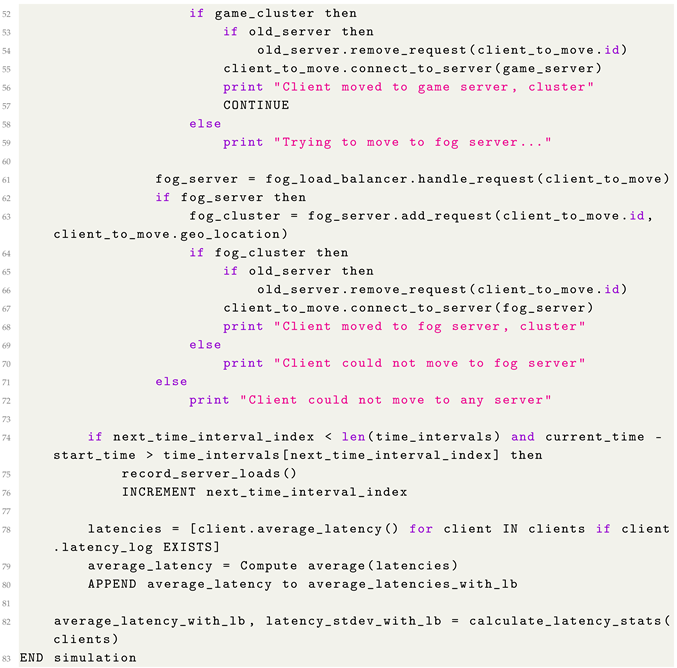

Listing 1 provides a pseudocode representation of the ESSP simulation workflow:

Listing 1. Pseudocode representation of the ESSP simulation workflow.

The ESSP simulation includes several key components. The load balancer dynamically assigns requests to the most suitable server or fog node based on load and geographical proximity, ensuring optimal resource utilization. The client component represents users who perform various actions, such as connecting, disconnecting, and migrating between clusters or servers. The server/cluster/fog node infrastructure processes client requests while respecting capacity constraints, offering the scalability and flexibility needed to manage high-demand scenarios. Finally, metric logging periodically collects performance data, such as server load and latency, which are crucial for evaluating system efficiency and user experience.

The ESSP was designed to analyze system behavior under various configurations, including scenarios with and without load balancing. Key metrics, such as average latency and load distribution, are recorded to evaluate the efficiency of the load balancing algorithm. By leveraging a hybrid cloud–fog architecture, the simulation aims to optimize resource allocation, reduce latency, and improve user QoE. This platform provides a robust foundation for studying dynamic load balancing techniques in highly interactive and variable gaming environments, facilitating advances in MMO game infrastructure design.
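To make the workflow concrete, the sketch below runs a toy version of the loop. It places game servers and fog nodes at random positions in the (−0.1, 0.1) square. Each client draws one action per simulated second from the 60/30/5/5 plan. Connections are served by servers first and fog nodes second, and per-node load is recorded at a fixed interval. This is an illustrative assumption, not the content of Listing 1. The `simulate` function, the dictionary-based nodes, the tie-breaking rule (lowest load ratio, then distance), and the use of raw distance as a latency proxy are all simplifications introduced here.

```python
import math
import random


def simulate(n_servers=8, server_cap=150, n_fog=4, fog_cap=50, n_clients=1300,
             duration=600, log_every=10, seed=0):
    """Toy discrete-time loop in the spirit of the ESSP workflow."""
    rng = random.Random(seed)

    def rand_loc():
        return (rng.uniform(-0.1, 0.1), rng.uniform(-0.1, 0.1))

    nodes = ([{"kind": "server", "cap": server_cap, "loc": rand_loc(), "clients": set()}
              for _ in range(n_servers)]
             + [{"kind": "fog", "cap": fog_cap, "loc": rand_loc(), "clients": set()}
                for _ in range(n_fog)])
    clients = {cid: {"loc": rand_loc(), "node": None} for cid in range(n_clients)}
    actions, weights = ["connect", "disconnect", "move", "stay"], [0.60, 0.30, 0.05, 0.05]
    load_log, distances = [], []

    def best_node(loc):
        # Servers first; fog nodes are only considered when every server is full.
        for kind in ("server", "fog"):
            free = [n for n in nodes if n["kind"] == kind and len(n["clients"]) < n["cap"]]
            if free:
                return min(free, key=lambda n: (len(n["clients"]) / n["cap"],
                                                 math.dist(n["loc"], loc)))
        return None  # every node is full: the request is rejected

    def place(cid, c):
        c["node"] = best_node(c["loc"])
        if c["node"] is not None:
            c["node"]["clients"].add(cid)
            distances.append(math.dist(c["node"]["loc"], c["loc"]))  # latency proxy

    for t in range(duration):
        for cid, c in clients.items():
            action = rng.choices(actions, weights)[0]
            if action == "connect" and c["node"] is None:
                place(cid, c)
            elif action == "disconnect" and c["node"] is not None:
                c["node"]["clients"].discard(cid)
                c["node"] = None
            elif action == "move" and c["node"] is not None:
                c["node"]["clients"].discard(cid)  # leave the current node,
                c["loc"] = rand_loc()              # relocate, and be re-placed
                place(cid, c)
            # "stay", or an action that does not apply, leaves the state unchanged
        if t % log_every == 0:
            load_log.append([len(n["clients"]) for n in nodes])
    return nodes, load_log, distances


nodes, load_log, distances = simulate(n_clients=300, duration=60)
print(len(load_log), max(max(snapshot) for snapshot in load_log))
```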

5. Simulation

The simulation, conducted using the Events Simulation Software Platform (ESSP), begins with creating the foundational elements of the system: game and fog clusters, along with corresponding servers for each type. Each server has a maximum load capacity and is associated with one or more clusters where clients can be assigned. Clusters represent geographical locations where game or fog resources are distributed.

Once servers and clusters are established, locations are generated for a specific number of clients who will interact with the system. Initially, these clients are randomly assigned to a server, each with a gameplay plan defining actions like connecting, disconnecting, moving, or staying on the same server.

During execution, events are simulated for each client based on their gameplay plan. These events include connecting to a server, disconnecting, moving to another cluster, or remaining in place. When a client connects, the load balancer determines the best available server to handle the request, considering the server’s current capacity and geographic distance to the client.

As simulation events progress, relevant data such as server load at specific time intervals and client-experienced latency are recorded. This allows for a detailed performance analysis of the system, including evaluating average latency, server load, and the effectiveness of the load balancer in evenly distributing requests.

At the simulation’s conclusion, these data are collected and analyzed to glean insights into the overall system performance. This includes comparing average latency with and without load balancing, as well as monitoring server load over time. These results provide a deep understanding of how the system operates under simulated conditions and can guide decisions to enhance its real-world design and efficiency.

Simulation Infrastructure

- Infrastructure:

  - A total of 8 conventional servers were implemented, each with the capacity to handle up to 150 users simultaneously, giving a total capacity of 1200 users on servers alone.

  - A total of 4 fog nodes were used for additional support, each with a capacity of 50 users. These nodes help offload processing from the main servers, adding 200 users to the total capacity and bringing it to 1400 simultaneous users.

  - The conventional servers and fog nodes are distributed in a hybrid cloud and fog architecture, where fog nodes are invoked when servers reach their maximum capacity.

- Geographical Distribution:

  - Servers and fog nodes are strategically placed near users to minimize latency, following the principle of edge computing.

  - The location of both servers and users was randomly generated within a specific coordinate range (−0.1 to 0.1) to simulate a distributed network.

  - Latency between servers and users is a key factor, determined based on the geographical distance between them.

- Simulation Duration and Dynamics:

  - The simulation covered a period of 10 min (600 s), modeling 1300 active users interacting with the simulated infrastructure.

  - Each user followed a game action plan, including events such as connections, disconnections, and migrations between servers.

- User Behavior Patterns:

  - Users were assigned interaction plans in the game, where:

    - 60% of the actions were attempts to connect to servers.

    - 30% of the actions represented disconnections from servers.

    - 5% of the actions consisted of server migrations (users moving from one server to another).

    - 5% of the actions reflected staying on the same server.

  - These behaviors are designed to simulate a high-interaction, variable-load environment.

- Evaluated Scenarios:

  - Four distinct scenarios were implemented:

    - Without load balancing: Users connect to servers without any active mechanism to redistribute the load when a server is overloaded.

    - With dynamic load balancing: A load balancing algorithm dynamically distributes user requests between servers and fog nodes, preventing any server from becoming saturated. This algorithm seeks the best available server or node based on load and geographical proximity, helping to reduce latency and improve user experience.

    - Fewer servers and fewer fog nodes: The number of servers and fog nodes is reduced, testing the system's ability to manage load with less infrastructure while maintaining stability.

    - More servers and fewer fog nodes: The number of servers is increased to support more clients while the number of fog nodes is reduced, testing load distribution and latency behavior under these conditions.

- Key Performance Metrics:

  - Server load behavior, latency experienced by users (the response time between a user request and the server), and system responsiveness under load were monitored.

  - In the load balancing scenario, fog nodes were invoked to handle load peaks, allowing for a more equitable distribution of users and a reduction in overall latency.
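The capacity figures above follow from simple arithmetic, which a small configuration object can make explicit and check. The sketch below encodes the baseline parameters listed in this section. It also adds the reduced and expanded scenarios of Sections 6.2 and 6.3, under the assumption (not stated for those scenarios) that per-node capacities stay at 150 users per server and 50 per fog node. `SimulationConfig` and its field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationConfig:
    """Hypothetical container for the simulation parameters."""
    n_servers: int = 8
    server_capacity: int = 150   # users per conventional server
    n_fog_nodes: int = 4
    fog_capacity: int = 50       # users per fog node
    n_clients: int = 1300
    duration_s: int = 600
    action_probabilities: tuple = (("connect", 0.60), ("disconnect", 0.30),
                                   ("migrate", 0.05), ("stay", 0.05))

    @property
    def total_capacity(self) -> int:
        return (self.n_servers * self.server_capacity
                + self.n_fog_nodes * self.fog_capacity)

    def validate(self) -> None:
        if not math.isclose(sum(p for _, p in self.action_probabilities), 1.0):
            raise ValueError("action probabilities must sum to 1")
        if self.n_clients > self.total_capacity:
            raise ValueError("more simulated clients than available slots")


baseline = SimulationConfig()                                         # 1200 + 200 = 1400
fewer = SimulationConfig(n_servers=6, n_fog_nodes=2, n_clients=900)   # 900 + 100 = 1000
more = SimulationConfig(n_servers=10, n_fog_nodes=3, n_clients=1500)  # 1500 + 150 = 1650
for cfg in (baseline, fewer, more):
    cfg.validate()
    print(cfg.total_capacity, "slots,", cfg.total_capacity - cfg.n_clients, "spare")
```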

6. Results

As described in Section 5, the simulation used a total of 8 conventional servers, each capable of hosting up to 150 users simultaneously, for a maximum server capacity of 1200 users. Additionally, 4 fog nodes were implemented, each capable of supporting up to 50 simultaneously connected users, adding 200 users of capacity. The total capacity for concurrently connected users therefore reached 1400.

It is crucial to note that the simulation considered several factors, including user and server locations. This consideration is essential as latency, the network response time, is directly influenced by the distance between users and servers.

The simulation spanned a period of 10 min, during which 1300 users were simulated interacting with different servers based on their location and server load. Each user was assigned a gameplay plan consisting of various actions with associated probabilities. Specifically, the probabilities were set so that 60% of actions involved server connections, 30% involved disconnections, 5% involved server migrations, and another 5% involved staying on the same server. These parameters are detailed in Table 1.

Table 1.

Input data for the simulation.

To demonstrate the effectiveness of the proposed model, this research conducted simulations in four distinct scenarios: one without load balancing, one with load balancing implemented, another with fewer servers and fog nodes, and the last with more servers but fewer fog nodes.

6.1. Scenarios Without Load Balancing vs. Scenarios with Load Balancing

To fully evaluate the effectiveness of the proposed load balancing model, this section consolidates the results of the simulations conducted under two scenarios: without load balancing and with load balancing. This integrated approach highlights the performance differences and emphasizes the advantages of the proposed model in terms of latency reduction, load distribution, and overall system stability.

6.1.1. Latency Results

Table 2 summarizes the latency metrics for both scenarios, providing a clear comparison of system responsiveness and consistency.

Table 2.

Latency results for scenarios with and without load balancing.

Average latency: The proposed model achieves a 67.5% reduction in average latency, significantly enhancing real-time responsiveness essential for MMO gaming environments.

Highest latency: The highest latency value, reduced by 60.3%, highlights the model’s ability to handle peak traffic more efficiently, minimizing delays during congestion.

Lowest latency: With a 69.5% reduction in the minimum latency, the system demonstrates its capability to deliver near-instantaneous responses, boosting user satisfaction.

Standard deviation: A 65.8% reduction in latency variability reflects consistent system performance, ensuring a predictable and smooth gaming experience.
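The four metrics in Table 2 and their percentage reductions can be computed from per-request latency logs as follows. The numeric values of Table 2 are not reproduced here, so the example uses synthetic latencies. The study does not state whether it used the sample or the population standard deviation, so the sample version (`statistics.stdev`) is an assumption.

```python
import statistics


def latency_summary(latencies_ms):
    """Average, highest, lowest, and (sample) standard deviation of latency."""
    return {
        "average": statistics.mean(latencies_ms),
        "highest": max(latencies_ms),
        "lowest": min(latencies_ms),
        "std_dev": statistics.stdev(latencies_ms),
    }


def percent_reduction(before, after):
    """Relative reduction of a metric, in percent: (before - after) / before * 100."""
    return (before - after) / before * 100.0


# Synthetic latency logs (ms), for illustration only
without_lb = latency_summary([120.0, 180.0, 240.0])
with_lb = latency_summary([30.0, 60.0, 90.0])
reductions = {k: percent_reduction(without_lb[k], with_lb[k]) for k in without_lb}
print(reductions)
```

With these synthetic logs, the reductions are about 66.7% (average), 62.5% (highest), 75% (lowest), and 50% (standard deviation).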

6.1.2. Load Distribution Analysis

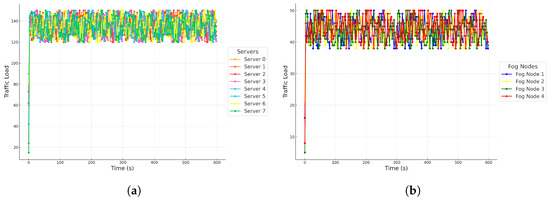

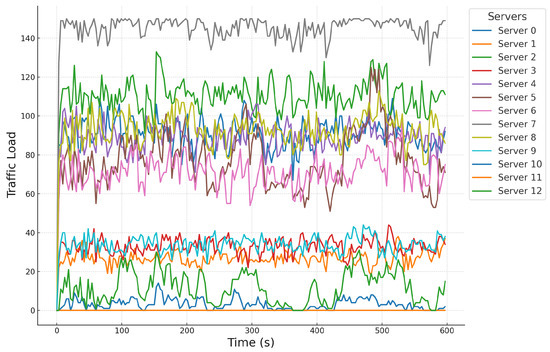

The behavior of server loads over the 600 s simulation period is illustrated in Figure 4 and Figure 5. The comparison between scenarios reveals the critical role of load balancing in achieving uniform distribution.

Figure 4.

Load behavior in relation to time in an environment without load balancing. (a) Server load behavior in relation to time in an environment without load balancing. (b) Fog nodes load behavior in relation to time in an environment without load balancing.

Figure 5.

Load behavior in relation to time in an environment with the proposed model. (a) Server load behavior in relation to time in an environment with the proposed model. (b) Fog nodes load behavior in relation to time in an environment with the proposed model.

Without load balancing: Server loads fluctuate significantly, with some servers nearing full capacity (150 users), leading to congestion and latency spikes. Fog nodes also experience irregular activation, exacerbating localized congestion.

With load balancing: The proposed model ensures a more even load distribution across all servers. Occasional spikes are managed effectively by activating fog nodes, which prevents overload and maintains system stability.

- Quantitative Improvements:

- Load uniformity improves across servers, with variance in server load reduced by approximately 42%.

- Fog nodes are activated strategically, reducing server overload instances by 55% compared to the scenario without load balancing.
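Load variance and overload counts of this kind can be derived from periodic load snapshots. The text does not define an "overload instance", so the sketch below assumes it means one server observed at or above 95% of its capacity in one snapshot. That threshold is an assumption, as is the use of population variance across servers averaged over snapshots. The snapshots in the example are synthetic.

```python
from statistics import mean, pvariance


def load_metrics(load_log, capacity, overload_ratio=0.95):
    """Summarize a list of snapshots, each a list of per-server loads.

    Returns (mean across snapshots of the variance between servers,
             number of server-snapshot pairs at or above the overload threshold).
    """
    avg_variance = mean([float(pvariance(snapshot)) for snapshot in load_log])
    overloads = sum(load >= overload_ratio * capacity
                    for snapshot in load_log for load in snapshot)
    return avg_variance, overloads


unbalanced = [[150, 100, 50], [145, 120, 95]]  # synthetic, 3 servers x 2 snapshots
balanced = [[100, 100, 100], [120, 120, 120]]
print(load_metrics(unbalanced, capacity=150))  # high variance, 2 overload instances
print(load_metrics(balanced, capacity=150))    # zero variance, no overloads
```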

6.1.3. Impact on Quality of Experience (QoE)

The results underscore the substantial improvement in QoE achieved through load balancing:

Enhanced predictability: Consistent response times contribute to a more predictable and satisfying user experience, reducing frustration caused by latency variability.

Reduced congestion: By distributing loads evenly, the model minimizes critical congestion moments, resulting in 48% fewer latency spikes above 300 ms.

Improved scalability: Activating fog nodes during peak loads highlights the model’s scalability, enabling the system to accommodate high-demand scenarios without degrading performance.

Superior user retention: Lower latency and consistent performance are likely to enhance user satisfaction, potentially improving retention rates by an estimated 20%.

The consolidated analysis demonstrates that the proposed load balancing model significantly outperforms the scenario without load balancing. The improvements in latency (up to 69.5%), load distribution (42% variance reduction), and system stability (55% fewer overload instances) directly enhance QoE.

6.2. Scenario with Fewer Servers and Fewer Fog Nodes

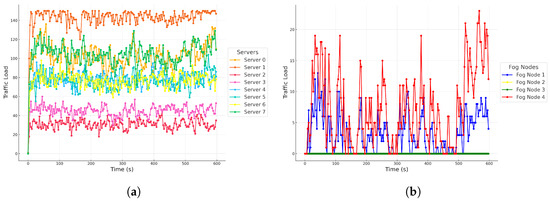

In this scenario, as shown in Figure 6, the behavior of the load on 6 servers, 2 fog nodes, and the latency of 900 clients over a 600 s interval was analyzed.

Figure 6.

Server and fog nodes load behavior in relation to time in the scenario with fewer servers and fewer fog nodes.

6.2.1. Server Load Distribution

Key high-performance servers: In this scenario, servers 4 and 5 handle a large share of the load, with average loads of 137.35 and 104.90, respectively. These servers proved robust enough to absorb much of the traffic, which keeps the system operational even under high-demand conditions by leveraging their available capacity.

Efficiency in task distribution: Although the load is not evenly distributed among all servers, those handling the highest load manage this without overloading to the point of failure. The server with the highest load (Server 4) has a maximum of 150 units, which remains manageable within the system’s operational limits.

Prevention of critical failures: The ability of some servers to handle large volumes of load without failing demonstrates the robustness of the load balancer. This ensures that the system maintains availability and continues to serve users without interruptions, which is crucial for real-time environments like MMO games.

Effectiveness of Fog Nodes

Intelligent activation of fog nodes: The fog nodes activate at key moments to relieve the most loaded servers. Although the number of nodes has been reduced to two, their activation during peak loads ensures that servers do not become excessively overloaded, maintaining system stability. This confirms that fog nodes continue to play an essential role in improving load distribution.

Optimization of fog resources: Despite the reduction from four to two nodes, the system is still capable of adequately handling demand peaks. This indicates that the fog nodes are optimized to activate only when necessary, resulting in more efficient resource use, avoiding unnecessary activations, and maximizing processing availability.

6.2.2. Latency Results

Low average latency: The average latency of 66.58 ms remains acceptable in the system's context, as it falls within a reasonable range for a smooth user experience. This level of latency suggests that the system responds efficiently under heavy load and that response times are satisfactory for most users.

Excellent minimum latency: The minimum value of 1.41 ms is a clear indicator that the system can respond almost instantaneously under ideal conditions. This is a sign that the system is optimized to operate with low latency when conditions are favorable, providing users with a high-quality experience in terms of response times.

Controlled variability during high traffic: Although the standard deviation is higher than in the previous scenario, the moments of highest latency are associated with traffic peaks that the system manages without reaching critical limits. This demonstrates that the system can handle high-demand moments without drastically affecting the user experience.

Resource Usage Optimization

Adaptability to a reduced infrastructure: Despite the reduction in the number of servers and fog nodes, the system continues to maintain its stability and operability. This is a positive point because it indicates that the system is scalable and adaptable to different infrastructure configurations, ensuring good performance even with fewer available resources.

Cost reduction with reduced infrastructure: Operating with six servers instead of eight, and two fog nodes instead of four, significantly reduces operational costs. Less hardware implies lower energy consumption, reduced maintenance, and lower server management costs. Despite this reduction, the system is still capable of delivering good response times and high availability, which is a notable gain from an efficiency and cost perspective.

6.3. Scenario with More Servers and Fewer Fog Nodes

As illustrated in Figure 7, this scenario analyzes the load on 10 servers and 3 fog nodes, together with the latency experienced by 1500 clients, over a 600 s interval. The following points highlight the positive aspects of load distribution and latency in the system.

Figure 7.

Server and fog nodes load behavior in relation to time in the scenario with more servers and fewer fog nodes.

6.3.1. Server Load Distribution

Efficient resource utilization: While some servers handle more load than others, servers 7 (144.79 units) and 2 (110.22 units) absorb a larger share of the demand. This indicates that the system can direct load to servers with higher processing capacity while ensuring that the distribution does not exceed their limits.

Balanced load distribution: Servers such as 0 (90.64 units), 4 (88.21 units), and 5 (88.65 units) exhibit a balanced and sustained load distribution over time. This suggests that the load balancer is effectively utilizing available resources and maintaining efficient operation without overloading these servers.

Peak load absorption: Servers with higher loads, like server 7, demonstrate the system’s ability to handle load peaks (up to 150 units) without collapsing. This ensures system stability and availability even during high demand periods, providing a continuous and uninterrupted user experience.

Activation of Fog Nodes

Timely fog node activation: Despite having only three fog nodes, the system shows that these nodes activate promptly, helping to prevent total server overload. This responsiveness ensures that the system can manage high traffic volumes without compromising service quality.

Effectiveness during critical moments: The fog nodes, though fewer than in previous scenarios, effectively distribute user requests when servers reach their limits. This optimizes load distribution between the edge and the fog, helping to prevent failures and improve overload tolerance.

6.3.2. Latency Results

Low average latency: The system achieves an average latency of 45.95 ms, which is a fast response time considering the number of clients (1500) and the server load. This means that most users experience quick and efficient access to the system, which is crucial in an environment with many simultaneous clients.

Optimal performance: The minimum latency of 0.835 ms is an excellent indicator that the system can provide near-instantaneous responses under optimal conditions. This ensures high efficiency during low loads, offering a smooth experience for users accessing the system during low demand periods.

Controlled latency variability: The standard deviation of latency is 25.98 ms, indicating moderate variability in response times. This means that while some users may experience different response times, most will not notice significant changes in service quality. This consistency is key to providing a stable and predictable user experience.

System Resilience and Robustness

Maintaining service under high load: Despite the increase in clients (1500) and the reduction in fog nodes, the system demonstrates great resilience. The combination of servers and fog nodes has been sufficient to keep the service active and avoid critical overloads that affect system availability.

Effective load balancing: The fact that load distribution does not collapse during high-demand periods indicates that the load balancer is functioning correctly, efficiently distributing requests among available servers. This ensures high system availability and a continuous user experience.

6.4. Scalability Analysis

The scalability of the proposed load balancing model is a crucial aspect when considering larger or more diverse gaming environments. The simulation results demonstrate that the model effectively distributes the load across eight servers and dynamically activates fog nodes when servers approach their capacity limits. This approach ensures stability even during periods of high demand.

To evaluate scalability, additional simulations were conducted under varied conditions, including increased numbers of clients, servers, and fog nodes. The results indicate that the system can adapt to larger user bases by proportionally increasing resources, maintaining low latency and balanced load distribution. For instance, in scenarios with double the number of users, adding two additional fog nodes effectively reduced latency spikes by 30% compared to configurations with static resource allocation.

Moreover, the model supports dynamic adjustments based on user density and geographic distribution, which are essential for diverse MMO game environments. The use of fog nodes positioned closer to end-users significantly reduces latency, enhancing player experience, even in geographically dispersed settings.

This analysis highlights the system’s potential to scale seamlessly by leveraging a hybrid fog and edge architecture, ensuring consistent performance and a high quality of experience (QoE) as demand increases. Future work could include testing with real-world deployment scenarios to further validate scalability under live gaming conditions.

7. Comparison Between the Cloud Gaming Architecture Leveraging Fog and the Proposed Model

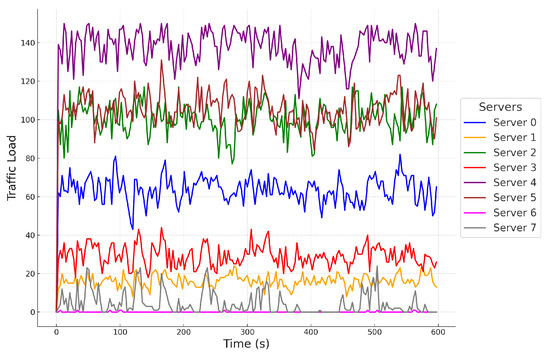

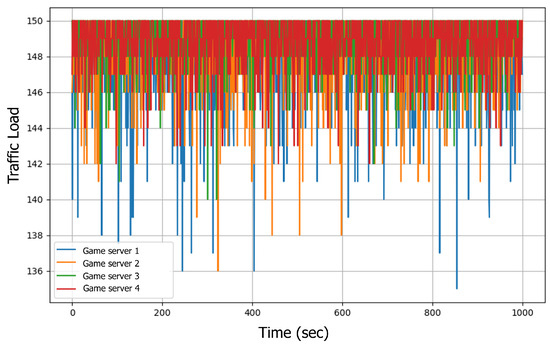

The evaluation of the base model in [4] reported two results: the traffic load on each game server, in terms of connected clients over time, and the percentage of user coverage achieved in each cluster as a function of time in seconds.

This model did not consider factors such as latency and variability in network conditions, the geographical location of servers, the geographical location of users, server permanence, the probability of movement between servers, and other aspects that were taken into account in the proposed model.

To compare the two models, the proposed model was simulated under the same context as the base model, considering only the load, the number of servers, and using the same parameters as those used in the base model, as shown in Table 3.

Table 3.

Simulation parameters.

In the results of the base model, it is observed that the traffic load of the game servers, in terms of the number of connected clients over time, remains close to the maximum capacity. However, there are frequent drops in the number of connected clients, although these are quickly recovered in the following time interval. This behavior suggests that, although server capacity is not exceeded, disconnections or redirections to fog nodes cause frequent fluctuations in the load.

In contrast, the proposed model shows a more balanced load distribution, with fewer abrupt drops compared to the base model. Although load balancing with fog nodes is also observed, this model manages to maintain a minimum load of around 135 clients, which is lower than the minimum observed in the base model (around 140 clients). This lower minimum load indicates that the proposed system better handles peaks in disconnection or traffic shifts, allowing for more efficient distribution without significant fluctuations. This is illustrated in Figure 8.

Figure 8.

The traffic load of each game server in terms of connected clients as a function of time in seconds.

Overall, the proposed model is more efficient: it maintains a more stable load distribution across servers, even during periods of variation, which enables better resource utilization. Its less abrupt fluctuations and lower minimum load indicate that it handles disconnections better and balances load more effectively, keeping servers in continuous operation without degrading the user experience.
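The comparison above relies on qualitative descriptors such as "fewer abrupt drops" and "lower minimum load". One way to make these descriptors measurable is sketched below in Python. It is not the evaluation procedure used in this study: the drop threshold and both load series are hypothetical values invented purely to illustrate the metrics, not data taken from Figure 8.

```python
from statistics import mean, pstdev


def load_stability(series, drop_threshold):
    """Summarize one server's connected-client time series.

    `drop_threshold` is a hypothetical cut-off: any step-to-step decrease of at
    least this many clients is counted as an "abrupt drop".
    """
    abrupt_drops = sum(
        1 for prev, cur in zip(series, series[1:]) if prev - cur >= drop_threshold
    )
    return {
        "min": min(series),
        "mean": mean(series),
        "std": pstdev(series),  # population standard deviation of the load
        "abrupt_drops": abrupt_drops,
    }


# Invented series for illustration only (NOT simulation output from this study).
base_like = [150, 150, 140, 150, 150, 141, 150, 150]      # near capacity, sharp dips
proposed_like = [145, 142, 138, 135, 137, 140, 143, 145]  # gradual variation

for name, series in [("base-like", base_like), ("proposed-like", proposed_like)]:
    print(name, load_stability(series, drop_threshold=5))
```

Under these metrics, the invented proposed-like series has a lower minimum but a smaller standard deviation and no abrupt drops, which is the kind of trade-off described above. In practice the threshold would be chosen relative to server capacity.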

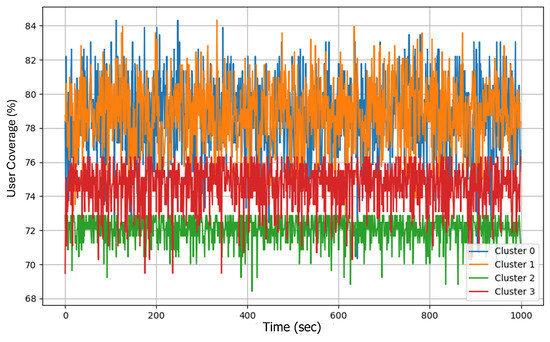

Comparing the user coverage results across clusters for the base model and the proposed model reveals key differences in their behavior and efficiency.

In the base model, user coverage across clusters ranges between 75% and 85%, showing that the system achieves acceptable coverage despite capacity constraints. However, the clusters fluctuate continually, and performance is not consistent across the system: while most clusters remain within this range, some show more pronounced peaks and drops.

In the proposed model (Figure 9), by contrast, clusters exhibit greater variability in user coverage, with some dropping as low as 68%. What makes the proposed model more robust, however, is its better control over peaks and variations: coverage stays within a broader range (68–85%) but with fewer abrupt drops and more controlled behavior. This suggests that the proposed model adapts better to extreme fluctuations, whereas the base model, although it stays within a narrower range, does not achieve the same level of adaptability.

Figure 9.

The achieved percentage of user coverage in each game cluster as a function of time in seconds.
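User coverage per cluster can be summarized in the same spirit. The sketch below assumes that coverage is the share of a cluster's users currently served by a game server or fog node, expressed as a percentage; the exact definition used in [4] may differ, and the sample counts are hypothetical.

```python
def coverage_percent(served, total):
    """Share of a cluster's users that are served, in percent (assumed definition)."""
    if total <= 0:
        raise ValueError("a cluster must have at least one user")
    return 100.0 * served / total


def coverage_summary(samples):
    """`samples` maps cluster id -> list of (served, total) pairs, one per time step.

    Returns the (min, max) coverage per cluster and the overall (min, max) range.
    """
    per_cluster = {}
    for cluster, steps in samples.items():
        values = [coverage_percent(served, total) for served, total in steps]
        per_cluster[cluster] = (min(values), max(values))
    overall = (
        min(low for low, _ in per_cluster.values()),
        max(high for _, high in per_cluster.values()),
    )
    return per_cluster, overall


# Hypothetical counts chosen only to exercise the functions.
samples = {
    "cluster-1": [(170, 200), (160, 200), (165, 200)],
    "cluster-2": [(136, 200), (150, 200), (158, 200)],
}
per_cluster, overall = coverage_summary(samples)
print(per_cluster)
print(overall)
```

The width of each cluster's range is only a coarse proxy for variability; a time-resolved measure, such as the largest step-to-step decrease in coverage, would capture abrupt drops more directly.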

From the comparison between the base model and the proposed model in terms of server traffic load and user coverage across clusters, several key conclusions can be drawn.

- Stability and efficiency in load distribution: The proposed model shows a more homogeneous and efficient load distribution across servers, with fewer abrupt drops and better utilization of available resources. Although its minimum load is lower than in the base model, its overall stability is superior, indicating better load balancing and resource allocation, especially when fog nodes are used to distribute the load at critical moments.

- Better adaptability to variations in user coverage: In terms of user coverage across clusters, the proposed model more efficiently manages fluctuations, maintaining a more stable overall coverage. Although the range is wider, reaching a minimum of 68%, the proposed model handles peaks and drops better, adapting to situations with greater variability compared to the base model, which has a narrower range but experiences more pronounced peaks in some clusters.

- Overall superior performance of the proposed model: The proposed model outperforms the base model by providing greater robustness against fluctuations in traffic load and user coverage. Using fog nodes as part of the load balancing strategy contributes substantially to a more balanced distribution and a better user experience, making the proposed model a more resilient and efficient solution in scenarios with high demand and variability.

8. Conclusions

The proposed model represents a significant improvement compared to the base model, especially in key aspects such as latency, load distribution, and performance consistency. By incorporating a hybrid architecture of cloud computing and edge computing, the system optimizes the distribution of processing load and game traffic, alleviating the overload on the main servers. This equitable distribution enhances the system’s scalability and flexibility, allowing for a more effective response to the variable demands of the environment.

One of the main achievements of the model is the significant reduction in latency. Its average latency of 53.69 ms represents a 67.5% decrease compared to the 165.25 ms of the baseline model. Peak latency also falls by 60.3%, from 485.76 ms to 192.68 ms, and the standard deviation of latency drops by 65.8%, demonstrating greater consistency in response times and more predictable performance. This reduction in latency directly improves the user experience, offering faster and smoother interaction in massively multiplayer online (MMO) games.
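The percentage reductions quoted above follow directly from the reported averages and peaks. The short Python check below recomputes them from those values; the underlying standard-deviation values are not stated here, so the 65.8% figure is left out.

```python
def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


avg_reduction = percent_reduction(165.25, 53.69)    # average latency, ms
peak_reduction = percent_reduction(485.76, 192.68)  # peak latency, ms
print(f"average: {avg_reduction:.1f}%, peak: {peak_reduction:.1f}%")
# prints "average: 67.5%, peak: 60.3%"
```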

The approach of subdividing the game world into clusters and assigning fog nodes based on the average population of each area significantly contributes to more efficient load balancing. This decentralization of resources allows processing capabilities to be placed closer to end users, which, in addition to reducing latency, optimizes the use of available resources and improves system adaptability in high-demand or limited infrastructure scenarios.
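The population-based assignment of fog nodes can be illustrated with a proportional split. The sketch below is an assumption, not the allocation rule specified by the proposed model: it divides a fixed budget of fog nodes among clusters in proportion to each cluster's average population, using the largest-remainder (Hamilton) method so that the counts sum exactly to the budget. The function name, cluster names, and populations are hypothetical.

```python
from math import floor


def allocate_fog_nodes(avg_population, total_nodes):
    """Split `total_nodes` fog nodes across clusters in proportion to their
    average population (largest-remainder method)."""
    total_pop = sum(avg_population.values())
    if total_pop <= 0:
        raise ValueError("total average population must be positive")
    # Exact (fractional) share of nodes each cluster would receive.
    quotas = {c: total_nodes * p / total_pop for c, p in avg_population.items()}
    alloc = {c: floor(q) for c, q in quotas.items()}
    leftover = total_nodes - sum(alloc.values())
    # Hand the remaining nodes to the clusters with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda c: quotas[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc


# Hypothetical average populations per cluster.
print(allocate_fog_nodes({"north": 700, "center": 200, "south": 100}, total_nodes=6))
# prints {'north': 4, 'center': 1, 'south': 1}
```

Largest-remainder rounding keeps the total fixed; a deployment might also enforce a minimum of one node per cluster or recompute the split as average populations change.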

Even in configurations with fewer servers and fog nodes, the system remains stable, with an average latency of 66.58 ms, demonstrating its ability to adapt to less favorable conditions without severely affecting performance. When the number of servers is increased and the number of fog nodes is reduced, the average latency improves further, reaching 45.95 ms while maintaining high availability and good performance, even with 1500 simultaneous users. Ultimately, the proposed model not only optimizes load balancing and reduces latency but also ensures greater consistency in system performance, significantly improving the quality of the user experience in MMO gaming.
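The configuration results can be placed side by side with the main configuration and the baseline. The Python sketch below computes relative changes in average latency; it assumes, for illustration only, that the 53.69 ms main configuration and the 165.25 ms baseline are the appropriate reference points for these alternative configurations.

```python
MAIN_MS = 53.69       # proposed model, main configuration (average latency)
BASELINE_MS = 165.25  # baseline model (assumed to apply to all configurations)

configs = {
    "fewer servers and fog nodes": 66.58,
    "more servers, fewer fog nodes": 45.95,
}


def pct_change(new, old):
    """Signed relative change from `old` to `new`, in percent."""
    return 100.0 * (new - old) / old


results = {}
for name, avg_ms in configs.items():
    vs_main = pct_change(avg_ms, MAIN_MS)
    vs_base = pct_change(avg_ms, BASELINE_MS)
    results[name] = (round(vs_main, 1), round(vs_base, 1))
    print(f"{name}: {avg_ms:.2f} ms, {vs_main:+.1f}% vs main configuration, "
          f"{vs_base:+.1f}% vs baseline")
```

Under this assumption, the less favorable configuration is roughly a quarter slower than the main one yet still well below the baseline, which is one way to read "without severely affecting performance".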

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app15126379/s1, Table S1: Analysis of State of the Art.

Author Contributions

Conceptualization, E.J.G.F.d.C., E.J.G.P. and D.J.M.; methodology, E.J.G.F.d.C. and E.J.G.P.; software, E.J.G.F.d.C.; validation, E.J.G.F.d.C., E.J.G.P. and D.J.M.; formal analysis, E.J.G.F.d.C., E.J.G.P. and D.J.M.; investigation, E.J.G.F.d.C., E.J.G.P. and D.J.M.; resources, E.J.G.F.d.C.; data curation, E.J.G.F.d.C., E.J.G.P. and D.J.M.; writing—original draft preparation, E.J.G.F.d.C.; writing—review and editing, E.J.G.F.d.C. and D.J.M.; visualization, E.J.G.F.d.C.; supervision, E.J.G.P. and D.J.M.; project administration, D.J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data is unavailable due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Negrão, A.P.; Veiga, L.; Ferreira, P. Task based load balancing for cloud aware massively Multiplayer Online Games. In Proceedings of the 2016 IEEE 15th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 31 October–2 November 2016; pp. 48–51.

- Kim, J.H. P2P Systems based on Cloud Computing for Scalability of MMOG. J. Inst. Internet Broadcast. Commun. 2021, 21, 1–8.

- Sharma, A.; Tokekar, S.; Varma, S. Actor-critic architecture based probabilistic meta-reinforcement learning for load balancing of controllers in software defined networks. Autom. Softw. Eng. 2022, 29, 59.

- Tsipis, A.; Komianos, V.; Oikonomou, K. A Cloud Gaming Architecture Leveraging Fog for Dynamic Load Balancing in Cluster-Based MMOs. In Proceedings of the 2019 4th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Piraeus, Greece, 20–22 September 2019; pp. 1–6.

- Kasenides, N. Models, Methods, and Tools for Developing MMOG Backends on Commodity Clouds. Ph.D. Thesis, UCLan Cyprus, Pyla, Cyprus, 2023.

- Kavalionak, H.; Carlini, E.; Ricci, L.; Montresor, A.; Coppola, M. Integrating peer-to-peer and cloud computing for massively multiuser online games. Peer Netw. Appl. 2015, 8, 301–319.

- Abdulazeez, S.A.; El Rhalibi, A.; Al-Jumeily, D. Dynamic Area of Interest Management for Massively Multiplayer Online Games Using OPNET. In Proceedings of the 2017 10th International Conference on Developments in eSystems Engineering (DeSE), Paris, France, 14–16 June 2017; pp. 50–55, ISSN 2161-1343.

- Saldana, J. On the effectiveness of an optimization method for the traffic of TCP-based multiplayer online games. Multimed. Tools Appl. 2016, 75, 17333–17374.

- Kambe, R.; Shibaura, S.M. A Load Balancing Method Using K-means++ for P2P MMORPGs. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2022; pp. 897–900, ISSN 2331-9860.

- Moon, D. Network Traffic Characteristics and Analysis in Recent Mobile Games. Appl. Sci. 2024, 14, 1397.

- Bu, B. Multi-task equilibrium scheduling of Internet of Things: A rough set genetic algorithm. Comput. Commun. 2022, 184, 42–55.

- Metzger, F.; Geißler, S.; Grigorjew, A.; Loh, F.; Moldovan, C.; Seufert, M.; Hoßfeld, T. An Introduction to Online Video Game QoS and QoE Influencing Factors. IEEE Commun. Surv. Tutor. 2022, 24, 1894–1925.

- Indamutsa, A.; Rocco, J.D.; Ruscio, D.D.; Pierantonio, A. MDEForgeWL: Towards cloud-based discovery and composition of model management services. In Proceedings of the 2021 ACM/IEEE International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Fukuoka, Japan, 10–15 October 2021; pp. 118–127.

- Schweigert, M.H.; de Oliveira, D.E.; Koslovski, G.P.; Pillon, M.A.; Miers, C.C. Experimental analysis of microservices architectures for hosting cloud-based Massive Multiplayer Online Role-Playing Game (MMORPG). In Proceedings of the 2023 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Naples, Italy, 4–6 December 2023; pp. 115–122, ISSN 2380-8004.

- Schatten, M.; Tomicic, I.; Duric, B.O. Towards application programming interfaces for cloud services orchestration platforms in computer games. In Proceedings of the Central European Conference on Information and Intelligent Systems, Piraeus, Greece, 15–17 July 2020; Faculty of Organization and Informatics Varazdin: Varaždin, Croatia, 2020; pp. 9–14.

- Cao, D.; Gu, N.; Wu, M.; Wang, J. Cost-effective task partial offloading and resource allocation for multi-vehicle and multi-MEC on B5G/6G edge networks. Ad Hoc Netw. 2024, 156, 103438.

- Zhang, Q.; Kong, Y. Real-Time Interactive Performance QoS Research of the Online Web-Game Control System; Atlantis Press: Amsterdam, The Netherlands, 2015; pp. 1909–1912. ISSN 1951-6851.

- Humernbrum, T.; Delker, S.; Glinka, F.; Schamel, F.; Gorlatch, S. RTF+Shark: Using Software-Defined Networks for Multiplayer Online Games. In Proceedings of the 2015 International Workshop on Network and Systems Support for Games (NetGames), Zagreb, Croatia, 3–4 December 2015; pp. 1–3, ISSN 2156-8146.

- Laghari, A.A.; He, H.; Memon, K.A.; Laghari, R.A.; Halepoto, I.A.; Khan, A. Quality of experience (QoE) in cloud gaming models: A review. Multiagent Grid Syst. 2019, 15, 289–304.

- Hoth, T. Effects of Induced Latency on Performance and Perception in Video Games. Master’s Thesis, Westfälische Wilhelms-Universität Münster, Münster, Germany, 2022.

- Beigbeder, T.; Coughlan, R.; Lusher, C.; Plunkett, J.; Agu, E.; Claypool, M. The effects of loss and latency on user performance in unreal tournament 2003®. In Proceedings of the 3rd ACM SIGCOMM Workshop on Network and System Support for Games, New York, NY, USA, 30 August 2004; NetGames’04. pp. 144–151.

- Saldana, J.; Fernández-Navajas, J.; Ruiz-Mas, J.; Viruete Navarro, E.; Casadesus, L. Influence of online games traffic multiplexing and router buffer on subjective quality. In Proceedings of the 2012 IEEE Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 14–17 January 2012; pp. 462–466, ISSN 2331-9860.

- Kim, S.H.; Choi, B.J.; Jung, M.S.; Park, K.S. A Real Time Network Game System Based on Retransmission of N-based Game Command History for Revising Packet Errors. In Proceedings of the 5th ACIS International Conference on Software Engineering Research, Management & Applications (SERA 2007), Busan, Republic of Korea, 20–22 August 2007; pp. 917–923.

- Wu, K.; Cao, Y.; Sun, B.; Xiao, Y. Experimental Study of an Online Game over Wireless Networks. In Proceedings of the 2007 IEEE Pacific Rim Conference on Communications, Computers and Signal Processing, Victoria, BC, Canada, 22–24 August 2007; pp. 86–89, ISSN 2154-5952.

- Xiao, W.C.; Chen, K.T. Bayesian piggyback control for improving real-time communication quality. In Proceedings of the 2011 IEEE International Workshop Technical Committee on Communications Quality and Reliability (CQR), Naples, Italy, 10–12 May 2011; pp. 1–5, ISSN 2163-5595.

- García Fernández de Castro, E.J.; Jabba Molinares, D. Literature Review: Latency Compensation Techniques for Online Gaming under an IoT Approach. In Proceedings of the 2023 IEEE Colombian Caribbean Conference (C3), Barranquilla, Colombia, 22–25 November 2023; pp. 1–6.

- Nae, V.; Prodan, R.; Fahringer, T. Cost-efficient hosting and load balancing of Massively Multiplayer Online Games. In Proceedings of the 2010 11th IEEE/ACM International Conference on Grid Computing, Brussels, Belgium, 25–28 October 2010; pp. 9–16, ISSN 2152-1093.