Abstract

A deep reinforcement learning framework is presented for strategy generation and profit forecasting based on large-scale economic behavior data. By integrating perturbation-based augmentation, backward return estimation, and policy-stabilization mechanisms, the framework facilitates robust modeling and optimization of complex, dynamic behavior sequences. Experimental evaluations on four distinct behavior data subsets indicate that the proposed method achieved consistent performance improvements over representative baseline models across key metrics, including total profit gain, average reward, policy stability, and profit–price correlation. On the sales feedback dataset, the framework achieved a total profit gain of 0.37, an average reward of 4.85, a low-action standard deviation of 0.37, and a correlation score of . In the overall benchmark comparison, the model attained a precision of 0.92 and a recall of 0.89, reflecting reliable strategy response and predictive consistency. These results suggest that the proposed method is capable of effectively handling decision-making scenarios involving sparse feedback, heterogeneous behavior, and temporal volatility, with demonstrable generalization potential and practical relevance.

1. Introduction

Driven by the wave of digitalization, massive volumes of economic behavior data have become a critical foundation for modern enterprises to support decision-making and optimization processes []. These data primarily encompass transaction records, click behavior, browsing paths, and price responses, capturing user decision-making patterns and market dynamics across various economic activities []. With the continuous advancement of big data technologies and computational capabilities, these large-scale datasets offer unprecedented opportunities for enterprises to refine pricing strategies and forecast revenues with higher accuracy [,]. Nevertheless, extracting meaningful insights from complex, high-dimensional, and delayed-feedback economic behavior data remains a significant challenge in the field of intelligent pricing []. Traditional pricing strategies often rely on static statistical models or manually defined rules, which tend to be inadequate in handling dynamic market environments []. Such approaches demonstrate limited flexibility and effectiveness, particularly in scenarios involving diverse product types, intricate user behavior, and intense market competition [,]. Specifically, static pricing models are unable to dynamically adjust prices, making them unsuitable for rapidly changing markets. Rule-based methods, on the other hand, depend heavily on expert experience and often fail to quantitatively model complex economic behavior and market feedback []. Moreover, economic behavior data are characterized by high dimensionality, heterogeneity, temporal dependence, and sparsity, which makes it difficult for traditional models to generalize effectively or cope with the data’s inherent complexity []. Although static pricing approaches were among the earliest methods applied in market settings, they generally set prices based on historical data and market patterns []. 
While effective in simple markets with a single product and stable conditions, such methods exhibit substantial limitations in complex real-world scenarios [], such as the inability to promptly update prices or account for consumer heterogeneity and intricate market behavior []. Regression-based dynamic pricing methods typically involve building regression models to estimate the relationship between price and sales volume, followed by pricing adjustments based on market feedback []. While these methods can partially address demand fluctuations, their limitations are evident. First, regression models often assume a fixed relationship between demand and price; however, in complex market environments, this relationship is highly nonlinear and dynamic, which cannot be adequately captured by standard regression techniques []. Second, these models usually presume immediate and continuous feedback from the market, whereas, in reality, feedback is frequently delayed and sparse, leading to uncertainty that regression models are ill-equipped to manage [,].

With the advancement of deep learning technologies, data-driven pricing strategies have attracted increasing attention in both academic and industrial domains. Deep learning enables multi-layer neural networks to extract abstract representations from complex economic behavior data, thereby facilitating more accurate and flexible decision-making processes [,]. In particular, deep reinforcement learning (DRL), which combines the perceptual capabilities of deep networks with the sequential decision-making mechanisms of reinforcement learning, has emerged as a highly promising paradigm []. Among the various DRL methods, the deep Q-network (DQN) represents a fundamental breakthrough. By leveraging deep neural networks to approximate the Q-value function, DQN effectively addresses the scalability issues encountered by traditional Q-learning in high-dimensional state spaces [,]. Through end-to-end interactions with the environment, DQN enables direct learning of pricing strategies from feedback signals []. Compared with conventional approaches, DQN exhibits superior generalization performance and adaptability to dynamic market environments. For example, Liang et al. constructed a Dueling DQN-based presale pricing model capable of adapting to complex dynamics in multi-phase sales processes, which demonstrated strong policy generalization across various product categories, thereby improving retail profitability []. Zhu et al. developed a reinforcement learning framework for multi-flight pricing, validating the policy convergence and generalization performance of DQN and other algorithms under high-dimensional state spaces and competitive market conditions such as high-speed rail interference []. Narayan et al. further demonstrated the profit advantages of DQN in high-volatility pricing scenarios within intelligent transportation systems []. 
Recent studies have also extended DRL to incorporate more diverse behavioral signals, such as inventory levels, competitor pricing, and user browsing behavior []. Effectively integrating these multidimensional features remains a core challenge in pricing-strategy modeling. To address this issue, Avramelou et al. proposed a multimodal financial embedding approach to fuse price and sentiment data while enabling interpretable weighting of each modality’s influence on the decision outcomes []. Yan et al. designed a multi-source information fusion framework that integrates graph convolutional networks and large language models for sector rotation-based asset allocation, demonstrating strong performance under multi-source financial data scenarios []. Wang et al. developed a high-dimensional state representation framework capable of capturing cross-feature interactions and enhancing the robustness of pricing decisions []. In addition, hybrid models have also been explored. Chen et al. introduced a K-means–LSTM hybrid model based on dynamic time warping (DTW) clustering to predict financial time series []. Liu et al. developed a DDPG-based pricing strategy for electric vehicle aggregators under evolving market conditions []. Moreover, Yin et al. integrated DRL with game-theoretic reasoning to derive optimal pricing strategies under strategic competition using Nash equilibrium solutions []. He et al. proposed a hybrid DRL model based on a bi-level Markov decision process that integrates day-ahead and real-time pricing mechanisms for coordinated modeling of supply–demand dynamics in electricity markets []. These recent developments underscore the increasing structural sophistication of DRL-based pricing frameworks and highlight the importance of designing architectures capable of learning from heterogeneous, delayed, and interactive economic feedback signals.

A novel framework for pricing-strategy generation and revenue prediction based on DRL is proposed in this work. By introducing a deep Q-network architecture and incorporating high-dimensional state modeling, price perturbation enhancement, and delayed-reward reevaluation mechanisms, the framework enables dynamic responses to user behavior and progressive optimization of pricing strategies. The key contributions of this work are summarized as follows:

- High-dimensional heterogeneous state modeling and long short-term return balancing: A DRL-based pricing framework is proposed that effectively addresses the challenges of modeling high-dimensional heterogeneous states and balancing short- and long-term returns using large-scale economic behavior data.

- Reward enhancement via price perturbation and delayed-feedback revisitation: A novel reward enhancement mechanism is designed based on price perturbation and delayed-feedback tracing, significantly improving model stability and applicability in real-world big data environments, especially under delayed-feedback and sparse-data conditions.

- Comprehensive empirical validation and multi-scenario applicability: Extensive experiments on multiple real-world economic datasets demonstrate the proposed method’s superior performance in terms of revenue growth rate, pricing stability, and policy generalization compared to existing baseline approaches.

A subset of the data samples and the full source code will be made publicly available at https://github.com/user837498178/DRL (accessed on 28 May 2025) after acceptance.

2. Related Works

2.1. Traditional Pricing Models and Strategies

Traditional pricing models have been extensively applied across various economic domains, particularly in product pricing and market strategy optimization []. Classical pricing approaches primarily include static pricing methods, regression-based dynamic pricing models, and pricing game strategies derived from game theory []. While each of these methods demonstrates value under specific market conditions, limitations are evident when addressing high-dimensional state modeling and real-time feedback []. Static pricing methods represent one of the earliest and most widely adopted pricing strategies. The core idea involves predefining prices based on historical data and market patterns, without accommodating dynamic market changes []. Such methods are typically suitable for relatively stable markets with predictable demand []. However, in volatile markets influenced by complex consumer behavior and competitive pricing, static pricing approaches often lack efficiency and adaptability []. Consequently, these models generally rely on averages or heuristic rules derived from historical data but lack the capacity for dynamic adjustments. To address these shortcomings, regression-based dynamic pricing models have been introduced []. These models dynamically adjust prices by establishing functional relationships between price, sales, and demand. Regression analysis is commonly used to model the dependency between pricing and demand. A representative linear regression model is expressed as

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n + \epsilon$$

where $y$ denotes sales or demand, $x_1, \ldots, x_n$ represent feature variables related to price and market conditions, $\beta_0, \ldots, \beta_n$ are regression coefficients, and $\epsilon$ is the error term. This approach assumes a linear or otherwise fixed functional relationship between price and demand. However, in practical scenarios, the relationship between pricing and consumer demand is often nonlinear and influenced by various factors, such as psychological behavior and seasonal market fluctuations. Therefore, regression-based dynamic pricing methods tend to lack accuracy and stability when faced with such complexity. Pricing game strategies derived from game theory have been widely applied in competitive market scenarios, especially under multi-agent competition []. Game theory offers a systematic framework for analyzing strategic behavior among market participants, where pricing is often a critical decision variable []. Typical game-theoretic models involve defining the players, strategy spaces, and payoff functions, from which equilibrium pricing can be derived []. For example, in a classical Nash equilibrium model, each participant chooses the optimal strategy considering the strategies of others. The payoff function can be expressed as

$$\pi_i = \pi_i(p_1, p_2, \ldots, p_n)$$

where $\pi_i$ denotes the profit of the i-th participant, $p_1, p_2, \ldots, p_n$ are the pricing strategies of all participants, and the profit function reflects how revenue varies under different pricing scenarios. Although game-theoretic approaches provide a theoretical framework for analyzing pricing competition, practical application is constrained by the dynamic nature of markets, nonlinear price feedback, and the unpredictability of competitor behavior. These models often assume fully rational agents, thereby overlooking the complexity of consumer behavior and the presence of irrational market factors, which limits their applicability in complex real-world environments [].

2.2. Applications of Deep Reinforcement Learning in Pricing Scenarios

DRL, which integrates reinforcement learning (RL) with deep learning, has gained substantial traction in various practical domains in recent years [,]. RL, as a major branch of machine learning, enables agents to learn optimal strategies by interacting with the environment. The fundamental components of RL include the agent, environment, state, action, and reward []. An agent selects an action under a given state and updates its strategy based on feedback (reward or penalty) from the environment, aiming to maximize the cumulative reward. The value function in RL is defined as

$$V(s) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t} r_t \,\middle|\, s_0 = s\right]$$

where $V(s)$ represents the expected value under state $s$, $r_t$ is the reward at time $t$, and $\gamma$ is the discount factor representing the attenuation of future rewards. By iteratively updating strategies, agents can discover optimal policies in complex environments. In DRL, deep neural networks are employed to approximate value or policy functions, effectively addressing the computational challenges posed by high-dimensional state spaces faced by traditional RL []. Among the most representative DRL algorithms is the deep Q-network (DQN), which combines Q-learning with deep learning to approximate the Q-function using neural networks, enabling the model to handle complex and high-dimensional state spaces []. The Q-function is defined as

$$Q(s, a) = \mathbb{E}\left[r + \gamma \max_{a'} Q(s', a')\right]$$

where $Q(s, a)$ denotes the expected return from taking action $a$ in state $s$, $s'$ is the next state, $r$ is the immediate reward, $\gamma$ is the discount factor, and $\max_{a'} Q(s', a')$ represents the maximum Q-value over all possible actions in state $s'$. Through end-to-end neural network training, DQN can learn optimal pricing policies in complex environments []. Additionally, algorithms such as deep deterministic policy gradient (DDPG) and asynchronous advantage actor–critic (A3C) have also been applied in pricing decisions, particularly in scenarios involving continuous action spaces and high-dimensional state representations []. DDPG is a policy-gradient-based algorithm that estimates both the policy and value functions through deep networks to solve continuous control problems []. A3C leverages asynchronous multi-threaded training to enhance efficiency and has demonstrated strong performance in various tasks []. Despite the promising performance of DRL in pricing applications, several challenges remain. First, training DRL models typically requires a large volume of interaction data, which may be difficult to obtain in real-world pricing scenarios []. Second, due to the dynamic and complex nature of economic environments, DRL models are prone to overfitting and training instability []. Furthermore, delayed rewards and partial feedback complicate the learning process, making the design of effective reward functions and the mitigation of these issues a critical area for future research.
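As an illustration of the Q-function recursion above, the following minimal sketch applies a one-step Q-learning update to a small tabular Q-function. The table sizes, learning rate `alpha`, and the function names `td_target` and `q_update` are assumptions chosen for illustration; a DQN would replace the table with a neural network trained toward the same target.

```python
import numpy as np


def td_target(reward, next_q_values, gamma=0.99, done=False):
    """One-step target r + gamma * max_a' Q(s', a'); no bootstrap at episode end."""
    if done:
        return float(reward)
    return float(reward + gamma * np.max(next_q_values))


def q_update(q_table, state, action, reward, next_state,
             alpha=0.1, gamma=0.99, done=False):
    """Move Q(s, a) a fraction alpha toward the one-step target (in place)."""
    target = td_target(reward, q_table[next_state], gamma, done)
    q_table[state, action] += alpha * (target - q_table[state, action])
    return q_table


# Hypothetical toy problem: 3 states, 2 pricing actions.
q = np.zeros((3, 2))
q[1] = [0.5, 2.0]  # pretend state 1 already has value estimates
q = q_update(q, state=0, action=1, reward=1.0, next_state=1,
             alpha=0.5, gamma=0.9)
print(q[0, 1])
```

In this toy call the target is 1.0 + 0.9 × 2.0 = 2.8, so the entry for state 0 and action 1 moves halfway from 0 toward 2.8.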

2.3. Revenue Prediction and Economic Behavior Modeling

Revenue prediction constitutes a core problem in economics, particularly in sales forecasting and market analysis, where accurate prediction supports the development of optimized pricing strategies []. In recent years, deep learning methods—especially those tailored to sequential data such as long short-term memory (LSTM) networks and transformers—have been widely adopted for sales forecasting []. LSTM networks, designed for sequence modeling, introduce gated mechanisms that effectively address the vanishing gradient problem observed in traditional recurrent neural networks (RNNs). The fundamental components of an LSTM unit include the input, forget, and output gates, with the update equations as follows:

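$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
\tilde{C}_t &= \tanh(W_C [h_{t-1}, x_t] + b_C) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

where $f_t$ is the output of the forget gate, $i_t$ is the input gate, $\tilde{C}_t$ is the candidate cell state, $C_t$ is the updated cell state, $o_t$ is the output gate, and $h_t$ is the hidden state. The LSTM structure enables the modeling of long-range dependencies, which play a vital role in revenue forecasting. Transformer models, built on self-attention mechanisms, offer advantages over LSTM in handling long sequences through parallel processing []. The core operation of the self-attention mechanism is formulated as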

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V$$

where $Q$, $K$, and $V$ represent the query, key, and value matrices, respectively, and $d_k$ denotes the dimensionality of the keys. This mechanism enables the modeling of dependencies between all positions in a sequence, facilitating more expressive representations. Although LSTM and transformer models have demonstrated strong performance in revenue forecasting, several challenges persist. First, the complexity and variability of temporal data make models susceptible to noise interference []. Second, these models often assume a fixed sequential relationship in data; however, in practice, user behavior is influenced by seasonal effects, promotional campaigns, and other external factors. Addressing the non-stationarity of time-series data remains a key direction for future research [].
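The self-attention operation above can be sketched in a few lines of NumPy. This is a single-head illustration under assumed toy dimensions (sequence length 4, key dimension 8, value dimension 16); the function names are chosen here for illustration and no masking or learned projections are included.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V and the attention weights."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (T_q, T_k) similarity scores
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(4, 8))
V = rng.normal(size=(4, 16))
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape, w.sum(axis=1))
```

Each output row is a weighted average of the rows of `V`, so when all keys are identical the weights become uniform and every output row equals the mean of the value vectors.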

3. Materials and Method

3.1. Dataset Collection

The dataset utilized in this study was collected from major e-commerce platforms across multiple representative industries over a continuous period from January 2023 to December 2023. The data exhibit a well-defined time-series structure and encompass diverse behavioral features. To ensure both the practical relevance and generalizability of the experimental results, four core types of economic behavior data were gathered: product pricing records, sales feedback, user browsing behavior, and inventory levels. Data sources included leading platforms such as JD (jd.com), Taobao (taobao.com), Suning (suning.com), and Vipshop (vip.com). For each category, between 200,000 and 400,000 complete records were collected, resulting in a total dataset size exceeding 1,000,000 entries. The data were collected at a daily granularity, enabling robust time-series modeling, as shown in Table 1.

Table 1.

Behavior data subtype statistics.

During the data collection process, an automated scraping system was developed using Python 3.13-based tools, including Scrapy and Selenium. While these tools enabled the acquisition of large-scale behavior data from multiple e-commerce platforms, their use also introduced potential risks, such as parsing errors caused by frequent website structure changes, anti-scraping mechanisms, and inconsistent page templates. To mitigate these issues, several safeguards were employed: structural schema validation was applied during crawling to ensure field consistency, periodic manual sampling was conducted to audit data quality, and records with missing or anomalous key attributes were filtered out during preprocessing. Additionally, data collection was performed within a constrained time window to minimize discrepancies due to platform-side updates. For the pricing records, data were periodically retrieved from product detail pages and promotional listings, capturing listed prices, discount prices, and associated timestamps. The pricing fields followed a consistent structure, incorporating price fluctuation ranges, update frequency, and promotional tags, which effectively reflected the dynamic characteristics of pricing strategies. Sales feedback data were obtained from historical transaction statistics and monthly sales rankings, with fields including daily sales volume, cumulative monthly sales, and sales ranks, normalized using timestamp alignment for consistent analysis. Browsing behavior data were extracted from page popularity indicators, click-tracking modules, and redirection logs in recommendation zones. The collected fields included click frequency, navigation depth, and average session duration, providing insight into user engagement patterns. 
Inventory data were inferred from stock status prompts, restock frequency indicators, and seller dispatch cycles on product pages, and further refined through integration with pricing and sales information to approximate real-world supply–demand dynamics.

3.2. Data Preprocessing and Augmentation

In the framework of DRL, data preprocessing and augmentation constitute critical steps to ensure model stability and accuracy in complex market environments. In practical applications, economic behavior data often suffer from missing values and outliers. Furthermore, due to delayed market feedback, handling lagged data and enhancing data diversity are core challenges in preprocessing. To address these issues, several preprocessing and augmentation techniques are applied to improve learning efficiency and model robustness. First, the imputation of missing values and treatment of outliers are essential preprocessing procedures. In economic behavior datasets, particularly transaction logs and market feedback, missing or anomalous data are frequently encountered. Missing values typically arise from incomplete user actions or data collection errors, while outliers refer to values that significantly deviate from expected ranges, such as sudden spikes in product sales or abrupt price fluctuations. Common imputation methods include mean imputation, interpolation, and prediction-based filling using other features. In this work, a k-nearest neighbors (KNN)-based imputation approach is adopted. The method estimates missing values by identifying and averaging the k most similar instances. Given a dataset $D = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i$ denotes the feature vectors and $y_i$ represents the target variables, missing values can be filled as follows:

$$\hat{y} = \frac{1}{k} \sum_{i=1}^{k} y_i$$

where $k$ denotes the number of nearest neighbors, $y_i$ are the target values of the $k$ most similar data points, and $\hat{y}$ is the imputed target. Outliers are identified and corrected using standard deviation thresholds or box plot-based statistical techniques, ensuring the rationality and consistency of the data. After addressing missing and anomalous values, the next step involves constructing state representations from the triplets of price, sales, and inventory. To enable DRL models to interpret economic behavior data, these variables must be transformed into state spaces amenable to model learning. While traditional pricing models often rely solely on price and sales data, inventory levels and competitive dynamics also critically influence pricing decisions. Accordingly, a multidimensional state representation is constructed by integrating the price ($p_t$), sales ($q_t$), and inventory ($I_t$), forming the state vector $s_t = [p_t, q_t, I_t]$. These state variables provide richer information about market dynamics and allow more informed optimization of pricing strategies. To further enhance the representational quality, weighted transformations of the price, sales, and inventory are introduced. The refined state representation is defined as

$$\tilde{s}_t = \left[\, p_t,\; \frac{q_t}{I_t},\; g(I_t) \,\right]$$

where $p_t$ represents the price at time $t$, $q_t / I_t$ denotes the sales-to-inventory ratio, and $g(I_t)$ is a nonlinear transform that captures the influence of inventory levels. This formulation enables the model to better grasp the interdependencies among pricing, demand, and stock, thereby facilitating more precise pricing decisions. Subsequently, a price perturbation mechanism based on a normal distribution is introduced to simulate variations in market response. In real-world markets, price fluctuations often trigger nonlinear and delayed changes in sales. To emulate such feedback behavior, a stochastic price perturbation strategy is adopted. Let $\Delta p_t$ denote the price perturbation at time $t$, sampled from a normal distribution:

$$\Delta p_t \sim \mathcal{N}(0, \sigma_p^2)$$

where $\sigma_p$ represents the standard deviation of the price perturbation. This augmentation technique increases the diversity of training data and enables the model to generalize better across different pricing conditions, reducing the risk of overfitting and enhancing adaptability to unseen market fluctuations. The resulting impact of price perturbation on sales can be modeled as

$$q_t = \alpha\,(p_t + \Delta p_t) + \beta\, I_t + \epsilon_t$$

where $q_t$ denotes the sales volume at time $t$, $p_t$ is the price, $I_t$ is the inventory level, $\alpha$ and $\beta$ are regression coefficients, and $\epsilon_t$ is a stochastic noise term representing market volatility. This perturbation-based approach enhances data diversity and model robustness. Due to the presence of delayed market feedback in economic behavior data, handling lagged responses constitutes another important aspect of preprocessing. In pricing optimization, the influence of pricing decisions on sales and revenue is often realized only after a temporal delay. To account for this effect, a delayed-reward reestimation mechanism is introduced to construct temporally extended feedback. Specifically, suppose the model selects an action $a_t$ (pricing strategy) at state $s_t$ at time $t$ and receives an immediate reward $r_t$ corresponding to the observed revenue. However, due to feedback delays, the actual outcomes may only manifest in subsequent time steps. To model this, a multi-step return approach is employed to compute the delayed reward as

$$R_t^{(n)} = \sum_{k=0}^{n-1} \gamma^{k} r_{t+k}$$

where $r_{t+k}$ represents the reward received at time $t+k$, $\gamma$ is the discount factor, and $n$ is the number of steps in the lookahead window. This temporal aggregation allows the model to assess the long-term impact of pricing decisions, reducing over-reliance on short-term fluctuations. The delayed-reward reestimation mechanism therefore addresses the problem of lagged market response and facilitates more rational pricing behavior. Through the aforementioned preprocessing and augmentation techniques, challenges related to missing values and anomalies are resolved, while learning performance and robustness are improved via high-dimensional state representation, stochastic price perturbation, and delayed-reward modeling. These enhancements enable DRL models to better adapt to dynamic market environments, thereby improving the stability and precision of pricing strategies.
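The three preprocessing steps described in this subsection (KNN-based imputation, Gaussian price perturbation, and multi-step delayed-reward aggregation) can be sketched as follows. This is a minimal illustration rather than the implementation used in the experiments: the function names, Euclidean distance metric, toy data, and parameter values (`k`, `sigma`, `gamma`, `n`) are all assumptions.

```python
import numpy as np


def knn_impute(X, y, k=3):
    """Fill NaN entries of y with the mean of y over the k nearest complete rows of X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).copy()
    known = ~np.isnan(y)
    for i in np.where(~known)[0]:
        # Euclidean distance from the incomplete record to every complete record.
        dists = np.linalg.norm(X[known] - X[i], axis=1)
        nearest = np.argsort(dists)[:k]
        y[i] = y[known][nearest].mean()
    return y


def perturb_prices(prices, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each price."""
    prices = np.asarray(prices, dtype=float)
    return prices + rng.normal(0.0, sigma, size=prices.shape)


def n_step_return(rewards, t, n, gamma):
    """Discounted sum of up to n rewards starting at step t (truncated at sequence end)."""
    window = np.asarray(rewards, dtype=float)[t:t + n]
    discounts = gamma ** np.arange(len(window))
    return float(np.sum(discounts * window))


# Hypothetical toy data.
X = [[0.0], [1.0], [2.0], [10.0]]
y = [1.0, np.nan, 3.0, 100.0]
filled = knn_impute(X, y, k=2)

rng = np.random.default_rng(0)
prices = np.array([10.0, 12.0, 11.5])
augmented = perturb_prices(prices, sigma=0.5, rng=rng)

ret = n_step_return([1.0, 2.0, 3.0, 4.0], t=1, n=2, gamma=0.5)
print(filled, augmented, ret)
```

In the toy data, the missing target at index 1 is replaced by the average of its two nearest neighbors (1.0 and 3.0), and the two-step return from step 1 is 2.0 + 0.5 × 3.0 = 3.5.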

3.3. Proposed Method

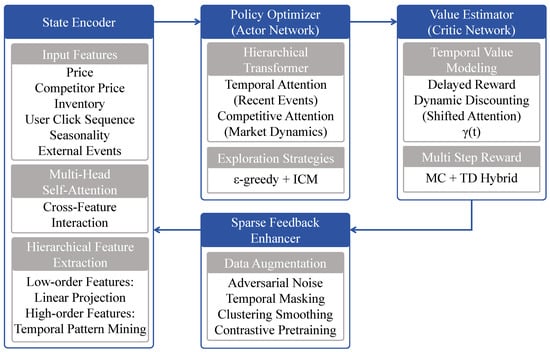

A modular DRL strategy-generation system was constructed, in which preprocessed temporal data features were initially processed by a state encoder for multi-level feature extraction. Cross-modal interactions were subsequently realized through multi-head self-attention mechanisms. A strategy optimizer then generated decision actions based on the encoded states, employing an $\epsilon$-greedy strategy combined with an intrinsic motivation mechanism to facilitate exploration. Concurrently, a value estimator modeled future returns based on current states and actions using delayed modeling and dynamic discounting, with training signals optimized via multi-step mixed returns. To enhance feedback robustness, a sparse-enhancement module was introduced, which incorporated adversarial perturbation, temporal masking, and cluster smoothing for structural augmentation and prior training. All modules functioned cooperatively in a closed-loop configuration, enabling efficient generation and dynamic optimization of decision policies under high-dimensional states, as shown in Figure 1 and Table 2. The overall architecture follows an actor–critic paradigm based on the deep Q-network (DQN) framework. The policy network (actor) generates a stochastic action distribution through a softmax layer, while the value network (critic) estimates the Q-values using a multi-step return formulation. This design combines the sample efficiency and convergence stability of value-based learning with the flexibility of gradient-based policy optimization.

Figure 1.

Overview of the proposed DRL framework for pricing-strategy generation. It includes a state encoder for multi-level feature extraction, a transformer-based policy optimizer with exploration mechanisms, a value estimator for delayed-reward modeling, and a sparse-feedback enhancer to improve training robustness.

Table 2.

Layer-wise architecture and parameter count of the proposed method.

3.3.1. Policy-Learning Module

The policy-learning module represents the core component of the proposed DRL-based pricing-strategy framework, responsible for mapping high-dimensional economic state inputs to distributions over pricing actions. The entire process can be abstractly formulated by the following mathematical expression:

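$$\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{a_t \sim \pi(\cdot \mid s_t)}\left[\sum_{t} \gamma^{t} r_t\right]$$

Here, $\pi^{*}$ denotes the optimal pricing policy, and $\pi(a_t \mid s_t)$ represents the policy function, which outputs a probability distribution over actions given the state $s_t$. The state $s_t$ comprises the current price $p_t$, competitor pricing, user click sequences, inventory levels $I_t$, seasonal factors, and external events. The action $a_t$ refers to the pricing decision made by the platform at time $t$. The immediate reward $r_t$ is defined as follows:

$$r_t = \lambda_1 \Pi_t + \lambda_2 G_t - \lambda_3 F_t$$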

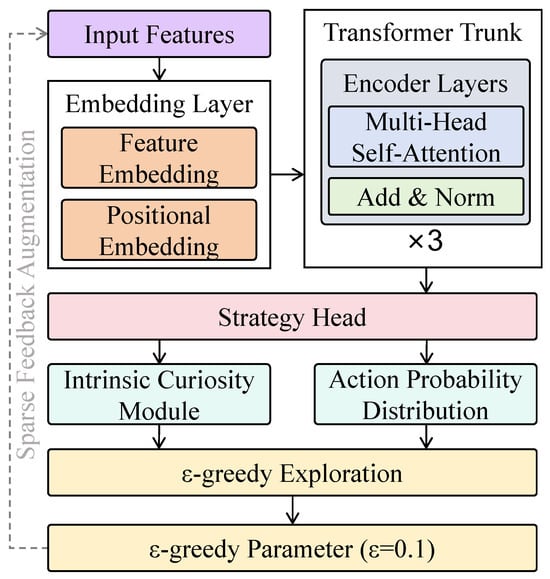

In this formulation, $\Pi_t$ denotes the actual profit at time $t$, $G_t$ measures the relative increase in sales volume compared to a historical average, and $F_t$ penalizes abrupt pricing fluctuations to encourage smooth strategy evolution. The weighting coefficients $\lambda_1$, $\lambda_2$, and $\lambda_3$ are set to emphasize a profit-oriented optimization objective while incorporating considerations for sales growth and pricing stability. The learned mapping function from state to action distribution guides the platform in selecting optimal pricing strategies at each time step. The input features of this module include current product prices, competitor prices, inventory levels, user click-behavior sequences, seasonality factors, and external event variables. These features are vectorized via an embedding layer and fused with positional embeddings to retain both sequential and structural information within the transformer backbone network. The embedded state tensor $X \in \mathbb{R}^{T \times d}$, where $T$ denotes the sequence length and $d$ is the embedding dimension, is processed through multiple transformer encoder layers for state representation modeling, as shown in Figure 2.

Figure 2.

Architecture of the policy-learning module. The design includes feature embedding, a transformer encoder with multi-head attention, a strategy head for action distribution, and a dual exploration mechanism combining $\epsilon$-greedy selection with intrinsic curiosity.
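The composite immediate reward described in this subsection (a profit term, a relative sales-gain term, and a penalty on abrupt price changes) can be sketched as below. The default weights are hypothetical placeholders, not the values used in the experiments, and the specific forms of the sales-gain and price-fluctuation terms (relative deviation from a historical average and relative absolute price change) are assumptions made for illustration.

```python
def pricing_reward(profit, sales, hist_avg_sales, price, prev_price,
                   w_profit=0.7, w_sales=0.2, w_smooth=0.1):
    """Weighted reward: profit + relative sales gain - price-jump penalty.

    The weights are illustrative placeholders; the penalty uses the relative
    absolute price change as one possible measure of abrupt fluctuation.
    """
    sales_gain = (sales - hist_avg_sales) / hist_avg_sales
    price_jump = abs(price - prev_price) / prev_price
    return w_profit * profit + w_sales * sales_gain - w_smooth * price_jump


# Same profit and sales, but a larger price jump yields a lower reward.
smooth = pricing_reward(10.0, 120.0, 100.0, price=10.5, prev_price=10.0)
abrupt = pricing_reward(10.0, 120.0, 100.0, price=15.0, prev_price=10.0)
print(smooth, abrupt)
```

With equal profit and sales, the call with the larger price change receives the smaller reward, which is the smoothing behavior the penalty term is meant to encourage.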

The transformer trunk is composed of three stacked encoder layers, each consisting of a multi-head self-attention mechanism and a feed-forward network, both integrated with residual connections and layer normalization (Add and Norm). In each self-attention layer, the input $X$ is projected into queries ($Q$), keys ($K$), and values ($V$), and the attention weight matrix is computed as $A = \mathrm{softmax}(QK^{\top}/\sqrt{d_k})$. The contextual representation is then obtained via $Z = AV$, followed by multi-head concatenation and a linear projection to yield the fused feature representation. This design enables the policy network to capture intricate dependencies between heterogeneous features such as price trends, user engagement, and competitive actions, which is particularly suitable for economic behavior modeling scenarios characterized by complex causal structures and market dynamics. For instance, the mechanism can emphasize recent user behavior spikes during promotional periods or prioritize inventory fluctuations when supply constraints emerge. Following the transformer backbone, the output features are passed to the strategy head to generate action probabilities. A fully connected layer maps the hidden states to a probability distribution over the pricing action space, denoted as $\mathcal{A}$, with the softmax function ensuring proper probability constraints. Specifically, given the state representation $h_t$ at time $t$, the policy function is defined as $\pi(a_t \mid s_t) = \mathrm{softmax}(W h_t + b)$, where $W$ and $b$ are trainable parameters. The resulting probability distribution is used to sample a pricing action $a_t$, which is then passed into the environment for interaction. To enhance exploration capabilities and generalizability, the policy-output stage incorporates an $\epsilon$-greedy strategy and an intrinsic curiosity module (ICM). In the $\epsilon$-greedy strategy, the action with the highest probability is selected with probability $1 - \epsilon$, while a random action is selected with probability $\epsilon$, with the value of $\epsilon$ specified in the experiments. 
The ICM further defines an intrinsic reward based on the prediction error between state–action pairs, thereby encouraging exploration in underrepresented regions of the state space and alleviating policy degradation caused by sparsity in economic behavior data. This design of the policy-learning module offers several mathematical advantages. First, the transformer-based state modeling process can be viewed as constructing a class of learnable dynamic feature kernel functions within the state space, where the self-attention mechanism adaptively adjusts the weight distribution among features, which offers greater flexibility than fixed-structure convolutional or recurrent units. Second, the multi-head mechanism enables the parallel modeling of local and global dependencies across multiple subspaces, enhancing the policy’s sensitivity to complex market dynamics such as promotional events and seasonal fluctuations. Third, the probabilistic output of the policy, combined with the $\epsilon$-greedy exploration mechanism, provides interpretable and elastic action selection, which reduces the risk of converging to suboptimal local solutions.
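The computation described above can be illustrated with a minimal sketch in plain Python. It shows single-head scaled dot-product attention, the softmax strategy head, and $\epsilon$-greedy selection. The toy inputs, the identity projections, the weight matrix `W`, and the value `epsilon=0.1` are assumptions chosen for illustration and are not taken from the experiments.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out


def policy_probs(h, W, b):
    """pi(a | s) = softmax(W h + b); W holds one row per pricing action."""
    logits = [sum(w * x for w, x in zip(row, h)) + bias
              for row, bias in zip(W, b)]
    return softmax(logits)


def epsilon_greedy(probs, epsilon, rng):
    """Greedy action with probability 1 - epsilon, uniform random otherwise."""
    if rng.random() < epsilon:
        return rng.randrange(len(probs))
    return max(range(len(probs)), key=probs.__getitem__)


# Toy sequence with T = 3 steps and d = 2 features (hypothetical values).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Z = attention(X, X, X)  # identity projections keep the sketch short
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # three hypothetical price actions
b = [0.0, 0.0, 0.0]
probs = policy_probs(Z[-1], W, b)
action = epsilon_greedy(probs, epsilon=0.1, rng=random.Random(0))
```

A full implementation would use learned projection matrices for $Q$, $K$, and $V$, several heads, and the three-layer encoder stack; the sketch only fixes the order of operations.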

3.3.2. Reward Estimation Module

The reward estimation module, serving as the core component for value evaluation in reinforcement learning, is responsible for estimating the contribution of each pricing action to long-term returns. Given that feedback in real-world platforms is often significantly delayed, sparse, and incomplete, a reward-modeling structure integrating multi-step return and dynamic discounting has been designed to enhance both the accuracy of return estimation and the stability of training. Architecturally, the reward estimation module adopts a multi-layer, one-dimensional convolutional neural network (1D-CNN) tailored for temporal modeling, which is motivated by the need to capture local temporal dependencies (e.g., short-term revenue trends or clustered sales fluctuations) in reward sequences with lower computational overhead. The input consists of the state representation vectors from the policy-learning module (i.e., the transformer output), shaped as $B \times T \times d$, where $B$ denotes the batch size, $T$ is the temporal window length, and $d$ is the feature dimension. The first convolutional layer employs a kernel size of with channels, resulting in an output dimension of ; the second layer uses a kernel size of with channels, yielding . A global average pooling layer compresses the temporal dimension into a single vector. This is followed by two fully connected layers with widths of 128 and 1, respectively, producing the value estimate for each state–action pair. This structure is not only computationally lightweight but also enhances local dependency modeling among adjacent states through the sliding nature of convolution kernels. From a modeling perspective, the objective function of this module is based on a hybrid multi-step return, which combines Monte Carlo return and temporal difference return through weighted fusion. The learning objective is to minimize the Bellman error as follows:

where n denotes the number of backward steps and represents the n-step cumulative return, defined as

where denotes the dynamic discount factor, which is designed as a time-decaying function:

This formulation allows the model to assign moderate but diminishing attention to long-term rewards, thereby balancing short-term and long-term strategy optimization. In experiments, is set to and to , which have been empirically determined to be optimal. This module is jointly trained with the policy-learning module, forming an actor–critic architecture. The policy network outputs pricing actions, while the value network estimates the returns for those actions under given states. The total loss function used for joint optimization is

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{policy}} + \lambda\, \mathcal{L}_{\text{value}}$$

where $\lambda$ is the weighting coefficient for value loss, which is set to to maintain a balance between policy learning and value estimation. The policy loss $\mathcal{L}_{\text{policy}}$ is defined as the cross-entropy between predicted and high-reward actions, encouraging the network to prefer actions with higher expected returns. This reward estimation module offers several advantages in the pricing task. First, the convolutional structure demonstrates strong capabilities in modeling local behavior sequences, which aligns well with the needs of e-commerce pricing scenarios characterized by high correlation and low latency. Second, the use of multi-step returns mitigates the high variance of single-step temporal difference methods and the high bias of full-trajectory returns, achieving a favorable bias–variance trade-off. Third, the introduction of dynamic discounting enables the model to adapt to varying time scales, thereby enhancing generalization capabilities. Mathematically, this module also satisfies the expected convergence properties. When the learning rate and , the following convergence holds:

This implies that the module’s estimation of long-term cumulative rewards asymptotically approaches the expected return in the true environment, ensuring that policy optimization is guided by reliable value signals rather than noise.
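To make the return estimation concrete, the following sketch computes an n-step return with a step-dependent discount and combines the resulting value loss with a policy loss. The exponential form `gamma0 * exp(-decay * k)`, the parameter names `gamma0`, `decay`, and `lam`, and all numeric values are assumptions for illustration; the exact discount schedule and coefficients used in the experiments are not reproduced here.

```python
import math


def dynamic_discount(k, gamma0, decay):
    """Assumed time-decaying discount for step k: gamma0 * exp(-decay * k)."""
    return gamma0 * math.exp(-decay * k)


def n_step_return(rewards, bootstrap_value, gamma0, decay):
    """Discounted sum of n observed rewards plus a bootstrapped tail value."""
    g, weight = 0.0, 1.0
    for k, r in enumerate(rewards):
        g += weight * r
        weight *= dynamic_discount(k, gamma0, decay)
    return g + weight * bootstrap_value


def value_loss(predictions, targets):
    """Mean squared error between value estimates and n-step targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def total_loss(policy_loss, v_loss, lam):
    """Joint actor-critic objective: policy loss plus lam-weighted value loss."""
    return policy_loss + lam * v_loss


# Hypothetical three-step window with a bootstrapped value of 2.0.
target = n_step_return([1.0, 0.5, 0.0], bootstrap_value=2.0,
                       gamma0=0.95, decay=0.05)
loss = total_loss(policy_loss=0.7,
                  v_loss=value_loss([3.0], [target]),
                  lam=0.5)
```

With `decay=0.0` the function reduces to the usual constant-discount n-step return, which makes the effect of the decay term easy to isolate when tuning.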

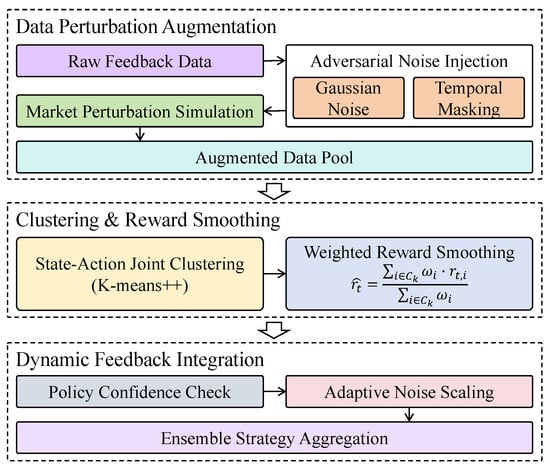

3.3.3. Sparse-Feedback-Enhancement Module

In large-scale data environments, behavioral feedback often exhibits high levels of sparsity and heterogeneity, particularly under conditions in which data recording is constrained by uneven user attention, short sampling intervals, or asynchronous event timing. These factors frequently result in missing or delayed supervisory signals, which can significantly undermine the accuracy of value estimation during training, amplify gradient propagation errors, and ultimately lead to policy degradation or convergence to suboptimal solutions. This issue critically impairs the model’s generalization and stability in complex environments. To address this, a sparse-feedback-enhancement module (reward denoising module) has been designed to improve robustness, generalizability, and exploratory capabilities under sparse-feedback conditions. This module integrates data perturbation augmentation, temporal masking, state–action clustering, and weighted return smoothing as core mechanisms, as shown in Figure 3.

Figure 3.

Illustration of the sparse-feedback-enhancement module, which enhances policy robustness through data augmentation, clustering-based reward smoothing, adaptive noise scaling, and dynamic feedback integration.

The first component, data perturbation augmentation, includes adversarial noise injection and Gaussian noise perturbation to simulate uncertainty in market feedback by generating perturbed samples. Adversarial noise is computed using the Fast Gradient Sign Method (FGSM), applying the perturbation $\delta = \eta \cdot \mathrm{sign}(\nabla_x \mathcal{L})$ to the input state features $x$, where $\eta$ is the perturbation magnitude and $\mathcal{L}$ is the training loss, thereby yielding perturbed samples $x' = x + \delta$. Gaussian noise sampled from $\mathcal{N}(0, \sigma^2)$ is added to critical variables such as price and inventory to generate boundary-near training instances. To further enhance robustness to partial temporal observation, a temporal masking mechanism is employed, randomly masking a proportion of time steps $t$ in the state sequence. This forces the model to infer action values from incomplete observations, thereby improving tolerance to missing data. The second component, clustering and reward smoothing, performs joint clustering of high-dimensional state–action pairs using the K-means++ algorithm. Within each cluster $C_k$, a weighted average reward $r_i^{\text{smooth}}$ is constructed as the smoothed feedback signal, calculated as

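$$r_i^{\text{smooth}} = \frac{\sum_{j \in \mathcal{N}_K(i)} w_{ij}\, r_j}{\sum_{j \in \mathcal{N}_K(i)} w_{ij}}$$

where $\mathcal{N}_K(i)$ denotes the KNN of $i$ in the embedding space and $w_{ij}$ is a distance-based weight defined by $w_{ij} = \exp\left(-\beta \lVert e_i - e_j \rVert^2\right)$, where $e_i$ is the embedding of the state and $\beta$ is a decay coefficient. A policy confidence check mechanism is further incorporated, executing smoothing only when the output confidence exceeds a predefined threshold, preventing over-reliance on noisy data during early exploration stages and preserving training validity. During training, the original reward $r_i$ is fused with the smoothed reward $r_i^{\text{smooth}}$ to form an enhanced reward signal $r_i^{\text{enh}}$, computed as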

$$r_i^{\text{enh}} = (1 - \alpha)\, r_i + \alpha\, r_i^{\text{smooth}}$$

The weighting coefficient $\alpha$ is adaptively adjusted based on the clustering density, confidence, and loss dynamics. A higher weight is assigned to the smoothed reward $r_i^{\text{smooth}}$ in regions of high density and low confidence to mitigate bias introduced by skewed samples. Additionally, an ensemble strategy aggregation mechanism is introduced to improve generalization, whereby policy outputs under multiple perturbation and clustering instances are aggregated through behavior–consistency weighting. This yields a more robust policy estimation and prevents training drift caused by individual perturbations. Mathematically, this smoothing process is analogous to applying a low-pass filter to the reward function, suppressing high-frequency noise while preserving stable local value signals. In reinforcement learning, this reduces the variance of value-error propagation. Theoretically, for perturbed feedback $\tilde{r} = r + \xi$, where $\xi$ is independent and identically distributed with zero mean, the following holds after smoothing:

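$$\mathbb{E}\left[r^{\text{smooth}}\right] = r, \qquad \mathrm{Var}\left(r^{\text{smooth}}\right) \le \mathrm{Var}\left(\tilde{r}\right)$$

where $r^{\text{smooth}}$ is the smoothed reward and $\tilde{r}$ is the perturbed feedback. This result indicates that the smoothed reward remains an unbiased estimator while achieving variance reduction, thereby enhancing the stability of Q-value estimation and driving the policy network toward a superior convergence solution.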
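The smoothing and fusion steps can be sketched as follows. This is a simplified illustration under stated assumptions: neighbours are found by brute-force search over the whole batch rather than inside K-means++ clusters, each sample is excluded from its own neighbour set, the weight uses a squared Euclidean distance, and the names `knn_smooth`, `fuse`, `beta`, and `alpha` are hypothetical. The confidence check and the adaptive adjustment of the fusion weight are omitted.

```python
import math


def knn_smooth(embeddings, rewards, k, beta):
    """Distance-weighted mean reward over each sample's k nearest neighbours."""
    smoothed = []
    for i, e_i in enumerate(embeddings):
        # (distance, index) pairs for every other sample, closest first.
        neighbours = sorted(
            (math.dist(e_i, e_j), j)
            for j, e_j in enumerate(embeddings) if j != i
        )[:k]
        weights = [math.exp(-beta * dist ** 2) for dist, _ in neighbours]
        weighted = sum(w * rewards[j] for w, (_, j) in zip(weights, neighbours))
        smoothed.append(weighted / sum(weights))
    return smoothed


def fuse(raw, smoothed, alpha):
    """Convex combination of raw and smoothed rewards with fixed weight alpha."""
    return [(1 - alpha) * r + alpha * s for r, s in zip(raw, smoothed)]


# Three samples on a line with hypothetical rewards.
embeddings = [[0.0], [1.0], [2.0]]
rewards = [0.0, 10.0, 20.0]
smoothed = knn_smooth(embeddings, rewards, k=2, beta=0.0)
enhanced = fuse(rewards, smoothed, alpha=0.5)
```

Setting `beta=0.0` gives uniform neighbour weights, while larger values concentrate the average on the closest neighbours and therefore smooth less aggressively.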

4. Results and Discussion

4.1. Experimental Setup and Evaluation Metrics

4.1.1. Hardware and Software Platform

In the present study, the choice of hardware platform plays a critical role in the training and execution of DRL models. To ensure efficient training on large-scale datasets, high-performance computing resources were employed. Specifically, multiple servers equipped with NVIDIA Tesla V100 GPUs (manufactured by NVIDIA Corporation, headquartered in Santa Clara, CA, USA) were utilized, offering substantial parallel computing capabilities that significantly accelerate the training of deep learning algorithms. These servers are further supported by the latest Intel Xeon processors (manufactured by Intel Corporation, headquartered in Santa Clara, CA, USA), ensuring stable and efficient CPU–GPU cooperation during data preprocessing, model training, and results analysis. Each server is outfitted with 128 GB of DDR4 RAM (manufactured by Intel Corporation, headquartered in Santa Clara, CA, USA) to accommodate the loading and processing of large volumes of data. In addition, NVMe SSDs (manufactured by Intel Corporation, headquartered in Santa Clara, CA, USA) were used for storage to facilitate fast data reading and writing. This hardware configuration allows the DRL algorithms to iterate rapidly, greatly reducing training time and improving experimental efficiency. On the software side, Python was selected as the primary programming language, combined with the TensorFlow framework, for implementing DRL models. TensorFlow, one of the most widely used deep learning frameworks, supports GPU acceleration and enables efficient training of large-scale neural networks. Its flexibility and scalability allow for the implementation of various models and algorithms. For reinforcement learning environment interaction, the OpenAI Gym platform was employed as the training and testing environment. OpenAI Gym provides a collection of standardized, modular environments suitable for various reinforcement learning algorithms, facilitating seamless integration between agents and environments. 
Additionally, Pandas and NumPy were used for data cleaning, manipulation, and analysis. All experiments were conducted on the Ubuntu 20.04 Linux operating system. This hardware and software setup supports efficient execution and provides a consistent environment for reproducing the experimental results.

4.1.2. Hyperparameter Settings

To ensure generalizability and stability, the dataset was divided using a temporal split strategy to prevent future information leakage inherent in standard random cross-validation schemes. Specifically, data collected from January to September 2023 were used for training, while data from October to December 2023 were reserved for testing. This setup simulates real-world deployment scenarios in which pricing strategies must generalize to future unseen market conditions. In addition, since the four behavior data subsets (i.e., browsing behavior, inventory status, product pricing records, and sales feedback) span different temporal ranges, each subset was treated as an independent experimental task. For each task, training and testing splits were performed within the respective subset’s available time range (e.g., browsing data from January–June were split into training on January–May and testing on June), thereby ensuring consistency in temporal ordering and eliminating the risk of information leakage over time. Several hyperparameters were configured to ensure effective training and convergence of the DRL model. The learning rate was set to 0.001, a value determined through empirical tuning to balance training speed and convergence stability. The discount factor was set to 0.99, reflecting a strong emphasis on long-term rewards and improving the stability and accuracy of pricing policies. The experience replay buffer size was configured to store 100,000 samples to ensure a rich and diverse history of interactions. A batch size of 64 was selected to balance computational efficiency and learning diversity across different states. The neural network architecture adopted in the deep Q-network (DQN) consisted of three fully connected layers, each containing 256 neurons. The ReLU activation function was applied to each layer to enhance the model’s capacity for nonlinear representation. 
The target network was updated every 10,000 steps during training to stabilize learning.
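The temporal split described above can be sketched in a few lines. The record layout (a dictionary with `date` and `price` keys) and the function name `temporal_split` are hypothetical; only the cutoff between September and October 2023 comes from the text.

```python
from datetime import date


def temporal_split(records, cutoff):
    """Records dated before `cutoff` go to train; the rest go to test."""
    train = [r for r in records if r["date"] < cutoff]
    test = [r for r in records if r["date"] >= cutoff]
    return train, test


# Hypothetical daily records spanning 2023.
records = [
    {"date": date(2023, 1, 15), "price": 9.9},
    {"date": date(2023, 9, 30), "price": 10.5},
    {"date": date(2023, 10, 1), "price": 10.2},
    {"date": date(2023, 12, 31), "price": 11.0},
]
train, test = temporal_split(records, cutoff=date(2023, 10, 1))
```

For subsets that cover a shorter range, the same function applies with a different cutoff, for example `date(2023, 6, 1)` for browsing data limited to January through June.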

4.1.3. Evaluation Metrics

The performance of the model was assessed using several key evaluation metrics, including total profit gain, average reward, strategy stability (standard deviation of actions), the coefficient of determination ($R^2$) for the reward–price relationship, the Sharpe ratio, and maximum drawdown:

$$\text{Total Profit Gain} = \frac{P_{\text{final}} - P_{\text{initial}}}{P_{\text{initial}}}$$

$$\text{Average Reward} = \frac{1}{T} \sum_{t=1}^{T} r_t$$

$$\sigma_a = \sqrt{\frac{1}{T} \sum_{t=1}^{T} \left(a_t - \bar{a}\right)^2}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{N} \left(r_i - \hat{r}_i\right)^2}{\sum_{i=1}^{N} \left(r_i - \bar{r}\right)^2}$$

$$\text{Sharpe Ratio} = \frac{\frac{1}{T} \sum_{t=1}^{T} r_t - r_f}{\sigma_r}$$

$$\text{Maximum Drawdown} = \max_{t} \frac{\max_{s \le t} C_s - C_t}{\max_{s \le t} C_s}$$

where $P_{\text{final}}$ represents the total profit obtained by the model at the end of training and $P_{\text{initial}}$ is the total profit at the initial state. The variable $r_t$ denotes the immediate reward at time $t$, $T$ is the total number of training steps, $a_t$ refers to the action taken at time $t$, and $\bar{a}$ is the average of all actions taken. In addition, $r_i$ is the actual reward, $\hat{r}_i$ is the model’s predicted reward, $\bar{r}$ is the mean of the actual rewards, and $N$ is the total number of data points. $r_f$ is the baseline risk-free reward (set to 0 in our experiments for simplicity), and $\sigma_r$ is the standard deviation of the reward sequence. $C_t$ denotes the cumulative profit at time $t$. The total profit gain measures the relative increase in the cumulative profit achieved through the optimized pricing policy compared with the initial profit level. The average reward quantifies the mean reward obtained per decision step, reflecting the effectiveness of the learned strategy throughout the training process. Strategy stability, represented by the standard deviation of actions, evaluates the consistency of the pricing decisions, particularly under changing market conditions. The $R^2$ metric assesses the correlation between the predicted pricing strategies and actual revenue, where higher values indicate a stronger alignment between the model’s pricing outputs and real-world revenue patterns. The Sharpe ratio measures the average excess reward earned per unit of reward volatility, indicating the risk-adjusted efficiency of the pricing strategy throughout the decision-making process. Finally, the maximum drawdown quantifies the worst-case decline from a historical peak in the cumulative profit, reflecting the extent of the short-term loss risk encountered during strategy execution.
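The six metrics can be computed with the following sketch. It assumes the standard textbook definitions: total profit gain as a fraction of the initial profit, population standard deviations, and maximum drawdown measured relative to the running peak (which requires a positive peak). Function names and sample values are hypothetical.

```python
import math


def mean(xs):
    return sum(xs) / len(xs)


def std(xs):
    """Population standard deviation."""
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


def total_profit_gain(p_initial, p_final):
    """Relative increase of final profit over initial profit."""
    return (p_final - p_initial) / p_initial


def r_squared(actual, predicted):
    """Coefficient of determination between actual and predicted rewards."""
    m = mean(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - m) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def sharpe_ratio(rewards, risk_free=0.0):
    """Mean excess reward per unit of reward volatility."""
    return (mean(rewards) - risk_free) / std(rewards)


def max_drawdown(cumulative):
    """Largest relative decline from a running peak of cumulative profit."""
    peak, worst = cumulative[0], 0.0
    for c in cumulative:
        peak = max(peak, c)
        worst = max(worst, (peak - c) / peak)
    return worst


# Hypothetical episode data.
rewards = [1.0, 3.0, 2.0, 4.0]
actions = [10.0, 10.5, 9.5, 10.0]
avg_reward = mean(rewards)   # average reward per decision step
stability = std(actions)     # strategy stability (lower is steadier)
```

Average reward and strategy stability reduce to `mean` and `std`, so they need no dedicated functions.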

4.2. Comparative Experiments and Baselines

In order to evaluate the performance of the proposed method, several baseline models were considered for comparison. These included XGBoost [], DQN [], BERT [], transformer-based prediction market models [], and LSTM-based prediction market models []. Each of these baselines represents a different approach to pricing optimization, enabling a comprehensive comparison across various state-of-the-art techniques. XGBoost (Extreme Gradient Boosting) is a highly effective implementation of gradient-boosted decision trees and is widely used for supervised learning tasks. It has been particularly successful in many machine learning competitions due to its scalability, efficiency, and ability to handle various types of data. The deep Q-network (DQN) is another baseline model used for reinforcement learning tasks, particularly in the context of dynamic pricing. The DQN model approximates the Q-value function using a deep neural network, allowing the model to learn optimal pricing strategies by interacting with the environment. BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained transformer-based model that has achieved significant success in natural language processing tasks. BERT models are able to understand contextual relationships between words in a text by training on large corpora with both left-to-right and right-to-left contexts. The transformer-based prediction market model employs self-attention mechanisms to capture dependencies across various market conditions and consumer behavior. By using multi-head attention layers, the transformer model learns the relationships between market inputs and predictions, allowing it to make more accurate forecasts over long sequences. The LSTM-based prediction market model is another baseline used to model sequential dependencies, especially in time-series data such as sales and pricing history. 
LSTM is well known for handling long-range dependencies by using gating mechanisms that control the flow of information.

4.3. Results and Analysis

4.3.1. Experimental Results of Different Pricing-Strategy Models

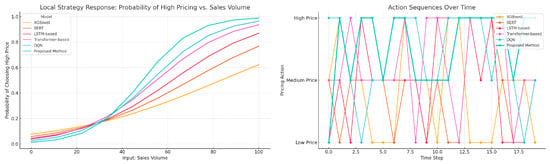

This experiment was designed to evaluate the overall capability of various models to predict pricing strategies under large-scale heterogeneous behavior data. Particular attention was given to their performance in terms of profit growth, behavioral stability, and the consistency of strategy responses, as shown in Table 3 and Figure 4.

Table 3.

Experimental results of different pricing-strategy models.

Figure 4.

Visualization of the learned pricing-policy behavior across different models.

The results indicate that XGBoost demonstrated the weakest performance across all metrics, primarily due to its static modeling approach, which failed to capture the temporal dynamics inherent in complex behavioral feedback. While BERT exhibited some capability in modeling sequential data, its contextual embedding mechanism struggled to generate stable policies in the absence of reinforcement signals. The LSTM-based model performed better in handling continuous time series but still suffered from degradation in capturing long-term dependencies. The transformer-based model significantly improved the efficiency and stability of policy learning through its global attention mechanism. The DQN model, equipped with a reinforcement learning structure, adapted policies dynamically and performed well in terms of average return and behavioral smoothness. The proposed method achieved the best results across all evaluation metrics, which can be attributed to its structural design that accommodates the entire modeling pipeline of multi-source behavior data. From a mathematical perspective, the multi-head attention mechanism of the transformer effectively captured long-range dependencies, the delayed-reward estimation module enhanced sensitivity to future gains, and the sparse-feedback-enhancement mechanism mitigated training bias caused by low-frequency behavioral samples. The strategy-generation process incorporated dynamic exploration and value-constrained mechanisms, theoretically constructing a more stable convergence path toward the optimal solution. As a result, the framework succeeded in simultaneously achieving profit maximization and smooth responses under complex behavioral environments.

4.3.2. Performance Evaluation on Different Subsets of Economic Behavior Data

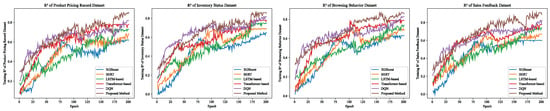

This experiment was designed to evaluate the generalization capability and response stability of different models when applied to various dimensions of economic behavior data in a large-scale setting. By constructing four specialized subsets—product pricing records, sales feedback, browsing behavior, and inventory status—the performance of each model under different types of information structures was assessed to determine its adaptability and prediction effectiveness.

As shown in Tables 4–7 and Figure 5, the proposed method consistently outperformed all baselines across the sub-tasks. It achieved the highest total profit gain and average reward on the sales feedback dataset while maintaining lower action variability and higher correlation scores in the browsing behavior and inventory status datasets, demonstrating strong robustness in handling unstructured behavior data. From a theoretical standpoint, XGBoost, although capable of modeling structured features, lacked the temporal representation and contextual modeling abilities necessary for sequential prediction, resulting in the weakest performance across all subsets. BERT captured semantic relationships to a certain extent, but the inconsistency between its pretraining objectives and temporal dynamics hindered its sequential adaptability. The LSTM-based model benefited from its recurrent architecture for learning local dependencies but suffered from memory decay when modeling long-range behavioral cycles. In contrast, the transformer-based model leveraged its global attention mechanism to model nonlinear dependencies over extended time horizons, resulting in performance improvements. The DQN model constructed an iterative optimization path via reinforcement learning, providing advantages in sparse-feedback environments. The proposed method, which combines multi-head attention structures, delayed-return estimation, and sparse-feedback enhancement, achieved synergistic improvements in representation learning, value estimation, and policy stability, thereby enhancing prediction accuracy and consistency across heterogeneous behavior data subsets.

Table 4.

Prediction results on product pricing records dataset.

Table 5.

Prediction results on sales feedback dataset.

Table 6.

Prediction results on browsing behavior dataset.

Table 7.

Prediction results on inventory status dataset.

Figure 5.

$R^2$ curves of different models.

4.3.3. Ablation Study of Key Modules in the Proposed Method

This experiment was conducted to assess the individual contributions of the key structural components within the overall strategy-generation framework by progressively removing each module.

As shown in Table 8, a continuous improvement across all four metrics was observed as individual components were incrementally restored, demonstrating the synergistic value of each module in modeling complex behavioral patterns under large-scale data conditions. In the absence of the perturbation module, the model’s adaptability to dynamic behavior changes declined significantly. When the profit-backtracking module was removed, the ability to capture long-term rewards was diminished, leading to reduced prediction accuracy and lower cumulative returns. Disabling the strategy stabilizer resulted in a notable increase in policy variance, compromising the consistency of execution. The full model consistently outperformed all ablated configurations, confirming the compounded benefit of combining all modules. From a theoretical perspective, although the backbone structure possessed basic temporal awareness and action-mapping capabilities, it lacked the mechanisms required to effectively address data sparsity and delayed feedback. The perturbation module introduced Gaussian and adversarial noise mechanisms, thereby constructing a localized generalization neighborhood within the input space to enhance policy robustness. The profit-backtracking module employs a multi-step bootstrapped return formulation defined as

$$G_t^{(n)} = \sum_{k=0}^{n-1} \gamma^{k}\, r_{t+k} + \gamma^{n}\, Q\left(s_{t+n}, a_{t+n}\right)$$

where $n$ is the lookahead step (set to in our experiments), $\gamma$ is the discount factor, and $Q$ is the estimated action-value function. This approach allowed the model to improve value-function accuracy, enabling it to optimize policy trajectories under long-term incentive structures. The strategy stabilizer reweighted historical action distributions to impose structural constraints in the output space, reducing volatility and enhancing policy coherence. Together, these modules jointly optimized the convergence trajectory from the input perturbation to value estimation and action generation, achieving the dual objectives of improved stability and increased return from a mathematical standpoint.

Table 8.

Ablation study of key modules in the proposed method.

4.3.4. Cross-Stock Domain Transfer Evaluation

To evaluate the model’s generalization ability across different assets within the same market domain, a domain transfer experiment was conducted. The agent was trained exclusively on AAPL stock data and directly evaluated on MSFT data without any fine-tuning. All features and preprocessing procedures were kept consistent. The evaluation metrics included the total profit gain, average reward, policy stability, correlation, and Sharpe ratio. Table 9 summarizes the performance of various models under this cross-stock generalization scenario.

Table 9.

Cross-stock generalization performance: training on AAPL, testing on MSFT.

The results demonstrate that the proposed method achieved superior cross-stock generalization performance. Despite being trained on AAPL, the model exhibited a high $R^2$ score (0.85) and low action standard deviation (0.42) when deployed on MSFT, indicating consistent policy stability and accurate reward prediction. This supports the model’s capability to generalize across assets within the same market domain without fine-tuning.

4.4. Discussion

4.4.1. Empirical Findings and Behavioral Insights

A series of experiments was conducted to systematically evaluate the proposed model from three perspectives: overall performance, module contributions, and robustness across different subsets of behavior data. The results demonstrated that the proposed framework exhibits strong adaptability, stability, and generalization capabilities under complex economic behavioral scenarios. In real-world applications, platform-level decision-making is often challenged by high-dimensional heterogeneity, delayed feedback, and volatile user behavior. Traditional static or shallow learning models fail to effectively integrate such multi-source, heterogeneous information. The proposed approach, constructed and validated on large-scale daily-granularity behavior data from e-commerce platforms, exhibits practical potential for deployment. In terms of overall performance, the proposed strategy-generation framework significantly outperformed mainstream models such as XGBoost, BERT, LSTM, transformer, and standard DQN across all metrics, including total profit gain, average reward, behavioral output stability, and profit–price curve fitting. In the dataset collected throughout 2023 from jd.com, taobao.com, suning.com, and vip.com, numerous occurrences, such as temporary promotions, unexpected demand surges, and inventory fluctuations, were observed. These patterns require models with strong temporal sensitivity and adaptive policy adjustment capabilities. Unlike traditional methods that tend to respond prematurely or belatedly due to rigid weighting schemes, the proposed model demonstrated the ability to generate stable, trend-aware policy trajectories in the presence of highly imbalanced and volatile economic signals. For instance, in datasets related to holiday promotions for consumer electronics, the proposed method responded more accurately to changes in click behavior and peak historical sales, maintaining continuity and an upward trajectory in profit outcomes.

Among the four behavior data subsets, the model performed best on sales feedback and pricing records data, indicating a particular sensitivity to features exhibiting numerical continuity and strong price–sales coupling. In the sales feedback subset, the model captured periodic patterns and temporal surges in sales, effectively avoiding policy instability at peak or off-season transitions, which is critical for datasets involving cyclical categories such as food and personal care. Although the performance was slightly lower on the browsing behavior and inventory status datasets, the proposed method still outperformed all baseline models. The browsing dataset, characterized by high uncertainty and weak click–conversion correlation, presented a low signal-to-noise ratio. While the model could not fully predict post-click conversion behavior, the combination of perturbation augmentation and policy stabilization successfully suppressed the influence of behavioral outliers. In inventory modeling, the model estimated potential stockout risks by learning from inventory update frequencies and outbound flow patterns, enabling timely policy adjustments. This feature proved particularly valuable in domains prone to stock discontinuities, such as books and home goods. Regarding module contributions, the ablation study confirmed the distinct value of the three key modules in practical scenarios. The perturbation augmentation module introduced Gaussian and adversarial noise to price shifts, sales fluctuations, and inventory interventions, simulating real-world market uncertainty such as competitor price cuts or temporary policy changes. Without this module, the model’s adaptability diminished, leading to increased policy volatility and overreaction to minor behavioral disturbances. The profit-backtracking module was especially effective in desynchronized sequences, in which platform actions such as pre-event advertisements precede actual revenue realization by several days. 
By leveraging multi-step discounted returns, the module allowed the early incorporation of anticipated profits, thus enhancing foresight and buffering capacity in policy generation. The strategy stabilizer played a critical role under high-frequency policy update constraints, as operational systems often limit the number of adjustments per day. This module applied weighted reinforcement from historical behavior to smooth the current policy output, effectively controlling fluctuations and enhancing the operational feasibility and responsiveness of platform strategies.

4.4.2. Computational Complexity Analysis

From the perspective of computational efficiency, the proposed framework maintains a favorable balance between model expressiveness and runtime feasibility. The transformer-based policy-learning module, composed of three stacked encoder layers, introduces a self-attention mechanism with a complexity of $O(T^2 d)$, where $T$ denotes the input sequence length and $d$ is the feature embedding dimension. In our setting, and , which constrains the quadratic growth and allows practical training on modern GPUs. Multi-head attention introduces a constant multiplicative overhead proportional to the number of heads (set to four in our implementation), but parallelization across heads mitigates latency. The reward estimation module adopts a 1D convolutional architecture with a total of two convolutional layers and one pooling operation. Its time complexity is , where k is the kernel size. This design is significantly more efficient than a transformer- or RNN-based critic while still capturing local dependencies through sliding receptive fields. The entire training pipeline runs on a single Tesla V100 GPU (manufactured by NVIDIA Corporation, headquartered in Santa Clara, CA, USA) with approximately 24 min per epoch and peak memory usage below 12 GB, as profiled on the full-scale dataset. Furthermore, the sparse-feedback-enhancement module operates during training only and introduces minimal inference-time overhead. Clustering-based reward smoothing has a complexity of for k-nearest neighbor search, where N is the batch size. However, this process is offloaded to auxiliary GPU streams and does not impede forward or backward propagation.
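As a rough illustration of how these costs scale, the sketch below counts multiply-add operations for the attention products and for a single 1D convolution. The counts are standard back-of-the-envelope estimates rather than profiled figures, and the values of `T`, `d`, the kernel size, and the channel widths are hypothetical placeholders, not the settings used in the experiments.

```python
def attention_cost(seq_len, dim, layers=3):
    """Multiply-adds for the QK^T and AV products over all encoder layers."""
    return layers * 2 * seq_len * seq_len * dim


def conv1d_cost(seq_len, kernel, c_in, c_out):
    """Multiply-adds for one 1D convolution that preserves sequence length."""
    return seq_len * kernel * c_in * c_out


T, d = 30, 64  # hypothetical sequence length and embedding dimension
base = attention_cost(T, d)
doubled = attention_cost(2 * T, d)
ratio = doubled / base  # doubling T quadruples the attention cost
conv = conv1d_cost(T, kernel=3, c_in=d, c_out=32)
```

The quadratic term in the attention cost is why a short temporal window keeps training practical, whereas the convolutional critic grows only linearly with the window length.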

In summary, the overall framework achieves a complexity-efficient configuration that supports deployment in real-time decision-making systems. Compared with other DRL-based pricing models, the proposed approach introduces only moderate computational overhead while yielding consistent gains in stability, generalization, and long-horizon value estimation.

4.5. Limitations and Future Work

Despite the superior performance demonstrated by the proposed strategy-generation and prediction framework across various large-scale behavior data scenarios, several limitations remain that warrant further investigation and refinement. First, the current approach still relies on a minimum amount of historical behavior data to support state modeling and value estimation, which may pose challenges during cold-start phases or in cases involving newly deployed platforms and short-lived product categories. Under such conditions, the stability of early-stage policy training may be insufficient. Second, the model’s training and inference procedures are currently executed within a centralized framework that aggregates data and generates policies in a unified manner. This architecture does not account for data silos and heterogeneity that are common across different platforms. In practical e-commerce environments, significant structural and access discrepancies exist among platforms with respect to data sharing. Future extensions could incorporate federated learning or multi-agent coordination mechanisms to enable cross-platform policy alignment and knowledge sharing without exposing raw data, thereby enhancing deployment flexibility and scalability in real-world settings. Future work will also consider the integration of multimodal information fusion mechanisms. By leveraging graph neural networks or joint language–behavior encoding strategies, diverse modalities—such as textual content, imagery, news feeds, and sequential user behavior—can be modeled collectively. This enhancement is expected to improve the model’s adaptability to complex and dynamic market conditions while increasing its sensitivity to latent strategic signals. Ultimately, such developments would facilitate the construction of an interpretable and practically valuable intelligent optimization system for strategic decision-making.

5. Conclusions

Under the paradigm of data-driven intelligent decision-making, a fundamental challenge has emerged in effectively extracting informative patterns from high-dimensional, heterogeneous, and sparsely labeled economic behavior data and in constructing stable and forward-looking strategy-optimization models. To address the limitations of existing approaches (such as inadequate modeling of long-term returns, high sensitivity to behavioral perturbations, and poor control over policy fluctuations), a DRL framework has been proposed. This framework integrates perturbation-based augmentation, delayed-reward estimation, and policy-stabilization mechanisms for dynamic strategy generation and profit forecasting. The architecture is built upon a transformer backbone, incorporating both policy and value networks. Data diversity is enhanced through the use of perturbation-injected samples, while multi-step return estimation is employed to improve reward perception over extended time horizons. In addition, a behavior-weighted stabilization mechanism is applied to suppress oscillations in policy outputs, thereby improving the model’s responsiveness to complex behavioral dynamics and ensuring output consistency. Extensive experiments were conducted using real-world economic behavior data collected from jd.com, taobao.com, suning.com, and vip.com. The results demonstrated the effectiveness of the proposed method. On the sales feedback subset, the highest total profit gain of 0.37 was achieved, along with an average reward of 4.85, a policy action standard deviation of only 0.37, and a profit-to-price correlation score of . Comparable stability and superior performance were observed on other subsets such as pricing records and inventory status when compared with baseline models. These findings indicate that the proposed framework is capable of effectively handling complex decision-making scenarios characterized by sparse feedback, behavioral heterogeneity, and temporal volatility, thereby offering strong generalization capabilities and significant potential for real-world application.

Author Contributions

Conceptualization, Z.Z., L.Z., X.L., and C.L.; Data curation, S.H.; Formal analysis, J.Z. (Jingxuan Zhang) and Y.P.; Funding acquisition, C.L.; Investigation, J.Z. (Jingxuan Zhang), J.Z. (Jinzhi Zhu), and Y.P.; Methodology, Z.Z., L.Z., and X.L.; Project administration, C.L.; Resources, S.H. and J.Z. (Jinzhi Zhu); Software, Z.Z., L.Z., and X.L.; Supervision, C.L.; Validation, J.Z. (Jingxuan Zhang) and J.Z. (Jinzhi Zhu); Visualization, S.H. and Y.P.; Writing—original draft, Z.Z., L.Z., X.L., S.H., J.Z. (Jingxuan Zhang), J.Z. (Jinzhi Zhu), Y.P., and C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant number 61202479.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.
