Abstract

Interactive multimedia experiences (IMEs) can be a pedagogical resource with strong potential to enhance learning experiences in early childhood. Learning analytics (LA) has become an important tool for understanding more clearly how these multimedia experiences can contribute to young students' learning processes. This article proposes a work route that defines a set of activities and techniques, as well as a flow for their application, taking into consideration the importance of including LA guidelines when designing IMEs for elementary education. The work route's graphical representation is inspired by the foundations of the Essence standard's graphical notation language. The guidelines are grouped into five categories, namely (i) a data analytics dashboard, (ii) student data, (iii) teacher data, (iv) learning activity data, and (v) student progress data. The guidelines were validated through two approaches. The first involved a case study, where the guidelines were applied to an IME called Coco Shapes, which was aimed at transition students at the Colegio La Fontaine in Cali (Colombia), and the second involved the judgments of experts who examined the usefulness and clarity of the guidelines. The results from these approaches provided precise and effective feedback regarding the hypothesis under study. Our findings provide promising evidence of the value of our guidelines, which were included in the design of an IME and enabled more personalized monitoring by teachers when evaluating student learning.

1. Introduction

An interactive multimedia experience (IME) is defined as the means through which a multimedia system delivers the creation of value to stakeholders [1]. This concept considers aspects that are associated with the responsible design of multimedia systems, as well as the interests, needs, motivations, and expectations of the interested parties, as a product of a human-centered design process. We can also define learning analytics (LA) as the field of measurement, analysis, and data reporting about learners and their contexts to understand and improve learning where it occurs [2].

A systematic review of the applications of LA in the design of IMEs has shown a trend toward its use in e-learning-based services [3]. It has also been found that most LA guidelines have been applied in both higher education and elementary education. In IMEs for children, gamification techniques are among the most commonly used strategies for mediating the use of LA [4]. Regarding LA guidelines [5], a trend has been observed toward applying them to design solutions that offer personalized learning experiences to students. For example, some gamification-based IMEs for children have integrated physical and interactive objects with virtual games to promote collaborative work between students [6].

Some authors, such as Lee [7], suggest that artificial intelligence (AI)-powered LA can potentially transform early childhood education and instruction. Although this work does not provide an AI-based LA system, it does address the gap in the use and appropriation of technology-mediated learning experiences in elementary education. Specifically, it proposes a process for including LA in the development of an IME, based on human-centered design and involving stakeholders from the community to which the school belongs.

This effort is motivated by the contribution LA can make to learning experiences that, through the mediation of technologies such as an IME, offer young children an education more personalized to their needs and environment [8]. This contribution is important because, in low-resource communities in countries with emerging economies, access to educational technologies is typically achieved by adopting technologies developed in other countries, with cultures, conditions, and needs different from their own, and in most cases with little adaptation to the real needs of the context [9]. This suggests a limitation in the conditions that educational technology should provide, which, in this case, relate to children's agency [10], interpreted as a “socially situated capacity to act” [11].

Incorporating LA into a development process is challenging because LA can serve various objectives, each of which requires selecting specific data and methods to generate the necessary information. This work addresses that challenge by presenting a work route for including LA in IMEs, which allows designers and developers to select and implement a set of concrete LA guidelines and then provide useful information to different stakeholders. Through our research, we offer a new approach to incorporating LA guidelines when developing IMEs for elementary education in public or private institutions, contributing to the creation of learning experiences in a school context. This approach is presented as an artifact [12], expressed through a work route using the Essence graphical notation language [13].

This study was carried out over 2.5 years and was initially conceived as a flexible and adaptable process that could be used in different communities and contexts. It was based on a method for the pre-production and validation of multimedia systems, applied to develop an IME focused on gamification techniques. The IME aimed to help children aged four to five in elementary education learn English at a school located in a low-income commune in Cali, Colombia [14]. The solution evolved based on experiences with students, teachers, and community members, allowing us to define a set of LA guidelines implemented as an LA dashboard [15]. The guidelines were validated through two approaches. The first involved a case study, where the guidelines were applied to an IME called Coco Shapes that was aimed at transition students at the Colegio La Fontaine in Cali (Colombia). The second involved the judgments of experts who examined the usefulness and clarity of the guidelines.

The work route to incorporate the LA guidelines in IMEs has been tailored for implementation by companies that produce educational technology solutions and content. Additionally, these guidelines are intended for use by technology-support units and information and communication technology (ICT) managers within educational institutions. They also serve as a resource for educators seeking to integrate technology into their instructional and learning strategies.

The structure of this article is as follows. Section 2 provides an overview of the application of LA within the IME framework. Section 3 outlines the materials and methods utilized in the study. Section 4 details the findings and the discussion of the research, while Section 5 offers conclusions and recommendations for future research.

2. Analytics in Interactive Multimedia Experiences

2.1. The Role of IME in Elementary Education

Multimedia and its interactive possibilities are valuable for enriching and improving learning experiences at different levels of schooling [16]. A wide range of multimedia tools is now used in education, a proliferation attributed to the evolution of technologies over the years and to teachers' continuous efforts to improve the delivery of knowledge [17]. The same trend is observed in emerging economies such as Colombia, where, according to the National Administrative Department of Statistics (DANE), access to technological resources has expanded in schools, mainly in the public sector [18]. However, teachers and students still face strong limitations in accessing educational technologies due to the digital gap, as measured by indicators such as the Network Readiness Index (NRI) [19].

Emerging economies often adapt educational solutions based on models and technologies that were developed for the needs of different social, cultural, and economic contexts [9], which restricts the value that can be created for students' learning experiences in early childhood. This opens opportunities to create IMEs designed according to the particular needs of these communities [14]. Using an IME, we present a low-cost solution that can enrich the experiences of children from schools in low-income areas as they learn a second language, such as English.

Throughout this process, we have identified opportunities to improve the learning experiences offered through the IME. We first established the basis of a systemic model for identifying a set of essential variables that enable better management of the data and information used to enrich traceability in these children's learning processes [20]. This effort is consistent with another study that sought to identify the potential of interactive technologies to add value to the learning experiences of basic education students [21].

2.2. Learning Analytics Guidelines

Guided by the results of a systematic review [3], and considering student learning goals, activities, and stakeholders' needs in IME development [5], we formulated 36 guidelines. These guidelines encompass five categories, namely (i) dashboards for academic management, (ii) student profiling, (iii) teaching management, (iv) tracking student–multimedia interactions, and (v) monitoring student performance and progress. The guidelines are aimed at designers, developers, teachers, and other stakeholders in the basic education context to integrate LA into IMEs for elementary learners. These guidelines are detailed for each category in Table 1.

Table 1.

Guidelines for incorporating LA into IME.

2.3. Work Route to Include LA Guidelines into IME

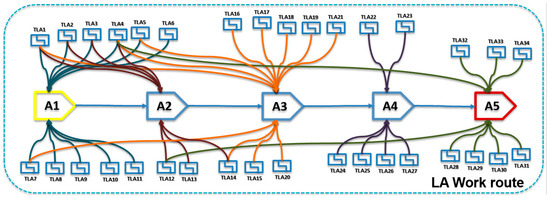

The route proposes a workflow composed of a set of activities and techniques; applying the techniques is how the defined activities are completed. The sequence shown by the flow of activities suggests an order of application, but it should not be confused with a waterfall process; on the contrary, the route should be applied within an iterative process. The graphical representation of both activities and techniques is inspired by the foundations of the Essence standard's graphical notation language [13], with a view to potential future work on designing a practice for incorporating LA guidelines in IMEs. An activity with a yellow border represents the starting point of the route's workflows, while an activity with a red border represents the end of a workflow iteration along the route. Figure 1 shows the work route for including LA guidelines in IMEs in elementary education.

Figure 1.

LA work route.

The process flow begins by defining the purpose of applying LA to an IME with several learning objectives. Second, LA guidelines that align with stakeholder needs are identified. Third, the sources and types of data needed to support LA are defined. Up to this point, iterations can occur between these activities to ensure alignment between the selected guidelines and the needs being studied. Then, the IME is developed and tested for continuous improvement. This iterative flow ensures that the IME effectively integrates LA guidelines.
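The iterative flow just described can be sketched as a small loop, purely as an illustration. The activity labels paraphrase Figure 1, and the two decision hooks (`is_aligned`, `needs_another_iteration`) are hypothetical stand-ins for the team's judgment; they are not part of the route itself.

```python
from typing import Callable, List

# Activity labels paraphrase Figure 1; they are illustrative, not official names.
PLANNING = [
    "define LA purpose and learning objectives",
    "identify LA guidelines aligned with stakeholder needs",
    "define data sources and types",
]
BUILDING = ["develop IME", "test IME"]


def run_work_route(
    is_aligned: Callable[[List[str]], bool],
    needs_another_iteration: Callable[[int], bool],
    max_planning_passes: int = 5,
    max_iterations: int = 5,
) -> List[str]:
    """Return the ordered log of activities carried out along the route.

    is_aligned and needs_another_iteration are hypothetical hooks standing in
    for the team's decisions at the two decision points of the route.
    """
    log: List[str] = []
    for iteration in range(max_iterations):
        # The first three activities may repeat until the selected guidelines
        # match the needs under study (bounded to avoid looping forever).
        for _ in range(max_planning_passes):
            log.extend(PLANNING)
            if is_aligned(log):
                break
        # Developing and testing the IME closes one iteration of the route.
        log.extend(BUILDING)
        if not needs_another_iteration(iteration):
            break
    return log


answers = iter([False, True])  # guidelines aligned on the second planning pass
print(len(run_work_route(lambda _log: next(answers), lambda i: False)))  # 8
```

Bounding both loops keeps the sketch terminating even if alignment is never reached; in practice that decision rests with the stakeholders.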

Table 2 outlines the activities associated with the LA route and the techniques utilized to fulfill the scope of the work. These techniques are grounded in methodologies and frameworks linked to tools commonly employed in Design Thinking [37,38] and Value-Sensitive Design [39], practices based on User-Centered Design for LA [23], and Software Engineering [40,41].

Table 2.

Activities and techniques involved in the LA route.

3. Methods

In order to validate the proposed guidelines, two approaches were used. For the first, we conducted a case study in which the guidelines were applied to an IME for transition students from the La Fontaine School, in Cali (Colombia). In the second, we collected the opinions of experts who examined the usefulness and clarity of the guidelines. These approaches allowed for precise and effective feedback regarding the aspects under evaluation.

3.1. Case Study

3.1.1. Methodology

This research originated from the need to incorporate LA guidelines into the development of IMEs aimed at elementary education in Colombia, which led to the conception of a practice for incorporating LA guidelines as a design artifact for school contexts. The proposal suggested a process based on unifying criteria among various stakeholders. The work route was then evaluated in the school context through a case study and expert judgment. Design science was determined to be the most consistent methodological approach for this research [42].

It is worth highlighting that design science has been widely embraced by the LA community, primarily to validate studies that generate LA models [43], methods [44], frameworks [45], and design principles [46], among other contributions. In this case, the work route proposed for incorporating LA guidelines in the Colombian school context can be defined as a knowledge artifact associated with information technology (IT) [47]. The results reported in these studies suggest that design science is useful for validating approaches related to this proposal. Additionally, it facilitates the reproducibility of the presented research for other researchers.

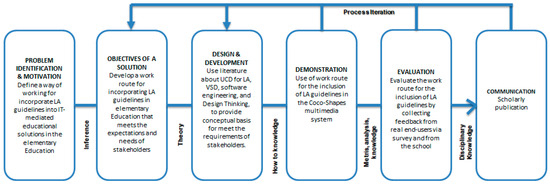

Consistent with the above, this work was conducted in five stages, namely (i) identifying a problem related to the inclusion of LA guidelines in Colombian institutions, (ii) defining the objectives of a solution for incorporating LA in school contexts as an IT artifact, (iii) designing and developing the artifact, (iv) evaluating the work route for the inclusion of LA guidelines through the Coco Shapes multimedia system, and (v) communicating the analysis of the results and the contributions offered by the work route, along with its identified limitations. The research method followed is presented in Figure 2; it adapts the method presented by Peffers et al. [48] to the objective of this research.

Figure 2.

Design science research process for the case study. Adapted from Peffers et al. [48].

For the case study, we formulated the following hypothesis: applying the proposed design guidelines for including LA in the design of an IME allows the teacher to carry out more personalized follow-up when evaluating student learning in elementary education.

3.1.2. Creation of Work Teams

This project was carried out by a core of five researchers, four with a PhD in engineering and one with a PhD in education. These researchers are members of groups accredited by the Ministry of Science and Technology of Colombia, Minciencias (2023). A second group consisted of five stakeholders from the La Fontaine school in Cali (Colombia): three transition teachers and two directors. These two groups made up the work team that participated in the validation of the proposed guidelines.

In the year of the study, there were 42 transition students, all children between four and five years old. The three teachers, who were experts in English, guided the students throughout the process. The school director, who had a degree in foreign languages, also participated.

3.1.3. Coco Shapes

Using the multimedia system pre-production method [1] in the elementary education context, an experience titled ‘Coco Shapes’ was created for transition students at the La Fontaine School in Cali, Colombia. Coco Shapes focuses on teaching the specific topics of colors, shapes, and counting. The initiative was developed with objectives that align with the Basic Learning Rights and address the needs of the school’s stakeholders.

Considering stakeholder needs, the team designed English language learning activities based on student proficiency. These activities, spanning three difficulty levels, covered colors, shapes, and counting. Coco Shapes, deployed on a tablet (see Figure 3), blended physical resources (buttons and shapes) and digital resources for interactive learning. During geometry activities, children inserted shapes into slots; during color activities, they pressed the corresponding buttons; and during counting activities, they selected the correct option on the screen.

Figure 3.

A student using Coco Shapes.

The course teachers could observe the students’ results, which allowed them to evaluate each student’s progress.

3.1.4. Evolution of Coco Shapes

To include elements of LA in Coco Shapes, the team of researchers interviewed teachers at the La Fontaine School to identify the relevant data that would contribute to evaluating student learning.

After the interviews, the audio files were transcribed, and the qualitative information was analyzed. Using the first version of Coco Shapes and analyzing the interviews, we identified opportunities to improve the usability of the experience, with the aim of providing a more fluid interaction to the user. Opportunities to include LA elements were also identified so that teachers could monitor students' learning processes.

Considering the stakeholders’ expectations, we developed a web application that included modules related to student management, teacher management, course management, and reports. The reporting section contained a dashboard that could be used to monitor the student’s learning process and included a set of graphs and information-filtering mechanisms at the interested parties’ request.

3.2. Expert Judgments

The statistical methodology used for this phase of the research included the following steps:

Step 1: We conducted an expert-consensus analysis via a questionnaire to validate the LA guidelines, assessing perceptions of clarity and usefulness across categories, including (i) the data analytics dashboard (LAD), (ii) student (LAS), (iii) teacher (LAT), and (iv) learning activities (LAA). Expert evaluations were then quantitatively analyzed for usefulness and clarity.

Step 2: Descriptive and inferential analyses were conducted after applying the questionnaire. Each category was characterized with summary tables and graphs, and the Mann–Whitney test [49,50] was used to evaluate potentially significant differences in respondents' perceptions of usefulness and clarity. A percentage perception index was developed to synthesize the collected information and facilitate an overall evaluation of the different variables and items presented. This index compiled the data into a quantitative assessment based on the responses provided by each expert participant. The final rating was computed with the following equation [51,52]:

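$$I = \frac{\sum_{i=1}^{E} C_i}{P_{\max}} \times 100$$

where $C_i$ is the rating of item $i$; $E$ is the number of effective responses; and $P_{\max} = E \times 6$ is the maximum possible score (six response categories, each measured on a Likert-type scale from zero to five).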
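As an illustration, the perception index (the sum of the ratings divided by the maximum possible score E*6, expressed on a 0 to 100 scale) can be computed as follows. Treating missing answers (`None`) as non-effective responses is an assumption of this sketch, and the multiplier 6 is taken as stated in the text.

```python
from typing import Optional, Sequence


def perception_index(
    ratings: Sequence[Optional[float]], max_per_response: float = 6
) -> float:
    """Percentage perception index on a 0-100 scale.

    ratings: one rating per response; None marks a missing answer, and only
    non-missing answers count as effective responses (an assumption).
    max_per_response: the text gives the maximum possible score as E*6,
    so 6 is the default multiplier.
    """
    effective = [r for r in ratings if r is not None]
    if not effective:
        raise ValueError("no effective responses")
    max_possible = len(effective) * max_per_response  # E * 6
    return 100.0 * sum(effective) / max_possible


print(perception_index([3, 3]))  # 50.0
print(perception_index([6, None, 6]))  # 100.0
```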

This indicator expresses the general level of perception for each category on a scale from 0 to 100. Descriptive analyses and Mann–Whitney tests were performed using the freely distributed statistical program R version 3.4.2 [53].
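The Mann–Whitney comparison of usefulness and clarity ratings can be sketched in plain Python to show how the test works. This version uses midranks for ties (common with Likert data), the tie-corrected variance, and the normal approximation without continuity correction. It is an illustration, not the authors' R procedure: R's `wilcox.test` computes exact p-values for small samples without ties and applies a continuity correction by default, so its p-values can differ slightly. The sample ratings are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for sample x, two-sided p-value from the normal approximation)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over groups of t tied values.
    counts: dict = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:  # every observation tied: no evidence of a difference
        return u1, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    return u1, math.erfc(abs(z) / math.sqrt(2))


usefulness = [5, 4, 5, 5, 4]  # hypothetical expert ratings
clarity = [4, 4, 3, 5, 4]
print(mann_whitney_u(usefulness, clarity))
```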

4. Results and Discussion

4.1. Case Study Results

4.1.1. Application of Guidelines to Coco Shapes

Using the first version of Coco Shapes, the researchers interviewed school teachers to identify relevant data for evaluating student learning. Analysis of the information collected revealed two kinds of improvement opportunities: improving the usability of the IME so that it provides a more fluid user experience, and including LA elements so that teachers can monitor students' learning processes. Based on the opportunities related to the inclusion of LA, a set of 26 guidelines was selected to develop the second version of Coco Shapes. For the data analytics dashboard, guidelines LAD1, LAD2, LAD3, LAD5, and LAD7 were considered, as described below.

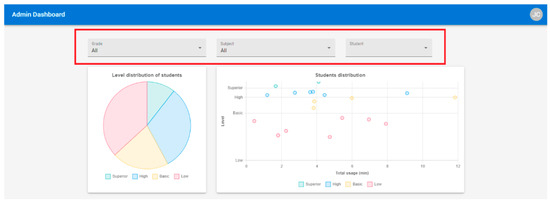

For LAD1, the Coco Shapes web-application dashboard allows filtering by grade, subject, and student, as shown in Figure 4. In this way, teachers can configure the data they want to view according to their needs and focus on the relevant data from large sets of information.

Figure 4.

Filters for configuring the level of detail for the data.
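The grade, subject, and student filters described for LAD1 can be sketched as optional criteria combined with AND. The record shape (`ActivityRecord`) and its field names are hypothetical; the actual Coco Shapes data model is not described in the text.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class ActivityRecord:
    """Hypothetical record of one student's results; field names are illustrative."""
    student: str
    grade: str
    subject: str
    correct: int
    incorrect: int


def filter_records(
    records: Iterable[ActivityRecord],
    grade: Optional[str] = None,
    subject: Optional[str] = None,
    student: Optional[str] = None,
) -> List[ActivityRecord]:
    """Keep records matching every filter that is set; None means 'any value'."""
    return [
        r
        for r in records
        if (grade is None or r.grade == grade)
        and (subject is None or r.subject == subject)
        and (student is None or r.student == student)
    ]
```

Leaving a filter unset widens the view, which matches the idea of letting teachers move from course-level to student-level detail.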

For LAD2, the dashboard offers teachers different types of graphs (such as pie, bar, point, and line graphs) for viewing the distribution of data by student or course, such as the students at a certain level or a student's performance. In graphs that include time, the X-axis represents this variable to facilitate the interpretation of the information.

For LAD3, the dashboard graphs (see Figures 5 and 6) use distinctive colors for each variable so that teachers can quickly identify different sets of data or variables in a graph. Feedback from the teachers confirmed that the graphs were clear and differentiated and that they sped up the interpretation of the information.

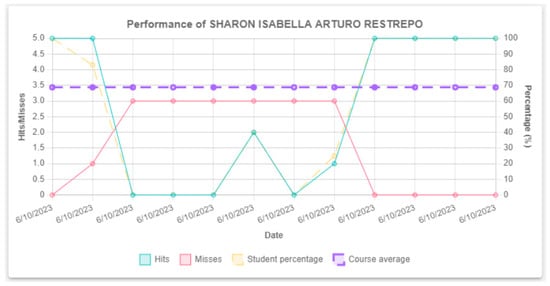

For LAD5, the student performance indicators that are based on the number of successes and failures are represented by lines over time (see Figure 5), and a percentage representing the student’s progress and the course average is presented for the period of system use. With this information, teachers can monitor the student’s performance and compare it with the course average, thus identifying opportunities for improvement in their teaching strategy for students with low performance over time.

Figure 5.

Student performance.
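The performance indicators described for LAD5 (a success percentage over time, a course average, and an overall progress percentage for the period of use) can be sketched from per-session counts of successes and failures. The session tuple layout and the choice to average per session on each date are assumptions of this sketch.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Optional, Tuple

# One row per session: (student, ISO date, correct answers, incorrect answers).
Session = Tuple[str, str, int, int]


def success_pct(correct: int, incorrect: int) -> float:
    attempts = correct + incorrect
    return 100.0 * correct / attempts if attempts else 0.0


def student_series(sessions: List[Session], student: str) -> List[Tuple[str, float]]:
    """(date, success %) points for one student, ordered by date for the X-axis."""
    return sorted((d, success_pct(c, i)) for s, d, c, i in sessions if s == student)


def course_average_series(sessions: List[Session]) -> List[Tuple[str, float]]:
    """Mean of the per-session success % across the course for each date."""
    by_date: Dict[str, List[float]] = defaultdict(list)
    for _, d, c, i in sessions:
        by_date[d].append(success_pct(c, i))
    return sorted((d, mean(v)) for d, v in by_date.items())


def period_progress(sessions: List[Session], student: Optional[str] = None) -> float:
    """Overall success % over the whole period, for one student or the whole course."""
    rows = [r for r in sessions if student is None or r[0] == student]
    return success_pct(sum(r[2] for r in rows), sum(r[3] for r in rows))
```

Plotting `student_series` against `course_average_series` gives the two lines a teacher compares; ISO date strings are used so that plain sorting orders them chronologically.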

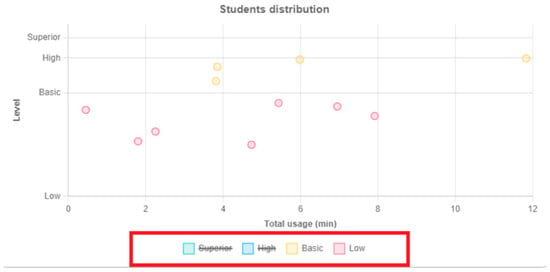

For LAD7, Figure 6 displays the student distribution by level (“Very High”, “High”, “Basic”, “Low”), with selectable user options for data viewing. The institution’s stakeholders define the levels according to its teaching strategy. Aligning with LAD2, time is on the X-axis. Hovering over a point reveals student details, including name, app usage time, and performance percentage for that level.

Figure 6.

Selection of certain values of a variable.
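The level distribution described for LAD7 can be sketched by mapping each student's performance percentage to a level and counting students per level. The numeric cut-offs below are purely hypothetical; in the case study, the levels are defined by the institution's stakeholders.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical cut-offs in descending order; the real levels are set by
# the institution's stakeholders according to its teaching strategy.
LEVELS: List[Tuple[float, str]] = [
    (90.0, "Very High"),
    (75.0, "High"),
    (60.0, "Basic"),
    (0.0, "Low"),
]


def level_for(pct: float, levels: List[Tuple[float, str]] = LEVELS) -> str:
    """Return the first level whose cut-off the percentage reaches."""
    for cutoff, name in levels:
        if pct >= cutoff:
            return name
    return levels[-1][1]  # below every cut-off


def level_distribution(
    pct_by_student: Dict[str, float], levels: List[Tuple[float, str]] = LEVELS
) -> Dict[str, int]:
    """Number of students per level, listing empty levels with a count of 0."""
    counts = Counter(level_for(p, levels) for p in pct_by_student.values())
    return {name: counts.get(name, 0) for _, name in levels}
```

Computing this distribution separately for each date would give the time-based view described for Figure 6.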

For guidelines related to the student, we considered LAS1, LAS2, LAS4, and LAS6, as described below.

For LAS1, the multimedia experience records the student’s name and grade. In the web application, the school director can enter the information manually or upload an Excel file that includes the data for all the students in a course.

For LAS2, the web application included effective information-security practices, such as user sessions and profile management, password encryption, data protection with built-in defaults for access, and end-to-end encryption to guarantee the privacy, integrity, and confidentiality of the user data.
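The text mentions password encryption. A common practice for stored credentials is salted, deliberately slow hashing rather than reversible encryption. The sketch below uses PBKDF2-HMAC-SHA256 from Python's standard library. It is not the Coco Shapes implementation, and the storage format `iterations$salt$hash` and the iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune for the deployment hardware


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Return 'iterations$salt_hex$hash_hex' using a fresh random salt."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return f"{iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    iterations_s, salt_hex, digest_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations_s)
    )
    return hmac.compare_digest(digest.hex(), digest_hex)
```

Storing the iteration count with each hash allows the work factor to be raised later without invalidating existing accounts.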

For LAS4, Coco Shapes features amusement park scenarios that mirror the school's surroundings and students' preferences. The digital content, developed collaboratively, includes Coco, a spectacled bear, reflecting the Colombian context, where spectacled bears live. Coco navigates the park, playing games such as catching geometric shapes, soccer, toppling specific figures, or mixing colors to create secondary hues.

For LAS6, Coco Shapes includes three scenarios that correspond to the student’s interests and context. The first scenario involves a roller coaster for counting activities. The second is a circus for shape activities, and the third consists of fair games for color activities.

Guidelines LAT1, LAT2, and LAT3 were considered regarding the teacher, as described below.

For LAT1, on the web application, the school director can add a teacher by registering a name and email address (minimum required data) and assigning one or more courses to this teacher. Once the information is entered, the teacher receives an email notification and is granted access to the data on the students in these courses.

For LAT2, the directors and teachers of the La Fontaine school participated in developing the learning activities for Coco Shapes, considering the educational methodologies and resources (physical and technological) available.

For LAT3, the teacher has a dashboard that includes the distribution of students by level, a comparison of results by grade, the average time for each course using Coco Shapes, and the students’ performance. With this information, teachers can detect students who may need additional support and dedicate additional time and resources to this group of students.

Guidelines LAA1, LAA2, LAA3, LAA8, LAA9, LAA10, LAA11, LAA12, LAA13, and LAA15 were applied to the learning activities, as described below.

For LAA1, Figure 7 shows an example of the guideline where items 2, 3, and 5 were considered (see Table 1). Based on the needs of the interested parties, the percentage of correct answers and the date on which the user carried out the activities associated with each theme offered by Coco Shapes were chosen.

Figure 7.

Data on activities carried out by students.

For LAA2, Coco Shapes uses a star-based rating system based on the number of hits and misses the user makes. Gamification with stars was chosen in collaboration with teachers to motivate children and turn learning into a playful and fun experience. The stars act as positive reinforcement. Students associate the achievement with a reward by receiving stars for completing activities, reinforcing the desired behavior.
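The text does not specify how hits and misses are converted into stars. The sketch below shows one hypothetical rule: stars proportional to the share of hits, rounded down, with at least one star for any completed attempt as positive reinforcement.

```python
def stars_for(hits: int, misses: int, max_stars: int = 3) -> int:
    """Hypothetical rule: stars proportional to the share of hits (rounded down),
    with at least one star for any completed attempt as positive reinforcement."""
    attempts = hits + misses
    if attempts == 0:
        return 0  # activity not attempted yet
    # Integer arithmetic avoids floating-point edge cases at the boundaries.
    return max(1, hits * max_stars // attempts)
```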

For LAA3, the system records the theme (colors, shapes, or counting), the date, and the time spent on each theme (the last time the student used Coco Shapes), as shown in Figure 7. With this information, teachers can identify topics that are of interest to students or that are complex and use this information as part of their teaching strategy.
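Recording the theme, date, and time spent in the most recent session can be sketched as follows. The log structure (`ThemeSession`) is hypothetical; time spent is derived here from start and end timestamps, which is an assumption about how durations are captured.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, Tuple


@dataclass
class ThemeSession:
    """Hypothetical log entry for one play session on one theme."""
    student: str
    theme: str  # "colors", "shapes" or "counting"
    start: datetime
    end: datetime


def last_session_by_theme(
    sessions: Iterable[ThemeSession], student: str
) -> Dict[str, Tuple[str, float]]:
    """Map each theme to (date, minutes) of the student's most recent session on it."""
    latest: Dict[str, ThemeSession] = {}
    for s in sessions:
        if s.student != student:
            continue
        if s.theme not in latest or s.start > latest[s.theme].start:
            latest[s.theme] = s
    return {
        theme: (s.start.date().isoformat(), (s.end - s.start).total_seconds() / 60)
        for theme, s in latest.items()
    }
```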

For LAA8, the teacher’s dashboard displays student-level distribution, grade-wise results comparison, average course time on Coco Shapes, and student performance (see Figure 5). It also shows topics, dates, and time spent per topic (see Figure 7). Stakeholders deemed this information sufficient for user feedback, identifying strengths and areas for improvement, and evaluating classrooms’ teaching strategies and resources.

For LAA9, the learning activities in Coco Shapes include digital content, such as text, audio, images, and 2D animations. In conjunction with the interested parties, the digital content was aligned with the learning activities, since it contributes to the development of skills such as listening and interpreting the English language for the transition students of the La Fontaine school.

For LAA10, the digital content that supports the learning activities, scenarios, characters, and narrative flow corresponds to the preferences and cultural diversity of the students. Coco Shapes includes eye-catching and intuitive interfaces for students, stimulating interest and curiosity in the multimedia system. It should be noted that it has been left to future work to address the issue of accessibility guidelines for students with visual or hearing limitations.

For LAA11, the teachers decided that, to carry out the figure and color activities, a physical object was required with (i) spaces to insert the geometric figures and (ii) primary and secondary color buttons. In addition, a tablet was used to display the multimedia experience and the counting activities. These elements require the active interaction of students with the contents.

For LAA12, the learning activities included sound effects and music that accompanied the instructions provided to the user. Notably, the school director recorded the instructions played in the learning activities, because hers was a familiar voice to the students and her English pronunciation was excellent. This was done to evoke positive emotions and hold the students' interest.

For LAA13, Coco Shapes’ figure and color activities include a physical object with geometric figure slots and primary/secondary color buttons, mirroring the transition students’ curriculum. Interacting with these objects stimulates tactile and visual senses, enhancing the learning experience by allowing children to touch, manipulate, and explore, thus boosting topic retention and comprehension.

For LAA15, as part of the learning activities of Coco Shapes, students press buttons, insert shapes into well-defined spaces, and select options on a tablet screen, depending on the challenges defined for each theme. This diversity of interaction styles involves children more actively in the multimedia experience. The student’s commitment and attention to the challenge are encouraged by offering several interaction options.

Guidelines LAP1, LAP2, LAP3, and LAP4, related to student progress, were applied as described below.

For LAP1, the number of successes and failures recorded from the student's interaction with Coco Shapes is shown on the dashboard as a line graph of the student's performance over time (see Figure 5), compared with the course average. With this information, teachers can identify long-term trends, patterns, or improvements in student performance.

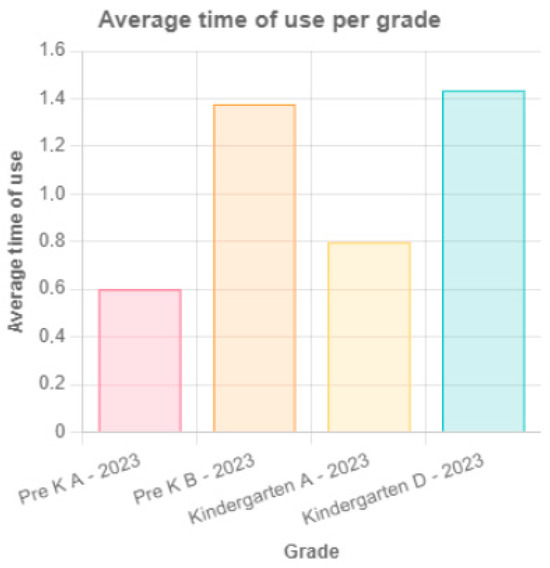

For LAP2, the web app visualizes each course’s average time spent on Coco Shapes based on student usage (see Figure 8), indicating the exposure duration to the second language. This usage time reflects student engagement, allowing teachers to assess interest and participation levels.

Figure 8.

Average usage times for Coco Shapes.
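The average usage time per course described for LAP2 can be sketched from session-level usage rows. The row layout is hypothetical, and the choice to total minutes per student before averaging (so that each student counts once, regardless of how many sessions they played) is an assumption; the text does not state how the average is computed.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def average_usage_by_course(usage: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """usage rows are (course, student, minutes) for individual sessions.

    Minutes are first totalled per student, then averaged over the students
    of each course, so each student counts once regardless of session count.
    """
    per_student: Dict[Tuple[str, str], float] = defaultdict(float)
    for course, student, minutes in usage:
        per_student[(course, student)] += minutes
    by_course: Dict[str, List[float]] = defaultdict(list)
    for (course, _student), total in per_student.items():
        by_course[course].append(total)
    return {course: mean(totals) for course, totals in by_course.items()}
```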

For LAP3, Figure 5 shows an example that includes the number of successes and failures, the student progress as a percentage, and the course average throughout the system's use. The graph follows the LAD5 guideline and allows the student's performance to be displayed and compared with the average results for the course. This allows the teacher to identify opportunities for the improvement of the teaching strategy for students who show low performance over time.

For LAP4, the Coco Shapes web app logs student interactions with learning activities, allowing access to historical data by course. This enables stakeholders to refine pedagogical strategies and compare student cohorts for trend analysis and improvement areas. Teachers can also control course visibility within their school’s assigned course list.

4.1.2. Results

Following the methodology chosen for the case study and taking into account the proposed hypothesis, we presented the updated version of Coco Shapes and its web application to the school’s stakeholders to obtain feedback on the support provided by the data in terms of monitoring students. The presentation was carried out at the school facilities, and the responses of those interested were recorded with their respective authorization.

Tokenization of the text was carried out to analyze the feedback. In this phase, the entire text was decomposed into individual words to facilitate a detailed analysis. A data frame was created to house these tokenized words, allowing for more efficient manipulation and analysis [54]. Data cleaning was then performed to eliminate common words or ‘stopwords’ that do not add substantial meaning based on a predefined list of stopwords in Spanish. In addition, specific numbers and markers were eliminated to improve the data-cleaning process. Data cleaning was further enhanced by removing ‘not available’ (NA) [55] values and empty strings, thus ensuring the quality and relevance of the dataset for analysis.
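The analysis was run in R. The same preprocessing steps (tokenization, stopword removal, removal of numbers, and skipping missing or empty responses) can be sketched in Python for illustration. The stopword set below is a tiny hypothetical subset, not the predefined Spanish list the authors used, and the sample responses are invented.

```python
import re
from typing import Iterable, List, Optional, Set

# Tiny illustrative subset; a real analysis would use a full Spanish stopword list.
SPANISH_STOPWORDS: Set[str] = {
    "el", "la", "los", "las", "de", "que", "y", "en", "un", "una",
    "es", "para", "con", "por", "lo", "se", "muy",
}


def clean_tokens(
    responses: Iterable[Optional[str]], stopwords: Set[str] = SPANISH_STOPWORDS
) -> List[str]:
    """Lower-case word tokens with stopwords and pure numbers removed."""
    tokens: List[str] = []
    for text in responses:
        if not text:  # skip missing (NA) and empty responses
            continue
        # \w is Unicode-aware for str patterns, so accented letters and ñ are kept.
        for word in re.findall(r"\w+", text.lower()):
            if word.isdigit() or word in stopwords:
                continue
            tokens.append(word)
    return tokens


print(clean_tokens(["La aplicación es muy útil", None, "", "Los 3 niños usan la app"]))
```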

Exploratory data analysis then focused on word frequency. The frequency of each word was calculated as a percentage of the total, and only words whose frequency exceeded a threshold (e.g., 1%) were retained so that the analysis focused on the most relevant terms. A bar graph complemented this quantitative analysis by showing the most common words in the stakeholders’ discussion.
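The frequency step can be sketched as follows, again in Python rather than the R used in the study. It assumes that the percentage is computed over all tokens remaining after cleaning and that the threshold is strictly exceeded; the function name is hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def frequent_words(tokens: List[str], threshold_pct: float = 1.0) -> List[Tuple[str, float]]:
    """Return (word, % of all tokens) for words above the threshold, most frequent first."""
    total = len(tokens)
    if total == 0:
        return []
    counts = Counter(tokens)
    rows = [
        (word, 100.0 * n / total)
        for word, n in counts.items()
        if 100.0 * n / total > threshold_pct
    ]
    rows.sort(key=lambda row: (-row[1], row[0]))  # ties broken alphabetically
    return rows
```

The resulting `(word, percentage)` pairs are exactly what a bar graph of the most common words would plot.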

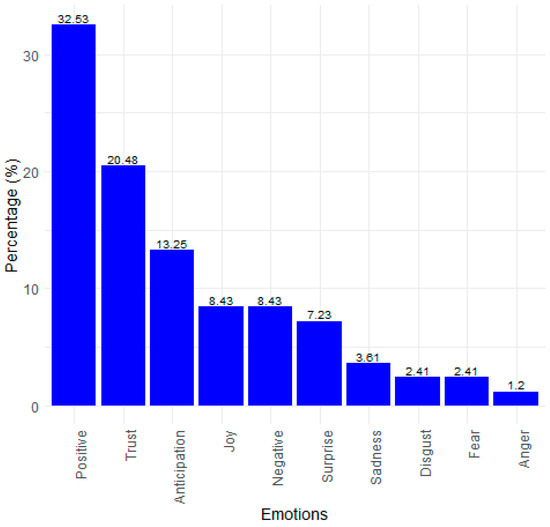

An emotion analysis [56] was also carried out to identify the emotional connotations in the language of the texts. This approach allowed us to determine whether the tone was positive, negative, or neutral and to capture both explicit expressions of emotion and subtler nuances. This lexicon-based technique provides a systematic, automatic evaluation of the attitudes and feelings expressed in a text, offering a deeper and more quantifiable understanding of the interviewees’ perceptions and emotional reactions. The analysis was performed in R version 4.1.1 [53,57].
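The core of a lexicon-based emotion analysis can be sketched in a few lines. The Python sketch below is illustrative only: `TOY_LEXICON` is a hypothetical three-word lexicon in the style of the NRC Emotion Lexicon [56], not the lexicon or R tooling used in the study. It also assumes that each category's share is computed over all lexicon matches, a normalization that is consistent with the percentages in Figure 9 summing to roughly 100%.

```python
from collections import Counter
from typing import Dict, Iterable, Set

# Hypothetical toy lexicon: each word maps to zero or more categories
# (NRC-style: positive, negative, and eight basic emotions).
TOY_LEXICON: Dict[str, Set[str]] = {
    "útil": {"positive", "trust"},
    "confianza": {"positive", "trust"},
    "difícil": {"negative"},
}


def emotion_distribution(tokens: Iterable[str], lexicon: Dict[str, Set[str]]) -> Dict[str, float]:
    """Share (%) of each category among all lexicon matches, rounded to two decimals."""
    counts: Counter = Counter()
    for token in tokens:
        for category in lexicon.get(token, ()):  # words not in the lexicon contribute nothing
            counts[category] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: round(100.0 * n / total, 2) for category, n in counts.items()}
```

Because one word can carry several categories, the shares describe category matches rather than words.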

Figure 9 shows the distribution of the emotion categories. The positive category (32.53%) and trust (20.48%) have the highest values, which suggests that participants view the studied environment, likely the integration of LA into multimedia experiences, favorably and with trust. This prevalence indicates that users see value and security in implementing the proposed guidelines. The considerable share of anticipation (13.25%) suggests that participants accept the guidelines and hold positive expectations for their future implementation, i.e., that the guidelines generate interest. Joy (8.43%) and surprise (7.23%) are less represented, and the presence of sadness (3.61%) points to areas where the guidelines could be adjusted to increase satisfaction and reduce stress or dissatisfaction. The negative category (8.43%) and disgust (2.41%) are low but present, indicating that although the general perception is positive, specific aspects could be improved. Emotion is a crucial component of the learning experience, and the presence of emotions such as trust and positivity suggests that the guidelines are aligned with users’ emotional needs, which is essential for engagement and learning effectiveness. Fear (2.41%) and anger (1.20%) are minimal, which is encouraging, since such emotions can hinder learning and collaboration. In summary, the distribution of emotions in Figure 9 supports the relevance of these categories in the study and suggests that the proposed guidelines are both functional and emotionally resonant with users, which is important for their success and adoption.

Figure 9.

Distribution of emotions.

4.2. Expert Judgments Results

Table 3 summarizes the experts’ opinions on the usefulness and clarity of the guidelines for integrating learning analytics (LA) into multimedia experiences. Usefulness is defined as how well a guideline helps achieve its goal, while clarity measures how easily it can be understood and implemented. The data analytics dashboard (LAD) guidelines received positive feedback, with scores between 67% and 75%, although these values indicate room for improvement. The student-focused guidelines (LAS) scored higher, particularly for clarity, suggesting that the experts considered them well aligned with student needs. The teacher-related guidelines (LAT) were perceived similarly, with scores between 69.63% and 73.33%. The learning activity guidelines (LAA) showed more variability but were generally positive, in line with the trends seen in the other groups. The student progress tracking guidelines (LAP) received favorable feedback consistent with the overall averages. Overall, the average scores across all guidelines were 71.78% for usefulness and 71.72% for clarity, indicating a generally positive reception while highlighting areas for potential improvement.

Table 3.

Experts’ perceptions of the guidelines.

Table 4 compares the usefulness and clarity ratings within each group of guidelines. A nonparametric Mann–Whitney test for the LAD, LAS, LAT, LAA, and LAP categories yielded W statistics of 33.5, 25, 3.5, 120.5, and 4.5, respectively, with corresponding p-values of 0.27, 1.00, 0.83, 0.75, and 0.38. All p-values are above the conventional significance threshold (0.05), so there is insufficient evidence to reject the null hypothesis that perceived clarity and usefulness are equivalent within each group. This consistent pattern suggests that, regardless of the guideline category, no statistically significant difference was detected between how the guidelines are rated for clarity and for usefulness, indicating a balanced perception of both attributes across the educational guidelines analyzed here.
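For readers who want to reproduce this kind of comparison, the sketch below implements a two-sided Mann–Whitney test in plain Python. The half-integer W values reported above imply tied ratings; to our understanding, R's `wilcox.test` then falls back to a normal approximation with tie and continuity corrections, which is what this sketch computes, and its W is the U statistic of the first sample. The function names are hypothetical, and because the raw expert ratings are not given in the text, the usage example uses made-up ratings.

```python
import math
from typing import Dict, List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney test returning (W, p), using the normal approximation
    with tie and continuity corrections; W is the U statistic of sample x."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    w = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    tie_counts: Dict[float, int] = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    diff = w - n1 * n2 / 2
    if sigma == 0 or diff == 0:
        return w, 1.0
    z = (diff - math.copysign(0.5, diff)) / sigma  # continuity correction toward zero
    return w, min(1.0, math.erfc(abs(z) / math.sqrt(2)))  # two-sided normal p-value


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for one guideline group.
    usefulness = [4, 5, 3, 4, 4]
    clarity = [4, 4, 3, 5, 4]
    print(mann_whitney(usefulness, clarity))
```

A p-value above 0.05 from this test means only that no difference was detected; it does not by itself establish that the two ratings are equivalent.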

Table 4.

Comparison of perceptions for each guideline group in regard to usefulness and clarity.

4.3. Discussion

In the case study at La Fontaine School, applying the guidelines contributed to teachers’ assessment of student learning in basic education. This suggests that incorporating LA-based strategies into the design of IMEs can provide a more complete and accurate understanding of student progress.

Based on the validation activities and the state-of-the-art review, we consider continuous LA-based evaluation essential to improving the quality and effectiveness of IMEs in basic education. This supports the notion that constant feedback is necessary for teachers to adjust and enhance their pedagogical practices. The Coco Shapes case study illustrates this point: based on the results presented in the dashboard, the teachers were able to adjust their teaching strategy and consider changes to the classroom activities, and to the complementary didactic resources, used to teach colors, shapes, and counting.

A comprehensive evaluation of the proposed guidelines indicated favorable perceptions among experts regarding usefulness and clarity. Although room for improvement was identified, particularly for guidelines scoring below the 70% threshold, the team’s timely refinement of the wording and expansion of the information proved to be an effective step toward optimizing these resources. The absence of statistically significant differences between perceived usefulness and clarity within each group of guidelines indicates that the guidelines are evaluated consistently across categories. This suggests that the guidelines are well founded and relevant to their users’ needs, providing a solid basis for their implementation and future adaptation in educational contexts.

From an emotional perspective, we observed the predominance of positive emotions and anticipation in the responses of the interested parties, which reflected a general optimism and favorable expectations regarding the use of the IME. A variety of other emotions were also expressed, although to a lesser extent, suggesting that, although positive reactions predominated, there are also nuances in the learning experience that may include challenges and concerns on the part of users.

Together, the quantitative and qualitative data obtained while validating the guidelines provided a comprehensive picture that supports a positive interpretation of the impact of applying them in the design of IMEs. However, the results also highlight the importance of considering and addressing the full range of users’ emotions and reactions to improve the effectiveness and reception of digital learning tools. This holistic understanding is vital for developers and educators who seek to create and optimize educational resources that are both effective and emotionally resonant with their users.

Note that validation with the functional prototype of Coco Shapes was conducted with a small population from La Fontaine School. The project scope, however, offers opportunities to extend the validation to other populations in similar contexts.

5. Conclusions

This article presents 36 LA design guidelines for developing IMEs in education, categorized into five groups: (i) data analytics dashboard, (ii) student data, (iii) teacher data, (iv) learning activity data, and (v) progress/performance/student monitoring. Validation through a case study and expert evaluations supports these guidelines as a solid foundation for integrating LA into multimedia experiences and for facilitating effective analysis of the student learning process.

This work allows us to conclude that integrating LA into IMEs has significant potential to improve learning experiences, since stakeholders (teachers and administrators) could observe various learning situations, such as participation patterns, learning paths, and the time required to complete activities. Furthermore, this study suggests that the use of IMEs in basic education can have a significant impact on student participation and motivation.

Future efforts should focus on expanding the guideline set to cover additional aspects of basic education, such as governance, value-sensitive design, ethical dilemmas, conflict resolution, and system architectures. Developing new metrics or performance indicators could also improve the precision of learning evaluation in this context.

Author Contributions

Conceptualization, A.S. and C.A.P.; methodology, A.S. and C.A.P.; software, J.A.O. and G.M.R.; validation, A.S., C.A.P., J.A.O., and J.A.P.; formal analysis, J.A.O.; investigation, J.A.P., J.A.L.S., and K.O.V.-C.; resources, G.M.R. and F.M.; data curation, H.L.-G., G.M.R. and K.O.V.-C.; writing—original draft preparation, A.S., C.A.P., J.A.O., and H.L.-G.; writing—review and editing, J.A.P., G.M.R., F.M. and K.O.V.-C.; visualization, J.A.L.S. and K.O.V.-C.; supervision, A.S. and C.A.P.; project administration, H.L.-G.; funding acquisition, H.L.-G. and K.O.V.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Vicerrectorado de Investigación Universidad Nacional Pedro Henríquez Ureña, Santo Domingo, República Dominicana; Laboratorio de Tecnologías Interactivas y Experiencia de Usuario, Universidad Autónoma de Zacatecas, México.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board (or Ethics Committee) of La Fontaine School (approved on 9 March 2024) for studies involving humans.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ayala, C.A.P.; Solano, A.; Granollers, T. Proposal to Conceive Multimedia Systems from a Value Creation Perspective and a Collaborative Work Routes Approach. Interact. Des. Arch. 2021, 49, 8–28.

- Siemens, G.; Gasevic, G.; Haythornthwaite, C.; Dawson, S.; Buckingham, S.; Ferguson, R.; Baker, R. Open Learning Analytics: An Integrated & Modularized Platform. Ph.D. Dissertation, Open University Press, Berkshire, UK, 2011.

- Peláez, C.A.; Solano, A.; De La Rosa, E.A.; López, J.A.; Parra, J.A.; Ospina, J.A.; Ramírez, G.M.; Moreira, F. Learning analytics in the design of interactive multimedia experiences for elementary education: A systematic review. In Proceedings of the XXIII International Conference on Human Computer Interaction, Copenhagen, Denmark, 23–28 July 2023; ACM: Lleida, Spain, 2023.

- Cassano, F.; Piccinno, A.; Roselli, T.; Rossano, V. Gamification and learning analytics to improve engagement in university courses. In Methodologies and Intelligent Systems for Technology Enhanced Learning, 8th International Conference 8; Springer International Publishing: Toledo, Spain, 2019; pp. 156–163.

- Srinivasa, K.; Muralidhar, K. A Beginner’s Guide to Learning Analytics; Springer: Cham, Switzerland, 2021.

- Blanco, B.B.; Rodriguez, J.L.T.; López, M.T.; Rubio, J.M. How do preschoolers interact with peers? Characterising child and group behaviour in games with tangible interfaces in school. Int. J. Hum.-Comput. Stud. 2022, 165, 102849.

- Lee, A.V.Y.; Koh, E.; Looi, C.K. AI in education and learning analytics in Singapore: An overview of key projects and initiatives. Inf. Technol. Educ. Learn. 2023, 3, Inv-p001.

- Ferguson, R.; Macfadyen, L.P.; Clow, D.; Tynan, B.; Alexander, S.; Dawson, S. Setting learning analytics in context: Overcoming the barriers to large-scale adoption. J. Learn. Anal. 2014, 1, 120–144.

- Dakduk, S.; Van der Woude, D.; Nieto, C.A. Technological Adoption in Emerging Economies: Insights from Latin America and the Caribbean with a Focus on Low-Income Consumers. In New Topics in Emerging Markets; Bobek, V., Horvat, T., Eds.; IntechOpen: London, UK, 2023.

- Wise, A. Designing pedagogical interventions to support student use of learning analytics. In Proceedings of the Fourth International Conference on Learning Analytics and Knowledge, Indianapolis, IN, USA, 24–28 March 2014; ACM: Kyoto, Japan, 2014; pp. 203–211.

- Manyukhina, Y.; Wise, D. Children’s Agency: What Is It, and What Should Be Done? British Educational Research Association (BERA) Blog, 2021. Available online: https://www.bera.ac.uk/blog/childrens-agency-what-is-it-and-what-should-be-done (accessed on 26 July 2024).

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design science in information systems research. MIS Q. 2004, 28, 75–105.

- Object Management Group. Essence–Kernel and Language for Software Engineering Methods; OMG: Needham, MA, USA, 2018.

- Peláez, C.A.; Solano, A. A Practice for the Design of Interactive Multimedia Experiences Based on Gamification: A Case Study in Elementary Education. Sustainability 2023, 15, 2385.

- Roberts, L.D.; Howell, J.A.; Seaman, K. Give me a customizable dashboard: Personalized learning analytics dashboards in higher education. Technol. Knowl. Learn. 2017, 22, 317–333.

- Zin, M.Z.M.; Sakat, A.A.; Ahmad, N.A.; Bhari, A. Relationship between the multimedia technology and education in improving learning quality. Procedia Soc. Behav. Sci. 2013, 9, 351–355.

- Abdulrahaman, M.D.; Faruk, N.; Oloyede, A.A.; Surajudeen-Bakinde, N.T.; Olawoyin, L.A.; Mejabi, O.V.; Imam-Fulani, Y.O.; Fahm, A.O.; Azeez, A.L. Multimedia tools in the teaching and learning processes: A systematic review. Heliyon 2020, 6, 11.

- Departamento Administrativo Nacional de Estadística—DANE. Educación, Ciencia, Tecnología e Innovación—ECTeI—Reporte Estadístico No.1; DANE: Bogotá, Colombia, 2020.

- Portulans Institute; University of Oxford; Saïd Business School. Network Readiness Index 2022. (Countries Benchmarking the Future of the Network Economy). Available online: https://networkreadinessindex.org/countries/ (accessed on 27 April 2023).

- Parra-Valencia, J.A.; Peláez, C.A.; Solano, A.; López, J.A.; Ospina, J.A. Learning Analytics and Interactive Multimedia Experience in Enhancing Student Learning Experience: A Systemic Approach. In Complex Tasks with Systems Thinking; Qudrat-Ullah, H., Ed.; Springer Nature: Cham, Switzerland, 2023; pp. 151–175.

- Budiarto, F.; Jazuli, A. Interactive Learning Multimedia Improving Learning Motivation Elementary School Students. In Proceedings of the 1st International Conference on Social Sciences (ICONESS 2021), Purwokerto, Indonesia, 19 July 2021; European Alliance for Innovation: Purwokerto, Indonesia, 2021; p. 318.

- Macfadyen, L.P.; Lockyer, L.; Rienties, B. Learning design and learning analytics: Snapshot 2020. J. Learn. Anal. 2020, 7, 6–12.

- Dabbebi, I.; Gilliot, J.; Iksal, S. User centered approach for learning analytics dashboard generation. In Proceedings of the CSEDU 2019: 11th International Conference on Computer Supported Education, Heraklion, Crete, Greece, 2–4 May 2019; pp. 260–267.

- Xin, O.K.; Singh, D. Development of learning analytics dashboard based on model learning management system. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 838–843.

- Khosravi, H.; Shabaninejad, S.; Bakharia, A.; Sadiq, S.; Indulska, M.; Gašević, D. Intelligent Learning Analytics Dashboards: Automated Drill-Down Recommendations to Support Teacher Data Exploration. J. Learn. Anal. 2021, 8, 133–154.

- Ahn, J.; Campos, F.; Hays, M.; Digiacomo, D. Designing in Context: Reaching Beyond Usability in Learning Analytics Dashboard Design. J. Learn. Anal. 2019, 6, 70–85.

- Chicaiza, J.; Cabrera-Loayza, M.; Elizalde, R.; Piedra, N. Application of data anonymization in Learning Analytics. In Proceedings of the 3rd International Conference on Applications of Intelligent Systems, Las Palmas de Gran Canaria, Spain, 7–12 January 2020; pp. 1–6.

- Hubalovsky, S.; Hubalovska, M.; Musilek, M. Assessment of the influence of adaptive E-learning on learning effectiveness of primary school pupils. Comput. Hum. Behav. 2019, 92, 691–705.

- Dimitriadis, Y.; Martínez, R.; Wiley, K. Human-centered design principles for actionable learning analytics. In Research on E-Learning and ICT in Education: Technological, Pedagogical and Instructional Perspectives; Springer: Berlin/Heidelberg, Germany, 2021; pp. 277–296.

- Hallifax, S.; Serna, A.; Marty, J.C.; Lavoué, É. Adaptive gamification in education: A literature review of current trends and developments. In Proceedings of the 14th European Conference on Technology Enhanced Learning, EC-TEL 2019, Delft, The Netherlands, 16–19 September 2019.

- Shah, K.; Ahmed, J.; Shenoy, N.; Srikant, N. How different are students and their learning styles? Int. J. Res. Med. Sci. 2013, 1, 212–215.

- Wald, M. Learning through multimedia: Speech recognition enhancing accessibility and interaction. J. Educ. Multimed. Hypermedia 2008, 17, 215–233.

- Heimgärtner, R. Cultural Differences in Human-Computer Interaction: Towards Culturally Adaptive Human-Machine Interaction; Oldenbourg Wissenschaftsverlag: Munich, Germany, 2012.

- Farah, J.C.; Moro, A.; Bergram, K.; Purohit, A.K.; Gillet, D.; Holzer, A. Bringing computational thinking to non-STEM undergraduates through an integrated notebook application. In Proceedings of the 15th European Conference on Technology Enhanced Learning, Heidelberg, Germany, 14–18 September 2020.

- Naranjo, D.M.; Prieto, J.R.; Moltó, G.; Calatrava, A. A visual dashboard to track learning analytics for educational cloud computing. Sensors 2019, 19, 2952.

- Papamitsiou, Z.; Giannakos, M.N.; Ochoa, X. From childhood to maturity: Are we there yet? Mapping the intellectual progress in learning analytics during the past decade. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 23–27 March 2020; pp. 559–568.

- Kumar, V. 101 Design Methods: A Structured Approach for Driving Innovation in Your Organization; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- IDEO. Human Centered Design Toolkit; IDEO: San Francisco, CA, USA, 2011.

- Friedman, B.; Hendry, D.G.; Borning, A. A survey of value sensitive design methods. Found. Trends® Hum.–Comput. Interact. 2017, 11, 63–125.

- Pressman, R.; Maxim, B. Software Engineering: A Practitioner’s Approach; McGraw-Hill: New York, NY, USA, 2015.

- ISACA. COBIT 5: A Business Framework for the Governance and Management of Enterprise IT; ISACA: Schaumburg, IL, USA, 2012.

- Geerts, G.L. A design science research methodology and its application to accounting information systems research. Int. J. Account. Inf. Syst. 2011, 12, 142–151.

- Freitas, E.; Fonseca, F.; Garcia, V.; Falcão, T.P.; Marques, E.; Gašević, D.; Mello, R.F. MMALA: Developing and Evaluating a Maturity Model for Adopting Learning Analytics. J. Learn. Anal. 2024, 11, 67–86.

- Schmitz, M.; Scheffel, M.; Bemelmans, R.; Drachsler, H. FoLA 2—A Method for Co-Creating Learning Analytics-Supported Learning Design. J. Learn. Anal. 2022, 9, 265–281.

- Law, N.; Liang, L. A multilevel framework and method for learning analytics integrated learning design. J. Learn. Anal. 2020, 7, 98–117.

- Nguyen, A.; Tuunanen, T.; Gardner, L.; Sheridan, D. Design principles for learning analytics information systems in higher education. Eur. J. Inf. Syst. 2020, 30, 541–568.

- Iivari, J. Distinguishing and contrasting two strategies for design science research. Eur. J. Inf. Syst. 2015, 24, 107–115.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A design science research methodology for information systems research. J. Manag. Inf. Syst. 2007, 24, 45–77.

- Hollander, M.; Wolfe, D. Solutions Manual to Accompany: Nonparametric Statistical Methods; Wiley: New York, NY, USA, 1999.

- Wasserman, L. All of Nonparametric Statistics; Springer Science & Business Media: New York, NY, USA, 2006.

- Pérez, E. Imágenes Sobre la Universidad del Valle: Versiones de Diversos Sectores Sociales. Estudio Regional y Nacional; Universidad del Valle: Cali, Colombia, 2011.

- Ho, G.W. Examining perceptions and attitudes: A review of Likert-type scales versus Q-methodology. West. J. Nurs. Res. 2017, 39, 674–689.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2022.

- Jockers, M.L.; Thalken, R. Text Analysis with R; Springer International Publishing: Cham, Switzerland, 2020.

- Little, R.J.; Rubin, D.B. Statistical Analysis with Missing Data; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Mohammad, S.; Turney, P. Emotions evoked by common words and phrases: Using mechanical turk to create an emotion lexicon. In Proceedings of the NAACL HLT 2010 Workshop on Computational Approaches to Analysis and Generation of Emotion in Text, Los Angeles, CA, USA, 5 June 2010; pp. 26–34.

- Field, A.; Miles, J.; Field, Z. Discovering Statistics Using R; W. Ross MacDonald School Resource Services Library: Brantford, ON, Canada, 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).