Abstract

Mask-face detection has been a significant task since the outbreak of the COVID-19 pandemic in early 2020. While various reviews on mask-face detection techniques up to 2021 are available, little has been reviewed on the distinction between two-class (i.e., wearing mask and without mask) and three-class masking, which includes an additional incorrect-mask-wearing class. Moreover, no formal review has been conducted on the techniques of implementing mask detection models in hardware systems or mobile devices. The objectives of this paper are three-fold: first, to provide an up-to-date review of recent mask-face detection research in both two-class and three-class cases; second, to fill the gap left by existing reviews by providing a formal review of mask-face detection hardware systems; and third, to propose a new framework named Out-of-distribution Mask (OOD-Mask) that performs the three-class detection task using only two-class training data. This is achieved by treating the incorrect-mask-wearing scenario as an anomaly, leading to reasonable performance in the absence of training data of the third class.

1. Introduction

COVID-19 has posed great challenges to the public globally since the first case of an unknown pneumonia was detected in humans in December 2019. On 30 January 2020, the World Health Organization (WHO) declared the COVID-19 epidemic a public health emergency of international concern (PHEIC). In March 2020, the WHO further announced that the outbreak of COVID-19 constituted a global pandemic. By 10 July 2022, 561 million cases of COVID-19 had been confirmed, including over 6 million deaths, according to the WHO’s report [1]. During the continuing outbreak, various variants such as Delta and Omicron emerged, which led to an explosive increase in confirmed cases and required effective epidemic prevention and control measures. With the rise of the new and more transmissible sub-variants BA.4 and BA.5 [2], the COVID-19 pandemic seems far from over.

Wearing face masks is an important measure to prevent the transmission of diseases among people. Hence, face mask wearing in public places is still encouraged or even mandatory [3]. To ensure the effectiveness of face masks, according to the guidelines of WHO, one should wear face masks correctly (i.e., making sure the nose, mouth and chin are all covered). Therefore, some countries in the epidemic established monitoring systems to guide people to put on face masks properly in public places, such as public transportation, shopping malls, hospitals, etc. Meanwhile, many researchers have worked on effective face mask detection methods and hardware systems.

Given the large number of papers dealing with face-mask detection methods and hardware systems, it is natural to take stock and review these papers so that the more efficient methods and hardware systems can be adopted in practical use. While several review papers on this topic have been published in the past years [4,5,6,7], a common limitation is that they all concentrate mainly on the methodologies and software developed, without reviewing face-mask detection hardware systems properly and systematically. Moreover, while incorrect mask-wearing has been touched upon by some of the review papers [4,5], no in-depth review is available as yet. Against this background, in this paper, we review face-mask detection methods by singling out both the two-class and three-class cases, where two-class refers to wearing a mask and without mask, and three-class has an extra class of incorrect mask-wearing. The main gap between the two cases is analyzed. Moreover, we specifically review papers on face-mask detection hardware systems. In addition, a new framework is introduced to handle the incorrect mask-wearing detection task in the absence of training data of the third class. The main contributions of the study are as follows:

- We present an up-to-date review of recent face-mask detection methodologies in both two-class and three-class cases and investigate the gap in techniques between them.

- We provide an additional review and analysis on mask-face detection hardware systems. Various techniques of implementing mask-face detection models into portable systems are also considered.

- We develop a new Out-of-distribution-Mask (OOD-Mask) detection framework, which can perform three-class mask-face detection with only two-class data of wearing a mask and without mask.

The outline of the remainder of this paper is as follows: We first explain our review strategy in Section 2. Then, we make a brief summary in Section 3 of commonly used evaluation criteria and previous review papers on mask-face detection methodology in recent years. Following that, we provide a review of face-mask detection methodologies in terms of both two-class and three-class features in Section 4, as well as a review of face-mask detection hardware systems in Section 5. In Section 6, we introduce the new OOD-Mask detection framework, including its implementations and experiments. Finally, we conclude our work and present several research directions for future works for face-mask detection in Section 7.

2. Reviewing Methods

In this study, the review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. We divided the filtering process into the following several phases.

2.1. Searching Strategy

We first clarified our research objectives of an up-to-date review for two-class and three-class mask-face detection models and a review for mask-face detection systems. Therefore, we explored online electronic databases including Google Scholar, IEEE Xplore Digital Library, Web of Science, and Springer Link with the following Boolean search strings:

- ((“face mask” OR “mask face”) AND (“detection” OR “detection model”)).

- ((“face mask” OR “mask face”) AND (“detection system” OR “detection hardware” OR “detection hardware system”)).
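The two search strings above can be read as simple predicates over a record's title or abstract. The following is a minimal sketch of that reading, together with title-based removal of duplicates returned by both queries. The function names, the record layout and the example titles are hypothetical, and the actual databases apply their own query semantics.

```python
import re

SUBJECT = ("face mask", "mask face")
MODEL_TERMS = ("detection", "detection model")
SYSTEM_TERMS = ("detection system", "detection hardware", "detection hardware system")

def matches_any(text, phrases):
    """Return True if any phrase occurs in the text (case-insensitive)."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in phrases)

def model_query(text):
    """First search string: subject AND detection (model)."""
    return matches_any(text, SUBJECT) and matches_any(text, MODEL_TERMS)

def system_query(text):
    """Second search string: subject AND detection system/hardware."""
    return matches_any(text, SUBJECT) and matches_any(text, SYSTEM_TERMS)

def normalize_title(title):
    """Lower-case the title and collapse punctuation so near-identical titles match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def deduplicate(records):
    """Keep the first record for each normalized title."""
    seen, unique = set(), []
    for record in records:
        key = normalize_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

# Hypothetical records, for illustration only.
records = [
    {"title": "Face Mask Detection with CNNs"},
    {"title": "Face mask detection system on Raspberry Pi"},
    {"title": "face mask detection with CNNs."},
    {"title": "Object tracking in video"},
]
hits = [r for r in records if model_query(r["title"]) or system_query(r["title"])]
unique_hits = deduplicate(hits)
```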

2.2. Study Selection

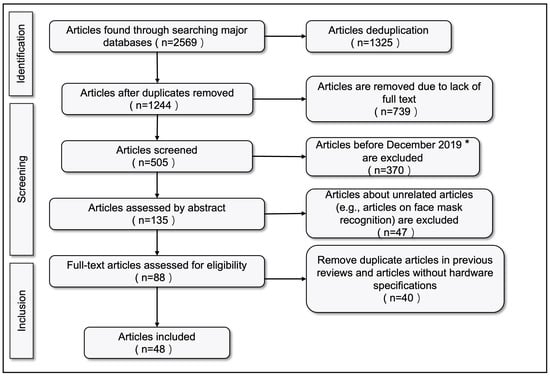

We collected a total of 2569 articles from the searched databases, as shown in Figure 1. We first removed duplicate articles returned by the different Boolean search strings. Then, we manually screened the remaining articles by title and abstract.

Figure 1.

PRISMA diagram for two-class mask-face detection, three-class detection and mask-face hardware systems. * December 2019 refers to the time of the COVID-19 outbreak.

2.3. Eligibility Check

After the relevance selection, we further evaluated the eligibility of the remaining 135 articles. For the up-to-date reviews of mask-face detection models, we excluded articles already covered by previous reviews [4,5]. For the review of mask-face detection hardware systems, we excluded articles without model descriptions or hardware specifications.

Consequently, we retained 48 articles for our review purposes in this study. These 48 articles consist of two parts. First, 32 represent the latest research on mask-face detection models which are not mentioned in previous reviews and are analyzed in Section 4 to show the state-of-the-art methods for improving mask-face detection performance. The other 16 articles covered most mask-face detection systems based on various research angles according to the review in Section 5. Thus, the articles we collected are sufficient to achieve our review aims.

2.4. Review Structure

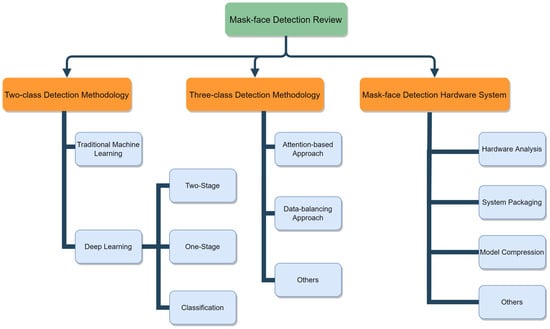

Additionally, to make our review clearer and easier to follow, we visualized the overall review structure in Figure 2. Finer classification details are provided and explained in the corresponding sections.

Figure 2.

The overall mask-face detection review structure.

3. Related Works

3.1. Major Evaluation Metrics

Because the approaches taken in the literature have different characteristics, studies on mask-face detection use a variety of indicators to evaluate performance. In this section, we introduce the evaluation metrics used most frequently in the research we investigated, together with the corresponding formulas.

We first provide the definitions of the required statistics. True Positive (TP) indicates that a positive sample is correctly predicted as positive. False Negative (FN) refers to a positive sample being incorrectly predicted as negative. True Negative (TN) refers to a negative sample being correctly classified as negative. Finally, False Positive (FP) refers to a negative sample being incorrectly classified as positive.

Recall, given in Equation (1), indicates the proportion of positive samples that are correctly detected. Precision, given in Equation (2), is the proportion of samples predicted as positive that are actually positive. Because precision and recall often trade off against each other, improving one can degrade the other. Thus, the F1-score, the harmonic mean of the two, is used to combine them, as expressed in Equation (3).
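Equations (1) to (3) are not reproduced in this text. The standard definitions, which match the descriptions above, are given here as a reference sketch; the numbering is assumed to follow the original.

```latex
\begin{align}
\text{Recall} &= \frac{TP}{TP + FN} \tag{1}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{2}\\
F_1 &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}
\end{align}
```

For example, with TP = 90, FN = 10 and FP = 30, recall is 0.90, precision is 0.75, and the F1-score is approximately 0.82.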

The True Positive Rate (TPR) has the same definition as recall in Equation (1). The False Positive Rate (FPR), shown in Equation (4), is the proportion of negative samples that are wrongly predicted as positive with respect to all negative samples. TPR and FPR are often used as a pair of evaluation indicators. Accuracy, expressed in Equation (5), is the most common indicator and gives the proportion of correctly classified samples with respect to all samples. In general, a higher accuracy suggests better classification performance.
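As a minimal sketch, the classification metrics described in this section can be computed directly from the four confusion counts. The function name and the convention of returning 0.0 for an empty denominator are assumptions made for illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute recall (TPR), precision, F1-score, FPR and accuracy from confusion counts."""

    def ratio(numerator, denominator):
        # Assumed convention: an undefined ratio is reported as 0.0.
        return numerator / denominator if denominator else 0.0

    recall = ratio(tp, tp + fn)
    precision = ratio(tp, tp + fp)
    f1 = ratio(2 * precision * recall, precision + recall)
    fpr = ratio(fp, fp + tn)
    accuracy = ratio(tp + tn, tp + fp + tn + fn)
    return {
        "recall": recall,
        "precision": precision,
        "f1": f1,
        "fpr": fpr,
        "accuracy": accuracy,
    }

# Illustrative counts only, not taken from any reviewed study.
metrics = classification_metrics(tp=90, fp=30, tn=870, fn=10)
```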

To measure the degree of overlap between the predicted bounding box and the ground truth, the Intersection over Union (IoU) is used, as shown in Equation (6). A prediction is counted as correct when its IoU with the ground truth exceeds a chosen threshold, and varying the detection confidence threshold then yields a corresponding set of recall and precision values. The Average Precision (AP), expressed in Equation (7), is the area under the resulting precision-recall curve. The mean Average Precision (mAP) is calculated by averaging the AP values of all N categories, as defined in Equation (8).
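The detection metrics can be sketched as follows, assuming boxes in `(x1, y1, x2, y2)` format and detections that have already been matched to the ground truth at a fixed IoU threshold. AP is computed here as the area under the precision-recall curve with a monotone precision envelope, which is one common convention; individual benchmarks may interpolate differently. All function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

def average_precision(scored_hits, num_ground_truth):
    """AP for one class from (confidence, is_true_positive) pairs."""
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(scored_hits, key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing in recall (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the stepwise precision-recall curve.
    area, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        area += (recall - previous_recall) * precision
        previous_recall = recall
    return area

def mean_average_precision(ap_per_class):
    """mAP as the plain mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

# Illustrative values only.
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)
```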

3.2. Summary of Previous Review Papers on Mask-Face Detection Methodologies

Research on mask-face detection in recent years has varied widely in datasets, model types, evaluation criteria and applied systems. This motivated several researchers to review and summarize the different approaches in order to establish reliable guidelines for future development. In this section, we discuss and summarize these previous literature reviews on mask-face detection.

Since mask-face detection is an application of object detection algorithms, Nowrin et al. [4] first divided mask-face detection models into traditional machine learning-based algorithms and deep learning-based algorithms, then divided each category further. Traditional methods were divided into Viola–Jones detectors [8], HOG-based detectors, etc. Methods based on deep learning were classified as CNN, GAN and other deep neural networks. Additionally, the review stressed the importance of preserving image resolution during detection and the scarcity of diverse datasets, and identified issues for future improvement such as the diversity of datasets and mask types, different mask-wearing conditions, and the reconstruction of masked faces.

In the review provided in [5], the authors comprehensively introduced state-of-the-art neural network models and datasets used in mask-face detection and evaluated their prediction accuracy. They also detailed the shortcomings of different models. In conclusion, this review proposed future research directions for detecting mask-wearing conditions in real scenarios; highlighted the demand for a shared mask database to develop a high-accuracy, real-time automatic mask-face detection system; and proposed applying a wider range of deeper deep learning architectures with more trainable parameters to the mask-face detection task, such as Mask R-CNN [9], Inception-v4 [10], Xception [11], etc.

In another study [6], face-mask detection models were categorized into one-stage detection, two-stage detection, and feature extraction. The study summarized and analyzed real-time mask-face detection technologies and compared the advantages and challenges of different structures. After reviewing all the research, it was found that the utilization rate of deep learning surged after the COVID-19 outbreak. In addition, the review proposed that the quality of datasets can be improved by removing images with insufficient light, and that detection models can be combined with systems that detect whether a sufficient physical distance is maintained between people.

In [7], Ali et al. introduced several techniques of mask-face detection and briefly analyzed their drawbacks according to multi-stage and single-stage detection. They divided multi-stage detection models into CNN, R-CNN [12], Fast R-CNN [13], Faster R-CNN [14], and Mask R-CNN [9]. Similarly, YOLO [15] was introduced as a single-stage detector. However, the review mainly discussed the architectures of general object detection models, rather than focusing on the characteristics of mask-face detection itself.

In the above literature reviews, the researchers all first treated face-mask detection as a general object detection task. By collecting the reported datasets and classifying and analyzing different model architectures, these studies put forward multiple prospects for improving mask-face detection models during the ongoing epidemic. Nevertheless, these studies shared the limitation of paying little attention to distinguishing the mask-face categories and failing to focus on the techniques of detecting the third class of incorrect mask-wearing. Moreover, none of the previous reviews thoroughly explored the application of mask-face detection models to hardware systems.

To fill the gaps left by the above mentioned reviews, we divide the research in mask-face detection into two-class and three-class reviews in the next section and provide another mask-face detection hardware system review in Section 5. In each review, more detailed categorizations are made according to the corresponding criteria.

4. Review of Mask-Face Detection Methodologies

In this section, we summarize various studies focused on mask-face detection published in recent years. We first collate commonly used datasets for mask-face detection in Table 1.

Table 1.

Common datasets for mask-face detection.

4.1. Two-Class Mask-Face Detection Methodologies

Due to the difficulty of collecting and labeling the class of incorrect mask-wearing, the majority of research has focused on two-class (i.e., wearing mask and without mask) mask-face detection. To achieve real-time mask detection during the epidemic, most studies used the popular deep learning methods, while a small number used traditional machine learning methods to extract and classify object features. In this part, we review research on mask-face detection based on both traditional machine learning and deep learning methods. Table 2 summarizes and classifies the related research works.

Table 2.

Summary of 2-class mask-face detection methodologies.

4.1.1. Traditional Machine Learning Based Approaches

Before deep learning methods became popular, researchers tended to use traditional machine learning for feature extraction. The location and extent of the region of interest are determined by sliding candidate windows of different sizes across the entire image. Feature extraction algorithms such as Haar and Histogram of Oriented Gradients (HOG) are then applied to each candidate region, and the resulting features are classified.
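The sliding-window procedure can be illustrated with a small, dependency-free sketch. The feature below is a single gradient-orientation histogram per window, a deliberately simplified stand-in for HOG (real HOG additionally uses cells and block normalization). The function names, window size and stride are assumptions chosen for illustration.

```python
import math

def sliding_windows(image, win_h, win_w, stride):
    """Yield (top, left, patch) for every window position in a 2D grayscale image."""
    height, width = len(image), len(image[0])
    for top in range(0, height - win_h + 1, stride):
        for left in range(0, width - win_w + 1, stride):
            patch = [row[left:left + win_w] for row in image[top:top + win_h]]
            yield top, left, patch

def orientation_histogram(patch, bins=9):
    """Histogram of unsigned gradient orientations, weighted by gradient magnitude."""
    hist = [0.0] * bins
    rows, cols = len(patch), len(patch[0])
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = patch[y][x + 1] - patch[y][x - 1]  # horizontal central difference
            gy = patch[y + 1][x] - patch[y - 1][x]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            index = min(int(angle / (180.0 / bins)), bins - 1)
            hist[index] += magnitude
    norm = math.sqrt(sum(value * value for value in hist))
    return [value / norm for value in hist] if norm > 0 else hist

# Synthetic 8x8 image whose intensity increases from left to right.
image = [[float(x) for x in range(8)] for _ in range(8)]
windows = list(sliding_windows(image, win_h=4, win_w=4, stride=2))
features = [orientation_histogram(patch) for _, _, patch in windows]
```

Each feature vector would then be passed to a classifier; repeating the scan with several window sizes covers objects at different scales.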

In the study of Dewantara et al. [25], feature-based adaptive boosting and cascade classifiers were trained on Haar-like features, Local Binary Pattern (LBP), and HOG features, respectively, to perform classification. Because Haar-like features focused more on facial contours (nose and mouth), their accuracy reached 86.9%, surpassing the 81.7% accuracy of LBP and the 74.5% of HOG.

4.1.2. Deep Learning Based Approaches

In the field of machine learning, deep learning-based methods have achieved great popularity in recent years. Deep learning builds multi-layer neural networks, loosely inspired by the human brain, to analyze and learn from data such as images, sounds, and texts. Many architectures have been proposed for object detection with the development of deep learning. As a branch of object detection, mask-face detection also includes the processes of target detection, feature extraction, classification, etc. According to how object detection is implemented, mask-face detection models fall into two categories: two-stage and one-stage detection methods. In addition, some studies only performed classification using convolutional neural networks and did not predict any location information. Therefore, we divide deep learning methods into the following three parts: two-stage detection, one-stage detection and classification.

A. Two-stage detectors

Traditional machine learning models rely on hand-crafted features, which demand considerable manual effort and cover the relevant features incompletely. Two-stage detectors instead first generate a series of candidate boxes, for example with the Region Proposal Network (RPN) [14], and then classify and refine them to obtain more accurate detections. The main networks include the R-CNN series [13,14,51], etc.

Sethi et al. [23] proposed a novel two-stage detector that also possessed the advantages of one-stage detectors. The model achieved a low inference time and high accuracy of 98.2%. In addition, adding affine transformation could more accurately locate faces in different poses so as to identify people who are not wearing masks and retrieve their identity information for registration. This method was helpful for shopping malls, schools, and other public places to check whether masks are worn through surveillance video.

B. One-stage detectors

While two-stage detectors offer high accuracy, more research works tend to use one-stage detectors, which detect and extract object features in pictures or videos with higher efficiency and thus help achieve real-time mask-face detection. One-stage detection methods mainly include the You Only Look Once (YOLO) series [15,35,52], the Single Shot Detector (SSD) [33], etc. They directly regress the category probabilities and position coordinates of objects in a single pass through the model. Such algorithms provide faster detection, but their accuracy may be lower than that of two-stage detection networks.

In the work of Guo et al. [37], in order to solve the problem of the low accuracy of mask-wearing detection under dim light conditions, a method of introducing an attention mechanism into the YOLOv5 network for mask-wearing detection was proposed. By using CIOU Loss [53] to calculate the localization loss of the target frame, it not only considered the overlapping area of the predicted frame and the real frame but also accounted for the distance between the center points of the two and the aspect ratio of the two. At the same time, a convolutional block attention module (CBAM) [54] was added to the YOLOv5 network, which multiplied the input feature map and the attention map for adaptive feature optimization, highlighting the main features and suppressing irrelevant ones. The accuracy of this model reached 92% in the detection of mask-wearing under dim light conditions.
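To make the three terms of the CIoU loss concrete, the following sketch computes it for a single pair of boxes in `(x1, y1, x2, y2)` format, using the commonly cited formulation: one minus IoU, plus the squared center distance normalized by the squared diagonal of the smallest enclosing box, plus a weighted aspect-ratio term. The function name and the `eps` stabilizer are assumptions, and this is not the implementation used in [37].

```python
import math

def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes (x1, y1, x2, y2); eps avoids division by zero."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Overlap term: plain IoU.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    intersection = inter_w * inter_h
    union = pw * ph + tw * th - intersection
    iou = intersection / (union + eps)

    # Distance term: squared center distance over squared enclosing-box diagonal.
    center_dist_sq = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 \
        + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    enclose_w = max(px2, tx2) - min(px1, tx1)
    enclose_h = max(py2, ty2) - min(py1, ty1)
    diagonal_sq = enclose_w ** 2 + enclose_h ** 2 + eps

    # Aspect-ratio term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - iou + center_dist_sq / diagonal_sq + alpha * v

target_box = (0.0, 0.0, 2.0, 2.0)
near_loss = ciou_loss((1.0, 0.0, 3.0, 2.0), target_box)  # partially overlapping
far_loss = ciou_loss((4.0, 0.0, 6.0, 2.0), target_box)   # no overlap
```

Unlike a plain IoU loss, which is identical for all non-overlapping boxes, the distance term still distinguishes a nearby miss from a distant one.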

C. Classification

In some studies, the input images were preprocessed with methods such as enlarging, cropping, etc., to maintain only the focused areas for classification. They only focused on classification architectures, leaving out the detection component.

In order to improve the accuracy of mask-face classification, Pranjali et al. [48] proposed focusing on the uncovered parts of faces and extracted the most informative features of these regions with a CNN. They compared different classification models, namely SSDMNV2, LeNet-5, AlexNet [30], VGG-16 [44] and ResNet-50 [28]. As a result, their system achieved 98.7% accuracy.

In [46], Habib et al. first performed data augmentation (flipping, rotation, etc.) on the following three datasets to expand the size of the training data: Face Mask Detection (FMD) [21], Face Mask (FM) [55], and Real-World Mask Face Recognition (RMFR). After that, they proposed a model based on the MobileNetV2 [32] architecture, followed by autoencoders, which were used to transform the high-dimensional output feature vector into low dimensions. Finally, they performed ablation studies comparing their proposed model with VGG16, EfficientNet [56] and other well-known CNN models. Their model achieved a precision of 99.696%, recall of 99.97%, F1-score of 99.97%, and accuracy of 99.98%. Furthermore, the lightweight architecture of the proposed model achieved 199.01 FPS on a GPU, 44.06 FPS on a CPU, and 18.07 FPS on the resource-constrained Raspberry Pi.

Besides the two-class mask-face detection methods, some researchers paid attention to the issue of incorrect mask-wearing, which plays a critical role in protection against infection during the epidemic. Therefore, in the next section, we summarize studies on three-class mask-face detection models, which cover wearing mask, without mask, and incorrect mask-wearing.

4.2. Three-Class Mask-Face Detection Methodologies

The main defect of two-class based methods is that in the case of incorrect mask-wearing (i.e., wearing a mask without covering the nose, mouth or chin), the mask can still be detected in the image even though it is not effective in virus prevention. Therefore, researchers recently paid more attention to performing mask-face detection with the additional class of incorrect mask-wearing.

The categories of detection methods used for three-class mask detection are roughly the same as those for two-class detection. However, due to the characteristics of three-class datasets, three-class mask detection is more challenging.

Firstly, the majority of datasets used by existing three-class detection methodologies are unbalanced, with fewer samples in the incorrect mask-wearing class than in the wearing-mask or without-mask classes. In addition, the features of incorrect mask-wearing are highly similar to those of the other two categories, which directly leads to lower accuracy for this class.

In order to improve the accuracy of detecting incorrect mask-wearing, some studies [57,58,59,60] added attention mechanisms to balance features from multiple dimensions and generate more salient features. Other studies [61,62] searched for and labelled more incorrect mask-wearing data themselves or used data augmentation approaches to increase the small number of samples in the incorrect mask-wearing class, thus making the three classes in the training dataset more balanced so as to achieve better mask-face detection performance. Studies on three-class mask-face detection are summarized in Table 3, after representative studies from each category are introduced in the subsequent sections.

Table 3.

Summary of three-class mask-face detection methodologies.

4.2.1. Attention-Based Approaches

The attention mechanism is a component used in neural networks to model long-range interaction, which has led to great progress in both NLP and CV fields.

In computer vision, motivated by human observation, the attention mechanism is a dynamic weight adjustment process based on features of the input images. In the studies of three-class mask-face detection [57,58,59,60], researchers added the attention mechanism after extracting the target features through the neural network, with the aim of reducing the effect of the class imbalance problem on the detection performance.

In particular, Zhang et al. [60] proposed a two-stage structure with the attention mechanism, Context-Attention R-CNN, which could reach an mAP of 84.1%. The dataset contained 4672 images in three categories, including 3988 images without masks, 636 images wearing masks, and 48 images of incorrect mask-wearing. Among them, the incorrect mask-wearing category had the fewest images, presenting a large gap with the other two categories. In order to reduce the effect of this imbalance on the detection performance, the attention mechanism was added after extracting the target features through the neural network. It could weigh the extracted features to obtain more effective features of masked faces from different dimensions and reduce noise features. The mAP of incorrect mask-wearing was improved by 5.5 percent using this method.
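The idea of reweighting extracted features can be illustrated with a minimal channel-attention sketch in the squeeze-and-excitation style: each channel is pooled to one value, a small learned layer turns the pooled vector into a gate between 0 and 1 per channel, and each channel is scaled by its gate. This is a generic simplification, not the Context-Attention R-CNN module of [60]; the weights and biases below are hypothetical stand-ins for learned parameters.

```python
import math

def channel_attention(feature_map, weights, biases):
    """Scale each channel of a C x H x W feature map by a learned gate in (0, 1)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation: one fully connected layer followed by a sigmoid.
    gates = []
    for weight_row, bias in zip(weights, biases):
        score = sum(w * p for w, p in zip(weight_row, pooled)) + bias
        gates.append(1.0 / (1.0 + math.exp(-score)))
    # Reweight: informative channels are kept, others are suppressed.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates

# Two 2x2 channels and hypothetical parameters, for illustration only.
feature_map = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
weights = [[1.0, 0.0], [0.0, -1.0]]
biases = [0.0, 0.0]
scaled, gates = channel_attention(feature_map, weights, biases)
```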

4.2.2. Data Balancing-Based Approaches

Due to the imbalance problem in the existing datasets, the authors of [23,61,62,65] established datasets with three balanced categories by manually annotating more incorrect mask-wearing samples. More specifically, in [65], enhanced data augmentation techniques were applied so that the training dataset contained equal proportions of the three categories. In addition, their model was more robust in real scenarios owing to data augmentation. Their Faster R-CNN Inception ResNet V2 model finally achieved an accuracy of 99.8%.

Kayali et al. [61] also customized their dataset with equal amounts of data in three classes before designing their mask-face detection model. This dataset avoided the situation where three classes of detection results were quite different due to the imbalance of training data. First, they used the face recognition algorithm in OpenCV to locate and crop the faces in the images, and the obtained pictures passed through the lightweight network NASNetMobile [64] and the deep-structured ResNet50 [28], respectively. After comparison, the model achieved an accuracy of 92%.
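Balancing by augmentation can be sketched as oversampling each minority class with transformed copies until every class matches the largest one. The sketch below uses a horizontal flip as the only transformation and tiny nested lists as placeholder images; the function names, labels and sample counts are illustrative assumptions rather than the procedure of any reviewed study.

```python
import random
from collections import Counter

def horizontal_flip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]

def balance_by_augmentation(samples, augment, seed=0):
    """Oversample minority classes with augmented copies until all classes are equal in size."""
    rng = random.Random(seed)
    by_label = {}
    for image, label in samples:
        by_label.setdefault(label, []).append(image)
    target = max(len(images) for images in by_label.values())
    balanced = []
    for label, images in by_label.items():
        balanced.extend((image, label) for image in images)
        for _ in range(target - len(images)):
            balanced.append((augment(rng.choice(images)), label))
    return balanced

# Placeholder dataset: 4 "mask", 3 "no_mask" and 1 "incorrect" sample.
tiny_image = [[1, 2], [3, 4]]
samples = (
    [(tiny_image, "mask")] * 4
    + [(tiny_image, "no_mask")] * 3
    + [(tiny_image, "incorrect")]
)
balanced = balance_by_augmentation(samples, horizontal_flip)
class_counts = Counter(label for _, label in balanced)
```

In practice, such balancing is applied to the training split only, and a mix of transformations is preferable because repeated copies of a few images add little real diversity.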

4.2.3. Others

Other studies did not design mask-face detection methodologies from the perspective of attention mechanisms or data imbalance. Instead, they made contributions from other perspectives. The authors of [66,69] introduced a new class named mask area, which provided the location information of masks. It could also serve as a potential method for generating images of mask-covered faces. For simplicity, we group them with three-class mask-face detection methodologies since their main purpose was to detect incorrect mask-wearing. However, whether such a classification would be useful in practical mask-face detection remains to be examined. Moreover, their proposed methods showed large gaps in accuracy between classes.

Besides detecting masked faces, Eyiokur et al. [70] paid attention to the social distance and the interaction between face and hand. The attention model was leveraged for the detection of face–hand interaction. Combining such additional components helped to track and prevent infection with COVID-19 more comprehensively.

The papers mentioned above and other related works are summarized in Table 3.

5. Review of Mask-Face Detection Hardware Systems

To develop highly integrated, user-friendly and flexible mask-face detection systems, deep learning models or other software methods need to be integrated with embedded systems such as Raspberry Pi [73]. Wuttichai et al. [74] showed that, even when implemented in embedded systems, deep learning based approaches are more suitable than traditional machine learning methods, and most recent research on mask-face detection systems is based on deep learning models. Due to the complexity of deep learning models, integrating mask-face detection models with hardware devices at a reasonable cost is the main problem to be considered. However, since deep learning models generally require training and testing on high-end desktop hardware or a connection to remote servers with sufficient computational resources, most of the research reviewed in Section 4 has not gone beyond such testing and tends to be unable to run in real time when deployed in embedded systems with limited acceleration devices. For these reasons, and because existing surveys are insufficient, we provide an additional review of system-level mask detection methods. In the following section, to emphasize the system-level perspective, we review research works that either provide detailed hardware implementations or make unique contributions to mask-face detection systems. Systems that are only tested on PCs or remote servers with simple webcams, or that do not specify their hardware configurations, are excluded.

5.1. Recent Mask-Face Detection Systems

In this section, we introduce the latest mask–face detection systems. We categorize all these research works into the following four categories: Hardware Analysis, System Packaging, Model Compression and Others according to their contributions. Definitions of these categories are as follows:

- Hardware Analysis: Either making careful comparisons among various hardware types or developing special techniques to perform acceleration on the hardware platform.

- System Packaging: Developing ready-to-use mask-face detection product prototypes such as a smart door.

- Model Compression: Leveraging various compression techniques to build light-weight mask–face detection models to fit the embedded systems.

- Others: Contributing to mask-face detection hardware systems from other perspectives.

We begin by detailing several representative studies from each category. Then, we present a thorough summary of all the studies investigated in Table 4.

Table 4.

Summary of mask-face detection hardware systems.

5.1.1. Hardware Analysis

Peter et al. [75] provided an elaborate comparison among multiple hardware choices. They set a desktop-based implementation with an Intel i7-8650U CPU at 1.9 GHz and 16 GB RAM as the baseline. Then, three embedded hardware systems were designed to deploy the deep learning detection model. At the software level, the authors proposed a customized CNN model named MaskDetect. Compared to well-known pretrained models such as VGG-16 [44] and ResNet-50V2 [71], MaskDetect was lightweight, thus saving memory and accelerating inference to a great extent. Furthermore, to fit each specific hardware accelerator, the authors provided three different quantization strategies. Quantizing from 32-bit floats to 8-bit integers reduced the model size from 11.5 MB to 0.983 MB. The three systems were (a) a Raspberry Pi 4 computational platform + Google Coral Edge TPU + 8-bit quantized model, (b) a Raspberry Pi 4 computational platform + Intel Neural Compute Stick 2 (INCS2) + compressed model in float16 precision, and (c) a Jetson Nano + 128 Maxwell cores + compressed model in float32 precision. They achieved 19 FPS with 90.4% accuracy, 18 FPS with 94.3% accuracy, and 22 FPS with 94.2% accuracy, respectively. The NVIDIA Jetson Nano was the least costly in terms of design and had the best acceleration performance, while its detection accuracy was comparable. This paper is the only research in which multiple systems were designed and an in-depth comparison was made from different angles.
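The principle behind 32-bit float to 8-bit integer quantization can be sketched with a generic affine scheme: floats are mapped to the integer range [-128, 127] through a scale and a zero point, and mapped back approximately at inference time. This is an illustration only, not the conversion pipeline used in [75]; the function names and example weights are assumptions, and the sketch does not attempt to reproduce the reported model sizes.

```python
from array import array

def quantize_int8(weights):
    """Affine quantization of floats to int8; returns (values, scale, zero_point)."""
    # The representable range must include zero so that 0.0 maps to an exact integer.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all weights are zero
    zero_point = round(-128 - w_min / scale)
    quantized = array(
        "b",
        (max(-128, min(127, round(w / scale) + zero_point)) for w in weights),
    )
    return quantized, scale, zero_point

def dequantize(quantized, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]

# Hypothetical layer weights, for illustration only.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
float32_bytes = len(weights) * array("f", weights).itemsize
quantized, scale, zero_point = quantize_int8(weights)
int8_bytes = len(quantized) * quantized.itemsize
restored = dequantize(quantized, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storing each weight in one byte instead of four gives a fourfold reduction for the weights themselves, at the cost of a rounding error on the order of the scale.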

In [94], the authors provided a similar result to [75] that the NVIDIA Jetson Nano might be a more suitable platform for developing mask-face detection systems. They deployed various deep learning models of both image classification and object detection on Jetson Nano and Raspberry Pi3 for mask-face detection and performed a real-time analysis. Consequently, the Jetson Nano outperformed Raspberry Pi3 by a substantial degree. As an example, Jetson Nano provided 36 FPS with the well-known ResNet50 [28] while the FPS was 1.4 for Raspberry Pi3 in the same configuration. As a result, though most systems tend to deploy models on Raspberry Pi embedded systems, the above research revealed that Raspberry Pi might not be the most suitable choice for designing mask-face detection systems. More hardware devices should be explored.

Besides looking for suitable hardware devices, Fang et al. [76] made progress beyond the design of detection models by using multithreading to avoid model delay. They trained Haar Cascade Classifiers (HCC) [78] to detect mask-wearing faces and faces without a mask, using two HCCs to detect faces and mouths, respectively. The mask-face detection results were based on a combination of the two outcomes. Although no deep learning models were deployed, the traditional machine learning model was shown to cause significant delay due to the algorithm’s running time. To avoid such delays, they incorporated multithreading, whereby they pipelined the video frames through the FPGA unit and executed the detection model on the ARM core. The algorithm delay could therefore be replaced by a buffer delay, which improved the FPS to a large extent.
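
The buffering idea can be illustrated with a purely software analogy. The following is a minimal Python sketch, not the FPGA/ARM implementation of [76]: a capture thread pushes frames into a bounded queue while a worker thread runs a placeholder detector, so capture only waits when the buffer is full. The names `detect` and `run_pipeline` and the buffer size are hypothetical.

```python
import queue
import threading


def detect(frame):
    # Placeholder for a slow detector (hypothetical); returns one label per frame.
    return f"result-{frame}"


def run_pipeline(frames, buffer_size=4):
    """Decouple frame capture from detection with a bounded FIFO buffer."""
    buffer = queue.Queue(maxsize=buffer_size)
    results = []
    sentinel = object()  # marks the end of the frame stream

    def capture():
        for frame in frames:
            buffer.put(frame)  # blocks only when the buffer is full
        buffer.put(sentinel)

    def worker():
        while True:
            frame = buffer.get()
            if frame is sentinel:
                break
            results.append(detect(frame))

    threads = [threading.Thread(target=capture), threading.Thread(target=worker)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With a single worker and a FIFO queue, results come back in frame order; the detection latency is absorbed by the buffer instead of stalling capture.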

5.1.2. System Packaging

Susanto et al. [80] put their mask-face detection system into use at Politeknik Negeri Batam, Batam island. They assembled the system from a MiniPC with an NVIDIA GTX 1060 GPU, a digital webcam, and a screen. The authors of [82,83] integrated their systems into smart automatic doors. The doors were driven by servo motors and determined whether a person could enter by performing mask-face detection and checking body temperature.

Unlike the previously mentioned approaches based on embedded systems, Wang et al. [81] proposed an in-browser, serverless, edge-computing-based mask-face detection system in which all computations were executed on personal devices. It is flexible in that users can access the system through their mobile phones or PCs at any time and from anywhere. Another advantage is that the system costs little, since it is device-agnostic and requires no additional hardware platform. However, because model inference is run by the CPUs of personal devices, the real-time performance is not satisfactory. Moreover, whether such an online system can be applied to real cases remains to be seen.

5.1.3. Model Compression

Aiming at compatibility with embedded systems and real-time implementation, various methods were proposed to compress the deep learning model. The studies in [75,86,87,88,90] all applied quantization to the deep learning models in order to fit them into embedded systems and achieve faster inference. Among them, Ref. [90] provides several ablation studies on both pruning and quantization procedures and offers a guideline for object detection model compression: a pruning rate of 0.6 combined with static quantization. In addition, Yahia Said [87] argued that since mask-face detection is a binary classification problem (i.e., wearing mask and without mask), the calculation of class probabilities could be eliminated to reduce the inference time. The output confidence scores were treated as class probabilities to avoid the additional forward calculation.
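
To make the idea of quantization concrete, the following is a minimal sketch of affine 8-bit quantization in plain Python. It is a generic illustration and does not reproduce the specific schemes used in [75,86,87,88,90]; the function names and the per-tensor min/max calibration are assumptions made for the example.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers with an affine (scale, zero-point) scheme."""
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # keep 0.0 exactly representable
    scale = (hi - lo) / 255.0 or 1.0      # fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the quantized integers."""
    return [(x - zero_point) * scale for x in q]
```

Each weight then occupies 8 bits instead of 32, at the price of a rounding error of roughly half a quantization step per value.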

Nael et al. [89] presented a special quantization method named BinaryCoP to reduce the model memory footprint. The proposed model was based on the Binary Neural Network (BNN) introduced in [95]. A BNN can be considered an extreme case of quantization: it quantizes all weights and activations to one-bit precision, i.e., to −1 or 1. Consequently, most of the complicated calculations in the forward pass of the neural network can be replaced by binary operations such as XOR. Because such a structure can be accelerated on purpose-built hardware, the authors implemented their BinaryCoP models on embedded FPGAs based on the Xilinx FINN framework [96], which was designed for FPGA acceleration of BNNs. When pre-processing the dataset, they subdivided the two classes of the MaskedFace-Net dataset [84], the Correctly Masked Face Dataset (CMFD) and the Incorrectly Masked Face Dataset (IMFD), into four classes: CMFD, IMFD Nose, IMFD Chin, and IMFD Nose and Mouth. In the experiments, the three proposed BinaryCoP models, CNV, n-CNV and µ-CNV, achieved latencies of 1.58 ms, 0.31 ms and 0.81 ms and accuracies of 98.10%, 93.94% and 93.78%, respectively. These comparable results demonstrated the effectiveness of BinaryCoP, which required a low inference power of 2 W.
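
The replacement of multiplications by bitwise operations can be illustrated with a small sketch. It assumes sign binarization and a packing convention (bit 1 for +1, bit 0 for −1) chosen for this example; it is not the BinaryCoP or FINN implementation. For two ±1 vectors of length n, the dot product equals n minus twice the number of positions where the bits differ, which XOR and a population count provide.

```python
def binarize(values):
    """Sign binarization: non-negative values become +1, negative values -1."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a +1/-1 vector into an integer (bit i is 1 when element i is +1)."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def binary_dot(a_word, b_word, n):
    """Dot product of two packed +1/-1 vectors of length n via XOR and popcount."""
    mismatches = bin(a_word ^ b_word).count("1")
    return n - 2 * mismatches
```

For example, binarizing `[0.3, -1.2, 0.7, -0.1]` and `[1.5, 0.2, -0.4, -2.0]` gives two mismatching positions out of four, so the binary dot product is 4 − 2·2 = 0, matching the ordinary dot product of the two sign vectors.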

Besides employing quantization to generate light-weight models, the authors of [83] proposed a hybrid approach to perform the mask-face detection task. The architecture was divided into two stages. The first stage exploited the Haar Cascade algorithm [78], also called the Viola–Jones algorithm, to detect faces. It is a traditional machine learning algorithm for object detection and therefore requires fewer computational resources. The authors used the pre-trained Haar Cascade face detection models in OpenCV. In the second stage, they trained a MobileNetV2 [32] deep learning model from scratch as a two-class classifier (mask-wearing and no mask/incorrect mask-wearing). In their experiments, the hybrid model, which does not rely on a deep learning based detector, achieved comparable performance.

5.1.4. Others

Other research works contribute to mask-face detection system design from different angles. For instance, Ref. [74] studied the performance of K-nearest neighbors (KNN), support vector machine (SVM) and MobileNet [29] when performing mask-face detection tasks on Raspberry Pi 4. They showed that even in a real-time case, a light-weight version of the MobileNet algorithm can achieve a better accuracy of 88.7% than both the SVM and KNN algorithms (78.1% and 72.5%, respectively).

5.2. Discussions

Regarding the systems summarized above, we provide specific evaluations of both hardware acceleration performance and software detection performance for each study in Table 4. Table 4 shows that all approaches using traditional machine learning methods are based on Haar Cascade Classifiers (HCC) [78], since HCC is a popular machine learning algorithm for object detection, especially face detection. Nevertheless, according to [97], because HCC is weak in low-light conditions, pure HCC requires an additional infrared (IR) module on the camera to improve its performance. Meanwhile, models based on deep learning are able to outperform HCC-based methods without any additional hardware device. These results therefore further support the conclusion that deep learning-based masked-face detection methods are mainstream.

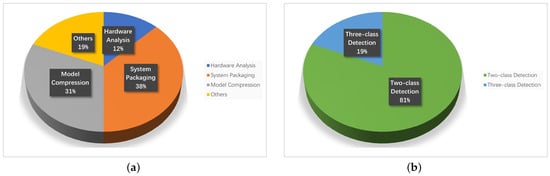

As illustrated in Figure 3a, most research works focus either on packaging the systems into ready-to-use products or on compressing masked-face detection models to run in real time on the hardware platform. Only a few papers contribute to hardware design for better speed or compare different devices in detail. This gap leaves room for future studies on masked-face detection systems. In addition, we visualized the proportions of two-class and three-class mask-face detection systems in Figure 3b. In particular, we categorized the four-class classification model [89] as a three-class model, since the classes of incorrectly masked nose and incorrectly masked chin can both be regarded as incorrect mask-wearing. As shown in the figure, three-class mask-face detection is performed less frequently on hardware systems. This is because preparing a three-class mask-face detection model is more costly and more time-consuming. For example, labelling the third class of incorrect mask-wearing in the dataset is costly and may easily cause a data imbalance. Given these circumstances, in the next section we introduce an approach to quickly extract abnormal cases of incorrect mask-wearing, which can be incorporated into any two-class mask-face detection system with no additional data required.

Figure 3.

Sector graphs for mask-face detection hardware system classifications. (a) Contributions of different approaches; (b) Proportions for two-class and three-class mask-face detection systems.

6. OOD-Mask Detection Framework

In this section, we propose a new Out-of-distribution Mask (OOD-Mask) framework to detect the abnormal class of incorrect mask-wearing with only two-class data. Because the framework is simple and decoupled from the detector, a large number of existing two-class mask-face detection models can be directly combined with it to perform three-class mask-face detection tasks.

6.1. Related Works

6.1.1. Out-of-Distribution Detection

In supervised deep learning models, the number of classes that a model is able to classify is the same as the number of training labels. However, a special technique such as Out-of-Distribution (OOD) detection can detect an additional class not included in the training dataset. It is closely related to, and commonly applied in, anomaly detection (AD) [98], novelty detection (ND) [99], open set recognition (OSR) [100], and outlier detection (OD) [101].

Several advanced methods were presented to ensure OOD detection performance without affecting the performance of the original model. Hendrycks et al. [102] proposed an approach to effectively detect OOD samples by predicting the softmax probability of the sample through a statistical model. Liang et al. [103] proposed the use of temperature scaling and input processing to improve the OOD detection capability. Denouden et al. [104] detected OOD samples by exploiting the reconstruction error of the variational autoencoder (VAE) [105]. Abdelzad et al. [106] extracted the input and output data of different layers, applied a one-class SVM classifier to each layer, measured its classification error rate, and then selected the layer with the smallest error to detect OOD samples.
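
As a rough illustration of softmax-based OOD scoring, the sketch below computes the maximum softmax probability of a logit vector, optionally with a temperature. It is a simplified reading of the ideas in [102,103]: the input processing step of [103] is omitted, and the function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def max_softmax_score(logits, temperature=1.0):
    """Confidence score: a low value suggests the sample may be out-of-distribution."""
    return max(softmax(logits, temperature))
```

A sample would be flagged as OOD when this score falls below a threshold chosen on validation data; raising the temperature flattens the probabilities and changes how ID and OOD scores spread.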

Based on the characteristics of the OOD detection approach, we found it suitable for the mask-face detection task where the data of incorrect mask-wearing are hard to collect and annotate. We were then motivated to develop a new framework named Out-of-Distribution Mask (OOD-Mask) incorporating the state-of-the-art object detection neural network, which is aimed at carrying out the three-class mask-face detection task.

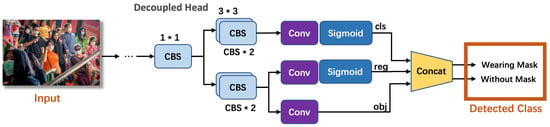

6.1.2. YOLOX Object Detection Model

The YOLO series is one of the most widely used detectors in the industry due to its broad compatibility, so we selected the YOLOX [107] architecture, which is a high-performance anchor-free detection model, as the backbone detector in our framework.

Despite the fact that the original YOLOX model is one of the state-of-the-art object detection models, it shares the common limitation of supervised neural networks that the numbers of training classes and prediction classes have to be the same so that classes not included in training datasets are not detected.

YOLOX uses YOLOv3 as the baseline, which adopts the DarkNet53 backbone and an SPP (Spatial Pyramid Pooling) layer [108]. The SPP structure performs feature extraction through max pooling with different kernel sizes to enlarge the receptive field of the network. After that, the three enhanced feature layers are passed into the YOLO head to obtain the prediction results. Classification and regression are implemented separately and then integrated into the final prediction, as shown in Figure 4. The network can only classify the categories that have been labeled in the training dataset.

Figure 4.

YOLOX architecture.

6.2. Methodology of OOD-Mask

We proposed the OOD-Mask framework, which uses Out-of-Distribution detection to detect incorrect mask-wearing according to the differences in confidence scores between in-distribution and out-of-distribution data. This difference enables OOD-Mask to separate the third class of incorrect mask-wearing from the output predictions of neural networks trained only on data of wearing mask and without mask. In the following sections, we first describe how to use the sigmoid score to detect the third class, then introduce the energy score and use it to implement the OOD-Mask for detecting incorrect mask-wearing.

6.2.1. Sigmoid Score

In order to achieve the goal of detecting the third class of incorrect mask-wearing from the samples predicted as wearing mask or without mask, we first treated both classes of wearing mask and without mask within the dataset as in-distribution (ID) while the class of incorrect mask-wearing was treated as out-of-distribution (OOD). Then, we tried to separate OOD data from ID data based on the sigmoid confidence score.

The sigmoid function is one of the most commonly used non-linear activation functions in neural networks. Its domain is the set of all real numbers, and its output lies in the range of 0 to 1. The formula is as follows:

$$S(x) = \frac{1}{1 + e^{-x}}$$

where the input x is a scalar.

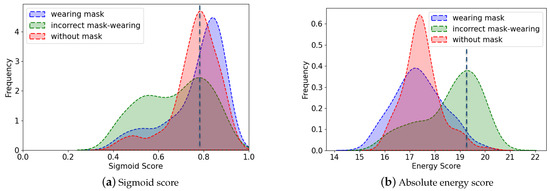

We can obtain sigmoid scores from the decoupled head of YOLOX, which has three branches: class, object, and regression. These correspond, in order, to the prediction scores of the target box class, the score of whether the box contains a target, and the predicted bounding box. The class and object outputs are each passed through the sigmoid function and then multiplied to obtain the final sigmoid score. Figure 5a shows the sigmoid score distribution of the three classes of wearing mask, without mask, and incorrect mask-wearing. As illustrated in the figure, the peak of incorrect mask-wearing (OOD) overlaps with those of wearing mask and without mask (ID), and the OOD data is spread across the whole score range. In addition, because the output of the sigmoid function ranges between 0 and 1 and the data in the experiment were recorded to three decimal places, it was difficult to make finer selections. Therefore, extracting the OOD data while keeping the ID performance unchanged seems impossible under such circumstances.
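
The score computation described above can be sketched as follows. This is a minimal illustration, assuming that the class and object branches return raw logits for a single predicted box; the function and argument names are hypothetical and do not come from the YOLOX code base.

```python
import math


def sigmoid(x):
    """Numerically stable sigmoid for a scalar input."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_scores(class_logits, object_logit):
    """Per-class confidence: sigmoid(class logit) * sigmoid(objectness logit)."""
    objectness = sigmoid(object_logit)
    return [sigmoid(c) * objectness for c in class_logits]
```

Because both factors lie between 0 and 1, the product is also confined to that interval, which is one reason the ID and OOD distributions are hard to pull apart with this score alone.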

Figure 5.

Data distribution: (a) The peak of the incorrect mask-wearing class, at around S = 0.8, almost overlaps the peaks of both wearing mask and without mask in ID, although the class has a large proportion of values in the 0.2–0.7 range. (b) The values obtained by the OOD-Mask are all negative numbers, so we take the absolute value of the energy score to compare it with the sigmoid score. The spiky distribution of incorrect mask-wearing, at an absolute energy score of 19.3, is completely separated from wearing mask and without mask, at 17.3.

6.2.2. Energy Score

To separate the distribution of OOD data from the ID, we introduced the complete OOD-Mask framework as illustrated in Figure 5b. OOD-Mask can attribute lower values to in-distribution data and higher values to out-of-distribution data. During testing, the YOLOX detector with trained weights and parameters is combined with OOD-Mask to perform the detection task. We input the whole testing set, which includes three classes (wearing mask, without mask and incorrect mask-wearing), into the model. The backbone detector is responsible for predicting two classes (wearing mask, without mask) because it is trained on a dataset of only two classes. Its prediction is then passed to the OOD-Mask to extract the third class of incorrect mask-wearing. Within the OOD-Mask, we first use the class and object branches from the decoupled head of YOLOX to calculate the sigmoid score and the energy score separately. The energy score is used to separate the ID classes from the OOD class. If a sample belongs to the ID classes, it is further classified by the sigmoid score. We detail the energy score method as follows.

The energy function [109], first proposed by Weitang Liu et al., plays an important role in this method and builds upon earlier work [110] on the energy-based model (EBM). EBMs have an inherent connection with modern machine learning, especially discriminative models. Consider a discriminative neural classifier $f(x): \mathbb{R}^D \rightarrow \mathbb{R}^K$, which maps an input $x$ to $K$ real-valued outputs, one per class. The energy function maps these $K$ class outputs to a single, non-probabilistic scalar called the energy. The formula of the energy function is as follows:

$$E(x; f) = -T \cdot \log \sum_{i=1}^{K} e^{f_i(x)/T}$$

where T is the temperature parameter, and the subscript i indicates the ith class of $f(x)$.
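
A minimal sketch of an energy score computation is given below. It assumes the standard form used in the energy-based OOD literature [109], E(x; f) = −T · log Σ_i exp(f_i(x)/T), and it assumes that the per-class logits of one predicted box are the inputs; which head outputs are fed in exactly is not specified here, so the function name and its inputs are hypothetical.

```python
import math


def energy_score(logits, temperature=1.0):
    """Energy E(x; f) = -T * log(sum_i exp(f_i(x) / T)), computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    # log-sum-exp trick: subtract the maximum before exponentiating.
    log_sum = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * log_sum
```

Unlike the sigmoid score, this value is not squashed into [0, 1]; for instance, two logits of 0 with T = 30 give −30 · ln 2 ≈ −20.8, so the scores can be spread over a much wider numerical range.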

The energy score is used to sort the overall identified targets and extract a portion of them as OOD data. With two types of scores, we not only maintain the accuracy of the wearing mask and without mask classification in the ID but also provide more meaningful information to maximize the distinction between in-distribution and out-of-distribution. The two-class detection prediction of the backbone detector is passed through the OOD-Mask module and the three-class detection result can be obtained. The energy score leads to an overall smoother distribution and can largely separate the class of incorrect mask-wearing from other labels without reducing the performance of other ID classes. The complete OOD-Mask detection framework and its inference process are shown in Figure 6, where the YOLOX detector is trained using the two-class dataset.

Figure 6.

Network Architecture: The YOLOX network is trained with two-class training dataset and performs two-class detection. The prediction output of YOLOX is then passed through the OOD-Mask to obtain three-class detection results.

Additionally, compared to only using the sigmoid function for classification, the energy function does not perform normalization and directly maps each input to a single scalar, which results in lower energy values for in-distribution and higher energy for incorrect mask-wearing, making the gap between categories more obvious.

6.3. Dataset

Our mask detection and classification dataset was divided into three categories: (1) wearing mask, (2) without mask, and (3) incorrect mask-wearing. The sample source in this paper was taken from Wider Face [16] and Kaggle.

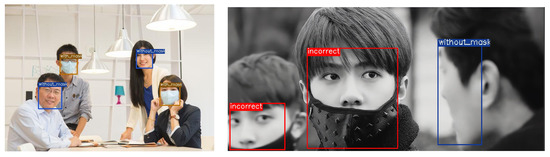

Our proposed dataset contains a total of 1174 images and annotations in a Pascal VOC format. The training dataset contains 3000 wearing mask, and 3322 without mask, and there are 223 wearing mask, 268 without mask and 157 incorrect mask-wearing in the testing set. The above numbers illustrate that the number of people wearing masks incorrectly in the dataset is limited and much lower than the other two classes. Figure 7 shows several examples included in the dataset.

Figure 7.

Examples within the dataset.

6.4. Experiments & Results

We used YOLOX as the object detection model. Training was carried out on a server with an NVIDIA RTX 2080 Ti graphics card, using a batch size of 16 and 200 epochs; the entire training process lasted 52.38 min. To highlight the effectiveness of the proposed OOD-Mask framework, we carried out three experiments for comparison as follows.

6.4.1. Experiment on the Original Model

The experiment on the original model without OOD-Mask for two-class mask-face detection acts as the baseline. We used recall, precision, and F1-score to evaluate the two-class detection performance at an intersection over union (IoU) threshold of 0.5, which gave the best classification effect. Results are shown in the first row of Table 5.
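
For reference, the evaluation quantities mentioned above can be computed as in the following sketch. It uses the usual definitions of IoU, precision, recall and F1-score; the box format `(x1, y1, x2, y2)` and the function names are assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1-score from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1
```

A predicted box would count as a true positive when its class matches the ground truth and its IoU with the ground-truth box is at least 0.5.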

Table 5.

Experiment Results.

6.4.2. Experiment on Sigmoid Scores

In the second experiment, in order to extract the OOD data of the incorrect mask-wearing class, the sigmoid confidence scores of the original network were used to characterize the distributions of the three classes (wearing mask, without mask, and incorrect mask-wearing). We used the three-class test dataset to evaluate the performance while keeping the performance on ID data as constant as possible. The data peaks of the three classes were all stacked around a sigmoid score of 0.8, but a large part of the OOD data was still scattered in the range below 0.7. If 0.7 was used as the threshold, values of less than 0.7 could be regarded as OOD and values greater than this as ID. However, this demarcation greatly reduced the performance on wearing mask and without mask. After adjusting the score range many times and observing the three sigmoid score distributions, we chose the data with a sigmoid score of between 0.5 and 0.67 as the OOD. The evaluation results of the second experiment are presented in Table 5. While the performance of wearing mask and without mask remained unchanged, the best F1-score for incorrect mask-wearing was only 31.25%, which meant the extraction was not satisfactory.

6.4.3. Experiment on OOD-Mask Detection Framework

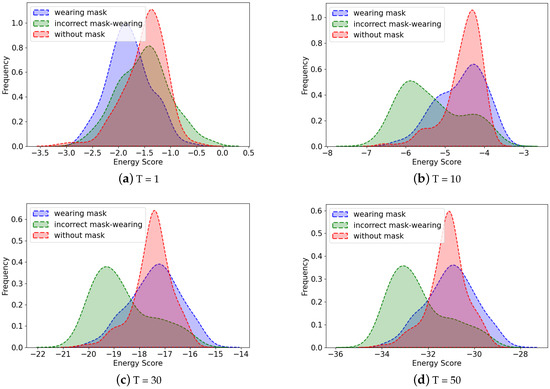

The third experiment was based on the framework combining the YOLOX detection head and OOD-Mask, which used the energy function to score the results without reducing the performance of wearing mask and without mask. It separated the OOD data from the ID to a greater extent, so the distinction effect of the OOD could be improved. We used both energy score and sigmoid score in OOD-Mask and changed the range of the energy score by adjusting the value of T in the energy function, as shown in Figure 8. A comparable classification performance could be achieved at a low cost. When T equaled 30, the energy score range was between 14 and 22. When we observed the energy score distributions of three classes, setting the threshold to 18.3 provided a greater degree of discrimination between abnormal and normal classes. Therefore, after many experiments, we selected an energy score of between 18.3 and 22 as the OOD, and the rest were divided into the ID. The wearing mask and without mask classes in ID were still classified using the sigmoid function.
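
The resulting decision rule can be sketched as follows, using the range reported above for T = 30 (an absolute energy score between 18.3 and 22 is treated as OOD). The function name, the class order, and the inclusive treatment of the range boundaries are assumptions made for this illustration.

```python
CLASSES = ("wearing mask", "without mask")  # in-distribution classes (assumed order)


def classify_three_class(abs_energy, sigmoid_scores, low=18.3, high=22.0):
    """Assign one of three labels from the absolute energy score and the sigmoid scores.

    abs_energy: absolute value of the energy score of one detection.
    sigmoid_scores: per-class sigmoid scores, ordered like CLASSES.
    """
    if low <= abs_energy <= high:
        return "incorrect mask-wearing"  # treated as out-of-distribution
    # In-distribution: fall back to the class with the highest sigmoid score.
    best = max(range(len(CLASSES)), key=lambda i: sigmoid_scores[i])
    return CLASSES[best]
```

For example, a detection with an absolute energy of 19.3 is labelled as incorrect mask-wearing regardless of its sigmoid scores, whereas one at 17.3 keeps the two-class prediction of the detector.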

Figure 8.

Class distributions corresponding to different T values: (a). When T equals 1, the overlap of three distributions makes it challenging to extract the incorrect mask-wearing class. (b). When T is set to 10, the peak of incorrect mask-wearing distribution can be clearly distinguished from other classes (wearing mask and without mask). (c). When T is set to 30, the distinction effect is further improved. (d). When the value of T is greater than or equal to 30, the distribution of the three classes changes little. The energy scores increase with the value of T, but the range of score still remains within 10.

The F1-score of this method improved by 24.51% over the result of the second experiment. Compared with the previous experiment, precision and recall improved by 13% and 28%, respectively, as shown in the third row of Table 5. In addition, the performance of both wearing mask and without mask was comparable. Under the same condition as in the second experiment, namely that only two classes of data (wearing mask and without mask) were used in training, without any data for the third class of incorrect mask-wearing, the improvement in the detection performance of the third class was considerable. However, since there was no training data for the third class, the performance still had room for further improvement. Therefore, new OOD-related variants remain to be explored.

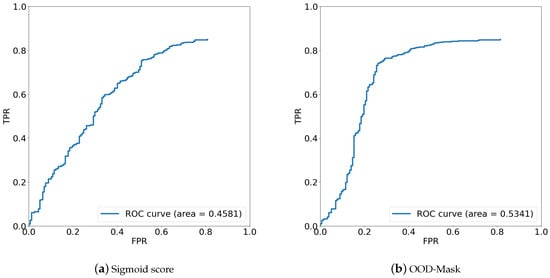

Furthermore, to assess the performance of experiments 2 and 3 more thoroughly, we classified wearing mask and without mask as in-distribution (positive class) and incorrect mask-wearing as out-of-distribution (negative class). The receiver operating characteristic (ROC) curve is a performance measure for classification problems under various threshold settings, where the y-axis is the TPR and the x-axis is the FPR. The area under curve (AUC) is the area under the ROC curve and indicates the degree of separability. Since some targets were not recognized by the network because they were too small or had inconspicuous features, the TPR and FPR values could not reach 1.0. The AUC value of 53.43% in experiment 3 was higher than the 45.80% in experiment 2. The ROC curves of the two experiments in Figure 9 show that the energy score separated the classes better than the sigmoid score alone. For any given FPR value between 0.2 and 1.0, the TPR of the energy score was consistently higher than that of the sigmoid score.
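
The ROC and AUC computation can be sketched as follows. This is the textbook procedure, assuming that a higher score means "more likely positive" and that both classes are present; it does not model the missed detections mentioned above, and the function names are hypothetical.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) points obtained by sweeping a threshold over the scores.

    labels: 1 for the positive class, 0 for the negative class.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with equal scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Trapezoidal area under a list of (FPR, TPR) points sorted by FPR."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

An AUC of 0.5 corresponds to chance-level separation, so values around that level indicate that a score only weakly distinguishes the two groups.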

Figure 9.

ROC under different scores.

In order to test the speed performance, we divided the number of images in the testing set by the total processing time and used frames per second (FPS) as the evaluation metric. The test dataset contained a total of 235 images. As shown in Table 6, the frame rates of the three experiments reached 76.18, 65.68 and 64.51 FPS, respectively. The model with OOD-Mask was slightly slower due to its additional calculation on the data distribution. However, the added computational complexity was limited, and the performance loss was acceptable since the OOD-Mask still met the real-time detection requirement.
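
The FPS measurement can be sketched as below. This is a generic timing harness rather than the script used in the experiments; `process` stands for any per-image inference function and is a hypothetical placeholder.

```python
import time


def measure_fps(process, images):
    """Frames per second: number of images divided by the total processing time."""
    start = time.perf_counter()
    for image in images:
        process(image)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed if elapsed > 0 else float("inf")
```

Under this definition, processing 235 images at 64.51 FPS corresponds to a total time of about 235 / 64.51 ≈ 3.64 s.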

Table 6.

Speed Performance.

7. Conclusions

In this work, we first presented an up-to-date review on two-class and three-class mask-face detection models separately to extract the common characteristics and main distinction between the two mentioned fields. We discussed the state-of-the-art approaches and found that researchers prefer one-stage object detection techniques in both two-class and three-class fields for the real-time requirement of masked-face detection applications. We also deduced that research works on three-class masked-face detection models provided new insights, mainly in terms of extending the dataset with more training samples of incorrect mask-wearing or improving models, such as introducing the attention mechanism.

A survey on mask-face detection hardware systems was then provided. We found that recent hardware system research mostly contributed in the following three aspects: hardware analysis, system packaging, and model compression. Notably, the emphasis of research in this part varied, and no study paid attention to multiple aspects. Therefore, a system that both builds a light-weight, well-performing detection model and designs a well-adapted, user-friendly hardware platform remains to be developed.

Finally, we proposed a new framework named Out-of-Distribution Mask (OOD-Mask). The framework aimed to extract the abnormal cases (i.e., incorrect mask-wearing) from wearing mask and without mask. Thus, the proposed framework succeeded in performing the three-class mask-face detection task based on the data and model required in the two-class mask-face detection task.

Developing an integrated mask-face detection system that is low cost, has a fast response, and high accuracy requires techniques in various aspects as we discussed. Based on the survey we conducted, we suggest some research directions for future work. In order to ensure that the detection system can identify whether a person is wearing a mask correctly, researchers need to pay more attention to three-class mask-face detection models. Moreover, in order to make these models compatible with the corresponding hardware systems, instead of increasing model complexity for better accuracy, detection performance should be improved on light-weight models. The OOD framework presented in our work is capable of achieving this, but performance needs to be improved in future work. Furthermore, since the difference between wearing a mask correctly and incorrectly is rather subtle and may be formulated as a fuzzy problem, new fuzzy models [111] could be adopted for this problem in the future. On the hardware side, more hardware research and comparisons are required on how to choose a suitable hardware device to deploy a light-weighted deep learning model. In addition, improving compatibility and achieving better hardware acceleration performance are also significant research directions. Overall, we expect a combination of state-of-the-art techniques both in three-class mask-face detection models and hardware design, as well as continuous efforts in each domain.

Author Contributions

Conceptualization, Y.H., Y.X. and H.Z.; methodology, Y.H. and Y.X.; software: Y.H.; validation: Y.X.; formal analysis, Y.H. and Y.X.; investigation, Y.H. and Y.X.; resources, Z.W., Z.L. and H.Z.; data curation, Y.H. and H.Z.; writing—original draft preparation, Y.H., Y.X. and Z.L.; writing—review and editing, Y.X., Y.H., Z.L., H.Z. and Z.W.; visualization, Y.X.; supervision, Z.L., H.Z.; project administration, H.Z. and Z.W.; funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Science and Engineering Research Council, Agency of Science, Technology and Research, Singapore, through the National Robotics Program under Grant No. 1922500054.

Institutional Review Board Statement

Ethical review and approval were waived for this study because the images/photos used in this paper were obtained from openly available sources on the internet.

Informed Consent Statement

Not available.

Data Availability Statement

The photos in this study were taken from the publicly archived dataset, Face Mask Detection, https://www.kaggle.com/datasets/andrewmvd/face-mask-detection (last accessed in June 2022); Wider Face, http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/ (last accessed in June 2022), and the publicly available link: http://www.latender.com/Sleep_health/1159.html (last accessed in July 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations were used in this manuscript:

| COVID-19 | Coronavirus disease-2019 |

| WHO | World Health Organization |

| PHEIC | Public Health Emergency of International Concern |

| TP | True Positive |

| TN | True Negative |

| FP | False Positive |

| FN | False Negative |

| TPR | True Positive Rate |

| FPR | False Positive Rate |

| IoU | Intersection over Union |

| AP | Average Precision |

| mAP | mean Average Precision |

| HOG | Histogram of Oriented Gradient |

| LBP | Local Binary Pattern |

| CNN | Convolutional Neural Network |

| GAN | Generative Adversarial Network |

| ResNet | Residual Neural Network |

| YOLO | You Only Look Once |

| SSD | Single Shot Detector |

| RPN | Region Proposal Network |

| R-CNN | Region-based Convolutional Neural Network |

| CBAM | Convolutional Block Attention Module |

| MAFA | Masked Face |

| MFDD | Masked Face Detection Dataset |

| SMFRD | Simulated Masked Face Recognition Dataset |

| RMFRD | Real-world Masked Face Recognition Dataset |

| RMFD | Real-World Masked Face Dataset |

| FMD | Facemask Detection |

| MAFA-FMD | MAsked FAces for Face Mask Detection |

| WMD | Wearing Mask Detection |

| FM | Face Mask |

| FPS | Frame Per Second |

| BNN | Binary Neural Network |

| FPGA | Field Programmable Gate Array |

| HCC | Haar Cascade Classifier |

| SVM | Support Vector Machine |

| KNN | K-Nearest Neighbors |

| AD | Anomaly Detection |

| ND | Novelty Detection |

| OSR | Open Set Recognition |

| OD | Outlier Detection |

| VAE | Variational Autoencoder |

| SPP | Spatial Pyramid Pooling |

| ID | In-distribution |

| OOD | Out-of-distribution |

| ROC | Receiver Operating Characteristic |

| AUC | Area Under Curve |

References

- WHO Coronavirus (COVID-19) Dashboard. 2022. Available online: https://covid19.who.int/ (accessed on 1 July 2022).

- Cao, Y.; Yisimayi, A.; Jian, F.; Song, W.; Xiao, T.; Wang, L.; Du, S.; Wang, J.; Li, Q.; Chen, X.; et al. BA. 2.12. 1, BA. 4 and BA. 5 escape antibodies elicited by Omicron infection. Nature 2022, 608, 593–602. [Google Scholar] [CrossRef]

- Mandatory Face Masks Reintroduced at Manx Care Sites after COVID Spike. 2022. Available online: https://www.bbc.com/news/world-europe-isle-of-man-61873373 (accessed on 1 July 2022).

- Nowrin, A.; Afroz, S.; Rahman, M.S.; Mahmud, I.; Cho, Y.Z. Comprehensive review on facemask detection techniques in the context of COVID-19. IEEE Access 2021, 9, 106839–106864.

- Mbunge, E.; Simelane, S.; Fashoto, S.G.; Akinnuwesi, B.; Metfula, A.S. Application of deep learning and machine learning models to detect COVID-19 face masks-A review. Sustain. Oper. Comput. 2021, 2, 235–245.

- Vibhuti; Jindal, N.; Singh, H.; Rana, P.S. Face mask detection in COVID-19: A strategic review. Multimed. Tools Appl. 2022.

- Mohammed Ali, F.A.; Al-Tamimi, M.S. Face mask detection methods and techniques: A review. Int. J. Nonlinear Anal. Appl. 2022, 13, 3811–3823.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Chollet, F. Xception: Deep Learning With Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. WIDER FACE: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5525–5533.

- Ge, S.; Li, J.; Ye, Q.; Luo, Z. Detecting masked faces in the wild with LLE-CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2682–2690.

- Wang, Z.; Wang, G.; Huang, B.; Xiong, Z.; Hong, Q.; Wu, H.; Yi, P.; Jiang, K.; Wang, N.; Pei, Y.; et al. Masked face recognition dataset and application. arXiv 2020, arXiv:2003.09093.

- Roy, B.; Nandy, S.; Ghosh, D.; Dutta, D.; Biswas, P.; Das, T. MOXA: A deep learning based unmanned approach for real-time monitoring of people wearing medical masks. Trans. Indian Natl. Acad. Eng. 2020, 5, 509–518.

- Chiang, D. AIZOOTech. 2021. Available online: https://github.com/AIZOOTech/FaceMaskDetection (accessed on 1 July 2022).

- Face Mask Detection Dataset. Available online: https://www.kaggle.com/omkargurav/face-mask-dataset (accessed on 12 December 2021).

- Fan, X.; Jiang, M. RetinaFaceMask: A single stage face mask detector for assisting control of the COVID-19 pandemic. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 832–837.

- Wang, B.; Zhao, Y.; Chen, C.L.P. Hybrid Transfer Learning and Broad Learning System for Wearing Mask Detection in the COVID-19 Era. IEEE Trans. Instrum. Meas. 2021, 70, 5009612.

- Ottakath, N.; Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Mohamed, A.; Khattab, T.; Abualsaud, K. ViDMASK dataset for face mask detection with social distance measurement. Displays 2022, 73, 102235.

- Bayu Dewantara, B.S.; Twinda Rhamadhaningrum, D. Detecting Multi-Pose Masked Face Using Adaptive Boosting and Cascade Classifier. In Proceedings of the 2020 International Electronics Symposium (IES), Surabaya, Indonesia, 29–30 September 2020; pp. 436–441.

- Lin, H.; Tse, R.; Tang, S.K.; Chen, Y.; Ke, W.; Pau, G. Near-Realtime Face Mask Wearing Recognition Based on Deep Learning. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–7.

- Sethi, S.; Kathuria, M.; Kaushik, T. Face mask detection using deep learning: An approach to reduce risk of Coronavirus spread. J. Biomed. Inform. 2021, 120, 103848.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Mercaldo, F.; Santone, A. Transfer learning for mobile real-time face mask detection and localization. J. Am. Med. Inform. Assoc. 2021, 28, 1548–1554.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: The next generation of on-device computer vision networks. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Prusty, M.R.; Tripathi, V.; Dubey, A. A novel data augmentation approach for mask detection using deep transfer learning. Intell.-Based Med. 2021, 5, 100037.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Walia, I.S.; Kumar, D.; Sharma, K.; Hemanth, J.D.; Popescu, D.E. An Integrated Approach for Monitoring Social Distancing and Face Mask Detection Using Stacked ResNet-50 and YOLOv5. Electronics 2021, 10, 2996.

- Guo, L.; Wang, Q.; Xue, W.; Guo, J. Detection of Mask Wearing in Dim Light Based on Attention Mechanism. Dianzi Keji Daxue Xuebao/J. Univ. Electron. Sci. Technol. China 2022, 51, 123–129.

- Goyal, H.; Sidana, K.; Singh, C.; Jain, A.; Jindal, S. A real time face mask detection system using convolutional neural network. Multimed. Tools Appl. 2022, 81, 14999–15015.

- Yang, C.W.; Phung, T.H.; Shuai, H.H.; Cheng, W.H. Mask or Non-Mask? Robust Face Mask Detector via Triplet-Consistency Representation Learning. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 1–20.

- Singh, S.; Ahuja, U.; Kumar, M.; Kumar, K.; Sachdeva, M. Face mask detection using YOLOv3 and faster R-CNN models: COVID-19 environment. Multimed. Tools Appl. 2021, 80, 19753–19768.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You Only Learn One Representation: Unified Network for Multiple Tasks. arXiv 2021, arXiv:2105.04206.

- Negi, A.; Kumar, K.; Chauhan, P.; Rajput, R. Deep Neural Architecture for Face mask Detection on Simulated Masked Face Dataset against COVID-19 Pandemic. In Proceedings of the 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 1–20 February 2021; pp. 595–600.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Adhinata, F.D.; Rakhmadani, D.P.; Wibowo, M.; Jayadi, A. A deep learning using DenseNet201 to detect masked or non-masked face. JUITA J. Inform. 2021, 9, 115–121.

- Habib, S.; Alsanea, M.; Aloraini, M.; Al-Rawashdeh, H.S.; Islam, M.; Khan, S. An Efficient and Effective Deep Learning-Based Model for Real-Time Face Mask Detection. Sensors 2022, 22, 2602.

- Waleed, J.; Abbas, T.; Hasan, T.M. Facemask Wearing Detection Based on Deep CNN to Control COVID-19 Transmission. In Proceedings of the 2022 Muthanna International Conference on Engineering Science and Technology (MICEST), Samawah, Iraq, 16–17 March 2022; pp. 158–161.

- Singh, P.; Garg, A.; Singh, A. A Comprehensive Analysis on Masked Face Detection Algorithms. In Advanced Healthcare Systems; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2022; Chapter 16; pp. 319–334.

- Olukumoro, O.S.; Ajayi, F.A.; Adebayo, A.A.; Usman, A.A.B.; Johnson, F. HIC-DEEP: A Hierarchical Clustered Deep Learning Model for Face Mask Detection. Int. J. Res. Innov. Appl. Sci. 2022, 7, 22–28.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast Feature Pyramids for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Oumina, A.; El Makhfi, N.; Hamdi, M. Control The COVID-19 Pandemic: Face Mask Detection Using Transfer Learning. In Proceedings of the 2020 IEEE 2nd International Conference on Electronics, Control, Optimization and Computer Science (ICECOCS), Kenitra, Morocco, 2–3 December 2020; pp. 1–5.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning. PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Wu, P.; Li, H.; Zeng, N.; Li, F. FMD-Yolo: An efficient face mask detection method for COVID-19 prevention and control in public. Image Vis. Comput. 2022, 117, 104341.

- Han, Z.; Huang, H.; Fan, Q.; Li, Y.; Li, Y.; Chen, X. SMD-YOLO: An efficient and lightweight detection method for mask wearing status during the COVID-19 pandemic. Comput. Methods Programs Biomed. 2022, 221, 106888.

- Guo, S.; Li, L.; Guo, T.; Cao, Y.; Li, Y. Research on Mask-Wearing Detection Algorithm Based on Improved YOLOv5. Sensors 2022, 22, 4933.

- Zhang, J.; Han, F.; Chun, Y.; Chen, W. A Novel Detection Framework About Conditions of Wearing Face Mask for Helping Control the Spread of COVID-19. IEEE Access 2021, 9, 42975–42984.

- Kayali, D.; Dimililer, K.; Sekeroglu, B. Face Mask Detection and Classification for COVID-19 using Deep Learning. In Proceedings of the 2021 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Kocaeli, Turkey, 25–27 August 2021; pp. 1–6.

- Yu, J.; Zhang, W. Face Mask Wearing Detection Algorithm Based on Improved YOLO-v4. Sensors 2021, 21, 3263.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Proceedings of Machine Learning Research. Bach, F., Blei, D., Eds.; PMLR: Lille, France, 2015; Volume 37, pp. 448–456.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.

- Razavi, M.; Alikhani, H.; Janfaza, V.; Sadeghi, B.; Alikhani, E. An automatic system to monitor the physical distance and face mask wearing of construction workers in COVID-19 pandemic. SN Comput. Sci. 2022, 3, 27.

- Kumar, A.; Kalia, A.; Verma, K.; Sharma, A.; Kaushal, M. Scaling up face masks detection with YOLO on a novel dataset. Optik 2021, 239, 166744.

- Mokeddem, M.L.; Belahcene, M.; Bourennane, S. Yolov4FaceMask: COVID-19 Mask Detector. In Proceedings of the 2021 1st International Conference On Cyber Management And Engineering (CyMaEn), Hammamet, Tunisia, 26–28 May 2021; pp. 1–6.