Machine Learning Techniques and Systems for Mask-Face Detection—Survey and a New OOD-Mask Approach

Abstract

1. Introduction

- We present an up-to-date review of recent face-mask detection methodologies in both two-class and three-class cases and investigate the gap in techniques between them.

- We provide an additional review and analysis of mask-face detection hardware systems, including the various techniques used to deploy mask-face detection models on portable systems.

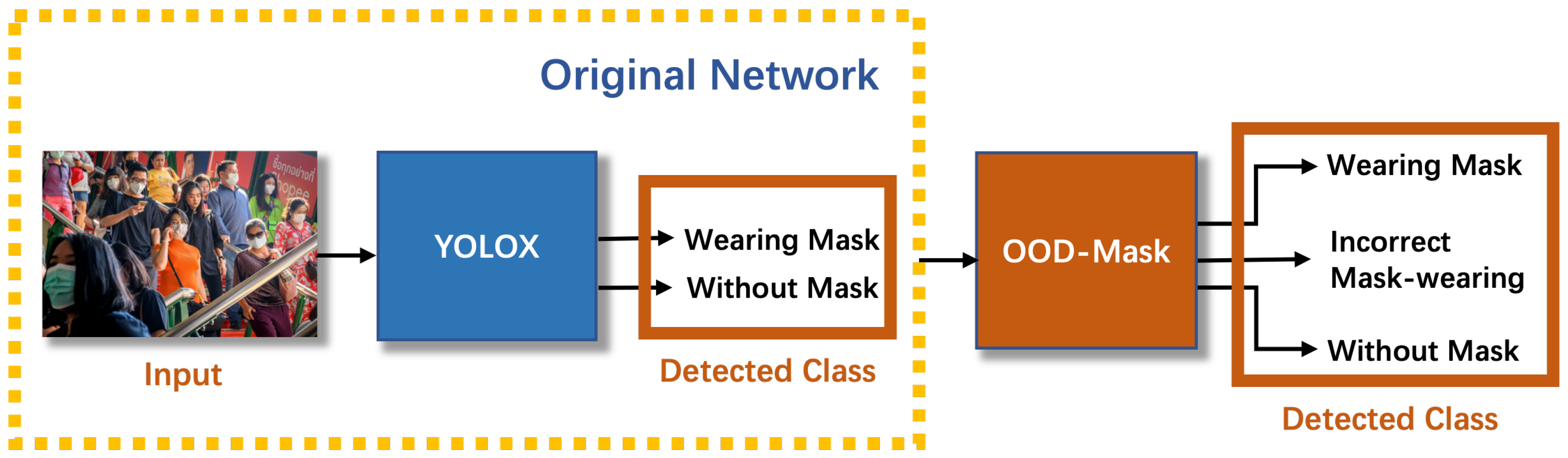

- We develop a new Out-of-distribution-Mask (OOD-Mask) detection framework, which can perform three-class mask-face detection using only two-class data (wearing a mask and without a mask).

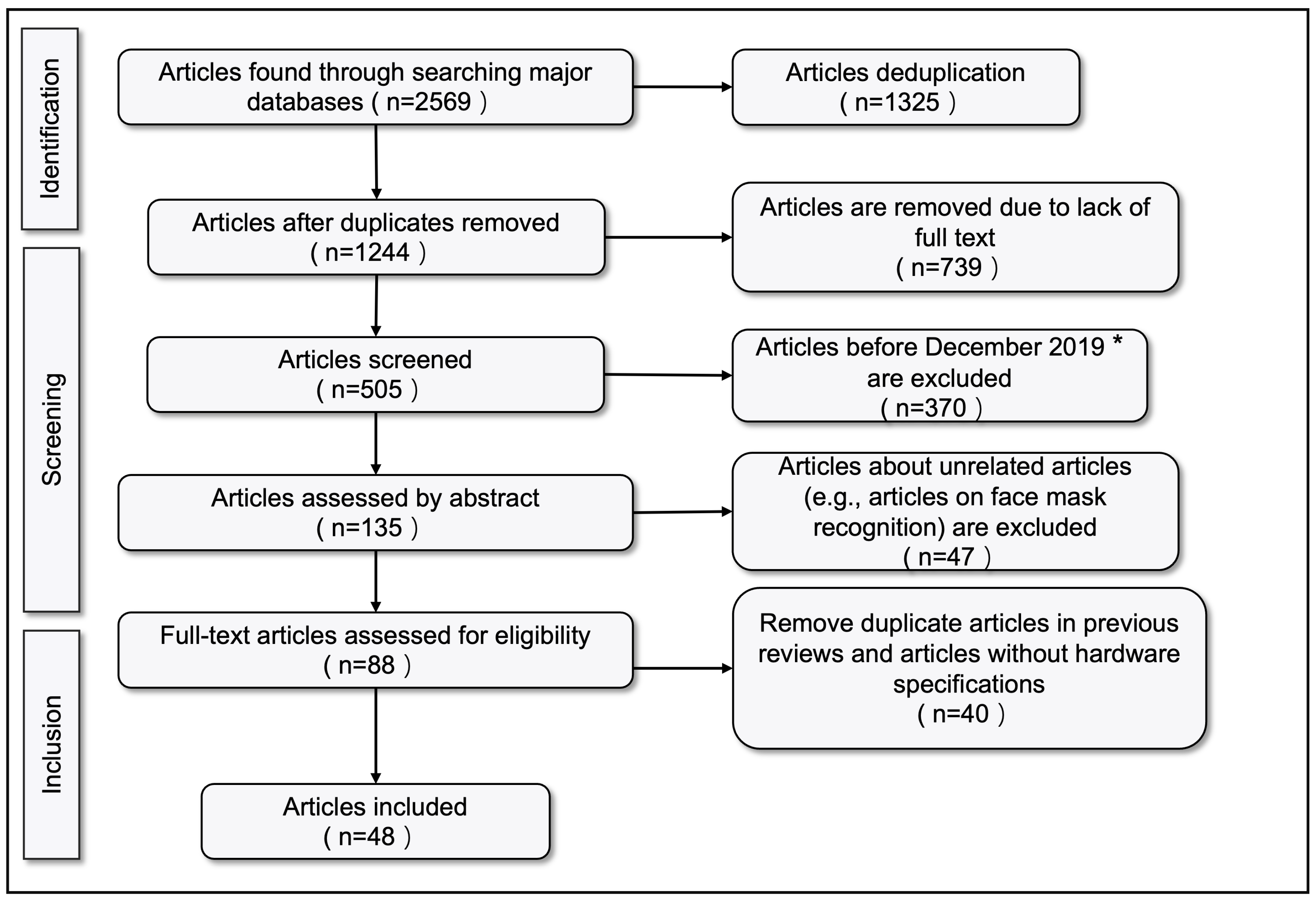

2. Reviewing Methods

2.1. Searching Strategy

- ((“face mask” OR “mask face”) AND (“detection” OR “detection model”)).

- ((“face mask” OR “mask face”) AND (“detection system” OR “detection hardware” OR “detection hardware system”)).
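The two search strings above are conjunctions of OR-groups. As a minimal sketch of how such a query could be applied to a list of candidate titles, the snippet below uses plain case-insensitive substring matching. The function name `matches` and the two constants are hypothetical, and real bibliographic databases apply their own matching rules.

```python
def matches(text, groups):
    """Return True when every OR-group has at least one phrase present in text."""
    lowered = text.lower()
    return all(any(phrase in lowered for phrase in group) for group in groups)


# First search string: methodology papers (illustrative constant name).
METHOD_QUERY = [
    ("face mask", "mask face"),
    ("detection", "detection model"),
]

# Second search string: hardware-system papers (illustrative constant name).
SYSTEM_QUERY = [
    ("face mask", "mask face"),
    ("detection system", "detection hardware", "detection hardware system"),
]

if __name__ == "__main__":
    titles = [
        "Real-time Face Mask Detection System on Edge",
        "Face mask detection methods and techniques: A review",
        "Robust real-time face detection",
    ]
    for title in titles:
        print(title, matches(title, METHOD_QUERY), matches(title, SYSTEM_QUERY))
```

Because "detection" is a substring of "detection model", the second group of the first query reduces to the single term "detection" under this matching rule.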

2.2. Study Selection

2.3. Eligibility Check

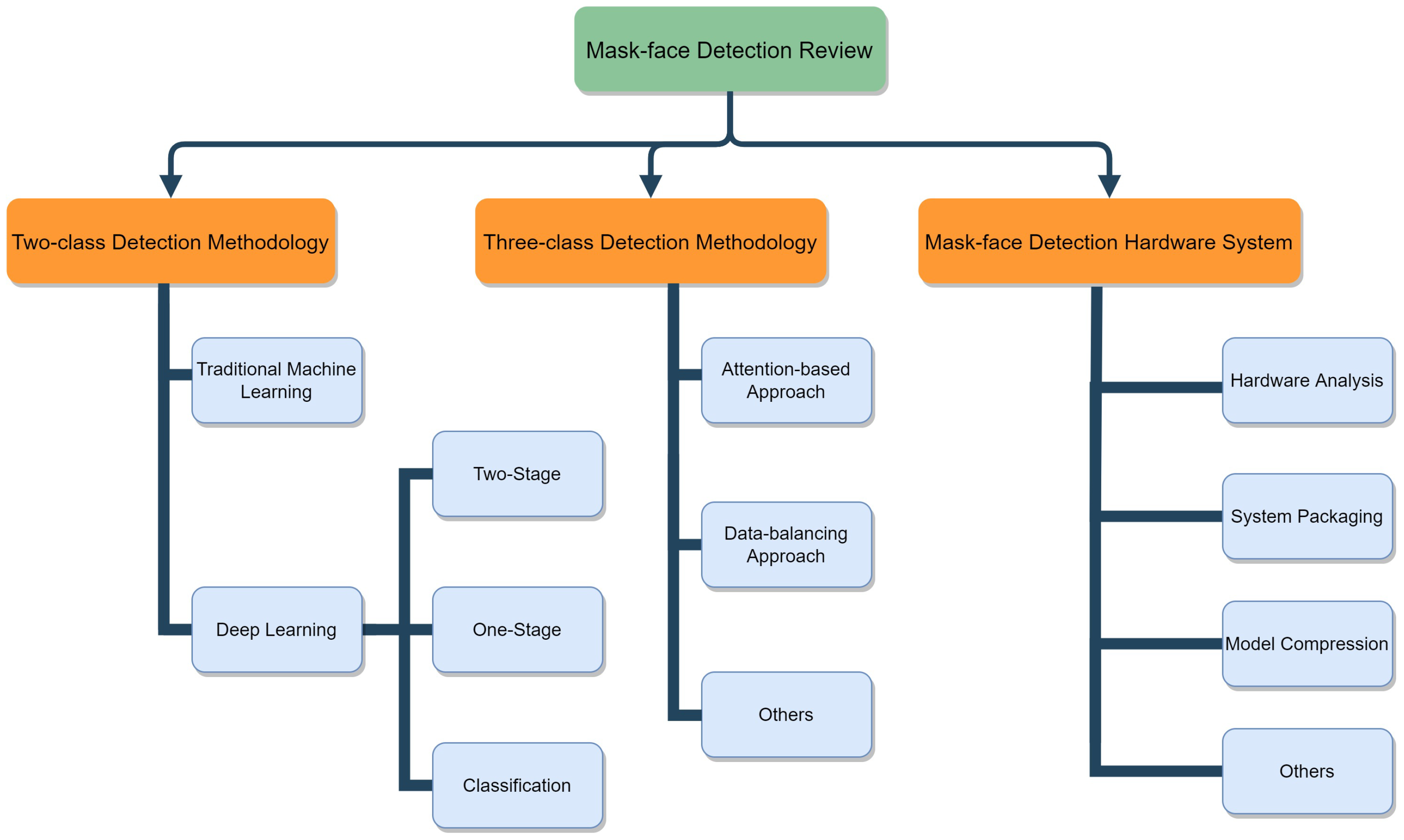

2.4. Review Structure

3. Related Works

3.1. Major Evaluation Metrics
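As a minimal sketch, the snippet below computes several of the metrics abbreviated in this paper (TP, TN, FP, FN, TPR, FPR, IoU) from their standard textbook definitions. The function names and the `(x1, y1, x2, y2)` box format are assumptions made for illustration and are not taken from any surveyed work.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp, fn):
    """True Positive Rate (TPR): fraction of actual positives that are found."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """FPR: fraction of actual negatives wrongly flagged as positive."""
    return fp / (fp + tn)


def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

In object detection, a predicted box is usually counted as a TP only when its IoU with a ground-truth box exceeds a chosen threshold; AP and mAP are then derived from the resulting precision-recall curve.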

3.2. Summary of Previous Review Papers on Mask-Face Detection Methodologies

4. Review of Mask-Face Detection Methodologies

4.1. Two-Class Mask-Face Detection Methodologies

4.1.1. Traditional Machine Learning Based Approaches

4.1.2. Deep Learning Based Approaches

4.2. Three-Class Mask-Face Detection Methodologies

4.2.1. Attention-Based Approaches

4.2.2. Data Balancing-Based Approaches

4.2.3. Others

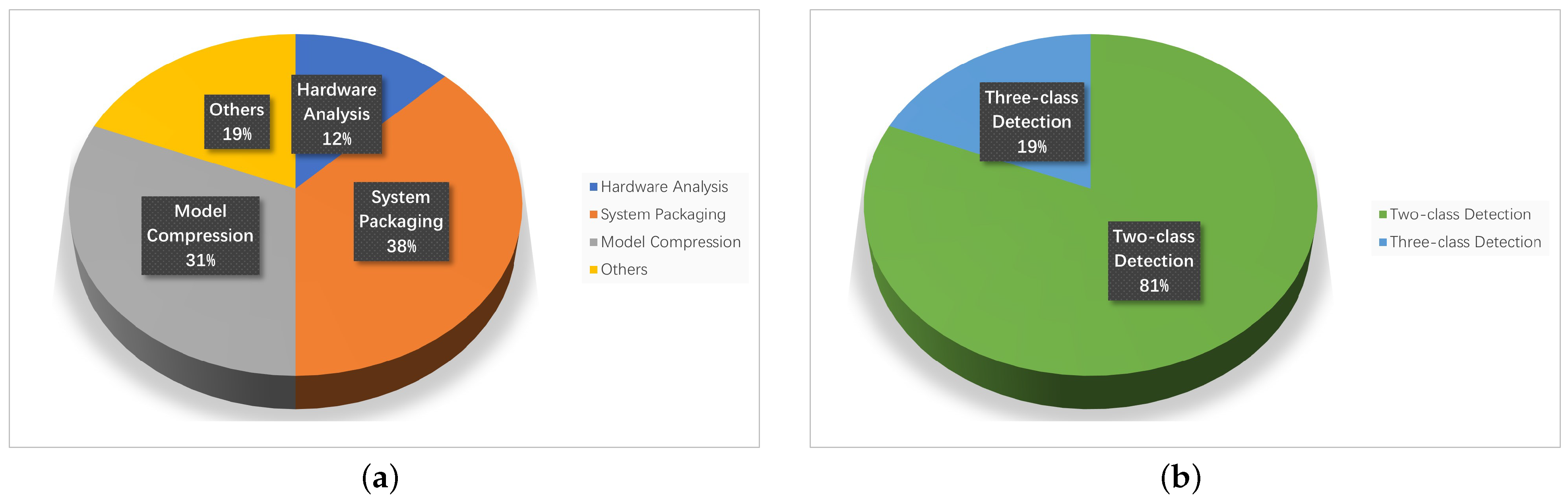

5. Review of Mask-Face Detection Hardware Systems

5.1. Recent Mask-Face Detection Systems

- Hardware Analysis: Either making careful comparisons among various hardware types or developing special techniques to accelerate inference on the hardware platform.

- System Packaging: Developing ready-to-use mask-face detection product prototypes such as a smart door.

- Model Compression: Leveraging various compression techniques to build lightweight mask-face detection models that fit embedded systems.

- Others: Contributing to mask-face detection hardware systems from other perspectives.

5.1.1. Hardware Analysis

5.1.2. System Packaging

5.1.3. Model Compression

5.1.4. Others

5.2. Discussions

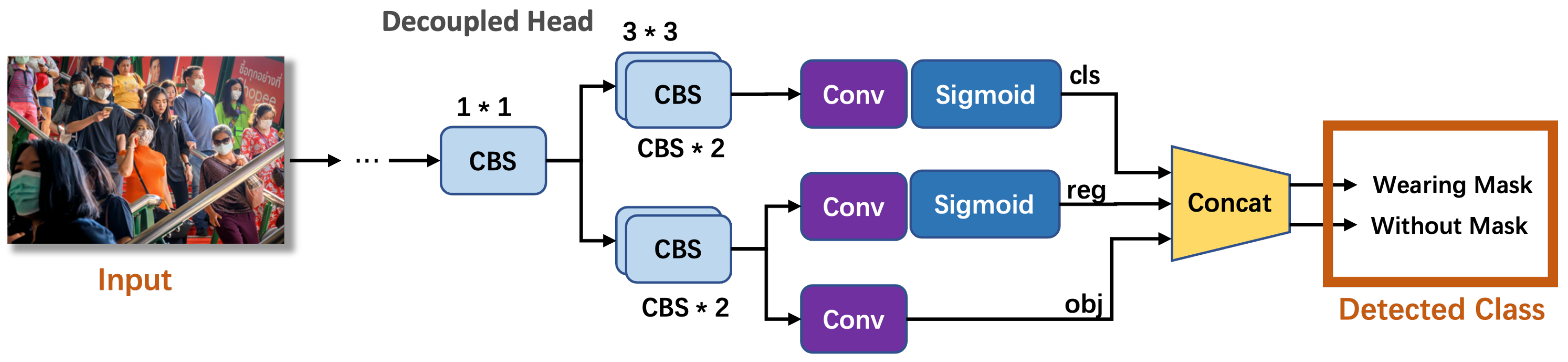

6. OOD-Mask Detection Framework

6.1. Related Works

6.1.1. Out-of-Distribution Detection

6.1.2. YOLOX Object Detection Model

6.2. Methodology of OOD-Mask

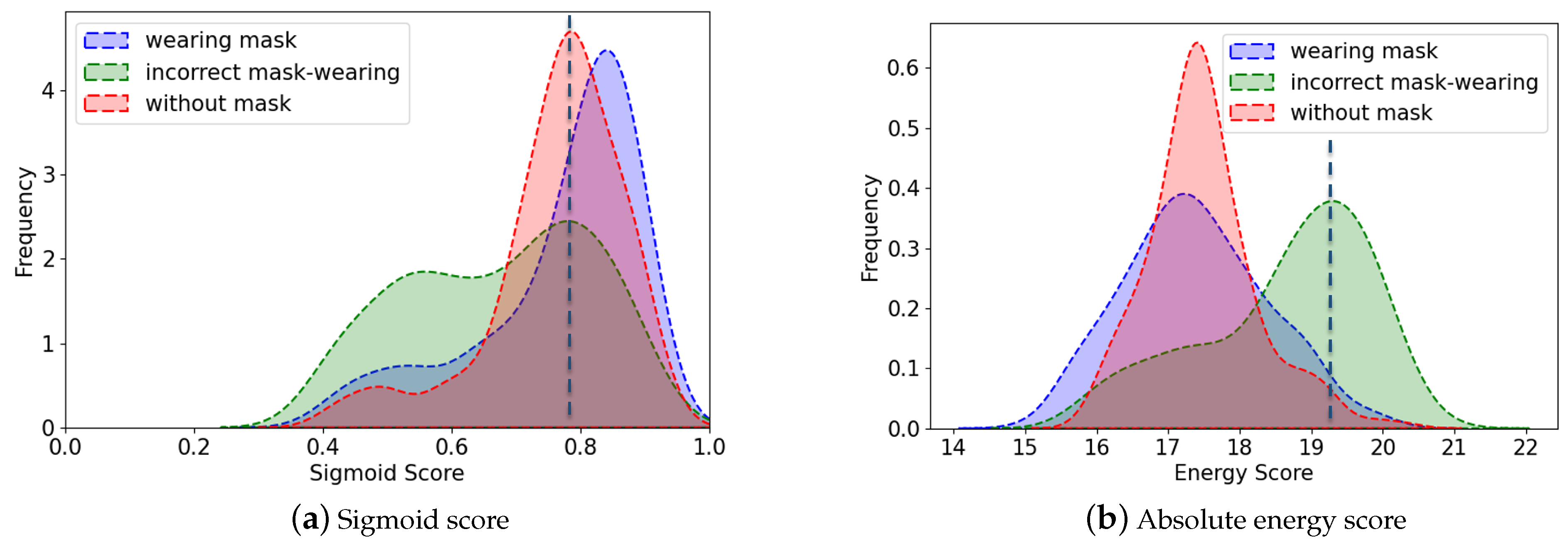

6.2.1. Sigmoid Score

6.2.2. Energy Score
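The snippet below is a minimal sketch of the generic energy score from the energy-based OOD detection literature, E(x) = -T · log Σ_i exp(f_i(x)/T), where lower energy indicates an in-distribution input. It is not the OOD-Mask implementation: the `classify` helper, the label strings, and the threshold value of -2.0 are all hypothetical, and the way the score is attached to a detector's outputs is an assumption.

```python
import math


def energy_score(logits, temperature=1.0):
    """E(x) = -T * log(sum_i exp(f_i(x) / T)), using a numerically stable log-sum-exp."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in scaled))
    return -temperature * log_sum_exp


def classify(logits, labels=("wearing mask", "without mask"),
             threshold=-2.0, ood_label="out-of-distribution"):
    """Return the arg-max label, or ood_label when the energy exceeds the threshold.

    The threshold is an illustrative value; in practice it would be tuned on
    held-out data, for example to reach a target true positive rate.
    """
    if energy_score(logits) > threshold:
        return ood_label
    best = max(range(len(logits)), key=lambda i: logits[i])
    return labels[best]


if __name__ == "__main__":
    print(classify([6.0, -1.0]))  # confident logits give low energy
    print(classify([0.1, 0.0]))   # flat logits give high energy
```

In this sketch the out-of-distribution label plays the role of a third class that never appears in the two-class training data.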

6.3. Dataset

6.4. Experiments & Results

6.4.1. Experiment on the Original Model

6.4.2. Experiment on Sigmoid Scores

6.4.3. Experiment on OOD-Mask Detection Framework

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| COVID-19 | Coronavirus disease-2019 |
| WHO | World Health Organization |
| PHEIC | Public Health Emergency of International Concern |
| TP | True Positive |
| TN | True Negative |
| FP | False Positive |
| FN | False Negative |
| TPR | True Positive Rate |
| FPR | False Positive Rate |
| IoU | Intersection over Union |
| AP | Average Precision |
| mAP | mean Average Precision |
| HOG | Histogram of Oriented Gradients |
| LBP | Local Binary Pattern |
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| ResNet | Residual Neural Network |
| YOLO | You Only Look Once |
| SSD | Single Shot Detector |
| RPN | Region Proposal Network |
| R-CNN | Region-based Convolutional Neural Network |
| CBAM | Convolutional Block Attention Module |
| MAFA | Masked Face |
| MFDD | Masked Face Detection Dataset |
| SMFRD | Simulated Masked Face Recognition Dataset |
| RMFRD | Real-world Masked Face Recognition Dataset |
| RMFD | Real-World Masked Face Dataset |
| FMD | Facemask Detection |
| MAFA-FMD | MAsked FAces for Face Mask Detection |
| WMD | Wearing Mask Detection |
| FM | Face Mask |
| FPS | Frames Per Second |
| BNN | Binary Neural Network |
| FPGA | Field Programmable Gate Array |
| HCC | Haar Cascade Classifier |
| SVM | Support Vector Machine |
| KNN | K-Nearest Neighbor |
| AD | Anomaly Detection |
| ND | Novelty Detection |
| OSR | Open Set Recognition |
| OD | Outlier Detection |
| VAE | Variational Autoencoder |
| SPP | Spatial Pyramid Pooling |
| ID | In-distribution |
| OOD | Out-of-distribution |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under Curve |

References

- WHO Coronavirus (COVID-19) Dashboard. 2022. Available online: https://covid19.who.int/ (accessed on 1 July 2022).

- Cao, Y.; Yisimayi, A.; Jian, F.; Song, W.; Xiao, T.; Wang, L.; Du, S.; Wang, J.; Li, Q.; Chen, X.; et al. BA.2.12.1, BA.4 and BA.5 escape antibodies elicited by Omicron infection. Nature 2022, 608, 593–602.

- Mandatory Face Masks Reintroduced at Manx Care Sites after COVID Spike. 2022. Available online: https://www.bbc.com/news/world-europe-isle-of-man-61873373 (accessed on 1 July 2022).

- Nowrin, A.; Afroz, S.; Rahman, M.S.; Mahmud, I.; Cho, Y.Z. Comprehensive review on facemask detection techniques in the context of COVID-19. IEEE Access 2021, 9, 106839–106864.

- Mbunge, E.; Simelane, S.; Fashoto, S.G.; Akinnuwesi, B.; Metfula, A.S. Application of deep learning and machine learning models to detect COVID-19 face masks-A review. Sustain. Oper. Comput. 2021, 2, 235–245.

- Vibhuti; Jindal, N.; Singh, H.; Rana, P.S. Face mask detection in COVID-19: A strategic review. Multimed. Tools Appl. 2022.

- Mohammed Ali, F.A.; Al-Tamimi, M.S. Face mask detection methods and techniques: A review. Int. J. Nonlinear Anal. Appl. 2022, 13, 3811–3823.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Chollet, F. Xception: Deep Learning With Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. Wider face: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5525–5533.

- Ge, S.; Li, J.; Ye, Q.; Luo, Z. Detecting masked faces in the wild with LLE-CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2682–2690.

- Wang, Z.; Wang, G.; Huang, B.; Xiong, Z.; Hong, Q.; Wu, H.; Yi, P.; Jiang, K.; Wang, N.; Pei, Y.; et al. Masked face recognition dataset and application. arXiv 2020, arXiv:2003.09093.

- Roy, B.; Nandy, S.; Ghosh, D.; Dutta, D.; Biswas, P.; Das, T. MOXA: A deep learning based unmanned approach for real-time monitoring of people wearing medical masks. Trans. Indian Natl. Acad. Eng. 2020, 5, 509–518.

- Chiang, D. AIZOOTech. 2021. Available online: https://github.com/AIZOOTech/FaceMaskDetection (accessed on 1 July 2022).

- Face Mask Detection Dataset. Available online: https://www.kaggle.com/omkargurav/face-mask-dataset (accessed on 12 December 2021).

- Fan, X.; Jiang, M. RetinaFaceMask: A single stage face mask detector for assisting control of the COVID-19 pandemic. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 832–837.

- Wang, B.; Zhao, Y.; Chen, C.L.P. Hybrid Transfer Learning and Broad Learning System for Wearing Mask Detection in the COVID-19 Era. IEEE Trans. Instrum. Meas. 2021, 70, 5009612.

- Ottakath, N.; Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Mohamed, A.; Khattab, T.; Abualsaud, K. ViDMASK dataset for face mask detection with social distance measurement. Displays 2022, 73, 102235.

- Bayu Dewantara, B.S.; Twinda Rhamadhaningrum, D. Detecting Multi-Pose Masked Face Using Adaptive Boosting and Cascade Classifier. In Proceedings of the 2020 International Electronics Symposium (IES), Surabaya, Indonesia, 29–30 September 2020; pp. 436–441.

- Lin, H.; Tse, R.; Tang, S.K.; Chen, Y.; Ke, W.; Pau, G. Near-Realtime Face Mask Wearing Recognition Based on Deep Learning. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–7.

- Sethi, S.; Kathuria, M.; Kaushik, T. Face mask detection using deep learning: An approach to reduce risk of Coronavirus spread. J. Biomed. Inform. 2021, 120, 103848.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Mercaldo, F.; Santone, A. Transfer learning for mobile real-time face mask detection and localization. J. Am. Med. Inform. Assoc. 2021, 28, 1548–1554.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: The next generation of on-device computer vision networks. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Prusty, M.R.; Tripathi, V.; Dubey, A. A novel data augmentation approach for mask detection using deep transfer learning. Intell.-Based Med. 2021, 5, 100037.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Walia, I.S.; Kumar, D.; Sharma, K.; Hemanth, J.D.; Popescu, D.E. An Integrated Approach for Monitoring Social Distancing and Face Mask Detection Using Stacked ResNet-50 and YOLOv5. Electronics 2021, 10, 2996.

- Guo, L.; Wang, Q.; Xue, W.; Guo, J. Detection of Mask Wearing in Dim Light Based on Attention Mechanism. Dianzi Keji Daxue Xuebao/J. Univ. Electron. Sci. Technol. China 2022, 51, 123–129.

- Goyal, H.; Sidana, K.; Singh, C.; Jain, A.; Jindal, S. A real time face mask detection system using convolutional neural network. Multimed. Tools Appl. 2022, 81, 14999–15015.

- Yang, C.W.; Phung, T.H.; Shuai, H.H.; Cheng, W.H. Mask or Non-Mask? Robust Face Mask Detector via Triplet-Consistency Representation Learning. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 1–20.

- Singh, S.; Ahuja, U.; Kumar, M.; Kumar, K.; Sachdeva, M. Face mask detection using YOLOv3 and faster R-CNN models: COVID-19 environment. Multimed. Tools Appl. 2021, 80, 19753–19768.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You Only Learn One Representation: Unified Network for Multiple Tasks. arXiv 2021, arXiv:2105.04206.

- Negi, A.; Kumar, K.; Chauhan, P.; Rajput, R. Deep Neural Architecture for Face mask Detection on Simulated Masked Face Dataset against COVID-19 Pandemic. In Proceedings of the 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 1–20 February 2021; pp. 595–600.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Adhinata, F.D.; Rakhmadani, D.P.; Wibowo, M.; Jayadi, A. A deep learning using DenseNet201 to detect masked or non-masked face. JUITA J. Inform. 2021, 9, 115–121.

- Habib, S.; Alsanea, M.; Aloraini, M.; Al-Rawashdeh, H.S.; Islam, M.; Khan, S. An Efficient and Effective Deep Learning-Based Model for Real-Time Face Mask Detection. Sensors 2022, 22, 2602.

- Waleed, J.; Abbas, T.; Hasan, T.M. Facemask Wearing Detection Based on Deep CNN to Control COVID-19 Transmission. In Proceedings of the 2022 Muthanna International Conference on Engineering Science and Technology (MICEST), Samawah, Iraq, 16–17 March 2022; pp. 158–161.

- Singh, P.; Garg, A.; Singh, A. A Comprehensive Analysis on Masked Face Detection Algorithms. In Advanced Healthcare Systems; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2022; Chapter 16; pp. 319–334.

- Olukumoro, O.S.; Ajayi, F.A.; Adebayo, A.A.; Usman, A.A.B.; Johnson, F. HIC-DEEP: A Hierarchical Clustered Deep Learning Model for Face Mask Detection. Int. J. Res. Innov. Appl. Sci. 2022, 7, 22–28.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast Feature Pyramids for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Oumina, A.; El Makhfi, N.; Hamdi, M. Control The COVID-19 Pandemic: Face Mask Detection Using Transfer Learning. In Proceedings of the 2020 IEEE 2nd International Conference on Electronics, Control, Optimization and Computer Science (ICECOCS), Kenitra, Morocco, 2–3 December 2020; pp. 1–5.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning. PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Wu, P.; Li, H.; Zeng, N.; Li, F. FMD-Yolo: An efficient face mask detection method for COVID-19 prevention and control in public. Image Vis. Comput. 2022, 117, 104341.

- Han, Z.; Huang, H.; Fan, Q.; Li, Y.; Li, Y.; Chen, X. SMD-YOLO: An efficient and lightweight detection method for mask wearing status during the COVID-19 pandemic. Comput. Methods Programs Biomed. 2022, 221, 106888.

- Guo, S.; Li, L.; Guo, T.; Cao, Y.; Li, Y. Research on Mask-Wearing Detection Algorithm Based on Improved YOLOv5. Sensors 2022, 22, 4933.

- Zhang, J.; Han, F.; Chun, Y.; Chen, W. A Novel Detection Framework About Conditions of Wearing Face Mask for Helping Control the Spread of COVID-19. IEEE Access 2021, 9, 42975–42984.

- Kayali, D.; Dimililer, K.; Sekeroglu, B. Face Mask Detection and Classification for COVID-19 using Deep Learning. In Proceedings of the 2021 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Kocaeli, Turkey, 25–27 August 2021; pp. 1–6.

- Yu, J.; Zhang, W. Face Mask Wearing Detection Algorithm Based on Improved YOLO-v4. Sensors 2021, 21, 3263.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Proceedings of Machine Learning Research. Bach, F., Blei, D., Eds.; PMLR: Lille, France, 2015; Volume 37, pp. 448–456.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.

- Razavi, M.; Alikhani, H.; Janfaza, V.; Sadeghi, B.; Alikhani, E. An automatic system to monitor the physical distance and face mask wearing of construction workers in COVID-19 pandemic. SN Comput. Sci. 2022, 3, 27.

- Kumar, A.; Kalia, A.; Verma, K.; Sharma, A.; Kaushal, M. Scaling up face masks detection with YOLO on a novel dataset. Optik 2021, 239, 166744.

- Mokeddem, M.L.; Belahcene, M.; Bourennane, S. Yolov4FaceMask: COVID-19 Mask Detector. In Proceedings of the 2021 1st International Conference On Cyber Management And Engineering (CyMaEn), Hammamet, Tunisia, 26–28 May 2021; pp. 1–6.

- Tomás, J.; Rego, A.; Viciano-Tudela, S.; Lloret, J. Incorrect facemask-wearing detection using convolutional neural networks with transfer learning. Healthcare 2021, 9, 1050.

- Kumar, A.; Kalia, A.; Sharma, A.; Kaushal, M. A hybrid tiny YOLO v4-SPP module based improved face mask detection vision system. J. Ambient. Intell. Humaniz. Comput. 2021, 1–14.

- Eyiokur, F.I.; Ekenel, H.K.; Waibel, A. Unconstrained Face-Mask & Face-Hand Datasets: Building a Computer Vision System to Help Prevent the Transmission of COVID-19. arXiv 2021, arXiv:2103.08773.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 630–645.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Richardson, M.; Wallace, S. Getting Started with Raspberry PI; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2012.

- Vijitkunsawat, W.; Chantngarm, P. Study of the performance of machine learning algorithms for face mask detection. In Proceedings of the 2020-5th International Conference on Information Technology (InCIT), Chonburi, Thailand, 21–22 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 39–43.

- Sertic, P.; Alahmar, A.; Akilan, T.; Javorac, M.; Gupta, Y. Intelligent Real-Time Face-Mask Detection System with Hardware Acceleration for COVID-19 Mitigation. Healthcare 2022, 10, 873.

- Fang, T.; Huang, X.; Saniie, J. Design flow for real-time face mask detection using PYNQ system-on-chip platform. In Proceedings of the 2021 IEEE International Conference on Electro Information Technology (EIT), Mt. Pleasant, MI, USA, 14–15 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

- Humans in the Loop. Medical Mask Dataset. 2020. Available online: https://humansintheloop.org/resources/datasets/medical-mask-dataset/ (accessed on 1 July 2022).

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 1, p. I.

- Kumar, T.A.; Rajmohan, R.; Pavithra, M.; Ajagbe, S.A.; Hodhod, R.; Gaber, T. Automatic Face Mask Detection System in Public Transportation in Smart Cities Using IoT and Deep Learning. Electronics 2022, 11, 904.

- Susanto, S.; Putra, F.A.; Analia, R.; Suciningtyas, I.K.L.N. The face mask detection for preventing the spread of COVID-19 at Politeknik Negeri Batam. In Proceedings of the 2020 3rd International Conference on Applied Engineering (ICAE), Batam, Indonesia, 7–8 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5.

- Wang, Z.; Wang, P.; Louis, P.C.; Wheless, L.E.; Huo, Y. WearMask: Fast in-browser face mask detection with serverless edge computing for COVID-19. arXiv 2021, arXiv:2101.00784.

- Varshini, B.; Yogesh, H.; Pasha, S.D.; Suhail, M.; Madhumitha, V.; Sasi, A. IoT-Enabled smart doors for monitoring body temperature and face mask detection. Glob. Transit. Proc. 2021, 2, 246–254.

- Özyurt, F.; Mira, A.; Çoban, A. Face Mask Detection Using Lightweight Deep Learning Architecture and Raspberry Pi Hardware: An Approach to Reduce Risk of Coronavirus Spread While Entrance to Indoor Spaces. Trait. Signal 2022, 39, 645–650.

- Cabani, A.; Hammoudi, K.; Benhabiles, H.; Melkemi, M. MaskedFace-Net–A dataset of correctly/incorrectly masked face images in the context of COVID-19. Smart Health 2021, 19, 100144.

- Labib, R.P.M.D.; Hadi, S.; Widayaka, P.D. Low Cost System for Face Mask Detection Based Haar Cascade Classifier Method. MATRIK J. Manaj. Tek. Inform. Dan Rekayasa Komput. 2021, 21, 21–30.

- Park, K.; Jang, W.; Lee, W.; Nam, K.; Seong, K.; Chai, K.; Li, W.S. Real-time mask detection on google edge TPU. arXiv 2020, arXiv:2010.04427.

- Said, Y. Pynq-YOLO-Net: An embedded quantized convolutional neural network for face mask detection in COVID-19 pandemic era. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 100–106.

- Mohan, P.; Paul, A.J.; Chirania, A. A tiny CNN architecture for medical face mask detection for resource-constrained endpoints. In Innovations in Electrical and Electronic Engineering; Springer: Berlin/Heidelberg, Germany, 2021; pp. 657–670.

- Fasfous, N.; Vemparala, M.R.; Frickenstein, A.; Frickenstein, L.; Badawy, M.; Stechele, W. BinaryCoP: Binary neural network-based COVID-19 face-mask wear and positioning predictor on edge devices. In Proceedings of the 2021 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Portland, OR, USA, 17–21 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 108–115.

- Liberatori, B.; Mami, C.A.; Santacatterina, G.; Zullich, M.; Pellegrino, F.A. YOLO-Based Face Mask Detection on Low-End Devices Using Pruning and Quantization. In Proceedings of the 2022 45th Jubilee International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 900–905.

- Mask-Detection-Dataset. 2020. Available online: https://github.com/archie9211/Mask-Detection-Dataset (accessed on 1 July 2022).

- Ruparelia, S.; Jethva, M.; Gajjar, R. Real-time Face Mask Detection System on Edge using Deep Learning and Hardware Accelerators. In Proceedings of the 2021 2nd International Conference on Communication, Computing and Industry 4.0 (C2I4), Bangalore, India, 16–17 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Jiang, X.; Gao, T.; Zhu, Z.; Zhao, Y. Real-time face mask detection method based on YOLOv3. Electronics 2021, 10, 837.

- Jovović, I.; Babić, D.; Čakić, S.; Popović, T.; Krčo, S.; Knežević, P. Face Mask Detection Based on Machine Learning and Edge Computing. In Proceedings of the 2022 21st International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 16–18 March 2022; pp. 1–4.

- Hubara, I.; Courbariaux, M.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks. Adv. Neural Inf. Process. Syst. 2016, 29, 2044.

- Umuroglu, Y.; Fraser, N.J.; Gambardella, G.; Blott, M.; Leong, P.; Jahre, M.; Vissers, K. FINN: A framework for fast, scalable binarized neural network inference. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 65–74.

- Rahmatulloh, A.; Gunawan, R.; Sulastri, H.; Pratama, I.; Darmawan, I. Face Mask Detection using Haar Cascade Classifier Algorithm based on Internet of Things with Telegram Bot Notification. In Proceedings of the 2021 International Conference Advancement in Data Science, E-learning and Information Systems (ICADEIS), Bali, Indonesia, 13–14 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Ruff, L.; Kauffmann, J.R.; Vandermeulen, R.A.; Montavon, G.; Samek, W.; Kloft, M.; Dietterich, T.G.; Müller, K. A Unifying Review of Deep and Shallow Anomaly Detection. CoRR 2020, 109, 756–795.

- Pimentel, M.A.F.; Clifton, D.A.; Clifton, L.A.; Tarassenko, L. A review of novelty detection. Signal Process. 2014, 99, 215–249.

- Boult, T.E.; Cruz, S.; Dhamija, A.R.; Günther, M.; Henrydoss, J.; Scheirer, W.J. Learning and the Unknown: Surveying Steps toward Open World Recognition. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: California, CA, USA, 2019; pp. 9801–9807.

- Aggarwal, C.C.; Yu, P.S. Outlier Detection for High Dimensional Data. In Proceedings of the 2001 ACM SIGMOD International Conference on Management of Data, Santa Barbara, CA, USA, 21–24 May 2001; Mehrotra, S., Sellis, T.K., Eds.; ACM: New York, NY, USA, 2001; pp. 37–46.

- Hendrycks, D.; Gimpel, K. A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks. arXiv 2016, arXiv:1610.02136.

- Liang, S.; Li, Y.; Srikant, R. Principled Detection of Out-of-Distribution Examples in Neural Networks. arXiv 2017, arXiv:1706.02690.

- Denouden, T.; Salay, R.; Czarnecki, K.; Abdelzad, V.; Phan, B.; Vernekar, S. Improving Reconstruction Autoencoder Out-of-distribution Detection with Mahalanobis Distance. arXiv 2018, arXiv:1812.02765.

- An, J.; Cho, S. Variational Autoencoder based Anomaly Detection Using Reconstruction Probability. Comput. Sci. 2015, 2015, 36663713.

- Abdelzad, V.; Czarnecki, K.; Salay, R.; Denouden, T.; Vernekar, S.; Phan, B. Detecting Out-of-Distribution Inputs in Deep Neural Networks Using an Early-Layer Output. arXiv 2019, arXiv:1910.10307.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Liu, W.; Wang, X.; Owens, J.; Li, Y. Energy-based out-of-distribution detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21464–21475.

- Zhai, S.; Cheng, Y.; Lu, W.; Zhang, Z. Deep structured energy based models for anomaly detection. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 20–22 June 2016; pp. 1100–1109.

- Riaz, M.; Tanveer, S.; Pamucar, D.; Qin, D.S. Topological Data Analysis with Spherical Fuzzy Soft AHP-TOPSIS for Environmental Mitigation System. Mathematics 2022, 10, 1826.

| Dataset | Year | Images (or Videos) | Class |
|---|---|---|---|
| WIDER FACE [16] | 2016 | 32,203 images | without mask |
| MAFA [17] | 2017 | 30,811 images | wearing mask |
| MFDD [18] | 2020 | 24,771 images | wearing mask |
| SMFRD [18] | 2020 | 500,000 images | wearing mask |
| RMFRD [18] | 2020 | 95,000 images | wearing mask: 5000 / without mask: 90,000 |
| Moxa3K [19] | 2020 | 3000 images | without mask: 9161 / wearing mask: 3015 |
| AIZOO [20] | 2021 | 7971 images | wearing mask: 4064 / without mask: 3894 |
| FMD [21] | 2021 | 7553 images | wearing mask: 3725 / without mask: 3828 |
| MAFA-FMD [22] | 2021 | 56,024 images | wearing mask: 28,233 / without mask: 26,463 / incorrect mask-wearing: 1388 |
| WMD [23] | 2021 | 7804 images | wearing mask: 26,403 |
| ViDMASK [24] | 2022 | 67 videos | wearing mask: 20,000 instances / without mask: 2500 instances |

| Category | Ref. & Publish Date | Datasets | Models | Detection Performance | Merits | Limitations |
|---|---|---|---|---|---|---|
| Traditional machine learning | October 2020, [25] | A combination of CASPEAL dataset, AISL dataset, INRIA dataset and RMFD dataset | Haar Wavelet, Local Binary Pattern (LBP) and Histogram of Oriented Gradient (HOG) based Adaptive Boostings and Cascade Classifiers | Accuracy of 86.9% | The work focused on masked multi-pose faces using traditional methods. | The detection accuracy was lower than that of neural networks, and the self-made feature pictures were limited. |
| | January 2021, [26] | A customized dataset of 2192 images | FMRN (a customized CNN) | Daytime accuracy of 95.8%, nighttime accuracy of 94.6% | The authors added a human skeleton analysis step to analyze human posture, and the influence of light on the detection system was considered. | The number of images in the dataset was limited. |
| Two-stage | July 2021, [27] | A customized mask-face dataset and video surveillance | ResNet50 [28], MobileNet [29] and AlexNet [30] | Accuracy of 98.2% | Besides mask-face detection, the identity of persons without a mask was confirmed. It required less memory and had low inference time and high accuracy. | Due to the addition of the RPN, the detection speed was not as fast as that of one-stage models. |
| One-stage | March 2021, [31] | A customized dataset of 4095 images | Transfer learning on MobileNetV2 [32] with an SSD [33] detector | Accuracy of 98% | Achieved high detection accuracy and could work on devices with limited computational resources. | The proposed method lacked consideration for crowded situations. |
| | June 2021, [34] | A customized dataset of 350 MB | Mask Detection Model (MDM) with YOLOv3 [35] detector | Average confidence levels of 97% (A), 96% (B) and 93% (C) | Separated the data into individual images (type A), groups of people (type B), and videos with groups of people (type C). | The study lacked comparison with other mask-face detection models. |
| | December 2021, [36] | A combination of 1916 masked and 1930 unmasked images | Stacked ResNet-50 [28], YOLOv5 and density-based spatial clustering of applications with noise (DBSCAN) | mAP of 98% | In addition to detecting masks, social distancing norms were also detected, and real-time detection was performed. | Dim light situations were not investigated in the scope of the research. |
| | January 2022, [37] | A customized dataset of 9000 images | YOLOv5 | Accuracy of 92% | The authors combined an attention mechanism with YOLOv5, thereby improving the accuracy of mask-wearing detection in dim light conditions. | The dataset did not contain pictures of multiple people for mask-face detection. |
| | February 2022, [38] | A customized dataset of 4000 images | A customized CNN model with ResNet-10 [28] architecture based on SSD | Accuracy of 98% | Compared with other network models, the accuracy rate was higher for a small model size. | This model did not consider mask-face detection in dim light situations. |
| | February 2022, [39] | WiderFace and MAFA | A customized framework "CenterFace" (CenterFace(MobileNet), CenterFace(ResNet18)) and an anchor-free detector | Without mask: precision of 99.3%, recall of 96.2%; wearing mask: precision of 99.7%, recall of 96.7% | They conducted ablation studies using different datasets and networks, and the size of the proposed model was small. | Surveillance video was not included in the dataset, and real-time detection was not considered. |
| Two-stage & One-stage | February 2021, [40] | A combination of MAFA, WIDER FACE and many manually prepared images | Faster R-CNN [14] and YOLOv3 [35] | Average Precision of 55% and 62% | Compared one-stage and two-stage models in mask detection for the first time and found that the two-stage model had higher accuracy while the one-stage model was faster. | There was a limited contribution of deep learning models to mask-face detection. |
| | May 2022, [24] | ViDMASK (proposed for the first time) | Mask R-CNN [9], YOLOv4 [41], YOLOv5 and YOLOR [42] | mAP of 92.4% in YOLOR | The research proposed a new dataset, ViDMASK, that is diverse in terms of pose, environment, image quality, and subject characteristics. | Compared with MOXA3K, it yielded better performance only with YOLOR and did not perform well with the other frameworks. |
| Classification | April 2021, [43] | SMFD | Customized CNN and VGG16 [44] based deep learning models | Accuracy of 97.42% and accuracy of 98.97% in test | The model added data augmentation to enlarge the training dataset. | A limited number of images were included in the dataset, and the model did not perform detection on video surveillance. |
| | May 2021, [45] | MFRD | A customized DenseNet201 model | F-Measure of 98% | It had higher detection accuracy with more parameters. | Only the front face was detected, and different face poses could not be detected. |
| | March 2022, [46] | FMD, FM, RMFRD | MobileNetV2 [32] followed by autoencoders | Precision of 99.96%, recall of 99.97%, F1-score of 99.97%, and accuracy of 99.98% | Ablation studies were conducted on nine network structures using three different datasets, and the proposed model was compared with other models. The proposed model size is small. | The model did not provide location information, as it only focused on classification. |
| | March 2022, [47] | RMFD | A customized deep CNN | Accuracy of 99.57% | The model achieved high accuracy. | The dataset did not include scenes with many people. |
| | March 2022, [48] | A customized mask-face dataset | SSDMNV2, LeNet-5, AlexNet [30], VGG-16 [44], ResNet-50 [28] and SVM | Accuracy of 98.7% | They compared multiple models to find the algorithm with the best accuracy while spending the least time during training and detection. | The proposed method was not described in detail, and the performance results were not clear enough for comparison. |
| | May 2022, [49] | A customized mask-face dataset | HIC-DEEP, using InceptionV3 [50] and evaluation of the distance algorithms (Euclidean, Spearman, and Pearson) | Accuracy of 60%, 78% and 85% | The architecture chose three different distance measure methods to classify images. | The accuracy of the proposed model was low. |

| Category | Ref. & Publish Date | Datasets | Models | Detection Performance | Merits | Limitations |
|---|---|---|---|---|---|---|
| Attention-based approach | March 2021, [60] | A customized dataset of 4672 images | A customized framework of Context-Attention R-CNN | mAP of 84.1% | The model improved the accuracy of mask-face detection by adding an attention module, decoupling branches, and adapting multiple extractors of the context features. | The three classes of data in the dataset were imbalanced, and the model size was not discussed. |
| | November 2021, [57] | Two publicly available datasets | A customized network FMD-Yolo | AP50 of 92.0% and 88.4% on the two datasets | Improved robustness, generalization ability and inference efficiency. | The accuracy for the incorrect mask-wearing class was lower than for the other two classes, and the model did not include the impact of different scenarios on mask-face detection. |
| | May 2022, [58] | A customized dataset of 11,000 images | SMD-YOLO based on YOLOv4-tiny [41] | mAP of 67.01% | The model significantly improved the detection ability of small targets in public, and had a fast processing speed for real-time detection. | The data imbalance effect was not considered. |
| | June 2022, [59] | A combination of RMFD, AFLW, and a web crawler | YOLOv5-CBD | mAP of 96.7% | This model reached high accuracy with potential for real-time detection. It was able to detect small-scale targets, which made it more effective than YOLOv5. | Only the overall performance across the three classes was provided, so each class could not be evaluated separately. |
| Data balancing-based approach | March 2021, [23] | Proposed datasets of WMD and WMC | Based on the Faster R-CNN [14] and InceptionV2 [63] in the first stage. The face masks were verified by a broad learning system. | Correct Mask: accuracy of 99.87%; Incorrect Mask_Chin: accuracy of 99.93%; Incorrect Mask_Mouth_Chin: accuracy of 97.47% | They considered both the impact on mask detection in different scenarios and situations where multiple people wear masks in small-size images. | Real-time detection in video surveillance was not tested. |
| | May 2021, [62] | A combination of their own photos, RMFD and MaskedFace-Net datasets | The combination of an improved CSPDarkNet53, the adaptive image scaling algorithm and the improved PANet structure based on YOLOv4 [41] | mAP of 98.3% at an IoU of 0.5 | The developed model had a high accuracy and high frame rate. The customized dataset included various head poses wearing masks and various situations of incorrect mask-wearing. | This study performed mask-face detection without the consideration of dim light conditions. |
| | August 2021, [61] | A customized dataset of 7892 images | Face detection algorithms in OpenCV & ResNet50 [28] and NASNetMobile [64] used for feature extraction and classification | Accuracy of 92% | Compared a lightweight network with a deep network. The numbers of samples of each class in the dataset are about the same. | Neglected the situations of mask-face detection in video surveillance. |
| | October 2021, [65] | A customized dataset of 3300 images and four videos | Faster R-CNN [14] and Inception V2 [63] | Accuracy of 99.8% | The algorithm successfully identified people who did not maintain physical distance and were too close to one another. It also took into account the angle of the camera. | Compared with the other two types of results, the accuracy of the incorrect mask-wearing class was not satisfactory. |
| Others | March 2021, [66] | A customized dataset of 52,635 images | Tweaked and modified feature extraction networks for tiny YOLOv1 [15], tiny YOLOv2 [52], tiny YOLOv3 [35] and tiny YOLOv4 [41] | mAP of 70.25% for M-T YOLOv4 | The proposed dataset contained a unique class of mask area, which enhanced the detection accuracy of small object targets. | There were large gaps between the accuracy of the three classes of detection, especially for incorrect mask-wearing. |
| | May 2021, [67] | A combination of 14,409 images from WIDER FACE, FMD and RMFD | Yolov4FaceMask | mAP of 88.82% | The research considered the detection of different types of face masks in different scenarios and input videos of different resolutions. | The detection accuracy was low. The number of samples in each class in the training dataset was not specified. |
| | August 2021, [68] | A customized dataset of 3200 images | CNN models of MobileNetV2 [32], Xception [11], InceptionV3 [50], ResNet-50 [28], NASNet [64], and VGG19 [44] with transfer learning | F1-score of 85% | In the dataset, the class of incorrect mask-wearing was subdivided into ten more specific types. | The detection accuracy of incorrect mask-wearing was low. |
| | October 2021, [69] | A customized dataset of 11,000 images | Tiny YOLO v4-SPP | mAP of 64.31% | In addition to the detection of the classes of masked faces, the mask area was also detected. | The detection accuracy was lower than other state-of-the-art methodologies, and the accuracy gap among categories was large. |
| | December 2021, [70] | ISL-UFMD and ISL-UFHD (proposed for the first time) | RetinaFace as the detector; ResNet50 [71], Inception-v3 [50], MobileNetV2 [32] and EfficientNet [56] as classifiers; and DeepHRNet [72] for person detection | Accuracy of 98.20% | The authors not only considered mask-face detection, but also the detection of face–hand interaction and social distance. | Compared with the other two categories, the class of incorrect mask-wearing had low accuracy. |
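
Several rows above report mAP or AP50, both of which depend on an intersection-over-union (IoU) threshold to decide whether a predicted box matches a ground-truth face (for example, "IoU of 0.5" in the row for [62]). The sketch below shows only that matching criterion, assuming boxes in `(x1, y1, x2, y2)` corner format; the helper names `iou` and `is_true_positive` are hypothetical, and full mAP evaluation additionally involves class labels, confidence ranking, and one-to-one matching, which are omitted here.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction counts as a match when its IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))                  # overlap 1, union 7
print(is_true_positive((0, 0, 10, 10), (0, 0, 10, 8)))  # IoU is 0.8
```

Small faces are penalized heavily by this criterion, since a shift of a few pixels removes a large share of the overlap, which is one reason the small-target results in the table are hard to compare across datasets.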

| Category | Ref. & Publish Date | Hardware Platform | Speed Performance/ Detection Performance | Datasets | Models | Merits | Limitations |
|---|---|---|---|---|---|---|---|
| Hardware Analysis | May 2022, [75] | a. Raspberry Pi 4 computational platform + Google Coral Edge TPU + 8-bit quantized model, b. Raspberry Pi 4 computational platform + Intel Neural Compute Stick 2 (INCS2) + compressed model in float16 precision, c. Jetson Nano + 128 Maxwell cores + compressed model in float32 precision | a. FPS of 19, b. FPS of 18, c. FPS of 22/ a. Accuracy of 90.4%, b. Accuracy of 94.3%, c. Accuracy of 94.2% | 3843 samples: 1915 wearing masks and 1928 without masks | MaskDetect (a customized CNN model) | Compared three well-designed embedded systems in terms of both accuracy and real-time performance. Proposed a new compressed CNN model to minimize the cost. | Provided no hardware implementation details. Has yet to be integrated into a complete mask-face detection system. |
| | July 2021, [76] | PYNQ-Z2 all-programmable SoC platform | FPS of 45.79, Detection delay of 0.13 s/ Precision of 96.5% | Medical mask dataset [77] | Two concatenated Haar Cascade Classifiers (HCC) [78] | Carefully analyzed the video delay, which derives from both hardware and software. Used the multithreading technique to avoid the delay caused by the algorithm. | The mask-wearing status was judged only by mouth detection, in which the absence of a mouth in the detected face was considered as mask-wearing. Such a method seems unreasonable in cases such as incorrect mask-wearing with the nose exposed or obstructed faces without masks. |
| System Packaging | March 2022, [79] | Raspberry Pi 4 | Latency of around 90 ms (no specific value provided)/ Accuracy of 99.07%, Recall of 98.91% | 1376 images: 686 wearing masks and 690 without masks | A customized CNN model | Used a motor controller as the gate controller and a buzzer as an alarm to simulate a real environment in public transportation. | There was no detailed description of the proposed model structure. The performance results were confusing (many different experimental results were presented in the original paper). |
| | October 2020, [80] | MiniPC embedded with GTX 1060 Nvidia GPU | FPS of 11.1/ - | - | YOLOv4 [41] | The proposed system was implemented in a real case at Batam Island. Provided photographs of application scenarios similar to a customs security check. | The lack of reported detection performance made it hard to evaluate the quality of the whole system. |
| | December 2020, [81] | Personal devices (PC, tablets, mobile phones, etc.) | FPS of iPad Pro (2016 A9X): 4.12, iPhone 11 (2019 A13): 8.57, MacBook Pro 15 (2019 i7-9750H): 20.36/ mAP of 89% | A combined dataset of 4065 images from MAFA, 3894 from WIDER FACE, and 1138 from the internet | YOLO-Fastest [15] | The proposed mask-face detection system required no additional acceleration hardware and was OS- and device-agnostic. It was accessible to any user at low cost. | Detection performance was low due to the lightness of the model, and the model inference completely depended on local CPUs. |
| | November 2021, [82] | Raspberry Pi tiny computer | -/ Accuracy of 99.22% | A customized dataset of 1376 images: 690 images wearing masks and 686 images without masks | A CNN-based neural network | The highly integrated system had many extended functions such as body temperature measurement and gate opening control to simulate an IoT-enabled smart door. | The dataset was too small, and the deep learning model structure was not specified. The mask detection was based on a classification model, so it was unable to recognize multiple persons with or without masks. No real-time performance was evaluated. |
| | April 2022, [83] | Raspberry Pi 4 Model B (8 GB RAM), Raspberry Pi camera, Raspberry Pi display, MLX90614 non-contact infrared temperature sensor, SG 90 mini servo motor | -/ Accuracy of 97.0% | 2902 images of mask-wearing and 2872 images of no mask or incorrect mask-wearing from [84] | MobileNetV2 [32] & Haar Cascade Algorithm | The whole system was highly integrated. The description and visualization of its hardware system were provided in detail. Used a hybrid method which combines traditional machine learning and deep learning models to perform the mask-face detection task. | The proposed model was lightweight, but no speed performance analysis was performed to prove the value of its hybrid approach. The performance comparison presented in the paper with other mask-face detection methods was invalid because they were evaluated on different datasets. |
| | November 2021, [85] | A 5-megapixel camera, Raspberry Pi 3 Model B+, buzzer and a monitor screen | FPS of 5/ Success rate of 88.89% | - | Multiple Haar Cascade Classifiers (HCC) [78] | The whole system was low-cost. Compared with [76], Haar Cascade Classifiers were used to detect both the nose and lips, so the proposed method can detect incorrect mask-wearing faces. | The speed performance was too low to perform mask-face detection in real time. There was no dataset source provided; therefore, the accuracy was not convincing. |
| Model Compression | October 2020, [86] | Coral Dev Board embedded with a Google Edge TPU coprocessor | Latency of 6.4 ms/ Accuracy of 58.4% | A customized dataset of 2127 images | MobileNetV2 [32] + SSD | Showed the complete process of shrinking the model size. | The accuracy dropped by about 6 percentage points when comparing the 8-bit integer model with the original 32-bit model, according to the experiment with the 1NN model on CPU in the original paper. |
| | September 2020, [87] | Pynq Z1 platform (with an ARM processor and a single-chip FPGA) | FPS of 16/ Precision of 90.7%, Recall of 92.3% | A combination of Real-World Masked Face Dataset (RMFD), Masked Face Detection Dataset (MFDD), Simulated Masked Face Recognition Dataset (SMFRD), and MAsked FAces (MAFA) dataset | Pynq-YOLO-Net (a pruned and quantized YOLO model) | Lower decrease in performance compared to other state-of-the-art models while applying a dedicated compression strategy to the model. | Lacked a comparison of acceleration performance before and after compression. The effectiveness of the proposed compression strategy was not illustrated. |
| | May 2021, [88] | A small development board with an ARM Cortex-M7 microcontroller clocked at 480 MHz and 496 KB of framebuffer RAM | FPS of 30/ Accuracy of 99.83% | 14,211 images of wearing mask and without mask from Kaggle and OpenCV | A customized CNN model | The proposed model was extremely lightweight at 138 KB after integer quantization. Outperformed SqueezeNet, which was of a similar size. | The model only acted as a two-class classifier, not a detector. It could not be developed to perform two-class detection tasks due to the choice of BinaryCrossentropy loss. |
| | June 2021, [89] | A low-power, real-time embedded FPGA accelerator based on the Xilinx FINN architecture | FPS of 6460, Latency of 0.31 ms/ Accuracy of 93.94% | MaskedFace-Net dataset with a detailed split into 4 classes: Correctly Masked Face Dataset (CMFD), Incorrectly Masked Face Dataset (IMFD) Nose, IMFD Chin, and IMFD Nose and Mouth | BinaryCop, a binary neural network (BNN) classifier | The system was capable of executing a finer classification. The inference was fast with the combination of a Binary Neural Network (BNN) and an acceleration framework. The embedded system hardware required low power. | Remains to be tested on platforms other than embedded FPGAs. |
| | June 2022, [90] | Raspberry Pi 4 | FPS of 1.97/ mAP of 57.40% | Mask-Detection-Dataset [91] | YOLOv4-tiny [41] | Provided an analysis of multiple strategies of model pruning and quantization. Achieved a great improvement in FPS while a decrease in mAP was observed. | The pruning and quantization analysis was not theoretical; thus, it was hard to say that the best strategy for YOLOv4-tiny could be generalized to other deep learning models. The FPS was still low even after compression, showing that the system is not yet suited to real-use implementation. |
| Others | December 2021, [92] | a. Nvidia Jetson Nano, b. Jetson Xavier NX | a. FPS of 12 and 8 for YOLOv5s and YOLOv5l; b. FPS of 30 and 24 for YOLOv5s and YOLOv5l/ mAP of 86.43 and 92.49 for YOLOv5s and YOLOv5l | A customized dataset of 3000 images divided into 3 classes (mask-wearing, no mask and incorrect mask-wearing) | YOLOv5s, YOLOv5l | The proposed system was capable of detecting a third class of improper mask-wearing. | There was no contribution of fitting deep learning models to the hardware system, leading to a high cost of hardware. |
| | October 2020, [74] | Raspberry Pi 4 with Intel Neural Compute Stick 2 | -/ Accuracy of 88.7% | Prajna Bhandary dataset of 1376 images: 690 images wearing masks and 686 images without masks | MobileNet [29] | Presented a comparison between deep learning models (MobileNet) and traditional machine learning methods (SVM and KNN). | The model was trained with a relatively small dataset. Hardware performance was not tested. |
| | March 2021, [93] | Raspberry Pi | System latency time of 0.13 s/ mAP of 73.7% | Properly Wearing Masked Face Detection Dataset (PWMFD) including 9205 images with 3 categories (mask-wearing, no mask and incorrect mask-wearing) | Squeeze and Excitation (SE)-YOLOv3 | Established a complete detection system as an access control gate. Able to detect a third class of incorrect mask-wearing. | The proposed model SE-YOLOv3 had more parameters than YOLOv3, which negatively affected the inference performance. Further experiments on other types of hardware devices and model simplification remained to be conducted. |
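
Several of the model-compression entries above rely on 8-bit or integer quantization. As a rough illustration of what that step does to a set of weights, the sketch below applies symmetric per-tensor quantization to a short list of floats. This is a generic textbook scheme, not the toolchain of any cited work (the table does not specify those), and the names `quantize_int8` and `dequantize` as well as the example weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [x * scale for x in q]


weights = [0.82, -0.31, 0.05, -1.27, 0.0]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale. Whether that error costs a fraction of a point or several points of accuracy depends on the model, which is consistent with the spread of results reported in the table.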

| Experiment | Train | Test | OOD-Mask | Wearing_MASK Precision (%) | Wearing_MASK Recall (%) | Wearing_MASK F1 (%) | Without_MASK Precision (%) | Without_MASK Recall (%) | Without_MASK F1 (%) | Incorrect_MASK-Wearing Precision (%) | Incorrect_MASK-Wearing Recall (%) | Incorrect_MASK-Wearing F1 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2-cls | 2-cls | ✘ | 74.69 | 82.06 | 78.21 | 72.20 | 84.33 | 77.80 | - | - | - |
| 2 | 2-cls | 3-cls | ✘ | 81.41 | 72.65 | 76.96 | 78.65 | 78.36 | 78.50 | 40.40 | 25.48 | 31.25 |
| 3 | 2-cls | 3-cls | ✔ | 83.08 | 72.65 | 77.51 | 82.04 | 75.00 | 78.36 | 53.18 | 53.60 | 55.76 |
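
The F1 columns in the table above are the harmonic mean of the corresponding precision and recall columns. A minimal sketch of that relation follows, using two precision/recall pairs copied from the table as inputs; the function name `f1_score` is hypothetical and the code is not part of the original evaluation pipeline.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Experiment 2, incorrect mask-wearing: precision 40.40, recall 25.48.
print(round(f1_score(40.40, 25.48), 2))  # 31.25

# Experiment 3, without mask: precision 82.04, recall 75.00.
print(round(f1_score(82.04, 75.00), 2))  # 78.36
```

Because the harmonic mean always lies between its two inputs, recomputing F1 this way is a quick consistency check for any row of a results table.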

| Experiment | GPU | FPS |
|---|---|---|
| 1 | 2080Ti | 76.18 |
| 2 | 2080Ti | 65.68 |
| 3 | 2080Ti | 64.51 |
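
The FPS figures above can be turned into approximate per-frame latencies, which makes the cost of enabling OOD-Mask (experiment 3 versus experiment 2, both tested on three classes) easier to read. The sketch assumes that FPS was measured as single-stream throughput, so that latency is simply the reciprocal; this does not hold for pipelined or batched systems. The helper name `latency_ms` is hypothetical.

```python
def latency_ms(fps):
    """Approximate per-frame latency in milliseconds for a given FPS."""
    return 1000.0 / fps


# FPS values copied from the table above (all measured on a 2080Ti).
fps_by_experiment = {1: 76.18, 2: 65.68, 3: 64.51}

for experiment, fps in fps_by_experiment.items():
    print(f"experiment {experiment}: {latency_ms(fps):.2f} ms per frame")

# Extra time per frame when OOD-Mask is enabled in the three-class test.
extra_ms = latency_ms(fps_by_experiment[3]) - latency_ms(fps_by_experiment[2])
relative_fps_drop = 1 - fps_by_experiment[3] / fps_by_experiment[2]
print(f"OOD-Mask overhead: {extra_ms:.2f} ms, {relative_fps_drop:.1%} lower FPS")
```

Under this assumption, the OOD-Mask step adds well under one millisecond per frame on this GPU.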

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Y.; Xu, Y.; Zhuang, H.; Weng, Z.; Lin, Z. Machine Learning Techniques and Systems for Mask-Face Detection—Survey and a New OOD-Mask Approach. Appl. Sci. 2022, 12, 9171. https://doi.org/10.3390/app12189171
