Abstract

Content-based image retrieval (CBIR) commonly relies on fine-tuning pre-trained off-the-shelf features. CBIR is an intuitive approach to image retrieval, but fine-tuning still requires labeled datasets, and annotation is inefficient. Therefore, we explored an unsupervised model for extracting features of image contents. We used a variational auto-encoder (VAE) with expanded channels in its neural network and studied the activations of the layer outputs. In this study, the channel expansion method improved image retrieval by exploring more kernels and by selecting the layer whose activations best cover the object region. The experiments included a comparison of channel expansion and a visualization of each layer in the encoder network. The proposed model achieved a mean average precision (mAP) of 52.7% on the MNIST dataset, outperforming the existing VAE (36.5%).

1. Introduction

The amount of image data has been increasing tremendously, and image retrieval is an essential technique for utilizing the huge databases on the internet. These data are extensive and have diverse characteristics; thus, image retrieval is a challenging field. The traditional method, called text-based image retrieval (TBIR), utilizes metadata, including brief descriptors such as time-stamps, keywords, and related words explaining image contents. Creating metadata is highly inefficient. Moreover, metadata carry ambiguous and subjective meanings and cannot always represent the obvious features of images. In contrast, content-based image retrieval (CBIR) is based on the visual content of an image, such as its color, texture, or shape. CBIR can also be used in many other fields, such as criminal investigation [1], medical imaging [2], and remote sensing image analysis [3].

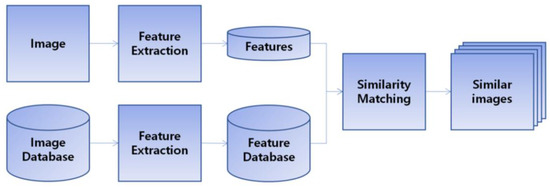

Figure 1 shows a general diagram of CBIR. It consists of a feature extraction stage and a similarity measurement stage for searching for relevant images. All images are transformed into feature vectors (representations) and compared in the vector space. This space is continuous, and the similarity s is measured as a distance within it. The multi-dimensional vector space represents a lower level of abstraction than human recognition, and the gap between vector features and human recognition is called the “semantic gap”. In other words, the ultimate objective is to reduce the semantic gap between human and computer vision [4].
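The two stages of Figure 1 can be sketched in a few lines. This is a minimal illustration, not the authors' code: `extract_features` is a hypothetical placeholder that merely flattens pixels (in the proposed system this role is played by the trained VAE encoder), and Euclidean distance is assumed as the measure s purely for illustration.

```python
import numpy as np

def extract_features(images: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a trained encoder: flattens each image into a vector."""
    return images.reshape(len(images), -1).astype(np.float64)

def rank_gallery(query_vec: np.ndarray, gallery_vecs: np.ndarray) -> np.ndarray:
    """Return gallery indices ordered from most to least similar (smallest distance first)."""
    distances = np.linalg.norm(gallery_vecs - query_vec, axis=1)
    return np.argsort(distances, kind="stable")

# Toy gallery of four 2 x 2 "images" and one query image.
gallery = np.array([
    [[0, 0], [0, 0]],
    [[1, 1], [1, 1]],
    [[5, 5], [5, 5]],
    [[1, 1], [1, 2]],
])
query = np.array([[1, 1], [1, 1]])

gallery_feats = extract_features(gallery)       # feature extraction stage (offline)
query_feat = extract_features(query[None])[0]   # feature extraction stage (online)
ranking = rank_gallery(query_feat, gallery_feats)  # similarity measurement stage
print(ranking)  # [1 3 0 2]
```

In practice the gallery features are computed once and stored, so only the query passes through the extractor at search time.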

Figure 1.

General content-based image retrieval framework.

Traditional hand-crafted features have lower accuracy despite the heavy computation involved, whereas recent deep learning-based research shows notable results. Therefore, most computer vision studies focus on convolutional neural networks (CNNs). Since the effectiveness of off-the-shelf features from pre-trained classification models has been demonstrated [5], fine-tuning has become a major approach in image retrieval. Fine-tuning requires labeled datasets, which makes it a supervised methodology. In contrast, we studied an unsupervised method that handles massive data with a simple process and no annotation. Our model is based on a general self-supervised model, the variational auto-encoder (VAE). This paper proposes a channel expansion VAE that enhances the feature extraction of image content by expanding the channels of its layers. This allows the network to search more kernels and extract better features. A VAE generates a continuous latent space in which similar images are placed close to each other. The encoder of the VAE embeds features into the latent space, and similarity can be measured with a distance metric. The channel expansion VAE (CEVAE) achieves a competitive mAP compared with the existing VAE for CBIR and traditional unsupervised methods. Furthermore, we studied activation maps derived from the latent-space output on a more complex dataset.

2. Related Work

Because CNN features work effectively for general visual recognition, a broad range of vision tasks has been studied with neural networks [6]. Following this trend, image retrieval was also studied using CNN layer outputs as feature descriptors. The paper “Neural Codes for Image Retrieval” [7] was the first to apply a pre-trained classification model to CBIR. The outputs of the network layers serve as codes that describe the images. Afterward, studies based on off-the-shelf features advanced image retrieval.

In 2015, sum-pooled convolutional features (SPoC) [8] were proposed as a spatial weighting method based on the observation that the region of interest is generally located near the center of the image. Thus, a Gaussian filter was used as the weighting function, and the descriptor was obtained by sum-pooling the convolutional layer. Cross-dimensional weighting (CroW) [9], proposed in 2016, considered channel-wise as well as spatial weighting. The spatial weight was based on the activation map, and the channel weight was based on sparsity, that is, the proportion of non-zero responses in each channel k, with sparser channels receiving larger weights. In the following year, selective convolutional descriptor aggregation (SCDA) [10] was proposed, which mainly deals with the selective region. SCDA sums the activations across channels into an aggregation map and thresholds it at its mean to generate a regional mask. Finally, the authors concatenated the global max-pooling and average-pooling features as the descriptor. Part-based weighting aggregation (PWA) [11] introduced the concept of a “probabilistic proposal” by selecting N kernels with larger variance in the off-line stage; the probabilistic proposals then highlight the discriminative regions in the image. Generalized-mean pooling (GeM) [12] generalizes pooling with a coefficient so that max-pooling and average pooling are both special cases. The size of the activated region varies with the coefficient, which can be learned so that pooling takes part in both feature extraction and representation. The regional maximum activation of convolutions (RMAC) descriptor [13], one of the most representative descriptors, uses classification models and aggregates the maximum activations of multiple regions. In a recent work, Heejae [14] proposed a framework that combines multiple global descriptors exploiting different feature properties. A study by Giorgos [15] dealt with local descriptors that outperformed global descriptors in large-scale retrieval.
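To make the pooling descriptors above concrete, the following NumPy sketch implements global max pooling (MAC), SPoC-style Gaussian-weighted sum pooling, and GeM pooling on a single feature map of shape (C, H, W). The function names are hypothetical, the SPoC Gaussian width (one third of the distance from the center to the nearest border) is our assumption, and post-processing such as PCA whitening is omitted.

```python
import numpy as np

def mac(fmap: np.ndarray) -> np.ndarray:
    """Global max pooling (MAC): maximum over spatial positions, per channel."""
    return fmap.max(axis=(1, 2))

def spoc(fmap: np.ndarray) -> np.ndarray:
    """SPoC-style sum pooling with a centered Gaussian spatial weight (assumed width)."""
    _, h, w = fmap.shape
    ys = np.arange(h) - (h - 1) / 2
    xs = np.arange(w) - (w - 1) / 2
    sigma = min(h, w) / 2 / 3  # one third of the center-to-nearest-border distance
    weight = np.exp(-(ys[:, None] ** 2 + xs[None, :] ** 2) / (2 * sigma ** 2))
    return (fmap * weight[None]).sum(axis=(1, 2))

def gem(fmap: np.ndarray, p: float = 3.0, eps: float = 1e-6) -> np.ndarray:
    """Generalized-mean pooling: p = 1 is average pooling; larger p approaches max pooling."""
    clipped = np.clip(fmap, eps, None)  # GeM assumes non-negative (post-ReLU) activations
    return np.mean(clipped ** p, axis=(1, 2)) ** (1.0 / p)

def l2_normalize(v: np.ndarray) -> np.ndarray:
    """Unit-length descriptor, so that dot products become cosine similarities."""
    return v / np.linalg.norm(v)

fmap = np.arange(1, 33, dtype=float).reshape(2, 4, 4)  # toy post-ReLU feature map
print(mac(fmap))            # [16. 32.]
print(gem(fmap, p=1.0))     # equals average pooling
print(l2_normalize(spoc(fmap)))
```

Because the power mean is non-decreasing in p, GeM interpolates between the average-pooled and max-pooled values of each channel.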

The abovementioned papers are based on fine-tuning and feature aggregation, which rely on a supervised methodology to shift domains. In another line of research, generative models, such as the generative adversarial network (GAN) [16] and the VAE [17], are used as unsupervised methods. In 2019, unsupervised adversarial image retrieval (UAIR) [18] was proposed to learn the data distribution via an adversarial feedback structure for CBIR. UAIR takes features extracted from various types of representation, such as hand-crafted or learning-based features. The framework then generates a similarity ranking through discrimination. On the other hand, some papers applied the VAE to CBIR. Torrez [19] designed an image retrieval model with a VAE and analyzed it on the MNIST dataset [20], reporting a mean average precision (mAP) of 16%. Sarmiento [21] extracted latent codes through a VAE and used them for image retrieval, recommendation, and generation on a fashion product dataset. Rupapara [22] studied an auto-encoder implementation on MNIST in detail. Moreover, Manish [23] addressed the search for binary diagram images with a VAE-based model. We propose a new channel expansion with a bottleneck effect and regional masking in convolutional networks to improve the VAE for CBIR.

3. Method

This section briefly describes the VAE in order to explain the overall structure of the proposed feature extraction method. The feature aggregation method for selective region masking is also described.

3.1. Variational Auto-Encoder

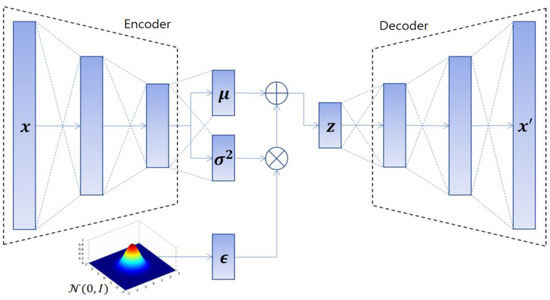

The auto-encoder consists of an encoder that extracts features from the query image and a decoder that reconstructs the image. Every image can be represented by a compressed vector in the latent space. The VAE has the same structure, but it uses variational inference to model the data distribution. Its structure is shown in Figure 2.

Figure 2.

Variational auto-encoder (VAE) structure.

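The VAE includes a layer that approximates the output of the encoder with a normal distribution. The objective is to maximize the marginal log-likelihood of the data, which can be formulated as follows:

$$\log p_\theta(x) = \log \int p_\theta(x \mid z)\, p(z)\, dz \tag{1}$$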

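Here, $q_\phi(z \mid x)$ and $p_\theta(x \mid z)$ correspond to the encoder and decoder, respectively. In principle, the data distribution can be estimated from $p_\theta(x \mid z)$ and the prior $p(z)$, but the marginalization over $z$ is intractable. Thus, the model adopts variational inference and approximates the true posterior $p_\theta(z \mid x)$ with $q_\phi(z \mid x)$. The discrepancy between the two distributions is measured by the Kullback–Leibler divergence:

$$D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\big) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log q_\phi(z \mid x) - \log p_\theta(z \mid x)\big] \tag{2}$$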

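This divergence is non-negative, and rearranging it yields the evidence lower bound (ELBO):

$$\log p_\theta(x) \ge -D_{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big) + \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] \tag{3}$$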

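Equation (3) means that the objective can be maximized by maximizing the right-hand side. In the ELBO, the first term is the latent distribution regularization loss, and the second term is the reconstruction loss. Consequently, the final objective loss, the negative ELBO, is written as follows:

$$\mathcal{L}(\theta, \phi; x) = D_{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big) - \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] \tag{4}$$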

Usually, the approximate distribution of a VAE is Gaussian, and training the network requires the reparameterization trick [17], because the reconstruction term $\mathbb{E}_{q_\phi(z \mid x)}[\log p_\theta(x \mid z)]$ is computed by sampling from $q_\phi$, and sampling is not differentiable with respect to the encoder outputs $\mu$ and $\sigma$. Therefore, sampling from a standard normal distribution is separated from the encoder, which makes back-propagation possible. The latent variable $z$ can be expressed as follows:

$$z = \mu + \sigma \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \tag{5}$$
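The final objective loss and the reparameterization trick can be sketched numerically as below. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the prior is N(0, I), the encoder is assumed to output a mean and a log-variance, the KL term uses the standard closed form for diagonal Gaussians, and the reconstruction term assumes a Bernoulli decoder (binary cross-entropy), which the paper does not specify. A real model would obtain gradients from an autodiff framework.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I): the randomness is isolated in eps, so z is
    a deterministic, differentiable function of the encoder outputs mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def gaussian_kl(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

def bernoulli_nll(x, x_hat, eps=1e-7):
    """Reconstruction term -log p(x|z) for an assumed Bernoulli decoder (binary cross-entropy)."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    return -np.sum(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat), axis=-1)

def vae_loss(x, x_hat, mu, log_var):
    """Negative ELBO: KL regularization term plus reconstruction term, averaged over the batch."""
    return float(np.mean(gaussian_kl(mu, log_var) + bernoulli_nll(x, x_hat)))

rng = np.random.default_rng(0)
mu = rng.standard_normal((4, 8))                 # toy batch of 4, latent dimension 8
log_var = rng.standard_normal((4, 8)) * 0.1
z = reparameterize(mu, log_var, rng)             # would be fed to the decoder
x = (rng.random((4, 16)) > 0.5).astype(float)    # toy binary "images" with 16 pixels
x_hat = rng.random((4, 16))                      # stand-in for the decoder output
print(z.shape, vae_loss(x, x_hat, mu, log_var))
```

The KL term vanishes exactly when the encoder outputs the prior (mu = 0, log_var = 0), which is what pulls latent codes toward a continuous, well-organized space.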

The VAE learns the data distribution and embeds image features into the latent space. The continuity of the multi-dimensional latent space defines data similarity and allows images to be generated. That is, the VAE can obtain a compressed multi-dimensional vector representation from hundreds of thousands of pixel values, which enables faster retrieval from enormous databases. In particular, the variances of certain dimensions are prominent in the latent distribution, which implies that the latent vector can be compressed into a lower-dimensional vector with a small performance loss. Therefore, the VAE has an advantage in terms of compression.
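As one simple way to exploit this property, a latent vector can be shortened by keeping only the dimensions with the largest variance over the gallery. The selection rule here is an assumption for illustration (the paper does not state how dimensions were removed), and `top_variance_dims` and `compress` are hypothetical helpers.

```python
import numpy as np

def top_variance_dims(latents: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k latent dimensions with the largest variance across the gallery."""
    variances = latents.var(axis=0)
    return np.argsort(variances)[::-1][:k]

def compress(latents: np.ndarray, dims: np.ndarray) -> np.ndarray:
    """Keep only the selected dimensions; apply the same dims to queries and gallery."""
    return latents[:, dims]

# Toy gallery of three 4-dimensional latent vectors.
gallery_latents = np.array([
    [0.0, 0.0, 0.0, 0.0],
    [0.1, 5.0, 0.2, 3.0],
    [-0.1, -5.0, -0.2, -3.0],
])
dims = top_variance_dims(gallery_latents, k=2)
print(dims)                                   # [1 3]
print(compress(gallery_latents, dims).shape)  # (3, 2)
```

A learned projection such as PCA is a common alternative; simple selection has the advantage that no extra transform has to be stored or applied at query time.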

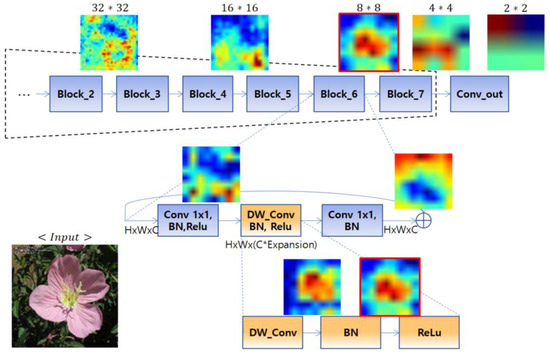

3.2. Channel Expansion

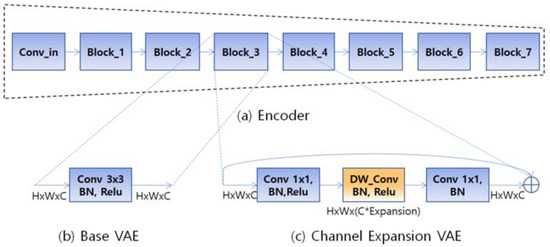

Given the importance of channels (dimensions), studies of instance retrieval have focused on weighting channel and spatial information. In this work, we aimed to expand the channels and search for more features to better represent an image. The more channels a network has, the more diverse the features it can search. Since kernels learn different patterns during training, more channels have a better chance of capturing key features. Thus, we hypothesized that exploring more channels would improve feature extraction performance. However, expansion increases complexity; therefore, we expanded the number of channels only temporarily. Hence, the network has a bottleneck structure that repeats an expansion in the middle of each block and a reduction between blocks, which allows it to explore and select more kernels. Figure 3 depicts the encoder structure with the expansion in comparison with the normal VAE. The structure of the decoder is symmetric to that of the encoder.

Figure 3.

Channel Expansion VAE structure: (a) encoder with block unit, (b) VAE with convolution, (c) VAE with channel expansion. BN is batch normalization and DW_Conv is depth-wise convolution.

In Figure 3, the encoder is split into blocks, and (b) the base VAE and (c) the channel expansion VAE have different elements in each block. In (b) the base VAE, each block consists of convolution, batch normalization, and ReLU. In contrast, (c) the channel expansion VAE (CEVAE) has a depth-wise convolution with 1 × 1 convolutions at the front and the rear. Depth-wise convolution replaces standard convolution in order to reduce the computation caused by the expansion.
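The computational motivation for the depth-wise convolution can be checked by counting weights. The sketch below assumes 3 × 3 kernels, ignores biases and batch-normalization parameters, and uses a hypothetical 192-channel block expanded by a factor of 6 to 1152 channels (the channel count the paper reports at block_6); the widths are illustrative and not taken from Table 1.

```python
def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a standard k x k convolution (biases and BN parameters ignored)."""
    return k * k * c_in * c_out

def expansion_block_params(c_in: int, c_out: int, t: int = 6, k: int = 3) -> int:
    """Weights of a 1x1 expansion -> k x k depth-wise -> 1x1 projection block."""
    hidden = t * c_in
    expand = c_in * hidden        # 1x1 conv: c_in -> t * c_in
    depthwise = k * k * hidden    # one k x k filter per expanded channel
    project = hidden * c_out      # 1x1 conv: t * c_in -> c_out
    return expand + depthwise + project

def expansion_with_standard_conv_params(c_in: int, c_out: int, t: int = 6, k: int = 3) -> int:
    """Same expansion, but with a standard k x k convolution in the middle."""
    hidden = t * c_in
    return c_in * hidden + k * k * hidden * hidden + hidden * c_out

c = 192  # hypothetical block width; 6 * 192 = 1152 expanded channels
print(conv_params(c, c))                          # 331776
print(expansion_block_params(c, c))               # 452736
print(expansion_with_standard_conv_params(c, c))  # 12386304
```

Under these assumptions, the expanded block with a depth-wise middle layer costs about as much as a single standard convolution, whereas the same expansion with a standard 3 × 3 convolution would need more than an order of magnitude more weights.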

Surprisingly, we found a similar block module in MnasNet [24], whose architecture was obtained by reinforcement learning-based network architecture search. This is consistent with our intuition in terms of accuracy and inference. However, we did not vary the expansion per layer as MnasNet does, because our network was not obtained by automatic architecture search. In addition, unlike the reference, our model has an auto-encoder structure. The expansion coefficient in our proposed model was fixed at 6; details of the model are shown in Table 1. Consequently, the blocks of this model are replaced with the expansion module shown in Figure 3c.
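A forward pass through one expansion module can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' implementation: the channel widths, input size, and stride are hypothetical, batch normalization is replaced by a simplified per-channel standardization on a single image, no ReLU follows the final 1 × 1 projection (a MnasNet-style linear bottleneck, which we assume), and no residual connection is used.

```python
import numpy as np

def pointwise_conv(x, weight):
    """1 x 1 convolution. x: (C_in, H, W); weight: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def depthwise_conv3x3(x, kernels, stride=1):
    """3 x 3 depth-wise convolution (cross-correlation) with zero padding 1.
    x: (C, H, W); kernels: (C, 3, 3), one filter per channel."""
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    h_out = (h - 1) // stride + 1
    w_out = (w - 1) // stride + 1
    out = np.zeros((c, h_out, w_out))
    for i in range(3):
        for j in range(3):
            window = padded[:, i:i + stride * (h_out - 1) + 1:stride,
                            j:j + stride * (w_out - 1) + 1:stride]
            out += kernels[:, i, j, None, None] * window
    return out

def normalize(x, eps=1e-5):
    """Simplified stand-in for batch normalization on one image: per-channel
    standardization over spatial positions, without learned scale and shift."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    return np.maximum(x, 0.0)

def expansion_block(x, w_expand, dw_kernels, w_project, stride=1):
    """1x1 expand -> norm -> ReLU -> 3x3 depth-wise -> norm -> ReLU -> 1x1 project -> norm."""
    h = relu(normalize(pointwise_conv(x, w_expand)))
    h = relu(normalize(depthwise_conv3x3(h, dw_kernels, stride)))
    return normalize(pointwise_conv(h, w_project))  # no ReLU after projection (assumed)

rng = np.random.default_rng(0)
c_in, c_out, t = 16, 24, 6      # t = 6 matches the paper's expansion coefficient
hidden = t * c_in               # 96 temporarily expanded channels
x = rng.standard_normal((c_in, 32, 32))
w_expand = rng.standard_normal((hidden, c_in)) * 0.1
dw_kernels = rng.standard_normal((hidden, 3, 3)) * 0.1
w_project = rng.standard_normal((c_out, hidden)) * 0.1
y = expansion_block(x, w_expand, dw_kernels, w_project, stride=2)
print(y.shape)  # (24, 16, 16)
```

The expanded 96-channel tensor exists only inside the block; the block boundary carries just `c_out` channels, which is the temporary expansion and reduction described above.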

Table 1.

Encoder in channel expansion VAE.

The network size was designed for a 128 × 128 image dataset, and “layer out” is the output layer, consisting of a fully convolutional layer and global max pooling. The output of the encoder was a 512-dimensional latent vector.

4. Experiment

Our experiments were conducted on Windows 10 with an NVIDIA GeForce GTX 1080 Ti. This section describes the datasets and analyzes the mean average precision (mAP). We also report compression performance and visualize regional masking.

4.1. Dataset

4.1.1. Oxford Flowers 102 Dataset

This dataset [25] contains 102 categories of flowers common in England and was prepared for classification. Each class contains 40 to 258 images. For the retrieval test, we used a total of 7169 images from the training and test sets as the gallery set (database) and 1020 images from the validation set as the query set (test set). However, some classes contained visually different images, which made it difficult to determine whether those images belonged to the same class. Thus, we excluded 21 classes to enable semantically similar image retrieval.

4.1.2. MNIST Dataset

This dataset includes 70,000 images of handwritten digits from 0 to 9. All images belong to 10 classes and are 28 × 28 grayscale images. For comparison with previous papers, we randomly picked 100 images per class as the test query set. The remainder of the dataset formed the database (gallery set).
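The query/gallery split can be sketched as follows. The function name, the random seed, and the balanced toy labels are assumptions for illustration; real MNIST classes are not exactly balanced, and the authors' sampling code is not given.

```python
import numpy as np

def split_query_gallery(labels, per_class=100, seed=0):
    """Randomly choose `per_class` query indices from every class; all remaining
    indices form the gallery (database)."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    query_idx = []
    for cls in np.unique(labels):
        members = np.flatnonzero(labels == cls)
        query_idx.extend(rng.choice(members, size=per_class, replace=False))
    query_idx = np.sort(np.asarray(query_idx))
    in_gallery = np.ones(len(labels), dtype=bool)
    in_gallery[query_idx] = False
    return query_idx, np.flatnonzero(in_gallery)

# Hypothetical labels: 10 balanced classes, 70,000 samples in total.
labels = np.repeat(np.arange(10), 7000)
query_idx, gallery_idx = split_query_gallery(labels)
print(len(query_idx), len(gallery_idx))  # 1000 69000
```

Fixing the seed makes the split reproducible, which matters when comparing against numbers reported under the same protocol.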

4.2. Experiment

4.2.1. Setting

The proposed model detailed in Table 1 was used for testing on the Oxford Flowers 102 dataset [25]. For testing on the MNIST dataset [20], we used only four layers, with 32, 16, 40, and 80 channels, in sequence. For training, we empirically set the learning rate to 0.0001 and the latent dimension to 512 for the Flowers 102 dataset and 128 for the MNIST dataset. The evaluation criterion was average precision, computed as the area under the precision–recall curve (AUC), with the full database ranked by similarity [18]. Cosine similarity was used as the similarity metric for matching.
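The evaluation protocol can be sketched as below: the gallery is ranked for each query by cosine similarity, and average precision is computed over the ranking. We use the common non-interpolated estimate of the area under the precision–recall curve (the mean of precision@i over relevant positions i); the optional top-k variant normalizes by the number of relevant items within the top k, which is one of several conventions and an assumption on our part. Function names are hypothetical.

```python
import numpy as np

def cosine_similarity_matrix(queries, gallery):
    """Pairwise cosine similarity between query rows and gallery rows."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    return q @ g.T

def average_precision(relevance, k=None):
    """Non-interpolated AP of a ranked 0/1 relevance list. k=None scores the full
    ranking; otherwise only the top-k positions are scored."""
    rel = np.asarray(relevance, dtype=float)
    if k is not None:
        rel = rel[:k]
    n_rel = rel.sum()
    if n_rel == 0:
        return 0.0
    precision_at_i = np.cumsum(rel) / np.arange(1, len(rel) + 1)
    return float(np.sum(precision_at_i * rel) / n_rel)

def mean_average_precision(queries, q_labels, gallery, g_labels, k=None):
    """mAP: rank the gallery for every query by cosine similarity and average the APs."""
    g_labels = np.asarray(g_labels)
    sims = cosine_similarity_matrix(np.asarray(queries, float), np.asarray(gallery, float))
    aps = []
    for row, label in zip(sims, q_labels):
        order = np.argsort(-row, kind="stable")  # most similar first
        aps.append(average_precision(g_labels[order] == label, k))
    return float(np.mean(aps))

print(average_precision([1, 0, 1, 0]))  # (1/1 + 2/3) / 2
queries = [[1.0, 0.0], [0.0, 1.0]]
gallery = [[1.0, 0.1], [0.1, 1.0], [2.0, 0.0]]
print(mean_average_precision(queries, [0, 1], gallery, [0, 1, 0]))
```

Cosine similarity ignores vector length, so descriptors of different magnitudes (for example, pooled features from different layers) can be compared on the same scale.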

4.2.2. Channel Expansion Effect on Complex Dataset

For the comparison between the VAE and CEVAE on the Oxford Flowers dataset, we used the same block configuration in both models. The number of parameters was also the same: both models had 5 million parameters. Each model was trained for 500 epochs with a batch size of 32. Training took 34 s per epoch for the VAE and 40 s per epoch for the CEVAE. Table 2 compares the top-k mAPs of both models.

Table 2.

Effect of channel expansion on the Flowers dataset.

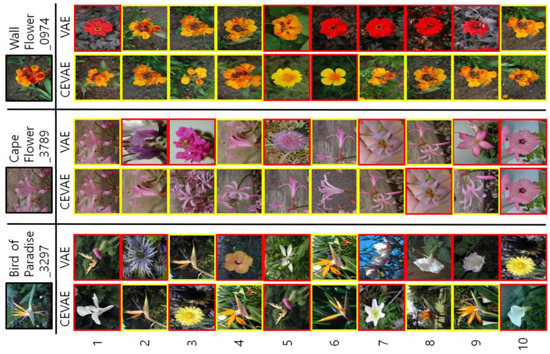

With the same number of parameters, the CEVAE outperformed the base model in top-k mAP. In addition, Figure 4 shows qualitative results for the top 10 ranks. Yellow boxes indicate correct predictions and red boxes indicate wrong predictions. Overall, the CEVAE results were more consistent than those of the base model. The complex backgrounds in this dataset made prediction difficult.

Figure 4.

Comparison between the VAE and CEVAE on the Oxford Flowers dataset.

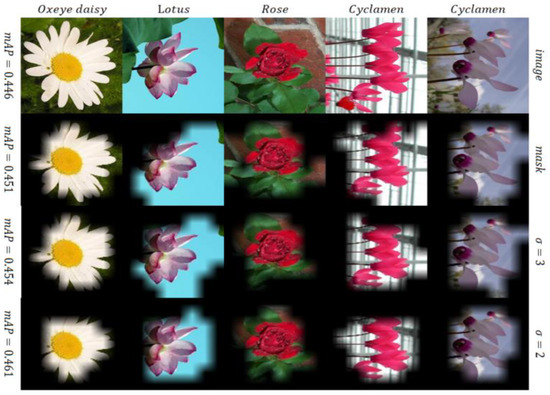

4.2.3. Regional Masking Effect

The last layer may not be optimal as the image descriptor; thus, we visualized the activation map at each layer and selected a layer for the final representation. The activation map can be obtained by computing gradients from the output. Figure 5 shows the activation map at each layer.

Figure 5.

Activation map of the CEVAE.

As shown in Figure 5, the activation map that captures the flower region most precisely appears in block 6. Hence, the activation maps of “DW_Conv” and “ReLU” in block 6 were averaged and used to build a binary mask that reduces the effect of the background.

In Figure 6, the mask threshold is the mean value of the activation map; masking increased the mAP, but only slightly (2%). Furthermore, we applied a Gaussian filter, since most of the flowers were located in the center of the image. Variances from one to four were tested because the feature map size was 8 × 8. A variance of two gave the best precision, 46.1%. With the Gaussian filter and the mean mask, performance varied greatly across flower classes: the total mAP increased, but the precision of some classes decreased. For instance, part of the oxeye daisy was masked out, whereas the rose images still included background regions.
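The masking steps above can be sketched as follows. This is a minimal illustration under assumptions: the activation maps and features are toy arrays, the Gaussian filter is applied as a multiplicative center-prior weight on the 8 × 8 grid (our interpretation), the mask and the Gaussian are combined by multiplication, and global max pooling (GMP) produces the descriptor. Function names are hypothetical.

```python
import numpy as np

def binary_mask_from_activations(act_maps):
    """Average activation maps of shape (N, H, W) and keep positions above the mean."""
    avg = np.mean(act_maps, axis=0)
    return (avg > avg.mean()).astype(float)

def centered_gaussian(h, w, variance=2.0):
    """Center-prior weight exp(-d^2 / (2 * variance)), d = distance to the map center."""
    ys = np.arange(h) - (h - 1) / 2
    xs = np.arange(w) - (w - 1) / 2
    return np.exp(-(ys[:, None] ** 2 + xs[None, :] ** 2) / (2.0 * variance))

def masked_gmp(features, weight):
    """Global max pooling of (C, H, W) features after spatial weighting or masking."""
    return (features * weight[None]).max(axis=(1, 2))

# Toy 8 x 8 example: two hypothetical activation maps (e.g., DW_Conv and ReLU outputs).
acts = np.zeros((2, 8, 8))
acts[:, 3:5, 3:5] = 1.0          # "object" in the center
features = np.ones((4, 8, 8))
features[:, 0, 0] = 10.0         # strong background response in a corner
mask = binary_mask_from_activations(acts)
weight = mask * centered_gaussian(8, 8, variance=2.0)
print(masked_gmp(features, mask))    # corner response is suppressed
print(masked_gmp(features, weight))
```

Without the mask, the corner response of 10 would dominate every channel of the max-pooled descriptor; this is the background effect the mask is meant to remove.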

Figure 6.

Regional masking on the Flowers dataset.

4.2.4. Compression Performance

In general, the VAE has lower retrieval accuracy, but it allows images to be compressed into small representations, which enables fast searching for similar images. Hence, we gradually reduced the dimension of the latent vector and measured the precision. Table 3 lists the mAP for each dimension and descriptor.

Table 3.

Effect of channel expansion on the Flowers dataset (mAP for top 5). GMP = global max pooling.

At block_6, the number of channels was 1152. GMP is global max pooling and GAP is global average pooling. We expected a combination of GMP and GAP to perform better, but this was not the case. At 512 dimensions, GMP from block_6 performed best, and this descriptor also had superior compression power. At the 16-dimensional representation, GMP from block_6 achieved 39.5%, which is 5.1% higher than the output of the last encoder layer. Overall, considering both precision and inference time, the 512-dimensional GMP feature from block_6 was the best.

4.2.5. Comparison to Unsupervised IR Models

For a fair comparison with the results reported in the UAIR paper [18], we used the MNIST dataset. The sizes of the database and test set are the same as in the reference paper. Since MNIST images are small, we reduced the network to four layers. Table 4 lists the mAP over the full ranking for the supervised and unsupervised models.

Table 4.

Comparison performance of image retrieval.

The UAIR paper reports the performance of supervised models (NDH [26], OANIR [27]) and unsupervised models (ITQ [28], Spherical [29], DH [30]). Table 4 also includes the top-2 mAP of the VAE [19] on the MNIST dataset. The other unsupervised methods achieved less than 50% mAP, whereas CEVAE achieved 52.71%. Even though the unsupervised models performed worse than the supervised models and the GAN, they are promising because they require no annotation. Moreover, CEVAE is easy to train and achieves a competitive compression rate. The 52.7% mAP in Table 4 was obtained with a 128-dimensional vector, and the mAP remained high (51.7%) with an 8-dimensional vector.

5. Discussion and Conclusions

In this paper, we extracted features from a complex dataset and compressed them in latent space for CBIR. Although the VAE is simple to train and compresses features well, it is limited in capturing the diverse features of complex data. The mAP on the MNIST dataset was high, but the results on the Flowers dataset were poor. Nevertheless, CEVAE outperformed previous VAE models, with a 52.71% mAP on the MNIST dataset. We visualized the encoder layers and utilized regional activation for a better representation. Most significantly, our model explored more kernels for diverse features without increasing the number of parameters. In the qualitative results, the CEVAE retrievals were consistent in color, pattern, and shape.

Our experiments did not include auxiliary augmentation or higher-resolution inputs. Therefore, this model could be extended to a wider and deeper network with inputs larger than 128 × 128 and improved with additional constraints. Contrastive learning for unsupervised representation, using a dataset labeled with hand-crafted features, remains to be validated.

In conclusion, CEVAE is a valid model for compressing image features into a compact representation. To reach practical precision on large datasets, more elaborate loss constraints or annotation are required. As unsupervised methodology has attracted attention, we expect further developments in the future.

Author Contributions

Conceptualization, Y.L., K.L. and H.-H.K.; methodology, Y.L., K.L. and H.-H.K.; software, Y.L.; validation, Y.L., K.L. and H.-H.K.; investigation, Y.L. and H.-H.K.; resources, Y.L. and K.L.; data curation, Y.L., K.L. and H.-H.K.; writing—original draft preparation, Y.L.; writing—review and editing, Y.L., K.L. and H.-H.K.; supervision, K.L. and M.K.; funding acquisition, K.L. All authors have read and agreed to the published version of the manuscript.

Funding

The present research has been supported by a research grant from Kwangwoon University (2020).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We used the Oxford 102 Category Flower Dataset for the comparison of the CEVAE model.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dongyuan, L.; Xiaojun, B. Criminal Investigation Image Retrieval Based on Deep Learning. In Proceedings of the 2020 International Conference on Computer Network, Electronic and Automation (ICCNEA), Xi’an, China, 25–27 September 2020.

- Kazuma, K.; Ryuichiro, H.; Yusuke, K.; Tatsuya, H.; Ryuji, H. Decomposing Normal and Abnormal Features of Medical Image for Content-based Image Retrieval. arXiv 2020, arXiv:2011.0622474.

- Subhankar, R.; Enver, S.; Begüm, D.; Nicu, S. Metric-Learning based Deep Hashing Network for Content Based Retrieval of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 18, 226–230.

- Urszula, M.; Halina, K. Deep Learning: A New Era in Bridging the Semantic Gap. In Bridging the Semantic Gap in Image and Video Analysis; Springer: Berlin/Heidelberg, Germany, 2018; pp. 123–159.

- Ali, S.R.; Hossein, A.; Josephine, S.; Stefan, C. CNN Features off-the-shelf: An Astounding Baseline for Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR-W), Columbus, OH, USA, 23–28 June 2014; pp. 512–519.

- Jeff, D.; Yangqing, J.; Oriol, V.; Judy, H.; Ning, Z.; Eric, T.; Trevor, D. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. PMLR 2014, 32, 647–655.

- Artem, B.; Anton, S.; Alexandr, C.; Victor, L. Neural Codes for Image Retrieval. Eur. Conf. Comput. Vis. 2014, 8689, 586–599.

- Artem, B.Y.; Victor, L. Aggregating Local Deep Features for Image Retrieval. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1269–1277.

- Yannis, K.; Clayton, M.; Simon, O. Cross-Dimensional Weighting for Aggregated Deep Convolutional Features. In European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2016; Volume 9913, pp. 685–701.

- Xiu-Shen, W.; Jian-Hao, L.; Jianxin, W.; Zhi-Hua, Z. Selective Convolutional Descriptor Aggregation for Fine-Grained Image Retrieval. IEEE Trans. Image Process. 2017, 26, 1868–1881.

- Jian, X.; Cunzhao, S.; Chengzuo, Q.; Chunheng, W.; Baihua, X. Unsupervised Part-based Weighting Aggregation of Deep Convolutional Features for Image Retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2017; Volume 8689, pp. 586–599.

- Filip, R.; Giorgos, T.; Ondrej, C. Fine-tuning CNN Image Retrieval with No Human Annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1655–1668.

- Giorgos, T.; Ronan, S.; Herve, J. Particular Object Retrieval with Integral Max-pooling of CNN Activations. In Proceedings of the ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016.

- Heejae, J.; Byungsoo, K.; Youngjoon, K.; Insick, K.; Jongtack, K. Combination of Multiple Global Descriptors for Image Retrieval. arXiv 2020, arXiv:1903.10663.

- Giorgos, T.; Tomas, J.; Ondrej, C. Learning and Aggregating Deep Local Descriptors for Instance-Level Recognition. In European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2020; pp. 460–477.

- Ian, J.G.; Jean, P.; Mehdi, M.; Bing, X.; David, W.; Sherjil, O.; Aaron, C.; Yoshua, B. Generative Adversarial Nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS), Montreal, Canada, 8–13 December 2014; Volume 2, pp. 2672–2680.

- Diederik, P.K.; Max, W. Auto-encoding Variational Bayes. arXiv 2014, arXiv:1312.6114.

- Ling, H.; Cong, B.; Yijuan, L.; Shengyong, C.; Qi, T. Adversarial Learning for Content-based Image Retrieval. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019.

- Sara, T.F. Designing Variational Autoencoders for Image Retrieval; KTH EECS: Stockholm, Sweden, 2018.

- Yann, L.; Leon, B.; Yoshua, B.; Patrick, H. Gradient-based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- James-Andrew, R.S. Exploiting Latent Codes: Interactive Fashion Product Generation, Similar Image Retrieval, and Cross-Category Recommendation using Variational Autoencoders. arXiv 2020, arXiv:2008.01053.

- Vaibhav, R.; Manideep, N.; Naresh, K.G.; Kaushika, T.; Swapnil, G. Auto-Encoders for Content-based Image Retrieval with its Implementation Using Handwritten Dataset. In Proceedings of the International Conference on Communication and Electronics Systems, Coimbatore, India, 10–12 June 2020.

- Manish, B.; Diane, O.; Juan, C.; Liping, Y.; Brendt, W. Diagram Image Retrieval using Sketch-based Deep Learning and Transfer Learning. In Proceedings of the IEEE Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020.

- Mingxing, T.; Bo, C.; Ruoming, P.; Vijay, V.; Mark, S.; Andrew, H.; Quoc, V.L. MnasNet: Platform-Aware Neural Architecture Search for Mobile. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2820–2828.

- Maria-Elena, N.; Andrew, Z. Automated Flower Classification over a Large Number of Classes. In Proceedings of the 2008 IEEE 6th Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 722–729.

- Zhixiang, C.; Jiwen, L.; Jianjiang, F.; Jie, Z. Nonlinear Discrete Hashing. IEEE Trans. Multimed. 2017, 19, 123–135.

- Cong, B.; Ling, H.; Xiang, P.; Jianwei, Z.; Shengyong, C. Optimization of Deep Convolutional Neural Network for Large Scale Image Retrieval. Neurocomputing 2018, 303, 60–67.

- Yunchao, G.; Svetlana, L.; Albert, G.; Florent, P. Iterative Quantization: A Procrustean Approach to Learning Binary Codes for Large-Scale Image Retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2916–2929.

- Jae-Pil, H.; Youngwoon, L.; Junfeng, H.; Shih-Fu, C.; Sung-Eui, Y. Spherical Hashing: Binary Code Embedding with Hyper-spheres. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2304–2316.

- Jiwen, L.; Venice, E.L.; Jie, Z. Deep Hashing for Scalable Image Search. IEEE Trans. Image Process. 2017, 26, 2352–2367.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).