Abstract

As construction cost management becomes increasingly digitized, the automatic classification of building project costs has become a key step toward improving management efficiency. Traditional rule-based or manual methods cannot cope with increasingly complex engineering texts. To address this issue, this study proposes a deep learning framework that integrates Convolutional Neural Networks (CNNs), Deep Pyramid Convolutional Neural Networks (DPCNNs), and Long Short-Term Memory networks (LSTMs). A standardized dataset of 12,838 records was constructed based on expert annotation. Six base models (three architectures, each trained under character-level and word-level tokenization) were trained, and their predictions were combined through a majority voting strategy. Experimental results show that the ensemble model achieved an accuracy of 97.59% on the test set, outperforming the single models, and that character-level tokenization performed better than word-level tokenization. The findings confirm that model ensembling improves classification accuracy and robustness, providing a feasible solution for intelligent text classification in cost management and a practical reference for digital and intelligent applications.

1. Introduction

As a critical component of project life-cycle management, construction cost management plays a central role in investment control, resource allocation, and risk mitigation []. In the context of high-quality economic development and the low-carbon strategy, refined cost management has become key to improving project efficiency and achieving sustainable development []. Traditionally, construction cost management has relied heavily on expert judgment for cost estimation, auditing, and classification. While this approach may be adequate for small-scale projects with limited data, the growing complexity and investment scale of modern construction projects have produced cost data that are large in volume, complex in structure, and heterogeneous in format []. As a result, traditional methods cannot meet the efficiency, accuracy, and consistency requirements of modern cost management, and advancing cost management toward digitalization and intelligent automation has become an urgent need in the industry [,].

Within practical cost management, cost classification is a foundational task for standardizing cost data and for subsequent analysis. At present, classification is still predominantly performed manually, which raises several challenges. First, the process is inefficient: it requires extensive time and labor and cannot keep pace with large-scale data []. Second, misclassification is common, because engineering text descriptions are often complex and domain specific []. Third, classification standards are inconsistent: different practitioners or institutions may interpret classification schemes differently, leading to inconsistent results across project stages or regions []. Together, these problems constrain the overall quality of cost data and undermine downstream tasks such as data analysis, statistical benchmarking, and decision support. They restrict progress in cost data standardization and hinder the advancement of intelligent cost management.

Recent advances in deep learning have demonstrated strong capabilities in text classification tasks []. Different categories of deep learning architectures exhibit complementary strengths in text classification: convolution-based models are effective for capturing local semantic patterns, deeper hierarchical networks enhance the representation of contextual information, and recurrent or sequence-oriented models are adept at learning long-range dependencies in textual data [,]. These models have shown outstanding classification performance in diverse domains, highlighting the potential of deep learning in processing complex textual data. Moreover, ensemble learning techniques that integrate multiple models to capitalize on their complementary strengths have proven effective in enhancing robustness and generalization, with successful applications in fields such as healthcare, finance, and law [,,]. Such developments provide a solid theoretical and methodological foundation for the intelligent classification of construction cost texts.

Against this backdrop, the present study introduces deep learning methods into the domain of construction cost classification and develops an ensemble framework that integrates convolutional neural network (CNN), deep pyramid convolutional neural network (DPCNN), and long short-term memory network (LSTM) models to achieve automated classification and standardized processing of cost texts. The research objectives are fourfold: (1) to construct a standardized cost text dataset covering diverse building types and engineering disciplines; (2) to investigate the impact of character-level and word-level tokenization on classification performance; (3) to compare the performance of individual models and the ensemble framework in terms of accuracy, robustness, and generalization; and (4) to validate the feasibility and practical utility of deep learning for construction cost text classification, thereby providing technical support for data standardization and intelligent cost management. The remainder of this paper is structured as follows. Section 2 reviews related literature on construction cost classification, deep learning methods for text classification, and ensemble modeling strategies. Section 3 presents the methodology, including the cost data taxonomy, dataset construction, model architecture, and ensemble strategy. Section 4 describes the experimental setup and results, followed by a discussion of the key findings in Section 5. Section 6 concludes the paper and outlines directions for future work.

2. Related Works

This section provides a review of methods used in engineering cost classification domains, explores applications of deep learning models for text classification, and examines fusion, ensemble, and hybrid modeling approaches, with emphasis on relevance to construction cost text classification.

2.1. Construction Cost Classification

Traditional methods for classifying cost descriptions or engineering documents have relied on manual rule-based systems and on classical machine learning with handcrafted features. In construction cost management, keywords and domain ontologies have often been used to define categories through expert rules. For example, in UK and international practice, “Bills of Quantities” (BoQ) are often organized manually into codes or measurement standards by human experts []. Recent studies have shifted toward machine learning methods for automatic classification. Deza, Ihshaish, and Mahdjoubi [] explored machine learning approaches to classify BoQ descriptions into ICMS codes, comparing classical statistical features with simpler sequence models, and found that simple local features often suffice for good performance. Other work on cost estimation focuses on numerical prediction rather than textual cost-item classification. Park et al. [] used Building Information Modeling (BIM) attributes and building design metadata to predict total construction cost, but did not address classifying cost descriptions into semantic categories. Similar gaps are evident in recent works that focus on cost prediction and document classification frameworks yet stop short of mapping text descriptions into semantic classification schemas [,].

Rule-based or ontology-guided approaches offer interpretability and domain consistency: experts define key terms, patterns, and sometimes templates. However, such approaches often struggle with noisy text, missing terms, variations in phrasing, and scale [,,]. They also adapt poorly across regions, project types, or languages, because the domain rules must be re-crafted for each new setting. Traditional methods for engineering cost text classification have therefore offered baseline solutions but remain limited in scalability, adaptability, and robustness to linguistic variation. These limitations motivate the move toward deep learning and ensemble modeling in the recent literature []. Separately, BIM-enabled and parametric design workflows illustrate how integrated computation can optimize construction design and materials management. For example, Wu et al. [] formalize trade know-how for floor-tile cutting and reuse into a BIM and design workflow implemented in Rhino and coupled with an algorithm, yielding rapid solution generation and lower material waste. That line of work targets geometry and layout optimization from structured data, whereas our study addresses upstream, unstructured cost texts, providing a taxonomy-grounded classifier that can supply cost semantics to such downstream optimization pipelines.

2.2. Deep Learning Methods for Text Classification

Deep learning architectures have dominated text classification research in recent years, including in domains with technical or specialized language. Surveys such as Li et al. [] provide taxonomies and comparative analyses of different architectures, including CNNs, recurrent neural networks (RNNs), attention models, and transformers, showing that each involves trade-offs between capturing local and global phenomena. Among applied works on domain-specific text, one study proposes a CNN architecture that captures both intra-sentence and inter-sentence n-gram features through two-dimensional multi-scale convolution operations; the model outperforms standard CNN, bidirectional long short-term memory (BiLSTM), and attention models on several binary and multi-class datasets []. Another study evaluates a hybrid model that combines LSTM’s strength in sequence modeling with CNN’s local feature extraction, and finds that the hybrid outperforms pure CNN or pure LSTM models on various datasets []. In sentiment analysis and social media domains, models combining CNNs, LSTMs, and gated recurrent units (GRUs) have achieved strong performance. Minaee et al. [] review more than 150 deep learning models and over 40 datasets, identifying trends such as the success of CNN and RNN hybrids, the rise of attention mechanisms, and the increasing utility of pretrained language models.

There are also domain-specific applications. For instance, existing works apply deep architectures to the automatic classification of knowledge texts in large-scale document collections []. Taha et al. [] compared deep learning algorithms, including CNNs, RNNs, and transformer models, across a wide range of benchmark datasets, highlighting their respective advantages and limitations. Sousa et al. [] explored artificial neural networks (ANNs) and natural language processing (NLP) techniques for handling budgeting-phase documents; although not always aimed at precise cost-item classification, this line of work provides insights into preprocessing, feature engineering, and domain adaptation challenges. In the technical and legal-contract domain, Zhong et al. [] investigated contract dispute classification with deep learning models, a direction related to cost text classification given the comparable linguistic complexity.

These studies demonstrate that deep learning models, including attention-based and transformer variants, are highly effective for text classification when applied to large datasets with well-defined labels and domain-adapted resources such as custom vocabularies, specialized tokens, or pre-trained embeddings. However, many of these works still have notable limitations: limited attention to hierarchical or multi-level classification, inadequate exploration of tokenization granularity across languages, difficulty handling noisy, condensed domain-specific texts such as cost descriptions, and inconsistent performance across regions or project types. These open issues in hierarchical classification and tokenization strategy motivate the exploration of ensemble methods to stabilize error profiles.

2.3. Model Fusion, Hybrid, and Ensemble Methods in Text Processing

Because a single architecture often captures only part of the information, such as local features or long-range dependencies, many recent works adopt fusion, hybrid, or ensemble strategies that combine multiple models and incorporate different input representations. Mohammed et al. [] introduced a meta-learning ensemble framework that fuses baseline CNN, RNN, transformer, and other layers into a two-tier meta-classifier; across tasks, the ensemble achieved higher accuracy and more consistent per-class F1 scores than any constituent model. Kathirgamanathan et al. [] systematically compared ensembles of CNN, LSTM, and their combinations, showing that ensemble models reduce the error of the worst-performing class while maintaining high accuracy. Kamateri et al. [] proposed a domain-agnostic method for selecting and combining base models for patent classification and other long- and short-text tasks; their results show that diversity among base models, in both architecture and input representation, is essential for ensemble gains. For sequential data such as construction cost documents, some empirical studies suggest that temporal convolutional networks (TCNs) can outperform recurrent models such as LSTM under certain conditions. For example, Hou et al. [] show that TCNs exceed LSTM and GRU models in tasks where long-range dependencies are important, and Elmadjian et al. [] find that TCNs achieve higher F1-scores across all classes than CNN-LSTM and CNN-BiLSTM in online classification tasks involving sequential technical or sensor-derived data.

Hybrid architectures that combine convolutional and recurrent models have also been adopted in text classification. For instance, Priyadarshini et al. [] developed a grid search–based deep neural network that integrates CNN and LSTM, exploiting local feature extraction and long-sequence modeling simultaneously; such hybrids are often further enhanced with tuning or attention mechanisms. Similarly, Agbesi et al. [] introduced a multi-TextCNN ensemble that captures text information at multiple granularity levels, and Zhou et al. [] extracted discriminative n-gram features with convolutional filters, using multi-scale convolution to approximate longer context within a predominantly convolutional architecture.

Beyond hybrids, ensemble strategies such as majority voting, weighted averaging, stacking, and meta-classifiers are also prominent. Huynh et al. [] demonstrated that combining CNN, LSTM, and Bidirectional Encoder Representations from Transformers (BERT) through ensemble fusion improves robustness across datasets. Other ensemble methods integrate different tokenization or embedding schemes, for example contrasting word-level with character-level inputs, or pretrained embeddings with domain-specific ones. While many studies touch on these choices during hyperparameter tuning, few have systematically investigated character versus word granularity, particularly for Chinese, multilingual, or technical texts. Recent work also considers ensembles trained on different subsets of data or enriched with metadata features such as document source or project type. Zangari et al. [] reviewed hierarchical text classification methods, emphasizing how taxonomies, metadata, and multi-level label structures can improve generalization and performance when categories are nested or structured. Kowsari et al. [] proposed a hierarchical deep learning architecture for documents that must be labeled at multiple levels, an approach relevant to cost classification at the unit, specialty, and sub-specialty levels.

Taken together, existing research reveals a clear trajectory: from traditional rule-based and statistical approaches, to the adoption of deep learning architectures, and more recently to the emergence of ensemble and hybrid frameworks. However, few studies directly target construction cost texts, particularly ones that incorporate hierarchical label structures, evaluate tokenization granularities, and apply ensemble learning. These gaps provide the foundation for this study, which develops an ensemble model integrating CNN, DPCNN, and LSTM under both character-level and word-level tokenization to advance construction cost classification.

3. Methodology

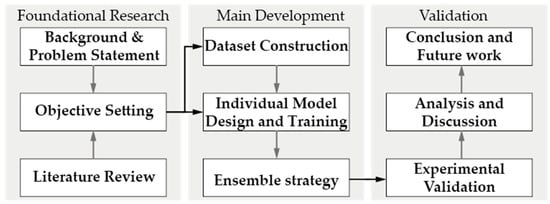

Building on this research background, this study constructs a cost dataset, designs and trains individual models, and implements an ensemble strategy. It then conducts experimental validation and analyzes the results, concluding with an outlook on future work (Figure 1).

Figure 1.

The workflow of this study.

3.1. Cost Data Taxonomy

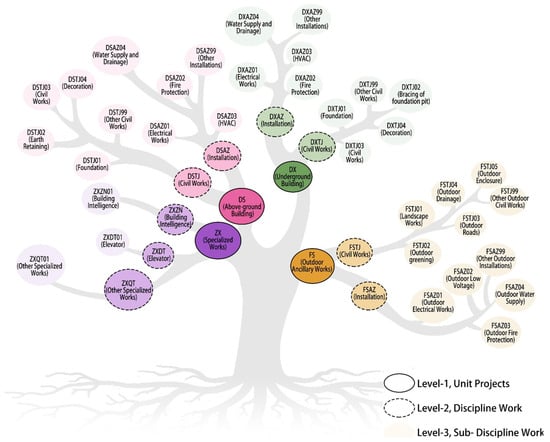

To achieve standardized cost classification, this study establishes a three-level taxonomy comprising unit projects, discipline work, and sub-discipline work, as shown in Figure 2. This hierarchy ensures the consistency and comparability of cost data while reflecting the multidimensional nature of construction expenditures. At the top level (level 1), unit projects divide works into above-ground structures (DS), underground structures (DX), outdoor ancillary works (FS), and specialized projects (ZX), providing a macro framework for organizing costs. Level 2, discipline work, refines these categories by specialized domain, highlighting the discipline attributes and resource characteristics of construction activities. At the most detailed level (level 3), sub-discipline work breaks discipline work down into specific tasks and cost components, enabling precise accounting and management. This taxonomy provides a clear label hierarchy for the subsequent classification tasks, allowing models to capture differences between categories.

Figure 2.

Illustration of a Three-level Classification System.
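A minimal sketch of how the three-level hierarchy could be represented in code, assuming each record's label is stored as a slash-separated path whose first segment is one of the four level-1 codes. The class and function names, the separator, and the level-2/level-3 names used in the usage note are hypothetical; only DS, DX, FS, and ZX come from the taxonomy itself.

```python
from dataclasses import dataclass

# Level-1 unit-project codes from the taxonomy above.
UNIT_PROJECTS = {
    "DS": "above-ground structures",
    "DX": "underground structures",
    "FS": "outdoor ancillary works",
    "ZX": "specialized projects",
}


@dataclass(frozen=True)
class CostLabel:
    """Hypothetical three-level label: unit project > discipline > sub-discipline."""
    unit_project: str    # level 1, one of UNIT_PROJECTS
    discipline: str      # level 2 (illustrative names only)
    sub_discipline: str  # level 3 (illustrative names only)

    def path(self, sep: str = "/") -> str:
        # A single string key, usable as a flat class id for the classifiers.
        return sep.join((self.unit_project, self.discipline, self.sub_discipline))

    def truncate(self, level: int) -> tuple:
        # Coarser views of the same label, e.g. level=1 -> ("DS",).
        if level not in (1, 2, 3):
            raise ValueError("level must be 1, 2, or 3")
        return (self.unit_project, self.discipline, self.sub_discipline)[:level]


def parse_label(path: str, sep: str = "/") -> CostLabel:
    parts = path.split(sep)
    if len(parts) != 3:
        raise ValueError(f"expected 3 levels, got {len(parts)}: {path!r}")
    if parts[0] not in UNIT_PROJECTS:
        raise ValueError(f"unknown unit-project code: {parts[0]!r}")
    return CostLabel(*parts)
```

For example, `parse_label("DS/civil/concrete").truncate(2)` yields `("DS", "civil")`. Because the leaf path determines every ancestor, a model that predicts the full path implicitly predicts levels 1 and 2 as well.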

3.2. Dataset Construction

3.2.1. Data Sources and Cleaning

To ensure the consistency, learnability, and statistical validity of construction cost text features, four standardized data cleaning procedures were applied. Each procedure was designed not only to reduce noise but also to enhance semantic stability and facilitate downstream tokenization and modeling. The steps are as follows:

- (1) Symbol Normalization

To eliminate ambiguity, all wave/range symbols were normalized to the en dash “–” (U+2013), and non-domain special characters were removed. The replacements were implemented with regular expressions applied to the entire corpus. This ensures that equivalent expressions share identical token forms, reducing vocabulary fragmentation.

- (2) Case Folding

All characters were converted to uppercase according to Unicode upper-casing rules. Without this step, tokens such as “PVC” and “pvc” would be treated as separate entries, artificially enlarging the vocabulary. By unifying case, the effective lexicon size is reduced. No semantic information is lost, as casing carries no meaningful distinction in construction cost texts.

- (3) Full-width to Half-width Conversion

In many Chinese construction documents, digits, letters, and punctuation appear in full-width form because of document formatting. These variants pose challenges for both tokenizers and numerical parsers. Following the punctuation standard and the Unicode East Asian Width specification, all full-width characters were mapped to their half-width equivalents. This harmonization allows uniform handling of quantities, units, and alphanumeric codes, ensuring compatibility across preprocessing modules.

- (4) Deduplication

To avoid compromising statistical validity by overweighting repeated samples, a deduplication step was applied. Records were keyed by the tuple {project code, description, quantity, unit price} and compared using MD5 hashing. Exact duplicates were removed.
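The four cleaning steps can be sketched as a single function applied record by record. This is an illustrative sketch, not the study's code: the exact range symbols and "non-domain" characters are not listed in the text, so the two character sets below are assumptions, as are the record field names. The full-width mapping uses the standard offset between U+FF01–U+FF5E and printable ASCII.

```python
import hashlib
import re

# Assumed character sets; the study does not enumerate them.
RANGE_SYMBOLS = re.compile(r"[~\uFF5E\u301C]")       # ASCII tilde, full-width tilde, wave dash
NON_DOMAIN_CHARS = re.compile(r"[★☆●○■□◆※]")       # hypothetical decorative symbols
EN_DASH = "\u2013"


def to_half_width(text: str) -> str:
    """Map full-width ASCII variants (U+FF01-U+FF5E) and U+3000 to half-width."""
    out = []
    for ch in text:
        code = ord(ch)
        if code == 0x3000:                 # ideographic space -> ASCII space
            out.append(" ")
        elif 0xFF01 <= code <= 0xFF5E:     # full-width '!'..'~' -> ASCII '!'..'~'
            out.append(chr(code - 0xFEE0))
        else:
            out.append(ch)
    return "".join(out)


def clean_text(text: str) -> str:
    text = RANGE_SYMBOLS.sub(EN_DASH, text)   # (1) symbol normalization
    text = NON_DOMAIN_CHARS.sub("", text)
    text = text.upper()                        # (2) case folding (Unicode upper-casing)
    return to_half_width(text)                 # (3) full-width -> half-width


def record_key(rec: dict) -> str:
    # (4) MD5 over {project code, description, quantity, unit price};
    # the unit-separator character keeps field boundaries unambiguous.
    fields = (rec["project_code"], rec["description"],
              str(rec["quantity"]), str(rec["unit_price"]))
    return hashlib.md5("\x1f".join(fields).encode("utf-8")).hexdigest()


def clean_and_deduplicate(records):
    seen, kept = set(), []
    for rec in records:
        rec = {**rec, "description": clean_text(rec["description"])}
        key = record_key(rec)
        if key not in seen:        # keep only the first exact duplicate
            seen.add(key)
            kept.append(rec)
    return kept
```

Hashing after cleaning means that records differing only in case or character width collapse to the same key, which matches the order of the steps listed above.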

3.2.2. Data Annotation and Splitting

A dual-control protocol with blind labeling and expert arbitration was employed. Three engineers (average experience 8 years) performed the initial labeling. The arbitration panel comprised three senior engineers (average experience 15 years), an odd-numbered committee chosen to avoid ties and to cover the major disciplines (civil, MEP, landscape). A pilot run indicated that a three-plus-three configuration met the turnaround and agreement targets, whereas smaller or even-numbered panels increased the risk of ties or delayed adjudication. When labelers disagreed, the arbitration panel decided the final label; if panelists differed, a majority vote was taken.

The annotation process followed a structured multi-stage workflow designed to ensure both reliability and domain validity. First, all samples were randomly distributed to the labeling group through a double-blind allocation mechanism, which guaranteed that annotators could not view each other’s results and thus minimized mutual influence or cognitive bias. Each annotator then assigned the most appropriate label to each record based on the established three-level taxonomy in Section 3.1. When the three annotators reached full consensus, the sample was directly admitted into the corpus as a gold-standard instance. In cases where inconsistencies occurred, the disputed entries were transferred to the arbitration group, consisting of more senior experts, who reviewed the evidence and engaged in structured discussions until a unanimous decision was reached. To further reinforce quality assurance, a random 5% of the accepted samples underwent post hoc inspection. If the error rate in this inspection exceeded 1%, the entire batch was subjected to re-examination, and the annotators involved were retrained before continuing. Through this layered procedure, the annotation workflow established a reliable and reproducible dataset suitable for downstream model training and evaluation.
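The routing and audit rules can be sketched as follows. This assumes labels are plain strings and reads the arbitration step as the panel majority vote stated in the protocol description; when the three panelists all disagree, the sketch only signals that further discussion is needed. Function names and the fixed random seed are hypothetical.

```python
import random
from collections import Counter


def adjudicate(annotator_labels, panel_labels=None):
    """Return (final_label, route) for one record.

    annotator_labels: the three blind labels.
    panel_labels: the three arbitration-panel labels, used only on disagreement.
    """
    if len(set(annotator_labels)) == 1:
        return annotator_labels[0], "consensus"       # admitted as gold standard
    if panel_labels is None:
        raise ValueError("disagreement requires arbitration-panel labels")
    label, votes = Counter(panel_labels).most_common(1)[0]
    if votes < 2:
        raise ValueError("no panel majority; further discussion required")
    return label, "arbitration"


def qa_batch_passes(accepted, audit_fn, sample_rate=0.05, max_error_rate=0.01, seed=0):
    """Post hoc audit: inspect a random 5% sample; fail the batch above 1% errors."""
    rng = random.Random(seed)
    n = max(1, round(len(accepted) * sample_rate))
    sample = rng.sample(accepted, n)
    errors = sum(1 for item in sample if not audit_fn(item))
    return errors / n <= max_error_rate
```

A batch for which `qa_batch_passes` returns `False` would be re-examined in full and its annotators retrained, as described above.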

After annotation, the final corpus was divided into training, validation, and test sets at a ratio of 6:2:2, ensuring stratified sampling across all three levels of categories. This guarantees that rare sub-discipline classes are represented in each partition, which is critical for training models and preventing class imbalance from distorting evaluation. To aid reproducibility and transparency.
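A stratified 6:2:2 split can be sketched by splitting each class separately. The sketch assumes the stratification key is the full level-1/level-2/level-3 path, so stratifying on it also stratifies every coarser level; the function name and seed are illustrative.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=42):
    """Return (train_idx, val_idx, test_idx), splitting each class separately."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n = len(idxs)
        n_train = round(n * ratios[0])
        n_val = round(n * ratios[1])
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]      # remainder goes to test
    return train, val, test
```

With rounding per class, a class needs roughly five or more samples to land in all three partitions; very rare leaf classes would need special handling (for example, merging or manual allocation).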

3.2.3. Text Pre-Processing

To ensure that the cleaned construction cost texts could be consumed by deep learning models, a four-step preprocessing pipeline was established. The pipeline consists of tokenization, stop-word removal, domain dictionary injection, and text vectorization.

A dual-channel tokenization strategy was adopted using the jieba tokenizer to generate both character-level and word-level sequences. Character-level tokenization captures fine-grained morphological features and alleviates out-of-vocabulary (OOV) issues caused by coined technical terms, while word-level tokenization preserves semantic integrity and enhances contextual understanding. To avoid segmentation errors, particularly for composite technical expressions, regular expressions were employed to match Chinese and English characters, numerals, and engineering-specific symbols.
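The character channel can be sketched with a single regular expression. The study's exact pattern is not reported, so the one below is an assumption: one token per Chinese character, Latin letters and digits kept together so that codes and quantities such as "C30" or "DN100" stay whole, and every other non-space symbol as its own token.

```python
import re

# Assumed pattern: CJK character | alphanumeric run (optional decimal part) | any other symbol.
CHAR_TOKEN = re.compile(r"[\u4e00-\u9fff]|[A-Za-z0-9]+(?:\.[0-9]+)?|\S")


def char_tokenize(text: str) -> list:
    """Character-level tokens; whitespace is dropped."""
    return CHAR_TOKEN.findall(text)
```

For example, `char_tokenize("C30钢筋混凝土 DN100")` gives `["C30", "钢", "筋", "混", "凝", "土", "DN100"]`.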

Vocabulary adaptation involved both the removal of low-information tokens and the injection of domain-specific terminology. A stop-word lexicon was built by extending a standard list with additional non-informative tokens common in cost texts, while a curated lexicon of construction terms (e.g., civil works, installation, decoration) was added to the tokenizer dictionary to ensure compound technical phrases were preserved as single tokens.
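The study injects its lexicon into jieba (which offers `jieba.add_word` and `jieba.load_userdict` for this purpose). The sketch below does not use jieba. It substitutes forward maximum matching, a simpler dictionary-based segmenter, to show how injected compound terms survive as single tokens and how stop words are then dropped. The input is a list of character-level tokens; the lexicon and stop-word entries in the test are illustrative.

```python
def segment_words(char_tokens, lexicon, stop_words=frozenset()):
    """Forward maximum matching over character tokens, then stop-word removal.

    At each position, emit the longest span that forms a lexicon entry;
    if none matches, emit the single token and move on.
    """
    max_len = max((len(w) for w in lexicon), default=1)
    words, i = [], 0
    while i < len(char_tokens):
        # Every token has at least one character, so no entry spans more than max_len tokens.
        for j in range(min(len(char_tokens), i + max_len), i, -1):
            candidate = "".join(char_tokens[i:j])
            if j - i == 1 or candidate in lexicon:
                words.append(candidate)
                i = j
                break
    return [w for w in words if w not in stop_words]
```

Unlike jieba, this greedy matcher has no statistical model for words outside the lexicon, so unknown spans fall back to single characters.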

Token sequences were mapped into dense numerical representations through a Skip-gram model trained on a large corpus of construction cost texts. Both character-level and word-level embeddings were generated with context windows tailored to their granularities, and negative sampling was applied to improve training efficiency. The resulting embeddings transformed raw cost descriptions into standardized, domain-aware, and semantically enriched vector sequences, enabling downstream models to capture both local and global patterns.
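The training signal behind Skip-gram with negative sampling can be sketched in two parts: enumerating (center, context) pairs within a window, and drawing negatives from the unigram distribution raised to the 3/4 power, the usual word2vec noise distribution. The window sizes used for the character and word channels are not reported, so `window` is a free parameter here. In practice a library such as gensim's `Word2Vec` (with `sg=1` and a `negative` count) would handle training end to end.

```python
import random
from collections import Counter


def skipgram_pairs(tokens, window):
    """All (center, context) pairs within `window` positions of each center token."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def noise_distribution(corpus, power=0.75):
    """Unigram counts raised to `power` and normalized: the negative-sampling noise."""
    counts = Counter(tok for sent in corpus for tok in sent)
    weights = {w: c ** power for w, c in counts.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}


def sample_negatives(noise, k, rng):
    """Draw k negative tokens for one positive pair."""
    vocab = list(noise)
    return rng.choices(vocab, weights=[noise[w] for w in vocab], k=k)
```

Raising counts to 3/4 flattens the distribution: a token 16 times as frequent as another is sampled only 8 times as often, so rare technical terms still appear as negatives.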

3.3. Model Architectures

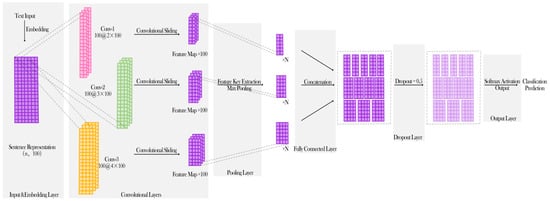

3.3.1. TextCNN Model

The TextCNN model (Figure 3) was selected as a representative convolutional neural network architecture for text classification, owing to its strong ability to capture local semantic features. The model first transforms the input text into an embedding space, where each token is represented by a dense vector that preserves semantic and syntactic information.

Figure 3.

TextCNN Model Architecture.

These embeddings are then processed through convolutional filters of different receptive field sizes, which are capable of detecting n-gram patterns and collocations within the sequence. By sliding across the text, the filters act as feature detectors that capture contextual cues at multiple granularities, from short technical expressions to longer phrase structures commonly present in construction cost descriptions. The resulting feature maps are aggregated through pooling operations, which emphasize the most salient signals while reducing dimensionality and noise. The pooled features are subsequently integrated in a fully connected layer, which combines information extracted from multiple channels and generates high-level semantic representations suitable for classification. To enhance generalization, a dropout mechanism is applied, reducing the risk of overfitting by randomly omitting neurons during training. In this way, TextCNN balances efficiency with representational power, making it a suitable model for cost text classification where localized terminology and phrase structures play a crucial role.
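An inference-time TextCNN forward pass can be sketched in NumPy to make the data flow concrete: embedding lookup, parallel convolutions of several widths, ReLU, max-over-time pooling, concatenation, and a fully connected layer. The kernel sizes, filter count, and dimensions are assumptions, not the study's settings. Dropout is omitted because it is active only during training.

```python
import numpy as np


def text_cnn_forward(token_ids, params, kernel_sizes=(2, 3, 4)):
    """TextCNN logits for one sequence (length must be >= the largest kernel)."""
    x = params["embedding"][token_ids]                     # (seq_len, emb_dim)
    pooled = []
    for k in kernel_sizes:
        w, b = params[f"conv{k}"]                          # w: (n_filters, k, emb_dim)
        windows = np.stack([x[i:i + k] for i in range(len(x) - k + 1)])  # (positions, k, emb_dim)
        feature_map = np.maximum(np.einsum("pkd,fkd->pf", windows, w) + b, 0.0)  # ReLU
        pooled.append(feature_map.max(axis=0))             # max-over-time -> (n_filters,)
    features = np.concatenate(pooled)                      # (len(kernel_sizes) * n_filters,)
    w_fc, b_fc = params["fc"]
    return features @ w_fc + b_fc                          # class logits


def init_params(vocab_size, emb_dim, n_filters, n_classes, kernel_sizes=(2, 3, 4), seed=0):
    """Random weights for illustration; in the study the embeddings come from Skip-gram."""
    rng = np.random.default_rng(seed)
    params = {"embedding": rng.normal(0, 0.1, (vocab_size, emb_dim))}
    for k in kernel_sizes:
        params[f"conv{k}"] = (rng.normal(0, 0.1, (n_filters, k, emb_dim)), np.zeros(n_filters))
    params["fc"] = (rng.normal(0, 0.1, (len(kernel_sizes) * n_filters, n_classes)),
                    np.zeros(n_classes))
    return params
```

Each filter width acts as an n-gram detector, and max pooling keeps only its strongest match anywhere in the description. This is why the model suits cost texts in which a few key terms decide the class.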

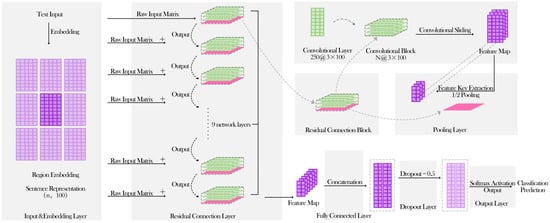

3.3.2. TextDPCNN Model

The TextDPCNN model (Figure 4) is grounded in the deep pyramid convolutional network framework, which extends traditional CNNs by introducing deeper hierarchical structures with residual connections. DPCNN employs region-based embeddings that aggregate information from multiple adjacent tokens, thereby broadening the receptive field and enabling the detection of more complex contextual patterns. These embeddings are processed through stacked convolutional blocks, where repeated convolutions progressively enrich the representation of textual features. To overcome the vanishing-gradient problem that often arises in deep architectures, residual connections are incorporated, allowing information to flow across layers without degradation and keeping the network stable even at greater depth. Within each block, pooling operations gradually reduce the length of the feature maps, compressing information while retaining the most salient signals. At the final stage, the compressed features are mapped through fully connected layers to perform classification. As in TextCNN, dropout regularization is introduced to prevent overfitting and enhance generalization.

Figure 4.

TextDPCNN Model Architecture.
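A compact NumPy sketch of the pyramid structure described above: a region embedding (a width-3 convolution over token embeddings), pre-activation residual blocks of two width-3 convolutions, and max pooling with window 3 and stride 2 that roughly halves the sequence length before each further block, until one position remains. Channel counts and dimensions are assumptions. To keep the sketch short, one weight set is reused by every block, whereas a real DPCNN gives each block its own weights.

```python
import numpy as np


def conv_same(h, w, b):
    """Width-3 convolution with zero padding (length preserved).
    h: (L, C_in); w: (C_out, 3, C_in); b: (C_out,)."""
    pad = np.zeros((1, h.shape[1]))
    padded = np.vstack([pad, h, pad])
    windows = np.stack([padded[i:i + 3] for i in range(h.shape[0])])  # (L, 3, C_in)
    return np.einsum("lkc,okc->lo", windows, w) + b


def residual_block(h, w1, w2, b1, b2):
    # Pre-activation: ReLU -> conv -> ReLU -> conv, then add the shortcut.
    out = conv_same(np.maximum(h, 0.0), w1, b1)
    out = conv_same(np.maximum(out, 0.0), w2, b2)
    return h + out


def downsample(h):
    # Max pooling, window 3, stride 2: length L becomes ceil(L / 2).
    return np.stack([h[i:i + 3].max(axis=0) for i in range(0, h.shape[0], 2)])


def dpcnn_forward(token_ids, p):
    x = p["embedding"][token_ids]             # (L, emb_dim)
    h = conv_same(x, *p["region"])            # region embedding over 3-token windows
    h = residual_block(h, *p["block"])
    while h.shape[0] > 1:                     # the "pyramid": halve, then refine
        h = residual_block(downsample(h), *p["block"])
    w_fc, b_fc = p["fc"]
    return h[0] @ w_fc + b_fc                 # class logits


def init_dpcnn(vocab_size, emb_dim, channels, n_classes, seed=0):
    rng = np.random.default_rng(seed)

    def conv(c_in):
        return rng.normal(0, 0.1, (channels, 3, c_in))

    return {
        "embedding": rng.normal(0, 0.1, (vocab_size, emb_dim)),
        "region": (conv(emb_dim), np.zeros(channels)),
        "block": (conv(channels), conv(channels), np.zeros(channels), np.zeros(channels)),
        "fc": (rng.normal(0, 0.1, (channels, n_classes)), np.zeros(n_classes)),
    }
```

Because the length halves at each stage while the channel count stays fixed, the cost per block shrinks geometrically, so depth grows only logarithmically with sequence length.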

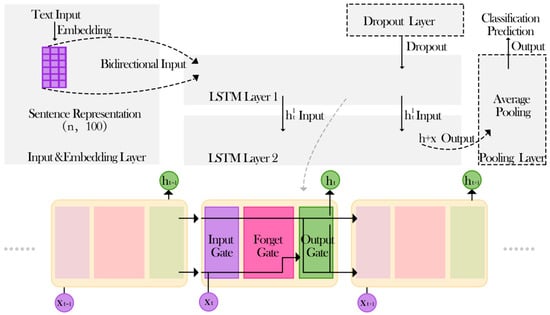

3.3.3. LSTM Model

The TextLSTM model (Figure 5) is built upon the long short-term memory (LSTM) network, a recurrent architecture designed to mitigate the vanishing-gradient problem in sequential modeling. Unlike convolutional approaches that focus on local feature extraction, LSTM networks are effective at capturing long-range dependencies, which are essential for understanding the sequential and contextual nature of text.

Figure 5.

TextLSTM Model Architecture.

In this model, pre-trained embeddings are used to initialize the input representation, which is then processed by stacked LSTM layers that maintain and update hidden states across time steps. To further enhance its ability to represent context, a bidirectional structure is employed, enabling the network to consider both forward and backward dependencies simultaneously. This dual perspective provides a more comprehensive understanding of construction cost texts, where meaning often depends on both preceding and following terms. Instead of relying solely on the final hidden state, average pooling is applied across all time steps, producing a stable representation that integrates information from the entire sequence. The aggregated features are then passed to fully connected layers to perform classification. Dropout regularization is applied during training to reduce overfitting. Through its sequential processing and memory mechanisms, TextLSTM captures semantic flows and dependencies that span beyond local windows, complementing the feature-focused convolutional models and enriching the overall framework for cost text classification.
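The bidirectional pass with average pooling can be sketched in NumPy with a single LSTM layer per direction. The study stacks several layers, which this sketch omits for brevity; sizes and initialization are assumptions. Gate order in the packed weight matrices (input, forget, candidate, output) is a convention chosen here.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_pass(x, W, U, b):
    """One LSTM direction. x: (T, D); W: (D, 4H); U: (H, 4H); b: (4H,). Returns (T, H)."""
    H = U.shape[0]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in x:
        z = x_t @ W + h @ U + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])            # input and forget gates
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])    # candidate and output gate
        c = f * c + i * g        # cell state: keep part of old memory, write new content
        h = o * np.tanh(c)       # hidden state passed to the next step
        outputs.append(h)
    return np.stack(outputs)


def bilstm_classify(token_ids, p):
    x = p["embedding"][token_ids]
    fwd = lstm_pass(x, *p["fwd"])
    bwd = lstm_pass(x[::-1], *p["bwd"])[::-1]       # re-align backward states with time steps
    states = np.concatenate([fwd, bwd], axis=1)     # (T, 2H)
    pooled = states.mean(axis=0)                    # average pooling over all time steps
    w_fc, b_fc = p["fc"]
    return pooled @ w_fc + b_fc                     # class logits


def init_bilstm(vocab_size, emb_dim, hidden, n_classes, seed=0):
    rng = np.random.default_rng(seed)

    def direction():
        return (rng.normal(0, 0.1, (emb_dim, 4 * hidden)),
                rng.normal(0, 0.1, (hidden, 4 * hidden)),
                np.zeros(4 * hidden))

    return {
        "embedding": rng.normal(0, 0.1, (vocab_size, emb_dim)),
        "fwd": direction(),
        "bwd": direction(),
        "fc": (rng.normal(0, 0.1, (2 * hidden, n_classes)), np.zeros(n_classes)),
    }
```

Averaging over all time steps, rather than taking only the last state, means a unit or material term early in a long description still contributes directly to the pooled vector.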

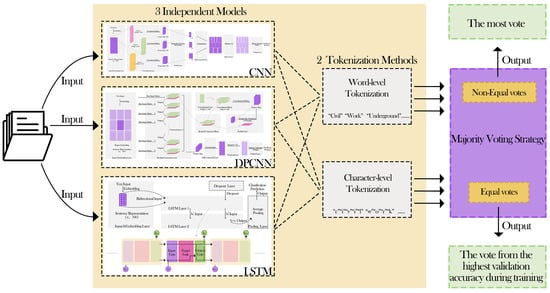

3.4. Ensemble Strategy

Model ensembling is a widely used strategy for improving performance by combining the predictions of multiple independent models to achieve stronger generalization than any single model. Given the complexity and diversity of the cost classification task in building construction projects, this study adopts an ensemble approach to integrate the strengths of the three baseline models under both character-level and word-level tokenization (Figure 6). The objective is to construct a more stable and accurate classification framework tailored to the characteristics of construction cost texts.

Figure 6.

The Ensemble Model Architecture.

3.4.1. Independent Training of Base Models

Three baseline deep learning architectures—TextCNN, TextDPCNN, and TextLSTM—were employed to capture complementary aspects of textual information. Each of these models was trained under two tokenization settings: character-level segmentation, which emphasizes fine-grained morphological patterns and alleviates out-of-vocabulary issues, and word-level segmentation, which preserves semantic units and enhances contextual interpretation. As a result, six independent classifiers were obtained.

To guarantee consistency across models, all six were trained on the same dataset partitions, with identical training, validation, and test splits. Furthermore, hyperparameters were tuned in a uniform manner to eliminate confounding effects, enabling the ensemble to combine models of comparable maturity.

3.4.2. Prediction Collection

The second stage involved gathering predictions from the independently trained models. Each sample in the test set was passed sequentially through all six classifiers, producing six independent predictions. This process generated a prediction matrix where rows correspond to samples and columns to models.

The rationale for this step lies in the diversity of predictive behavior. CNN-based models, for example, tend to excel at recognizing localized patterns, while LSTM-based models are better at capturing sequential dependencies, and DPCNN is adept at representing deeper hierarchical abstractions. By retaining the predictions of all models, the ensemble framework ensures that no single perspective dominates the classification process, thereby maintaining the complementary strengths of different architectures.

3.4.3. Majority Voting Integration

The six prediction outputs were aggregated through a majority voting mechanism. For each test sample, the label most frequently predicted across the six models was selected as the ensemble’s final output. A tie-breaking rule was incorporated to further enhance decision reliability. In cases where two or more categories received equal votes, the ensemble defaulted to the prediction from the model that had achieved the highest validation accuracy during training. This adjustment ensures that the ensemble is not only democratic but also guided by performance-based evidence. Through this three-stage design, the ensemble model integrates multi-architecture and multi-granularity perspectives.
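The prediction-collection and voting stages can be sketched as below, assuming each trained classifier is a callable that returns a label. Function names are hypothetical. The tie-break is read literally: on a tie, the prediction of the model with the highest validation accuracy is used, even if that label is not among the tied leaders. Restricting the fallback to the tied labels would be an equally plausible reading.

```python
from collections import Counter


def prediction_matrix(models, samples):
    # Rows are samples, columns are models (e.g. the six character/word-level classifiers).
    return [[model(s) for model in models] for s in samples]


def majority_vote(predictions, val_accuracy):
    """Majority vote per row; ties fall back to the best-validated model's prediction."""
    best_model = max(range(len(val_accuracy)), key=lambda m: val_accuracy[m])
    final = []
    for row in predictions:
        ranked = Counter(row).most_common()
        top_votes = ranked[0][1]
        tied = [label for label, votes in ranked if votes == top_votes]
        final.append(tied[0] if len(tied) == 1 else row[best_model])
    return final
```

For example, with validation accuracies `[0.95, 0.94, 0.96, 0.97, 0.93, 0.92]` the fourth model breaks ties, so a 3–3 split between labels 1 and 2 resolves to whatever that model predicted.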

4. Experiments

4.1. Experimental Setup

The experimental setup was designed to ensure the reliability and reproducibility of model training. The dataset was compiled from the tender control prices of building construction projects in Zhejiang Province between 2018 and 2023. Data collection adhered strictly to the national Code of Bills of Quantities and Valuation for Construction Works (GB 50500-2013) [] and the 2018 edition of the Zhejiang Provincial Budget Quota for Building and Decoration Engineering []. The corpus comprises 12,838 cost item records from real tender control prices of Zhejiang building projects, covering affordable housing, schools, hospitals, and factories. Labels follow a three-level taxonomy, with samples distributed across five major disciplines and 23 sub-disciplines. The resulting dataset spans four major disciplines (civil works, electromechanical installation, interior fit-out, and landscape greening) and covers 40 representative projects, including residential compounds, educational facilities, and medical institutions.

To guarantee data integrity, duplicates were systematically identified and removed. A total of 1647 duplicate records, representing 0.34% of the raw corpus, were eliminated. After deduplication, 12,838 unique samples remained as the basis for subsequent experiments. These entries were aligned with the XML schema specified in the Zhejiang provincial Data Standard for Construction-Project Pricing Deliverables and transformed into structured records comprising three hierarchical levels of cost data: unit works, divisional work sections, and BoQ items.
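The paper does not state how duplicates were detected. The following minimal Python sketch assumes that two records are duplicates when their whitespace-normalized text and their label coincide. The `(text, label)` record layout and the function names are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical record layout: (cost-item description, label code).
Record = Tuple[str, str]


def normalize(text: str) -> str:
    """Collapse runs of whitespace so formatting differences do not hide duplicates."""
    return " ".join(text.split())


def deduplicate(records: Iterable[Record]) -> Tuple[List[Record], int]:
    """Drop repeated (normalized text, label) pairs, keeping the first occurrence.

    Returns the unique records in their original order and the number removed.
    """
    seen: Set[Record] = set()
    unique: List[Record] = []
    removed = 0
    for text, label in records:
        key = (normalize(text), label)
        if key in seen:
            removed += 1
            continue
        seen.add(key)
        unique.append((text, label))
    return unique, removed
```

Because the label is part of the key, identical texts with conflicting labels are kept. Such records may deserve separate expert review rather than silent removal; whether the authors handled them this way is not stated.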

Preprocessing was conducted to ensure textual consistency and enhance learnability. A stop-word lexicon was constructed by extending the Harbin Institute of Technology stop-word list with 54 domain-specific non-informative tokens frequently occurring in cost texts. In addition, a domain lexicon of 2178 curated construction expressions (e.g., “reinforced concrete,” “waterproof membrane,” “cable tray”) was incorporated into the tokenization process, enabling precise recognition of compound terms and technical terminology. Tokenization was implemented at both the character level and the word level, thus providing two complementary representations: the former addressed out-of-vocabulary issues and captured morphological details, while the latter preserved semantic integrity for phrase-level understanding.
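The two tokenization views can be illustrated with a short Python sketch. The paper does not name its word segmenter, so the word-level function substitutes plain forward maximum matching against a domain lexicon. The Chinese lexicon entries (assumed renderings of terms such as "reinforced concrete" 钢筋混凝土, "waterproof membrane" 防水卷材, and "cable tray" 电缆桥架) and the one-word stop list are toy examples. They are not the 2178-term lexicon or the extended HIT list.

```python
from typing import List, Set


def char_tokenize(text: str, stop_words: Set[str]) -> List[str]:
    """Character-level view: every non-space character that is not a stop word."""
    return [ch for ch in text if not ch.isspace() and ch not in stop_words]


def word_tokenize(
    text: str, lexicon: Set[str], stop_words: Set[str], max_len: int = 8
) -> List[str]:
    """Word-level view via forward maximum matching against a domain lexicon.

    At each position the longest lexicon entry (up to `max_len` characters) is
    taken; if none matches, a single character is emitted. Stop words are
    removed afterwards.
    """
    tokens: List[str] = []
    i = 0
    while i < len(text):
        if text[i].isspace():
            i += 1
            continue
        # Try the longest candidate first; a single character always matches.
        for j in range(min(len(text), i + max_len), i, -1):
            if j == i + 1 or text[i:j] in lexicon:
                tokens.append(text[i:j])
                i = j
                break
    return [t for t in tokens if t not in stop_words]
```

For instance, with the toy lexicon `{"现浇", "钢筋混凝土", "防水卷材", "电缆桥架"}`, `word_tokenize("现浇钢筋混凝土柱", ...)` yields `["现浇", "钢筋混凝土", "柱"]`, whereas `char_tokenize` yields the eight single characters. The compound term stays intact only in the word-level view.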

The training corpus amounted to 4.8 GB of raw cost texts. Word embeddings were trained using the Skip-gram Word2Vec model with negative sampling. The embedding dimensionality was set to 100, with a context window of 2 for character-level tokenization and 1 for word-level tokenization. Model parameters were initialized from the Xavier uniform distribution and optimized with the Adam algorithm. All models were trained for a maximum of 40 epochs under both character-level and word-level tokenization, with early stopping based on the validation loss (dev_loss) evaluated every 10 mini-batches. Whenever the current dev_loss improved upon the historical best (dev_best_loss), dev_best_loss was updated, a checkpoint was saved, and the iteration was recorded as last_improve. If dev_loss failed to improve for 100 consecutive validations (require_improvement = 100), a stop flag was raised and training was terminated at the beginning of the next epoch. All reported results are from the checkpoint with the best dev loss. Each sequence was represented as a tensor $X \in \mathbb{R}^{L \times 100}$, where L denotes the sequence length, truncated to a maximum of 512 tokens.
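The early-stopping rule can be sketched in Python. The names `dev_loss`, `dev_best_loss`, `last_improve`, and `require_improvement` follow the text. The class, the loop sizes, and the simulated loss curve are hypothetical, and no real model is trained. The phrase "terminated at the beginning of the next epoch" is read here as follows: the batch loop is left as soon as the flag is raised, and the epoch loop exits before starting another epoch.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class EarlyStopping:
    """Validation-loss early stopping following the rule in the text."""

    require_improvement: int = 100  # validations allowed without a new best
    dev_best_loss: float = float("inf")
    last_improve: int = 0  # index of the validation that set the current best
    n_validations: int = 0
    stop: bool = False

    def update(self, dev_loss: float) -> bool:
        """Record one validation; return True when a checkpoint should be saved."""
        self.n_validations += 1
        if dev_loss < self.dev_best_loss:
            self.dev_best_loss = dev_loss
            self.last_improve = self.n_validations
            return True
        if self.n_validations - self.last_improve >= self.require_improvement:
            self.stop = True
        return False


def train(
    dev_loss_at: Callable[[int], float],
    stopper: EarlyStopping,
    num_epochs: int = 40,
    batches_per_epoch: int = 200,
    eval_every: int = 10,
) -> Tuple[int, List[int]]:
    """Simulated loop; returns (batches run, batches at which checkpoints were saved)."""
    total_batch = 0
    checkpoints: List[int] = []
    for _epoch in range(num_epochs):
        if stopper.stop:  # flag is honoured before the next epoch starts
            break
        for _ in range(batches_per_epoch):
            total_batch += 1
            # A real loop would run the forward/backward pass and an Adam step here.
            if total_batch % eval_every == 0:
                if stopper.update(dev_loss_at(total_batch)):
                    checkpoints.append(total_batch)  # stand-in for saving weights
                if stopper.stop:
                    break  # leave the current epoch's batch loop
    return total_batch, checkpoints
```

Consider a simulated loss that improves until batch 50 and then stalls. Validations run every 10 batches, so the 100th non-improving validation falls at batch 1050, where training stops. The best checkpoint is the one saved at batch 50.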

4.2. Evaluation Metrics

To quantitatively evaluate the classification models, we use four standard metrics: accuracy, precision, recall, and F1-score. Each captures a different aspect of classification quality, giving a more comprehensive assessment of model behavior than overall correctness alone. Let TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. For per-class results, these counts are taken for each class against all other classes.

Accuracy reflects the proportion of correctly predicted samples out of the total number of samples. It is a global indicator of performance and is defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Precision measures the proportion of samples predicted as a given class that actually belong to that class. It is defined as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall measures the model’s ability to identify positive samples within a given class, that is, the proportion of the actual instances of a class that the classifier retrieves. It is formally expressed as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

A high recall indicates that the model rarely misses relevant instances. This matters in domains where false negatives carry high costs, such as construction cost management, where misclassifying certain categories may result in inaccurate budgeting. The F1-score provides a balanced evaluation by combining precision and recall into a single metric through their harmonic mean. It is useful in multi-class settings with imbalanced distributions, as it ensures that both the correctness of retrieved instances and the completeness of retrieval are jointly considered. It is calculated as follows:

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

By employing these metrics together, the evaluation framework balances overall accuracy with class-sensitive measures, allowing a nuanced analysis of the ensemble model’s strengths and weaknesses across different construction cost categories.
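The metrics defined in this section can be computed directly from predicted and true labels. The following is a minimal pure-Python sketch that assumes one-vs-rest counting per class. TN is not needed for precision, recall, or F1, and in the multi-class case accuracy reduces to the share of correctly labelled samples. The sketch also assumes an unweighted macro average. The paper does not state its averaging scheme here, so the macro figure is an assumption.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple

Scores = Tuple[float, float, float]  # (precision, recall, F1)


def _safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def classification_report(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[float, Dict[Hashable, Scores], Tuple[float, ...]]:
    """Accuracy, per-class (one-vs-rest) scores, and their unweighted macro average."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but the sample is not p
            fn[t] += 1  # the sample is t, but t was missed
    per_class: Dict[Hashable, Scores] = {}
    for c in set(y_true) | set(y_pred):
        precision = _safe_div(tp[c], tp[c] + fp[c])
        recall = _safe_div(tp[c], tp[c] + fn[c])
        f1 = _safe_div(2 * precision * recall, precision + recall)
        per_class[c] = (precision, recall, f1)
    accuracy = sum(tp.values()) / len(y_true)
    k = len(per_class)
    macro = tuple(sum(s[i] for s in per_class.values()) / k for i in range(3))
    return accuracy, per_class, macro
```

Take `y_true = ["A", "A", "B", "C"]` and `y_pred = ["A", "B", "B", "C"]`. Accuracy is 0.75, class `A` has precision 1.0 and recall 0.5, and the macro precision is 2.5/3 ≈ 0.833.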

4.3. Results and Performance

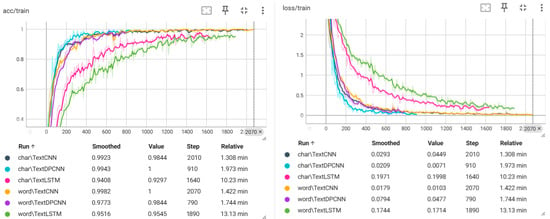

4.3.1. Performance of Individual Models

To evaluate the baseline performance of the independent models, three deep learning architectures—TextCNN, TextDPCNN, and TextLSTM—were trained and tested under both character-level and word-level tokenization. The results are presented in Figure 7. Overall, all models demonstrated competitive performance, with character-level tokenization consistently outperforming word-level tokenization. For example, TextCNN achieved an accuracy of 97.35% under character-level input, while its performance under word-level input decreased slightly to 95.99%. Similarly, TextDPCNN reached 97.31% in the character-level setting but dropped to 95.40% at the word level. The TextLSTM model, despite its ability to capture sequential dependencies, yielded comparatively lower results, with 93.81% in character-level and 92.41% in word-level tokenization.

Figure 7. Training Results of Independent Models.

These findings indicate that character-level tokenization is more effective for construction cost text classification, likely due to its ability to capture fine-grained linguistic features and reduce issues associated with out-of-vocabulary terms. From a structural perspective, CNN- and DPCNN-based models show advantages in extracting local patterns and hierarchical semantic information, while LSTM-based models, although proficient in modeling sequential information, performed less effectively in this particular task.

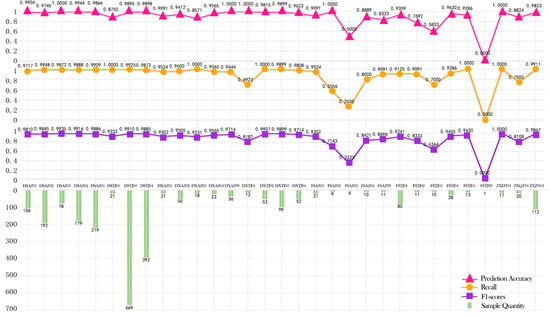

4.3.2. Performance of Ensemble Model

To further assess the effectiveness of the proposed ensemble strategy, the performance of the integrated model was compared against individual baselines at both macro and micro levels. As shown in Table 1 and Figure 8, the ensemble achieved an overall accuracy of 97.59%, surpassing all individual models. Specifically, it outperformed the best-performing independent model (TextCNN with character-level tokenization, 97.35%) by 0.24 percentage points and exceeded the weakest model (TextLSTM with word-level tokenization, 92.41%) by 5.18 percentage points. These results indicate that ensembling yields more consistent performance and overall effectiveness for this task.

Table 1. The Macro-Level Performance Testing Results of the Models.

Figure 8. The Micro-Level Performance Testing Results of the Ensemble Model.

At the micro level, the ensemble consistently attained high precision, recall, and F1-scores across most categories. For example, categories such as DSAZ01, DSAZ02, and DSAZ03 all achieved F1-scores above 0.98, showing the ensemble’s ability to handle frequent classes with high reliability. Even in smaller or less frequent categories, such as DSTJ01 and DXAZ03, the ensemble maintained acceptable performance with recall values close to 1.0, although some classes with very limited samples (e.g., FSTJ99) exhibited unstable results. This outcome highlights the ensemble model’s stronger generalization ability and greater resilience to imbalanced distributions compared with single models. Table 2 summarizes representative misclassified samples from the experiment, together with an analysis of their causes.

Table 2. Error Analysis of Representative Misclassifications in Text Classification in this study.

In summary, the ensemble model demonstrated superior accuracy and stability compared with the individual models. These results validate the effectiveness of the ensemble strategy for construction cost text classification, particularly in handling diverse and imbalanced categories.

5. Discussion

The experimental results provide several important insights into construction cost text classification. First, the superiority of character-level over word-level tokenization indicates that construction cost texts, which often contain domain-specific abbreviations, compound terms, and irregular phrasing, benefit from finer-grained input representations. Character-level features allow models to bypass the limitations of predefined vocabularies, handling out-of-vocabulary terms and capturing subtle variations in cost item descriptions. This finding is consistent with prior work in specialized domains where token sparsity and terminological heterogeneity challenge word-level approaches.

Second, the comparative performance of the three baseline architectures shows that the convolutional models (TextCNN and TextDPCNN) consistently outperform the recurrent model (TextLSTM) in this application. CNN-based architectures excel at extracting local n-gram patterns and are highly effective at identifying the fixed expressions and collocations typical of cost descriptions. DPCNN extends this advantage by adding hierarchical depth with residual connections, which lets it capture both shallow and deep semantic structures while mitigating vanishing gradients. In contrast, LSTM, despite its strength in modeling sequential dependencies, tends to be less effective in this domain because cost texts are often short, fragmented, and less dependent on long-range sequential context. Instead, they rely more on local terminology and structural cues, which CNNs are better equipped to capture.

Third, the ensemble strategy delivers clear accuracy benefits. By integrating predictions from six independently trained models under different tokenization granularities, the ensemble reduces the variance of individual classifiers and compensates for their individual weaknesses.
This design is particularly valuable in handling imbalanced categories, as shown in the micro-level analysis, where rare classes such as DXAZ03 or DSTJ01 still achieved high recall when incorporated into the ensemble framework. While the ensemble’s macro gain over the best single model is modest, it stabilizes per-class performance and improves recall on long-tail categories, reducing worst-case errors that matter in practice; the added cost is controlled by lightweight components and can be further bounded via cascaded inference or distillation for production use.

For industrial implementations, the proposed framework can be coupled with information-delivery specifications so that layered decisions operate under explicit guards and the cost-calculation process remains auditable. It can also align with existing BIM data models, enabling the same layered logic and rules to be reused consistently across engineering software environments. In this way, it supports an end-to-end pipeline from data classification and schema matching to intelligent information delivery. In contrast to approaches that depend on structured BIM assets and large transformer pipelines, this study adopts a lightweight, text-first, taxonomy-guided framework. Its contribution is to operationalize a cost taxonomy as a short-text classification workflow that combines character and word representations and uses an ensemble to stabilize performance, while remaining practical for offline and resource-limited deployment.

The main limitations concern the absence of explicit hierarchy-aware objectives and metrics, the lack of multimodal integration with BoQ and BIM artifacts, and the need to test generalization across regions and time periods beyond the current corpus. Some rare categories with extremely small sample sizes (e.g., FSTJ99) yielded unstable results, reflecting the challenge of data sparsity. Moreover, while character-level tokenization enhances robustness, it increases sequence length and may introduce additional computational costs. Another limitation lies in the interpretability of deep models: despite high accuracy, explaining why a given cost item is assigned to a particular category remains difficult, which may reduce transparency in practical applications. The present model is trained on Zhejiang projects (2018–2023); cross-region transfer, cross-standard adaptation, and post-2023 temporal drift are therefore out of scope.

From an application perspective, the proposed ensemble framework offers tangible value for construction cost management. Neither BERT nor RoBERTa was adopted in this study because of engineering constraints: typical BERT-class models exceed the project’s compute, memory, and latency budgets, whereas the lightweight CNN/DPCNN/LSTM architectures achieve the target accuracy with lower resource demands. Moreover, the system must support offline deployment. This makes large pretrained models, with their storage and runtime dependencies, ill-suited to resource-constrained field environments, while lightweight models remain portable under these conditions. By automating the classification of cost items with high reliability, the system can reduce manual workload, improve the consistency of cost documentation, and support downstream tasks such as budget estimation and project monitoring. The findings also suggest that CNN-based and ensemble architectures are especially well suited to engineering texts characterized by short, domain-specific phrases, while LSTM-based models may be less effective in such contexts.

6. Conclusions and Future Work

This study addresses the problem of cost classification in building construction projects and proposes a deep learning framework that integrates CNN, DPCNN, and LSTM models. It introduces a character–word complementary tokenization paradigm for engineering short-text classification: the character channel preserves special symbols and terms, while the word channel captures phrases and local semantic relations. Under unified training with late fusion, the two channels jointly address term segmentation, symbol retention, and semantic modeling. Experiments show improved effectiveness without reliance on a particular architecture. This suggests that the paradigm suits lightweight, offline deployments and can serve as a general pre-model optimization strategy in engineering short-text settings. Experimental results show that the ensemble model achieves high accuracy on the test set and outperforms all single models, with particularly strong performance under character-level tokenization. These findings demonstrate that model ensembling can improve the accuracy and robustness of cost classification, offering a feasible pathway for automating cost data processing. The proposed method not only provides empirical evidence for the applicability of deep learning to construction cost text classification but also offers practical support for cost management in building projects. By enhancing classification efficiency and accuracy, this research contributes to the digitalization and intelligent transformation of cost management, thereby improving the scientific rigor of project decision making and the effectiveness of cost control.

As digitalization and smart construction continue to advance in the construction industry, the complexity and diversity of cost data will further increase. The ensemble framework can be extended to cross-regional and cross-project datasets to test its generalization capability. Future work will extend the current flat predictor to hierarchy-aware classification, using the established three-level taxonomy to inform training objectives and decoding. We will also report level-wise metrics and hierarchical partial credit to reflect realistic misclassification costs. Leveraging parent–child relations in this way is expected to improve performance on long-tail sub-categories. Moreover, integrating multimodal information such as images and tables could support a more comprehensive intelligent analysis system. Further work will also address class imbalance through data augmentation or transfer learning and improve interpretability via attention mechanisms. With the development of lightweight modeling and explainable AI techniques, the proposed approach is expected to have broader application prospects and provide continuous technological support for construction cost management.

Author Contributions

Formal analysis, H.S.; Funding acquisition, H.S. and G.R.; Methodology, H.S. and G.R.; Project administration, H.S. and G.R.; Resources, H.S.; Supervision, G.R.; Validation, H.S.; Writing—original draft, H.S. and G.R.; Writing—review and editing, H.S., G.R. and L.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This project is supported by the National Natural Science Foundation of China (Project No.: 52308031), the sub-project of the National Key R&D Program (Project No.: 2024YFC3809903), and the Fundamental Research Funds for the Central Universities of China.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study or due to technical/time limitations. Requests to access the datasets should be directed to PowerChina Huadong Engineering Corporation Limited.

Conflicts of Interest

Author Huajian Sun was employed by the PowerChina Huadong Engineering Corporation Limited. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Okereke, R.A.; Zakariyau, M.; Eze, E. The Role of Construction Cost Management Practices on Construction Organisations’ Strategic Performance. J. Proj. Manag. Pract. 2022, 2, 20–39.

- Wang, S.; Zhu, D.; Wang, J. Impact of policy adjustments on low carbon transition strategies in construction using evolutionary game theory. Sci. Rep. 2025, 15, 3469.

- Shamim, M.M.I.; Hamid, A.B.B.A.; Nyamasvisva, T.E.; Rafi, N.S.B. Advancement of Artificial Intelligence in Cost Estimation for Project Management Success: A Systematic Review of Machine Learning, Deep Learning, Regression, and Hybrid Models. Modelling 2025, 6, 35.

- Elmousalami, H.; Maxy, M.; Hui, F.K.P.; Aye, L. AI in automated sustainable construction engineering management. Autom. Constr. 2025, 175, 106202.

- Abioye, S.O.; Oyedele, L.O.; Akanbi, L.; Ajayi, A.; Delgado, J.M.D.; Bilal, M.; Akinade, O.O.; Ahmed, A. Artificial intelligence in the construction industry: A review of present status, opportunities and future challenges. J. Build. Eng. 2021, 44, 103299.

- Tang, S.; Liu, H.; Almatared, M.; Abudayyeh, O.; Lei, Z.; Fong, A. Towards Automated Construction Quantity Take-Off: An Integrated Approach to Information Extraction from Work Descriptions. Buildings 2022, 12, 354.

- Lee, G.; Lee, G.; Chi, S.; Oh, S. Automatic Classification of Construction Work Codes in Bill of Quantities of National Roadway Based on Text Analysis. J. Constr. Eng. Manag. 2023, 149, 04022163.

- Dopazo, D.A.; Mahdjoubi, L.; Gething, B.; Mahamadu, A. An automated machine learning approach for classifying infrastructure cost data. Comput. Civ. Infrastruct. Eng. 2024, 39, 1061–1076.

- Ahmed, Y.A.; Shehzad, H.M.F.; Khurshid, M.M.; Hassan, O.H.A.; Abdalla, S.A.; Alrefai, N. Examining the effect of interoperability factors on building information modelling (BIM) adoption in Malaysia. Constr. Innov. 2024, 24, 606–642.

- Taha, K.; Yoo, P.D.; Yeun, C.; Homouz, D.; Taha, A. A comprehensive survey of text classification techniques and their research applications: Observational and experimental insights. Comput. Sci. Rev. 2024, 54, 100664.

- Gasparetto, A.; Marcuzzo, M.; Zangari, A.; Albarelli, A. A Survey on Text Classification Algorithms: From Text to Predictions. Information 2022, 13, 83.

- Karim, A.; Ryu, S.; Jeong, I.C. Ensemble learning for biomedical signal classification: A high-accuracy framework using spectrograms from percussion and palpation. Sci. Rep. 2025, 15, 21592.

- Zhang, Z.; Ma, Y.; Hua, Y. Financial Fraud Identification Based on Stacking Ensemble Learning Algorithm: Introducing MD&A Text Information. Comput. Intell. Neurosci. 2022, 2022, 1780834.

- Chen, H.; Wu, L.; Chen, J.; Lu, W.; Ding, J. A comparative study of automated legal text classification using random forests and deep learning. Inf. Process. Manag. 2022, 59, 102798.

- RICS. RICS New Rules of Measurement; RICS: London, UK, 2009; p. 306.

- Deza, J.I.; Ihshaish, H.; Mahdjoubi, L. A Machine Learning Approach to Classifying Construction Cost Documents into the International Construction Measurement Standard. arXiv 2022.

- Park, D.; Yun, S. Construction Cost Prediction Using Deep Learning with BIM Properties in the Schematic Design Phase. Appl. Sci. 2023, 13, 7207.

- Li, Q.; Yang, Y.; Yao, G.; Wei, F.; Li, R.; Zhu, M.; Hou, H. Classification and application of deep learning in construction engineering and management—A systematic literature review and future innovations. Case Stud. Constr. Mater. 2024, 21, e04051.

- Zhang, S.; Zhang, S.; Liu, H.; Wang, C.; Zhao, Z.; Wang, X.; Yan, L. Semantic enrichment of BIM models for construction cost estimation in pumped storage hydropower using industry foundation classes and interconnected data dictionaries. Adv. Eng. Inform. 2025, 68, 103670.

- Lee, S.K.; Kim, K.R.; Yu, J.H. BIM and ontology-based approach for building cost estimation. Autom. Constr. 2014, 41, 96–105.

- Im, H.; Ha, M.; Kim, D.; Choi, J. Development of an Ontological Cost Estimating Knowledge Framework for EPC Projects. KSCE J. Civ. Eng. 2021, 25, 1578–1591.

- Vakaj, E.; Cheung, F.; Cao, J.; Tawil, A.-R.H.; Patlakas, P. An Ontology-Based Cost Estimation for Offsite Construction. J. Inf. Technol. Constr. 2023, 28, 220–245.

- Williams, T.P.; Gong, J. Predicting construction cost overruns using text mining, numerical data and ensemble classifiers. Autom. Constr. 2014, 43, 23–29.

- Wu, S.; Zhang, N.; Luo, X.; Lu, W.-Z. Intelligent optimal design of floor tiles: A goal-oriented approach based on BIM and parametric design platform. J. Clean. Prod. 2021, 299, 126754.

- Li, Q.; Peng, H.; Li, J.; Xia, C.; Yang, R.; Sun, L.; Yu, P.S.; He, L. A Survey on Text Classification: From Shallow to Deep Learning. arXiv 2021.

- Soni, S.; Chouhan, S.S.; Rathore, S.S. TextConvoNet: A convolutional neural network based architecture for text classification. Appl. Intell. 2023, 53, 14249–14268.

- Zhang, H. Research on Text Classification Based on LSTM-CNN. In Proceedings of the 2024 5th International Conference on Computer Science and Management Technology, Xiamen, China, 18–20 October 2024; pp. 277–282.

- Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M.; Gao, J. Deep Learning Based Text Classification: A Comprehensive Review. ACM Comput. Surv. 2021, 54, 62.

- Jianan, G.; Kehao, R.; Binwei, G. Deep learning-based text knowledge classification for whole-process engineering consulting standards. J. Eng. Res. 2024, 12, 61–71.

- Sousa, L.J.; Martins, J.P.; Sanhudo, L.; Baptista, J.S. Automation of text document classification in the budgeting phase of the Construction process: A Systematic Literature Review. Constr. Innov. 2024, 24, 292–318.

- Zhong, B.; Shen, L.; Pan, X.; Zhong, X.; He, W. Dispute Classification and Analysis: Deep Learning–Based Text Mining for Construction Contract Management. J. Constr. Eng. Manag. 2024, 150, 04023151.

- Mohammed, A.; Kora, R. An effective ensemble deep learning framework for text classification. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 8825–8837.

- Kathirgamanathan, A.; Patel, A.; Khwaja, A.S.; Venkatesh, B.; Anpalagan, A. Performance comparison of single and ensemble CNN, LSTM and traditional ANN models for short-term electricity load forecasting. J. Eng. 2022, 2022, 550–565.

- Kamateri, E.; Salampasis, M. An Ensemble Framework for Text Classification. Information 2025, 16, 85.

- Hou, D.; Liu, F.; Peng, H.; Gu, Y.; Tang, G. Temporal convolutional network construction and analysis of single-station TEC model. J. Atmos. Sol.-Terr. Phys. 2024, 262, 106309.

- Elmadjian, C.; Gonzales, C.; da Costa, R.L.; Morimoto, C.H. Online eye-movement classification with temporal convolutional networks. Behav. Res. Methods 2023, 55, 3602–3620.

- Priyadarshini, I.; Cotton, C. A novel LSTM–CNN–grid search-based deep neural network for sentiment analysis. J. Supercomput. 2021, 77, 13911–13932.

- Agbesi, V.K.; Chen, W.; Yussif, S.B.; Ukwuoma, C.C.; Gu, Y.H.; Al-antari, M.A. MuTCELM: An optimal multi-TextCNN-based ensemble learning for text classification. Heliyon 2024, 10, e38515.

- Zhou, Y.; Liao, L.; Gao, Y.; Huang, H. Extracting salient features from convolutional discriminative filters. Inf. Sci. 2021, 558, 265–279.

- Huynh, H.D.; Do, H.T.-T.; Van Nguyen, K.; Nguyen, N.L.-T. A Simple and Efficient Ensemble Classifier Combining Multiple Neural Network Models on Social Media Datasets in Vietnamese. In Proceedings of the 34th Pacific Asia Conference on Language, Information and Computation, Hanoi, Vietnam, 24–26 October 2020.

- Zangari, A.; Marcuzzo, M.; Rizzo, M.; Giudice, L.; Albarelli, A.; Gasparetto, A. Hierarchical Text Classification and Its Foundations: A Review of Current Research. Electronics 2024, 13, 1199.

- Kowsari, K.; Brown, D.E.; Heidarysafa, M.; Meimandi, K.J.; Gerber, M.S.; Barnes, L.E. HDLTex: Hierarchical Deep Learning for Text Classification. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 364–371.

- GB/T50500; Construction Project Bill of Quantities Pricing Standards. Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2024.

- Zhejiang Construction Project Cost Management Station. Zhejiang Provincial Budget Quota for Building and Decoration Engineering; Zhejiang Construction Project Cost Management Station: Hangzhou, China, 2013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).