Abstract

Artificial intelligence (AI) is rapidly reshaping gastrointestinal (GI) surgery by enhancing decision-making, intraoperative performance, and postoperative management. The integration of AI-driven systems is enabling more precise, data-informed, and personalized surgical interventions. This review provides a state-of-the-art overview of AI applications in GI surgery, organized into four key domains: surgical simulation, surgical computer vision, surgical data science, and surgical robot autonomy. A comprehensive narrative review of the literature was conducted, identifying relevant studies of technological developments in this field. In the domain of surgical simulation, AI enables virtual surgical planning and patient-specific digital twins for training and preoperative strategy. Surgical computer vision leverages AI to improve intraoperative scene understanding, anatomical segmentation, and workflow recognition. Surgical data science translates multimodal surgical data into predictive analytics and real-time decision support, enhancing safety and efficiency. Finally, surgical robot autonomy explores the progressive integration of AI for intelligent assistance and autonomous functions to augment human performance in minimally invasive and robotic procedures. Surgical AI has demonstrated significant potential across different domains, fostering precision, reproducibility, and personalization in GI surgery. Nevertheless, challenges remain in data quality, model generalizability, ethical governance, and clinical validation. Continued interdisciplinary collaboration will be crucial to translating AI from promising prototypes to routine, safe, and equitable surgical practice.

1. Introduction

AI has begun to profoundly impact surgery, influencing everything from pre-operative planning and training to intraoperative guidance and postoperative outcomes [1,2,3,4]. Gastrointestinal (GI) surgery encompasses a high volume of procedures, for instance, colorectal cancer screening via colonoscopy and laparoscopic abdominal operations, which generate vast amounts of visual and clinical data [5,6,7,8,9]. This abundance of data makes GI surgery a fertile ground for AI applications [10,11,12,13]. Recent AI techniques are being leveraged to enhance surgeons’ capabilities. Supervised learning (SL) is adopted in computer vision (CV) to improve the detection of GI lesions [14], to optimize surgical decision-making [15], and for patient-specific surgical simulation [16]. Reinforcement learning (RL) and imitation learning (IL) are investigated for workflow automation [17] and augmented dexterity in robotic surgeries [18]. Recent studies indicate that AI can enhance colorectal cancer screening by augmenting endoscopists’ visual detection of precancerous polyps and improving the classification of lesions [19]. Likewise, machine learning (ML) models are now matching or exceeding traditional statistical models in predicting GI surgical outcomes, aiding in risk assessment and tailored patient management [20].

Search Strategy and Study Selection

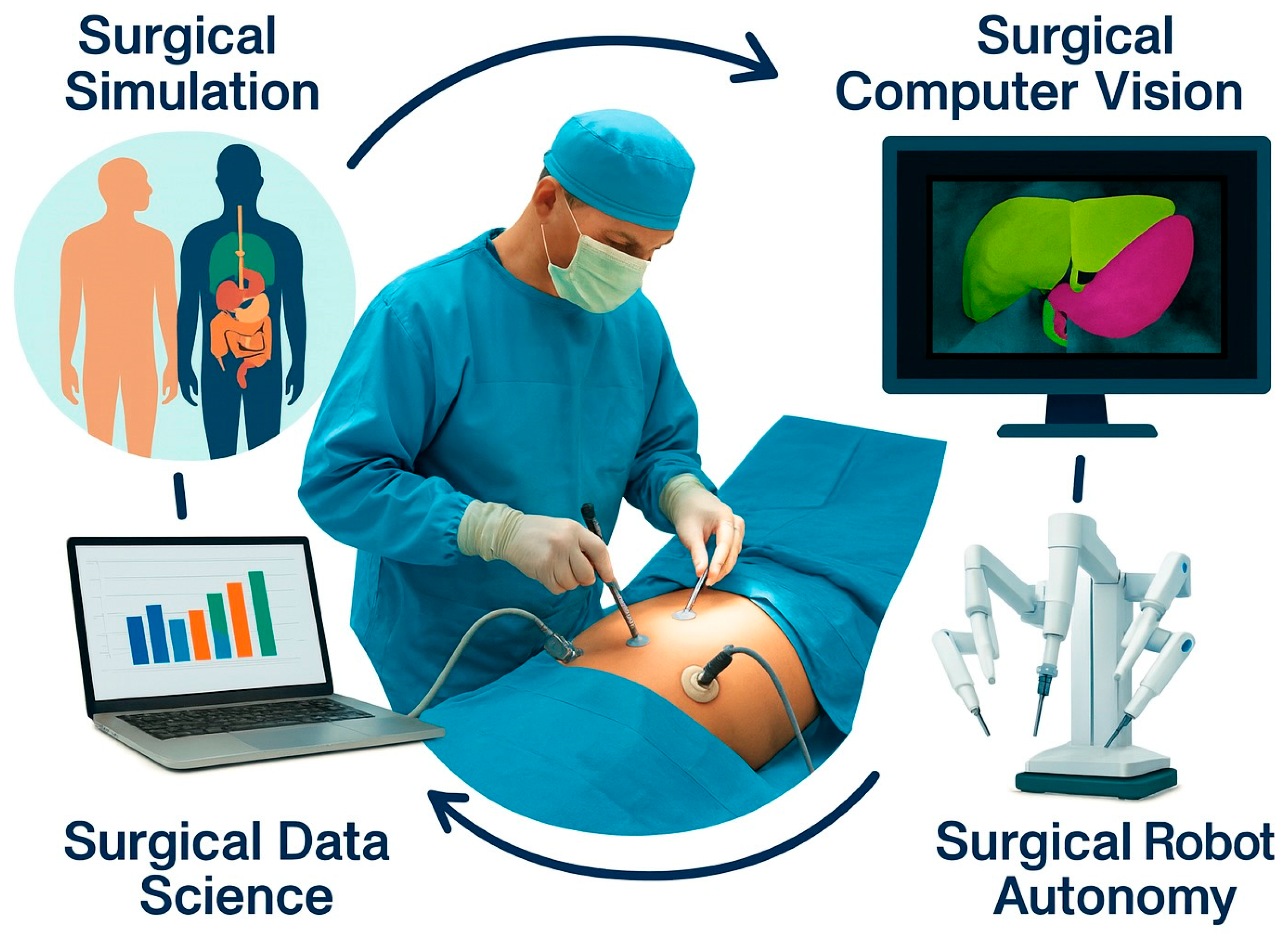

Based on our expertise, we identified four major domains of AI application in surgery (Figure 1): Surgical Simulation, Surgical Computer Vision, Surgical Data Science, and Surgical Robot Autonomy. For each domain, this review highlights state-of-the-art developments, current challenges, and potential research directions. Each section discusses core AI methodologies (e.g., digital twins, computer vision algorithms, workflow recognition, autonomy levels) and anchors them to GI surgery use cases, such as laparoscopic cholecystectomy, colorectal surgery, endoscopic interventions, and GI cancer surgery.

Figure 1.

Overview of the major applications and recent advances of artificial intelligence in minimally invasive and robotic gastrointestinal surgery provided in this review. The chapters discuss the applications of AI for surgical simulation (Section 2), surgical computer vision (Section 3), surgical data science (Section 4), and surgical robot autonomy (Section 5).

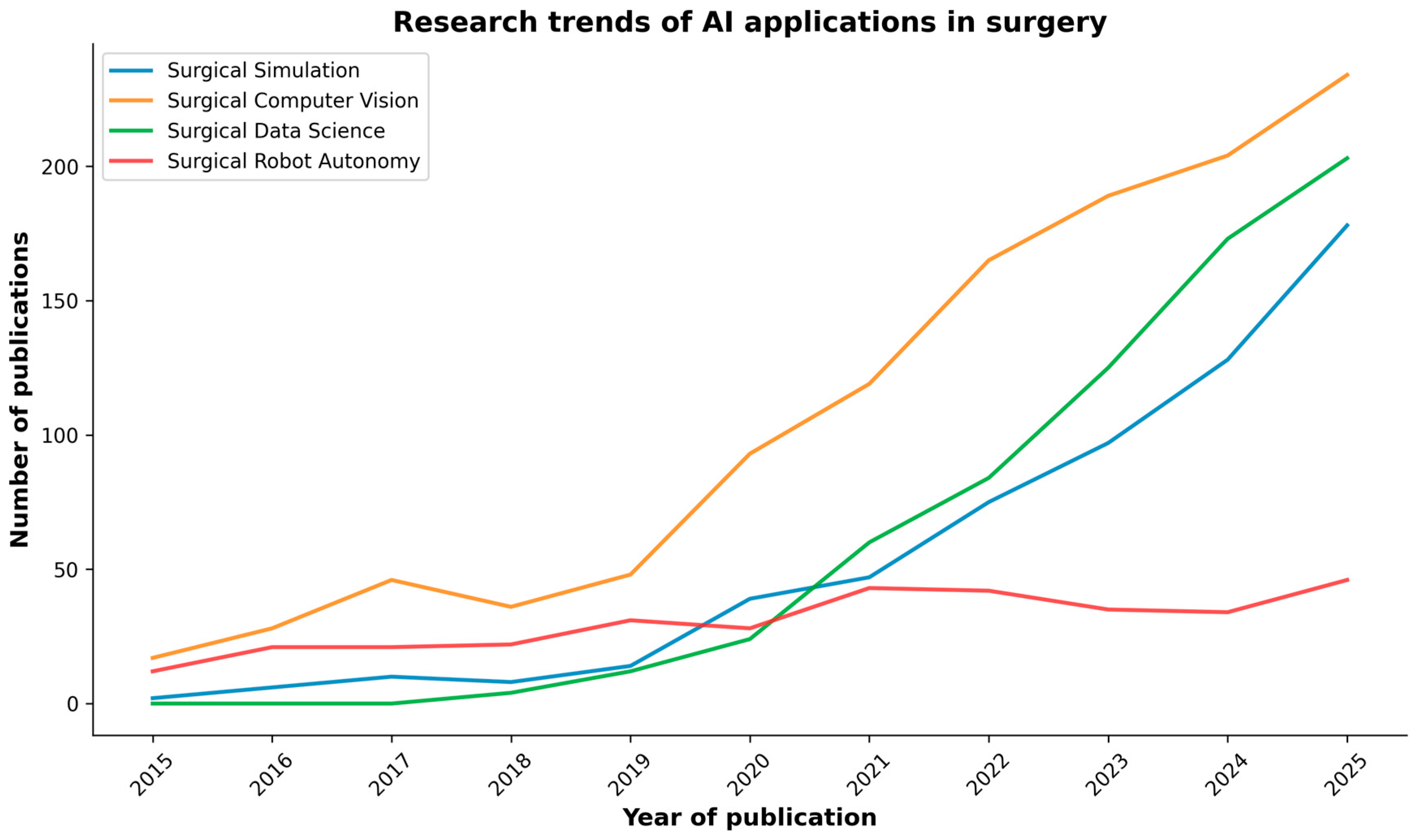

Because research on AI applications in surgery is increasing rapidly (Figure 2), we reviewed key studies retrieved from PubMed, IEEE Xplore, and Google Scholar. The search used keywords and phrases such as “Artificial Intelligence”, “Simulation”, “Computer Vision”, “Data Science”, “Robot Autonomy”, and “Gastrointestinal Surgery” to identify relevant research articles, reviews, and conference papers written in English within the last decade (2015–2025). For very recent works accepted at major conferences, we occasionally included preprints from open repositories such as arXiv and TechRxiv. Two independent reviewers screened the titles and abstracts of the identified articles for relevance. The full texts were then assessed against the inclusion criteria. Any discrepancies were resolved through discussion among all the authors.

Figure 2.

PubMed search results of publications on AI in surgery over the past decade, categorized by the major AI domains discussed in the review.

This narrative review does not intend to provide a comprehensive taxonomy of research publications, and no formal PRISMA workflow, quality scoring, or meta-analysis was performed. Instead, the article offers an integrated perspective on AI in minimally invasive and robotic gastrointestinal surgery by bringing four major domains into a unified framework. While summarizing recent advancements, we identify recurring challenges (data, validation, and governance) and provide a synthesis (Table 1) to guide future translational research.

Through these examples and recent studies, we illustrate how surgical AI is transforming training, intraoperative guidance, data-driven decision support, and robotic assistance in the GI domain.

2. Surgical Simulation

The integration of AI into surgical simulation (Table 1) is shifting the field from isolated task trainers toward data-driven coaching, automated assessment, and patient-specific digital twins. In clinical education and perioperative planning, particularly in hepatobiliary and colorectal surgery, AI-enabled tools are being deployed to enhance preparation and decision-making [16]. A virtual-reality (VR) trainer paired with an AI assessor has demonstrated face, content, and construct validity for early psychomotor skills, indicating that scalability and objectivity can coexist from the outset [21]. Beyond these foundations, VR-augmented differentiable simulations allow surgeons to co-design operative plans while a physics-constrained optimizer maintains physiological plausibility, offering an early view of interactive, twin-based planning workflows [22]. Layered onto simulation, AI provides adaptive feedback and supports governance and reporting standards [16,23], while digital-twin-assisted surgery frameworks specify data flows, validation steps, and ethical considerations for both training and intraoperative navigation [24,25].

In simulated skills training, AI-supported instruction can be advantageous relative to traditional expert tutoring. An AI coach has matched or improved learning efficiency compared with expert instruction [26], and augmenting human teaching with AI analytics has yielded superior acquisition and retention on procedural tasks [27]. When AI-assisted video analysis is coupled to structured coaching for simulated laparoscopic cholecystectomy, novice performance and safety endpoints improve without loss of efficiency, with high acceptability among trainees [28]. Systematic reviews confirm that simulated curricula, especially those providing continuous motion feedback, enhance global ratings and shorten operative time, supporting adoption at scale [29,30]. Complementing these data, a randomized comparison of real-time, multifaceted AI with in-person instruction shows comparable or better skill acquisition with the AI tutor, pointing to more equitable access where faculty bandwidth is limited [31].

The Virtual Operative Assistant demonstrated that explainable-AI scores can align with expert ratings, accelerating deliberate practice while preserving interpretability, an essential prerequisite for clinical acceptance [32]. More recent work introduces larger simulated-laparoscopy datasets and deep architectures that discriminate skill levels with strong performance, reducing annotation burden and enabling objective program-level evaluation [33]. In parallel, deep learning models trained on simulator kinematics can estimate bimanual expertise in real time, yielding granular learning trajectories that drive adaptive coaching loops [34].

AI is likewise transforming patient-specific modeling for rehearsal and planning. In rectal cancer, an AI pipeline can generate detailed three-dimensional pelvic reconstructions directly from MRI, supporting preoperative simulation and spatial understanding [35]. In hepatic surgery, AI improves the extraction of portal and hepatic venous anatomy from CT to support virtual hepatectomy and remnant assessment [36], and end-to-end pipelines now produce comprehensive anatomical models that also predict resection complexity, informing multidisciplinary decision-making [37]. A randomized comparison of AI-accelerated segmentation for 3D-printed models versus digital simulation alone reports improvements in preparation efficiency and selected intraoperative outcomes [38]. For complex hepatic procedures, mixed reality and AI are being integrated to enhance precision and safety, while exposing practical challenges in registration, workflow integration, and privacy that demand rigorous validation [25].

These developments converge toward dynamic, interactive simulations that link preoperative imaging with physics-based constraints [22,39], and toward digital twins for organ transplantation that simulate postoperative regeneration and functional recovery to personalize risk counseling and follow-up [40]. Finally, simulation environments underpin progress in surgical robotics: reinforcement learning benchmarks spanning spatial reasoning, deformable manipulation, dissection, and threading are lowering barriers to research on autonomy [41,42], while photorealistic digital twins and curated demonstrations enable behavior-cloning policies to master contact-rich subtasks such as needle handling and suturing, with systematic benchmarks across RGB and point-cloud visual inputs [43].

3. Surgical Computer Vision

Surgical computer vision (Table 1) has progressed from isolated prototypes to integrated systems that perceive the operative scene, document safety, assess skill, and begin to anticipate next actions in clinically meaningful ways. EndoNet established the feasibility of multi-task recognition (instruments and phases) from laparoscopic video and seeded today’s long-horizon models, while early phase-recognition pipelines on endoscopic video clarified how label design and temporal context affect real-world performance [44,45]. The CholecInstanceSeg dataset standardized instance-level tool segmentation for laparoscopic cholecystectomy and provided recommended splits and baselines to enable fair cross-study comparison [46]. In laparoscopic gastrectomy, convolutional neural networks (CNNs) reliably detected instruments and generated usage heatmaps [47]. Subsequent systems achieved real-time tool localization with clinically acceptable latency [48]; colorectal instrument-recognition models broadened coverage beyond cholecystectomy [49]; and a multi-hospital platform recognized instruments across five laparoscopic procedures and offered heatmaps, rapid review, and reporting that surgeons rated useful for quality improvement and education [50]. At the same time, deployment studies cautioned that single-site models may degrade when transferred across hospitals, devices, or surgical contexts, underscoring the need to plan for domain shift, diversify training data, and perform external validation [51].

Video platforms can localize critical events, such as the timing of cystic duct division, and extract concise clips to support objective reporting during laparoscopic cholecystectomy [52]. Pixel-level segmentation of the hepatocystic region combined with image-based classification of the Critical View of Safety (CVS) criteria shows that assessment can move beyond retrieval to evaluate whether the view is truly “critical” [53]. Other groups have trained CNNs to predict each CVS criterion directly from still images, demonstrating feasibility and highlighting inter-rater variability to address in prospective studies [54]. Near real-time intraoperative scoring systems can provide live feedback correlated with expert judgment, positioning AI as a natural adjunct to the pre-clipping safety check [55].
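To illustrate how per-criterion predictions might be combined into a pre-clipping check, the minimal Python sketch below thresholds three criterion probabilities and reports whether all of them are met. The criterion labels follow the standard three-part definition of the CVS, but the label strings, the 0.5 threshold, and the function name `cvs_summary` are illustrative assumptions and are not taken from the systems cited above [53,54,55].

```python
# Labels for the three CVS criteria (names chosen here for illustration only).
CVS_CRITERIA = (
    "two_structures",                 # only two structures enter the gallbladder
    "hepatocystic_triangle_cleared",  # triangle cleared of fat and fibrous tissue
    "cystic_plate_exposed",           # lower gallbladder separated from the liver bed
)


def cvs_summary(probabilities, threshold=0.5):
    """Threshold per-criterion probabilities and aggregate them.

    probabilities: dict mapping each label in CVS_CRITERIA to a value in [0, 1],
    e.g. the per-criterion outputs of an image classifier (assumed input format).
    """
    met = {name: probabilities[name] >= threshold for name in CVS_CRITERIA}
    return {
        "criteria_met": met,
        "score": sum(met.values()),          # number of criteria satisfied (0 to 3)
        "cvs_achieved": all(met.values()),   # achieved only if all three are met
    }


# Hypothetical model outputs for a single frame.
example = cvs_summary({
    "two_structures": 0.9,
    "hepatocystic_triangle_cleared": 0.8,
    "cystic_plate_exposed": 0.3,
})
```

In this example two of the three criteria pass the threshold, so the view would not be reported as achieved. A deployed system would additionally need calibrated thresholds and some handling of inter-rater variability, which this sketch does not attempt.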

Anatomy-centric models now operate near real time and, for certain structures, can match or exceed the performance of most human raters; importantly, these studies candidly enumerate blind spots where anatomy is intermittently obscured [56]. By highlighting loose connective tissue (the dissectable layer) across gastrointestinal procedures, AI overlays can render “safe planes” more explicit [57]. In parallel, multi-center datasets and pipelines now detect and segment surgical gauze in liver surgery, mitigating a persistent source of error for both humans and algorithms [58].

Surgical workflow analysis has expanded across procedures. Step or phase recognition systems trained on full-length videos have been reported for transanal total mesorectal excision, laparoscopic hepatectomy, and totally extraperitoneal hernia repair, demonstrating robust parsing of complex operations in diverse fields [59,60,61,62]. In cholecystectomy, both classic CNNs and more recent long-video transformers capture extended temporal dependencies, improving recognition of transition-heavy segments where short windows struggle [62,63,64]. In gastric cancer surgery, phase-based metrics derived from recognition models correlate with expert skill scores; independent instrument-trajectory analyses further suggest that experts achieve shorter travel distances and more regular motion despite similar velocities [65,66].
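The trajectory descriptors mentioned above (travel distance, velocity, and regularity of motion) can be made concrete with a short Python sketch. It is a minimal illustration under stated assumptions: instrument-tip positions are given as 3D points sampled at a fixed interval, and "regularity" is approximated by the coefficient of variation of speed. The function names and the regularity measure are choices made here for illustration and are not the metrics defined in [65,66].

```python
import math


def path_length(points):
    """Total distance travelled along a sequence of (x, y, z) tip positions."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def speeds(points, dt):
    """Per-step speed, assuming a fixed sampling interval dt in seconds."""
    return [math.dist(p, q) / dt for p, q in zip(points, points[1:])]


def speed_variability(points, dt):
    """Coefficient of variation of speed; lower values suggest more regular motion."""
    v = speeds(points, dt)
    if not v:
        return 0.0
    mean = sum(v) / len(v)
    if mean == 0:
        return 0.0
    variance = sum((s - mean) ** 2 for s in v) / len(v)
    return math.sqrt(variance) / mean


# Hypothetical tip trajectories sampled at 1 Hz (units: mm).
direct = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
wandering = [(0, 0, 0), (1, 1, 0), (2, 0, 0), (3, 0, 0)]
```

Both trajectories start and end at the same points, but the second covers a longer path with less uniform speed, which is the kind of difference such analyses aim to quantify.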

A framework for simultaneous polyp and lumen detection during lower-GI endoscopy stabilized camera behavior and supported semi-autonomous navigation on a soft robotic colonoscope, supported by newly released real and phantom datasets [67]. Related work showed that CNNs can extract geometric features of the gastrointestinal tract from wireless-capsule imagery [68], and experimental studies demonstrated that narrowly scoped classifiers can provide reliable, explainable feedback in constrained settings such as laparoscopic Nissen fundoplication [69]. Finally, multimodal models, including large language models (LLMs), vision–language models (VLMs), and vision–language–action models (VLAs), are emerging as frameworks for surgical intelligence: modular agents can reason about workflow, decisions, and uncertainty from video and text [70]; LLMs can infer the next operative step from rich near-history context [71]; and, in VLAs, a dual-phase policy (perception–action) has performed autonomous tracking tasks, following lesions and adhering to cutting markers with interpretable intermediate reasoning and encouraging zero-shot generalization [72]. These higher-level systems will only be as reliable as their inputs; here, synthetic-to-real strategies are promising. For example, a reproducible pipeline for photorealistic robotic suturing produced instance-level labels at scale and improved instance segmentation when mixed with limited real data, an attractive approach for niche tasks with expensive annotation [73].

4. Surgical Data Science

Surgical data science (Table 1) is emerging as a disciplined, assistive layer that shapes performance, decisions, and team coordination throughout the perioperative pathway. In bariatric surgery, case-specific technical skill quantified from operative video correlated with excess weight loss at six to twelve months, linking intraoperative performance to patient outcomes and elevating technical skill from a proxy to a prognostic variable [74]. In colorectal surgery, machine learning (ML) models improved 30-day readmission prediction over conventional approaches and identified actionable contributors, including ileus, organ-space infection, and ostomy placement, thereby supporting targeted post-discharge pathways [75]. Real-time prediction of duodenal stump leakage during gastrectomy further illustrates how streaming perioperative signals can be converted into early warnings, enabling mitigation before biochemical or radiologic confirmation [76].

AI-based video analytics can also quantify how the evolving surgical field tracks expert standards, achieving high discrimination for skill classification and supporting objective, reproducible feedback loops for training and quality improvement [77]. Multi-institutional efforts emphasize reliability and fairness so that performance feedback remains consistent across sites and user groups, an essential prerequisite for broad deployment [78]. Beyond assessment, predictive tools have been developed to estimate case duration for common general and robot-assisted procedures, improving block allocation, on-time starts, and downstream staffing and bed management [79,80]. In parallel, pipelines for automated skill assessment have matured; for example, a three-stage video algorithm separates good from poor skill with high accuracy, laying the groundwork for finer-grained metrics as datasets expand in size and diversity [81]. Disease-specific perioperative models are also proliferating: in gastrectomy, early-complication predictors flag patients for targeted surveillance [82], while deep learning applied to intraoperative video has been leveraged to estimate colorectal anastomotic leakage risk in real time, potentially informing intraoperative and early postoperative decisions [83].

At the systems level, AI has been integrated with the Internet of Medical Things to collect, process, and analyze real-time vital signs during surgical and interventional care, creating pipelines that can deliver timely, interpretable alerts to clinicians [84]. Within anesthesia, a predictive ML system has been shown to warn of impending hypotension up to 20 min in advance, shifting management from reactive to anticipatory and aiming to reduce cumulative hypotension exposure [85]. Temporal models extend into the postoperative period as well: a recurrent neural network outperformed logistic regression for predicting colorectal postoperative complications, including wound and organ-space infections, supporting its potential use as a bedside risk-assessment tool with continuously updated estimates [86].

Random-forest models predict anastomotic leakage after anterior resection for rectal cancer, highlighting predictors that align with surgical judgment [87]. Phase-recognition models, long used for workflow analysis, now serve as substrates for skill assessment in laparoscopic cholecystectomy, aligning with expert ratings and stratifying proficiency levels [88]. In hepatobiliary surgery, classifiers for liver-resection complications achieve high discrimination while surfacing practical predictors, such as operative duration, body mass index, and incision length, that naturally integrate with prehabilitation and intraoperative planning [89]. Similarly, decision-tree and gradient-boosting models have been constructed to predict postoperative ileus after laparoscopic colorectal carcinoma surgery, pointing toward parsimonious, modifiable risk bundles [90]. Finally, an AI-based perioperative safety-verification system improved the execution and standardization of surgical checklist steps in general surgery, underscoring how thoughtfully embedded decision support can enhance team reliability without disrupting clinical flow [91].

5. Surgical Robot Autonomy

Recent research on surgical robot autonomy (Table 1) aims to increase the levels of autonomy and assistance achievable in the operating room (OR) [92]. Despite steady progress, most commercial systems are still classified as Level 0 (i.e., no autonomy), underscoring the translational gap between research prototypes and deployed platforms [93,94]. Early landmark demonstrations in soft-tissue anastomosis showed that supervised autonomy can match or surpass expert performance on precision and consistency, reframing the clinical question from “whether” to “where” autonomy is safely useful [95]. Subsequent in vivo studies, incorporating vision-guided self-corrections, further demonstrated that delicate suturing can be performed reliably if perception, planning, and safety monitors are tightly integrated [96]. Endoluminal platforms have, in parallel, articulated staged “intelligence layers” from teleoperation to semi-autonomy, showing that even non-experts benefit when navigation or viewpoint management is delegated to intelligent controllers [17].

Visualization and exposure are natural entry points for autonomy because they relieve routine burdens without displacing surgical judgment. Instrument-aware visual servoing can center tools and maintain task-appropriate viewpoints while allowing smooth handover to the surgeon when needed, improving coordination without eroding trust [97]. Extending this beyond rigid scopes, a tendon-driven flexible endoscope coupled with optimal control tracked instruments while minimizing both internal and external workspace occupation, addressing safety and ergonomics central to minimally invasive procedures [98]. Learning-based camera assistance has also matured: a cognitive controller improved with experience, reducing operative time and increasing the proportion of “good” views across sessions [99]. On the da Vinci Research Kit (dVRK) [100], a voice-enabled autonomous camera achieved workload and user-preference profiles comparable to a human operator despite modest differences in completion time [101]; likewise, a purely vision-based RL agent mapped endoscopic images directly to camera actions to maintain centering without additional sensors [102]. Most recently, robotic camera holders for laparoscopy have shown rapid response while respecting the remote center of motion (RCM) with favorable usability ratings, suggesting practical readiness for hands-free camera assistance in standard tasks [103].
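The idea of keeping an instrument centered in the endoscopic view can be illustrated with a simple proportional image-based controller. The Python sketch below is a deliberately simplified stand-in and not the method of [97] or [102]: it assumes that a tool-tip pixel position is already available from a detector, and the gain, deadband, and function name `centering_command` are hypothetical choices made for illustration.

```python
def centering_command(tool_px, image_size, gain=0.5, deadband_px=20.0):
    """Return a normalized (pan, tilt) command that moves the tool toward the image center.

    tool_px:     (u, v) pixel position of the tracked tool tip (assumed to come from a detector).
    image_size:  (width, height) of the endoscopic image in pixels.
    gain:        proportional gain; each command component lies in [-gain, gain].
    deadband_px: radius around the center within which the camera is left still,
                 which avoids constant small corrections.
    """
    cx, cy = image_size[0] / 2.0, image_size[1] / 2.0
    ex, ey = tool_px[0] - cx, tool_px[1] - cy      # pixel error relative to the center
    if (ex * ex + ey * ey) ** 0.5 <= deadband_px:
        return (0.0, 0.0)
    # Normalize by the half-width and half-height so the command is resolution independent.
    return (gain * ex / cx, gain * ey / cy)


# Tool at the right edge of a 640x480 image: pan right, no tilt.
cmd = centering_command((640, 240), (640, 480))
```

A real camera controller would also have to map this image-space command to robot joint motion while respecting the remote center of motion, and provide a handover mechanism to the surgeon; both are outside the scope of this sketch.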

Beyond visualization, autonomy is being explored for intraoperative support. Blood suction, a frequent cause of pauses and errors, is an attractive target: investigators transferred an RL policy trained in a particle-based fluid simulator to physical setups, reliably evacuating clinically meaningful volumes [104]. Building on this, multimodal large language models have acted as high-level planners that sequence suction primitives over long horizons, generalizing across tools and bleeding conditions and hinting at how language can couple perception, intent, and action in the OR [105]. For tissue exposure, demonstration-guided RL produced reliable soft-tissue retraction with safeguards that respect tissue mechanics [106], while pixel-space domain adaptation enabled the first successful visual sim-to-real transfer for deformable-tissue retraction without retraining, an important milestone for data efficiency and robustness [107]. Complementary work trained RL agents to learn optimal tensioning policies and action sequences for soft tissue cutting, addressing a well-known source of variability during dissection [108].

Suturing remains the principal proving ground because it couples perception, dexterity, planning, and error recovery into a single loop. Augmented-dexterity pipelines have achieved fully autonomous throws with 6-DoF needle pose estimation, thread coordination, hand-offs, and recovery primitives on the dVRK [109]. Iterative upgrades (extended Kalman filtering for needle tracking, 3D suture-line alignment, and improved thread management) have yielded materially more throws in less time and higher wound-closure quality, especially when limited human interventions are permitted as guardrails [110]. Other efforts integrate finite-element modeling of tissue forces with deep networks for wound-edge detection and then execute sutures end-to-end on industrial arms fitted with surgical wrists, illustrating how physics-aware reasoning can raise reliability and safety margins [111]. At the skill-composition level, RL has automated the surgeon–assistant needle hand-off with sparse rewards while preserving surgeon-like trajectories and robustness to variable starting conditions, a micro-policy that plugs into larger suturing pipelines [112]. More recently, a diffusion-model-based framework combined with dynamic time warping-based locally weighted regression achieved high success rates in both simulated and real settings, advancing long-horizon autonomy under variability [113]. To standardize evaluation and prevent metric drift, a benchmark with substantial demonstrations and goal-conditioned assessment has been introduced, showing sizable gains in insertion-point accuracy over task-only baselines across newer multimodal AI models [114].

IL foundations have progressed in parallel to support multi-task behaviors. Transformer-based architectures cast low-level surgical manipulation as sequence modeling, enabling the learning of dexterous skills and their composition across tasks, such as needle picking, knot tying, and tissue retraction on the dVRK [115,116]. Extending this paradigm, a hierarchical, language-conditioned framework was introduced: a high-level policy selects the subtask while a low-level controller executes precise motions, enabling fully automated clipping-and-cutting in ex vivo cholecystectomy with high success on previously unseen specimens [117].

Table 1.

For each section of the review, technical and clinical applications of surgical AI models and methods are reported.


| Technical Application | Clinical Application | Data Modality | AI Model/Method | Outcome | References |
|---|---|---|---|---|---|
| Surgical Simulation | | | | | |
| Skill assessment | GI | VR, kinematics | Supervised scoring | Intraoperative expert tutoring | [21] |
| Planning | Colorectal | VR, medical imaging, digital twins | Anatomy segmentation | Improved efficiency, planning fidelity | [22,35,36,37,38,39,40] |
| Coaching, training | Laparoscopy, cholecystectomy | VR, telemetry | Analytics | Scalable coaching workflows | [23,24,25,26,27,28,29,30,31] |
| Autonomous task execution | GI | Image RGBD, kinematics | RL, IL | Benchmark for AI agents training | [41,42,43] |
| Surgical Computer Vision | | | | | |
| Scene understanding | Laparoscopy | Video RGB | CNN detection | Improved spatial understanding and contextualization | [44,45] |
| Tool segmentation | Laparoscopy, cholecystectomy | Video RGB | CNN segmentation | Improved spatial understanding and contextualization | [46,73] |
| Tool and object detection | Laparoscopy | Video RGB | CNN detection | Improved spatial understanding and contextualization | [47,48,49,50,58] |
| Unsafe event detection | Laparoscopy, cholecystectomy | Video RGB | CNN detection | Timing of critical steps | [51,52] |
| Safety verification | Critical View of Safety scoring | Video RGB | CNN data aggregation | Near real-time safety check | [53,54,55] |
| Anatomy segmentation | GI dissection | Video RGB | CNN segmentation | Enhanced intraoperative guidance | [56,57] |
| Anatomy segmentation | Colonoscopy | Video RGB | CNN detection | Enhanced intraoperative guidance | [67,68,69] |
| Workflow/Phase recognition | GI | Video RGB | Transformer temporal detection | Enhanced intraoperative guidance | [59,60,61,62,63,64,65,66] |
| Multimodal frameworks | GI | Video RGB, text, kinematics | LLM, VLM, VLA | Robust perception and planning | [70,71,72] |
| Surgical Data Science | | | | | |
| Outcome prediction | GI, bariatric, colorectal | Video RGB | RNN regression | Procedure outcome prediction | [74,86,87] |
| Outcome prediction | Colorectal | Perioperative data | Classification | Mid/Long term readmission prediction | [75] |
| Unsafe event prediction | GI, Gastrectomy | Perioperative data | Classification | Procedural events prediction | [76,82,83,84,85,89,90] |
| Skill classification and assessment | GI | Video, kinematics | CNN classification | Automated skill assessment | [77,78,81] |
| Case duration prediction | GI | Perioperative data | Regression | Automated case duration prediction | [79,80] |
| Safety verification system | GI | Video RGB, sensors | Regression | Perioperative unsafe events detection | [91] |
| Surgical Robot Autonomy | | | | | |
| Camera control | Laparoscopy, Endoscopy | Video, kinematics | RL, visual servoing, voice control | Autonomous tool-centering camera | [97,98,99,100,101,102,103] |
| Blood suction | Laparoscopy | Image RGBD, forces | RL, sim to real | Autonomous blood suction | [104,105] |
| Tissue retraction | GI | Video RGBD, forces | RL, sim to real | Autonomous organ exposure and tissue tensioning | [106,107,108,116] |
| Suturing | GI | Video RGB, kinematics | CNN segmentation, planning and control | Autonomous anastomosis | [95,96,109,110,111,112] |
| Surgical tasks | GI | Video RGB, kinematics | Diffusion models | Autonomous surgical subtasks | [113,114] |
| Surgical tasks | GI, ex-vivo cholecystectomy | Text, video RGB, kinematics | VLA, Transformers, Diffusion models | Hierarchical, Language-conditioned policy | [115,116] |

6. Discussion

Artificial intelligence is reshaping surgical training, planning, execution, and evaluation. Over the past decade, and particularly in the last five years (Figure 2), the field has transitioned from proof-of-concept studies to practical tools on the verge of routine use [1].

To translate these advances from research prototypes to routine practice in GI surgery, three cross-cutting challenges are particularly relevant: (i) multidisciplinary implementation and technical integration of heterogeneous data sources, (ii) robust validation and benchmarking that link technical metrics to patient- and system-level outcomes, and (iii) ethical and legal frameworks that keep pace with increasing levels of intelligence and autonomy.

6.1. Multidisciplinary Implementation and Integration Across Data Sources

Successful deployment of AI in GI surgery depends on close collaboration between surgeons, endoscopists, computer scientists, data engineers, ethicists, and hospital IT teams. Each of the four domains requires integration of multiple data streams and, increasingly, multi-omics and longitudinal follow-up data.

In surgical simulation, AI-enabled VR trainers, patient-specific 3D models, and early digital-twin frameworks will only reach their potential if they are embedded in formal curricula and institutional workflows. This implies standardized interfaces to hospital PACS and planning systems, automated ingestion of CT/MRI and endoscopic video, and alignment with competency-based training programs in surgery. Multidisciplinary teams must also define how simulation outputs feed back into decisions about credentialing and operative planning.

In surgical computer vision, clinically useful systems must be integrated with the existing OR stack. Real-time instrument recognition, anatomy overlays, and Critical View of Safety assessment need low-latency access to video and a robust path to display feedback without disrupting existing workflows. Co-development with OR nurses, biomedical engineers, and human-factor specialists is essential to ensure that feedback is presented in an intuitive and non-taxing way.

In surgical data science, predictive models require federated access to heterogeneous data sources. Implementing such models demands robust data pipelines, data-quality checks, and governance structures within hospitals and networks. Clinicians, data scientists, and IT departments must jointly determine how risk estimates are surfaced and how they are combined with existing risk scores to support decisions without overwhelming clinicians.

In surgical robot autonomy, shared control and autonomous systems must be interoperable with commercial robotic platforms and meet stringent safety and usability constraints. Implementation requires collaboration among OR personnel and regulatory experts to design interfaces that make autonomy transparent and overridable, and to ensure that autonomous behaviors can be tailored to different GI procedures.

6.2. Need for Robust Validation and Benchmarking

While many of the systems described in this review report impressive technical performance, current evidence is still dominated by single-center, retrospective, or simulation-based studies. To ensure reliable benefits in GI surgery, each domain requires systematic validation and standardized benchmarking [118].

For surgical simulation, most evidence relates to construct validity and short-term training outcomes. The next step is to design prospective, multi-center trials that test whether AI-enhanced simulation improves not only simulator metrics but also real-world performance. Benchmarking efforts should define common tasks, outcome measures, and follow-up intervals so that different simulators and AI coaching systems can be compared on a common scale.

In surgical computer vision, there is a growing ecosystem of datasets and benchmarks for tools, phases, anatomy, and safety cues. Robust translation into routine surgeries requires external validation across institutions, devices, and geographical regions, with explicit reporting of performance under domain shift. Common benchmark tasks should be associated with transparent releases of data splits, evaluation scripts, and clinically meaningful metrics to support fair comparison and cumulative progress.
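To make "explicit reporting of performance under domain shift" concrete, the sketch below stratifies a segmentation metric by contributing center and reports the drop relative to the development site. It assumes binary masks flattened to 0/1 lists and uses the Dice coefficient as an example metric; the function names, center labels, and toy masks are hypothetical and do not come from any of the reviewed studies.

```python
from collections import defaultdict
from statistics import mean


def dice(pred, truth):
    """Dice coefficient for two equal-length binary masks given as flat 0/1 lists."""
    intersection = sum(p and t for p, t in zip(pred, truth))
    total = sum(pred) + sum(truth)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total


def dice_by_center(cases):
    """Average Dice per center; `cases` holds (center, pred_mask, truth_mask) tuples."""
    scores = defaultdict(list)
    for center, pred, truth in cases:
        scores[center].append(dice(pred, truth))
    return {center: mean(values) for center, values in scores.items()}


def domain_shift_report(cases, internal_center):
    """Per-center Dice plus the drop relative to the development (internal) center."""
    per_center = dice_by_center(cases)
    reference = per_center[internal_center]
    return {
        center: {"dice": score, "drop_vs_internal": reference - score}
        for center, score in per_center.items()
    }


# Toy data (hypothetical): center "A" is the development site, "B" an external site.
cases = [
    ("A", [1, 1, 0, 0], [1, 1, 0, 0]),
    ("A", [1, 0, 0, 0], [1, 1, 0, 0]),
    ("B", [0, 1, 1, 0], [1, 1, 0, 0]),
]
report = domain_shift_report(cases, internal_center="A")
for center, row in report.items():
    print(center, row)
```

Reporting the per-center table, rather than a single pooled score, keeps a weak external site from being masked by a large internal test set.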

For surgical data science, predictive models often achieve higher AUROC or better calibration than traditional scores, but many remain at the proof-of-concept stage. Future studies should prioritize prospective validation, ideally with impact analyses that quantify how using these models changes management and outcomes. Benchmarking here should include not only discrimination and calibration but also decision-curve analysis, subgroup performance (e.g., by age, comorbidity, center), and robustness to missing data.
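As an illustration of the decision-curve analysis mentioned above, the following Python sketch computes net benefit at a given risk threshold and compares it with the treat-all and treat-none strategies. It uses the standard net-benefit definition, TP/n − (FP/n) × pt/(1 − pt); the function names, toy labels, and predicted probabilities are hypothetical and serve only as an example.

```python
def _threshold_odds(threshold):
    """Odds pt / (1 - pt) that weight false positives against true positives."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    return threshold / (1.0 - threshold)


def net_benefit(y_true, y_prob, threshold):
    """Net benefit of intervening on patients whose predicted risk is >= threshold."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    return tp / n - (fp / n) * _threshold_odds(threshold)


def net_benefit_treat_all(y_true, threshold):
    """Net benefit of intervening on every patient, regardless of predicted risk."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * _threshold_odds(threshold)


def decision_curve(y_true, y_prob, thresholds):
    """One row per threshold: model vs. treat-all vs. treat-none (always 0)."""
    return [
        {
            "threshold": t,
            "model": net_benefit(y_true, y_prob, t),
            "treat_all": net_benefit_treat_all(y_true, t),
            "treat_none": 0.0,
        }
        for t in thresholds
    ]


# Toy cohort (hypothetical): 1 = complication occurred, 0 = no complication.
y_true = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
y_prob = [0.9, 0.2, 0.7, 0.4, 0.1, 0.3, 0.6, 0.05, 0.15, 0.25]

curve = decision_curve(y_true, y_prob, thresholds=[0.2, 0.5])
for row in curve:
    print(row)
```

A model is only useful at thresholds where its net benefit exceeds both reference strategies. The same functions can be applied to subsets of the cohort (e.g., by age group or center) to obtain subgroup-level curves.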

In surgical robot autonomy, many demonstrations are performed in benchtop or preclinical settings, using research platforms and synthetic tasks. To progress toward clinical deployment in GI surgery, the field needs standardized evaluation protocols, including task definitions, environmental conditions, and success criteria that link technical performance to surrogate clinical endpoints. Multi-center preclinical trials and, ultimately, carefully controlled first-in-human studies will be required to establish safety and efficacy, with pre-defined stopping rules and oversight by independent committees.

Beyond validation, the choice of model itself involves practical trade-offs that affect generalizability and deployability. In surgical computer vision, CNN-based detectors and segmenters excel when targets are primarily spatial (e.g., tools, anatomy, safety landmarks) and low latency is crucial; they often achieve robust real-time performance with moderate dataset sizes. Transformer-based long-video models, by contrast, are particularly advantageous for phase and workflow recognition and for higher-level scene understanding, because attention mechanisms can leverage long-range temporal context spanning minutes. However, they are typically more data- and compute-intensive, and real-time deployment may necessitate pretraining, efficient attention, or model compression. In surgical data science, classical machine learning (e.g., regularized regression, random forests, gradient boosting) is often competitive for structured perioperative variables while offering simpler calibration and interpretability. In contrast, deep learning (e.g., RNNs, temporal transformers, and multimodal models) becomes more valuable when raw time series, waveforms, kinematics, or video are available and manual feature engineering becomes a bottleneck. A pragmatic direction for GI surgery is therefore to develop hybrid pipelines that combine deep models, which extract representations from unstructured signals, with simpler, well-calibrated predictors for risk estimation, balancing performance, transparency, and data requirements.
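The hybrid pipeline outlined above can be written compactly as follows. This is a sketch in our own notation, not a formulation taken from the reviewed studies: $x$ denotes an unstructured input (e.g., video or kinematics), $f_\theta$ a deep encoder, $s$ the vector of structured perioperative variables, and $\sigma$ the logistic function. All symbols are assumptions introduced for illustration.

$$
z = f_\theta(x), \qquad \hat{p} = \sigma\!\left(w^\top \begin{bmatrix} z \\ s \end{bmatrix} + b\right)
$$

Under this reading, only the low-dimensional head $(w, b)$ needs to be fitted and calibrated on the outcome of interest, while $f_\theta$ can be pretrained on larger surgical datasets. This is one way to trade off performance, transparency, and data requirements.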

6.3. Ethical and Legal Considerations in GI Surgical AI

As AI systems in GI surgery progress from decision support to higher levels of autonomy, ethical and legal questions become central to their design and deployment. These considerations cut across simulation, computer vision, data science, and robot autonomy and must be addressed explicitly if AI is to be trusted as a clinical partner rather than perceived as a black box.

In surgical simulation, large datasets of surgical gestures, errors, and video recordings from surgeons raise questions about data ownership, consent, and the use of performance metrics. Institutions must define who can access simulation-derived performance data, for what purposes (education, credentialing, research), and how long such data may be retained. Surgeons should be informed when their data are used to train new AI models or to benchmark others. If simulation-based metrics are eventually used in formal certification or privileging, transparent criteria and appeals processes will be needed to avoid unfair or opaque decisions.

In surgical computer vision, the routine capture and analysis of high-resolution operative and endoscopic video for tool tracking, anatomy segmentation, or safety checks raises issues of privacy, annotation of identifiable content, and secondary use of video. De-identification of GI surgical video is non-trivial, as visual clues can reveal institutions, devices, and even patient characteristics. Governance frameworks must specify consent procedures for using operative video in research and commercial development, restrictions on cross-border data transfer, and safeguards against unintended uses. Moreover, biases in training datasets and underrepresentation of certain patient groups, disease stages, or centers may lead to systematically worse performance in those populations, which is ethically unacceptable for safety-critical tasks.

In surgical data science, models that assign risk scores for leak, readmission, or complications may influence decisions about postoperative monitoring, ICU admission, or even patient selection. This raises concerns about algorithmic fairness, explainability, and accountability. Surgeons and patients should understand, at least at a high level, what variables drive risk estimates and how uncertainties are handled. If AI-derived scores disproportionately classify specific groups (e.g., older or comorbid patients) as “high risk” based on biased training data, there is a risk of reinforcing inequities in access to complex GI procedures. Legal questions also arise: when recommendations from AI systems are followed and adverse events occur, responsibility will be shared among clinicians, institutions, and manufacturers [119]. Clear guidance from regulators and professional societies will be required on how to document, audit, and govern the use of AI-powered decision support in perioperative care.

In surgical robot autonomy, ethical and legal stakes are particularly high. Shared-autonomy systems for camera control or suction are already influencing intraoperative actions, and more advanced systems for suturing or tissue manipulation may, in the future, execute parts of GI procedures with limited human intervention. Core questions include: who is accountable for an adverse outcome when autonomous actions contribute to a complication? What level of human oversight (e.g., always-in-the-loop vs. on-the-loop) is acceptable for different tasks? How should informed consent documents describe the role of autonomous functions in surgical procedures? Regulatory frameworks will likely differentiate between AI used as decision-support software, AI embedded in robotic platforms, and systems granting task-level autonomy, each with specific requirements for preclinical evidence, post-market surveillance, and incident reporting. From an ethical perspective, maintaining surgeon situational awareness and preserving the ability to override autonomous actions at any time will be important to maintain trust and uphold the surgeon’s primary responsibility toward the patient.

7. Conclusions

This review examines the principal applications and recent advances of artificial intelligence (AI) in gastrointestinal (GI) surgery across four domains: surgical simulation, surgical computer vision, surgical data science, and surgical robot autonomy.

Each section explores the principal AI methodologies, such as digital twins, computer vision algorithms, workflow recognition, and autonomy levels, and anchors them to specific GI surgery use cases, including laparoscopic cholecystectomy, colorectal surgery, endoscopic interventions, and GI cancer surgery. Diagnostic AI applications, such as polyp detection and classification, are introduced only briefly because they are already covered by an extensive literature. Likewise, while AI models for predicting early postoperative outcomes and complications are discussed, AI applications for long-term postoperative follow-up, recurrence detection, or monitoring of recurrent symptoms are beyond the scope of this narrative review.

In GI surgery, AI holds promise for supporting organ-preserving pathways. It can refine patient selection for local excision and watch-and-wait strategies, optimize risk stratification, and standardize functional and oncologic outcome assessment. Additionally, computer vision and autonomous robotic tools have the potential to enhance the quality and reproducibility of surgical tasks, whether laparoscopic, robotic, or transanal, by improving anatomical identification, delineation of dissection planes, and intraoperative feedback.

Realizing this potential will require large, high-quality multicenter datasets with shared benchmarks and metrics, robust validation on clinically meaningful endpoints, and careful integration into surgical workflows, training programs, and regulatory frameworks. If developed and implemented responsibly, AI-enabled technologies can become a key enabler of safer, more standardized, and more personalized minimally invasive and robotic surgery, with particular relevance to rectal cancer care and organ-preserving strategies.

In the coming years, we anticipate the emergence of AI-driven surgical systems that continuously learn and improve, with the potential to make GI surgery safer, improve patient outcomes, and use healthcare resources more efficiently. Significant obstacles to widespread clinical adoption nevertheless remain, including high costs, technical complexity, limited generalization to unseen data, and the need for seamless integration into existing clinical workflows.

Overcoming these challenges and fully realizing the potential of surgical AI will require continued collaboration between engineers, clinicians, and researchers.

Author Contributions

Conceptualization, M.P., F.M., A.A. and G.D. (Giulio Dagnino); data curation, M.P., F.M., G.D. (Giovanni Distefano) and C.A.A.; methodology, M.P., F.M., F.B. and P.L.; validation, P.L., F.B., G.D. (Giovanni Distefano), G.D. (Giulio Dagnino), C.A.A. and A.A.; resources, M.P., C.A.A. and G.D. (Giovanni Distefano); writing—original draft preparation, M.P., F.M. and G.D. (Giovanni Distefano); writing—review and editing, M.P., F.M., F.B., C.A.A., G.D. (Giovanni Distefano), G.D. (Giulio Dagnino) and A.A.; visualization, M.P., P.L. and F.B.; supervision, C.A.A., G.D. (Giovanni Distefano), G.D. (Giulio Dagnino) and A.A.; funding acquisition, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the European Research Council (ERC) under the Horizon Europe programme (Grant EndoTheranostics, Grant Agreement No. 101118626, https://doi.org/10.3030/101118626), by the CLASSICA project (Grant Agreement No. 101057321, https://doi.org/10.3030/101057321) and the PALPABLE project (Grant Agreement No. 101092518, https://doi.org/10.3030/101092518). The work has also been partially supported by the Italian Ministry of University and Research (MUR) under the PRIN 2022 programme “Towards Intelligent ROBOTIC ENDOSCOPIC DISSECTION (TI-RED)”, funded by the European Union. Views and opinions expressed are, however, those of the author(s) only and do not necessarily reflect those of the European Union, the European Research Council Executive Agency, or the Health and Digital Executive Agency. Neither the European Union nor the granting authority can be held responsible for them.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are presented in this review article.

Acknowledgments

During the preparation of this manuscript, the authors used GPT 5.1 to improve the clarity, readability, and flow of the text. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| GI | Gastrointestinal |
| MR | Mixed Reality |
| VR | Virtual Reality |
| CV | Computer Vision |
| SL | Supervised Learning |
| RL | Reinforcement Learning |
| IL | Imitation Learning |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| LLM | Large Language Model |
| VLM | Vision Language Model |
| VLA | Vision Language Action Model |

References

- Varghese, C.; Harrison, E.M.; O’Grady, G.; Topol, E.J. Artificial intelligence in surgery. Nat. Med. 2024, 30, 1257–1268.

- Pescio, M.; Kundrat, D.; Dagnino, G. Endovascular robotics: Technical advances and future directions. Minim. Invasive Ther. Allied Technol. 2025, 34, 239–252.

- Huang, Y.; Zhang, F.; Zhang, F.; Wu, X.; Xinye, Y. Machine learning-driven inverse design of puncture needles with tailored mechanics. Minim. Invasive Ther. Allied Technol. 2025, 35, 18–27.

- Dagnino, G.; Kundrat, D. Robot-assistive minimally invasive surgery: Trends and future directions. Int. J. Intell. Robot. Appl. 2024, 8, 812–826.

- Ammirati, C.A.; Arezzo, A.; Gaetani, C.; Strazzarino, G.A.; Faletti, R.; Bergamasco, L.; Barisone, F.; Fonio, P.; Morino, M. Can we apply the concept of sentinel lymph nodes in rectal cancer surgery? Minim. Invasive Ther. Allied Technol. 2024, 33, 334–340.

- Baldari, L.; Boni, L.; Cassinotti, E. Lymph node mapping with ICG near-infrared fluorescence imaging: Technique and results. Minim. Invasive Ther. Allied Technol. 2023, 32, 213–221.

- Benzoni, I.; Fricano, M.; Borali, J.; Bonafede, M.; Celotti, A.; Tarasconi, A.; Ranieri, V.; Totaro, L.; Quarti, L.M.; Dendena, A.; et al. Fluorescence-guided mesorectal nodes harvesting associated with local excision for early rectal cancer: Technical notes. Minim. Invasive Ther. Allied Technol. 2025, 34, 290–296.

- Takahashi, M.; Nakazawa, K.; Usami, Y.; Mori, K.; Suzuki, J.; Asami, S.; Okada, Y.; Tajima, H.; Kozawa, E.; Baba, Y. Feasibility and clinical outcomes of CT-guided percutaneous gastrostomy with non-guidewire device. Minim. Invasive Ther. Allied Technol. 2025, 35, 28–33.

- Igami, T.; Hayashi, Y.; Yokyama, Y.; Mori, K.; Ebata, T. Development of real-time navigation system for laparoscopic hepatectomy using magnetic micro sensor. Minim. Invasive Ther. Allied Technol. 2024, 33, 129–139.

- Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81.

- Hao, J.; Cheng, D.; Jiang, J.; Zuo, B.; Zhang, Y. Real-time indocyanine green fluorescence imaging and navigation for cone unit laparoscopic hepatic resection of intrahepatic duct stone: A case series study. Minim. Invasive Ther. Allied Technol. 2024, 33, 351–357.

- Koseoglu, B.; Ozercan, A.Y.; Colak, A.; Basboga, S.; Tuncel, A. Can near-infrared imaging distinguish a gelatin-based matrix granuloma from a malignancy in a kidney? Minim. Invasive Ther. Allied Technol. 2024, 33, 58–62.

- Oderda, M.; Marquis, A.; Calleris, G.; D’Agate, D.; Delsedime, L.; Vissio, E.; Dematteis, A.; Gatti, M.; Faletti, R.; Marra, G.; et al. Transperineal 3D fusion imaging-guided targeted microwaves ablation for low to intermediate-risk prostate cancer: Results of a phase I-II study. Minim. Invasive Ther. Allied Technol. 2025, 34, 194–202.

- Ward, T.M.; Mascagni, P.; Ban, Y.; Rosman, G.; Padoy, N.; Meireles, O.; Hashimoto, D.A. Computer vision in surgery. Surgery 2021, 169, 1253–1256.

- Maier-Hein, L.; Eisenmann, M.; Sarikaya, D.; März, K.; Collins, T.; Malpani, A.; Fallert, J.; Feussner, H.; Giannarou, S.; Mascagni, P.; et al. Surgical data science—From concepts toward clinical translation. Med. Image Anal. 2022, 76, 102306.

- Park, J.J.; Tiefenbach, J.; Demetriades, A.K. The role of artificial intelligence in surgical simulation. Front. Med. Technol. 2022, 4, 1076755.

- Martin, J.W.; Scaglioni, B.; Norton, J.C.; Subramanian, V.; Arezzo, A.; Obstein, K.L.; Valdastri, P. Enabling the future of colonoscopy with intelligent and autonomous magnetic manipulation. Nat. Mach. Intell. 2020, 2, 595–606.

- Attanasio, A.; Scaglioni, B.; De Momi, E.; Fiorini, P.; Valdastri, P. Autonomy in Surgical Robotics. Annu. Rev. Control Robot. Auton. Syst. 2021, 4, 651–679.

- Handa, P.; Goel, N.; Indu, S.; Gunjan, D. AI-KODA score application for cleanliness assessment in video capsule endoscopy frames. Minim. Invasive Ther. Allied Technol. 2024, 33, 311–320.

- Wang, J.; Tozzi, F.; Ganjouei, A.A.; Romero-Hernandez, F.; Feng, J.; Calthorpe, L.; Castro, M.; Davis, G.; Withers, J.; Zhou, C.; et al. Machine learning improves prediction of postoperative outcomes after gastrointestinal surgery: A systematic review and meta-analysis. J. Gastrointest. Surg. 2024, 28, 956–965.

- Bogar, P.Z.; Virag, M.; Bene, M.; Hardi, P.; Matuz, A.; Schlegl, A.T.; Toth, L.; Molnar, F.; Nagy, B.; Rendeki, S.; et al. Validation of a novel, low-fidelity virtual reality simulator and an artificial intelligence assessment approach for peg transfer laparoscopic training. Sci. Rep. 2024, 14, 16702.

- Cremese, R.; Godard, C.; Bénichou, A.; Blanc, T.; Quemada, I.; Vestergaard, C.L.; Hajj, B.; Barbier-Chebbah, A.; Laurent, F.; Masson, J.-B. Virtual reality-augmented differentiable simulations for digital twin applications in surgical planning. Sci. Rep. 2025, 15, 24377.

- Riddle, E.W.; Kewalramani, D.; Narayan, M.; Jones, D.B. Surgical Simulation: Virtual Reality to Artificial Intelligence. Curr. Probl. Surg. 2024, 61, 101625.

- Kyeremeh, J.; Asciak, L.; Blackmur, J.P.; Luo, X.; Picard, F.; Shu, W.; Stewart, G.D. Digital twins assisted surgery: A conceptual framework for transforming surgical training and navigation. The Surgeon, 2025; in press.

- Wang, X.; Yang, J.; Zhou, B.; Tang, L.; Liang, Y. Integrating mixed reality, augmented reality, and artificial intelligence in complex liver surgeries: Enhancing precision, safety, and outcomes. iLIVER 2025, 4, 100167.

- Fazlollahi, A.M.; Bakhaidar, M.; Alsayegh, A.; Yilmaz, R.; Winkler-Schwartz, A.; Mirchi, N.; Langleben, I.; Ledwos, N.; Sabbagh, A.J.; Bajunaid, K.; et al. Effect of Artificial Intelligence Tutoring vs Expert Instruction on Learning Simulated Surgical Skills Among Medical Students: A Randomized Clinical Trial. JAMA Netw. Open 2022, 5, e2149008.

- Giglio, B.; Albeloushi, A.; Alhaj, A.K.; Alhantoobi, M.; Saeedi, R.; Davidovic, V.; Uthamacumaran, A.; Yilmaz, R.; Lapointe, J.; Balasubramaniam, N.; et al. Artificial Intelligence–Augmented Human Instruction and Surgical Simulation Performance: A Randomized Clinical Trial. JAMA Surg. 2025, 160, 993–1003.

- Wu, S.; Tang, M.M.; Liu, J.B.; Qin, D.; Wang, Y.B.; Zhai, S.; Bi, E.; Li, Y.; Wang, C.; Xiong, Y.; et al. Impact of an AI-based laparoscopic cholecystectomy coaching program on the surgical performance: A randomized controlled trial. Int. J. Surg. 2024, 110, 7816–7823.

- Suh, I.; Li, H.; Li, Y.; Nelson, C.; Oleynikov, D.; Siu, K.-C. Using Virtual Reality Simulators to Enhance Laparoscopic Cholecystectomy Skills Learning. Appl. Sci. 2025, 15, 8424.

- Vitale, S.G.; Carugno, J.; Saponara, S.; Mereu, L.; Haimovich, S.; Pacheco, L.A.; Giannini, A.; Chellani, M.; Urman, B.; De Angelis, M.C.; et al. What is the impact of simulation on the learning of hysteroscopic skills by residents and medical students? A systematic review. Minim. Invasive Ther. Allied Technol. 2024, 33, 373–386.

- Yilmaz, R.; Bakhaidar, M.; Alsayegh, A.; Hamdan, N.A.; Fazlollahi, A.M.; Tee, T.; Langleben, I.; Winkler-Schwartz, A.; Laroche, D.; Santaguida, C.; et al. Real-Time multifaceted artificial intelligence vs In-Person instruction in teaching surgical technical skills: A randomized controlled trial. Sci. Rep. 2024, 14, 15130.

- Mirchi, N.; Bissonnette, V.; Yilmaz, R.; Ledwos, N.; Winkler-Schwartz, A.; Del Maestro, R.F. The Virtual Operative Assistant: An explainable artificial intelligence tool for simulation-based training in surgery and medicine. PLoS ONE 2020, 15, e0229596.

- Power, D.; Burke, C.; Madden, M.G.; Ullah, I. Automated assessment of simulated laparoscopic surgical skill performance using deep learning. Sci. Rep. 2025, 15, 13591.

- Yilmaz, R.; Winkler-Schwartz, A.; Mirchi, N.; Reich, A.; Christie, S.; Tran, D.H.; Ledwos, N.; Fazlollahi, A.M.; Santaguida, C.; Sabbagh, A.J.; et al. Continuous monitoring of surgical bimanual expertise using deep neural networks in virtual reality simulation. npj Digit. Med. 2022, 5, 54.

- Hamabe, A.; Ishii, M.; Kamoda, R.; Sasuga, S.; Okuya, K.; Okita, K.; Akizuki, E.; Miura, R.; Korai, T.; Takemasa, I. Artificial intelligence-based technology to make a three-dimensional pelvic model for preoperative simulation of rectal cancer surgery using MRI. Ann. Gastroenterol. Surg. 2022, 6, 788–794.

- Kazami, Y.; Kaneko, J.; Keshwani, D.; Takahashi, R.; Kawaguchi, Y.; Ichida, A.; Ishizawa, T.; Akamatsu, N.; Arita, J.; Hasegawa, K. Artificial intelligence enhances the accuracy of portal and hepatic vein extraction in computed tomography for virtual hepatectomy. J. Hepato-Biliary-Pancreat. Sci. 2022, 29, 359–368.

- Ali, O.; Bône, A.; Accardo, C.; Acidi, B.; Facque, A.; Valleur, P.; Salloum, C.; Rohe, M.-M.; Vignon-Clementel, I.; Vibert, E.; et al. Automatic Generation of Liver Virtual Models with Artificial Intelligence: Application to Liver Resection Complexity Prediction. Ann. Surg. 2025. online ahead of print.

- Li, L.; Cheng, S.; Li, J.; Yang, J.; Wang, H.; Dong, B.; Yin, X.; Shi, H.; Gao, S.; Gu, F.; et al. Randomized comparison of AI enhanced 3D printing and traditional simulations in hepatobiliary surgery. npj Digit. Med. 2025, 8, 293.

- Sagberg, K.; Lie, T.; Peterson, H.F.; Hillestad, V.; Eskild, A.; Bø, L.E. A new method for placental volume measurements using tracked 2D ultrasound and automatic image segmentation. Minim. Invasive Ther. Allied Technol. 2025, 34, 230–238.

- Halder, S.; Lawrence, M.C.; Testa, G.; Periwal, V. Donor-specific digital twin for living donor liver transplant recovery. Biol. Methods Protoc. 2025, 10, bpaf037.

- Scheikl, P.M.; Gyenes, B.; Younis, R.; Haas, C.; Neumann, G.; Wagner, M.; Mathis-Ullrich, F. LapGym—An Open Source Framework for Reinforcement Learning in Robot-Assisted Laparoscopic Surgery. J. Mach. Learn. Res. 2023, 24, 1–42.

- Long, Y.; Lin, A.; Kwok, D.H.C.; Zhang, L.; Yang, Z.; Shi, K.; Song, L.; Fu, J.; Lin, H.; Wei, W.; et al. Surgical embodied intelligence for generalized task autonomy in laparoscopic robot-assisted surgery. Sci. Robot. 2025, 10, eadt3093.

- Moghani, M.; Nelson, N.; Ghanem, M.; Diaz-Pinto, A.; Hari, K.; Azizian, M.; Goldberg, K.; Huver, S.; Garg, A. SuFIA-BC: Generating High Quality Demonstration Data for Visuomotor Policy Learning in Surgical Subtasks. arXiv 2025, arXiv:2504.14857.

- Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; de Mathelin, M.; Padoy, N. EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos. IEEE Trans. Med. Imaging 2017, 36, 86–97.

- Guédon, A.C.P.; Meij, S.E.P.; Osman, K.N.M.M.H.; Kloosterman, H.A.; van Stralen, K.J.; Grimbergen, M.C.M.; Eijsbouts, Q.A.J.; Dobbelsteen, J.J.v.D.; Twinanda, A.P. Deep learning for surgical phase recognition using endoscopic videos. Surg. Endosc. 2021, 35, 6150–6157.

- Alabi, O.; Toe, K.K.Z.; Zhou, Z.; Budd, C.; Raison, N.; Shi, M.; Vercauteren, T. CholecInstanceSeg: A Tool Instance Segmentation Dataset for Laparoscopic Surgery. Sci. Data 2025, 12, 825.

- Yamazaki, Y.; Kanaji, S.; Matsuda, T.; Oshikiri, T.; Nakamura, T.; Suzuki, S.; Hiasa, Y.; Otake, Y.; Sato, Y.; Kakeji, Y. Automated Surgical Instrument Detection from Laparoscopic Gastrectomy Video Images Using an Open Source Convolutional Neural Network Platform. J. Am. Coll. Surg. 2020, 230, 725–732e1.

- Benavides, D.; Cisnal, A.; Fontúrbel, C.; de la Fuente, E.; Fraile, J.C. Real-Time Tool Localization for Laparoscopic Surgery Using Convolutional Neural Network. Sensors 2024, 24, 4191.

- Kitaguchi, D.; Lee, Y.; Hayashi, K.; Nakajima, K.; Kojima, S.; Hasegawa, H.; Takeshita, N.; Mori, K.; Ito, M. Development and Validation of a Model for Laparoscopic Colorectal Surgical Instrument Recognition Using Convolutional Neural Network–Based Instance Segmentation and Videos of Laparoscopic Procedures. JAMA Netw. Open 2022, 5, e2226265.

- Hu, R.M.; Tang, M.M.; Jin, Y.; Xiang, L.M.; Li, K.; Peng, B.M.; Liu, J.B.; Qin, D.; Liang, L.B.; Li, Y.; et al. Development and application of an intelligent platform for automated recognition of surgical instruments in laparoscopic procedures: A multicenter retrospective study (experimental studies). Int. J. Surg. 2025, 111, 5048–5057.

- Kitaguchi, D.; Fujino, T.; Takeshita, N.; Hasegawa, H.; Mori, K.; Ito, M. Limited generalizability of single deep neural network for surgical instrument segmentation in different surgical environments. Sci. Rep. 2022, 12, 12575.

- Mascagni, P.; Alapatt, D.M.; Urade, T.; Vardazaryan, A.M.; Mutter, D.M.; Marescaux, J.M.; Costamagna, G.; Dallemagne, B.; Padoy, N. A Computer Vision Platform to Automatically Locate Critical Events in Surgical Videos: Documenting Safety in Laparoscopic Cholecystectomy. Ann. Surg. 2021, 274, e93–e95.

- Mascagni, P.; Vardazaryan, A.M.; Alapatt, D.M.; Urade, T.; Emre, T.M.; Fiorillo, C.; Pessaux, P.; Mutter, D.; Marescaux, J.M.; Costamagna, G.; et al. Artificial Intelligence for Surgical Safety: Automatic Assessment of the Critical View of Safety in Laparoscopic Cholecystectomy Using Deep Learning. Ann. Surg. 2022, 275, 955–961.

- Hegde, S.; Namazi, B.; Mahnken, H.; Dumas, R.M.; Sankaranarayana, G. Automated Prediction of Critical View of Safety Criteria in Laparoscopic Cholecystectomy Using Deep Learning. J. Am. Coll. Surg. 2022, 235, S71.

- Kawamura, M.; Endo, Y.; Fujinaga, A.; Orimoto, H.; Amano, S.; Kawasaki, T.; Kawano, Y.; Masuda, T.; Hirashita, T.; Kimura, M.; et al. Development of an artificial intelligence system for real-time intraoperative assessment of the Critical View of Safety in laparoscopic cholecystectomy. Surg. Endosc. 2023, 37, 8755–8763.

- Kolbinger, F.R.; Rinner, F.M.; Jenke, A.C.; Carstens, M.; Krell, S.; Leger, S.; Distler, M.; Weitz, J.; Speidel, S.; Bodenstedt, S. Anatomy segmentation in laparoscopic surgery: Comparison of machine learning and human expertise—An experimental study. Int. J. Surg. 2023, 109, 2962–2974.

- Kumazu, Y.; Kobayashi, N.; Senya, S.; Negishi, Y.; Kinoshita, K.; Fukui, Y.; Mita, K.; Osaragi, T.; Misumi, T.; Shinohara, H. AI-based visualization of loose connective tissue as a dissectable layer in gastrointestinal surgery. Sci. Rep. 2025, 15, 152.

- Ao, X.; Leng, Y.; Gan, Y.; Cheng, Z.; Lin, H.; QiaoLi, J.F.; Wang, J.; Wu, T.; Zhou, L.; Li, H.; et al. Gauze detection and segmentation in laparoscopic liver surgery: A multi-center study. Eur. J. Med. Res. 2025, 30, 905.

- Padoy, N. Machine and deep learning for workflow recognition during surgery. Minim. Invasive Ther. Allied Technol. 2019, 28, 82–90.

- Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Hasegawa, H.; Igaki, T.; Oda, T.; Ito, M. Deep learning-based automatic surgical step recognition in intraoperative videos for transanal total mesorectal excision. Surg. Endosc. 2022, 36, 1143–1151.

- Sasaki, K.; Ito, M.; Kobayashi, S.; Kitaguchi, D.; Matsuzaki, H.; Kudo, M.; Hasegawa, H.; Takeshita, N.; Sugimoto, M.; Mitsunaga, S.; et al. Automated surgical workflow identification by artificial intelligence in laparoscopic hepatectomy: Experimental research. Int. J. Surg. 2022, 105, 106856. [Google Scholar] [CrossRef]

- Ortenzi, M.; Ferman, J.R.; Antolin, A.; Bar, O.; Zohar, M.; Perry, O.; Asselmann, D.; Wolf, T. A novel high accuracy model for automatic surgical workflow recognition using artificial intelligence in laparoscopic totally extraperitoneal inguinal hernia repair (TEP). Surg. Endosc. 2023, 37, 8818–8828. [Google Scholar] [CrossRef]

- Liu, Y.; Boels, M.; Garcia-Peraza-Herrera, L.C.; Vercauteren, T.; Dasgupta, P.; Granados, A.; Ourselin, S. LoViT: Long Video Transformer for surgical phase recognition. Med. Image Anal. 2025, 99, 103366.

- Yang, H.Y.; Hong, S.S.; Yoon, J.; Park, B.; Yoon, Y.; Han, D.H.; Choi, G.H.; Choi, M.-K.; Kim, S.H. Deep learning-based surgical phase recognition in laparoscopic cholecystectomy. Ann. Hepatobiliary Pancreat. Surg. 2024, 28, 466–473.

- Komatsu, M.; Kitaguchi, D.; Yura, M.; Takeshita, N.; Yoshida, M.; Yamaguchi, M.; Kondo, H.; Kinoshita, T.; Ito, M. Automatic surgical phase recognition-based skill assessment in laparoscopic distal gastrectomy using multicenter videos. Gastric Cancer 2024, 27, 187–196.

- Matsumoto, S.; Kawahira, H.; Fukata, K.; Doi, Y.; Kobayashi, N.; Hosoya, Y.; Sata, N. Laparoscopic distal gastrectomy skill evaluation from video: A new artificial intelligence-based instrument identification system. Sci. Rep. 2024, 14, 12432.

- Ng, W.Y.; Li, Y.; Pan, T.; Sun, Y.; Dou, Q.; Heng, P.A.; Chiu, P.W.Y.; Li, Z. A Simultaneous Polyp and Lumen Detection Framework Toward Autonomous Robotic Colonoscopy. IEEE Trans. Med. Robot. Bionics 2024, 6, 163–174.

- Sharif, M.; Khan, M.A.; Rashid, M.; Yasmin, M.; Afza, F.; Tanik, U.J. Deep CNN and geometric features-based gastrointestinal tract diseases detection and classification from wireless capsule endoscopy images. J. Exp. Theor. Artif. Intell. 2021, 33, 577–599.

- Till, H.; Esposito, C.; Yeung, C.K.; Patkowski, D.; Shehata, S.; Rothenberg, S.; Singer, G.; Till, T. Artificial intelligence based surgical support for experimental laparoscopic Nissen fundoplication. Front. Pediatr. 2025, 13, 1584628.

- Low, C.H.; Wang, Z.; Zhang, T.; Zeng, Z.; Zhuo, Z.; Mazomenos, E.B.; Jin, Y. SurgRAW: Multi-Agent Workflow with Chain-of-Thought Reasoning for Surgical Intelligence. arXiv 2025, arXiv:2503.10265.

- Xu, M.; Huang, Z.; Zhang, J.; Zhang, X.; Dou, Q. Surgical Action Planning with Large Language Models. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2025; Gee, J.C., Alexander, D.C., Hong, J., Iglesias, J.E., Sudre, C.H., Venkataraman, A., Golland, P., Kim, J.H., Park, J., Eds.; Springer Nature: Cham, Switzerland, 2026; pp. 563–572.

- Ng, C.K.; Bai, L.; Wang, G.; Wang, Y.; Gao, H.; Kun, Y.; Jin, C.; Zeng, T.; Ren, H. EndoVLA: Dual-Phase Vision-Language-Action for Precise Autonomous Tracking in Endoscopy. In Proceedings of the 9th Annual Conference on Robot Learning, Seoul, Republic of Korea, 27–30 September 2025; PMLR 305, pp. 4958–4974.

- Leoncini, P.; Marzola, F.; Pescio, M.; Casadio, M.; Arezzo, A.; Dagnino, G. A reproducible framework for synthetic data generation and instance segmentation in robotic suturing. Int. J. Comput. Assist. Radiol. Surg. 2025, 20, 1567–1576.

- Addison, P.; Bitner, D.; Carsky, K.; Kutana, S.; Dechario, S.; Antonacci, A.; Mikhail, D.; Pettit, S.; Chung, P.J.; Filicori, F. Outcome prediction in bariatric surgery through video-based assessment. Surg. Endosc. 2023, 37, 3113–3118.

- Chen, K.A.; Joisa, C.U.; Stitzenberg, K.B.; Stem, J.; Guillem, J.G.; Gomez, S.M.; Kapadia, M.R. Development and Validation of Machine Learning Models to Predict Readmission After Colorectal Surgery. J. Gastrointest. Surg. 2022, 26, 2342–2350.

- Chung, J.H.; Kim, Y.; Lee, D.; Lim, D.; Hwang, S.-H.; Lee, S.-H.; Jung, W. Machine learning-based real-time prediction of duodenal stump leakage from gastrectomy in gastric cancer patients. Front. Surg. 2025, 12, 1550990.

- Igaki, T.; Kitaguchi, D.; Matsuzaki, H.; Nakajima, K.; Kojima, S.; Hasegawa, H.; Takeshita, N.; Kinugasa, Y.; Ito, M. Automatic Surgical Skill Assessment System Based on Concordance of Standardized Surgical Field Development Using Artificial Intelligence. JAMA Surg. 2023, 158, e231131.

- Kiyasseh, D.; Laca, J.; Haque, T.F.; Miles, B.J.; Wagner, C.; Donoho, D.A.; Anandkumar, A.; Hung, A.J. A multi-institutional study using artificial intelligence to provide reliable and fair feedback to surgeons. Commun. Med. 2023, 3, 42.

- Kwong, M.; Noorchenarboo, M.; Grolinger, K.; Hawel, J.; Schlachta, C.M.; Elnahas, A. Optimizing surgical efficiency: Predicting case duration of common general surgery procedures using machine learning. Surg. Endosc. 2025, 39, 5227–5234.

- Zhao, B.; Waterman, R.S.; Urman, R.D.; Gabriel, R.A. A Machine Learning Approach to Predicting Case Duration for Robot-Assisted Surgery. J. Med. Syst. 2019, 43, 32.

- Lavanchy, J.L.; Zindel, J.; Kirtac, K.; Twick, I.; Hosgor, E.; Candinas, D.; Beldi, G. Automation of surgical skill assessment using a three-stage machine learning algorithm. Sci. Rep. 2021, 11, 5197.

- Li, R.; Zhao, Z.; Yu, J. Development and validation of a machine learning model for predicting early postoperative complications after radical gastrectomy. Front. Oncol. 2025, 15, 1631260.

- Mascarenhas, M.; Mendes, F.; Fonseca, F.; Carvalho, E.; Santos, A.; Cavadas, D.; Barbosa, G.; da Costa, A.P.; Martins, M.; Bunaiyan, A.; et al. A Novel Deep Learning Model for Predicting Colorectal Anastomotic Leakage: A Pioneer Multi-center Transatlantic Study. J. Clin. Med. 2025, 14, 5462.

- Ouakasse, F.; Mosaif, A. Integrating IoMT and AI for Real-Time Surgical Risk Assessment and Decision Support. In Proceedings of the 2025 International Conference on Circuit, Systems and Communication (ICCSC), Fez, Morocco, 19–20 June 2025; pp. 1–4.

- Pretto, G.; Silva, D.d.O.; Morales, V.H.d.F. Artificial Intelligence as a Tool to Support Decision-Making in the Management of Intraoperative Hypotension. Int. J. Cardiovasc. Sci. 2025, 38, e20240221.

- Ruan, X.; Fu, S.; Storlie, C.B.; Mathis, K.L.; Larson, D.W.; Liu, H. Real-time risk prediction of colorectal surgery-related post-surgical complications using GRU-D model. J. Biomed. Inform. 2022, 135, 104202.

- Wen, R.; Zheng, K.; Zhang, Q.; Zhou, L.; Liu, Q.; Yu, G.; Gao, X.; Hao, L.; Lou, Z.; Zhang, W. Machine learning-based random forest predicts anastomotic leakage after anterior resection for rectal cancer. J. Gastrointest. Oncol. 2021, 12, 921–932.

- Yanagida, Y.; Takenaka, S.; Kitaguchi, D.; Hamano, S.; Tanaka, A.; Mitarai, H.; Suzuki, R.; Sasaki, K.; Takeshita, N.; Ishimaru, T.; et al. Surgical skill assessment using an AI-based surgical phase recognition model for laparoscopic cholecystectomy. Surg. Endosc. 2025, 39, 5018–5026.

- Zeng, S.; Li, L.; Hu, Y.; Luo, L.; Fang, Y. Machine learning approaches for the prediction of postoperative complication risk in liver resection patients. BMC Med. Inf. Decis. Mak. 2021, 21, 371.

- Zhou, C.-M.; Li, H.; Xue, Q.; Yang, J.-J.; Zhu, Y. Artificial intelligence algorithms for predicting post-operative ileus after laparoscopic surgery. Heliyon 2024, 10, e26580.

- Yu, X.; Wang, Z.; Wu, J.; Weng, D. Artificial intelligence-based perioperative safety verification system improved the performance of surgical safety verification execution. Am. J. Transl. Res. 2024, 16, 1295–1305.

- Grüne, B.; Burger, R.; Bauer, D.; Schäfer, A.; Rothfuss, A.; Stallkamp, J.; Rassweiler, J.; Kriegmair, M.C.; Rassweiler-Seyfried, M.-C. Robotic-assisted versus manual Uro Dyna-CT-guided puncture in an ex-vivo kidney phantom. Minim. Invasive Ther. Allied Technol. 2024, 33, 102–108.

- Lee, A.; Baker, T.S.; Bederson, J.B.; Rapoport, B.I. Levels of autonomy in FDA-cleared surgical robots: A systematic review. npj Digit. Med. 2024, 7, 103.

- Yang, G.-Z.; Cambias, J.; Cleary, K.; Daimler, E.; Drake, J.; Dupont, P.E.; Hata, N.; Kazanzides, P.; Martel, S.; Patel, R.V.; et al. Medical robotics—Regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci. Robot. 2017, 2, eaam8638.

- Shademan, A.; Decker, R.S.; Opfermann, J.D.; Leonard, S.; Krieger, A.; Kim, P.C.W. Supervised autonomous robotic soft tissue surgery. Sci. Transl. Med. 2016, 8, 337ra64.

- Saeidi, H.; Opfermann, J.D.; Kam, M.; Wei, S.; Leonard, S.; Hsieh, M.H.; Kang, J.U.; Krieger, A. Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci. Robot. 2022, 7, eabj2908.

- Gruijthuijsen, C.; Garcia-Peraza-Herrera, L.C.; Borghesan, G.; Reynaerts, D.; Deprest, J.; Ourselin, S.; Vercauteren, T.; Poorten, E.V. Robotic Endoscope Control Via Autonomous Instrument Tracking. Front. Robot. AI 2022, 9, 832208.

- Ma, X.; Song, C.; Chiu, P.W.; Li, Z. Autonomous Flexible Endoscope for Minimally Invasive Surgery with Enhanced Safety. IEEE Robot. Autom. Lett. 2019, 4, 2607–2613.

- Wagner, M.; Bihlmaier, A.; Kenngott, H.G.; Mietkowski, P.; Scheikl, P.M.; Bodenstedt, S.; Schiepe-Tiska, A.; Vetter, J.; Nickel, F.; Speidel, S.; et al. A learning robot for cognitive camera control in minimally invasive surgery. Surg. Endosc. 2021, 35, 5365–5374.

- Kazanzides, P.; Chen, Z.; Deguet, A.; Fischer, G.S.; Taylor, R.H.; DiMaio, S.P. An open-source research kit for the da Vinci® Surgical System. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 6434–6439.

- Paul, R.A.; Jawad, L.; Shankar, A.; Majumdar, M.; Herrick-Thomason, T.; Pandya, A. Evaluation of a Voice-Enabled Autonomous Camera Control System for the da Vinci Surgical Robot. Robotics 2024, 13, 10.

- Yu, L.; Xia, Y.; Wang, P.; Sun, L. Automatic adjustment of laparoscopic pose using deep reinforcement learning. Mech. Sci. 2022, 13, 593–602.

- Shi, J.; Zhou, C.; Wang, L.; Lin, W.; Zhou, S.; He, Z.; Ma, H.; Hu, J.; Feng, D. Hands-Free Camera Assistant: Autonomous Laparoscope Manipulation in Robot-Assisted Surgery. Robot. Comput. Surg. 2025, 21, e70103.

- Ou, Y.; Soleymani, A.; Li, X.; Tavakoli, M. Autonomous Blood Suction for Robot-Assisted Surgery: A Sim-to-Real Reinforcement Learning Approach. IEEE Robot. Autom. Lett. 2024, 9, 7246–7253.

- Zargarzadeh, S.; Mirzaei, M.; Ou, Y.; Tavakoli, M. From Decision to Action in Surgical Autonomy: Multi-Modal Large Language Models for Robot-Assisted Blood Suction. IEEE Robot. Autom. Lett. 2025, 10, 2598–2605.

- Singh, A.; Shi, W.; Wang, M.D. Autonomous Soft Tissue Retraction Using Demonstration-Guided Reinforcement Learning. arXiv 2023, arXiv:2309.00837.

- Scheikl, P.M.; Tagliabue, E.; Gyenes, B.; Wagner, M.; Dall’Alba, D.; Fiorini, P.; Mathis-Ullrich, F. Sim-to-Real Transfer for Visual Reinforcement Learning of Deformable Object Manipulation for Robot-Assisted Surgery. IEEE Robot. Autom. Lett. 2023, 8, 560–567.

- Shahkoo, A.A.; Abin, A.A. Autonomous Tissue Manipulation via Surgical Robot Using Deep Reinforcement Learning and Evolutionary Algorithm. IEEE Trans. Med. Robot. Bionics 2023, 5, 30–41.

- Hari, K.; Kim, H.; Panitch, W.; Srinivas, K.; Schorp, V.; Dharmarajan, K.; Ganti, S.; Sadjadpour, T.; Goldberg, K. STITCH: Augmented Dexterity for Suture Throws Including Thread Coordination and Handoffs. arXiv 2024, arXiv:2404.05151.

- Hari, K.; Chen, Z.; Kim, H.; Goldberg, K. STITCH 2.0: Extending Augmented Suturing with EKF Needle Estimation and Thread Management. IEEE Robot. Autom. Lett. 2025, 10, 12700–12707.

- Vargas, H.F.; Vivas, A.; Bastidas, S.; Gomez, H.; Correa, K.; Muñoz, V. Advances towards autonomous robotic suturing: Integration of finite element force analysis and instantaneous wound detection through deep learning. Biomed. Signal Process. Control 2025, 101, 107181.

- Varier, V.M.; Rajamani, D.K.; Goldfarb, N.; Tavakkolmoghaddam, F.; Munawar, A.; Fischer, G.S. Collaborative Suturing: A Reinforcement Learning Approach to Automate Hand-off Task in Suturing for Surgical Robots. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 1380–1386.

- Xu, W.; Tan, Z.; Cao, Z.; Ma, H.; Wang, G.; Wang, H.; Wang, W.; Du, Z. DP4AuSu: Autonomous Surgical Framework for Suturing Manipulation Using Diffusion Policy with Dynamic Time Wrapping-Based Locally Weighted Regression. Int. J. Med. Robot. Comput. Assist. Surg. 2025, 21, e70072.

- Haworth, J.; Chen, J.-T.; Nelson, N.; Kim, J.W.; Moghani, M.; Finn, C.; Krieger, A. SutureBot: A Precision Framework & Benchmark For Autonomous End-to-End Suturing. arXiv 2025, arXiv:2510.20965.

- Kim, J.W.; Zhao, T.Z.; Schmidgall, S.; Deguet, A.; Kobilarov, M.; Finn, C.; Krieger, A. Surgical Robot Transformer (SRT): Imitation Learning for Surgical Tasks. arXiv 2024, arXiv:2407.12998.

- Abdelaal, A.E.; Fang, J.; Reinhart, T.N.; Mejia, J.A.; Zhao, T.Z.; Bohg, J.; Okamura, A.M. Force-Aware Autonomous Robotic Surgery. arXiv 2025, arXiv:2501.11742.

- Kim, J.W.; Chen, J.-T.; Hansen, P.; Shi, L.X.; Goldenberg, A.; Schmidgall, S.; Scheikl, P.M.; Deguet, A.; White, B.M.; Tsai, D.R.; et al. SRT-H: A Hierarchical Framework for Autonomous Surgery via Language Conditioned Imitation Learning. Sci. Robot. 2025, 10, eadt5254.