Artificial Intelligence Applications in Interventional Radiology

Abstract

1. Introduction

2. Pre-Procedural Applications of AI in IR

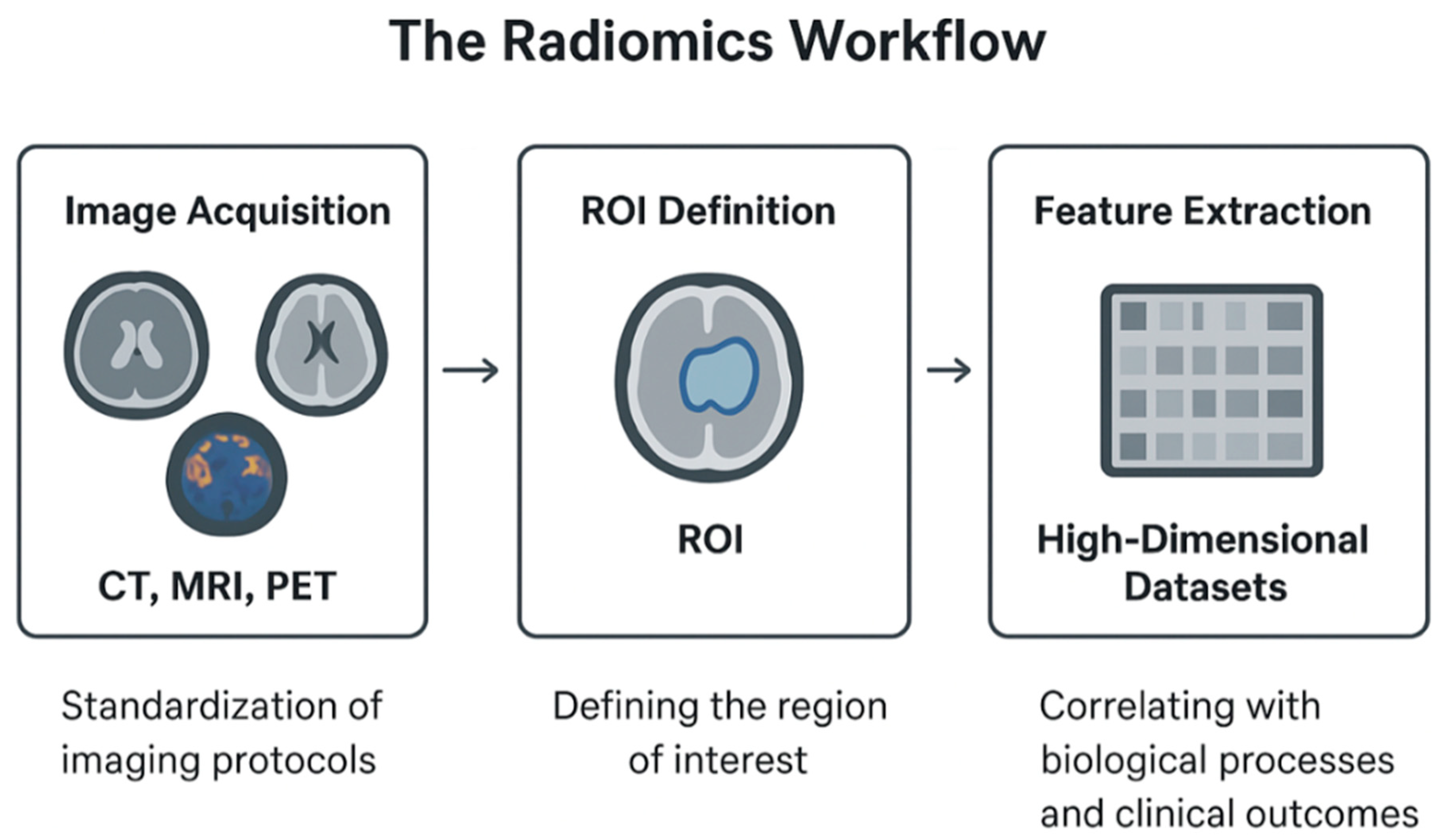

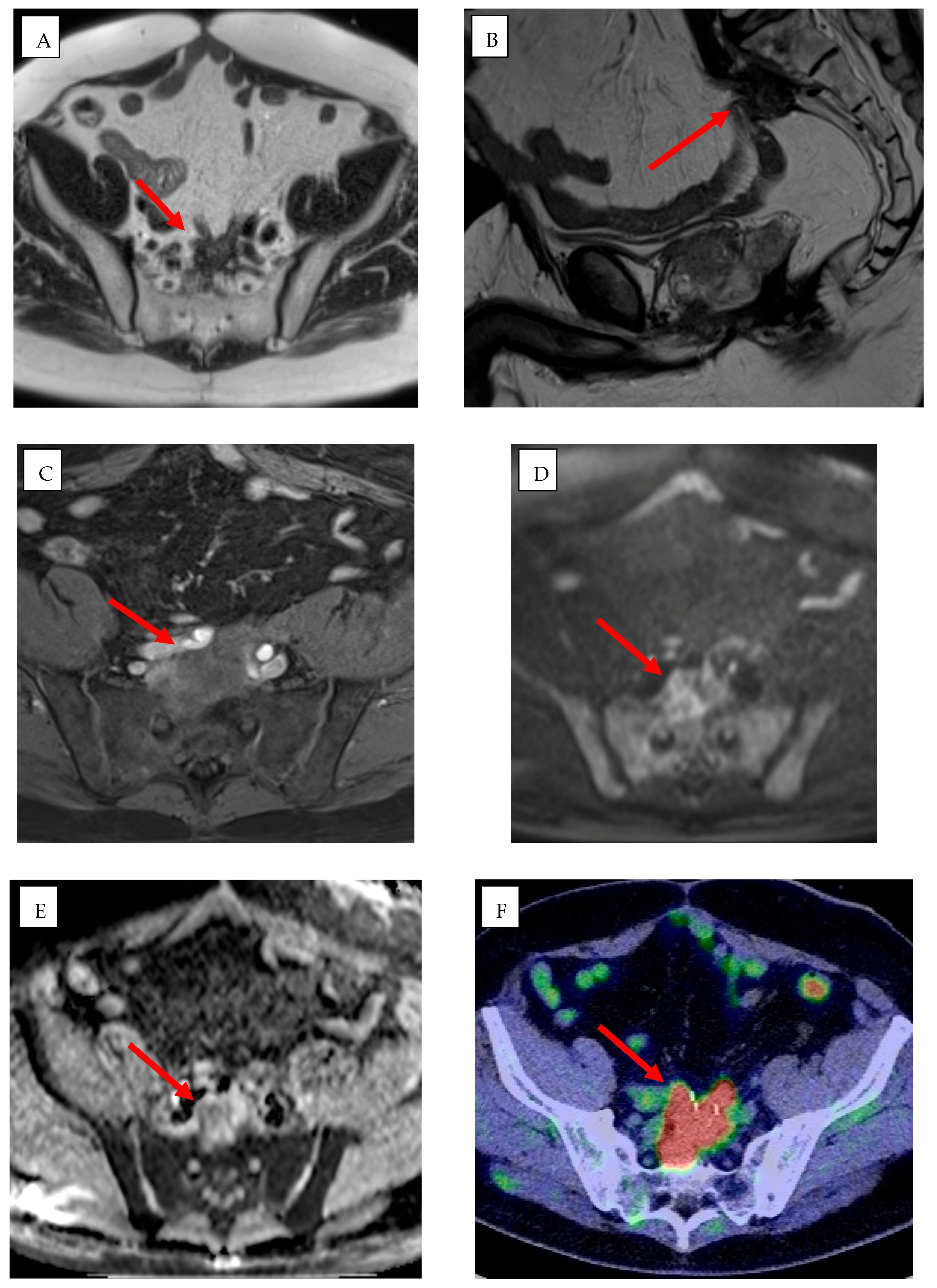

2.1. Radiomics: Principles and Clinical Applications in IR

2.2. Virtual Reality in Learning

2.3. Advancing Medical Education Through Three-Dimensional (3D) Modeling

3. Intra-Procedural Applications of AI in IR

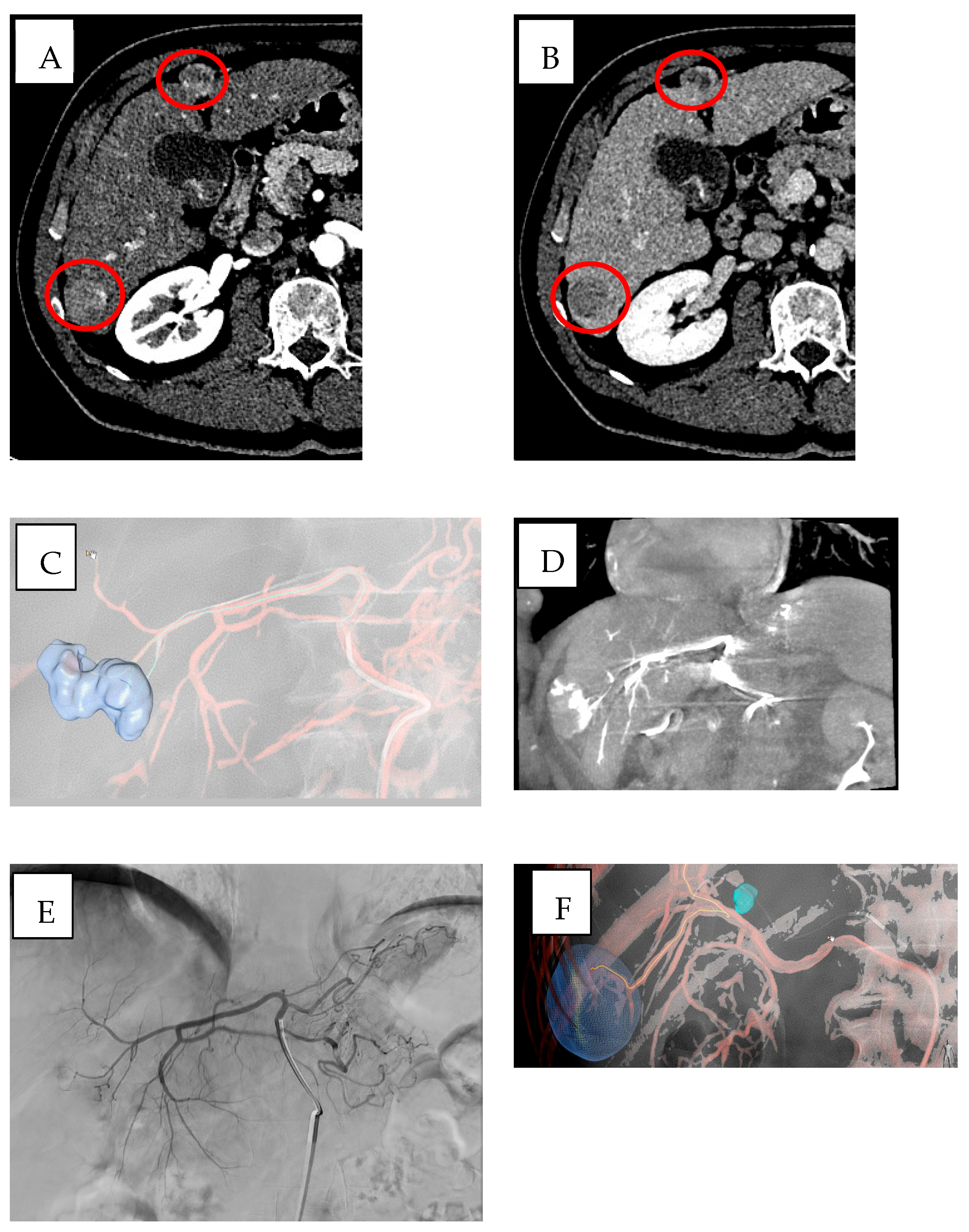

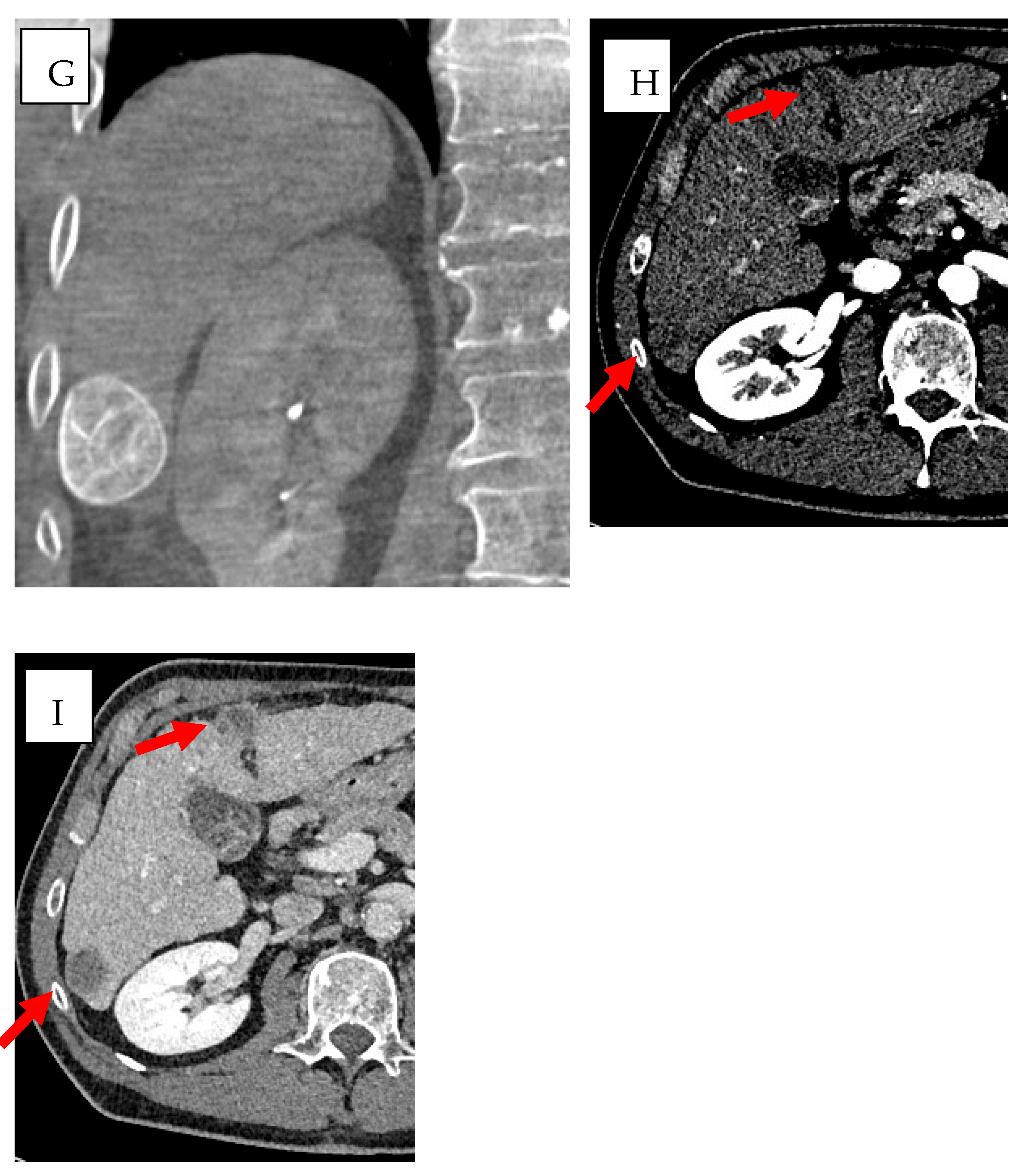

3.1. Cone-Beam Computed Tomography (CBCT) and Imaging Guidance Software

3.2. Intraoperative Applications of VR

3.3. Robotics

- Table-Mounted Systems: These systems manipulate the needle under imaging guidance and have shown high accuracy in clinical settings [80];

- Floor-Mounted Systems: These devices can hold and orient needles and have demonstrated improved accuracy in phantom and animal studies [80];

- Patient-Mounted Systems: These systems offer ergonomic advantages and have shown promising results in clinical trials [80].
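The accuracy claims for these mounting configurations are often reported as targeting error: the straight-line distance between the planned target and the needle tip actually achieved, measured on the confirmation scan. The sketch below shows that calculation. It assumes that both points are given in millimetres in a shared image coordinate frame. The function names `targeting_error_mm` and `summarize_accuracy` are hypothetical and do not belong to any robotic system's software.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple

# Hypothetical representation: (x, y, z) in millimetres, same image coordinate frame
Point = Tuple[float, float, float]


def targeting_error_mm(planned: Point, achieved: Point) -> float:
    """Euclidean tip-to-target distance in mm (requires Python 3.8+ for math.dist)."""
    return math.dist(planned, achieved)


def summarize_accuracy(
    planned: Sequence[Point], achieved: Sequence[Point]
) -> Dict[str, float]:
    """Summarize per-needle targeting errors across a series of insertions."""
    if len(planned) != len(achieved) or not planned:
        raise ValueError("need equal, non-empty sequences of planned and achieved points")
    errors = [targeting_error_mm(p, a) for p, a in zip(planned, achieved)]
    return {
        "n": len(errors),
        "mean_mm": statistics.mean(errors),
        "median_mm": statistics.median(errors),
        "max_mm": max(errors),
    }


if __name__ == "__main__":
    # Illustrative coordinates only, not data from any cited study
    planned = [(0.0, 0.0, 0.0), (10.0, 10.0, 10.0)]
    achieved = [(3.0, 4.0, 0.0), (10.0, 10.0, 12.0)]
    print(summarize_accuracy(planned, achieved))
```

Reporting the median and maximum alongside the mean is a deliberate choice. A single large miss can matter clinically even when the average error is small.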

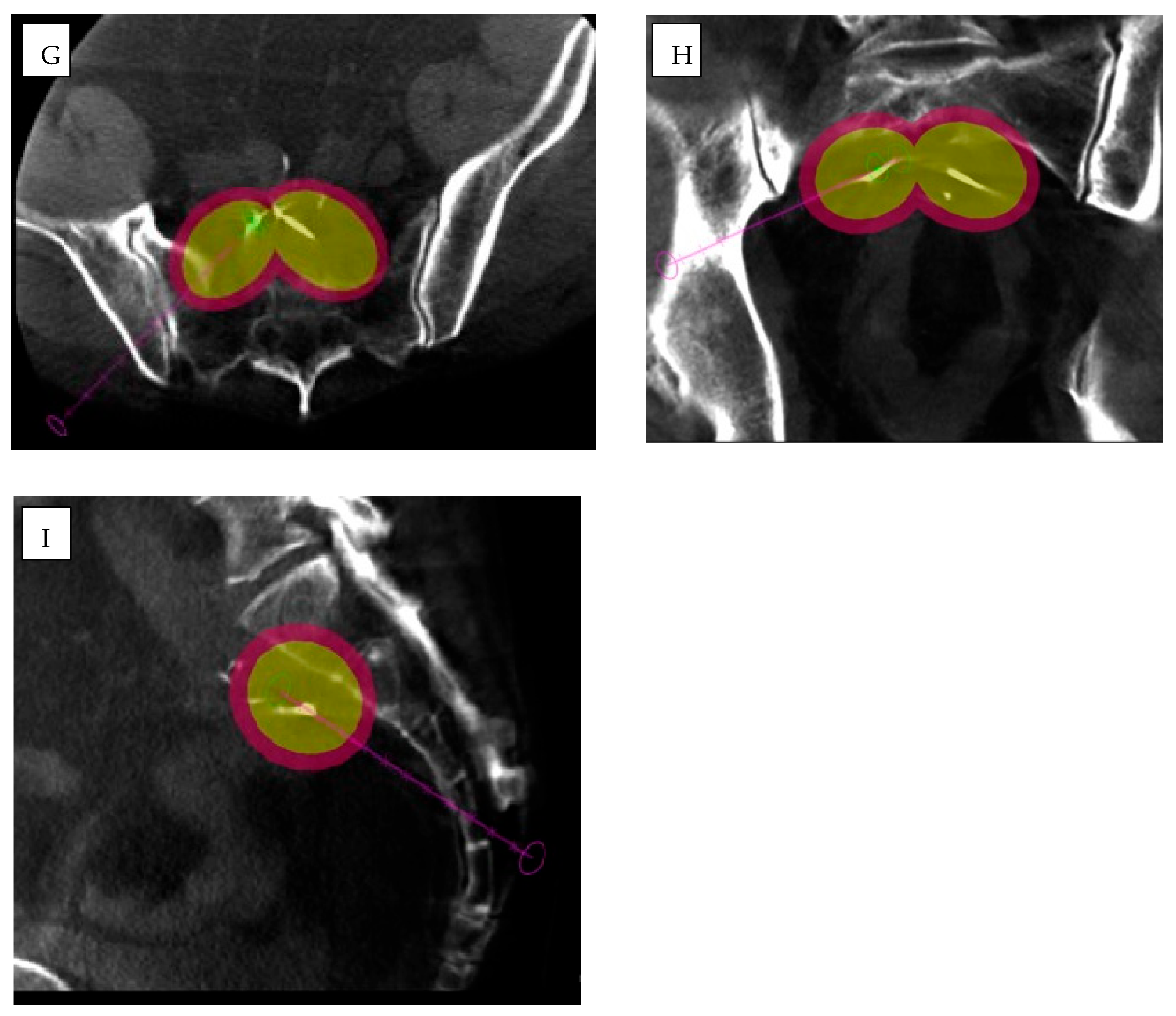

3.3.1. Percutaneous Applications

3.3.2. Endovascular Applications

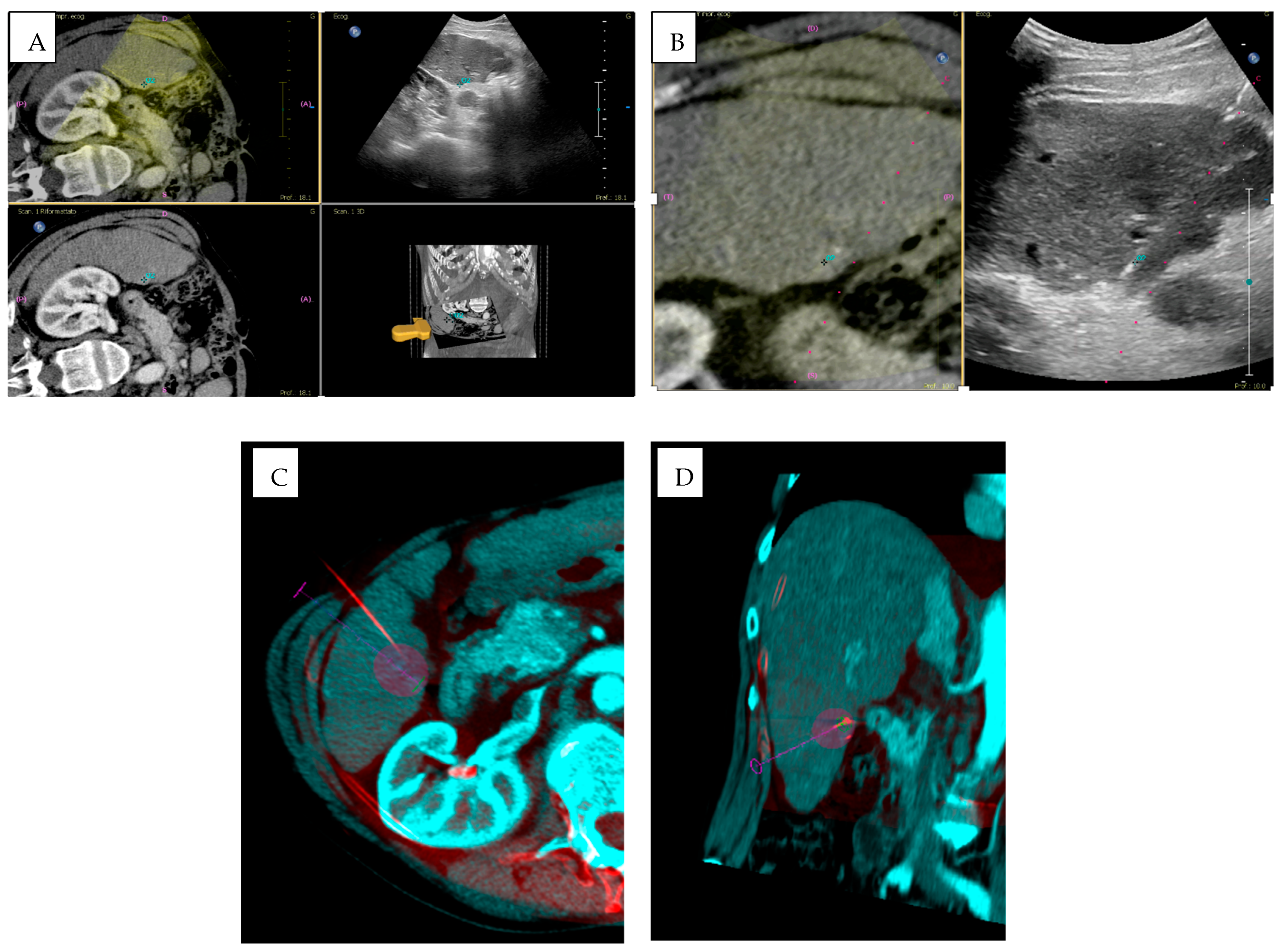

3.4. Imaging Fusion

4. Post-Procedural Applications of AI in IR

5. Discussion

6. Future Perspectives

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Iezzi, R.; Goldberg, S.N.; Merlino, B.; Posa, A.; Valentini, V.; Manfredi, R. Artificial Intelligence in Interventional Radiology: A Literature Review and Future Perspectives. J. Oncol. 2019, 2019, 6153041.

- Waller, J.; O’Connor, A.; Rafaat, E.; Amireh, A.; Dempsey, J.; Martin, C.; Umair, M. Applications and challenges of artificial intelligence in diagnostic and interventional radiology. Pol. J. Radiol. 2022, 87, e113–e117.

- Abajian, A.; Murali, N.; Savic, L.J.; Laage-Gaupp, F.M.; Nezami, N.; Duncan, J.S.; Lin, M.; Geschwind, J.F.; Chapiro, J. Predicting Treatment Response to Intra-arterial Therapies for Hepatocellular Carcinoma with the Use of Supervised Machine Learning-An Artificial Intelligence Concept. J. Vasc. Interv. Radiol. 2018, 29, 850–857.e1.

- Letzen, B.; Wang, C.J.; Chapiro, J. The Role of Artificial Intelligence in Interventional Oncology: A Primer. J. Vasc. Interv. Radiol. 2019, 30, 38–41.e1.

- Chartrand, G.; Cheng, P.M.; Vorontsov, E.; Drozdzal, M.; Turcotte, S.; Pal, C.J.; Kadoury, S.; Tang, A. Deep Learning: A Primer for Radiologists. Radiographics 2017, 37, 2113–2131.

- Najafi, A.; Cazzato, R.L.; Meyer, B.C.; Pereira, P.L.; Alberich, A.; Lopez, A.; Ronot, M.; Fritz, J.; Maas, M.; Benson, S.; et al. CIRSE Position Paper on Artificial Intelligence in Interventional Radiology. Cardiovasc. Intervent. Radiol. 2023, 46, 1303–1307.

- Lesaunier, A.; Khlaut, J.; Dancette, C.; Tselikas, L.; Bonnet, B.; Boeken, T. Artificial intelligence in interventional radiology: Current concepts and future trends. Diagn. Interv. Imaging 2025, 106, 5–10.

- von Ende, E.; Ryan, S.; Crain, M.A.; Makary, M.S. Artificial Intelligence, Augmented Reality, and Virtual Reality Advances and Applications in Interventional Radiology. Diagnostics 2023, 13, 892.

- Nardone, V.; Reginelli, A.; Rubini, D.; Gagliardi, F.; Del Tufo, S.; Belfiore, M.P.; Boldrini, L.; Desideri, I.; Cappabianca, S. Delta radiomics: An updated systematic review. Radiol. Med. 2024, 129, 1197–1214.

- Barral, M.; Lefevre, A.; Camparo, P.; Hoogenboom, M.; Pierre, T.; Soyer, P.; Cornud, F. In-Bore Transrectal MRI-Guided Biopsy With Robotic Assistance in the Diagnosis of Prostate Cancer: An Analysis of 57 Patients. AJR Am. J. Roentgenol. 2019, 213, W171–W179.

- Zhou, G.; Liu, W.; Zhang, Y.; Gu, W.; Li, M.; Lu, C.; Zhou, R.; Che, Y.; Lu, H.; Zhu, Y.; et al. Application of three-dimensional printing in interventional medicine. J. Interv. Med. 2020, 3, 1–16.

- Sequeira, C.; Oliveira-Santos, M.; Borges Rosa, J.; Silva Marques, J.; Oliveira Santos, E.; Norte, G.; Gonçalves, L. Three-dimensional simulation for interventional cardiology procedures: Face and content validity. Rev. Port. Cardiol. 2024, 43, 389–396.

- Mosconi, C.; Cucchetti, A.; Bruno, A.; Cappelli, A.; Bargellini, I.; De Benedittis, C.; Lorenzoni, G.; Gramenzi, A.; Tarantino, F.P.; Parini, L.; et al. Radiomics of cholangiocarcinoma on pretreatment CT can identify patients who would best respond to radioembolisation. Eur. Radiol. 2020, 30, 4534–4544.

- Li, H.; Xu, H.; Li, Y.; Li, X. Application of artificial intelligence (AI)-enhanced biochemical sensing in molecular diagnosis and imaging analysis: Advancing and challenges. TrAC Trends Anal. Chem. 2024, 174, 117700.

- Ferrari, R.; Trinci, M.; Casinelli, A.; Treballi, F.; Leone, E.; Caruso, D.; Polici, M.; Faggioni, L.; Neri, E.; Galluzzo, M. Radiomics in radiology: What the radiologist needs to know about technical aspects and clinical impact. Radiol. Med. 2024, 129, 1751–1765.

- Seong, H.; Yun, D.; Yoon, K.S.; Kwak, J.S.; Koh, J.C. Development of pre-procedure virtual simulation for challenging interventional procedures: An experimental study with clinical application. Korean J. Pain 2022, 35, 403–412.

- Gent, D.; Kainth, R. Simulation-based procedure training (SBPT) in rarely performed procedures: A blueprint for theory-informed design considerations. Adv. Simul. 2022, 7, 13.

- Gurgitano, M.; Angileri, S.A.; Roda, G.M.; Liguori, A.; Pandolfi, M.; Ierardi, A.M.; Wood, B.J.; Carrafiello, G. Interventional Radiology ex-machina: Impact of Artificial Intelligence on practice. Radiol. Med. 2021, 126, 998–1006.

- D’Amore, B.; Smolinski-Zhao, S.; Daye, D.; Uppot, R.N. Role of Machine Learning and Artificial Intelligence in Interventional Oncology. Curr. Oncol. Rep. 2021, 23, 70.

- Bang, J.Y.; Hough, M.; Hawes, R.H.; Varadarajulu, S. Use of Artificial Intelligence to Reduce Radiation Exposure at Fluoroscopy-Guided Endoscopic Procedures. Am. J. Gastroenterol. 2020, 115, 555–561.

- Zimmermann, J.M.; Vicentini, L.; Van Story, D.; Pozzoli, A.; Taramasso, M.; Lohmeyer, Q.; Maisano, F.; Meboldt, M. Quantification of Avoidable Radiation Exposure in Interventional Fluoroscopy With Eye Tracking Technology. Investig. Radiol. 2020, 55, 457–462.

- Yin, Y.; de Haas, R.J.; Alves, N.; Pennings, J.P.; Ruiter, S.J.S.; Kwee, T.C.; Yakar, D. Machine learning-based radiomic analysis and growth visualization for ablation site recurrence diagnosis in follow-up CT. Abdom. Radiol. 2024, 49, 1122–1131.

- Lim, S.; Shin, Y.; Lee, Y.H. Arterial enhancing local tumor progression detection on CT images using convolutional neural network after hepatocellular carcinoma ablation: A preliminary study. Sci. Rep. 2022, 12, 1754.

- Moon, C.M.; Lee, Y.Y.; Kim, S.K.; Jeong, Y.Y.; Heo, S.H.; Shin, S.S. Four-dimensional flow MR imaging for evaluating treatment response after transcatheter arterial chemoembolization in cirrhotic patients with hepatocellular carcinoma. Radiol. Med. 2023, 128, 1163–1173.

- Gillies, R.J.; Kinahan, P.E.; Hricak, H. Radiomics: Images Are More than Pictures, They Are Data. Radiology 2016, 278, 563–577.

- Avery, E.; Sanelli, P.C.; Aboian, M.; Payabvash, S. Radiomics: A Primer on Processing Workflow and Analysis. Semin. Ultrasound CT MRI 2022, 43, 142–146.

- Deng, K.; Chen, T.; Leng, Z.; Yang, F.; Lu, T.; Cao, J.; Pan, W.; Zheng, Y. Radiomics as a tool for prognostic prediction in transarterial chemoembolization for hepatocellular carcinoma: A systematic review and meta-analysis. Radiol. Med. 2024, 129, 1099–1117.

- Orlhac, F.; Nioche, C.; Klyuzhin, I.; Rahmim, A.; Buvat, I. Radiomics in PET Imaging: A Practical Guide for Newcomers. PET Clin. 2021, 16, 597–612.

- Tomaszewski, M.R.; Gillies, R.J. The Biological Meaning of Radiomic Features. Radiology 2021, 298, 505–516.

- Varghese, B.A.; Cen, S.Y.; Hwang, D.H.; Duddalwar, V.A. Texture Analysis of Imaging: What Radiologists Need to Know. AJR Am. J. Roentgenol. 2019, 212, 520–528.

- Yamada, A.; Kamagata, K.; Hirata, K.; Ito, R.; Nakaura, T.; Ueda, D.; Fujita, S.; Fushimi, Y.; Fujima, N.; Matsui, Y.; et al. Clinical applications of artificial intelligence in liver imaging. Radiol. Med. 2023, 128, 655–667.

- Triggiani, S.; Contaldo, M.T.; Mastellone, G.; Ce, M.; Ierardi, A.M.; Carrafiello, G.; Cellina, M. The Role of Artificial Intelligence and Texture Analysis in Interventional Radiological Treatments of Liver Masses: A Narrative Review. Crit. Rev. Oncog. 2024, 29, 37–52.

- Mamone, G.; Comelli, A.; Porrello, G.; Milazzo, M.; Di Piazza, A.; Stefano, A.; Benfante, V.; Tuttolomondo, A.; Sparacia, G.; Maruzzelli, L.; et al. Radiomics Analysis of Preprocedural CT Imaging for Outcome Prediction after Transjugular Intrahepatic Portosystemic Shunt Creation. Life 2024, 14, 726.

- Tabari, A.; D’Amore, B.; Cox, M.; Brito, S.; Gee, M.S.; Wehrenberg-Klee, E.; Uppot, R.N.; Daye, D. Machine Learning-Based Radiomic Features on Pre-Ablation MRI as Predictors of Pathologic Response in Patients with Hepatocellular Carcinoma Who Underwent Hepatic Transplant. Cancers 2023, 15, 2058.

- Li, L.; Wu, C.; Huang, Y.; Chen, J.; Ye, D.; Su, Z. Radiomics for the Preoperative Evaluation of Microvascular Invasion in Hepatocellular Carcinoma: A Meta-Analysis. Front. Oncol. 2022, 12, 831996.

- He, Y.; Qian, J.; Zhu, G.; Wu, Z.; Cui, L.; Tu, S.; Luo, L.; Shan, R.; Liu, L.; Shen, W.; et al. Development and validation of nomograms to evaluate the survival outcome of HCC patients undergoing selective postoperative adjuvant TACE. Radiol. Med. 2024, 129, 653–664.

- Yang, C.; Yang, H.C.; Luo, Y.G.; Li, F.T.; Cong, T.H.; Li, Y.J.; Ye, F.; Li, X. Predicting Survival Using Whole-Liver MRI Radiomics in Patients with Hepatocellular Carcinoma After TACE Refractoriness. Cardiovasc. Intervent. Radiol. 2024, 47, 964–977.

- Bernatz, S.; Elenberger, O.; Ackermann, J.; Lenga, L.; Martin, S.S.; Scholtz, J.E.; Koch, V.; Grünewald, L.D.; Herrmann, Y.; Kinzler, M.N.; et al. CT-radiomics and clinical risk scores for response and overall survival prognostication in TACE HCC patients. Sci. Rep. 2023, 13, 533.

- Zhang, L.; Jin, Z.; Li, C.; He, Z.; Zhang, B.; Chen, Q.; You, J.; Ma, X.; Shen, H.; Wang, F.; et al. An interpretable machine learning model based on contrast-enhanced CT parameters for predicting treatment response to conventional transarterial chemoembolization in patients with hepatocellular carcinoma. Radiol. Med. 2024, 129, 353–367.

- Wang, C.; Leng, B.; You, R.; Yu, Z.; Lu, Y.; Diao, L.; Jiang, H.; Cheng, Y.; Yin, G.; Xu, Q. A Transcriptomic Biomarker for Predicting the Response to TACE Correlates with the Tumor Microenvironment and Radiomics Features in Hepatocellular Carcinoma. J. Hepatocell. Carcinoma 2024, 11, 2321–2337.

- Ma, M.; Gu, W.; Liang, Y.; Han, X.; Zhang, M.; Xu, M.; Gao, H.; Tang, W.; Huang, D. A novel model for predicting postoperative liver metastasis in R0 resected pancreatic neuroendocrine tumors: Integrating computational pathology and deep learning-radiomics. J. Transl. Med. 2024, 22, 768.

- Gelmini, A.Y.P.; Duarte, M.L.; de Assis, A.M.; Guimaraes Junior, J.B.; Carnevale, F.C. Virtual reality in interventional radiology education: A systematic review. Radiol. Bras. 2021, 54, 254–260.

- Li, B.; Eisenberg, N.; Beaton, D.; Lee, D.S.; Al-Omran, L.; Wijeysundera, D.N.; Hussain, M.A.; Rotstein, O.D.; de Mestral, C.; Mamdani, M.; et al. Predicting inferior vena cava filter complications using machine learning. J. Vasc. Surg. Venous. Lymphat. Disord. 2024, 12, 101943.

- Tortora, M.; Luppi, A.; Pacchiano, F.; Marisei, M.; Grassi, F.; Werner, H.; Kitamura, F.C.; Tortora, F.; Caranci, F.; Ferraciolli, S.F. Current applications and future perspectives of extended reality in radiology. Radiol. Med. 2025, 130, 905–920.

- Gould, D. Using simulation for interventional radiology training. Br. J. Radiol. 2010, 83, 546–553.

- Chaer, R.A.; Derubertis, B.G.; Lin, S.C.; Bush, H.L.; Karwowski, J.K.; Birk, D.; Morrissey, N.J.; Faries, P.L.; McKinsey, J.F.; Kent, K.C. Simulation improves resident performance in catheter-based intervention: Results of a randomized, controlled study. Ann. Surg. 2006, 244, 343–352.

- Knudsen, B.E.; Matsumoto, E.D.; Chew, B.H.; Johnson, B.; Margulis, V.; Cadeddu, J.A.; Pearle, M.S.; Pautler, S.E.; Denstedt, J.D. A randomized, controlled, prospective study validating the acquisition of percutaneous renal collecting system access skills using a computer based hybrid virtual reality surgical simulator: Phase I. J. Urol. 2006, 176, 2173–2178.

- Kaufmann, R.; Zech, C.J.; Takes, M.; Brantner, P.; Thieringer, F.; Deutschmann, M.; Hergan, K.; Scharinger, B.; Hecht, S.; Rezar, R.; et al. Vascular 3D Printing with a Novel Biological Tissue Mimicking Resin for Patient-Specific Procedure Simulations in Interventional Radiology: A Feasibility Study. J. Digit. Imaging 2022, 35, 9–20.

- Tenewitz, C.; Le, R.T.; Hernandez, M.; Baig, S.; Meyer, T.E. Systematic review of three-dimensional printing for simulation training of interventional radiology trainees. 3D Print Med. 2021, 7, 10.

- Bini, F.; Missori, E.; Pucci, G.; Pasini, G.; Marinozzi, F.; Forte, G.I.; Russo, G.; Stefano, A. Preclinical Implementation of matRadiomics: A Case Study for Early Malformation Prediction in Zebrafish Model. J. Imaging 2024, 10, 290.

- Barral, M.; Chevallier, O.; Cornelis, F.H. Perspectives of Cone-beam Computed Tomography in Interventional Radiology: Techniques for Planning, Guidance, and Monitoring. Tech. Vasc. Interv. Radiol. 2023, 26, 100912.

- Racadio, J.M.; Babic, D.; Homan, R.; Rampton, J.W.; Patel, M.N.; Racadio, J.M.; Johnson, N.D. Live 3D guidance in the interventional radiology suite. AJR Am. J. Roentgenol. 2007, 189, W357–W364.

- Monfardini, L.; Orsi, F.; Caserta, R.; Sallemi, C.; Della Vigna, P.; Bonomo, G.; Varano, G.; Solbiati, L.; Mauri, G. Ultrasound and cone beam CT fusion for liver ablation: Technical note. Int. J. Hyperth. 2018, 35, 500–504.

- Key, B.M.; Tutton, S.M.; Scheidt, M.J. Cone-Beam CT With Enhanced Needle Guidance and Augmented Fluoroscopy Overlay: Applications in Interventional Radiology. AJR Am. J. Roentgenol. 2023, 221, 92–101.

- Morimoto, M.; Numata, K.; Kondo, M.; Nozaki, A.; Hamaguchi, S.; Takebayashi, S.; Tanaka, K. C-arm cone beam CT for hepatic tumor ablation under real-time 3D imaging. AJR Am. J. Roentgenol. 2010, 194, W452–W454.

- Serrano, E.; Valcarcel Jose, J.; Paez-Carpio, A.; Matute-Gonzalez, M.; Werner, M.F.; Lopez-Rueda, A. Cone Beam computed tomography (CBCT) applications in image-guided minimally invasive procedures. Radiologia 2025, 67, 38–53.

- Tacher, V.; Radaelli, A.; Lin, M.; Geschwind, J.F. How I do it: Cone-beam CT during transarterial chemoembolization for liver cancer. Radiology 2015, 274, 320–334.

- Geis, J.R.; Brady, A.P.; Wu, C.C.; Spencer, J.; Ranschaert, E.; Jaremko, J.L.; Langer, S.G.; Borondy Kitts, A.; Birch, J.; Shields, W.F.; et al. Ethics of Artificial Intelligence in Radiology: Summary of the Joint European and North American Multisociety Statement. Radiology 2019, 293, 436–440.

- Chiaradia, M.; Izamis, M.L.; Radaelli, A.; Prevoo, W.; Maleux, G.; Schlachter, T.; Mayer, J.; Luciani, A.; Kobeiter, H.; Tacher, V. Sensitivity and Reproducibility of Automated Feeding Artery Detection Software during Transarterial Chemoembolization of Hepatocellular Carcinoma. J. Vasc. Interv. Radiol. 2018, 29, 425–431.

- Abdelsalam, H.; Emara, D.M.; Hassouna, E.M. The efficacy of TACE; how can automated feeder software help? Egypt. J. Radiol. Nucl. Med. 2022, 53, 43.

- Lanza, C.; Carriero, S.; Buijs, E.F.M.; Mortellaro, S.; Pizzi, C.; Sciacqua, L.V.; Biondetti, P.; Angileri, S.A.; Ianniello, A.A.; Ierardi, A.M.; et al. Robotics in Interventional Radiology: Review of Current and Future Applications. Technol. Cancer Res. Treat. 2023, 22, 15330338231152084.

- Kim, D.J.; Chul-Nam, I.; Park, S.E.; Kim, D.R.; Lee, J.S.; Kim, B.S.; Choi, G.M.; Kim, J.; Won, J.H. Added Value of Cone-Beam Computed Tomography for Detecting Hepatocellular Carcinomas and Feeding Arteries during Transcatheter Arterial Chemoembolization Focusing on Radiation Exposure. Medicina 2023, 59, 1121.

- Zeiler, S.R.; Wasserman, B.A. Vessel Wall Imaging: A Powerful Diagnostic Tool but Not a Substitute for Biopsies. AJNR Am. J. Neuroradiol. 2021, 42, E79.

- Braak, S.J.; van Strijen, M.J.; van Leersum, M.; van Es, H.W.; van Heesewijk, J.P. Real-Time 3D fluoroscopy guidance during needle interventions: Technique, accuracy, and feasibility. AJR Am. J. Roentgenol. 2010, 194, W445–W451.

- Shinde, P.; Jadhav, A.; Gupta, K.K.; Dhoble, S. Quantification of 6D Inter-Fraction Tumour Localisation Errors in Tongue and Prostate Cancer Using Daily kV-CBCT for 1000 IMRT and VMAT Treatment Fractions. Radiat. Prot. Dosim. 2022, 198, 1265–1281.

- Schernthaner, R.E.; Duran, R.; Chapiro, J.; Wang, Z.; Geschwind, J.F.; Lin, M. A new angiographic imaging platform reduces radiation exposure for patients with liver cancer treated with transarterial chemoembolization. Eur. Radiol. 2015, 25, 3255–3262.

- Floridi, C.; Radaelli, A.; Abi-Jaoudeh, N.; Grass, M.; Lin, M.; Chiaradia, M.; Giovagnoni, A.; Brunese, L.; Wood, B.; Carrafiello, G.; et al. C-arm cone-beam computed tomography in interventional oncology: Technical aspects and clinical applications. Radiol. Med. 2014, 119, 521–532.

- Abdel-Rehim, M.; Ronot, M.; Sibert, A.; Vilgrain, V. Assessment of liver ablation using cone beam computed tomography. World J. Gastroenterol. 2015, 21, 517–524.

- Busser, W.M.; Braak, S.J.; Futterer, J.J.; van Strijen, M.J.; Hoogeveen, Y.L.; de Lange, F.; Schultze Kool, L.J. Cone beam CT guidance provides superior accuracy for complex needle paths compared with CT guidance. Br. J. Radiol. 2013, 86, 20130310.

- Wallace, M.J.; Kuo, M.D.; Glaiberman, C.; Binkert, C.A.; Orth, R.C.; Soulez, G.; Technology Assessment Committee of the Society of Interventional Radiology. Three-dimensional C-arm cone-beam CT: Applications in the interventional suite. J. Vasc. Interv. Radiol. 2008, 19, 799–813.

- Finos, K.; Datta, S.; Sedrakyan, A.; Milsom, J.W.; Pua, B.B. Mixed reality in interventional radiology: A focus on first clinical use of XR90 augmented reality-based visualization and navigation platform. Expert Rev. Med. Devices 2024, 21, 679–688.

- Lang, M.; Ghandour, S.; Rikard, B.; Balasalle, E.K.; Rouhezamin, M.R.; Zhang, H.; Uppot, R.N. Medical Extended Reality for Radiology Education and Training. J. Am. Coll. Radiol. 2024, 21, 1583–1594.

- Briganti, F.; Tortora, M.; Loiudice, G.; Tarantino, M.; Guida, A.; Buono, G.; Marseglia, M.; Caranci, F.; Tortora, F. Utility of virtual stenting in treatment of cerebral aneurysms by flow diverter devices. Radiol. Med. 2023, 128, 480–491.

- Elsakka, A.; Park, B.J.; Marinelli, B.; Swinburne, N.C.; Schefflein, J. Virtual and Augmented Reality in Interventional Radiology: Current Applications, Challenges, and Future Directions. Tech. Vasc. Interv. Radiol. 2023, 26, 100919.

- Nielsen, C.A.; Lonn, L.; Konge, L.; Taudorf, M. Simulation-Based Virtual-Reality Patient-Specific Rehearsal Prior to Endovascular Procedures: A Systematic Review. Diagnostics 2020, 10, 500.

- Grange, L.; Grange, R.; Bertholon, S.; Morisson, S.; Martin, I.; Boutet, C.; Grange, S. Virtual reality for interventional radiology patients: A preliminary study. Support. Care Cancer 2024, 32, 416.

- Lake, K.; Mc Kittrick, A.; Desselle, M.; Padilha Lanari Bo, A.; Abayasiri, R.A.M.; Fleming, J.; Baghaei, N.; Kim, D.D. Cybersecurity and Privacy Issues in Extended Reality Health Care Applications: Scoping Review. JMIR XR Spat. Comput. 2024, 1, e59409.

- Rudschies, C.; Schneider, I. Ethical, legal, and social implications (ELSI) of virtual agents and virtual reality in healthcare. Soc. Sci. Med. 2024, 340, 116483.

- Zhou, S.; Gromala, D.; Wang, L. Ethical Challenges of Virtual Reality Technology Interventions for the Vulnerabilities of Patients With Chronic Pain: Exploration of Technician Responsibility. J. Med. Internet Res. 2023, 25, e49237.

- Chlorogiannis, D.D.; Charalampopoulos, G.; Bale, R.; Odisio, B.; Wood, B.J.; Filippiadis, D.K. Innovations in Image-Guided Procedures: Unraveling Robot-Assisted Non-Hepatic Percutaneous Ablation. Semin. Intervent. Radiol. 2024, 41, 113–120.

- Beaman, C.B.; Kaneko, N.; Meyers, P.M.; Tateshima, S. A Review of Robotic Interventional Neuroradiology. AJNR Am. J. Neuroradiol. 2021, 42, 808–814.

- Rueda, M.A.; Riga, C.T.; Hamady, M.S. Robotics in Interventional Radiology: Past, Present, and Future. Arab. J. Interv. Radiol. 2021, 2, 56–63.

- Levy, S.; Goldberg, S.N.; Roth, I.; Shochat, M.; Sosna, J.; Leichter, I.; Flacke, S. Clinical evaluation of a robotic system for precise CT-guided percutaneous procedures. Abdom. Radiol. 2021, 46, 5007–5016.

- Kettenbach, J.; Kronreif, G.; Figl, M.; Furst, M.; Birkfellner, W.; Hanel, R.; Bergmann, H. Robot-assisted biopsy using ultrasound guidance: Initial results from in vitro tests. Eur. Radiol. 2005, 15, 765–771.

- Berger, J.; Unger, M.; Landgraf, L.; Bieck, R.; Neumuth, T.; Melzer, A. Assessment of Natural User Interactions for Robot-Assisted Interventions. Curr. Dir. Biomed. Eng. 2018, 4, 165–168.

- Christou, A.S.; Amalou, A.; Lee, H.; Rivera, J.; Li, R.; Kassin, M.T.; Varble, N.; Tsz Ho Tse, Z.; Xu, S.; Wood, B.J. Image-Guided Robotics for Standardized and Automated Biopsy and Ablation. Semin. Intervent. Radiol. 2021, 38, 565–575.

- Zheng, W.; Wu, J.; Xia, W.; Zuo, R.; Chang, X.; Yin, H.; Li, C.; Zhang, C. Whole-Workflow Robotic-Assisted Percutaneous Endoscopic Lumbar Discectomy via a Two-Step Access Method: Technical Report and Preliminary Results. J. Pain Res. 2025, 18, 4361–4371.

- Rafii-Tari, H.; Payne, C.J.; Yang, G.Z. Current and emerging robot-assisted endovascular catheterization technologies: A review. Ann. Biomed. Eng. 2014, 42, 697–715.

- Mendes Pereira, V.; Cancelliere, N.M.; Nicholson, P.; Radovanovic, I.; Drake, K.E.; Sungur, J.M.; Krings, T.; Turk, A. First-in-human, robotic-assisted neuroendovascular intervention. J. Neurointerv. Surg. 2020, 12, 338–340.

- Alderliesten, T.; Konings, M.K.; Niessen, W.J. Modeling friction, intrinsic curvature, and rotation of guide wires for simulation of minimally invasive vascular interventions. IEEE Trans. Biomed. Eng. 2007, 54, 29–38.

- Allaqaband, S.; Solis, J.; Kazemi, S.; Bajwa, T.; American Heart Association; American College of Cardiology. Endovascular treatment of peripheral vascular disease. Curr. Probl. Cardiol. 2006, 31, 711–760.

- Rao, S. Robot-assisted transarterial chemoembolization for hepatocellular carcinoma: Initial evaluation of safety, feasibility, success and outcomes using the Magellan system. J. Vasc. Interv. Radiol. 2015, 26, S12.

- Gunduz, S.; Albadawi, H.; Oklu, R. Robotic Devices for Minimally Invasive Endovascular Interventions: A New Dawn for Interventional Radiology. Adv. Intell. Syst. 2020, 3, 2000181.

- Najafi, G.; Kreiser, K.; Abdelaziz, M.; Hamady, M.S. Current State of Robotics in Interventional Radiology. Cardiovasc. Intervent. Radiol. 2023, 46, 549–561.

- European Society of Radiology. Abdominal applications of ultrasound fusion imaging technique: Liver, kidney, and pancreas. Insights Imaging 2019, 10, 6.

- Biondetti, P.; Ierardi, A.M.; Casiraghi, E.; Caruso, A.; Grillo, P.; Carriero, S.; Lanza, C.; Angileri, S.A.; Sangiovanni, A.; Iavarone, M.; et al. Clinical Impact of a Protocol Involving Cone-Beam CT (CBCT), Fusion Imaging and Ablation Volume Prediction in Percutaneous Image-Guided Microwave Ablation in Patients with Hepatocellular Carcinoma Unsuitable for Standard Ultrasound (US) Guidance. J. Clin. Med. 2023, 12, 7598.

- Abi-Jaoudeh, N.; Kruecker, J.; Kadoury, S.; Kobeiter, H.; Venkatesan, A.M.; Levy, E.; Wood, B.J. Multimodality image fusion-guided procedures: Technique, accuracy, and applications. Cardiovasc. Intervent. Radiol. 2012, 35, 986–998.

- Tacher, V.; Kobeiter, H. State of the Art of Image Guidance in Interventional Radiology. J. Belg. Soc. Radiol. 2018, 102, 7.

- McNally, M.M.; Scali, S.T.; Feezor, R.J.; Neal, D.; Huber, T.S.; Beck, A.W. Three-dimensional fusion computed tomography decreases radiation exposure, procedure time, and contrast use during fenestrated endovascular aortic repair. J. Vasc. Surg. 2015, 61, 309–316.

- Zhong, B.Y.; Jia, Z.Z.; Zhang, W.; Liu, C.; Ying, S.H.; Yan, Z.P.; Ni, C.F. Application of Cone-beam Computed Tomography in Interventional Therapies for Liver Malignancy: A Consensus Statement by the Chinese College of Interventionalists. J. Clin. Transl. Hepatol. 2024, 12, 886–891.

- Angle, J.F. Cone-beam CT: Vascular applications. Tech. Vasc. Interv. Radiol. 2013, 16, 144–149.

- Seah, J.; Boeken, T.; Sapoval, M.; Goh, G.S. Prime Time for Artificial Intelligence in Interventional Radiology. Cardiovasc. Intervent. Radiol. 2022, 45, 283–289.

- Charalambous, S.; Klontzas, M.E.; Kontopodis, N.; Ioannou, C.V.; Perisinakis, K.; Maris, T.G.; Damilakis, J.; Karantanas, A.; Tsetis, D. Radiomics and machine learning to predict aggressive type 2 endoleaks after endovascular aneurysm repair: A proof of concept. Acta. Radiol. 2022, 63, 1293–1299.

- Daye, D.; Staziaki, P.V.; Furtado, V.F.; Tabari, A.; Fintelmann, F.J.; Frenk, N.E.; Shyn, P.; Tuncali, K.; Silverman, S.; Arellano, R.; et al. CT Texture Analysis and Machine Learning Improve Post-ablation Prognostication in Patients with Adrenal Metastases: A Proof of Concept. Cardiovasc. Intervent. Radiol. 2019, 42, 1771–1776.

- Sinha, I.; Aluthge, D.P.; Chen, E.S.; Sarkar, I.N.; Ahn, S.H. Machine Learning Offers Exciting Potential for Predicting Postprocedural Outcomes: A Framework for Developing Random Forest Models in IR. J. Vasc. Interv. Radiol. 2020, 31, 1018–1024.e4.

- Neri, E.; Aghakhanyan, G.; Zerunian, M.; Gandolfo, N.; Grassi, R.; Miele, V.; Giovagnoni, A.; Laghi, A.; SIRM Expert Group on Artificial Intelligence. Explainable AI in radiology: A white paper of the Italian Society of Medical and Interventional Radiology. Radiol. Med. 2023, 128, 755–764.

- van Timmeren, J.E.; Cester, D.; Tanadini-Lang, S.; Alkadhi, H.; Baessler, B. Radiomics in medical imaging-how-to guide and critical reflection. Insights Imaging 2020, 11, 91.

- Sheng, R.; Zheng, B.; Zhang, Y.; Sun, W.; Yang, C.; Zeng, M. A preliminary study of developing an MRI-based model for postoperative recurrence prediction and treatment direction of intrahepatic cholangiocarcinoma. Radiol. Med. 2024, 129, 1766–1777.

- Jiang, Y.; Zhou, K.; Sun, Z.; Wang, H.; Xie, J.; Zhang, T.; Sang, S.; Islam, M.T.; Wang, J.Y.; Chen, C.; et al. Non-invasive tumor microenvironment evaluation and treatment response prediction in gastric cancer using deep learning radiomics. Cell. Rep. Med. 2023, 4, 101146.

- Zhang, Z.; Luo, T.; Yan, M.; Shen, H.; Tao, K.; Zeng, J.; Yuan, J.; Fang, M.; Zheng, J.; Bermejo, I.; et al. Voxel-level radiomics and deep learning for predicting pathologic complete response in esophageal squamous cell carcinoma after neoadjuvant immunotherapy and chemotherapy. J. Immunother. Cancer 2025, 13, e011149.

- Granata, V.; Fusco, R.; De Muzio, F.; Brunese, M.C.; Setola, S.V.; Ottaiano, A.; Cardone, C.; Avallone, A.; Patrone, R.; Pradella, S.; et al. Radiomics and machine learning analysis by computed tomography and magnetic resonance imaging in colorectal liver metastases prognostic assessment. Radiol. Med. 2023, 128, 1310–1332.

- Wang, W.; Peng, Y.; Feng, X.; Zhao, Y.; Seeruttun, S.R.; Zhang, J.; Cheng, Z.; Li, Y.; Liu, Z.; Zhou, Z. Development and Validation of a Computed Tomography-Based Radiomics Signature to Predict Response to Neoadjuvant Chemotherapy for Locally Advanced Gastric Cancer. JAMA Netw. Open. 2021, 4, e2121143.

- Yang, M.; Liu, H.; Dai, Q.; Yao, L.; Zhang, S.; Wang, Z.; Li, J.; Duan, Q. Treatment Response Prediction Using Ultrasound-Based Pre-, Post-Early, and Delta Radiomics in Neoadjuvant Chemotherapy in Breast Cancer. Front. Oncol. 2022, 12, 748008.

- Zheng, C.; Gu, X.T.; Huang, X.L.; Wei, Y.C.; Chen, L.; Luo, N.B.; Lin, H.S.; Jin-Yuan, L. Nomogram based on clinical and preoperative CT features for predicting the early recurrence of combined hepatocellular-cholangiocarcinoma: A multicenter study. Radiol. Med. 2023, 128, 1460–1471.

- Qin, S.; Liu, K.; Chen, Y.; Zhou, Y.; Zhao, W.; Yan, R.; Xin, P.; Zhu, Y.; Wang, H.; Lang, N. Prediction of pathological response and lymph node metastasis after neoadjuvant therapy in rectal cancer through tumor and mesorectal MRI radiomic features. Sci. Rep. 2024, 14, 21927.

- Liu, C.; Zhao, W.; Xie, J.; Lin, H.; Hu, X.; Li, C.; Shang, Y.; Wang, Y.; Jiang, Y.; Ding, M.; et al. Development and validation of a radiomics-based nomogram for predicting a major pathological response to neoadjuvant immunochemotherapy for patients with potentially resectable non-small cell lung cancer. Front. Immunol. 2023, 14, 1115291.

- Buijs, E.; Maggioni, E.; Mazziotta, F.; Lega, F.; Carrafiello, G. Clinical impact of AI in radiology department management: A systematic review. Radiol. Med. 2024, 129, 1656–1666.

- Chehab, M.A.; Brinjikji, W.; Copelan, A.; Venkatesan, A.M. Navigational Tools for Interventional Radiology and Interventional Oncology Applications. Semin. Interv. Radiol. 2015, 32, 416–427. [Google Scholar] [CrossRef]

- Chehab, M.; Kouri, B.E.; Miller, M.J.; Venkatesan, A.M. Image Fusion Technology in Interventional Radiology. Tech. Vasc. Interv. Radiol. 2023, 26, 100915. [Google Scholar] [CrossRef]

- Boeken, T.; Pellerin, O.; Bourreau, C.; Palle, J.; Gallois, C.; Zaanan, A.; Taieb, J.; Lahlou, W.; Di Gaeta, A.; Al Ahmar, M.; et al. Clinical value of sequential circulating tumor DNA analysis using next-generation sequencing and epigenetic modifications for guiding thermal ablation for colorectal cancer metastases: A prospective study. Radiol. Med. 2024, 129, 1530–1542. [Google Scholar] [CrossRef] [PubMed]

- Khanna, N.N.; Maindarkar, M.A.; Viswanathan, V.; Fernandes, J.F.E.; Paul, S.; Bhagawati, M.; Ahluwalia, P.; Ruzsa, Z.; Sharma, A.; Kolluri, R.; et al. Economics of Artificial Intelligence in Healthcare: Diagnosis vs. Treatment. Healthcare 2022, 10, 2493. [Google Scholar] [CrossRef]

- Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A Systematic Review of the Barriers to the Implementation of Artificial Intelligence in Healthcare. Cureus 2023, 15, e46454. [Google Scholar] [CrossRef] [PubMed]

- Contaldo, M.T.; Pasceri, G.; Vignati, G.; Bracchi, L.; Triggiani, S.; Carrafiello, G. AI in Radiology: Navigating Medical Responsibility. Diagnostics 2024, 14, 1506. [Google Scholar] [CrossRef] [PubMed]

- Brady, A.P.; Neri, E. Artificial Intelligence in Radiology-Ethical Considerations. Diagnostics 2020, 10, 231. [Google Scholar] [CrossRef] [PubMed]

| Application Area | AI/Technology Used | Purpose | Key Outcomes/Findings |
|---|---|---|---|
| Radiomics | ML-based image analysis | Prediction and patient stratification | Improved diagnosis, prognosis, and therapy planning; validated biomarkers |
| VR | Immersive simulation | Skill development and training | Enhanced proficiency, reduced errors, global accessibility |
| 3D Modeling | 3D printing + AI integration | Pre-procedural planning, algorithm training | Realistic anatomical models, better predictive accuracy, hybrid learning environments |

| Category/Technology | Main Applications | AI Functions/Automation | Clinical Advantages | Main Limitations |
|---|---|---|---|---|
| IMAGING GUIDANCE SOFTWARE | | | | |
| Cone-Beam CT (CBCT) and Automated Feeder Detection (AFD) Systems | Endovascular procedures | Real-time 3D reconstruction, automated tumor feeder detection, vessel segmentation | Improved target-vessel recognition and treatment verification; enhanced embolization precision; reduced radiation dose and procedure time | Sensitive to image quality (motion, contrast); requires manual input for complex anatomy |
| XperGuide/XperCT (Philips) | Percutaneous procedures | Real-time needle trajectory optimization, ablation volume prediction | Higher needle-placement accuracy; fewer complications and needle repositionings | Not fully deep learning-based; high cost |
| Virtual Reality (VR)/Augmented Reality (AR) Systems | Intraoperative navigation, real-time guidance, training, remote collaboration | AI-enhanced spatial mapping, trajectory suggestion, complication prediction | Improved spatial orientation and depth perception; dynamic adaptation to anatomy; reduction of pain and anxiety | High cost, rendering latency, need for operator training, data privacy concerns |
| ROBOTICS | | | | |
| Percutaneous Robotic Systems (e.g., Maxio, iSYS, XACT, Mazor X) | Biopsy, ablation, infiltration, discectomy | Automated trajectory planning, robotic needle insertion, respiratory motion compensation | Sub-2 mm targeting accuracy; shorter procedure time; reduced operator radiation exposure | MRI compatibility limitations; lack of tactile feedback; high system cost |
| Endovascular Robotic Systems (e.g., Magellan, CorPath, Sensei X) | Embolization, revascularization, cardiac and aortic interventions | AI-assisted catheter navigation and path optimization | Enhanced catheter stability and precision; reduced operator radiation dose | Lack of haptic feedback; long setup time; limited flexibility in tortuous anatomy |
| IMAGING FUSION | | | | |
| Multimodal Fusion Imaging (CT/MR/PET + US/CBCT) | TACE, ablation, biopsies, vascular malformations | AI-supported registration and alignment of multimodal datasets; real-time anatomical matching | Improved lesion localization and targeting; reduced procedural time and radiation exposure | Accuracy depends on registration precision; requires training and expensive equipment |

| Type | Definition | Key Features/Functions | Devices Used |
|---|---|---|---|
| VR | Full immersion in a completely virtual environment. | Replaces the real world entirely with a simulated one; users can interact with virtual objects. | Headsets covering the entire field of vision, gloves, earphones. |
| MR | Hybrid approach combining real and virtual elements. | Real-time interaction between real and digital worlds; digital objects are integrated and anchored in the real environment. | Advanced headsets enabling seamless blending and interaction. |
| AR | Overlays digital content onto the real world. | Enhances but does not replace the physical environment; superimposes digital elements on real surroundings. | Smartphones, tablets, AR glasses. |

| Application Category | Author | Methodology | Imaging Modality | Key Findings |
|---|---|---|---|---|
| Radiomics | Mamone et al. [33] | Radiomics | Pre-procedural CT | Predicted survival, hepatic encephalopathy, and clinical response after TIPS creation |
| | Tabari et al. [34] | Radiomics + ML | Pre-ablation MRI | Predicted pathological response in HCC patients undergoing transplantation |
| | Li et al. [35] | Radiomics | MRI/CT | Demonstrated the role of radiomics in predicting microvascular invasion (MVI) in HCC |
| | He et al. [36] | Prognostic nomogram integrating radiomics | Pre-procedural CT (HCC after surgery) | Evaluated the survival benefit of adjuvant TACE in HCC patients |
| | Yang et al. [37] | Radiomics-based survival model | Pre-treatment contrast-enhanced MRI | Predicted prognosis of HCC patients undergoing continued TACE after TACE resistance |
| | Bernatz et al. [38] | Radiomics feature extraction + ML | Post-embolization CT | Identified HCC patients responding to repetitive TACE |
| | Zhang et al. [39] | Interpretable ML model | Contrast-enhanced CT | Predicted treatment response to initial cTACE in intermediate-stage HCC |
| | Wang et al. [40] | Radiogenomic approach (radiomics + transcriptomics) | Pre-treatment imaging | Transcriptomic biomarker correlated with radiomic features to predict TACE efficacy and immunotherapy outcomes |
| | Ma et al. [41] | Radiomics + DL | Preoperative CT | Predicted postoperative liver metastasis of pancreatic neuroendocrine tumors (panNETs) after R0 resection |
| | Mosconi et al. [13] | Radiomics + ML | Pre-treatment CT | Predicted responders to radioembolization in cholangiocarcinoma |
| | Avery et al. [26] | Radiomics Quality Score (RQS) | N/A | Provided a 16-component framework for evaluating radiomics research |
| | Ferrari et al. [15] | CLEAR checklist | N/A | Introduced structured criteria to ensure methodological rigor and reproducibility in radiomics studies |
| Virtual Reality | Waller et al. [2] | Review of AI applications | N/A | Overview of AI opportunities and challenges in diagnostic and interventional radiology |
| | Gelmini et al. [42] | Systematic review (VR in IR training) | VR-based simulation | Demonstrated improved skill acquisition, cost-effectiveness, and reduced morbidity/mortality risk |
| | Li et al. [43] | ML predictive models | CT/clinical data | Used ML to predict IVC filter complications; findings relevant to pre-procedural planning |
| | Tortora et al. [44] | Extended reality (AR/VR/MR) | Simulation and imaging | Reviewed applications of extended reality in radiology and discussed future perspectives |
| | Chaer et al. [46] | VR simulation training | Simulation (angiography, catheter skills) | Simulator training improved surgical residents’ performance compared with didactic instruction |
| | Knudsen et al. [47] | Hybrid VR simulator | Computer-based surgical simulation | Improved acquisition of percutaneous renal access skills compared with traditional training |
| 3D Modeling | Kaufmann et al. [48] | 3D vascular printing with AI integration | CT/MRI-derived 3D models | Patient-specific anatomical models improved pre-procedural planning and provided datasets for AI training |
| | Tenewitz et al. [49] | Systematic review of 3D modeling in IR training | 3D-printed vascular models | Demonstrated feasibility and educational value of 3D printing for IR trainees |

| Application Category | Subcategory | Author | Methodology | Imaging Modality | Clinical Application | Key Findings |
|---|---|---|---|---|---|---|
| CBCT and AFD | Imaging guidance/feeder detection | Abi-Jaoudeh et al. [97] | AFD (feeder detection) and vessel segmentation | CBCT, CT, US, Fluoroscopy | Embolization (TACE/TAE), vascular malformation | Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Schernthaner et al. [66] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Ablation/embolization (general) | Shortens procedures and reduces radiation. |
| | Imaging guidance/feeder detection | Wallace et al. [70] | AFD (feeder detection) and vessel segmentation | CT, MRI | Renal tumor | Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Chiaradia et al. [59] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Ablation/embolization (general) | Enhances feeder detection and embolization planning. |
| | Imaging guidance/feeder detection | Barral et al. [51] | AFD (feeder detection) and vessel segmentation | CT | Ablation/embolization (general) | Enhances feeder detection and embolization planning. |
| | Imaging guidance/feeder detection | Monfardini et al. [53] | AFD (feeder detection) and vessel segmentation | CBCT, CT, US | Ablation/embolization (general) | Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Shinde et al. [65] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Ablation/embolization (general) | Shortens procedures and reduces radiation. |
| | Imaging guidance/feeder detection | Lanza et al. [61] | AFD (feeder detection) and vessel segmentation | Fluoroscopy | Ablation/embolization (general) | Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Abdelsalam et al. [60] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Ablation/embolization (general) | Enhances feeder detection and embolization planning. |
| | Imaging guidance/feeder detection | Busser et al. [69] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Lung tumor | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Racadio et al. [52] | AFD (feeder detection) and vessel segmentation | CT | Ablation/embolization (general) | Enhances feeder detection and embolization planning. |
| | Imaging guidance/feeder detection | Braak et al. [64] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Embolization (TACE/TAE) | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Key et al. [54] | AFD (feeder detection) and vessel segmentation | CBCT, CT, US | Ablation/embolization (general) | Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Zeiler et al. [63] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Embolization (TACE/TAE) | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Kim et al. [62] | AFD (feeder detection) and vessel segmentation | Fluoroscopy | Embolization (TACE/TAE) | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Imaging guidance/feeder detection | Tacher et al. [98] | AFD (feeder detection) and vessel segmentation | CBCT, CT, US, Fluoroscopy | Embolization (TACE/TAE), vascular malformation | Improves targeting and procedural accuracy. |
| | Treatment verification | Tacher et al. [57] | AFD (feeder detection) and vessel segmentation | CBCT/Fluoroscopy | Ablation, embolization (TACE/TAE) | Enhances feeder detection and embolization planning. |
| | Treatment verification | Morimoto et al. [55] | AFD (feeder detection) and vessel segmentation | CBCT, CT | Ablation | Improves targeting and procedural accuracy. |
| Fusion Imaging | Clinical fusion applications | Angle et al. [101] | Automatic/semiautomatic registration for multimodal fusion | CT | Ablation, liver tumor | Improves targeting and procedural accuracy. |
| | Clinical fusion applications | Zhong et al. [100] | Automatic/semiautomatic registration for multimodal fusion | CT | Ablation, liver tumor | Improves targeting and procedural accuracy. |
| | Registration and alignment | European Society of Radiology [95] | Automatic/semiautomatic registration for multimodal fusion | CT, US, PET | Fusion-guided interventions | Improves lesion visualization and targeting. |
| Robotics | Endovascular applications | Beaman et al. [81] | Robotic actuation and image-guided navigation | CT, MRI, US, Fluoroscopy | Percutaneous and/or endovascular | Increases precision and standardization. |
| | Endovascular applications | Rueda et al. [82] | Robotic actuation and image-guided navigation | CT, MRI, US, Fluoroscopy | Percutaneous and/or endovascular | Increases precision and standardization. |
| | Percutaneous applications | Levy et al. [83] | Robotic actuation and image-guided navigation | CT, US | Percutaneous and/or endovascular | Improves targeting and procedural accuracy. Motion compensation enhances safety. |
| | Percutaneous applications | Kettenbach et al. [84] | Robotic actuation and image-guided navigation | CT/MRI/US/Fluoroscopy (varies) | Percutaneous and/or endovascular | Improves targeting and procedural accuracy. |
| | Percutaneous applications | Christou et al. [86] | Robotic actuation and image-guided navigation | CT, MRI, US | Biopsy, prostate | Improves targeting and procedural accuracy. |
| | Percutaneous applications | Chlorogiannis et al. [80] | Robotic actuation and image-guided navigation | CT/MRI/US/Fluoroscopy (varies) | Percutaneous and/or endovascular | Increases precision and standardization. |
| | Percutaneous applications | Lanza et al. [61] | Robotic actuation and image-guided navigation | CT/MRI/US/Fluoroscopy (varies) | Percutaneous and/or endovascular | Increases precision and standardization. |
| | Percutaneous applications | Berger et al. [85] | Robotic actuation and image-guided navigation | CT/MRI/US/Fluoroscopy (varies) | Percutaneous and/or endovascular | Improves targeting and procedural accuracy. |
| | Percutaneous applications | Zheng et al. [87] | Robotic actuation and image-guided navigation | CT | Percutaneous and/or endovascular | Increases precision and standardization. |
| | Percutaneous applications | Barral et al. [10] | Robotic actuation and image-guided navigation | CT, MRI, US | Biopsy, prostate | Improves targeting and procedural accuracy. |
| VR/AR | Navigation and guidance | Gunduz et al. [93] | AI-assisted VR/AR guidance and trajectory suggestion | CT, US | Navigation and guidance | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Navigation and guidance | Najafi et al. [94] | AI-assisted VR/AR guidance and trajectory suggestion | Fluoroscopy/US/CBCT (integrated) | Navigation and guidance | Improves workflow efficiency. |
| | Navigation and guidance | Rafii-Tari et al. [88] | AI-assisted VR/AR guidance and trajectory suggestion | Fluoroscopy/US/CBCT (integrated) | Navigation and guidance | Shortens procedures and reduces radiation. Improves targeting and procedural accuracy. |
| | Navigation and guidance | Allaqaband et al. [91] | AI-assisted VR/AR guidance and trajectory suggestion | CT, US | Navigation and guidance | Improves spatial orientation and safety. |
| | Navigation and guidance | Rao et al. [92] | AI-assisted VR/AR guidance and trajectory suggestion | CT | Embolization (TACE/TAE), peripheral revascularization | Improves spatial orientation and safety. |
| | Navigation and guidance | Alderliesten et al. [90] | AI-assisted VR/AR guidance and trajectory suggestion | CT, US | Navigation and guidance | Improves spatial orientation and safety. |
| | Navigation and guidance | Mendes Pereira et al. [89] | AI-assisted VR/AR guidance and trajectory suggestion | CT, US | Navigation and guidance | Improves spatial orientation and safety. |

| Application Category | Author | Methodology | Imaging Modality | Key Findings |
|---|---|---|---|---|
| Tumor-related | Abajian et al. [3] | Random Forest | MRI | Combines MRI signal, contrast enhancement, and clinical parameters to classify TACE response; promising predictive performance |
| | Moon et al. [24] | Quantitative analysis (4D flow) | 4D flow MRI | Quantitative flow data predict complete response after TACE in cirrhotic HCC patients |
| | Daye et al. [104] | ML integrating clinical and radiomic data | Pre-treatment CT | Predicts local tumor progression and survival after percutaneous thermal ablation of adrenal metastases with high accuracy |
| Vascular | Li et al. [43] | ML | Clinical and imaging data | Predicts IVC filter complications by integrating clinical, anatomical, and device-related variables; supports personalized follow-up |
| | Charalambous et al. [103] | SVM | Post-EVAR CT angiography | Detects aggressive type II endoleaks associated with aneurysmal sac expansion; supports personalized surveillance |
| Procedural outcomes | Sinha et al. [105] | ML | CT, procedural imaging | Predicts pneumothorax after CT-guided biopsy, in-hospital mortality after TIPS, and prolonged hospital stay after uterine artery embolization |
| General overview | Iezzi et al. [1] | Narrative review of AI approaches | Multiple imaging modalities | Summarizes AI applications in IR across pre-, intra-, and post-procedural settings; highlights opportunities and future challenges |
| | Seah et al. [102] | ML, DL | Post-procedural imaging | Enhances workflow, reduces inter-observer variability, improves the accuracy of post-procedural assessment, quantifies treatment response, and supports prognostic evaluation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lanza, C.; Angileri, S.A.; Carriero, S.; Triggiani, S.; Ascenti, V.; Mortellaro, S.R.; Ginolfi, M.; Leo, A.; Arnone, F.; Torcia, P.; et al. Artificial Intelligence Applications in Interventional Radiology. J. Pers. Med. 2025, 15, 569. https://doi.org/10.3390/jpm15120569



APA style: Lanza, C., Angileri, S. A., Carriero, S., Triggiani, S., Ascenti, V., Mortellaro, S. R., Ginolfi, M., Leo, A., Arnone, F., Torcia, P., Biondetti, P., Ierardi, A. M., & Carrafiello, G. (2025). Artificial Intelligence Applications in Interventional Radiology. Journal of Personalized Medicine, 15(12), 569. https://doi.org/10.3390/jpm15120569