Abstract

The removal of pineapple eyes is a crucial step in pineapple processing. However, the irregular spiral arrangement of the eyes makes both accurate positioning and automated removal by an end-effector difficult. To address this problem, a machine-vision-based pineapple eye removal device was designed, comprising a clamping mechanism, an eye removal end-effector, an XZ two-axis sliding table, a depth camera, and a control system. With the eye removal time and the rotational angular velocity each held constant in turn, the effect of the driving (prime mover) rod length on the contact force and gear torque during eye removal was simulated and analyzed in ADAMS (2020). Based on these simulations, the optimal driving rod length of the end-effector was determined to be 23.00 mm. Several YOLOv5 models were compared in terms of precision, recall, mean average precision (mAP), and detection time; YOLOv5s was chosen for real-time pineapple eye detection, and the position of each eye was determined through coordinate transformation. The control system then actuated the XZ two-axis sliding table to position the eye removal end-effector for removal. The results showed an average complete removal rate of 88.5%, an incomplete removal rate of 6.6%, a missed detection rate of 4.9%, and an average removal time of 156.7 s per pineapple. Compared with existing solutions, this study optimized the end-effector design for pineapple eye removal and combined machine vision with three-dimensional localization based on depth information from a depth camera, which improved removal accuracy and production efficiency.

1. Introduction

China is one of the world’s leading pineapple producers, with a planting area of approximately 1 million mu (about 66,700 ha) and an annual output of about 1.65 million tons [1]. Because fresh pineapple is perishable, market prices fluctuate significantly, and around 30% of the harvest needs to be processed to stabilize farmers’ incomes [2,3]. Eye removal is an essential step in processing: the eyes are rich in coarse fibers, hard in texture, and may contain pesticide residues or insect eggs. If not properly removed, they impair taste and the consumer experience and pose potential food safety hazards. Currently, eye removal is performed mainly by hand, which significantly limits the scalability and efficiency of the pineapple industry [4].

To address these challenges, researchers have studied mechanized pineapple eye removal and related technologies. Liu [5] designed a novel device featuring a V-shaped blade that excises eyes through a manual pulling mechanism. Kumar [6] improved traditional extraction tools by optimizing the ergonomics of the handle. Chen et al. [7] developed a semi-automatic device that combines manual cranking with automated components, removing eyes through human-powered rotation. Wen et al. [8] proposed an integrated machine for simultaneous peeling and eye removal, in which the pineapple was held by a clamping mechanism while rotating cutters performed the operation. In recent years, machine vision has been widely adopted in agricultural engineering, for example in pest detection and fruit processing [9,10,11,12], and several innovations have emerged in postharvest pineapple processing. Zhang et al. [13] developed an automated system that captured external images of the pineapple to detect the spiral trajectories of its eyes and then generated control commands to guide a cutting tool along these paths while the fruit rotated, excising the eyes sequentially. Qian et al. [14] designed a device that used multi-view image acquisition and 3D reconstruction to extract surface data and determined the cutting trajectory with path-planning algorithms. Nguyen et al. [15] developed a fully automatic machine that fixes the pineapple in place, detects eye positions with cameras, and removes the eyes precisely with rotary cutters. Liu et al. [16] proposed a system in which cameras identify the eye positions and a tool-closing cylinder drives a tweezer-like actuator to remove them.

Although traditional manual and semi-automatic approaches improve operational efficiency, their low level of automation and high labor intensity make it difficult for them to meet the demands of mass production. Machine-vision-based spiral trajectory cutting improves automation but often damages the surrounding flesh, causing excessive waste. Machine-vision-based removal of individual eyes reduces waste but faces challenges such as inconsistent removal performance, suboptimal end-effector design, low positioning accuracy, and inadequate real-time responsiveness.

To overcome these limitations, this study presents an integrated solution that combines the YOLOv5s object detection algorithm with a contour-adaptive eye removal gripper. Machine vision was employed to identify the fruit eyes and acquire their three-dimensional coordinates. An optimized end-effector driven by a dual-axis sliding table enabled precise and fast excision of each fruit eye.

2. Materials and Methods

2.1. Overall Design and Principle of Operation of the Device

2.1.1. Components of the Device

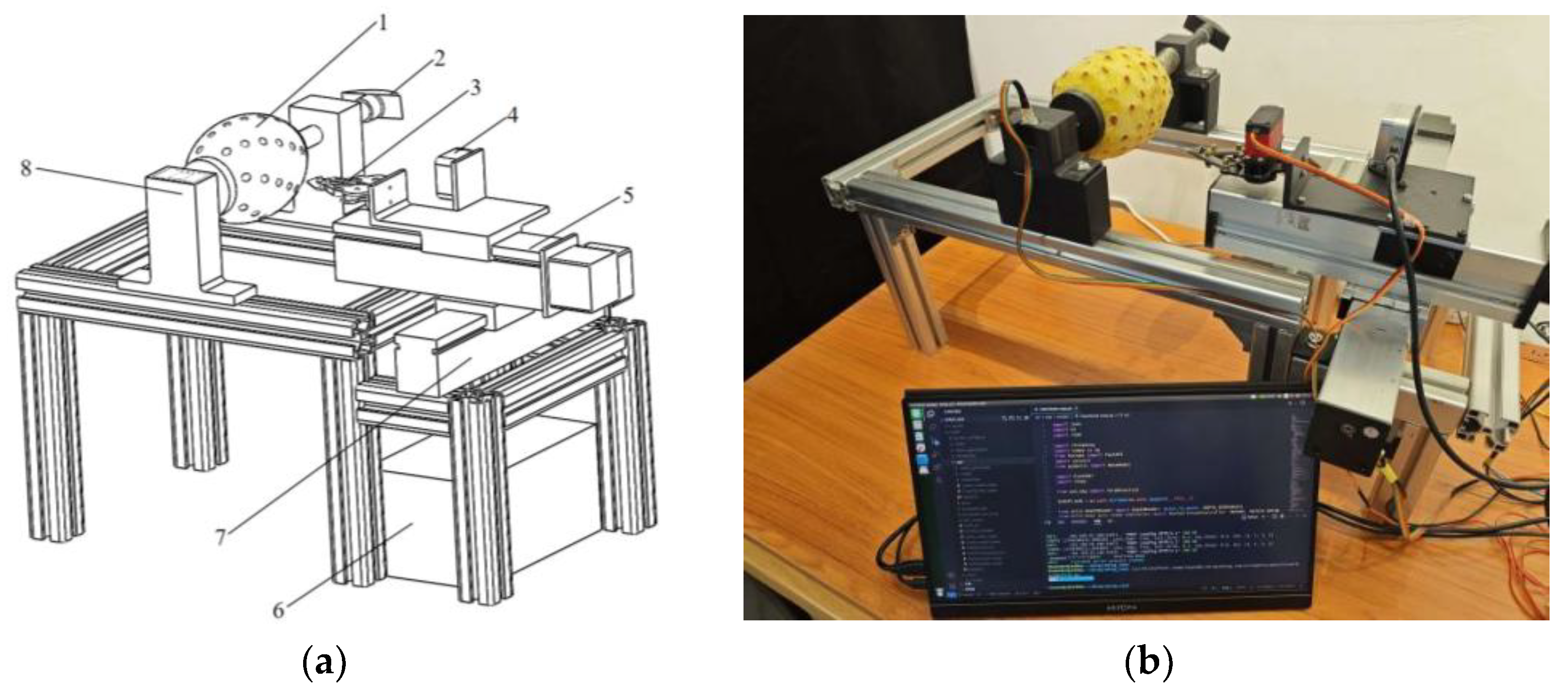

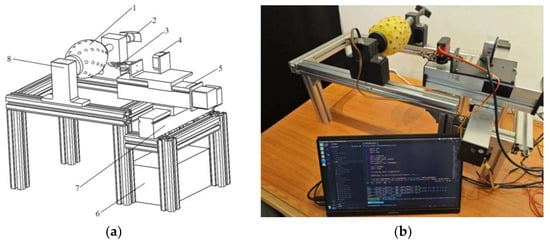

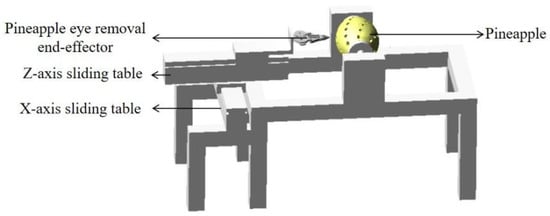

The pineapple eye removal machine consisted of a clamping mechanism, an eye removal end-effector, an XZ two-axis sliding table, a depth camera, and a control system; its structure is shown in Figure 1. The clamping mechanism secured and rotated the pineapple during removal. It comprised a stepper motor, a clamping column, pineapple clamping disc 1, pineapple clamping disc 2, fixed seat 1, fixed seat 2, and a spring. The stepper motor (Nema 17, 12 V, 4 A/phase, StepperOnline, New York, NY, USA) was mounted on the frame via fixed seat 1, and its spindle was connected to pineapple clamping disc 1, which carried six spikes to hold the pineapple. One end of the clamping column was fixed to the frame via fixed seat 2, and the other end carried pineapple clamping disc 2. A spring fitted on the clamping column allowed the mechanism to clamp pineapples with diameters from 90 mm to 150 mm. An integrated RGB and depth camera (D405, Intel RealSense, Santa Clara, CA, USA) with a resolution of 640 × 480 pixels was used for eye detection and localization, and a microcomputer (Jetson Nano, NVIDIA, Santa Clara, CA, USA) served as the controller.

Figure 1.

Pineapple automatic eye removal device. (a) Schematic diagram of the 3D model; (b) Prototype photograph. 1: Pineapple; 2: Clamping column; 3: Pineapple eye removal end-effector; 4: Depth camera; 5: Z-axis sliding table; 6: Control box; 7: X-axis sliding table; 8: Stepper motor.

2.1.2. Principle of Operation

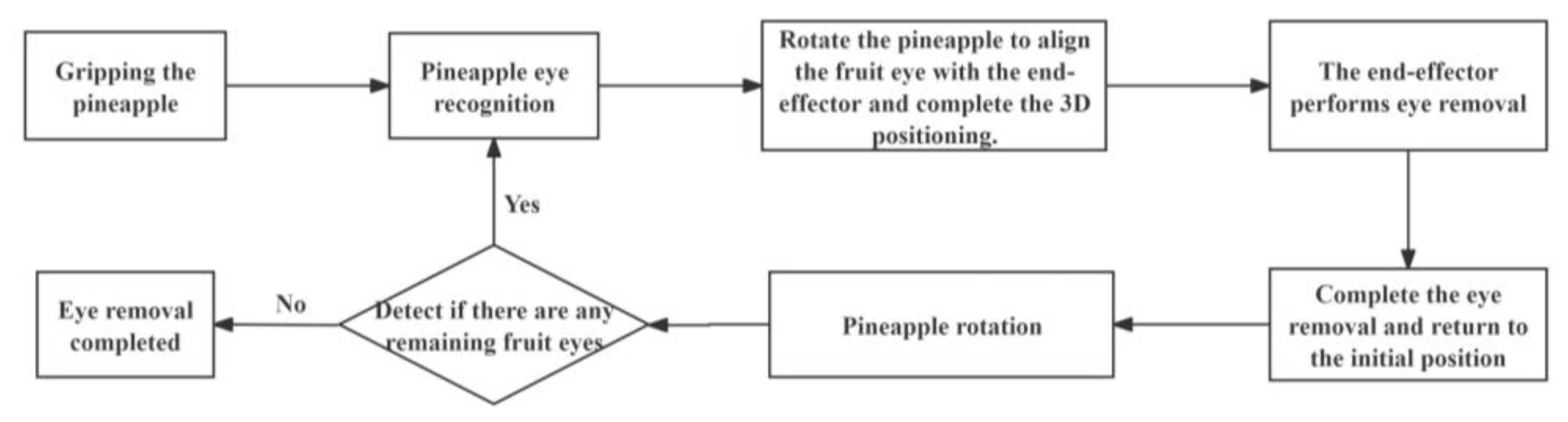

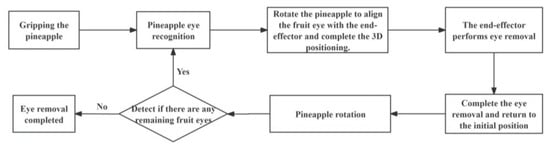

The peeled pineapple was manually placed in the clamping mechanism, where the spring force drove the spikes of the clamping discs into both ends of the fruit, securing it in place. Pineapple eyes were detected in the depth camera images, and their coordinates were converted into world coordinates via a coordinate transformation. Based on these coordinates, the X-axis sliding table moved the end-effector into horizontal alignment with an eye, and the Z-axis sliding table advanced the end-effector toward the eye to pierce it. Once piercing was complete, the end-effector closed to grip the eye. The Z-axis sliding table then retracted, pulling the end-effector out of the pineapple, and the end-effector opened to release the eye, completing the removal of one eye. This process was repeated for each identified eye. When no eye was detected within the preset threshold range, the stepper motor rotated the clamping mechanism by 15°, and identification and positioning were repeated. The process ended when the pineapple had completed a full 360° rotation, by which point all eyes had been removed. The complete workflow is illustrated in Figure 2.
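The removal cycle described above amounts to a detect-remove-rotate loop. The following Python sketch illustrates that control flow only; `detect_eyes`, `remove_eye`, and `rotate` are hypothetical stand-ins for the vision module, the end-effector sequence, and the stepper motor. Only the 15° increment and the 360° stopping condition come from the text.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical eye representation: (x, y, z) world coordinates in mm.
Eye = Tuple[float, float, float]

ROTATION_STEP_DEG = 15   # rotation increment stated in the text
FULL_TURN_DEG = 360      # the process ends after one full revolution


def remove_all_eyes(detect_eyes: Callable[[int], List[Eye]],
                    remove_eye: Callable[[Eye], None],
                    rotate: Callable[[int], None]) -> int:
    """Run the detect-remove-rotate loop for one pineapple; return the number of eyes removed."""
    removed = 0
    angle = 0
    while angle < FULL_TURN_DEG:
        eyes = detect_eyes(angle)  # eyes inside the preset threshold range at this angle
        if eyes:
            for eye in eyes:
                # X-axis align -> Z-axis pierce -> close gripper -> retract -> open gripper
                remove_eye(eye)
                removed += 1
            continue  # re-detect at the same angle before rotating
        rotate(ROTATION_STEP_DEG)  # nothing left in range: turn the fruit by 15 degrees
        angle += ROTATION_STEP_DEG
    return removed


# Demo with a simulated fruit: eyes visible at 0 and 45 degrees (made-up positions).
remaining: Dict[int, List[Eye]] = {0: [(10.0, 0.0, 5.0)],
                                   45: [(12.0, 0.0, 6.0), (20.0, 0.0, 6.5)]}


def fake_detect(angle: int) -> List[Eye]:
    return list(remaining.get(angle, []))


def fake_remove(eye: Eye) -> None:
    for eyes in remaining.values():
        if eye in eyes:
            eyes.remove(eye)
            return


rotations: List[int] = []
count = remove_all_eyes(fake_detect, fake_remove, rotations.append)
print(count, len(rotations))  # 3 24
```

Re-detecting at the same angle after each batch mirrors the "repeat until nothing is in range, then rotate" logic; a real controller would also cap retries, since an incompletely removed eye could otherwise be detected indefinitely.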

Figure 2.

Eye removal flow chart.

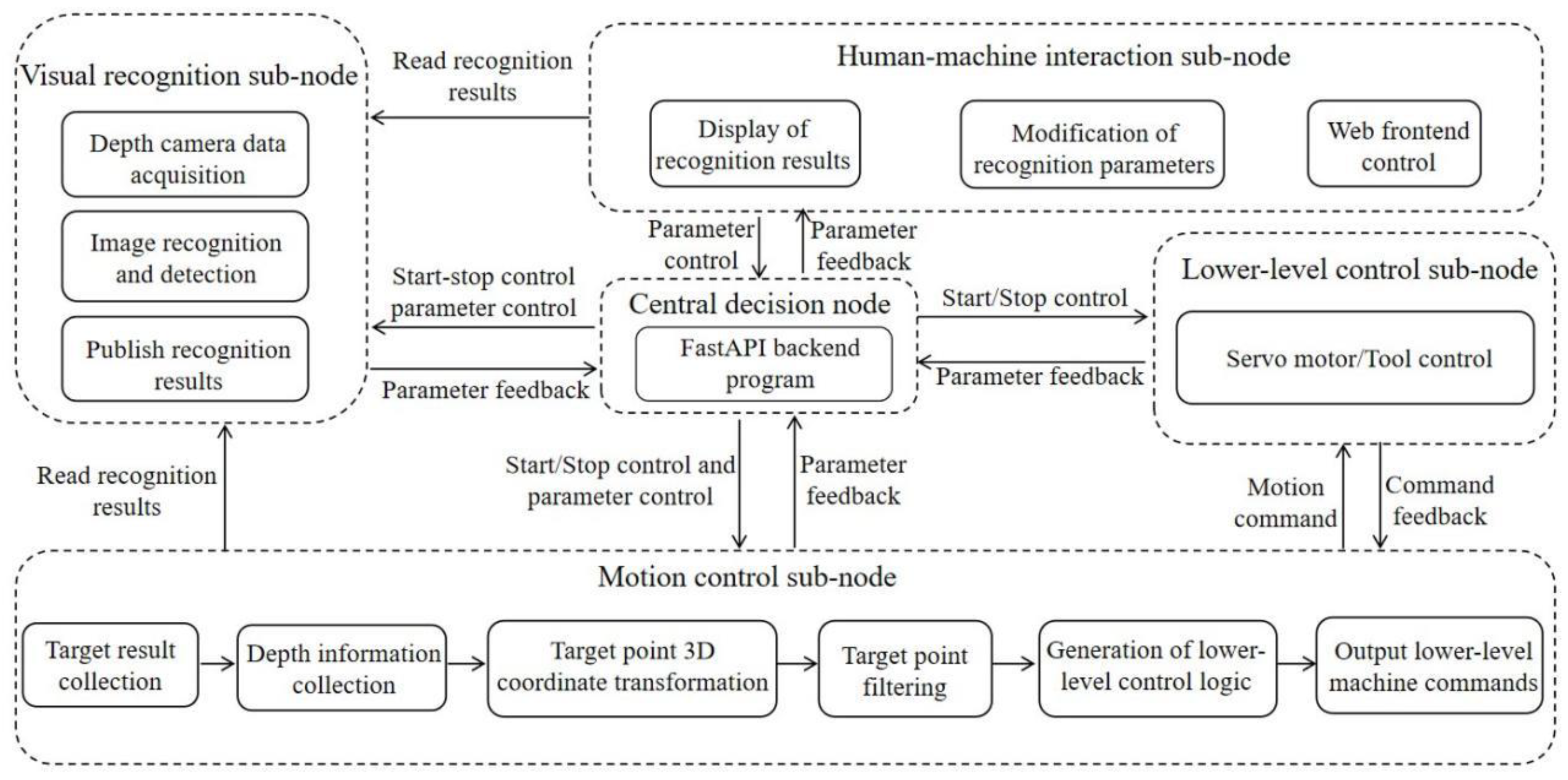

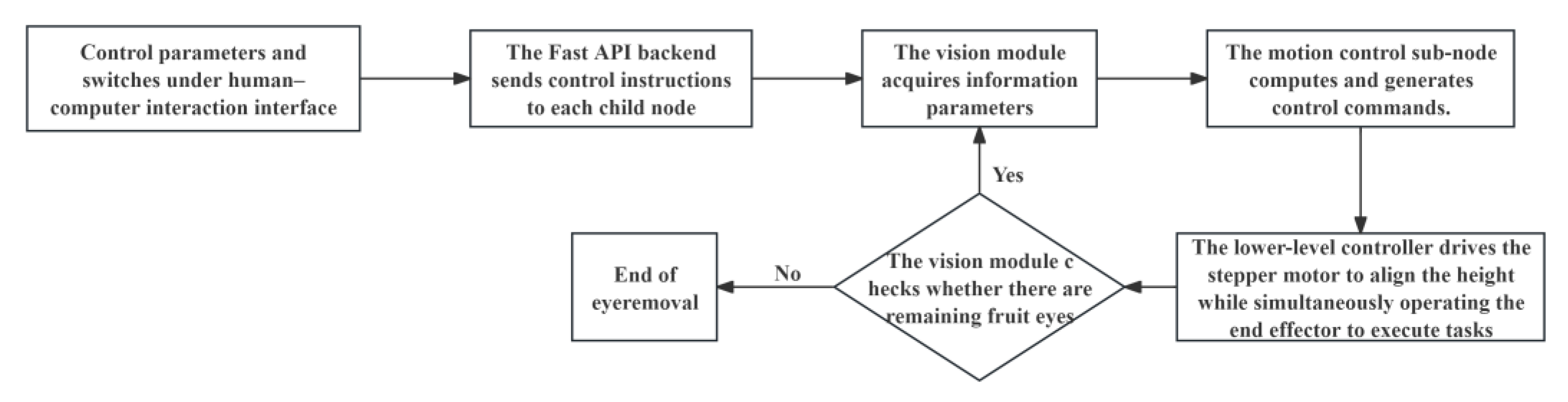

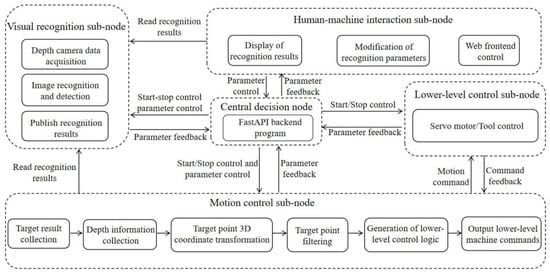

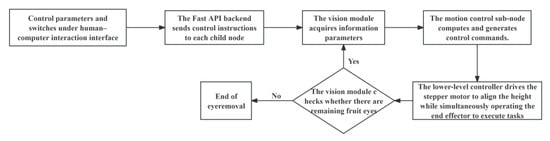

2.2. Control System

The control system consisted of two main components: the host computer software and the motion control system. The host software’s main functional areas included a real-time camera display module and a YOLO parameter configuration panel for adjusting parameters such as the region of interest and the confidence threshold. The control microcomputer managed the camera, the sliding tables, the stepper motor that rotated the pineapple, and the servo motor that drove the end-effector. The structure of the control system is shown in Figure 3, and its flow chart in Figure 4. After the control parameters had been configured, the system was started from the human–machine interface. On receiving control instructions, the FastAPI backend forwarded them to the corresponding functional sub-nodes. The vision module then acquired images according to these instructions and extracted the fruit eye recognition parameters, and the system determined whether the vision module had detected any eyes requiring processing. If eyes were identified, the motion control sub-node computed the trajectory from the recognized coordinates and generated control commands, which were transmitted to the lower-level control module to actuate the stepper motor and end-effector and perform the removal. If no eyes were detected on the visible side, the current pineapple was considered fully processed, and the eye removal task ended.
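The routing of instructions from the backend to the vision and motion sub-nodes can be sketched as a small dispatcher. In the device this role is played by a FastAPI backend; the sketch below replaces the HTTP layer with an in-process registry so that it runs without dependencies. The `Command` fields, the sub-node names, and the handler return values are hypothetical illustrations of the flow in Figure 4, not the authors' interface.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class Command:
    target: str                      # hypothetical sub-node name, e.g. "vision" or "motion"
    action: str                      # hypothetical action name
    payload: Dict[str, Any] = field(default_factory=dict)


class Dispatcher:
    """Forward commands to registered sub-node handlers (stand-in for the FastAPI backend)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[Command], Dict[str, Any]]] = {}

    def register(self, target: str, handler: Callable[[Command], Dict[str, Any]]) -> None:
        self._handlers[target] = handler

    def dispatch(self, cmd: Command) -> Dict[str, Any]:
        handler = self._handlers.get(cmd.target)
        if handler is None:
            raise KeyError(f"no sub-node registered for {cmd.target!r}")
        return handler(cmd)


def control_cycle(dispatcher: Dispatcher) -> Dict[str, Any]:
    """One pass of the flow chart: detect, then either plan motion or finish."""
    detection = dispatcher.dispatch(Command("vision", "detect"))
    eyes = detection.get("eyes", [])
    if not eyes:
        return {"status": "complete"}  # no eyes on the visible side
    return dispatcher.dispatch(Command("motion", "remove", {"eyes": eyes}))


def vision_stub(cmd: Command) -> Dict[str, Any]:
    return {"eyes": [(12.0, 0.0, 6.0)]}  # made-up detection


def motion_stub(cmd: Command) -> Dict[str, Any]:
    # A real motion node would compute trajectories and send them to the lower-level controller.
    return {"status": "removing", "moves": len(cmd.payload["eyes"])}


d = Dispatcher()
d.register("vision", vision_stub)
d.register("motion", motion_stub)
print(control_cycle(d))        # {'status': 'removing', 'moves': 1}

d_empty = Dispatcher()
d_empty.register("vision", lambda cmd: {"eyes": []})
print(control_cycle(d_empty))  # {'status': 'complete'}
```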

Figure 3.

Control system structure.

Figure 4.

Control system flow chart.

2.3. Overall Design and Simulation Optimization of the Eye Removal End-Effector

2.3.1. The Design of the End-Effector

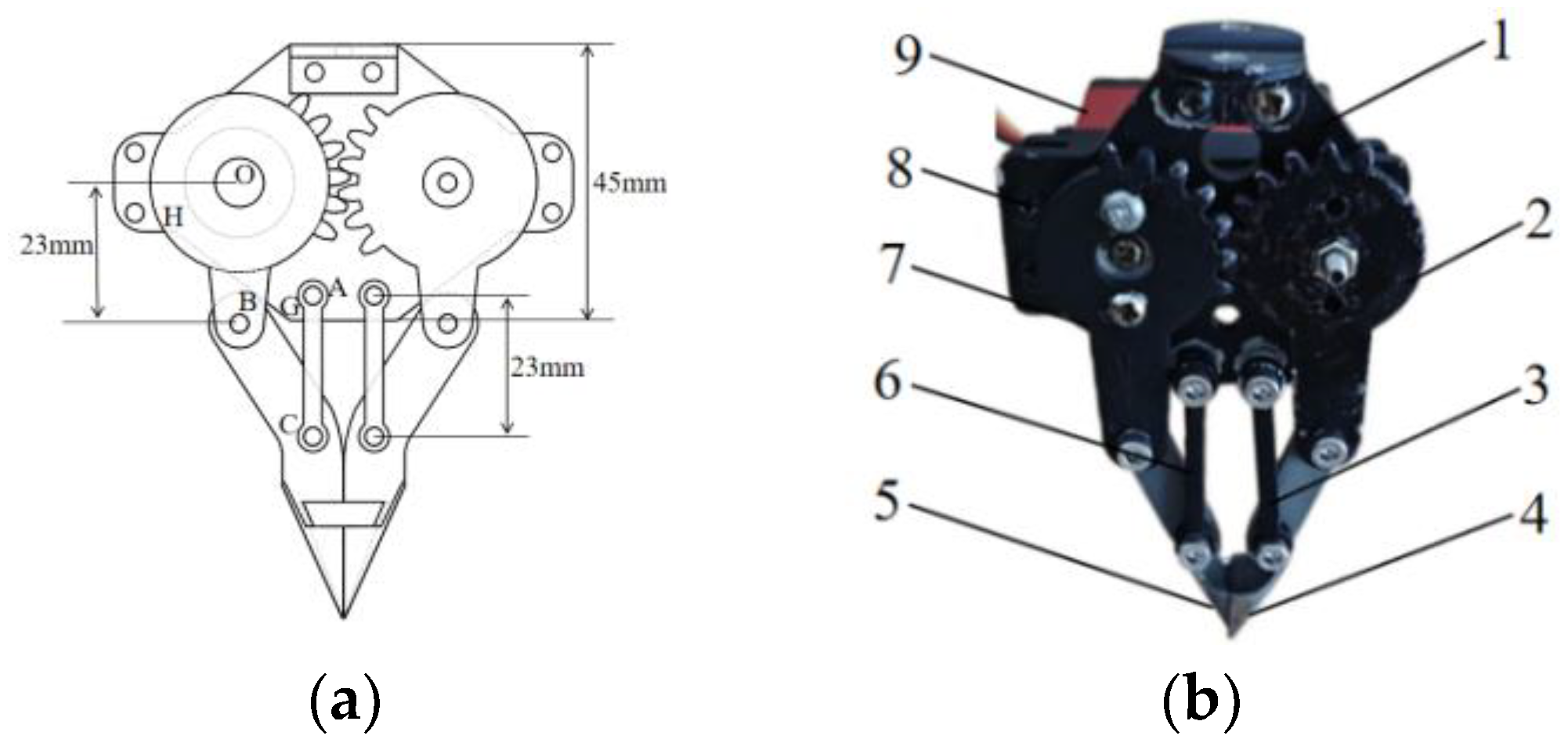

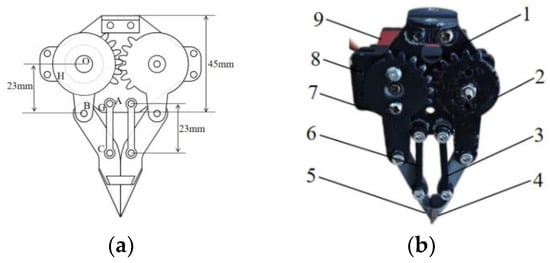

To improve the removal of pineapple eyes, the eye removal end-effector shown in Figure 5 was designed. Its claws were shaped to match the profile of a pineapple eye. To keep the motion of the eye removal gripper consistent, a limit element was placed between the gripper and the gear mechanism to constrain its trajectory. A TBSN-K20 servo motor (Xingbei Technology, Taizhou, China; 20 kg·cm, 5 V) actuated the eye removal gripper. The end-effector was mounted on the Z-axis sliding table, which was driven by a lead screw 12 mm in diameter with a 4 mm lead to pierce the pineapple. The X-axis sliding table, driven by a screw 16 mm in diameter with a 10 mm lead, moved the end-effector horizontally.
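The screw leads above determine how far each table moves per motor revolution. The Python sketch below converts a desired carriage travel into motor steps and reports the travel per step. It assumes, as the text does not say, that each table is driven by a 200-step/rev stepper with 16× microstepping. It also converts the servo's 20 kg·cm rating (kilogram-force centimetres) to N·m, which is convenient when comparing it with simulated gear torques.

```python
STEPS_PER_REV = 200   # assumption: 1.8-degree stepper motor
MICROSTEPS = 16       # assumption: driver microstepping setting
X_LEAD_MM = 10.0      # X-axis screw lead (from the text)
Z_LEAD_MM = 4.0       # Z-axis screw lead (from the text)
KGF_CM_TO_NM = 9.80665 / 100.0  # 1 kgf·cm = 0.0980665 N·m


def steps_for_travel(distance_mm: float, lead_mm: float,
                     steps_per_rev: int = STEPS_PER_REV,
                     microsteps: int = MICROSTEPS) -> int:
    """Motor (micro)steps needed to move the carriage by distance_mm."""
    revolutions = distance_mm / lead_mm  # one screw revolution advances the nut by one lead
    return round(revolutions * steps_per_rev * microsteps)


def resolution_mm(lead_mm: float, steps_per_rev: int = STEPS_PER_REV,
                  microsteps: int = MICROSTEPS) -> float:
    """Linear travel per (micro)step."""
    return lead_mm / (steps_per_rev * microsteps)


def kgf_cm_to_nm(torque_kgf_cm: float) -> float:
    return torque_kgf_cm * KGF_CM_TO_NM


print(steps_for_travel(50.0, X_LEAD_MM))                    # 16000
print(steps_for_travel(20.0, Z_LEAD_MM))                    # 16000
print(resolution_mm(X_LEAD_MM), resolution_mm(Z_LEAD_MM))   # 0.003125 0.00125
print(round(kgf_cm_to_nm(20.0), 3))                         # 1.961
```

The finer 4 mm lead on the Z axis gives 2.5 times the linear resolution (and force multiplication) of the X axis for the same motor, which suits the piercing stroke.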

Figure 5.

Pineapple eye removal end-effector. (a) Schematic diagram of the 3D model; (b) Prototype photograph. 1: Fixed buckle; 2: First gear mechanism; 3: First limit element; 4: First claw; 5: Second claw; 6: Second limit element; 7: Second gear mechanism; 8: Base plate; 9: Servo motor.

The designed end-effector was symmetrical about its central plane, so the structure on one side of the centerline was selected for analysis [17]. Points O and A were fixed joints linking the gear mechanism and the limit element, respectively, to the base plate. Points B and C were revolute joints linking the gear mechanism and the limit element to the eye removal gripper. HG denotes the lower bevel of the base plate. As shown in Figure 5, when the length of the OB rod was reduced below a certain value, the BC rod interfered with the lower bevel HG of the base plate, which imposed a minimum length on the OB rod. The width of the rod was modeled as a circle with a radius of 5 mm centered at point B; when this circle was tangent to bevel HG, the OB rod reached its minimum admissible length. A rectangular coordinate system was established with point O as the origin. With the coordinates of point H denoted (x1, y1) and those of point G denoted (x2, y2), the equation of line lGH was

$$\frac{y - y_1}{y_2 - y_1} = \frac{x - x_1}{x_2 - x_1}$$

or, in general form,

$$(y_2 - y_1)\,x - (x_2 - x_1)\,y + (x_2 - x_1)\,y_1 - (y_2 - y_1)\,x_1 = 0$$

Point B lay on the y-axis with coordinates (0, y). By the formula for the distance from a point to a line, the circle of radius r = 5 mm centered at B was tangent to HG when

$$\frac{\left|-(x_2 - x_1)\,y + (x_2 - x_1)\,y_1 - (y_2 - y_1)\,x_1\right|}{\sqrt{(y_2 - y_1)^2 + (x_2 - x_1)^2}} = r$$

Substituting the coordinates of point H (−10.5, −8.0) and point G (8.0, −22.5) yielded

$$\frac{\left|18.5\,y + 300.25\right|}{\sqrt{14.5^2 + 18.5^2}} = 5$$

whose roots are y ≈ −9.88 mm and y ≈ −22.58 mm; the latter corresponds to the limiting position of B described above. Therefore, the OB rod had to be at least 22.58 mm long.
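The tangency condition can be checked numerically. The Python sketch below writes line HG in general form, solves the point-to-line distance equation for B = (0, y) with r = 5 mm, and reports both roots. It takes H = (−10.5, −8.0): with that sign the larger-magnitude root reproduces the 22.58 mm bound, whereas H = (−10.5, 8.0) gives roots of about 0.33 mm and −18.95 mm, so the negative y-coordinate is treated here as an assumption consistent with the reported result.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def line_coefficients(h: Point, g: Point) -> Tuple[float, float, float]:
    """Coefficients (a, b, c) of the line a*x + b*y + c = 0 through H and G."""
    (x1, y1), (x2, y2) = h, g
    a = y2 - y1
    b = -(x2 - x1)
    c = (x2 - x1) * y1 - (y2 - y1) * x1
    return a, b, c


def tangent_positions(h: Point, g: Point, radius: float) -> List[float]:
    """y values for which a circle of the given radius centred at B = (0, y) touches line HG."""
    a, b, c = line_coefficients(h, g)
    norm = math.hypot(a, b)
    # Distance condition |b*y + c| / norm = radius  =>  b*y + c = +/- radius*norm
    return sorted((s * radius * norm - c) / b for s in (1.0, -1.0))


H = (-10.5, -8.0)   # assumption: negative y-coordinate for H (see lead-in)
G = (8.0, -22.5)
roots = tangent_positions(H, G, radius=5.0)
ob_min = max(abs(y) for y in roots)  # root matching the reported bound
print([round(y, 2) for y in roots], round(ob_min, 2))  # [-22.58, -9.88] 22.58
```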

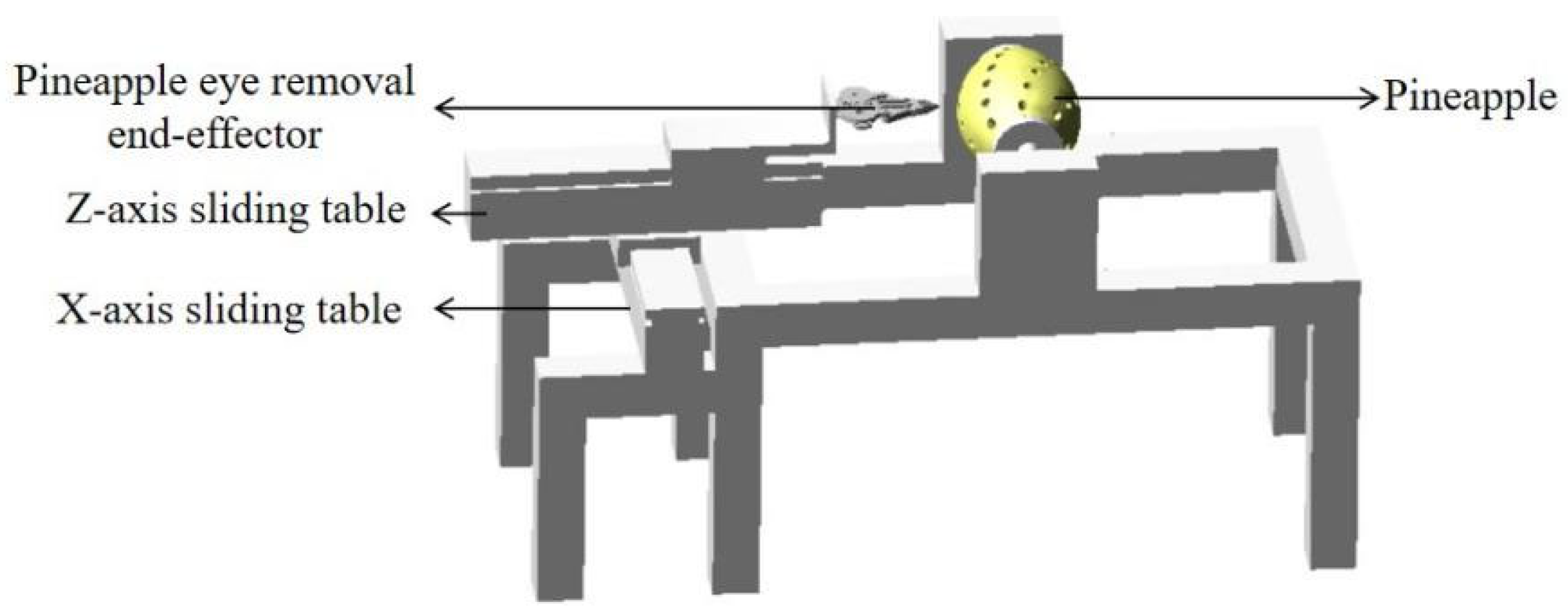

2.3.2. Simulation Optimization of Rod Length

A three-dimensional model of the pineapple eye removal device was built in SolidWorks (2018), simplified [18], and imported into ADAMS for further analysis [19,20]. In ADAMS, the dynamic parameters of the model were defined by assigning materials, constraints, external forces, and other properties to the components as required. The eye removal action was produced by driving the slide and the gear mechanism with STEP functions. The virtual prototype was made primarily of steel, while the material properties of the pineapple were taken from the compression tests of Xue et al. at the Chinese Academy of Tropical Agricultural Sciences [21]. Kinematic simulation and analysis of the prototype were carried out after adding appropriate driving functions [22,23,24,25]. The material properties are listed in Table 1, and the 3D model is shown in Figure 6.
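ADAMS's STEP(x, x0, h0, x1, h1) blends from h0 to h1 with the cubic h0 + (h1 − h0)Δ²(3 − 2Δ), where Δ = (x − x0)/(x1 − x0), so the driven quantity starts and ends with zero velocity. The Python function below re-implements that formula for illustration only; the 10 mm travel over 0.75 s in the demo is a placeholder, not the authors' actual drive definition.

```python
def adams_step(x: float, x0: float, h0: float, x1: float, h1: float) -> float:
    """Cubic step: h0 for x <= x0, h1 for x >= x1, smooth cubic blend in between."""
    if x <= x0:
        return h0
    if x >= x1:
        return h1
    delta = (x - x0) / (x1 - x0)
    return h0 + (h1 - h0) * delta ** 2 * (3.0 - 2.0 * delta)


# Hypothetical drive: advance a slide by 10 mm between t = 0 s and t = 0.75 s.
for t in (0.0, 0.1875, 0.375, 0.5625, 0.75, 1.0):
    print(f"t = {t:.4f} s  ->  {adams_step(t, 0.0, 0.0, 0.75, 10.0):.4f} mm")
```

Smooth ramps of this kind avoid the infinite accelerations, and hence the spurious force spikes, that a discontinuous drive would introduce into contact-force and torque results.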

Table 1.

Material property parameters.

Figure 6.

3D model built in ADAMS.

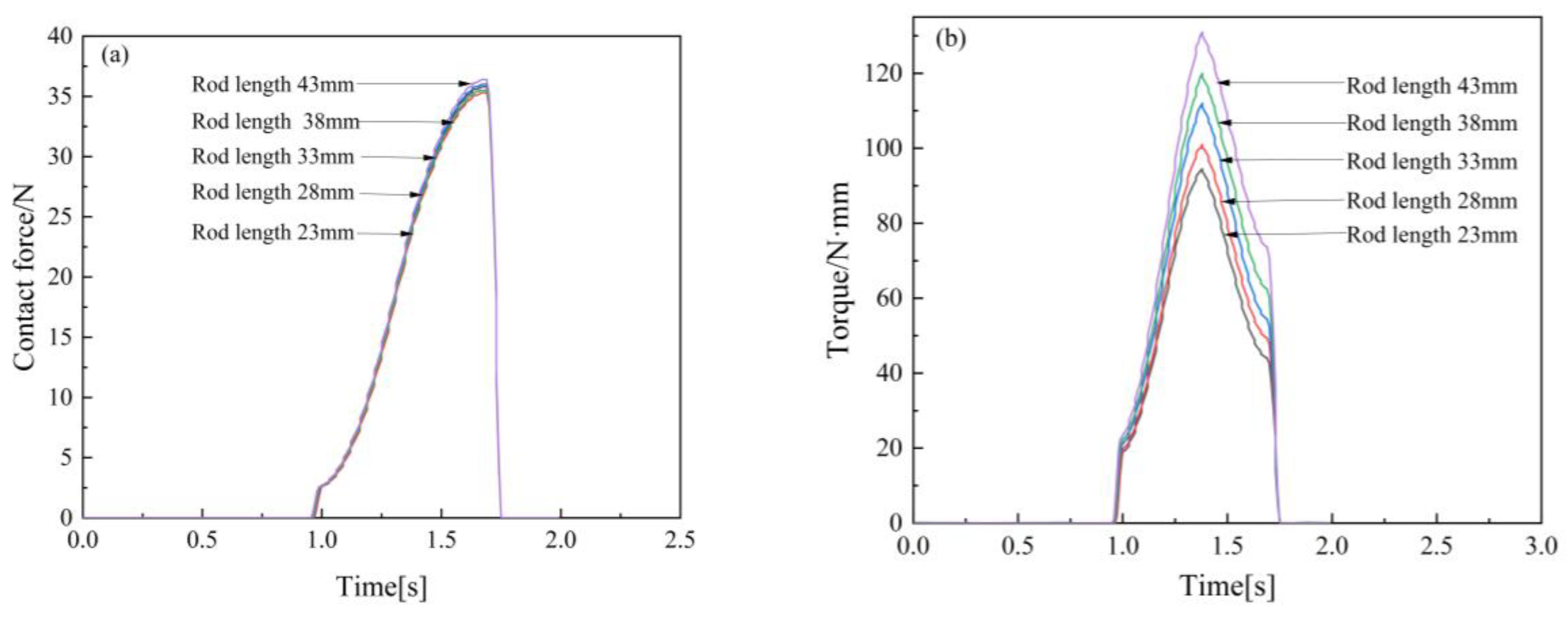

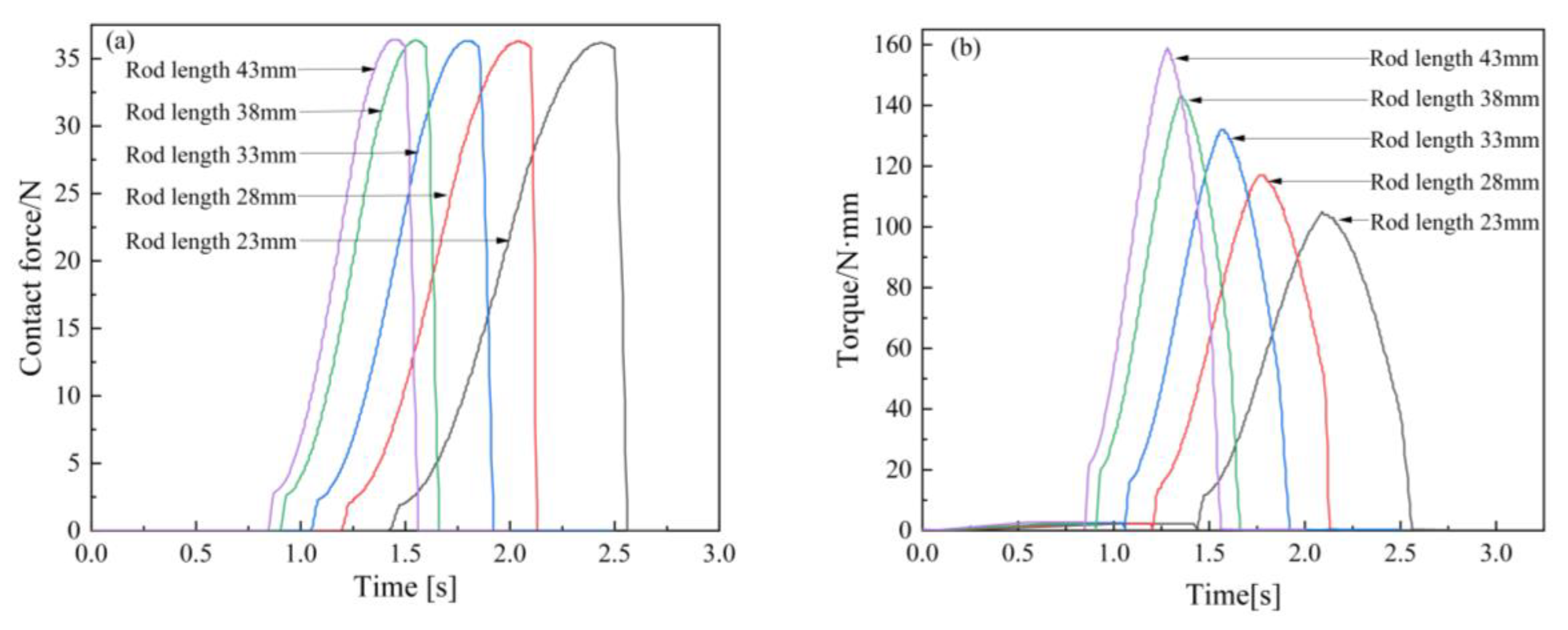

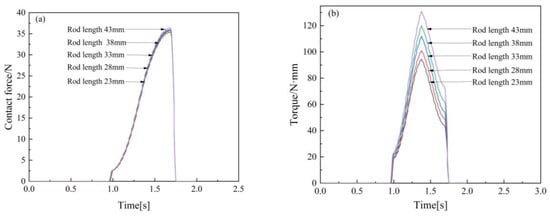

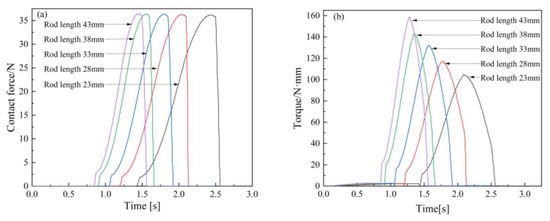

To determine the optimal length of the OB rod, a dynamic simulation following the actual path of the eye removal process was performed in ADAMS (2020). The total simulation time was set to 3 s with 2000 steps to ensure accurate results. Two series of simulations were run, each with one variable held constant: in the first, the eye removal time was fixed at 0.75 s; in the second, the rotational angular velocity was fixed at 12°/s. In both series, removal of a 10 mm pineapple eye was simulated for different OB rod lengths, and the influence of the driving (prime mover) rod length on the contact force and gear torque during eye removal was analyzed to obtain the optimal OB rod length. The minimum OB rod length derived above, 22.58 mm, was rounded up to 23 mm, and starting from this value the rod length was increased in 5 mm increments up to 43 mm. The simulation results are shown in Figure 7 and Figure 8.
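The simulation plan is small enough to write down explicitly. The sketch below enumerates the two series (fixed removal time, Figure 7; fixed angular velocity, Figure 8) over the rod lengths and computes the output interval implied by 3 s and 2000 steps. The dictionary layout is an illustrative assumption, not an ADAMS input format.

```python
import math

MIN_OB_MM = 22.58          # geometric lower bound on the OB rod length
TOTAL_TIME_S = 3.0         # simulation end time
N_STEPS = 2000             # number of output steps
FIXED_REMOVAL_TIME_S = 0.75
FIXED_OMEGA_DEG_S = 12.0

start = math.ceil(MIN_OB_MM)                 # 23 mm
rod_lengths = list(range(start, 43 + 1, 5))  # 23, 28, 33, 38, 43 mm
step_size_s = TOTAL_TIME_S / N_STEPS         # interval between output steps

cases = (
    [{"rod_mm": length, "series": "fixed_time", "removal_time_s": FIXED_REMOVAL_TIME_S}
     for length in rod_lengths]
    + [{"rod_mm": length, "series": "fixed_omega", "omega_deg_s": FIXED_OMEGA_DEG_S}
       for length in rod_lengths]
)

print(rod_lengths, step_size_s, len(cases))  # [23, 28, 33, 38, 43] 0.0015 10
```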

Figure 7.

Constant eye removal time (0.75 s). (a) Effect of rod length on contact force; (b) Effect of rod length on torque.

Figure 8.

Constant rotational angular velocity (12°/s). (a) Effect of rod length on contact force; (b) Effect of rod length on torque.

Figure 7 shows that, at constant eye removal time, rod length had no significant effect on the contact force, whereas the torque increased with rod length. Figure 8 shows the same trend at constant rotational angular velocity: the contact force remained largely unchanged, while the torque grew with rod length. Because shorter rods required less torque, the shortest length satisfying the geometric constraint, 23 mm, was selected as the optimal OB rod length.

2.4. Pineapple Fruit Eye Recognition and Positioning Method

2.4.1. Dataset Construction and Augmentation

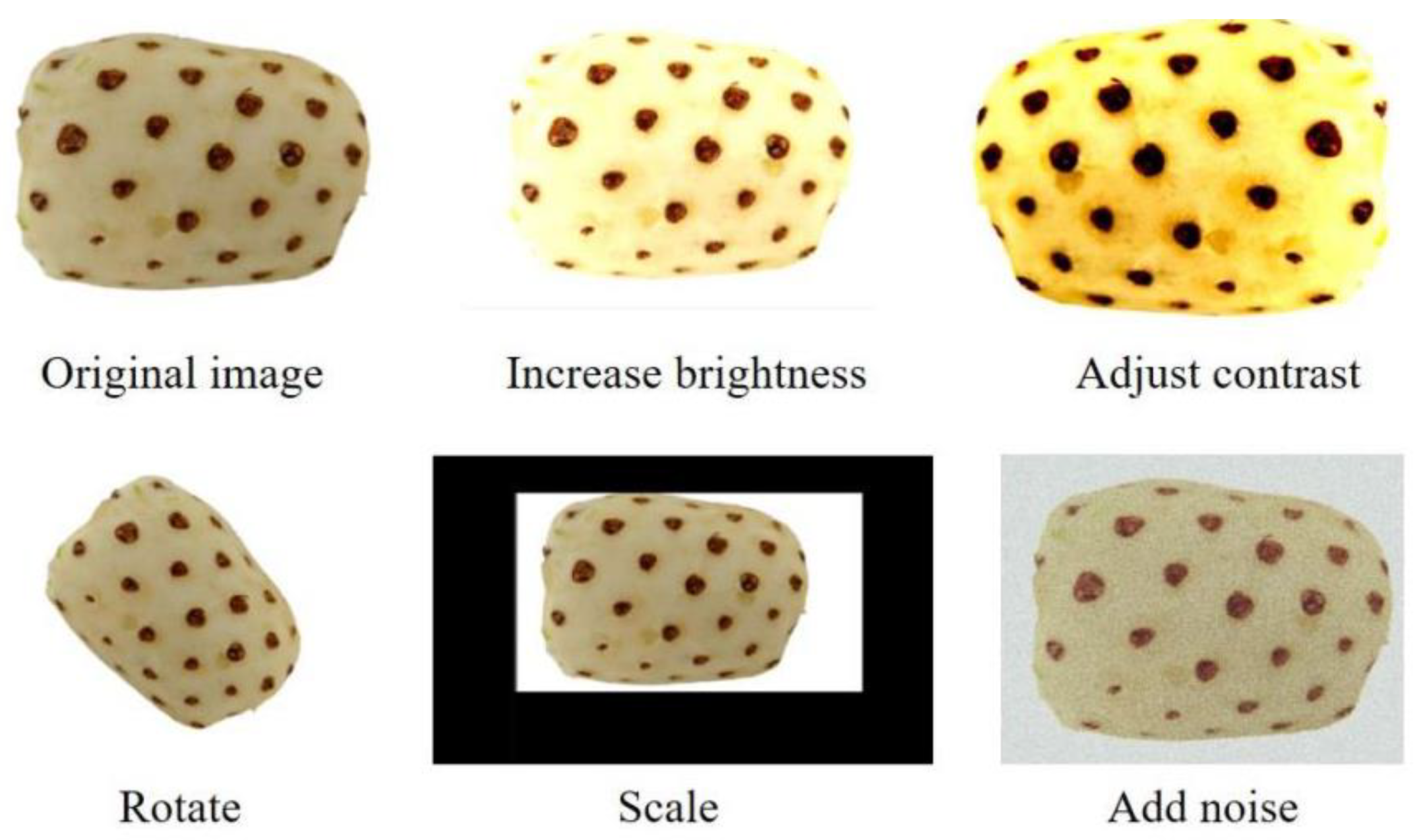

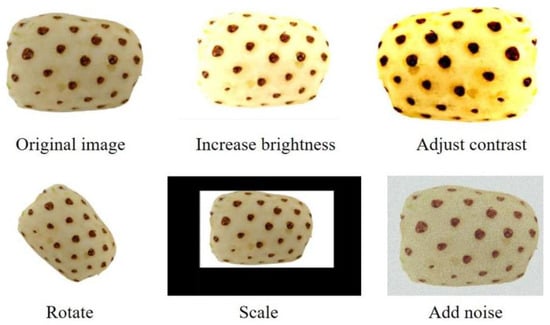

The Bali pineapple variety was used for the experiment. After manual peeling, 20 Bali pineapples were photographed from multiple angles with a high-resolution smartphone (Xiaomi 11), yielding 1300 original images under various orientations and lighting conditions. To improve the generalization ability and robustness of the recognition model, data augmentation (brightness and contrast adjustment, rotation, scaling, and noise addition) was applied, expanding the dataset to 2000 images, as shown in Figure 9.
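The augmentation operations listed above are simple pixel transforms. The pure-Python sketch below applies them to a tiny grayscale image so that each formula is visible; it is illustrative only. The parameters are hypothetical, rotation is restricted to 90° steps, and a production pipeline would use an image library on full-resolution color images.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 values


def clamp(value: float) -> int:
    return max(0, min(255, int(round(value))))


def brightness_contrast(img: Image, alpha: float, beta: float) -> Image:
    """out = alpha * pixel + beta (alpha scales contrast, beta shifts brightness)."""
    return [[clamp(alpha * p + beta) for p in row] for row in img]


def rotate90_cw(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def scale_nearest(img: Image, factor: float) -> Image:
    """Nearest-neighbour rescaling by a positive factor."""
    h, w = len(img), len(img[0])
    nh, nw = max(1, round(h * factor)), max(1, round(w * factor))
    return [[img[min(h - 1, int(r / factor))][min(w - 1, int(c / factor))]
             for c in range(nw)] for r in range(nh)]


def gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [[clamp(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


img = [[10, 20], [30, 40]]
print(brightness_contrast(img, 1.5, 10))  # [[25, 40], [55, 70]]
print(rotate90_cw(img))                   # [[30, 10], [40, 20]]
print(scale_nearest(img, 2.0))
print(gaussian_noise(img, 5.0, random.Random(0)))
```

Growing 1300 originals to 2000 images means generating 700 augmented images, for example by applying randomly chosen combinations of these operations; the exact recipe used in the study is not stated.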

Figure 9.

The processed image dataset.

The images were then randomly split into training, validation, and test sets at a ratio of 8:1:1. Each pineapple eye was annotated with a bounding box in LabelImg (version 1.8.6) [26]. The resulting XML annotation files were converted into the TXT format required for YOLOv5 training, in which each line gives the class index, the normalized center coordinates, and the normalized width and height of one pineapple eye, completing the pineapple eye dataset.
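The XML-to-TXT conversion and the 8:1:1 split can be sketched with the Python standard library. LabelImg saves Pascal VOC XML by default; the function below reads the image size and each `bndbox` and writes YOLO lines of the form `class x_center y_center width height`, normalized to [0, 1]. The class name `pineapple_eye`, the file names, and the seed are hypothetical.

```python
import random
import xml.etree.ElementTree as ET
from typing import List, Sequence, Tuple

CLASS_NAMES = ["pineapple_eye"]  # hypothetical label name used during annotation


def voc_to_yolo(xml_text: str, class_names: Sequence[str] = CLASS_NAMES) -> str:
    """Convert one Pascal VOC (LabelImg) XML annotation to YOLO TXT lines."""
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    lines = []
    for obj in root.iter("object"):
        cls = class_names.index(obj.findtext("name"))
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        # YOLO format: class x_center y_center width height, all normalised to [0, 1]
        cx = (xmin + xmax) / 2.0 / img_w
        cy = (ymin + ymax) / 2.0 / img_h
        bw = (xmax - xmin) / img_w
        bh = (ymax - ymin) / img_h
        lines.append(f"{cls} {cx:.6f} {cy:.6f} {bw:.6f} {bh:.6f}")
    return "\n".join(lines)


def split_8_1_1(items: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle and split into training, validation, and test sets at 8:1:1."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 8 // 10
    n_val = len(shuffled) // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


SAMPLE = """<annotation>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>pineapple_eye</name>
    <bndbox><xmin>300</xmin><ymin>200</ymin><xmax>340</xmax><ymax>240</ymax></bndbox>
  </object>
</annotation>"""

print(voc_to_yolo(SAMPLE))  # 0 0.500000 0.458333 0.062500 0.083333
train_set, val_set, test_set = split_8_1_1([f"img_{i:04d}.jpg" for i in range(2000)])
print(len(train_set), len(val_set), len(test_set))  # 1600 200 200
```

Integer arithmetic for the split sizes avoids floating-point rounding surprises, and a fixed seed makes the split reproducible.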

2.4.2. YOLOv5 Target Detection Algorithm

For this study, YOLOv5 was selected as the target detection algorithm for identifying pineapple fruit eyes. The input image size was set to 640 × 640 pixels, the learning rate was set to 0.0001, and the model was trained using 16 images per batch for 150 epochs. The models YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x were trained on the dataset, and their performance was evaluated using the test set. The model with the best performance was chosen as the final target detection model for pineapple fruit eyes.
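For reproducibility, the training configuration can be expressed as a dataset description plus one training command per variant. The sketch below assumes the ultralytics/yolov5 repository's `train.py`, whose `--img`, `--batch-size`, `--epochs`, `--data`, `--hyp`, `--weights`, and `--name` options it uses. The learning rate is not a command-line flag there; it would be set as `lr0: 0.0001` in a copy of `data/hyps/hyp.scratch-low.yaml`. The file names `pineapple_eye.yaml` and `hyp.pineapple.yaml` and the dataset paths are hypothetical, and whether pretrained weights were used is not stated in the text.

```python
import shlex
from typing import List, Sequence

MODELS = ["yolov5n", "yolov5s", "yolov5m", "yolov5l", "yolov5x"]


def dataset_yaml(root: str, names: Sequence[str]) -> str:
    """Minimal YOLOv5 dataset description (image folders relative to root)."""
    return "\n".join([
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        "test: images/test",
        f"nc: {len(names)}",
        f"names: {list(names)}",
    ]) + "\n"


def train_command(model: str, data_yaml: str, hyp_yaml: str,
                  img: int = 640, batch: int = 16, epochs: int = 150) -> List[str]:
    """argv for YOLOv5's train.py; the learning rate (lr0) lives in the hyp file."""
    return ["python", "train.py",
            "--img", str(img),
            "--batch-size", str(batch),
            "--epochs", str(epochs),
            "--data", data_yaml,
            "--hyp", hyp_yaml,
            "--weights", f"{model}.pt",
            "--name", f"pineapple_{model}"]


print(dataset_yaml("datasets/pineapple_eye", ["pineapple_eye"]))
for m in MODELS:
    print(shlex.join(train_command(m, "pineapple_eye.yaml", "hyp.pineapple.yaml")))
```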

2.4.3. Camera Calibration

Camera calibration was required to correct image distortion, improve positioning accuracy, and obtain the parameters of the depth camera [27,28,29]. In this study, Zhang’s calibration method (Zhang Zhengyou’s method) was used to determine the intrinsic parameters of the camera. In this method, the world coordinate system was fixed to a planar calibration board, and images of the board were captured from various angles and distances. The pixel coordinates (x, y) of the corner points on the board were extracted, each corresponding to a known three-dimensional coordinate (X, Y, Z) in the world coordinate system. From these correspondences, the camera parameters were computed with OpenCV; they are summarized in Table 2.
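Zhang's method needs, for every view, the board-frame coordinates of the corners, which lie on the plane Z = 0. The sketch below generates them for a hypothetical board with 9 × 6 inner corners and 20 mm squares (the board used in the study is not described), builds the zero-skew intrinsic matrix K that calibration returns, and projects a camera-frame point to pixels, which is the operation behind the reprojection error. In OpenCV, these object points (as float32 arrays, one per view) would be passed to `cv2.calibrateCamera` together with corners from `cv2.findChessboardCorners`; those calls are omitted to keep the example dependency-free.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def chessboard_object_points(cols: int, rows: int, square_mm: float) -> List[Point3]:
    """World coordinates of the inner corners; the board plane is Z = 0.

    Corners are listed row by row with x varying fastest, the order in which
    OpenCV's findChessboardCorners usually reports detected corners.
    """
    return [(c * square_mm, r * square_mm, 0.0) for r in range(rows) for c in range(cols)]


def intrinsic_matrix(fx: float, fy: float, cx: float, cy: float) -> List[List[float]]:
    """Pinhole intrinsic matrix K with zero skew."""
    return [[fx, 0.0, cx], [0.0, fy, cy], [0.0, 0.0, 1.0]]


def project(K: List[List[float]], point_cam: Point3) -> Tuple[float, float]:
    """Project a camera-frame point to pixel coordinates, ignoring lens distortion."""
    x, y, z = point_cam
    return (K[0][0] * x / z + K[0][2], K[1][1] * y / z + K[1][2])


pts = chessboard_object_points(cols=9, rows=6, square_mm=20.0)  # hypothetical board
print(len(pts), pts[0], pts[-1])  # 54 (0.0, 0.0, 0.0) (160.0, 100.0, 0.0)
```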

Table 2.

Camera parameters.

2.4.4. Coordinate Transformation

After the depth camera parameters had been obtained, the world coordinates of each pineapple eye were calculated using the pinhole imaging model and matrix operations, transforming from the pixel coordinate system to the camera coordinate system and then to the world coordinate system. The position (x, y) of the pineapple eye in the pixel coordinate system was obtained by the recognition algorithm, and its coordinates (Xc, Yc, Zc) in the camera coordinate system were obtained by the following transformation:

$$X_c = \frac{(x - c_x)\,Z_c}{f_x}, \qquad Y_c = \frac{(y - c_y)\,Z_c}{f_y}$$

where fx and fy are the focal lengths of the camera in pixels, (cx, cy) is the principal point (the pixel coordinates of the image center), and Zc is the depth value measured at (x, y).

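Using the extrinsic parameters of the camera, the camera coordinates were then transformed into the world coordinate system. With the extrinsics defined so that a world point maps into the camera frame as Pc = R·Pw + T, the world coordinates of the pineapple eye, (Xw, Yw, Zw), were obtained as

$$\begin{bmatrix} X_w \\ Y_w \\ Z_w \end{bmatrix} = R^{-1}\left(\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} - T\right) = R^{\mathsf{T}}\left(\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} - T\right)$$

where R is the rotation matrix and T is the translation vector.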
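The two transformation steps can be sketched in a few lines of Python. The code assumes the pinhole back-projection given in this section, depth in millimetres, no lens distortion, and extrinsics in the world-to-camera convention Pc = R·Pw + T (the convention OpenCV uses for extrinsic parameters), inverted with Rᵀ because R is orthonormal. The intrinsic and extrinsic values in the demo are hypothetical, not those in Table 2.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_camera(u: float, v: float, depth: float,
                    fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Back-project pixel (u, v) with depth Zc into the camera frame (pinhole, no distortion)."""
    xc = (u - cx) * depth / fx
    yc = (v - cy) * depth / fy
    return (xc, yc, depth)


def camera_to_world(p_c: Vec3, R: Sequence[Sequence[float]], T: Vec3) -> Vec3:
    """Invert P_c = R * P_w + T, i.e. P_w = R^T (P_c - T) for a rotation matrix R."""
    d = [p_c[i] - T[i] for i in range(3)]
    return tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3))


# Hypothetical intrinsics and extrinsics (not the values in Table 2).
FX = FY = 400.0
CX, CY = 320.0, 240.0
R_IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_OFFSET = (0.0, 0.0, 100.0)  # mm

p_cam = pixel_to_camera(420.0, 240.0, 200.0, FX, FY, CX, CY)
p_world = camera_to_world(p_cam, R_IDENTITY, T_OFFSET)
print(p_cam, p_world)  # (50.0, 0.0, 200.0) (50.0, 0.0, 100.0)
```

With an Intel RealSense camera, the first step can also be delegated to the SDK: pyrealsense2's `rs2_deproject_pixel_to_point(intrinsics, [u, v], depth)` performs the same back-projection using the stream's own intrinsics and distortion model.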

After the three-dimensional coordinates of the pineapple eyes had been determined, eye removal performance was evaluated using three indicators: the complete removal rate (Cr), the incomplete removal rate (Pr), and the missed detection rate (Mr), defined as

$$C_r = \frac{R_a}{N_a} \times 100\%, \qquad P_r = \frac{R_b}{N_a} \times 100\%, \qquad M_r = \frac{R_c}{N_a} \times 100\%$$

where Na is the total number of pineapple eyes, Ra is the number of completely removed eyes, Rb is the number of incompletely removed eyes, and Rc is the number of undetected eyes.
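The three indicators are simple ratios. The sketch below assumes Na = Ra + Rb + Rc, which is consistent with the reported averages summing to 100% (88.5 + 6.6 + 4.9). Because the text does not state whether averages were taken per pineapple or over pooled counts, the sketch shows per-pineapple averaging as one option. The counts are made up and are not the data in Table 4.

```python
from typing import Iterable, Tuple

Rates = Tuple[float, float, float]


def removal_rates(complete: int, incomplete: int, missed: int) -> Rates:
    """Return (Cr, Pr, Mr) in percent, with Na = Ra + Rb + Rc."""
    total = complete + incomplete + missed
    if total == 0:
        raise ValueError("no pineapple eyes counted")
    return (100.0 * complete / total,
            100.0 * incomplete / total,
            100.0 * missed / total)


def mean_rates(per_fruit: Iterable[Tuple[int, int, int]]) -> Rates:
    """Average the per-pineapple rates (one possible definition of 'average')."""
    rates = [removal_rates(*counts) for counts in per_fruit]
    n = len(rates)
    return (sum(r[0] for r in rates) / n,
            sum(r[1] for r in rates) / n,
            sum(r[2] for r in rates) / n)


# Made-up counts for two pineapples: (Ra, Rb, Rc).
print(removal_rates(45, 3, 2))  # (90.0, 6.0, 4.0)
print(mean_rates([(45, 3, 2), (40, 4, 1)]))
```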

3. Results

3.1. Identification Test and Results

The performance of the five YOLOv5 models in pineapple eye recognition was evaluated using four indicators: precision, recall, mean average precision (mAP), and single-image detection time. The results are shown in Table 3.

Table 3.

The recognition results of YOLOv5 models.

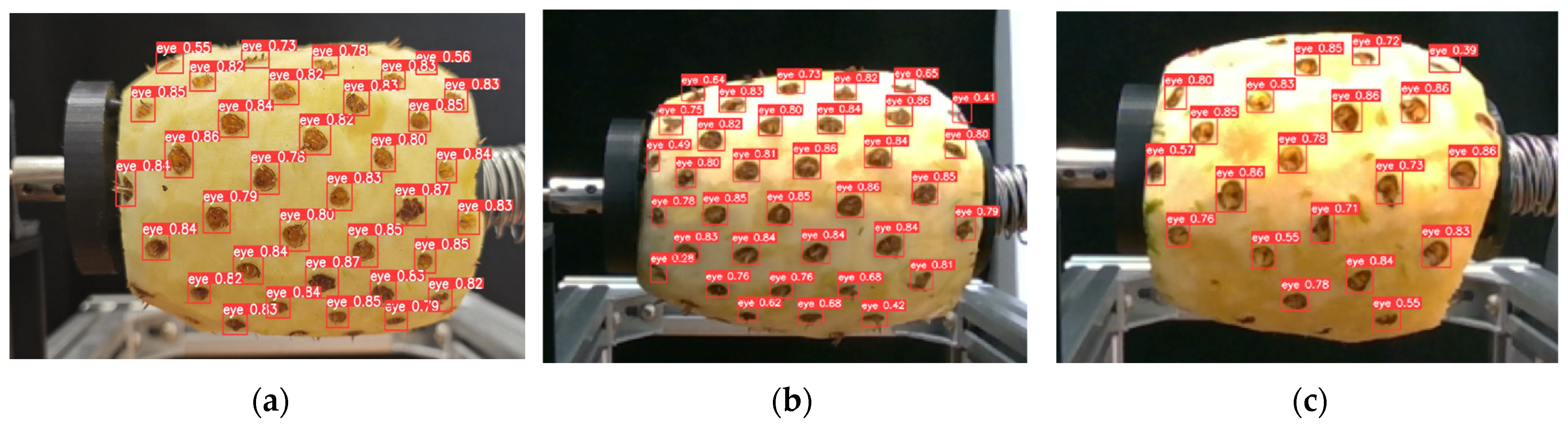

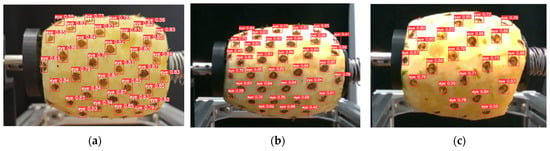

As shown in Table 3, the precision and recall of all YOLOv5 models exceed 97%, and their mAP exceeds 98%. The average single-image detection times of YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x are 0.008 s, 0.013 s, 0.03 s, 0.051 s, and 0.062 s, respectively. YOLOv5x and YOLOv5l are the slowest, while YOLOv5n is the fastest but has slightly lower precision, recall, and mAP than the other models. YOLOv5m achieves higher precision (fewer false positives) than YOLOv5s, but YOLOv5s performs better in recall, mAP, and detection speed, and it has the lowest false negative rate, which minimizes the food safety risk posed by eyes left undetected in the fruit. Considering these factors, YOLOv5s was selected as the optimal model for pineapple eye detection. The trained model was also tested on three pineapple varieties, Bali, Golden Diamond, and Golden Pineapple, with the detection results shown in Figure 10; YOLOv5s detected eyes robustly across all three varieties.
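The trade-off discussed above follows from the metric definitions: precision = TP/(TP + FP) penalizes false positives, whereas recall = TP/(TP + FN) penalizes missed eyes, which is why recall matters most when an undetected eye stays in the fruit. The sketch below states both definitions and converts the reported single-image detection times into throughput; the TP/FP/FN counts in the last line are hypothetical, and mAP is not reproduced because it requires ranking detections over IoU thresholds.

```python
from typing import Dict


def precision(tp: int, fp: int) -> float:
    """Fraction of predicted eyes that are real eyes."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of real eyes that were detected; 1 - recall is the miss rate."""
    return tp / (tp + fn)


# Single-image detection times reported in Table 3 (seconds).
DETECTION_TIME_S: Dict[str, float] = {
    "YOLOv5n": 0.008, "YOLOv5s": 0.013, "YOLOv5m": 0.03,
    "YOLOv5l": 0.051, "YOLOv5x": 0.062,
}

fps = {model: 1.0 / t for model, t in DETECTION_TIME_S.items()}
for model, rate in fps.items():
    print(f"{model}: {rate:.1f} images/s")

# Hypothetical counts, only to show how the two metrics are computed.
print(precision(tp=97, fp=3), recall(tp=97, fn=2))
```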

Figure 10.

Detection performance of different pineapple varieties. (a) Bali; (b) Golden Diamond; (c) Golden Pineapple.

3.2. Eye Removal Test and Results

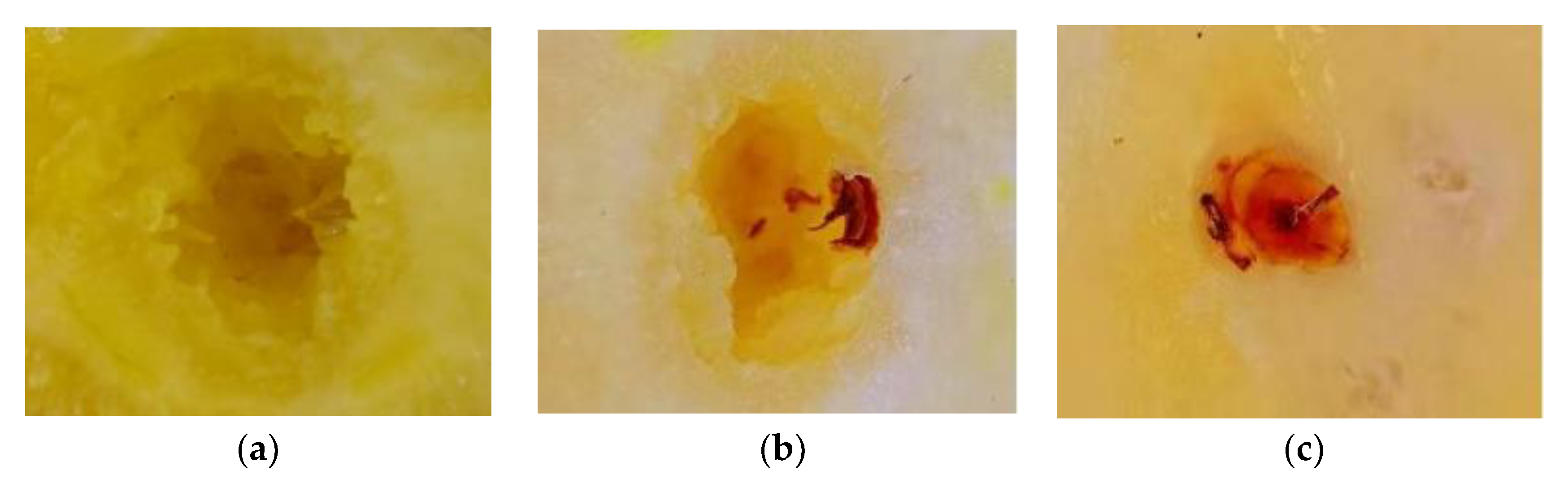

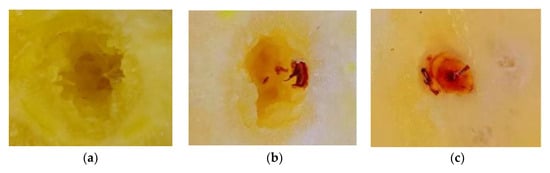

The pineapple eye removal device was built and tested on 10 Bali pineapples. Its efficiency and accuracy were assessed by the eye removal time and the removal rates. Typical removal outcomes are shown in Figure 11, and the results are summarized in Table 4.

Figure 11.

Pineapple eye removal outcomes. (a) Complete removal; (b) Incomplete removal; (c) Missed eye.

Table 4.

Pineapple eye test data.

Figure 11 illustrates the three removal outcomes. In practical use, the device achieved high recognition accuracy and a high removal success rate. However, the surfaces of the resulting pits were not sufficiently smooth, and their flatness was only moderate. In addition, eyes near the edges of the fruit are small and shallow, and some were damaged during peeling; the resulting loss of features reduced recognition accuracy and led to incomplete removal.

Table 4 shows that the device achieved an average complete removal rate of 88.5%, an average incomplete removal rate of 6.6%, and an average missed detection rate of 4.9%. The average eye removal time was 156.7 s per pineapple, which generally meets the operational requirements for pineapple eye removal.

4. Discussion

In this study, a machine-vision-based pineapple eye removal device was designed. The eye removal end-effector was developed with a profile-mimicking approach, and its linkage lengths were optimized through ADAMS simulation. The simulations showed that shorter linkages reduced the required torque, improving mechanical efficiency; accordingly, the rod length was set to 23 mm to reduce torque demand and improve mechanical stability.

The YOLOv5 target detection algorithm was selected for rapid detection of pineapple eyes. Validation on the 200 test-set images showed that all YOLOv5 variants achieved precision and recall above 97% and mAP above 98%, confirming the effectiveness of the models. After weighing detection accuracy against computational efficiency, YOLOv5s was selected as the optimal detection model. Camera calibration was performed to obtain the intrinsic and extrinsic parameters, followed by coordinate system transformations to determine the three-dimensional positions of the pineapple eyes. A prototype was built, and removal trials were conducted to evaluate its performance. The trials demonstrated stable detection and removal, with an average complete removal rate of 88.5%, an incomplete removal rate of 6.6%, a missed detection rate of 4.9%, and a processing time of 156.7 s per pineapple, meeting operational requirements.

A comparison of the proposed method with existing eye removal approaches is presented in Table 5. As shown in Table 5, compared with conventional mechanical and manual eye removal equipment, the proposed system incorporates machine vision and automatic control technologies, which improves the level of automation and reduces manual labor requirements. In contrast to rotary eye removal devices, the integration of the YOLOv5s object detection algorithm with coordinate transformation techniques enables precise localization and sequential removal of pineapple eyes, thereby avoiding the pulp waste often caused by fixed-path cutting methods. Although the complete removal rate is slightly lower than that of other machine-vision-based systems, the use of a depth camera to capture three-dimensional information enhances the positioning accuracy and real-time performance of the device, while also lowering overall production costs. Additionally, the end-effector was designed with a profiling structure to further improve the precision and processing quality of eye removal.

Table 5.

Comparison of Eye Removal Methods.

5. Conclusions

The proposed device consists mainly of a Jetson Nano B01 controller, a depth camera, a stepper motor, linear sliding tables, and processing units. The average processing time is 156.7 s per pineapple, and automatic eye removal was largely achieved. Compared with manual methods, the system substantially reduces labor intensity and eliminates the inconsistencies arising from operator fatigue or experience, making it particularly suitable for the automation needs of small and medium-sized fruit-processing enterprises. However, detection accuracy decreased for blurred or shallow eyes near the fruit edges, leading to incomplete removal, and the end-effector occasionally cut unevenly or compressed the pulp excessively. Future work will focus on improving the recognition algorithm to detect edge eyes more accurately and on optimizing the blade angle and material of the eye removal gripper to improve removal efficacy and operational efficiency. These findings offer a new perspective on the design of intelligent pineapple eye removal equipment, have practical value for agricultural automation, and provide technical support for subsequent research.

Author Contributions

Conceptualization, S.W. and X.M.; methodology, M.G.; software, S.W.; validation, M.G., X.M. and H.L.; formal analysis, C.T.; investigation, Z.G.; resources, C.T.; data curation, Z.G.; writing—original draft preparation, S.W.; writing—review and editing, X.M.; visualization, H.L.; supervision, M.G.; project administration, X.M.; funding acquisition, M.G. All authors have read and agreed to the published version of the manuscript.

Funding

Guangdong Province Key Field Project for Regular Universities, grant number 2024ZDZX4025; Guangdong Province Innovation Team for Smart Agricultural Machinery Equipment and Key Technologies in Western Guangdong, grant number 2020KCXTD039; Zhanjiang Science and Technology Development Special Fund Competitive Allocation Project, grant number 2022A01058.

Data Availability Statement

All data are presented in this article in the form of figures and tables.

Acknowledgments

We gratefully acknowledge anonymous referees for thoughtful review of this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zheng, C.; Li, Y.; Liang, W.; Ye, L. The Current Situation and Countermeasures of Pineapple Industry Development in China. Shanxi Agric. Sci. 2018, 46, 1031–1034.

2. Fang, W. The Current Situation and Development Suggestions of Pineapple Industry in Guangdong Province. China Fruits 2023, 9, 123–126.

3. Gong, Y. Research on Strategies for Optimization and Upgrading of Pineapple Industry in Zhanjiang. Master’s Thesis, Guangdong Ocean University, Zhanjiang, China, 2020.

4. Liu, A.; Xiang, Y.; Li, Y.; Hu, Z.; Dai, X.; Lei, X.; Tang, Z. 3D Positioning Method for Pineapple Eyes Based on Multiangle Image Stereo-Matching. Agriculture 2022, 12, 2039.

5. Liu, X.Y. A Novel Pineapple Eye Removal Device. China CN211130616U, 21 July 2020.

6. Kumar, P. Advances in Manufacturing Technology and Management. In Proceedings of the 6th International Conference on Advanced Production and Industrial Engineering (ICAPIE 2021), Delhi, India, 18–19 June 2021.

7. Chen, S.; Hu, Y.; Huang, L.Y. A Pineapple Eye Removal Device. China CN202420662805.1, 27 December 2024.

8. Wen, B.; Zhang, C.; Li, C. A Pineapple Peeling and Eye Removal Integrated Machine. China CN212307524U, 8 January 2021.

9. Zhou, D.; Fan, Y.; Deng, G.; He, F.; Wang, M. A new design of sugarcane seed cutting systems based on machine vision. Comput. Electron. Agric. 2020, 175, 105–116.

10. Hou, Y.; Qian, J.; Wang, L.; He, J.; Bi, Y. Design of the Sorting Mechanism for Sugar Orange Harvesting Robot. J. Chin. Agric. Mech. 2023, 44, 183–189.

11. Duan, X.; Wang, S.; Zhao, Q.; Zhang, J.; Zheng, G.Q.; Li, G.Q. Research on Detection Method of Major Pests in Summer Maize Based on Improved YOLOv4. Shandong Agric. Sci. 2023, 55, 167–173.

12. Liu, S.; Hu, B.; Zhao, C. Detection and Recognition of Diseases and Pests on Cucumber Leaves Based on Improved YOLOv7. Trans. Chin. Soc. Agric. Eng. 2023, 39, 163–171.

13. Zhang, H.J.; Wang, Y.K.; Hu, J.H. Pineapple Automatic Eye Removal Device and Method for Automatic Pineapple Eye Removal. China CN113412954A, 21 September 2021.

14. Qian, S.J.; Zhao, M.Y.; Chen, H.K.; Liang, X.F.; Wei, Y.H. Pineapple Spiral Pruning Method and Experiment Based on 3D Reconstruction. Exp. Tech. Manag. 2024, 41, 73–83.

15. Nguyen, M.T.; Nguyen, T.T. Pineapple Eyes Removal System in Peeling Processing Based on Image Processing. In Proceedings of the International Conference on Mobile Computing and Sustainable Informatics (ICMCSI 2022), Patan, Nepal, 27–28 January 2022.

16. Liu, A.W.; Xie, F.P.; Xiang, Y.; Li, Y.J.; Lei, X.M. Pineapple Eye Removal Method and Experiment Based on Machine Vision. Agric. Eng. J. 2024, 40, 80–89.

17. Ye, M.; Zou, X.J.; Cai, P.F. Design of a Universal Gripper Mechanism for Fruit-Picking Robots. J. Agric. Mech. 2011, 42, 177–180.

18. Zhao, F.; Yang, X.; Zhao, N. SOLIDWORKS 2023 Chinese Edition: From Beginner to Advanced; People’s Posts and Telecommunications Press: Beijing, China, 2023.

19. Xia, W.; Zhang, Y. Research on Joint Simulation of Six-Degree-of-Freedom Manipulator Based on SolidWorks and ADMAS. J. Mech. Eng. Autom. 2021, 5, 79–81.

20. Li, P.; Cao, X. Design and Simulation of a Light Manipulator Using ADAMS Virtual Prototype. Int. J. Mechatron. Appl. Mech. 2021, 2, 25–35.

21. Xue, Z.; Zhang, X.; Chen, R. Experimental Study on the Morphology and Mechanical Properties of Pineapple. Agric. Mech. Res. 2024, 46, 170–174.

22. Li, Z.; Kota, S. Virtual Prototyping and Motion Simulation with ADAMS. ASME J. Comput. Inf. Sci. Eng. 2001, 1, 276–279.

23. Han, M.; Li, M.; Duan, H.; Xu, K.; Yu, K. Rigid-flexible Coupling Simulation and Experiment of Plant Stem Flexible Gripping Device. Agric. Mech. J. 2024, 55, 109–118.

24. Wang, S.; Hu, Z.; Yao, L. Simulation and Parameter Optimisation of Pickup Device for Full-Feed Peanut Combine Harvester. Comput. Electron. Agric. 2022, 192, 106602.

25. Jiang, Y.; Liu, J.; Hu, Z. Design and Experiment of the End-Effector for Navel Orange Harvesting Based on Underactuated Principle. Mech. Transm. 2024, 48, 105–113.

26. Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. Label Me: A Database and Web-Based Tool for Image Annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

27. Wang, X.; Chen, S. Positioning Error and Correction of Vision-Guided Gripping Robot on Working Plane. Mech. Sci. Technol. 2015, 34, 720–723.

28. Li, L.; Liang, J.; Zhang, Y. Precision Detection and Localization of Citrus Targets in Complex Environments Based on Improved YOLO v5. Agric. Mech. J. 2024, 55, 280–290.

29. Liu, J.; Wang, G. Photometric Stereo LED Light Source Position Parameter Calibration Method Based on Stereo Vision. Laser Optoelectron. Prog. 2022, 59, 309–316.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).