1. Introduction

No discussion by an executive of a product company is complete without a mention of quality. Quality is one of the most important characteristics of products today. Manufacturing companies expend a great deal of time, money, and effort in an attempt to build quality products. All other things being equal, a product that is higher in quality commands a better price than a product that is lower in quality. Below a certain threshold of quality, the price buyers will pay will drop to approximately zero.

The cost of quality (COQ) is significant to manufacturers. COQ includes both failure costs and prevention plus appraisal costs. The classical view is that COQ follows a U-shaped curve, with prevention plus appraisal costs rising dramatically as quality approaches the 100% level. A contradictory claim is that prevention and appraisal costs are linear and that COQ does not exceed about a quarter of sales costs [1,2]. However, both claims put the minimum COQ at between 20–30% of sales.

The American Society for Quality (ASQ) states [3], “Many organizations will have true quality-related costs as high as 15–20% of sales revenue, some going as high as 40% of total operations. A general rule of thumb is that costs of poor quality in a thriving company will be about 10–15% of operations.”

However, the issue of quality is a complicated one. While there is much talk about quality, there is also much confusion about what quality actually is. If we look up quality in the dictionary, we find at least eight different definitions with quite distinct meanings.

The source of much of the confusion is the term “quality control.” Quality control originated in manufacturing and has been exhaustively covered [4,5]. However, this “quality control” is actually specification control. Manufacturing Quality Control (MQC), the term this paper will use for it, is the assessment of whether the manufactured product meets its specifications.

However, simply manufacturing a product to its required specifications does not ensure that it is a quality product. A product is only a quality product if it performs in operation to the user’s perceived standard. The only true measure of quality is a posteriori, that is, at the end of the product’s useful life in the physical world. Obviously, that is far too late to be useful to a prospective buyer or user of the product.

What we need is a means of predicting that lifetime performance. Digital Twins (DTs) offer a potential capability to better predict the ability of a manufactured product to perform to the user’s perceived standard. With Additive Manufacturing (AM), making these kinds of predictions is only going to become more difficult. The remainder of the paper will address this issue.

2. Related Work

Several areas bear on the advances proposed here: Product Lifecycle Quality (PLQ), virtual testing, Digital Twins, and physics/data fusion.

2.1. Product Lifecycle Quality

The COQ literature is almost exclusively focused on manufacturing-related quality issues. However, there are substantial lifecycle costs related to product liability, brand reputation, lost sales, and other legal liabilities from products that fail to live up to customers’ expectations of performance.

I have long proposed that product quality is a product lifecycle issue.

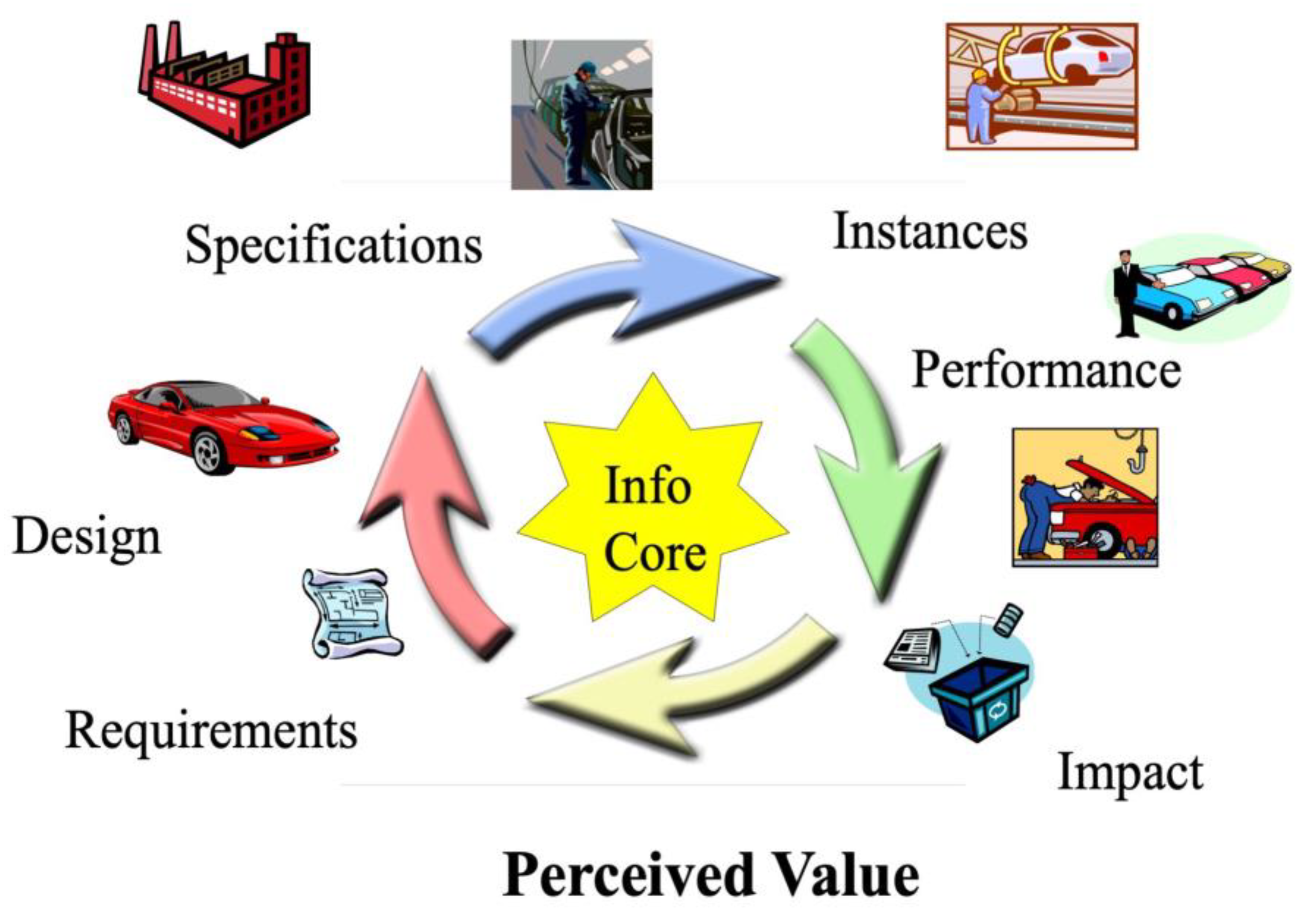

Figure 1 shows the PLQ model I introduced over a decade ago [6]. The PLQ model is based on the earlier Product Lifecycle Management (PLM) model [7], focusing on the attributes of product quality. It is patterned after PLM, with a product-centric view as opposed to the traditional siloed, function-centric views of product lifecycles.

The PLQ model starts with the perspective of the customer’s expectations of the perceived value of the product. This is the customer’s expectation of the “ilities”: functionality, durability, serviceability, etc. In the creation phase of the product lifecycle, these expectations of perceived value, as understood by the manufacturer, are turned into requirements. An engineered design is created that meets those requirements. The engineered design is turned into specifications and manufacturing processes to create physical products. In the build phase, product instances are built using these manufacturing processes and checked against the specifications. In the operation phase, the product instances are acquired and put into operation by customers. If and only if the product instances perform to the customers’ expectations of perceived value are they quality products.

The build or manufacturing phase is a critical phase because it is here that products move from virtual form into a final physical form. It is in this phase that the means of production takes physical material and produces a product that physically instantiates the engineering plans. The assumption of traditional MQC is that if a product instance passes its MQC inspections/tests, then it will be a quality product. Product recalls and liability claims often contradict that assumption.

2.2. Virtual Testing

I first proposed the value of virtual testing in my book, Virtually Perfect [8], over a decade ago. At that time, the computing capability for virtual testing at a high granularity level was very limited. Manufacturing companies were in the early stages of considering virtual testing in place of physical testing. The virtual testing was limited to the creation phase of the product lifecycle. It was also more model testing than DT testing [9].

The outcomes that we desire from testing are reliability and completeness. By reliability, we mean that the test results will reflect what will occur with the product in actual use. By completeness, we mean that testing will cover all the possible conditions that the product will encounter in the environment. While reliability is achievable, completeness is an ideal. We can never hope to test for all possible conditions, but we can try for the probable ones that we have identified. Where human safety is involved, we need to go beyond the probable and test for some improbable conditions as well. Testing at this breadth is cost-prohibitive physically, but it is feasible virtually and becomes more so over time.

As our virtual products and our virtual environments continue to gain in richness and robustness, the question becomes when and how should we replace physical testing with virtual testing. As

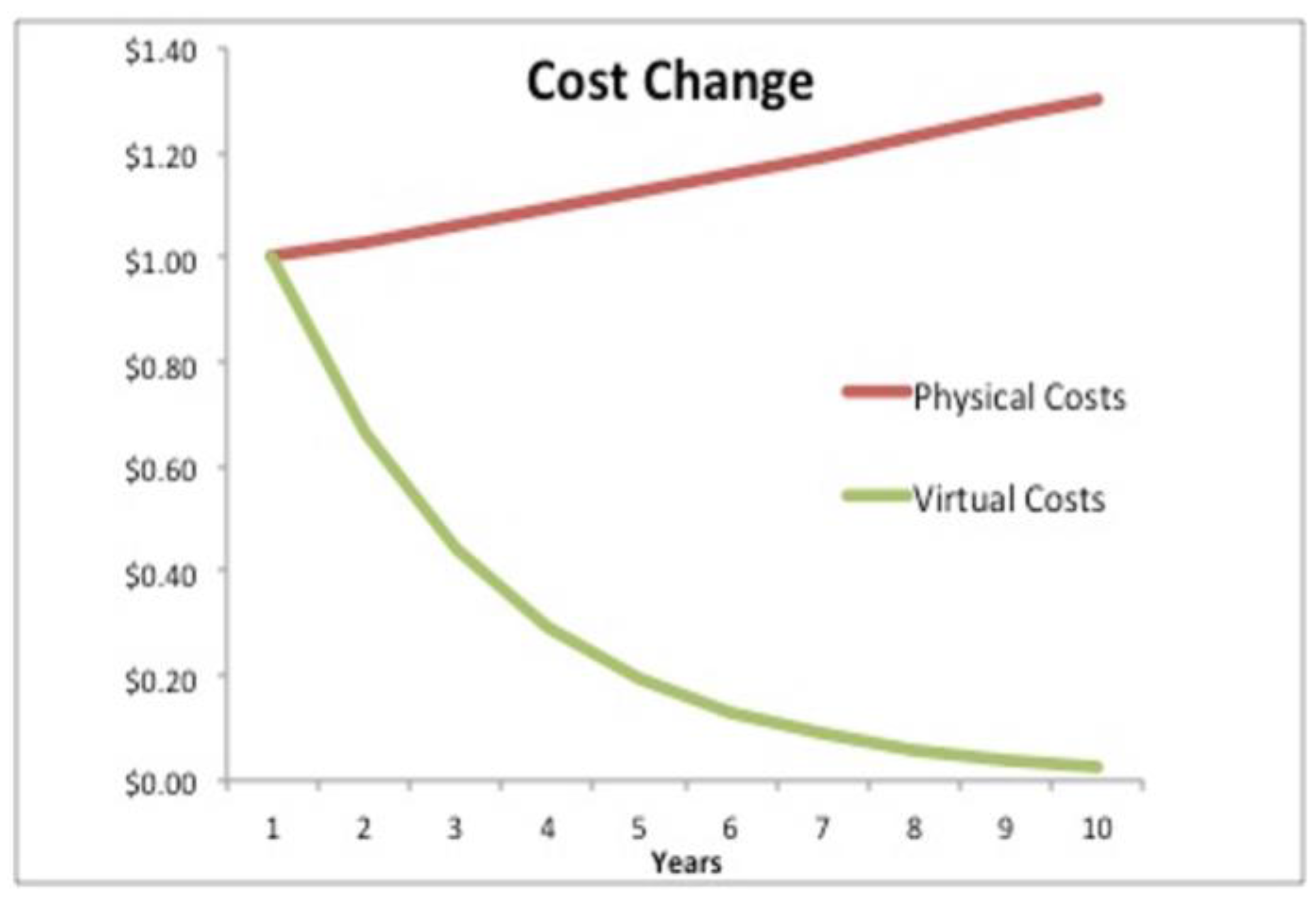

Figure 2 illustrates, the costs of physical testing and virtual testing are moving in different directions. The cost of physical resources continues to grow over time, even if it is simply at the rate of inflation, which is not so insignificant these days. Virtual costs will continue to decrease over time as Moore’s Law and its corollaries continue to remain in effect. PLM’s main premise, that we should replace atoms with bits, will become more and more attractive.
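Figure 2’s argument can be made concrete with a toy cost model. The sketch below assumes, purely for illustration, that the cost of a physical test compounds at a constant inflation rate and that the cost of the equivalent virtual test halves every fixed number of years, in the spirit of Moore’s Law. The function names, starting costs, and rates are hypothetical and are not taken from Figure 2.

```python
import math


def physical_cost(initial: float, inflation: float, years: float) -> float:
    """Cost of one physical test, compounding at a constant annual inflation rate."""
    return initial * (1.0 + inflation) ** years


def virtual_cost(initial: float, halving_years: float, years: float) -> float:
    """Cost of one virtual test, halving every `halving_years` (Moore's-Law-style)."""
    return initial * 0.5 ** (years / halving_years)


def crossover_year(phys0: float, inflation: float,
                   virt0: float, halving_years: float) -> float:
    """Years until a virtual test costs no more than a physical test.

    Solves phys0 * (1 + i)**t == virt0 * 2**(-t / h) for t, and returns 0.0
    if virtual testing is already the cheaper option.
    """
    if virt0 <= phys0:
        return 0.0
    # Taking logs: ln(virt0 / phys0) = t * (ln(1 + i) + ln(2) / h)
    rate = math.log1p(inflation) + math.log(2.0) / halving_years
    return math.log(virt0 / phys0) / rate


# Hypothetical inputs: the virtual test starts at 4x the physical test's cost,
# 3% annual inflation, and virtual cost halves every two years.
t = crossover_year(100.0, 0.03, 400.0, 2.0)
print(f"crossover after about {t:.1f} years")  # crossover after about 3.7 years
```

Under these assumptions the crossover arrives sooner as inflation rises or as the halving period shortens; the exact date matters less than the fact that the two curves move in opposite directions.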

It is important to note as part of this discussion that physical testing, as described below, has not been and will not be a guarantee of desired product performance. While physical testing has been the best tool we had for predicting product performance, it has by no means been an unqualified success. If it had been, we would not see the level of product recalls that we do.

Because product testing requires physical resources, often substantial ones, physical testing is limited. While the engineering discipline has devoted substantial time and effort to devising methods and techniques that make testing as efficient and effective as possible, physical testing covers only a very limited subset of all the possible combinations of environmental effects that the product would encounter in actual use.

In addition, physical testing uses individual test items whose physical properties are specific to that item. It has material from a specific material lot. It has shape and dimensions that are distinct to that particular test artifact. This test artifact is assumed to be a proxy for the class of products it represents. The assumption is that the same physical tests on other products would produce results that would not differ in any meaningful way. However, that is only an assumption.

Whether physical testing is perfect, however, is not the issue in deciding whether we can substitute virtual testing for physical testing. The issue is whether the virtual testing will give us the same risk profile that the physical testing gave us. We need to convince ourselves that our informational bits will behave in the same way that the physical atoms do, and to do so we need a methodology. A single instance of a virtual test giving a result similar to the physical test result is not enough.

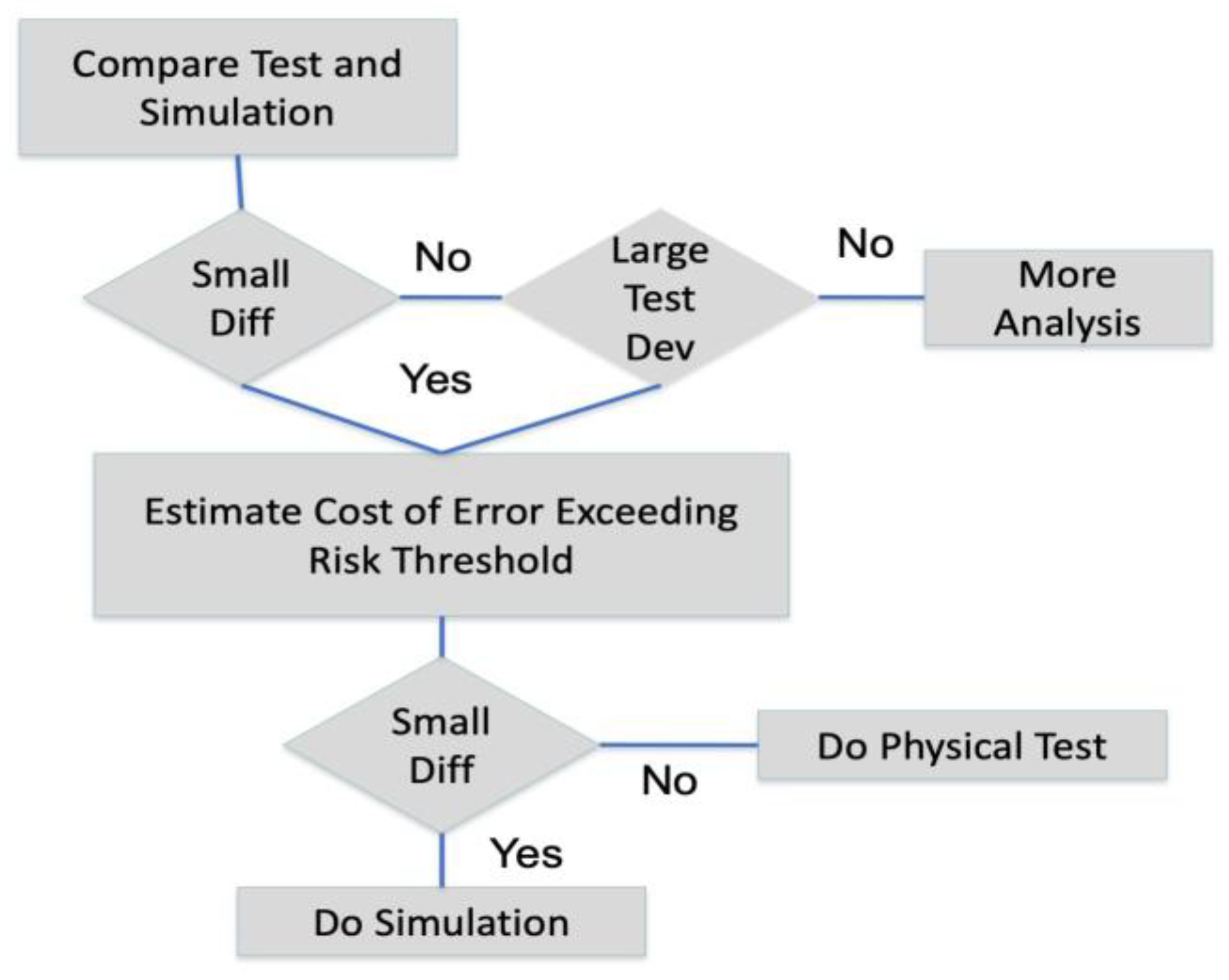

Figure 3 is one such methodology that I have proposed before [8]. The first step is selecting a number of physical tests that have been previously performed or will be performed as part of this comparison. The sample size should reflect the complexity of the physical tests: the simpler the test, the smaller the sample size; the more complex the test, the larger the sample size.

The virtual tests should then be performed. If the differences between the physical and virtual test results are small, meaning that their mean is close to zero and their deviation is small, then the virtual test is a candidate to replace the physical test.

Even if the virtual results deviate substantially from the physical ones, it may be that the physical tests themselves show large deviations from one another. If there is a large deviation between the physical and virtual test results but the intra-test deviations of the physical tests are small, then further analysis clearly needs to be carried out to understand and model the physics involved.
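The comparison steps of this methodology can be sketched as a small classification routine. It assumes paired results (each virtual test reproduces the configuration of one physical test) and repeated runs of the same physical tests for estimating intra-test deviation. The thresholds `max_mean`, `max_std`, and `max_intra_std` and the three outcome labels are hypothetical choices, not values prescribed by Figure 3.

```python
from statistics import mean, stdev


def compare_tests(physical: list[float], virtual: list[float],
                  physical_repeats: list[list[float]],
                  max_mean: float, max_std: float,
                  max_intra_std: float) -> str:
    """Classify a virtual test against paired physical test results.

    Returns "candidate" (the virtual test may replace the physical one),
    "physical scatter" (the physical tests themselves vary widely), or
    "refine model" (physical tests agree with each other but not with the
    simulation, so the physics needs further analysis).
    """
    if len(physical) != len(virtual) or len(physical) < 2:
        raise ValueError("need at least two paired physical/virtual results")
    # Paired differences: each virtual result minus its physical counterpart.
    diffs = [v - p for p, v in zip(physical, virtual)]
    if abs(mean(diffs)) <= max_mean and stdev(diffs) <= max_std:
        return "candidate"
    # Intra-test deviation: spread among repeated runs of the same physical test.
    spreads = [stdev(runs) for runs in physical_repeats if len(runs) >= 2]
    if not spreads:
        raise ValueError("need repeated physical runs to judge intra-test deviation")
    if mean(spreads) > max_intra_std:
        return "physical scatter"
    return "refine model"
```

In practice the thresholds would be set per test type, consistent with the point above that more complex tests warrant larger samples.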

The last step is to estimate, for both physical and virtual testing, the cost of making an error above a certain monetary threshold, and to combine that cost with the cost of performing the respective physical or virtual tests. If the resulting costs are comparable, then we should consider replacing the physical test with the virtual test. Since the virtual test will cost less than the physical test, sometimes by one or more orders of magnitude, the initial cost will be much lower. Only if there is an error, i.e., the virtual test does not identify a problem, will there be additional costs. However, these additional costs could also be incurred if the physical test fails to catch the problem.
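One way to make this last step concrete is to treat the cost of an error as an expected value: the probability that a test misses a costly defect multiplied by the cost of that miss, added to the cost of running the test. The sketch below assumes that model, a fractional tolerance for what counts as “comparable,” and purely hypothetical numbers; `TestingOption` and its fields are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestingOption:
    name: str
    cost_per_test: float     # cost of running the test once
    miss_probability: float  # chance the test fails to catch a costly defect


def expected_total_cost(option: TestingOption, error_cost: float) -> float:
    """Cost of running the test plus the expected cost of a missed defect."""
    return option.cost_per_test + option.miss_probability * error_cost


def consider_virtual(physical: TestingOption, virtual: TestingOption,
                     error_cost: float, tolerance: float = 0.10) -> bool:
    """True if the virtual option's expected total cost is at most
    `tolerance` (as a fraction) above that of the physical option."""
    phys = expected_total_cost(physical, error_cost)
    virt = expected_total_cost(virtual, error_cost)
    return virt <= phys * (1.0 + tolerance)


# Hypothetical numbers for illustration only.
crash = TestingOption("physical crash test", 500_000.0, 0.02)
sim = TestingOption("virtual crash test", 5_000.0, 0.03)
print(consider_virtual(crash, sim, error_cost=10_000_000.0))  # True
```

Note that the virtual option here is allowed a somewhat higher miss probability and still wins on expected cost; a poorly validated model with a much higher miss probability would not.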

If virtual tests provide reliability equivalent to that of physical tests, what we gain is completeness. We can now afford to carry out many more simulations than we could physical tests. For example, in crash tests where we might have examined a front impact and a side impact, we can now perform virtual tests at impact angles in between those right angles. It may be that an oblique impact causes a structural collapse that a front or side impact would not uncover. The costs of these additional virtual tests would be a fraction of the costs of performing the physical crash tests.

Virtual tests have the potential to yield a huge reduction in physical testing costs. For complex products such as airplanes or automobiles, the cost savings can easily be in the hundreds of millions of dollars. While it is clear that virtual testing will add to the completeness of testing, we need to be convinced that virtual tests have the same reliability as physical tests. This requires a methodology like the one that I have outlined here. However, the adoption of virtual testing is as much a cultural problem, namely getting the testing community to acknowledge the efficacy of virtual testing, as it is a technology issue.

For physical or virtual testing, the ideal situation would be to test each individual product as it is manufactured. However, that is clearly not feasible physically, except for very-high-value products, and even then only non-destructive testing (NDT) can be carried out. For virtual products, however, testing every individual product is feasible, and increasingly so, as will be discussed below. With virtual testing, there is no requirement to limit testing to NDT: we can conduct destructive tests on virtual products.

2.3. Digital Twins

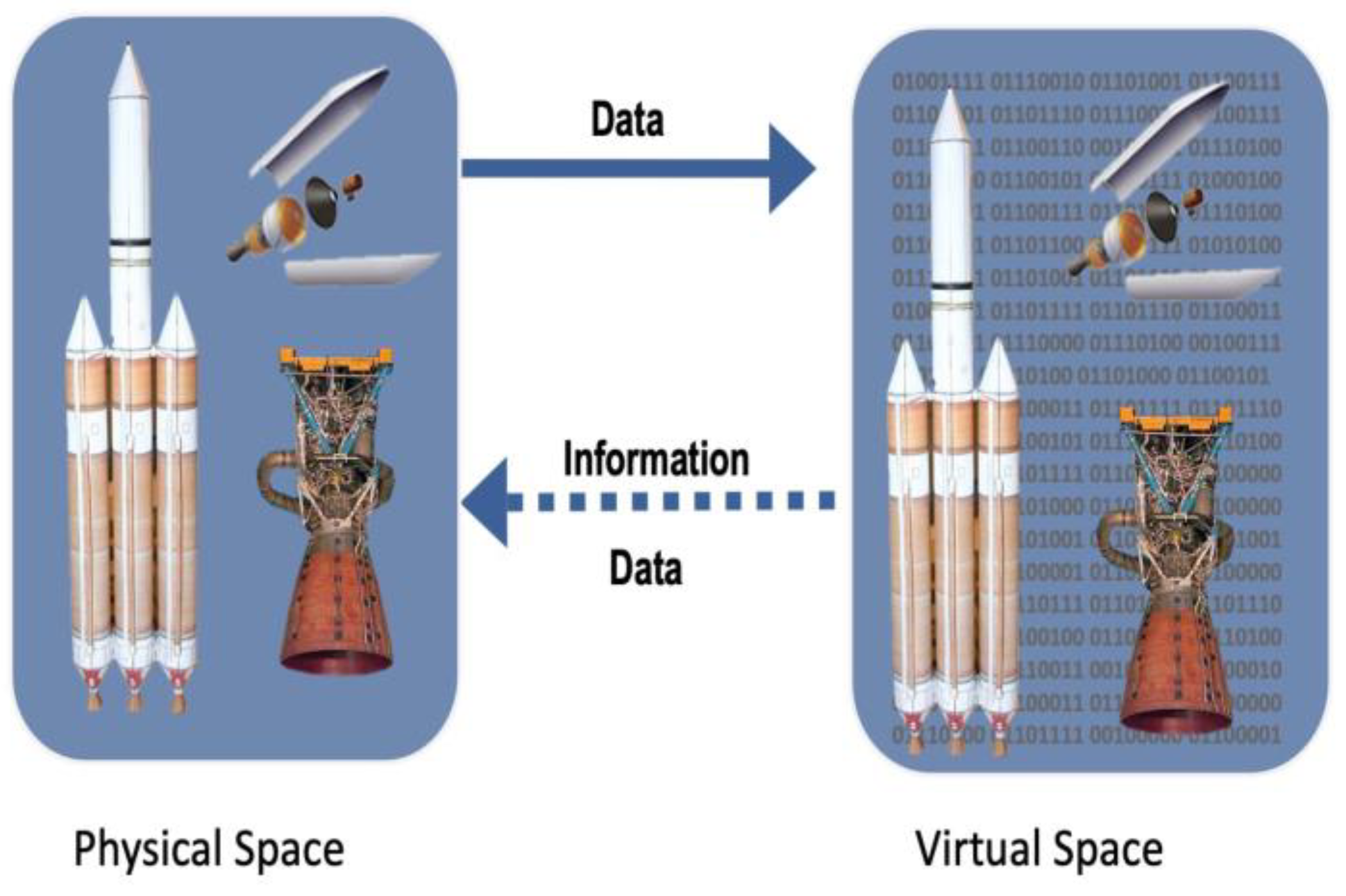

The DT is the underlying premise for both PLQ and the virtual testing target. The commonly accepted Digital Twin Model, which I introduced in 2002 [10] and which has evolved into the one shown in Figure 4, consists of three main components [11]:

The physical product in our physical environment;

The DT in a digital/virtual environment;

The connection between the physical and virtual for data and information.

The digital twin is where data and information from the functional areas are populated and consumed on a product-centric basis over the entire product lifecycle. Virtual testing engages both the digital twin and the virtual environment in which it operates.

The digital/virtual environment of the digital twin, referred to as the Digital Twin Environment (DTE) [11], must have rules that are as nearly identical as possible to those of our physical environment. We need to be assured that the behavior of the digital twin in the DTE mirrors the behavior of its physical counterpart for the use cases we require. The DTE has often been called the Metaverse [12].

2.3.1. Types of Digital Twins

There are three types of digital twins: the Digital Twin Prototype (DTP), the Digital Twin Instance (DTI), and the Digital Twin Aggregate (DTA).

The DTP originates in the creation phase of the product lifecycle. The DTP of a product begins when the decision is made to develop a new product and work on it begins. The DTP consists of the data and information on the product’s physical characteristics, proposed performance behaviors, and the manufacturing processes to build it. As much of this work as possible should take place virtually.

The DTI originates when individual physical products are manufactured. DTIs are the as-builts of the individual products; they are connected to their corresponding physical products for the remainder of those products’ lives and even exist beyond that. Much of the DTI can simply be linked to the DTP. For example, the DTI can link back to the DTP 3D model and only needs to contain the offsets of the exact measurements from the designed geometric dimensioning and tolerancing (GD&T).

The DTP will contain the manufacturing process and the parameters associated with the process. The DTI will contain any variations that occurred in actual production. For example, the DTP process may require heat treating within a temperature range. The DTI will capture the temperature that actually occurred. The data and information that are needed for the DTI will be driven by the use cases of the organization.
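The DTP/DTI linking described above can be sketched as a pair of record types in which a DTI references its DTP and stores only offsets from nominal dimensions plus the process parameters actually recorded. All class names, fields, and the bracket and heat-treat values are hypothetical; a real schema would be driven by the organization’s use cases.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tolerance:
    nominal: float
    lower: float  # allowed deviation below nominal (positive number)
    upper: float  # allowed deviation above nominal


@dataclass
class DigitalTwinPrototype:
    part_number: str
    dimensions: dict[str, Tolerance]                # designed GD&T, keyed by feature
    process_ranges: dict[str, tuple[float, float]]  # allowed process parameter ranges


@dataclass
class DigitalTwinInstance:
    serial_number: str
    prototype: DigitalTwinPrototype  # link back to the DTP rather than a copy of it
    offsets: dict[str, float] = field(default_factory=dict)          # measured - nominal
    process_actuals: dict[str, float] = field(default_factory=dict)  # what actually occurred

    def as_built(self, feature: str) -> float:
        """Actual dimension = DTP nominal + recorded offset (0 if unmeasured)."""
        return self.prototype.dimensions[feature].nominal + self.offsets.get(feature, 0.0)

    def deviations(self) -> list[str]:
        """Features or process parameters outside the ranges allowed by the DTP."""
        out = []
        for feature, offset in self.offsets.items():
            tol = self.prototype.dimensions[feature]
            if not -tol.lower <= offset <= tol.upper:
                out.append(feature)
        for param, value in self.process_actuals.items():
            low, high = self.prototype.process_ranges[param]
            if not low <= value <= high:
                out.append(param)
        return out


dtp = DigitalTwinPrototype(
    part_number="BRACKET-01",
    dimensions={"bore_diameter_mm": Tolerance(20.0, 0.05, 0.05)},
    process_ranges={"heat_treat_C": (840.0, 860.0)},
)
dti = DigitalTwinInstance(
    serial_number="SN-0001", prototype=dtp,
    offsets={"bore_diameter_mm": 0.03},
    process_actuals={"heat_treat_C": 865.0},
)
print(dti.deviations())  # ['heat_treat_C']
```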

Because the DTI remains connected to its physical counterpart for the rest of that physical product’s life, it will also contain the data from its operation, such as sensor readings and fault indicators. Depending on the use cases, the DTI will contain a performance history of state changes and their resulting outcomes.

The DTA is the aggregate of all DTIs. The DTA contains all the data and information about all the products that have been produced. The DTA may or may not be a separate information structure. Some of the DTA data may be processed and stored separately for analysis and prediction purposes. Other DTA data may simply be mined on an ad hoc basis. The bigger the population of DTIs, the more data will be available to improve Bayesian-based predictions. The DTA will also be the source for Artificial Intelligence and Machine Learning (AI/ML) to predict expected performance.
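A simple way to see why a growing DTA improves Bayesian predictions is a Beta-Binomial model of a failure rate. This sketch assumes, for illustration only, that each DTI is recorded as failed or not failed within some service interval, that failures are independent with a common underlying rate, and that the prior is uniform; the class name and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureRateBelief:
    """Beta(alpha, beta) belief about the failure rate across DTIs."""
    alpha: float = 1.0  # prior pseudo-count of failures
    beta: float = 1.0   # prior pseudo-count of survivals

    def update(self, failures: int, survivals: int) -> "FailureRateBelief":
        """Conjugate update with new DTI outcomes drawn from the DTA."""
        return FailureRateBelief(self.alpha + failures, self.beta + survivals)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        a, b = self.alpha, self.beta
        return a * b / ((a + b) ** 2 * (a + b + 1))


prior = FailureRateBelief()                              # uniform prior
early = prior.update(failures=1, survivals=99)           # small early DTA
mature = prior.update(failures=100, survivals=9_900)     # large DTA
print(f"{early.mean:.4f} {mature.mean:.4f}")  # 0.0196 0.0101
```

As the DTA grows, the posterior variance shrinks, so predictions drawn from a mature DTA are tighter than those from an early one. A prior informed by a previous product generation could replace the uniform one.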

2.3.2. Digital Twins Are Unconstrained by Space or Time

Two major constraints of our physical universe do not apply in the virtual spaces of the Digital Twin Model: the existence of a single space, and time. As I highlighted in the first presentation in 2002, we are unconstrained by a single physical space [10]. We can have an unlimited number of virtual spaces where we can take the exact same initial conditions and vary the environmental conditions to see different outcomes.

Within a specific virtual space, we are also unconstrained by physical proximity. In the physical world, we need to be physically near a product to have access to the data it contains. That means we must expend physical resources, including travel time, to obtain that proximity, either by moving the physical product or by moving the person. In virtual space, we can have simultaneous and instantaneous access.

We are also unconstrained by time [13]. In our physical universe, time is completely out of our control. We cannot go back in time. The only way to move forward in time is to wait for the next tick of the clock. Even then, we move forward only at the set rate: we can neither slow it down nor speed it up.

In virtual spaces, we can completely control time. We can run our simulations at any speed. We can quickly progress years and decades into the future. We can also move very slowly. We can break down actions that happen in split seconds in our physical universe into microseconds. We can even reverse time.

2.4. Physics/Data Fusion

The fusion of physics and data-based statistics has the potential to improve predictions [14]. First, the two methods can serve as a check on each other. If they disagree substantially, that indicates a problem with one or both of the methods; the actual outcome will help determine which. Second, the two methods can be blended to improve the prediction [15].

Physics-based simulations and predictions rely on fundamental laws and first principles of the physical world. Physics-based models can be useful in predicting the behavior of systems that are well understood and can be described by mathematical equations. These models can be used to simulate the behavior of a system under different conditions and can provide insight into the underlying mechanisms that govern the system’s behavior. Physics models can also generate data that we do not have instruments to capture and measure [16]. However, physics-based models are limited by the accuracy of the underlying physical laws and the assumptions made in the model.

Data-driven statistical methods, on the other hand, are typically used to analyze and make predictions about large data sets where the relationships between variables are complex and not well understood. Statistical models can be useful in identifying patterns in the data, making predictions, and estimating the uncertainty associated with those predictions. However, statistical models are only as good as the data they are based on, and the accuracy of statistical predictions can be limited if the data is incomplete, biased, or insufficient to capture the complexity of the problem being studied.

With new products, physics-based models will need to be relied upon until there is a sufficient population of data from products in the field. Accumulating longitudinal data on product degradation and failure may take considerable time. In the short term, data from previous generations of products, or even synthetic data sets, may be adequate if the product design and manufacturing are sufficiently similar.
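Both roles of physics/data fusion, the cross-check and the blend, can be illustrated with inverse-variance weighting of two independent estimates. This is a standard statistical technique substituted here for illustration, not necessarily the blending method of [15]; it assumes each prediction comes with a variance, and the disagreement threshold `k` is a hypothetical choice.

```python
import math


def fuse(physics_mean: float, physics_var: float,
         data_mean: float, data_var: float,
         k: float = 3.0) -> tuple[float, float, bool]:
    """Blend a physics-based and a data-based prediction.

    Returns (fused_mean, fused_variance, disagree). Each estimate is weighted
    by the inverse of its variance; `disagree` is True when the two estimates
    differ by more than k combined standard deviations.
    """
    w_phys = 1.0 / physics_var
    w_data = 1.0 / data_var
    fused_mean = (w_phys * physics_mean + w_data * data_mean) / (w_phys + w_data)
    fused_var = 1.0 / (w_phys + w_data)
    # Cross-check: is the gap plausible given both uncertainties?
    disagree = abs(physics_mean - data_mean) > k * math.sqrt(physics_var + data_var)
    return fused_mean, fused_var, disagree


# Hypothetical: new product with sparse field data, so physics dominates.
early_estimate = fuse(100.0, 4.0, 90.0, 400.0)
# Hypothetical: mature DTA with tight data, so data dominate and the gap is flagged.
mature_estimate = fuse(100.0, 4.0, 90.0, 1.0)
print(early_estimate, mature_estimate)
```

When field data are scarce (large data variance), the fused estimate stays close to the physics prediction, matching the point above about new products. As the data variance shrinks, the data dominate, and a persistent gap between the two estimates is flagged for investigation.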

3. Manufacturing Quality Hierarchy of Risk vs. Cost Strategies

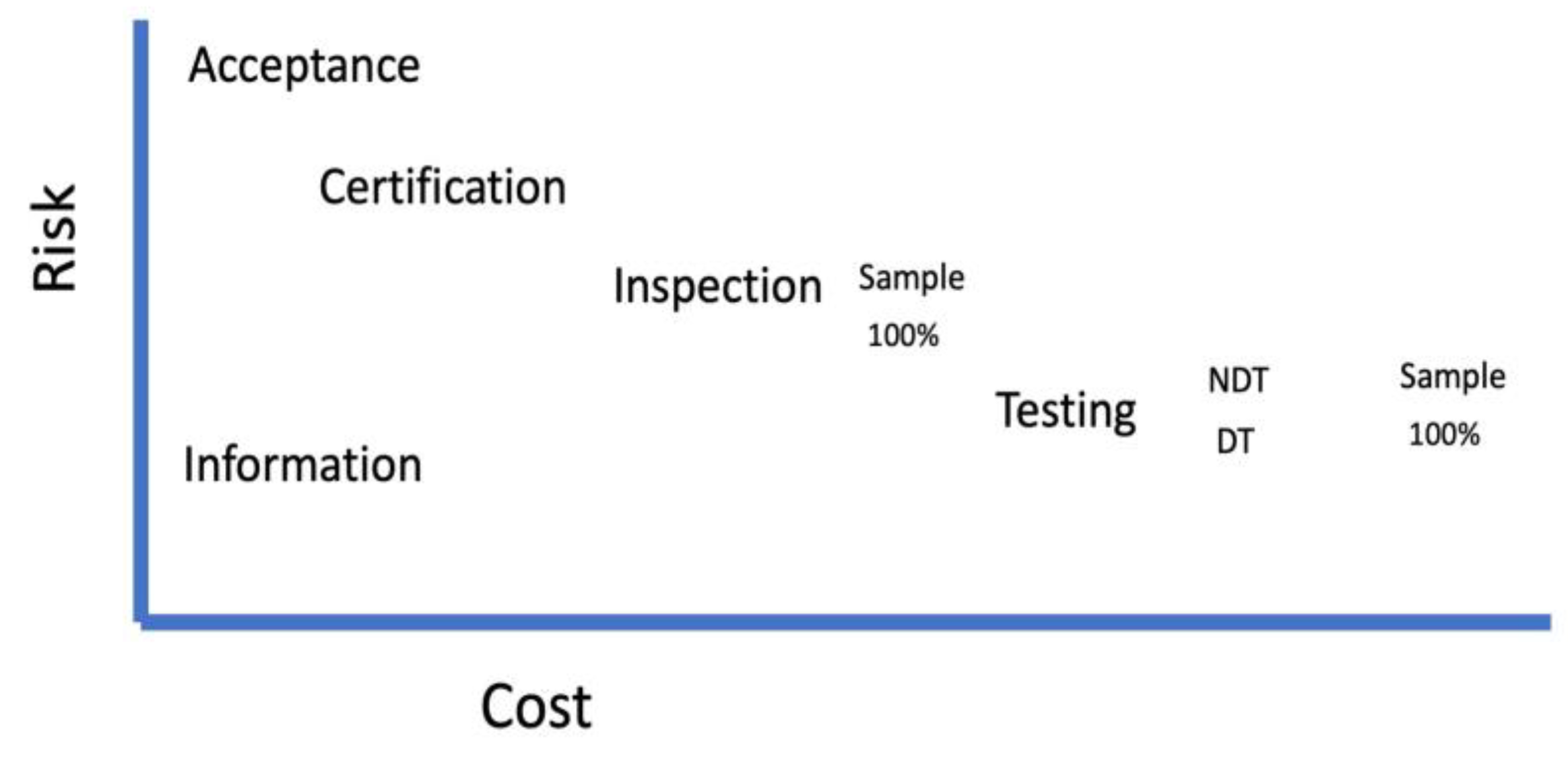

Focusing on the manufacturing phase of the product lifecycle, MQC strategies are about balancing risks against the costs of preventing nonconforming products. Figure 5 shows the product quality hierarchy in terms of risk versus cost. This hierarchy applies to both internal and external production.

3.1. Acceptance

The highest-risk, lowest-cost approach is simply to accept whatever has been produced. The assumption is that the product has been produced to the required specifications and tolerances, and no checking is done to verify that assumption. The product is produced within the factory or shipped in from a supplier, and we simply install it as part of our manufacturing process.

Clearly, there are huge potential problems with this approach. The cost of this assumption being incorrect could be astronomical not only in terms of MQC issues but also in terms of product safety.

3.2. Certification

The next approach is certification. The producer of the product goes through a certification process attesting that it follows a specific process that will, each and every time, produce products meeting the MQC requirements. The certification process may be one-time or periodic. With this approach, the risk decreases but the cost increases. The customer is still relying on the assumptions that the producer will follow the process required by the certification and that following the process will result in a conforming product. Certifications are not free, so the producer will increase the price of the product as a result.

3.3. Inspection

With an inspection approach, risk decreases and cost increases. Inspection can be performed using statistical methods or at the hundred percent level, meaning every product is inspected. However, inspections are superficial, meaning they are conducted only on what can be observed. External measurements can be taken, but internal measurements usually cannot. Inspection regimes are useful in decreasing the risk of nonconforming products. However, they do add expense, sometimes substantially, in terms of labor costs and time delays.
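Statistical inspection is commonly done with a single-sampling acceptance plan: inspect n items from a lot and accept the lot if at most c are nonconforming. The sketch below computes the probability of accepting a lot under a binomial model, a common approximation when the lot is large relative to the sample. The plan parameters are hypothetical examples, not recommendations.

```python
from math import comb


def acceptance_probability(n: int, c: int, p: float) -> float:
    """Probability that a single-sampling plan (n, c) accepts a lot.

    Binomial model: the lot is accepted if at most c of the n sampled items
    are nonconforming, where p is the lot's true nonconforming fraction.
    """
    if not 0 <= c <= n or not 0.0 <= p <= 1.0:
        raise ValueError("require 0 <= c <= n and 0 <= p <= 1")
    return sum(comb(n, k) * p**k * (1.0 - p) ** (n - k) for k in range(c + 1))


# Hypothetical plan: sample 50 items, accept the lot if at most 1 is nonconforming.
for p in (0.01, 0.05, 0.10):
    print(f"p = {p:.2f}: P(accept) = {acceptance_probability(50, 1, p):.3f}")
```

The nonzero acceptance probability at higher nonconforming fractions is the residual risk that sampling inspection leaves in place.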

3.4. Testing

Testing can be of two types: nondestructive or destructive. Testing in manufacturing usually verifies that the manufactured part meets its requirements. It is not a test of behavior.

3.4.1. Testing—Nondestructive

NDT will reduce risk substantially but will also increase costs substantially. NDT can be performed by statistical sampling or at the hundred percent level. NDT can give more assurance than inspection alone. However, it is based on visualization, not behavior. NDT techniques can look inside metal structures to determine whether there are flaws. Whether those flaws render the product nonconforming is a matter of heuristics, as opposed to certain information that the flaw will result in the product not performing properly.

3.4.2. Testing—Destructive

Again, risk decreases very substantially, but costs also increase substantially. Destructive testing can be conducted via statistical sampling or, in principle, at the hundred percent level. While 100% destructive testing would give great confidence that the product was conforming in all respects, it obviously makes no sense: there is no market for completely destroyed products, except among scrap dealers. Destructive testing gives us a great deal of information about the ability of the product to withstand the forces it is exposed to, and therefore great confidence that the product is conforming and would perform to the expectations of the user.

Destructive testing is generally used only during the development process, to ensure that the product design and engineering meet their requirements. Destructive testing could also be used on the first batch from a new supplier or when a supplier changes its processes. However, despite the reduced risk, costs rise so dramatically that frequent destructive testing is rarely used.

3.5. Information

The lowest risk would come from having completely reliable information about the product and how it performs throughout its life. If we could look into the future and follow the product throughout its life, we would eliminate all risk of the product being nonconforming. If this information were available to us, it would also be the least costly approach. Short of time travel, this approach would appear to be closed to us. However, there may be a substitute that generates equivalent information we could use as a proxy.

That proxy would be virtual testing. Virtual testing can be of two types: NDT, which is data analysis, and destructive testing, which is simulation. While physical NDT requires that we perform some active physical operation, virtual NDT (VNDT) can be performed passively, using data analytics in the form of AI/ML. Using the DTA of operational products, AI/ML would indicate the set of DTI product attributes that correlate with future failure. While this might not tell us the causal mechanism of failure, it will indicate that we need to carry out more testing of this particular product.
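A minimal sketch of passive VNDT follows, substituting a simple k-nearest-neighbors vote for whatever AI/ML model an organization would actually train on its DTA. It assumes each DTI is summarized as a numeric attribute vector already scaled to comparable units and labeled with whether the product later failed. The record type, `k`, and the flagging threshold are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DtiRecord:
    serial: str
    attributes: tuple[float, ...]  # scaled as-built and operational features
    failed: bool                   # did this product later fail in operation?


def failure_score(attributes: tuple[float, ...], dta: list[DtiRecord], k: int = 5) -> float:
    """Fraction of the k most similar DTIs in the DTA that later failed.

    A high score signals correlation with past failures, not a causal explanation.
    """
    if not dta:
        raise ValueError("the DTA is empty")
    nearest = sorted(dta, key=lambda r: math.dist(r.attributes, attributes))[:k]
    return sum(r.failed for r in nearest) / len(nearest)


def needs_more_testing(attributes: tuple[float, ...], dta: list[DtiRecord],
                       k: int = 5, threshold: float = 0.4) -> bool:
    """Flag a newly built product for further (e.g. simulation-based) testing."""
    return failure_score(attributes, dta, k) >= threshold
```

As the text cautions, a high score indicates correlation with past failures, not a cause; its role is to route the product to further testing.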

Virtual destructive testing (VDT) will be the simulation of forces on the DTI. While it can test specifications, it can also test for behaviors that the product will exhibit in use. Given the required computing capability, and exploiting the fact that time is unconstrained in virtual space, VDT could virtually test a newly manufactured airplane wing with identified flaws by simulating the flying of that wing through a lifetime of flight profiles. The forces of those profiles could be increased until the wing failed. Those forces would then be tracked as the plane flew missions, so that the testing limit would never be approached.
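Tracking service loads against a virtually established limit can be sketched with the Palmgren-Miner linear damage rule, a standard fatigue technique substituted here for illustration. It assumes the VDT of a specific wing has produced an S-N curve of the form `N = C * S**(-m)` for that DTI. The curve parameters, the mission load spectrum, and the 0.5 damage margin are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FatigueTracker:
    """Accumulates Palmgren-Miner damage for one DTI (e.g. one specific wing)."""
    sn_coefficient: float      # C in the S-N curve N = C * S**(-m)
    sn_exponent: float         # m in the S-N curve
    damage_limit: float = 0.5  # hypothetical margin; D = 1.0 means predicted failure
    damage: float = 0.0

    def cycles_to_failure(self, stress_amplitude: float) -> float:
        return self.sn_coefficient * stress_amplitude ** (-self.sn_exponent)

    def record_mission(self, cycles_by_stress: dict[float, int]) -> float:
        """Add one mission's load cycles (stress amplitude -> count); return total damage."""
        self.damage += sum(
            count / self.cycles_to_failure(stress)
            for stress, count in cycles_by_stress.items()
        )
        return self.damage

    @property
    def limit_reached(self) -> bool:
        return self.damage >= self.damage_limit


# Hypothetical S-N curve and per-mission load spectrum.
wing = FatigueTracker(sn_coefficient=1e12, sn_exponent=3.0)
mission = {100.0: 1_000, 150.0: 50}
missions = 0
while not wing.limit_reached:
    wing.record_mission(mission)
    missions += 1
print(f"damage limit reached after {missions} missions")  # damage limit reached after 428 missions
```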

While processing data into information is not free, Figure 2 illustrates that even if virtual and physical testing cost the same today, virtual testing becomes increasingly less expensive over time compared to physical testing. Virtual testing creates its data without destroying physical products, and it can destroy the exact same virtual product over and over again. This means that we can reduce more risk at a significantly lower cost, as shown in Figure 2.

4. Digital Twin Certified (DTC) for Traditional Manufacturing

There are two types of testing that can be considered DTC: data-based and simulation-based. Data-based testing would test the DTI attributes of the manufactured product against those of products that subsequently had substantial adverse effects or failed in operation. Simulation-based testing would simulate forces on the DTIs up to failure.

While DTC can be used for all types of testing, e.g., fit and finish, tolerance stacking, CFD, rheology, etc., the focus of this paper will be on product structure. Some structural configurations may be susceptible to failure. The durability of a product also depends on its ability to endure forces without failing. The forces it must withstand are the external forces it is subjected to and the internal forces it generates. Product structure durability under these forces can be evaluated by Finite Element Analysis (FEA).

The key elements of DTC are:

Capture of the data from the physical manufacture of the DTI.

Capture of data from DTIs of physical products in operation.

The virtual tests that will produce the highest level of assurance of future product performance.

AI/ML analysis of product configurations associated with subsequent failures.

The DTC methodology would consist of the following:

As the product proceeded through the manufacturing process, the defined data would be captured to produce its unique DTI.

At selected points in the manufacturing process, the DTI would be subject to virtual testing up to and including failure.

This virtual testing could be repeated during all phases of product manufacturing: components, sub-assemblies, assemblies, and up to final product assembly.

It is important to note that DTC does not start at the manufacturing phase. As the PLQ model showed, this is a product-centric approach, not a functionally siloed approach, in terms of information. Data and information that are populated in one phase can be consumed in another phase of the product lifecycle.

The testing in the creation phase has historically included physically testing the product to destruction. Automobiles are crash tested. Airplanes have their wings bent until they break. NASA tested its new composite fuel tank to destruction [17]. Testing to destruction gives a high level of assurance of the safety factor and indicates the most probable sites of weakness.

However, this physical destructive testing is extremely expensive in both cost and time, so manufacturing organizations are very interested in replacing these physical tests with virtual tests. The goal is to replace the destructive physical testing with destructive virtual testing, with a final physical destructive test to validate the destructive virtual testing.

The virtual testing that is devised and used in the creation phase can now be used in the build (manufacturing) phase to give assurance that the product will perform in operation throughout its life. In addition, the virtual and physical testing of the creation phase will give the development engineers indications of where the most likely structural failure points are. Virtual testing during the manufacturing phase can prioritize these likely failure points.

The testing that comes out of the creation phase is going to be primarily physics-based, although data-based testing is also possible using previous generations of products if they share a high degree of similarity with the new generation. The simulations used will be “white box,” meaning that they are driven by formulae, equations, and causation. It would be very risky to approve designs on the basis of correlation alone, however strong. Black box or even grey box methods by themselves would usually be insufficient to approve new product designs.

This testing has also been designed to verify and validate that the design requirements are being met. However, when products are in operation, failure conditions will arise that the design testing did not consider. As a form of VNDT, AI/ML can be employed to associate that operational data with DTI data to predict configuration failures that physics-based methodologies are unable to predict, or unable to predict accurately [14]. While VNDT might start with AI/ML trained on previous generations of products, its accuracy will improve as the number of DTIs and the DTA population grow.

The application of VDT in the manufacturing process will depend on the specific product and its estimated potential weaknesses. The general idea is that the DTI would be created by capturing geometric data as the product is manufactured. The DTI, which is the DTP 3D model modified with actual measurements, would undergo virtual FEA testing.

This testing at selected points in the manufacturing process would be to the point of failure, focusing on identified weak points. The results of each FEA test would be compared to the requirements to see whether the DTI passed. This could be carried out at the component level, at the assembly level, and up to the entire product. As discussed above, VDT could test not just the DTI’s specification compliance but also its behavioral performance over a virtual lifetime.
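As a deliberately tiny stand-in for the FEA described here, the sketch below runs a one-dimensional finite element model of a stepped bar that is fixed at one end and loaded axially at the other. The DTI is represented, as described earlier, by the DTP’s nominal element cross-sections plus measured offsets. The material values, load, required factor, and the linear-elastic “load factor to first yield” criterion are hypothetical simplifications of the failure testing a real FEA tool would perform on identified weak points.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def bar_stresses(lengths: list[float], areas: list[float],
                 youngs_modulus: float, tip_load: float) -> list[float]:
    """Axial stress per element of a bar fixed at node 0 and loaded at its free end."""
    n = len(lengths)
    k = [[0.0] * (n + 1) for _ in range(n + 1)]  # global stiffness matrix
    for e, (length, area) in enumerate(zip(lengths, areas)):
        ke = youngs_modulus * area / length  # 2-node axial element stiffness
        k[e][e] += ke
        k[e][e + 1] -= ke
        k[e + 1][e] -= ke
        k[e + 1][e + 1] += ke
    f = [0.0] * (n + 1)
    f[-1] = tip_load
    # Fixed support at node 0: drop its row and column, then solve for the rest.
    u = [0.0] + solve([row[1:] for row in k[1:]], f[1:])
    return [youngs_modulus * (u[e + 1] - u[e]) / lengths[e] for e in range(n)]


def virtual_load_test(nominal_areas, area_offsets, lengths, youngs_modulus,
                      tip_load, yield_stress, required_factor):
    """Return (load factor to first yield, pass/fail) for one as-built DTI."""
    areas = [a + d for a, d in zip(nominal_areas, area_offsets)]  # DTP nominal + DTI offset
    stresses = bar_stresses(lengths, areas, youngs_modulus, tip_load)
    # Linear elastic: scaling the load by this factor brings the worst element to yield.
    load_factor = yield_stress / max(abs(s) for s in stresses)
    return load_factor, load_factor >= required_factor


# Hypothetical units: mm, mm^2, N, MPa.
lengths = [100.0, 100.0, 100.0]
nominal = [50.0, 40.0, 50.0]
design = virtual_load_test(nominal, [0.0, 0.0, 0.0], lengths, 200_000.0, 10_000.0, 500.0, 1.9)
as_built = virtual_load_test(nominal, [0.2, -4.0, 0.1], lengths, 200_000.0, 10_000.0, 500.0, 1.9)
print(design, as_built)
```

Here the nominal design passes, but this particular as-built part, with an undersized middle section, falls short of the required factor.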

As an example, given the requisite computing capability, every automobile could be crash tested as a DTI. A by-product of DTC is that this would provide both protection and a defense in product liability claims. One of the ambush tactics used by plaintiff attorneys when a product manufacturer presents its product crash testing as a defense is to ask, “But did you crash test this particular product?” DTC would allow the product manufacturer to answer, “Yes.”

5. Digital Twin Certified and Additive Manufacturing (AM)

The certification of AM is the top challenge for the manufacturing industry. It is costing the industry billions of dollars because of AM complexity, the unknowns in AM processes, and the severe delays caused by not being able to adopt AM [18]. This is negating the major potential benefits of adopting AM for safety-related products and general production.

The assumption in DTC for traditional or subtractive manufacturing is that the material is not a factor, because it is homogeneous and undergoes minimal transformation in the manufacturing process. (Composites can be considered a form of additive manufacturing: while the material is homogeneous, the geometric shape is formed by layering and curing, so the issues composites raise are better addressed in this section than in the previous one.) In subtractive manufacturing, the material is made into its required shape by a variety of methods. It is removed by cutting or milling a larger piece of material, or it is formed into the required shape by stamping or extruding. Welding is one of the few processes where the material is not homogeneous, and as a result it is one of the most common failure points in subtractive manufacturing.

That is not the case for additive manufacturing. In additive manufacturing, the material undergoes a transformation as it is processed. The shape is created by depositing or printing layer by layer. In the case of metals, the material is transformed from powdered metal to a continuous form by creating liquid melt pools with high-powered lasers that merge material horizontally and vertically.

AM has both aleatory and epistemic uncertainty in its process inputs [19]. As a result, there are substantial efforts to address the inherent variability of AM at the front end of production. Particularly for metals, there are a host of qualification and certification initiatives that involve standards, rules, and regulations [20]. While these will help reduce uncertainty, the inherent variability of AM processes requires a production back-end methodology like DTC.

I first introduced the DTC concept for AM because current methods did not address the new issues that came with AM technology [21]. The three key elements of DTC are the same, except that the first element, the capture of data from the physical manufacture of the DTI, will need to be much more extensive and robust. In situ monitoring will need to capture and record data on the formation of the material on a voxel-by-voxel basis. The captured voxel data will then need to be assembled into a 3D image that can be virtually tested.
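A minimal sketch of assembling in situ readings into a voxel grid, assuming a hypothetical monitoring record that gives each voxel's layer, row, and column together with a single melt-pool signal (here a peak temperature), and a hypothetical process window that separates nominal from anomalous readings. Real in situ systems capture richer signals; the point is only that every voxel ends up as nominal, anomalous, or explicitly unobserved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeltPoolReading:
    """One in situ observation for a single voxel (hypothetical schema)."""
    layer: int          # z index: build layer
    row: int            # y index within the layer
    col: int            # x index within the layer
    peak_temp_c: float  # hypothetical monitored signal, deg C


def assemble_voxel_grid(readings, shape, process_window):
    """Build grid[z][y][x]: True = anomalous, False = nominal,
    None = voxel never observed by the monitoring system."""
    nz, ny, nx = shape
    low, high = process_window
    grid = [[[None] * nx for _ in range(ny)] for _ in range(nz)]
    for r in readings:
        if not (0 <= r.layer < nz and 0 <= r.row < ny and 0 <= r.col < nx):
            raise ValueError(f"reading outside build volume: {r}")
        # A reading outside the process window marks the voxel as anomalous
        grid[r.layer][r.row][r.col] = not (low <= r.peak_temp_c <= high)
    return grid


def summarize(grid):
    """Count anomalous, nominal, and unobserved voxels."""
    flags = [v for layer in grid for row in layer for v in row]
    return {
        "anomalous": sum(1 for v in flags if v is True),
        "nominal": sum(1 for v in flags if v is False),
        "unobserved": sum(1 for v in flags if v is None),
    }
```

Keeping unobserved voxels distinct from nominal ones matters: a gap in monitoring is itself a reason to doubt that the DTI faithfully represents the physical build.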

The structural simulation tests will also need to be more extensive. Structural tests of subtractive manufacturing assume that forces are transmitted uniformly through the material. However, that assumption will not apply to AM. There may be anomalies within the material due to inconsistent development of melt pools, and as a result forces may not be transferred uniformly. Virtual testing will need to be robust enough to determine when these anomalies will result in potential structural failures. Depending on the product, forces can be applied in different orientations to build a profile of where the structure is most at risk and how much force beyond the requirements will cause failure.
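The directional profile described above can be illustrated with a deliberately crude net-section model, used here only as a placeholder for FEA on the voxel DTI. It assumes cubic voxels, a boolean grid[z][y][x] in which True marks an anomalous voxel, and that a voxel carries load only if it is sound; for each load axis, capacity is the material strength times the sound area of the weakest slice perpendicular to that axis. It ignores stress concentrations and load redistribution, which real virtual testing would need to capture.

```python
def sound_counts_along(grid, axis):
    """Count non-anomalous voxels in each slice perpendicular to `axis`
    (0 = z/build direction, 1 = y, 2 = x) of a grid[z][y][x] of bools."""
    dims = (len(grid), len(grid[0]), len(grid[0][0]))
    counts = [0] * dims[axis]
    for z in range(dims[0]):
        for y in range(dims[1]):
            for x in range(dims[2]):
                if not grid[z][y][x]:
                    counts[(z, y, x)[axis]] += 1
    return counts


def directional_profile(grid, voxel_face_mm2, strength_mpa, required_load_n):
    """Ratio of estimated axial failure load to required load, per load axis.

    Net-section assumption: capacity = strength * sound area of the
    weakest slice. A value below 1.0 predicts failure under the requirement.
    """
    profile = {}
    for axis, name in enumerate(("z", "y", "x")):
        weakest_area = min(sound_counts_along(grid, axis)) * voxel_face_mm2
        profile[name] = strength_mpa * weakest_area / required_load_n
    return profile
```

For a small grid in which one layer is mostly lack-of-fusion voxels, the profile shows the part is far weaker when loaded along the build direction (z) than across it, which is the kind of orientation-dependent risk that uniform-material assumptions would miss.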

Again, each DTI can be virtually tested until structural failure occurs, which establishes its safety factor against such failures. Currently, the nonconformance of an AM build is determined by capturing in situ monitoring data and attempting to characterize the build by comparing it with other successful and unsuccessful builds [22]. Virtual testing of each specific DTI should be far more reliable than such correlations.

We will also be able to use data-based DTC over time. With a voxel-by-voxel DTI, we will be able to use AI/ML to assess which patterns of structural anomalies are benign and which result in future failures. Since this is unknown territory now, it would be useful to start collecting this data as soon as possible.
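Before AI/ML can learn which anomaly patterns are benign, each voxel DTI has to be reduced to features that describe its pattern of anomalies. One plausible step, shown here as a sketch rather than a prescribed method, is to group anomalous voxels into face-connected clusters with a breadth-first flood fill and record the cluster count and sizes. The feature names are hypothetical, and the grid is assumed to be a boolean grid[z][y][x] with True marking an anomalous voxel.

```python
from collections import deque

# 6-connectivity: voxels that share a face belong to the same cluster
NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def anomaly_clusters(grid):
    """Sizes of face-connected clusters of anomalous voxels, largest first."""
    dims = (len(grid), len(grid[0]), len(grid[0][0]))
    seen = set()
    sizes = []
    for z in range(dims[0]):
        for y in range(dims[1]):
            for x in range(dims[2]):
                if not grid[z][y][x] or (z, y, x) in seen:
                    continue
                # Breadth-first flood fill from this unvisited anomalous voxel
                seen.add((z, y, x))
                queue = deque([(z, y, x)])
                size = 0
                while queue:
                    cz, cy, cx = queue.popleft()
                    size += 1
                    for dz, dy, dx in NEIGHBORS:
                        n = (cz + dz, cy + dy, cx + dx)
                        if (all(0 <= n[i] < dims[i] for i in range(3))
                                and n not in seen and grid[n[0]][n[1]][n[2]]):
                            seen.add(n)
                            queue.append(n)
                sizes.append(size)
    return sorted(sizes, reverse=True)


def pattern_features(grid):
    """Hypothetical feature vector for a benign-vs-failure classifier."""
    sizes = anomaly_clusters(grid)
    return {
        "clusters": len(sizes),
        "largest": max(sizes, default=0),
        "total_anomalous": sum(sizes),
    }
```

Paired with field outcomes for each DTI, features like these are what a classifier would be trained on; distinguishing one large connected defect from several scattered small ones is exactly the benign-versus-failure question the text raises.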

This is a gap in the assessment of AM structures today. Concern about the ability to use AM in safety-rated products is a barrier to AM adoption. The attempted mitigation is to use NDT techniques such as CT scanning. However, CT scanning is only a visual inspection technique: it can reveal internal anomalies but does not show how they will affect structural performance. DTC capability could enable and accelerate the widespread adoption of AM.

Adding to this complexity, new and unique materials are being developed for AM. To improve a product’s fitness for purpose, one area of research is Integrated Computational Materials Engineering (ICME) [23]. The intent is to design custom materials that would then be additively manufactured. This would mean that the VDT would need to incorporate the ICME-specific characteristics into its testing.

6. Research Needed

In order to realize DTC, research and advancements are needed in a number of areas.

The virtual testing simulations will need to mirror the physical tests. We will need a high degree of confidence that, if we test the DTI structure to failure, a physical test would produce the same results within an acceptable margin of error. For AM, we will need to incorporate ICME capability. This will need to be done for all the different virtual tests that we intend to rely on. How such virtual tests can be qualified so that all parties have confidence in relying on their results is a research area of significant interest.
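One simple way to frame the "acceptable margin of error" criterion is sketched below, under the assumption that paired virtual and physical test-to-failure results are available for the same specimens. The function name and the single relative-error threshold are illustrative only; an actual qualification scheme would also need to address sample size, statistical confidence, and test conditions.

```python
def validate_virtual_tests(pairs, max_relative_error):
    """Compare virtual and physical test-to-failure results.

    pairs: (virtual_failure_load, physical_failure_load) for the same
    specimen. A positive mean bias means the simulation overpredicts
    strength, which is the non-conservative direction.
    """
    if not pairs:
        raise ValueError("need at least one paired test")
    rel = [(v - p) / p for v, p in pairs]
    worst = max(abs(e) for e in rel)
    return {
        "max_abs_error": worst,
        "mean_bias": sum(rel) / len(rel),
        "qualified": worst <= max_relative_error,
    }
```

Reporting the signed bias alongside the worst-case error matters because a virtual test that is accurate on average but systematically optimistic would pass parts that a physical test would fail.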

For AM, we will need in situ monitoring capabilities that will allow us to capture anomalies during the process and create DTIs that will be reliable in virtual testing.

Much work is needed to develop hybrid methods that fuse physics-based and data-based approaches.
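As one illustration of such a fusion, a common pattern is residual correction: a physics-based model makes the primary prediction, and a data-driven model learns the systematic error between that prediction and physical test results. The sketch below fits a one-feature linear correction by ordinary least squares; the choice of feature (for example, the anomalous-voxel fraction of a DTI) and all names are hypothetical, and practical hybrids would use richer models.

```python
def fit_residual_correction(features, physics_preds, measured):
    """Fit residual = intercept + slope * feature by ordinary least squares,
    where residual = measured failure load - physics-predicted failure load."""
    n = len(features)
    if n < 2 or not (n == len(physics_preds) == len(measured)):
        raise ValueError("need at least two aligned observations")
    residuals = [m - p for m, p in zip(measured, physics_preds)]
    mean_x = sum(features) / n
    mean_r = sum(residuals) / n
    sxx = sum((x - mean_x) ** 2 for x in features)
    if sxx == 0:
        raise ValueError("feature has no variation; slope is undefined")
    sxr = sum((x - mean_x) * (r - mean_r) for x, r in zip(features, residuals))
    slope = sxr / sxx
    return mean_r - slope * mean_x, slope


def hybrid_predict(physics_pred, feature, correction):
    """Physics prediction plus the learned data-driven correction."""
    intercept, slope = correction
    return physics_pred + intercept + slope * feature
```

The physics model keeps the prediction grounded where data is sparse, while the correction term absorbs effects, such as anomaly-driven strength loss, that the physics model does not represent.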

Advances in these areas will require substantial computing capabilities to be fully realized. Based on Moore’s Law, I have been estimating that a tremendous amount of new computing capability will be available in the not-too-distant future [24]. (I was recently an invited speaker at a researchers’ conference of a top semiconductor manufacturing company. As providers of the machines that make semiconductors, they are less optimistic about the continuation of Moore’s Law as it relates to transistor density. However, there is optimism that overall computing capability will still increase significantly.) These estimates do not include the availability of quantum computing. We should be planning our capabilities now to take advantage of those increases.

7. Conclusions

DTC does not by itself ensure that the product will deliver the perceived value that customers expect. As the PLQ Model illustrates, all of the manufacturer’s organizational functions need to populate and consume the appropriate data and information. Marketing needs to have accurately captured the customer’s requirements. The design and engineering function needs to translate those requirements into material, geometry, and behaviors in a virtual product that it rigorously tests. The product needs to be properly supported and repaired quickly and efficiently, ideally with failures predicted before they occur.

However, the critical phase in this lifecycle is manufacturing, which takes the virtual product from the creation phase and produces multiple physical instances that will never be exactly the same in the physical world. Virtually testing these unique products will give us a high level of confidence that they will perform to the user’s expectations. This will be of special importance as we move from traditional or subtractive manufacturing to additive manufacturing. In fact, it may be the only way to be assured that additive manufacturing is producing products that will deliver the value the customer perceives.