Abstract

In this paper, a new self-adaptive step size algorithm to approximate the solution of the split minimization problem and the fixed point problem of nonexpansive mappings was constructed, which combined the proximal algorithm and a modified Mann’s iterative method with the inertial extrapolation. The strong convergence theorem was provided in the framework of Hilbert spaces and then proven under some suitable conditions. Our result improved related results in the literature. Moreover, some numerical experiments were also provided to show our algorithm’s consistency, accuracy, and performance compared to the existing algorithms in the literature.

MSC:

46N10; 47H10; 47J26; 65K10

1. Introduction

Throughout this paper, we denote two nonempty closed convex subsets of two real Hilbert spaces and by C and Q, respectively. We denote the orthogonal projections onto a set C by and let be an adjoint operator of , where A is a bounded linear operator.

Over the past decade, inverse problems have been widely studied since they stand at the core of image reconstruction problems and signal processing. The split feasibility problem (SFP) is one of the most popular inverse problems that has attracted the attention of many researchers. Cencer and Elfving first considered the split feasibility problem (SFP) in 1994 [1]. The split feasibility problem (SFP) can mathematically be expressed as follows: find an element x with:

As mentioned above, the SFP (1) has received much attention from many researchers because it can be applied to various science branches. Several practical algorithms to solve the SFP (1) presented in recent years were given in [2,3,4,5,6,7]. It is important to note that the split feasibility problem (SFP) (1) is equivalent to the following minimization formulation:

In 2002, Byrne [2] introduced a practical method called the CQ algorithm for solving the SFP, which is defined as follows:

for all and is arbitrarily chosen. They considered the step size . The advantage of the CQ algorithm is that there is no need to compute the inverse of a matrix because it only deals with an orthogonal projection. However, the CQ algorithm still needs to compute an operator norm of A.

A self-adaptive step size was then introduced by Yang [8] to avoid computing an operator norm of A. Yang designed the step size as follows:

where is a positive sequence parameter that satisfies and . Moreover, there are two additional conditions for the self-adaptive step size: (1) Q must be a bounded subset; (2) A must be a full-column-rank matrix.

After that, López [9] modified a self-adaptive step size to remove the two additional conditions of Yang [8]. López then obtained a practical self-adaptive step size given by:

where is a positive sequence bounded below by zero and bounded above by four.

The split minimization problem is presented below. Let f and g be two proper semi-continuous and convex functions on and , respectively. Moudafi and Thakur [10] considered the interesting problem called the proximal split feasibility problem. This problem is defined to find a minimizer x such that:

where and is the following Moreau–Yoshida approximate:

It is fascinating to observe the case of . The minimization problem (6) can be reduced to the SFP (1) when we set and , where and are the indicator functions of the subsets C and Q, respectively. The reader can refer to [11] for details. By using the relations (7), we can then define the proximity operator of a function g of order as the following form:

Moreover, the subdifferential of function f at the point x is given by the following form:

Recall the following notations:

and

In the case of , Moudafi and Thakur [10] also considered a generalization for the minimization problem (6), named the split minimization problem (SMP), which can be expressed to find:

Besides considering the SMP (10), they also introduced an algorithm to solve the SMP (10). It is defined as follows:

where is arbitrarily chosen and is a self-adaptive step size. In addition, Moudafi and Thakur [10] proved a weak convergence result under some suitable conditions imposed on the parameters.

Recently, Abbas [12] constructed and introduced two iterative algorithms to solve the split minimization problem (SMP) (10). These algorithms are defined as follows:

and:

where is arbitrarily chosen, step size with , and functions and are defined in Section 3. Abbas [12] provided the sequences generated by the algorithms (12) and (13), which converge strongly to a solution.

Furthermore, currently, fixed point problems of a nonexpansive mapping are still extensively studied by many research works since they are at the core of several problems in the real world, such as signal processing and image recovery. One of the famous algorithms to solve the fixed point problem of a nonexpansive mapping is as follows:

where is a nonexpansive mapping and the initial point is chosen in C, . The algorithm (14) is known as Mann’s algorithm [13]. It is well known that a Mann-type algorithm gives strong convergence provided the underlying space is smooth enough. There are many works in this direction. The reader can refer to [14,15,16] for details.

Apart from studying all the above problems, speeding up the convergence rate of algorithms has been often studied by many authors. Polyak [17] introduced a helpful technique to accelerate the rate of convergence called the heavy ball method. After that, many researchers have modified the heavy ball method to use with their algorithms. Nesterov [18] modified the heavy ball method to improve the rate of convergence for the algorithms. This algorithm is known as the modified heavy ball method:

where are arbitrarily chosen, , is an extrapolation factor, and the term is called the inertia. For more details, the reader is directed to [19,20,21].

Based on the above ideas, the aims of this work were: (1) to construct a new self-adaptive step size algorithm combine with the proximal algorithm, the modified Mann method with the inertial extrapolation to solve the split minimization problem (SMP) (10), and the fixed point problems of a nonexpansive mapping; (2) to establish the strong convergence results for the SMP and fixed point problems using the proposed algorithm; (3) to give numerical examples for our algorithm to present its consistency, accuracy, and performance compared to the existing algorithms in the literature.

2. Preliminaries

Some notations used throughout this paper are presented in this section. For an element x in a Hilbert space, and are denoted by a strong convergence and a weak convergence, respectively.

Lemma 1.

For every u and v in a real Hilbert space H, then,

where .

Proposition 1.

Let be a mapping with , where u and v are elements in C. The mapping S is called:

- 1.

- monotone if:

- 2.

- ξ-inverse strongly monotone (ξ-ism) if:for some constants ;

- 3.

- nonexpansive if:

- 4.

- firmly nonexpansive if:

It is well known that the metric projection of onto C is a nonexpansive mapping where is a nonempty closed convex, and it satisfies for all Moreover, is characterized by the following properties:

and:

for all and . We denote the collection of proper convex lower semicontinuous functions on .

Definition 1.

Refs. [22,23]: Let and . Define the proximal operator of g by:

The proximal of g of order λ () is given by:

Below are some of the valuable properties of the proximal operators.

Property 1.

Refs. [24,25]: Let , , and Q be a nonempty closed convex subset of .

- 1.

- If where is an indicator function of Q, then the proximal operators , for all ;

- 2.

- is firmly nonexpansive;

- 3.

- , the resolvent of the subdifferential of g;

- 4.

- if and only if .

Let . In [26], it was shown that . Moreover, they showed that and are both firmly nonexpansive.

Lemma 2.

Ref. [27]: Any sequence in a Hilbert space satisfies Opial’s condition if implies the following inequality:

for every with .

Lemma 3.

Ref. [28]: Any sequence of nonnegative real number can be written in the following relation:

and the following three conditions hold:

- 1.

- 2.

- 3.

Then, .

Lemma 4.

Ref. [29]: Let a sequence be nondecreasing at infinity in the sense that there is a subsequence such that for all . For an integer , define the integer sequence by:

Then, a sequence does not decrease and verifies . Furthermore,

for all

3. Results

This section proposes an iterative algorithm generating a sequence that strongly converges to a solution of split minimization problems (10) and fixed point problems of a nonexpansive mapping. We established the convergence theorem of the proposed algorithm under the statements as follows:

Let be a nonexpansive mapping. Denote the set of all solutions of a split minimization (10) by and the set of all fixed points of the mapping S by . Let , and suppose that:

Then, we obtained the gradients of the functions h and l as follows:

Lemma 5.

Let and be two functions that are defined as (21). Then, the gradients and are Lipschitz continuous.

Proof.

By the notation , we find that:

where . On the other hand,

Therefore, we obtained that is -inverse strongly monotone. Moreover:

Similarly, one can prove that is also Lipschitz continuous. This completes the proof. □

A valuable assumption for analyzing our main theorem is given as follows.

Assumption 1.

Suppose that , are positive sequences and , are sequences in interval that satisfy the following assumptions:

- (A1)

- ;

- (A2)

- and ;

- (A3)

- with ;

- (A4)

- and as .

Theorem 1.

Let and be two real Hilbert spaces and S be a nonexpansive mapping on . Assume that A is a bounded linear operator from to with its adjoint operator , and and are proper lower semicontinuous and convex functions. Assume that SMP (10) is consistent (that is, ), and let and v be in . Then, the sequence in Algorithm 1 strongly converges to , where

| Algorithm 1 A split minimization algorithm. |

Initialization: Let and be arbitrarily chosen. Choose some positive sequences , and satisfying Assumption 1. Set . Iterative step: Given the current iteration , calculate the next iterations as follows: Stopping criterion: If , stop. Otherwise, put , and go to Iterative step. |

Proof.

Assume that . By using the firm non-expansiveness of (see [30,31] for details), we find that:

and:

This implies:

Next, we set . For fixed we obtain that:

and

Since S is nonexpansive, we find that:

Thus, is bounded, and this implies that , and are also bounded.

Next, we observe that:

Next, we claim that and . Consider:

and:

Moreover, consider:

Therefore, we obtain:

We next show the sequence by dividing into two possible cases.

Case 1. Assume that is the non-increasing sequence. There exists such that for each . Then, the sequence converges, and so:

We then obtain by using and being bounded that:

and:

Thus, we obtain by using (33) that:

Moreover, it easy to see that:

We next observe that:

By using the fact that , we find that:

Moreover, we observe that:

Next, we observe that:

Thus, we obtain immediately that:

We next show that , where To prove this, we can choose a subsequence of with:

Since is a bounded sequence, we can take a weakly convergent subsequence of that converges to , that is . By using the fact that we find that .

We next show in two steps. First, we show that w is a fixed point of S. By contradiction, we assume that . Since and by Opial’s conditions, we conclude that:

This is a contradiction. This implies Second, we show Since w is a weak limit point of , there is a such that . Since h is lower semicontinuous, we find that:

This implies:

Then, , and so, . This means that is a minimizer of the operator g.

Similarly, since l is lower semicontinuous, we find that:

This implies:

Thus, w is in a fixed point set of the proximal operator , that is . This means that w is a minimizer of the operator f. This implies . Therefore, we can conclude that

According to the properties of matric projections, since and , then Consider,

In the final step, we show that the sequence as . We observe that:

and:

This implies:

Thus, we obtain by using Lemma 3, Assumption (A4), Inequality (50), and the boundedness of that

Case 2. Assume that is increasing. By applying Assumptions (A1), (A2), and (A4) to (36), we find that . Thus, we obtain by using (33) that .

Suppose that , and for each (where large enough), define a mapping as follows:

Thus, when n tends to infinity, and for each ,

We then obtain by using Inequality (36) that:

Since as and also when n tends to infinity, we observe that:

and:

Moreover, we obtain that:

This implies that:

Thus, we obtain:

We now obtain by using Lemma 4 that:

as . This implies that and The proof is complete. □

Remark 1.

- (a)

- If we put , and for all in our proposed algorithm, we found that the algorithm (11) of Moudafi and Thakur was obtained. Moreover, we obtained a strong convergence theorem, while Moudafi and Thakur [10] only obtained a weak convergence theorem;

- (b)

- If we put , and for all in our proposed algorithm, we found that Algorithm (1.2) in [32] was obtained;

- (c)

- If we put , , and for all in our proposed algorithm, we found that the Mann iteration algorithm in [13] was obtained. Moreover, we obtained a strong convergence theorem, while Mann [13] only obtained a weak convergence theorem;

- (d)

- As an extraordinary choice, an extrapolation factor in our proposed algorithm can be chosen as follows: ,for each integer n greater than or equal to three and a positive sequence with , as . This choice was recently derived in [33,34] as an inertial extrapolated step.

4. Applications and Numerical Results

This section provides the numerical experiments to illustrate the performance and compare Algorithm 1 with and without the inertial term. Moreover, we present an experiment to compare our scheme with the Abbas algorithms [12]. All code was written in MATLAB 2017b and run on a MacBook Pro 2012 with a 2.5 GHz Intel Core i5.

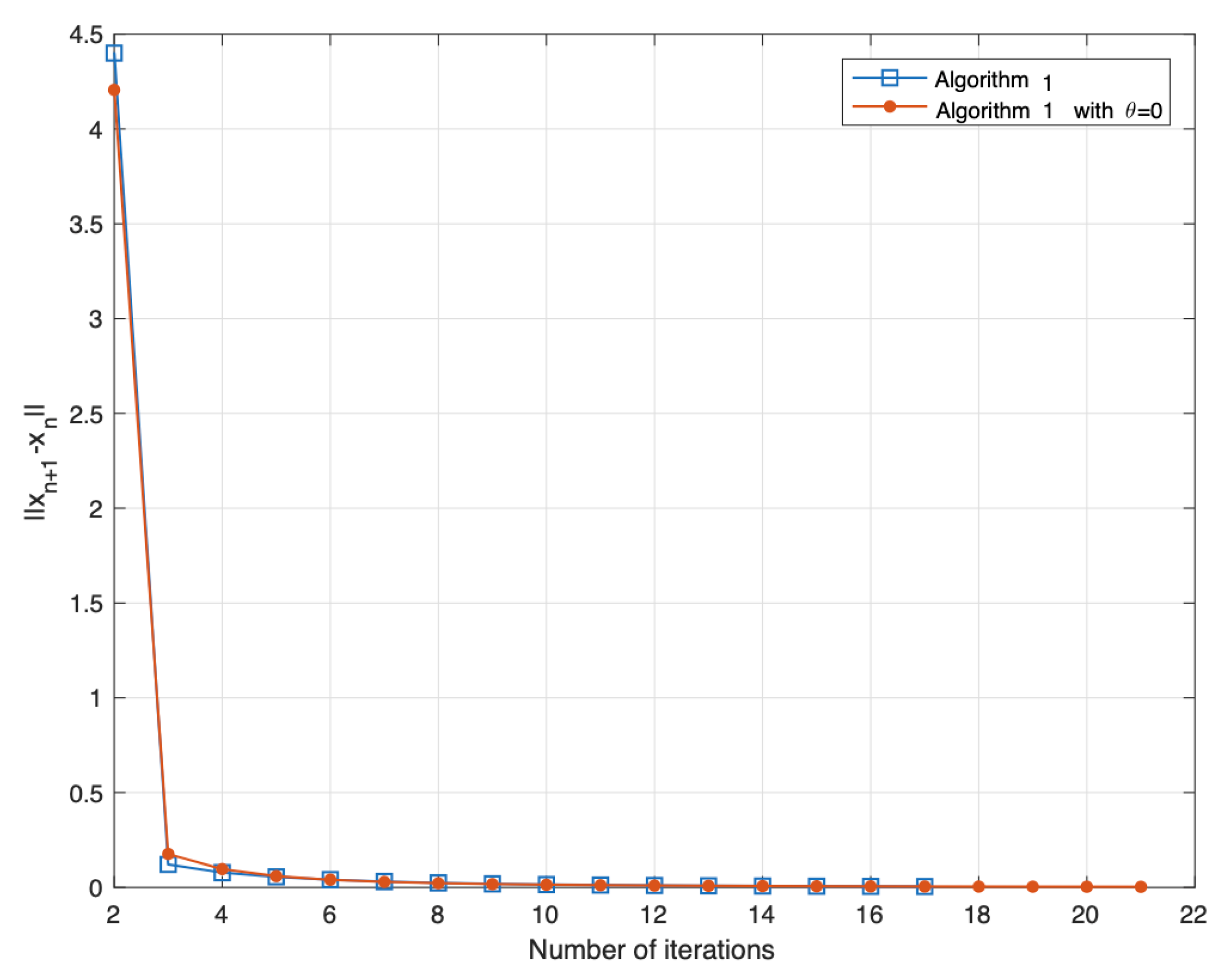

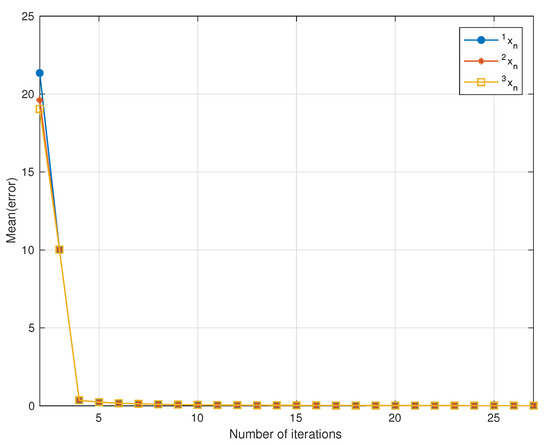

First, we illustrate the performance of our proposed algorithm by comparing the proposed algorithm with and without the inertial term as the following experiment:

Example 1.

Suppose , and let . In problem (10), assume that and , where δ is the indicator function. Then:

Thus, the problem (10) becomes the SEP (1). We next took the parameters and . Thus, by Algorithm 1, we obtained that:

We then provide a comparison of the convergence of Algorithm 1 with:

and Algorithm 1 with in terms of the number of iterations with the stopping criterion . The result of this experiment is reported in Figure 1.

Figure 1.

Comparing Algorithm 1 with Algorithm 1 defined without the inertial term.

Remark 2.

By observing the result of Example 1, we found that our proposed algorithm with inertia was faster and more efficient than our proposed algorithm without inertia ().

Second, we used the example in Abbas [12] to show the performance of our algorithm by comparing our proposed algorithm with Algorithms (12) and (13) in terms of CPU time as the following experiment:

Example 2.

Let and be the Euclidean norm in . The metric projection onto the Euclidean unit ball B is defined by the following:

Thus, the proximal operator (the block soft thresholding) [24] is given by:

For , let ,

and:

Then (see [35]),

and:

for all . Assume that , and let us consider the split minimization problem (SMP) (10) as follows:

It is easy to check that is in the set of solutions of Problem (64). We now took and:

for all . We next took , then we obtained by Algorithm 1 that:

We now provide a comparison of the convergence of the iterative schemes (12) and (13) in Abbas’s work [12] with our proposed algorithm with in terms of CPU time, where initial points , were randomly generated vectors in . We tested this experiment with different choices of N as follows: .

We used as the stopping criterion. The result of this experiment is reported in Table 1.

Table 1.

Computation results for Example 2.

Remark 3.

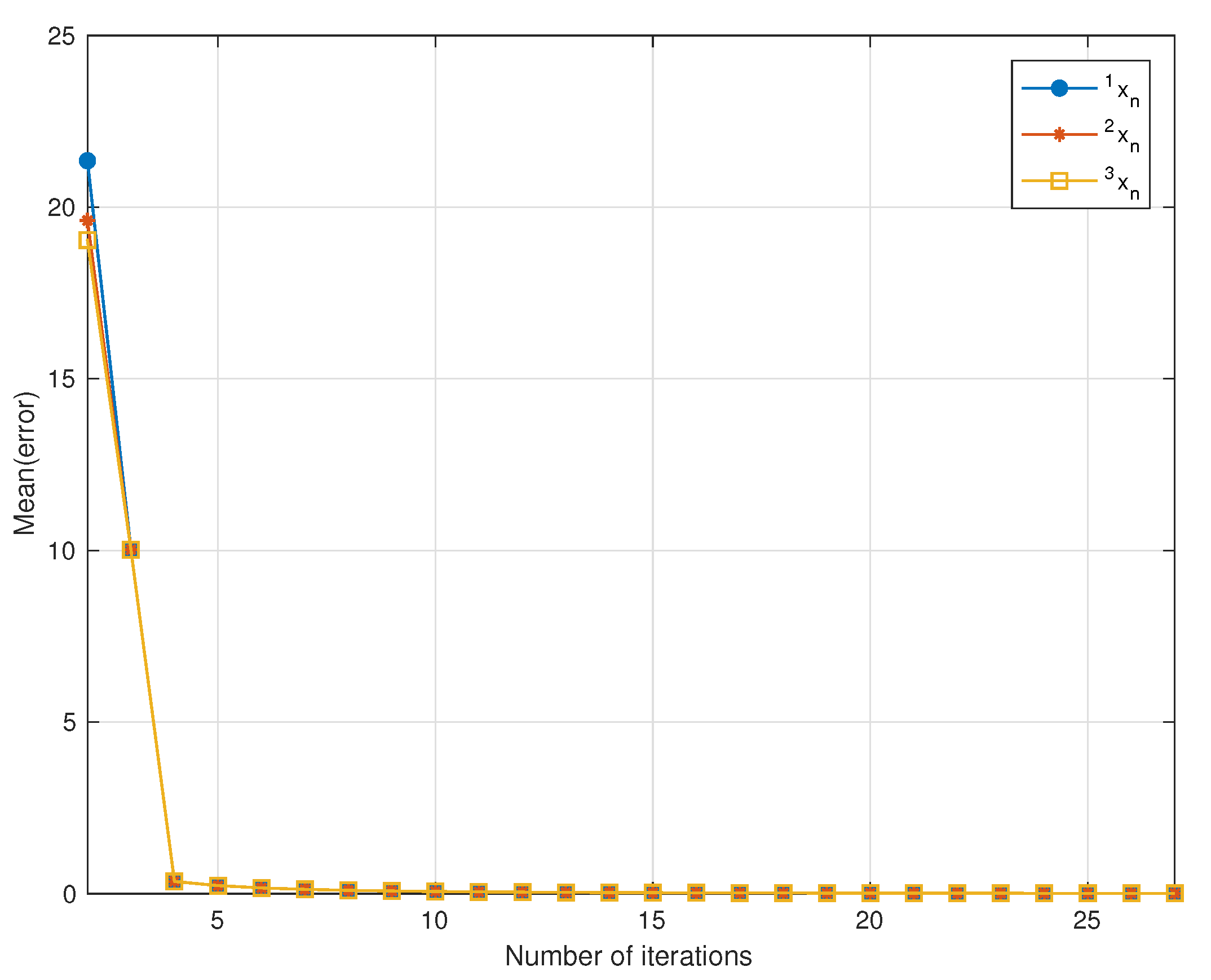

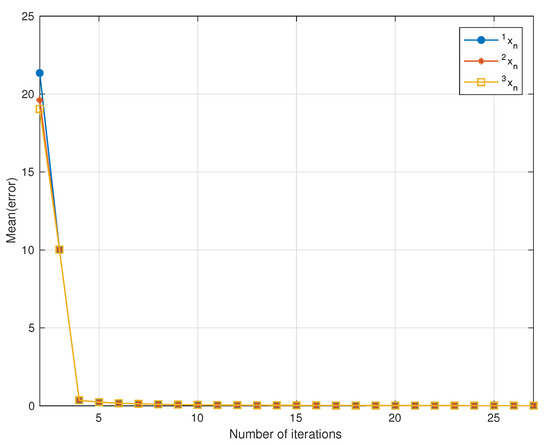

Finally, we show the average error of our algorithm as the following experiment:

Example 3.

Let , and be defined as in example (2). In this experiment, we took , where . Let be a sequence generated by Algorithm 1 and the parameters , and The mean-error is given by:

We used as the stopping criterion of this experiment. We then observed that the sequence generated by Algorithm 1 converged to a solution if converged to zero. Figure 2 shows the average error of our method in three groups of 20 initial points.

Figure 2.

Computation results for Example 3.

Remark 4.

By observing the result of Example 3, we found that the choice of the initial value did not affect the ability of our algorithm to achieve the solutions.

5. Conclusions

This paper discussed split minimization problems and fixed point problems of a nonexpansive mapping in the framework of Hilbert spaces. We introduced a new iterative scheme that combined the proximal algorithm and the modified Mann method with an inertial extrapolation and a self-adaptive step size. For the proposed algorithm, the main advantage was that there was no need to compute the operator norm of A. Moreover, we illustrated the performance of our proposed algorithm by comparing with other existing methods in terms of CPU time. The obtained results were improved and extended various existing results in existing pieces of literature.

Author Contributions

The authors contributed equally in writing this article. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by King Mongkuts University of Technology North Bangkok, Contract No. KMUTNB-PHD-62-03.

Acknowledgments

The authors would like to thank the Department of Mathematics, Faculty of Applied Science, King Mongkuts University of Technology North Bangkok.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239. [Google Scholar] [CrossRef]

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441. [Google Scholar] [CrossRef]

- Yao, Y.; Liou, Y.C.; Postolache, M. Self-adaptive algorithms for the split problem of the demicontractive operators. Optimization 2018, 67, 1309–1319. [Google Scholar] [CrossRef]

- Wang, F. Polyak’s gradient method for split feasibility problem constrained by level sets. Numer. Algorithms 2018, 77, 925–938. [Google Scholar] [CrossRef]

- Dong, Q.; Tang, Y.; Cho, Y.; Rassias, T.M. “Optimal” choice of the step length of the projection and contraction methods for solving the split feasibility problem. J. Glob. Optim. 2018, 71, 341–360. [Google Scholar] [CrossRef]

- Dadashi, V.; Postolache, M. Forward–backward splitting algorithm for fixed point problems and zeros of the sum of monotone operators. Arab. J. Math. 2019, 9, 89–99. [Google Scholar] [CrossRef]

- Paunovic, L. Teorija Apstraktnih Metričkih Prostora–Neki Novi Rezultati; University of Priština: Leposavić, Serbia, 2017. [Google Scholar]

- Yang, Q. On variable-step relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 2005, 302, 166–179. [Google Scholar] [CrossRef]

- López, G.; Martín Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004. [Google Scholar] [CrossRef]

- Moudafi, A.; Thakur, B. Solving proximal split feasibility problems without prior knowledge of operator norms. Optim. Lett. 2014, 8, 2099–2110. [Google Scholar] [CrossRef]

- Moudafi, A.; Xu, H.K. A DC Regularization of Split Minimization Problems. Appl. Anal. Optim. 2018, 2, 285–297. [Google Scholar]

- Abbas, M.; AlShahrani, M.; Ansari, Q.; Iyiola, O.S.; Shehu, Y. Iterative methods for solving proximal split minimization problems. Numer. Algorithms 2018, 78, 193–215. [Google Scholar] [CrossRef]

- Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510. [Google Scholar] [CrossRef]

- Xu, H.K. A variable Krasnosel’skii–Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 2006, 22, 2021. [Google Scholar] [CrossRef]

- Podilchuk, C.I.; Mammone, R.J. Image recovery by convex projections using a least-squares constraint. JOSA A 1990, 7, 517–521. [Google Scholar] [CrossRef]

- Youla, D. Mathematical theory of image restoration by the method of convex projections. In Image Recovery: Theory and Application; Academic Press: Orlando, FL, USA, 1987; pp. 29–77. [Google Scholar]

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17. [Google Scholar] [CrossRef]

- Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer Science & Business Media: Berlin, Germany, 2003; Volume 87. [Google Scholar]

- Dang, Y.; Sun, J.; Xu, H. Inertial accelerated algorithms for solving a split feasibility problem. J. Ind. Manag. Optim. 2017, 13, 1383–1394. [Google Scholar] [CrossRef]

- Suantai, S.; Pholasa, N.; Cholamjiak, P. The modified inertial relaxed CQ algorithm for solving the split feasibility problems. J. Ind. Manag. Optim 2018, 14, 1595–1615. [Google Scholar] [CrossRef]

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11. [Google Scholar] [CrossRef]

- Moreau, J.J. Propriétés des applications “prox”. Comptes Rendus Hebd. SéAnces L’AcadéMie Sci. 1963, 256, 1069–1071. [Google Scholar]

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 1965, 93, 273–299. [Google Scholar] [CrossRef]

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200. [Google Scholar] [CrossRef]

- Micchelli, C.A.; Shen, L.; Xu, Y. Proximity algorithms for image models: Denoising. Inverse Probl. 2011, 27, 045009. [Google Scholar] [CrossRef]

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator THEORY in Hilbert Spaces; Springer: Berlin, Germany, 2011; Volume 408. [Google Scholar]

- Opial, Z. Weak convergence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 531–537. [Google Scholar] [CrossRef]

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256. [Google Scholar] [CrossRef]

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912. [Google Scholar] [CrossRef]

- Chaux, C.; Combettes, P.L.; Pesquet, J.C.; Wajs, V.R. A variational formulation for frame-based inverse problems. Inverse Probl. 2007, 23, 1495. [Google Scholar] [CrossRef]

- Rockafellar, R.T.; Wets, R.J.B. Variational Analysis; Springer Science & Business Media: Berlin, Germany, 2009; Volume 317. [Google Scholar]

- Maingé, P.E. Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 2008, 219, 223–236. [Google Scholar] [CrossRef]

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202. [Google Scholar] [CrossRef]

- Attouch, H.; Peypouquet, J. The rate of convergence of Nesterov’s accelerated forward-backward method is actually faster than 1/k2. SIAM J. Optim. 2016, 26, 1824–1834. [Google Scholar] [CrossRef]

- Combettes, P.L.; Pesquet, J.C. Proximal splitting methods in signal processing. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: Berlin, Germany, 2011; pp. 185–212. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).