Abstract

Structured knowledge representation is of great significance for constructing a knowledge graph of coal mine equipment safety. However, traditional methods encounter substantial difficulties when handling the complex semantics and domain-specific terms in technical texts. To tackle this challenge, we propose a knowledge extraction framework that integrates large language models (LLMs) with prompt engineering to achieve the efficient joint extraction of information. This framework strengthens the traditional triple structure by introducing symmetric entity-type information encompassing the head entity type and the tail entity type. Furthermore, it enables simultaneous entity recognition and relation extraction within a unified model. Experimental results demonstrate that the proposed knowledge extraction framework significantly outperforms the traditional step-by-step approach of first extracting entities and then relations. To meet the requirements of actual industrial production, we verified the impacts of different prompt strategies, as well as small lightweight models and large complex models, on the extraction task. Through multiple sets of comparative experiments, we found that the Chain-of-Thought (CoT) prompting strategy can effectively improve performance across different models, and the choice of model architecture has a significant impact on task performance. Our research provides an accurate and scalable solution for knowledge graph construction in the coal mine equipment safety domain, and its symmetry-aware design exhibits great potential for cross-domain knowledge transfer.

1. Introduction

Coal mine safety is a critical prerequisite for the stable and sustainable development of the energy industry [1,2]. In actual production scenarios, equipment failures [3] and safety incidents [4] not only undermine operational efficiency but also pose serious threats to miners’ lives. With the continuous advancement of intelligent mine construction, coal enterprises have accumulated vast volumes of unstructured textual data, including equipment operation manuals, accident investigation reports, and safety regulations [5]. These documents encapsulate rich, domain-specific knowledge; however, their unstructured nature poses significant challenges to traditional information extraction methods, which often struggle to identify and effectively utilize key information.

At the core of constructing a coal mine equipment safety knowledge graph lies the accurate extraction of entities and relations. Existing deep learning-based methods for entity and relation extraction face considerable limitations in this domain. Current approaches require large-scale annotated corpora, yet generating high-quality labeled data in the field of coal mine safety is both resource-intensive and reliant on the involvement of domain experts [6]. These shortcomings substantially impair both the construction quality and the downstream applicability of knowledge graphs in safety-critical settings.

In recent years, LLMs have achieved remarkable progress in the field of natural language processing, offering a novel and effective approach to information extraction tasks. Empirical studies have demonstrated that owing to their powerful semantic representation capabilities and few-shot learning abilities, LLMs can significantly enhance the accuracy of entity recognition and relation extraction even with limited amounts of high-quality annotated data, while simultaneously ensuring high processing efficiency [7,8,9]. As a result, LLMs have been increasingly applied across a wide range of domains for information extraction purposes [10,11,12,13,14].

Notably, the integration of domain-adaptive prompt engineering [15,16,17] further amplifies the performance of LLMs in low-resource environments. By tailoring prompts to specific disciplinary contexts, these models exhibit a strong capacity to accurately identify domain-specific entities and their semantic associations, even in complex and unstructured textual corpora. This dual advantage—deep semantic comprehension combined with minimal reliance on large-scale labeled data—endows LLMs with distinct technical value and broad application potential for knowledge extraction in specialized domains such as coal mine equipment safety.

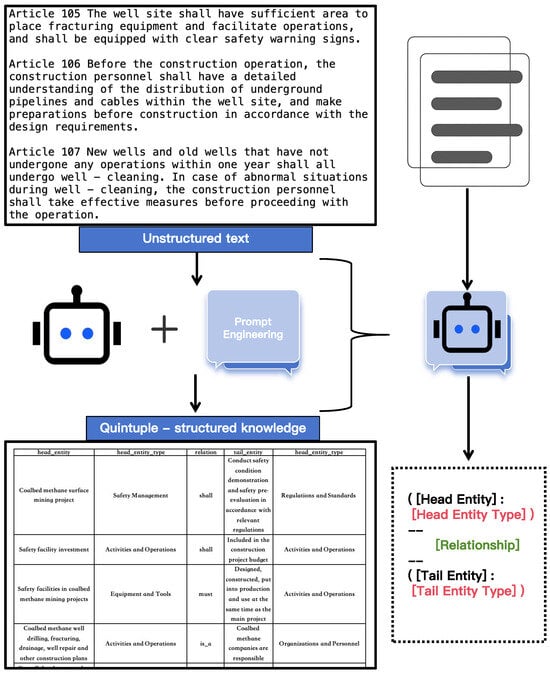

Figure 1 shows the overall structure of the LLM-based quintuple knowledge extraction framework. This paper makes three main contributions:

1. In the absence of publicly available datasets for the coal mine equipment safety domain, this study constructs a dedicated corpus by collecting 194 safety-related documents. Through OCR processing, regular-expression-based segmentation, and expert-guided manual annotation (with dual-layer verification by three safety engineers and achieving 0.85 inter-annotator agreement) using the Doccano platform, a high-quality dataset tailored to the needs of domain-specific knowledge extraction is established.

2. Aiming at the problems in knowledge extraction for the coal mine equipment safety field, such as the unequal semantic status of head and tail entities in the traditional triple structure (head entity–relationship–tail entity) and the tendency of relation extraction to favor one-sided semantics, we propose a coal mine equipment safety knowledge extraction framework based on symmetric constraints. The core of this framework lies in the introduction of a quintuple-structured representation (head entity–head entity type–relationship–tail entity–tail entity type). By imposing a symmetry constraint on the head entity type and the tail entity type in prompt engineering, a mirror-image structure between the head and tail entities in the type dimension is constructed. This symmetric design effectively balances the semantic status between entities, guiding the model to more accurately capture two-way semantic associations. As a result, the joint completion of entity recognition and relation extraction can be efficiently achieved within a unified model.

3. In this paper, three sets of experiments were conducted. The performance of the proposed extraction framework was compared with that of the traditional step-by-step method of first extracting entities and then relations on Ernie-4, ChatGLM4-9B, Qwen-plus, and DeepSeek-R1. Moreover, on DeepSeek-R1, the large language model with the best performance, the impacts of three prompt strategies, namely zero-shot, few-shot, and CoT, on knowledge extraction were verified. Additionally, the effects of model scales were examined using models of varying sizes including DeepSeek-R1-distill-qwen-14b/32b, Qwen2.5-14B/32B, Qwen-plus, and DeepSeek-R1. We demonstrated the crucial role of extraction paradigm selection in acquiring domain-specific knowledge. Our work provides empirical evidence for the boundary of model capabilities in different extraction scenarios and offers a practical guide for implementing coal mine equipment safety knowledge extraction with minimal supervision and configuration.

Figure 1. Quintuple-structured knowledge extraction framework for coal mine equipment safety texts. This figure illustrates how to use large language models in combination with prompt engineering to automatically transform unstructured coal mine equipment safety text information into symmetric quintuple-structured knowledge of (head entity, head entity type, relationship, tail entity, tail entity type).

2. Related Research

Entity–relationship extraction is a fundamental task in natural language processing [18,19] that transforms unstructured text into structured knowledge, serving as a critical component in the construction of knowledge graphs. Specifically, it involves identifying pairs of entities with specific semantic associations and classifying the relationship types between them, resulting in structured triples in the form of head entity–relation–tail entity.

Existing approaches to entity–relationship extraction can be broadly categorized into three groups: rule-based methods, traditional machine learning methods, and deep learning-based methods. Rule-based methods rely on handcrafted patterns and templates developed by domain experts. However, due to their heavy dependence on domain-specific knowledge, linguistic heuristics, and fixed text structures, such methods suffer from poor generalizability and have become less favored in both research and real-world applications [20,21].

Entity and relation extraction in traditional approaches is mainly accomplished through rule-based heuristics or statistical machine learning methods [22,23,24,25,26]. While these models achieved early success, they struggle when applied to domain-specific texts such as technical manuals or accident reports in the coal mine safety domain. Their limitations in handling complex syntactic structures, specialized terminology, and long-range dependencies often lead to subpar performance in practical scenarios.

In recent years, deep learning-based methods have emerged as the dominant paradigm for entity–relationship extraction [27,28]. Various architectures and enhancements have been proposed. For instance, Cai et al. [29] adopted a BiLSTM-CRF model with Word2Vec embeddings to improve semantic representation in annotated knowledge graphs for fully mechanized mining equipment. Building on the character-level BiLSTM-CRF framework, Liu et al. [30] introduced the Lattice-LSTM model, which integrates both character and lexicon information to capture richer contextual semantics. Notably, the advent of pre-trained language models, especially BERT-based architectures, has brought significant improvements to entity recognition tasks in coal mining equipment by capturing deeper contextual representations [31].

Despite their effectiveness, deep learning models typically require large volumes of high-quality annotated data [32]. In the domain of coal equipment safety, the scarcity of such resources poses a major obstacle. Annotating data in this field is not only labor-intensive and time-consuming but also demands substantial input from domain experts, thereby limiting the scalability and practical deployment of these models. To this end, this paper proposes a domain-adaptive knowledge extraction framework based on LLMs. By designing a five-tuple knowledge representation structure and integrating it with dynamic prompt engineering techniques, the proposed framework enables high-precision entity–relationship extraction in the domain of coal mine equipment safety, even under limited annotated data conditions.

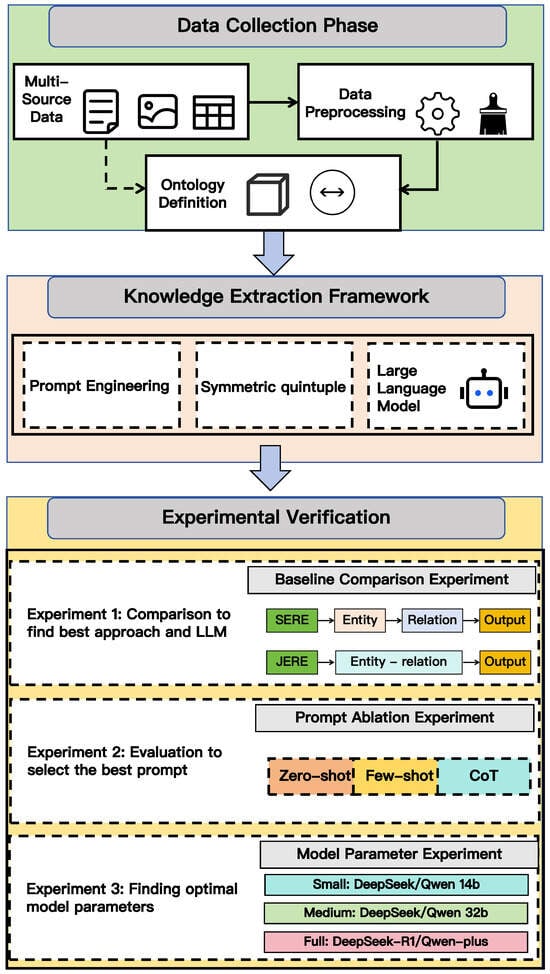

The organizational structure of this paper is as follows: Section 3 introduces in detail the research materials and methods (Materials and Methods). The Materials part contains three core contents: (1) Data Acquisition and Pre-Processing: It elaborates on the acquisition of raw data from the National Mine Safety Administration and the cleaning process. (2) Ontology Definition: This section formally defines an ontology comprising 6 entity types and 13 relation types. (3) Data Annotation: It explains the annotation process based on Doccano and the quality control measures. The Methods part encompasses the following: (1) Models: The large language model architectures selected for the comparative experiments. (2) Symmetric Joint Entity–Relation Extraction: The proposed joint extraction framework. (3) Prompt Strategy: The designed zero-shot/few-shot/CoT prompt engineering schemes. Section 4 validates the effectiveness of the methods through three sets of systematic experiments: (1) Baseline Comparison Experiment: A comparison with the pipeline-based method of first performing entity recognition and then relation extraction. (2) Prompt Ablation Experiment: An analysis of the impacts of different prompt strategies. (3) Model Parameter Experiment: Testing the extraction capabilities of models with different parameter sizes on coal mine equipment safety texts. Finally, the research achievements are summarized and the possible future expansions are discussed. The overall framework of the article is shown in Figure 2.

Figure 2. Overall flowchart.

3. Materials and Methods

3.1. Dataset Construction

3.1.1. Data Acquisition and Pre-Processing

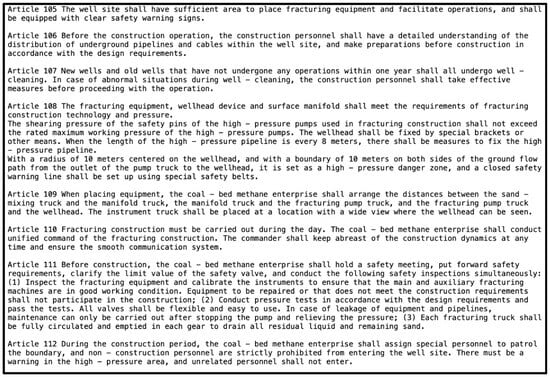

Given the scarcity of publicly available datasets in the field of coal mine equipment safety, this study constructs a dedicated corpus of coal mine safety regulations through systematic data acquisition and pre-processing. The source data were obtained primarily from the official website of China’s National Mine Safety Administration, which ensures their authority and reliability. Using Python-based web scraping (Requests + BeautifulSoup), a total of 194 safety-related documents were collected. The source data contain abundant knowledge about coal mine safety production, which makes them valuable for information extraction. As depicted in Figure 3, the text presents information across several key dimensions: safety norms and standards (such as stipulating that the shearing pressure of the safety pin of a high-pressure pump shall not exceed the rated maximum working pressure), equipment and space management (specifying in detail the relative distances among the sand-mixing truck, manifold truck, and fracturing pump truck), operation procedures and emergency measures (mandating that the distribution of underground pipelines and cables must be grasped prior to construction), and communication, coordination, and emergency response (requiring unified command and unobstructed communication).

All this information is embedded in unstructured text, so the texts must be systematically cleaned and structured. First, irrelevant symbols and redundant white-space characters are removed with regular expressions, and the expressions of numbers and units are standardized. In view of the structural features of legal provisions, we use “Article x” as the demarcation point for segmentation to ensure the integrity of each semantic unit. Meanwhile, potential format-disorder problems in the original documents are addressed.

Figure 3. Content of coal mine equipment safety data.
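The cleaning and segmentation steps described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the study: the function names, the unit list, and the English "Article N" pattern are assumptions made for the example (the source documents presumably use the equivalent marker in their original language).

```python
import re

def clean_text(raw: str) -> str:
    """Remove stray symbols and collapse redundant whitespace."""
    text = re.sub(r"[\u200b\ufeff]", "", raw)   # zero-width space and BOM
    text = re.sub(r"\s+", " ", text)            # collapse runs of whitespace
    # Standardize number-unit spacing, e.g. "25MPa" -> "25 MPa".
    # The unit list is illustrative only (assumption).
    text = re.sub(r"(\d)\s*(MPa|kPa|mm|m|kV|V)\b", r"\1 \2", text)
    return text.strip()

# Zero-width lookahead: split *before* every "Article N" marker (assumed pattern).
ARTICLE = re.compile(r"(?=Article\s+\d+\b)")

def split_articles(text: str) -> list[str]:
    """Split on each 'Article N' marker so that every provision stays whole."""
    return [seg.strip() for seg in ARTICLE.split(text) if seg.strip()]

raw = ("Article 1  The pump pressure shall not exceed 25MPa.\n\n"
       "Article 2 Keep   communication unobstructed.")
segments = split_articles(clean_text(raw))
```

Splitting on a lookahead keeps the "Article N" marker attached to the provision it introduces, so each segment remains a self-contained semantic unit.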

3.1.2. Ontology Definition

In this study, through a systematic analysis of coal mine operation documents and safety regulations, a specialized ontology for the coal mine equipment safety domain was developed. This ontology framework encompasses six types of entities and thirteen types of relationships, comprehensively covering all dimensions of coal mine safety management.

In terms of data screening, we implemented strict quality control regarding text length and entity density. Text segments that are too short (character count < 15) and contain fewer than 2 entities were merged with adjacent sentences to form complete semantic units (a context-merging strategy). Complex texts that are too long (character count > 200) and contain more than 5 entities were segmented at punctuation marks and semantic boundaries.
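The screening rule can be expressed compactly. The sketch below only decides which action applies to a segment; the `Segment` type, the pre-computed entity count, and the action labels are hypothetical, and the merging and splitting themselves are not shown.

```python
from dataclasses import dataclass

# Thresholds stated in the text.
MIN_CHARS, MAX_CHARS = 15, 200
MIN_ENTITIES, MAX_ENTITIES = 2, 5

@dataclass
class Segment:
    text: str
    n_entities: int  # hypothetical pre-computed entity count

def screening_action(seg: Segment) -> str:
    """Return 'merge', 'split', or 'keep' for one text segment."""
    if len(seg.text) < MIN_CHARS and seg.n_entities < MIN_ENTITIES:
        return "merge"   # too short and too sparse: combine with neighbours
    if len(seg.text) > MAX_CHARS and seg.n_entities > MAX_ENTITIES:
        return "split"   # too long and too dense: cut at semantic boundaries
    return "keep"
```

Both conditions must hold for an action to fire, which mirrors the conjunction ("and") used in the description: a short segment that already contains two or more entities is kept as it is.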

Regarding entity classification, we defined six major types (as shown in Table 1): Organizations and Personnel, Activities and Operations, Equipment and Tools, Safety Management, Environment and Conditions, and Regulations and Standards. A pre-annotation filtering mechanism was used to automatically eliminate redundant texts (such as pure numerical numbering, repeated headings, etc.).

Table 1. Ontology entity types for coal mine equipment safety.

In terms of relationship definition, based on the semantic features of safety regulation texts, we designed thirteen types of relationships (as shown in Table 2), including shall, shall_comply, prohibited, strictly_prohibited, must, requires, contains, performs, allowed, without_approval, is_a, belongs_to, and configures. The annotation quality was ensured through a relationship-density detection algorithm (with a threshold set at 3-5 relationships per sentence).

Table 2. Relationship types in coal mine safety ontology.
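For illustration, the ontology and the relation-density check can be written down as plain Python constants and a small predicate. The type names are taken from the text; the function names and the choice to flag out-of-range sentences for manual review are assumptions, since the text does not say how such sentences are handled.

```python
# Six entity types and thirteen relation types listed in the text.
ENTITY_TYPES = frozenset({
    "Organizations and Personnel", "Activities and Operations",
    "Equipment and Tools", "Safety Management",
    "Environment and Conditions", "Regulations and Standards",
})
RELATION_TYPES = frozenset({
    "shall", "shall_comply", "prohibited", "strictly_prohibited", "must",
    "requires", "contains", "performs", "allowed", "without_approval",
    "is_a", "belongs_to", "configures",
})

def relation_density_ok(n_relations: int, low: int = 3, high: int = 5) -> bool:
    """True if a sentence carries between `low` and `high` relations inclusive."""
    return low <= n_relations <= high

def flag_for_review(relations_per_sentence: dict[str, int]) -> list[str]:
    """Return sentence ids whose relation count is outside the 3-5 window
    (hypothetical handling: flag for manual review)."""
    return [sid for sid, n in relations_per_sentence.items()
            if not relation_density_ok(n)]
```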

3.1.3. Data Annotation

To validate the effectiveness of the proposed knowledge extraction method, we manually annotated a coal mine safety corpus using the open-source platform Doccano, which was deployed locally. A team of 3 safety engineers and 2 domain experts completed the annotation process within 2 weeks, averaging 45 min per document. Doccano supports annotation for text classification, sequence labeling, and sequence-to-sequence tasks, making it well-suited for our extraction requirements. Following a rigorously defined ontology (6 entity types and 13 relation types), the annotation workflow included (1) independent dual-layer annotation achieving 0.85 inter-annotator agreement (F1-score), and (2) expert arbitration for 15% disputed cases. The processed raw texts were imported into the platform, where each sentence was annotated individually. For each instance, the annotators first identified the head and tail entities, analyzed the semantic relation between them, and then labeled both the entity types and the relation type to form structured triples. Post-validation applied a relation-density check (3-5 relations/sentence) to ensure semantic coherence. Upon completion of the annotation process, a total of 635 labeled samples and 1018 unique entity pairs were obtained, with each triple verified by at least two annotators. The dataset’s detailed statistical characteristics are summarized in Table 3.

Table 3. Revised statistics of the coal mine safety knowledge graph dataset.

3.2. Entity–Relation Extraction

3.2.1. Models

To address the challenge of knowledge extraction in the coal mine equipment safety domain, this study proposes a domain-adaptive entity–relationship extraction framework based on LLMs. The model architecture follows a conventional encoder–decoder structure and comprises three key components: an input module, an encoding layer, and a structured output layer.

In the input module, the unstructured texts are processed using a schema-guided prompt mechanism. A structured prompt template is constructed, incorporating three key elements: task role definition, domain-specific ontology, and a predefined relation schema. The task role is fixed as a knowledge extraction expert, guiding the model to identify conceptually relevant entities and semantically meaningful relationships.

The encoding layer utilizes domain-adaptive LLMs such as ERNIE-4, ChatGLM4-9B, Qwen-Plus, or DeepSeek-R1, which exhibit strong semantic understanding and generalization capabilities in low-resource or few-shot learning settings. A dynamic prompt engineering strategy is employed to improve domain alignment, wherein the predefined task instructions and ontology constraints are embedded directly into the input text in natural language form. This mechanism enhances the model’s ability to accurately recognize entity boundaries and classify relationships, even in the absence of extensive annotated data.

The output layer adopts a structured extraction language to represent knowledge units in a unified five-tuple format. To preserve semantic integrity, compound noun phrases are treated as single entities. When multiple knowledge points are present within a sentence, the model extracts each as an independent five-tuple. Hierarchical or conditional relationships are explicitly expressed using relation types from the predefined schema, enabling accurate modeling of nested or constrained semantic structures in documents.

3.2.2. Symmetric Joint Entity–Relation Extraction

In traditional knowledge representation, the triple structure (head entity, relation, tail entity) is the foundational unit of knowledge graphs, offering a simple yet powerful means of encoding relational semantics between entities. The corresponding formula is as follows:

$$T = (h, r, t), \quad h, t \in E, \; r \in R$$

where $T$ denotes a knowledge triple consisting of head entity $h$, relation $r$, and tail entity $t$, with $E$ representing the set of all entities and $R$ the set of predefined relations in our coal mine safety domain. Each triple forms a complete factual statement in which $h$ and $t$ are domain-specific named entities and $r$ expresses their semantic association, following standard knowledge representation conventions.

However, when applying LLMs for knowledge extraction, the absence of clearly defined extraction boundaries can lead to significant deviation from the target ontology. This issue arises due to the strong generalization capabilities of LLMs, which may result in off-topic or erroneous extractions when relying solely on traditional triples. Furthermore, directly instructing LLMs to generate triples from input text without contextual constraints often leads to incorrect extraction directions or entity misclassification.

To address these limitations, this study proposes a symmetry-enhanced knowledge representation structure that extends conventional triplets by incorporating mirrored entity-type constraints. As the formal output schema for our LLM-based extraction framework, each knowledge unit consists of five elements: head entity, relation, tail entity, head entity type, and tail entity type, where the head and tail entity types form symmetric constraints. Then, each quintuple representation can be defined as

$$Q = (h, c_h, r, t, c_t), \quad c_h, c_t \in C$$

where $Q$ extends the basic knowledge triple to a quintuple representation by incorporating entity-type information. It maintains the original components $h$ (head entity), $r$ (relation), and $t$ (tail entity) as defined previously, while introducing two new elements $c_h$ and $c_t$ that respectively denote the predefined types of the head and tail entities from our type hierarchy $C$, thereby enriching the structured representation with explicit semantic typing information.

Here, we explain and illustrate the quintuple representation. Take the source unstructured text “Coalbed methane enterprises shall sign a special safety production management agreement with the contracting unit” as an example. The head entity, “Coalbed methane enterprises”, belongs to the “Organizations and Personnel” type, and the tail entity, “safety production management agreement”, belongs to the “Safety Management” type. The two entities form a complete quintuple expression through the “shall” relationship. This type of annotation is derived from the six-entity system predefined in this study (see Table 1). After initial identification by the large language model, it was verified and confirmed by domain experts.
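The worked example above can be written as a small data structure. The sketch below is one possible encoding, not the representation used in the study; the class name, field names, and helper methods are illustrative assumptions.

```python
from typing import NamedTuple

class Quintuple(NamedTuple):
    """(head entity, head entity type, relation, tail entity, tail entity type)."""
    head: str
    head_type: str
    relation: str
    tail: str
    tail_type: str

    def head_side(self) -> tuple[str, str]:
        """Typed head entity: one half of the mirrored structure."""
        return (self.head, self.head_type)

    def tail_side(self) -> tuple[str, str]:
        """Typed tail entity: the mirror image of head_side()."""
        return (self.tail, self.tail_type)

    def as_triple(self) -> tuple[str, str, str]:
        """Drop the type fields to recover the traditional triple."""
        return (self.head, self.relation, self.tail)

example = Quintuple(
    head="Coalbed methane enterprises",
    head_type="Organizations and Personnel",
    relation="shall",
    tail="safety production management agreement",
    tail_type="Safety Management",
)
```

Here `head_side()` and `tail_side()` return pairs of identical shape, which is the type-level symmetry the quintuple adds, while `as_triple()` shows that the traditional triple is recovered by discarding the two type fields.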

3.2.3. Prompt Strategy

Large language models, with their massive parameter counts, powerful semantic understanding capabilities, and extensive knowledge coverage, can generally be adapted to tasks using two main methods: fine-tuning and prompt engineering. Since fine-tuning requires a large amount of annotated data and computing resources, in this study, we adopt the more efficient method of prompt engineering.

Prompt engineering is a key technique for guiding large language models to generate expected outputs by carefully designing input instructions. It not only involves writing basic task descriptions but also encompasses how to organize examples and design reasoning paths. There are three strategies: zero-shot, few-shot, and Chain-of-Thought (CoT). In the zero-shot strategy, the task requirements are directly described without providing examples, merely stating the requirements through natural language instructions. The few-shot strategy establishes a task paradigm through a small number of typical examples. The CoT strategy requires the model to demonstrate the reasoning process before outputting the result.

For coal mine equipment safety texts, we have meticulously designed prompt templates that incorporate domain-specific characteristics, taking full account of the unique terminology system and relationship expressions in safety regulation texts. As shown in Table 4, our prompt template consists of five key components: Role, Ontology, Instruction, Content, and Result. Through this structured design, we ensure that the model accurately understands the extraction requirements in the coal mine safety domain.

Table 4. Prompt words for symmetric joint entity–relationship extraction.
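To make the five-part template concrete, the sketch below assembles a prompt from the Role, Ontology, Instruction, Content, and Result components and switches between the zero-shot, few-shot, and CoT strategies. All wording inside the strings, the function name, and its parameters are hypothetical; they do not reproduce the prompt text of Table 4.

```python
from typing import Sequence

# Hypothetical CoT instruction; the wording used in the study is not reproduced here.
COT_HINT = ("Reasoning: first explain step by step which entities, entity "
            "types, and relation you identify, then give the final quintuples.")

def build_prompt(content: str,
                 entity_types: Sequence[str],
                 relation_types: Sequence[str],
                 strategy: str = "zero-shot",
                 examples: Sequence[str] = ()) -> str:
    """Assemble Role / Ontology / Instruction / Content / Result into one prompt."""
    if strategy not in {"zero-shot", "few-shot", "cot"}:
        raise ValueError(f"unknown strategy: {strategy}")
    if strategy == "few-shot" and not examples:
        raise ValueError("few-shot prompting needs at least one example")
    parts = [
        "Role: You are a knowledge extraction expert.",
        "Ontology: entity types = " + ", ".join(entity_types)
        + "; relation types = " + ", ".join(relation_types) + ".",
        "Instruction: Extract every fact as (head entity, head entity type, "
        "relation, tail entity, tail entity type).",
    ]
    parts.extend("Example: " + e for e in examples)  # worked examples, if provided
    if strategy == "cot":
        parts.append(COT_HINT)                       # ask for reasoning before the answer
    parts.append("Content: " + content)
    parts.append("Result:")
    return "\n".join(parts)
```

For instance, `build_prompt(text, ["Equipment and Tools"], ["shall"], strategy="cot")` yields a prompt that ends with an open `Result:` slot for the model to complete after it has written out its reasoning.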

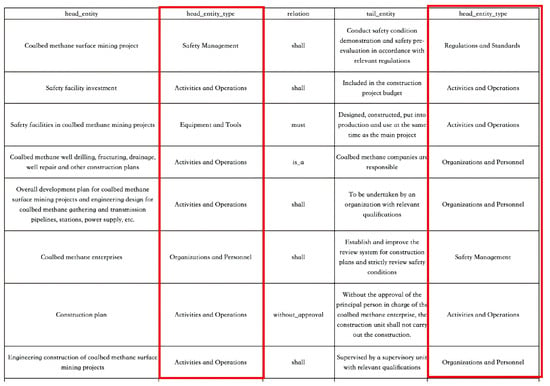

3.2.4. The Crucial Role of Symmetry in Knowledge Extraction

This study draws crucial inspiration from the principle of structural symmetry. By introducing constraints on the head entity type and the tail entity type, a representation framework of (head entity, head entity type, relationship, tail entity, tail entity type) quintuples is constructed, which significantly enhances the semantic balance of knowledge elements. The traditional triple (head entity, relationship, tail entity), due to the lack of the type dimension, easily leads to unequal semantic status of the head and tail entities. However, the symmetric embedding of type information in the quintuple forces the model to establish a mirror mapping of the head and tail entities at the representation level, forming a structural self-balancing mechanism. The visually demonstrable mirror structure is illustrated in Figure 4, where red-highlighted type fields in our structured data schema enforce geometric symmetry. In the model’s internal representations, head and tail types form mirrored cluster patterns in the embedding space.

Figure 4. Symmetric structure of coal mine safety quintuples, as highlighted by the red box.

To verify this design, we have planned experimental verification in three aspects. Experiment 1 will compare the performance differences between the joint extraction and step-by-step extraction methods under the quintuple structure, with a focus on examining the degree of symmetry preservation. Experiment 2 will test the expressive ability of different prompt-word strategies for the type-symmetric structure. Experiment 3 will analyze the impact of changes in the model scale on the processing ability of the quintuple structure. These three experiments will systematically evaluate the potential value of the symmetry design in knowledge extraction from different perspectives.

4. Experimental Results and Discussion

4.1. Experimental Environment

The evaluation employs several large language models to assess the proposed framework’s effectiveness. ChatGLM4-9B was deployed locally on our cloud server equipped with NVIDIA RTX3090 (NVIDIA Corporation, Santa Clara, CA, USA) 24 GB GPUs and Intel Xeon E5-2680 v4 (Intel Corporation, Santa Clara, CA, USA) CPUs @ 2.40 GHz. For all other models (Ernie-4, Qwen-plus, DeepSeek-R1, DeepSeek-R1-distill-qwen-14b/32b, and Qwen2.5-14B/32B), we utilized API services: Baidu Qianfan Platform for Ernie-4, Alibaba’s Qwen Platform for Qwen-plus, DeepSeek-R1-distill-qwen variants and Qwen2.5 variants, and DeepSeek Platform for DeepSeek-R1. The implementation is built on PyTorch 2.6.0 and the Hugging Face Transformers library, with Python 3.12.0 as the development environment. All experiments were conducted using consistent hardware configurations to ensure fair comparison. The 24 GB GPU memory proved sufficient for deploying the locally tested ChatGLM4-9B model without quantization techniques.

4.2. Computational Efficiency Analysis

To quantify computational costs critical for real-world deployment, we measured average inference speeds across all models. For API-accessed models, Ernie-4 (via Baidu Qianfan) achieved 18.17 tokens/s, Qwen-plus (via Alibaba Qwen API) reached 53 tokens/s, and DeepSeek-R1 (via DeepSeek API) attained 20 tokens/s. Notably, all models accessed through Alibaba’s Qwen Platform API—including Qwen-plus, both DeepSeek-R1-distill-qwen variants (14b/32b), and both Qwen2.5 variants (14B/32B)—exhibited identical throughput of 53 tokens/s. This consistency suggests platform-level optimizations or rate limiting within the Qwen API service. For locally deployed models, ChatGLM4-9B achieved 8.3 tokens/s on our RTX3090 hardware while efficiently utilizing the available 24GB GPU memory without quantization.

4.3. Indicators for Model Evaluation

To quantitatively evaluate the performance of the proposed knowledge extraction framework, three commonly used metrics are adopted: precision, recall, and F1-score.

Precision evaluates the exactness of a model’s positive predictions. It reflects the model’s ability to avoid false positives. Formally, precision is defined as

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall measures the model’s ability to capture all actual positive instances. It indicates the model’s ability to cover all relevant information. The recall is calculated as

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

In the given formulations, true positives (TP) are correctly predicted positive cases that match the ground truth, false positives (FP) are negative cases incorrectly predicted as positive, and false negatives (FN) are positive cases incorrectly predicted as negative.

The F1-score represents the harmonic mean of precision and recall, providing a balanced performance measure. It is defined as

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
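As a minimal sketch, the three metrics can be computed over sets of predicted and gold items. Exact set membership is used as the matching criterion here; that criterion, like the function names, is an assumption, since the matching rule applied in the experiments is not specified.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

def precision_recall_f1(predicted: set, gold: set) -> tuple[float, float, float]:
    """Precision, recall, and F1 under exact matching (assumed criterion)."""
    tp = len(predicted & gold)   # predicted items that are in the gold set
    fp = len(predicted - gold)   # predicted items that are not in the gold set
    fn = len(gold - predicted)   # gold items the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)
```

As a consistency check, `f1_score(0.7668, 0.8376)` evaluates to roughly 0.8006, which agrees with the precision, recall, and F1 values reported for DeepSeek-R1-JERE on relation extraction.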

4.4. Experiments and Analysis of Results

We designed a three-stage experiment to comprehensively evaluate the proposed framework. These experiments are as follows: (1) Baseline Comparison Experiment: Using standardized metrics (precision/recall/F1-score), we compared our joint extraction framework on four representative large language models (LLMs), namely Ernie-4, ChatGLM4-9B, Qwen-plus, and DeepSeek-R1, with the traditional pipeline method (first entity recognition, then relation extraction) to establish a performance benchmark. This initial stage identified the most suitable infrastructure for subsequent optimizations. (2) Prompt Ablation Experiment: By testing the best-performing model from the first stage under three strategies (zero-shot, few-shot, and CoT), we explored the impact of prompt strategies on the model’s performance. (3) Model Parameter Experiment: Under the combination of the optimal model and the optimal prompt, we evaluated different model parameter sizes to examine the efficiency boundaries of the framework in practical generation. This cascading design gradually isolated key variables (extraction paradigm → prompt efficiency → model scale) while maintaining the ecological validity of coal mine safety applications. The ultimate goal is to establish an optimized and deployable solution for extracting real-world coal mine equipment safety knowledge.

4.4.1. Baseline Comparison Experiment

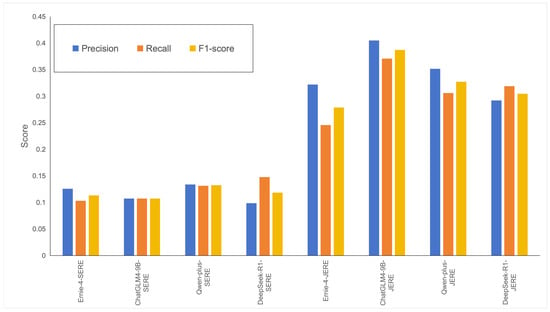

To systematically verify the advantages of the Joint Entity and Relation Extraction (JERE) proposed in this paper over the traditional Sequential Entity and Relation Extraction (SERE), we designed a two-dimensional comparative experiment to evaluate the performance of entity recognition and relation extraction, respectively. The experiment adopts a unified evaluation framework to comprehensively test four mainstream large language models, namely ERNIE-4, ChatGLM4-9B, Qwen-plus, and DeepSeek-R1, under two frameworks: JERE (e.g., Ernie-4-JERE) and SERE (e.g., Ernie-4-SERE). Here, JERE represents the joint extraction framework proposed in this paper, and SERE represents the traditional pipeline framework.

To ensure the rigor and comparability of the experimental results, this study established a systematic and fair comparison framework when evaluating the JERE and SERE methods. At the technical implementation level, both methods adopted completely consistent API call configurations, including model versions, parameter settings, prompt templates, and post-processing procedures, thereby ensuring absolute consistency of the comparison benchmarks. In terms of evaluation methods, although the JERE framework realizes end-to-end joint extraction through a unified architecture, we still decoupled its output results into two subtasks: entity recognition and relation extraction, and strictly used the exact same evaluation metrics as SERE for independent calculation. All subsequent designed experiments adhere to this evaluation principle.
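The decoupling step can be sketched as follows. How the study defines an entity item and a relation item for scoring is not specified, so the choices below (an entity is an `(entity, type)` pair, a relation is a `(head, relation, tail)` triple) and the function name are assumptions.

```python
def decouple(quintuples):
    """Split joint-extraction output into the two evaluation subtasks.

    Each quintuple is (head, head_type, relation, tail, tail_type).
    Returns (entity set, relation set) under the assumed item definitions.
    """
    entities = set()
    relations = set()
    for head, head_type, relation, tail, tail_type in quintuples:
        entities.add((head, head_type))           # entity recognition items
        entities.add((tail, tail_type))
        relations.add((head, relation, tail))     # relation extraction items
    return entities, relations

entities, relations = decouple([
    ("Coalbed methane enterprises", "Organizations and Personnel", "shall",
     "safety production management agreement", "Safety Management"),
])
```

Each of the two resulting sets can then be scored against its gold counterpart with the same precision, recall, and F1 definitions used for the pipeline baseline.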

As shown in Table 5 and Figure 5, JERE outperforms the conventional SERE paradigm on every model and every metric for entity recognition. JERE achieves an average F1-score improvement of 157%, and ChatGLM4-9B-JERE attains the highest F1-score of 0.3872. Precision gains are particularly pronounced: ChatGLM4-9B-JERE reaches a precision of 0.4050, compared with 0.1074 for its SERE counterpart. Among the evaluated models, Qwen-plus exhibits the strongest relative improvement, with a 147% increase in F1-score, while DeepSeek-R1 shows the most balanced precision–recall tradeoff in JERE mode, with a difference of less than 0.03 between precision and recall.

Table 5. Comparative evaluation of named entity recognition performance: joint vs. staged extraction paradigms.

Figure 5. Performance comparison of different named entity recognition paradigms.

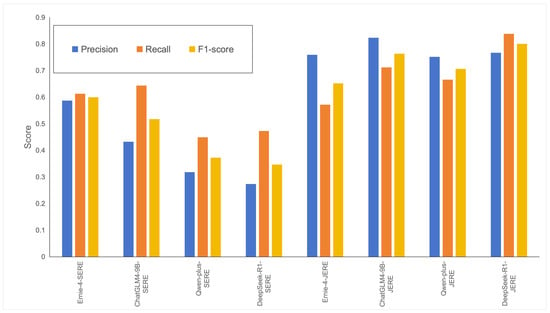

The relation extraction results in Table 6 and Figure 6 show that JERE again outperforms SERE, and the advantage is more pronounced than in NER, which suggests that the symmetric constraints are particularly effective for capturing relational semantics. DeepSeek-R1-JERE is the top-performing model, with an F1-score of 0.8006, a 131% improvement over its SERE counterpart (0.3468). It combines strong precision (0.7668) with high recall (0.8376), indicating that it identifies relationships comprehensively without excessive false positives. The pattern holds across all evaluated models, which show significant F1-score improvements in JERE mode (averaging +85%); ChatGLM4-9B-JERE (0.7635) and Qwen-plus-JERE (0.7061) also perform robustly. Furthermore, the precision–recall tradeoff differs markedly between the paradigms: JERE consistently maintains a more balanced performance than SERE.
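The relative improvement quoted for DeepSeek-R1 can be reproduced from the two F1-scores given in the text. The short sketch below uses a hypothetical helper name and assumes the improvement is measured relative to the SERE score.

```python
def relative_improvement(new: float, old: float) -> float:
    """Relative gain of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


# F1-scores quoted in the text for DeepSeek-R1 (relation extraction).
jere_f1 = 0.8006
sere_f1 = 0.3468
gain = relative_improvement(jere_f1, sere_f1)
print(f"{gain:.1f}%")  # prints 130.9%, which rounds to the reported 131%
```

A relative improvement of this kind differs from a difference in percentage points: the same pair of scores differs by about 45 points on a 0 to 100 scale.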

Table 6. Comparative evaluation of relation extraction performance: joint vs. staged extraction paradigms.

Figure 6. Performance comparison of different relation extraction paradigms.

The superior performance of JERE over SERE across all evaluation metrics strongly validates our hypothesis regarding symmetric structure preservation. The joint extraction better maintains the inherent symmetry of our quintuple representation, whereas the cascaded SERE approach disrupts this balance through its sequential processing.

4.4.2. Prompt Ablation Experiment

In the Baseline Comparison Experiment, DeepSeek-R1-JERE achieved the best performance, so we selected it as the subject of the Prompt Ablation Experiment. To check whether the findings generalize to other large language models, we also included Qwen-plus-JERE. With the JERE joint extraction framework held fixed, the experiment evaluated three prompt strategies (zero-shot, few-shot, and CoT) on the relation extraction (RE) and named entity recognition (NER) tasks. Because only the prompt strategy varies, differences in performance can be attributed to the prompt design.
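To make the three strategies concrete, the following sketch shows one way such prompts could be assembled. The instruction wording, the demonstration sentence, the type and relation labels, and the function name `build_prompt` are all hypothetical; the prompts actually used in the experiments are not reproduced here.

```python
INSTRUCTION = (
    "Extract all (head, head_type, relation, tail, tail_type) "
    "quintuples from the text and return them as a JSON list."
)

# Hypothetical demonstration shared by the few-shot and CoT variants.
EXAMPLE_TEXT = "The hoist brake failed because the brake shoe was worn."
EXAMPLE_ANSWER = (
    '[["hoist brake", "Component", "caused_by", '
    '"brake shoe wear", "Fault"]]'
)
EXAMPLE_REASONING = (
    "Step 1: list candidate entities and assign each a type. "
    "Step 2: decide which pairs are related and name the relation. "
    "Step 3: check that head and tail types fit the relation."
)


def build_prompt(strategy: str, text: str) -> str:
    """Assemble a prompt for 'zero-shot', 'few-shot', or 'cot'."""
    parts = [INSTRUCTION]
    if strategy == "few-shot":
        # Demonstration without reasoning.
        parts.append(f"Text: {EXAMPLE_TEXT}\nAnswer: {EXAMPLE_ANSWER}")
    elif strategy == "cot":
        # Demonstration with explicit intermediate reasoning steps.
        parts.append(
            f"Text: {EXAMPLE_TEXT}\nReasoning: {EXAMPLE_REASONING}\n"
            f"Answer: {EXAMPLE_ANSWER}"
        )
        parts.append("Reason step by step before giving the answer.")
    elif strategy != "zero-shot":
        raise ValueError(f"unknown strategy: {strategy}")
    parts.append(f"Text: {text}\nAnswer:")
    return "\n\n".join(parts)
```

Calling `build_prompt("cot", sentence)` yields the instruction, a worked demonstration with reasoning, and the target sentence, whereas the zero-shot variant contains only the instruction and the target sentence.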

As shown in Table 7, the three prompt strategies produced distinct performance gradients across models. In the RE task, the CoT strategy consistently performed best, reaching peak F1-scores of 80.65% for DeepSeek-R1-JERE and 84.96% for Qwen-plus-JERE. The improvement mainly stems from the ability of step-by-step reasoning to decouple complex semantic relationships. The few-shot strategy yielded strong recall for DeepSeek-R1-JERE (83.76%), while Qwen-plus-JERE achieved even higher recall under CoT (92.68%), indicating that the models respond differently to in-prompt examples. In the NER task, the CoT strategy yielded markedly different outcomes: it produced an 81.4% relative F1 improvement for DeepSeek-R1-JERE (F1 = 47.54%) but only marginal gains for Qwen-plus-JERE (F1 = 26.99%). This contrast suggests that explicit reasoning steps substantially improve entity boundary determination in some architectures but not in others. Notably, zero-shot RE performance was comparable across the two models (77.9% vs. 75.39%), and the low zero-shot NER results (26.2% vs. 25.71%) reveal the difficulty of discriminating entity types without guidance. Overall, the CoT strategy provided the most balanced joint extraction capability for DeepSeek-R1-JERE and the best RE performance for Qwen-plus-JERE, confirming that structured reasoning prompts are generally suitable for coal mine safety text extraction despite model-specific variations.

Table 7. Performance comparison of prompt strategies in joint extraction method.

The comparative performance of different prompting strategies provides compelling evidence for their varying capacity to express type symmetry in our quintuple structure. The CoT strategy’s superior performance demonstrates that its step-by-step reasoning explicitly maintains the head type–relation–tail type symmetry through discrete verification steps. While the NER results show room for improvement, error analysis reveals that most misclassifications occur with complex technical entities like “coalbed methane gathering and transmission steel pipeline” and “fracturing equipment safety pin shear pressure”, where the models struggle with long, domain-specific compound terms. This challenge is compounded by OCR error propagation. Common mitigation approaches in safety engineering include (1) developing OCR-resistant entity recognition through hybrid dictionary matching, (2) implementing post-processing rules to handle common nested patterns in safety regulations, and (3) creating a domain-specific tokenization standard for compound safety terms. While we preserved methodological purity in this study by not implementing these optimizations, they represent immediate practical enhancements for production systems.
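As an illustration of the first mitigation, dictionary matching can be approximated with fuzzy string comparison from the Python standard library. This is only a sketch of the general idea, not something implemented in this study: the dictionary contents, the function name `normalize_entity`, and the similarity cutoff of 0.85 are assumptions.

```python
from difflib import get_close_matches

# Hypothetical domain dictionary; a real one would be curated by experts.
DOMAIN_TERMS = [
    "coalbed methane gathering and transmission steel pipeline",
    "fracturing equipment safety pin shear pressure",
    "belt conveyor",
]


def normalize_entity(mention: str, cutoff: float = 0.85) -> str:
    """Map a possibly OCR-damaged mention to its closest dictionary term.

    Returns the mention unchanged when no term is similar enough.
    """
    matches = get_close_matches(
        mention.lower(), DOMAIN_TERMS, n=1, cutoff=cutoff
    )
    return matches[0] if matches else mention


# Here "l" has been misread as "1", a typical OCR confusion.
noisy = "coalbed methane gathering and transmission stee1 pipe1ine"
print(normalize_entity(noisy))
```

A high cutoff keeps unrelated mentions from being forced onto a dictionary entry; the right value would have to be tuned on real scans.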

4.4.3. Model Parameter Experiment

In the Prompt Ablation Experiment, the CoT strategy performed best and was therefore fixed for this experiment. We compared five model variants: the original DeepSeek-R1 (full parameters), its two distilled versions (DeepSeek-R1-distill-qwen-14b/32b), and two Qwen2.5 baselines (Qwen2.5-14B/32B). All distilled models were trained with a consistent knowledge distillation protocol: the respective full-parameter version served as the teacher (DeepSeek-R1 for the first group, Qwen2.5-72B for the latter), soft targets were generated with a temperature-scaled softmax, and training used a hybrid loss function (KL-divergence for distribution alignment plus cross-entropy on labeled data) with the same batch size and learning rate. This unified approach enables a rigorous evaluation of parameter scaling effects on coal mine safety text processing while maintaining methodological consistency across architectures.
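The hybrid loss described above can be sketched for a single training example as follows. The weighting factor `alpha`, the multiplication of the KL term by the squared temperature (a common convention in knowledge distillation), and all numeric values are assumptions made for illustration; they are not the settings used for the models in this experiment.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature flattens the output."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def hybrid_loss(student_logits, teacher_logits, label, temperature, alpha):
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    distill = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[label])
    return alpha * distill + (1 - alpha) * hard


# Placeholder values chosen for illustration only.
loss = hybrid_loss([2.0, 0.5, 0.1], [3.0, 0.2, 0.1], label=0,
                   temperature=2.0, alpha=0.5)
```

The KL term pulls the student's softened distribution toward the teacher's, while the cross-entropy term keeps the student anchored to the labeled data.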

The comprehensive comparison (Table 8) reveals distinct architectural advantages across tested models. In relation extraction, Qwen2.5-32B achieved the highest F1-score (83.62%), while DeepSeek-R1-distill-qwen-32b showed exceptional recall (89.33%). Notably, Qwen-plus demonstrated superior NER performance (F1 = 32.73%), suggesting its architecture better handles entity boundary detection compared to other models. The Qwen2.5 series exhibited particularly balanced RE performance across precision and recall metrics, with Qwen2.5-14B achieving 81.24% F1-score.

Table 8. Performance comparison of different parameter models in joint extraction method.

These results highlight how both parameter scale and model architecture influence task performance. For entity recognition, all models showed room for improvement, though Qwen-plus maintained a clear advantage, indicating that architectural choices significantly impact fine-grained task performance. The Qwen2.5 series demonstrated strong capabilities in maintaining precision–recall balance, especially in relation extraction tasks.

The findings provide important guidance for model selection in coal mine safety applications. Qwen2.5-32B and DeepSeek-R1-distill-qwen-32b offer optimal “precision–efficiency” balances for different scenarios: Qwen2.5 for end-to-end performance, and DeepSeek for recall-oriented tasks. Architecture choice should align with task priorities—Qwen2.5 for balanced extraction, Qwen-plus for entity-centric applications, and distilled models for resource-constrained environments.

In addition, the experimental results demonstrate that medium-scale models can achieve an optimal balance between computational efficiency and accuracy when processing the symmetric quintuple structure. While larger models show advantages in entity recognition tasks, the distilled medium-sized architecture proves particularly effective at capturing the type–entity–relationship symmetry that characterizes our framework. The findings reveal an important characteristic of our symmetric structure—its core relational semantics can be maintained with moderate computational resources, while still achieving performance comparable to larger models. This efficiency advantage positions our framework as a viable solution for knowledge extraction tasks where resource constraints exist, without compromising the fundamental benefits of symmetric representation.

4.5. Discussion

Through systematic experiments, this study identified effective practices for coal mine safety knowledge extraction. At the methodological level, the proposed JERE framework significantly outperforms the traditional SERE method. By better preserving the symmetric structure of head and tail entities and their types, it addresses the error-accumulation problem of the pipeline method while maintaining a balanced semantic representation. In terms of prompt engineering, the Chain-of-Thought (CoT) strategy, whose step-by-step reasoning explicitly enforces type symmetry constraints, enabled DeepSeek-R1-JERE and Qwen-plus-JERE to achieve F1-scores of 80.65% and 84.96%, respectively, in the relation extraction task. Compared with the zero-shot strategy, this is an improvement of up to 9.6 percentage points. The result confirms the adaptability of structured prompts to professional domain texts and underlines the importance of maintaining a symmetric knowledge representation.

Regarding model selection, the distilled model with 32 billion parameters performs strongly in the relation extraction task, where symmetric pattern recognition is crucial, while the full-parameter model is more proficient in entity recognition, which requires deeper type discrimination. This "task-model" correspondence provides an important reference for practical deployment and shows that different components of our symmetric framework benefit from distinct architectures. It is worth noting that the performance achieved by knowledge distillation at medium parameter scales indicates that domain-adapted model compression can balance accuracy and efficiency while still capturing the essential symmetric relationships in our quintuple knowledge representation.

The extracted entity relationships are directly applicable to building industrial safety knowledge bases, particularly in mining safety contexts (e.g., roof collapse and rock burst databases). Given its demonstrated domain adaptability, the framework shows strong potential for extension to other high-risk sectors such as the chemical and construction industries, where it can provide structured knowledge foundations for risk early-warning and decision-support systems.

5. Conclusions

This study presents a knowledge extraction framework that integrates LLMs with prompt engineering for coal mine safety applications. Systematic experiments show that the joint extraction framework consistently outperforms traditional pipeline methods across the evaluation metrics, that structured prompting strategies significantly enhance extraction performance, and that distilled model variants achieve an effective balance between accuracy and computational efficiency. The symmetric constraint-based architecture proves particularly robust for simultaneous entity and relation extraction, although different model architectures adapt to this joint learning paradigm to notably different degrees. The framework also has limitations. Its performance depends on input document quality, because OCR errors in low-resolution scans can propagate through the extraction pipeline. In addition, while the symmetric architecture handles flat entity–relation structures effectively, it is limited in handling nested entities. Overall, these findings validate the effectiveness of the proposed framework and provide insights into model configuration and prompting strategies for domain-specific knowledge extraction tasks. The combined approach offers a practical solution for processing complex safety regulations while maintaining computational efficiency.

Author Contributions

Conceptualization, R.D. and Z.Z.; methodology, Z.Z.; software, Z.Z.; validation, Z.Z. and Y.L.; formal analysis, Z.Z.; investigation, Y.L.; resources, Z.Z.; data curation, Z.Z. and Y.L.; writing—original draft preparation, H.M.; writing—review and editing, Z.Z. and H.M.; visualization, Y.L.; supervision, R.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the author.

Conflicts of Interest

The authors declare no conflicts of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).