Feature Equalization and Hierarchical Decoupling Network for Rotated and High-Aspect-Ratio Object Detection

Abstract

1. Introduction

- (1)

- Spatial distribution differences of features: For targets with arbitrary orientations and high aspect ratios, their feature information is intensely clustered in the spatial dimension aligned with the target direction, whereas features in the spatial dimension orthogonal to this direction are relatively sparse. For instance, ship features in remote sensing images are mainly concentrated along their long edges, with fewer features distributed on the wide edges. Current convolutional structures adopt square convolution kernels sliding horizontally for feature extraction of such targets. This inflexible sampling mode with a fixed shape struggles to address the misalignment in anisotropic target representation within irregular feature spaces. To enhance the feature representation of rotating targets, some methods dynamically rotate convolution kernels according to the orientations of different objects in images, aiming to extract high-quality features of rotating targets more accurately. Nevertheless, due to the heavy concentration of ship features on long edges, this approach lacks the capability to model long-range information in the target’s directional dimension, making it hard to effectively capture features in distal or edge regions of the ship, thereby impairing detection accuracy.

- (2)

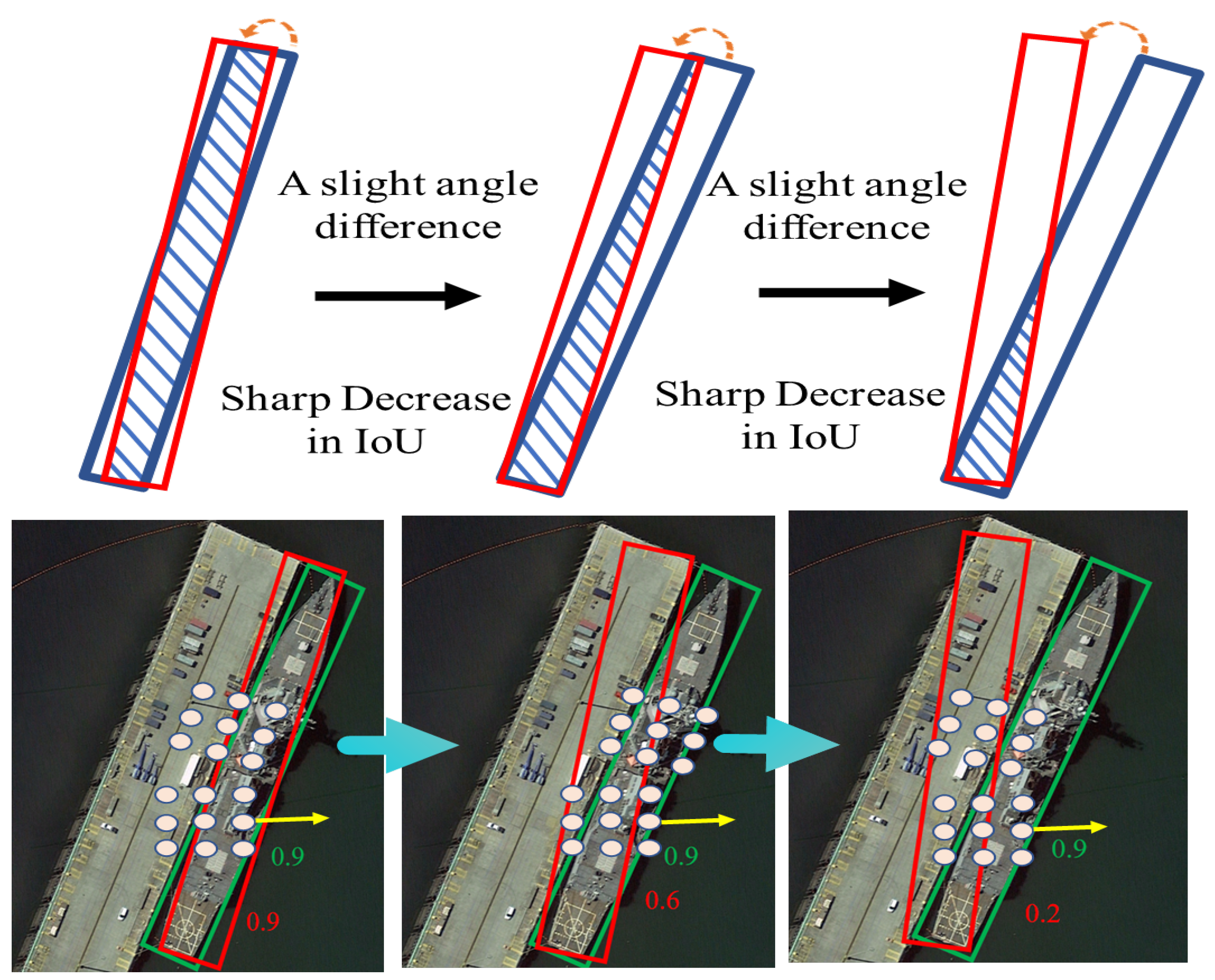

- Differential coupling of bounding box characterization parameters: Owing to their unique geometric properties, high-aspect-ratio objects are highly sensitive to angular changes during detector regression. Remote sensing target detection requires additional angular regression, and for high-aspect-ratio targets, even a small angular prediction error can lead to a significant deviation between the predicted bounding box and the real label. This deviation causes drastic fluctuations in the loss function gradient, making it difficult to find a suitable optimization direction when updating bounding box parameters and thus resulting in unstable training processes. Consequently, extremely precise prediction of the angle of elongated bounding boxes is required. However, among the parameters describing a rotating target, the target’s category and scale need to be predicted based on rotationally invariant features, while the target’s positional coordinates and orientation rely on rotationally isotropic features. Existing remote sensing multi-orientation target detection methods employ a set of shared feature maps to predict the above parameters, causing features describing the target’s shape to mix with those reflecting changes in its position and angle. This easily leads to inaccurate parameter prediction.
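Challenge (2) states that a small angular error costs far more overlap for an elongated box than for a compact one. The Python sketch below is our own illustration, not part of FEHD-Net: it computes the IoU of two oriented boxes by Sutherland-Hodgman polygon clipping and compares a 30 × 30 box with a 90 × 10 box of equal area. The (cx, cy, w, h, theta) box format with theta in radians and the two test shapes are assumptions chosen for the demonstration.

```python
import math


def obb_corners(cx, cy, w, h, theta):
    """Corners of an oriented box (theta in radians), in counter-clockwise order."""
    c, s = math.cos(theta), math.sin(theta)
    half = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in half]


def polygon_area(poly):
    """Shoelace formula; returns the absolute area."""
    n = len(poly)
    acc = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
              for i in range(n))
    return abs(acc) / 2.0


def clip_convex(subject, clipper):
    """Sutherland-Hodgman: clip `subject` by the convex, counter-clockwise `clipper`."""
    def inside(p, a, b):
        # p lies on or to the left of the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def cross_point(p, q, a, b):
        # intersection of segment p -> q with the infinite line through a and b
        den = (p[0] - q[0]) * (a[1] - b[1]) - (p[1] - q[1]) * (a[0] - b[0])
        t = ((p[0] - a[0]) * (a[1] - b[1]) - (p[1] - a[1]) * (a[0] - b[0])) / den
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    out = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        pts, out = out, []
        for j, cur in enumerate(pts):
            prev = pts[j - 1]  # j - 1 == -1 wraps around to the last vertex
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    out.append(cross_point(prev, cur, a, b))
                out.append(cur)
            elif inside(prev, a, b):
                out.append(cross_point(prev, cur, a, b))
    return out


def rotated_iou(box_a, box_b):
    """IoU of two oriented boxes given as (cx, cy, w, h, theta)."""
    pa, pb = obb_corners(*box_a), obb_corners(*box_b)
    inter_poly = clip_convex(pa, pb)
    inter = polygon_area(inter_poly) if len(inter_poly) >= 3 else 0.0
    return inter / (polygon_area(pa) + polygon_area(pb) - inter)


if __name__ == "__main__":
    # Two boxes with the same area (900) but different aspect ratios.
    for w, h in ((30, 30), (90, 10)):
        for err_deg in (5, 15):
            gt = (0.0, 0.0, w, h, 0.0)
            pred = (0.0, 0.0, w, h, math.radians(err_deg))
            print(f"{w}x{h}, angle error {err_deg} deg: IoU = {rotated_iou(gt, pred):.3f}")
```

With the same 5° error, the 30 × 30 box keeps an IoU of about 0.92 while the 90 × 10 box drops to about 0.67; at 15° the values are about 0.82 and 0.27, so under the common 0.5 IoU matching threshold the elongated prediction would already count as a miss.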

- (1)

- We systematically analyze the difficult problem of anisotropy of feature distributions for detecting targets with high aspect ratios in any direction, revealing the inherent conflict between directional asymmetry and detection parameter symmetry.

- (2)

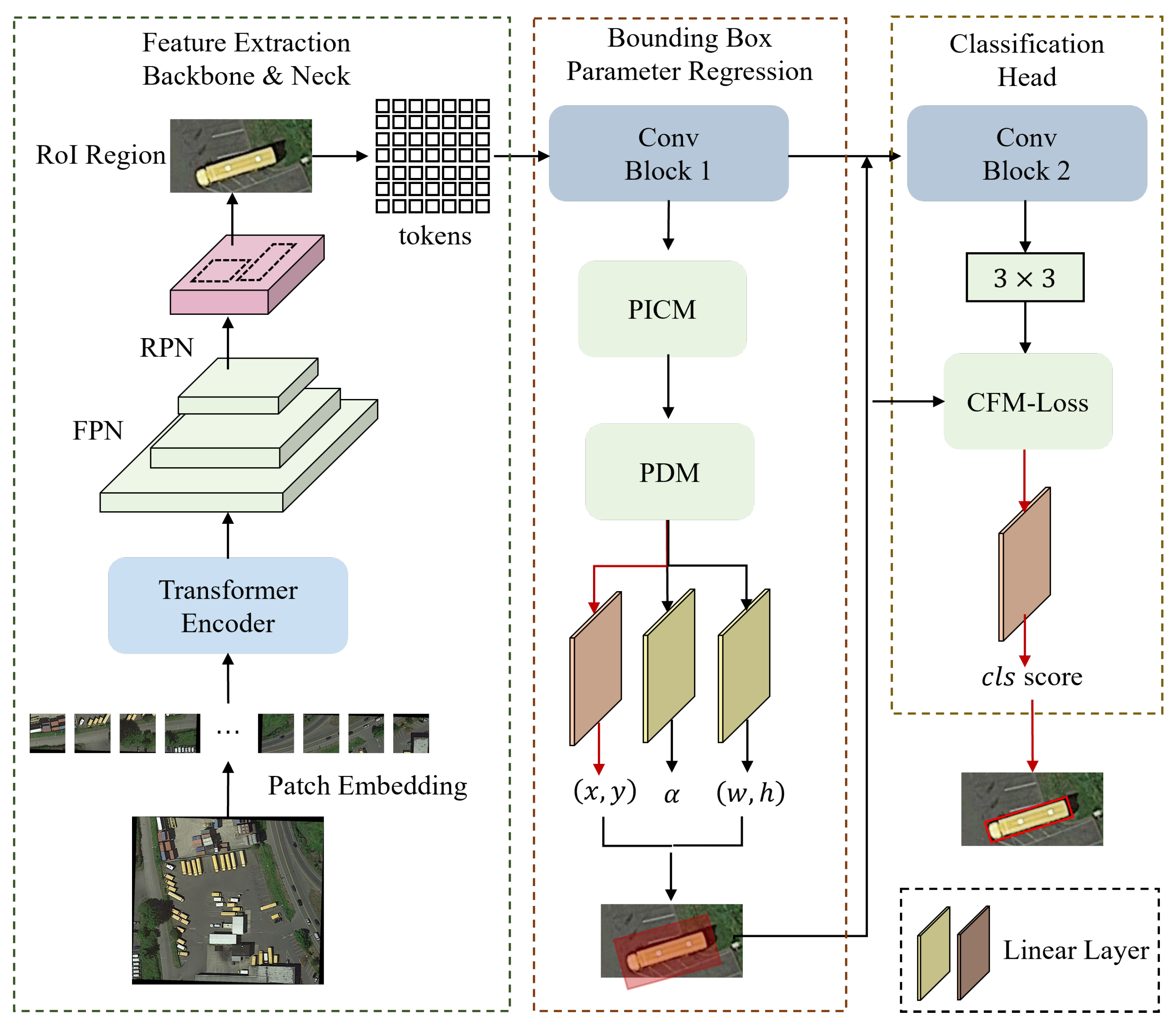

- A parallel interleaved convolution module is proposed, the core of which is to construct a large kernel strip convolution with multi-branch sequential orthogonalization for feature extraction. This architecture simultaneously captures the rotationally symmetric context and directionally specific details through multi-scale orthogonal receptive fields, effectively modeling geometric symmetry variations across targets with diverse aspect ratios.

- (3)

- A parametric regression decoupling (PRD) method is proposed, which decomposes the regression process of different bounding box parameters into different network branches so that they no longer share a set of shared feature maps for computation in order to solve the problem of mutual coupling between rotationally isotropic and rotationally invariant features. This symmetry-driven decoupling resolves the inherent conflict between isotropic position estimation and anisotropic orientation prediction in shared feature spaces.

- (4)

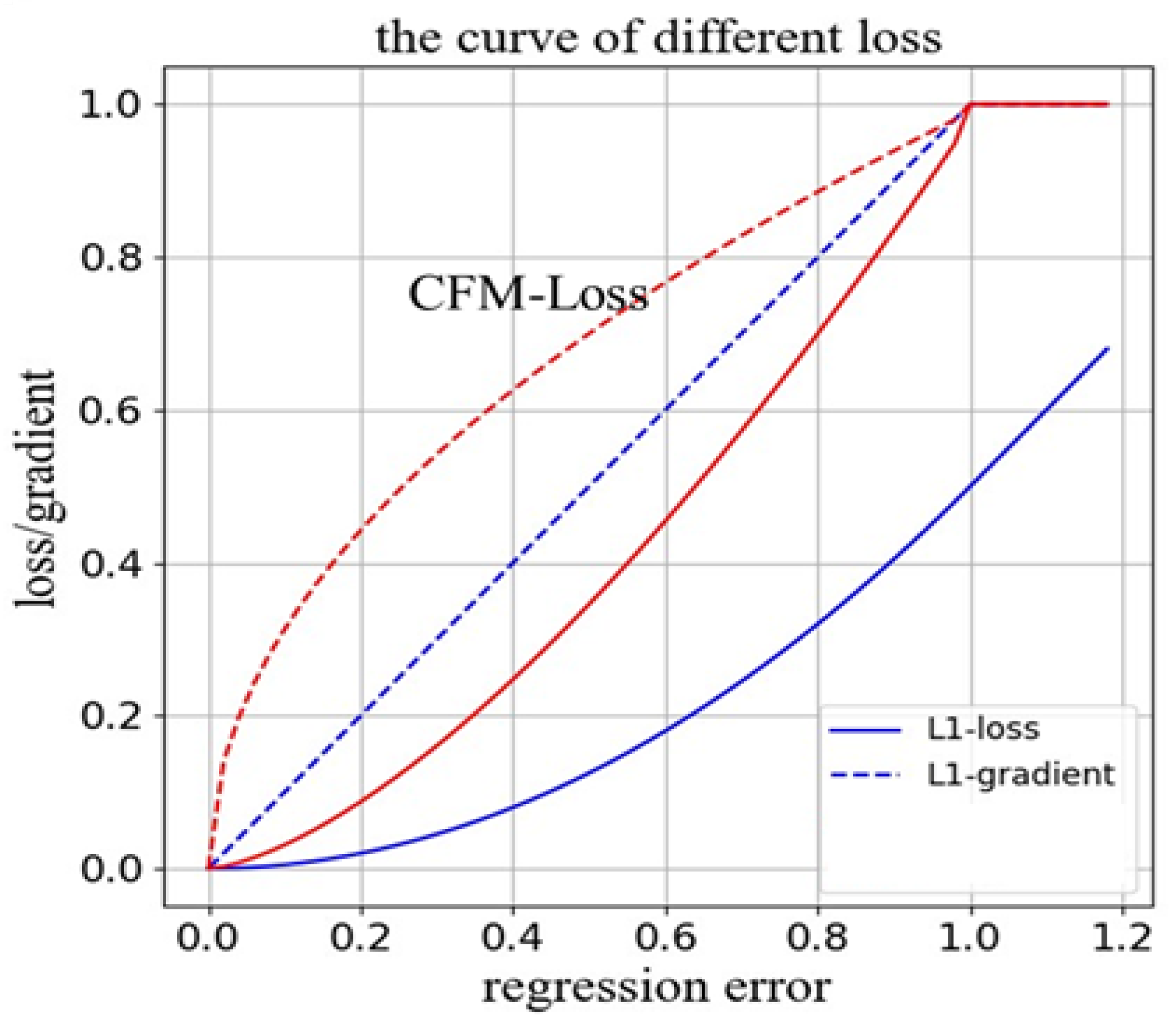

- A joint loss function (Critical Feature Matching, CFM-Loss) based on critical feature matching is proposed to assign weight factors according to the degree of change before and after the correction of different templates, which enhances the detector’s focus on high-quality samples and promotes stable training of the network.
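Challenge (1) and contribution (2) argue that strip kernels reach further along one axis than a square kernel of comparable cost. The pure-Python sketch below is our own illustration, not the authors' implementation. The all-ones weights, zero padding, and fusion of branches by summation are assumptions; the branch sizes (a 3 × 3 square plus 5, 7 and 9 strip pairs) follow the parameter-analysis table. Feeding in a single active pixel exposes each operator's receptive field.

```python
def conv2d_same(x, kernel):
    """Zero-padded 2D cross-correlation; output has the same size as x (odd kernel sizes)."""
    rows, cols = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    r, c = i + u - ph, j + v - pw
                    if 0 <= r < rows and 0 <= c < cols:
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def ones(kh, kw):
    """Hypothetical all-ones kernel of size kh x kw (stands in for learned weights)."""
    return [[1.0] * kw for _ in range(kh)]


def strip_branch(x, k):
    """One branch: a k x 1 strip followed by a 1 x k strip (sequential, orthogonal)."""
    return conv2d_same(conv2d_same(x, ones(k, 1)), ones(1, k))


def picm_sketch(x, strip_sizes=(5, 7, 9)):
    """Hypothetical PICM-style block: a 3 x 3 square branch plus parallel strip
    branches, fused by element-wise summation (the fusion rule is an assumption)."""
    out = conv2d_same(x, ones(3, 3))
    for k in strip_sizes:
        branch = strip_branch(x, k)
        out = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(out, branch)]
    return out


def footprint(y):
    """Height and width of the region with non-zero response."""
    rows = [i for i, r in enumerate(y) if any(v != 0 for v in r)]
    cols = [j for j in range(len(y[0])) if any(r[j] != 0 for r in y)]
    return rows[-1] - rows[0] + 1, cols[-1] - cols[0] + 1


if __name__ == "__main__":
    impulse = [[0.0] * 15 for _ in range(15)]
    impulse[7][7] = 1.0  # a single active pixel reveals each operator's receptive field
    print("1 x 9 strip alone:", footprint(conv2d_same(impulse, ones(1, 9))))  # (1, 9)
    print("9 x 1 then 1 x 9:", footprint(strip_branch(impulse, 9)))          # (9, 9)
    print("whole sketch:", footprint(picm_sketch(impulse)))                   # (9, 9)
    for k in (5, 7, 9):
        print(f"k={k}: strip pair {2 * k} weights vs square {k * k} weights")
```

A single 1 × 9 strip covers nine pixels along one row with nine weights, and a 9 × 1 then 1 × 9 pair covers a full 9 × 9 window with 18 weights instead of 81. In a real network the branches would be learned convolutions (for example, depthwise) on multi-channel feature maps.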
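Challenge (2) and the PRD contribution rest on the observation that category and scale do not change when the image is rotated, whereas position and angle do. The short Python sketch below makes that split explicit. It is our own illustration rather than part of FEHD-Net, and it assumes a hypothetical (cx, cy, w, h, theta, label) box format with theta in radians wrapped to [-π/2, π/2).

```python
import math

FIELDS = ("cx", "cy", "w", "h", "theta", "label")


def rotate_obb(box, phi, center=(0.0, 0.0)):
    """Rotate the image by phi radians (counter-clockwise) about `center` and return
    the corresponding oriented box. Boxes are (cx, cy, w, h, theta, label)."""
    cx, cy, w, h, theta, label = box
    c, s = math.cos(phi), math.sin(phi)
    dx, dy = cx - center[0], cy - center[1]
    new_cx = center[0] + c * dx - s * dy
    new_cy = center[1] + s * dx + c * dy
    # A rectangle is unchanged by a half-turn, so theta can be wrapped into
    # [-pi/2, pi/2) without swapping w and h.
    new_theta = (theta + phi + math.pi / 2) % math.pi - math.pi / 2
    return (new_cx, new_cy, w, h, new_theta, label)


def changed_fields(before, after, tol=1e-9):
    """Names of the box parameters whose values differ after the rotation."""
    changed = []
    for name, a, b in zip(FIELDS, before, after):
        differs = a != b if isinstance(a, str) else abs(a - b) > tol
        if differs:
            changed.append(name)
    return changed


if __name__ == "__main__":
    ship = (100.0, 40.0, 120.0, 20.0, math.radians(10), "ship")
    for deg in (30, 90, 170):
        rotated = rotate_obb(ship, math.radians(deg))
        # for each of these angles this prints ['cx', 'cy', 'theta']
        print(deg, changed_fields(ship, rotated))
```

In this setting, a branch predicting w, h and the label can rely on rotation-invariant features, while the branch predicting cx, cy and theta must track the rotation; PRD separates these predictions into distinct branches rather than reading both from one shared feature map.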

2. Related Works

2.1. Rotation Feature Extraction

2.2. Rotating Bounding Box Representation

3. Methodology

3.1. Basic Architecture

3.2. Parallel Interleaved Convolution Module

3.3. Parameter Decoupling Module

3.4. Critical Feature Matching Loss Function

4. Experiments

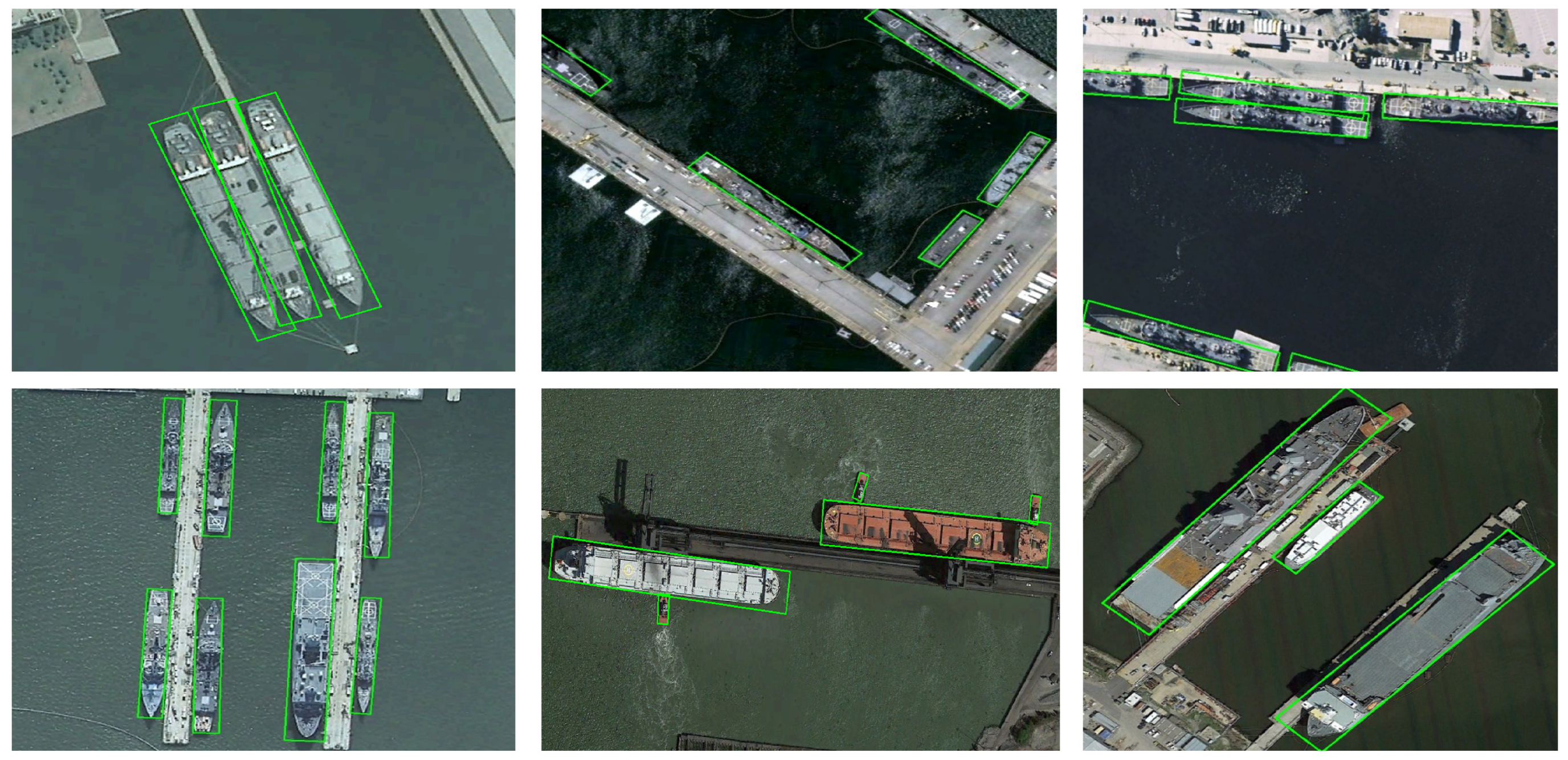

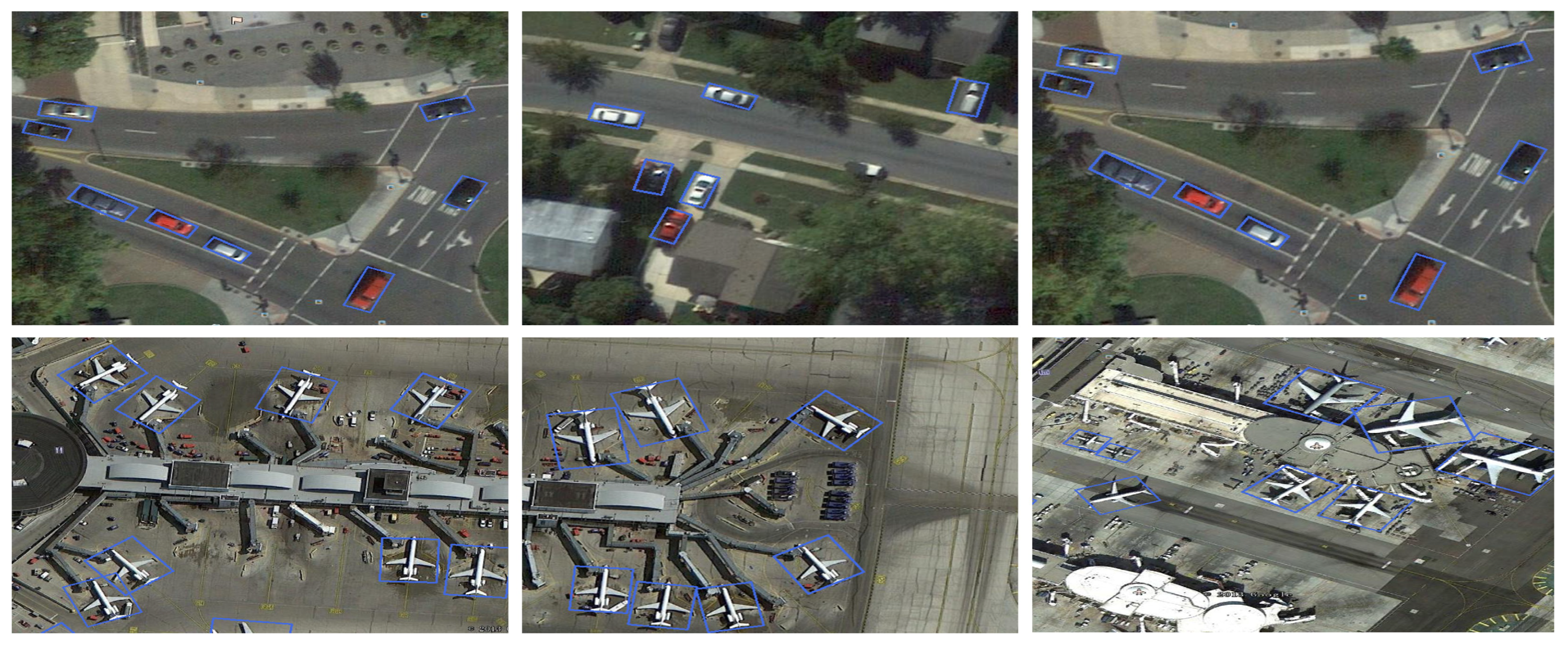

4.1. Experimental Dataset

4.2. Parameter Settings

4.3. Evaluation Metrics

4.4. Comparative Experiments

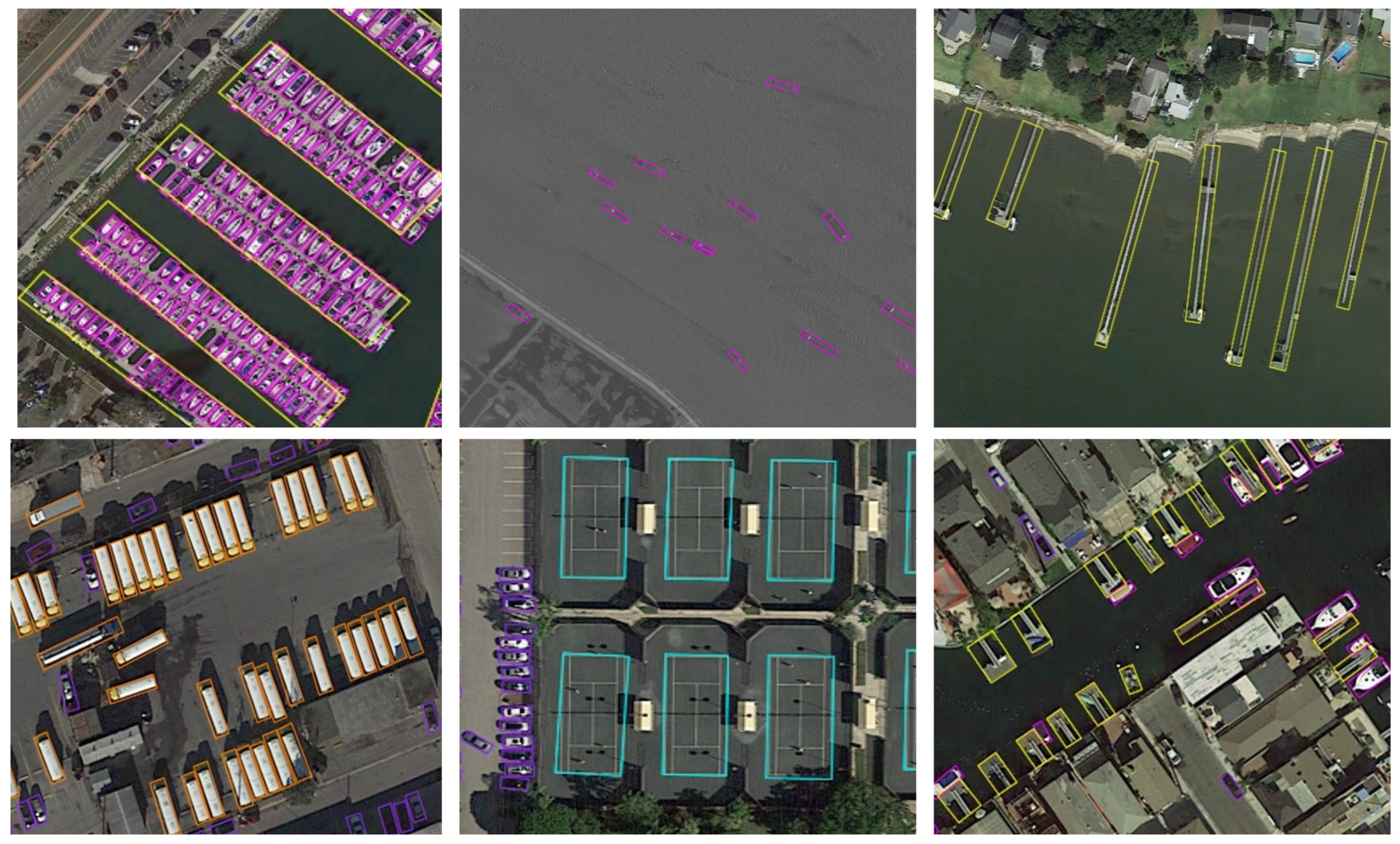

4.4.1. Comparative Experimental Results on DOTA

4.4.2. Comparative Experimental Results on HRSC2016

4.4.3. Comparative Experimental Results on UCAS-AOD

4.5. Ablation Studies

4.5.1. Analysis of Different Components

4.5.2. Effects of Cascaded Parameter Branches

4.5.3. Analysis of the Parallel Interleaved Convolution Module's Parameters

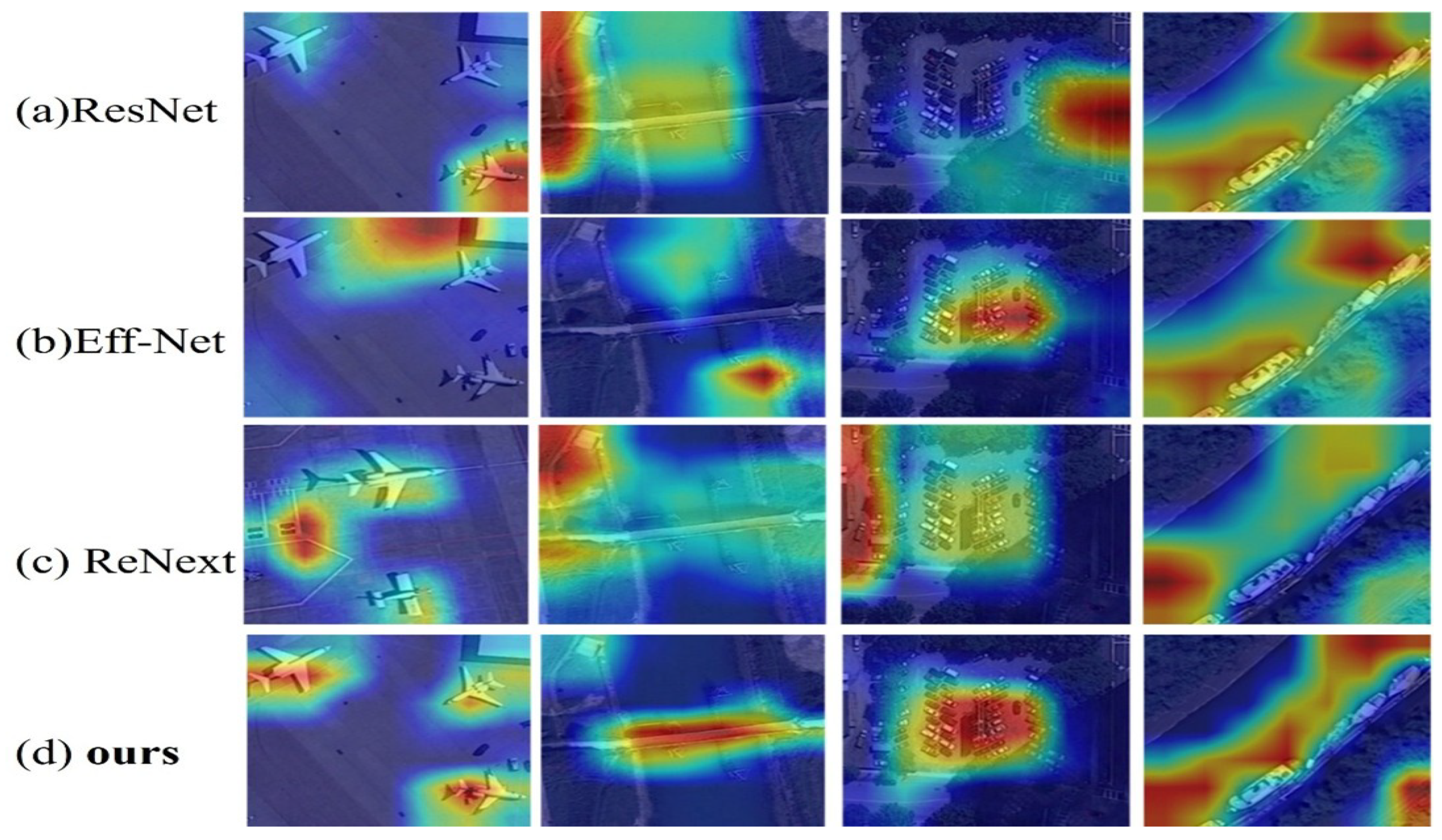

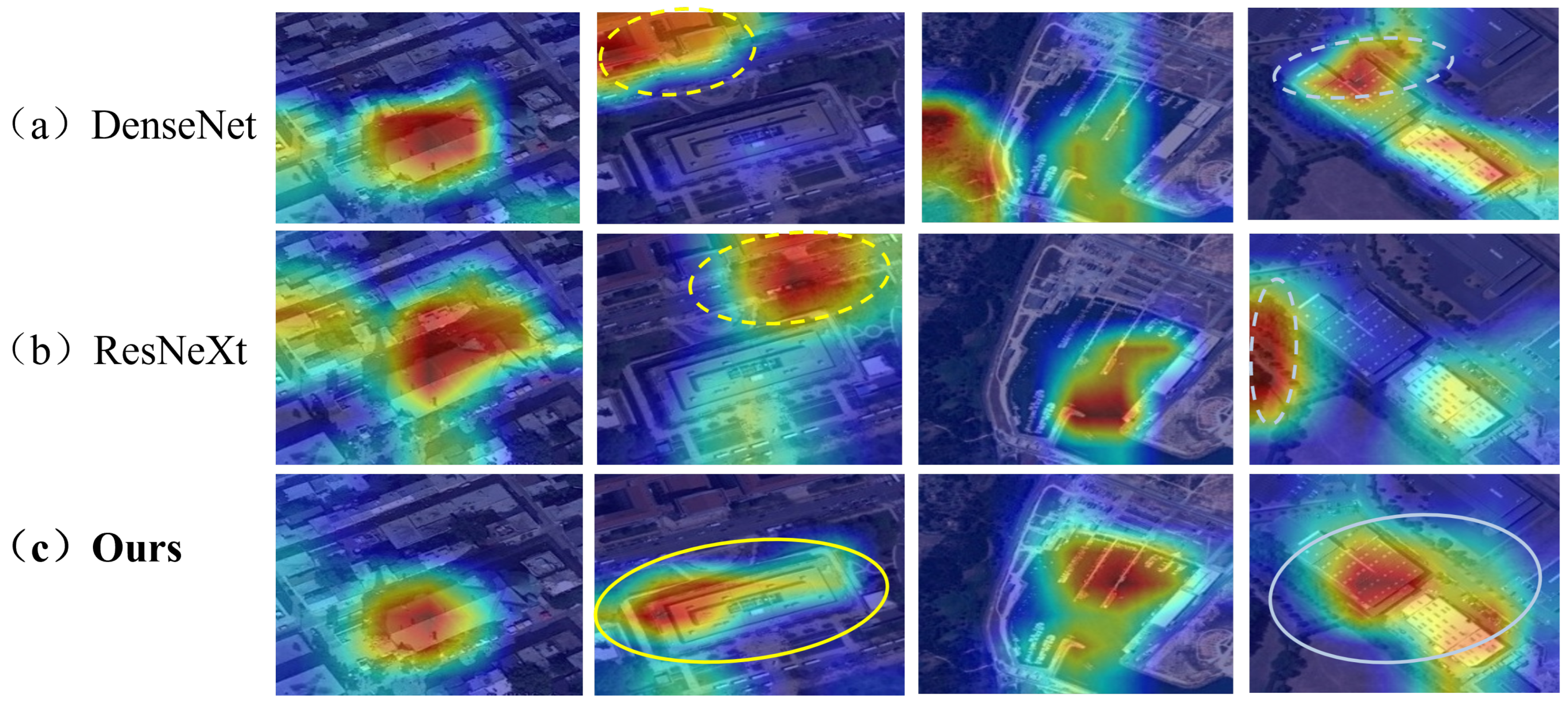

4.5.4. Intermediate Feature Visualization Analysis

4.5.5. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Han, J.; Ding, J.; Xue, N.; Xia, G.S. ReDet: A Rotation-Equivariant Detector for Aerial Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Virtual, 19–25 June 2021.

- Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An improved Swin Transformer-based model for remote sensing object detection and instance segmentation. Remote Sens. 2021, 13, 4779.

- Ma, T.; Mao, M.; Zheng, H.; Gao, P.; Wang, X.; Han, S.; Ding, E.; Zhang, B.; Doermann, D. Oriented object detection with transformer. arXiv 2021, arXiv:2106.03146.

- Yang, X.; Yan, J.; Ming, Q.; Wang, W.; Zhang, X.; Tian, Q. Rethinking rotated object detection with Gaussian Wasserstein distance loss. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11830–11841.

- Huang, Z.; Li, W.; Xia, X.G.; Tao, R. A General Gaussian Heatmap Label Assignment for Arbitrary-Oriented Object Detection. IEEE Trans. Image Process. 2022, 31, 1895–1910.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-Based Fully Convolutional Networks. Adv. Neural Inf. Process. Syst. 2016, 29, 379–387.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Qiu, H.; Li, H.; Wu, Q.; Meng, F.; Ngan, K.N.; Shi, H. A2RMNet: Adaptively Aspect Ratio Multi-Scale Network for Object Detection in Remote Sensing Images. Remote Sens. 2019, 11, 1594.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Fu, K.; Chang, Z.; Zhang, Y.; Xu, G.; Zhang, K.; Sun, X. Rotation-aware and multi-scale convolutional neural network for object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 161, 294–308.

- Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Xian, S.; Fu, K. SCRDet: Towards More Robust Detection for Small, Cluttered and Rotated Objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2019, Seoul, Republic of Korea, 27 October–2 November 2019.

- Lu, Y.; Javidi, T.; Lazebnik, S. Adaptive Object Detection Using Adjacency and Zoom Prediction. arXiv 2015, arXiv:1512.07711.

- Deng, C.; Jing, D.; Han, Y.; Wang, S.; Wang, H. FAR-Net: Fast anchor refining for arbitrary-oriented object detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6505805.

- Yi, X.; Gu, S.; Wu, X.; Jing, D. AFEDet: A Symmetry-Aware Deep Learning Model for Multi-Scale Object Detection in Aerial Images. Symmetry 2025, 17, 488.

- Deng, C.; Jing, D.; Han, Y.; Chanussot, J. Toward hierarchical adaptive alignment for aerial object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5615515.

- Zhu, H.; Jing, D. Optimizing slender target detection in remote sensing with adaptive boundary perception. Remote Sens. 2024, 16, 2643.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets V2: More Deformable, Better Results. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155.

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019.

- Wang, X.; Yu, F.; Dou, Z.Y.; Darrell, T.; Gonzalez, J.E. SkipNet: Learning dynamic routing in convolutional networks. In Proceedings of the European Conference on Computer Vision (ECCV) 2018, Munich, Germany, 8–14 September 2018; pp. 409–424.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018.

- Liu, Z.; Yuan, L.; Weng, L.; Yang, Y. A high resolution optical satellite image dataset for ship recognition and some new baselines. In Proceedings of the International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017.

- Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015.

- Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding Vertex on the Horizontal Bounding Box for Multi-Oriented Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1452–1459.

- Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122.

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv 2017, arXiv:1706.09579.

- Wang, J.; Ding, J.; Guo, H.; Cheng, W.; Pan, T.; Yang, W. Mask OBB: A Semantic Attention-Based Mask Oriented Bounding Box Representation for Multi-Category Object Detection in Aerial Images. Remote Sens. 2019, 11, 2930.

- Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. arXiv 2019, arXiv:1908.05612.

- Yang, X.; Yan, J.; Liao, W.; Yang, X.; Tang, J.; He, T. SCRDet++: Detecting Small, Cluttered and Rotated Objects via Instance-Level Feature Denoising and Rotation Loss Smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2384–2399.

- Yang, X.; Yan, J. On the Arbitrary-Oriented Object Detection: Classification based Approaches Revisited. arXiv 2020, arXiv:2003.05597.

- Qian, W.; Yang, X.; Peng, S.; Guo, Y.; Yan, J. Learning Modulated Loss for Rotated Object Detection. arXiv 2019, arXiv:1911.08299.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Yi, J.; Wu, P.; Liu, B.; Huang, Q.; Qu, H.; Metaxas, D. Oriented object detection in aerial images with box boundary-aware vectors. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 2021, Virtual, 5–9 January 2021.

- Huang, Z.; Li, W.; Xia, X.G.; Wu, X.; Cai, Z.; Tao, R. A Novel Nonlocal-Aware Pyramid and Multiscale Multitask Refinement Detector for Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5601920.

- Ming, Q.; Miao, L.; Zhou, Z.; Yang, X.; Dong, Y. Optimization for Arbitrary-Oriented Object Detection via Representation Invariance Loss. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8021505.

- Han, J.; Ding, J.; Li, J.; Xia, G.S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602511.

- Yu, H.; Tian, Y.; Ye, Q.; Liu, Y. Spatial transform decoupling for oriented object detection. In Proceedings of the AAAI Conference on Artificial Intelligence 2024, Vancouver, BC, Canada, 20–28 February 2024; Volume 38, pp. 6782–6790.

- Yuan, X.; Zheng, Z.; Li, Y.; Liu, X.; Liu, L.; Li, X.; Hou, Q.; Cheng, M.M. Strip R-CNN: Large Strip Convolution for Remote Sensing Object Detection. arXiv 2025, arXiv:2501.03775.

- Qian, W.; Yang, X.; Peng, S.; Yan, J.; Guo, Y. Learning Modulated Loss for Rotated Object Detection. In Proceedings of the National Conference on Artificial Intelligence, Virtual, 2–9 February 2021.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021.

- Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Yang, X. Sparse Label Assignment for Oriented Object Detection in Aerial Images. Remote Sens. 2021, 13, 2664.

- Ming, Q.; Miao, L.; Zhou, Z.; Dong, Y. CFC-Net: A Critical Feature Capturing Network for Arbitrary-Oriented Object Detection in Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5605814.

- Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Dong, Y.; Yang, X. Task interleaving and orientation estimation for high-precision oriented object detection in aerial images. ISPRS J. Photogramm. Remote Sens. 2023, 196, 241–255.

- Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic Anchor Learning for Arbitrary-Oriented Object Detection. arXiv 2020, arXiv:2012.04150.

| Methods | Backbone | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gliding Vertex [25] | ResNet101 | 89.64 | 85.00 | 52.26 | 77.34 | 73.01 | 73.14 | 86.82 | 90.74 | 79.02 | 86.81 | 59.55 | 70.91 | 72.94 | 70.86 | 57.32 | 75.02 |
| FR-O [7] | ResNet101 | 79.42 | 77.13 | 17.70 | 64.05 | 35.30 | 38.02 | 37.16 | 89.41 | 69.64 | 59.28 | 50.30 | 52.91 | 47.89 | 47.40 | 46.30 | 54.13 |
| RoI-Trans [20] | ResNet101 | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 69.56 |
| A2RMNet [8] | ResNet101 | 89.84 | 83.39 | 60.06 | 73.46 | 79.25 | 83.07 | 87.88 | 90.90 | 87.02 | 87.35 | 60.74 | 69.05 | 79.88 | 79.74 | 65.17 | 78.45 |
| RRPN [26] | ResNet101 | 88.52 | 71.20 | 31.66 | 59.30 | 51.85 | 56.19 | 57.25 | 90.81 | 72.84 | 67.38 | 56.69 | 52.84 | 53.08 | 51.94 | 53.58 | 61.01 |
| R2CNN [27] | ResNet101 | 80.94 | 65.67 | 35.34 | 67.44 | 59.92 | 50.91 | 55.81 | 90.67 | 66.92 | 72.39 | 55.06 | 52.23 | 55.14 | 53.35 | 48.22 | 60.67 |
| MASK-OBB [28] | ResNet101 | 89.69 | 87.07 | 58.51 | 72.04 | 78.21 | 71.47 | 85.20 | 89.55 | 84.71 | 86.76 | 54.38 | 70.21 | 78.98 | 77.46 | 70.40 | 76.98 |
| SCRDet [11] | ResNet101 | 89.98 | 80.65 | 52.09 | 68.36 | 68.36 | 60.32 | 72.41 | 90.85 | 87.94 | 86.86 | 65.02 | 66.68 | 66.25 | 68.24 | 65.21 | 72.61 |
| SCRDet++ [30] | ResNet101 | 90.01 | 82.32 | 61.94 | 68.62 | 69.62 | 81.17 | 78.83 | 90.86 | 86.32 | 85.10 | 65.10 | 61.12 | 77.69 | 80.68 | 64.25 | 76.24 |
| CSL [31] | ResNet101 | 90.25 | 85.53 | 54.64 | 75.31 | 70.44 | 73.51 | 77.62 | 90.84 | 86.15 | 86.69 | 69.60 | 68.04 | 73.83 | 71.10 | 68.93 | 76.17 |
| RSDet [32] | ResNet101 | 89.80 | 82.90 | 48.60 | 65.20 | 69.50 | 70.10 | 70.20 | 90.50 | 85.60 | 83.40 | 62.50 | 63.90 | 65.60 | 67.20 | 68.00 | 72.20 |
| R3Det [29] | ResNet101 | 89.54 | 81.99 | 48.46 | 62.52 | 70.48 | 74.29 | 77.54 | 90.80 | 81.39 | 83.54 | 61.97 | 59.82 | 65.44 | 67.46 | 60.05 | 71.69 |
| R-RetinaNet [33] | ResNet101 | 88.82 | 81.74 | 44.44 | 65.72 | 67.11 | 55.82 | 72.77 | 90.55 | 82.83 | 76.30 | 54.19 | 63.64 | 63.71 | 69.73 | 53.37 | 68.72 |
| BBAVectors [34] | ResNet101 | 88.63 | 84.06 | 52.13 | 69.56 | 78.26 | 80.40 | 88.06 | 90.87 | 87.23 | 86.39 | 56.11 | 65.62 | 67.10 | 72.08 | 63.96 | 75.36 |
| NPMMR-Det [35] | ResNet101 | 89.44 | 83.18 | 54.50 | 66.10 | 76.93 | 84.08 | 88.25 | 90.87 | 88.29 | 86.32 | 49.95 | 68.16 | 79.61 | 79.51 | 57.26 | 76.16 |
| GGHL [5] | ResNet101 | 89.74 | 85.63 | 44.50 | 77.48 | 76.72 | 80.45 | 86.16 | 90.83 | 88.18 | 86.25 | 67.07 | 69.40 | 73.38 | 68.45 | 70.14 | 76.95 |
| RIDet-O [36] | ResNet101 | 88.94 | 78.45 | 46.87 | 72.63 | 77.63 | 80.68 | 88.18 | 90.55 | 81.33 | 83.61 | 64.85 | 63.72 | 73.09 | 73.13 | 56.87 | 74.70 |
| S2A-Net [37] | ResNet101 | 89.28 | 84.11 | 56.95 | 79.21 | 80.18 | 82.93 | 89.21 | 90.86 | 84.66 | 87.61 | 71.66 | 68.23 | 78.58 | 78.20 | 65.55 | 79.15 |
| STD [38] | ResNet101 | 88.56 | 84.53 | 62.08 | 81.80 | 81.06 | 85.06 | 88.43 | 90.59 | 86.84 | 86.95 | 72.13 | 71.54 | 84.30 | 82.05 | 78.94 | 81.66 |
| Strip-RCNN [39] | ResNet101 | 89.14 | 84.90 | 61.78 | 83.50 | 81.54 | 85.87 | 88.64 | 90.89 | 88.02 | 87.31 | 71.55 | 70.74 | 78.66 | 79.81 | 78.16 | 81.40 |
| FEHD-Net (Ours) | Transformer | 90.12 | 84.31 | 69.52 | 82.17 | 69.60 | 87.23 | 89.93 | 90.86 | 88.02 | 71.84 | 79.85 | 76.04 | 77.53 | 74.44 | 83.42 | 81.73 |

PL: plane; BD: baseball diamond; BR: bridge; GTF: ground track field; SV: small vehicle; LV: large vehicle; SH: ship; TC: tennis court; BC: basketball court; ST: storage tank; SBF: soccer-ball field; RA: roundabout; HA: harbor; SP: swimming pool; HC: helicopter.

| Model | Backbone | mAP (%) |
|---|---|---|
| RoI-Transformer [20] | ResNet101 | 86.20 |
| RSDet [40] | ResNet50 | 86.5 |
| BBAVectors [34] | ResNet101 | 88.60 |
| R3Det [29] | ResNet101 | 89.26 |
| S2ANet [37] | ResNet101 | 90.17 |
| ReDet [1] | ResNet101 | 90.46 |
| Oriented R-CNN [41] | ResNet101 | 90.50 |
| FEHD-Net (Ours) | ResNet101 | 92.73 |

| Model | Backbone | Input Size | Car | Airplane | mAP (%) |
|---|---|---|---|---|---|
| Faster RCNN [7] | ResNet50 | 800 × 800 | 86.87 | 89.86 | 88.36 |
| RoI Transformer [20] | ResNet50 | 800 × 800 | 88.02 | 90.02 | 89.02 |
| SLA [42] | ResNet50 | 800 × 800 | 88.57 | 90.30 | 89.44 |
| CFC-Net [43] | ResNet50 | 800 × 800 | 89.29 | 88.69 | 89.49 |
| TIOE-Det [44] | ResNet50 | 800 × 800 | 88.83 | 90.15 | 89.49 |
| RIDet-O [36] | ResNet50 | 800 × 800 | 88.88 | 90.35 | 89.62 |
| DAL [45] | ResNet50 | 800 × 800 | 89.25 | 90.49 | 89.87 |
| S2ANet [37] | ResNet50 | 800 × 800 | 89.56 | 90.42 | 89.99 |
| Ours | ResNet50 | 800 × 800 | 90.28 | 92.19 | 91.67 |

| With PICM | With PDM | With CFM-Loss | mAP (%) |
|---|---|---|---|
| ✗ | ✗ | ✗ | 83.47 |
| ✓ | ✗ | ✗ | 86.63 |
| ✗ | ✓ | ✗ | 85.02 |
| ✓ | ✓ | ✗ | 88.15 |
| ✓ | ✗ | ✓ | 88.93 |
| ✓ | ✓ | ✓ | 92.73 |

| Cascade Number | HRSC2016 mAP (%) | UCAS-AOD mAP (%) |
|---|---|---|
| 1 | 90.31 | 89.83 |
| 2 | 91.02 | 89.94 |
| 3 | 92.73 | 91.67 |
| 4 | 89.28 | 89.88 |

| 3 × 3 (Square-Conv) | 5 × 1, 1 × 5 (Strip-Conv) | 7 × 1, 1 × 7 (Strip-Conv) | 9 × 1, 1 × 9 (Strip-Conv) | mAP (%) | Gain (%) |
|---|---|---|---|---|---|
| ✓ | ✗ | ✓ | ✗ | 88.93 | - |
| ✓ | ✓ | ✗ | ✗ | 89.21 | +0.28 |
| ✓ | ✗ | ✗ | ✓ | 89.73 | +0.80 |
| ✓ | ✓ | ✓ | ✓ | 91.67 | +2.74 |
| ✗ | ✓ | ✓ | ✓ | 89.46 | +0.53 |
| ✓ | 1 × 5, 5 × 1 | 1 × 7, 7 × 1 | 1 × 9, 9 × 1 | 90.57 | +1.64 |
| ✓ | 5 × 5 | 7 × 7 | 9 × 9 | 89.75 | +0.82 |
| ✓ | 1 × 5, 5 × 1 | 1 × 7, 7 × 1 | 1 × 9, 9 × 1 | 89.75 | +0.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, W.; Ji, J.; Jing, D. Feature Equalization and Hierarchical Decoupling Network for Rotated and High-Aspect-Ratio Object Detection. Symmetry 2025, 17, 1491. https://doi.org/10.3390/sym17091491
