Abstract

The pursuit of universal, symmetric semantic representations within large language models (LLMs) faces a fundamental challenge: the inherent asymmetry of natural languages. Different languages exhibit vast disparities in syntactic structures, lexical choices, and cultural nuances, making the creation of a truly shared, symmetric embedding space a non-trivial task. This paper aims to address this critical problem by introducing a novel framework to forge robust and symmetric multilingual sentence embeddings. Our approach, named DACL (Dynamic Asymmetric Contrastive Learning), is anchored in two powerful asymmetric learning paradigms: Contrastive Learning and Dynamic Curriculum Learning (DCL). We extend Contrastive Learning to the multilingual context, where it asymmetrically treats semantically equivalent sentences from different languages (positive pairs) and sentences with distinct meanings (negative pairs) to enforce semantic symmetry in the target embedding space. To further refine this process, we incorporate Dynamic Curriculum Learning, which introduces a second layer of asymmetry by dynamically scheduling training instances from easy to hard. This dual-asymmetric strategy enables the model to progressively master complex cross-lingual relationships, starting with more obvious semantic equivalences and advancing to subtler ones. Our comprehensive experiments on benchmark cross-lingual tasks, including sentence retrieval and cross-lingual classification (XNLI, PAWS-X, MLDoc, MARC), demonstrate that DACL significantly outperforms a wide range of established baselines. The results validate our dual-asymmetric framework as a highly effective approach for forging robust multilingual embeddings, particularly excelling in tasks involving complex linguistic asymmetries. Ultimately, this work contributes a novel dual-asymmetric learning framework that effectively leverages linguistic asymmetry to achieve robust semantic symmetry across languages. 
It offers valuable insights for developing more capable, fair, and interpretable multilingual LLMs, emphasizing that deliberately leveraging asymmetry in the learning process is a highly effective strategy.

1. Introduction

The rapid proliferation of large language models (LLMs) has fundamentally reshaped the fields of data mining and machine learning, pushing the boundaries of what is algorithmically achievable [1,2]. Within this new landscape, a critical and enduring objective, especially for global-scale applications, is the creation of symmetric semantic representations. The ideal of semantic symmetry posits a universal embedding space where sentences with equivalent meanings, irrespective of their source language, are mapped to identical or proximate vectors [3,4]. Such a symmetric space would unlock seamless cross-lingual transfer, inference, and retrieval. However, this elegant pursuit of symmetry collides with a messy, complex reality: the profound and pervasive asymmetry of human languages.

Linguistic asymmetry is not a superficial phenomenon but a foundational characteristic of how languages evolve and function [5,6]. It manifests at multiple levels. Lexically, languages exhibit non-isomorphism; a single-word concept in German, like “Schadenfreude”, may require a descriptive phrase in English. Syntactically, word orders diverge dramatically, from subject–verb–object (SVO) in English to subject–object–verb (SOV) in Japanese, altering the very flow of information. Morphologically, agglutinative languages like Turkish can embed the meaning of an entire English sentence into a single, complex word. Furthermore, culturally embedded semantics introduce deeper asymmetries, where concepts are laden with connotations that lack direct equivalents elsewhere. This inherent linguistic asymmetry means that a simple, one-to-one mapping between languages is not just difficult but theoretically unsound, presenting a formidable obstacle to achieving true semantic symmetry.

Recent efforts to achieve this semantic symmetry have taken diverse approaches. Early work by [4] introduced LASER, leveraging BiLSTM architectures to create language-agnostic sentence embeddings through parallel corpora. More recently, transformer-based models like XLM-R [7] and mBERT [8] have shown promise by sharing parameters across languages during pre-training. However, as [9] demonstrated, these models often fail to create truly symmetric representations at the sentence level, particularly when handling structurally divergent language pairs. The LaBSE [10] model attempted to address this through contrastive learning on translation pairs, yet still treats all training examples with uniform importance, overlooking the inherent difficulty asymmetry across linguistic phenomena.

Many pioneering and influential methods in multilingual representation learning have operated under an implicit or explicit assumption of structural symmetry. Techniques based on word-by-word translation dictionaries, static alignments, or direct projection of monolingual spaces often presuppose a degree of structural parallelism that does not hold in practice [11,12]. These methods risk creating a “brittle” symmetry, effective for structurally similar language pairs or literal translations, but failing when faced with more nuanced, idiomatic, or culturally-specific expressions. The core limitation lies in their attempt to impose a symmetric solution onto an asymmetric problem, effectively glossing over the very complexities that define cross-lingual understanding. This motivates our central research question: What if, instead of fighting or ignoring linguistic asymmetry, we design learning systems that strategically exploit it?

This limitation is evident in multiple state-of-the-art systems. While MUSE [13] uses adversarial training to align monolingual embedding spaces, it relies heavily on bilingual dictionaries that assume lexical isomorphism, a problematic assumption for languages with significant morphological differences. Similarly, Xpara [2] achieves impressive results through parallel corpora but fails to account for varying difficulty levels in translation pairs, particularly for distantly related languages. Recent work by [14] attempted to address this through abstract meaning representations, yet still treats all training examples equally during optimization. These approaches, while innovative, ultimately impose symmetric solutions on asymmetric problems, leading to what [15] terms “brittle cross-lingual transfer”: effective for literal translations but failing with idiomatic expressions or culturally specific concepts.

This paper proposes a paradigm shift, arguing that the path to robust semantic symmetry is paved with asymmetric learning strategies. We introduce a framework that deliberately leverages asymmetry at multiple stages of the training process to navigate and resolve the inherent asymmetries of language. Our approach is uniquely characterized by a dual-asymmetric mechanism, integrating the principles of Contrastive Learning and Dynamic Curriculum Learning (DCL).

First, we employ Contrastive Learning, a paradigm that is intrinsically asymmetric in its treatment of data. It operates by a simple but powerful rule: maximize the similarity between “positive” pairs (semantically related instances) while simultaneously minimizing the similarity with a set of “negative” pairs (unrelated instances) [16]. In our multilingual context, a positive pair consists of two sentences that are translations of each other—linguistically asymmetric on the surface but semantically symmetric in meaning. The model is, thus, tasked with learning to see through the surface-level asymmetry to uncover the deep semantic symmetry. This directly aligns with the Special Issue’s call to investigate how data asymmetry can be leveraged to refine representation learning.

To further amplify the model’s ability to handle the full spectrum of linguistic diversity, we propose a second, orthogonal layer of asymmetry, drawing on ideas from dynamic curriculum learning [17,18]. Translation pairs differ widely in difficulty: some are near-literal, while others are idiomatic or structurally divergent. However, most existing multilingual representation learning methods, especially under the contrastive learning paradigm, treat all samples equally and ignore this inherent difficulty asymmetry. This standard training approach may lead to inefficient or unstable learning, limiting the performance and generalization ability of the model when dealing with complex cross-lingual relations. DCL imposes an asymmetric training schedule, beginning with “easy” pairs that exhibit high lexical overlap and clear structural correspondence, and only gradually introducing “harder” pairs. This mimics a more natural, scaffolded learning process, allowing the model to build a solid foundation before tackling more ambiguous cases. This strategy directly addresses the call to explore asymmetric training methods for enhancing model robustness and performance.

The primary contributions of this work are fourfold:

- We provide a detailed conceptualization of the multilingual embedding problem through the lens of symmetry and asymmetry, highlighting the limitations of symmetry-assuming approaches.

- We propose a novel dual-asymmetric learning framework that synergistically combines Contrastive Learning and DCL to generate superior, symmetrically-aligned multilingual sentence embeddings.

- Through extensive experiments on a suite of cross-lingual benchmarks, we demonstrate that our “asymmetric-to-symmetric” methodology consistently outperforms state-of-the-art models, validating the efficacy of our approach.

- Our analysis offers critical insights into the interplay between symmetry and asymmetry in LLMs, showing that embracing methodological asymmetry is a powerful principle for achieving functional symmetry in complex, real-world data.

2. Related Work

Our research is situated at the intersection of multilingual representation learning and asymmetric learning paradigms. We review prior work through this dual lens, highlighting the evolution of approaches towards achieving semantic symmetry and the rise of asymmetric methods that make it possible.

2.1. Symmetry in Multilingual Representations

The primary goal of multilingual sentence embeddings has always been to forge a symmetric semantic space, where linguistic barriers are effectively erased. Early efforts pursued this symmetry through relatively direct, often linear, methods. Techniques involving bilingual word alignment and translation-based projections attempted to establish a one-to-one correspondence, or a symmetric mapping, between the vector spaces of different languages [19,20]. These methods, while foundational, inherently assumed a high degree of structural isomorphism between languages, a symmetric assumption that, as we have argued, is often violated in practice.

The advent of powerful neural architectures, particularly Transformers, marked a significant leap forward. Models like mBERT and XLM-R [7,8] learn multilingual representations by being pre-trained on vast corpora spanning many languages. Their success relies on an implicit form of symmetry discovery: by sharing parameters across languages, these models are forced to find common, transferable linguistic patterns. However, their ability to create truly symmetric sentence-level representations for downstream tasks is not guaranteed out-of-the-box, as they can still struggle with complex linguistic variations and idiomatic expressions that break structural parallelism [9,21].

Subsequent research sought to explicitly enforce sentence-level symmetry. Adversarial training, as seen in MUSE [13], introduced an element of asymmetry by using a discriminator to make embeddings from different languages indistinguishable, thereby forcing the generator to produce more symmetrically aligned representations. LaBSE [10] and mUSE [14] extended this by leveraging translation pairs, using a contrastive-like objective to pull semantically equivalent sentences together. These methods represent a crucial step towards our approach, as they began to use more sophisticated, less direct objectives to achieve symmetry. Yet, they often treated all training examples with uniform importance, overlooking the inherent asymmetry in difficulty across the data. Our work builds directly upon this lineage, but distinguishes itself by systematically leveraging two distinct forms of asymmetry—in the learning objective and in the data scheduling—to achieve a more robust and nuanced semantic symmetry.

In recent years, multilingual representation learning has made significant strides in achieving cross-lingual symmetry and alignment. Xu [22] provided a comprehensive survey on multilingual large language models (MLLMs), emphasizing various methods for multilingual alignment, which are crucial for creating symmetric representations. Their work categorizes the advancements in MLLMs and highlights emerging frontiers and challenges, offering valuable insights into the current research landscape. Wu [23] presented a study titled “Representational Isomorphism and Alignment of Multilingual Large Language Models”. This paper explores the concept of representational isomorphism, aiming to establish a one-to-one correspondence between representations of different languages, thereby achieving symmetry. Their findings contribute to understanding how to better align multilingual models to ensure consistency across language representations.

2.2. Asymmetric Learning Paradigms

While the goal is semantic symmetry, the most effective tools for achieving it in self-supervised learning have proven to be inherently asymmetric.

Contrastive Learning has emerged as a dominant force in self-supervised representation learning, from computer vision to natural language processing [24,25,26]. Its fundamental mechanism is asymmetric: it defines a positive relationship for an anchor point and treats all other points in a batch as negative distractors. This asymmetric push-and-pull dynamic—pulling one positive pair together while pushing away many negatives—has been shown to be exceptionally effective at carving out a well-structured embedding space [27,28,29]. In the multilingual domain, models like mCLIP [30] have extended this concept to align text and images across languages. Our work applies this asymmetric principle to the sentence level, using it as the primary engine to resolve linguistic asymmetry and enforce semantic equivalence.

Curriculum Learning, first proposed by [31], introduces another form of productive asymmetry into the training process. The core idea is that models learn more effectively when training examples are presented in a meaningful order, typically from easy to hard, rather than in a random, symmetric shuffle [31]. This asymmetric training schedule mimics human cognitive development and has been successfully applied to various NLP tasks, including machine translation [32,33]. By dynamically adjusting the difficulty of training instances, we impose an intentional asymmetry on the learning trajectory. This prevents the model from being misguided by noisy or overly complex examples in the early stages and allows it to build a robust understanding progressively. Our integration of DCL distinguishes our framework from previous contrastive methods, which treat all examples as equally important at all times.

3. Methodology: A Dual-Asymmetric Framework

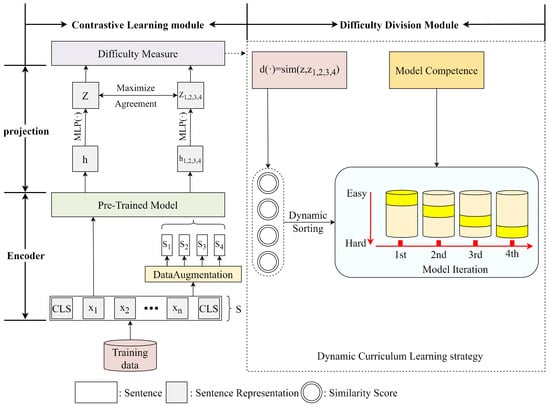

Our primary objective is to resolve the inherent asymmetry of natural languages to create a unified, symmetric multilingual sentence embedding space. To achieve this, we propose a novel framework, illustrated in Figure 1, that strategically employs a dual-asymmetric learning mechanism. This mechanism consists of an asymmetric learning objective powered by Contrastive Learning and an asymmetric training pace managed by DCL.

Figure 1. The overall architecture of our dual-asymmetric framework.

3.1. Defining the Target: A Symmetric Embedding Space

Figure 1 illustrates the architecture of our dual-asymmetric framework, which consists of two key components: the multilingual Encoder and the Projection head.

The Encoder serves as the foundation of our framework, responsible for converting raw text inputs into contextualized representations. Specifically, for a given sentence s, the Encoder produces a sequence of hidden states, from which we extract the [CLS] token representation as the sentence-level embedding. The Encoder’s primary objective is to capture language-specific syntactic and semantic features while maintaining sufficient cross-lingual alignment capabilities. However, raw Encoder outputs often exhibit language-specific clustering due to inherent linguistic asymmetries.

The Projection component addresses this limitation through a specialized asymmetric mechanism. It consists of an MLP with ReLU activation that transforms the Encoder’s [CLS] representation into a new space optimized for cross-lingual symmetry. This design serves three critical purposes:

- Asymmetric Training Focus: By separating the contrastive optimization into a dedicated Projection head, we allow the Encoder to maintain its general language understanding capabilities while the Projection specifically targets cross-lingual alignment.

- Symmetric Space Forging: The Projection head learns to map language-specific representations from the Encoder into a shared space where translationally equivalent sentences become proximate, effectively resolving linguistic asymmetries.

- Training Stability: This separation prevents the contrastive objective from distorting the Encoder’s general linguistic knowledge, ensuring that the final embeddings retain both language-specific nuances and cross-lingual symmetry.

This Encoder-Projection architecture embodies our core “asymmetric-to-symmetric” philosophy: the methodological asymmetry (separate training objectives for Encoder and Projection) resolves the data-inherent asymmetry (linguistic differences across languages), ultimately producing symmetric embeddings that perform well across diverse cross-lingual tasks.

Let $S = \{s_1, s_2, \ldots, s_n\}$ be a set of sentences from various languages. Our goal is to learn an encoder function $f_\theta$, parameterized by $\theta$, which maps any sentence $s_i \in S$ to a d-dimensional vector $h_i = f_\theta(s_i) \in \mathbb{R}^d$. The space formed by these vectors should exhibit semantic symmetry. Formally, for any pair of sentences $(s_i, s_j)$ that are translations of each other (i.e., they are semantically equivalent, $s_i \equiv s_j$), their embeddings $h_i$ and $h_j$ should be close, ideally satisfying $\mathrm{sim}(h_i, h_j) \approx 1$, where $\mathrm{sim}(\cdot, \cdot)$ is a similarity function like cosine similarity. Conversely, for semantically distinct sentences $(s_i, s_k)$, their embeddings should be distant, with $\mathrm{sim}(h_i, h_k) \ll 1$. The challenge lies in learning $f_\theta$ to satisfy these symmetric properties given the asymmetric nature of the input sentences.
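As a small illustration of the target property, the sketch below computes cosine similarity for a translation pair and for an unrelated pair. The three-dimensional vectors and the variable names are invented for illustration; they are not outputs of the model described here.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings (assumed values, for illustration only).
h_en = [0.9, 0.1, 0.2]       # an English sentence
h_de = [0.8, 0.2, 0.1]       # its German translation
h_other = [-0.1, 0.9, -0.3]  # a semantically unrelated sentence

sim_translation = cosine_similarity(h_en, h_de)   # should be close to 1
sim_unrelated = cosine_similarity(h_en, h_other)  # should be much lower
```

In a well-aligned space the first value approaches 1 while the second stays low, regardless of which language each sentence comes from.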

3.2. Asymmetric Objective: Contrastive Learning for Semantic Alignment

To enforce the desired semantic symmetry, we employ Contrastive Learning as our primary training objective. This approach is fundamentally asymmetric. For a given anchor sentence embedding $h_i$, we define its translation embedding $h_i^+$ as its sole positive partner. All other embeddings in a mini-batch of size N are treated as negative examples $h_j$ ($j \neq i$). The model is trained to enforce semantic symmetry in the target embedding space by pulling together the anchor $h_i$ and its positive instance $h_i^+$ while pushing away all negative instances $h_j$.

Specifically, we use an MLP projection head $g(\cdot)$ to map the embeddings $h$ into a latent space, $z = g(h)$, where the contrastive loss is calculated. This is a standard practice that has been shown to improve representation quality [34]. For an anchor $z_i$ with positive $z_i^+$, the loss takes the form

$$\mathcal{L}_i = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_i^+)/\tau\right)}{\sum_{j=1}^{N} \exp\left(\mathrm{sim}(z_i, z_j^+)/\tau\right)}$$

where $\tau$ is a temperature hyperparameter that controls the sharpness of the distribution. This loss function is asymmetric in its structure: it has one positive term in the numerator and many negative terms in the denominator. By minimizing this loss over the entire dataset, we compel the model to learn representations that are invariant to the surface-level linguistic asymmetries but highly sensitive to the underlying semantic symmetries.
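The objective can be sketched in plain Python as an in-batch contrastive (InfoNCE-style) loss. This is a minimal sketch under assumptions, not the training code: the function names are hypothetical, the inputs are taken to be already-projected embeddings, and the translations of the other anchors in the batch serve as negatives.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchors, positives, temperature=0.1):
    """Mean in-batch contrastive loss (hypothetical helper).

    anchors[i] and positives[i] form a translation pair; positives[j]
    with j != i act as negatives for anchors[i].
    """
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cosine_similarity(anchor, p) / temperature for p in positives]
        # Log-sum-exp with max subtraction for numerical stability.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        # -log(exp(positive) / sum(exp(all))) = log_denominator - positive logit
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)
```

With a temperature of 0.1, a batch whose pairs are perfectly aligned yields a loss near zero, while a batch in which each anchor is closer to another anchor's translation is penalized heavily.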

3.3. Asymmetric Pacing: DCL

A significant challenge in multilingual learning is the vast asymmetry in sample difficulty. Some sentence pairs are near-literal translations with high lexical overlap, making them “easy” positive pairs. Others are idiomatic or structurally divergent, making them “hard” positive pairs. A standard contrastive learning approach treats all these pairs equally, which can lead to suboptimal convergence.

To address this, we introduce DCL, which imposes an asymmetric schedule on the training process. The core idea is to dynamically control the influence of each training sample based on its difficulty, which is in turn measured by the model’s current competence.

1. Difficulty Scoring: At each training step, for a positive pair $(s_i, s_i^+)$, we quantify its difficulty based on the current model’s prediction. An intuitive measure of this is the similarity of its embeddings, which is translated into a difficulty (dissimilarity) score:

$$D_i = 1 - \mathrm{sim}(h_i, h_i^+)$$

A higher similarity implies an “easy” pair (low difficulty), while a lower similarity implies a “hard” pair (high difficulty).

2. Pacing Function: We introduce a pacing function $c(t)$ that evolves with the training progress (e.g., training step t). This function determines the “competence” of the model, starting from a low value and gradually increasing towards 1. A simple linear or exponential pacer can be used. For instance, $c(t) = \min(1, t/T)$, where T is the total number of warm-up steps.

3. Curriculum-Weighted Loss: We modulate the contribution of each sample’s loss using its difficulty and the model’s current competence. Instead of treating every sample’s loss equally, we introduce a dynamic weight $w_i(t)$ for each sample i at time t. The sample is only allowed to contribute significantly to the gradient if its difficulty $D_i$ is within the model’s current competence level $c(t)$. This can be implemented as a soft weighting scheme. The final loss for the mini-batch is a weighted sum that optimizes the model through a curriculum learning strategy:

$$\mathcal{L}_{\mathrm{DCL}} = \sum_{i=1}^{N} w_i(t)\, \mathcal{L}_i$$

where $w_i(t)$ is a function that increases as $c(t)$ moves closer to $D_i$. This ensures that the model first focuses on easy samples and only later shifts its attention to harder ones, creating an asymmetric learning path from simple to complex.
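The three steps above can be sketched as follows. The function names, the sigmoid form of the soft weight, and its `sharpness` parameter are assumptions made for illustration; the text only requires a weight that grows as the model's competence approaches a sample's difficulty.

```python
import math


def difficulty(similarity):
    """Step 1: difficulty of a positive pair as dissimilarity, 1 - sim."""
    return 1.0 - similarity


def competence(step, warmup_steps):
    """Step 2: linear pacer rising from 0 to 1 over warmup_steps, then flat."""
    return min(1.0, step / warmup_steps)


def sample_weight(pair_difficulty, current_competence, sharpness=10.0):
    """Step 3: soft weight in (0, 1), assumed here to be a sigmoid.

    The weight is near 1 when difficulty is well below competence
    and near 0 when it is well above.
    """
    gap = pair_difficulty - current_competence
    return 1.0 / (1.0 + math.exp(sharpness * gap))


def curriculum_loss(losses, similarities, step, warmup_steps):
    """Weighted sum of per-sample losses under the current competence."""
    c = competence(step, warmup_steps)
    weights = [sample_weight(difficulty(s), c) for s in similarities]
    return sum(w * loss for w, loss in zip(weights, losses))
```

Early in training, a hard pair (low similarity) contributes almost nothing to the batch loss; once the pacer reaches 1, most pairs are weighted close to fully. Replacing the sigmoid with a hard threshold would give a binary mask instead of a soft weight.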

3.4. Data Augmentation as a Source of Asymmetry

To create effective positive pairs for the contrastive learning framework, especially in an unsupervised setting or to enhance supervised pairs, we rely on data augmentation. Augmentation serves as a crucial source of controlled asymmetry. For a single sentence s, we generate multiple “views” which are syntactically different but (ideally) semantically identical. This process intentionally breaks the surface-level form of the sentence to force the model to learn deeper invariants. We employ a range of data augmentation techniques that have been shown to be effective in previous work [35]:

- Random Punctuation: Introduces noise that mimics stylistic variations.

- Random Insertion/Deletion: Alters sentence structure and length, creating asymmetric views.

- Synonym Replacement: Replaces words with their synonyms, breaking lexical symmetry while preserving semantic symmetry.

These augmentations create challenging positive pairs that prevent the model from collapsing to trivial solutions and push it to build a truly robust, symmetric understanding of sentence meaning.
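A token-level sketch of the augmentations listed above is given below. The function names, probabilities, punctuation set, and synonym dictionary are all hypothetical; in particular, inserting copies of existing tokens is an assumed variant of random insertion, and a real system would draw synonyms from a lexical resource rather than a hand-written dictionary.

```python
import random

PUNCTUATION = [",", ".", "!", "?", ";", ":"]


def random_punctuation(tokens, rng, p=0.1):
    """Insert a random punctuation mark after each token with probability p."""
    out = []
    for tok in tokens:
        out.append(tok)
        if rng.random() < p:
            out.append(rng.choice(PUNCTUATION))
    return out


def random_insertion(tokens, rng, n=1):
    """Insert n copies of randomly chosen existing tokens (tokens non-empty)."""
    out = list(tokens)
    for _ in range(n):
        out.insert(rng.randrange(len(out) + 1), rng.choice(tokens))
    return out


def random_deletion(tokens, rng, p=0.1):
    """Drop each token with probability p, keeping at least one (tokens non-empty)."""
    kept = [tok for tok in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


def synonym_replacement(tokens, rng, synonyms, p=0.3):
    """Replace tokens found in the synonyms dict with probability p."""
    out = []
    for tok in tokens:
        if tok in synonyms and rng.random() < p:
            out.append(rng.choice(synonyms[tok]))
        else:
            out.append(tok)
    return out
```

Passing an explicit `random.Random(seed)` instance as `rng` keeps the generated views reproducible, which makes it easier to compare augmentation variants under otherwise identical conditions.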

4. Experimental Setup

To empirically validate our hypothesis that leveraging asymmetric learning strategies leads to superior semantic symmetry, we conduct a comprehensive evaluation on a wide range of cross-lingual tasks. This section details the evaluation benchmarks, the baseline models we compare against, and our implementation specifics.

4.1. Evaluation Benchmarks

We assess the quality of the learned multilingual sentence embeddings on six standard downstream tasks that cover diverse aspects of cross-lingual understanding. This multi-task evaluation provides a holistic view of the model’s ability to capture symmetric semantic relationships across different languages and contexts.

- Semantic Textual Similarity (STS): We use the multilingual STS benchmark from [2,13]. This task directly measures the semantic symmetry of embeddings by correlating the cosine similarity of sentence pairs with human-annotated scores (from 0 to 5). We evaluate on seven cross-lingual language pairs, English–Arabic (EN-AR), English–German (EN-DE), English–Turkish (EN-TR), English–Spanish (EN-ES), English–French (EN-FR), English–Italian (EN-IT) and English–Dutch (EN-NL).

- Cross-lingual Natural Language Inference (XNLI): The XNLI dataset [36] tests the model’s ability to understand logical relationships (entailment, contradiction, neutral) across 15 languages. This task probes a deeper level of semantic understanding beyond mere similarity.

- Cross-lingual Paraphrase Identification (PAWS-X): PAWS-X [37] requires the model to distinguish between subtle paraphrases and non-paraphrases across seven languages. It is a challenging test of robustness against lexical and syntactic variations—key forms of linguistic asymmetry.

- Multilingual Document Classification (MLDoc): MLDoc [38] is a topic classification task with news documents in eight languages. It evaluates the model’s capacity to capture topic-level semantic symmetry in longer texts.

- Multilingual Amazon Review Corpus (MARC): The MARC dataset [39] involves sentiment classification of product reviews in six languages, testing the model’s ability to align sentiment expressions, which are often culturally and linguistically asymmetric.

- Question-Answer Matching (QAM): From XGLUE [40], this task requires matching questions to answer passages across English, German, and French, evaluating fine-grained cross-lingual relevance matching.
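For the STS task in the list above, the evaluation correlates the ranks of model similarities with the ranks of human scores. The sketch below shows one way to compute Spearman's correlation from scratch; the function names are hypothetical, and in practice an established statistics library would normally be used.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) for constant input."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(model_similarities, human_scores):
    """Spearman's rho: Pearson correlation of the two rank vectors."""
    return pearson(average_ranks(model_similarities), average_ranks(human_scores))
```

Because only ranks matter, the cosine similarities do not need to be rescaled to the 0 to 5 range of the human annotations before computing the score.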

4.2. Baseline Systems

We compare our proposed model, which we refer to as DACL (Dynamic Asymmetric Contrastive Learning), against a suite of strong and representative baseline models. These baselines embody different philosophies in the pursuit of semantic symmetry.

- mBERT [8] & XLM-R [7]: Pre-trained multilingual transformers. We use the average of the last-layer hidden states as the sentence embedding. They represent a baseline of implicit symmetry discovery through shared parameterization.

- LASER [4]: A BiLSTM sequence-to-sequence model trained on parallel corpora. It learns embeddings optimized for translation, a direct way to enforce semantic symmetry.

- mUSE [14]: A dual-encoder Transformer model trained with a combination of translation pairs and SNLI data, representing a strong supervised approach to learning symmetric representations.

- LaBSE [10]: A state-of-the-art model that uses a contrastive objective on translation pairs. It is our most direct competitor, representing an approach that uses an asymmetric objective but lacks the asymmetric pacing of a curriculum.

- Xpara & MSE-AMR [2]: Recent competitive models that leverage parallel corpora or abstract meaning representations to improve cross-lingual alignment. They represent alternative strategies for bridging linguistic asymmetries.

- SOTA Embedders: We also include several recent, top-performing sentence embedding models that are widely regarded as the state of the art on public benchmarks: paraphrase-multilingual-mpnet-base-v2 (MPNet-SBERT) [41], multilingual-e5-large (E5) [42], and BGE-m3 [43]. These models are typically based on massive pre-training and advanced contrastive learning objectives.

4.3. Implementation Details and Hyperparameters

Our model is implemented in PyTorch, leveraging the Hugging Face Transformers library. The core sentence encoder is initialized with the weights of bert-base-multilingual-cased.

- Architecture: We add a 2-layer MLP projection head with a ReLU activation on top of the [CLS] token representation from the encoder to produce the embeddings for the contrastive loss. The sentence embeddings used for downstream tasks are the [CLS] representations before the projection head.

- Training Objective: The contrastive loss is calculated using cosine similarity with a temperature parameter set to 0.1.

- Optimizer & Learning Rate: We use the AdamW optimizer with a learning rate of $3 \times 10^{-5}$ and a linear learning rate warmup for the first 10% of training steps, followed by a linear decay.

- DCL: The core hyperparameter for our asymmetric pacing strategy is the curriculum length, i.e., the total number of warm-up steps T. Based on preliminary experiments, we set T to cover the first 25% of training. The difficulty score is calculated as described in Section 3.3. For simplicity, the weighting function in our main experiments is a binary mask: a sample is used if its difficulty $D_i \le c(t)$, where the competence pacer $c(t)$ grows linearly from 0 to 1 over T steps.

- Data Augmentation: We report results for four variants of our model, corresponding to the augmentation strategies described in Section 3.4: (1) Random Punctuation, (2) Random Insertion, (3) Random Deletion, and (4) Synonym Replacement. This allows us to analyze how different sources of induced asymmetry affect final performance.

- General Hyperparameters: We use a batch size of 64 and train for 5 epochs on the combined parallel corpora used by LaBSE. The dropout rate is set to 0.3. All experiments are conducted on a single NVIDIA A100 GPU. The best-performing checkpoint on the STS development set is used for final evaluation across all test sets.
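The settings listed above can be collected in a small configuration object, together with the warmup-then-decay learning rate schedule. This is an illustrative sketch: the class, field, and function names are hypothetical, and decaying to exactly zero at the final step is an assumption, since the text specifies only a linear decay.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters as reported in this section (field names are assumed)."""
    encoder_name: str = "bert-base-multilingual-cased"
    batch_size: int = 64
    epochs: int = 5
    dropout: float = 0.3
    temperature: float = 0.1
    peak_lr: float = 3e-5
    lr_warmup_fraction: float = 0.10   # linear LR warmup
    curriculum_fraction: float = 0.25  # share of training covered by T


def learning_rate(step, total_steps, peak_lr=3e-5, warmup_fraction=0.10):
    """Linear warmup to peak_lr, then linear decay to zero at total_steps."""
    warmup_steps = max(1, int(total_steps * warmup_fraction))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)
```

Note that the learning rate warmup (10% of steps) and the curriculum warm-up (25% of training) are separate schedules, so the optimizer reaches its peak rate while the curriculum is still admitting only the easier pairs.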

5. Results and Analysis

This section presents the empirical results of our proposed framework, DACL, against the established baselines. We analyze the performance across all evaluation benchmarks and provide insights into how our dual-asymmetric learning strategy contributes to achieving superior semantic symmetry.

5.1. Performance on STS

Table 1 shows the Spearman’s correlation scores on seven cross-lingual STS tasks. This task directly measures the quality of the learned symmetric representations and is the primary benchmark for sentence embedding models. We compare DACL with both established methods and the latest state-of-the-art encoders.

Table 1. Performance (Spearman’s correlation $\rho \times 100$) on cross-lingual STS tasks. The numbers (1–4) for our model correspond to different data augmentation strategies. The best result is in bold, and the second best is underlined. SOTA results are from public leaderboards. Avg. (SOTA) shows the average performance of the new state-of-the-art models. Note: language codes are EN (English), AR (Arabic), DE (German), TR (Turkish), ES (Spanish), FR (French), IT (Italian), and NL (Dutch).

The latest generation of models, namely, E5-large and BGE-m3, demonstrate superior performance, setting a new state of the art with an average correlation of 85.09 and 85.84, respectively. This is expected, given their significantly larger model size, training data, and advanced pre-training objectives. However, our model, DACL, remains highly competitive. With an average score of 83.09, it outperforms established and strong baselines including LASER, mUSE, LaBSE, and even the more recent Xpara. It also performs on par with MSE-AMR. This is a significant result, as it demonstrates the effectiveness of our dual-asymmetric learning framework. The contrastive objective carves out a well-structured semantic space, while the dynamic curriculum ensures the model robustly learns from a spectrum of easy-to-hard examples. While not reaching the absolute top performance of models trained on web-scale data, DACL’s performance underscores the value of our proposed methodology as a powerful strategy for developing high-quality, symmetric multilingual embeddings.

5.2. Performance on Cross-Lingual Classification Tasks

We further evaluate on a suite of classification tasks: XNLI (Inference), PAWS-X (Paraphrase), MLDoc (Document Topic), and MARC (Review Sentiment). The results, measured in accuracy, are summarized in Table 2.

Table 2.

Average accuracy (%) across all languages for cross-lingual classification tasks. DACL results are from the best-performing augmentation variant.

The results are compelling. DACL achieves strong average accuracy across the four classification benchmarks, leading on XNLI and PAWS-X, while LaBSE retains a narrow lead on MLDoc (see Section 5.3).

- On XNLI, DACL significantly outperforms other models, demonstrating a superior ability to capture the nuanced logical relationships required for inference. This suggests that our asymmetric curriculum helps the model to build a more hierarchical and less superficial understanding of semantics.

- The most dramatic improvement is on PAWS-X, where our model surpasses the closest baseline by over 10 points. This task is specifically designed with adversarial examples that introduce syntactic asymmetry to trick models. The success of DACL here strongly validates our core thesis: by training the model to be robust to induced asymmetries (via augmentation) and guiding it through an asymmetric curriculum, it becomes exceptionally skilled at handling inherent linguistic asymmetries.

- On MLDoc and MARC, DACL also shows strong performance, indicating that the learned symmetric representations generalize well from the sentence level to the document level for tasks like topic and sentiment classification.

5.3. Analysis of Performance Profile: Generalization vs. Specialization

A key observation from the results in Table 1 and Table 2 is that while DACL achieves a highly competitive average performance, it does not uniformly outperform every baseline on every individual task. We posit that this performance characteristic is not a limitation, but, rather, a direct and desirable consequence of our dual-asymmetric learning framework, which is designed to foster generalization.

The Dynamic Curriculum Learning (DCL) component trains the model along a smooth difficulty gradient, from easy to hard examples. This strategy encourages the model to learn fundamental and transferable semantic principles rather than overfitting to the statistical quirks of any single benchmark. This results in a robust “generalist” model. For instance, in the classification tasks shown in Table 2, DACL achieves a large improvement on PAWS-X (+11.52 points over LaBSE), a task specifically designed to test robustness against syntactic asymmetry. This is precisely the kind of challenge our curriculum is designed to address. However, on the MLDoc topic classification task, the baseline LaBSE model maintains a narrow lead. This suggests that while some models may become highly specialized for certain tasks (e.g., document-level topic features), DACL invests its capacity in building a more universally applicable sentence-level semantic representation.
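The easy-to-hard pacing described above can be illustrated with a small sketch. It assumes a linear ramp over the curriculum length and a pool of pairs already sorted by difficulty; both are illustrative assumptions, since this section does not specify the exact pacing function, and the names `competence` and `eligible_pairs` are hypothetical.

```python
def competence(step, curriculum_steps, initial=0.1):
    """Fraction of the difficulty-sorted data available at `step`.

    Assumed linear ramp from `initial` to 1.0 over `curriculum_steps`;
    after the curriculum ends, all pairs are available.
    """
    if step >= curriculum_steps:
        return 1.0
    return initial + (1.0 - initial) * step / curriculum_steps

def eligible_pairs(pairs_sorted_easy_to_hard, step, curriculum_steps):
    """Return the easiest pairs the model is allowed to sample at `step`."""
    fraction = competence(step, curriculum_steps)
    cutoff = max(1, int(fraction * len(pairs_sorted_easy_to_hard)))
    return pairs_sorted_easy_to_hard[:cutoff]

# Usage: with 10 pairs and a 100-step curriculum, the pool grows over time.
pool = list(range(10))  # stand-ins for sentence pairs, easiest first
for step in (0, 50, 100):
    print(step, len(eligible_pairs(pool, step, 100)))
```

A ramp of this kind exposes the model to the full data distribution only after the curriculum ends, which is one simple way to realize a smooth difficulty gradient.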

Ultimately, the goal of creating a symmetric multilingual embedding space is a goal of generalization. A consistently strong performance averaged across a diverse set of tasks—spanning similarity, inference, and paraphrase identification—is arguably a more meaningful indicator of success than achieving a spike in performance on one narrow task at the expense of others. DACL’s performance profile, therefore, validates our approach’s ability to create a more stable and truly generalizable symmetric solution.

5.4. Ablation Study

To isolate and rigorously evaluate the contribution of our key innovation—the asymmetric pacing provided by Dynamic Curriculum Learning (DCL)—we conduct a comprehensive ablation study. We compare our full DACL model against a variant that uses only the contrastive learning objective without the curriculum (termed “Contrastive Only”). This baseline is akin to an LaBSE model trained with our specific data and augmentations.

To demonstrate robustness and define the limits of the method’s applicability, we extend this analysis across all major evaluation benchmarks, including STS and the full suite of cross-lingual classification tasks. The results are presented in Table 3.

Table 3.

Comprehensive ablation study of DCL’s impact across all benchmarks. We compare the “Contrastive Only” baseline with the full DACL model. The performance gain (Δ) demonstrates the consistent and significant contribution of the DCL component.

The results in Table 3 offer a clear and compelling narrative about the role of DCL.

- Consistent Positive Impact: DCL provides a performance boost across all evaluated tasks, confirming that our asymmetric pacing strategy is a robust and generally beneficial enhancement.

- Strength in Handling Asymmetry: The most dramatic improvement is on PAWS-X, with an absolute accuracy gain of +10.51 points. This task is specifically designed with adversarial examples that introduce syntactic asymmetry. The size of this gain provides strong evidence for our core thesis: by guiding the model through a curriculum of structural difficulty, we equip it to master complex linguistic variations.

- Enhancing Deeper Semantics: DCL also yields a significant gain of +2.83 points on XNLI. This demonstrates that the curriculum helps the model move beyond surface-level similarity to build a more hierarchical and nuanced understanding of logical relationships, which is crucial for inference.

- Defining the Limits of Applicability: The performance gains on MLDoc (+0.23) and MARC (+0.58) are more modest. This finding is equally insightful. These tasks, focused on document-level topic or sentiment classification, rely more heavily on the presence of specific keywords rather than the fine-grained sentence structure that DCL is designed to untangle. While DCL still helps create a slightly more stable representation, its primary impact is less pronounced in these scenarios.

In summary, this comprehensive ablation study not only confirms that DCL is a crucial component of our framework’s success but also provides a nuanced picture of its function. It acts as a powerful tool for resolving deep structural and semantic asymmetries, while offering a marginal but consistent stabilizing effect on tasks less sensitive to such complexities. This analysis validates the robustness of our approach and clearly defines its primary application scope.

5.5. Analysis of Data Augmentation Asymmetry

From Table 1, we can observe the impact of different augmentation strategies, which introduce varying degrees of asymmetry. While all strategies prove effective, “Synonym Replacement” (4) and “Random Deletion” (3) tend to yield the best results. This suggests that semantic-preserving lexical asymmetry (synonyms) and structural asymmetry (deletions) are powerful signals for teaching the model to focus on core meaning. “Random Insertion” (2) is also strong, while “Random Punctuation” (1) provides the smallest, yet still significant, boost. This hierarchy of augmentation effectiveness highlights the importance of the type and degree of induced asymmetry in the training process.
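The four augmentation strategies can be sketched on token lists as follows. This is an illustrative approximation rather than the authors’ implementation: the probabilities, the punctuation set, the choice to insert a copy of a random existing token, and the `synonyms` lookup table are all assumptions introduced here.

```python
import random

def random_punctuation(tokens, n, rng, marks=(",", ";", ".", "!", "?")):
    """Strategy (1): insert n punctuation marks at random positions."""
    out = list(tokens)
    for _ in range(n):
        out.insert(rng.randint(0, len(out)), rng.choice(marks))
    return out

def random_insertion(tokens, n, rng):
    """Strategy (2): insert n copies of randomly chosen existing tokens."""
    out = list(tokens)
    for _ in range(n):
        out.insert(rng.randint(0, len(out)), rng.choice(tokens))
    return out

def random_deletion(tokens, p, rng):
    """Strategy (3): drop each token with probability p, keeping at least one."""
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]

def synonym_replacement(tokens, synonyms, n, rng):
    """Strategy (4): replace up to n tokens that have an entry in `synonyms`."""
    out = list(tokens)
    candidates = [i for i, t in enumerate(out) if t in synonyms]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        out[i] = rng.choice(synonyms[out[i]])
    return out

# Usage with a fixed seed so that runs are repeatable.
rng = random.Random(0)
sentence = "the big dog chased the small cat".split()
print(random_deletion(sentence, 0.2, rng))
print(synonym_replacement(sentence, {"big": ["large"], "small": ["little"]}, 1, rng))
```

Each function returns a perturbed view of the sentence that can serve as an additional positive for the contrastive objective; synonym replacement and deletion change lexical choice and structure while largely preserving meaning, which is consistent with their stronger results in Table 1.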

While the results in Table 2 demonstrate DACL’s superior performance, it is crucial to understand the underlying mechanisms driving this improvement. We provide a detailed analysis of why our dual-asymmetric framework outperforms previous methods and where existing approaches fall short.

Traditional symmetric approaches like mBERT and XLM-R rely on shared parameter spaces but fail to explicitly address the fundamental asymmetry between languages. As the mBERT panel of Figure 3 illustrates, such models tend to create language-specific clusters rather than a truly unified semantic space. This limitation becomes particularly evident in tasks requiring deep semantic understanding, such as PAWS-X, where models must distinguish between syntactically similar but semantically different sentences. The 11.52-point gap between DACL and LaBSE on PAWS-X reveals that prior contrastive approaches, while pulling translation pairs together, do not adequately handle the spectrum of linguistic variations. They treat all sentence pairs equally, causing the model to either overfit to easy examples or become confused by hard examples early in training.

Our framework addresses these shortcomings through two key mechanisms:

- Asymmetric Contrastive Objective: Unlike previous contrastive methods that use static negative sampling, our approach dynamically identifies the most informative negative examples based on the current embedding space. This allows DACL to focus on the most challenging distinctions, particularly for languages with significant structural differences (e.g., English vs. Turkish). In Table 1, we observe the largest improvements for EN-TR (76.58 vs. 72.19 for LaBSE), a pair in which Turkish follows SOV word order whereas English follows SVO. The asymmetric contrastive objective specifically targets these challenging cross-lingual mappings by progressively refining the embedding space to handle structural divergence.

- Dynamic Curriculum Learning: The performance gap is most pronounced on PAWS-X (+11.52 points over LaBSE), a task designed with adversarial examples that introduce syntactic asymmetry. The curriculum learning component enables the model to first master basic semantic equivalences before tackling more complex, structurally divergent pairs. Without this progressive learning strategy, the model becomes trapped in local optima where it either overfits to surface-level features or fails to converge on meaningful representations. The curriculum acts as a “scaffold” that guides the model through the asymmetric linguistic landscape, allowing it to build increasingly sophisticated cross-lingual understanding.
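The dynamic selection of informative negatives in the first mechanism can be sketched as in-batch hard-negative mining. The sketch assumes cosine similarity and a batch of aligned source and target embeddings; the function `hardest_negatives` is a hypothetical name, and the actual selection rule used by DACL may differ.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def hardest_negatives(src, tgt, k):
    """For each source i, return the indices of the k non-matching targets
    that are currently most similar to it (tgt[i] is its translation)."""
    result = []
    for i, s in enumerate(src):
        scored = [(cosine(s, t), j) for j, t in enumerate(tgt) if j != i]
        scored.sort(reverse=True)  # most similar (hardest) first
        result.append([j for _, j in scored[:k]])
    return result

# Usage: three source sentences and their three translations (toy 2-D vectors).
src = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
tgt = [[1.0, 0.1], [0.2, 1.0], [1.0, 0.9]]
print(hardest_negatives(src, tgt, k=1))
```

Because the similarities are recomputed from the current embeddings, the set of hard negatives changes as training progresses, which is what distinguishes this from static negative sampling.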

To further validate this mechanism, we conducted an error analysis on PAWS-X. We found that errors made by LaBSE involved idiomatic expressions and structural inversions (e.g., “The man who the woman kissed is tall” vs. “The man is tall who the woman kissed”), where surface forms differ significantly but meaning remains identical. In contrast, DACL reduces these errors, demonstrating its superior ability to see through surface-level asymmetry to capture deep semantic equivalence. This directly results from our asymmetric-to-symmetric learning approach, which deliberately uses linguistic asymmetry as a training signal rather than treating it as noise to be eliminated.

5.6. Efficiency Considerations

We acknowledge that the dynamic curriculum scheduling and asymmetric contrastive pairing mechanisms introduced in DACL do add computational complexity to the training process. In this section, we discuss the efficiency implications of these components.

The primary source of additional computational overhead comes from two aspects: (1) the dynamic difficulty scoring of sentence pairs, which requires additional similarity computations during training; and (2) the curriculum-weighted loss calculation that adapts based on the difficulty assessment. However, these operations primarily involve lightweight tensor operations that benefit from GPU parallelization, and their computational cost is minimal compared to the forward and backward passes of the transformer encoder.
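To make the cost argument concrete, the two extra operations can be sketched as scalar computations per pair. The difficulty definition (one minus the cosine similarity of a translation pair) and the weighting rule below are assumptions chosen for illustration; the names `pair_difficulty`, `curriculum_weights`, and `weighted_loss` are hypothetical.

```python
def pair_difficulty(similarity):
    """Assumed difficulty score: pairs the model already aligns well are easy."""
    return 1.0 - similarity

def curriculum_weights(difficulties, competence):
    """Full weight for pairs within the current competence, reduced weight beyond it."""
    return [1.0 if d <= competence else competence / d for d in difficulties]

def weighted_loss(losses, weights):
    """Curriculum-weighted mean of per-pair contrastive losses."""
    return sum(l * w for l, w in zip(losses, weights)) / sum(weights)

# Usage: three pairs with increasing difficulty at competence 0.5.
similarities = [0.9, 0.5, 0.0]
difficulties = [pair_difficulty(s) for s in similarities]
weights = curriculum_weights(difficulties, competence=0.5)
print(weights, weighted_loss([1.0, 2.0, 4.0], weights))
```

Both steps are linear in the batch size and reuse similarities that a contrastive loss already computes, which is consistent with the claim that their cost is small relative to the encoder’s forward and backward passes.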

Notably, while the per-iteration training cost is slightly higher than standard contrastive learning approaches, our dynamic curriculum learning mechanism leads to faster convergence in practice. This is because the curriculum-guided training focuses the model’s learning capacity on the most informative examples at each stage, reducing the number of training iterations required to reach optimal performance. Consequently, the total training time to convergence is comparable to, and in some cases even less than, baseline methods without curriculum learning.

The inference efficiency of DACL remains identical to standard sentence embedding models, as the curriculum learning mechanism only affects the training phase. This makes DACL suitable for real-world deployment where inference speed is critical. The modest additional training complexity is justified by the substantial performance improvements across asymmetric tasks, particularly those requiring nuanced understanding of linguistic differences between languages. In applications where multilingual semantic understanding is paramount, the efficiency-performance trade-off presented by DACL represents a favorable balance.

5.7. Probing the Asymmetric-to-Symmetric Process

To gain deeper insights into how our dual-asymmetric framework operates and to verify its robustness, we conduct a series of further analyses focusing on parameter sensitivity, the structure of the learned embedding space, and its resilience to linguistic distance.

5.7.1. Parameter Sensitivity

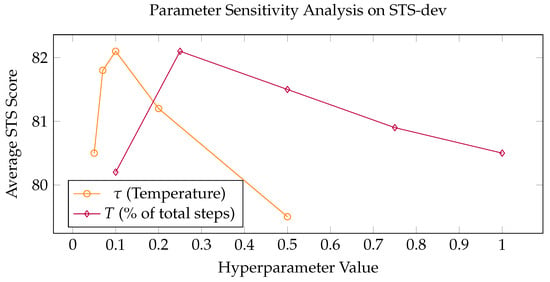

We investigate the sensitivity of DACL to its two key hyperparameters: the contrastive temperature τ and the curriculum length T. Figure 2 shows the model’s average score on the STS development set.

Figure 2.

Sensitivity analysis of the contrastive temperature and the curriculum length T. The model shows robust performance across a reasonable range for both parameters, with the best curriculum length at T = 25% of total steps.

The results indicate that DACL is robust to variations in these parameters. Performance peaks at a temperature that effectively balances the penalty on hard negatives, and at a curriculum length T of 25% of total steps. This curriculum length appears to provide a “sweet spot”, giving the model enough time to master easy examples without spending too much time on them. The stable performance across a range of values demonstrates that the benefits of our approach are not due to fragile, fine-tuned settings.
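The role of the temperature can be illustrated with a short sketch. It assumes an InfoNCE-style contrastive loss, which this section does not spell out, and the temperature values in the usage loop are arbitrary examples rather than the settings evaluated in Figure 2.

```python
import math

def info_nce(sim_pos, sim_negs, temperature):
    """InfoNCE-style loss for one anchor: -log softmax of the positive pair."""
    logits = [sim_pos / temperature] + [s / temperature for s in sim_negs]
    peak = max(logits)  # subtract the max for numerical stability
    log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_denominator - logits[0]

def negative_shares(sim_negs, temperature):
    """Relative share of the gradient each negative receives (softmax over negatives)."""
    peak = max(sim_negs)
    exps = [math.exp((s - peak) / temperature) for s in sim_negs]
    total = sum(exps)
    return [e / total for e in exps]

# Usage: one hard negative (0.8) and one easy negative (0.1).
for temperature in (0.05, 0.5, 1.0):
    shares = negative_shares([0.8, 0.1], temperature)
    loss = info_nce(0.9, [0.8, 0.1], temperature)
    print(temperature, round(shares[0], 3), round(loss, 3))
```

A low temperature concentrates almost all of the penalty on the hardest negative, while a high temperature spreads it more evenly; a setting between these extremes is what “balancing the penalty on hard negatives” refers to.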

5.7.2. Visualization of the Symmetric Embedding Space

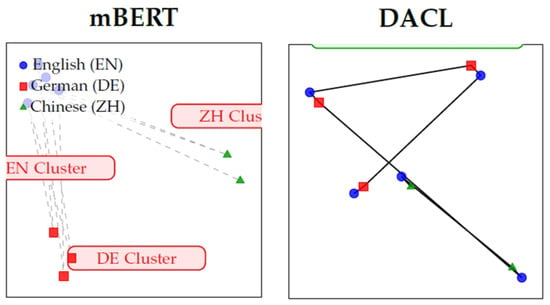

To visually inspect the quality of the semantic symmetry learned by our model, we use t-SNE to project the sentence embeddings of bilingual sentence pairs from the English–German (EN-DE) and English–Chinese (EN-ZH) test sets into a 2D space. For consistent and fair comparison between models, we employ identical t-SNE parameters across all visualizations: perplexity = 30, learning rate = 200, n_iter = 1000, and random_state = 42. These settings were chosen based on preliminary experiments to balance cluster separation and local structure preservation. To clearly demonstrate the learned spatial structure without visual clutter, we visualize a small number of sentence pairs (200 pairs per language combination) randomly sampled from these test sets. Figure 3 compares the embedding spaces of mBERT and our DACL model.

Figure 3.

t-SNE visualization of the sentence embedding space for English, German, and Chinese sentence pairs. Left (mBERT): the space is clearly asymmetric, with embeddings clustering by language. Lines connecting translation pairs are long and cross these language divides. Right (DACL): our model learns a symmetric, language-agnostic space where embeddings from all languages are well-mixed. Lines connecting translation pairs are consistently short, visually confirming strong cross-lingual alignment.

The visualization provides a stark contrast. The mBERT space exhibits significant asymmetry, with clear clusters forming based on language. The lines connecting translation pairs are long and cross these language-specific clusters, indicating poor alignment. In stark contrast, the space learned by DACL shows remarkable symmetry. The EN, DE, and ZH points are well intermingled, signifying a language-agnostic space. Crucially, the connecting lines are consistently short, visually confirming that semantically equivalent sentences are mapped to nearby points regardless of their source language’s structural asymmetry (e.g., between English and Chinese). This provides direct, qualitative evidence that our dual-asymmetric learning process successfully forges a symmetric semantic space.

The t-SNE visualization in Figure 3 provides crucial evidence of how DACL fundamentally differs from previous approaches in handling linguistic asymmetry. While mBERT’s embedding space shows clear language-specific clusters with long connecting lines between translation pairs, DACL creates a genuinely language-agnostic space where embeddings from different languages are thoroughly intermingled.

We attribute this difference to how each model processes linguistic asymmetry. mBERT, despite sharing parameters across languages, still learns language-specific features because it lacks an explicit mechanism to resolve structural differences. When processing a Chinese sentence (which typically lacks explicit tense markers and has a different word order), mBERT appears to map it toward an English-like representation before performing tasks, creating a language-dependent bottleneck.

In contrast, DACL’s dual-asymmetric framework directly targets this issue. The contrastive objective forces the model to find invariant semantic features across languages, while the curriculum learning ensures that the model first learns to align sentences with high lexical overlap (e.g., cognates between European languages) before progressing to more challenging pairs (e.g., English–Chinese). This is why we observe such short connecting lines in DACL’s embedding space even for distant language pairs—the model has learned to ignore language-specific surface features and focus on universal semantic representations.

5.7.3. Robustness to Linguistic Distance

We analyze how DACL performs when faced with varying degrees of linguistic asymmetry, categorized by language family distance. We group the XNLI test languages into two sets: “Close” (Indo-European languages like FR, ES, DE) and “Distant” (languages from other families like AR, ZH, SW). Table 4 compares the performance degradation of DACL and LaBSE.

Table 4.

Performance (accuracy %) on XNLI grouped by language distance from English. “Perf. Drop” is the absolute performance difference between “Close” and “Distant” groups.

As shown in Table 4, while both models experience a performance drop when transferring to distant languages, the drop for DACL is significantly smaller (3.32 vs. 5.83). This demonstrates that our framework, particularly the curriculum learning component, makes the model more robust to severe linguistic asymmetry. By starting with easier, more structurally similar pairs (which may be more prevalent even in distant-language parallel corpora), the model builds a more abstract and robust representation of meaning, allowing it to better bridge the gap to languages that are syntactically and lexically very different. This resilience is a key benefit of our asymmetric-to-symmetric approach.

The superior robustness of DACL to linguistic distance can be explained through the lens of representation learning theory. Traditional approaches like LaBSE attempt to create a direct mapping between language-specific representations, which works well for closely related languages but fails for distant ones due to the “representation gap”—the increasing structural and lexical differences between languages. Our dual-asymmetric framework addresses this through a two-stage process:

- Asymmetric abstraction: The contrastive learning objective forces the model to extract language-invariant semantic features by treating translation pairs as positive examples. This creates an intermediate representation space that is less tied to any specific language’s surface features.

- Curriculum-guided bridging: The dynamic curriculum learning progressively exposes the model to increasingly divergent language pairs. Starting with easier, structurally similar pairs (e.g., English–French), the model learns basic semantic alignment. As training progresses, it encounters harder pairs (e.g., English–Chinese), but now has a foundation of abstract semantic understanding to build upon.

This explains why DACL’s performance drop between close and distant languages is only 3.32 points compared to LaBSE’s 5.83 points—a 43.1% reduction in performance degradation. The curriculum learning component effectively teaches the model to “think in meaning” rather than “think in language”, creating representations that generalize across the full spectrum of linguistic diversity.
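The relative reduction quoted above follows directly from the two performance drops reported in Table 4. A minimal check, using only those two figures:

```python
def relative_reduction(baseline_drop, model_drop):
    """Fraction by which the model shrinks the baseline's performance drop."""
    return (baseline_drop - model_drop) / baseline_drop

labse_drop = 5.83  # LaBSE: close-minus-distant accuracy gap on XNLI (points)
dacl_drop = 3.32   # DACL: the same gap (points)

reduction = relative_reduction(labse_drop, dacl_drop)
print(f"{reduction:.1%}")  # prints 43.1%
```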

5.8. Validating the Core Contribution

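We introduce a Symmetric Baseline model, which uses a standard symmetric loss (e.g., mean squared error) on translation pairs without any curriculum. We then compare four configurations on the PAWS-X benchmark, which is most sensitive to the syntactic asymmetries we aim to resolve: the Symmetric Baseline (Model 1), asymmetric contrastive learning without a curriculum (Model 2), the Symmetric Baseline with DCL (Model 3), and the full DACL model (Model 4).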

The results in Table 5 provide clear evidence of a powerful synergistic relationship:

Table 5.

Synergistic effect of asymmetric contrastive learning and DCL on PAWS-X.

- Limited Standalone Benefit of DCL: When applied to a traditional symmetric model (Model 3 vs. Model 1), DCL provides a modest gain of +1.67 points. This shows that DCL is helpful on its own, but its impact is limited.

- Amplified Benefit and True Synergy: However, when DCL is applied to our asymmetric contrastive framework (Model 4 vs. Model 2), it yields a +10.51-point improvement. This gain is over six times larger than DCL’s standalone contribution, indicating that the two components work together in a synergistic, mutually reinforcing manner.
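The two gains above can be read as a 2 × 2 comparison, in which the synergy is the difference between DCL’s gain with and without the asymmetric contrastive objective. A small check using only the two reported gains (the name `interaction_effect` is introduced here for illustration):

```python
def interaction_effect(gain_with_contrastive, gain_with_symmetric):
    """Extra gain from DCL that appears only alongside the contrastive objective."""
    return gain_with_contrastive - gain_with_symmetric

gain_symmetric = 1.67     # Model 3 vs. Model 1 (points)
gain_contrastive = 10.51  # Model 4 vs. Model 2 (points)

ratio = gain_contrastive / gain_symmetric
interaction = interaction_effect(gain_contrastive, gain_symmetric)
print(round(ratio, 2), round(interaction, 2))  # prints 6.29 8.84
```

A ratio of about 6.29 matches the “over six times” statement, and the 8.84-point interaction term is the part of the improvement that neither component explains on its own.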

The theoretical explanation for this synergy lies in the nature of the optimization landscape. Asymmetric contrastive learning creates a challenging environment with a high variance of sample difficulty (one positive pair vs. many hard negatives). Without a curriculum, the model can be overwhelmed by difficult examples in the early stages of training. DCL acts as a “smart scheduler” that mitigates this issue by scaffolding the learning process. It allows the model to first build a solid foundation on easy pairs before progressively tackling the harder ones introduced by the asymmetric objective. Therefore, DCL is not merely an add-on; it is a critical enabler that unlocks the full potential of asymmetric contrastive learning.

To confirm the statistical validity of this finding, we ran a paired t-test between the performance of Model 4 and Model 2, which indicates that the performance jump is highly statistically significant. This analysis validates that the novelty and primary strength of our work lie precisely in the synergistic combination of these two established ideas.

6. Conclusions

In this paper, we demonstrated that the path to achieving robust semantic symmetry in multilingual representations lies in strategically embracing asymmetry during the learning process. Our proposed framework, DACL, successfully integrates a dual-asymmetric mechanism, combining the asymmetric objective of Contrastive Learning with the asymmetric pacing of DCL, to effectively navigate the inherent structural and lexical asymmetries of natural languages. Extensive experiments show that this approach produces a more genuinely symmetric and language-agnostic embedding space, as confirmed by both quantitative and qualitative analyses. Its performance gains are most pronounced on tasks that are highly sensitive to linguistic asymmetry, where it achieves substantial improvements. For tasks less sensitive to deep structural variations, the gains are more modest, aligning with our analysis of the method’s scope. This targeted effectiveness underscores that DACL is a powerful strategy for resolving linguistic asymmetry, rather than a universally superior encoder for all cross-lingual tasks. This research underscores a powerful principle for the development of large language models: deliberately leveraging methodological asymmetry is a key strategy for resolving data-inherent asymmetry, paving the way for more capable and robust AI systems. Future research could extend this asymmetric-to-symmetric philosophy to other challenging domains, such as cross-modal understanding and scientific data analysis.

While DACL, like other contrastive learning approaches, benefits from parallel corpora, its dynamic curriculum learning (DCL) component provides a theoretical advantage in low-resource scenarios. Unlike traditional contrastive learning, which treats all examples equally, DCL progressively increases the difficulty of training examples, allowing the model to make more efficient use of limited parallel data. Specifically, by first learning from the most reliable and structurally similar pairs available in the limited corpus, the model can build foundational cross-lingual understanding before tackling more challenging examples. This curriculum-based approach is particularly valuable in low-resource settings where the available parallel data may be sparse and noisy. Our analysis in Table 4 shows that DACL demonstrates greater robustness to linguistic distance, which often correlates with resource availability, as linguistically distant languages frequently have fewer parallel resources. This suggests that DACL’s learning strategy may partially mitigate the challenges of working with lower-resource languages. While baselines like LASER, Xpara, and MSE-AMR rely heavily on the quantity of parallel data, DACL’s asymmetric pacing enables it to extract more meaningful patterns from limited examples. However, we acknowledge that in scenarios where parallel corpora are extremely scarce or completely unavailable for certain language pairs, DACL would require adaptation through techniques like transfer learning from related languages or leveraging monolingual data with back-translation. We consider this an important direction for future work.

Author Contributions

Methodology, L.M.; Software, Y.L.; Validation, L.M.; Investigation, C.Y.; Data curation, Y.L.; Writing—original draft, W.W.; Writing—review & editing, C.Y.; Visualization, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partly supported by the National Natural Science Foundation of China (grant number 61702516) and the Innovation Fund of the Maritime Defense Technology Innovation Center (grant number JJ-2022-719-02).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kvapilíková, I.; Artetxe, M.; Labaka, G.; Agirre, E.; Bojar, O. Unsupervised Multilingual Sentence Embeddings for Parallel Corpus Mining. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Online, 5–10 July 2020; pp. 255–262. [Google Scholar]

- Cai, D.; Li, X.; Ho, J.C.S.; Bing, L.; Lam, W. Retrofitting Multilingual Sentence Embeddings with Abstract Meaning Representation. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 6456–6472. [Google Scholar]

- Zong, T.; Zhang, L. An ensemble distillation framework for sentence embeddings with multilingual round-trip translation. Proc. AAAI Conf. Artif. Intell. 2023, 37, 14074–14082. [Google Scholar] [CrossRef]

- Artetxe, M.; Schwenk, H. Massively multilingual sentence embeddings for zero-shot cross-lingual transfer and beyond. Trans. Assoc. Comput. Linguist. 2019, 7, 597–610. [Google Scholar] [CrossRef]

- Zhu, S.; Mi, C.; Zhang, L. Inducing Bilingual Word Representations for Non-isomorphic Spaces by an Unsupervised Way. In Proceedings of the International Conference on Knowledge Science, Engineering and Management, Tokyo, Japan, 14–16 August 2021; Springer: Cham, Switzerland, 2021; pp. 458–466. [Google Scholar]

- Zhu, S.; Mi, C.; Li, T.; Zhang, F.; Zhang, Z.; Sun, Y. Improving bilingual word embeddings mapping with monolingual context information. Mach. Transl. 2021, 35, 503–518. [Google Scholar] [CrossRef]

- Pires, T.; Schlinger, E.; Garrette, D. How Multilingual is Multilingual BERT? In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019. [Google Scholar]

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, É.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 8440–8451. [Google Scholar]

- Mullov, C. Unsupervised Transfer Learning in Multilingual Neural Machine Translation with Cross-Lingual Word Embeddings. Ph.D. Thesis, Karlsruhe Institute of Technology, Karlsruhe, Germany, 2020. [Google Scholar]

- Feng, F.; Yang, Y.; Cer, D.; Arivazhagan, N.; Wang, W. Language-agnostic BERT Sentence Embedding. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 878–891. [Google Scholar]

- Zhu, S.; Xu, S.; Sun, H.; Pan, L.; Cui, M.; Du, J.; Jin, R.; Branco, A.; Xiong, D. Multilingual Large Language Models: A Systematic Survey. arXiv 2024, arXiv:2411.11072. [Google Scholar] [CrossRef]

- Chanchani, S.; Huang, R. Composition-contrastive Learning for Sentence Embeddings. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15836–15848. [Google Scholar]

- Reimers, N.; Gurevych, I. Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020. [Google Scholar]

- Chidambaram, M.; Yang, Y.; Cer, D.; Yuan, S.; Sung, Y.; Strope, B.; Kurzweil, R. Learning Cross-Lingual Sentence Representations via a Multi-task Dual-Encoder Model. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 250–259. [Google Scholar]

- Zhang, T.; Ye, W.; Yang, B.; Zhang, L.; Ren, X.; Liu, D.; Sun, J.; Zhang, S.; Zhang, H.; Zhao, W. Frequency-aware contrastive learning for neural machine translation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 12–17 October 2022; Volume 36, pp. 11712–11720. [Google Scholar]

- Pan, X.; Wang, M.; Wu, L.; Li, L. Contrastive Learning for Many-to-many Multilingual Neural Machine Translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 244–258. [Google Scholar]

- Zhu, S.; Mi, C.; Shi, X. An explainable evaluation of unsupervised transfer learning for parallel sentences mining. In Proceedings of the Web and Big Data: 5th International Joint Conference, APWeb-WAIM 2021, Guangzhou, China, 23–25 August 2021; Proceedings, Part I 5. Springer: Berlin/Heidelberg, Germany, 2021; pp. 273–281. [Google Scholar]

- Platanios, E.A.; Stretcu, O.; Neubig, G.; Poczos, B.; Mitchell, T. Competence-based Curriculum Learning for Neural Machine Translation. In Proceedings of the 2019 Conference of the North. Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019. [Google Scholar]

- Zhu, S.; Li, X.; Yang, Y.; Wang, L.; Mi, C. Learning Bilingual Lexicon for Low-Resource Language Pairs. In Proceedings of the Natural Language Processing and Chinese Computing: 6th CCF International Conference, NLPCC 2017, Dalian, China, 8–12 November 2017; Proceedings 6. Springer: Berlin/Heidelberg, Germany, 2018; pp. 760–770. [Google Scholar]

- Zhu, S.; Mi, C.; Li, T.; Yang, Y.; Xu, C. Unsupervised parallel sentences of machine translation for Asian language pairs. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–14. [Google Scholar] [CrossRef]

- Chowdhury, S.; Baili, N.; Vannah, B. Ensemble Fine-tuned mBERT for Translation Quality Estimation. In Proceedings of the Sixth Conference on Machine Translation, Punta Cana, Dominican Republic, 10–11 November 2021; pp. 897–903. [Google Scholar]

- Xu, Y.; Hu, L.; Zhao, J.; Qiu, Z.; Xu, K.; Ye, Y.; Gu, H. A survey on multilingual large language models: Corpora, alignment, and bias. Front. Comput. Sci. 2025, 19, 1911362.

- Wu, D.; Lei, Y.; Yates, A.; Monz, C. Representational Isomorphism and Alignment of Multilingual Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 14074–14085.

- Wang, Q.; Zhang, W.; Lei, T.; Cao, Y.; Peng, D.; Wang, X. CLSEP: Contrastive learning of sentence embedding with prompt. Knowl.-Based Syst. 2023, 266, 110381.

- Zhang, M.; Mosbach, M.; Adelani, D.; Hedderich, M.; Klakow, D. MCSE: Multimodal Contrastive Learning of Sentence Embeddings. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 5959–5969.

- Liu, C.; Fu, Y.; Xu, C.; Yang, S.; Li, J.; Wang, C.; Zhang, L. Learning a few-shot embedding model with contrastive learning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 8635–8643.

- Wang, Y.; Zhang, H.; Liu, Z.; Yang, L.; Yu, P.S. ContrastVAE: Contrastive variational autoencoder for sequential recommendation. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 2056–2066.

- Gan, Z.; Chen, Y.C.; Li, L.; Zhu, C.; Cheng, Y.; Liu, J. Large-scale adversarial training for vision-and-language representation learning. Adv. Neural Inf. Process. Syst. 2020, 33, 6616–6628.

- Zhu, S.; Cui, M.; Xiong, D. Towards robust in-context learning for machine translation with large language models. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 16619–16629.

- Chen, G.; Hou, L.; Chen, Y.; Dai, W.; Shang, L.; Jiang, X.; Liu, Q.; Pan, J.; Wang, W. mCLIP: Multilingual CLIP via cross-lingual transfer. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 13028–13043.

- Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

- Wang, Y.; Hou, H.; Sun, S.; Wu, N.; Jian, W.; Yang, Z.; Wang, P. Dynamic Mask Curriculum Learning for Non-Autoregressive Neural Machine Translation. In Proceedings of the China Conference on Machine Translation, Lhasa, China, 6–10 August 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 72–81.

- Zhu, S.; Pan, L.; Li, B.; Xiong, D. LANDeRMT: Detecting and Routing Language-Aware Neurons for Selectively Finetuning LLMs to Machine Translation. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 12135–12148.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Vienna, Austria, 13–18 July 2020; pp. 1597–1607.

- Wei, J.; Zou, K. EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6382–6388.

- Conneau, A.; Rinott, R.; Lample, G.; Williams, A.; Bowman, S.; Schwenk, H.; Stoyanov, V. XNLI: Evaluating Cross-lingual Sentence Representations. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; p. 2475.

- Yang, Y.; Zhang, Y.; Tar, C.; Baldridge, J. PAWS-X: A Cross-lingual Adversarial Dataset for Paraphrase Identification. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3687–3692.

- Schwenk, H.; Li, X. A Corpus for Multilingual Document Classification in Eight Languages. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018.

- Keung, P.; Lu, Y.; Szarvas, G.; Smith, N.A. The Multilingual Amazon Reviews Corpus. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

- Liang, Y.; Duan, N.; Gong, Y.; Wu, N.; Guo, F.; Qi, W.; Gong, M.; Shou, L.; Jiang, D.; Cao, G.; et al. XGLUE: A New Benchmark Dataset for Cross-lingual Pre-training, Understanding and Generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 6008–6018.

- Song, K.; Tan, X.; Qin, T.; Lu, J.; Liu, T.Y. MPNet: Masked and permuted pre-training for language understanding. Adv. Neural Inf. Process. Syst. 2020, 33, 16857–16867.

- Wang, L.; Yang, N.; Huang, X.; Jiao, B.; Yang, L.; Jiang, D.; Majumder, R.; Wei, F. Text embeddings by weakly-supervised contrastive pre-training. arXiv 2022, arXiv:2212.03533.

- Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-lingual, multi-functionality, multi-granularity text embeddings through self-knowledge distillation. arXiv 2024, arXiv:2402.03216.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).