BERNN: A Transformer-BiLSTM Hybrid Model for Cross-Domain Short Text Classification in Agricultural Expert Systems

Abstract

1. Introduction

- (1)

- A large-scale agricultural question dataset is constructed, containing 110,647 entries categorized into seven classes. This dataset is carefully designed to provide a generalized benchmark for model training and evaluation.

- (2)

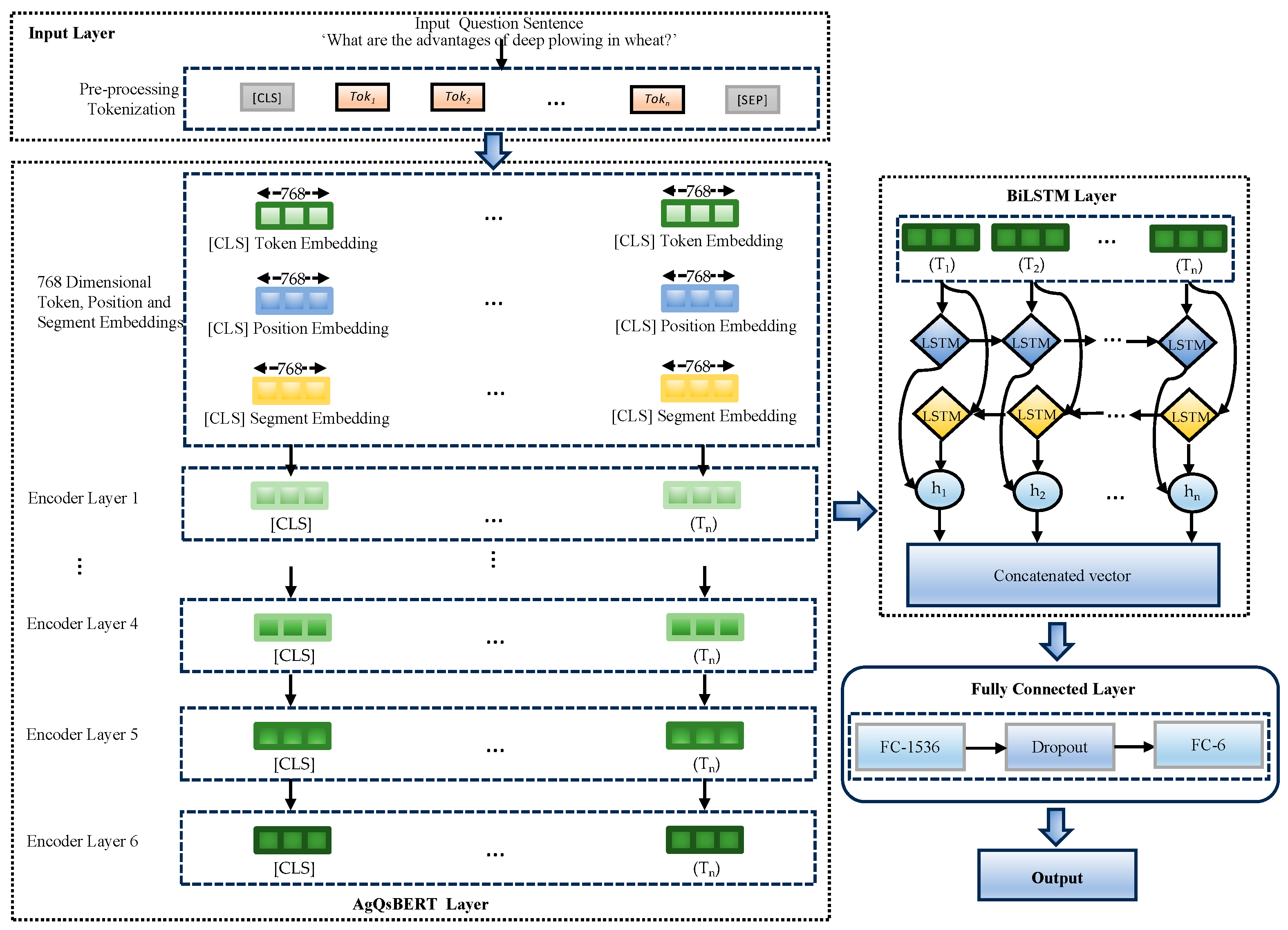

- A fusion semantic feature extraction and classification architecture is proposed for the BERNN model. Specifically, we integrate the Agricultural Question Bidirectional Encoder Representations from Transformers (AgQsBERT) with a BiLSTM network, enabling the model to simultaneously capture sentence-level attributes and contextual dependencies, effectively addressing challenges such as word polysemy. AgQsBERT contributes domain-sensitive semantic understanding by leveraging pretraining on agricultural texts, while the bidirectional nature of BiLSTM reflects a symmetric structure that plays a crucial role in modeling contextual interactions. Their integration yields a synergistic semantic framework tailored to the linguistic characteristics of agricultural short texts.

- (3)

- Extensive and comprehensive experiments are conducted. The results demonstrate the superior performance of BERNN in handling diverse and complex agricultural text data, establishing its advantages in the field of agricultural short-text classification.

2. Related Studies

2.1. Machine Learning-Based Agricultural Text Classification Methods

2.2. Deep Learning-Based Agricultural Text Classification Approaches

2.3. Fusion-Based Agricultural Text Classification Strategies

2.4. Advances in Large Language Models for Agricultural Text Understanding

- (1) The lack of large-scale, standardized agricultural text corpora limits the development of downstream research and applications.

- (2) The prevalence of synonymous and heterogeneous expressions in agricultural texts complicates feature extraction and causes category imbalance, ultimately affecting classification performance.

3. Materials and Methods

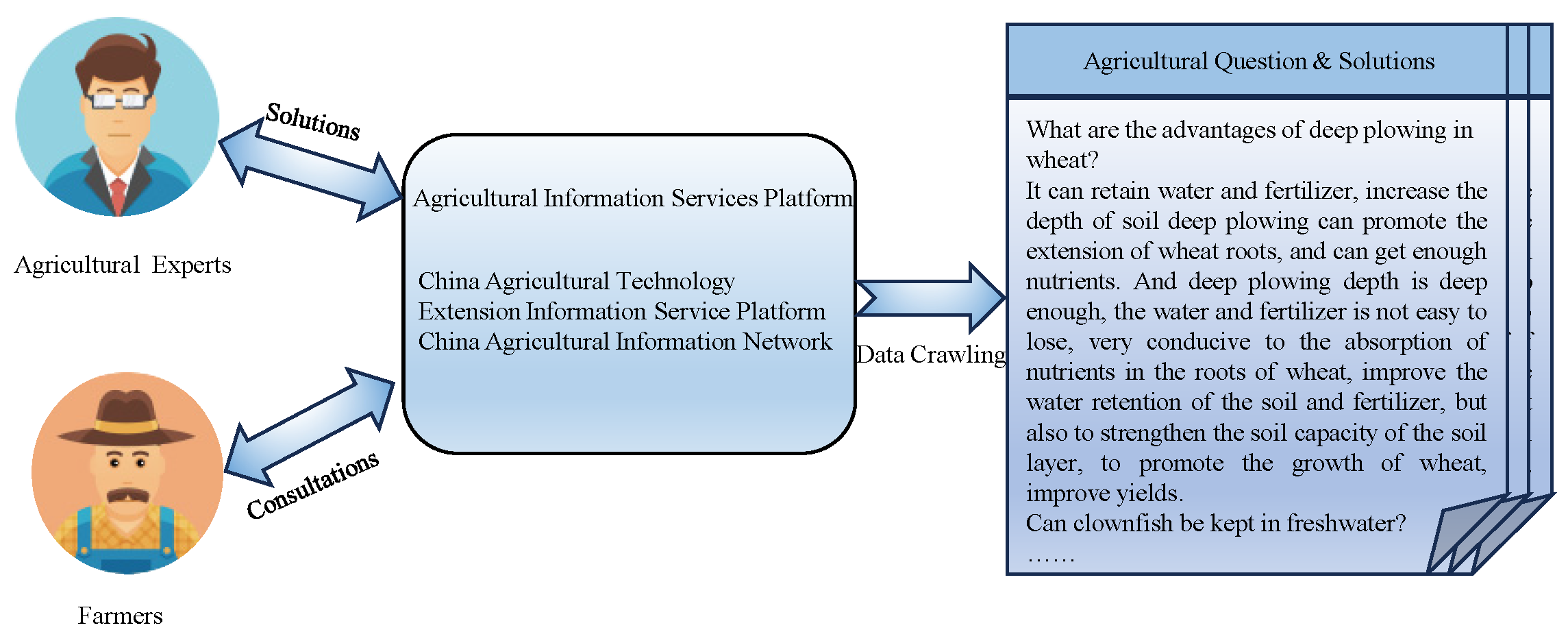

3.1. Data Sources

3.1.1. Corpus Construction

3.1.2. Dataset Preprocessing

3.1.3. Dataset Analysis

- (1) High specialization: All questions are situated within the agricultural domain, with fuzzy boundaries between sentence categories. For example, questions like “Preventing rickets in chickens during winter” and “Diagnosis and prevention of wheat nutrient deficiency” highlight the challenges in transferring general-domain models to the agricultural domain for detailed question categorization.

- (2) Imbalanced category distribution: As illustrated in Figure 2, the distribution of question categories is uneven. The largest category is planting, which makes up 45.6% of the entire dataset. In contrast, processing, edible fungi, and technology consulting are underrepresented, each comprising less than 5% of the dataset. To address this imbalance, particularly the overrepresentation of the “Planting” category and the underrepresentation of categories like “Edible Fungi”, we employed a weighted loss during model training. Specifically, we used class weights inversely proportional to the class frequencies to penalize misclassification of minority classes more heavily. Additionally, precision, recall, and F1 score were computed as weighted averages to ensure a fair evaluation across all categories. These strategies mitigated the imbalance and improved classification performance for low-resource categories.

- (3) Short text: Most question texts in the agricultural dataset are under 30 characters, and many are around 10 characters, such as “Sheep rumen acidosis” and “How to treat hemorrhagic disease in grass carp?” This brevity complicates semantic extraction and model classification.
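The class-weighting idea described in item (2) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the normalization `N / (K * n_c)` is one common inverse-frequency convention and is an assumption here, as are the names `inverse_frequency_weights` and `weighted_cross_entropy`. The category counts are the ones reported in the dataset statistics table of this article.

```python
import math

# Category counts as reported in the dataset statistics table of this article.
CLASS_COUNTS = {
    "Breeding": 16715,
    "Planting": 50412,
    "Processing": 2733,
    "Fishery": 8065,
    "Edible Fungi": 3458,
    "Technology Consulting": 5154,
    "Disease and Insects": 24110,
}


def inverse_frequency_weights(counts):
    """Return w_c = N / (K * n_c) for every class c.

    Assumption: this normalization is a common convention; the article only
    says that weights are inversely proportional to class frequencies.
    """
    total = sum(counts.values())
    num_classes = len(counts)
    return {label: total / (num_classes * n) for label, n in counts.items()}


def weighted_cross_entropy(probs, target, weights):
    """Loss for one sample: -w_target * log(p_target)."""
    return -weights[target] * math.log(probs[target])


CLASS_WEIGHTS = inverse_frequency_weights(CLASS_COUNTS)

if __name__ == "__main__":
    total = sum(CLASS_COUNTS.values())
    # Print classes from the most frequent (smallest weight) to the rarest.
    for label, weight in sorted(CLASS_WEIGHTS.items(), key=lambda kv: kv[1]):
        share = CLASS_COUNTS[label] / total
        print(f"{label:<22} share={share:6.1%}  weight={weight:.3f}")
```

With this convention the majority class receives a weight below 1 and the rare classes receive weights above 1, so an equally confident mistake on a "Processing" question costs more than one on a "Planting" question.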

3.2. Model of BERNN

3.2.1. The Architecture of BERNN

3.2.2. Layers of BERNN

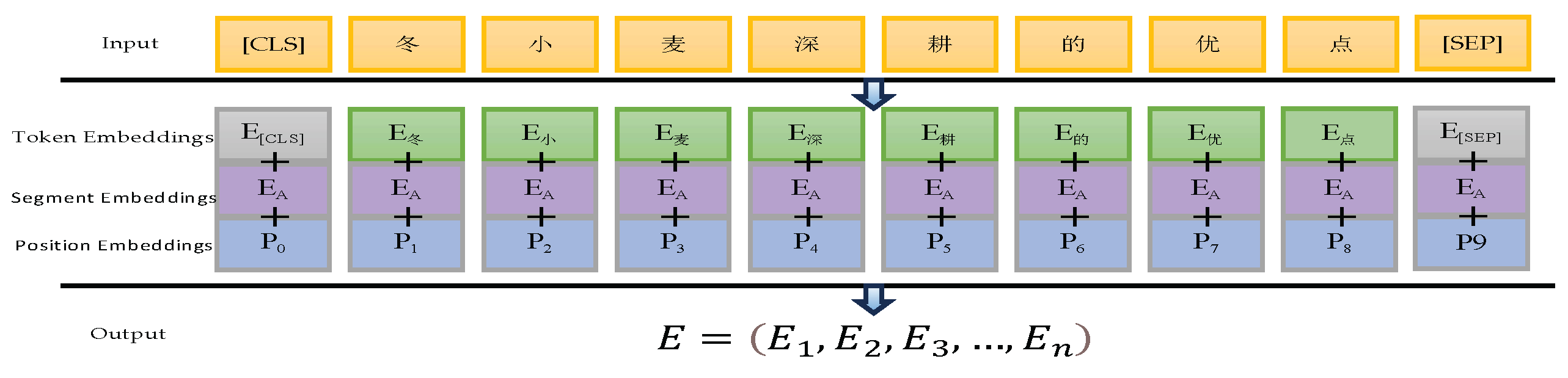

3.2.3. Agricultural Question Bidirectional Encoder Representations from Transformers

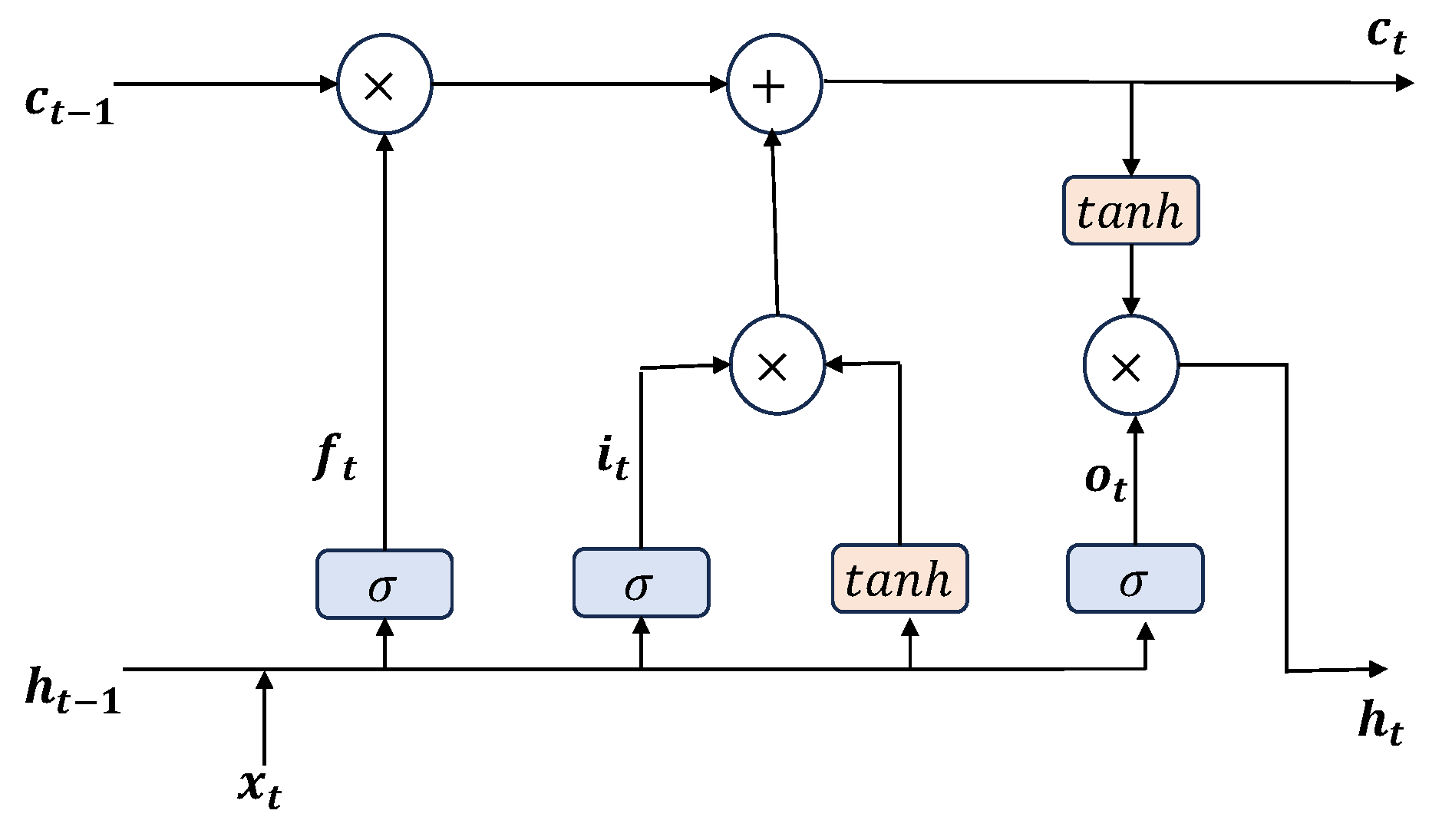

3.2.4. Bidirectional Long Short-Term Memory

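| Algorithm 1 The Bidirectional Long Short-Term Memory Algorithm | |

| 1: | Input: sequence X = (x1, x2, …, xT) |

| // Outline LSTM Cell Procedures | |

| 2: | function LSTM_cell(h_prev, c_prev, xt): |

| 3: | ft ← σ(Wf · [h_prev, xt] + bf) // forget gate |

| 4: | it ← σ(Wi · [h_prev, xt] + bi) // input gate |

| 5: | c̃t ← tanh(Wc · [h_prev, xt] + bc) // candidate cell state |

| 6: | ct ← ft ⊙ c_prev + it ⊙ c̃t // cell state update |

| 7: | ot ← σ(Wo · [h_prev, xt] + bo) // output gate |

| 8: | ht ← ot ⊙ tanh(ct) // hidden state |

| 9: | return ht, ct |

| 10: | end function |

| // Perform bidirectional semantic analysis | |

| // Set initial values for hidden and cell states | |

| 11: | h_forward [0], c_forward [0] ← 0 |

| 12: | h_backward [T + 1], c_backward [T + 1] ← 0 |

| 13: | for t = 1 to T do // Forward feature extraction |

| 14: | h_forward [t], c_forward [t] ← LSTM_cell(h_forward [t−1], c_forward [t−1], xt) |

| 15: | end for |

| 16: | for t = T down to 1 do // Backward feature extraction |

| 17: | h_backward [t], c_backward [t] ← LSTM_cell(h_backward [t + 1], c_backward [t + 1], xt) |

| 18: | end for |

| 19: | for t = 1 to T do // Integrating forward and backward outcomes |

| 20: | Ht ← [h_forward [t], h_backward [t]] |

| 21: | end for |

| 22: | Output: (H1, H2, …, HT) |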
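The control flow of Algorithm 1 can be made concrete with a small pure-Python sketch. It is illustrative only: the gate equations are the standard LSTM formulation, the random initialization, the tiny dimensions, and the helper names (`init_lstm_params`, `bilstm`, `demo`) are assumptions, and the sketch uses separate parameter sets for the two directions, which the article does not specify.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_lstm_params(input_dim, hidden_dim, rng):
    """One (weight matrix, bias) pair per gate; weights act on [h_prev, x_t]."""
    def matrix():
        return [[rng.uniform(-0.1, 0.1) for _ in range(hidden_dim + input_dim)]
                for _ in range(hidden_dim)]
    return {gate: (matrix(), [0.0] * hidden_dim) for gate in ("f", "i", "g", "o")}


def lstm_cell(params, h_prev, c_prev, x_t):
    """Standard LSTM step: returns the new hidden and cell states."""
    z = h_prev + x_t  # list concatenation, i.e. [h_prev, x_t]

    def gate(name, activation):
        weights, bias = params[name]
        return [activation(sum(w * v for w, v in zip(row, z)) + b)
                for row, b in zip(weights, bias)]

    f = gate("f", sigmoid)    # forget gate
    i = gate("i", sigmoid)    # input gate
    g = gate("g", math.tanh)  # candidate cell state
    o = gate("o", sigmoid)    # output gate
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def bilstm(xs, fwd_params, bwd_params, hidden_dim):
    """Run forward and backward passes and concatenate them per time step."""
    steps = len(xs)
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    h_forward = []
    for t in range(steps):  # forward feature extraction
        h, c = lstm_cell(fwd_params, h, c, xs[t])
        h_forward.append(h)
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    h_backward = [None] * steps
    for t in reversed(range(steps)):  # backward feature extraction
        h, c = lstm_cell(bwd_params, h, c, xs[t])
        h_backward[t] = h
    return [hf + hb for hf, hb in zip(h_forward, h_backward)]


def demo(seq_len=5, input_dim=4, hidden_dim=3, seed=0):
    """Build a random toy sequence and parameters, then run the BiLSTM."""
    rng = random.Random(seed)
    xs = [[rng.uniform(-1, 1) for _ in range(input_dim)] for _ in range(seq_len)]
    fwd = init_lstm_params(input_dim, hidden_dim, rng)
    bwd = init_lstm_params(input_dim, hidden_dim, rng)
    return xs, fwd, bwd, bilstm(xs, fwd, bwd, hidden_dim)
```

Each output vector has twice the hidden size, because the forward state (which has seen tokens 1 to t) and the backward state (which has seen tokens T down to t) are concatenated.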

3.2.5. Computational Complexity of BERNN

- (1) Computational Complexity of AgQsBERT: AgQsBERT is based on the Transformer architecture and uses a six-layer encoder. Its computational complexity can be analyzed as follows.

  - (1) Input Representation: AgQsBERT takes a sequence of tokens as input, which are transformed into embeddings. The complexity of this step is typically $O(n)$, where n is the number of tokens in the input sequence.

  - (2) Transformer Layers: AgQsBERT consists of six layers, each integrating a self-attention mechanism and a feed-forward neural network. The self-attention mechanism has a complexity of $O(n^2 \cdot d)$ per layer, where n is the length of the sequence and d is the dimensionality of the embeddings (768 for AgQsBERT). The feed-forward network has a complexity of $O(n \cdot d^2)$ per layer.

- (2) Computational Complexity of BiLSTM

- (3) Computational Complexity of BERNN
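To give a feel for the per-layer Transformer terms, the following sketch evaluates the usual asymptotic estimates, n²·d for self-attention and n·d² for the feed-forward network, at the settings listed in the parameter table (maximum sequence length 32, hidden size 768, six layers). Constant factors are dropped, so these are order-of-magnitude counts rather than measured FLOPs, and the function name `transformer_encoder_cost` is hypothetical.

```python
def transformer_encoder_cost(n, d, num_layers):
    """Order-of-magnitude operation counts for a Transformer encoder.

    Uses the standard asymptotic estimates: n^2 * d for self-attention and
    n * d^2 for the feed-forward network, per layer. Constants are omitted.
    """
    attention = n * n * d
    feed_forward = n * d * d
    return {
        "attention_per_layer": attention,
        "feed_forward_per_layer": feed_forward,
        "total": num_layers * (attention + feed_forward),
    }


# n and d taken from the parameter table: max sequence length 32, hidden size 768.
COST = transformer_encoder_cost(n=32, d=768, num_layers=6)

if __name__ == "__main__":
    for name, value in COST.items():
        print(f"{name:<24} {value:>12,}")
```

Because the questions are short (n = 32) while the embedding is wide (d = 768), the feed-forward term exceeds the attention term by a factor of d/n = 24 under these estimates, so the quadratic cost of attention in n is not the dominant term in this setting.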

4. Experiments and Analysis

4.1. Parameter Settings

4.2. Evaluation Metrics
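Section 3.1.3 states that precision, recall, and F1 are reported as weighted averages. A minimal sketch of support-weighted metrics is given below; the toy labels and the function name `weighted_metrics` are illustrative assumptions and are not data from the experiments.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1."""
    support = Counter(y_true)      # true samples per class
    predicted = Counter(y_pred)    # predicted samples per class
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    total = len(y_true)

    precision_w = recall_w = f1_w = 0.0
    for label, n in support.items():
        precision = true_pos[label] / predicted[label] if predicted[label] else 0.0
        recall = true_pos[label] / n
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        share = n / total          # class weight = fraction of true samples
        precision_w += share * precision
        recall_w += share * recall
        f1_w += share * f1

    return {
        "accuracy": sum(true_pos.values()) / total,
        "precision_w": precision_w,
        "recall_w": recall_w,
        "f1_w": f1_w,
    }


# Toy example (hypothetical labels, not taken from the article's dataset).
Y_TRUE = ["Planting"] * 6 + ["Fishery"] * 2 + ["Processing"] * 2
Y_PRED = ["Planting"] * 5 + ["Fishery"] * 3 + ["Processing", "Planting"]
METRICS = weighted_metrics(Y_TRUE, Y_PRED)

if __name__ == "__main__":
    for name, value in METRICS.items():
        print(f"{name:<12} {value:.4f}")
```

With support weighting, weighted recall is algebraically equal to accuracy, since each class contributes (n_c / N) · (TP_c / n_c) = TP_c / N. This is consistent with the matching Accuracy and Recall columns in most rows of the result tables.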

4.3. Hyperparameter Selection of the BERNN

- (1) Comparison of learning rates

- (2) Comparison of dropout rates

4.4. Ablation Experiments

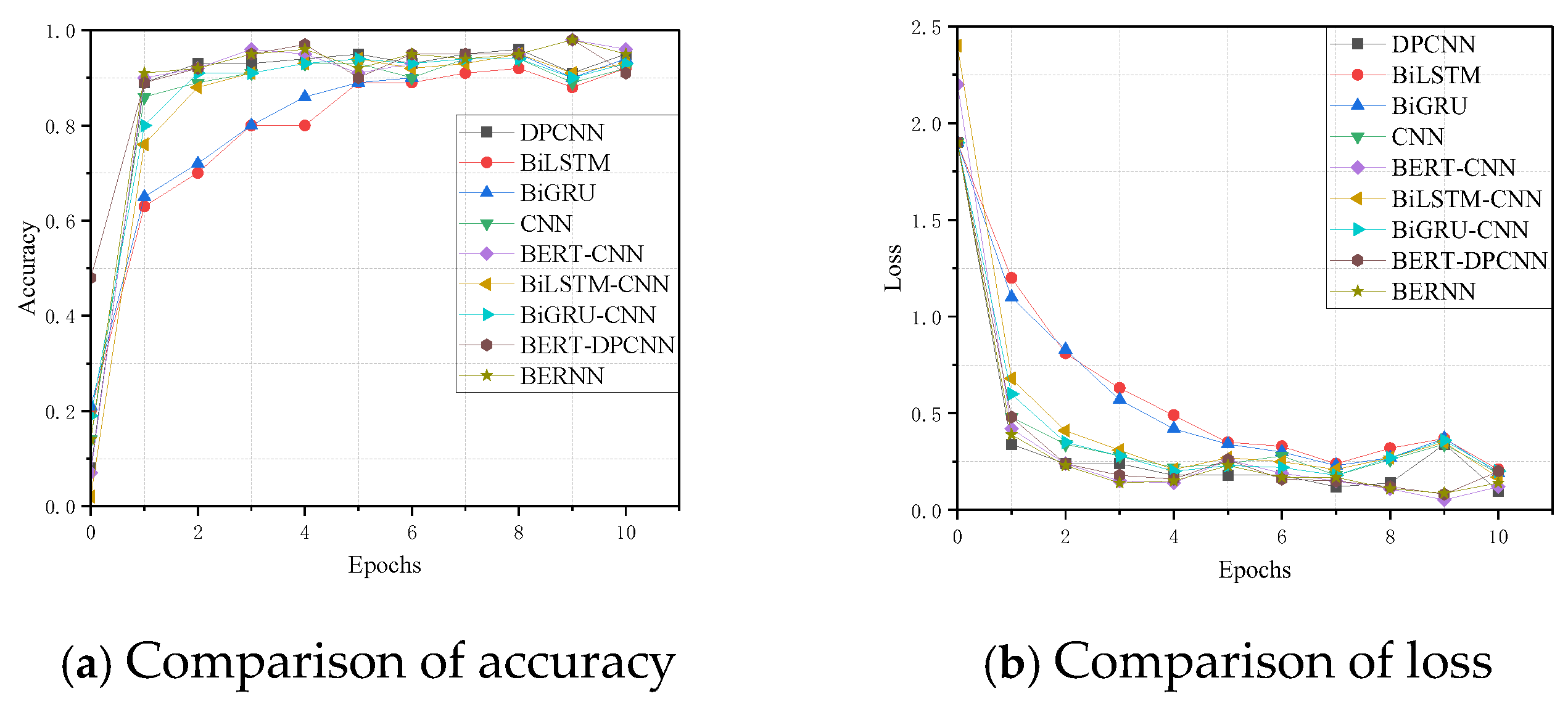

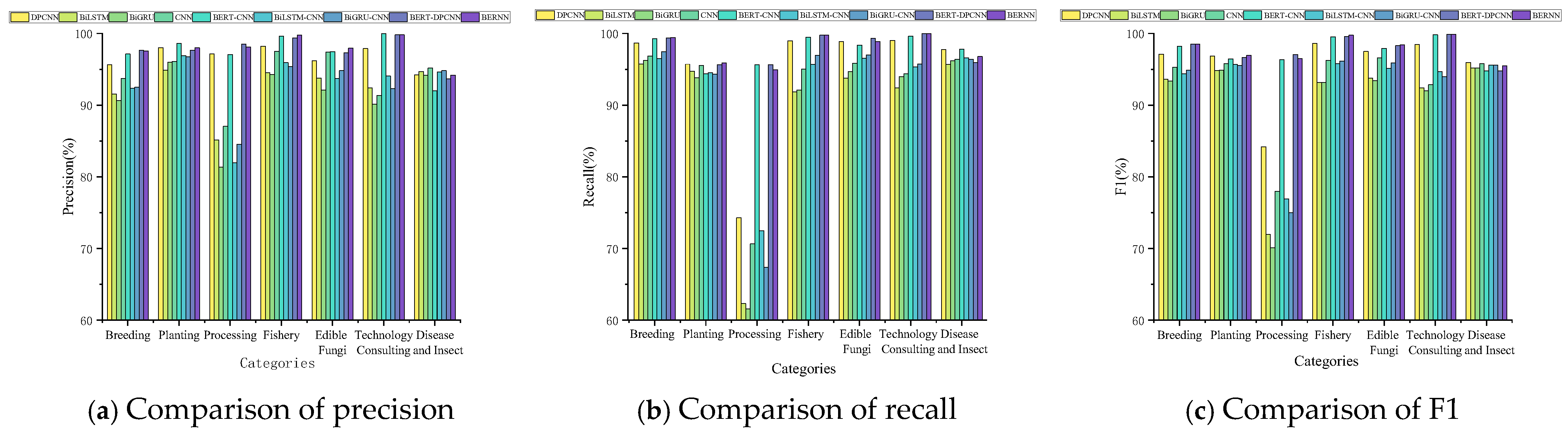

4.5. Comparative Experiments

- (1) Single models

- (2) Fusion models

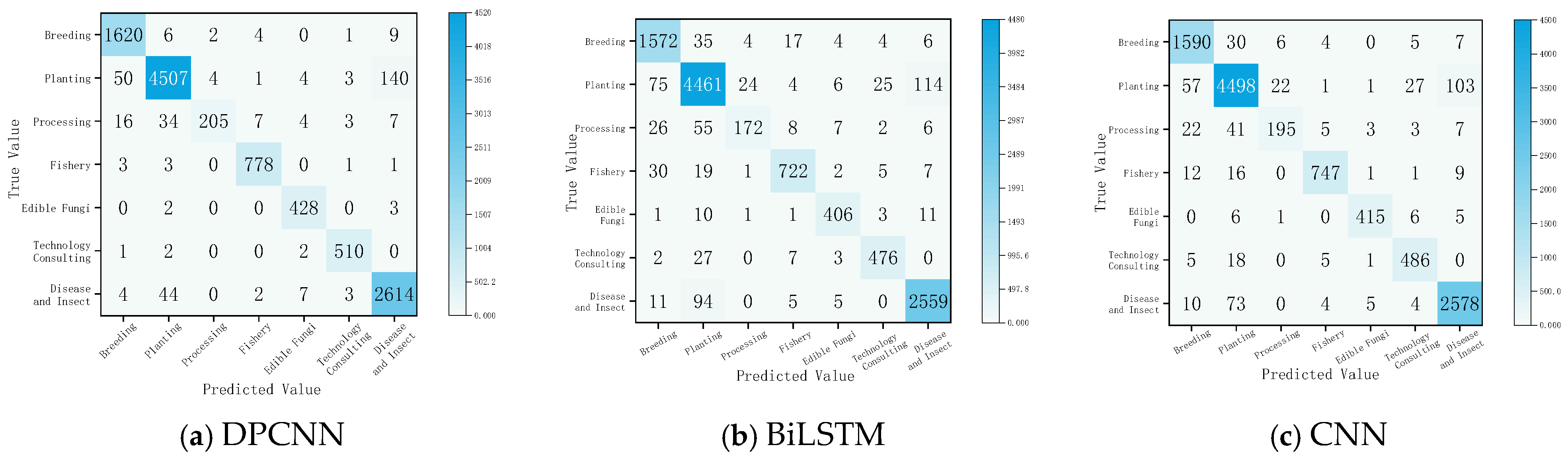

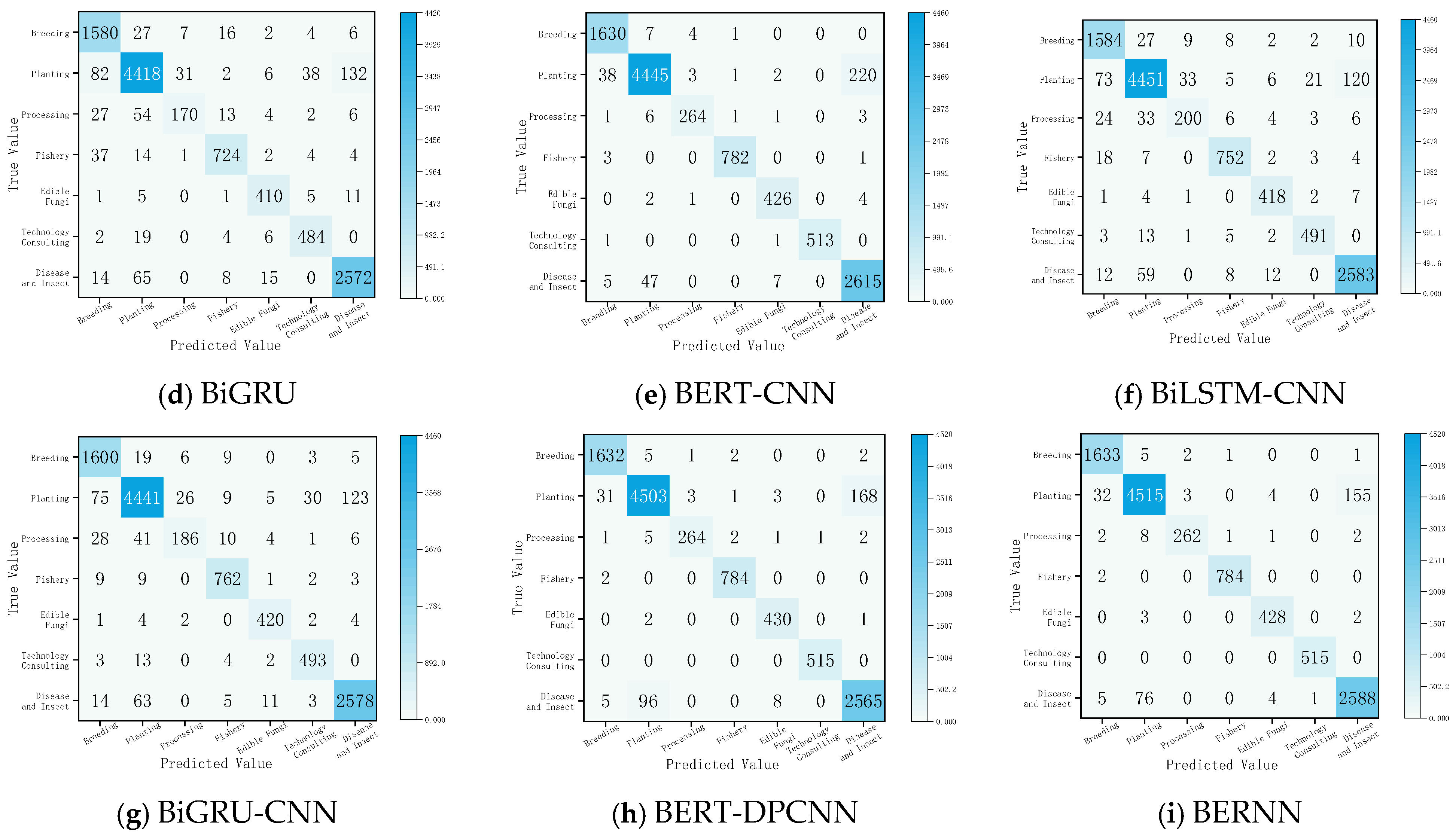

4.6. Classification Experiments

4.7. Generalization Experiments

5. Discussion

5.1. Ablation Study

- (1) a model utilizing only the AgQsBERT encoder;

- (2) a baseline model using only the BiLSTM module;

- (3) the full BERNN model integrating both AgQsBERT and BiLSTM.

5.2. Comparative Model Performance Analysis

5.3. Limitations and Future Work

6. Results

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AES | Agricultural Expert Systems |

| BERNN | Bidirectional Encoder Recurrent Neural Network |

| BiLSTM | Bidirectional Long Short-Term Memory |


| NLP | Natural Language Processing |

| AgQsBERT | Agricultural Question Bidirectional Encoder Representations from Transformers |

| CKA-FSVM | Centered Kernel Alignment-based Fuzzy Support Vector Machine |

| BERT | Bidirectional Encoder Representations from Transformers |

| PDCS | Pest and Disease Interrogative Classification System |

| BiGRU | Bidirectional Gated Recurrent Unit |

| TextCNN | Text Convolutional Neural Network |

| Q&A | Question and Answer |

| ERNIE | Enhanced Representation through kNowledge IntEgration |

| DPCNN | Deep Pyramidal Convolutional Neural Network |

| Multi-CNN | Multi-scale Convolutional Neural Network |

| LLMs | Large Language Models |


| ID | Question | Answer | Category |

|---|---|---|---|

| 1 | How can Mount Huangshan squirrels be raised? | The diet for artificially raised squirrels is categorized into two types: green feed and concentrated feed. Green feed primarily consists of carrots, water spinach, various fresh fruits, and other fresh vegetables. Concentrated feed includes ingredients such as wheat bran, corn flour, soybean flour, and grains, supplemented with small amounts of salt, yeast powder, bone meal, honey, and trace elements. | Breeding |

| 2 | Can clownfish be raised in fresh water? | Clownfish cannot be kept in freshwater because they are ornamental marine fish that require a saltwater environment. They can only thrive in natural seawater or in water made by adding sea salt to replicate seawater conditions. Attempting to raise clownfish in freshwater will result in their inability to adapt, ultimately leading to their death. | Fishery |

| 3 | What are the advantages of deep cultivation of wheat? | Enhancing the depth of deep cultivation in the soil can encourage the extension and expansion of wheat roots, ensuring they receive adequate nutrition. Additionally, sufficiently deep cultivation helps prevent the loss of water and fertilizers, which significantly benefits the nutrient absorption of wheat roots. This practice improves the soil’s ability to retain water and nutrients, strengthens the topsoil, promotes wheat growth, and ultimately increases yield. | Planting |

| 4 | How are potatoes processed into vinegar essence? | Potatoes will be cleaned, boiled, and added to amylase for saccharification; then, yeast will be added for fermentation into alcohol and bacteria fermentation into acetic acid. Finally, distillation and concentration are carried out to obtain a high concentration of vinasse. The whole process needs to be strictly controlled in terms of temperature, sanitary conditions, and good safety protections. | Processing |

| 5 | What are the technical aspects of growing edibles in season? | Winter mushroom planting should be temperature-controlled, moisturized (8–20 °C/80–90% humidity for flat mushrooms), and ventilated in the morning and evening to prevent smothering of the bag. The bag must be covered with heat preservation and disinfected regularly to prevent mold and mildew. | Edible fungi |

| 6 | How can AI optimize planting decisions? | AI analyzes data on weather, soil, and crop growth; combines machine learning to predict yields, pest and disease risks; optimal planting plans; and intelligently recommends sowing times, water and fertilizer dosages, etc. to help farmers accurately manage, reduce costs, and improve yields and quality. | Technology consulting |

| 7 | How can peach tree pests and diseases be controlled? | Cleaning and disinfecting the garden, alternately spraying low-toxicity pesticides, hanging insect traps, strengthening pruning and ventilation, and scientific fertilizer management. | Disease and insects |

| Category | Number of Entries | Thematic Words |

|---|---|---|

| Breeding | 16,715 | Feeding, Breeding, Preventive measures, Treatment approaches |

| Planting | 50,412 | Cultivation methods, Field control, High output |

| Processing | 2733 | Manufacturing, Production, Preservation, Craft techniques |

| Fishery | 8065 | Fish farming, Reproduction, Ponds, and Aquatic goods |

| Edible Fungi | 3458 | Shiitake, Edible fungi, Oyster mushroom, Cultivation techniques |

| Technology Consulting | 5154 | Scientific advancements, Agriculture, Growth, Technology |

| Disease and Insects | 24,110 | Disease identification, Insect pest identification |

| Parameters | Value |

|---|---|

| Batch_size | 128 |

| Maximum sequence length | 32 |

| Hidden_size | 768 |

| Epoch | 10 |

| Learning_rate | 5 × 10⁻⁵ |

| Dropout | 0.4 |

| Filter_sizes | (2, 3, 4) |

| Num_filters | 256 |

| Optimizer | Adam |

| Loss function | CrossEntropy |

| Max_Position_Embedding | 512 |

| Attention_Heads_Num | 6 |

| Hidden_Layers_Num | 6 |

| Pooler_FC_Size | 768 |

| Pooler_Attention_Heads_Num | 6 |

| Pooler_FC_Layers_Num | 3 |

| Pooler_Perhead_Num | 128 |

| Vocab_Size | 18,000 |

| Rnn_Hidden | 768 |

| Activation | Softmax |

| LR | Loss | Accuracy (%) | Precision_w (%) | Recall_w (%) | F1_w (%) |

|---|---|---|---|---|---|

| 1 × 10⁻⁵ | 0.12 | 96.97 | 97.03 | 96.98 | 96.98 |

| 2 × 10⁻⁵ | 0.11 | 97.09 | 97.14 | 97.09 | 97.09 |

| 3 × 10⁻⁵ | 0.11 | 96.96 | 97.01 | 96.96 | 96.96 |

| 4 × 10⁻⁵ | 0.11 | 97.05 | 97.10 | 97.05 | 97.05 |

| 5 × 10⁻⁵ | 0.12 | 97.19 | 97.22 | 97.19 | 97.19 |

| 6 × 10⁻⁵ | 0.12 | 96.63 | 96.69 | 96.63 | 96.63 |

| Dropout | Loss | Accuracy (%) | Precision_w (%) | Recall_w (%) | F1_w (%) |

|---|---|---|---|---|---|

| 0.1 | 0.12 | 96.77 | 96.89 | 96.77 | 96.79 |

| 0.2 | 0.12 | 96.95 | 97.01 | 96.95 | 96.95 |

| 0.3 | 0.12 | 97.04 | 97.07 | 97.04 | 97.04 |

| 0.4 | 0.12 | 97.19 | 97.22 | 97.19 | 97.19 |

| 0.5 | 0.12 | 96.95 | 96.99 | 96.95 | 96.95 |

| 0.6 | 0.12 | 96.93 | 97.01 | 96.93 | 96.94 |

| Model | Loss | Accuracy (%) | Precision_w (%) | Recall_w (%) | F1_w (%) |

|---|---|---|---|---|---|

| AgQsBERT | 0.11 | 96.83 | 96.93 | 96.83 | 96.84 |

| BiLSTM | 0.23 | 93.96 | 93.91 | 93.96 | 93.88 |

| AgQsBERT-BiLSTM (BERNN) | 0.12 | 97.19 | 97.22 | 97.19 | 97.19 |

| Model | Batch_Size | Maximum Sequence Length | Epoch | Learning_Rate | Dropout | Num_Filters | Hidden_Size |

|---|---|---|---|---|---|---|---|

| DPCNN | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | 250 | / |

| BiLSTM | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | / | 128 |

| BiGRU | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | / | 128 |

| CNN | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | 256 | / |

| BiLSTM-CNN | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | / | 256 |

| BiGRU-CNN | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | / | 256 |

| BERT-CNN | 128 | 32 | 10 | 3 × 10⁻⁵ | 0.4 | / | 768 |

| BERT-DPCNN | 128 | 32 | 10 | 3 × 10⁻⁵ | / | 128 | 768 |

| Model | Loss | Accuracy (%) | Precision_w (%) | Recall_w (%) | F1_w (%) |

|---|---|---|---|---|---|

| DPCNN | 0.12 | 96.62 | 96.66 | 96.62 | 96.58 |

| BiLSTM | 0.23 | 93.96 | 93.91 | 93.96 | 93.88 |

| BiGRU | 0.22 | 93.86 | 93.85 | 93.86 | 93.80 |

| CNN | 0.17 | 95.23 | 95.22 | 95.23 | 95.20 |

| BiLSTM-CNN | 0.19 | 94.96 | 94.97 | 94.96 | 94.94 |

| BiGRU-CNN | 0.18 | 94.97 | 94.96 | 94.97 | 94.92 |

| BERT-CNN | 0.11 | 96.74 | 96.84 | 96.74 | 96.75 |

| BERT-DPCNN | 0.11 | 96.90 | 96.92 | 96.90 | 96.90 |

| BERNN | 0.12 | 97.19 | 97.22 | 97.19 | 97.19 |

| Model | Loss | Accuracy (%) | Precision_w (%) | Recall_w (%) | F1_w (%) |

|---|---|---|---|---|---|

| DPCNN | 0.32 | 89.93 | 89.97 | 89.93 | 89.92 |

| BiLSTM | 0.43 | 86.44 | 86.51 | 86.44 | 86.42 |

| BiGRU | 0.45 | 85.32 | 85.28 | 85.32 | 85.27 |

| CNN | 0.35 | 88.81 | 88.84 | 88.81 | 88.81 |

| BERT-CNN | 0.28 | 91.05 | 91.01 | 91.01 | 91.02 |

| BiLSTM-CNN | 0.36 | 88.05 | 88.10 | 88.05 | 88.03 |

| BiGRU-CNN | 0.34 | 89.00 | 89.01 | 89.00 | 88.97 |

| BERT-DPCNN | 0.31 | 90.74 | 90.92 | 90.74 | 90.76 |

| BERNN | 0.30 | 90.76 | 90.81 | 90.76 | 90.77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Zhang, M.; Guo, X.; Zhang, J.; Sun, J.; Yun, X.; Zheng, L.; Zhao, W.; Li, L.; Zhang, H. BERNN: A Transformer-BiLSTM Hybrid Model for Cross-Domain Short Text Classification in Agricultural Expert Systems. Symmetry 2025, 17, 1374. https://doi.org/10.3390/sym17091374
