Abstract

Symmetry in differential evolution (DE) transforms a solution without affecting the family of solutions. For symmetric problems in differential equations, DE is a strong evolutionary algorithm for solving global optimization problems. DE/best/1 and DE/rand/1 are the two most commonly used mutation strategies in DE. The former provides better exploitation, while the latter ensures better exploration. DE/Neighbor/1 is an improved form of DE/rand/1 that maintains a balance between exploration and exploitation and was used in the random neighbor-based differential evolution (RNDE) algorithm. However, this mutation strategy slows down convergence. It should reach the global minimum within a budget of 1000 × D, where D is the dimension, but because of the trade-off between exploration and exploitation it cannot do so for some of the objective functions. To overcome this issue, this paper introduces a new, enhanced mutation strategy, DE/Neighbor/2, together with an improved random neighbor-based differential evolution (IRNDE) algorithm. Like DE/Neighbor/1, the new DE/Neighbor/2 mutation strategy uses neighbor information; in addition, it adds weighted differences, a choice made after various tests. DE/Neighbor/2 and IRNDE were tested on the same 27 commonly used benchmark functions on which the DE/Neighbor/1 mutation strategy and RNDE were tested. Experimental results demonstrate that the DE/Neighbor/2 mutation strategy and the IRNDE algorithm converge better and faster overall than the DE/Neighbor/1 mutation strategy and the RNDE algorithm. The parametric significance test shows a significant difference between the performance of the RNDE and IRNDE algorithms at the 0.05 level of significance.

1. Introduction

The modern world has entered the post-petascale era: the demand for computation and data processing is growing exponentially, and the trend has shifted from serial execution to high-performance computation. Achieving high-performance computation requires tackling several hurdles. Examples are problems whose solutions barely exist or are very hard to find, e.g., NP-complete problems. Well-known heuristic techniques provide solutions to these types of problems, but those solutions are not completely optimized. However, optimization algorithms such as differential evolution (DE) and particle swarm optimization (PSO) can still find optimized solutions to such problems.

This paper also discusses the variants of DE. The original DE was first proposed by Storn and Price [1] in 1995 and drew the attention of many researchers because it was a simple algorithm that provided optimized solutions to many real-world problems. Building on the original DE algorithm, different variants were introduced later. Well-known approaches include hybridization with other techniques, modification of mutation strategies, adaptation of the mutation strategy and parameter settings, and use of neighbor information.

1.1. Problem Statement

Becoming trapped in local optima is a challenging issue when the population of the differential evolution algorithm loses its diversity. The selection of parents is important for incorporating diversity in the mutation and crossover operations of the DE algorithm. Perturbing a vector so that the population evolves only around its neighborhood leaves the search stuck in local optima, because the exploration and exploitation capabilities of the algorithm are imbalanced. The RNDE algorithm [2] utilizes only one difference vector and a neighbor-best vector from a set of N neighbors, where N is taken from the interval between a lower bound and an upper bound. The resulting lack of diversity degrades the convergence speed of the RNDE algorithm.

Algorithm 1: Improved Random Neighbor-Based Differential Evolution

1.2. Research Significance

The number of parents used in the perturbation of an individual is considered important in the evolution of the DE algorithm. The RNDE algorithm utilizes a single difference vector, which reduces the diversity in the population; as a result, the algorithm converges slowly. Perturbing one neighborhood-best vector leads to more exploitation than exploration, so the search ultimately becomes stuck in local optima. This can be fixed by increasing the exploration capability of the DE algorithm.

1.3. Research Contributions

- This paper presents a novel mutation strategy for the RNDE algorithm that maintains the balance between exploration and exploitation in the DE algorithm. The proposed IRNDE increases the convergence speed and improves the average solution quality.

- Experimental results show that the improved RNDE algorithm outperforms the RNDE algorithm on the standard test suite of benchmark functions.

- Convergence graphs confirm the quick convergence of the proposed IRNDE algorithm, and statistical results show the significance of the improvement.

1.4. Research Question and Hypothesis

- How can population diversity be increased and a balance between exploration and exploitation be maintained during the evolution process of the RNDE algorithm?

- Is there a significant difference between the performance of the RNDE algorithm and that of the proposed algorithm?

The rest of the paper is organized as follows. Section 2 shows how the DE algorithm works; a brief literature review is presented in Section 3; materials and methods are given in Section 4; results and discussion are presented in Section 5; the statistical analysis is given in Section 5.4; and the conclusion and future work are given in the last section.

2. Principle of the Classical Differential Evolution Algorithm

As mentioned earlier, the purpose of the DE algorithm is to provide optimized solutions [3]. The algorithm keeps searching for the best individual in the given population [4]. DE is also considered able to solve a problem for immediate goals using a given population and a set of parameters [5]. Like genetic algorithms, it is a population-based algorithm that uses crossover and mutation as operators, followed by a selection step. Moreover, it is self-adaptive, and all solutions have the same chance of being selected as parents, no matter what their fitness values are [6]. DE is a parallel direct search method that uses NP (the population size) D-dimensional parameter vectors. Each individual of generation G is represented by $x_{i,G}$, $i = 1, 2, \ldots, NP$. A new individual is obtained by applying the mutation, crossover, and selection operators. The new offspring is then compared with its parent on the basis of fitness, and whichever is fitter is kept, whether it is the offspring or the parent. This selection is greedy: it chooses between the target vector and the trial vector [7]. The classical DE thus works in three phases: mutation, crossover, and selection.

2.1. Mutation Phase

The mutation phase generates a mutant vector, also called a donor vector, that is then used in the crossover operation. For each target vector $x_{i,G}$, $i = 1, 2, \ldots, NP$, the donor vector is generated according to

$$v_{i,G+1} = x_{r_1,G} + F \cdot (x_{r_2,G} - x_{r_3,G})$$

During generation $G$, this equation generates the donor vector $v_{i,G+1}$, where $r_1, r_2, r_3 \in \{1, 2, \ldots, NP\}$ are mutually different integers and $F > 0$. The random integers $r_1$, $r_2$, and $r_3$ are also chosen to be different from the running index $i$; thus, NP must be greater than or equal to four to meet this condition. $F$ is a real, constant factor, $F \in [0, 2]$, that controls the amplification of the differential variation $(x_{r_2,G} - x_{r_3,G})$. Geometrically, $v_{i,G+1}$ is the point reached from $x_{r_1,G}$ by adding the scaled difference vector, which is easy to picture in two dimensions.
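The mutation step can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `mutate_rand_1`, the list-of-lists population, and the fixed seed are assumptions made here for the example.

```python
import random


def mutate_rand_1(population, i, F, rng):
    """Return the donor vector x_r1 + F * (x_r2 - x_r3) for target index i."""
    # r1, r2, r3 must be mutually different and different from i, hence NP >= 4.
    candidates = [k for k in range(len(population)) if k != i]
    if len(candidates) < 3:
        raise ValueError("NP must be at least 4")
    r1, r2, r3 = rng.sample(candidates, 3)
    return [
        a + F * (b - c)
        for a, b, c in zip(population[r1], population[r2], population[r3])
    ]


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
population = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(6)]
donor = mutate_rand_1(population, i=0, F=0.5, rng=rng)
```

With `F = 0` the donor collapses to the randomly chosen base vector, which shows that all of the perturbation comes from the weighted difference.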

2.2. Crossover Phase

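Crossover is introduced to increase the diversity of the perturbed parameter vectors [8]. The trial vector is

$$u_{i,G+1} = (u_{1i,G+1}, u_{2i,G+1}, \ldots, u_{Di,G+1})$$

where

$$u_{ji,G+1} = \begin{cases} v_{ji,G+1} & \text{if } randb(j) \le CR \text{ or } j = rnbr(i) \\ x_{ji,G} & \text{if } randb(j) > CR \text{ and } j \ne rnbr(i) \end{cases} \qquad j = 1, 2, \ldots, D.$$

In the crossover phase, $randb(j)$ is the $j$th evaluation of a uniform random number generator with outcome in $[0, 1]$. CR is the crossover constant in $[0, 1]$, and it is set by the user. $rnbr(i)$ is a randomly chosen index in $\{1, 2, \ldots, D\}$ which ensures that $u_{i,G+1}$ always obtains at least one parameter from $v_{i,G+1}$.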
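A short Python sketch of this binomial crossover follows. The name `binomial_crossover` and the toy vectors are assumptions chosen for illustration; `j_rand` plays the role of the randomly chosen index that guarantees at least one donor component.

```python
import random


def binomial_crossover(target, donor, CR, rng):
    """Build the trial vector component by component from donor and target."""
    D = len(target)
    j_rand = rng.randrange(D)  # this component is always taken from the donor
    trial = []
    for j in range(D):
        if rng.random() <= CR or j == j_rand:
            trial.append(donor[j])
        else:
            trial.append(target[j])
    return trial


rng = random.Random(1)
target = [0.0] * 5
donor = [1.0] * 5
trial_low = binomial_crossover(target, donor, CR=0.0, rng=rng)
trial_high = binomial_crossover(target, donor, CR=1.0, rng=rng)
```

With `CR = 0` the trial vector still inherits the single component `j_rand` from the donor, and with `CR = 1` it is a copy of the donor.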

2.3. Selection Phase

The selection phase decides whether an individual becomes a member of generation $G + 1$. The trial vector $u_{i,G+1}$ is always compared with the target vector $x_{i,G}$ using the greedy approach: if $u_{i,G+1}$ yields a smaller fitness value than $x_{i,G}$, then $x_{i,G+1}$ is set to $u_{i,G+1}$; otherwise, the old value $x_{i,G}$ is retained [1].
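The three phases can be combined into one generation loop. The sketch below is a minimal classical DE under stated assumptions: the sphere function as a stand-in objective, the parameter values `NP=30`, `F=0.5`, `CR=0.9`, no bound handling after mutation, and a fixed seed. None of these settings are taken from the paper.

```python
import random


def sphere(x):
    """Simple test objective with global minimum 0 at the origin."""
    return sum(v * v for v in x)


def classical_de(objective, bounds, NP=30, F=0.5, CR=0.9, generations=100, seed=0):
    """Run DE/rand/1 with binomial crossover; return best fitness per generation."""
    rng = random.Random(seed)
    D = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(NP)]
    fit = [objective(x) for x in pop]
    history = [min(fit)]
    for _ in range(generations):
        next_pop, next_fit = [], []
        for i in range(NP):
            # Mutation: three distinct indices, all different from i.
            r1, r2, r3 = rng.sample([k for k in range(NP) if k != i], 3)
            donor = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(D)]
            # Crossover: at least one component (j_rand) comes from the donor.
            j_rand = rng.randrange(D)
            trial = [
                donor[j] if (rng.random() <= CR or j == j_rand) else pop[i][j]
                for j in range(D)
            ]
            # Selection: keep the trial only if it has a smaller objective value.
            f_trial = objective(trial)
            if f_trial < fit[i]:
                next_pop.append(trial)
                next_fit.append(f_trial)
            else:
                next_pop.append(pop[i])
                next_fit.append(fit[i])
        pop, fit = next_pop, next_fit
        history.append(min(fit))
    return history


history = classical_de(sphere, bounds=[(-5.0, 5.0)] * 5)
```

Because selection is greedy, the best fitness recorded in `history` can never get worse from one generation to the next.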

2.4. Commonly Used Mutation Strategies

Because the focus of the current study is DE with neighbor information, the group of mutation strategies most commonly used in classical DE and in other variants of DE [9] is given below

The above-mentioned mutation strategies are used not only in DE algorithms based on neighbor information but also by researchers working on other DE variants. These strategies are also used in the classical version of DE.
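For reference, the forms below are the ones usually given for these strategies in the DE literature, written in the notation of Section 2.1. Which strategies to show is an assumption made here and not necessarily the exact list of [9]; $x_{best,G}$ denotes the best individual of generation $G$, and $r_1, \ldots, r_5$ are mutually different random indices that also differ from $i$.

```latex
\begin{align*}
\text{DE/rand/1:} \quad & v_{i,G+1} = x_{r_1,G} + F \cdot (x_{r_2,G} - x_{r_3,G}) \\
\text{DE/best/1:} \quad & v_{i,G+1} = x_{best,G} + F \cdot (x_{r_1,G} - x_{r_2,G}) \\
\text{DE/current-to-best/1:} \quad & v_{i,G+1} = x_{i,G} + F \cdot (x_{best,G} - x_{i,G}) + F \cdot (x_{r_1,G} - x_{r_2,G}) \\
\text{DE/rand/2:} \quad & v_{i,G+1} = x_{r_1,G} + F \cdot (x_{r_2,G} - x_{r_3,G}) + F \cdot (x_{r_4,G} - x_{r_5,G}) \\
\text{DE/best/2:} \quad & v_{i,G+1} = x_{best,G} + F \cdot (x_{r_1,G} - x_{r_2,G}) + F \cdot (x_{r_3,G} - x_{r_4,G})
\end{align*}
```

The strategies differ only in the choice of base vector (random, best, or current) and in the number of weighted difference vectors added to it.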

2.5. Major Contributions of Study

A number of studies in the literature address the local optima issue, the balance between exploration and exploitation, the convergence speed, and the solution quality of the DE algorithm. Variants introduced to maintain the balance between exploration and exploitation and to improve the convergence performance of DE include tournament selection-based DE [10], rank-based DE [11], fuzzy-based DE [12], self-adaptive DE [13], adaptive DE [14], and pool-based DE [15].

There are two commonly used mutation strategies for DE. The first is DE/best/1, which provides better exploitation because it uses the best individual of the population as the base vector, but it results in poor exploration. The second is DE/rand/1, in which exploration is better because the base vector is chosen randomly, but exploitation is poor. Neither strategy balances exploration and exploitation. To overcome this issue, the DE/Neighbor/1 mutation strategy and the random neighbor-based differential evolution (RNDE) algorithm were introduced in [2] and tested on 27 extensively used benchmark functions. The authors stated that DE/Neighbor/1 and the RNDE algorithm are successful in maintaining the balance between exploration and exploitation, which is controlled through lower and upper bound limits. However, this mutation strategy converges slowly. It should reach the global minimum within a budget of 1000 × D, but because of the trade-off between exploration and exploitation it is unable to do so for some of the objective functions.

This study introduces a new approach based on the RNDE variant, namely, the improved random neighbor-based differential evolution (IRNDE). Like RNDE, the proposed algorithm uses neighbor information; in addition, it introduces weighted differences, a choice made after various tests. The proposed IRNDE is tested on the same 27 commonly used benchmark functions on which RNDE was tested, and experiments are performed to compare its performance with that of RNDE. The results demonstrate the faster convergence of IRNDE and its superior performance compared to RNDE.

3. Related Work

Many researchers have proposed models and techniques to improve the DE algorithm so that it provides better and more optimized results [16,17]. A few researchers have combined other techniques or algorithms with DE to form hybrids that obtain more optimized and satisfactory results. The DE algorithm has attracted many scholars around the globe, and their work can be categorized as follows.

3.1. Hybridization with Other Techniques

The study [18] proposed a hybrid algorithm, CADE, which combines a customized canonical version of the cultural algorithm (CA) with DE. The canonical CA uses the 'Accept()' function to select the best individual from the population, which is then used to update the knowledge source in the belief space through the 'Update()' function. The 'Influence()' function selects the knowledge source that affects the evolution of the next generation of the population. The authors state that, in CA, the major source of exploration is topographic knowledge, that is, knowledge about the functional landscape. DE can provide a complementary source of exploration knowledge, which makes it a good complement to CA. Both algorithms share the same population space and hence follow high-level teamwork.

The study [19] proposed ADE-ALC, an adaptive DE algorithm with an aging leader and challengers, for solving optimization problems. The mechanism is introduced into the framework of DE and helps to maintain the diversity of the population, which is critical when solving multimodal optimization problems. ADE-ALC reaches the optimal solution with fast convergence. In the ADE-ALC approach, the key parameters are updated according to a given probability distribution so that the next generation can learn from previous successful experience. The effectiveness of ADE-ALC was checked on twenty-five benchmark test functions, where it showed better or at least competitive optimization performance in statistical terms.

The authors of [20] proposed a hybrid technique that combines the DE algorithm with the stochastic fractal search algorithm to provide statistically better performance on optimization problems. The hybrid draws on the strengths of both algorithms and produces better results than either algorithm alone. To test the performance of the hybrid approach, they used the 30 benchmark functions of the IEEE CEC2014 suite. The results show better performance and statistical superiority of the hybrid approach over the single algorithms.

3.2. Modification of Mutation Strategies

The study [21] proposed an approach to improve the search efficiency of the DE algorithm. The performance of DE is strongly affected by parameter settings and evolutionary operators, e.g., the mutation, crossover, and selection processes. To address this, the authors proposed a combined mutation strategy, together with a guiding individual-based parameter setting method and a diversity-based selection strategy. The proposed algorithm divides the population into two sub-populations, superior and inferior. Experiments were performed on the CEC 2005 and CEC 2014 benchmarks. Because its selection differs from greedy selection strategies, the authors report more efficient results than previously proposed techniques.

The study [22] points out that DE uses only the best solution to deal with global optimization problems and that mutation strategies in the existing literature likewise utilize only one best solution. The authors challenged this and introduced the concept of m best candidates, arguing that selecting m best candidates yields a better gain. Their collective information-powered DE (CIPDE) algorithm obtains the m best candidates to enhance the power of DE. Experiments on the CEC2013 benchmark functions indicate that CIPDE performs much better than existing mutation strategies.

The study [23] improved the structure of the DE algorithm. The authors argue that the performance of DE depends on the control parameters and the mutation strategy, so better results can be obtained by improving the selection of both. An automated scheme produces an evolution matrix that takes the place of the crossover rate control parameter, Cr. Furthermore, the parameter F is renewed during the evolution process, and a mutation strategy with a time stamp mechanism is also advanced in this study. The experimental results showed that the proposed technique is very competitive with existing strategies.

3.3. Adaptation of Mutation Strategy and Parameter Settings

The study [24] proposed an algorithm that uses problem landscape information and the performance histories of operators to dynamically select the most suitable DE operator during the evolution process. The approach is motivated by the fact that existing works predominantly use a single mutation strategy. The authors instead use multiple mutation strategies, and algorithms based on multiple mutation strategies are reported to provide far better results than those based on a single one. In such algorithms, the emphasis is on identifying the better-performing evolutionary operator, based entirely on performance history, for creating new offspring. This procedure is carried out dynamically and selects the most suitable evolutionary operator. Experimental results on 45 optimization problems show the efficacy of the proposed algorithm.

The study [21] proposed an improved version of the DE algorithm. First, the search strategy of DE is improved by using the information of individuals to set the parameters of DE and update the population, and a combined mutation strategy is produced by combining two single mutation strategies. Second, the fitness values of the original and guiding individuals are used. Finally, a diversity-based selection strategy is developed by applying a greedy selection strategy. The performance is evaluated on the CEC 2005 and CEC 2014 benchmarks, and better results are reported.

The study [25] investigates a high-level ensemble of the mutation strategies of DE algorithms. For this purpose, a multi-population-based framework (MPF) was introduced. An ensemble of differential evolution variants (EDEV) based on three highly popular and efficient DE variants is utilized: JADE (adaptive DE with optional external archive), CoDE (DE with composite trial vector generation strategies and control parameters), and EPSDE (DE with an ensemble of parameters and mutation strategies) are combined. Furthermore, the whole population of EDEV is divided into four subpopulations. EDEV was tested on the CEC 2005 and CEC 2014 benchmarks, where it showed better performance.

3.4. Use of Neighbor Information

The study [26] proposed an adaptive social learning (ASL) strategy for the DE algorithm that extracts the neighborhood relationship information of individuals in the current population; the result is called social learning DE (SL-DE). In the classical DE algorithm, the parents used in mutation are selected randomly from the population. In the ASL strategy, the selection of parents is intelligently guided, and every individual can only interact with its neighbors and parents. To check its efficacy, SL-DE was applied to advanced DE algorithms. The results demonstrate that SL-DE can achieve better performance than most existing DE variants.

The study [27] applied index-based neighborhoods in DE for global numerical optimization, using neighborhood information from the population to enhance the performance of DE. The authors note that the neighborhood information of the current population had not been systematically exploited in DE design and proposed neighborhood-adaptive DE (NaDE), which is based on a pool of index-based neighborhood topologies. First, several neighborhood relationships for each individual are recorded and later used adaptively for the selection of a specific function. Second, a neighborhood-directional mutation operator is introduced in NaDE to generate a new solution within the designated neighborhood topology. Finally, NaDE is easy to operate and implement and can be combined with earlier DE versions on different kinds of optimization problems.

The authors of [2] proposed enhancing DE with a random neighbors-based strategy. Traditionally, the DE/rand/1 and DE/best/1 mutation strategies are used with DE. In DE/rand/1, the base vector is chosen randomly from the population for better exploration. The DE/best/1 strategy, on the other hand, has better exploitation and poor exploration. To overcome this issue, the authors proposed DE/Neighbor/1: for each individual at every generation, the neighbors are chosen randomly from the population, and the base vector of the DE/Neighbor/1 mutation strategy is the best one among these neighbors.

Xiong et al. [28] introduced a speciation-based DE algorithm that uses an adaptive neighborhood mechanism for multimodal benchmark functions. They used an archive to store inferior individuals in each iteration and removed similar-performing individuals through a crowding relief mechanism. In their approach, the parameters can be fine-tuned adaptively. Liao et al. [29] solved systems of non-linear equations using the DE algorithm. They utilized neighborhood-based information to increase the exploitation capability of DE; the size of the neighborhood is selected dynamically, with parameter adaptation adjusted to the state of evolution. The search efficiency of the DE algorithm was enhanced, and significant results were achieved. The research work [30] presented binary differential evolution based on a self-adaptive neighborhood method for change detection in super-pixels, where a binary DE mutation strategy is used to reduce the dimension of the super-pixels.

Lio et al. [31] introduced a variable neighborhood-based DE algorithm that utilizes a history archive. The neighborhood size is controlled dynamically during the evolution process, and information is exchanged between the current population and the population stored in the archive, which helps the search to escape from local optima. Liu et al. [32] considered the economic dispatch problem by incorporating a direction-induced strategy into a neighborhood-based DE algorithm. Their new mutation strategy, a neighborhood-based non-elite direction strategy, enhances the exploitation capability of the algorithm. Sheng et al. [33] introduced adaptive neighborhood-based mutation in the DE algorithm. The technique helps DE to focus on an intensive search after an initial search, and a Gaussian local search is used to evolve promising individuals during the search process.

Wang et al. [34] introduced an adaptive memetic-based neighborhood crossover strategy. They used multi-niching sampling to evolve sub-populations and ensure an intensive search, and they designed an adaptive elimination-based local search. Their neighborhood crossover strategy focuses on the exploitation capability of DE to encourage good-quality solutions. Cai et al. [35] presented a self-organizing DE algorithm that guides the search process by utilizing neighborhood information; individuals are adjusted adaptively using cosine similarity in the self-organizing map. Segredo et al. [36] proposed a proximity-based neighborhood in the DE algorithm that helps to balance exploration and exploitation during the evolution process. They used the Euclidean distance to measure the similarity between neighboring individuals and termed the method a similarity-based neighborhood search.

Baioletti et al. [37] presented algebraic differential evolution based on a variable neighborhood concept. Their algorithm uses the information of three neighborhoods for shifting and swapping in order to form permutations. Tian and Gao [38] introduced an adaptive evolution method that uses a neighborhood mechanism in the DE algorithm. They used a selection probability for choosing individuals, as well as two neighborhood-based mutation operators, to improve the evolution process, and a simple reduction method to adjust the population size and incorporate diversity. Tarkhaneh and Moser [39] performed cluster analysis by incorporating a neighborhood search and an Archimedean spiral in the DE algorithm. The Mantegna Lévy flight mechanism was used with the Archimedean spiral to generate robust solutions that balance exploration and exploitation during the search.

In this section, we analyzed DE variants in terms of mutation strategies, use of neighbor information, hybridization of the DE algorithm, and so on. The experimental results and performance reports from these works indicate that the performance of DE can be enhanced in several ways. Some studies used hybrid approaches to achieve the enhancement, while others relied on combinations of other elements, such as test functions. To some extent, researchers were able to obtain better performance from enhanced versions than from the simple version of the DE algorithm; however, they had to make a trade-off to do so. Many research challenges therefore remain for further improving the performance of DE. This research aims to enhance the concept of random neighbors, with a focus on obtaining faster convergence than the existing random neighbors approach.

4. Materials and Methods

4.1. DE with Random Neighbor-Based Mutation Strategy

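For this research, we selected the random neighbor-based differential evolution (RNDE) approach of [2]. It was proposed to achieve a better balance between exploration and exploitation, which cannot be achieved using the traditional DE/rand/1 and DE/best/1 mutation strategies. The mutation phase of RNDE is given as

$$v_{i,G+1} = x_{nbest_i,G} + F \cdot (x_{r_1,G} - x_{r_2,G})$$

where $x_{nbest_i,G}$ is the best of the $N$ randomly chosen neighbors of individual $i$, and $r_1$ and $r_2$ are mutually different random indices.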

The number of neighbors N plays a critical role in the balance between exploration and exploitation and is controlled through lower and upper bound limits. A small value of N makes the mutation strategy similar to DE/rand/1, which results in better exploration and poor exploitation. In contrast, a large value of N (close to NP) makes the mutation strategy similar to DE/best/1, which provides better exploitation. A large value of N is not a wise choice, because the algorithm can become stuck in a local optimum. The authors also proposed a self-adaptive strategy that dynamically updates the number of neighbors $N_i$ of each individual $x_{i,G}$ between a lower bound $N_{lb}$ and an upper bound $N_{ub}$. The update uses $f_{min}$, the smallest (best) value of the objective function in the population at the current generation, and a very small constant $\varepsilon$ that avoids a zero-division error.

RNDE is successful in maintaining the balance between exploration and exploitation, because it was built to control this balance through the lower and upper bound limits. However, because the whole focus of the RNDE algorithm is on this balance, its mutation strategy makes convergence very slow and thus requires a larger number of iterations to reach the global optimum.
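The neighbor-based mutation described in this subsection can be sketched as follows. This is an illustration under assumptions, not the implementation of [2]: the function name `mutate_neighbor_1` is hypothetical, the neighbors are drawn without the target individual itself, the two difference indices are drawn from the remaining neighbors, and N is passed in as a fixed value instead of being updated self-adaptively.

```python
import random


def mutate_neighbor_1(population, fitness, i, N, F, rng):
    """Donor = best of N random neighbors + F * (difference of two other neighbors)."""
    if N < 3:
        raise ValueError("N must be at least 3 (nbest, r1, r2)")
    others = [k for k in range(len(population)) if k != i]
    neighbors = rng.sample(others, N)  # random neighbors of individual i
    nbest = min(neighbors, key=lambda k: fitness[k])  # minimization
    # Assumption: r1 and r2 are taken from the neighbors other than nbest.
    r1, r2 = rng.sample([k for k in neighbors if k != nbest], 2)
    base, x1, x2 = population[nbest], population[r1], population[r2]
    return [b + F * (p - q) for b, p, q in zip(base, x1, x2)]


rng = random.Random(2)
population = [[rng.uniform(-5.0, 5.0) for _ in range(4)] for _ in range(8)]
fitness = [sum(v * v for v in x) for x in population]
worst = max(range(8), key=lambda k: fitness[k])
best = min(range(8), key=lambda k: fitness[k])
# With N = NP - 1 every other individual is a neighbor, so the base is the global best.
donor_all = mutate_neighbor_1(population, fitness, worst, N=7, F=0.0, rng=rng)
```

The last call illustrates the remark about large N: when every other individual is a neighbor, the base vector is the global best, so the strategy behaves like DE/best/1.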

4.2. Proposed Approach

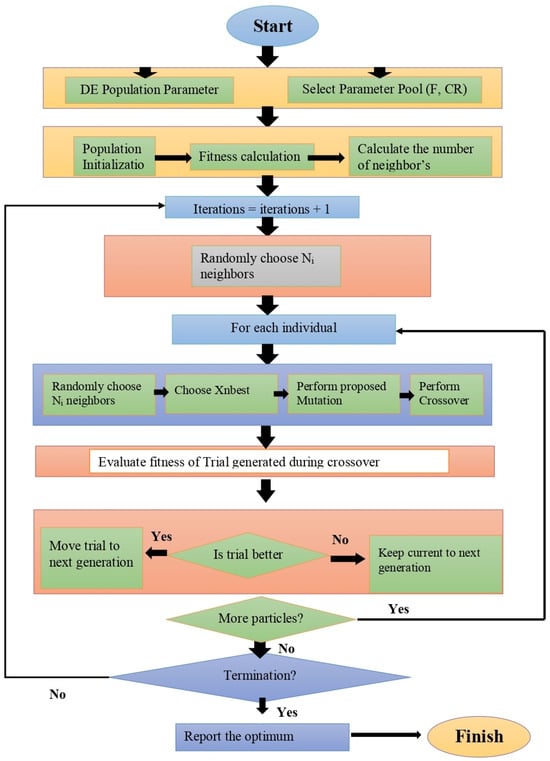

To overcome the slower convergence problem of RNDE, this study proposes an improved random neighbor-based mutation strategy for DE (IRNDE). The flow chart of the proposed IRNDE is given in Figure 1. IRNDE also uses neighbor information, such as the RNDE algorithm and DE/Neighbor/1 mutation strategy; however, in addition, we added another term of weighted differences in the DE/neighbor/2 mutation strategy after various tests. As we added an extra-weighted vector in the mutation phase, the upper and lower bound limits of N, which is denoted by neighbors, are also increased. The proposed IRNDE mutation equation is given as

Figure 1.

Flowchart of proposed IRNDE algorithms.

The original/base RNDE algorithm and DE/Neighbor/1 mutation strategy have one weighted difference vector

On the contrary, the proposed IRNDE algorithm and DE/neighbor/2 mutation strategy have two weighted difference vectors

In addition, the upper and lower bound limits of the number of neighbors are adjusted accordingly. In the base algorithm RNDE with the DE/Neighbor/1 mutation strategy, the lower bound was set to 3 and the upper bound to 10 after experimentation. For the proposed IRNDE with the DE/Neighbor/2 mutation strategy, we set the lower bound to 5 and the upper bound to 12.

We used a lower bound of 5 because the mutation equation involves a minimum of five vectors. Moreover, the amplification factor F in both the base algorithm RNDE and the proposed algorithm IRNDE ranges between 0 and 2 and was varied according to the nature of the objective function: for each function, the value of F was adjusted until the best result was achieved. We faced many difficulties during the implementation of IRNDE. In the RNDE algorithm, N denotes the number of neighbors and is very important in maintaining the balance between exploration and exploitation. If each individual in the population learns the best information from its neighbors, the efficiency of the overall algorithm is enhanced and fitter offspring can be obtained.
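A sketch of a mutation with two weighted difference vectors shows why five neighbors are the minimum. As before, this is an illustration under assumptions: the name `mutate_neighbor_2` is hypothetical, the same F weights both differences, and all four difference indices are drawn from the neighbors other than the neighbor best.

```python
import random


def mutate_neighbor_2(population, fitness, i, N, F, rng):
    """Donor = best of N random neighbors + two weighted difference vectors."""
    if N < 5:
        raise ValueError("N must be at least 5 (nbest, r1, r2, r3, r4)")
    others = [k for k in range(len(population)) if k != i]
    neighbors = rng.sample(others, N)
    nbest = min(neighbors, key=lambda k: fitness[k])  # minimization
    # Assumption: the four difference indices come from the remaining neighbors.
    r1, r2, r3, r4 = rng.sample([k for k in neighbors if k != nbest], 4)
    D = len(population[i])
    return [
        population[nbest][j]
        + F * (population[r1][j] - population[r2][j])
        + F * (population[r3][j] - population[r4][j])
        for j in range(D)
    ]


rng = random.Random(4)
population = [[rng.uniform(-5.0, 5.0) for _ in range(4)] for _ in range(13)]
fitness = [sum(v * v for v in x) for x in population]
donor_min = mutate_neighbor_2(population, fitness, i=0, N=5, F=0.5, rng=rng)
donor_max = mutate_neighbor_2(population, fitness, i=0, N=12, F=0.5, rng=rng)
```

With `N = 5` the four remaining neighbors are all used in the two differences, so a smaller neighborhood cannot supply enough distinct vectors.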

Another major change from the original DE, used by both RNDE and the proposed IRNDE, is the dynamic update of CR when the trial vector is worse than the target (current) vector. The idea is that if the trial vector is worse than the target vector, i.e., $f(u_{i,G+1}) > f(x_{i,G})$, the current CR cannot provide the best solution and needs to be updated: if a large value of CR is not suitable, the method shifts it to a smaller one and, conversely, if a small value of CR is not suitable, the method shifts it to a larger one. This strategy, called the adaptive shift strategy, is based on two terms, $CR_l$ and $CR_s$, and uses a standard deviation of 0.1 and a random number $rand$, which is a real number between 0 and 1. Here $CR_l$ denotes the large and $CR_s$ the small value of CR. After many experiments, $CR_l$ is set to 0.85 and $CR_s$ is set to 0.1. If the fitness of the trial vector is worse than that of the target vector, CR is updated by switching from $CR_l$ to $CR_s$ or from $CR_s$ to $CR_l$.
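One way to picture the adaptive shift strategy is the sketch below. It is an assumption-laden illustration, not the update equations of the paper: CR is drawn from a Gaussian with standard deviation 0.1 around the large or the small mean depending on a 0/1 flag, the sample is clipped to [0, 1], and the flag is inverted whenever the trial vector is worse. The names `sample_cr` and `next_flag`, the clipping, and the mapping of flag 0 to the large mean are all choices made here.

```python
import random

CR_LARGE = 0.85  # large mean value of CR
CR_SMALL = 0.1   # small mean value of CR
SIGMA = 0.1      # standard deviation of the Gaussian


def sample_cr(flag, rng):
    """Draw CR around the large mean (flag 0) or the small mean (flag 1)."""
    mean = CR_LARGE if flag == 0 else CR_SMALL
    # Clipping keeps CR a valid probability; this detail is an assumption.
    return min(1.0, max(0.0, rng.gauss(mean, SIGMA)))


def next_flag(flag, trial_is_worse):
    """Invert the flag only when the trial vector was worse than the target."""
    return 1 - flag if trial_is_worse else flag


rng = random.Random(3)
flag = 0                                       # the flag is initialized with 0
cr_first = sample_cr(flag, rng)                # drawn around the large mean
flag = next_flag(flag, trial_is_worse=True)    # failure: shift to the small mean
cr_second = sample_cr(flag, rng)               # drawn around the small mean
```

The effect is that an individual that keeps failing alternates between learning mostly from the mutant vector and learning mostly from the target vector.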

In IRNDE, the crossover phase is given as

$$u_{ji,G+1} = \begin{cases} v_{ji,G+1} & \text{if } rand_j \le CR \text{ or } j = j_{rand} \\ x_{ji,G} & \text{otherwise} \end{cases} \tag{16}$$

Equation (16) performs the crossover operation in the same way as the classical DE crossover phase. Here $j = 1, 2, \ldots, D$, $j_{rand}$ is an integer chosen randomly from $[1, D]$, $rand_j$ is a random value uniformly distributed in $[0, 1]$, and CR, being a crossover probability, should normally lie in $[0, 1]$.

However, in RNDE and IRNDE, CR is dynamically updated, because if the value of CR is large, then the CR-made trial vector learns more from the mutant vector and less from the target vector; this causes an increase in the population diversity and is contrary to a small value of CR, making the trial vector learn more from the target vector and less from the mutant vector. IRNDE selection phase is denoted as

Equation (17) gives the selection phase of IRNDE, which differs from that of classical DE. In classical DE, a greedy choice is made between the trial vector and the target vector: if the trial vector is better, it replaces the target vector and survives into the next generation. In RNDE and IRNDE, if the trial vector is not better than the target vector, CR is additionally updated dynamically. The quantities involved in these equations are the number of individuals in the population, the number of function evaluations performed so far, the maximum number of function evaluations, the mutant vector generated around each individual (the target vector), the trial vector, the minimum (best) value of the objective function in the population at the current generation, and the smallest constant representable on the computer, which is used to avoid a division-by-zero error. A flag, initialized to 0, is used to invert the current CR setting. CR is the crossover probability; it is generated by a Gaussian distribution with either the large mean value or the small mean value and a standard deviation of 0.1. After some experimentation and surveys, the large mean is set to 0.85 and the small mean is set to 0.1.
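As a rough sketch of how the selection step and the adaptive shift of CR could fit together, consider the following. It assumes minimization, that ties are resolved in favor of the trial vector (as in classical DE), and that sampled CR values are clipped to [0, 1]; the names `sample_cr`, `select_and_adapt`, and `use_large` are hypothetical and are not taken from the paper's equations.

```python
import random

CR_LARGE_MEAN = 0.85  # large mean of CR reported in the text
CR_SMALL_MEAN = 0.1   # small mean of CR reported in the text
CR_STD = 0.1          # standard deviation reported in the text


def sample_cr(use_large, rng):
    """Draw CR from a Gaussian around the active mean (clipping is an assumption)."""
    mean = CR_LARGE_MEAN if use_large else CR_SMALL_MEAN
    return min(1.0, max(0.0, rng.gauss(mean, CR_STD)))


def select_and_adapt(target, trial, objective, use_large, cr, rng):
    """Greedy selection; when the trial is worse, flip the CR mean and resample."""
    if objective(trial) <= objective(target):
        return trial, use_large, cr          # trial survives, CR is kept
    use_large = not use_large                # shift large <-> small mean
    return target, use_large, sample_cr(use_large, rng)


def sphere(x):
    """Simple test objective with global minimum 0 at the origin."""
    return sum(v * v for v in x)


rng = random.Random(0)
survivor, use_large, cr = select_and_adapt(
    [1.0, 1.0], [2.0, 2.0], sphere, use_large=True, cr=0.9, rng=rng
)
```

In this call the trial vector is worse than the target vector, so the target is kept, the flag switches to the small mean, and a new CR is drawn around 0.1.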

5. Results and Discussions

To evaluate the effectiveness of the proposed algorithm IRNDE and the new enhanced mutation strategy, namely DE/Neighbor/2, we utilized the 27 commonly used benchmark functions on which the previous RNDE algorithm and DE/Neighbor/1 mutation strategy were tested. For a fair comparison with the base algorithm, we implemented both RNDE and the proposed IRNDE, using the DE/Neighbor/1 and DE/Neighbor/2 mutation strategies, respectively, with the same parameter settings.

5.1. Parameter Settings

As mentioned earlier, the base RNDE algorithm with the DE/Neighbor/1 mutation strategy has one amplified difference vector, whereas the proposed IRNDE algorithm with the DE/Neighbor/2 mutation strategy uses two amplified difference vectors to generate the mutant (donor) vector. In addition, the upper and lower bound limits for the calculation of neighbors are adjusted accordingly. In the base algorithm, the lower bound for the DE/Neighbor/1 mutation strategy was set to 3 and the upper bound was set to 10 after some research and experimentation. We have improved the mutation equation in our algorithm by adding an extra weighted difference vector in the proposed DE/Neighbor/2 mutation strategy, and we have updated the lower and upper bound limits to 5 and 12, respectively.

The lower bound is set to 5 because the mutation equation requires a minimum of five vectors. Moreover, the amplification factor F in both the base algorithm RNDE and the proposed algorithm IRNDE ranges between 0 and 2 and was varied according to the nature of the objective function; for each function, F was tuned until the best result was achieved. Finally, the selection phase is the same as that of the original DE algorithm except for the updating process of CR, which has already been explained.
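To illustrate why five vectors are the minimum, the sketch below shows one plausible form of a neighbor-based mutation with two weighted differences. It is an assumption made by analogy with classical DE/best/2 and does not reproduce the paper's mutation equation: the number of neighbors is drawn uniformly between the bounds, the best neighbor serves as the base vector, and four further distinct neighbors form the two difference vectors. The function name `neighbor_mutation_2` and its parameters are hypothetical.

```python
import random


def neighbor_mutation_2(population, fitness, i, f, rng, lower=5, upper=12):
    """Assumed neighbor-based mutation: best neighbor plus two weighted differences."""
    # Number of neighbors for individual i (uniform draw is an assumption).
    n = rng.randint(lower, upper)
    candidates = [k for k in range(len(population)) if k != i]
    neighbors = rng.sample(candidates, n)
    # Base vector: the fittest neighbor (minimization).
    best = min(neighbors, key=lambda k: fitness[k])
    # Four more distinct neighbors are needed, hence at least five in total.
    others = [k for k in neighbors if k != best]
    r1, r2, r3, r4 = rng.sample(others, 4)
    xb, x1, x2, x3, x4 = (population[k] for k in (best, r1, r2, r3, r4))
    return [
        b + f * (a1 - a2) + f * (a3 - a4)
        for b, a1, a2, a3, a4 in zip(xb, x1, x2, x3, x4)
    ]


rng = random.Random(3)
population = [[float(k), float(k) + 1.0] for k in range(20)]
fitness = [sum(v * v for v in x) for x in population]
mutant = neighbor_mutation_2(population, fitness, i=0, f=0.5, rng=rng)
```

With a lower bound below 5, the draw of four distinct difference vectors in addition to the base vector could fail, which matches the reasoning given for the bound.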

5.2. Benchmark Functions

For experimental evaluation, we have used a test suite of 27 benchmark functions, which were also used to evaluate the RNDE algorithm. The details of the test suite are provided in Table 1.

Table 1.

Test suite with 27 benchmark functions.

5.3. Results

Table 1 lists the benchmark functions, their ranges, and their global minima. These functions were used to check the performance of both algorithms, RNDE and IRNDE. For the experiments, 5000 iterations were used to evaluate the performance of both algorithms. Convergence graphs are shown only for f1 to f6, whereas tabular data are presented for f1 to f15.

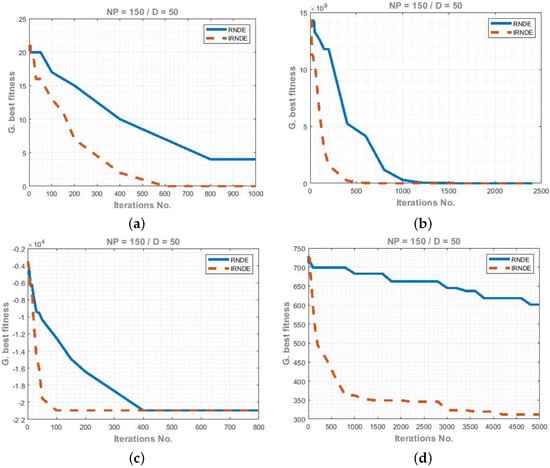

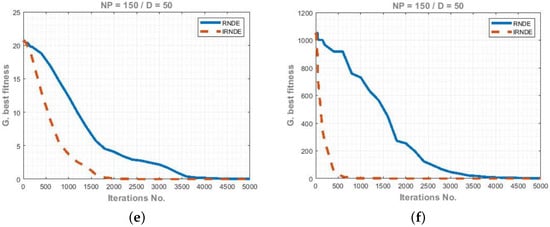

Figure 2 shows the graphical representation of the fitness results of f1 to f6, where iterations are 5000, population NP = 150, and dimension D = 50. Moreover, the overall enhancement of the proposed algorithm IRNDE can be clearly observed.

Figure 2.

Convergence graphs of f1 to f6 for RNDE and IRNDE, each with NP = 150, D = 30, and iterations = 5000: (a) function f1, (b) function f2, (c) function f3, (d) function f4, (e) function f5, and (f) function f6.

The performance of both algorithms, RNDE and IRNDE, is analyzed with respect to variations in the population size (NP) and the number of dimensions (D). Fitness values are reported in Table 2 for population size NP = 150, dimension size D = 50, and iterations = 5000. The results are divided into three groups of five benchmark functions: f1 to f5, f6 to f10, and f11 to f15. It can be clearly observed that the proposed algorithm IRNDE performs far better than the base algorithm RNDE. There is a visible difference, as the fitness value of the proposed algorithm IRNDE decreases more quickly than that of the base algorithm RNDE.

Table 2.

Fitness values of function f1 to f5 for NP = 150, D = 50, and iterations = 5000.

Results given in Table 3 are generated using population size NP = 150, dimension size D = 50, and iterations = 5000 for f6 to f10. It can be observed that the proposed algorithm IRNDE shows better performance compared to the base algorithm RNDE.

Table 3.

Fitness values of function f6 to f10 for NP = 150, D = 50, and iterations = 5000.

The results for f11 to f15 are given in Table 4 and indicate the superior performance of the proposed IRNDE on these functions. The results demonstrate that the proposed IRNDE algorithm can obtain a global optimum in fewer iterations than the RNDE algorithm.

Table 4.

Fitness values of function f11 to f15 for NP = 150, D = 50, and iterations = 5000; * indicates that the global optimum could not be reached.

In Table 5, results are given for both RNDE and IRNDE regarding the best, mean, and worst values, together with the standard deviation and the number of iterations needed to reach the global optimum. The results are generated using population size NP = 150, dimension size D = 10, and iterations = 5000. It can be observed that, for f14, RNDE still had not reached the global optimum at the 5000th iteration (the reported −450 is a rounded value; the actual value is −449.99983911013300), whereas IRNDE reached the global optimum in 3976 iterations. Across f1 to f27, IRNDE achieves a global optimum in far fewer iterations than RNDE, which shows the superiority of the proposed IRNDE algorithm.

Table 5.

Number of function evaluations for functions f1 to f15 for NP = 150, D = 10, and iterations = 1000 × D.

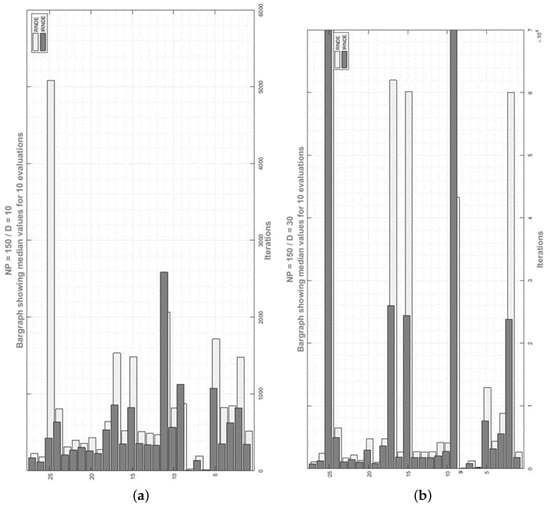

Table 5, Table 6 and Table 7 report the performance of both RNDE and IRNDE for dimensions D of 10, 30, and 50. The performance is analyzed with respect to the number of fitness evaluations (NFE). This test is based on 10 runs, each of which continues until the termination condition is satisfied, where the termination condition is set as 10,000 × D. For example, if the number of dimensions is D = 30, then the algorithm may run for up to 10,000 × 30 = 300,000 iterations to achieve the global minimum. If the algorithm reaches the global minimum within 300,000 iterations, we record the number of iterations at which the global minimum was achieved; otherwise, the run is marked as not having achieved the global minimum and the output is the remaining error, as shown for f9 and f25 in Table 6 and Table 7, where both RNDE and IRNDE are unable to obtain the global minimum. It follows from this discussion that when the dimension changes, the iteration budget must change accordingly, as there is a direct relation between dimensions and iterations.
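The termination rule described above can be sketched as follows. The helper names `max_evaluations` and `evaluations_to_reach`, the tolerance `tol`, and the representation of a run as a list of best-so-far objective values are assumptions made for this illustration.

```python
def max_evaluations(dimension, factor=10_000):
    """Budget implied by the termination condition factor x D."""
    return factor * dimension


def evaluations_to_reach(best_so_far, global_minimum, budget, tol=1e-8):
    """Return the first count at which the optimum is reached, else None.

    best_so_far[k] is the best objective value found after k + 1 steps;
    the tolerance used to decide that the optimum is reached is an assumption.
    """
    for count, value in enumerate(best_so_far[:budget], start=1):
        if abs(value - global_minimum) <= tol:
            return count
    return None  # budget exhausted: global minimum not achieved


budget = max_evaluations(30)  # 10,000 x 30
reached_at = evaluations_to_reach([5.0, 1.0, 0.0, 0.0], 0.0, budget)
```

A return value of `None` corresponds to the case in which the run is marked as not having achieved the global minimum.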

Table 6.

Number of function evaluations for functions f1 to f15 for NP = 150, D = 30, and iterations = 1000 × D.

Table 7.

Number of function evaluations for functions f1 to f15 for NP = 150, D = 50, and iterations = 1000 × D.

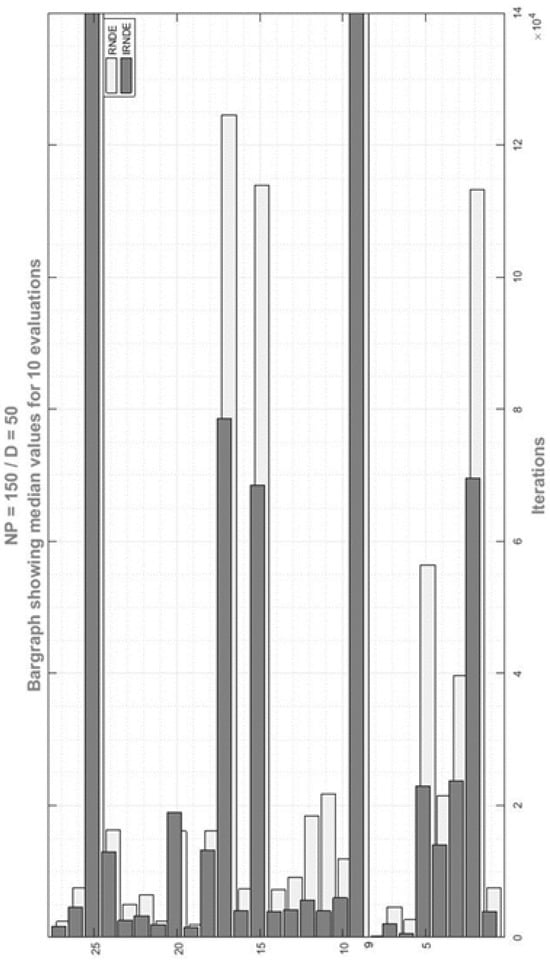

Moreover, we performed the above-mentioned tests on the same 27 well-known benchmark functions that were used to evaluate the RNDE algorithm. In addition, we considered different scenarios, namely how many iterations both algorithms require to achieve the global minimum in the worst, median, and best cases, and what the success rate of each algorithm is in achieving the global minimum during the calculation of NFE. Finally, Figure 3 and Figure 4 show bar graphs of the median values for both algorithms. Notably, the proposed IRNDE outperforms the RNDE algorithm.
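The best, median, and worst NFE values and the success rate over repeated runs can be computed as in the sketch below. The function name `summarize_runs`, the convention that an unsuccessful run is recorded as `None`, and the sample numbers are assumptions for illustration only, not results from the paper.

```python
import statistics


def summarize_runs(nfe_per_run):
    """Summarize NFE over runs; None marks a run that missed the optimum."""
    successes = [n for n in nfe_per_run if n is not None]
    rate = len(successes) / len(nfe_per_run)
    if not successes:
        return {"best": None, "median": None, "worst": None, "success_rate": rate}
    return {
        "best": min(successes),                   # fewest evaluations needed
        "median": statistics.median(successes),   # value plotted in a bar graph
        "worst": max(successes),                  # most evaluations needed
        "success_rate": rate,
    }


# Made-up NFE values for four runs, one of which failed.
summary = summarize_runs([100, 300, None, 200])
```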

Figure 3.

Number of fitness evaluations for RNDE and IRNDE. (a) NFE comparison of RNDE and IRNDE when NP = 150 and D = 10; (b) NFE comparison of RNDE and IRNDE when NP = 150 and D = 30.

Figure 4.

NFE comparison of RNDE and IRNDE when NP = 150 and D = 50.

5.4. Statistical Significance

The statistical significance of the difference between the average fitness values of the two algorithms is analyzed using a paired two-sample t-test. The null hypothesis states that there is no significant difference between the average fitness performance of the RNDE algorithm and that of the IRNDE algorithm, i.e., that the two means are equal. The alternative hypothesis states that there is a significant difference between the average fitness performance of the RNDE algorithm and that of the IRNDE algorithm, i.e., that the two means are not equal. We used a significance level of 0.05; the t-test results are reported in Table 8. With 35 observations, the test has 34 degrees of freedom, and we report the test statistic, the sample mean, the variance, the Pearson correlation, and the p-values together with the two-tailed critical t values. We generated significance values for fifteen functions; the results for the rest of the functions were similar. It can be observed from the table that all p-values except two are less than the level of significance (0.05). In summary, there is overall a significant difference in the performance of the RNDE algorithm and the IRNDE algorithm.
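For readers who wish to repeat this kind of test, a paired t statistic can be computed with the standard library alone, as in the minimal sketch below. The sample values are made up for illustration and are not the paper's data; the helper names are hypothetical. For 34 degrees of freedom, the two-tailed critical value at the 0.05 level is roughly 2.03 according to standard t tables.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return mean_d / (sd_d / math.sqrt(n)), n - 1


def is_significant(t, critical):
    """Two-tailed decision: reject the null hypothesis if |t| exceeds the critical value."""
    return abs(t) > critical


# Made-up paired observations, for illustration only.
first = [5.0, 7.0, 9.0, 11.0]
second = [4.0, 5.0, 8.0, 8.0]
t, df = paired_t_statistic(first, second)
```

The critical value passed to `is_significant` must match the degrees of freedom returned by `paired_t_statistic`.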

Table 8.

Paired two-sample t-test for the means of RNDE vs. IRNDE.

6. Conclusions

Differential evolution is a strong evolutionary algorithm for solving global optimization problems. However, the existing mutation strategies DE/best/1 and DE/rand/1 do not provide a balance between exploitation and exploration. To overcome this issue, the DE/Neighbor/1 mutation strategy and the RNDE algorithm were previously introduced. The DE/Neighbor/1 mutation strategy maintains a balance between exploration and exploitation, as it was built to use lower and upper bound limits. However, this mutation strategy makes convergence very slow and requires a higher number of iterations. This study overcomes this limitation by introducing IRNDE with the DE/Neighbor/2 mutation strategy. In contrast to the DE/Neighbor/1 mutation strategy in the RNDE algorithm, the proposed IRNDE adds weighted differences, chosen after various tests. The proposed IRNDE algorithm and DE/Neighbor/2 mutation strategy are tested on the same 27 commonly used benchmark functions on which the DE/Neighbor/1 mutation strategy and RNDE algorithm were tested. Experimental results demonstrate that the new mutation strategy DE/Neighbor/2 and the IRNDE algorithm converge better and faster overall. Moreover, while testing both algorithms, we examined different situations on the benchmark functions with respect to the minimum, mean, and worst values. Although the experiments show that the proposed algorithm IRNDE is better overall, both in maintaining the balance between exploration and exploitation and in converging more quickly than the base RNDE algorithm, it may not provide optimal results in some scenarios. On the test suite of 27 benchmark functions, the proposed algorithm IRNDE performs better than the base algorithm RNDE except on the f9 Rastrigin function and the f25 Schwefel's Problem.
The experimental results on average fitness confirm a significant difference between the performance of the RNDE and IRNDE algorithms under a two-tailed paired t-test at the 0.05 level of significance. A limitation of this study is that it is applied to constrained problems; applying it to unconstrained problems is a possible direction for future work. Finally, we intend to apply the proposed algorithm to complex, real-world problems, such as steganography, which remains an attractive topic. Another direction for future work is the use of memory to store the convergence track of the parameters associated with the proposed algorithm over user-defined time periods.

Author Contributions

Conceptualization, M.H.B. and Q.A.; Data curation, Q.A. and J.A.; Formal analysis, M.H.B. and J.A.; Funding acquisition, S.A.; Investigation, K.M. and S.A.; Methodology, J.A.; Project administration, S.A. and M.S.; Software, K.M. and M.S.; Supervision, I.A.; Validation, I.A.; Visualization, K.M. and M.S.; Writing—original draft, M.H.B. and Q.A.; Writing—review and editing, I.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by the Researchers Supporting Project Number (RSPD2023R890), King Saud University, Riyadh, Saudi Arabia.

Data Availability Statement

Not applicable.

Acknowledgments

The authors extend their appreciation to King Saud University for funding this research through Researchers Supporting Project Number (RSPD2023R890), King Saud University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).