Abstract

This study proposes a collaboration-based interaction as a new method for providing an improved presence and a satisfying experience to head-mounted display (HMD) users in immersive virtual reality (IVR), and analyzes the experiences (improved presence, satisfying enjoyment, and social interaction) that result from applying collaboration to user interfaces. The key objective of the proposed interaction is to provide an environment where HMD users are able to collaborate with each other, based on their differentiated roles and behaviors. To this end, a collaboration-based interaction structured in three parts was designed: immersive interactions that allow users to directly exchange information and provide feedback with their hands and feet, together with a synchronization procedure and a communication interface that enable users to pursue common goals swiftly and precisely. Moreover, experimental VR applications were built to systematically analyze the improved presence, enjoyment, and social interaction experienced by users through collaboration. Finally, a survey of the experiment participants confirmed that the proposed interface provided users with an improved presence and a satisfying experience, based on collaboration.

1. Introduction

Immersive virtual reality (VR) can refer either to the technology used to render a computer-generated virtual environment that resembles reality, or to a particular environment or situation thus created. Immersion in VR has been enhanced by the development of head-mounted display (HMD) technologies that provide three-dimensional visual information, e.g., the HTC Vive, Oculus Rift CV1, and Oculus Go. Based on these developments, a variety of research on immersive VR is currently being conducted on interactions (gaze, hand gestures, etc.) that provide realistic spatial and temporal experiences by stimulating the user’s various senses inside a VR, as well as on hardware technology (motion platforms, haptic systems, etc.) [1,2,3,4].

The sense of presence is a key factor that must be considered when providing users with a realistic experience inside immersive VRs. The sense of presence refers to a psychological experience of feeling as though the user is inside a real place (“being there”), even when he or she is inside a virtual environment. To improve the sense of presence, the user must be provided with an environment in which he or she can immerse him- or herself, through the process of interacting with the virtual environment or objects by utilizing a variety of senses, such as vision, audio, and touch. Thus, interactive technologies are being researched to enhance immersion and provide an improved presence. Related research topics include a study on producing audio effects by combining sound source clustering and hybrid audio rendering technologies [5], based on display devices that transfer three-dimensional visual information, which enhances the immersion inside a virtual space; studies conducted on enhancing immersion through user interfaces that enable the user to interact more directly and realistically with virtual environments through gaze and hand gestures [6,7]; and technological research conducted to offer an improved presence by combining haptic systems, which provide physical reactions as feedback. Moreover, studies are being conducted on portable walking simulators using walking in place [8] or algorithms [4,9], without the use of expensive equipment such as treadmills or motion capture devices, to realistically represent the experience of walking inside a vast and dynamic virtual space.

Studies on immersive VRs based on the sense of presence are being developed in ways that integrate collaborative virtual environments (CVEs) shared by multiple users. In particular, methods are being researched for multiple users wearing HMDs to collaborate and communicate realistically with each other through interactions that are appropriate to a given condition or environment. Social interactions are important in collaborative virtual environments; however, interest and immersion based on these social interactions are unlikely to improve merely by increasing the number of HMD users from one to many in immersive VRs. Therefore, it is necessary to provide each user with a distinctive, communication-based role that he or she can fulfill in a collaborative environment, reflecting the restricted or extended interactions and the different ranges of experience required by each user inside VRs. Studies such as TurkDeck [10] and Haptic Turk [11] by Cheng et al. and ShareVR by Gugenheimer et al. [12] have proposed a different type of interaction and an asymmetrical environment by including Non-HMD wearers among their users. This method helped provide HMD wearers with better immersion and presented all users with a new experience. Meanwhile, more research is required to provide new experiences and enhanced immersion through communicative and collaborative interactions, based on differentiated roles and behaviors for each user in CVEs shared solely by HMD wearers.

Therefore, this study designed a collaboration-based experience environment, based on differentiated roles and immersive interactions appropriate to each role inside an immersive VR with multiple HMD users. Moreover, this study presents a collaborative environment that provides a new experience and presence that may even exceed those experienced in one-person immersive VRs. The proposed interaction in the immersive collaborative virtual environments is structured into the following three steps:

- Defining immersive interactions that correspond to VR controllers by utilizing hands and feet, i.e., body parts that are frequently used when humans interact with virtual environments or objects. This is essential to increase immersion, which is the most important element for the sense of presence.

- Designing a synchronizing procedure that is required for users to collaborate through distinctive roles and behaviors based on these roles. This procedure ensures that shared information and resources are precisely computed and processed, while all users collaborate and work toward a common goal.

- Finally, presenting an interactive hand-to-controller interface that enables users to effectively exchange information that must be delivered to other users while the application is being executed in a remote environment, including their thoughts and states.

Through these procedures, this study aims to deliver a new kind of collaboration-based experience by allowing each individual user to share their thoughts and behaviors through communication. Furthermore, this study systematically analyzed the sense of presence and satisfaction experienced by users by conducting a comparative experiment on participants with the use of professional questionnaires related to presence and experience.

2. Related Work

2.1. Immersive Virtual Reality

Studies on immersive VR have aimed to improve the sense of presence by providing a more realistic experience inside a virtual environment through the senses, including vision, audio, and touch [1,13]. A variety of studies are being conducted on user interfaces, haptic feedback, motion platforms, etc., to give users a realistic sense of whom they are interacting with and where, albeit virtually [14,15]. Starting with display methods that provide realistic spatial experiences to users by offering three-dimensional visual information, studies on immersive VRs centered on the sense of presence have developed ways to provide users with realistic experiences through direct interactions with virtual environments, using their own hands and feet to grab, touch, and walk; as well as through improved audio immersion, based on audio sources that enhance spatial awareness [8,16].

To enable users to realistically experience a variety of possible actions and behaviors inside virtual environments, the movement of their joints must be rapidly detected and accurately recognized, and reflected in the virtual environment. To this end, studies are being conducted on computing joint movements from videos captured with optical markers attached to the surfaces of a subject’s joints [16]; as well as on detecting and recognizing the movements of the whole body, beyond merely the hands, with various equipment, including the Kinect and Leap Motion [2,17]. In recent years, studies have been conducted on detection models for computing movements made with elaborate hand joint models [18], and on realistically representing user behavior in a virtual space from video data obtained with motion capture equipment [6]. These studies have been performed to improve the sense of presence by representing user behavior in a virtual environment and enabling users to provide a variety of physical responses as direct feedback, using their hands or other body parts. User immersion has been enhanced by lending precision to touch sensations; studies have been conducted on producing haptic feedback with electrical actuators [19], as well as on various haptic devices, including the three revolute-spherical-revolute (3-RSR) haptic wearable [3]. These studies on haptic systems have also been developed into studies on multi-modality that satisfy both the tactile and sound or tactile and visual senses. Moreover, as VR HMD devices have become more popular, studies on haptic devices have also been developed with consideration of portability and cost, to render them more applicable to the general public [20]. In relation to motion platforms, studies are also being conducted to realistically represent user behavior in a dynamic virtual space. 
Research has focused on representing a virtual character’s behavior in a restricted space by detecting the user’s walking motion using walking in place [8,21], or on allowing users to walk indefinitely inside a restricted space by computing the walking motion by applying distortions to a projected video [4]. When structuring immersive VRs with multiple users, interactions between the users must also be taken into consideration, in addition to adapting preceding studies on user interface, haptic systems, and motion platforms for multiple users. In other words, with the aim of improving the sense of presence, immersive interactions must be designed, based on collaboration or communication.

2.2. Collaborative Virtual Environments

Studies on CVEs are being conducted to find methods and technologies that will enable multiple users to interact with each other in a virtual environment through collaboration and communication, in addition to interacting with the virtual environment [22]. Research has been performed on this topic, ranging from studies on distributed interactive virtual environments (DIVEs) to studies on applied technologies for multiple remote users [23,24]. A variety of studies are being conducted to improve the sense of presence for multiple users that share a common experience in a virtual environment: on analyzing how multiple users perceive and recognize each other’s behavior when they are experiencing VR in the same space [25], on methods for effectively processing realistic walking motion in a situation where multiple VR users are sharing a common experience [26], on communication technologies for improving collaboration in a remote mixed reality [27], and on enabling users to effectively control communication in a CVE through gestures [28]. These have occasionally been developed as studies on asymmetrical VRs that encompass Non-HMD wearers among a collaborative environment of HMD wearers [12,29]. Asymmetric VR refers to an environment or technology in which Non-HMD as well as HMD users participate in the same virtual environment together to achieve a given goal based on independent interactions. In these instances, studies have often designed communicative or collaborative structures to encourage participation from Non-HMD wearers, who experience a relatively Non-immersive environment. Conversely, in immersive VRs designed solely for HMD wearers, users are usually asked to execute similar behaviors, based on the same methods for processing input, to achieve a common goal. 
If each user is given a different role, together with an experience environment in which users can communicate and collaborate while performing their own roles, immersion is expected to increase through the social relationships formed between users, in addition to an improved sense of presence compared with one-person experience environments [12,30].

Therefore, to overcome the limitations of the existing research, this study adopts the experience environments of asymmetrical VR, which are based on independent roles and interactions, as the collaboration-based user interface of immersive VR. In other words, this study proposes a collaboration-based experience environment and application in immersive VRs, with the aim of presenting an immersive virtual environment where the independent roles of multiple users are differentiated and where users can communicate and collaborate with each other in environments and situations that are unique to each user.

3. Collaboration-Based Experience Environment

This study is ultimately focused on providing users with an improved presence, enjoyment, and social interactions by designing a user interface that renders a new experience and enables two or more users to experience an improved presence through user-to-user collaboration. Toward this aim, this study proposes the collaboration-based experience environment. A key design goal of this framework is an interface optimized to offer an improved presence with only an HMD and a dedicated controller (without motion capture hardware, a treadmill, VR gloves, etc.), minimizing the need for equipment that could inconvenience users with regard to cost or the experience environment.

3.1. Overview

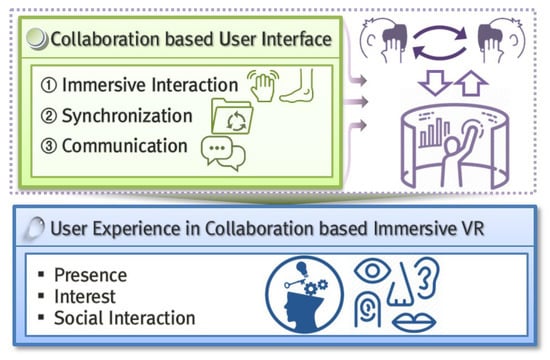

As shown in Figure 1, the proposed collaboration-based user interface is structured in three parts. The first part consists of designing a structure for direct interactions between the user and the virtual environment. This study proposes an interaction system that uses hands and feet, which are the body parts most frequently used in human behavior, to enhance immersion by representing how a single user controls objects inside a virtual environment in a more direct and realistic way. To enable users to interact directly with the virtual environment or objects through the hands and feet of a virtual character, the hand and foot models are mapped to the VR controllers. The next part consists of interactions between users for collaboration. This study focuses on providing a collaborative experience, rather than an environment where each user acts individually according to their own role. Therefore, a synchronizing process is required that supports swift and accurate collaboration between users. This process includes synchronizing information on the collaborative behaviors of each independent user (including animation, communication, etc.), as well as the transform data of objects shared by multiple users. The last part consists of designing a communication interface that enables multiple users to easily exchange, through simple operations, information on their current status or any other information necessary for collaboration.

Figure 1.

Structure of the proposed collaboration-based experience environment in immersive virtual reality (VR).

The present study suggests a specific application method based on the interface of the three-phase structure. However, it defines the generalized interface structure as shown in Figure 1 such that users can apply it with various interaction methods. This includes building an interface in a development environment that utilizes the Unity 3D engine, and creating a virtual space that users can experience with the HTC Vive HMD (HTC Corporation, Taipei, Republic of China) and its dedicated controller. Thus, the key features of each part are developed by combining the Unity 3D engine with the SteamVR plugin, supported in the HTC Vive environment.

3.2. Immersive Interaction Using Hands and Feet

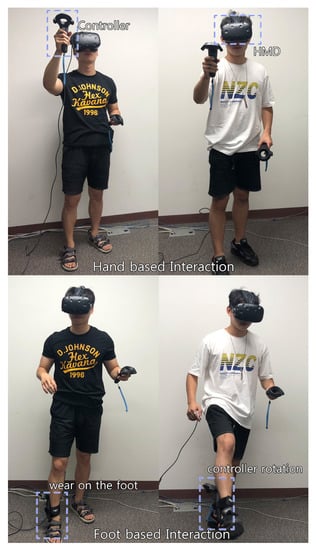

The hands and feet are the most frequently used body parts when the user wishes to execute a variety of behaviors in an immersive VR. Therefore, the proposed interface is designed to allow users to use their hands and feet to interact with virtual environments, the objects inside those environments, as well as other users.

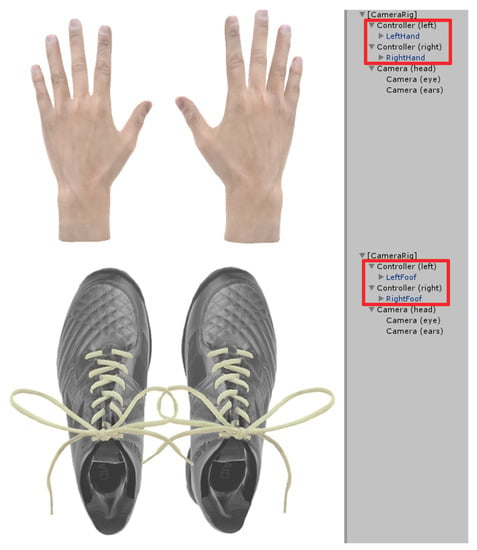

First, it is crucial to provide users with the feeling that they are actually using their own hands inside a virtual environment, based on the hand interface model proposed by Han et al. [2]. For a more detailed development of the model, a three-dimensional hand model that includes joint data, which is used to represent finger movement in detail, is imported to the Unity 3D engine. To represent foot motions, a virtual feet model or a virtual shoes model is utilized. Then, these hand and foot models are set in relation to the objects provided in the SteamVR plugin. Because the basic objects provided in the plugin include the abilities to control both the left and right cameras and the left and right hand controllers, the hand and foot models are registered as child objects of the controllers. However, owing to the limited number of controllers used in this study, the hands and feet are configured to be used separately, rather than simultaneously. Figure 2 represents the process of getting the controllers to correspond to the three-dimensional hand/foot models in the Unity 3D engine development environment, which includes the SteamVR plugin.

Figure 2.

Process of getting the controllers to correspond with three-dimensional models in the Unity 3D development environment that includes the SteamVR plugin.

After the left and right controllers have each been made to correspond to the three-dimensional models, the movements and animations of the models are processed, in the order outlined in Algorithm 1. For the key codes, the functions and classes that are provided in the SteamVR plugin are used, which can be operated in the Unity 3D engine. First, the virtual objects (hands or feet) defined by the user are matched with the VR controller (by defining the virtual object as the child of the VR controller, they are set to be transformed together). Subsequently, the VR controller input keys are defined. This aims to connect the keys with actions of the virtual hands or feet using the keys provided by the controller. Furthermore, the input keys are used to manipulate interfaces, execute animations defined in virtual objects, and control movements.

Algorithm 1 Immersive interaction using hand or foot.
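The steps described in the paragraph preceding Algorithm 1 (attach the model to the controller, define the input keys, then drive animations from key presses) can be sketched in plain Python as follows. This is a minimal, engine-independent illustration and not the SteamVR or Unity API: the `Controller` and `VirtualLimb` classes, the key name `trigger`, and the animation name `grab` are all hypothetical, and the child model is assumed to have no local offset from its parent.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualLimb:
    """Hypothetical stand-in for a three-dimensional hand or foot model."""
    name: str
    position: tuple = (0.0, 0.0, 0.0)
    animation: str = "idle"


@dataclass
class Controller:
    """Hypothetical stand-in for a tracked VR controller."""
    position: tuple = (0.0, 0.0, 0.0)
    children: list = field(default_factory=list)
    key_bindings: dict = field(default_factory=dict)

    def attach(self, limb):
        # Step 1: register the model as a child so it is transformed with the controller.
        self.children.append(limb)
        limb.position = self.position

    def bind(self, key, animation):
        # Step 2: associate a controller input key with an animation of the model.
        self.key_bindings[key] = animation

    def move_to(self, position):
        # Children follow the parent (zero local offset is assumed for simplicity).
        self.position = position
        for limb in self.children:
            limb.position = position

    def press(self, key):
        # Step 3: a bound key press plays the animation on every attached model.
        animation = self.key_bindings.get(key)
        if animation is not None:
            for limb in self.children:
                limb.animation = animation


right = Controller()
hand = VirtualLimb("right_hand")
right.attach(hand)
right.bind("trigger", "grab")   # assumed key and animation names
right.move_to((0.2, 1.1, 0.4))
right.press("trigger")
print(hand.position, hand.animation)
```

In an actual Unity project, the parent-child relationship and the input polling would be handled by the engine and the plugin; the sketch only makes the ordering of the steps explicit.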

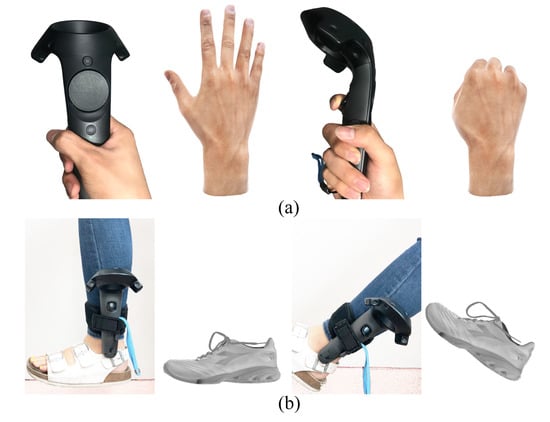

Figure 3 shows how the input keys of the HTC Vive controller drive the movements of the three-dimensional hand model, and how the rotation of the controller drives the three-dimensional foot model. By closing and opening the hands, the user is able to grasp and control objects that exist in a three-dimensional virtual environment. For foot motions, a controller is worn on each foot and the motion is represented according to the rotation of the controller. In this way, foot motions, including walking in place or kicking objects, can be represented.

Figure 3.

Processing of hand and foot motions through changes in the controller’s input or rotation: (a) virtual hand; (b) virtual foot.

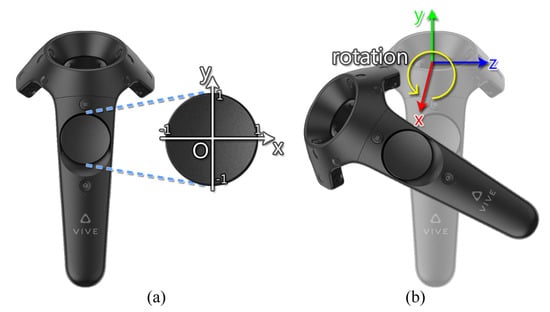

In addition, the controller is also used to move virtual characters that correspond to users inside virtual environments. This is executed in two ways. First, when the hands are mapped to the controllers, the touchpad on the left-hand controller is used to compute the direction of the character’s movement. The movement vector of the character is computed by projecting the touchpad input onto the xz-plane (Figure 4a), using Equation (1). Each component of the touchpad input coordinate takes a value between −1 and 1, and s is the scalar value of the character’s speed. Second, for foot movements, a controller-based processing procedure with a simple but effective structure for walking in place is defined, based on the recognition technology used in the walking simulator proposed by Lee et al. [8]. Based on the rotational change about the x-axis applied to the controllers worn on the ankles, the user’s walking motion is evaluated to determine whether the virtual character will be moved (Figure 4b). Equation (2) is used for this procedure, where T denotes the threshold value for evaluating whether a walking motion has been executed.

Figure 4.

Processing of controller input for moving virtual characters: (a) calculation of movement vector from touchpad, (b) measurement of rotational change for walking motion.
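The two movement procedures of Figure 4 can be illustrated with a short sketch. Because Equations (1) and (2) are not reproduced in the text, the forms used here are assumptions: the touchpad input is taken to map onto a ground plane spanned by the x- and z-axes and to be scaled by the speed s, and a walking motion is taken to be detected when the magnitude of the rotational change about the x-axis exceeds the threshold T. The function and parameter names are hypothetical.

```python
def movement_vector(touchpad, speed):
    """Map a 2D touchpad input to a 3D movement vector on the ground plane.

    Assumed form of Equation (1): (x, y) on the touchpad becomes
    (s * x, 0, s * y), with the ground plane assumed to be xz.
    """
    tx, ty = touchpad
    if not (-1.0 <= tx <= 1.0 and -1.0 <= ty <= 1.0):
        raise ValueError("touchpad coordinates are expected in [-1, 1]")
    return (speed * tx, 0.0, speed * ty)


def is_walking(prev_pitch_deg, curr_pitch_deg, threshold_deg):
    """Assumed form of Equation (2): compare the x-axis rotation change with T."""
    return abs(curr_pitch_deg - prev_pitch_deg) > threshold_deg


print(movement_vector((0.5, -1.0), speed=2.0))
print(is_walking(0.0, 12.0, threshold_deg=10.0))
```

The threshold keeps small ankle movements, such as shifting weight while standing, from moving the character; its value would need to be tuned for the controller placement.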

3.3. Synchronization for Collaboration

Utilizing the user’s hands and feet, this study presents an environment where users can immerse themselves through direct interactions with the objects inside virtual environments and even between users. The aim of this study is to enhance social interactions between users and thus improve the sense of presence simultaneously for all users by designing an immersive VR based on collaboration. This ultimately means adding collaboration to the range of different interactions between users and designing a synchronization procedure required for collaboration. Collaboration includes sharing experiences or resources to achieve a common goal. Therefore, fast and accurate synchronization is required to prevent awkwardness or discomfort during collaboration. Otherwise, users could experience VR sickness or a lessened presence.

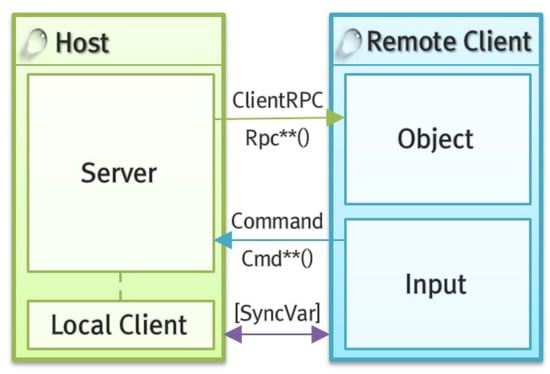

To enable collaboration between HMD-wearing users inside an immersive VR, the Unity Network (UNet), provided in the Unity 3D engine development environment, is used. UNet allows the developer to access commands that can satisfy the requirements of communication between users, without having to consider the low-level details. In the UNet system, an application has one server and multiple clients. Because the collaboration structure proposed by this study presupposes at least two users, one of the clients acts as a host that also acts as the server to constitute the network. The host communicates directly with the server through function calls and message queues because it includes both the server and a local client. Direct communication between clients without a dedicated server minimizes delays and interruptions in the collaboration process (Figure 5). Collaboration between multiple users is enabled by allowing multiple clients to access the host.

Figure 5.

Unity network (UNet) based synchronization procedure.

For collaboration, the following must be synchronized: the users’ locations, their motions and behaviors performed with the hands and feet, and the objects that can be shared, selected, and controlled by all users. The network transform function provided in UNet is used to synchronize basic transform data, including the locations and directions of the virtual characters. However, because this function can only be applied to client characters, and only to the highest-level objects among those, the synchronization of all other objects and situations is processed with commands and the client remote procedure call (RPC) function. Commands are called from the clients and executed on the server, whereas the client RPC function is called from the server and executed on the clients.

To ensure that the users remain in the same state during collaboration, the transform state of objects that require synchronization is processed in two ways, depending on whether the current user is the host or a remote client. Figure 5 represents the structure of the synchronizing process between the host, which also acts as the server, and the clients, using commands and the client RPC function, and also shows how the corresponding code is executed. In UNet, the names of the command function and the client RPC function must begin with Cmd and Rpc, respectively, to be recognized. Where member variables must be synchronized, the [SyncVar] attribute is used. Specific instances of collaboration performed through synchronization will be explained in detail in Section 4, which describes the process of creating the application.
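The direction of the two call types can be made concrete with a small simulation. The sketch below is plain Python and does not use UNet: the `Server` and `Client` classes and the method names are hypothetical, chosen only to mirror the Cmd and Rpc prefixes. It shows a command travelling from a client to the server, which updates the authoritative state and then issues a client RPC to every connected client, including the host's local client.

```python
class Server:
    """Hypothetical authoritative server holding the shared object state."""

    def __init__(self):
        self.clients = []
        self.objects = {}

    def connect(self, client):
        self.clients.append(client)
        # A newly connected client receives the current shared state.
        client.objects.update(self.objects)

    def handle_set_position(self, object_id, position):
        # Executed on the server: update the state, then notify all clients.
        self.objects[object_id] = position
        for client in self.clients:
            client.rpc_set_position(object_id, position)


class Client:
    """Hypothetical client holding a local copy of the shared object state."""

    def __init__(self, name, server):
        self.name = name
        self.server = server
        self.objects = {}
        server.connect(self)

    def cmd_set_position(self, object_id, position):
        # "Command": called on a client, executed on the server.
        self.server.handle_set_position(object_id, position)

    def rpc_set_position(self, object_id, position):
        # "Client RPC": called by the server, executed on each client.
        self.objects[object_id] = position


server = Server()
host_client = Client("host", server)     # the host runs the server and a local client
remote_client = Client("remote", server)
remote_client.cmd_set_position("dartboard", (1.0, 1.5, 3.0))
print(host_client.objects == remote_client.objects == server.objects)
```

Routing every change through the server keeps a single authoritative copy of each shared object, so both users see the same state regardless of who initiated the change.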

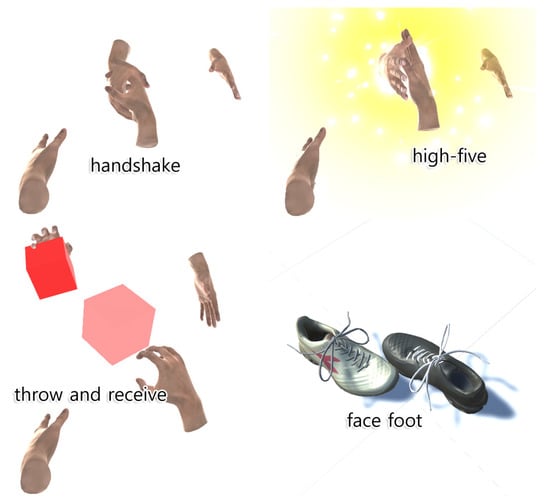

Figure 6 illustrates a variety of interactions between users that are made possible by synchronization. The results show that the hand and foot animation information has been precisely synchronized, and that users can accurately share a variety of behaviors, including handshakes, touches, and the exchange of objects.

Figure 6.

Examples of synchronization-based interactions between users, using hands or feet.

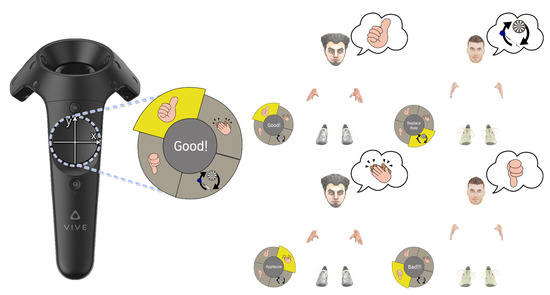

3.4. Communication Method

The last part of the proposed interface consists of communication. If multiple users experience the application in the same location, they can exchange thoughts and required information through direct communication. However, when it comes to remote users, fast and effective communication structures must be designed because direct communication is impossible. Thus, a communication method is designed that is easily navigable with a controller. Figure 7 shows an example in which emoticons that are frequently exchanged between users via messengers are used for communication. Various icons can be arranged to represent elements that must be determined for collaboration within applications or elements for entertainment. In the motion vector computation process of Equation (1), the touchpad coordinates and the interface coordinates are aligned to enable easy input. A speech balloon icon is activated over a virtual character’s head to enable other users to view the icon at once.

Figure 7.

Communication method design for efficient exchange of information or thoughts among users.
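One way to align touchpad coordinates with interface coordinates is to divide the touchpad into equal angular sectors, one per icon. The sketch below assumes such a radial layout, which is not stated in the text; the function name, the icon names, and the `dead_zone` parameter are hypothetical.

```python
import math


def select_icon(touchpad, icons, dead_zone=0.2):
    """Pick the icon whose angular sector contains the touchpad position.

    Icon 0 is centered on the positive x-axis and the remaining icons
    follow counter-clockwise (an assumed layout). Touches near the
    center select nothing.
    """
    if not icons:
        raise ValueError("at least one icon is required")
    tx, ty = touchpad
    if math.hypot(tx, ty) < dead_zone:
        return None
    angle = math.atan2(ty, tx) % (2 * math.pi)
    sector = 2 * math.pi / len(icons)
    index = int((angle + sector / 2) // sector) % len(icons)
    return icons[index]


icons = ["smile", "thumbs_up", "sad", "question"]   # illustrative names
print(select_icon((1.0, 0.0), icons))
print(select_icon((0.0, -1.0), icons))
```

The selected icon would then be shown in the speech balloon above the character and synchronized to the other user in the same way as other shared state.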

4. Immersive Multi-User VR Application

4.1. Design

The proposed interface is designed to enable multiple HMD-wearing users to perform individual behaviors, as well as collaborative behaviors, toward achieving a common goal in a VR. The aim of the proposed experience environment is thus to provide users with an improved presence, based on social interactions through this process. To realize this goal, an experimental application is needed that can be used to evaluate the effectiveness of the proposed interface. In existing multi-user VRs, such as the Farpoint VR [31], users are only required to perform the roles and behaviors that they have each been allotted to achieve a mission. A particular user’s behavior can influence the entire gameplay or some of the behaviors of other players, but the users do not form direct relationships with each other. Therefore, shared gameplay is rendered less significant.

The VR applications of this study are built with collaboration-based experience environments where multiple users must be cooperative to achieve the goal. Although the application has limitations in generalizing the influence of the proposed interface to a presence, it is nevertheless designed to highlight the significance of shared gameplay by emphasizing collaboration as much as possible.

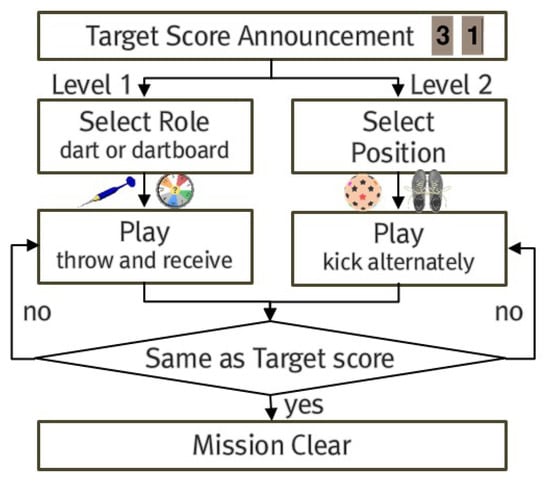

4.2. Structure and Flow

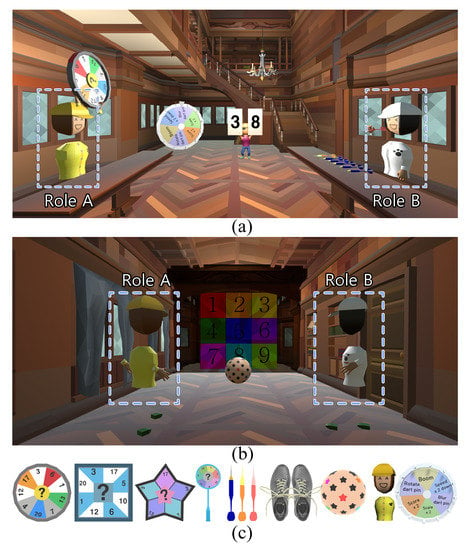

The application has two flows: collaboration using hands and collaboration using feet. In the hand-based collaboration application, users must hit the target number specified by a non-player character (NPC) using a dart and a dart board within a set period of time. When one user selects the dart, the other user must select the dart board. When the first user throws the dart toward the specified number on the dart board, the second user must move the dart board so that the dart hits the correct number. The feet-based collaboration application is executed in the same way. An NPC specifies a target number, and the first and second users must score its tens digit and units digit, respectively. In this instance, the first user starts the shot and the second user must end it. Therefore, collaboration between the two users is crucial to hit the correct target number. Figure 8 summarizes the flow of the application proposed in this study.

Figure 8.

Process flow of the proposed immersive multi-user VR application.
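The success condition of the feet-based flow can be expressed in a few lines. This sketch assumes that each user hits a single digit between 0 and 9 on the number board, which the text implies but does not state; the function names are hypothetical.

```python
def combine_shots(tens_digit, units_digit):
    """Combine the first user's tens digit and the second user's units digit."""
    if not (0 <= tens_digit <= 9 and 0 <= units_digit <= 9):
        raise ValueError("each shot is assumed to score a single digit (0-9)")
    return 10 * tens_digit + units_digit


def round_succeeded(target, tens_digit, units_digit):
    """The round succeeds only if both users hit their own digit of the target."""
    return combine_shots(tens_digit, units_digit) == target


print(round_succeeded(47, tens_digit=4, units_digit=7))
print(round_succeeded(47, tens_digit=4, units_digit=6))
```

Because neither user can reach the target alone, a miss by either one fails the round, which is what makes the task collaborative rather than merely parallel.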

The proposed application is shown in Figure 9, where a virtual environment has been created in which two users must collaborate to achieve a common goal. In the environment shown in Figure 9a, the users must each use a dart or dartboard and monitor each other’s behavior to collaborate; in the environment shown in Figure 9b, the users must take turns to hit the number board with a ball by using their feet.

Figure 9.

Implementation results of the proposed application: (a) dart using hands; (b) soccer using foot; (c) synchronization objects.

When users are induced to collaborate with each other while playing the application, certain objects need to be synchronized. The objects shown in Figure 9c are those synchronized in the two applications.

5. Experimental Results and Analysis

5.1. Environment

To provide users with an improved presence and a new kind of experience in a multi-user VR, this study proposes a user interface based on collaboration. Experience environments where multiple users are either co-located or remotely located are both taken into consideration.

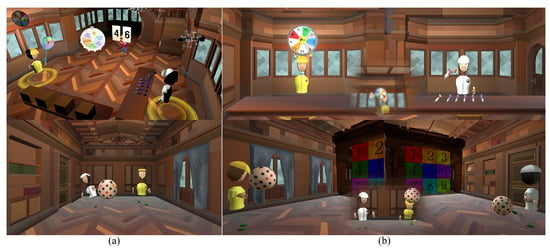

The proposed experience environment and the VR application that applies this interface were created with the Unity 3D 2017.3.1f1 engine in combination with the SteamVR plugin. Among the graphic resources required to build the application, the key characters, NPCs, and key resources required for collaboration were created by the authors using Autodesk 3ds Max 2017 (Autodesk, San Rafael, CA, USA), Adobe Photoshop CS5 (Adobe, San Jose, CA, USA), and Adobe Illustrator CS5 (Adobe, San Jose, CA, USA). In addition, Unity’s asset store was used for background elements. Moreover, a game-playing environment was prepared, where multiple users can play the application based on the proposed interface. The PCs used in the environment were each equipped with an Intel Core i7-6700 CPU (Intel Corporation, Santa Clara, CA, USA), 16 GB RAM, and a GeForce 1080 GPU (NVIDIA, Santa Clara, CA, USA). VR applications must meet minimum recommended system specifications (refresh rate, frames per second, etc.) to prevent VR sickness, and the experience environments built in this study satisfy these specifications. Figure 10 shows the game-playing environment created for this study. In this environment, users wearing HTC Vive HMDs each use dedicated controllers to process hand and foot input, and collaborate with other users to accomplish a given mission.

Figure 10.

Construction of our collaborative experience environment consisting of co-located or remote users.

Figure 11 shows the results based on the process flow in Figure 8. It illustrates the separate viewpoints of two users performing each role provided by the application using their hands and feet.

Figure 11.

Our immersive VR application with the proposed interface applied: (a) execution scenes; (b) each user’s viewpoint.

5.2. Presence and Experiences

This study conducted an experiment to evaluate whether the experience environment built on the collaboration-based interface provided users in a VR with an improved presence and a satisfying user experience (including enjoyment and social interaction). Twenty-four participants (16 males and 8 females) between the ages of 21 and 37 were divided into groups of two, and the participants in each group took turns playing each of the two roles. All participants had experienced one-person VR applications more than once, so that the effects of collaboration on presence and experience could be analyzed against their prior experience of traditional one-person play. In addition, a comparative experiment was conducted, in which two users were asked to play both in the same location and in remote locations. In other words, the comparative experiments confirm the effects of collaboration on the sense of presence and experience by comparison with one-person experience environments, and further compare co-located and remote play in the collaboration process.

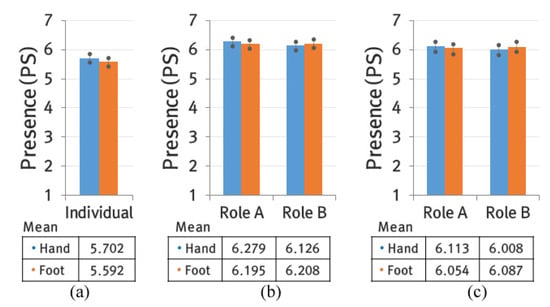

The first experiment was centered on the sense of presence. For this experiment, the questionnaire proposed by Witmer et al. [32] was used to record the degree of presence that participants experienced, and the results were analyzed. The participants were asked to answer each question with a number between 1 and 7. The closer the average answer is to 7, the higher the presence experienced by the participants. Based on existing studies [12,15,21], an average above five points is generally regarded as indicating a satisfying presence inside a VR. Figure 12 shows the overall result of the questionnaire on the sense of presence. First, when participants played the application in the same location and took turns playing each role, the average scores for hand-based interaction were 6.279 (SD: 0.345) and 6.126 (SD: 0.351); for foot-based interaction, the averages were 6.195 (SD: 0.304) and 6.208 (SD: 0.314). These results show that, regardless of role, the application delivered a high presence to its users. The difference between the more active roles and the more passive roles was less than 0.16 points. A one-way ANOVA on these scores revealed that the sense of presence was not significantly influenced by the different roles in either the hand-based interaction () or foot-based interaction (): : hand (), foot (). Additionally, a comparative experiment was conducted, in which participants had to play the application separately to analyze the effects of the collaboration-based interface on the sense of presence. In this case, each participant was asked to play the role of the dart thrower, which delivered a relatively higher presence, and the sum of the numbers hit by each participant was recorded. 
For this experiment, the interface only provided interactions using hands and feet without the chance for collaboration (i.e., the dartboard was fixed). The questionnaire results revealed that the average scores for hand-based and foot-based interactions were 5.702 (SD: 0.374) and 5.592 (SD: 0.361), respectively. The difference between these scores and the previous scores indicates that sharing an experience with another user heightens one’s presence in a virtual environment. As before, a one-way ANOVA was conducted on these scores to test whether the sense of presence differed significantly between individual users and collaborative users. For hand-based interaction, the sense of presence measured for individual users was (Role A: , Role B: ); for foot-based interaction, the sense of presence was measured as (Role A: , Role B: ). Thus, these results revealed that collaboration created a significant difference in the sense of presence.
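The one-way ANOVA used for these comparisons can be sketched as follows. This is a minimal illustration using only the Python standard library, not the analysis code of the study: the function name is hypothetical, and the per-participant scores are placeholder values, since the paper reports only group means and standard deviations.

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for two or more groups of scores."""
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    if any(len(g) == 0 for g in groups):
        raise ValueError("every group needs at least one score")
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    if df_within <= 0 or ss_within == 0:
        raise ValueError("not enough within-group variation to compute F")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 7-point presence scores for two roles (illustration only).
role_a = [6, 7, 6, 6, 7, 6]
role_b = [6, 6, 7, 6, 6, 6]
f_stat, df_b, df_w = one_way_anova(role_a, role_b)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

Turning the F statistic into a p-value requires the F distribution, which the standard library does not provide; `scipy.stats.f_oneway` returns both the statistic and the p-value if SciPy is available.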

Figure 12.

Results of presence survey experiments (Role A: dart or 1st foot, Role B: dartboard or 2nd foot): (a) individual experience; (b) co-located users; (c) remote users.

The final comparative experiment was conducted on varying conditions of location. The proposed interface encompasses both an environment where users can collaborate through direct communication in the same location and an environment where users must communicate with each other from remote locations. Figure 12c shows the questionnaire results for participants playing from remote locations. According to each role, the results for hand-based interaction were 6.113 (SD: 0.411) and 6.008 (SD: 0.423); for foot-based interaction, the results were 6.054 (SD: 0.415) and 6.087 (SD: 0.408) for each role. The results revealed a lower presence compared to when the participants were playing in the same location, but a higher presence compared to when they were playing separately. The participants were of the opinion that even though exchanging necessary information for collaboration proved relatively inconvenient when playing from remote locations, the sense of presence was improved because the basic structure for collaboration remained intact, in that their behaviors influenced other users (and were themselves in turn influenced by the behaviors of other users) and that they shared common goals.
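The ordering of the three conditions can be checked directly from the mean presence scores reported in this section. The sketch below is illustrative only: averaging the two role means into one value per condition is a simplification introduced here, not a figure reported by the study, and the names are hypothetical.

```python
# Mean presence scores reported above (7-point scale), per condition and modality.
# Collaborative conditions list the two role means (Role A, Role B).
PRESENCE = {
    "individual": {"hand": [5.702], "foot": [5.592]},
    "co-located": {"hand": [6.279, 6.126], "foot": [6.195, 6.208]},
    "remote": {"hand": [6.113, 6.008], "foot": [6.054, 6.087]},
}
THRESHOLD = 5.0  # average regarded as a satisfying presence [12,15,21]


def condition_mean(condition, modality):
    """Simple average of the reported role means (a summary assumption)."""
    scores = PRESENCE[condition][modality]
    return sum(scores) / len(scores)


def gain_over_individual(condition, modality):
    """Difference between a condition's mean and the one-person baseline."""
    return condition_mean(condition, modality) - condition_mean("individual", modality)


for condition in PRESENCE:
    for modality in ("hand", "foot"):
        m = condition_mean(condition, modality)
        g = gain_over_individual(condition, modality)
        print(f"{condition:<11} {modality:<5} mean={m:.3f} "
              f"gain={g:+.3f} above_threshold={m > THRESHOLD}")
```

These differences are descriptive; whether they are statistically significant is a separate question answered by the ANOVA.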

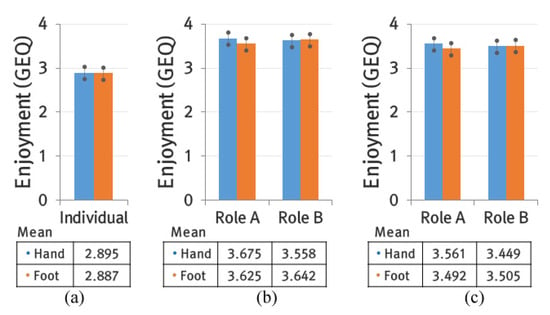

The second experiment was centered on experience. The Game Experience Questionnaire (GEQ) [33] was used in this experiment to evaluate the degree of enjoyment and social interaction provided by the application. The GEQ comprises not only psychological factors (e.g., competence, flow, challenge) that users of interactive content such as games can feel during the experience, but also items that can be used to analyze social interactions among users in detail. For both elements, the participants were asked to assign a score between zero and four. The experiment was otherwise conducted in the same way as the questionnaire for the sense of presence. Figure 13 illustrates the questionnaire results on enjoyment. The results for each role in hand-based interaction were 3.675 (SD: 0.479) and 3.558 (SD: 0.483); the results for foot-based interaction were 3.625 (SD: 0.465) and 3.642 (SD: 0.460). For both types of interaction, the results revealed a high enjoyment, showing that the proposed interface was able to provide enjoyment to its users. The statistical significance values for enjoyment were (hand: , feet: ). These statistics indicate that there were no significant differences between the enjoyment values for different user roles: (: hand (), and foot ()). To establish a baseline for the enjoyment provided by the proposed application, the questionnaire was also administered to participants who played the application independently. The results for hand-based interaction and foot-based interaction were 2.895 (SD: 0.485) and 2.887 (SD: 0.477), respectively. These differences suggest that collaborating toward common goals made participants more active and, in turn, gave them a greater sense of achievement when those goals were accomplished. A one-way ANOVA analysis was conducted to compare these results with those of individual users. 
The results for hand-based interaction were (Role A: , Role B: ); for foot-based interaction, the results were (Role A: , Role B: ). These results confirm that a collaborative experience environment created a significant difference in the users’ enjoyment. This is also supported by the items of the presence questionnaire (i.e., realism, possibility to act). When the proposed interface is used in the collaboration process, the users’ immersion and interest are significantly higher than in one-person experiences, and this is reflected equally in enjoyment.
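The way such mean (SD) values arise from questionnaire items scored between zero and four can be sketched as follows. This is an illustrative assumption rather than the study's procedure: it takes each participant's component score to be the average of that component's items, uses the sample standard deviation, and runs on hypothetical responses. The paper does not state which SD formula was used.

```python
from statistics import mean, stdev

SCALE_MIN, SCALE_MAX = 0, 4  # score range stated for the questionnaire


def component_score(item_responses):
    """Average one participant's item responses for a single component."""
    if not item_responses:
        raise ValueError("no responses given")
    for r in item_responses:
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"response {r} is outside {SCALE_MIN}..{SCALE_MAX}")
    return mean(item_responses)


def summarize(participants):
    """Return (mean, sample SD) of component scores across participants."""
    scores = [component_score(p) for p in participants]
    if len(scores) < 2:
        raise ValueError("need at least two participants for a standard deviation")
    return mean(scores), stdev(scores)


# Hypothetical item responses for three participants (illustration only).
responses = [
    [4, 4, 3, 4, 4],
    [3, 4, 4, 3, 4],
    [4, 3, 4, 4, 4],
]
avg, sd = summarize(responses)
print(f"{avg:.3f} (SD: {sd:.3f})")
```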

Figure 13.

Results of enjoyment survey experiments (Role A: dart or 1st foot, Role B: dartboard or 2nd foot): (a) individual experience; (b) co-located users; (c) remote users.

Comparing the results for different locational conditions revealed that there was no significant difference in the enjoyment between remotely located users and co-located users: (hand: 3.561 (SD: 0.484), 3.449 (SD: 0.486), feet: 3.492 (SD: 0.471), 3.505 (SD: 0.479)). Some participants were of the opinion that, in a co-located environment, adjustments were required as participants would sometimes collide with each other during gameplay, whereas remotely-located environments bestowed a greater sense of freedom while also providing a sense of togetherness, similar to online games.

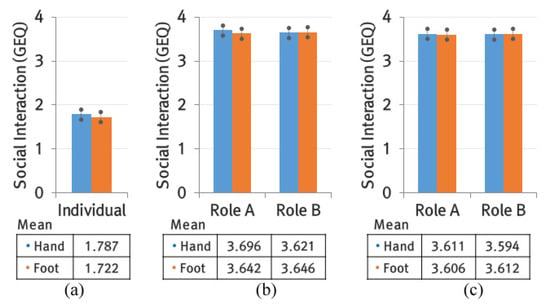

Finally, both roles received a high score for social interaction. For hand-based interaction, the results were 3.696 (SD: 0.345) and 3.621 (SD: 0.364); for foot-based interaction, the results were 3.642 (SD: 0.321) and 3.646 (SD: 0.324). The key factor of the proposed interface is collaboration, which presupposes social interaction. The results of the experiment confirmed that the proposed application delivered a satisfying experience of social interaction to its users. Furthermore, they revealed that there were no significant differences between the different roles: (hand: > 0.05, feet: ). When participants played the application independently, through interactions without collaboration, empathy and shared behaviors or experiences were absent, which resulted in low scores: (hand: 1.787 (SD: 0.412), feet: 1.722 (SD: 0.394)). Recognition of the influence of one’s behaviors on another user was the only aspect that resulted in relatively high scores. A one-way ANOVA analysis further confirmed a significant difference in social interaction: (hand (Role A/B) vs. individual: , feet (Role A/B) vs. individual: ).

In remotely located environments, the enjoyment could not be directly shared, which resulted in lower scores: (hand: 3.611 (SD: 0.328), 3.594 (SD: 0.341), feet: 3.606 (SD: 0.337), 3.612 (SD: 0.329)). However, these scores were still within the range of a satisfying experience. In particular, many participants were of the opinion that the communication interface allowed users to instantly exchange necessary information and that the application provided interactions that helped them focus on their respective distinct roles (Figure 14).

Figure 14.

Results of social interaction survey experiments (Role A: dart or 1st foot, Role B: dartboard or 2nd foot): (a) individual experience; (b) co-located users; (c) remote users.

Ultimately, this study suggests that a multi-user VR application in which each user’s role and participation method are differentiated and collaboration between users is encouraged can provide an improved presence and more varied satisfying experiences than one-person-centered VR applications and multi-user VRs that only offer simple participation.

6. Limitations and Discussion

The proposed collaboration-based experience environments aim to enhance the sense of presence in collaborative environments where users communicate with each other in divided roles, compared to a one-person application or a virtual environment where multiple users enjoy independent experiences. Therefore, the approach can be expanded beyond collaboration-based entertainment (e.g., games) into sectors such as education and tourism that require shared ideas and experiences. However, the current experimental environment is limited to two users, and the comparative experiment involved no more than 24 participants. The comparative analysis of the relationship between the collaboration-based interface and the sense of presence is therefore at an early stage. Nevertheless, this study confirmed that the collaborative experience environment could provide more varied experiences and a greater sense of presence than one-person-centered VR applications. It is therefore necessary to develop an experience environment in which many users can participate and to advance the research with a larger number of experimental groups.

7. Conclusions

This study proposes a collaboration-based interface that provides users with an improved presence and a new kind of experience through collaboration in an immersive multi-user VR experience environment beyond the one-person HMD user environment. Based on this interface, a collaboration experience environment and application were created, and a questionnaire on user experience based on collaboration was conducted and analyzed. The proposed interface included a synchronization procedure that facilitates swift and accurate collaboration, built on interactions that enhance immersion by letting users exchange behaviors and thoughts with each other or with the virtual environment using their hands and feet. Furthermore, the communication interface was designed in three parts to enable users to effectively exchange information necessary to achieve a common goal. This method presupposes a system that differentiates the behaviors and roles of each user and induces users to interact with each other through collaboration, according to their given roles, to accomplish the missions of the application. This is distinct from existing VR experience environments that apply the same behaviors and interactions to multiple users and ask them to achieve a common goal by performing their roles independently from each other. Therefore, this study designed a unique VR application that presents a specific situation, and conducted an experiment on its participants. The participants (24 in total, in 12 groups of two) were asked to play the VR application (independently, collaboratively in the same location, and collaboratively in remote locations), and answer questions on the sense of presence and experience (of enjoyment and social interaction). The results of the questionnaire revealed that all the participants experienced an improved presence, enjoyment, and social interactions in the collaboration-based experience environment, which confirmed that the aim of this study was achieved. 
Based on the questionnaire results, this study suggests that, rather than merely applying a one-person VR experience environment to multiple users, applying interactions that allow users to collaborate based on distinct roles enables immersive VR experience environments to provide users with enjoyment through greater tension and immersion.

The experimental application used to measure the sense of presence and satisfaction in this study was designed for two users. For future studies, we will be designing a variety of new applications and conducting experiments on them, with the aim of providing collaborative experiences for more than two users and presenting a more generalized interface. Furthermore, we will be conducting comparative experiments on a larger number of participants to improve the credibility of our analyses.

Author Contributions

W.P., S.P. and J.K. conceived and designed the experiments; W.P., H.H. and S.P. performed the experiments; J.K. analyzed the data; J.K. wrote the paper.

Acknowledgments

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. NRF-2017R1D1A1B03030286).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sutherland, I.E. A Head-mounted Three Dimensional Display. In Proceedings of the Fall Joint Computer Conference, San Francisco, CA, USA, 9–11 December 1968; ACM: New York, NY, USA, 1968; pp. 757–764.

2. Han, S.; Kim, J. A Study on Immersion of Hand Interaction for Mobile Platform Virtual Reality Contents. Symmetry 2017, 9, 22.

3. Leonardis, D.; Solazzi, M.; Bortone, I.; Frisoli, A. A 3-RSR Haptic Wearable Device for Rendering Fingertip Contact Forces. IEEE Trans. Haptics 2017, 10, 305–316.

4. Dong, Z.-C.; Fu, X.-M.; Zhang, C.; Wu, K.; Liu, L. Smooth Assembled Mappings for Large-scale Real Walking. ACM Trans. Graph. 2017, 36, 211.

5. Schissler, C.; Manocha, D. Interactive Sound Propagation and Rendering for Large Multi-Source Scenes. ACM Trans. Graph. 2017, 36, 2.

6. Joo, H.; Simon, T.; Sheikh, Y. Total Capture: A 3D Deformation Model for Tracking Faces, Hands, and Bodies. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; IEEE Computer Society: Washington, DC, USA, 2018; pp. 8320–8329.

7. Kim, M.; Lee, J.; Jeon, C.; Kim, J. A Study on Interaction of Gaze Pointer-Based User Interface in Mobile Virtual Reality Environment. Symmetry 2017, 9, 189.

8. Lee, J.; Jeong, K.; Kim, J. MAVE: Maze-based immersive virtual environment for new presence and experience. Comput. Anim. Virtual Worlds 2017, 28, e1756.

9. Vasylevska, K.; Kaufmann, H.; Bolas, M.; Suma, E.A. Flexible spaces: Dynamic layout generation for infinite walking in virtual environments. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces, Orlando, FL, USA, 16–17 March 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 39–42.

10. Cheng, L.P.; Roumen, T.; Rantzsch, H.; Köhler, S.; Schmidt, P.; Kovacs, R.; Jasper, J.; Kemper, J.; Baudisch, P. TurkDeck: Physical Virtual Reality Based on People. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Daegu, Kyungpook, Korea, 8–11 November 2015; ACM: New York, NY, USA, 2015; pp. 417–426.

11. Cheng, L.P.; Lühne, P.; Lopes, P.; Sterz, C.; Baudisch, P. Haptic Turk: A Motion Platform Based on People. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; ACM: New York, NY, USA, 2015; pp. 3463–3472.

12. Gugenheimer, J.; Stemasov, E.; Frommel, J.; Rukzio, E. ShareVR: Enabling Co-Located Experiences for Virtual Reality Between HMD and Non-HMD Users. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), Denver, CO, USA, 6–11 May 2017; ACM: New York, NY, USA, 2017; pp. 4021–4033.

13. Schissler, C.; Nicholls, A.; Mehra, R. Efficient HRTF-based Spatial Audio for Area and Volumetric Sources. IEEE Trans. Vis. Comput. Graph. 2016, 22, 1356–1366.

14. Prattichizzo, D.; Chinello, F.; Pacchierotti, C.; Malvezzi, M. Towards Wearability in Fingertip Haptics: A 3-DoF Wearable Device for Cutaneous Force Feedback. IEEE Trans. Haptics 2013, 6, 506–516.

15. Kim, M.; Jeon, C.; Kim, J. A Study on Immersion and Presence of a Portable Hand Haptic System for Immersive Virtual Reality. Sensors 2017, 17, 1141.

16. Zhao, W.; Chai, J.; Xu, Y.Q. Combining Marker-based Mocap and RGB-D Camera for Acquiring High-fidelity Hand Motion Data. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Lausanne, Switzerland, 29–31 July 2012; Eurographics Association: Aire-la-Ville, Switzerland, 2012; pp. 33–42.

17. Kim, Y.; Lee, G.A.; Jo, D.; Yang, U.; Kim, G.; Park, J. Analysis on virtual interaction-induced fatigue and difficulty in manipulation for interactive 3D gaming console. In Proceedings of the 2011 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–12 January 2011; IEEE Computer Society: Washington, DC, USA, 2011; pp. 269–270.

18. Remelli, E.; Tkach, A.; Tagliasacchi, A.; Pauly, M. Low-Dimensionality Calibration through Local Anisotropic Scaling for Robust Hand Model Personalization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 2554–2562.

19. Hayward, V.; Astley, O.R.; Cruz-Hernandez, M.; Grant, D.; Robles-De-La-Torre, G. Haptic interfaces and devices. Sens. Rev. 2004, 24, 16–29.

20. Nordahl, R.; Berrezag, A.; Dimitrov, S.; Turchet, L.; Hayward, V.; Serafin, S. Preliminary Experiment Combining Virtual Reality Haptic Shoes and Audio Synthesis. In Proceedings of the 2010 International Conference on Haptics—Generating and Perceiving Tangible Sensations: Part II, Amsterdam, The Netherlands, 8–10 July 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 123–129.

21. Lee, J.; Kim, M.; Kim, J. A Study on Immersion and VR Sickness in Walking Interaction for Immersive Virtual Reality Applications. Symmetry 2017, 9, 78.

22. Carlsson, C.; Hagsand, O. DIVE A multi-user virtual reality system. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Seattle, WA, USA, 18–22 September 1993; IEEE Computer Society: Washington, DC, USA, 1993; pp. 394–400.

23. Phon, D.N.E.; Ali, M.B.; Halim, N.D.A. Collaborative Augmented Reality in Education: A Review. In Proceedings of the 2014 International Conference on Teaching and Learning in Computing and Engineering (LATICE ’14), Kuching, Sarawak, Malaysia, 11–13 April 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 78–83.

24. Le, K.D.; Fjeld, M.; Alavi, A.; Kunz, A. Immersive Environment for Distributed Creative Collaboration. In Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology (VRST ’17), Gothenburg, Sweden, 8–10 November 2017; ACM: New York, NY, USA, 2017; pp. 16:1–16:4.

25. Lacoche, J.; Pallamin, N.; Boggini, T.; Royan, J. Collaborators Awareness for User Cohabitation in Co-located Collaborative Virtual Environments. In Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology (VRST ’17), Gothenburg, Sweden, 8–10 November 2017; ACM: New York, NY, USA, 2017; pp. 15:1–15:9.

26. Marwecki, S.; Brehm, M.; Wagner, L.; Cheng, L.P.; Mueller, F.F.; Baudisch, P. VirtualSpace—Overloading Physical Space with Multiple Virtual Reality Users. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018; pp. 241:1–241:10.

27. Piumsomboon, T.; Day, A.; Ens, B.; Lee, Y.; Lee, G.; Billinghurst, M. Exploring Enhancements for Remote Mixed Reality Collaboration. In Proceedings of the SIGGRAPH Asia 2017 Mobile Graphics and Interactive Applications (SA ’17), Bangkok, Thailand, 27–30 November 2017; ACM: New York, NY, USA, 2017; pp. 16:1–16:5.

28. Pretto, N.; Poiesi, F. Towards Gesture-Based Multi-User Interactions in Collaborative Virtual Environments. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W8, 203–208.

29. Ibayashi, H.; Sugiura, Y.; Sakamoto, D.; Miyata, N.; Tada, M.; Okuma, T.; Kurata, T.; Mochimaru, M.; Igarashi, T. Dollhouse VR: A Multi-view, Multi-user Collaborative Design Workspace with VR Technology. In Proceedings of the SIGGRAPH Asia 2015 Emerging Technologies (SA ’15), Kobe, Japan, 2–6 November 2015; ACM: New York, NY, USA, 2015; pp. 8:1–8:2.

30. Sajjadi, P.; Cebolledo Gutierrez, E.O.; Trullemans, S.; De Troyer, O. Maze Commander: A Collaborative Asynchronous Game Using the Oculus Rift & the Sifteo Cubes. In Proceedings of the First ACM SIGCHI Annual Symposium on Computer-human Interaction in Play (CHI PLAY ’14), Toronto, ON, Canada, 19–21 October 2014; ACM: New York, NY, USA, 2015; pp. 227–236.

31. Farpoint-VR. 2017. Available online: https://www.playstation.com/en-us/games/farpoint-ps4/ (accessed on 16 May 2017).

32. Witmer, B.G.; Jerome, C.J.; Singer, M.J. The Factor Structure of the Presence Questionnaire. Presence Teleoper. Virtual Environ. 2005, 14, 298–312.

33. Ijsselsteijn, W.A.; de Kort, Y.A.W.; Poels, K. The Game Experience Questionnaire: Development of a Self-Report Measure to Assess the Psychological Impact of Digital Games; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2013; pp. 1–9.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).