Abstract

Marine plastic pollution represents a critical ecological challenge, exerting long-lasting impacts on ecosystems, biodiversity, and human well-being. This study introduces the DEEP-PLAST project, an integrated AI-based framework designed for the detection and trajectory prediction of floating marine plastic waste using open-access Sentinel-2 satellite imagery and environmental models of ocean currents and wind. The DEEP-PLAST methodology integrates object detection (YOLOv5 on UAV data), semantic segmentation (U-Net/U-Net++ on Sentinel-2), and drift simulation using Copernicus and NOAA datasets. For Sentinel-2 segmentation, U-Net++ achieved the best performance (F1 = 0.84, false positive rate 5.2%), outperforming the baseline U-Net. Detected debris locations were linked to Lagrangian drift models to identify accumulation zones in the Black Sea, supporting targeted cleanup efforts. While promising, drift validation remains qualitative due to limited ground truth; this will be addressed in future work using in situ and NGO data. This approach supports EU Mission Ocean, the Marine Strategy Framework Directive, and the UN SDGs, demonstrating the potential of AI and remote sensing for marine protection. Future efforts will expand the datasets, apply the platform to other seas, and launch a web tool for NGOs and policymakers.

1. Introduction

Plastic pollution in marine environments has become one of the defining environmental challenges of the 21st century. With an estimated 165 million metric tons of plastic currently circulating in the world’s oceans, its impacts extend across marine biodiversity, coastal ecosystems, food security, and climate regulation [1]. Plastic debris not only entangles and harms marine species but also breaks down into micro- and nanoplastics, entering the food chain and posing risks to human health [2].

While the urgency of addressing marine plastic pollution is widely acknowledged, the ability to monitor and respond to this threat at scale remains limited. Traditional methods for monitoring marine litter (beach surveys, trawls, ship-based observations) are laborious and localized, as noted by recent studies [3,4]. They also struggle to capture transient drift and offshore accumulation, which leaves coverage sparse and uneven [1,3]. Effective mitigation therefore requires scalable, automated monitoring, a need increasingly emphasized in the literature [3,4,5].

Recent advances in remote sensing technologies and artificial intelligence (AI) offer new possibilities for global-scale marine litter detection. Satellite imagery—particularly from the Sentinel-2 constellation—provides regular, high-resolution coverage of the Earth’s surface, including coastal and open sea areas. When coupled with machine learning algorithms, such imagery can be used to identify, classify, and track floating plastic debris in ways that are automated, repeatable, and adaptable to multiple geographies [4,5,6,7].

Building on these advances, the present study introduces DEEP-PLAST, a research initiative that develops an integrated, regionally adapted framework for the detection and trajectory prediction of floating marine plastic waste in the Black Sea, where plastic accumulation is well documented [8,9,10]. By leveraging convolutional neural networks (CNNs) trained on annotated satellite and UAV imagery, combined with environmental models of wind and ocean currents, DEEP-PLAST aims to produce near-real-time detection outputs and drift forecasts that are both accurate and operationally relevant. This dual capability improves spatial awareness of marine litter and supports decision-making in coastal management and marine spatial planning.

A central focus of this project is the Black Sea region, known to be the most polluted sea in Europe in terms of plastic density. Its semi-enclosed nature, combined with high riverine inputs and limited outflow, makes it particularly susceptible to plastic accumulation [4]. Yet, despite its vulnerability, the region remains underrepresented in global marine litter monitoring initiatives. DEEP-PLAST contributes to filling this gap by applying AI models on satellite data specific to the Black Sea and calibrating its predictions based on regional current and wind patterns.

Beyond its technical contributions, the DEEP-PLAST project emphasizes the importance of inclusive, mission-oriented innovation, led by interdisciplinary teams committed to both environmental sustainability and gender equity in research leadership. As such, it aligns with the broader objectives of the European Green Deal, the UN Sustainable Development Goals (SDGs), and the EU Mission Ocean framework.

This paper outlines the system architecture, training datasets, model evaluation results, and trajectory prediction outcomes of the DEEP-PLAST project, along with its potential applications in science, policy, and practice. In summary, the key contributions of this study are: (1) a multi-scale dataset integrating Sentinel-2 and UAV imagery; (2) a hybrid deep learning pipeline (YOLOv5 + U-Net/U-Net++); (3) coupling of detections with Lagrangian drift modeling for trajectory prediction; (4) to our knowledge, the first application of such an end-to-end framework to the Black Sea, an under-monitored yet highly plastic-polluted basin; and (5) a comparative evaluation of U-Net and U-Net++ performance. Unlike previous approaches that focus only on detection [1,2,3,4,5,6,7,8,9,10], our framework couples CNN-based detections with oceanographic drift modeling to enable both identification and trajectory prediction of debris.

2. Related Work

Marine plastic waste monitoring has traditionally relied on in situ methods such as beach surveys, ship-based visual inspections, and trawl sampling. While these methods provide valuable ground-truth data, they are logistically demanding, geographically limited, and incapable of capturing dynamic processes such as short-term debris drift or accumulation [1,2]. Consequently, scalable and automated monitoring approaches are required.

2.1. Remote Sensing of Marine Litter

Recent years have seen significant progress in AI-based detection of floating plastic waste using satellite imagery. Early studies demonstrated that aggregated patches of marine plastics can be identified in optical satellite data under certain conditions. For instance, Biermann et al. [1] introduced the Floating Debris Index (FDI) to enhance sub-pixel detection of plastics and showed that Sentinel-2 multispectral imagery, combined with such indices, can differentiate probable plastic debris from natural materials like sea foam or driftwood, with a maximum classification accuracy of approximately 86% for confirmed plastic hotspots. Subsequent efforts in 2021–2023 have leveraged machine learning and deep learning to improve detection performance.
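To make the index concrete, the sketch below computes the FDI in the form given by Biermann et al. [1]: the NIR reflectance (B8) minus a baseline interpolated from the red-edge (B6) and SWIR1 (B11) bands, scaled by a factor built from band-centre wavelengths. The wavelengths are nominal Sentinel-2A values and, like the function name `floating_debris_index` and the example reflectances, are assumptions for illustration rather than the settings of any cited study.

```python
import numpy as np

# Nominal Sentinel-2A band-centre wavelengths in nm (assumed values).
WL_RED = 664.6     # B4
WL_NIR = 832.8     # B8
WL_SWIR1 = 1613.7  # B11


def floating_debris_index(nir, re2, swir1):
    """FDI = R_NIR - [R_RE2 + (R_SWIR1 - R_RE2) * (l_NIR - l_RED) / (l_SWIR1 - l_RED) * 10].

    nir, re2, swir1: reflectance of bands B8, B6 and B11 (scalars or
    arrays of the same shape). Positive values indicate NIR excess over
    the interpolated baseline, as expected for floating material.
    """
    nir, re2, swir1 = (np.asarray(b, dtype=float) for b in (nir, re2, swir1))
    scale = (WL_NIR - WL_RED) / (WL_SWIR1 - WL_RED) * 10.0
    baseline = re2 + (swir1 - re2) * scale
    return nir - baseline


# Hypothetical 2 x 2 chip of reflectances.
nir = np.array([[0.10, 0.02], [0.08, 0.01]])
re2 = np.array([[0.04, 0.02], [0.05, 0.01]])
swir1 = np.array([[0.04, 0.02], [0.03, 0.01]])
fdi = floating_debris_index(nir, re2, swir1)
```

When B6 and B11 are equal, the baseline collapses to the red-edge value and the FDI reduces to a simple NIR minus red-edge difference, which is a quick sanity check on the implementation.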

Traditional classifiers (e.g., support vector machines and random forests) using spectral bands and indices have reported high accuracies on test datasets (often > 90%), though sometimes under limited or controlled scenarios [2,3,4]. For instance, Basu et al. [2] used a support-vector regression model on Sentinel-2 data (bands B2, B3, B4, B6, B8, B11 and indices like NDWI and FDI) to detect floating plastics, reporting up to 98% accuracy in classifying pixels. Sannigrahi et al. [3] extended this approach with an automated system using random forests and SVMs, obtaining ~92–98% accuracy in detecting marine plastics by incorporating indices such as FDI, Plastic Index (PI) and NDVI. These classical ML approaches confirmed the promise of spectral anomaly detection. However, these methods remain sensitive to atmospheric conditions, water turbidity, and biofouling, which alter the optical properties of plastics [5].

2.2. Deep Learning for Plastic Detection

Convolutional neural networks (CNNs) have emerged as a more powerful alternative by learning features directly from pixel data. In the context of marine litter, CNN-based semantic segmentation models are particularly effective for pixel-wise classification of debris in imagery [6]. U-Net and its variants have been widely adopted for this task because of their strong performance in segmenting objects against varied backgrounds: their encoder–decoder framework with skip connections is well suited to identifying subtle features of floating objects in water [7]. For example, Booth et al. [8] developed the Marine Debris and Sargassum Plastics Mapper (MAP-Mapper), based on a U-Net [9,10,11] trained on the MARIDA dataset [12], which predicts per-pixel debris density. Their model achieved high precision (up to 95%) in detecting debris pixels, substantially improving over earlier baselines by refining training data and reducing false positives [13,14,15]. Duarte et al. [4] applied an XGBoost classifier to Sentinel-2 images for automatic identification of floating debris and also reported >95% classification accuracy, indicating that machine learning approaches can robustly distinguish debris when trained on high-quality data. Recent work [16,17,18] further combined multiple annotated datasets to train a deep segmentation model for marine debris detection at scale. The resulting detector outputs per-pixel debris probabilities and, when evaluated on test sites with known plastic pollution, outperformed prior models by a large margin. Notably, Rußwurm et al. emphasize that the performance gains stemmed from a data-centric approach, such as carefully curating training data with extensive negative samples and improved annotations. This underscores that dataset quality and diversity can be as important as network choice for this problem [19].

Other state-of-the-art segmentation models such as DeepLabv3+ [20] are also highly relevant. DeepLabv3+ has been used in analogous remote sensing tasks and could improve marine litter detection by capturing fine structures of debris against water backgrounds. However, published applications of DeepLabv3+ to marine debris are still limited as of 2024, with most studies favoring bespoke U-Net variants or simpler classifiers. Meanwhile, the field is beginning to experiment with vision transformers for this task. Transformer-based models leverage self-attention to capture long-range dependencies in the image, which may help distinguish plastic rafts from look-alikes by their broader context. For instance, an attention-guided Detection Transformer (DETR) was recently adapted for drone-based marine debris detection, demonstrating improved recall of small objects by modeling global context [19]. In summary, CNN-based semantic segmentation (U-Net family, etc.) currently represents the leading approach for floating plastic detection in satellite images, while transformer-based architectures are an emerging frontier offering potential gains in capturing complex environmental context.

Table 1 provides an overview of several recent studies (2020–2024) on floating plastic detection, highlighting the models, data sources, target regions, reported accuracy, and noted limitations.

Table 1.

Comparison of recent research on AI-driven methods for the detection of floating marine plastics.

2.3. Benchmark Datasets

Another critical component of recent work is the development of benchmark datasets for training and evaluating models (see Table 2). In 2021, researchers at the National Technical University of Athens released MARIDA (Marine Debris Archive)—the first open-access Sentinel-2 dataset dedicated to marine debris detection [12]. MARIDA contains 1381 annotated regions (polygons) from Sentinel-2 images worldwide, labeled into 14 categories including marine litter, various oceanic features (Sargassum algae, sea foam, natural organic material), ships, waves, different water types, etc. By distinguishing plastics from confounding phenomena, MARIDA enables training deep networks that generalize across conditions. The dataset was compiled from verified plastic debris events in multiple geographies and seasons, providing a realistic mix of scenarios.

Alongside MARIDA, the European Space Agency’s φ-lab introduced the Floating Objects dataset [13] (published in the ISPRS Annals in 2021), a large collection of Sentinel-2 image patches hand-labeled for floating debris worldwide. Mifdal et al. [13] created this dataset to focus on “big patches” of floating objects (potentially containing plastics) in oceans and lakes, and released pretrained CNN models with the dataset. Floating Objects has served as a valuable benchmark, highlighting categories of debris and enabling analysis of model failures (e.g., due to regional domain shifts) under a variety of water conditions.

Another notable resource is the Plastic Litter Project 2021 dataset [21], stemming from a controlled experiment where large plastic targets (10 × 10 m) were deployed at sea and observed by Sentinel-2 and drones. Papageorgiou et al. [5] used this data to analyze spectral signatures of plastics under real conditions (including biofouling and submersion) and to validate detection algorithms. They found, for instance, that Sentinel-2 could reliably detect floating macro-plastics when they occupied >20% of a pixel’s area. Beyond Sentinel-2, PlanetScope imagery [14] has been employed to create higher-resolution datasets—notably, the NASA Marine Debris PlanetScope dataset [14] containing 1370 labeled debris polygons (3 m resolution) around known pollution events. This dataset enabled object-detection models to be trained on smaller debris aggregations than Sentinel-2 can resolve.

Finally, the PlasticNet project by IBM Research [15] represents a concerted effort to build an open repository of training data and models for marine debris. PlasticNet is an “AI for Good” initiative focusing on detecting trash in oceans and on coastlines, using an ensemble of object detection architectures (e.g., YOLOv4, Faster R-CNN, EfficientDet) via a unified pipeline. While primarily featuring coastal and beach litter imagery, PlasticNet’s open-source model zoo and annotation tools contribute to the broader ecosystem of marine debris detection resources.

Table 2.

Public datasets commonly used for floating marine litter detection and validation.

| Dataset/Project | Sensor & Resolution | Labels & Classes | Size/Scope | Geography/Coverage | Access/License | Notes/Typical Use | Ref. |
|---|---|---|---|---|---|---|---|
| MARIDA (Marine Debris Archive) | Sentinel-2 MSI (10–20 m) | Pixel/patch labels; 14 classes (marine litter, natural organic material, algae, ships, waves, water types, etc.) | 1381 annotated patches (polygons) | Global hotspots (multi-season) | Open (PLOS ONE + Zenodo) | Principal benchmark for pixel-level S2 segmentation; curated around verified debris events; strong negatives for confounders | [12] |
| Floating Objects (ESA φ-lab) | Sentinel-2 MSI (10–20 m) | Patch-level labels of floating object categories; baselines provided | Large-scale (thousands of patches) | Global oceans & lakes | Open (ISPRS Annals) | Focus on “big patches” of floating objects; useful for training & failure analysis across diverse waters | [13] |
| Plastic Litter Project (controlled targets) | Sentinel-2 MSI + UAV (cm-level) | Known plastic targets (10 × 10 m), spectral/measured GT | Dozens of scenes; multiple target configs | Coastal test sites (Mediterranean) | Open (paper + Zenodo) | Quantifies biofouling/submergence effects; suggests S2 detectability above ~20% pixel coverage | [5] |
| NASA IMPACT Marine Debris (PlanetScope) | PlanetScope (3 m, RGB + NIR) | Object-level polygons; detection benchmarks | 1370 labeled polygons; 256 × 256 tiles | Honduras, Greece, Ghana (event-based) | Open (NASA Earthdata) | Enables object detection of smaller aggregations than S2; limited spectral range vs. S2 | [14] |
| UAV Beach/Coastal Litter (Gonçalves et al.) | UAV RGB (cm-level) | Object-oriented labels of macro-litter on beaches | Multiple UAV flights/mosaics | Sandy beaches (Portugal) | | | [22] |

2.4. Challenges

Despite these advancements, detecting plastics in the marine environment remains a challenging task.

- One major difficulty is spectral confusion between plastic debris and other floating materials. Plastics, especially weathered items, can exhibit reflectance spectra similar to those of natural organics or algae. For example, large blooms of Sargassum algae or rafts of sea foam can produce signals that mimic those of plastic, leading to false positives [23]. Conversely, plastics that have been in the water for long periods accumulate biofouling (algal growth), which changes their optical properties, making them less reflective in visible wavelengths and more similar to natural debris [23]. The same study notes that biofouling primarily dampens the plastic signal in the RGB bands, while partial submergence reduces reflectance across all Sentinel-2 bands (especially in the NIR), further complicating detection. Algorithms must therefore distinguish plastics not just by raw reflectance, but by subtle anomalies or context.

- Another challenge is the spatial resolution limit of satellites [23]. Sentinel-2’s 10 m pixels can only capture relatively large accumulations of debris, typically on the order of tens of square meters. If plastics are scattered or sparse (covering <20% of a pixel), they may go undetected. Higher-resolution commercial satellites (e.g., WorldView at ~0.3 m or PlanetScope at 3 m) can resolve smaller objects, but often lack the spectral bands (e.g., shortwave IR) that highlight plastics and are not freely available for continuous monitoring. There is thus an inherent trade-off between spatial and spectral resolution in satellite-based plastic monitoring [18].

- Ground-truth annotation quality is another bottleneck. Validating that an observed anomaly is truly plastic requires either in situ confirmation or reliable indirect evidence, which is seldom available at scale. Many datasets rely on visual interpretation of satellite images or on reports of known pollution events to label plastics [12]. This can introduce label noise: some purported “plastic” pixels might actually be other debris or vegetation, and vice versa. The MARIDA dataset attempted to mitigate this by focusing on confirmed marine litter events and providing multi-class labels, allowing models to learn these distinctions. Nonetheless, the scarcity of labeled examples of floating plastic relative to the vast area of the oceans means models face a highly imbalanced classification problem; plastics are the proverbial “needle in a haystack”, as noted by NASA’s IMPACT team [14]. Techniques such as data augmentation, semi-supervised learning, and transfer learning from related domains are being explored to address the paucity of training data. Additionally, environmental factors such as sunglint on waves, turbid water (sediment or plankton blooms), and cloud shadows can mask or mimic the spectral signals used to detect plastics [8]. These factors demand robust preprocessing, such as sunglint correction and cloud masking, as well as algorithms that are resilient to noise.
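The coverage constraint can be illustrated with simple arithmetic and a linear two-endmember mixing model, in which a pixel’s reflectance is the area-weighted average of plastic and water. The mixing model, the helper names, and the NIR endmember values below are illustrative assumptions; only the ~20% coverage level comes from the Plastic Litter Project experiments [5].

```python
import numpy as np


def min_detectable_area(pixel_size_m, min_fraction):
    """Smallest debris area (m^2) that still covers `min_fraction` of a square pixel."""
    return pixel_size_m ** 2 * min_fraction


def mixed_reflectance(fraction, r_plastic, r_water):
    """Linear two-endmember mixing: f * plastic + (1 - f) * water (assumed model)."""
    fraction = np.clip(np.asarray(fraction, dtype=float), 0.0, 1.0)
    return fraction * r_plastic + (1.0 - fraction) * r_water


# Sentinel-2 10 m pixel with the ~20% coverage level reported in [5].
area_s2 = min_detectable_area(10.0, 0.20)  # about 20 m^2 of a 100 m^2 pixel

# Hypothetical NIR endmembers: brighter floating plastic vs. dark open water.
R_PLASTIC, R_WATER = 0.15, 0.01
fractions = np.array([0.05, 0.20, 0.50, 1.00])
nir_anomaly = mixed_reflectance(fractions, R_PLASTIC, R_WATER) - R_WATER
```

Under this simplified model the anomaly relative to clean water scales linearly with the coverage fraction, which helps explain why sparse debris is hard to separate from background variability in a 10 m pixel.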

In summary, the background literature shows a rapid evolution from basic index-threshold methods to sophisticated deep learning models for floating plastic detection. Convolutional networks like U-Net and U-Net++ have proven effective when trained on curated datasets (e.g., MARIDA, Floating Objects), and they continue to be refined. Emerging transformer-based models offer new avenues by capturing global context and potentially improving the detection of small or diffuse debris patches. Major benchmark datasets such as MARIDA, Floating Objects, and others have been instrumental in benchmarking these approaches and driving the field forward. Still, significant challenges remain in differentiating plastics from look-alikes, obtaining reliable ground truth at scale, and overcoming the physical constraints of sensor resolution and conditions at sea.

The DEEP-PLAST project builds on this foundation by combining complementary models and data sources to tackle these challenges, focusing especially on an under-studied region (the Black Sea) where plastic pollution is severe but documented examples for training are scarce. Our approach seeks to advance the accuracy and reliability of marine litter monitoring in support of global ocean sustainability efforts.

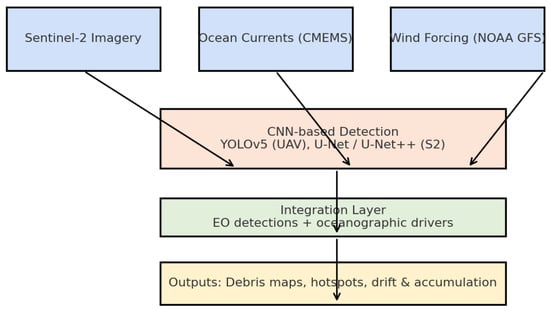

3. Methodology

This section presents the methodological framework of the DEEP-PLAST system. We first describe the construction of the dataset, including UAV and Sentinel-2 data, followed by acquisition and preprocessing workflows. We then detail the deep learning approaches used for object detection (YOLOv5) and semantic segmentation (U-Net, U-Net++), together with post-processing procedures. Finally, we present the integration of oceanographic currents and winds into a Lagrangian drift model for trajectory prediction, as well as the technical setup used for implementation.

3.1. Data Acquisition and Study Area

We assembled a multi-source dataset covering the Romanian Black Sea coast. It includes open-access Sentinel-2 [2] L2A satellite scenes (10–20 m resolution) acquired from March to May 2025 and high-resolution UAV orthomosaic images (~3 cm resolution) collected with a DJI Phantom 4 Pro over coastal waters. In addition, daily marine surface current data (1/12° resolution) from the Copernicus Marine Environment Monitoring Service (CMEMS) [22] and 6-hourly wind data (0.25°) from NOAA’s Global Forecast System (GFS) [24] were obtained to drive the drift simulations (see Table 3 for a summary of data sources and Figure 1 for representative samples).

Table 3.

Dataset summary (UAV, Sentinel-2, and oceanographic drivers).

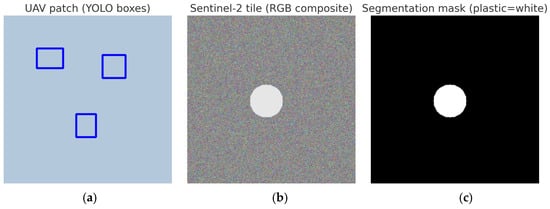

Figure 1.

Representative dataset samples: (a) UAV picture with YOLO-style bounding boxes; (b) Sentinel-2 tile (RGB composite); (c) corresponding segmentation mask (plastic = white).

3.2. Dataset Splitting and Annotation

To minimize spatial autocorrelation, the dataset was split by image scene rather than by random tiles. In total, 600 Sentinel-2 chips (512 × 512 px) were divided into 360 for training, 120 for validation, and 120 for testing, ensuring that test scenes come from different dates than the training data. Similarly, ~450 UAV image patches were split into 300 training, 75 validation, and 75 test patches, with no overlap in surveyed area between subsets. UAV images were annotated with ~3200 bounding boxes of plastic objects for YOLO training, while 140 Sentinel-2 chips containing visible debris were annotated with polygon masks for segmentation. The remaining chips contained no detected plastic and served as negatives.
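A scene-grouped split of this kind can be sketched as follows: chips are grouped by a scene identifier (here, the acquisition date) and whole scenes, not individual chips, are assigned to the training, validation, and test subsets. The function `split_by_scene`, the 60/20/20 scene proportions, and the example of 10 dates with 60 chips each are hypothetical; they simply reproduce the 360/120/120 chip counts above.

```python
import random
from collections import defaultdict


def split_by_scene(chip_scene_ids, fractions=(0.6, 0.2, 0.2), seed=0):
    """Assign whole scenes to train/val/test so that no scene spans two subsets.

    chip_scene_ids: one scene identifier (e.g. acquisition date) per chip.
    Returns a dict mapping subset name to a list of chip indices.
    """
    chips_per_scene = defaultdict(list)
    for idx, scene in enumerate(chip_scene_ids):
        chips_per_scene[scene].append(idx)

    scenes = sorted(chips_per_scene)
    random.Random(seed).shuffle(scenes)  # reproducible scene order

    n = len(scenes)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    groups = {
        "train": scenes[:n_train],
        "val": scenes[n_train:n_train + n_val],
        "test": scenes[n_train + n_val:],
    }
    return {name: [i for s in ss for i in chips_per_scene[s]] for name, ss in groups.items()}


# Hypothetical example: 10 acquisition dates with 60 chips each (600 chips).
scene_ids = [f"2025-04-{d:02d}" for d in range(1, 11) for _ in range(60)]
split = split_by_scene(scene_ids)
```

Because proportions are applied to scenes, chip-level proportions match them exactly only when scenes contribute similar numbers of chips.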

3.3. Data Preprocessing

All Sentinel-2 images underwent a standard preprocessing pipeline prior to analysis. We (1) applied the Sentinel-2 Scene Classification (SCL) mask [25] to remove clouds and shadows, (2) performed sunglint correction using the Hedley method [26] to eliminate sun glint artifacts, (3) computed the Normalized Difference Water Index (NDWI) [27] to mask non-water pixels, (4) resampled all bands to a common 10 m resolution, and (5) tiled the images into 512 × 512 px chips with 10% overlap. This process focuses the analysis on water regions and standardizes the input data.
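The preprocessing steps can be sketched with NumPy as below. This is a minimal illustration, not the DEEP-PLAST code: the SCL classes treated as invalid, the NDWI threshold of 0, and the choice of glint sample pixels are assumptions, and the resampling step is omitted. The deglinting follows the Hedley approach of regressing each visible band against NIR over deep-water pixels and subtracting the glint-correlated component; NDWI is assumed here to be the (Green − NIR)/(Green + NIR) form using B3 and B8.

```python
import numpy as np

# SCL values treated as unusable: cloud shadow (3), cloud medium/high
# probability (8, 9) and thin cirrus (10). This selection is an assumption.
SCL_INVALID = (3, 8, 9, 10)


def valid_pixels(scl):
    """Step 1: True where the SCL does not flag cloud, cirrus or cloud shadow."""
    return ~np.isin(scl, SCL_INVALID)


def hedley_deglint(band, nir, glint_sample):
    """Step 2: Hedley-style sunglint correction of one visible band.

    `glint_sample` is a boolean mask of deep-water pixels affected by glint.
    The band is regressed on NIR over those pixels and the glint-correlated
    part, slope * (NIR - min NIR), is subtracted everywhere.
    """
    slope = np.polyfit(nir[glint_sample], band[glint_sample], 1)[0]
    return band - slope * (nir - nir[glint_sample].min())


def ndwi_water_mask(green, nir, threshold=0.0):
    """Step 3: NDWI = (G - NIR) / (G + NIR); water where NDWI > threshold."""
    ndwi = (green - nir) / np.maximum(green + nir, 1e-6)
    return ndwi > threshold


def tile_origins(length, tile=512, overlap=0.10):
    """Step 5: start offsets of tiles along one image axis with fractional overlap."""
    stride = int(round(tile * (1.0 - overlap)))
    starts = list(range(0, max(length - tile, 0) + 1, stride))
    if starts[-1] + tile < length:  # add a final tile flush with the image edge
        starts.append(length - tile)
    return starts


# Synthetic check: a blue band whose variation is driven entirely by glint.
rng = np.random.default_rng(0)
nir = rng.uniform(0.0, 0.05, size=(64, 64))
blue = 0.02 + 0.8 * nir
blue_deglinted = hedley_deglint(blue, nir, np.ones_like(nir, dtype=bool))
```

In the synthetic check the corrected band becomes spatially constant, since all of its variation was correlated with NIR; on real scenes the glint sample should be restricted to optically deep water.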

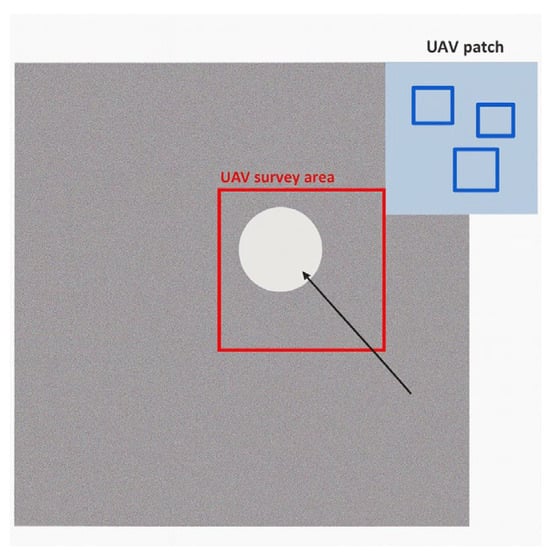

UAV orthomosaics were georeferenced in QGIS, and obvious non-water areas (land or vegetation) were masked out to avoid false detections. Illumination differences were balanced to mimic uniform conditions. To ensure scale alignment, UAV flights were conducted within ±6 h of Sentinel-2 overpasses, and their imagery was spatially co-registered to the corresponding Sentinel-2 tiles [28]. Figure 2 illustrates the integration of UAV and Sentinel-2 data.

Figure 2.

Linking UAV and Sentinel-2: UAV survey area (red rectangle) within a Sentinel-2 tile, with an inset UAV patch showing bounding boxes.
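One way to relate co-registered UAV labels to the coarser Sentinel-2 grid is to block-average a binary UAV plastic mask into per-pixel coverage fractions, which can then be compared with the ~20% detectability level discussed in Sections 2.3 and 2.4. The text does not state that DEEP-PLAST performs this step; the function below, the assumption that the UAV mask is already aligned to a Sentinel-2 pixel corner, and the ~3 cm and 10 m sampling distances used as defaults are illustrative.

```python
import numpy as np


def coverage_fraction(uav_mask, uav_gsd_m=0.03, s2_pixel_m=10.0):
    """Fraction of each Sentinel-2 pixel covered by plastic in a UAV mask.

    uav_mask: 2-D boolean array (True = plastic) whose top-left corner is
    assumed to coincide with a Sentinel-2 pixel corner after co-registration.
    Partial Sentinel-2 pixels at the right and bottom edges are dropped.
    """
    k = int(round(s2_pixel_m / uav_gsd_m))  # UAV pixels per S2 pixel side (~333)
    rows = uav_mask.shape[0] // k
    cols = uav_mask.shape[1] // k
    blocks = uav_mask[: rows * k, : cols * k].reshape(rows, k, cols, k)
    return blocks.mean(axis=(1, 3))


# Hypothetical 2 x 2 S2-pixel footprint with one 6 m x 6 m plastic patch.
mask = np.zeros((666, 666), dtype=bool)
mask[:200, :200] = True
frac = coverage_fraction(mask)
above_threshold = frac >= 0.20
```

Because 10 m is not an integer multiple of 3 cm, the block size is rounded to 333 UAV pixels; a production workflow would instead resample on the exact Sentinel-2 grid geometry.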

3.4. Model Training Details

Two families of CNNs were employed.

- (1) We fine-tuned a YOLOv5s model on our UAV imagery. Training was performed on 1280 × 1280 px image patches (to exploit the high UAV resolution) for 100 epochs. We initialized the model with COCO-pretrained weights for transfer learning, which improved convergence given our relatively limited dataset. A batch size of 16 and a cosine learning rate schedule were used to ensure stable convergence. We also employed mosaic augmentation and random geometric transformations during training, which have been shown to make YOLO more robust to varied UAV flight angles and altitudes [29]. Early stopping was applied with a patience of 10 epochs: if the validation loss did not improve for 10 consecutive epochs, training was halted to prevent overfitting.

- (2) For the semantic segmentation of plastics in Sentinel-2 imagery, we trained two architectures (U-Net and U-Net++ [30,31]) on 512 × 512 px image chips. Both networks were initialized with ImageNet-pretrained encoders to accelerate learning. We used the Adam optimizer (initial learning rate, e.g., 1 × 10⁻³) with a polynomial learning rate decay schedule [22]. To address the strong class imbalance (plastic pixels constitute <1% of all pixels), we used a combined Binary Cross-Entropy + Dice loss [30], which balances pixel-wise accuracy with overlap of the plastic class. Data augmentation was applied to each training batch to improve generalization across different water colors and conditions. Given the limited training data, early stopping was also used to avoid overfitting. U-Net++ required slightly more epochs (~50) to converge than U-Net (~40) because of its additional decoder layers.
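The combined loss and the polynomial learning-rate decay mentioned above can be written in a few lines. The NumPy sketch below is illustrative: the equal weighting of the BCE and Dice terms, the smoothing constant `eps`, and the decay power of 0.9 are assumptions, not values reported for DEEP-PLAST. The Dice term does not count true-negative pixels, so the large number of correctly classified water pixels cannot dominate it, which is why it helps with the <1% plastic-pixel imbalance.

```python
import numpy as np


def bce_dice_loss(logits, target, dice_weight=1.0, eps=1e-6):
    """Binary cross-entropy + soft Dice loss for a binary plastic mask.

    logits: raw network outputs; target: {0, 1} mask of the same shape.
    """
    target = np.asarray(target, dtype=float)
    # Numerically stable BCE computed directly from logits.
    bce = np.mean(np.maximum(logits, 0) - logits * target
                  + np.log1p(np.exp(-np.abs(logits))))
    prob = 1.0 / (1.0 + np.exp(-logits))
    intersection = np.sum(prob * target)
    dice = (2.0 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps)
    return bce + dice_weight * (1.0 - dice)


def poly_lr(base_lr, step, max_steps, power=0.9):
    """Polynomial decay: lr = base_lr * (1 - step / max_steps) ** power."""
    return base_lr * (1.0 - min(step, max_steps) / max_steps) ** power


# Hypothetical 2 x 2 mask with one plastic pixel.
target = np.array([[1, 0], [0, 0]])
good_logits = np.where(target == 1, 20.0, -20.0)  # confident, correct
bad_logits = -good_logits                         # confident, wrong
```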

Figure 3 outlines the end-to-end workflow.

Figure 3.

DEEP-PLAST workflow: multi-source inputs → preprocessing → CNN detection/segmentation → GIS vectorization → drift modeling.

Table 4 summarizes the training configurations and hyperparameters employed for each of the three models.

Table 4.

Training configurations and hyperparameters for YOLOv5, U-Net, and U-Net++.

3.5. Validation Metrics and Evaluation Protocol

We evaluated our models using standard object-detection and semantic-segmentation metrics under a fixed protocol for model selection and testing. The dataset was partitioned into separate training, validation, and test sets, and all reported results correspond to the test set. The validation set was used for hyperparameter tuning, threshold selection, and early stopping; because the test set played no part in these decisions, the test evaluation remains unbiased. Training was stopped when validation performance ceased to improve, and the checkpoint with the highest validation F1-score was retained as the final model. This procedure limits overfitting and ensures that generalization is optimized before the test set is used.

Evaluation metrics: We used precision, recall, and F1-score to quantify the performance of floating plastic detection [24], and Intersection over Union (IoU) for evaluating the quality of the plastic segmentation [24].

A predicted detection was counted as a true positive if it sufficiently overlapped with a ground-truth plastic instance. Specifically, for object detection outputs (YOLOv5 on UAV imagery), a predicted bounding box had to reach IoU ≥ 0.5 with a ground-truth box to be considered a correct detection. Detections with IoU < 0.5, or predictions of plastic where there was none, were counted as false positives, and any ground-truth plastic object not detected by the model counted as a false negative. For the semantic segmentation models (U-Net/U-Net++ on Sentinel-2 images), the outputs were probability maps for the “plastic” class, which were binarized with a probability cutoff and compared pixel by pixel against the ground-truth masks: pixels labeled plastic in both prediction and truth were true positives, pixels incorrectly predicted as plastic were false positives, and plastic pixels missed by the model were false negatives. The IoU for the plastic class was computed for each image from the aggregate counts of true-positive, false-positive, and false-negative pixels.
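The matching and counting rules above can be sketched as follows. The greedy, confidence-ordered one-to-one matching of boxes is an assumption (the text specifies only the IoU ≥ 0.5 criterion), as are the helper names and the default 0.5 probability cutoff for segmentation masks.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_boxes(pred_boxes, gt_boxes, iou_thr=0.5):
    """Greedy one-to-one matching; returns (tp, fp, fn).

    pred_boxes must be sorted by descending confidence. Each ground-truth box
    can be matched at most once, so duplicate detections become false positives.
    """
    matched = set()
    for p in pred_boxes:
        best_j, best_iou = None, iou_thr
        for j, g in enumerate(gt_boxes):
            iou = box_iou(p, g)
            if j not in matched and iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
    tp = len(matched)
    return tp, len(pred_boxes) - tp, len(gt_boxes) - tp


def pixel_counts(prob, truth, cutoff=0.5):
    """Binarize a probability map and count TP, FP, FN pixels against a mask."""
    pred = prob >= cutoff
    truth = truth.astype(bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return tp, fp, fn


def scores(tp, fp, fn):
    """Precision, recall, F1 and IoU from TP/FP/FN counts (0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return precision, recall, f1, iou


# Hypothetical examples: one correct box, one spurious box, one missed object.
det_counts = match_boxes([(0, 0, 2, 2), (10, 10, 12, 12)], [(0, 0, 2, 2.2), (5, 5, 6, 6)])
seg_counts = pixel_counts(np.array([[0.9, 0.2], [0.7, 0.1]]), np.array([[1, 0], [0, 1]]))
```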

All metrics were calculated on the held-out test set. We computed precision, recall, F1, and IoU for each test image (scene) and averaged these values across the test set to obtain overall performance. The reported F1-score of 0.84 for U-Net++ (our top-performing segmentation model) is its average test-set F1, indicating a strong balance between precision and recall in segmenting plastic debris. U-Net++ also achieved the highest segmentation IoU (0.78, reflecting accurate pixel-level overlap) and the lowest false positive rate. All thresholds (the 0.5 IoU criterion for detections and the probability cutoff for segmentation) were either standard conventions or selected on the validation set to maximize F1. This protocol provides a fair assessment of model capabilities and sets the stage for the comparison of performance outcomes in the Results section.
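Per-image averaging and validation-based selection of the segmentation cutoff can be sketched as below. How chips with neither predicted nor true plastic (the negatives) enter the average is not specified in the text; here they are excluded via NaN, which is an assumption, as are the candidate thresholds and the toy masks. The F1 expression 2TP/(2TP + FP + FN) is algebraically equal to the harmonic mean of precision and recall.

```python
import numpy as np


def f1_iou(pred, truth):
    """Per-image F1 and IoU for the plastic class from boolean masks.

    Returns (nan, nan) when the image has neither predicted nor true plastic,
    so that empty negative chips are left out of the average (an assumption).
    """
    truth = truth.astype(bool)
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    if tp + fp + fn == 0:
        return np.nan, np.nan
    return 2 * tp / (2 * tp + fp + fn), tp / (tp + fp + fn)


def select_threshold(val_probs, val_masks, candidates=np.linspace(0.1, 0.9, 17)):
    """Probability cutoff that maximises the mean per-image F1 on validation chips."""
    best_t, best_f1 = None, -np.inf
    for t in candidates:
        f1s = [f1_iou(p >= t, m)[0] for p, m in zip(val_probs, val_masks)]
        mean_f1 = np.nanmean(f1s)
        if mean_f1 > best_f1:
            best_t, best_f1 = float(t), float(mean_f1)
    return best_t, best_f1


def mean_test_scores(test_probs, test_masks, threshold):
    """Average per-image (F1, IoU) over held-out chips at a fixed cutoff."""
    per_image = [f1_iou(p >= threshold, m) for p, m in zip(test_probs, test_masks)]
    return tuple(np.nanmean(np.array(per_image, dtype=float), axis=0))


# Hypothetical chips: one with a 4 x 4 plastic patch, one negative chip.
mask = np.zeros((16, 16), dtype=int)
mask[4:8, 4:8] = 1
val_masks = [mask, np.zeros((16, 16), dtype=int)]
val_probs = [0.1 + 0.8 * mask, np.full((16, 16), 0.1)]
best_t, best_f1 = select_threshold(val_probs, val_masks)
f1_test, iou_test = mean_test_scores(val_probs, val_masks, best_t)
```

Averaging per image, rather than pooling all pixels, gives each scene equal weight, so a single large debris patch cannot dominate the reported score.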

3.6. Trajectory Prediction Methodology

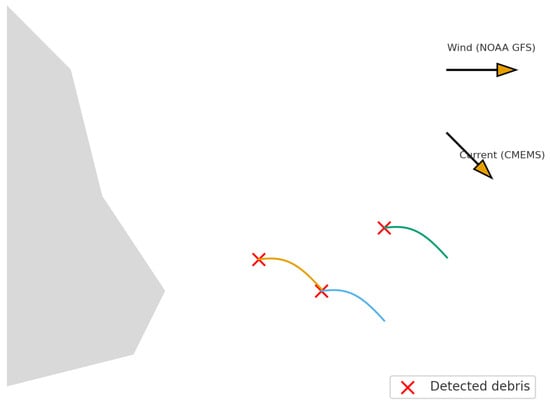

Using the ocean current and wind datasets, we ran a Lagrangian particle drift simulation with daily time steps. Detected debris locations were used as release points for virtual particles, which were advected by surface currents plus a wind-drag (windage) term weighted by the coefficient α defined below. The simulation was run forward in time (up to 10 days) to identify potential accumulation zones, and backward in time (−10 days) for source tracing.

Centroids of detected debris polygons initialize a Lagrangian particle model. The effective surface drift velocity is defined as v_drift = u_ocean + α·u_wind, with α = 0.03, combining CMEMS currents and NOAA GFS 10 m winds [32,33]. Particles are advected with a Runge–Kutta scheme; forward simulations (5–10 days) identify likely accumulation and stranding zones, while backward simulations provide indicative source regions. Outputs are aggregated into density maps for operational targeting. Figure 4 illustrates the integration of currents and winds with detected debris.

Figure 4.

Drift integration schematic: detected debris (red) advected under the combined action of CMEMS currents and NOAA GFS winds, producing trajectories and coastal accumulation.
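The drift model v_drift = u_ocean + α·u_wind with Runge–Kutta advection can be sketched as follows. In this illustration `current_fn` and `wind_fn` are hypothetical stand-ins for interpolators of the CMEMS current and GFS 10 m wind fields; the uniform example fields and release points are invented; the classical fourth-order scheme is one possible choice of Runge–Kutta method; and the metre-to-degree conversion assumes a spherical Earth. α defaults to the 0.03 given above.

```python
import numpy as np

EARTH_RADIUS_M = 6_371_000.0  # spherical-Earth approximation


def drift_velocity(lon, lat, t, current_fn, wind_fn, alpha=0.03):
    """v_drift = u_ocean + alpha * u_wind, as (eastward, northward) speeds in m/s."""
    uc, vc = current_fn(lon, lat, t)
    uw, vw = wind_fn(lon, lat, t)
    return uc + alpha * uw, vc + alpha * vw


def advect_rk4(lon, lat, t0, days, dt_hours, current_fn, wind_fn, alpha=0.03):
    """Advect particles with classical RK4; negative `days` integrates backward.

    Returns a list of (lon, lat) arrays, one per time step including the start.
    """
    def rate(x, y, t):
        # Convert m/s to degrees/s of longitude and latitude.
        u, v = drift_velocity(x, y, t, current_fn, wind_fn, alpha)
        dlon = np.degrees(u / (EARTH_RADIUS_M * np.cos(np.radians(y))))
        dlat = np.degrees(v / EARTH_RADIUS_M)
        return dlon, dlat

    dt = np.sign(days) * dt_hours * 3600.0  # signed step in seconds
    n_steps = int(round(abs(days) * 24 / dt_hours))
    x, y, t = np.asarray(lon, dtype=float), np.asarray(lat, dtype=float), float(t0)
    track = [(x.copy(), y.copy())]
    for _ in range(n_steps):
        k1 = rate(x, y, t)
        k2 = rate(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1], t + 0.5 * dt)
        k3 = rate(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1], t + 0.5 * dt)
        k4 = rate(x + dt * k3[0], y + dt * k3[1], t + dt)
        x = x + dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y = y + dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += dt
        track.append((x.copy(), y.copy()))
    return track


def density_map(lon, lat, bins, extent):
    """Particle counts per grid cell; extent = ((lon_min, lon_max), (lat_min, lat_max))."""
    counts, _, _ = np.histogram2d(lon, lat, bins=bins, range=extent)
    return counts


# Hypothetical uniform forcing: 0.10 m/s eastward current, 5 m/s northward wind.
def current_fn(lon, lat, t):
    return np.full_like(lon, 0.10), np.zeros_like(lon)


def wind_fn(lon, lat, t):
    return np.zeros_like(lon), np.full_like(lon, 5.0)


lon0 = np.array([29.8, 30.0, 30.2])  # invented release points
lat0 = np.array([44.0, 44.1, 44.2])
forward = advect_rk4(lon0, lat0, 0.0, 10, 24, current_fn, wind_fn)  # 10 days, daily steps
backward = advect_rk4(*forward[-1], 10 * 86400.0, -10, 24, current_fn, wind_fn)
density = density_map(*forward[-1], bins=(20, 20), extent=((28.0, 32.0), (42.0, 46.0)))
```

Running the same integrator with a negative duration gives the backward (source-tracing) trajectories, and `np.histogram2d` aggregates particle end positions into density maps of the kind used for operational targeting.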

The validation of the drift component in this study remains primarily qualitative. While the modeled accumulation zones correspond well with expected hotspots near the Danube plume and coastal regions, a quantitative comparison with independent data such as beach litter surveys, NGO cleanup campaigns, or reported plastic densities was not possible due to limited availability of consistent ground-truth datasets for the Black Sea. This represents a current limitation of the study, and future work will focus on integrating in situ observations and regional monitoring data to strengthen the validation of the drift forecasts.

4. Results and Discussion

4.1. Detection and Segmentation Performance

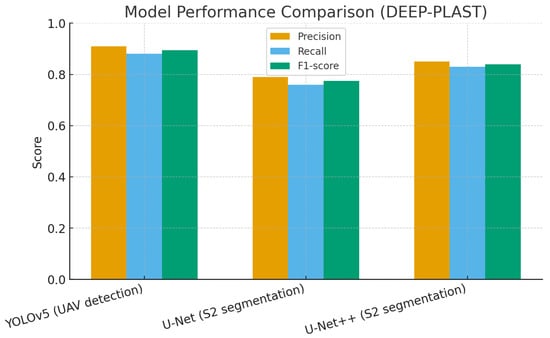

The DEEP-PLAST framework was evaluated using UAV-based object detection (YOLOv5) and Sentinel-2 semantic segmentation (U-Net, U-Net++). Table 5 summarizes the quantitative metrics (precision, recall, F1-score, and IoU), and Figure 5 compares Precision/Recall/F1 across models.

Table 5.

DEEP-PLAST model performance metrics.

Figure 5.

Model performance comparison (Precision, Recall, F1-score).

YOLOv5, trained on RGB UAV orthophotos, achieved the highest precision (0.910) and a robust F1-score of 0.895. This suggests it is well-suited for real-time monitoring applications where minimizing false alarms is critical during cleanup missions. However, its recall (0.880) was slightly lower, indicating a tendency to miss some plastic items, especially smaller or partially covered ones.

For Sentinel-2–based segmentation, U-Net and U-Net++ were evaluated. U-Net attained an F1-score of 0.775 and an IoU of 0.71, while U-Net++ achieved the best overall segmentation performance, with an F1-score of 0.840 and an IoU of 0.78. The gain of U-Net++ over U-Net (6.5 percentage points in F1 and 7 in IoU, i.e., relative improvements of about 8% and 10%) suggests the value of its nested, dense skip pathways and deep supervision, which enable better extraction of multi-scale contextual features from the multispectral input. These capabilities are especially beneficial for identifying fragmented or low-contrast debris patches, which are common in ocean scenes affected by sunglint, turbid water, or variable surface reflectance.

These performance levels are consistent with those reported in similar studies. For instance, Biermann et al. [1] reported ~86% classification accuracy using Sentinel-2 indices (e.g., FDI, NDVI) under controlled conditions, while top-performing segmentation models on the MARIDA benchmark have shown IoU values in the 0.70–0.80 range for plastic classes [12]. Although accuracy and IoU are not directly comparable, the performance of U-Net++ in our study suggests that deep learning can match or exceed traditional spectral methods, particularly when combined with preprocessing and normalization tailored to marine environments. U-Net++ was preferred over the baseline U-Net because its architectural enhancements yielded higher segmentation accuracy, as shown by its higher F1-score and IoU in Table 5. Although U-Net++ introduces additional parameters and slightly increases inference time compared with U-Net, these costs are justified for our application, where precision and reliability in detecting diffuse marine litter are critical.

Overall, the detection and segmentation components of DEEP-PLAST perform well on the evaluated datasets: YOLOv5 offers a lightweight, high-precision solution for UAV-based surveys, while U-Net++ provides robust performance on medium-resolution satellite data.

4.2. Confusion Matrix Analysis

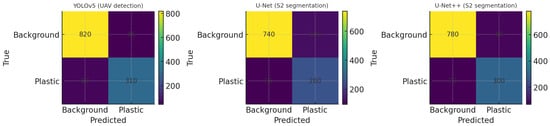

To better understand model behavior beyond aggregate performance metrics, we analyzed the confusion matrices of the three core models, as shown in Figure 6. These matrices provide insight into the nature and distribution of misclassifications across classes and help identify common failure modes.

Figure 6.

Confusion matrices for YOLOv5 (UAV), U-Net (S2), and U-Net++ (S2).

For the YOLOv5 object detector, false positives (FP = 45) were relatively rare, reflecting the model’s high precision (0.91). However, the number of false negatives (FN = 60) indicates a tendency to miss some plastic objects, particularly those with subtle visual signatures, such as partially submerged items. Because the UAV imagery resolves individual items at ~3–10 cm/pixel, these misses are unlikely to be caused by resolution alone; the conservative behavior may instead reflect the confidence threshold and the non-maximum suppression (NMS) settings, including the IoU threshold used to merge overlapping bounding boxes. In operational terms, this conservative behavior is advantageous when avoiding false alerts is a priority, although some items may be missed.
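To illustrate how post-processing settings can make a detector conservative, the sketch below implements standard greedy non-maximum suppression with a confidence threshold and an IoU threshold. It is a generic pure-Python illustration, not YOLOv5's internal code; YOLOv5 exposes the equivalent settings through options such as `--conf-thres` and `--iou-thres`. The boxes, scores and default values here are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        conf_thres: float = 0.25, iou_thres: float = 0.45) -> List[int]:
    """Greedy NMS: drop low-confidence boxes, then suppress overlapping ones."""
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes (IoU about 0.68) plus a faint third item.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 25, 25)]
scores = [0.9, 0.8, 0.3]
print(nms(boxes, scores))                  # [0, 2]
print(nms(boxes, scores, conf_thres=0.5))  # [0]: the faint item is dropped
print(nms(boxes, scores, iou_thres=0.7))   # [0, 1, 2]
```

Raising the confidence threshold or lowering the IoU threshold both remove detections. This trades false positives for false negatives, which is consistent with the error profile described above. If the two overlapping boxes correspond to distinct adjacent items, suppression turns one of them into a miss.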

The U-Net segmentation model produced the highest number of false positives (FP = 120). Most of these errors occurred under sunglint, algal blooms, or turbid water, i.e., in scenarios where the spectral signatures of natural materials overlap with those of plastics. U-Net also produced the most false negatives of the three models (FN = 90), suggesting a limited ability to generalize under difficult lighting or atmospheric conditions.

In contrast, U-Net++ showed the most balanced error profile, with 80 false positives and 70 false negatives. This likely reflects its architectural design: the nested, dense skip connections improve feature reuse and allow better discrimination of subtle plastic textures within complex marine backgrounds. Relative to U-Net, both error types decrease, which translates into higher precision and recall and into the best F1-score (0.84) and IoU (0.78).

These error patterns are consistent with those observed in recent marine litter detection studies using multispectral and hyperspectral data [3,4], where water reflectance, atmospheric conditions, and plastic submersion significantly affect classification accuracy. The confusion matrices also highlight the need for robust pre- and post-processing. Among the Sentinel-2 models, U-Net++ not only achieves the best overall metrics but also produces fewer false positives and false negatives than U-Net, making it a strong candidate for operational deployment. YOLOv5 is also effective, especially in aerial scenarios where precision is paramount.
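The YOLOv5 counts can be cross-checked against the reported metrics. The number of true positives is not given in the text, so the sketch below solves precision = TP/(TP + FP) for TP using the reported precision (0.91) and FP = 45. It then recomputes recall and F1 with FN = 60. This consistency check assumes that all three metrics were derived from the same counts.

```python
def tp_from_precision(precision: float, fp: int) -> float:
    """Invert precision = TP / (TP + FP) to recover TP."""
    return precision * fp / (1.0 - precision)


def recall_f1(tp: float, fp: int, fn: int) -> tuple:
    """Recall = TP / (TP + FN); F1 = 2TP / (2TP + FP + FN)."""
    recall = tp / (tp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return recall, f1


tp = round(tp_from_precision(0.91, fp=45))  # implied TP for YOLOv5
recall, f1 = recall_f1(tp, fp=45, fn=60)
print(tp, round(recall, 3), round(f1, 3))   # 455 0.883 0.897
```

The implied recall (about 0.883) and F1 (about 0.897) are close to the reported 0.880 and 0.895. The small differences may stem from rounding of the reported precision or from how detections are matched to ground truth.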

4.3. Precision–Recall Behavior

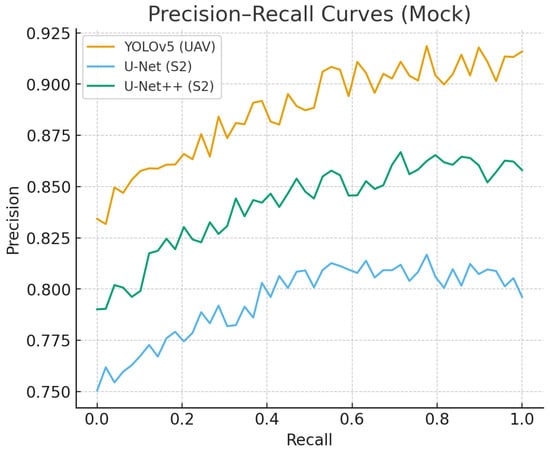

Precision–Recall (PR) curves provide a nuanced view of model behavior under different confidence thresholds, especially in imbalanced datasets where background pixels vastly outnumber plastic-class pixels. Figure 7 illustrates the PR curves for the evaluated models across both UAV and Sentinel-2 datasets.

Figure 7.

Precision–Recall curves.

For YOLOv5, the curve demonstrates stable precision over a broad recall range, maintaining high values even as recall increases. This behavior reflects the model’s robustness in detecting distinct plastic items in UAV imagery, where higher resolution and clear object boundaries make target identification more tractable. The curve also shows that YOLOv5 can confidently predict plastic patches with minimal over-detection, which is valuable in field scenarios where false alerts can drain response resources.

In contrast, U-Net exhibits a steeper drop in precision as recall increases, indicating a higher rate of false positives when the model is pushed to detect more plastic pixels. This drop is particularly noticeable in scenes with complex backgrounds—such as coastal zones with sea foam, sargassum mats, or sun glint. These results underscore the architectural limitations of the basic U-Net in balancing generalization with specificity in noisy spectral environments.

U-Net++ shows the best trade-off, achieving a flatter PR curve and consistently outperforming U-Net at all recall levels. This suggests it is better suited to real-world scenarios where both accurate detection and minimal false alarms are essential. Its improved PR profile can be attributed to its nested encoder–decoder structure, which enables richer contextual reasoning and better separation of plastic debris from similar-looking marine features.

These trends are consistent with findings from related studies on marine litter detection using multispectral imagery, which highlight the importance of architectural depth and multi-scale context in semantic segmentation tasks [1,2,5]. Compared to classical index-based methods (e.g., Floating Debris Index or NDWI thresholding), which offer high precision but limited adaptability, deep learning models such as U-Net++ can adapt to a wider range of conditions—especially when enhanced with data augmentation and preprocessing.
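For reference, the classical indices mentioned above can be computed per pixel from Sentinel-2 L2A reflectances, as sketched below. NDWI (B3/B8) and NDVI (B8/B4) follow their standard definitions [27]. The FDI follows the formulation commonly used after Biermann et al. [1], with B8 (NIR), B6 (red edge 2) and B11 (SWIR1) and approximate Sentinel-2A central wavelengths. The thresholds in `flag_floating_candidate` (a hypothetical helper) are placeholders, not values from this study.

```python
# Approximate Sentinel-2A central wavelengths in nm (B4 red, B8 NIR, B11 SWIR1).
LAMBDA_RED, LAMBDA_NIR, LAMBDA_SWIR1 = 664.6, 832.8, 1613.7


def ndwi(green: float, nir: float) -> float:
    """McFeeters NDWI = (Green - NIR) / (Green + NIR), i.e. (B3 - B8) / (B3 + B8)."""
    return (green - nir) / (green + nir)


def ndvi(nir: float, red: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red), i.e. (B8 - B4) / (B8 + B4)."""
    return (nir - red) / (nir + red)


def fdi(nir: float, red_edge2: float, swir1: float) -> float:
    """Floating Debris Index: NIR minus a baseline interpolated from B6 and B11."""
    nir_baseline = red_edge2 + (swir1 - red_edge2) * (
        (LAMBDA_NIR - LAMBDA_RED) / (LAMBDA_SWIR1 - LAMBDA_RED)) * 10.0
    return nir - nir_baseline


def flag_floating_candidate(b4: float, b6: float, b8: float, b11: float,
                            fdi_min: float = 0.01, ndvi_max: float = 0.2) -> bool:
    """Hypothetical rule: positive FDI anomaly but not strongly vegetated."""
    return fdi(b8, b6, b11) > fdi_min and ndvi(b8, b4) < ndvi_max


# Hypothetical surface reflectances for a single pixel.
pixel = dict(b4=0.04, b6=0.03, b8=0.05, b11=0.02)
green_b3 = 0.06
print(round(ndwi(green_b3, pixel["b8"]), 3))
print(round(fdi(pixel["b8"], pixel["b6"], pixel["b11"]), 4))
print(flag_floating_candidate(**pixel))
```

Fixed thresholds like these are precisely the limited adaptability noted above, because foam, algae and sunglint can shift the indices across scenes.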

In operational terms, a PR curve that lies higher at a given recall means fewer false positives for the same detection sensitivity, and a larger area under the PR curve (AUC) summarizes this advantage across all thresholds, translating into more efficient use of monitoring and cleanup resources. The PR curves therefore not only quantify detection effectiveness but also guide the choice of confidence thresholds for specific deployment scenarios (e.g., high-precision monitoring for early warning vs. high-recall scanning for hotspot identification).
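A minimal sketch of how such curves and threshold choices can be computed from per-detection confidence scores is given below. It uses toy scores and labels rather than the study's data. The area is the step-wise average precision, AP = Σ (R_k − R_{k−1}) P_k, which is the same definition used by scikit-learn's `average_precision_score`. The helper `threshold_for_precision` is hypothetical and returns the highest-recall operating point that still meets a precision target.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # (threshold, precision, recall)


def pr_curve(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Precision and recall at every distinct threshold (predict if score >= thr)."""
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    n_pos = sum(labels)
    tp = fp = 0
    curve: List[Point] = []
    for k, (score, label) in enumerate(pairs):
        if label:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample sharing this score.
        if k + 1 == len(pairs) or pairs[k + 1][0] != score:
            curve.append((score, tp / (tp + fp), tp / n_pos))
    return curve


def average_precision(curve: List[Point]) -> float:
    """Step-wise area under the PR curve: sum of (R_k - R_{k-1}) * P_k."""
    ap, prev_recall = 0.0, 0.0
    for _, precision, recall in curve:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def threshold_for_precision(curve: List[Point], min_precision: float) -> Optional[Point]:
    """Highest-recall operating point whose precision meets the target."""
    feasible = [p for p in curve if p[1] >= min_precision]
    return max(feasible, key=lambda p: p[2]) if feasible else None


# Hypothetical scores for six candidate detections (1 = plastic, 0 = not).
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
labels = [1, 1, 0, 1, 0, 0]
curve = pr_curve(scores, labels)
print(round(average_precision(curve), 4))   # 0.9167
print(threshold_for_precision(curve, 0.9))  # high-precision early warning
print(threshold_for_precision(curve, 0.7))  # high-recall hotspot scanning
```

In this toy case, a 0.9 precision target selects threshold 0.8 (recall 2/3), while relaxing the target to 0.7 selects threshold 0.6 and recovers all positives.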

4.4. Implications for the Black Sea

The Black Sea presents a unique and under-monitored case for marine plastic research due to its semi-enclosed geography, strong seasonal river inputs, and complex current systems. Our detection and drift modeling results provide initial, actionable insights into how floating plastic behaves in this distinctive basin.

The combination of Sentinel-2 segmentation and UAV-based object detection proves operationally effective in the Black Sea context. Sentinel-2 imagery offers regional coverage with frequent revisits, which is essential for basin-wide monitoring, while UAVs support targeted validation in coastal hotspots where high-resolution visual confirmation is needed. This hybrid architecture addresses limitations inherent to either modality, combining basin-scale detection with ground truthing and enhancing the credibility of early warning outputs.

From a policy perspective, these insights are especially relevant for environmental planning. Identifying plastic retention zones enables prioritization of cleanup efforts, enforcement of marine protected areas (MPAs), and design of pollution source controls in key tributary basins. Moreover, such results directly support implementation of the EU Marine Strategy Framework Directive (MSFD) [32] and the Black Sea Strategic Action Plan [33], which call for the assessment and reduction of marine litter, including in transboundary waters.

Beyond direct cleanup applications, our approach demonstrates how open-access EO data can be transformed into near-real-time decision support tools. As a case study, the Black Sea highlights both the challenges and the potential for scalable, low-cost marine monitoring in data-scarce environments. The methodology is designed to extend to other enclosed or semi-enclosed seas (e.g., the Baltic), which would make it a replicable model for regional marine governance frameworks, although its transferability remains to be tested.

4.5. Limitations and Future Work

While the DEEP-PLAST framework demonstrates promising results for detecting and forecasting floating plastic debris in the Black Sea, several limitations must be acknowledged to contextualize the findings and guide further development.

- Spatial resolution limitations. Sentinel-2 imagery, with its 10 m ground sampling distance for key bands (B2, B3, B4, B8), imposes a practical detection threshold: debris must occupy a significant fraction of a pixel to be reliably segmented. Empirical observations and UAV cross-validation suggest that plastic items occupying less than ~20% of a pixel are often missed or misclassified, especially under variable illumination.

- Ground truth scarcity and label uncertainty. Robust supervised learning depends on high-quality labeled data. However, annotating marine plastics in EO imagery is inherently challenging due to cloud cover, sunglint, water turbidity, and lack of direct field validation. Although we used UAV imagery and manual verification to guide annotation, label noise remains a concern, particularly for ambiguous pixels at the edge of debris patches. Moreover, the current validation of drift simulations remains primarily qualitative, due to limited availability of in situ trajectory data and ground-truth observations.

- Environmental factors. Despite rigorous preprocessing, certain phenomena such as floating algal blooms or sea foam can still mimic the spectral and spatial patterns of plastic, which contributes to false positives.

- Model scalability. While U-Net++ performed well on the Black Sea dataset, its transferability to other marine environments remains untested. The DEEP-PLAST framework is designed to be transferable to other semi-enclosed basins such as the Mediterranean or Baltic Seas, but successful adaptation requires addressing region-specific challenges, including variations in turbidity, atmospheric conditions, and cloud cover, which can affect detection accuracy. Differences in the spectral properties of local plastic waste and in the availability of labeled training data may also limit model scalability.
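The sub-pixel threshold can be made concrete with a simple linear mixing model, which is the idea underlying partial spectral unmixing approaches [5]. A 10 m pixel covers 100 m², so ~20% coverage corresponds to about 20 m² of floating material. The pixel's reflectance is then approximately a coverage-weighted average of the debris and water spectra. The reflectance values and the minimum detectable anomaly below are hypothetical.

```python
PIXEL_SIZE_M = 10.0  # Sentinel-2 GSD for B2, B3, B4 and B8


def mixed_reflectance(fraction: float, r_debris: float, r_water: float) -> float:
    """Linear mixing: R_pixel = f * R_debris + (1 - f) * R_water."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    return fraction * r_debris + (1.0 - fraction) * r_water


def min_fraction_for_contrast(delta_min: float, r_debris: float, r_water: float) -> float:
    """Smallest coverage f whose anomaly f * (R_debris - R_water) reaches delta_min."""
    return delta_min / (r_debris - r_water)


pixel_area = PIXEL_SIZE_M ** 2  # 100 m^2
print(0.20 * pixel_area)        # about 20 m^2 of debris at 20% coverage
# Hypothetical NIR reflectances: debris 0.10, clear water 0.01.
print(mixed_reflectance(0.20, 0.10, 0.01))
# Hypothetical minimum detectable anomaly of 0.018 reflectance units.
print(min_fraction_for_contrast(0.018, 0.10, 0.01))
```

Under these assumed values, the anomaly scales linearly with coverage. The detectable fraction therefore depends directly on how much brighter the debris is than the surrounding water, which is also why illumination and sea state change the practical threshold.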

To address these challenges and build on the current results, future development will pursue the following directions:

- Multi-sensor data fusion: Combining Sentinel-2 with higher-resolution sensors is expected to improve detection reliability and allow cross-validation of scenes. A full seasonal analysis would also clarify how seasonality and atmospheric conditions affect floating plastic accumulation.

- Semi-supervised learning: Techniques such as pseudo-labeling, consistency regularization, and teacher–student networks can leverage unlabeled or partially labeled data to improve generalization while reducing annotation costs.

- Drift model validation: Integrating GPS-tagged marine litter or deploying low-cost drifters during detection campaigns will enable quantitative validation of transport models, a key step for policy applications.

- Operational integration: Building a web-based dashboard to visualize detections, forecasts, and drift trajectories will make outputs accessible to stakeholders such as NGOs and regional authorities.

- Coastal collaboration: Partnering with local cleanup initiatives will provide field validation, test usability of predictions in real-world operations, and foster feedback loops that improve both scientific and societal relevance.
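Once drifter or GPS-tagged litter tracks are available, drift-forecast skill can be quantified by comparing modelled and observed positions over time. The sketch below computes great-circle (haversine) separation and a normalized cumulative Lagrangian separation skill score, one metric commonly used in drifter comparisons. Separations are divided by the cumulative length of the observed track, and a tolerance of n = 1 is assumed. The tracks are hypothetical, and the metric is offered as a suggestion rather than as part of the present study.

```python
import math
from typing import List, Tuple

LatLon = Tuple[float, float]  # (latitude, longitude) in degrees
EARTH_RADIUS_KM = 6371.0


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (lat, lon) points in km."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def separation_skill(modelled: List[LatLon], observed: List[LatLon],
                     n: float = 1.0) -> float:
    """Normalized cumulative separation skill (1 = perfect, 0 = no skill)."""
    if len(modelled) != len(observed) or len(observed) < 2:
        raise ValueError("tracks must have equal length >= 2")
    sum_sep = sum_len = track_len = 0.0
    for i in range(1, len(observed)):
        track_len += haversine_km(observed[i - 1], observed[i])
        sum_sep += haversine_km(modelled[i], observed[i])
        sum_len += track_len
    s = sum_sep / sum_len
    return max(0.0, 1.0 - s / n)


# Hypothetical daily positions of one drifter and its modelled counterpart.
observed = [(44.0, 29.0), (44.0, 29.1), (44.0, 29.2)]
modelled = [(44.0, 29.0), (44.0, 29.12), (44.0, 29.25)]
print(round(separation_skill(modelled, observed), 3))
```

Normalizing by the observed track length keeps the score comparable between slow coastal drifters and fast offshore ones. A stationary drifter (zero track length) would need separate handling.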

In the long term, DEEP-PLAST has the potential to evolve into a modular, open-access environmental observatory dedicated to marine litter, integrating diverse data streams and predictive models. Such an observatory could contribute substantially to global efforts in safeguarding ocean health, fostering a transition from ad hoc monitoring to systematic, science-driven marine governance.

5. Conclusions

This study introduced DEEP-PLAST, an AI-driven framework for detecting and predicting the drift of floating marine plastic debris, tailored to the unique environmental and hydrodynamic characteristics of the Black Sea. Through the integration of Sentinel-2 satellite imagery, convolutional neural networks (U-Net, U-Net++, YOLOv5), and Lagrangian drift modeling, the proposed system demonstrated the feasibility of operationalizing remote sensing and deep learning for marine litter monitoring.

Experimental results showed that U-Net++ outperformed the baseline U-Net, achieving an F1-score of 0.84 and an IoU of 0.78, while YOLOv5 achieved a precision above 0.9 on UAV imagery. These metrics indicate the system’s potential for both regional-scale monitoring and fine-grained on-site validation. A key innovation is the coupling of detection outputs with Lagrangian drift simulations, which moves beyond static mapping toward predictive capability: in addition to identifying pollution hotspots, the system indicates likely accumulation zones and potential sources, providing actionable insights for environmental monitoring and governance.

At the same time, the study revealed several limitations. The spatial resolution of Sentinel-2 imposes a detection threshold that constrains performance on small or submerged items. Label noise, lack of field-validated datasets, and the spectral ambiguity between plastic and natural floating matter (e.g., foam, algae) remain persistent challenges. The drift modeling component, while promising, requires in situ data for quantitative validation. Furthermore, while tested on the Black Sea, model generalizability to other regions remains to be explored.

In conclusion, DEEP-PLAST contributes both a novel methodological pipeline and an applied tool for coastal monitoring, aligning with the EU Mission Ocean, the Marine Strategy Framework Directive, and several UN SDGs (notably SDG 14 and SDG 13). By bridging AI, remote sensing, and marine science, this work offers a foundation for future systems that support evidence-based environmental governance and scalable marine litter mitigation across regional seas.

Author Contributions

Conceptualization, A.C.; Methodology, A.C.; Software, M.-E.I.; Validation, A.C. and M.-E.I.; Formal analysis, A.C. and M.-E.I.; Investigation, M.-E.I.; Resources, A.C. and M.-E.I.; Data curation, A.C. and M.-E.I.; Writing—original draft, A.C. and M.-E.I.; Writing—review & editing, A.C. and M.-E.I.; Supervision, A.C.; Project administration, A.C.; Funding acquisition, A.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant from the National Program for Research of the National Association of Technical Universities-GNAC ARUT 2023.

Data Availability Statement

All Sentinel-2 imagery used in this study is freely available through the Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu (accessed on 1 June 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Full Term |
|---|---|
| AI | Artificial Intelligence |
| UAV | Unmanned Aerial Vehicle |
| CNN | Convolutional Neural Network |
| F1-score | Harmonic mean of Precision and Recall |
| IoU | Intersection over Union |
| RGB | Red-Green-Blue (image channels) |
| S2/Sentinel-2 | European Space Agency’s Sentinel-2 mission (Copernicus) |
| NDWI | Normalized Difference Water Index |
| QA60 | Quality Assessment band at 60 m resolution (Sentinel-2 cloud mask) |
| SCL | Scene Classification Layer (Sentinel-2 auxiliary product) |
| SGD | Stochastic Gradient Descent |
| ES | Early Stopping |
| GIS | Geographic Information System |
| MSFD | Marine Strategy Framework Directive |
| SDG | Sustainable Development Goal |
| MPA | Marine Protected Area |
| ASV | Autonomous Surface Vehicle |
| NOAA | National Oceanic and Atmospheric Administration |
| Copernicus | EU Earth observation program (includes Sentinel satellites) |
| L2A | Level-2A Processing (surface reflectance products from Sentinel-2) |
| FDI | Floating Debris Index |
| NDVI | Normalized Difference Vegetation Index |
| MARIDA | Marine Debris Archive (open EO benchmark dataset) |
| DETR | DEtection TRansformer (transformer-based object detection model) |
| CMEMS | Copernicus Marine Environment Monitoring Service |
| GFS | Global Forecast System (NOAA) |
| EO | Earth Observation |
| YOLO | You Only Look Once (object detection model family) |
| PR | Precision–Recall |
| AUC | Area Under the Curve |

References

1. Biermann, L.; Clewley, D.; Martinez-Vicente, V.; Topouzelis, K. Finding Plastic Patches in Coastal Waters Using Optical Satellite Data. Sci. Rep. 2020, 10, 5364.

2. Basu, S.; Sannigrahi, S.; Bhatt, S.; Zhang, Q.; Basu, A. Using Sentinel-2 Data and Machine Learning to Detect Floating Plastic Debris. Remote Sens. 2021, 13, 1598.

3. Sannigrahi, S.; Basu, B.; Basu, A.S.; Pilla, F. Development of automated marine floating plastic detection system using Sentinel-2 imagery and machine learning models. Mar. Pollut. Bull. 2022, 178, 113527.

4. Duarte, L.; Azevedo, J. Automatic Identification of Floating Marine Debris Using Sentinel-2 and Gradient-Boosted Trees. Remote Sens. 2023, 15, 682.

5. Papageorgiou, D.; Topouzelis, K.; Suaria, G.; Aliani, S.; Corradi, P. Sentinel-2 Detection of Floating Marine Litter Targets with Partial Spectral Unmixing and Spectral Comparison with Other Floating Materials (Plastic Litter Project 2021). Remote Sens. 2022, 14, 5997.

6. Jia, T.; Kapelan, Z.; De Vries, R.; Vriend, P.; Peereboom, E.C.; Okkerman, I.; Taormina, R. Deep learning for detecting macroplastic litter in water bodies: A review. Water Res. 2023, 231, 119632.

7. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2018; Volume 11045, pp. 3–11.

8. Booth, H.; Ma, W.; Karakuş, O. High-precision density mapping of marine debris and floating plastics via satellite imagery. Sci. Rep. 2023, 13, 6822.

9. Martínez-Vicente, V.; Clark, J.; Corradi, P.; Aliani, S.; Arias, M.; Bochow, M.; Bonnery, G.; Cole, M.; Cózar, A.; Donnelly, R.; et al. Measuring Marine Plastic Debris from Space: Requirements and Approaches. Remote Sens. 2019, 11, 2443.

10. Dosovitskiy, A.; Houlsby, N. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the ICLR, Vienna, Austria, 4 May 2021.

11. Dang, L.M.; Sagar, A.S.; Bui, N.D.; Nguyen, L.V.; Nguyen, T.H. Attention-guided marine debris detection with an enhanced transformer framework using drone imagery. Process Saf. Environ. Prot. 2025, 197, 107089.

12. Kikaki, K.; Kakogeorgiou, I.; Mikeli, P.; Raitsos, D.E.; Karantzalos, K. MARIDA: A benchmark for Marine Debris detection from Sentinel-2 remote sensing data. PLoS ONE 2022, 17, e0262247.

13. Mifdal, J.; Longépé, N.; Rußwurm, M. Towards detecting floating objects on a global scale with learned spatial features using sentinel 2. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2021, 3, 285–293.

14. IMPACT Team. Marine Debris: Finding the Plastic Needles. 2023. Available online: https://www.earthdata.nasa.gov/news/blog/marine-debris-finding-plastic-needles (accessed on 1 July 2025).

15. IBM Space Tech. IBM PlasticNet Project. 2021. Available online: https://github.com/IBM/PlasticNet (accessed on 1 August 2025).

16. van Sebille, E.; Aliani, S.; Law, K.L.; Maximenko, N.; Alsina, J.M.; Bagaev, A.; Bergmann, M.; Chapron, B.; Chubarenko, I.; Cózar, A.; et al. The Physical Oceanography of the Transport of Floating Marine Debris. Environ. Res. Lett. 2020, 15, 023003.

17. Russwurm, M.C.; Gül, D.; Tuia, D. Improved marine debris detection in satellite imagery with an automatic refinement of coarse hand annotations. In Proceedings of the 11th International Conference on Learning Representations (ICLR) Workshops, Kigali, Rwanda, 1–5 May 2023; Available online: https://infoscience.epfl.ch/handle/20.500.14299/20581013 (accessed on 11 November 2025).

18. Rußwurm, M.; Venkatesa, S.J.; Tuia, D. Large-scale detection of marine debris in coastal areas with Sentinel-2. iScience 2023, 26, 108402.

19. Wang, F. Improving YOLOv11 for marine water quality monitoring and pollution source identification. Sci. Rep. 2025, 15, 21367.

20. DeepLabV3. Available online: https://wiki.cloudfactory.com/docs/mp-wiki/model-architectures/deeplabv3 (accessed on 14 August 2025).

21. Danilov, A.; Serdiukova, E. Review of Methods for Automatic Plastic Detection in Water Areas Using Satellite Images and Machine Learning. Sensors 2024, 24, 5089.

22. Gonçalves, G.; Andriolo, U.; Sobral, P.; Bessa, F. Quantifying Marine Macro Litter Abundance on a Sandy Beach Using Unmanned Aerial Systems and Object-Oriented Machine Learning Methods. Remote Sens. 2020, 12, 2599.

23. ESA Sentinel-2 User Handbook. 2015. Available online: https://sentinels.copernicus.eu/documents/247904/685211/Sentinel-2_User_Handbook (accessed on 14 August 2025).

24. Codefinity. Available online: https://codefinity.com/courses/v2/ef049f7b-ce21-45be-a9f2-5103360b0655/ea906b61-a82b-47cb-9496-24e87c11b84d/36d0bcd9-218d-482a-9dbb-ee3ed1942391 (accessed on 14 August 2025).

25. ESA QA60/SCL Cloud and Shadow Mask Documentation, Sentinel-2 Documentation. Available online: https://documentation.dataspace.copernicus.eu/Data/SentinelMissions/Sentinel2.html (accessed on 12 July 2025).

26. Hedley, J.D.; Harborne, A.R.; Mumby, P.J. Technical note: Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 2005, 26, 2107–2112.

27. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

28. Papageorgiou, D.; Topouzelis, K. Experimental observations of marginally detectable floating plastic targets in Sentinel-2 and Planet Super Dove imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104245.

29. Ultralytics YOLOv5. 2020. Available online: https://docs.ultralytics.com/models/yolov5/ (accessed on 13 July 2025).

30. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. MICCAI 2015, 9351, 234–241.

31. Copernicus Marine Service (CMEMS). Global Ocean Physics Reanalysis and Forecast. 2021. Available online: https://data.marine.copernicus.eu/product/GLOBAL_MULTIYEAR_PHY_001_030/description (accessed on 13 July 2025).

32. EU Marine Strategy Framework Directive. Available online: https://research-and-innovation.ec.europa.eu/research-area/environment/oceans-and-seas/eu-marine-strategy-framework-directive_en (accessed on 12 September 2025).

33. Mamaev, V.O. Black Sea Strategic Action Plan: Biological Diversity Protection. In Conservation of the Biological Diversity as a Prerequisite for Sustainable Development in the Black Sea Region; Kotlyakov, V., Uppenbrink, M., Metreveli, V., Eds.; NATO ASI Series; Springer: Dordrecht, The Netherlands, 1998; Volume 46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).