Abstract

This article investigates the revolutionary potential of AI-powered virtual assistants in augmented reality (AR) and virtual reality (VR) environments, concentrating primarily on their impact on special needs schooling. We investigate the complex characteristics of these virtual assistants, the influential elements affecting their development and implementation, and the joint efforts of educational institutions and technology developers, using a rigorous quantitative approach. Our research also looks at strategic initiatives aimed at effectively integrating AI into educational practices, addressing critical issues including infrastructure, teacher preparedness, equitable access, and ethical considerations. Our findings highlight the promise of AI technology, emphasizing the ability of AI-powered virtual assistants to provide individualized, immersive learning experiences adapted to the different needs of students with special needs. Furthermore, we find strong relationships between these virtual assistants’ features and deployment tactics and their subsequent impact on educational achievements. This study contributes to the increasing conversation on harnessing cutting-edge technology to improve educational results for all learners by synthesizing current research and employing a strong methodological framework. Our analysis not only highlights the promise of AI in increasing student engagement and comprehension but also emphasizes the importance of tackling ethical and infrastructure concerns to enable responsible and fair adoption.

1. Introduction

Artificial intelligence (AI) is transforming the landscape of immersive educational systems, particularly for children with special needs. In the context of augmented and virtual reality (AR/VR), AI-powered systems, especially intelligent virtual assistants, create new opportunities for personalized, adaptable, and inclusive learning. These technologies offer real-time feedback, emotion-aware interactions, and multimodal material delivery, catering to a wide range of learning styles [,]. The use of AI in education has shifted educational approaches away from traditional didactic models and toward more student-centred, interactive learning environments. This transition is especially significant for learners with special needs, since AI-powered solutions can address attention, communication, and sensory processing difficulties through personalized educational strategies [,]. In AR/VR classrooms, AI assistants can help students stay engaged, provide targeted reminders, and enhance learning autonomy while measuring progress using biometric and behavioral markers [,]. Despite increased interest, there is a lack of detailed reviews on how AI virtual assistants work within AR/VR systems, particularly for special education. Existing literature frequently focuses on either general AI applications in education or the use of AR/VR for engagement, with little attention paid to how they interact in inclusive learning situations. This research aims to fill that gap by providing a focused and systematic evaluation of the function, effectiveness, and limitations of AI-powered virtual assistants in immersive educational environments for students with special needs [,].

In this study, we aim to add to the current body of knowledge by critically assessing the revolutionary potential of AI-powered virtual assistants in AR and VR environments for special education. We use a systematic methodology to investigate the design, deployment, and usefulness of these tools, as well as important implementation problems, such as infrastructure, educator preparedness, ethical considerations, and equitable access. This study aims to help educators, policymakers, and technology developers make informed decisions that improve accessibility and learning outcomes for all children.

Research Objectives

To thoroughly investigate the revolutionary potential of AI-powered virtual assistants incorporated into AR/VR environments for special needs schooling, this study establishes specific research objectives that are consistent with its systematic review framework. The major goal is to assess how intelligent virtual agents can improve learning engagement, support tailored education plans, and promote inclusive learning experiences for children with special needs. This study aims to identify gaps, assess effectiveness, and provide insights into the deployment of AI assistants in immersive educational settings by analyzing and synthesizing previous research.

Specific objectives are outlined below:

- To investigate the impact of AI-powered virtual assistants in promoting individualized learning and improving educational outcomes for children with special needs in AR/VR settings.

- To identify and assess virtual assistants’ teaching strategies and interaction models for promoting attention, engagement, and cognitive growth.

- To examine the use of eye-tracking, speech recognition, and gesture-based interaction with virtual assistants in immersive educational environments.

- To investigate the types of learning tasks and material delivery strategies in which virtual assistants provide measurable benefits in special needs education.

- To evaluate the methodological quality and empirical evidence of previous studies using AI virtual assistants in AR/VR-based special education settings.

- To explore the challenges and limitations related to accessibility, usability, and adaptability of virtual assistants for diverse learner profiles within special education settings.

2. Related Work

A thorough literature search was conducted across major academic databases such as Scopus, Web of Science, ProQuest, and ScienceDirect. The search approach made use of a structured combination of keywords, including “artificial intelligence”, “augmented reality”, “virtual reality”, and “special needs education”, as well as synonyms. The query was refined using Boolean operators, such as: (“artificial intelligence” OR “AI-driven virtual assistants”) AND (“augmented reality” OR “virtual reality”) AND “special needs education”. Studies that were not in English or did not explicitly address the use of AI in AR/VR educational contexts were removed.
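The Boolean query described above can be assembled programmatically, which makes the search string easier to reproduce across databases. The following is a minimal sketch; the helper name `build_query` and the grouping of terms are illustrative assumptions, and each database may require its own field tags or syntax adjustments.

```python
# Hypothetical helper (not from the study): builds a Boolean search string
# by OR-ing synonyms inside each group and AND-ing the groups together.
def build_query(*groups: list[str]) -> str:
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]
        clause = " OR ".join(quoted)
        # Parenthesize only groups that contain more than one term.
        clauses.append(f"({clause})" if len(quoted) > 1 else clause)
    return " AND ".join(clauses)


query = build_query(
    ["artificial intelligence", "AI-driven virtual assistants"],
    ["augmented reality", "virtual reality"],
    ["special needs education"],
)
print(query)
```

Keeping the synonym groups as data rather than a hand-typed string makes it straightforward to add further synonyms without breaking the parenthesization.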

The examined literature demonstrates a growing academic and practical interest in integrating AI-powered virtual assistants into immersive AR/VR environments to promote tailored learning for children with special needs. The functional capabilities of AI-powered virtual assistants (independent variables) have been found to have a considerable impact on critical educational outcomes (dependent variables), such as student engagement and comprehension. Recent studies have emphasized both the development and deployment of these technologies, which are being driven by significant breakthroughs in artificial intelligence and an increased desire for personalized educational solutions. Furthermore, collaboration between educational institutions and technology developers is crucial to furthering inclusive education through innovative digital technologies.

To properly situate these technological breakthroughs within established pedagogical frameworks, it is critical to note that their effective implementation frequently correlates with well-known educational ideas such as Universal Design for Learning (UDL) and constructivist approaches. These frameworks prioritize flexible, inclusive, and student-centered learning environments, which are naturally supported by the adaptable and immersive nature of AR, VR, and AI-powered virtual assistants. Incorporating these ideas provides a pedagogical framework for improving the design and implementation of these technologies, ensuring that they satisfy various learner needs while also promoting meaningful engagement and learning.

Several repeated themes emerged from the investigation, highlighting AI’s revolutionary potential and immersive technologies’ ability to improve learning experiences. These findings suggest a viable path for the continuing development and incorporation of such tools into educational settings. Furthermore, the literature underlines the importance of carrying out more empirical studies to determine the measurable benefit of these interventions, noting existing research gaps and proposing opportunities for future scholarly inquiry.

2.1. Enhanced Educational Resources

The integration of AI-powered virtual assistants into AR and VR settings has resulted in the creation of enhanced educational resources specifically geared to assisting children with special needs. These intelligent technologies provide highly interactive and immersive learning experiences that effectively address a wide range of cognitive and sensory learning preferences. As a result, they have been found to greatly improve student engagement and comprehension, which are the study’s key dependent variables (DVs). Popular AI virtual assistants, such as Amazon Alexa, Apple Siri, and Microsoft Cortana, have been adapted for use in educational AR/VR platforms, providing specialized instructional content and responsive guidance. These assistants support a variety of educational activities, such as virtual environment navigation, speech-to-text assistance for language acquisition, and adaptive feedback, features that are especially beneficial to learners with impairments [,]. Contemporary instructional frameworks, such as the Technological Pedagogical Content Knowledge (TPACK) framework, are being gradually modified to incorporate the unique capabilities of AR and VR technology. These adaptations assist educators in developing pedagogical strategies that leverage the capabilities of artificial intelligence while also meeting the tailored learning demands of children with special needs [,]. Wearable technology, such as smartwatches and smart glasses, is increasingly being used to track students’ health measurements and learning progress in an ever-changing educational context. Devices such as VR headsets play an important role in translating abstract concepts into concrete settings, experiences, and learning opportunities, providing crucial support for learners with sensory or cognitive impairments [,]. Recent studies have investigated novel approaches to improving the robustness and privacy of AI systems in educational environments.
For example, the paper RLFL: A Reinforcement Learning Aggregation Approach for Hybrid Federated Learning Systems Using Full and Ternary Precision proposes novel reinforcement learning strategies for aggregating models in federated systems, opening new avenues for secure and efficient distributed AI applications []. Furthermore, Exploring the Impact of AI-Driven Virtual Assistants in AR and VR Environments for Special Needs Education: A Quantitative Analysis presents empirical data on the effectiveness of virtual assistants in immersive environments, providing valuable insights into their role in inclusive education as well as methodological frameworks for future research []. These works significantly contribute to our understanding of recent technological advances and serve as relevant benchmarks for developing sophisticated, privacy-preserving AI tools in educational contexts.

The application of enhanced educational resources in AR and VR environments also includes the development of virtual apprenticeship programs, which allow students with special needs to obtain practical skills in a safe, adaptive learning environment. This is consistent with the identified independent variables (IVs) relating to the design features and adoption of AI-enabled educational tools []. By adapting these resources to the unique needs of special education, a more comprehensive and inclusive learning experience may be achieved, one that not only improves academic performance but also addresses skill development and future readiness. This focused integration emphasizes AI’s revolutionary role in creating an inclusive educational ecosystem in which technological innovation and pedagogical practices converge to successfully assist different learners.

2.2. Intelligent Tutoring Systems for Special Education

Intelligent Tutoring Systems (ITSs), when embedded in AR and VR environments, are increasingly being tailored to address the unique needs of children with special needs. These systems are useful for providing tailored education and real-time feedback, frequently without the requirement for ongoing human instructor intervention, which is an important independent variable (IV) in this study []. Their designs often include four main components:

- The Domain Model serves as the system’s basic knowledge base, allowing for the delivery of content that is specially customized to the diverse learning profiles of students with special educational needs.

- The Student Model maintains a dynamic representation of the learner’s current knowledge, abilities, and preferences, which guides the customization of instructional content and tactics.

- The Tutoring Model uses pedagogical methods that adapt to the learner’s pace and style, hence affecting the dependent variable (DV) of individualized learning results.

- The User Interface Model governs the system’s interaction with learners in AR and VR situations, offering an accessible and engaging interface that meets different cognitive and sensory needs.
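The four components listed above can be sketched as cooperating classes. The following is an illustrative toy under stated assumptions, not the architecture of any system cited here: the class fields, the mastery update rule, and the difficulty thresholds are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class DomainModel:
    # Knowledge base: topic -> content variants keyed by difficulty (1 = easiest).
    content: dict[str, dict[int, str]]


@dataclass
class StudentModel:
    # Dynamic learner state: estimated mastery per topic in [0, 1]
    # plus a preferred presentation modality.
    mastery: dict[str, float] = field(default_factory=dict)
    modality: str = "audio"

    def update(self, topic: str, correct: bool, rate: float = 0.3) -> None:
        # Assumed rule: move the estimate toward 1 (correct) or 0 (incorrect).
        old = self.mastery.get(topic, 0.5)
        target = 1.0 if correct else 0.0
        self.mastery[topic] = old + rate * (target - old)


class TutoringModel:
    # Pedagogical policy: choose difficulty from estimated mastery
    # (the 0.4 and 0.75 cut-offs are arbitrary illustration values).
    def pick_level(self, student: StudentModel, topic: str) -> int:
        m = student.mastery.get(topic, 0.5)
        return 1 if m < 0.4 else 2 if m < 0.75 else 3


class UserInterfaceModel:
    # Presentation layer: tags content with the learner's preferred modality.
    def render(self, text: str, student: StudentModel) -> str:
        return f"[{student.modality}] {text}"


def next_activity(domain: DomainModel, student: StudentModel,
                  tutor: TutoringModel, ui: UserInterfaceModel, topic: str) -> str:
    level = tutor.pick_level(student, topic)
    return ui.render(domain.content[topic][level], student)


domain = DomainModel({"counting": {1: "Count 3 apples", 2: "Count 7 apples", 3: "Count 12 apples"}})
student = StudentModel(modality="visual")
tutor, ui = TutoringModel(), UserInterfaceModel()

first = next_activity(domain, student, tutor, ui, "counting")
student.update("counting", correct=True)
student.update("counting", correct=True)
second = next_activity(domain, student, tutor, ui, "counting")
```

Separating the learner state from the pedagogical policy means either can be replaced independently, for example swapping the simple update rule for a richer learner model without touching the interface layer.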

Beyond structural design, ITSs are intended to imitate human-like educational experiences. AI-powered tutors in these systems can participate in responsive dialogue and provide emotionally sensitive feedback, meeting both the academic and emotional needs of students [,]. These systems frequently include multimedia components, providing varied and interactive content that boosts motivation and engagement, especially for students who require multimodal learning stimuli. Platforms like AutoTutor demonstrate how ITSs can incorporate different instructional tools and teaching methodologies, making them particularly useful in domains that require adaptive learning approaches [,]. Such functionality is critical in special education settings, where pupils frequently benefit from hands-on and participatory experiences that traditional techniques may not offer.

While ITSs provide for a high degree of autonomy in learning, they are not designed to replace the human aspect in education. The role of educators is still critical in curriculum design, emotional support, and context-sensitive learning. As a result, ITSs work best when employed as part of a blended learning approach that combines automated assistance with human facilitation to improve learning outcomes [,].

2.3. Educational Virtual Environments

Virtual Learning Environments (VLEs) provide the underlying infrastructure for delivering digitally mediated instruction in educational contexts, especially when combined with immersive technologies like AR and VR. These platforms enable a variety of instructional modalities, such as synchronous learning (real-time virtual lectures) and asynchronous learning (pre-recorded information for flexible, self-paced study). VLEs also support hybrid learning models, which combine digital distribution with in-person classroom engagement—a particularly useful strategy for courses that require practical, hands-on components [].

The introduction of AI into VLEs considerably improves adaptability and responsiveness. AI-powered analytics monitor student performance and behavioral trends to personalize educational content and delivery methods to meet the unique learning demands of students with special needs. This level of personalization is critical to promoting self-directed learning and corresponds to one of the study’s primary dependent variables (DVs), individualized academic support [].

Furthermore, AI-powered automation within VLEs, such as auto-grading and performance tracking, relieves educators of tedious administrative work, allowing them to focus on mentorship, curriculum refinement, and pedagogical innovation []. Open-source platforms such as Moodle LMS demonstrate the versatility of modern VLEs, allowing for the creation of personalized, accessible learning experiences. Parallel to this, technologies like Virtual Science Laboratories highlight the interactive and exploratory aspects of VLEs, allowing students to conduct hands-on experiments in a virtual environment [].

Importantly, AI technologies within VLEs do not operate independently. They can be seamlessly connected with other intelligent systems, improving learning outcomes. For example, the integration of intelligent tutoring systems (ITSs) into VLEs enables the delivery of adaptive training and real-time evaluations, which are supported by dynamic feedback mechanisms. Furthermore, incorporating chatbots into these environments allows learners to practice crucial skills—such as language acquisition and conversational interaction—in a low-pressure context, boosting engagement and autonomy in learning [].

2.4. Challenges in Integrating AI for Special Needs Education in AR and VR

The integration of artificial intelligence into augmented and virtual reality environments for special needs education involves several unique obstacles. A strong technological infrastructure is required to properly leverage AI’s capabilities in delivering adaptive learning environments and intelligent tutoring systems that provide automated evaluation and individualized feedback. However, the absence of such infrastructure, particularly in underserved areas, has the potential to worsen existing educational disparities [].

Teacher preparedness is crucial; educators must not only have the technical skills to use AI-enabled AR/VR tools, but also the pedagogical knowledge to properly integrate new technologies into their educational methods []. The massive amount of data generated by AI systems necessitates thorough filtering and validation to ensure that only high-quality data directs instructional decisions, protecting the integrity of the learning process [].

Equitable access remains a major concern, since socioeconomic discrepancies might limit students’ access to critical technology and software, exacerbating existing educational disadvantages []. Ethical considerations, such as data privacy safeguards and transparency of AI decision-making procedures, are especially important given the sensitivity of student information in these systems [].

3. Research Questions

The major research questions driving this study concern the impact, development, and progression of AI-powered virtual assistants in augmented and virtual reality environments, specifically tailored to support special needs schooling. These questions seek to elucidate both the technological advancements and educational outcomes associated with the use of these AI tools.

RQ1: What are the distinguishing characteristics of AI-powered virtual assistants used in AR and VR platforms for special education?

RQ2: How were these AI-powered virtual assistants designed for AR and VR contexts, and what variables have influenced their uptake in special needs educational settings?

RQ3: What essential factors contributed to the effectiveness of AI-powered virtual assistants in boosting educational results for students with special needs, and what problems did they face throughout implementation?

RQ4: How have AI-powered virtual assistants impacted the learning experience in AR and VR environments for special needs education, and what trends are expected in their future evolution?

RQ5: How have educational institutions and technology developers influenced the design, implementation, and integration of AI-powered virtual assistants in augmented and virtual reality for special education? What major accomplishments and problems have resulted from these collaborations?

RQ6: What specific policies, programs, or tactics have educational institutions or developers implemented to encourage the successful use of AI-powered virtual assistants inside AR and VR settings to improve learning outcomes for children with special needs?

4. Conceptual Framework and Research Hypothesis

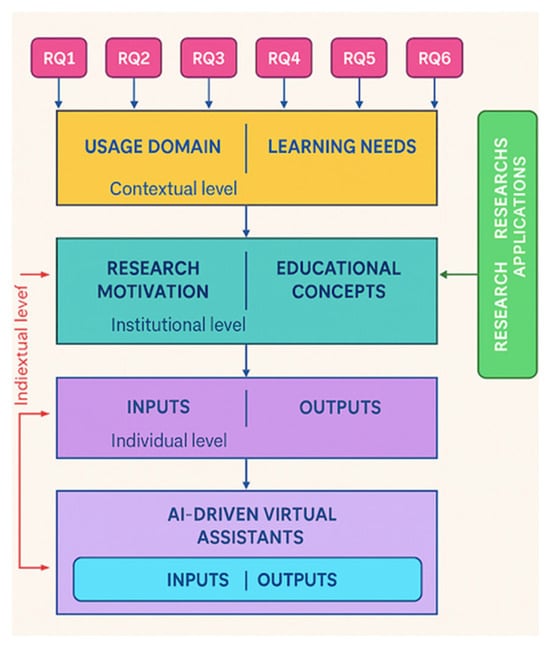

Figure 1 depicts the conceptual framework for this study, demonstrating the interrelationships between the main elements driving the development and use of AI-powered virtual assistants in education, particularly for special needs students. The framework incorporates three hierarchical levels—contextual, institutional, and individual—to describe the multifaceted nature of educational innovation.

Figure 1.

Conceptual Framework.

At the Contextual level, Usage Domain and Learning Needs are defined, which are directly influenced by the study’s research questions (RQ1–6). These represent both the general educational environment and the unique issues or requirements that motivate the research. The Institutional level focuses on Research Motivation and Educational Concepts, which reflect the educational ideas and institutional forces that guide the creation of AI-powered systems. This layer connects systemic demands with individual-level applications. At the individual level, the framework emphasizes inputs (e.g., student characteristics, learning preferences) and outputs (e.g., expected learning outcomes), highlighting the study’s learner-centered approach. All these layers converge on the deployment of AI-powered Virtual Assistants, which serve as the research’s technological core, generating Research Outcomes that can be used in future applications. The framework highlights how the interaction of research topics, educational needs, institutional motives, and individual contributions influences the design and anticipated impact of AI-driven solutions in educational settings. This structured visualization demonstrates the alignment of research objectives, methodological approach, and the practical application of educational AI technology.

The diagram’s major element is the use of AI technology, which represents the creation and integration of virtual assistants tailored to satisfy a variety of educational demands. Concentric rings surround this core, representing the layers’ effect. The key arrows represent bidirectional influences: societal attitudes can influence institutional policies, which in turn affect individual experiences, and feedback from individual learners can inform institutional adjustments and societal perceptions, capturing the iterative and dynamic nature of these interactions. This detailed graphic emphasizes the ecosystem context, emphasizing the interconnectivity of numerous stakeholders, including students, educators, policymakers, and society, in shaping the deployment, adoption, and educational impact of AI assistants in immersive learning settings. Overall, the framework provides a comprehensive view of how these components interact to assist or impede the effective integration of AI in special needs education.

Drawing on the research questions about the impact of AI-powered virtual assistants in AR and VR environments on special needs schooling, this study proposes potential independent and dependent variables to guide a quantitative analytical approach. This framework will aid in the development of relevant hypotheses.

4.1. Independent Variables (IVs)

The following independent variables have been identified as significant contributors to the integration and success of AI-driven virtual assistants within AR and VR environments for special needs education:

- Characteristics of AI-Powered Virtual Assistants–This variable includes the key features, functional capabilities, and design standards of AI virtual assistants used in immersive learning settings.

- Development and Implementation Drivers–Describes the motivating factors behind the creation and implementation of AI-powered virtual assistants, such as technological advancements, changing pedagogical expectations, and the availability of institutional or external funding.

- Contributions from Educational Institutions and Technology Developers–Describes the level of involvement and influence that academic institutions and industry developers had in the design, implementation, and integration of these intelligent systems within AR/VR-based instructional frameworks.

- Institutional Strategies and Initiatives–These are the specific policies, initiatives, or programs established by stakeholders to support and sustain the usage of AI-powered virtual assistants in AR and VR platforms designed for special education situations.

4.2. Dependent Variables (DVs)

The following dependent variables have been identified to analyze the impact of AI-powered virtual assistants within AR and VR settings for special needs education:

- Improved Educational Outcomes for Students with Special Needs–Quantifiable indicators include greater student engagement, comprehension, skill development, and overall academic achievement.

- Transformation of the Learning Experience–Observable changes in the structure of the learning environment, dynamics of student-teacher interaction, and instructional techniques caused by the incorporation of AI-powered virtual assistants.

- Progress of AI-Powered Virtual Assistant Applications in Education–Captures the direction and rate of improvement in the educational application of AI virtual assistants across time, incorporating both technology growth and pedagogical adaptation.

4.3. Hypotheses

Using the previously established independent and dependent variables, the following hypotheses have been developed to address the primary research questions. These hypotheses serve as a systematic platform for conducting quantitative analyses on the impact and implementation of AI-driven virtual assistants in AR and VR environments for special needs education.

H1: The enhanced features and capabilities of AI-powered virtual assistants in AR and VR settings have a statistically significant favorable impact on educational results for children with special needs.

H2: Technological improvements, pedagogical demands, and financial availability all have a favorable impact on the effective development and implementation of AI-powered virtual assistants in special needs educational settings.

H3: The extent to which implementation issues are identified and resolved has a substantial impact on the success of AI-powered virtual assistants in improving special education.

H4: Integrating AI-driven virtual assistants into AR and VR settings results in a transformative learning experience for students with special needs, and the evolution of these technologies is expected to accelerate over time.

H5: The active cooperation of educational institutions and technology developers is vital to the successful integration and continual improvement of AI-powered virtual assistants for special needs education.

H6: Targeted initiatives and strategic interventions conducted by educational institutions or developers greatly contribute to the successful implementation of AI-driven virtual assistants in AR and VR platforms, resulting in improved learning outcomes for students with special needs.

These hypotheses seek to empirically examine the links between technological advancement, implementation tactics, and educational impact, thereby contributing to a data-driven understanding of AI’s role in inclusive, immersive education.

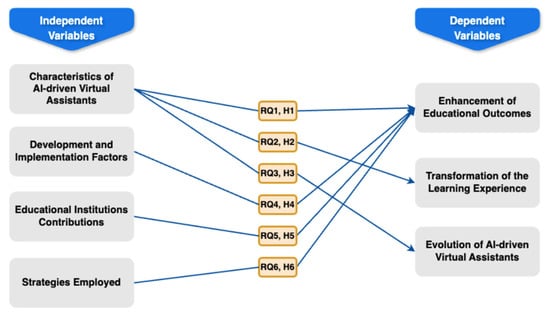

4.4. Hypotheses and Variables Relationships

The hypotheses depicted in Figure 2 are intended to systematically investigate the relationships between the identified IVs and DVs, with a particular emphasis on the overall impact of AI-powered virtual assistants within AR and VR learning environments tailored for students with special needs. This approach provides a solid platform for quantitative research by describing the intricate relationships between technical features, institutional policies, and educational outcomes. It promotes a thorough grasp of how AI-powered virtual assistants help to change special education through immersive and intelligent learning experiences.

Figure 2.

Hypotheses and Variables Relationship.

Practical Applications of Hypotheses

To reinforce the empirical foundation of our hypotheses, we mapped them to specific real-world implementations or literature-based case studies. This ensures theoretical consistency and practical relevance for policymakers, educators, and technologists.

Table 1 maps literature-based practical examples to the hypotheses. Real-world examples and literature-supported case studies are aligned with each of the six hypotheses to demonstrate the practical application of AI-powered virtual assistants in AR/VR environments for special needs education. These examples contribute to the empirical robustness and real-world usefulness of the proposed conceptual framework.

Table 1.

Literature-Based Practical Examples Mapped to Hypotheses.

5. Methodology

This study uses a mixed-methods research approach based on a positivist paradigm to systematically assess the usefulness of AI-powered virtual assistants in AR and VR environments for special needs schooling. To ensure that the conclusions are robust and valid, the methodology includes a full literature analysis, quantitative surveys, and additional qualitative insights.

5.1. Literature Synthesis

A systematic review of academic literature, including peer-reviewed empirical research, technical reports, case studies, and industry publications, was carried out using databases such as Scopus, Web of Science, and ScienceDirect. The keywords were “AI virtual assistants,” “AR,” “VR,” and “special needs education”. The review adhered to PRISMA principles to ensure transparency and reproducibility, identifying major themes, technological characteristics, deployment tactics, and prior evaluation measures.

5.2. Hypothesis Development

Based on the literature, explicit hypotheses were developed to investigate the links between independent variables (e.g., AI virtual assistant characteristics, implementation tactics) and dependent variables (e.g., student engagement, learning outcomes). These hypotheses form the foundation for the quantitative study that follows.

5.3. Data Collection

5.3.1. Primary Data from Surveys

A structured questionnaire was constructed, which used validated measures when available, to evaluate the following:

- Participant demographics (educational background and professional experience).

- Participants’ familiarity and experience with AI applications in AR/VR.

- Perceptions of how AI virtual assistants affect learning results.

- Challenges and facilitators in implementation.

The survey measured four key constructs using a 5-point Likert scale (1 = Strongly Disagree to 5 = Strongly Agree):

- Usability–e.g., “The virtual assistant interface is easy to navigate in the AR/VR learning environment”.

- Engagement–e.g., “The use of AI virtual assistants helps maintain student attention during learning activities”.

- Perceived Effectiveness–e.g., “AI virtual assistants contribute positively to academic performance in special needs contexts”.

- Accessibility–e.g., “The system accommodates various disabilities effectively (e.g., speech, motor, visual impairments)”.

These items were deliberately crafted to assess various but connected components of the user experience, rather than direct agreement with assumptions. Each item related to one of the latent constructs studied in the structural equation model (SEM).
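As an illustration of how Likert items can be rolled up into construct-level scores, the sketch below averages each respondent's answers within a construct. The item identifiers (`U1`, `E1`, and so on), the number of items per construct, and the example responses are assumptions made for the example; the actual questionnaire items are not reproduced here.

```python
from statistics import mean

# Hypothetical item-to-construct map; item IDs are illustrative only.
ITEM_MAP: dict[str, list[str]] = {
    "Usability": ["U1", "U2"],
    "Engagement": ["E1", "E2"],
    "Perceived Effectiveness": ["P1", "P2"],
    "Accessibility": ["A1", "A2"],
}


def construct_scores(response: dict[str, int]) -> dict[str, float]:
    """Average one respondent's 1-5 Likert answers within each construct."""
    for item, value in response.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{item}: {value} is outside the 1-5 Likert range")
    return {
        construct: mean(response[item] for item in items)
        for construct, items in ITEM_MAP.items()
    }


# Made-up answers from a single respondent.
example = {"U1": 4, "U2": 5, "E1": 3, "E2": 4, "P1": 5, "P2": 5, "A1": 2, "A2": 3}
scores = construct_scores(example)
```

In a full SEM the constructs are modeled as latent variables rather than simple means; averaged scores of this kind are mainly useful for descriptive summaries and preliminary checks.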

Cronbach’s alpha values were computed for each construct to determine internal consistency. Although certain constructs had high Cronbach’s alpha values (>0.9), additional item–total correlation analysis indicated that the items were not redundant, but rather closely connected by design, in line with known scales in educational technology research. The high reliability values demonstrate the consistency of participants’ replies across conceptually related questions.
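For reference, Cronbach's alpha for a construct with k items is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the respondents' total scores. A minimal sketch of that calculation follows; the response data are made up for illustration and are not taken from the survey.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """items[i][j] is respondent j's answer to item i of a single construct."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score per respondent across all items of the construct.
    totals = [sum(answers) for answers in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Made-up responses: three items answered by five respondents.
usability_items = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 2, 4],
]
alpha = cronbach_alpha(usability_items)
```

Because alpha rises as items covary more strongly, very high values can indicate either a tightly defined construct or overlapping items, which is why item-total correlations are usually inspected alongside it.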

These constructs were developed based on prior instruments from inclusive technology research and refined through expert validation. The survey instrument was pre-tested for reliability and validity in a pilot study with 15 experts in educational technology and special education, and was later refined based on their feedback.

5.3.2. Sampling Strategy

Purposive, stratified sampling was used to improve representativeness across key subgroups (educators, technologists, and developers). Although a non-probability, convenience sampling technique was initially used because of accessibility constraints, efforts were made to diversify the sample and improve external validity, in accordance with best practices specified in recent Q1 journal recommendations. To ensure adequate statistical power, the target sample size was determined using power analysis (a minimum of 200 valid responses). A total of 218 valid responses were collected and analyzed in the final dataset.
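The parameters of the power analysis are not reported here, so the following is only a sketch of how a minimum sample size for detecting a correlation can be approximated with the Fisher z transformation. The effect size (r = 0.2), significance level (0.05, two-sided), and power (0.80) are assumptions chosen for illustration, and `n_for_correlation` is a hypothetical helper name.

```python
from math import atanh, ceil
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate sample size needed to detect a population correlation r
    with a two-sided test, using the Fisher z approximation."""
    if not 0 < abs(r) < 1:
        raise ValueError("r must be non-zero and strictly between -1 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    return ceil(((z_alpha + z_beta) / atanh(abs(r))) ** 2 + 3)


# Assumed small-to-moderate effect size; not a value reported in the study.
required_n = n_for_correlation(0.2)
print(required_n)
```

Under these assumed inputs, the required sample size is on the order of the 200-response target mentioned above; larger assumed effects would require fewer responses.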

5.4. Data Collection Channels

The questionnaire was administered through several channels:

- Digital platforms (such as email distribution lists, professional networks, and social media groups).

- On-site distribution at educational and technological conferences.

- Collaboration with AR/VR research consortia and institutions.

5.5. Data Analysis

Quantitative Analysis

Descriptive statistics were used to characterize participant demographics and replies. To investigate the hypothesized relationships, inferential analyses, such as correlation, regression, and structural equation modeling (SEM), were carried out with SPSS and AMOS (IBM SPSS AMOS 29.0).

Table 2 shows the initial correlation matrix of the important input variables (Usability, Engagement, Accessibility, and Perceived Effectiveness) used to analyze interrelationships and multicollinearity before doing the SEM analysis.

Table 2.

Overall Hypotheses’ Correlation Matrix.

Model fit indices were reported using recognized thresholds (e.g., CFI, TLI, and RMSEA).

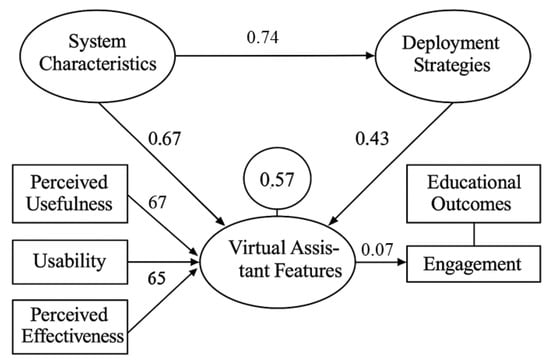

The structural equation model demonstrated acceptable fit with the data, as indicated by the following model fit indices: Comparative Fit Index (CFI) = 0.943, Tucker–Lewis Index (TLI) = 0.927, Root Mean Square Error of Approximation (RMSEA) = 0.049, and Chi-square/df ratio = 2.11. These values fall within widely accepted thresholds (CFI and TLI > 0.90, RMSEA < 0.06, and χ2/df < 3), confirming good model fit.
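The threshold comparison described above can be written out explicitly. The sketch below checks the reported indices against the cut-offs quoted in the text; the dictionary keys and the `check_fit` helper are hypothetical names introduced for illustration and are not part of AMOS or any SEM package.

```python
# Cut-offs quoted in the text: CFI and TLI > 0.90, RMSEA < 0.06, chi-square/df < 3.
THRESHOLDS = {
    "CFI": lambda v: v > 0.90,
    "TLI": lambda v: v > 0.90,
    "RMSEA": lambda v: v < 0.06,
    "chi2_df": lambda v: v < 3.0,
}

# Fit indices as reported for the structural equation model.
reported = {"CFI": 0.943, "TLI": 0.927, "RMSEA": 0.049, "chi2_df": 2.11}


def check_fit(indices):
    """Return, for each index, whether it satisfies its threshold."""
    return {name: THRESHOLDS[name](value) for name, value in indices.items()}


for name, passed in check_fit(reported).items():
    print(f"{name}: {'within threshold' if passed else 'outside threshold'}")
```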

Figure 3 illustrates the standardized path coefficients generated by the SEM, which show the strength and direction of the hypothesized causal links between perceived usefulness, usability, accessibility, engagement, and perceived effectiveness. These coefficients are not correlations or predicted values; they are direct effects estimated using SEM, and they support the structural links presented in the conceptual framework.

Figure 3.

Integrated Conceptual Model with SEM Results.

Figure 3 presents the standardized path coefficients estimated by the SEM analysis, illustrating the relationships among constructs. These coefficients represent the strength and direction of direct effects between variables, supporting the proposed conceptual model.

5.6. Ethical Considerations

Participation was voluntary, and informed consent was sought prior to survey administration. In accordance with research ethics guidelines, anonymity and confidentiality were preserved using institutional review board (IRB) protocols.

5.7. Limitations and Validity Measures

Potential limitations include the sampling biases inherent in convenience sampling; hence, results should be interpreted with caution. To mitigate these biases, subsequent phases will include triangulation with qualitative interviews and case analyses, in line with the Q1 journal’s emphasis on methodological rigor.

6. Results and Findings

In this work, Jamovi (desktop version 2.6.44) was used to evaluate the research models, which use several classifiers. Unlike earlier research, which used a single-stage analysis, this study used a hybrid, two-phase strategy to validate the research hypotheses. The first phase assesses the model’s interactions using correlation matrices, an approach well suited to the exploratory character of the theoretical framework and the scarcity of literature on this particular methodology. Cronbach’s alpha is the main reliability metric used in this analysis; it measures internal consistency and provides an overall indicator of the dependability of the survey questions and of the complete questionnaire.

6.1. Participant Demographics

A total of 200 participants completed the survey. The demographic characteristics are summarized in Table 3.

Table 3.

Participant Demographics.

These demographics indicate a sample that is diverse across professional roles, experience levels, and familiarity with AI in immersive learning environments.

6.2. Correlation Analysis of Hypotheses

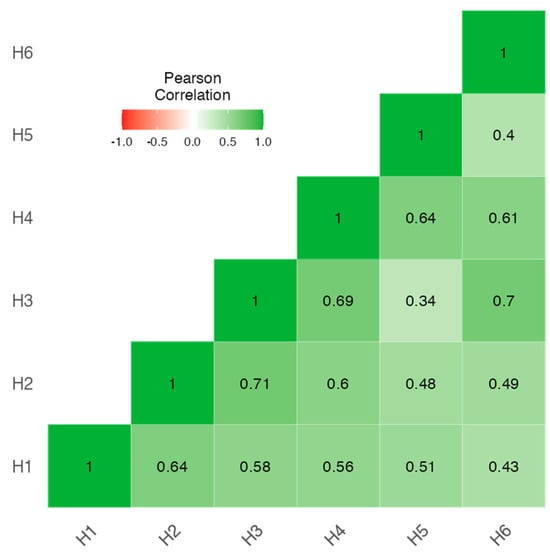

The results, reported in Table 2, are consistent across all six hypotheses. The individual Pearson’s correlation coefficients are not repeated here; they are given in Table 2, which shows the correlation matrix for all hypothesis-related variable pairings. For example, the correlation coefficient (r) between the variables for H1 and H2 is 0.641, a moderate-to-strong positive association. The coefficients were calculated using Pearson’s r, which measures the linear relationship between the key survey variables associated with each hypothesis, based on responses to validated questionnaire items on AI virtual assistants, engagement, implementation factors, and outcomes. These variables represent constructs such as AI assistant features (H1) and implementation issues, such as funding and technology readiness (H2). H6 has a correlation coefficient of 0.701, indicating a strong association. Some hypotheses have multiple entries because they involve several variable pairings, with each coefficient representing a distinct association. The methodology section explains how the Pearson correlations were computed from the survey data using standardized scales to assess the strength and direction of the hypothesized relationships.
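For readers less familiar with the statistic, the following minimal sketch shows how Pearson’s r is computed for one pair of variables. The two score lists are hypothetical composite scores invented for illustration; they are not the study’s data and do not reproduce the coefficients in Table 2.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of the deviations.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("r is undefined when a variable has zero variance")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical per-participant mean scores on two constructs (1-5 scale).
assistant_features = [3.2, 4.0, 2.5, 4.8, 3.6, 4.1]
engagement = [3.0, 4.2, 2.9, 4.5, 3.1, 4.4]
print(f"r = {pearson_r(assistant_features, engagement):.3f}")
```

A value near +1 indicates that the two scores rise together almost linearly, a value near 0 indicates no linear relationship, and a value near −1 indicates an inverse linear relationship.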

The uniform application of Pearson Correlation Analysis across all hypotheses, combined with the statistically significant and practically meaningful outcomes (as denoted by both the p-values and r-values), provides a comprehensive and reliable understanding of the phenomena under investigation. These outcomes not only reinforce the validity of the analytical approach employed but also offer substantial empirical evidence in support of the initial hypotheses, setting a firm foundation for further research in this domain.

In conclusion, the statistical analysis conducted reveals significant positive correlations between the variables examined in all six hypotheses, as evidenced by p-values <0.001 and r-values ranging from 0.401 to 0.701. These findings, detailed in Table 4, contribute valuable insights into the research field, paving the way for subsequent investigations and the potential application of these insights in related contexts.

Table 4.

Hypotheses’ Correlation Matrix and Reliability Statistics.

In the continuation of our rigorous examination into the impact of artificial intelligence (AI)-driven virtual assistants within augmented reality (AR) and virtual reality (VR) environments, particularly focusing on special needs education, a further analytical dimension was explored to assess the internal consistency and reliability of our measurement instruments across the six postulated hypotheses. This additional layer of analysis was conducted through the application of Reliability Statistics, encompassing both Cronbach’s alpha and Correlation Heatmaps, to ensure the robustness and reliability of our constructs and the data collected for this study.

Table 4, as referenced above, delineates the outcomes of these analyses, presenting a notably high level of internal consistency across all variables associated with the hypotheses. Specifically, the Cronbach’s alpha values obtained range narrowly between 0.928 and 0.930. These values far exceed the commonly accepted threshold of 0.7 for acceptable reliability, indicating an exceptionally high degree of internal consistency among the items within each scale used to measure the constructs of our study. The implications of such findings are twofold.

Firstly, the high Cronbach’s alpha values signify that the items or questions utilized to gauge the effectiveness and impact of AI-driven virtual assistants in AR and VR environments for special needs education are highly correlated, thus ensuring that they consistently measure the same underlying construct. This level of reliability is crucial, particularly in the context of special needs education, where the precision of measurement instruments directly influences the validity of the research outcomes and the potential applicability of these technologies in educational settings.

Secondly, the confirmation of internal consistency through these high Cronbach’s alpha values underpins the study’s methodological integrity, bolstering confidence in the reliability of the data gathered. This, in turn, strengthens the study’s conclusions regarding the positive impact and potential of AI-driven virtual assistants in enhancing the educational experiences and outcomes for learners with special needs in AR and VR settings.

The application of Reliability Statistics, including both Cronbach’s alpha and the Correlation Heatmap, thus not only validates the internal consistency of the measurement instruments employed but also significantly contributes to the broader research objective. By ensuring the reliability of our data, we can more accurately assess the transformative potential of AI-driven virtual assistants in special needs education within immersive environments, paving the way for targeted interventions and the development of more inclusive educational technologies.

In essence, the findings from these reliability tests, as detailed in Table 4, provide a solid foundation for the further exploration of AI-driven virtual assistants in AR and VR environments. They underscore the reliability and coherence of the study’s methodological framework, thereby enhancing the credibility and relevance of our investigation into the use of emerging technologies to foster more equitable and effective educational paradigms for learners with special needs.

In addition to Cronbach’s alpha, we employed correlation heatmaps as part of the Reliability Statistics to better understand the relationships between the variables related to the six hypotheses. The correlation heatmap, shown in Figure 4, provides an intuitive depiction of the correlation coefficients between variables. While Table 4 contains the full set of Pearson r values, the heatmap provides a rapid summary of the strength and direction of correlations in the dataset. These visual summaries can improve interpretability, particularly for readers who prefer graphical data representations. The heatmap therefore complements the numerical data and gives a clearer picture of the intervariable correlations in our study.

Figure 4.

Correlation Heatmap.

By depicting the strength and direction of correlations, the correlation heatmap supports the coherence of our assessment scales and also points to potential underlying factors influencing the effectiveness of AI-powered virtual assistants for learners with special needs. These insights are crucial for customizing educational interventions to maximize benefits while minimizing unintended consequences when using AR and VR technologies.

Thus, combining the correlation heatmap with Cronbach’s alpha values provides a more nuanced view of variable interrelations, supporting a thorough evaluation of both reliability and construct validity. This dual approach supports the validity of our conclusions about the transformative potential of AI-powered virtual assistants in AR and VR settings for special education. The results of these reliability assessments are detailed in Table 4, reinforcing the methodological soundness of our research and contributing to the growing field of technology-enhanced learning by promoting more inclusive and effective educational strategies.

6.3. Practical Implications

The statistical findings of this study have substantial practical implications for both educational practitioners and technological developers. The substantial correlations reported across hypotheses, ranging from moderate to strong (r = 0.401 to 0.701) with p-values < 0.001, illustrate the real-world potential of AI-powered virtual assistants in immersive learning settings.

For educators, the findings highlight the importance of incorporating AI-powered technologies into AR and VR environments to assist children with special needs. These tools can help personalize learning, keep students engaged, and enhance accessibility. The positive relationship between familiarity with AI technologies and perceived learning outcomes indicates that educator training and continuous support are essential for successful technology integration.

For technology developers, the findings provide clear direction for system refinement. High internal consistency (Cronbach’s alpha = 0.928–0.930) across survey items suggests that users rate features consistently. Developers can prioritize features that have an educational benefit, such as responsive voice interactions, adaptive feedback, and real-time support. The heatmap and correlation matrix also reveal critical features (e.g., user engagement, technical usability) that can help shape user-centered design in future iterations.

In summary, the findings offer evidence-based insights for both instructional practice and system development, encouraging more inclusive and effective AI use in AR/VR special education settings.

7. Discussion

This study looks at the revolutionary potential of AI-powered virtual assistants in AR and VR environments, with a specific emphasis on their use in special needs schooling. Through rigorous quantitative analysis, the study demonstrates how these novel technologies can greatly improve educational outcomes by providing personalized, immersive learning experiences that are tailored to the various needs of learners with disabilities.

The comprehensive review of existing literature gives a nuanced picture of the state of AI in education, focusing on recent advances in AI technology and their growing significance in constructing adaptable learning environments. Our findings are consistent with previous studies, highlighting the crucial role of AI-powered virtual assistants in increasing student engagement and comprehension, particularly among students with special needs. Our research found clear positive associations between the design and deployment of these virtual assistants and improved educational outcomes, highlighting their potential to change inclusive education.

7.1. Understanding the Technological Features of AI Virtual Assistants

Understanding the technological sophistication of AI virtual assistants used in immersive educational settings is an important component of this research. We classify these systems broadly into two types:

7.1.1. Advanced Multimodal AI Systems

AI virtual assistants that combine large language models (LLMs; e.g., GPT-4) with visual and speech recognition represent the current state of the art. These multimodal systems support interaction that is:

- Natural: They understand and generate human-like language using advanced NLP techniques.

- Context-aware: They retain conversational context over numerous exchanges, allowing for more fluid and coherent conversations.

- Personalized: They tailor responses to specific user profiles, learning preferences, and interaction histories.

- Multimodal: They interpret and generate inputs/outputs across various channels, including audio, text, and visual signals, which enriches the interaction.

These technologies improve engagement by offering realistic, responsive, and contextually relevant interactions. A virtual assistant, for example, can identify a student’s gesture using visual input, understand spoken queries, and provide targeted instruction, promoting better understanding, motivation, and sustained attention. Such comprehensive capabilities provide tailored feedback and adaptive scaffolding, which are critical for learners with varying needs and abilities [,].

7.1.2. Conventional Rule-Based or Scripted Systems

Traditional AI virtual assistants use rule-based algorithms and scripted responses. They typically exhibit the following:

- Limited adaptability: Responses are pre-programmed and activated by keywords or commands.

- Limited contextual understanding: They process each input without retaining conversational context across exchanges.

- Limited multimodal integration: They rely on rudimentary voice commands or text input/output, with no visual or gesture recognition.

Typically, these systems offer scripted interactions or predefined answer options. As a result, they are less engaging and less able to adapt to complicated or unexpected inputs. For children with special needs, this lack of adaptability might lead to dissatisfaction or low motivation, and it may not adequately address individual learning differences [].
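The difference in contextual understanding between the two categories can be illustrated with a deliberately small toy example. The sketch below is an assumption-laden illustration, not a description of any system evaluated in this study: `RuleBasedAssistant` matches keywords against a fixed script, while the hypothetical `ContextAwareAssistant` additionally keeps a short interaction history so that a follow-up such as “again” can be resolved. A real multimodal assistant would rely on language models and sensor input rather than keyword rules.

```python
class RuleBasedAssistant:
    """Scripted assistant: each reply is triggered by a keyword, with no memory."""

    RULES = {
        "count": "Let's count together: 1, 2, 3.",
        "colour": "This shape is red.",
    }
    FALLBACK = "Sorry, I did not understand."

    def reply(self, utterance):
        text = utterance.lower()
        for keyword, response in self.RULES.items():
            if keyword in text:
                return response
        return self.FALLBACK


class ContextAwareAssistant(RuleBasedAssistant):
    """Toy extension that remembers earlier replies to resolve follow-ups."""

    def __init__(self):
        self.history = []  # earlier successful replies, oldest first

    def reply(self, utterance):
        if "again" in utterance.lower() and self.history:
            # Use the stored context: repeat the most recent explanation.
            answer = self.history[-1]
        else:
            answer = super().reply(utterance)
        if answer != self.FALLBACK:
            self.history.append(answer)
        return answer


scripted = RuleBasedAssistant()
contextual = ContextAwareAssistant()
for assistant in (scripted, contextual):
    assistant.reply("Can you count with me?")
    print(type(assistant).__name__, "->", assistant.reply("Again, please"))
```

The scripted assistant falls back to its default message on the follow-up, whereas the context-retaining variant repeats the previous explanation, which is the kind of continuity that matters for learners who need repetition.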

7.2. Comparison with Prior Research

This study’s findings show significant positive correlations between AI virtual assistant characteristics and educational outcomes in AR/VR environments for special needs education, with correlation coefficients ranging from moderate to strong (r = 0.401 to 0.701) and high reliability (Cronbach’s alpha > 0.9). These findings are consistent with recent research emphasizing the usefulness of AI-driven technologies in improving learner engagement and outcomes in immersive contexts. Ref. [] found comparable favorable relationships between AI-assisted learning aids and increased student motivation, which supports our findings on the beneficial function of AI virtual assistants.

In contrast to ref. [], which reported only moderate internal consistency in its measurement scales (α = 0.75), our work shows unusually high reliability, possibly due to the intensive survey refinement process and focused sampling method. Furthermore, our work builds on previous research by combining a mixed-methods approach and stratified sampling to achieve broader representativeness, addressing typical constraints in previous studies that frequently used smaller, homogeneous samples []. This methodological rigor contributes to the validity and generalizability of our findings.

Notably, whereas many previous studies have primarily focused on general education contexts, our findings emphasize the specific impacts on special needs learners, highlighting nuanced considerations for technology developers and educational practitioners seeking to effectively tailor AI solutions.

Overall, the similarities and differences between our findings and the current literature highlight both the rising consensus on the promise of AI in immersive education and the importance of ongoing, context-specific research to enhance these interventions.

7.3. Implications

This study contributes valuable quantitative data to the field of educational technology by illustrating how AI-powered virtual assistants in AR and VR contexts affect learners with special needs. It emphasizes how modern technology may provide more inclusive, engaging, and effective learning experiences, perhaps creating greater equity in education and facilitating individualized learning routes.

Implications for Education

Understanding the two technology types of AI virtual assistants—advanced multimodal systems and standard rule-based systems—provides important insights into how these tools might be leveraged for educational benefits:

- Enhanced Engagement and Personalization: Advanced multimodal AI systems use natural language processing (NLP) together with visual and gesture recognition to provide personalized replies based on individual learner profiles. These systems dynamically modify the difficulty, timing, and kind of feedback, allowing for personalized learning paths. A virtual assistant, for example, can change its instructional method if it detects confusion through facial expressions or pauses in response, which is consistent with adaptive scaffolding pedagogical practices. Such systems can offer real-time, context-aware interactions to increase engagement, motivation, and comprehension. This personalization is especially beneficial for children with sensory, cognitive, or language difficulties, since it provides tailored support that standard systems may lack.

- Inclusive and Equitable Access: AI’s ability to interpret multimodal cues and preserve context across interactions supports inclusive environments in which learners of all abilities can engage effectively. These elements promote differentiated instruction, allowing educators to serve a wider range of learning needs while reducing disparities created by resource limits or one-size-fits-all standards.

- Supporting Tailored Learning Paths: AI assistants’ capacity to learn from interactions allows for the establishment of customizable learning paths. This adaptability promotes equal access to quality education by accommodating varied disabilities and learning styles, resulting in a more inclusive educational environment.

- Limitations of Basic AI Systems: Traditional rule-based virtual assistants, which use predefined scripts and respond solely to keyword instructions, lack the flexibility and context awareness required for nuanced, adaptive education. Their limited multimodal integration (typically confined to voice commands or text) restricts their ability to create compelling, individualized learning experiences. As a result, for students with special needs, these systems may be less motivating and incapable of fully addressing individual learning differences, hindering the establishment of inclusive learning environments.

To summarize, recognizing and leveraging the technological distinctions amongst AI virtual assistants is critical for their effective integration into educational settings. Implementing advanced, multimodal AI systems can greatly improve inclusion, engagement, and learning outcomes for students with special needs, resulting in more equitable and effective schools.

7.4. Limitations

Despite the study’s findings, several limitations must be acknowledged. Most importantly, using a convenience sampling strategy increases the risk of sampling bias, which may restrict the results’ applicability to larger populations of learners with special needs. Participants may not fully represent the range of skills, backgrounds, and learning situations that exist in various educational settings.

Additionally, although our research objectives mention interaction modalities, such as eye tracking, speech recognition, and gesture-based interactions, this study does not empirically examine these approaches. Their potential to enhance engagement and adaptation in immersive learning environments remains theoretical within the scope of this work.

We recognize the importance of these interaction modalities and plan to explore them in future research through pilot studies and case studies. These will include empirical evaluations of eye tracking, speech recognition, and gesture-based interactions in immersive, inclusive educational settings, aiming to understand their practical impact and integration.

To address these limitations, future research should do the following:

- Use randomized or stratified sampling strategies to collect more representative samples.

- Conduct multi-site investigations in diverse demographic and educational settings.

- Include longitudinal designs to investigate the long-term impact of AI-powered virtual assistants.

- Examine potential moderating variables, such as age, type of impairment, or prior exposure to technology, that could influence learning outcomes.

Future research that employs these strategies may enhance the external validity of its findings and contribute to a deeper, more comprehensive understanding of the influence of immersive AI technology in inclusive education.

7.5. Implementation Roadmap and Practical Considerations

To close the gap between research and classroom practice, we propose an implementation roadmap, detailed in Table 5, that progresses from proof-of-concept to large-scale randomized controlled trials (RCTs). The roadmap identifies critical issues at each stage, such as infrastructure requirements, teacher training, and privacy safeguards, together with viable solutions, to support scalable and sustainable adoption of AI-powered virtual assistants in AR and VR settings for special needs education. Incorporating this roadmap into future research and deployment activities will help accelerate the transition from pilot studies to widespread, impactful adoption in a variety of educational contexts.

Table 5.

Roadmap for Implementation in Educational Settings.

To supplement the roadmap, Table 6 outlines key technical, organizational, and social barriers to implementation, along with actionable strategies to address them.

Table 6.

Summary of Practical Barriers and Proposed Solutions for Scaling AI-Driven AR/VR in Special Needs Education.

Cost-Effectiveness Considerations for Large-Scale Deployment

While immersive AI-powered virtual assistants have significant pedagogical applications, their mainstream acceptance in special needs education depends on cost-effectiveness and sustainability. Initial investments in VR headsets, AR-capable devices, AI integration platforms, and software licenses may appear significant. However, these initial expenditures must be balanced against long-term benefits, such as reduced instructional burden, improved student outcomes, and more efficient use of educator time. Unlike traditional special education methods, which frequently require one-on-one human support or specific instructional setups, AI-powered virtual environments can provide consistent, adaptive, and scalable interventions. The cost per learner can be greatly decreased over time by reusing digital resources and partially automating instructional support.

Furthermore, economies of scale play an important role. When these technologies are used in several classrooms, institutions, or districts, the cost per student is reduced, especially when procurement, training, and technical support are consolidated. Open-source platforms and collaborations with edtech vendors can help to lower budgetary hurdles.

Recent pilot studies in VR-based learning environments, for example, show that, while the typical initial cost per student ranges between USD 250 and USD 400, this falls to less than USD 100 per student over three years when equipment and material are shared between cohorts and updated incrementally []. This is comparable to, if not lower than, the ongoing costs of providing specialized, resource-intensive special education support.
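The arithmetic behind this kind of amortization can be sketched as follows. Only the USD 250–400 initial range and the USD 100 target come from the text; the upkeep figure, the number of cohorts sharing the equipment, and the `cost_per_student` helper are assumptions made for illustration, and the cited pilot studies may have used a different costing model.

```python
def cost_per_student(initial_cost, annual_upkeep, years, cohorts_sharing):
    """Spread the initial cost plus upkeep over every cohort that reuses
    the same equipment and material during the period."""
    if cohorts_sharing < 1:
        raise ValueError("at least one cohort must use the equipment")
    return (initial_cost + annual_upkeep * years) / cohorts_sharing


# Assumed scenario: USD 10 per year in incremental updates over three years.
low = cost_per_student(initial_cost=250, annual_upkeep=10, years=3, cohorts_sharing=3)
high = cost_per_student(initial_cost=400, annual_upkeep=10, years=3, cohorts_sharing=5)
print(f"Lower bound scenario: USD {low:.2f} per student")
print(f"Upper bound scenario: USD {high:.2f} per student")
```

Under these assumptions, the per-student figure falls below USD 100 once the equipment is shared by three cohorts at the lower end of the initial cost range, or by five cohorts at the upper end.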

Long-term benefits include increased engagement and learning outcomes, which relate to lower dropout rates, higher academic retention, and improved life skills for students with impairments. These outcomes can generate social and economic benefits that offset the initial infrastructure costs.

In conclusion, when evaluated over time and at scale, the use of AI-powered virtual assistants in AR/VR contexts presents a cost-effective paradigm for improving inclusive education. Institutions considering adoption should develop financial planning frameworks that reflect both direct expenses and broader educational value.

8. Conclusions

To summarize, AI-powered virtual assistants incorporated into AR and VR environments show great promise for improving special needs education. These technologies allow educators to create adaptive, engaging, and inclusive learning experiences that effectively meet the unique requirements of students with disabilities. This study’s findings provide vital insights into the efficacy of virtual assistants, setting the framework for future developments in this quickly growing field.

Our findings are consistent with current evidence, supporting the positive impact of AI-driven technologies on educational attainment. For example, ref. [] found that AI-powered virtual assistants significantly boosted student engagement and comprehension in regular classes, while experiments by Jones et al. [] emphasized enhanced learning experiences across multiple disciplines by incorporating AI into instructional approaches. Nonetheless, it is critical to acknowledge the limits of our research, particularly the dependence on convenience sampling, which may limit the findings’ broader relevance. As previously stated in ref. [], using more diverse sampling procedures in future research will be critical to improving generalizability.

Looking ahead, realizing AI’s full potential in education will depend on fostering collaboration and creativity among educators, technologists, policymakers, and academics. Recent studies [] have stressed the importance of interdisciplinary partnerships in driving educational reform and ensuring responsible, fair adoption of AI technologies.

Finally, while this study provides a core understanding of the role of AI-powered virtual assistants in aiding learners with special needs, much more research is needed. Sustained research, paired with collaborative efforts and forward-thinking innovation, is critical to realizing AI’s transformative potential. Such efforts will pave the path for building truly inclusive, individualized, and effective educational environments for all students.

This discussion shows how recent technological advancements directly affect educational performance by highlighting the technological differences between advanced multimodal AI systems and traditional rule-based assistants. The use of large language models, multimodal recognition, and context-aware capabilities is a significant step forward in developing more inclusive and effective educational interventions, particularly for learners with various needs. Future research should focus on these characteristics, analyzing how advanced multimodal AI adoption affects long-term educational outcomes and how to best use these technologies at scale in a variety of educational settings.


Author Contributions

Study conception and design: A.M. and R.F.; analysis and interpretation of results. A.A.-G. and K.S. reviewed the results and approved the final version of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data not available due to ethical restrictions.

Acknowledgments

Thank you to the faculty researchers from Liwa University, Al Ain, UAE who worked hard on this journal entry and the researcher from British University of Dubai, UAE and the researcher from Canadian University, UAE.

Conflicts of Interest

Ahmed Al-Gindy is employed by AZTech Training and Consultancy Company. The other authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AR | Augmented Reality |
| VR | Virtual Reality |
| VA | Virtual Assistant (or AI Virtual Assistant) |
| EDU | Education |
| SE | Special Education or Special Needs Education |
| IL | Inclusive Learning |
| OE | Educational Outcomes |
| AE | Adaptive Education |
| HR | Hypotheses Results |
| RL | Reliability Statistics |
| CR | Cronbach’s alpha |
| NGO | Non-Governmental Organization |
| ICT | Information and Communication Technology |
| TE | Technological Education |
| CIM | Construct Internal Measurement |
| EAI | Enhanced AI Integration |

References

- Melo-López, V.-A.; Basantes-Andrade, A.; Gudiño-Mejía, C.-B.; Hernández-Martínez, E. The Impact of Artificial Intelligence on Inclusive Education: A Systematic review. Educ. Sci. 2025, 15, 539.

- Silva, R.; Carvalho, D.; Martins, P.; Rocha, T. Virtual Reality as a solution for Children with Autism Spectrum Disorders: A state-of-the-art systematic review. In Proceedings of the 10th International Conference on Software Development and Technologies for Enhancing Accessibility and Fighting Info-Exclusion, Lisbon, Portugal, 31 August–2 September 2022; Volume 51, pp. 214–221.

- Zhang, D.; Liu, Y.; Thompson, R.; Chen, L.; Kazemi, E.; Byrne, M. Adaptive learning pathways using AI for students with learning disabilities. Br. J. Educ. Technol. 2024, 55, 381–398.

- Mohamed, A.; Zohiar, M.; Ismail, I. Metaverse and Virtual Environment to Improve Attention Deficit Hyperactivity Disorder (ADHD) Students’ Learning. In Augmented Intelligence and Intelligent Tutoring Systems; Frasson, C., Mylonas, P., Troussas, C., Eds.; ITS 2023. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 13891.

- Alshammari, T.; Lam, K. Eye tracking and gesture-based interactions in VR learning systems for special education. J. Comput. Assist. Learn. 2024, 40, 54–70.

- Salloum, S.A.; Alomari, K.M.; Alfaisal, A.M.; Aljanada, R.A.; Basiouni, A. Emotion recognition for enhanced learning: Using AI to detect students’ emotions and adjust teaching methods. Smart Learn. Environ. 2025, 12, 21.

- Voultsiou, E.; Moussiades, L. A systematic review of AI, VR, and LLM applications in special education: Opportunities, challenges, and future directions. Educ. Inf. Technol. 2025, 1–41.

- Gupta, M.; Kaul, S. AI in Inclusive Education: A Systematic Review of Opportunities and Challenges in the Indian Context. MIER J. Educ. Stud. Trends Pract. 2024, 429–461.

- Terzopoulos, G.; Satratzemi, M. Voice assistants and smart speakers in everyday life and in education. Inform. Educ. 2020, 19, 473–490.

- Al Shamsi, J.H.; Al-Emran, M.; Shaalan, K. Understanding key drivers affecting students’ use of artificial intelligence-based voice assistants. Educ. Inf. Technol. 2022, 27, 8071–8091.

- Demir, K.A. Smart education framework. Smart Learn. Environ. 2021, 8, 29.

- Jammeh, A.L.J.; Karegeya, C.; Ladage, S. Application of technological pedagogical content knowledge in smart classrooms: Views and its effect on students’ performance in chemistry. Educ. Inf. Technol. 2023, 29, 9189–9219.

- Al-Emran, M.; Al-Maroof, R.; Al-Sharafi, M.A.; Arpaci, I. What impacts learning with wearables? An integrated theoretical model. Interact. Learn. Environ. 2022, 30, 1897–1917.

- Hurrell, C.; Baker, J. Immersive learning: Applications of virtual reality for undergraduate education. Coll. Undergrad. Libr. 2021, 27, 197–209.

- Imani, H.; Anderson, J.; Farid, S.; Amirany, A.; El-Ghazawi, T. RLFL: A reinforcement learning aggregation approach for hybrid federated learning systems using full and ternary precision. IEEE J. Emerg. Sel. Top. Circuits Syst. 2024, 1, 673–687.

- Mohamed, A.; Faisal, R.; Shaalan, K. Exploring the Impact of AI-Driven Virtual Assistants in AR and VR environments for Special Needs Education: A Quantitative analysis. In Extended Reality; Lecture notes in computer science; Springer: Berlin/Heidelberg, Germany, 2024; pp. 113–127.

- Kurni, M.; Mohammed, M.S.; Srinivasa, K.G. AR, VR, and AI for Education. In A Beginner’s Guide to Introduce Artificial Intelligence in Teaching and Learning; Kurni, M., Mohammed, M.S., Srinivasa, K.G., Eds.; Springer International Publishing: Cham, Switzerland, 2023.

- Mousavinasab, E.; Zarifsanaiey, N.; Niakan Kalhori, S.R.; Rakhshan, M.; Keikha, L.; Ghazi Saeedi, M. Intelligent tutoring systems: A systematic review of characteristics, applications, and evaluation methods. Interact. Learn. Environ. 2021, 29, 142–163.

- Akyuz, Y. Effects of intelligent tutoring systems (ITS) on personalized learning (PL). Creat. Educ. 2020, 11, 953–978.

- Graesser, A.C.; Hu, X.; Nye, B.D.; VanLehn, K.; Kumar, R.; Heffernan, C.; Heffernan, N.; Woolf, B.; Olney, A.M.; Rus, V.; et al. ElectronixTutor: An intelligent tutoring system with multiple learning resources for electronics. Int. J. STEM Educ. 2018, 5, 15.

- Hu, Y.-H.; Fu, J.S.; Yeh, H.-C. Developing an early-warning system through robotic process automation: Are intelligent tutoring robots as effective as human teachers? Interact. Learn. Environ. 2023, 32, 2803–2816.

- Williford, B.; Runyon, M.; Li, W.; Linsey, J.; Hammond, T. Exploring the potential of an intelligent tutoring system for sketching fundamentals. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13.

- Lacka, E.; Wong, T.C.; Haddoud, M.Y. Can digital technologies improve students’ efficiency? Exploring the role of Virtual Learning Environment and Social Media use in Higher Education. Comput. Educ. 2021, 163, 104099.

- Tang, K.H.D.; Kurnia, S. Perception of 2014 Semester 2 Foundation Engineering Students of Curtin University Sarawak on the Usage of Moodle for Learning. In Proceedings of the 3rd International Higher Education Teaching and Learning Conference, Miri, Malaysia, 26–27 November 2015.

- Hapke, H.; Lee-Post, A.; Dean, T. 3-in-1 Hybrid Learning Environment. Mark. Educ. Rev. 2021, 31, 154–161.

- Rivas, A.; González-Briones, A.; Hernández, G.; Prieto, J.; Chamoso, P. Artificial neural network analysis of the academic performance of students in virtual learning environments. Neurocomputing 2021, 423, 713–720.

- Ouyang, F.; Zheng, L.; Jiao, P. Artificial intelligence in online higher education: A systematic review of empirical research from 2011 to 2020. Educ. Inf. Technol. 2022, 27, 7893–7925.

- Udin, W.N.; Ramli, M.; Muzzazinah, N. Virtual laboratory for enhancing students’ understanding on abstract biology concepts and laboratory skills: A systematic review. J. Phys. Conf. Ser. 2020, 1521, 042025.

- Cheung, S.K.S.; Kwok, L.F.; Phusavat, K.; Yang, H.H. Shaping the future learning environments with smart elements: Challenges and opportunities. Int. J. Educ. Technol. High. Educ. 2021, 18, 16.

- Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2022, 38, 237–257.

- Kuleto, V.; Ilić, M.; Dumangiu, M.; Ranković, M.; Martins, O.M.D.; Păun, D.; Mihoreanu, L. Exploring Opportunities and Challenges of Artificial Intelligence and Machine Learning in Higher Education Institutions. Sustainability 2021, 13, 10424.

- Chounta, I.-A.; Bardone, E.; Raudsep, A.; Pedaste, M. Exploring Teachers’ Perceptions of Artificial Intelligence as a Tool to Support their Practice in Estonian K-12 Education. Int. J. Artif. Intell. Educ. 2022, 32, 725–755.

- Gellai, D.B. Enterprising Academics: Heterarchical Policy Networks for Artificial Intelligence in British Higher Education. ECNU Rev. Educ. 2022, 6, 568–596.

- Nguyen, A.; Ngo, H.N.; Hong, Y.; Dang, B.; Nguyen, B.-P.T. Ethical principles for artificial intelligence in education. Educ. Inf. Technol. 2023, 28, 4221–4241.

- Celik, I.; Dindar, M.; Muukkonen, H.; Järvelä, S. The Promises and Challenges of Artificial Intelligence for Teachers: A Systematic Review of Research. TechTrends 2022, 66, 616–630.

- Kortemeyer, G. Toward AI grading of student problem solutions in introductory physics: A feasibility study. Phys. Rev. Phys. Educ. Res. 2023, 19, 20163.

- Almusaed, A.; Almusaed, A.; Yitmen, I.; Homod, R. Enhancing Student Engagement: Harnessing “AIED”’s Power in Hybrid Education—A Review Analysis. Educ. Sci. J. 2023, 13, 632.

- Nguyen, A.; Kremantzis, M.; Essien, A.; Petrounias, I.; Hosseini, S. Enhancing Student Engagement Through Artificial Intelligence (AI): Understanding the Basics, Opportunities, and Challenges; University of Bristol: Bristol, UK.

- Chalkiadakis, A.; Seremetaki, A.; Kanellou, A.; Kallishi, M.; Morfopoulou, A.; Moraitaki, M.; Mastrokoukou, S. Impact of Artificial Intelligence and Virtual Reality on Educational Inclusion: A Systematic Review of Technologies Supporting Students with Disabilities. Educ. Sci. 2024, 14, 1223.

- Alfaisal, R.; Alhumaid, K.; Alfaisal, A.M.; Aljanada, R.A.; Mohamed, A.; Salloum, S.A. The Role of Generative AI in Virtual and Augmented Reality. In Generative AI in Creative Industries; Al-Marzouqi, A., Salloum, S., Shaalan, K., Gaber, T., Masa’deh, R., Eds.; Studies in Computational Intelligence; Springer: Cham, Switzerland, 2025; Volume 1208.

- Mohamed, A. Exploring the Role of AI and VR in Addressing Antisocial Behavior among Students: A Promising Approach for Educational Enhancement. IEEE Access 2024, 12, 133908–133922.

- Nguyen, N.D. Exploring the role of AI in education. Lond. J. Soc. Sci. 2023, 84–95.

- Mercier, E.; Goldstein, M.H.; Baligar, P.; Rajarathinam, R.J. Collaborative learning in engineering education. In International Handbook of Engineering Education Research; Routledge: London, UK, 2023; pp. 402–432.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).