Abstract

The development of reliable visual inference models is often constrained by the burdensome and time-consuming processes involved in collecting and annotating high-quality datasets. This challenge becomes more acute in domains where key phenomena are time-dependent or event-driven, narrowing the window of opportunity to capture representative observations. Yet, accelerating the deployment of deep learning (DL) models is crucial to support timely, data-driven decision-making in operational settings. To address this issue, this paper explores the use of 2D synthetic data grounded in real-world patterns to train initial DL models in contexts where annotated datasets are scarce or can only be acquired within restrictive time windows. Two complementary approaches to synthetic data generation are investigated: rule-based digital image processing and advanced text-to-image generative diffusion models. These methods can operate independently or be combined to enhance flexibility and coverage. A proof-of-concept is presented through two case studies in precision viticulture, a domain often constrained by seasonal dependencies and environmental variability. Specifically, the detection of Lobesia botrana in sticky traps and the classification of grapevine foliar symptoms associated with black rot, ESCA, and leaf blight are addressed. The results suggest that the proposed approach can accelerate the deployment of preliminary DL models by automating the production of context-aware datasets that approximate specific operational settings, thereby mitigating the need for time-consuming and labor-intensive processes, from image acquisition to annotation. Although models trained on such synthetic datasets require further refinement (for example, through active learning), the approach offers a scalable and functional solution that reduces human involvement, even in scenarios of data scarcity, and supports the effective transition of laboratory-developed AI to real-world deployment environments.

1. Introduction

The rapid advancement of technology has enabled the widespread adoption of data processing techniques based on artificial intelligence (AI), with deep learning (DL) emerging as one of the most prominent approaches across both scientific and professional communities. DL has proven particularly effective for pattern analysis and recognition in imagery-based data. Within the realm of supervised learning, DL techniques have been extensively applied to automate tasks such as classification and object detection, offering significant contributions to various domains including industry [1], medicine [2], and agriculture [3]—fields in which the availability of high-quality, timely annotated datasets plays a critical role.

In viticulture, for instance, the timely detection of agro-environmental conditions that influence pest and disease outbreaks is essential for informed decision-making and the proper implementation of targeted interventions in vineyard management. To support this, specialized IoT platforms such as mySense [4,5] have proven valuable in monitoring a range of crop-influencing factors. In integration with mySense, VineInspector [6] demonstrates how DL-powered visual approaches can extract actionable insights by detecting and classifying events and anomalies in vineyards. These include monitoring vine shoot size in relation to primary downy mildew infections and identifying the presence of Lobesia botrana—European grape moth—in pheromone traps.

However, the development of effective visual inference models hinges on the availability of large, high-quality annotated datasets, whose acquisition is often constrained by the natural seasonality of viticulture [7]. More specifically, data collection for training models to detect specific events may only be feasible during narrow windows of the phenological cycle; if such a window is missed, the training process and, consequently, the deployment of operational systems can be significantly delayed. For example, in a study focused on yield estimation and wine-making potential based on grape counting [8], data acquisition and annotation campaigns spanned a couple of years, targeting the time window from July to September, during which the grapes develop and mature until harvest. Another noteworthy example is ampelography [9], where data acquisition was concentrated over a few months, corresponding to the phenological stage during which grapevines develop their canopy foliage. Nevertheless, the process took two years to complete, labeling included. In another related study, Kontogiannis et al. [10] collected and annotated 6800 images from ground stations and drones during the growing season to support the development of a downy mildew detection solution. Likewise, works involving trap-based grape moth detection [6] imply a significant logistical effort, including trap preparation, waiting periods to capture the insects of interest, and subsequent imagery retrieval and annotation procedures.

To address the limitations associated with dataset scarcity and the significant effort required to develop deployable image-based inference solutions, this work draws inspiration from prior research (e.g., [9,11]) to explore synthetic data generation as a strategy for developing initial yet functional DL models. By creating synthetic, domain-compliant training datasets, the proposed approach reduces early reliance on time-consuming procedures from image acquisition to annotation, thereby potentially accelerating the deployment of inference models, which should nevertheless be refined iteratively over time, for example through active learning techniques. Two complementary synthetic image generation methods are proposed: (i) classical digital image processing techniques and (ii) advanced text-to-image diffusion models. These methods are applied and tested in two relevant viticulture-related use cases: the detection of Lobesia botrana, a major grapevine pest, and the identification of common but potentially destructive vine diseases such as black rot, leaf blight, and ESCA. In addition to presenting the generated datasets, model performance, and operational insights, this paper discusses each proposed synthetic data generation approach, outlining some of their advantages and limitations, and positioning them within the broader literature.

The remainder of this paper is structured as follows: Section 2 reviews related work; Section 3 describes the proposed synthetic data generation strategies; Section 4 presents the experimental results; Section 5 offers a critical analysis and discussion; and Section 6 concludes with directions for future research.

2. Related Work

At the core of reliable supervised machine learning (ML) and DL decision-support models—whether applied in healthcare [2], infrastructure engineering [12], the automotive industry [1], the textile sector [13], footwear businesses [14], or inclusive technologies [15]—lies the availability of well-structured, validated, and problem-representative datasets. This requirement is equally critical in the domains of agriculture [16], where the need for capturing the idiosyncrasies of crops and plants under naturally variable environmental conditions increases complexity.

In agricultural and viticultural contexts, data collection is particularly challenging. It is often restricted by seasonal cycles and specific phenological stages, which limit the opportunity to gather large-scale, annotated datasets. Despite the growing availability of repository systems that encourage cross-domain public dataset sharing—including in agriculture [3,17,18]—the scarcity of labeled training data remains one of the most critical bottlenecks for deploying deep learning models in real-world agricultural settings [3]. Building ML/DL models that can effectively operate in such environments requires not only advanced architectures, but also a comprehensive understanding of the domain problem at hand, allowing for the design of representative datasets either by engaging initiatives of data acquisition from scratch or by augmenting upon existing repositories [16].

IoT platforms such as mySense [4] have made strides in this area by enabling crowd-sourced contributions and expert validation for agricultural data aimed at supporting ML/DL-based decision making. Notably, mySense supports intelligent node integration, as demonstrated in [6] through the VineInspector system. This system tracks shoot growth to monitor infection risks such as downy mildew, while also observing pheromone traps for European grapevine moth to assess pest presence and activity. Also focusing on edge computing strategies, Gonçalves et al. [19] developed a dataset comprising 168 images with 8966 insect instances that needed to be manually annotated. This dataset was then used to train and benchmark five models—e.g., SSD MobileNetV2 and EfficientDet-D0. The class-wise accuracies ranged from to , with F1-scores between and . SSD ResNet50 was the architecture that achieved the best performances. Regarding inference times, the minimum observed was greater than 19 s per image in high-end smartphones. Concerned with the main vectors of Flavescence dorée—Scaphoideus titanus and Orientus ishidae—Checola et al. [20] used sticky traps to retain the target insects, enabling the construction of a dataset comprising 600 images and approximately 1500 annotations per class. YOLOv8 and Faster R-CNN were employed to support deep learning-based detections, with the former achieving superior performance: a mean average precision at 0.5 overlap (mAP@0.5) of , and an F1-score above .

Regarding the automatic detection and identification of grapevine leaf diseases, Xie et al. [21] developed the Grape Leaf Disease Dataset (GLDD), comprising 4449 original images across four classes—black rot, black measles, leaf blight, and mites. They also proposed a deep learning model named Faster DR-IACNN, which integrates components from established architectures such as Inception-ResNet-v2 for advanced feature extraction. The model achieved an mAP of . In another work with similar goals, Hasan et al. [22] developed and assessed their own convolutional neural network (CNN) model, reporting an accuracy of 91.37% for distinguishing black rot, ESCA, leaf blight, and healthy leaves from a public dataset [23] (built based on PlantVillage [17]). Matching the same disease classes, Prasad et al. [24] modified a VGG-16 CNN, whose performance reached a top accuracy of in tests on a public and relatively balanced dataset composed of 9027 images [25]. In [26], the same set of diseases was considered through the partial integration of the PlantVillage dataset [17]. In total, 14 CNN and 17 vision transformer models were assessed and compared, with 4 of them achieving an accuracy of . In another work, an Xception model fine-tuned by Nasra and Gupta [27] attained an accuracy of over a public grapevine diseases dataset [28] with characteristics similar to the ones previously addressed. More recently, Talaat et al. [29] proposed a plant disease detection algorithm, composed of a few modules, including for feature extraction and CNN-based classification, which works combined with a grid search strategy for hyperparameters optimization. Considering another PlantVillage [17] inspired dataset [30], an accuracy of was observed in tests.

These works are essentially grounded in supervised learning, where labeled data is essential. However, manual annotation is often time-consuming and labor-intensive [3]. Alternatives such as self-supervised learning, as in the case of generative adversarial networks (GANs), reduce reliance on labeled data but are still constrained by the quality and biases of the original datasets [31]. As a complement, semi-supervised learning consists of (i) combining labeled and unlabeled data to learn more robust features and thereby improve model generalization; and/or (ii) querying users (preferably experts) to label specific data, leading to sustainable active learning [3]. While uncertainty is a factor to account for in such approaches, strategies involving a human in the loop can be quite beneficial [32], as they facilitate continuous model improvement. In turn, faster model deployment becomes viable, particularly in scenarios where sub-optimal performance is acceptable in initial setups.

To tackle the challenges posed by data scarcity, the burden of time-consuming data collection and annotation, and the time frame limitations imposed by nature-driven events (as in agricultural/viticultural contexts), the generation of synthetic datasets is becoming an increasingly explored strategy by both scientific and professional communities, with notable potential to support the rapid development of initial, yet deployable, deep learning models. For instance, Kubric [33] is a general-purpose engine capable of generating photorealistic scenes by combining various technologies and repositories containing prefabricated 3D assets while maintaining links to individual scene components to facilitate automated annotation. However, content generation appears to be either random or dependent on significant scripting skills and coding effort to build such virtual environments, especially in scenarios where object specificity and spatial distribution are critical. Kubric is also reported to demand substantial computational resources. In another approach [34], an automated framework was proposed to generate CAD-based data with accurate labels for large-scale robotic bin-picking datasets, with applications in industrial settings and tracked conditions. Focusing more on natural environments, the PROMORE framework [11] uses procedural 3D modeling to produce synthetic images for object detection and segmentation in remote sensing. Prior to this framework, a preliminary system for virtual wildfire generation within a 3D environment [35], constructed using a photogrammetric process based on remotely piloted aerial systems (RPASs), was developed and tested using neural networks specialized in segmentation. The results showed promising indications of the potential of synthetic data to train models capable of operating in real-world scenarios. In another study, Adão et al. [9] explored grapevine variety classification through single-leaf image analysis. Building on Xception-based models and anticipating broader field applications, they created synthetic imagery in which leaves from an existing dataset were embedded in vineyard-like backgrounds, enabling segmentation and classification workflows. Other strategies relying on the combination of large language models (LLMs) with visual foundation models have opened new possibilities for generating synthetic datasets directly from text descriptions [36]. These technologies can be employed for rapid dataset creation for training custom inference models, even in highly specific agricultural domains.

Inspired by some of the above approaches and motivated by the persistent challenges in agricultural DL deployment, this paper explores synthetic dataset generation methods to accelerate the operationalization of DL models in precision viticulture. The proposed strategies are presented in the following sections.

3. Proposed Dataset-Related Methods for Expeditious DL Modeling

This section outlines the foundational principles of the proposed methods for guiding the generation of synthetic datasets through image-based coarse problem approximation, with the aim of bootstrapping DL models and circumventing the need for explicit data collection, annotation, and organization from scratch.

3.1. Dataset Design Approaches Overview

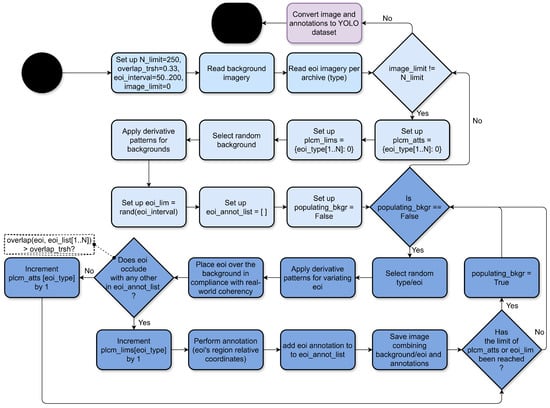

The image processing work-flow illustrated in Figure 1 describes a procedure in which background imagery is combined with foreground elements—referred to as elements of interest (EOIs). Specifically, for each image to be generated, a background is randomly selected from a local archive and subjected to a series of random transformations to introduce data diversity. These include scaling within a given range , adjustments to brightness and contrast within , and vertical flipping.

Figure 1.

General flowchart of image processing-based synthetic data generation, wherein N_limit is the maximum number of images to be generated; overlap_trsh is a tolerance value used for excluding EOIs superimposed on others; eoi is the same as EOI; eoi_interval is a range for randomizing the limit of EOIs to place per background; image_limit is an image counter controller; plcm_lims controls the number of successfully placed EOIs; plcm_atts tracks the number of EOIs that were discarded due to excessive overlap; rand is a randomization function; eoi_annot_list stores the bounding boxes of consolidated EOIs; and populating_bkgr is a flag that works as a stop condition for the generative process.

Subsequently, EOIs are selected through a stochastic process, resized within a predefined range—based on real-world measurements—and subjected to further transformations such as random rotation (within ), brightness and contrast adjustments (), and vertical or horizontal flips. EOIs are iteratively placed onto the background until either a predefined limit is reached, or a maximum number of placement attempts is exceeded—governed by an exclusion criterion that considers overlap tolerance between new EOIs and those already placed.

The resulting composite images and corresponding annotations are exported in a format compatible with a well-known object detection architecture, You Only Look Once (YOLO).
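The placement loop of Figure 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the overlap measure (intersection area over the area of the smaller box), the default tolerance, and the attempt limit are assumptions chosen for illustration. Only the normalized `class x_center y_center width height` label layout follows the standard YOLO text format.

```python
import random


def overlap_ratio(a, b):
    """Intersection area divided by the area of the smaller box.

    Boxes are (x, y, w, h) in pixels, with (x, y) the top-left corner.
    This overlap measure is an assumption made for the sketch.
    """
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = min(ax + aw, bx + bw) - max(ax, bx)
    ih = min(ay + ah, by + bh) - max(ay, by)
    if iw <= 0 or ih <= 0:
        return 0.0
    return (iw * ih) / min(aw * ah, bw * bh)


def place_eois(bg_w, bg_h, eoi_sizes, overlap_trsh=0.3, max_attempts=50, rng=None):
    """Place EOIs at random positions, rejecting candidates that overlap too much.

    An EOI that cannot be placed within max_attempts tries is skipped.
    Each EOI is assumed to be smaller than the background.
    """
    rng = rng or random.Random()
    placed = []
    for w, h in eoi_sizes:
        for _ in range(max_attempts):
            candidate = (rng.randint(0, bg_w - w), rng.randint(0, bg_h - h), w, h)
            if all(overlap_ratio(candidate, p) <= overlap_trsh for p in placed):
                placed.append(candidate)
                break
    return placed


def to_yolo(box, bg_w, bg_h, class_id=0):
    """Convert a pixel box to a YOLO label line with normalized center and size."""
    x, y, w, h = box
    return (f"{class_id} {(x + w / 2) / bg_w:.6f} {(y + h / 2) / bg_h:.6f} "
            f"{w / bg_w:.6f} {h / bg_h:.6f}")
```

Because the generator decides where each EOI goes, the bounding boxes are known by construction, which is what makes annotation automatic in this kind of pipeline.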

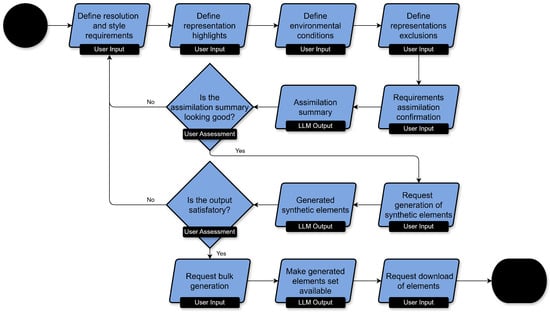

The integration of large language models (LLMs), such as ChatGPT [37], with diffusion models (e.g., DALL·E [38]) represents one of the most significant technological advancements in recent years, enabling artificial intelligence (AI) to perform generative tasks, including the autonomous creation of realistic imagery grounded in a semantic understanding of, for example, user-provided textual input. This integration is also explored in the present work to accelerate and facilitate synthetic data generation. As illustrated in Figure 2, the user defines a set of generation requirements, which can be iteratively reviewed and refined as needed. Once finalized, a request for the bulk generation of elements, such as images and corresponding annotations, is issued, and the resulting outputs can be downloaded upon completion of the generation process.

Figure 2.

Synthetic data production resorting to a multimodal large language model (LLM).

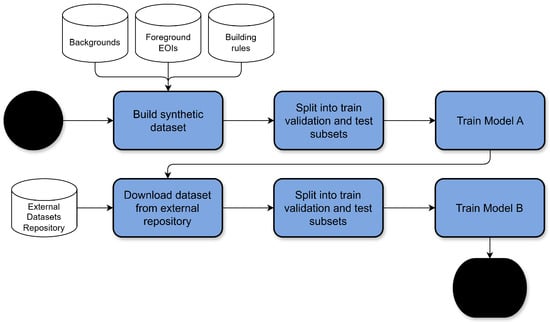

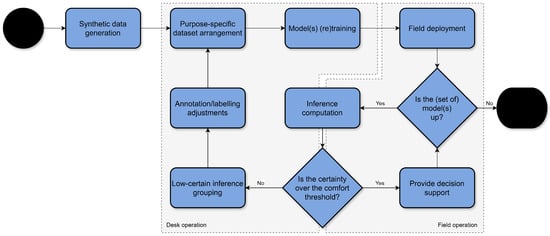

3.2. Collaborative Inference Design

One strategy for time-effective DL modeling that shortcuts steps such as in-field data acquisition and organization is to combine available annotated imagery sources with automation pipelines that simulate not-yet-established datasets. Figure 3 presents a flowchart of this strategy.

Figure 3.

Combining datasets available in public repositories and dataset synthetization approaches into a single pipeline for the expeditious production of collaborating models.

For example, if a dataset cataloging a specific phenomenon (e.g., grapevine diseases) is already available in public repositories, it can be directly used to develop a single-instance classification model. However, for this model to function effectively in natural environments with complex visual compositions (e.g., a full grapevine canopy), a filtering approach is required to first identify and isolate EOIs. One viable way to enable such filtering is through the creation of a synthetic, object detection-oriented dataset designed to reflect real operational conditions. Such a dataset can be achieved by strategically positioning contour-based, isolated EOIs onto realistic background images, emulating the relevant settings that depict the target problem, while also generating corresponding annotations automatically—leveraging the algorithmic control over element placement. In turn, resulting synthetic images and their corresponding annotations can be used to train a preliminary object detection model capable of recognizing and isolating general instances. During operation, this object-detection model is responsible for primarily identifying EOIs, which are then cropped and passed to a classifier trained on the original, phenomenon-driven dataset, enabling the assignment of more specific labels (e.g., healthy, disease A, disease B, …, disease N). This synergistic strategy combines the strengths of both approaches: the availability of labeled datasets and the efficiency of automated, representative synthetic data generation.

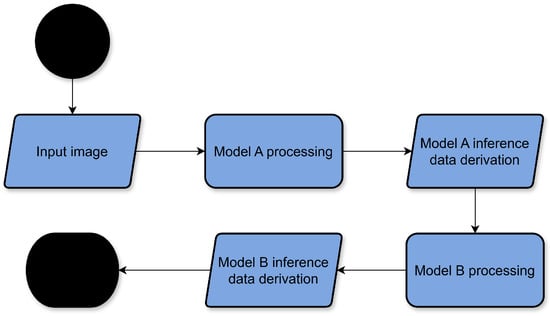

Once the desired models have been trained, they can collaborate by being integrated into a sequential pipeline, as illustrated in Figure 4. In this process, an input image is processed by an initial model, which distils relevant information to be interpreted by a subsequent model. This flow enables a modular and layered approach, where different models contribute distinct processing capabilities, progressively enriching the understanding derived from the original input.

Figure 4.

Two-stage collaboration between DL models.
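The detect, crop, and classify flow of Figure 4 can be sketched as follows. This is an illustrative outline only: `detector` and `classifier` are hypothetical callables standing in for the trained models, the image is represented as a nested list of pixel rows rather than a real image array, and boxes are assumed to be `(x_min, y_min, x_max, y_max)` in pixels.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]   # x_min, y_min, x_max, y_max (pixels)
Image = Sequence[Sequence[int]]   # rows of pixel values; stand-in for an image array


def crop(image: Image, box: Box) -> List[List[int]]:
    """Return the sub-image covered by the box (max coordinates are exclusive)."""
    x0, y0, x1, y1 = box
    return [list(row[x0:x1]) for row in image[y0:y1]]


def two_stage_inference(
    image: Image,
    detector: Callable[[Image], List[Box]],
    classifier: Callable[[Image], str],
) -> List[Tuple[Box, str]]:
    """Stage 1 locates EOIs; stage 2 assigns a specific label to each cropped EOI."""
    results = []
    for box in detector(image):
        results.append((box, classifier(crop(image, box))))
    return results
```

Keeping the two stages behind plain callables means either model can be retrained or replaced without touching the other, which matches the modular, layered design described above.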

The following section demonstrates the application of the proposed dataset generation methods through two case studies: grape moth detection and vine leaf-based disease recognition.

4. Case Studies for Precision Viticulture

This section focuses on the practical application of the proposed methods for the generation of synthetic datasets targeting two distinct tasks with relevance for precision viticulture: grape moth detection in pheromone traps and the identification of grapevine diseases based on leaf symptoms. The process for creating datasets involves image processing techniques and AI-based generative tools, following the methodological foundations outlined in the previous section. Procedures related to data engineering and synthetic data refinement are detailed, demonstrating how these tools can be effectively employed in domain-specific contexts. All the DL activities were carried out on a computer with the following specifications:

- Processor: 11th Gen Intel® Core™ i7-11800H @ 2.30 GHz (Intel Corporation, Santa Clara, CA, USA);

- Random Access Memory (RAM): 32 GB @ 2933 MHz SODIMM (Corsair Gaming, Inc., Fremont, CA, USA);

- Graphics Card: Nvidia® GeForce RTX 3080 (laptop edition), 16.0 GB GDDR6 RAM (Nvidia Corporation, Santa Clara, CA, USA);

- Storage: 1 TB, 3500 MB/s Read, 3300 MB/s Write (Samsung Electronics Co., Ltd., Suwon, South Korea);

- Operating System: Windows 10 Home 64-bit (Microsoft Corporation, Washington, DC, USA).

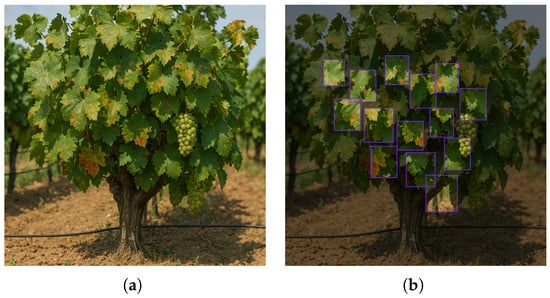

Regarding alignment with real-world observations, the synthetic imagery was generated based on domain-specific rules, either implemented through image processing techniques or expressed in natural language prompts for generative AI. These rules acted as heuristics derived from the target contexts the images were intended to model, namely (i) sticky traps with printed grids and grape moth specimens placed on them; and (ii) individual vine trunks with horizontal cordons, occasionally accompanied by grape clusters and covered by canopies of vine leaves with bounding-box overlaps equal to or less than 30% for leaf area visibility maximization.

4.1. Case Study 1: Image Processing for European Grapevine Moth Traps

One of the proposed case studies involves a pipeline for the synthetic generation of a representative dataset for grape moth detection in sticky/pheromone traps. To this end, preliminary steps were taken to collect imagery from available sources, aimed at preparing two types of visual assets: grape moth specimens and empty traps. The former were obtained by visually selecting insect-populated traps from public datasets [39,40], and isolating individual insects from the background using Canva’s background remover tool [41]. The latter were generated with the assistance of ChatGPT 4o [37] and DALL·E 3 [38]. Afterwards, initial preprocessing steps were applied for cleaning and sampling. Specifically, moth specimen images were inspected and cleaned to manually remove background artifacts, while trap images underwent histogram adjustments to introduce visual variations.

Image processing techniques were then used to randomly combine assets—individual moths and background traps—toward the production of annotated synthetic datasets with varied configurations. For each background image, a set of random transformations was applied, including scaling within the range , brightness and contrast adjustments (), vertical flipping, and the addition of salt-and-pepper noise to simulate operation in dusty field conditions. The moth instances were then resized to approximate real-world relative dimensions and subjected to further transformations such as random rotation (within ) and additional brightness and contrast adjustments. A sample of the resulting composite images, generated through the described image processing pipeline, is shown in Figure 5. At the end of the process, the datasets are converted into YOLO-compatible formats.

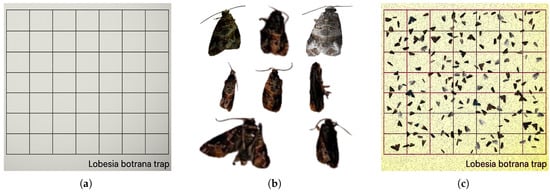

Figure 5.

Samples involved in the process for generating synthetic datasets for grape moth detection in sticky/pheromone traps: (a) trap image generated with ChatGPT 4o/DALL·E 3; (b) grape moth specimens cropped from public datasets [39,40]; (c) resulting synthetic image combining (a) and (b).
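As an illustration of the salt-and-pepper step applied to the trap backgrounds, the sketch below corrupts a random fraction of pixels in a grayscale image. The function name, the `amount` parameter, and the nested-list image representation are assumptions made for the example; the noise density actually used is not stated here, and a practical pipeline would typically operate on image arrays instead.

```python
import random


def salt_and_pepper(image, amount=0.02, rng=None):
    """Return a copy of a grayscale image with roughly `amount` of its pixels
    set to 0 (pepper) or 255 (salt), in about equal proportion."""
    rng = rng or random.Random()
    noisy = [list(row) for row in image]  # copy so the input stays untouched
    for row in noisy:
        for x in range(len(row)):
            r = rng.random()
            if r < amount / 2:
                row[x] = 0        # pepper
            elif r < amount:
                row[x] = 255      # salt
    return noisy
```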

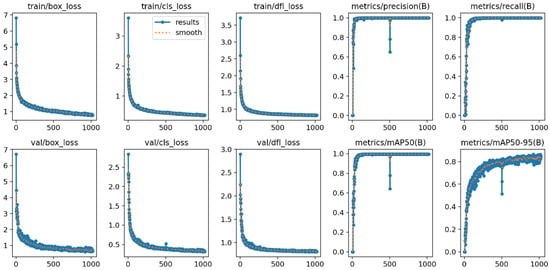

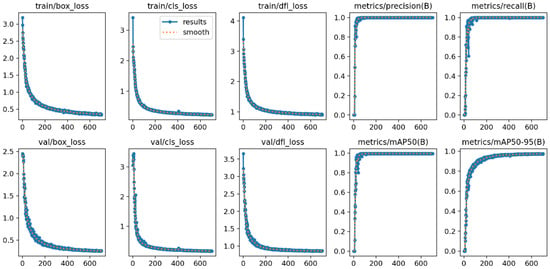

Following the previously specified pipeline, 400 synthetic images were generated and used to compose a YOLO dataset with a split of 60%, 20%, and 20% for training, validation, and testing, respectively. Afterwards, a lightweight version of the YOLO architecture (YOLOv8s) was employed to train a grape moth detection model suited to single-board computers (SBCs) that meet the minimum requirements to host such AI processing. The corresponding training curves are presented in Figure 6, which shows a smooth and progressive improvement in performance, indicating promising signs of convergence.

Figure 6.

Training curves of a YOLOv8s model for grape moth detection, showing good signs of convergence with a progressive and consistent decrease in box, class, and distribution focal losses.
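The 60/20/20 partition of the 400 synthetic images can be reproduced with a short, seeded shuffle-and-slice routine. The sketch below is one possible way to do it; the function name and the fixed seed are assumptions, and the source does not state how the split was actually drawn.

```python
import random


def split_dataset(items, fractions=(0.6, 0.2, 0.2), seed=0):
    """Shuffle items reproducibly and slice them into train/val/test lists.

    The test subset receives whatever remains after train and val,
    so no item is lost to rounding.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * fractions[0])
    n_val = round(len(items) * fractions[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test
```

For 400 images this yields 240 training, 80 validation, and 80 testing samples.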

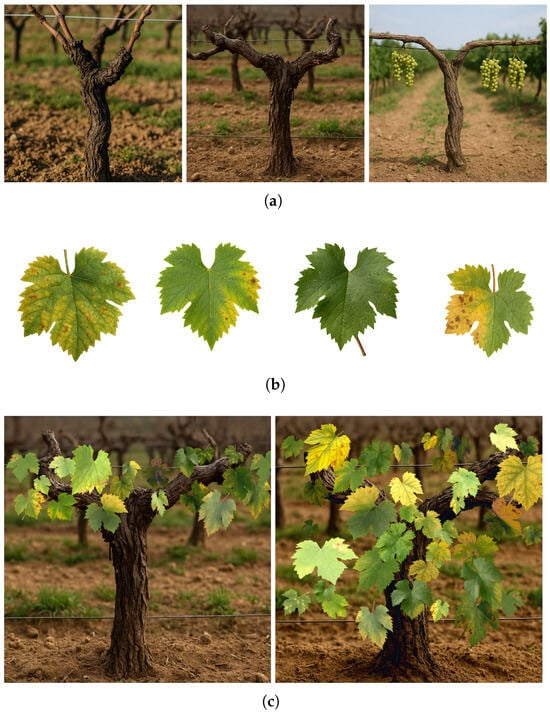

4.2. Case Study 2: Grapevine Diseases Identification

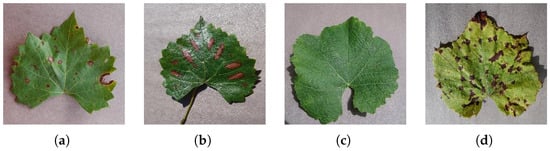

For the disease identification task, a hybrid approach combining object detection and classification models was explored. To make this approach practical, a publicly available dataset hosted on Kaggle [25] (apparently derived from a subset of PlantVillage [17]) was employed. It contains labeled images of grapevine leaves collected under controlled laboratory conditions and manifesting signs of various infections (Figure 7), making it suitable for training a disease classification model. More specifically, this dataset consists of images that categorize visual manifestations of black rot, ESCA, and leaf blight, as well as healthy leaves, distributed across two folders: train (7222 examples) and test (1805 examples). However, because this imagery source does not reflect the complexity of in-field conditions, such as leaves hanging within dense canopies, variable lighting, and occlusions, a classifier trained on it may not generalize well when applied directly to vineyard imagery. To address this limitation, an object detection model was developed to locate and extract grapevine leaves from natural canopy-like images. The detected leaf regions are then passed through the disease classification model to assess the phytosanitary status of each one, aiming at effective plant health monitoring in realistic scenarios. Object detection thus acts as a preparatory stage that bridges the gap between real-world vineyard imagery and laboratory-trained classification models.

Figure 7.

Samples of grape leaves from a public dataset made available on Kaggle [25], manifesting different conditions: (a) black rot; (b) ESCA; (c) healthy; and (d) leaf blight.

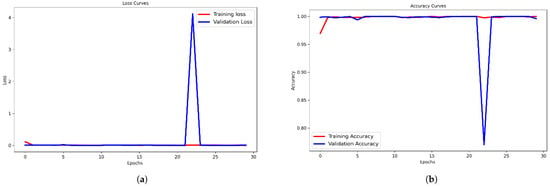

Focusing on the disease classification task, the Mandal [25] dataset was considered along with the consolidated training procedures outlined in [9], starting with the integration of the Xception architecture. To ensure robust model performance, class balancing was carried out prior to training by applying minimal data augmentation to underrepresented classes until all of them matched the sample count of the most represented one. Subsequently, the balanced train component of Mandal’s dataset was split into training and validation subsets using a 65–35% ratio, which enlarges the validation group, with a small percentage of samples exchanged between the two to promote feature learning. Mandal’s testing subset was kept apart from training for performance assessment purposes. A grid search was conducted to identify an appropriate initial learning rate, and a batch size of 24 was adopted throughout training. The Nadam optimizer was employed to combine the benefits of adaptive moment estimation and Nesterov momentum. Furthermore, an early stopping monitor was set up with a patience of 20 epochs to halt training in case of accuracy stagnation. As illustrated in Figure 8, which presents the accuracy and loss curves throughout the training process, steady training convergence was achieved.

Figure 8.

Training and validation history resulting from the training of an Xception-based grapevine disease classifier: (a) corresponds to the loss behavior; (b) presents the accuracy progress.
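The class-balancing step that precedes training (augmenting underrepresented classes until each matches the largest one) can be sketched as below. The function name and the `augment` callable are hypothetical placeholders: the specific augmentations applied are not detailed here, so any light transformation such as a flip or small rotation could be plugged in.

```python
import random
from collections import Counter, defaultdict


def balance_by_augmentation(samples, augment, seed=0):
    """Top up minority classes with augmented copies of their own samples.

    samples: list of (item, label) pairs.
    augment: callable returning a transformed copy of an item (placeholder).
    Returns the original samples followed by the augmented additions.
    """
    rng = random.Random(seed)
    counts = Counter(label for _, label in samples)
    target = max(counts.values())  # size of the most represented class
    by_class = defaultdict(list)
    for item, label in samples:
        by_class[label].append(item)
    balanced = list(samples)
    for label, items in by_class.items():
        for _ in range(target - len(items)):
            balanced.append((augment(rng.choice(items)), label))
    return balanced
```

Balancing only the training portion, as done here with Mandal's train folder, keeps the held-out test subset free of augmented copies.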

4.2.1. ChatGPT/DALL·E Interaction for the Experimental Generation of Annotated YOLOv8-Compatible Datasets

To generate a dataset for leaf detection compatible with YOLO training requirements, the integration of ChatGPT with the DALL·E image generation system was explored. The primary goal was to assess the feasibility of this approach for generating synthetic vineyard scenes with realistic foliage representations and directly annotated leaves. Therefore, a set of structured generation guidelines was defined and iteratively communicated to ChatGPT, encompassing key aspects such as image resolution and style, target representation details, environmental conditions, elements to exclude, and annotation procedures. These guidelines, summarized in Table 1, were designed to ensure that the generated content would be both visually coherent and technically usable for training object detection models.

Table 1.

Guidelines for the generation of a dataset for grape leaves detection purely based on ChatGPT/DALL·E.

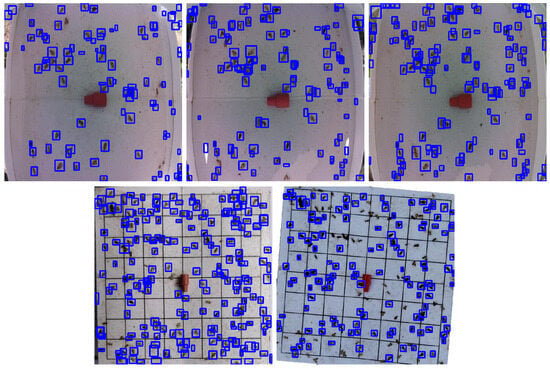

Although the generated images achieved fair visual realism, several issues were identified regarding the quality of the associated annotations. Common problems included the incorrect labeling of non-leaf elements such as grape clusters or vine trunks as leaves, the omission of clearly visible leaves, and imprecise bounding box coordinates, as shown in Figure 9. When confronted with these issues, ChatGPT clarified that the annotations were randomly simulated and not actually linked to the visual content of the generated images. As a result, constructing a reliable dataset based solely on this approach was deemed unfeasible.

Figure 9.

Vine-like sample and annotations generated with ChatGPT/DALL·E: (a) clear image; (b) the same image overlaid with the proposed annotations (i.e., purple bounding boxes), displayed by Roboflow [42].

4.2.2. Leaf Recognition Modeling Based on Image Processing for the Articulation of Specific and Individual Grapevine Elements Generated Through ChatGPT/DALL·E

As generating leaf detection datasets solely with ChatGPT/DALL·E’s image generation capabilities proved unfeasible, mainly due to severe annotation inconsistencies, an alternative strategy was defined and followed. It consisted of separately generating (i) leafless grapevine plants with grape bunches as vineyard backgrounds; and (ii) grapevine leaves. This separation was intended to enable automatic, yet more controlled, dataset composition and annotation. To constrain the generative process, a set of rules in natural language (covering resolution, style, key elements, environmental conditions, and exclusions) was specified, as detailed in Table 2 and Table 3, to individually guide the production of the two image groups.

Table 2.

Guidelines for the generation of a library of leafless grapevines with bunches in a vineyard background based on ChatGPT/DALL·E.

Table 3.

Guidelines for the generation of a grape leaf library based on ChatGPT/DALL·E.

Once the two libraries of AI-generated images were established (one containing leafless grapevines and the other containing individual grapevine leaves), the image processing-based procedure described in Section 3.1 was followed to automate the combination of both sets. More specifically, leaf placement followed a Gaussian probability distribution over different regions of the upper part of the background, aligning with the cordon training system and reflecting real-world patterns, as illustrated in Figure 10. In addition, whenever the overlap between leaves exceeds a predefined threshold, the most recently generated leaf involved in the positional conflict is eliminated. These steps culminated in a fully structured YOLOv8-compliant dataset comprising 400 images with their class labels and bounding box annotations. As in the grape moth dataset setup, a 60%/20%/20% split was assigned for training, validation, and testing, respectively.

Figure 10.

Examples of synthetic imagery generated by combining ChatGPT/DALL·E-based assets and image processing techniques: (a) ChatGPT/DALL·E-generated vineyard backgrounds (leafless grapevines); (b) ChatGPT/DALL·E-generated grapevine leaves; (c) composite samples resulting from the automatic merging of leaves and backgrounds using a custom image processing pipeline.
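The placement and annotation logic described above can be sketched as follows. This is a minimal illustration and not the pipeline used in this work: the single Gaussian centre in the upper third of the background, the standard deviations, the overlap measure (intersection over the smaller box) and the `max_overlap` value are all assumptions introduced for the example, as are the names `Box`, `place_leaves` and `to_yolo`.

```python
import random
from dataclasses import dataclass


@dataclass
class Box:
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float  # width, pixels
    h: float  # height, pixels


def overlap_ratio(a: Box, b: Box) -> float:
    """Intersection area divided by the area of the smaller box (assumed measure)."""
    ix = max(0.0, min(a.x + a.w, b.x + b.w) - max(a.x, b.x))
    iy = max(0.0, min(a.y + a.h, b.y + b.h) - max(a.y, b.y))
    smaller = min(a.w * a.h, b.w * b.h)
    return (ix * iy) / smaller if smaller > 0 else 0.0


def place_leaves(bg_w, bg_h, leaf_sizes, max_overlap=0.3, seed=0):
    """Sample leaf positions from a Gaussian over the upper part of the background.

    A candidate that overlaps an already placed leaf by more than `max_overlap`
    is discarded, i.e. the most recently generated leaf is the one eliminated.
    """
    rng = random.Random(seed)
    placed = []
    for w, h in leaf_sizes:
        cx = rng.gauss(bg_w / 2, bg_w / 6)     # assumed horizontal spread
        cy = rng.gauss(bg_h * 0.3, bg_h * 0.1)  # assumed focus on the upper part
        # Clamp so the leaf stays fully inside the background.
        x = min(max(cx - w / 2, 0.0), bg_w - w)
        y = min(max(cy - h / 2, 0.0), bg_h - h)
        candidate = Box(x, y, w, h)
        if all(overlap_ratio(candidate, p) <= max_overlap for p in placed):
            placed.append(candidate)
    return placed


def to_yolo(box: Box, bg_w, bg_h, class_id=0) -> str:
    """Format one box as a YOLO label line: class cx cy w h, normalised to [0, 1]."""
    cx = (box.x + box.w / 2) / bg_w
    cy = (box.y + box.h / 2) / bg_h
    return f"{class_id} {cx:.6f} {cy:.6f} {box.w / bg_w:.6f} {box.h / bg_h:.6f}"


leaves = place_leaves(1024, 768, [(120, 100)] * 64, seed=1)
label_lines = [to_yolo(b, 1024, 768) for b in leaves]
```

Because each accepted position is known exactly at composition time, the bounding box annotations come for free, which is the property the purely generative attempt of Section 4.2.1 lacked.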

Afterwards, a YOLOv8s-based model was trained on the previously generated dataset. As shown in Figure 11, the model converged stably and consistently, with the box, class, and distribution focal loss values steadily decreasing over the course of approximately 700 training epochs. Training concluded upon activation of an early stopping monitor, which was configured with a tolerance of 100 epochs.

Figure 11.

Training curves of the YOLOv8s model for grapevine leaf detection, showing good signs of convergence with a progressive and consistent decrease in box, class, and distribution focal losses.
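For orientation, a training run with an early stopping tolerance of 100 epochs could be configured as sketched below with the Ultralytics Python package. Only the `patience` value comes from the text; the dataset file name `leaves.yaml`, the epoch ceiling and the image size are assumptions made for the example, and the actual training configuration used in this work is not reproduced here.

```python
def build_train_args(data_yaml: str) -> dict:
    """Collect the training arguments in one place so they can be inspected."""
    return {
        "data": data_yaml,  # hypothetical dataset description file
        "epochs": 1000,     # assumption: upper bound, early stopping ends the run sooner
        "patience": 100,    # early stopping tolerance stated in the text
        "imgsz": 640,       # assumption: commonly used input size
    }


def train_leaf_detector(data_yaml: str = "leaves.yaml"):
    """Train a YOLOv8s detector; requires the `ultralytics` package to be installed."""
    from ultralytics import YOLO  # imported lazily so the sketch loads without it

    model = YOLO("yolov8s.pt")  # pretrained YOLOv8s weights
    return model.train(**build_train_args(data_yaml))


args = build_train_args("leaves.yaml")
```

With this setup, training stops once the monitored validation fitness has not improved for 100 consecutive epochs, which is consistent with a run ending at roughly 700 epochs.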

The following section focuses on the performance and operational insights associated with the different developed models for grapevine moth and leaf detection, as well as for disease classification.

5. Tests and Results

This section analyzes the results obtained from the validation of the models presented in the previous sections. The performance of each model is examined in light of its specific task, with emphasis on quantitative evaluation metrics and/or visual insights highlighting operational capabilities. To this end, two image sources were used: the test set images, generated alongside the synthetic datasets but excluded from the models’ training stage, and external, environmentally distinct images sourced from public datasets or free online repositories.

5.1. Grape Moth Identification

The performance of the YOLOv8s model, trained for grape moth detection on the dataset generated according to the process described in Section 4.1, is presented in Table 4. The evaluation was conducted on a test set composed of images that were excluded from training, yet produced using the same methodology as the training and validation subsets. As can be observed, the model achieves remarkably high values across key metrics, including precision, recall, F1-score, mAPs, and overall fitness. More specifically, precision and recall values close to 1 reflect strong performance in minimizing false positives (i.e., misclassifying background as insects) and false negatives (i.e., missing insects that should have been detected). Derived from both precision and recall, the high F1-score can be attributed not only to the balanced class-wise distribution of examples in the dataset, but also to the consistency with which features are embedded in the images through the proposed synthetic generation process. The mAP@50 and mAP@50–95 metrics indicate the reliability of the detections, as they reflect good agreement between predicted bounding boxes and ground-truth annotations. Consequently, fitness is also considered satisfactory, as it results from a weighted average of precision, recall, and mAP@50–95, with the latter typically receiving the highest weight. These results indicate that the model effectively learned from the synthetic dataset and is likely to perform reliably on imagery captured under conditions similar to that dataset.

Table 4.

Grape moth detection performance over the unseen test group of the synthetic dataset.
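To make the relation between these indicators concrete, the sketch below computes the F1-score and a weighted fitness value. The default weights (0, 0, 0.1, 0.9 for precision, recall, mAP@50 and mAP@50–95) follow what is understood to be the Ultralytics default and are an assumption here, since the exact weighting used in this work is not stated; the input values are illustrative and are not taken from Table 4.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


def fitness(precision, recall, map50, map50_95, weights=(0.0, 0.0, 0.1, 0.9)):
    """Weighted sum of the four indicators (weights are an assumption, see above)."""
    values = (precision, recall, map50, map50_95)
    return sum(w * v for w, v in zip(weights, values))


# Illustrative values only.
example_f1 = f1_score(0.9, 0.8)
example_fitness = fitness(0.9, 0.8, 0.9, 0.7)
```

Under this default weighting the fitness value is dominated by mAP@50–95; other weightings can be passed through the `weights` parameter.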

The model trained with synthetic data for grape moth detection was subsequently evaluated in a simulated context designed to represent a relevant operational environment—in which, typically, ground-truth annotations are unavailable, yet visual inspection can still provide valuable insights. To that end, external imagery from public datasets [39,40] was used, depicting real traps containing grape moth specimens. Figure 12 presents predicted bounding boxes over a selection of these images, illustrating apparently strong detection capabilities under operational conditions that moderately differ from those found in the synthetic dataset. Nonetheless, some detection issues were observed in images affected by environmental variability, potentially due to factors such as the insects’ relative size, trap design and condition, camera-induced distortions, or lighting variations.

Figure 12.

Results of YOLOv8s operating over images retrieved from external and publicly available datasets [39,40].

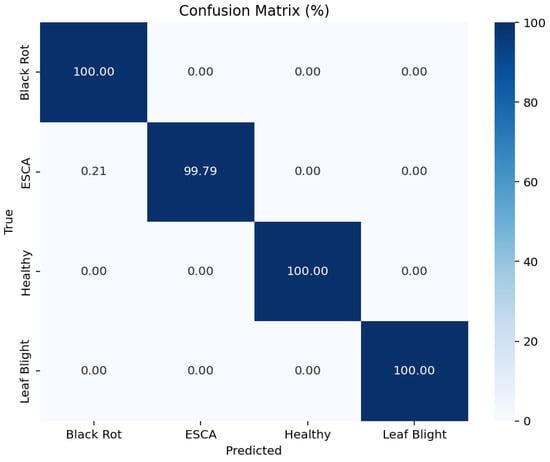

5.2. Disease Classification

The grapevine disease classification model, trained on leaf images acquired under controlled laboratory conditions [25], was evaluated and demonstrated promising accuracy. As illustrated in the confusion matrix depicted in Figure 13, the model achieved nearly flawless classification performance, with minimal misclassifications across disease categories. This corroborates the performances reported in other DL works on the same dataset (e.g., [29]) and the effectiveness of the method presented in [9]. These results are further supported by the overall quantitative metrics presented in Table 5, obtained by running the grapevine disease classifier on the test set provided in [25], confirming the model’s effectiveness under conditions consistent with those represented in the training data.

Figure 13.

Confusion matrix of the Xception-based grapevine disease model, assessed with a testing subset.

Table 5.

Performance results of the Xception-based grapevine disease classifier (precision, recall, F1-score, accuracy), assessed with the testing group available in [25].

5.3. Leaves Detection

Using the process described in Section 4.2, a synthetic dataset was generated to simulate grapevine leaves distributed over a vine training system, and used to train a YOLOv8s-based model for EOI detection. This model was initially evaluated using a test subset derived from the mentioned dataset, but deliberately excluded from the training stage. As shown in Table 6, the model demonstrated excellent inference capabilities on synthetic data, indicating effective learning from the generated samples. This high performance may be attributed to the consistent and controlled nature of the conditions considered to model the leaf detection task (namely, five vine trunks and 64 leaves), despite the application of various data augmentation strategies aimed at increasing variability, including downscaling and upscaling, brightness and contrast adjustments, leaf and trunk rotations, and Gaussian-based foliage placement, among others. Under such homogeneity, the high precision and recall reflect strong resilience to false positive and false negative occurrences, which also contributes to the elevated F1-score. In turn, the two mAP metrics highlight a high agreement between bounding box estimations and ground truth, with a relevant impact on the fitness indicator.

Table 6.

Performance results of the YOLOv8s-based model trained for leaf detection using a synthetic dataset specifically generated for this purpose. The model was evaluated on the test subset of the same dataset, which, however, did not take part in training.
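The notions of false positive and false negative used above depend on how predicted boxes are matched to ground truth. A common convention, assumed here rather than taken from this work, is to accept a prediction as a true positive when its intersection over union (IoU) with a still unmatched ground-truth box reaches 0.5. The function names and the sample boxes below are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(predictions, ground_truth, threshold=0.5):
    """Greedy matching; `predictions` are assumed sorted by descending confidence.

    Returns (true positives, false positives, false negatives).
    """
    unmatched = list(ground_truth)
    tp = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)  # each ground-truth box is matched at most once
            tp += 1
    fp = len(predictions) - tp  # predictions with no matching ground truth
    fn = len(unmatched)         # ground-truth boxes that were never detected
    return tp, fp, fn


preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
truth = [(1, 1, 10, 10), (100, 100, 110, 110)]
tp, fp, fn = count_matches(preds, truth)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
```

In this toy case one prediction matches, one is spurious and one ground-truth box is missed, giving a precision and recall of 0.5 each.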

The model trained with synthetic data for leaf detection, however, was subsequently evaluated in a relevant operational environment where ground-truth annotations were unavailable, making visual inspection the primary approach for extracting insights. To that end, high-resolution imagery from the Nossa Senhora de Lourdes farm (41° N, 7° W), located near the University of Trás-os-Montes e Alto Douro campus, was collected and considered for the intended assessment. As illustrated in Figure 14 through the represented blue bounding boxes, in natural vineyard environments with dense foliage and surrounding vegetation, the model successfully detects a substantial number of leaves, though many are also missed. Comparing the grape moth and leaf detection models, one can infer that, although the latter outperforms the former on synthetic data, visual inspection in relevant operational environments suggests a reversal in performance. Despite these limitations, the inference capabilities of the leaves model still seem to be sufficient to extract EOIs for subsequent stages aimed at assessing the phytosanitary status of the targeted plant type.

Figure 14.

Results of YOLOv8s operating over original images acquired from the Nossa Senhora de Lourdes farm (41° N, 7° W), located near the University of Trás-os-Montes e Alto Douro campus.

5.4. Leaf Detection Articulated with Disease Classification

The functional integration of the YOLOv8s-based leaf detector with the Xception-based grapevine disease classifier was experimentally validated using external imagery curated to align with the operational context for which each model was individually trained and jointly orchestrated. The goal was to test the collaborative behavior of this two-stage pipeline, in which the detection and classification tasks are modular and, yet, complementary. To that end, aiming to assemble a representative testing sample, the following image sources and retrieval processes were applied per disease:

- Black rot: A publicly available dataset developed by Rossi et al. [43], comprising 384 images of viticultural black rot, was considered. From this set, 30 images were selected based on training condition compliance. To augment this limited sample, additional images were retrieved via web crawling using the Google Custom Search API [44], parameterized with the search query “black rot grapevine leaves” (alongside authentication credentials). An initial pool of 100 images was gathered, of which 45 were retained after manual curation. These images were sourced from agricultural extension platforms, scientific publishers, viticulture-specific websites, and photographic repositories, such as:

  - agriculture.vic.gov.au, https://agriculture.vic.gov.au (accessed on 2 June 2025),

  - extension.okstate.edu, https://extension.okstate.edu (accessed on 2 June 2025),

  - missouribotanicalgarden.org, https://missouribotanicalgarden.org (accessed on 2 June 2025),

  - americanvineyardmagazine.com, https://americanvineyardmagazine.com (accessed on 2 June 2025),

  - shutterstock.com, https://shutterstock.com (accessed on 2 June 2025).

  The combination of both filtered image sources resulted in a total of 75 usable images for black rot evaluation.

- ESCA: A publicly available dataset by Alessandrini et al. [45] was used, from which 888 images of grapevine leaves affected by ESCA were selected.

- Leaf blight: The dataset proposed by Özacar et al. [46] was included, particularly focusing on the dead arm class (caused by Phomopsis viticola), which contains examples in which characteristic visual lesions representative of this symptom can be noticed.
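The web retrieval step mentioned for black rot can be illustrated by building the paginated requests for the Custom Search JSON API. This is a sketch under assumptions: the use of `searchType=image`, the page size of 10 and the placeholder credentials `KEY` and `CX` are not taken from this work, and the sketch only constructs the request URLs without sending them.

```python
from urllib.parse import urlencode

ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_image_search_urls(query, api_key, engine_id, total=100, page_size=10):
    """Return one request URL per result page, covering `total` results."""
    urls = []
    for start in range(1, total + 1, page_size):
        params = {
            "key": api_key,         # API key (authentication credential)
            "cx": engine_id,        # programmable search engine identifier
            "q": query,
            "searchType": "image",  # assumption: image results were requested
            "num": page_size,       # results per request
            "start": start,         # 1-based index of the first result in the page
        }
        urls.append(f"{ENDPOINT}?{urlencode(params)}")
    return urls


urls = build_image_search_urls("black rot grapevine leaves", "KEY", "CX")
```

Ten pages of ten results match the initial pool of 100 images reported above; the returned items would still need the manual curation described in the text.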

These imagery sets were processed through the proposed pipeline, beginning with the YOLOv8s-based leaf detector, followed by the Xception-based model for disease classification. The results, presented in Table 7, indicate that the proposed pipeline—comprising modular and complementary models—is promising for rapid deployment in field conditions, at least, as a preliminary inference system. Coupling this strategy with active learning paves the way for progressive model refinements, improving performance over time through iterative feedback and adaptation.

Table 7.

Operational insights of the modular YOLOv8s leaf detector and Xception disease classifier working collaboratively to identify black rot, ESCA, and leaf blight manifestations.
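The two-stage behaviour evaluated here, detection followed by classification of each extracted leaf, can be sketched independently of any specific framework. In the example below, `detect` and `classify` are hypothetical callables standing in for the YOLOv8s detector and the Xception classifier, the image is a nested list standing in for a pixel array, and the class labels are illustrative.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
Image = List[List[int]]          # rows of pixel values (stand-in for an array)


def detect_then_classify(
    image: Image,
    detect: Callable[[Image], Sequence[Box]],
    classify: Callable[[Image], str],
) -> List[Tuple[Box, str]]:
    """Crop every detected leaf and pass the crop to the classifier."""
    height, width = len(image), len(image[0])
    results = []
    for x1, y1, x2, y2 in detect(image):
        # Clip the box to the image so the crop is always valid.
        x1, y1 = max(0, x1), max(0, y1)
        x2, y2 = min(width, x2), min(height, y2)
        if x2 <= x1 or y2 <= y1:
            continue  # box lies entirely outside the image
        crop = [row[x1:x2] for row in image[y1:y2]]
        results.append(((x1, y1, x2, y2), classify(crop)))
    return results


# Toy stand-ins for the two models.
image = [[0] * 6 for _ in range(4)]
fake_detect = lambda img: [(1, 1, 3, 3), (5, 5, 9, 9)]
fake_classify = lambda crop: "healthy" if sum(map(sum, crop)) == 0 else "black_rot"
output = detect_then_classify(image, fake_detect, fake_classify)
```

Keeping the two stages behind plain callables mirrors the modular design discussed above: either model can be retrained or replaced without touching the other.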

The following section presents a discussion, highlighting the main findings, limitations encountered, and potential directions for future improvements and applications.

6. Discussion

This work proposed and explored a set of methods based on synthetic data generation, complemented by existing datasets, with the goal of enabling the rapid development of preliminary yet functional DL models suitable for field deployment. These methods involve customized image generation through LLM and diffusion models, domain-relevant composition strategies, and automatic annotation, which lead to datasets compliant with modern DL tools for model training. The case studies selected to demonstrate the viability of these methods lie within the scope of precision viticulture. Initially, an approach was developed for modeling datasets oriented to the challenge of detecting grape moths in pheromone traps. Generative AI was used to produce empty traps, while publicly available datasets [39,40] allowed insect samples to be extracted directly from the images. Classic image processing techniques were applied to compose synthetic images, wherein contour-based cropped grape moth specimens were placed over AI-generated trap backgrounds. The resulting YOLO-compatible dataset enabled the training of a YOLOv8s model, selected for its efficiency in edge computing environments, that attained precision values close to 0.99 and an mAP of approximately 0.86. Although this performance appears comparable to that reported in the literature for similar contexts (e.g., [6,19]), it should be noted that, because a synthetic dataset was used for training, this grape moth detection model may lack environmental variability and, as such, be susceptible to blind spots when shifts in operational conditions occur (e.g., changes in lighting, background variation, or the presence of different insect species).

As for disease identification, the combination of available datasets with synthetic data to rapidly expand DL applicability was also explored. An Xception model was trained following the directives of a previous study [9] and using a publicly available dataset from Kaggle [25], which consists of a set of annotated images depicting healthy and infected leaves acquired under laboratory conditions. On similar datasets, the model achieved high accuracy, comparable to or even outperforming results reported in the related literature [22,24,27,29]. These results also demonstrate the viability and flexibility of the training process proposed in [9], which was initially developed for digital ampelography but has proven effective for grapevine disease identification based on visual symptoms in the leaves. However, due to the limitations of the utilized dataset in representing field conditions, where leaves are embedded within complex canopies, a leaf detection model was required to bridge the gap between laboratory data and real-world deployment. An initial attempt to generate such a dataset relied solely on ChatGPT’s integration with DALL·E. While the tool produced visually convincing images, the annotations were found to be unreliable or entirely random, making the approach unfeasible for direct dataset construction. As an alternative, ChatGPT/DALL·E were used to separately generate leafless grapevine backgrounds and isolated grapevine leaves with various visual characteristics (e.g., healthy, chlorotic, or spot-affected). Some key operational considerations emerged during this process:

- Before generation, requirements must be specified in detail and confirmed by ChatGPT through summarization to mitigate potential issues related to linguistic ambiguity or model misinterpretation or even drifting;

- During batch generation, interruptions often occurred, requiring manual prompting to resume the process.

Once both background and foreground libraries were prepared, traditional image processing techniques, similar to those used for the grape moth case, were employed to synthesize new images and generate precise annotations. The resulting YOLOv8s leaf detector achieved strong results on unseen synthetic data (precision and mAP above 0.97), although performance declined when tested on external, real-world imagery (originally acquired at the Nossa Senhora de Lourdes farm, 41° N, 7° W), revealing some limitations in generalization. Despite such limitations, the integrated use of the YOLOv8s-based leaf detector and the Xception-based disease classifier within a unified pipeline yielded promising outcomes. In external imagery, although not all leaves were detected, the inference system was still able to correctly classify diseased leaves among those successfully extracted (class-wise results are reported in Table 7), supporting the feasibility of the proposed approach. Overall, the proposed methods offer a promising pathway for the rapid deployment of first-generation AI models, as evidenced by the tests conducted in viticultural scenarios. When integrated into an active learning framework, such as the one illustrated in Figure 15 and inspired by works like [32], these models can function not only as early inference tools but also as key enablers of a continuous improvement strategy. High-confidence predictions may directly support decision-making in the field, while low-confidence outputs can be flagged for expert review and incorporated into subsequent training cycles. This iterative process enables continuous refinement and adaptation of the models, leading to progressively improved performance and closer alignment with real-world operating conditions.

Figure 15.

Active learning pipeline proposal for the progressive refinement of models trained through a bootstrapping strategy.
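The routing step at the core of such a loop, in which confident predictions are used directly and uncertain ones are queued for expert review, can be sketched as follows. The confidence threshold of 0.8, the dictionary layout and the sample predictions are assumptions made for the example and are not part of the proposal in Figure 15.

```python
def route_predictions(predictions, threshold=0.8):
    """Split predictions into those accepted as-is and those flagged for review."""
    accepted, review = [], []
    for item in predictions:
        if item["confidence"] >= threshold:
            accepted.append(item)  # may directly support decision-making
        else:
            review.append(item)    # sent to an expert, then added to training data
    return accepted, review


# Illustrative predictions only.
sample = [
    {"image": "img_001.jpg", "label": "black_rot", "confidence": 0.95},
    {"image": "img_002.jpg", "label": "healthy", "confidence": 0.55},
    {"image": "img_003.jpg", "label": "esca", "confidence": 0.80},
]
accepted, review = route_predictions(sample)
```

In practice the threshold trades expert workload against the risk of acting on wrong predictions, so it would likely need tuning per task.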

One final note concerns the methodological positioning of this work within the broader literature on automatic approaches for generating credible datasets and annotations. While many of the existing studies—particularly in agriculture [47]—employ generative AI to enhance datasets by creating new instances from existing training data with the aim of improving DL model performance, the approaches proposed in this paper are fundamentally different. Specifically, foundational datasets are synthetically created from scratch and roughly modeled to reflect the general conditions of operational environments. The goal is to enable the initial deployment of DL models in a time-effective manner, allowing them to be incrementally improved over time through, for example, active learning strategies [32], rather than relying on the continuous expansion of existing datasets. Notwithstanding, while using ChatGPT/DALL·E to create fully labeled datasets remains an ongoing challenge—since these tools lack introspective mechanisms for determining the positioning and spatial occupation of entities of interest (EOIs), as observed in Section 4—other approaches (e.g., [11,33]) have explored alternative strategies grounded in the assembly of realistic 3D scenes with automatic annotation capabilities. Complementarily, the approach presented in this paper combines the believable 2D generation capabilities of natural language-driven models, as in the former, with the parameterization and control typical of the latter, aiming to provide context-aware synthetic imagery that is both quick and relatively simple to produce, accompanied by accurate annotations. As all of these approaches are still evolving to shape usable AI-oriented synthetic data sourcing strategies, their consolidation will naturally motivate future comparative analyses aimed at better understanding the strengths and limitations of each proposal.

The next section ends this paper by summarizing the main contributions, as well as by delineating a few orientations for future work.

7. Conclusions and Future Work

The development of reliable visual inference models is often hindered by the burdensome and time-consuming nature of collecting and annotating high-quality datasets. To address this challenge, this work explored the use of synthetic dataset generation to bootstrap DL models, with proof-of-concept applications in the context of precision viticulture.

Two case studies were examined. In the first, a fully synthetic dataset was generated to train a YOLOv8-based object detection model for identifying grape moths in pheromone trap imagery. In the second, a hybrid inference pipeline was proposed, combining a YOLOv8-based model for leaf detection in grapevine canopy images with an Xception-based classifier for disease identification. This two-stage approach enabled the classification of leaves into four categories—healthy, black rot, ESCA, and leaf blight—highlighting the synergistic potential of integrating object detection and classification tasks within a modular architecture.

Results from both scenarios demonstrate that fully or partially synthetic visual data pipelines can provide a valid foundation for the initial deployment of inference models, particularly in domains where annotated data is scarce. Although these models are not flawless, they showed promising performance when evaluated on synthetic imagery unseen during training, as well as apparently good operational capabilities under relevant conditions, based on visual assessment of detection and classification outputs. Additional inference results from collaborative models for grapevine leaf detection followed by disease recognition also provided reasonable indications of suitability for initial DL deployment. Nevertheless, it is important to emphasize the need for continuous improvement processes—potentially driven by active learning strategies—to enhance model robustness over time. In addition to the presented use cases, the proposed synthetic data generation approaches could also benefit other applications in—though not limited to—agriculture, particularly in visual contexts that share similar meta-requirements regarding scene composition. Examples include the detection of animals posing threats to crops (e.g., birds), anti-theft surveillance, drone-based cattle detection and counting, and agricultural fire monitoring, among others.

Looking ahead, future work will focus on deploying these models in edge computing environments to enable real-time, in-field operation. Additionally, active learning frameworks will be integrated, allowing the models to flag uncertain predictions for expert review. This feedback loop will support continuous, data-driven model refinement, progressively improving accuracy and adaptability under real-world conditions.

Author Contributions

Conceptualization, T.A.; methodology, T.A. and N.S.; software, T.A.; validation, R.M. and E.P.; formal analysis, N.S. and A.C.; investigation, T.A., N.S., A.C. and D.P.; resources, R.M. and E.P.; data curation, T.A. and A.C.; writing original draft preparation, T.A. and D.P.; writing, review and editing, T.A., A.C., E.P. and R.M.; visualization, T.A.; supervision, E.P. and R.M.; project administration, R.M.; funding acquisition, R.M. and E.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Vine and Wine Portugal Project, co-financed by the Recovery and Resilience Plan (RRP) and the European Union (EU) NextGenerationEU funds, within the scope of the Mobilizing Agendas for Reindustrialization, under the reference C644866286-00000011.

Data Availability Statement

All the relevant data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pozo Pérez, J.R.; Fernández Llerena, Y.; Chávez Castilla, Y.; Garcia Reyes, E.; Magalhães, L.G.; Guevara Lopez, M.A. AOI for automotive industry—A quality assessment approach combining 2D and 3D sensors. In Proceedings of the 29th International ACM Conference on 3D Web Technology, Guimarães, Portugal, 25–27 September 2024. [Google Scholar] [CrossRef]

- Fontes, J.P.P.; Raimundo, J.N.C.; Magalhães, L.G.M.; Lopez, M.A.G. Accurate phenotyping of luminal A breast cancer in magnetic resonance imaging: A new 3D CNN approach. Comput. Biol. Med. 2025, 189, 109903. [Google Scholar] [CrossRef]

- Attri, I.; Awasthi, L.K.; Sharma, T.P.; Rathee, P. A review of deep learning techniques used in agriculture. Ecol. Inform. 2023, 77, 102217. [Google Scholar] [CrossRef]

- Morais, R.; Silva, N.; Mendes, J.; Adão, T.; Pádua, L.; López-Riquelme, J.A.; Pavón-Pulido, N.; Sousa, J.J.A.; Peres, E. mySense: A comprehensive data management environment to improve precision agriculture practices. Comput. Electron. Agric. 2019, 162, 882–894. [Google Scholar] [CrossRef]

- Morais, R. MySense IoT Framework UTAD. Available online: https://mysense.utad.pt (accessed on 9 May 2025).

- Mendes, J.; Peres, E.; Neves dos Santos, F.; Silva, N.; Silva, R.; Sousa, J.J.A.; Cortez, I.; Morais, R. VineInspector: The Vineyard Assistant. Agriculture 2022, 12, 730. [Google Scholar] [CrossRef]

- Mohimont, L.; Alin, F.; Rondeau, M.; Gaveau, N.; Steffenel, L.A. Computer Vision and Deep Learning for Precision Viticulture. Agronomy 2022, 12, 2463. [Google Scholar] [CrossRef]

- Shen, L.; Su, J.; He, R.; Song, L.; Huang, R.; Fang, Y.; Song, Y.; Su, B. Real-time tracking and counting of grape clusters in the field based on channel pruning with YOLOv5s. Comput. Electron. Agric. 2023, 206, 107662. [Google Scholar] [CrossRef]

- Adão, T.; Shahrabadi, S.; Mendes, J.; Bastardo, R.; Magalhães, L.; Morais, R.; Peres, E. Advancing digital ampelography: Automated classification of grapevine varieties. Comput. Electron. Agric. 2025, 229, 109675. [Google Scholar] [CrossRef]

- Kontogiannis, S.; Konstantinidou, M.; Tsioukas, V.; Pikridas, C. A Cloud-Based Deep Learning Framework for Downy Mildew Detection in Viticulture Using Real-Time Image Acquisition from Embedded Devices and Drones. Information 2024, 15, 178. [Google Scholar] [CrossRef]

- Adão, T.; Cerqueira, J.A.; Adão, M.; Silva, N.; Pascoal, D.; Magalhães, L.G.; Barros, T.; Premebida, C.; Nunes, U.J.; Peres, E.; et al. PROMORE: A Procedural Modeler of Virtual Rural Environments with Artificial Dataset Generation Capabilities for Remote Sensing Contexts. IEEE Access 2025, 13, 47632–47652. [Google Scholar] [CrossRef]

- Lyu, C.; Lin, S.; Lynch, A.; Zou, Y.; Liarokapis, M. UAV-based deep learning applications for automated inspection of civil infrastructure. Autom. Constr. 2025, 177, 106285. [Google Scholar] [CrossRef]

- Guder, O.; Isik, S.; Anagun, Y. Fabric defects identification for textile industry with a deep learning approach. J. Text. Inst. 2024, 116, 1493–1502. [Google Scholar] [CrossRef]

- Kreutz, M.; Böttjer, A.; Trapp, M.; Lütjen, M.; Freitag, M. Towards individualized shoes: Deep learning-based fault detection for 3D printed footwear. Procedia CIRP 2022, 107, 196–201. [Google Scholar] [CrossRef]

- Noor, T.H.; Noor, A.; Alharbi, A.F.; Faisal, A.; Alrashidi, R.; Alsaedi, A.S.; Alharbi, G.; Alsanoosy, T.; Alsaeedi, A. Real-Time Arabic Sign Language Recognition Using a Hybrid Deep Learning Model. Sensors 2024, 24, 3683. [Google Scholar] [CrossRef] [PubMed]

- Gong, Y.; Liu, G.; Xue, Y.; Li, R.; Meng, L. A survey on dataset quality in machine learning. Inf. Softw. Technol. 2023, 162, 107268. [Google Scholar] [CrossRef]

- Mohanty, S.P. PlantVillage-Dataset. Available online: https://github.com/spMohanty/PlantVillage-Dataset (accessed on 9 May 2025).

- Volunesia, S. Kaggle-Pest Dataset. Available online: https://www.kaggle.com/datasets/simranvolunesia/pest-dataset (accessed on 9 May 2025).

- Gonçalves, J.; Silva, E.; Faria, P.; Nogueira, T.; Ferreira, A.; Carlos, C.; Rosado, L. Edge-Compatible Deep Learning Models for Detection of Pest Outbreaks in Viticulture. Agronomy 2022, 12, 3052. [Google Scholar] [CrossRef]

- Checola, G.; Sonego, P.; Zorer, R.; Mazzoni, V.; Ghidoni, F.; Gelmetti, A.; Franceschi, P. A novel dataset and deep learning object detection benchmark for grapevine pest surveillance. Front. Plant Sci. 2024, 15, 1485216. [Google Scholar] [CrossRef] [PubMed]

- Xie, X.; Ma, Y.; Liu, B.; He, J.; Li, S.; Wang, H. A Deep-Learning-Based Real-Time Detector for Grape Leaf Diseases Using Improved Convolutional Neural Networks. Front. Plant Sci. 2020, 11, 751. [Google Scholar] [CrossRef]

- Hasan, M.A.; Riana, D.; Swasono, S.; Priyatna, A.; Pudjiarti, E.; Prahartiwi, L.I. Identification of Grape Leaf Diseases Using Convolutional Neural Network. J. Phys. Conf. Ser. 2020, 1641, 012007. [Google Scholar] [CrossRef]

- Bhattarai, S. New Plant Diseases Dataset. 2021. Available online: https://www.kaggle.com/datasets/vipoooool/new-plant-diseases-dataset (accessed on 9 May 2025).

- Prasad, K.V.; Vaidya, H.; Rajashekhar, C.; Karekal, K.S.; Sali, R.; Nisar, K.S. Multiclass classification of diseased grape leaf identification using deep convolutional neural networkDCNN classifier. Sci. Rep. 2024, 14, 9002. [Google Scholar] [CrossRef]

- Mandal, R. Kaggle-Grapevine Disease Dataset (Original). Available online: https://www.kaggle.com/datasets/rm1000/grape-disease-dataset-original. (accessed on 9 May 2025).

- Kunduracioglu, I.; Paçal, I. Advancements in deep learning for accurate classification of grape leaves and diagnosis of grape diseases. J. Plant Dis. Prot. 2024, 131, 1061–1080. [Google Scholar] [CrossRef]

- Nasra, P.; Gupta, S. Deep Learning-Based Grapevine Leaf Disease Detection Using Fine-Tuned Xception Model. In Proceedings of the 2024 3rd International Conference for Advancement in Technology (ICONAT), Goa, India, 6–8 September 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Pushpalama. Grape Disease Dataset. 2021. Available online: https://www.kaggle.com/datasets/pushpalama/grape-disease (accessed on 9 May 2025).

- Talaat, F.M.; Shams, M.Y.; Gamel, S.A.; ZainEldin, H. DeepLeaf: An optimized deep learning approach for automated recognition of grapevine leaf diseases. Neural Comput. Appl. 2025, 37, 8799–8823. [Google Scholar] [CrossRef]

- Tejaswi, A. Plant Village Dataset. Available online: https://www.kaggle.com/datasets/arjuntejaswi/plant-village (accessed on 9 May 2025).

- Shahrabadi, S.; Alves, V.; Peres, E.; Morais Dos Santos, R.; Adão, T. Unbalancing Datasets to Enhance CNN Models Learnability: A Class-Wise Metrics-Based Closed-Loop Strategy Proposal. IEEE Access 2025, 13, 57485–57503. [Google Scholar] [CrossRef]

- Liu, Z.; Wang, J.; Gong, S.; Lu, H.; Tao, D. Deep Reinforcement Active Learning for Human-in-the-Loop Person Re-Identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Greff, K.; Belletti, F.; Beyer, L.; Doersch, C.; Du, Y.; Duckworth, D.; Fleet, D.J.; Gnanapragasam, D.; Golemo, F.; Herrmann, C.; et al. Kubric: A Scalable Dataset Generator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 3749–3761.

- Cordeiro, A.; Rocha, L.F.; Boaventura-Cunha, J.; Pires, E.J.S.; Souza, J.A.P. Object segmentation dataset generation framework for robotic bin-picking: Multi-metric analysis between results trained with real and synthetic data. Comput. Ind. Eng. 2025, 205, 111139.

- Adão, T.; Pinho, T.M.; Pádua, L.; Santos, N.; Sousa, A.; Sousa, J.J.; Peres, E. Using Virtual Scenarios to Produce Machine Learnable Environments for Wildfire Detection and Segmentation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-3-W8, 9–15.

- Wu, C.; Yin, S.; Qi, W.; Wang, X.; Tang, Z.; Duan, N. Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models. arXiv 2023, arXiv:2303.04671.

- OpenAI. ChatGPT: Language Model by OpenAI. 2025. Available online: https://chat.openai.com/ (accessed on 2 June 2025).

- OpenAI. DALL·E 3: AI Image Generation by OpenAI. 2023. Available online: https://openai.com/index/dall-e-3/ (accessed on 2 June 2025).

- Teixeira, C. Grape_Moth Dataset. 2023. Available online: https://universe.roboflow.com/anaclaudia/grape_moth (accessed on 2 June 2025).

- Teixeira, C. Grape_Moth_1 Dataset. 2022. Available online: https://universe.roboflow.com/claudia-teixeira/grape_moth_1 (accessed on 2 June 2025).

- Canva. Background Remover—Canva. 2025. Available online: https://www.canva.com/features/background-remover/ (accessed on 2 June 2025).

- Roboflow: Computer Vision Tools for Developers and Enterprises. Available online: https://roboflow.com/ (accessed on 9 May 2025).

- Rossi, L.; Prati, A. LDD: A Grape Diseases Dataset Detection and Instance Segmentation. In Proceedings of the 21st International Conference, Lecce, Italy, 23–27 May 2022.

- Google. Custom Search JSON API. Available online: https://developers.google.com/custom-search/ (accessed on 2 June 2025).

- Alessandrini, M.; Calero Fuentes Rivera, R.; Falaschetti, L.; Pau, D.; Tomaselli, V.; Turchetti, C. ESCA-Dataset; Elsevier Inc.: Amsterdam, The Netherlands, 2021.

- Özacar, T.; Öztürk, Ö.; Güngör Savaş, N. HERMOS: An Annotated Image Dataset for Visual Detection of Grape Leaf Diseases; Elsevier Inc.: Amsterdam, The Netherlands, 2021.

- Lu, Y.; Chen, D.; Olaniyi, E.; Huang, Y. Generative adversarial networks (GANs) for image augmentation in agriculture: A systematic review. Comput. Electron. Agric. 2022, 200, 107208.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).